Abstract

Purpose

To describe the impact of a tailored, web-based educational program designed to reduce excessive screening mammography recall.

Methods

Radiologists who interpreted mammograms at facilities in one of four U.S. mammography registries were invited to take part and were randomly assigned to receive the intervention or to serve as controls. The controls were offered the intervention at the end of the study, and data collection included an assessment of their clinical practice as well. The intervention provided each radiologist with individual audit data on sensitivity, specificity, recall rate, positive predictive value (PPV), and cancer detection rate, compared with national benchmarks and with peers on the same measures; profiled breast cancer risk in each radiologist's patient population to illustrate how low breast cancer risk is in population-based settings; and evaluated the possible impact of medical malpractice concerns on recall rates. Participants' recall rates in actual practice were evaluated for three time periods: the nine months before the intervention was delivered to the Intervention Group (baseline), the nine months after the Intervention Group received the intervention but before the Control Group did (T1), and the nine months after the Control Group completed the intervention (T2). Logistic regression models of the probability that a mammogram was recalled included indicators for study group (intervention versus control) and time period (baseline, T1, T2). Group-by-time-period interactions were also included to determine whether the association between time period and the probability of a positive result differed across groups.

Results

Thirty-one radiologists who completed the CME were included in the adjusted model comparing radiologists in the Intervention Group (n=22) with radiologists in the Control Group who later completed the intervention (n=9). At T1, the Intervention Group had 12% higher odds of a positive mammogram than the Control Group after controlling for baseline, an association of borderline statistical significance (OR=1.12, 95% CI=1.00-1.27, p=0.0569). At T2, a similar association was found, but it was not statistically significant (OR=1.10, 95% CI=0.96-1.25). Within the Control Group, no associations were found when comparing radiologists who completed the CME (n=9) with those who did not (n=10). In addition, we found no association between time period and recall rate among radiologists who set realistic goals.

Conclusions

Our study found a null effect, which suggests that a single one-hour intervention may not be adequate to reduce excessive recall among the radiologists who undertook it.

Introduction

Recall rates for screening mammography are higher in the U.S. than in other countries (1, 2). Identifying the reasons for this difference has proven complex (3-6). The harms associated with unnecessary work-up are now well recognized (7, 8) and were part of the rationale for changing the U.S. Preventive Services Task Force screening mammography guidelines (9). If unnecessary recall could be reduced and recall rates brought below minimally acceptable cut-points (10), the number of false-positive examinations could be reduced by 880 per 100,000 women screened (10). Although several studies have demonstrated improved interpretive performance (11-14), they combined several strategies, such as audit data review, participation in a self-assessment and case review program, and increased interpretive volume. In two of these studies (13, 14), the intervention content ranged from 8 to 32 hours, a significant time commitment for busy clinicians. The extent to which a single interactive audit component can improve performance has not been well evaluated.

We developed an interactive web-based intervention designed to provide peer comparison audit data and to explore individualized factors that may increase recall rates without improving cancer detection. The intervention was implemented using a randomized wait-list study design to assess its impact on reducing excessive recall. The purpose of this paper is to report the findings from this study.

Methods

Performance Data

Four mammography registries of the Breast Cancer Surveillance Consortium (BCSC; http://breastscreening.cancer.gov) participated in this study: Carolina Mammography Registry; Group Health Breast Cancer Surveillance Project in Seattle, WA; New Hampshire Mammography Network; and Vermont Breast Cancer Surveillance System. Patient information and radiologists’ interpretation and follow-up recommendations according to the American College of Radiology Breast Imaging Reporting and Data System (BI-RADS) (15) are collected at all these registries and are later linked to regional cancer registries and/or pathology databases to determine cancer outcomes. All data are annually pooled at the BCSC Statistical Coordinating Center (SCC) located in Seattle, WA for analysis.

Each registry and the SCC received institutional review board (IRB) approval for active or passive consent processes or a waiver of consent to enroll participants, link data, perform analytic studies, and conduct all study-related activities described here. All procedures are Health Insurance Portability and Accountability Act (HIPAA) compliant. All registries and the SCC have received a Federal Certificate of Confidentiality and other protections for the identities of women, physicians, and facilities that are subjects of this research (16). In addition, IRB approval was obtained at each participating site for all radiologist activities related to this intervention study.

Study Participants & Intervention Development

Radiologist recruitment and intervention development are reported in detail elsewhere (17, 18). Briefly, radiologists were eligible if they actively interpreted mammograms at a facility in one of the four participating BCSC registries between January 2006 and September 2007. To characterize study participants, we administered a radiologist survey (19); completing the survey was not required to participate in the web-based intervention. Of the 196 eligible radiologists, 122 did not consent, leaving 74 who did. Among these, 46/74 (62.2%) logged on to start the intervention, 41/46 (89.1%) completed it, and 40 of those also completed the radiologist survey. Because our outcome measure was reduction in excessive recall, eight radiologists without screening mammography interpretation data in the follow-up period were excluded from analysis. This left 32 radiologists who completed the intervention, of whom 23 had been randomly assigned to the Intervention Group and 9 to the Control Group. Among radiologists who consented but did not complete the intervention, 10 had been assigned to the Intervention Group and 12 to the Control Group.

As described elsewhere (17, 18), the intervention was web-based and had three components. Module 1 provided audit data on sensitivity, specificity, recall rate, PPV, and cancer detection rate, individualized for each participating radiologist and compared with both national benchmarks and peers on the same measures during the same time period. These data were derived from the mammography registries associated with the participating radiologists. Module 2 profiled breast cancer risk in each radiologist's patient population, also ascertained from the respective BCSC sites, to illustrate how low breast cancer risk is in population-based settings. Module 3 presented information on the possible impact of medical malpractice concerns on recall rates; our previous research showed that such concerns influence recall rates (20, 21).

Knowledge questions embedded in the intervention were used to award CME credits. The entire program took an average of 1 hour to complete. At the end of each module, radiologists could enter into a text field their goals for changes they would like to make in their clinical practice, especially regarding recall rates. We defined realistic goals as planned actions that, if implemented, would likely bring a radiologist's recall rate closer to national targets (22). Using this definition, two authors (PAC, EAS) classified each radiologist's goals as realistic or unrealistic.

The main performance outcome in this study was recall rate, defined as the percentage of screening mammograms given a BI-RADS assessment of 0, 4, or 5, or an assessment of 3 with a recommendation for immediate follow-up (i.e., a positive result). For analyses, we used a mammogram-level binary outcome indicating whether the mammogram had a positive result. We specifically tested the hypothesis that radiologists in the Intervention Group would have improved (lower) recall rates compared with radiologists in the Control Group and that these improvements would be sustained over time. We also wanted to test the effects of the intervention after it was delivered to the Control radiologists.
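The outcome definition above amounts to a simple classification rule applied to each screening mammogram, followed by a percentage. The sketch below is a minimal illustration only: the record type `ScreeningExam` and its fields are hypothetical names of ours, not a BCSC data dictionary, and the code simply encodes the BI-RADS rule stated in the text.

```python
from dataclasses import dataclass
from typing import Iterable

@dataclass(frozen=True)
class ScreeningExam:
    """One screening mammogram (hypothetical record layout, not a BCSC schema)."""
    birads: int                       # BI-RADS assessment category
    immediate_followup: bool = False  # recommendation for immediate follow-up

def is_positive(exam: ScreeningExam) -> bool:
    """Positive result (recall): BI-RADS 0, 4 or 5, or BI-RADS 3 with immediate follow-up."""
    if exam.birads in (0, 4, 5):
        return True
    return exam.birads == 3 and exam.immediate_followup

def recall_rate(exams: Iterable[ScreeningExam]) -> float:
    """Percentage of screening mammograms with a positive result."""
    exam_list = list(exams)
    if not exam_list:
        raise ValueError("recall rate is undefined for zero exams")
    positives = sum(is_positive(e) for e in exam_list)
    return 100.0 * positives / len(exam_list)

if __name__ == "__main__":
    exams = [ScreeningExam(1), ScreeningExam(2), ScreeningExam(0),
             ScreeningExam(3, immediate_followup=True), ScreeningExam(3)]
    print(recall_rate(exams))  # 2 positives out of 5 exams -> 40.0
```

Keeping `is_positive` separate from `recall_rate` mirrors the analysis design: the per-mammogram binary outcome is what enters the logistic models, while the percentage is only a summary.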

Data Analyses

Baseline frequency distributions were computed within four subgroups of radiologists (completed or did not complete the intervention, by Early or Late intervention group) for radiologist characteristics obtained from the baseline radiologist survey (19) and for radiologists with or without realistic goals to reduce excessive recall (18). Mean recall rates (and standard errors) were also computed at baseline (nine months prior to consent) for all groups. Within each completion subgroup, chi-square tests were used to compare frequencies and t-tests to compare means between intervention groups. P-values < 0.05 were considered statistically significant.

Two subpopulation analyses were performed. Analysis 1 included radiologists who consented to the intervention but did not complete it; Analysis 2 included radiologists who consented and completed it. Within each analysis, radiologists were classified by study group (Early, i.e., Intervention, or Late, i.e., Control).

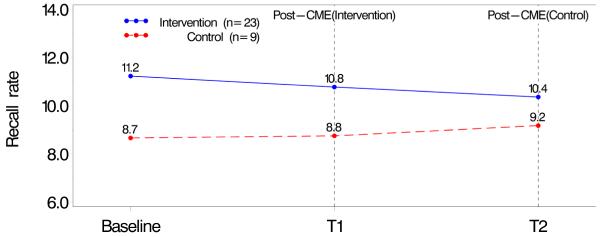

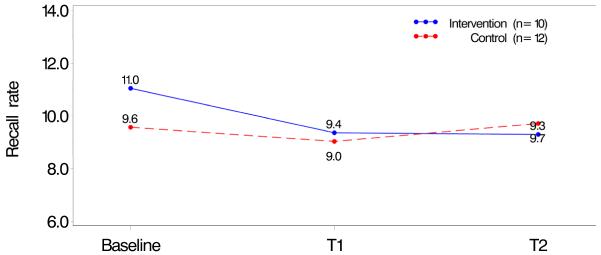

Recall rates were examined during three time periods. In both analyses, baseline was defined as the nine months before the date the radiologist consented to take the intervention. In Analysis 1, the three periods were: 1) baseline, 2) 0-9 months after consent (T1), and 3) 9-18 months after consent (T2). In Analysis 2, the time periods depended on study group assignment. For the Intervention Group, recall rate was computed during: 1) baseline, 2) 0-9 months after completion of the CME (T1), and 3) 9-18 months after completion (T2). For the Control Group, the periods were: 1) baseline, 2) 0-9 months after consent (T1), and 3) 0-9 months after completion (T2). In each analysis, recall rates were computed for the three time periods and compared by intervention group. Figures 1 and 2 show these unadjusted recall rates for the two analysis subpopulations.

Figure 1. Recall Rate for Radiologists Who Completed the CME.

Baseline (9 months prior to consent); T1 (Intervention: 0–9 months after completion; Control: 0–9 months after consent); T2 (Intervention: 9–18 months after completion; Control: 0–9 months after completion)

Figure 2. Recall Rate for Radiologists Who Did Not Complete the CME.

Baseline (9 months prior to consent); T1 (0–9 months after consent); T2 (9–18 months after consent)
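The period definitions for Analyses 1 and 2 can be sketched as a function that labels each screening exam by date. This is a hypothetical illustration, not the study's SAS code: the function names, the group labels (`"intervention"`, `"control"`, `"noncompleter"`), calendar-month arithmetic for "nine months", and half-open window boundaries are all our assumptions, since the text does not say how boundary dates were handled.

```python
import calendar
from datetime import date
from typing import List, Optional, Tuple

def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the target month's last day."""
    years, month0 = divmod(d.month - 1 + months, 12)
    year, month = d.year + years, month0 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)

def study_period(exam: date, consent: date, group: str,
                 completion: Optional[date] = None) -> Optional[str]:
    """Label an exam 'baseline', 'T1' or 'T2', or None if it falls outside all windows.

    group (hypothetical labels): 'intervention' (completed CME early),
    'control' (completed CME late), or 'noncompleter' (consented, never completed).
    """
    if group in ("intervention", "control") and completion is None:
        raise ValueError("completion date required for radiologists who completed the CME")
    if group == "noncompleter":          # Analysis 1: T1/T2 anchored at consent
        t1_start, t2_start = consent, add_months(consent, 9)
    elif group == "intervention":        # Analysis 2: T1/T2 anchored at completion
        t1_start, t2_start = completion, add_months(completion, 9)
    elif group == "control":             # Analysis 2: T1 after consent, T2 after completion
        t1_start, t2_start = consent, completion
    else:
        raise ValueError(f"unknown group: {group!r}")
    windows: List[Tuple[str, date, date]] = [
        ("baseline", add_months(consent, -9), consent),
        ("T1", t1_start, add_months(t1_start, 9)),
        ("T2", t2_start, add_months(t2_start, 9)),
    ]
    for label, start, end in windows:
        if start <= exam < end:  # half-open window [start, end)
            return label
    return None
```

Returning `None` rather than forcing every exam into a period reflects that, under these definitions, some mammograms fall outside all three windows, for example those read between consent and CME completion in the Intervention Group.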

We compared recall rates across three pairs of radiologist groups: 1) Intervention radiologists who completed the CME versus all Control radiologists, 2) Intervention radiologists who completed the CME versus Control radiologists who completed the CME later, and 3) Control radiologists who completed the CME versus Control radiologists who did not. A series of logistic regression models of the probability that a mammogram received a positive result included an indicator for one of these three group comparisons and for time period (baseline, T1, T2). Group-by-time-period interactions were also included to determine whether the association between time period and the probability of a positive result differed across study groups. Models included a random effect for radiologist to account for correlation among the multiple mammograms read by each radiologist.

The series of increasingly adjusted models was as follows: 1) only a radiologist random effect; 2) further adjustment for mammography registry; 3) addition of characteristics of the women at the time of the mammogram (age group, use of hormone replacement therapy [HRT], time since last mammogram, history of a breast procedure); and 4) addition of radiologist characteristics (academic affiliation, years of interpretation, percent of time in breast imaging, percent of mammograms that are screening). Radiologists missing any radiologist characteristic were excluded from the last model.
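A minimal way to write the models described above, using our own notation rather than the study's: let $Y_{ij}$ indicate a positive result for mammogram $j$ read by radiologist $i$; $G_i = 1$ for the first-named group in a comparison (e.g., Intervention radiologists who completed the CME) and $0$ otherwise; $T1_{ij}$ and $T2_{ij}$ indicate the two follow-up periods, with baseline as reference; $\mathbf{x}_{ij}$ collects the adjustment covariates of a given model (empty in the first model); and $u_i$ is a radiologist random intercept, assumed here to be normally distributed.

$$
\operatorname{logit} \Pr(Y_{ij}=1 \mid u_i) = \beta_0 + \beta_1 G_i + \beta_2 T1_{ij} + \beta_3 T2_{ij} + \beta_4 G_i T1_{ij} + \beta_5 G_i T2_{ij} + \boldsymbol{\gamma}^\top \mathbf{x}_{ij} + u_i, \qquad u_i \sim N(0, \sigma_u^2)
$$

Under this coding, the odds ratio for T1 versus baseline is $e^{\beta_2+\beta_4}$ when $G_i=1$ and $e^{\beta_2}$ when $G_i=0$, so the ratio of the two odds ratios reduces to the interaction term:

$$
\frac{e^{\beta_2+\beta_4}}{e^{\beta_2}} = e^{\beta_4}, \qquad \text{and analogously } \frac{e^{\beta_3+\beta_5}}{e^{\beta_3}} = e^{\beta_5} \text{ at T2.}
$$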

Results from the logistic regression models were used to compute relative differences between the two groups in each comparison. For each group (e.g., Intervention radiologists who completed the CME, and Control radiologists), we computed the odds ratio comparing the odds of a positive result at T1 with those at baseline. The ratio of these two odds ratios was taken as the relative difference between the two groups at T1, controlling for the probability of a positive result at baseline. Relative differences at T2 were computed similarly. All analyses were performed using SAS (23).
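To make the ratio-of-odds-ratios arithmetic concrete, the sketch below computes its crude (unadjusted) analogue from hypothetical counts of positive results per period. It is an illustration only: the counts are invented, not study data, and because it ignores the radiologist random effect and covariate adjustment it will not reproduce the model-based estimates in Table 2.

```python
from typing import Dict, Tuple

# Counts per study period: (number of positive results, number of screening mammograms).
PeriodCounts = Dict[str, Tuple[int, int]]

def odds(positives: int, total: int) -> float:
    """Odds of a positive result: positives / negatives."""
    negatives = total - positives
    if positives <= 0 or negatives <= 0:
        raise ValueError("need at least one positive and one negative result")
    return positives / negatives

def period_odds_ratio(counts: PeriodCounts, period: str) -> float:
    """Crude odds ratio of a positive result in `period` versus baseline."""
    return odds(*counts[period]) / odds(*counts["baseline"])

def ratio_of_odds_ratios(group_a: PeriodCounts, group_b: PeriodCounts, period: str) -> float:
    """Relative difference between two groups at `period`, controlling for baseline.

    Values above 1 mean group A's odds of a positive result rose more
    (or fell less) from baseline than group B's.
    """
    return period_odds_ratio(group_a, period) / period_odds_ratio(group_b, period)

if __name__ == "__main__":
    # Hypothetical counts for illustration only; these are NOT study data.
    intervention = {"baseline": (100, 1000), "T1": (110, 1000)}
    control = {"baseline": (50, 500), "T1": (50, 500)}
    print(round(ratio_of_odds_ratios(intervention, control, "T1"), 3))  # 1.112
```

Here the control group's recall is unchanged (odds ratio 1), so the ratio equals the intervention group's own T1-versus-baseline odds ratio, (110/890)/(100/900) ≈ 1.112. A ratio above 1 corresponds to relatively more recall in group A, which is the direction of the estimates reported in Table 2.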

Results

The majority of radiologists completing the intervention were not affiliated with an academic medical center, had not completed fellowship training, had been interpreting mammography for at least 10 years, and spent <40% of their time in breast imaging (Table 1). There were no statistically significant differences in radiologist characteristics by study group assignment, either among those who consented and completed the intervention or among those who consented but did not complete it. Mean recall rates at baseline were 11.0% (Intervention Group) and 9.6% (Control Group) among radiologists who did not complete the intervention, and 11.2% (Intervention Group) and 8.7% (Control Group) among those who completed it (Table 1). There were also no significant differences in continuing medical education preferences, such as instructor-led versus self-directed interactive activities, willingness to take CME courses on the Internet, and interest in free Category 1 CME on using audit reports to improve mammography interpretation (data not shown).

Table 1.

Radiologist Characteristics for 54 Radiologists According to Assigned Study Group

Values are percentages unless otherwise noted.

| Characteristic | Did not complete CME: Intervention Group (n=10) | Did not complete CME: Control or Late Intervention Group (n=12) | p value | Completed CME: Intervention Group (n=23) | Completed CME: Control or Late Intervention Group (n=9) | p value |
|---|---|---|---|---|---|---|
| Demographics | | | | | | |
| Sex | | | | | | |
| Male | 70.0 | 75.0 | 0.79 | 52.2 | 44.4 | 0.69 |
| Female | 30.0 | 25.0 | | 47.8 | 55.6 | |
| Practice Type | | | | | | |
| Primary affiliation with academic medical center | | | | | | |
| No | 70.0 | 83.3 | 0.71 | 87.0 | 77.8 | 0.22 |
| Adjunct | 10.0 | 8.3 | | 8.7 | 0.0 | |
| Primary | 20.0 | 8.3 | | 4.3 | 22.2 | |
| Breast Imaging Experience | | | | | | |
| Fellowship training | | | | | | |
| No | 80.0 | 100.0 | 0.10 | 95.7 | 100.0 | 0.53 |
| Yes | 20.0 | 0.0 | | 4.3 | 0.0 | |
| Years interpreting mammography | | | | | | |
| <10 | 30.0 | 8.3 | 0.34 | 13.0 | 44.4 | 0.15 |
| 10-19 | 40.0 | 66.7 | | 43.5 | 33.3 | |
| ≥20 | 30.0 | 25.0 | | 43.5 | 22.2 | |
| % of time spent in breast imaging | | | | | | |
| <20% | 33.3 | 10.0 | 0.23 | 26.1 | 11.1 | 0.13 |
| 20-39% | 22.2 | 30.0 | | 39.1 | 22.2 | |
| 40-79% | 0.0 | 30.0 | | 26.1 | 22.2 | |
| 80-100% | 44.4 | 30.0 | | 8.7 | 44.4 | |
| Missing, n | 1 | 2 | | | | |
| % of mammograms that are screening | | | | | | |
| <85% | 55.6 | 66.7 | 0.60 | 59.1 | 44.4 | 0.46 |
| 85-100% | 44.4 | 33.3 | | 40.9 | 55.6 | |
| Missing, n | 1 | 1 | | | | |
| Performance Outcome at Baseline | | | | | | |
| Mean recall rate, % (SE) | 11.0 (1.7) | 9.6 (1.2) | 0.49 | 11.2 (0.9) | 8.7 (1.5) | 0.11 |

Among radiologists who consented to and completed the intervention, unadjusted recall rates across baseline, T1, and T2 ranged from 10.4% to 11.2% in the Intervention Group and from 8.7% to 9.2% in the Control Group (Figure 1), with no statistically significant differences between the groups. Among radiologists who consented to but did not complete the intervention, recall rates over the same periods ranged from 9.4% to 11.0% in the Intervention Group and from 9.0% to 9.7% in the Control Group (Figure 2), again with no statistically significant differences between the groups.

Forty-one radiologists were included in the final adjusted logistic regression model comparing radiologists in the Intervention Group who completed the intervention (n=22) with all radiologists in the Control Group (n=19) (Table 2). At T1, radiologists in the Intervention Group who completed the CME had 11% higher odds of a positive mammogram than radiologists in the Control Group after controlling for baseline (OR=1.11, 95% CI=1.00-1.23, p=0.04). A similar association was found at T2 (OR=1.09, 95% CI=0.98-1.21), but it was not statistically significant. Thirty-one radiologists who completed the CME were included in the adjusted model comparing radiologists in the Intervention Group (n=22) with radiologists in the Control Group who completed the intervention (n=9). At T1, these early (Intervention Group) radiologists had 12% higher odds of a positive mammogram than the late (Control Group) radiologists after controlling for baseline (OR=1.12, 95% CI=1.00-1.27, p=0.0569). At T2, a similar association was found, but it was not statistically significant (OR=1.10, 95% CI=0.96-1.25). The difference between these results and the unadjusted rates shown in Figure 1 likely reflects the radiologist random effect included in the models.

Table 2.

Modeling the Probability of Being Recalled Among Study Groups

| | Model 1: Intervention vs. Controls | Model 2: Intervention Early vs. Intervention Late (Controls) | Model 3: Controls Who Did Intervention Late vs. Those Who Did Not |
|---|---|---|---|
| | Ratio of ORs (95% CI) | Ratio of ORs (95% CI) | Ratio of ORs (95% CI) |
| Adjusting for radiologist random effect | (n=44) | (n=32) | (n=21) |
| Relative change from baseline to time point 1 | 1.11 (1.01-1.23) | 1.13 (1.01-1.27) | 0.97 (0.83-1.12) |
| Relative change from baseline to time point 2 | 1.08 (0.98-1.19) | 1.12 (0.99-1.27) | 0.93 (0.79-1.09) |
| Adjusting for mammography registry (a) | (n=44) | (n=32) | (n=21) |
| Relative change from baseline to time point 1 | 1.11 (1.01-1.23) | 1.13 (1.01-1.27) | 0.97 (0.83-1.12) |
| Relative change from baseline to time point 2 | 1.08 (0.98-1.19) | 1.12 (0.99-1.27) | 0.93 (0.79-1.09) |
| Adjusting for mammogram-level variables (b) | (n=44) | (n=32) | (n=21) |
| Relative change from baseline to time point 1 | 1.12 (1.02-1.23) | 1.14 (1.01-1.28) | 0.97 (0.83-1.13) |
| Relative change from baseline to time point 2 | 1.08 (0.98-1.20) | 1.11 (0.98-1.26) | 0.94 (0.80-1.11) |
| Adjusting for mammogram-level variables (b) and radiologist-level variables (c) | (n=41) | (n=31) | (n=19) |
| Relative change from baseline to time point 1 | 1.11 (1.00-1.23) | 1.12 (1.00-1.27) | 0.97 (0.83-1.14) |
| Relative change from baseline to time point 2 | 1.09 (0.98-1.21) | 1.10 (0.96-1.25) | 0.98 (0.83-1.16) |

Baseline: 9 months prior to consenting.

Time point 1: No CME, 0-9 months after consent; CME/Early, 0-9 months after completion; CME/Late, 0-9 months after consent.

Time point 2: No CME, 9-18 months after consent; CME/Early, 9-18 months after completion; CME/Late, 0-9 months after completion.

(a) Adjusting for mammography registry and radiologist random effect.

(b) Mammogram-level variables: mammography registry, age group at time of mammogram, use of HRT, time since last mammogram, history of prior breast procedure; also adjusting for radiologist random effect.

(c) Radiologist-level variables: academic affiliation, years of interpretation, percent of time in breast imaging, percent of mammograms that are screening; also adjusting for radiologist random effect. Models exclude radiologists missing the radiologist survey.

Within the Control Group, no associations were found when comparing late radiologists who completed the CME (n=9) with those who did not (n=10) (Table 2). In addition, we found no association between time period and recall among radiologists who set realistic goals (data not shown).

Discussion

Our study intervention, which was designed to reduce excessive recall, did not have a detectable effect. Recall rates decreased slightly among radiologists in the Intervention Group who completed the intervention and increased slightly in the Control Group, but these differences were not statistically significant. Baseline recall rates also differed between the two groups: radiologists in the Intervention Group had recall rates about 2.5 percentage points higher than those in the Control Group at baseline (11.2% vs. 8.7%). Adjustment for registry, patient, and radiologist characteristics did not change our findings substantially. Although the majority of radiologists in our study had recall rates higher than the 9.7% median benchmark for screening mammography (22), the intervention did not reduce recall in actual clinical practice, even among radiologists who developed realistic goals to do so. Our findings suggest that radiologists who developed goals either did not implement them or, if they did, that the goals did not significantly reduce recall.

Although the Mammography Quality Standards Act (MQSA) requires all physicians who interpret mammograms to teach or complete at least 15 CME hours in mammography every 3 years, there is little evidence that CME-type interventions improve care (24). Several studies indicate that once physicians' practice patterns are established, they are difficult to change (25-27). Numerous reviews have summarized efforts to change practice patterns and have described six general strategies: education, feedback, participation, administrative rules, incentives, and penalties (28). Although each of these strategies has succeeded in isolation in some settings, changing physician behavior, given its complexity and susceptibility to multiple influences, will most likely require a combination of approaches (28).

Linver et al. (13) found that attendance at a 3-4 day instructor-led educational program in breast imaging improved the cancer detection rate by 40%, although only 12 radiologists were included and, for most of them, this was the first such program they had ever attended. Today, all radiologists who interpret mammograms must earn 15 hours of CME credit every 3 years. In another study, by Berg et al. (14), a one-day CME course focused on BI-RADS improved cancer detection by about 25% among 23 radiologists, as measured by a test set administered immediately before and after the intervention. Although we sought to maximize efficiency and minimize the time required of busy practicing clinicians, our one-hour CME intervention may not have been long enough to have an impact, compared with these interventions, which required 8 to 32 hours of attendance.

The UK National Health Service Breast Screening Program (NHSBSP) sets very specific national quality assurance standards for mammogram interpretation and regularly monitors adherence through a quality assurance network (29). This network includes regional quality assurance coordinators who meet regularly with radiologists to review the performance and outcomes of mammography screening, share good practice, and encourage continued improvement. Radiologists are required to rotate through screening and diagnostic clinics and to participate in all activities of the breast care team, including multidisciplinary meetings (30). A supportive environment for this is crucial (11). However, because all of these quality improvement approaches are provided to all NHSBSP radiologists, it is difficult to determine how much each component contributes to improved performance. Our study focused specifically on providing peer comparison data and increasing knowledge about screening performance benchmarks and about factors that appear to increase recall unnecessarily, combined with a strategy for developing goals to change clinical practice. We did not demonstrate any reduction in recall as a result of our intervention.

In the U.S., one study by Adcock (12) of radiologist performance, using data from Kaiser Permanente Colorado and the Colorado Tumor Registry, compared radiologists' clinical practice outcome measures with published benchmarks, and radiologists received feedback on their results. Performance gaps were analyzed and targeted with specific interventions, such as obtaining second opinions from another radiologist until performance improved (12). In that study, a statistically significant increase in cancer detection, including detection at an early stage, occurred within a few years without an increase in the number of recalls (12). In contrast, our study suggests that peer comparison combined with goal setting is not enough to change radiologists' practice. A more formal process of ongoing assessment with stronger reinforcement may be needed until the behavior change becomes established. We tested a single intervention delivered at one point in time, and it did not produce any change in recall rates.

The strengths of our study include an intervention based on adult learning theory, tested with a randomized design, that relayed actual clinical performance data to help radiologists assess their current interpretive practices, establish goals likely to reduce excessive recall, and recalibrate their recall thresholds by illustrating that cancer incidence is low in their own patient populations. We also succeeded in recruiting 54 radiologists, 33.3% of whom had screening mammography recall rates above 12% at baseline. Weaknesses include our small sample size, which limited our statistical power to detect meaningful differences in recall. Although 54 radiologists consented to enroll and provided data used in this analysis, only 32 (59.3%) of them completed the intervention. Clearly, more work is needed to understand how best to influence radiologists' practice. While several studies have focused on recruiting and retaining patients in clinical studies, studies on physician retention are limited (31), and we found no studies on retaining radiologists in studies of interpretive performance.

In conclusion, we developed and implemented an innovative, web-based educational program that takes advantage of computerized registry data collected on community radiologists to provide them with individualized audit feedback. Our study found a null effect, which may indicate that a single intervention is not adequate to reduce excessive recall among radiologists who undertake it. More complex approaches are likely needed to change radiologists' practice patterns.

Acknowledgements

This work was supported by the National Cancer Institute [1R01 CA107623, 1K05 CA104699; Breast Cancer Surveillance Consortium (BCSC): U01CA63740, U01CA86076, U01CA86082, U01CA63736, U01CA70013, U01CA69976, U01CA63731, U01CA70040], the Agency for Healthcare Research and Quality (1R01 CA107623), the Breast Cancer Stamp Fund, and the American Cancer Society, made possible by a generous donation from the Longaberger Company’s Horizon of Hope Campaign (SIRGS-07-271-01, SIRGS-07-272-01, SIRGS-07-273-01, SIRGS-07-274-01, SIRGS-07-275-01, SIRGS-06-281-01).

The collection of cancer data used in this study was supported in part by several state public health departments and cancer registries throughout the United States. For a full description of these sources, please see: http://breastscreening.cancer.gov/work/acknowledgement.html. The authors had full responsibility in the design of the study, the collection of the data, the analysis and interpretation of the data, the decision to submit the manuscript for publication, and the writing of the manuscript. We thank the participating women, mammography facilities, and radiologists for the data they have provided for this study. A list of the BCSC investigators and procedures for requesting BCSC data for research purposes are provided at: http://breastscreening.cancer.gov/.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1.Smith-Bindman R, Chu PW, Miglioretti DL, Sickles EA, Blanks R, Ballard-Barbash R, Bobo JK, Lee NC, Wallis M, Patnick J, Kerlikowske K. Comparison of screening mammography in the United States and the United kingdom. JAMA. 2003;290(16):2129–2137. doi: 10.1001/jama.290.16.2129. [DOI] [PubMed] [Google Scholar]

- 2.Hofvind S, Vacek PM, Skelly J, Weaver D, Geller BM. Comparing screening mammography for early breast cancer detection in Vermont and Norway. J Natl Cancer Inst. 2008 Aug 6;100(15):1082–91. doi: 10.1093/jnci/djn224. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Elmore JG, Wells CK, Lee CH, Howard DH, Feinstein AR. Variability in radiologists’ interpretations of mammograms. N Engl J Med. 1994;331:1493–9. doi: 10.1056/NEJM199412013312206. [DOI] [PubMed] [Google Scholar]

- 4.Beam CA, Layde PM, Sullivan DC. Variability in the Interpretation of screening mammograms by US radiologists. Arch Intern Med. 1996;156:209–213. [PubMed] [Google Scholar]

- 5.Elmore JG, Miglioretti DL, Reisch LM, et al. Screening mammograms by community radiologists: variability in false-positive rates. J Natl Cancer Inst. 2002;94:1373–80. doi: 10.1093/jnci/94.18.1373. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Smith-Bindman R, Chu P, Miglioretti DL, et al. Physician predictors of mammographic accuracy. J Natl Cancer Inst. 2005;97:358–67. doi: 10.1093/jnci/dji060. [DOI] [PubMed] [Google Scholar]

- 7.Woloshin S, Schwartz LM. The benefits and harms of mammography screening: understanding the trade-offs. JAMA. 2010 Jan 13;303(2):164–5. doi: 10.1001/jama.2009.2007. [DOI] [PubMed] [Google Scholar]

- 8.Jørgensen KJ, Klahn A, Gøtzsche PC. Are benefits and harms in mammography screening given equal attention in scientific articles? A cross-sectional study. BMC Medicine. 2007;5:12. doi: 10.1186/1741-7015-5-12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Agency for Healthcare Research and Quality . Evidence Syntheses. No. 74. Agency for Healthcare Research and Quality (US); Rockville (MD): Nov, 2009. U.S. Preventive Services Task Force, Screening for Breast Cancer Systematic Evidence Review Update for the US Preventive Services Task Force. Report No.: 10-05142-EF-1. [PubMed] [Google Scholar]

- 10. Carney PA, Sickles E, Monsees B, Bassett L, Brenner J, Rosenberg R, Feig S, Browning S, Tran K, Berry J, Kelly M, Miglioretti DL. Identifying Minimally Acceptable Interpretive Performance Criteria for Screening Mammography. Radiology. 2010;255(2):354–61. doi: 10.1148/radiol.10091636.

- 11. Perry NM. Breast cancer screening--the European experience. International Journal of Fertility & Women’s Medicine. 2004 Sep-Oct;49(5):228–30.

- 12. Adcock KA. Initiative to Improve Mammogram Interpretation. The Permanente Journal. 2004 Spring;8(2). doi: 10.7812/tpp/04.969.

- 13. Linver MN, Paster S, Rosenberg RD, Key CR, Stidley CA, King WV. Improvements in mammography interpretation skills in a community radiology practice after dedicated courses: 2-year medical audit of 38,633 cases. Radiology. 1992;184(1):39–43. doi: 10.1148/radiology.184.1.1609100.

- 14. Berg WA, D’Orsi CJ, Jackson VP, et al. Does training in breast imaging reporting and data system (BI-RADS) improve biopsy recommendations or feature analysis agreement with experienced breast imagers at mammography? Radiology. 2002;224:871–880. doi: 10.1148/radiol.2243011626.

- 15. American College of Radiology. Breast Imaging Reporting and Data System (BI-RADS). Copyright 2004.

- 16. Carney PA, Geller BM, Moffett H, Ganger M, Sewell M, Barlow WE, Taplin SH, Sisk C, Ernster VL, Wilke HA, Yankaskas B, Poplack SP, Urban N, West MM, Rosenberg RD, Michael S, Mercurio TD, Ballard-Barbash R. Current Medico-legal and Confidentiality Issues in Large Multi-center Research Programs. American Journal of Epidemiology. 2000;152(4):371–378. doi: 10.1093/aje/152.4.371.

- 17. Carney PA, Geller BM, Sickles EA, Miglioretti DL, Aiello Bowles E, Abraham L, Feig SA, Brown D, Cook A, Yankaskas BC, Elmore JG. Feasibility and Satisfaction Associated with Using a Tailored Web-based Intervention for Recalibrating Radiologists’ Thresholds for Conducting Additional Work-up. Academic Radiology. 2011;18(3):369–376. doi: 10.1016/j.acra.2010.10.011.

- 18. Carney PA, Aiello Bowles E, Sickles EA, Geller BM, Feig SA, Jackson S, Brown D, Cook A, Yankaskas B, Miglioretti DL, Elmore JG. Using a Tailored Web-based Intervention to Set Goals to Reduce Unnecessary Recall. Academic Radiology. 2011;18(4):495–503. doi: 10.1016/j.acra.2010.11.017.

- 19. Elmore JG, Jackson SL, Abraham L, Miglioretti DL, Carney PA, Geller BM, Yankaskas BC, Kerlikowske K, Onega T, Rosenberg RD, Sickles EA, Buist DSM. Variability in Interpretive Performance of Screening Mammography and Radiologist Characteristics Associated with Accuracy. Radiology. 2009;253:641–651. doi: 10.1148/radiol.2533082308.

- 20. Elmore JG, Taplin S, Barlow WE, Cutter GR, D’Orsi CJ, Hendrick RE, Abraham LA, Fosse JS, Carney PA. Does Litigation Influence Medical Practice? The Influence of Community Radiologists’ Medical Malpractice Perceptions and Experience on Screening Mammography. Radiology. 2005;236:37–46. doi: 10.1148/radiol.2361040512.

- 21. Egger JR, Cutter GR, Carney PA, Taplin SH, Barlow WE, Hendrick RE, D’Orsi CJ, Fosse JS, Abraham L, Elmore JG. Mammographers’ Perception of Women’s Breast Cancer Risk. Medical Decision Making. 2005;25:283–289. doi: 10.1177/0272989X05276857.

- 22. Rosenberg RD, Yankaskas BC, Abraham L, Sickles EA, Lehman C, Geller BM, Carney PA, Kerlikowske K, Buist DSM, Weaver DL, Barlow WE, Ballard-Barbash R. Performance Benchmarks for Screening Mammography. Radiology. 2006;241(1):55–66. doi: 10.1148/radiol.2411051504.

- 23. SAS version 9.2. Cary, NC: SAS Institute.

- 24. Davis DA, Thomson MA, Oxman AD, Haynes B. Evidence for the Effectiveness of CME: A Review of 50 Randomized Controlled Trials. JAMA. 1992;268(9):1111–1117.

- 25. Flocke SA, Litaker D. Physician Practice Patterns and Variation in the Delivery of Preventive Services. J Gen Intern Med. 2007;22(2):191–196. doi: 10.1007/s11606-006-0042-y.

- 26. Greco PJ, Eisenberg JM. Changing physicians’ practices. N Engl J Med. 1993;329(17):1271–3. doi: 10.1056/NEJM199310213291714.

- 27. Smith WR. Evidence for the Effectiveness of Techniques To Change Physician Behavior. Chest. 2000;118:8S–17S. doi: 10.1378/chest.118.2_suppl.8s.

- 28. Eisenberg JM. Physician Utilization: The State of Research about Physicians’ Practice Patterns: HSR 84: Planning for the Third Decade of Health Services Research. Medical Care. 1985;23(5):461–483.

- 29. UK National Health Service Breast Screening Programme. http://www.cancerscreening.nhs.uk/breastscreen/index.html. Accessed 2011.

- 30. National Radiographers Quality Assurance Coordinating Group. NHSBSP 63: Quality assurance guidelines for mammography: including radiographic quality control. 2000. http://www.cancerscreening.nhs.uk/breastscreen/publications/nhsbsp63.html. Accessed 2011.

- 31. Center for Information and Study on Clinical Research Participation. http://www.ciscrp.org/professional/facts_pat.html. Accessed April 25, 2012.