Abstract

Purpose/Objectives

To develop and assess the reliability and validity of an observational instrument, the Process and Quality of Informed Consent (P-QIC).

Design

A pilot study of the psychometrics of a tool designed to measure the quality and process of the informed consent encounter in clinical research. The study used professionally filmed, simulated consent encounters designed to vary in process and quality.

Setting

A major urban teaching hospital in the northeastern region of the United States.

Sample

63 students enrolled in health-related programs participated in psychometric testing, 16 students participated in test-retest reliability, and 5 investigator-participant dyads were observed for the actual consent encounters.

Methods

For reliability and validity testing, students watched videotaped simulations of four consent encounters intentionally varied in process and content and rated them with the proposed instrument. Test-retest reliability was established by raters watching the videotaped simulations twice. Inter-rater reliability was demonstrated by two simultaneous but independent raters observing an actual consent encounter.

Main Research Variables

The essential elements of information and communication for informed consent.

Findings

The initial testing of the P-QIC demonstrated reliable and valid psychometric properties in both the simulated standardized consent encounters and actual consent encounters in the hospital setting.

Conclusions

The P-QIC is an easy-to-use observational tool that provides a quick assessment of the areas of strength and areas that need improvement in a consent encounter. It can be used in the initial trainings of new investigators or consent administrators and in ongoing programs of improvement for informed consent.

Implications for Nursing

The development of a validated observational instrument will allow investigators to assess the consent process more accurately and evaluate strategies designed to improve it.

More than 107,800 registered clinical trials involving human participants currently are taking place in 174 countries (National Institutes of Health, 2011), representing a small portion of ongoing clinical research worldwide. Healthcare providers rely on clinical research to advance treatments, decrease the incidence of recurrence, and inform strategies for primary prevention and early detection, particularly in cancer care. The Clinical Trials Cooperative Group Program, sponsored by the National Cancer Institute (NCI), registers more than 25,000 clinical research participants each year from more than 3,100 institutions and more than 14,000 individual investigators in the United States, Canada, and Europe (NCI, 2009).

For most protocols, participants sign a written consent form to provide evidence that they have read about and received an explanation of the research. However, data continue to demonstrate that participants are not able to recall essential information about the studies in which they have agreed to participate (Brown, Butow, Butt, Moore, & Tattersall, 2004; Santen, Rotter, & Hemphill, 2008). Despite increased government regulation (Shalala, 2000), attention in the media (Foderaro, 2009), and oversight by institutional review boards, little indication exists that participant comprehension has improved (Stepan et al., 2011).

Although written consent generally is highly standardized and structured (Grossman, Piantadosi, & Cohavey, 1994; National Patient Safety Agency, 2009), less is known about the content and quality of the verbal interaction during the consent process (Brown, Butow, Butt, et al., 2004). Tools to measure informed consent focus primarily on postconsent recall (Dresden & Levitt, 2001; Ferguson, 2002; Guarino, Lamping, Elbourne, Carpenter, & Peduzzi, 2006; Joffe, Cook, Cleary, Clark, & Weeks, 2001; Lavori, Wilt, & Sugarman, 2007; Miller, O’Donnell, Searight, & Barbarash, 1996). Lindegger et al. (2006) developed and compared four alternative methods for assessing a study participant’s understanding of informed consent: self-report, forced-choice checklist, vignettes, and narratives. Their study suggested that the levels of measured understanding are dependent on the methods of assessment used and that closed-ended measures such as self-reports and checklists may overestimate understanding compared to more open-ended measures. The authors concluded that although the open-ended assessments are more resource intensive, they may provide more accurate measures of what participants actually understand (p < 0.0005) (Lindegger et al., 2006).

In a small number of published studies, consent interactions have been tape-recorded (Brown, Butow, Ellis, Boyle, & Tattersall, 2004; Jenkins, Fallowfield, Souhami, & Sawtell, 1999; Tomamichel et al., 1995). Tomamichel et al. (1995) analyzed audiotapes using Meerwein’s model and found them to be useful for identifying pitfalls in communication. The authors concluded that greater attention should be paid to the indirect and implied messages that may affect participants’ decision making when considering clinical research (Tomamichel et al., 1995). In the study, the authors recommended that investigators become more skillful in providing adequate information and improve their methods of communication. Brown, Butow, Ellis, et al. (2004) concluded an observational tool was necessary to adequately assess nonverbal and indirect acts that were not captured on the audiotape. Albrecht, Blanchard, Ruckdeschel, Coovert, and Strongbow (1999) analyzed videotaped consent encounters, and Ness, Kiesling, and Lidz (2009) performed discourse analysis on videotaped consent interactions. Investigators in both studies documented a number of issues, including inadequate information being conveyed, failure to confirm that potential participants understood the research, therapeutic misconception, and ambiguity about voluntary consent. Albrecht et al. (1999) and Ness et al. (2009) identified the need for a quantitative, standardized observational tool so that investigators and researchers could identify areas of strength and weakness within the consent encounter. Lavori et al. (2007) concluded that observation of the actual informed consent process is feasible and ideal because it allows better understanding of the context of the encounter.

Direct observation of the consent process offers some distinctive benefits. For example, if in a postrecall questionnaire participants do not remember the purpose of the study, it may be because they forgot, they were not told, they were told in a manner or language that they did not understand, or they received a mixed message from the investigator (Flory & Emanuel, 2004). Direct observation also could account for other causes, such as therapeutic misconception, the possibility that patients are interpreting the study as being of personal therapeutic benefit when it actually is designed to advance scientific knowledge in general (Henderson et al., 2006).

The authors conducted a critical review of the literature (Cohn & Larson, 2007) in which 25 interventional studies designed to improve informed consent were examined to evaluate the number of participants, outcome measures (e.g., tools used to measure the quality of the consent process), and other factors. Eighteen of the 25 studies (72%) used the required written elements of informed consent as an outcome (Council for International Organizations of Medical Sciences [CIOMS], 2002; U.S. Department of Health and Human Services, 2005), and 7 (28%) measured a variety of elements of the communication process; however, none assessed both. The authors concluded that inconsistencies existed in the definitions and ways of measuring the informed consent process and, therefore, intervention results could not be compared across studies. Results also indicated that a successful consent process must include, at a minimum, the use of various communication modes (e.g., written, verbal, asking the participant to repeat what he or she understands), and is likely to require one-on-one interaction with someone knowledgeable about the study, such as a consent educator. Based on these findings, the authors developed a model in which informed consent is positioned at the intersection of accurate factual information and effective communication (see Figure 1). The authors then sought to define the essential elements of information and communication.

Figure 1.

A Model of the Informed Consent Encounter

Information in Informed Consent

The essential informational elements of informed consent were derived from two source documents. The first was a consensus document that detailed a series of meetings of the CIOMS (2002) and Family Health International (Rivera & Borasky, 2009), an organization that conducts biomedical and social research worldwide. Critical informational elements of informed consent identified in the report included a statement that the study involved research, a description of the research, the expected duration of participation, the risks or discomforts, the benefits, the alternatives to the study, voluntary participation, compensation, confidentiality, and contacts for the investigator. The second source document was U.S. Department of Health and Human Services (2005) Federal Regulation Title 45, which identified the same informational elements as CIOMS as well as contact information for institutional review boards. Both documents recommended that consent forms be written below the high school reading level, aiming for the sixth- to eighth-grade level instead. In addition, both recommended that written consents not contain long sentences, words with more than three syllables, or medical terminology.

Communication in Informed Consent

Characterizing the essential elements of the communication process in informed consent posed a greater challenge. The authors used three source documents: The Essential Elements of Communication in Medical Encounters: The Kalamazoo Consensus Statement (Makoul, 2001); the Conversation Model of Informed Consent (Katz, 2002); and a Delphi consensus-building document that discussed the essential elements of comprehension in informed consent (Buccini, Caputi, Iverson, & Jones, 2009). Several published reports cite the strength of the conversation model (Delany, 2008) and components of the Kalamazoo Consensus Statement (Brown, Butow, Butt, et al., 2004; Ness et al., 2009) for use in clinical research because they stress equality and dialogue in the participant-researcher exchange. The authors incorporated specific actions for enhancing this process and developed them as the essential elements of communication, such as checking for understanding through the use of a playback (i.e., the participants repeat the details of the study in their own words), using easy-to-understand language, and avoiding medical jargon.

The authors found no tool in the literature that could measure an observed informed consent encounter quantitatively, so they sought to develop a new instrument, integrating the essential elements of factual information for informed consent with essential elements of communication to assess the consent process as a whole. The Process and Quality of Informed Consent (P-QIC) was designed to evaluate informed consent for research in the clinical setting and interventions designed to improve it. In addition, the tool can be used to educate researchers and others who obtain informed consent.

The aim of the current study was to develop and assess the reliability and validity of the P-QIC.

Methods

Instrument Development and Testing

The initial version of the tool was adapted from an observer checklist developed by the institutional review board at Columbia University Medical Center in New York, NY, for quality-assessment purposes. The checklist consisted of 25 items that measured the informational aspects of consent but did not address the process of communication in the interaction. The checklist was reviewed for inclusiveness and validity by four experts in the institutional review board consent process and by five clinicians, including three physicians, a public health researcher, and a bioethicist, all of whom had expertise in communication as well as experience in obtaining consent. The checklist then was pilot tested by 13 additional participants. Pre- and post-testing established that the checklist accurately reflected information important to the consent process (content and construct validity). After slight modifications, the inter-rater reliability of the checklist was assessed by eight research assistants who had not been part of the development of the tool, but had extensive experience in obtaining informed consent. With regression analysis, intraclass correlations were calculated as a measure of the inter-rater agreement and found to be 0.89. After the initial work was completed at Columbia University, the checklist was sent to the Morehouse School of Medicine in Atlanta, GA, where it was reviewed by five individuals, including a principal investigator, two experienced research coordinators, an inexperienced research assistant, and a research participant, to ensure that racial and cultural considerations were taken into account. That review resulted in shortening and rewording questions and adding a Likert-type scale. The authors undertook a second iteration of the observational tool, adding indicators to measure the essential elements of the communication process (Katz, 2002; Makoul, 2001).

The new tool, the P-QIC, then was modified to reduce the number of items to 20 and combine similar indicators. The instrument was reviewed again by seven institutional review board members and six consent administrators from two large health systems, resulting in additional minor modifications. The final P-QIC has 20 items—14 mandated informational elements and 6 elements associated with increased communication skills (e.g., playback).

Approval from the Columbia University Medical Center institutional review board was obtained prior to the start of the study. The authors then used two methods to assess the psychometric properties of P-QIC: The tool was used to rate four video simulations that depicted scripted but realistic consent interactions and to observe actual consent interactions in the clinical setting.

Sample

The current study included three types of participants: graduate students of the health sciences schools (e.g., dentistry, medicine, nursing, public health), consent administrators, and study participants from Columbia University Medical Center. Those eligible to participate were graduate students, enrolled in a course for which knowledge of informed consent was a stated curriculum objective, who had completed the basic human participant research training modules required for conducting research at Columbia University Medical Center. The rationale for using these students was that they had a similar profile and background to research assistants, consent administrators, and study coordinators in the study institution and most academic hospitals. In the live consent observations, those eligible to participate were study coordinators from Columbia University Medical Center and patients who were being consented for participation in clinical research.

Development of the Standardized Simulations

With approval from the institutional review board and the principal investigator, a consent form from a National Institutes of Health–funded study was used as a template for the scenarios. Two sets of professionally filmed simulations were developed; within each set, every simulation depicted a different consent encounter designed to vary from the others in process and quality. The intentional variation was accomplished by including and excluding essential elements of information and communication. The simulation scripts and scenarios were reviewed for content validity by nine consent administrators and members of the institutional review board from two large academic health systems.

The videos were recorded in mock examination rooms with volunteer actors. In each scenario, the consent administrator approached the potential participant, explained the study, and requested consent for participation. Two sets of four scenarios were produced, each with identical content and script, but in one set the participant was a 72-year-old Jewish man and, in the other, a 44-year-old Latina. Scenarios ranged in length from three to six minutes. The scenarios varied in the following ways.

The information scenario comprehensively incorporated the informational elements (e.g., purpose of the study, risks, benefits of participation), but intentionally lacked communication skills.

The communication scenario incorporated the important aspects of communication (e.g., stopped, answered questions, used a playback method for checking understanding), but included only minimal information about the research.

The combination scenario included the majority of informational elements and used principles of communication.

The null scenario included only minimal information and did not demonstrate adequate communication processes.

To minimize response set bias, the authors designed all scenarios to include and exclude at least one essential element of informed consent, so that even the best scenario would not score 100% and the worst would not have a minimum score. Using videotaped simulations reduced the potential for variation that might have occurred with live, scripted encounters.

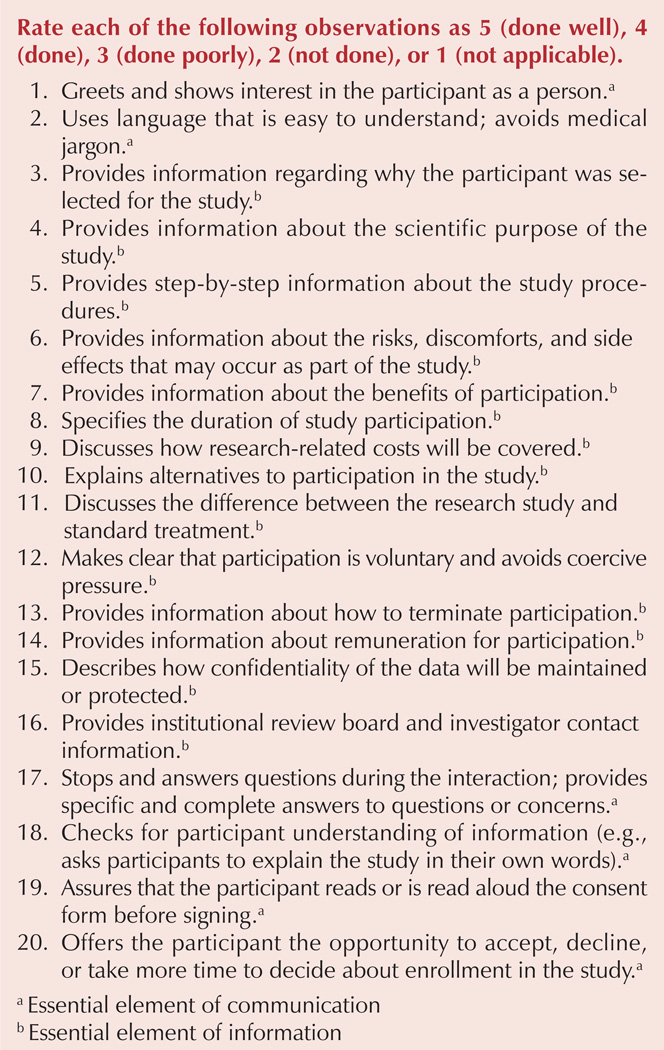

Finalized Instrument

The final P-QIC is a four-point (i.e., done well, done, done poorly, not done), 20-item Likert-type instrument with a total score ranging from 40 to 100, with a higher score indicating higher quality (see Figure 2). Three types of scores can be calculated from the tool: (a) a total score for the entire encounter, (b) an information score, and (c) a communication score. Each score can be converted into a percentage. The P-QIC also includes a not applicable category that, when used, changes the denominator of the calculation, although a percentage still can be calculated accurately. The not applicable category was added because the tool is designed for observational use in clinical trials where some items do not apply to the type of research being conducted. For example, item 9 was rated not applicable in some cases because the study had no research-related costs.
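The percentage calculation with a not applicable category can be sketched as follows. This is a minimal illustration only, not the authors' scoring procedure: the article does not state the numeric value assigned to each response category, so the point values in `POINTS` are an assumption, as are the function names `percent_score` and `subscale`. With this assumed coding, raw totals will not match the instrument's published score range. Items marked not applicable are represented as `None` and are removed from both the numerator and the denominator.

```python
from typing import Dict, Iterable, Optional

# ASSUMPTION: hypothetical point values; the article does not give the coding.
POINTS = {"done well": 3, "done": 2, "done poorly": 1, "not done": 0}
MAX_POINTS = max(POINTS.values())


def percent_score(ratings: Dict[int, Optional[str]]) -> float:
    """Return a percentage score for a mapping of item number -> rating.

    A rating of None means the item was marked not applicable; such items
    are excluded from both the points earned and the points possible.
    """
    applicable = [label for label in ratings.values() if label is not None]
    if not applicable:
        raise ValueError("no applicable items to score")
    earned = sum(POINTS[label] for label in applicable)
    possible = MAX_POINTS * len(applicable)
    return 100.0 * earned / possible


def subscale(ratings: Dict[int, Optional[str]],
             items: Iterable[int]) -> Dict[int, Optional[str]]:
    """Keep only the listed item numbers (e.g., the information items)."""
    return {i: ratings[i] for i in items if i in ratings}
```

For instance, ratings of done well, done, not applicable, and not done on four items give 5 of 9 possible points under the assumed coding, or about 55.6%. The information and communication scores would be obtained the same way after restricting the ratings to the relevant items with `subscale`; which item numbers belong to each domain is defined by the instrument itself (see Figure 2) and is not reproduced here.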

Figure 2.

The Process and Quality of Informed Consent Instrument

Sixty-three graduate health sciences students tested the final version of the P-QIC by using it to rate a set of four videotaped simulations, which were viewed in a random order. To calculate test-retest reliability, 16 of the students rated the simulations a second time after a two-week hiatus.

For final field testing of the P-QIC and inter-rater reliability assessment, the authors simultaneously but independently observed five actual consent encounters for institutional review board–approved clinical trials at the study institution (two involving patients in cardiology, two for a community-based study of home health aides, and one for an HIV vaccine trial). To conduct these observations, consent was obtained from the institutional review board, the principal investigator, the consent administrator, and the patient.

Results

Internal consistency was calculated by Cronbach alpha. One-way analysis of variance was used to test whether mean observer scores differed among the four scenarios (i.e., discriminant validity). Test-retest reliability for each of the simulations was calculated using Pearson correlation coefficients. Finally, agreement in scores for each P-QIC item between the two raters during actual consent interactions was calculated by Cohen’s kappa (Landis & Koch, 1977). The data were analyzed using SPSS®, version 14.0.
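For readers less familiar with two of these statistics, the standard textbook formulas for Cronbach alpha and unweighted Cohen's kappa can be sketched as below. The study's analyses were run in SPSS; this code is an illustration written for this purpose, and the function names and input layout are assumptions rather than anything taken from the study.

```python
from collections import Counter
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach alpha for a list of observations.

    Each row holds one observer's scores on the k items (equal lengths).
    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)
    """
    k = len(rows[0])
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters' category labels."""
    n = len(rater_a)
    # Proportion of items on which the two raters chose the same category.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance from each rater's category frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    return (observed - expected) / (1 - expected)
```

Kappa corrects raw agreement for the agreement two raters would reach by chance, which matters for an instrument with only four response categories.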

The Cronbach alpha was 0.98. The one-way analysis of variance for discriminant validity was F(3, 248) = 528, p < 0.001. For test–retest reliability for the scenarios, the correlation coefficients between time 1 and 2 varied by scenario. Mean scores for each scenario were as follows: null scenario, 28.6 (SD = 5.2); information scenario, 41.1 (SD = 7.2); communication scenario, 63.2 (SD = 7.9); and combination scenario, 70 (SD = 5.7) (see Table 1).
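The degrees of freedom reported for the analysis of variance (3 and 248) are what would be expected from four scenarios each rated by 63 students: 4 − 1 = 3 between groups and 252 − 4 = 248 within groups. A minimal sketch of the one-way F statistic, assuming each scenario's scores are supplied as a separate list, shows where these numbers come from. The function name is hypothetical, and the code does not reproduce the study's data or its F value.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of score lists."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

Passing four lists of 63 scores each returns degrees of freedom of 3 and 248, whatever the scores are. A large F indicates that the differences between scenario means are large relative to the spread of ratings within each scenario.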

Table 1.

Validity and Reliability Testing of the Process and Quality of Informed Consent (P-QIC) Instrument

| Variable | Test Procedure | Result |
|---|---|---|
| Content validity | Informed consent users and expert panel on communications | 20 items were retained in the tool, with corrections as described. |
| Convergent validity | Intraclass correlation | Total for tool = 0.97 |
| Discriminant validity | Analysis of variance | Significant differences in scores between scenarios (F(3, 248) = 528, p < 0.001) |
| Face validity | Expert reviews | P-QIC instrument was determined to accurately reflect the construct. |
| Internal consistency | Cronbach alpha | Cronbach alpha = 0.98 |
| Inter-rater reliability | Cohen’s kappa | κ = 0.98 |
| Test-retest reliability | Pearson correlations for each simulation | Information: r = 0.899; Communication: r = 0.639; Combination: r = 0.998; Null: r = 0.83 |

Discussion

The process of consent is a complex and consequential interaction that requires attention and improvement. A first step in assessing the current process is the development of validated tools for observational measurement. In the current study, an observational instrument, the P-QIC, was developed and psychometrically tested to measure the quality and process of the informed consent encounter in two domains, information and communication. Overall, the P-QIC was found to have a high level of reliability and validity in standardized simulated testing as well as in clinical practice, thereby suggesting that the tool is useful for identifying areas of strength and weakness in the informed consent process. A validated observational tool, such as the P-QIC, will help investigators develop and test interventions to improve the process of informed consent.

Limitations

The current study contained several limitations. First, the P-QIC would benefit from additional testing in actual consent encounters. Future testing also should include cultural aspects relative to language. Second, the simulations were developed specifically for the current study and need additional evaluation. Third, despite the authors’ best efforts, elements included in the tool were noted to be representative of North American or European cultures and, therefore, modifications to the tool would be necessary for specific populations (e.g., incorporating the opinion of elders when working in tribal communities) (Woodsong & Karim, 2005). Like all Likert-type scales, the selection of categories (e.g., done well, done, done poorly) is somewhat arbitrary and subjective, and inter-rater reliability would need to be assessed under each use condition to ensure that observers are consistent in their interpretation of the categories. Finally, the P-QIC and simulations are in English, which limits research regarding language and culture.

Implications for Nursing

Informed consent is a complex encounter. The role of the nurse in consent encounters may be as the investigator who is obtaining the consent, or he or she may be part of the process of enrolling patients in clinical trials. In either role, nurses often are looked to by patients and families during the informed consent process to explain, clarify, and advise them on participation in clinical trials. The development and testing of the P-QIC allows investigators (and those assisting them) to identify specific areas that are critical in process and measure them.

Acknowledgments

This study was funded in part by a grant from the Alpha Omega Chapter of Sigma Theta Tau. Smith was supported by a grant from the National Center for Research Resources (NCRR) (5P20RR11104), a component of the National Institutes of Health (NIH). The contents of the article are solely the responsibility of the authors and do not necessarily represent the official views of NCRR or NIH.

References

- Albrecht TL, Blanchard C, Ruckdeschel JC, Coovert M, Strongbow R. Strategic physician communication and oncology clinical trials. Journal of Clinical Oncology. 1999;17:3324–3332. doi: 10.1200/JCO.1999.17.10.3324.

- Brown RF, Butow PN, Butt DG, Moore AR, Tattersall MH. Developing ethical strategies to assist oncologists in seeking informed consent to cancer clinical trials. Social Science and Medicine. 2004;58:379–390. doi: 10.1016/s0277-9536(03)00204-1.

- Brown RF, Butow PN, Ellis P, Boyle F, Tattersall MH. Seeking informed consent to cancer clinical trials: Describing current practice. Social Science and Medicine. 2004;58:2445–2457. doi: 10.1016/j.socscimed.2003.09.007.

- Buccini LD, Caputi P, Iverson D, Jones C. Toward a construct definition of informed consent comprehension. Journal of Empirical Research on Human Research Ethics. 2009;4(1):17–23. doi: 10.1525/jer.2009.4.1.17.

- Cohn E, Larson E. Improving participant comprehension in the informed consent process. Journal of Nursing Scholarship. 2007;39:273–280. doi: 10.1111/j.1547-5069.2007.00180.x.

- Council for International Organizations of Medical Sciences. International ethical guidelines for biomedical research involving human subjects. Geneva, Switzerland: Council for International Organizations of Medical Sciences; 2002.

- Delany C. Making a difference: Incorporating theories of autonomy into models of informed consent. Journal of Medical Ethics. 2008;34:E3. doi: 10.1136/jme.2007.023804.

- Dresden GM, Levitt MA. Modifying a standard industry clinical trial consent form improves patient information retention as part of the informed consent process. Academic Emergency Medicine. 2001;8:246–252. doi: 10.1111/j.1553-2712.2001.tb01300.x.

- Ferguson PR. Patients’ perceptions of information provided in clinical trials. Journal of Medical Ethics. 2002;28:45–48. doi: 10.1136/jme.28.1.45.

- Flory J, Emanuel E. Interventions to improve research participants’ understanding in informed consent for research: A systematic review. JAMA. 2004;292:1593–1601. doi: 10.1001/jama.292.13.1593.

- Foderaro LW. Study refutes claims on pediatric AIDS drug trials. New York Times. 2009 Jan 28:A26. Retrieved from http://nytimes.com/2009/01/28/nyregion/28foster.html.

- Grossman SA, Piantadosi S, Cohavey C. Are informed consent forms that describe clinical oncology research protocols readable by most patients and their families? Journal of Clinical Oncology. 1994;12:2211–2215. doi: 10.1200/JCO.1994.12.10.2211.

- Guarino P, Lamping DL, Elbourne D, Carpenter J, Peduzzi P. A brief measure of perceived understanding of informed consent in a clinical trial was validated. Journal of Clinical Epidemiology. 2006;59:608–614. doi: 10.1016/j.jclinepi.2005.11.009.

- Henderson GE, Easter MM, Zimmer C, King NM, Davis AM, Rothschild BB, Nelson DK. Therapeutic misconception in early phase gene transfer trials. Social Science and Medicine. 2006;62:239–253. doi: 10.1016/j.socscimed.2005.05.022.

- Jenkins VA, Fallowfield LJ, Souhami A, Sawtell M. How do doctors explain randomised clinical trials to their patients? European Journal of Cancer. 1999;35:1187–1193. doi: 10.1016/s0959-8049(99)00116-1.

- Joffe S, Cook EF, Cleary PD, Clark JW, Weeks JC. Quality of informed consent: A new measure among research subjects. Journal of the National Cancer Institute. 2001;93:139–147. doi: 10.1093/jnci/93.2.139.

- Katz J. The silent world of doctor and patient. Baltimore, MD: Johns Hopkins University Press; 2002.

- Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159–174.

- Lavori PW, Wilt TJ, Sugarman J. Quality assurance questionnaire for professionals fails to improve the quality of informed consent. Clinical Trials. 2007;4:638–649. doi: 10.1177/1740774507085144.

- Lindegger G, Milford C, Slack C, Quayle M, Xaba X, Vardas E. Beyond the checklist: Assessing understanding for HIV vaccine trial participation in South Africa. Journal of Acquired Immune Deficiency Syndromes. 2006;43:560–566. doi: 10.1097/01.qai.0000247225.37752.f5.

- Makoul G. Essential elements of communication in medical encounters: The Kalamazoo consensus statement. Academic Medicine. 2001;76:390–393. doi: 10.1097/00001888-200104000-00021.

- Miller CK, O’Donnell DC, Searight HR, Barbarash RA. The Deaconess Informed Consent Comprehension Test: An assessment tool for clinical research subjects. Pharmacotherapy. 1996;16:872–878.

- National Cancer Institute. NCI’s Clinical Trials Cooperative Group Program. 2009. Retrieved from http://www.cancer.gov/cancertopics/factsheet/NCI/clinical-trials-cooperative-group.

- National Institutes of Health. About clinicaltrials.gov. 2011. Retrieved from http://clinicaltrials.gov/ct2/info/about.

- National Patient Safety Agency. Information sheet and consent form guidance (Version 3.5). 2009. Retrieved from http://www.nres.npsa.nhs.uk/applications/guidance/consent-guidance-and-forms/

- Ness DE, Kiesling SF, Lidz CW. Why does informed consent fail? A discourse analytic approach. Journal of American Academy of Psychiatry and the Law. 2009;37:349–362.

- Rivera R, Borasky D. Research ethics training curriculum. 2nd ed. Durham, NC: Family Health International; 2009.

- Santen SA, Rotter TS, Hemphill RR. Patients do not know the level of training of their doctors because doctors do not tell them. Journal of General Internal Medicine. 2008;23:607–610. doi: 10.1007/s11606-007-0472-1.

- Shalala D. Protecting research subjects: What must be done. New England Journal of Medicine. 2000;343:808–810. doi: 10.1056/NEJM200009143431112.

- Stepan KA, Gonzalez AP, Dorsey VS, Frye DK, Pyle ND, Smith RF, Cantor SB. Recommendations for enhancing clinical trials education: A review of the literature. Journal of Cancer Education. 2011;26:64–71. doi: 10.1007/s13187-010-0160-4.

- Tomamichel M, Sessa C, Herzig S, de Jong J, Pagani O, Willems Y, Cavalli F. Informed consent for phase 1 studies: Evaluation of quantity and quality of information provided to patients. Annals of Oncology. 1995;6:363–369. doi: 10.1093/oxfordjournals.annonc.a059185.

- U.S. Department of Health and Human Services. Protection of human subjects, Title 45 C.F.R. § 46. 2005. Retrieved from http://www.hhs.gov/ohrp/humansubjects

- Woodsong C, Karim QA. A model designed to enhance informed consent: Experiences from the HIV prevention trials network. American Journal of Public Health. 2005;95:412–419. doi: 10.2105/AJPH.2004.041624.