Abstract

Neural representations of the effort deployed in performing actions, and the valence of the outcomes they yield, form the foundation of action choice. To discover whether brain areas represent effort and outcome valence together or if they represent one but not the other, we examined these variables in an explicitly orthogonal way. We did this by asking human subjects to exert one of two levels of effort to improve their chances of either winning or avoiding the loss of money. Subjects responded faster both when exerting greater effort and when exerting effort in anticipation of winning money. Using fMRI, we inspected BOLD responses during anticipation (before any action was executed) and when the outcome was delivered. In this way, we indexed BOLD signals associated with an anticipated need to exert effort and its affective consequences, as well as the effect of executed effort on the representation of outcomes. Anterior cingulate cortex and dorsal striatum (dorsal putamen) signaled the anticipation of effort independently of the prospect of winning or losing. Activity in ventral striatum (ventral putamen) was greater for better-than-expected outcomes compared with worse-than-expected outcomes, an effect attenuated in the context of having exerted greater effort. Our findings provide evidence that neural representations of anticipated actions are sensitive to the expected demands, but not to the expected value of their consequence, whereas representations of outcome value are discounted by exertion, commensurate with an integration of cost and benefit so as to approximate net value.

Introduction

We continually have to integrate the costs and benefits of our actions. One such cost is the effort we expend, something individuals attempt to minimize (Walton et al., 2006). Frontostriatal networks are thought to be crucial in supporting effortful action to gain reward (Phillips et al., 2007), but precisely how effort affects the neural coding of reward value is unclear. The fact that options associated with greater costs are in some cases associated with greater value (Johnson and Gallagher, 2011) suggests considerable potential complexities when we integrate cost and benefit. Because fMRI studies show that ventral striatal BOLD covaries with value-based prediction errors (Berns et al., 2001; O'Doherty et al., 2003), this region presents an obvious target for a putative integrator of effort and value.

Reward is not the only benefit that accrues from making an effort. One can also act vigorously to avoid punishment. However, we are unaware of any human study that has orthogonalized effort and valence. This is important given recent findings demonstrating a seemingly mandatory tie between effort and valence. Such a tie has been described as Pavlovian, in that instrumental behavior is likely to be enhanced by anticipation of reward but inhibited or suppressed by anticipation of a negatively valenced event regardless of whether it is instrumentally appropriate (Deakin and Graeff, 1991; Boureau and Dayan, 2011). Active avoidance has long troubled behavioral theorists, as for example in the issues surrounding safety signaling (Mowrer, 1960; Rescorla and Lolordo, 1965; Bolles, 1970; Dinsmoor, 1977; Dayan, 2012). A recent task (Crockett et al., 2009) in which action requirement and outcome valence were orthogonalized has led to the conclusion that anticipatory responses in striatum and SN/VTA are dominated by the representation of action over valence (Guitart-Masip et al., 2011, 2012a). However, how such signals are influenced by effort costs is unclear, and that is the key question addressed here.

We studied human participants while they performed a cue-predictive instrumental task in which they might have to squeeze a handgrip to either earn or avoid losing money. We explicitly orthogonalized effort and valence and, furthermore, by adding trials in which motor execution was entirely obviated, we dissociated anticipation from both execution and outcome. Finally, we used stochastic outcomes so we could index the effects of effort on the coding of associated prediction errors.

Our analysis focused on two critical time points, namely when potential effort requirements were initially cued and when an outcome was actually delivered. For the former, we expected that an enhanced BOLD signal in anterior cingulate cortex (ACC) would reflect anticipated effort (Croxson et al., 2009; Prévost et al., 2010). However, based on results from Guitart-Masip et al. (2011), we were also interested in finding a response, if any, in the striatum. At outcome, we predicted that effort would influence a ventral striatal prediction error in an additive or interactive manner, as was shown previously for the integration of reward and punishment (Talmi et al., 2009).

Materials and Methods

Participants

Nineteen right-handed participants (8 females, mean age = 21.7 ± 2.7 years) were recruited through a university database. All attended the laboratory on two consecutive days (day 1: training, day 2: scanning, ∼24 h apart) and were told that they would receive payment at the end of the second day based on their performance on both days. Their reward scheme was adjusted such that all subjects received £30 for the time spent in the laboratory. The study was approved by a University College London ethics committee.

Experimental paradigm

The task required participants to squeeze a handgrip with either low or high effort in order probabilistically either to gain or to avoid losing money. Before they emitted an action, one of four predictive fractal images that signaled the required effort and outcome valence was presented. After completion of the action, subjects were shown the resulting win/loss outcome.

At the start of each trial, participants saw a fixation cross followed by 1 of 4 fractal images for 1 s (anticipatory phase). Then, after a brief, jittered delay of between 0.5 and 3.5 s, they squeezed a handgrip to reach 1 of 2 effort targets (25% or 65% of maximum effort; fmax) within 1.5 s. In this 1.5 s period, the effort target level was indicated by a white tick mark on a screen (execution phase). Participants had to reach the target level before 1 s had elapsed, and then maintain the force for another 0.5 s. If participants successfully attained and maintained the squeeze level, a red line would appear at the top of the force level indicator informing them that their motor execution was successful. After a further 1 s delay they were presented with a 1 s presentation of a monetary outcome, which could either be 20, 0, or −20 pence (outcome phase). Outcome proportions for correct responses, that is successfully attaining and maintaining the required force level, were 0.8/0.2, such that in the win condition, the outcome was 20 pence 80% of the time, and otherwise 0 pence; while in the avoid loss condition, the outcome was 0 pence 80% of the time, and −20 pence otherwise. Incorrect responses always led to 0 pence in the win and −20 pence in the avoid loss condition. There was a jittered ITI between 0.75 and 1.5 s, before the next trial commenced. Thus in this task, the subjects made the exact same action (say low effort), but with very different expectations (80% probability of winning vs 80% probability of avoiding losing) of different outcome valences. Equally, two different actions (low and high effort) would have the same (large) positive state values in the win condition and same (small) negative state values in the avoid loss condition.
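The outcome schedule described above can be sketched in a few lines of Python. This is only an illustration of the stated contingencies, not the task code used in the study; the names `draw_outcome`, `expected_value`, and `prediction_error` are hypothetical, and treating the prediction error as outcome minus expected value is an assumption made for the example.

```python
import random

# Probability of the better outcome after a correct response (from the text).
BETTER_PROB = 0.8

# Possible outcomes in pence for each valence condition (from the text).
OUTCOMES = {
    "win": {"better": 20, "worse": 0},
    "avoid_loss": {"better": 0, "worse": -20},
}


def draw_outcome(valence, correct, rng=random):
    """Return the monetary outcome (pence) for one trial."""
    pair = OUTCOMES[valence]
    if not correct:
        # Incorrect responses always led to the worse outcome.
        return pair["worse"]
    return pair["better"] if rng.random() < BETTER_PROB else pair["worse"]


def expected_value(valence):
    """Expected outcome (pence) given a correct response."""
    pair = OUTCOMES[valence]
    return BETTER_PROB * pair["better"] + (1 - BETTER_PROB) * pair["worse"]


def prediction_error(valence, outcome):
    """Outcome minus expectation (assumed definition, for illustration)."""
    return outcome - expected_value(valence)
```

Under these assumptions the expected value is about 16 pence in the win condition and about −4 pence in the avoid loss condition, so a better-than-expected outcome is roughly 4 pence above expectation in both conditions and a worse-than-expected outcome roughly 16 pence below it.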

Subjects were trained the day before scanning. During training, we presented both white tick marks for low and high effort during the 1.5 s execution phase such that participants had to learn through trial and error which level of effort was associated with each fractal cue. After participants had performed 60 trials per condition, postlearning performance was tested in a block of 5 trials per condition, and if needed, participants performed 10 more trials per condition, such that all contingencies were fully learned by the end of the training day.

Critically, in half the trials in the scanning session (but not the training session) the requirement for a hand squeeze was omitted (Fig. 1). Therefore, at the start of the trial, fractal images specified the outcome valence (win vs loss avoidance) and the potential effort requirement (low vs high effort). However, unpredictably for the participants, the actual emitting of an effortful squeeze was only required in half of the trials. In these no-squeeze trials, participants saw a green bar moving upward indicating a computer-executed trial with the outcome valence presented as if the trial had been successful (gain in win and zero in avoid loss). We implemented this manipulation to disambiguate BOLD responses associated with effort and valence anticipation from actual movement execution, because it decorrelated the anticipation signal from the movement signal (Guitart-Masip et al., 2011).

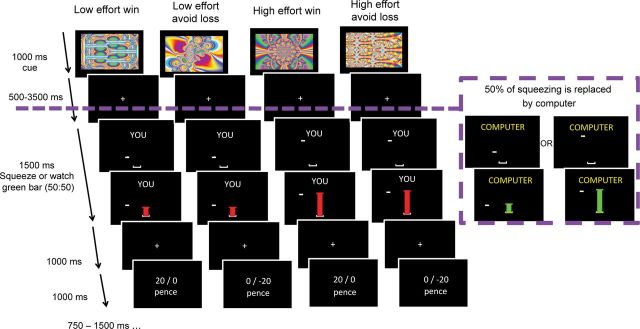

Figure 1.

Cue predictive task dissociates anticipation and outcome processing from motor execution. In each trial, one of four possible images that predicts effort-valence combination appeared on the screen and, after a jittered delay, participants executed the squeeze, followed by a fixed delay and a probabilistic outcome (20/0 pence for win, 0/−20 pence for avoid loss condition). After a randomized intertrial interval of 0.75–1.5 s length, the next trial commenced. At grip onset, participants saw a “YOU” message giving them 1.5 s to respond by squeezing either to the low or high effort level (indicated by a white tick mark). The purple dotted line in the schema indicates that half of the trials did not include actual squeezing, but instead participants saw a green bar moving upward, indicating that these were computer-executed trials. Participants knew that whether they had to squeeze was probabilistic and that this fact was only indicated when they saw the “YOU” or “COMPUTER” text at the end of the fixation period. Participants fully learned the contingencies between the different fractal images and task requirements before scanning.

Stimuli and apparatus

Cues comprised four fractal stimuli each randomly assigned to one of four contingencies and presented at the center of a screen, crossing between effort level (25% vs 65% fmax) and outcome valence (win vs avoid loss). As a cue to guide squeezing, a red bar stimulus that moved vertically provided veridical real-time visual feedback of squeeze force (as used previously in our laboratory; Kurniawan et al., 2010). All experimental stimuli were presented via MATLAB 6.5 (www.mathworks.com) using Cogent 2000 (http://www.fil.ion.ucl.ac.uk/ and http://www.icn.ucl.ac.uk/) and Cogent Graphics (John Romaya, Wellcome Department of Imaging Neuroscience, London). The experiment was run on a Windows-based PC.

Procedure

Force measurements.

Participants used their dominant hand to squeeze. For force calibration, we measured fmax three times and calibrated grip levels based on the highest value of the three.
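As a rough illustration of the calibration and of the success criterion given in the experimental paradigm, the following Python sketch derives the two effort targets from three calibration squeezes and checks one force trace. The function names are hypothetical, the force units and sampling times are made up, and reading "maintain the force" as staying at or above the target from 1.0 to 1.5 s after grip onset is an assumption; the exact criterion used by the task software is not stated.

```python
LOW_FRACTION = 0.25   # low-effort target as a fraction of fmax (from the text)
HIGH_FRACTION = 0.65  # high-effort target as a fraction of fmax (from the text)


def calibrate_fmax(measurements):
    """Return the highest of the calibration squeezes."""
    return max(measurements)


def effort_targets(fmax):
    """Return the low and high force targets in the units of fmax."""
    return {"low": LOW_FRACTION * fmax, "high": HIGH_FRACTION * fmax}


def squeeze_successful(times, forces, target, reach_by=1.0, hold=0.5):
    """Check one trial: times in seconds from grip onset, forces in fmax units.

    Assumed criterion: the target is reached at some sample up to `reach_by`,
    and every sample in the following `hold` window is at or above the target.
    """
    samples = list(zip(times, forces))
    reached = any(f >= target for t, f in samples if t <= reach_by)
    held = all(f >= target for t, f in samples
               if reach_by < t <= reach_by + hold)
    return reached and held
```

For example, with hypothetical calibration values `[310.0, 342.5, 328.0]`, `calibrate_fmax` picks 342.5 and the targets are 25% and 65% of that value.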

Training day.

Participants completed a 240-trial learning block (60 trials for each fractal image) and a short testing block to confirm that they had learned the contingencies. If they did not perform well at test, they were explicitly informed about the contingencies and then completed another short learning block (10 trials per condition) and a testing block (five trials per condition). By the end of the training session, all participants had learned the cue-condition contingencies. Participants were also informed that the contingencies would stay the same on the scanning day.

Participants used a cursor to rate the likability of the fractal images on a visual scale from 0 to 100 both before and at the end of training (missing data from three subjects). Using the same visual scale, participants also rated the feeling of effortfulness for each force level. High effort was indeed rated as more effortful than low effort (MHighGrip = 69.66 (4.04), MLowGrip = 39.30 (4.86), t(18) = 6.17, p < 0.0001).

Scanning day.

On the scanning day, we both asked and reminded subjects about the cue-condition contingencies to ensure stable, nonlearning performance in the scanner. During preparation in the scanner they were given 12 practice trials in which they experienced six no-squeeze trials. Participants underwent 4 scanning sessions with a rest period (up to 3 min) between the sessions. Each scanning block had 20 continuous, fully randomized repetitions of 4 conditions, presented with a 5 s rest every 12 trials. Overall, there were 320 trials (80 trials for each fractal image) lasting 45 min. During debriefing, they estimated the amount of force, best money outcome, and its probability of being associated with each cue. These estimations were repeated at the end of training and both datasets confirmed that participants had understood the contingency (day 2 mean estimated force: 30% and 76% fmax for low and high effort, mean estimated best outcome: 19 and 0 pence for win and avoid loss conditions).

Behavioral data analysis

Using data both from training and scanning days, we calculated mean response accuracy (as the percentage correct) and time to reach target (in milliseconds). We converted the latter into a measure of target speed, calculated as force at target divided by time to target (in force/second). In addition, using smoothed force from the scanning day, we calculated time to reach asymptote (in milliseconds) and time to start squeezing (reaction time [RT] in milliseconds). Similarly, we converted time to asymptote into a measure of asymptote speed, calculated as force at asymptote divided by time to asymptote. This normalizes time to target and time to asymptote for the different values of fmax, but leads to equivalent effects.
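The speed measures reduce to a single division. A minimal Python sketch follows, assuming force is expressed as a percentage of fmax and time in milliseconds; the helper name `speed` is hypothetical and the example values are invented.

```python
def speed(force, time_ms):
    """Force reached divided by the time taken, in force units per second."""
    if time_ms <= 0:
        raise ValueError("time_ms must be positive")
    return force / (time_ms / 1000.0)


# Invented example: reaching a 25% fmax target 500 ms after grip onset.
low_effort_speed = speed(25.0, 500.0)
```

The same function would serve for both target speed (force at target, time to target) and asymptote speed (force at asymptote, time to asymptote).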

To implement smoothing, we took force in each trial of the scanning session and used an optimization procedure that finds the minimum squared distance between the force data and a sigmoid function. This was possible because on scanning day (by which time subjects had mastered the task), force closely resembled a sigmoid shape, where value was around zero at grip onset for ∼300–500 ms, increased over time to the level required by the target, and stayed at asymptote from 1 s after grip onset until grip offset. The resulting best fit lines matched actual force very closely (averaged squared distance of 0.02% fmax; SD = 0.002%). We could not smooth force during the training day because subjects still made errors and force shape was not yet typical. From this fitting procedure, we calculated the first derivatives of these best fit lines and created a velocity function shaped like a mountain, with its peak signifying the point at which change of force was at its maximum.

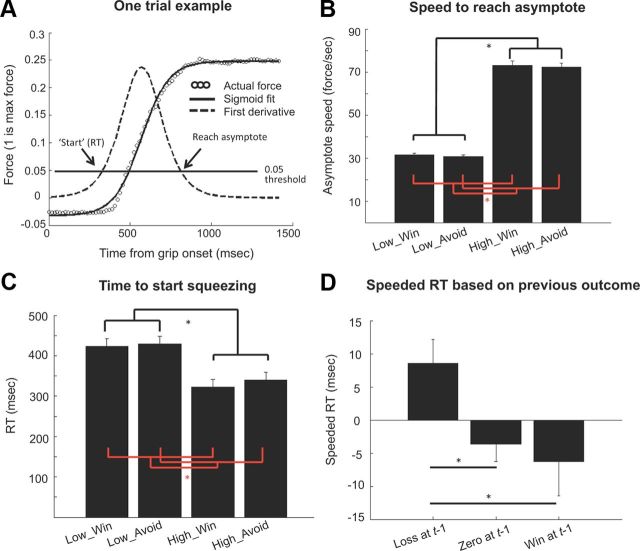

To illustrate the shape of force data and the functions, we show an exemplar trial in Figure 2A, with actual force level from grip onset lasting 1500 ms, the sigmoid shaped fitting line and its first derivative (velocity function). We set a threshold of 5% fmax to determine time to asymptote and RT (for comparison, we also report results from thresholds of 1%, 2.5%, 7.5%, and 10% fmax).
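The smoothing step can be sketched in Python as follows. This is not the optimization procedure used in the study, which is not specified in detail: the sketch swaps in a simple grid search over midpoint and slope, with the amplitude solved in closed form by least squares. It assumes time in milliseconds and force in % fmax, and it adopts one plausible reading of the thresholds (RT as the first time the fitted force rises 5% fmax above zero, time to asymptote as the first time it comes within 5% fmax of the fitted asymptote). All function names are hypothetical.

```python
import math


def sigmoid(t, amplitude, midpoint, slope):
    """Logistic force profile rising from 0 to `amplitude`."""
    return amplitude / (1.0 + math.exp(-slope * (t - midpoint)))


def fit_sigmoid(times, forces, midpoints, slopes):
    """Least-squares fit by grid search; returns (sse, amplitude, midpoint, slope)."""
    best = None
    for m in midpoints:
        for k in slopes:
            basis = [sigmoid(t, 1.0, m, k) for t in times]
            # For fixed midpoint and slope the best amplitude has a closed form.
            a = sum(f * b for f, b in zip(forces, basis)) / sum(b * b for b in basis)
            sse = sum((f - a * b) ** 2 for f, b in zip(forces, basis))
            if best is None or sse < best[0]:
                best = (sse, a, m, k)
    return best


def velocity(t, amplitude, midpoint, slope):
    """First derivative of the fitted sigmoid; it peaks at t = midpoint."""
    s = sigmoid(t, 1.0, midpoint, slope)
    return amplitude * slope * s * (1.0 - s)


def first_crossing(times, values, level):
    """Return the first sampled time at which values reach `level`, else None."""
    for t, v in zip(times, values):
        if v >= level:
            return t
    return None


def rt_and_time_to_asymptote(times, fit, threshold=5.0):
    """Apply the assumed threshold rule to a fit returned by fit_sigmoid."""
    _, a, m, k = fit
    fitted = [sigmoid(t, a, m, k) for t in times]
    rt = first_crossing(times, fitted, threshold)
    t_asym = first_crossing(times, fitted, a - threshold)
    return rt, t_asym
```

A typical call would pass a 1500 ms trace sampled at a fixed step, together with candidate midpoints (for example 200 to 1000 ms) and slopes (for example 0.005 to 0.05 per ms); the grid resolution bounds the precision of the fit.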

Figure 2.

A, Force data from one trial from one subject with its sigmoid fit line and first derivative to smooth the data. From this function, we use a threshold to find the asymptote speed (force at asymptote/time to asymptote) and RT (in milliseconds). B, C, Group-averaged asymptote speed in force/second (B) and RT in milliseconds (C) showing significant main effects of effort and valence. Interactions were nonsignificant. D, Speeded RT based on outcome of previous trial. Participants speeded their response after a loss; speeding after a loss was significantly greater than speeding after either zero or win. Error bars indicate SEM.

Statistical analyses on the dependent variables (subjective liking, accuracy, target and asymptote speed and RT) were conducted using SPSS and MATLAB. To test for effects of effort, valence, and their interaction on the squeeze variables, we ran two-way repeated-measures ANOVAs with effort and valence as independent variables, and added “block” as a third independent variable in a three-way repeated-measures ANOVA on accuracy data to inspect evidence of learning. Significant effects were followed up using repeated-measures ANOVA or paired samples t tests where appropriate.

Image acquisition

We used a 3T TRIO system (Siemens) with a 12-channel head coil to acquire T1-weighted anatomical images and T2*-weighted MRI transverse echoplanar images (EPIs; 64 × 64 mm, TR/TE = 3.36 s/30 ms) with BOLD contrast. The sequence of EPI optimized signal in the orbitofrontal cortex (Weiskopf et al., 2006); each EPI has 48 3-mm-thick contiguous axial slices taken every 3 mm, covering the orbitofrontal cortex, striatum, anterior cingulate, and motor cortices. In total for each participant, 212–220 volumes were acquired in each session, discarding the first four volumes to allow for T1 equilibration. We acquired field maps between the second and third scanning sessions. For the structural images, we acquired a standard high-resolution T1-weighted anatomical image with acquisition matrix 256 × 240, TR/TE/flip angle = 7.92 ms/2.48 ms/16°, voxel size 1 × 1 × 1 mm, 176 axial slices (Deichmann et al., 2004).

Imaging analysis

We used Statistical Parametric Mapping (SPM8b; Wellcome Trust Centre for Neuroimaging, London, http://www.fil.ion.ucl.ac.uk/spm) for imaging analyses. fMRI time series were analyzed in five preprocessing steps involving intramodal realignment and unwarping, intermodal coregistration, segmentation, normalization, and resampling to 3 × 3 × 3 mm³, and smoothing as described in Kurniawan et al. (2010). The fMRI time series data were high-pass filtered (cutoff = 128 s) and whitened using an AR(1) model. For each subject, a statistical model was computed by applying a canonical hemodynamic response function combined with time derivatives. We performed random-effect, event-related statistical analyses. We specified separate first-level general linear models (GLMs) for each participant by creating sets of regressors time locked to fractal image (action anticipation) and outcome presentation (outcome evaluation), with four scanning sessions concatenated into one.

We created three GLMs using stick functions. In GLM1, to highlight activity correlating with anticipation of effort and valence, we defined four regressors of interest representing the four event types at cue onset (each signaled by a distinct fractal image) that varied in effort and valence: low effort-win (LowWin), low effort-avoid loss (LowAvoid), high effort-win (HighWin), and high effort-avoid loss (HighAvoid).
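To make the idea of a stick-function regressor concrete, the Python sketch below places a unit stick at each event onset and convolves it with a double-gamma response function sampled once per scan. It is an approximation for illustration, not SPM's implementation: the shape parameters (6 and 16) and the undershoot ratio (6) are commonly used defaults assumed here, sampling at scan onset ignores SPM's finer time grid, and the temporal derivative is a plain 1 s finite difference. All function names are hypothetical; only the TR of 3.36 s comes from the text.

```python
import math

TR = 3.36  # repetition time in seconds (from the acquisition parameters)


def gamma_pdf(t, shape):
    """Gamma density with unit scale, defined as 0 for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=6.0):
    """Early positive response minus a smaller, later undershoot."""
    return gamma_pdf(t, peak_shape) - gamma_pdf(t, undershoot_shape) / ratio


def event_regressor(onsets, n_scans, tr=TR):
    """Sum of response functions, one per onset, sampled at each scan onset."""
    return [sum(double_gamma_hrf(i * tr - onset) for onset in onsets)
            for i in range(n_scans)]


def derivative_regressor(onsets, n_scans, tr=TR, dt=1.0):
    """Finite-difference temporal derivative of the event regressor."""
    return [sum(double_gamma_hrf(i * tr - onset)
                - double_gamma_hrf(i * tr - onset - dt) for onset in onsets) / dt
            for i in range(n_scans)]
```

Each condition (for example LowWin cue onsets) would contribute one such pair of columns, the response and its temporal derivative, to the design matrix.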

To confirm motor activity during effort, we defined three grip regressors representing the three handgrip events at grip onset (signaled by a screen containing the red or green bar with the text “YOU” or “COMPUTER”): squeeze_low, squeeze_high, and comp_squeeze (collapsing low and high effort trials). Including these grip regressors for the onset of the motor response ensured that variance explained by the performance of the motor response would not be attributed to the fractal images.

To highlight activity during outcome evaluation that correlated with effort, valence, and actual outcome reflecting whether subjects received outcomes better than expected (80% of the time) or worse than expected (20% of the time), we defined eight regressors of interest representing events at outcome onset that followed actual motor execution. These regressors were as follows: LowWinBetter, LowWinWorse, LowAvoidBetter, LowAvoidWorse, HighWinBetter, HighWinWorse, HighAvoidBetter, and HighAvoidWorse. These outcome regressors were moderately orthogonal from the grip regressors (absolute values of cosine of angle between regressors <0.35; fully colinear, cos = ±1; fully orthogonal, cos = 0), consistent with variance explained by outcome regressors being over and above that explained by the grip regressors. We included all onsets of outcome periods that followed computer-executed periods in one regressor of no interest (collapsing low and high effort, win and loss, and better and worse trials). GLM1 contained 42 columns (16 regressors each with temporal derivative + 6 motion parameters + 3 dummy regressors for session number + mean).
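The collinearity measure quoted above is the cosine of the angle between two design-matrix columns. A minimal Python sketch, assuming the columns are compared as raw vectors (whether they were mean-centered first is not stated in the text); the function name is hypothetical.

```python
import math


def cosine(u, v):
    """Cosine of the angle between two equal-length regressors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)
```

Values near 0 indicate nearly orthogonal regressors and values near ±1 nearly collinear ones, so an absolute cosine below 0.35 leaves most of each outcome regressor's variance unshared with the grip regressors.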

GLM2 and GLM3 were constructed to validate the results from GLM1 that pertain to action anticipation. GLM2 included one regressor for the onsets of all fractals (collapsing across conditions), parametrically modulated by expected value and expected effort (each with two possible values). The idea here was to increase sensitivity to valence effects by allowing them to generalize across different fractals according to their values. The remaining regressors were the same as in GLM1. GLM2 thus contained 40 columns (13 regressors + 2 modulators each with temporal derivative + 6 motion parameters + 3 dummy regressors for session number + mean).

In GLM3, to validate effort effects with and without subsequent motor production, we separated the fractal onsets into two regressors, one for those followed by own squeezing (red bar) and one by no squeezing (green bar). These were again parametrically modulated by expected value and expected effort. The intent of this separation was to guarantee that any BOLD signal observed in response to the fractal images was not induced by performance of a motor response, and thus purely reflected anticipation. Note, though, that GLM1 already includes a regressor for the onset of a motor response and, therefore, any variance explained by the performance of a motor response would not be attributed to the fractal images. GLM3 contained 46 columns (14 regressors + 4 modulators each with temporal derivative + 6 motion parameters + 3 dummy regressors for session number + mean).
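The column counts reported for the three design matrices follow from the same bookkeeping rule, which can be checked with a short Python sketch. The function name is hypothetical. The breakdown of event regressors in the comments is consistent with the text (cue, grip, outcome, and computer-executed outcome regressors) but is inferred rather than stated for GLM2 and GLM3.

```python
def design_columns(regressors, modulators=0, motion=6, session_dummies=3, constant=1):
    """Each event regressor and modulator contributes itself plus a temporal derivative."""
    return 2 * (regressors + modulators) + motion + session_dummies + constant


# GLM1: 4 cue + 3 grip + 8 outcome + 1 computer-executed outcome = 16 regressors.
glm1 = design_columns(16)                # 42
# GLM2: 1 pooled cue regressor replaces the 4 cue regressors, plus 2 modulators.
glm2 = design_columns(13, modulators=2)  # 40
# GLM3: 2 cue regressors (squeeze vs no squeeze), each with 2 modulators.
glm3 = design_columns(14, modulators=4)  # 46
```

The three results match the 42, 40, and 46 columns given in the text.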

We tested for regionally specific condition effects by using linear contrasts for each subject and each condition (first-level analysis). The resulting contrast images were entered into a second-level random-effects analysis. We specified four separate second level design matrices for GLM1, one to evaluate brain responses to the anticipatory phase, one to evaluate brain responses to outcomes, and two to evaluate brain responses during effort investment. The first test (anticipation) corresponded to a 2 × 2 ANOVA with Effort (high vs low) and Valence (win vs loss avoidance). The second test (outcome processing) corresponded to a 2 × 2 × 2 ANOVA with Effort (high vs low) × Valence (win vs loss avoidance) × Outcome (better than expected vs worse than expected). The final two (effort investment) corresponded to a one-way ANOVA with comp_squeeze vs squeeze_low vs squeeze_high and a t test for squeeze_low vs squeeze_high. For GLM2 and GLM3, we conducted second level t tests to examine the correlation between brain activity and each parametric modulator (expected value and expected effort).

As a validity check on our data, we tested for effects of squeezing (effort investment) on brain activity. Using a peak-level threshold at p = 0.05 family-wise error (FWE) corrected, we confirmed activity in regions related to movement, spanning bilateral cerebellum, peaking on the right (MNI space coordinates, 18, −52, −20; peak Z score, >8; 664 voxels, p < 0.001 FWE), left rolandic operculum (MNI space coordinates, −39, −4, 16; peak Z score, 7.35; 1567 voxels, p < 0.001 FWE), left middle cingulate (MNI space coordinates, −3, 5, 40; peak Z score, 6.93; 320 voxels, p < 0.001 FWE) and in left primary motor area (MNI space coordinates, −54, −28, 40; peak Z score, 6.46; 755 voxels, p < 0.001 FWE) that was greater for own squeeze periods (low and high effort averaged) than for computer “squeeze” periods. The contrast between high and low effort also yielded similar activation in bilateral cerebellum (right: MNI space coordinates, 30, −55, −23; peak Z score, 6.24; 172 voxels, p < 0.001 FWE; left: MNI space coordinates, −24, −55, −23; peak Z score, 5.44; 40 voxels, p = 0.001 FWE) and bilateral midbrain (right: MNI space coordinates, 15, −19, −11; peak Z score, 5.95; 41 voxels, p < 0.001 FWE; left: MNI space coordinates, −15, −22, −11; peak Z score, 5.82; 96 voxels, p < 0.001 FWE) that was greater for high effort than for low effort. These results confirm there was motor brain activity during squeezing.

Whole-brain results were thresholded at p = 0.001 uncorrected, but only those surviving peak-level FWE correction at p = 0.05 are reported. Activations in the ACC and the striatum were tested with small volume correction (SVC) within anatomically defined regions of interest; these results are therefore reported using FWE correction for the respective small volume at p < 0.05. Suprathreshold areas showing main effects were further tested for the presence of orthogonal interactions using a functional region of interest (ROI) approach. To achieve this, we drew functional masks derived from the significant clusters centered at the peaks of each suprathreshold cluster showing the main effects (threshold was FWE p < 0.05). We then extracted the signal in these ROIs and tested for orthogonal main effects and the interaction using an ANOVA on the extracted signal.

Results

Subjective liking for cues

Before training, likability ratings were similar across all four images (means ranging between 47 and 55). We found no systematic differences in initial likability for cues that later were associated with effort and valence conditions (p > 0.16). We observed that training affected likability ratings (change in rating as means ± SEM): MLowEffortWin = 18.3 ± 5.76; MLowEffortLoss = −19.1 ± 6.47; MHighEffortWin = 16.15 ± 6.60; MHighEffortLoss = −5.87 ± 4.37. However, whereas liking for reward cues increased after training and liking for punishment cues decreased after training (main effect of valence F(1,15) = 4.88, p = 0.044), there was no significant effect of either effort (p = 0.28) or the interaction between effort and valence (p = 0.17).

Accuracy

Overall, the accuracy rate on fractal/squeeze level performance was >65% during training and 95% during scanning (target visually indicated) in all four conditions. There was no effect of effort, valence, or interaction on accuracy on either the training (Feffort(1,18) = 2.59, p = 0.12; Fvalence(1,18) = 0.13, p = 0.71; Feffort×valence(1,18) = 0.55, p = 0.46) or the scanning day (Feffort(1,18) = 0.71, p = 0.40; Fvalence(1,18) = 0.94, p = 0.34; Feffort×valence(1,18) = 1.89, p = 0.18).

In the first 10 training trials, accuracy was higher in low effort compared with high effort conditions (MLowEffort = 66% ± 5% vs MHighEffort = 54% ± 3%), and in win compared with avoid loss conditions (MWin = 65% ± 3% vs MAvoidLoss = 56% ± 4%; Feffort(1,18) = 4.52, p = 0.04; Fvalence(1,18) = 7.62, p = 0.01). There was no significant interaction in the first 10 trials (p = 0.77). Performance improvement was evident across six blocks of 10 trials (Fblock(5,14) = 9.99, p < 0.0001), but accuracy was >70%, showing no improvement between fifth and sixth blocks (pblock5–6 > 0.8).

In sum, our accuracy data suggest that effort and valence had an effect on performance very early during learning, with more correct responses for low effort conditions and in win conditions. However, learning then stabilized rapidly and no further differences in accuracy were observed between conditions either later during learning or during the scanning session.

Speed to reach target and asymptote

During training, the speed with which the target force was reached was significantly influenced by effort (F(1,18) = 481.38, p < 0.0001), with target speed being threefold faster for high (113.68 ± 3.43 force/s) compared with low effort (35.84 ± 0.70 force/s). Valence or interaction effects were nonsignificant (p > 0.4).

During scanning, target speed was influenced by both effort and valence (Feffort(1,18) = 254.48, p < 0.0001, Fvalence(1,18) = 6.41, p = 0.02; interaction was nonsignificant, p = 0.4). Target speed for high effort was greater (68.72 ± 1.85 force/s) than for low effort (36.30 ± 1.64 force/s), and greater for the prospect of reward (53.34 ± 1.58 force/s) than for the prospect of avoiding a punishment (51.68 ± 1.34 force/s). To look more finely at the topography of effort, we fit a sigmoid to the subjects' force output for each trial and calculated the first derivatives to index the time to asymptote (here equivalent to effort target), converted into asymptote speed (force/second, based on a threshold of 5% fmax for force initiation; Fig. 2A). Confirming our unsmoothed data, we found that participants reached asymptote at a greater speed for high compared with low effort target (F(1,18) = 593.18, p < 0.0001) and greater for reward compared with punishment (F(1,18) = 5.78, p = 0.02; Fig. 2B). Note that these findings were robust to the use of other threshold levels (1%, 2.5%, 7.5%, except for valence effect for 10% fmax: F > 480; p < 0.03). Our results provide validation for the smoothing technique.

Behavioral data from both training and scanning days suggest that participants reached target (and asymptote) at a greater speed for high as opposed to low effort, but an effect of valence only emerged on the second day, by which time participants had overlearned the task. This is important because it suggests that, during scanning, participants indeed anticipated valence along with the level of effort when they saw the fractal images.

RT

We used the same smoothing technique to index the time participants started to squeeze, namely RT. We found that RT was faster for high compared with low effort (F(1,18) = 83.34, p < 0.0001; Fig. 2C). These findings were robust to the use of other threshold levels (1%, 2.5%, 7.5%, 10% fmax: F > 14; p < 0.001).

Valence also influenced RT, rendering it shorter for the prospect of a reward than a punishment (F(1,18) = 4.46, p = 0.048). However, for other initiation thresholds, this was not significant. Given the significant effect of valence on overall time to reach the target force, one possibility is that there might have been a small effect of valence early during squeezing, making the result sensitive to the threshold. We found no significant interaction between effort and valence in any behavioral measure (p > 0.06).

In addition, we looked at the influence of outcome in the previous trial (loss, zero, or win) on RT in the current trial, where a positive value indicated a faster response on the current trial. We regressed outcome at trial t−1 against speeded RT at trial t (RT at trial t−1 minus RT at trial t) for each subject and tested the resulting βs with a one-sample t test. The βs were significantly different from a distribution with a mean of zero (t(18) = 2.44, p = 0.02), suggesting that the outcome of the previous trial had a significant impact on speeded response in the current trial. This was also supported by a one-way ANOVA of averaged speeded RTs across trials for each subject, suggesting that averaged speeded RT was influenced by outcome on the previous trial (F(2,18) = 3.11, p = 0.05; Fig. 2D). Follow-up paired t tests show that participants quickened their responses after a loss, and this differed significantly from responses after receiving either nothing or a win (tloss-nothing(18) = 2.73, p = 0.01, tloss-win(18) = 2.54, p = 0.02), with no difference between outcome of zero and win (p = 0.35).
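The sequential analysis can be sketched in Python as a per-subject regression followed by a group-level one-sample t test. This is an illustration under stated assumptions rather than the analysis script: outcomes are assumed to be coded in pence (−20, 0, 20), every trial is assumed to have an RT (computer-executed trials are ignored), and all function names are hypothetical.

```python
import math


def speeded_rt(rts):
    """RT on the previous trial minus RT on the current trial (positive = faster now)."""
    return [prev - cur for prev, cur in zip(rts, rts[1:])]


def ols_slope(x, y):
    """Slope of the least-squares line of y on x."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sxx


def subject_beta(outcomes, rts):
    """Regress speeded RT at trial t on the outcome at trial t-1."""
    return ols_slope(outcomes[:-1], speeded_rt(rts))


def one_sample_t(values, mu=0.0):
    """Return the t statistic and degrees of freedom for mean(values) versus mu."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    return (mean - mu) / math.sqrt(var / n), n - 1
```

With one β per subject, `one_sample_t` applied to the 19 βs would give a statistic with 18 degrees of freedom, matching the form of the test reported above.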

In sum, as predicted, high-effort targets led to faster RTs (judged by when subjects started to squeeze). The same analyses suggested that the prospect of reward had a limited influence. Nevertheless, participants were faster to reach the target and, to a limited extent, responded faster in rewarding trials, demonstrating that they anticipated the valence associated with the fractals along with the level of effort required. We also demonstrated that the previous outcome had an impact on the response on the next trial, with a speeded response after a loss, but not after receiving either neutral or positive outcomes.

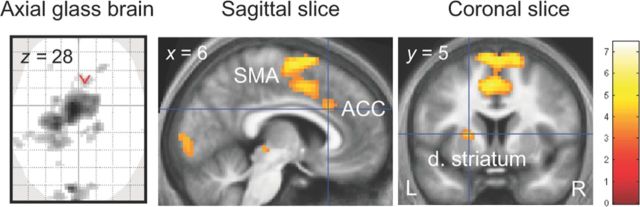

Anticipatory brain responses for effort

A whole-brain, voxel-based, analysis of effort anticipation using GLM1 revealed a main effect (high > low effort) in a large cluster involving a local maximum in left supplementary motor area (MNI space coordinates, −6, −7, 64; peak Z score, 6.16; 1333 voxels, p < 0.001 FWE; Fig. 3) and another in left calcarine gyrus (MNI space coordinates, −6, −85, −8; peak Z score, 5.24; 151 voxels, p = 0.003 FWE). Specifically, activity in this cluster was greater for fractal images predicting high effort compared with those predicting low effort. This contrast revealed two local maxima within ACC and striatum that survived SVC within the anatomical whole ACC and whole striatum (including putamen, caudate, and nucleus accumbens). The peaks of these foci were located in the right ACC (MNI space coordinates, 6, 23, 28; peak Z score, 3.74; 13 voxels, p = 0.025 FWE) and left putamen (MNI space coordinates, −21, 5, 7; peak Z score, 4.09; 26 voxels; p = 0.012 FWE).

Figure 3.

SPM of brain activity for cue presentation. Fractal images indicating high effort elicited greater activity in supplementary motor area (SMA), anterior cingulate cortex (ACC), and dorsal striatum (dorsal putamen) than fractal images indicating low effort did (p < 0.05 FWE; whole brain, SVC within bilateral ACC ROI, SVC within bilateral striatum ROI, respectively). Coordinates are given in MNI space. L indicates left; R, right. For illustrative purposes, voxels displayed in gray on glass brain and in yellow on slices survived a threshold of p < 0.001, uncorrected.

Serving as a cross-check, GLM2 revealed a similar pattern of activity correlating with the expected effort associated with the fractal images. Moreover, such a pattern remained in GLM3 for expected effort that was not followed by an actual effort investment (i.e., fractals followed by green bar), peaking in middle cingulate (MNI space coordinates, 6, 11, 43; peak Z score, 4.79; 312 voxels, p < 0.001 FWE). Activity in these clusters correlated positively with expected effort, increasing as the expected effort increased. Whereas in the pooled data in GLM1 and GLM2, the signal in left dorsal striatum correlated with expected effort, in GLM3 when no actual squeezing followed, this correlation was only significant for the right dorsal striatum (MNI space coordinates, 30, 11, −2; peak Z score, 3.53; 21 voxels, p = 0.03 FWE). In sum, these results confirm that anticipatory activity was not contaminated by actual effort investment, highlighting greater striatal activity invoked by anticipated, but not realized, effort.

The opposite contrast (low > high effort) yielded significant activity in visual areas, bilateral lingual gyri, with greater response for fractal images indicating low compared with high effort (right, MNI space coordinates, 6, −70, 4; peak Z score, 5.73; 70 voxels; p < 0.001 FWE; left, MNI space coordinates, −6, −73, 1; peak Z score, 5.13; p = 0.005 FWE).

We next searched for a complementary main effect of valence during presentation of fractal images, but failed to find a significant effect. This was true for whole-brain and SVC analyses using ROI masks covering either the entire anatomical striatum or more selective 8 mm spheres with center coordinates from three previous studies that reported valence, reward, or prediction error effects in ventral striatum (vSTR; p > 0.3; Guitart-Masip et al., 2011; Klein-Flügge et al., 2011; Niv et al., 2012). Nor did we find a significant effort-by-valence interaction (p > 0.6). In a final analysis, GLM2 did not reveal any effect for expected value. Therefore, anticipatory brain activity reflected expected effort but not valence, despite a significant behavioral effect of valence on the time at which the target force was reached on trials in which force production was required.
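A spherical ROI of the kind described above can be sketched as a distance test on voxel coordinates. The following is illustrative only: the center coordinate, the 3 mm voxel grid, and the reading of "8 mm" as a radius are assumptions for the example, not values taken from the cited studies.

```python
import math


def sphere_mask(center_mm, radius_mm, voxel_mm, half_extent):
    """Return the set of voxel centers (in mm) within radius_mm of center_mm.

    Voxels lie on a regular grid with spacing voxel_mm, offset from the
    center by integer steps in the range [-half_extent, half_extent].
    """
    cx, cy, cz = center_mm
    steps = range(-half_extent, half_extent + 1)
    mask = set()
    for i in steps:
        for j in steps:
            for k in steps:
                point = (cx + i * voxel_mm, cy + j * voxel_mm, cz + k * voxel_mm)
                if math.dist(point, center_mm) <= radius_mm:
                    mask.add(point)
    return mask


# Hypothetical center; not a coordinate reported in the text.
roi = sphere_mask(center_mm=(12, 9, -6), radius_mm=8, voxel_mm=3, half_extent=3)
```

Restricting the correction for multiple comparisons to such a small set of voxels is what makes an SVC analysis more sensitive than a whole-brain test at the same corrected threshold.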

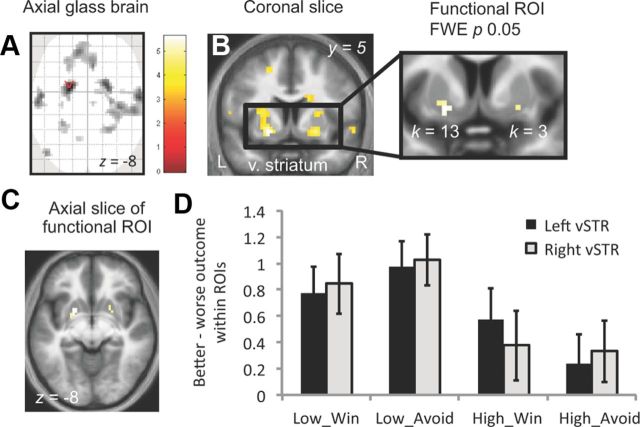

Effort modulates vSTR response to outcomes better than expected

We next focused on responses related to the outcome period. Specifically, we examined how the level of (actual) effort required to obtain an outcome affected the neuronal processing of affective and aversive outcomes. We achieved this by identifying voxels that showed a positive response to better-than-expected outcomes compared with worse-than-expected outcomes and to valence differences.

We first identified voxels that showed a greater response to better-than-expected outcomes compared with outcomes that were worse than expected across all conditions (main effect of outcome: (win-neutral in winning trials) + (neutral-loss in avoid losing trials)). As expected, the vSTR showed a main effect of outcome peaking in bilateral ventral putamen (left: MNI space coordinates, −21, 5, −8; peak Z score, 5.32; 157 voxels, p = 0.001 FWE; right: MNI space coordinates, 21, 8, −8; peak Z score, 4.65; 110 voxels, p = 0.028 FWE), a contrast that also revealed a maximum in right superior temporal gyrus (MNI space coordinates, 60, −7, −2; peak Z score, 4.74; 228 voxels, p = 0.02 FWE). This result is consistent with the frequent observations that BOLD in the vSTR is sensitive to positive (i.e., appetitive) prediction errors during the outcome phase (Berns et al., 2001; O'Doherty et al., 2004; Pessiglione et al., 2006). The voxels we identified in vSTR were then used as a functional ROI (Fig. 4B, inset) within which we subsequently assessed the orthogonal effects of valence and effort-by-outcome and valence-by-outcome interactions.
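The main effect of outcome defined above is a linear contrast over four outcome conditions. A minimal sketch, assuming hypothetical condition labels and toy parameter estimates (the regressors actually used are those of GLM1):

```python
# Contrast weights for better-than-expected minus worse-than-expected outcomes:
# (win - neutral in winning trials) + (neutral - loss in avoid-losing trials).
# Condition labels are hypothetical names chosen for this example.
BETTER_GT_WORSE = {
    "win_trial/win": +1,
    "win_trial/neutral": -1,
    "avoid_loss_trial/neutral": +1,
    "avoid_loss_trial/loss": -1,
}


def contrast_value(estimates, weights):
    """Weighted sum of condition estimates for one voxel or ROI."""
    return sum(w * estimates[name] for name, w in weights.items())


def reverse(weights):
    """Flip the sign of every weight (worse > better)."""
    return {name: -w for name, w in weights.items()}


# Toy estimates in arbitrary units, not data from the study.
toy = {
    "win_trial/win": 2.0,
    "win_trial/neutral": 0.5,
    "avoid_loss_trial/neutral": 1.0,
    "avoid_loss_trial/loss": 0.0,
}
effect = contrast_value(toy, BETTER_GT_WORSE)
```

Because the weights sum to zero within each trial type, the contrast is insensitive to any overall offset between winning and avoid-losing trials, which is why a neutral outcome counts as "worse" in one context and "better" in the other.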

Figure 4.

SPM of brain activity at the time of outcome presentation. A, B, Glass brain and coronal slice show that activity in bilateral vSTR was stronger for better outcomes than worse outcomes, regardless of valence, corrected for FWE (p < 0.05), peak voxels; Left: −21, 5, −8, Right: 21, 8, −8. For illustrative purposes, voxels displayed in gray on glass brain and in yellow on coronal slice survived a threshold of p < 0.001, uncorrected. B, Inset, Functional ROIs at left and right vSTR (putamen) from FWE-corrected voxels (p < 0.05) from whole-brain analysis centered at the peak of activity. Left, 13 voxels. Right, three voxels. C, Axial slice of the same functional ROIs shown in B, inset. Coordinates are given in MNI space. L indicates left; R, right. D, Extracted activity within functional ROIs shown in B, inset, and in C, revealing an effort-by-outcome interaction. Regardless of valence, the difference between better > worse outcomes after expending low effort is significantly greater than after expending high effort. Error bars indicate SEM.

A three-way ANOVA on the extracted signal with effort, valence, and (actual) outcomes as factors revealed a significant effort-by-outcome interaction (left vSTR: F(1,18) = 5.37, p = 0.03; right vSTR: F(1,18) = 5.15, p = 0.03) in the absence of a valence effect (p > 0.054), an effort-by-valence interaction (p > 0.44), or an effort-by-valence-by-outcome interaction (p > 0.29). Note that the marginally significant main effect of valence (driven by win > avoid loss) is consistent with the idea that the vSTR responds more strongly to outcomes with positive than to those with negative expectations (Haber and Knutson, 2010). Pairwise t tests on the signal difference (better minus worse) between effort levels (regardless of valence) showed a significantly greater signal difference after low than after high effort (left vSTR: t(18) = 2.65, p = 0.016; right vSTR: t(18) = 2.60, p = 0.017; Fig. 4D). These significant follow-up t tests and the nonsignificant interaction with valence suggest an attenuation of the appetitive prediction error signal in the vSTR by high effort, regardless of valence.
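The follow-up test above compares the better-minus-worse signal difference between effort levels within subjects. A minimal sketch of that difference-of-differences logic, using made-up numbers and hypothetical function names; it is not the authors' analysis code:

```python
import math
from statistics import mean, stdev


def outcome_difference(better, worse):
    """Per-subject better-minus-worse signal difference."""
    return [b - w for b, w in zip(better, worse)]


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for H0: mean(a - b) == 0."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    return mean(d) / (stdev(d) / math.sqrt(n)), n - 1


# Toy ROI signal for four hypothetical subjects (arbitrary units).
low_better, low_worse = [1.2, 0.9, 1.5, 1.1], [0.2, 0.1, 0.3, 0.4]
high_better, high_worse = [0.8, 0.7, 1.0, 0.9], [0.3, 0.2, 0.4, 0.5]

low_diff = outcome_difference(low_better, low_worse)
high_diff = outcome_difference(high_better, high_worse)
# A positive t means the outcome effect is larger after low effort.
t_stat, df = paired_t(low_diff, high_diff)
```

With two levels per factor, this paired comparison tests the same effect as the effort-by-outcome interaction term in the ANOVA.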

Next, we searched for voxels that responded more strongly to outcomes after reward fractals compared with outcomes after punishment fractals across all conditions (main effect of valence: win-avoid loss regardless of effort). We found an uncorrected cluster in the ventromedial prefrontal cortex, but this did not survive FWE correction at the peak level. A positive response in the vmPFC for win > avoid loss is consistent with the literature and our expectation for the encoding of affective events; however, because this did not satisfy our stringent peak-level threshold, we did not follow up on this result to test our effort modulation hypothesis.

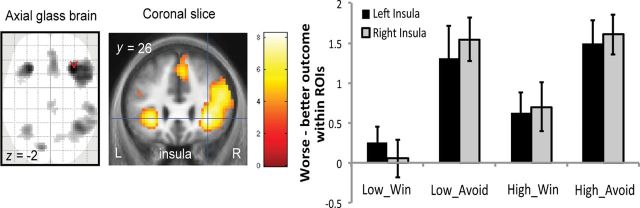

Valence modulates insular response to outcomes worse than expected

We next examined how effort and valence affected the processing of worse-than-expected outcomes. We first identified voxels that showed a greater response to worse-than-expected outcomes compared with those better than expected across all conditions as follows: reversed main effect of outcome: (neutral-win in winning trials) + (loss-neutral in avoid losing trials). As expected, the insula showed the strongest response for a (reversed) main effect of outcome, worse > better outcomes, with two maxima, one in right insula extending dorsally into right inferior frontal gyrus (both pars opercularis and triangularis; Fig. 5; MNI space coordinates, 33, 26, −2; peak Z score, 7.38; 1189 voxels, p < 0.001 FWE) and one in left insula (MNI space coordinates, −30, 23, −5; peak Z score, 6.84; 270 voxels, p < 0.001 FWE). This contrast also revealed five other maxima: in right inferior parietal lobule (MNI space coordinates, 39, −52, 43; peak Z score, 5.99; 643 voxels, p < 0.001 FWE), left inferior parietal lobule (MNI space coordinates, −33, −55, 40; peak Z score, 4.85; 138 voxels, p = 0.012 FWE), left cerebellum (MNI space coordinates, −12, −79, −29; peak Z score, 5.30; 70 voxels, p = 0.002 FWE), right superior medial gyrus (MNI space coordinates, 6, 32, 46; peak Z score, 5.13; 289 voxels, p = 0.004 FWE), and right medial temporal gyrus (MNI space coordinates, 57, −28, −5; peak Z score, 5.12; 88 voxels, p = 0.004 FWE).

Figure 5.

SPM of brain activity at the time of outcome presentation showing greater signal in bilateral insula when receiving worse outcomes than better outcomes, regardless of effort (p < 0.05 FWE). For illustrative purposes, voxels displayed in gray on glass brain and in yellow on coronal slice survived a threshold of p < 0.001, uncorrected. Coordinates are given in MNI space. L indicates left; R, right. The bar graph on the right depicts a valence-by-outcome interaction of extracted activity within insula ROIs. Regardless of effort, the difference between worse > better outcomes is greater in avoid losing conditions than in winning conditions. Error bars indicate SEM.

Driven by previous findings in relation to the role of the insula in aversive representation, we constrained our mask using a functional ROI by drawing the overlapping regions between anatomical masks of left and right insula and the whole-brain, SPM-derived clusters for left and right insula. The resulting ROIs clearly depicted two masks in left and right anterior insula, confirming that these regions were sensitive to outcomes that were worse than expected compared with those better than expected. We subsequently assessed the complementary main effects of effort and valence and their interactions with the main effect of outcome.
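The functional ROI construction described above amounts to a voxelwise intersection of two masks. A small sketch, assuming masks are represented as sets of voxel indices; the indices and signal values are invented for illustration:

```python
from statistics import mean


def functional_roi(anatomical_mask, cluster_mask):
    """Voxels that lie in both the anatomical mask and the SPM-derived cluster."""
    return anatomical_mask & cluster_mask


def roi_mean(signal, roi):
    """Average signal over the ROI voxels."""
    return mean(signal[v] for v in roi)


# Invented voxel indices (i, j, k) for illustration only.
anatomical_insula = {(10, 20, 15), (10, 21, 15), (11, 20, 15), (11, 21, 15)}
outcome_cluster = {(11, 20, 15), (11, 21, 15), (12, 21, 15)}
roi = functional_roi(anatomical_insula, outcome_cluster)

signal = {(11, 20, 15): 0.4, (11, 21, 15): 0.6, (12, 21, 15): 0.9}
roi_signal = roi_mean(signal, roi)
```

Intersecting with the anatomical mask drops the parts of a large cluster that spill into neighboring regions, such as the inferior frontal gyrus in the right-hemisphere cluster reported above.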

A three-way ANOVA of effort, valence, and outcome revealed a significant main effect of valence in bilateral insula, such that insular activity was greater for punishment than reward (left: F(1,18) = 12.83, p = 0.002; right: F(1,18) = 8.16, p = 0.01). We found a significant valence-by-outcome interaction (left: F(1,18) = 8.43, p = 0.009; right: F(1,18) = 18.83, p < 0.0001) in the absence of an effort-by-valence, an effort-by-outcome, or an effort-by-valence-by-outcome interaction. Pairwise t tests on the signal difference (worse minus better) between winning and avoid losing trials (regardless of effort) showed a significantly greater signal difference in avoid loss than win (left insula: t(18) = 3.22, p = 0.004; right insula: t(18) = 4.65, p = 0.0001; Fig. 5). This effect was not dependent on the level of effort exerted. Our results are consistent with previous suggestions that the insula robustly represents aversive events such as punishment (Seymour et al., 2004), pain for others (Singer et al., 2004), or risk (Preuschoff et al., 2008). In particular, we show a magnified insular response to actual losses compared with receiving nothing when the outcome could have been a gain.

Finally, we searched for voxels that responded more strongly to outcomes after punishment fractals compared with reward fractals across all conditions (reversed main effect of valence: avoid loss-win regardless of effort) and no suprathreshold voxel was detected.

Discussion

We confirmed that anterior cingulate cortex, supplementary motor area, and striatum encode anticipated effort regardless of outcome valence. Critically, activity in the striatum associated with valence was only seen when an outcome was revealed and not during its anticipation. In the vSTR, effect sizes of differential response to better and to worse outcomes were attenuated by the amount of effort just expended, a pattern akin to a neural signal for net valuation.

Anticipatory activity reflects effort but not valence

There is ample evidence indicating dopamine neurons in the SN/VTA report a reward prediction error, a signal also expressed in the pattern of BOLD response in the vSTR (Berns et al., 2001; O'Doherty et al., 2004; Pessiglione et al., 2006). Furthermore, in rats, phasic changes in dopamine concentration in vSTR reflect predicted rewards but not required effort (Gan et al., 2010). However, complexities in the coupling between valence and vigor (Boureau and Dayan, 2011; Cools et al., 2011) necessitate orthogonalizing expected value from requirements for action or effort to test these relationships comprehensively (Crockett et al., 2009). In this context, we observed effort trumping valence during anticipation. This extends findings from previous experiments (Guitart-Masip et al., 2011, 2012a, 2012b) from a binary action requirement to a situation where the requirement is expenditure of one of two different force levels. This reinforces a growing idea that one primary driver of BOLD activity in the striatum is an anticipated invigoration of action.

Our previous experiment involved a contrast between Go and NoGo, in which we observed an action-by-valence interaction with better performance for Go for a prospect of winning and NoGo (which was the alternative choice there) for a prospect of punishment (Huys et al., 2011; Guitart-Masip et al., 2012b). In the present study, we had two Go responses (low and high effort), and so did not expect or find any such behavioral coupling. Instead, we show robust behavioral effects of valence. Anticipation of reward differed from anticipation of punishment, with better performance early in training and faster accomplishment of target force later during scanning. Note that the effect of reward shifted from boosting accuracy when participants were still unfamiliar with the contingencies, to boosting the speed with which they responded and completed the action when they had mastered the task.

One might argue that the lack of a valence effect in the anticipatory brain activity reflects the fact that valence does not provide relevant information about the instrumental response. This seems unlikely, because paradigms such as Pavlovian to Instrumental Transfer (Estes, 1948; Talmi et al., 2008) and the monetary incentive delay task (Knutson et al., 2005) clearly show an effect of valence on behavior even when it is irrelevant (or even damaging; Dayan et al., 2006). Furthermore, our own behavioral results demonstrated that participants anticipated the valence associated with fractals along with the levels of effort. One possible explanation for this apparent brain-behavior discrepancy is that the signal that is truly uniquely attributable to valence is distributed or localized outside of the striatum, leading to it going undetected due to application of a stringent whole-brain threshold. However, this implies that the representation of valence is weaker than the representation of effort that, in contrast, did survive the same threshold. In addition, in our previous imaging data (Guitart-Masip et al., 2011) we detected a main effect of action without a valence effect in most striatal ROIs that were analyzed and in the right putamen we found an interaction in which an effect of action was only significant in the avoid loss, but not in the winning condition.

During anticipation, before participants engaged in a motor response, the supplementary motor cortex, ACC, and dorsal striatum showed higher BOLD responses in anticipation of high effort. This is consistent with previous reports of the involvement of ACC (Walton et al., 2002; Floresco and Ghods-Sharifi, 2007; Croxson et al., 2009; Prévost et al., 2010) and dorsal striatum (Kurniawan et al., 2010; but see Braun and Hauber, 2011) in effort.

Effort modulation on outcome valuation

Despite the absence of a valence effect during anticipation, we confirmed the conventional observation that BOLD in the vSTR was correlated with positive (i.e., appetitive) prediction errors at the time of outcome. Here our novel contribution is to show that this prediction error signal was sensitive to the amount of effort invested. If one interprets high effort as being more costly, the effect of this sensitivity is akin to creating a form of net valuation signal, such that relative to worse outcomes, the incremental value of better outcomes after high effort is attenuated and leads to smaller appetitive prediction errors compared with those after low effort. This result mimics the attenuated activity in the vSTR for reward prediction error at reception of a painful outcome compared with a benign tactile stimulation (Talmi et al., 2009). However, in light of a recent demonstration that phasic dopaminergic signals to the vSTR were insensitive to anticipated effort (Gan et al., 2010), this effort modulation in the vSTR is unlikely to be dependent on the dopaminergic system.

In principle, this suppression could have been driven either by anticipated or by actual effort. The former seems unlikely because, if anything, we saw increased BOLD response in the striatum in anticipation of high effort. Concerning the latter, the net valuation signal in the vSTR could have been derived from a comparison between signals for state values and signals from other regions concerning recently expended vigor. For example, there have been previous reports that activity in ACC ramps up during effort investment as subjects are closer in time to reward delivery (Kennerley et al., 2008; Croxson et al., 2009) and that accumulating information about cost that governs periods of effort and rest is correlated with BOLD response in the insula (Meyniel et al., 2013). Therefore, one might speculate that information about effort that ramps in these two structures is transmitted to, and modifies, activity in a brain valuation system including vSTR. Unfortunately, given the long TR, we could not test this hypothesis directly. In addition, perhaps due to overtraining, there was no trial-wise correlation between movement metrics and averaged extracted ventral striatal activity. These neural-neural and behavioral-neural predictions are straightforward targets for future experiments. Note, however, that the metrics of movement are different for high versus low effort, making it hard to distinguish motor anticipation from effort anticipation, with both being relevant as correlates of striatal and cingulate activity.

The interval between effort and outcome onsets was short (2.5 s), raising the issue of whether BOLD during outcome phase was a simple artifact of motor activity happening 2.5 s previously. We refrained from testing the main effect of effort at outcome phase for this reason, because outcome and grip regressors would not have been orthogonal. Importantly, several lines of reasoning militate against this potential confound, on top of the observation of larger positive prediction errors after the exertion of low compared with high effort. First, our GLM approach was conservative in that each outcome regressor was moderately orthogonal from the grip regressors (see GLM1 in Materials and Methods) and, indeed, motor regions showed significant effects of squeezing. Therefore, the suprathreshold activity that we saw in vSTR is variance uniquely explained by the outcome regressors over and above variance explained by the grip regressors.

A further reason to think that motor activity was not responsible for our finding is that the ventral aspect of putamen alone, and not the dorsal aspect, was significant in the effect on the positive prediction error signal. This is consistent with previous functional segregations of striatum, with more dorsal (and lateral) aspects of striatum encoding discrete reinforced, or even habitized responses, and more ventral (and medial) aspects encoding stimulus-action-reward associations and outcome predictions (Pennartz et al., 1994; Voorn et al., 2004; Haber and Knutson, 2010; Kurniawan et al., 2011), and it is also consistent with the conventional role of vSTR in valuation (Mogenson et al., 1980; Schmidt et al., 2012).

Our findings are consistent with a general view that effort discounts value. There are effects such as state-dependent valuation (Aw et al., 2011) and within-trial contrast (Clement et al., 2000; Gipson et al., 2009) that suggest that rewards achieved after greater effort are more highly valued than the same rewards earned with less effort, but these effects rely on a temporal separation between effort and reward consumption. Future experiments could directly test for preference for stimuli associated with gain or loss after high or low effort.

To conclude, we confirm here the involvement of effort in neural anticipation of action. Notably, our results show that physical effort, a paradigmatic embodied cost, affects the neural valuation of action outcomes by modulating the brain's response to desired events. Our results complement conventional emphases on the role of vSTR in reward processing and suggest a way that outcome signaling is refined to allow cost dependence in choice.

Footnotes

This work was supported by the Wellcome Trust (Senior Investigator Award #098362/Z/12/Z to R.J.D.). The Wellcome Trust Centre for Neuroimaging is supported by a Wellcome Trust Strategic Award (091593/Z/10/Z). P.D. is supported by the Gatsby Charitable Foundation. We thank Lionel Rigoux for suggestions for analysis on hand force data, Mathias Pessiglione and Robb Rutledge for helpful comments on previous versions of the manuscript, and two anonymous referees for reviewing the manuscript.

The authors declare no competing financial interests.

References

- Aw JM, Vasconcelos M, Kacelnik A. How costs affect preferences: Experiments on state dependence, hedonic state and within-trial contrast in starlings. Anim Behav. 2011;81:1117–1128.

- Berns GS, McClure SM, Pagnoni G, Montague PR. Predictability modulates human brain response to reward. J Neurosci. 2001;21:2793–2798. doi: 10.1523/JNEUROSCI.21-08-02793.2001.

- Bolles RC. Species-specific defense reactions and avoidance learning. Psychol Rev. 1970;77:32–48.

- Boureau YL, Dayan P. Opponency revisited: Competition and cooperation between dopamine and serotonin. Neuropsychopharmacol. 2011;36:74–97. doi: 10.1038/npp.2010.151.

- Braun S, Hauber W. The dorsomedial striatum mediates flexible choice behavior in spatial tasks. Behav Brain Res. 2011;220:288–293. doi: 10.1016/j.bbr.2011.02.008.

- Clement TS, Feltus JR, Kaiser DH, Zentall TR. “Work ethic” in pigeons: Reward value is directly related to the effort or time required to obtain the reward. Psychon B Rev. 2000;7:100–106. doi: 10.3758/bf03210727.

- Cools R, Nakamura K, Daw ND. Serotonin and dopamine: Unifying affective, activational, and decision functions. Neuropsychopharmacol. 2011;36:98–113. doi: 10.1038/npp.2010.121.

- Crockett MJ, Clark L, Robbins TW. Reconciling the role of serotonin in behavioral inhibition and aversion: Acute tryptophan depletion abolishes punishment-induced inhibition in humans. J Neurosci. 2009;29:11993–11999. doi: 10.1523/JNEUROSCI.2513-09.2009.

- Croxson PL, Walton ME, O'Reilly JX, Behrens TE, Rushworth MF. Effort-based cost-benefit valuation and the human brain. J Neurosci. 2009;29:4531–4541. doi: 10.1523/JNEUROSCI.4515-08.2009.

- Dayan P. Instrumental vigour in punishment and reward. Eur J Neurosci. 2012;35:1152–1168. doi: 10.1111/j.1460-9568.2012.08026.x.

- Dayan P, Niv Y, Seymour B, Daw ND. The misbehavior of value and the discipline of the will. Neural networks. 2006;19:1153–1160. doi: 10.1016/j.neunet.2006.03.002.

- Deakin J, Graeff FG. 5-HT and mechanisms of defence. J Psychopharmacol. 1991;5:305–315. doi: 10.1177/026988119100500414.

- Deichmann R, Schwarzbauer C, Turner R. Optimisation of the 3D MDEFT sequence for anatomical brain imaging: technical implications at 1.5 and 3 T. Neuroimage. 2004;21:757–767. doi: 10.1016/j.neuroimage.2003.09.062.

- Dinsmoor JA. Escape, avoidance, punishment: Where do we stand? J Exp Anal Behav. 1977;28:83–95. doi: 10.1901/jeab.1977.28-83.

- Estes WK. Discriminative conditioning. II. Effects of a Pavlovian conditioned stimulus upon a subsequently established operant response. J Exp Psychol. 1948;38:173–177. doi: 10.1037/h0057525.

- Floresco SB, Ghods-Sharifi S. Amygdala-prefrontal cortical circuitry regulates effort-based decision making. Cereb Cortex. 2007;17:251–260. doi: 10.1093/cercor/bhj143.

- Gan JO, Walton ME, Phillips PE. Dissociable cost and benefit encoding of future rewards by mesolimbic dopamine. Nat Neurosci. 2010;13:25–27. doi: 10.1038/nn.2460.

- Gipson CD, Miller HC, Alessandri JJD, Zentall TR. Within-trial contrast: The effect of probability of reinforcement in training. Behav Process. 2009;82:126–132. doi: 10.1016/j.beproc.2009.05.006.

- Guitart-Masip M, Fuentemilla L, Bach DR, Huys QJ, Dayan P, Dolan RJ, Duzel E. Action dominates valence in anticipatory representations in the human striatum and dopaminergic midbrain. J Neurosci. 2011;31:7867–7875. doi: 10.1523/JNEUROSCI.6376-10.2011.

- Guitart-Masip M, Chowdhury R, Sharot T, Dayan P, Duzel E, Dolan RJ. Action controls dopaminergic enhancement of reward representations. Proc Natl Acad Sci U S A. 2012a;109:7511–7516. doi: 10.1073/pnas.1202229109.

- Guitart-Masip M, Huys QJ, Fuentemilla L, Dayan P, Duzel E, Dolan RJ. Go and no-go learning in reward and punishment: Interactions between affect and effect. Neuroimage. 2012b;62:154–166. doi: 10.1016/j.neuroimage.2012.04.024.

- Haber SN, Knutson B. The reward circuit: linking primate anatomy and human imaging. Neuropsychopharmacol. 2010;35:4–26. doi: 10.1038/npp.2009.129.

- Huys QJ, Cools R, Gölzer M, Friedel E, Heinz A, Dolan RJ, Dayan P. Disentangling the roles of approach, activation and valence in instrumental and Pavlovian responding. PLoS Comput Biol. 2011;7:1–14. doi: 10.1371/journal.pcbi.1002028.

- Johnson AW, Gallagher M. Greater effort boosts the affective taste properties of food. P Roy Soc B-Biol Sci. 2011;278:1450–1456. doi: 10.1098/rspb.2010.1581.

- Kennerley SW, Dahmubed AF, Lara AH, Wallis JD. Neurons in the frontal lobe encode the value of multiple decision variables. J Cognitive Neurosci. 2008;21:1162–1178. doi: 10.1162/jocn.2009.21100.

- Klein-Flügge MC, Hunt LT, Bach DR, Dolan RJ, Behrens TE. Dissociable reward and timing signals in human midbrain and ventral striatum. Neuron. 2011;72:654–664. doi: 10.1016/j.neuron.2011.08.024.

- Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. J Neurosci. 2005;25:4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005.

- Kurniawan IT, Seymour B, Talmi D, Yoshida W, Chater N, Dolan RJ. Choosing to make an effort: the role of striatum in signaling physical effort of a chosen action. J Neurophysiol. 2010;104:313–321. doi: 10.1152/jn.00027.2010.

- Kurniawan IT, Guitart-Masip M, Dolan RJ. Dopamine and effort-based decision making. Front Neurosci. 2011;5:81. doi: 10.3389/fnins.2011.00081.

- Meyniel F, Sergent C, Rigoux L, Daunizeau J, Pessiglione M. Neurocomputational account of how the human brain decides when to have a break. Proc Natl Acad Sci U S A. 2013;110:2641–2646. doi: 10.1073/pnas.1211925110.

- Mogenson GJ, Jones DL, Yim CY. From motivation to action: Functional interface between the limbic system and the motor system. Prog Neurobiol. 1980;14:69–97. doi: 10.1016/0301-0082(80)90018-0.

- Mowrer OH. Learning theory and behavior. New York: Wiley; 1960.

- Niv Y, Edlund JA, Dayan P, O'Doherty JP. Neural prediction errors reveal a risk-sensitive reinforcement-learning process in the human brain. J Neurosci. 2012;32:551–562. doi: 10.1523/JNEUROSCI.5498-10.2012.

- O'Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi: 10.1016/s0896-6273(03)00169-7.

- O'Doherty JP, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- Pennartz CM, Groenewegen HJ, Lopes da Silva FH. The nucleus accumbens as a complex of functionally distinct neuronal ensembles: an integration of behavioural, electrophysiological and anatomical data. Prog Neurobiol. 1994;42:719–761. doi: 10.1016/0301-0082(94)90025-6.

- Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045. doi: 10.1038/nature05051.

- Phillips PEM, Walton ME, Jhou TC. Calculating utility: preclinical evidence for cost-benefit analysis by mesolimbic dopamine. Psychopharmacol (Berl) 2007;191:483–495. doi: 10.1007/s00213-006-0626-6. [DOI] [PubMed] [Google Scholar]

- Preuschoff K, Quartz SR, Bossaerts P. Human insula activation reflects risk prediction errors as well as risk. J Neurosci. 2008;28:2745–2752. doi: 10.1523/JNEUROSCI.4286-07.2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Prévost C, Pessiglione M, Météreau E, Cléry-Melin M-L, Dreher J-C. Separate valuation subsystems for delay and effort decision costs. J Neurosci. 2010;30:14080–14090. doi: 10.1523/JNEUROSCI.2752-10.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rescorla RA, Lolordo VM. Inhibition of avoidance behavior. J Comp Physiol Psych. 1965;59:406–412. doi: 10.1037/h0022060.

- Schmidt L, Lebreton M, Cléry-Melin ML, Daunizeau J, Pessiglione M. Neural mechanisms underlying motivation of mental versus physical effort. PLoS Biol. 2012;10:1–13. doi: 10.1371/journal.pbio.1001266.

- Seymour B, O'Doherty JP, Dayan P, Koltzenburg M, Jones AK, Dolan RJ, Friston KJ, Frackowiak RS. Temporal difference models describe higher-order learning in humans. Nature. 2004;429:664–667. doi: 10.1038/nature02581.

- Singer T, Seymour B, O'Doherty J, Kaube H, Dolan RJ, Frith CD. Empathy for pain involves the affective but not sensory components of pain. Science. 2004;303:1157–1162. doi: 10.1126/science.1093535.

- Talmi D, Seymour B, Dayan P, Dolan RJ. Human Pavlovian–instrumental transfer. J Neurosci. 2008;28:360–368. doi: 10.1523/JNEUROSCI.4028-07.2008.

- Talmi D, Dayan P, Kiebel SJ, Frith CD, Dolan RJ. How humans integrate the prospects of pain and reward during choice. J Neurosci. 2009;29:14617–14626. doi: 10.1523/JNEUROSCI.2026-09.2009.

- Voorn P, Vanderschuren LJ, Groenewegen HJ, Robbins TW, Pennartz CM. Putting a spin on the dorsal–ventral divide of the striatum. Trends Neurosci. 2004;27:468–474. doi: 10.1016/j.tins.2004.06.006.

- Walton ME, Bannerman DM, Rushworth MF. The role of rat medial frontal cortex in effort-based decision making. J Neurosci. 2002;22:10996–11003. doi: 10.1523/JNEUROSCI.22-24-10996.2002.

- Walton ME, Kennerley SW, Bannerman DM, Phillips PE, Rushworth MF. Weighing up the benefits of work: behavioral and neural analyses of effort-related decision making. Neural Netw. 2006;19:1302–1314. doi: 10.1016/j.neunet.2006.03.005.

- Weiskopf N, Hutton C, Josephs O, Deichmann R. Optimal EPI parameters for reduction of susceptibility-induced BOLD sensitivity losses: a whole-brain analysis at 3 T and 1.5 T. Neuroimage. 2006;33:493–504. doi: 10.1016/j.neuroimage.2006.07.029.