Abstract

Paralysis following spinal cord injury (SCI), brainstem stroke, amyotrophic lateral sclerosis (ALS) and other disorders can disconnect the brain from the body, eliminating the ability to carry out volitional movements. A neural interface system (NIS)1–5 could restore mobility and independence for people with paralysis by translating neuronal activity directly into control signals for assistive devices. We have previously shown that people with longstanding tetraplegia can use an NIS to move and click a computer cursor and to control physical devices6–8. Able-bodied monkeys have used an NIS to control a robotic arm9, but it is unknown whether people with profound upper extremity paralysis or limb loss could use cortical neuronal ensemble signals to direct useful arm actions. Here, we demonstrate the ability of two people with long-standing tetraplegia to use NIS-based control of a robotic arm to perform three-dimensional reach and grasp movements. Participants controlled the arm over a broad space without explicit training, using signals decoded from a small, local population of motor cortex (MI) neurons recorded from a 96-channel microelectrode array. One of the study participants, implanted with the sensor five years earlier, also used a robotic arm to drink coffee from a bottle. While robotic reach and grasp actions were not as fast or accurate as those of an able-bodied person, our results demonstrate the feasibility for people with tetraplegia, years after CNS injury, to recreate useful multidimensional control of complex devices directly from a small sample of neural signals.

The study participants, referred to as S3 and T2 (a 58 year-old woman, and a 65 year-old man, respectively), were each tetraplegic and anarthric as a result of a brainstem stroke. Both were enrolled in the BrainGate2 pilot clinical trial (see Methods). Neural signals were recorded using a 4 × 4 mm, 96-channel microelectrode array, which was implanted in the dominant MI hand area (for S3, in November 2005, 5.3 years prior to the beginning of this study; for T2, in June 2011, 5 months prior to this study). Participants performed sessions on a near-weekly basis to carry out point-and-click actions of a computer cursor using decoded MI ensemble spiking signals7. Across four sessions in her sixth year post-implant (trial days 1952–1975), S3 used these neural signals to perform reach and grasp movements of either of two differently purposed right-handed robot arms. The DLR Light-Weight Robot III (German Aerospace Center, Oberpfaffenhofen, Germany, Fig 1b, left)10 is designed to be an assistive device that can reproduce complex arm and hand actions. The DEKA Arm System (DEKA Research and Development Corp., Manchester, NH, Fig 1b right) is a prototype advanced upper limb replacement for people with arm amputation11. T2 controlled the DEKA prosthetic limb on one session day (day 166). Both robots were operated under continuous user-driven neuronal ensemble control of arm endpoint (hand) velocity in 3D space; a simultaneously decoded neural state executed a hand action. S3 had used the DLR robot on multiple occasions over the prior year for algorithm development and interface testing, but she had no exposure to the DEKA arm prior to the sessions reported here. T2 participated in three DEKA arm sessions for similar development and testing prior to the session reported here but had no other robotic arm experience.

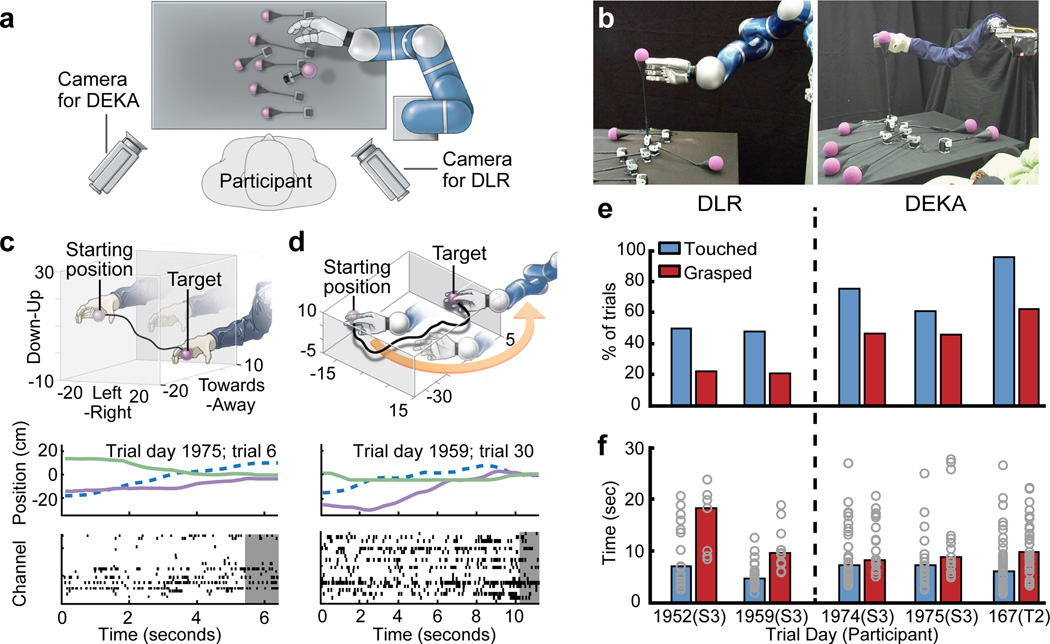

Figure 1.

Experimental setup and performance metrics. (a) Diagram showing an overhead view of participant’s location at the table (grey rectangle) from which the targets (purple spheres) were elevated by a motor. The robotic arm was positioned to the right and slightly in front of the participant (the DLR and DEKA arms were mounted in slightly different locations to maximize the correspondence of their workspaces over the table; for details, see Supplementary Fig. 9). Both video cameras were used for all DLR and DEKA sessions; labels indicate which camera was used for the photographs in (b). (b) Photographs of the DLR (left panel) and DEKA (right panel) robots. (c) Reconstruction of an example trial in which the participant moved the DEKA arm in all three dimensions to successfully reach and grasp a target. The top panel illustrates the trajectory of the hand in 3D space. The middle panel shows the position of the wrist joint for the same trajectory decomposed into each of its three dimensions relative to the participant: the left-to-right axis (dashed blue line), the near-to-far axis (purple line) and the up-down axis (green line). The bottom panel shows the threshold crossing events from all units that contributed to decoding the movement. Each row of tick marks represents the activity of one unit and each tick mark represents a threshold crossing. The grey shaded area shows the first 1 sec of the grasp. (d) An example trajectory from a DLR session in which the participant needed to move the robot hand, which started to the left of the target, around and to the right of the target in order to approach it with the open part of the hand. The middle and bottom panels are analogous to (c). (e) Percentage of trials in which the participant successfully touched the target with the robotic hand (blue bars) and successfully grasped the target (red bars). (f) Average time required to touch (blue bars) or grasp (red bars) the targets. 
Each circle shows the acquisition time for one successful trial.

To decode movement intentions from neural activity, electrical potentials from each of the 96 channels were filtered to reveal extracellular action potentials (i.e., ‘unit’ activity). Unit threshold crossings (see Methods) were used to calibrate decoders that generated velocity and hand state commands. Signals for reach were decoded using a Kalman filter12 to continuously update an estimate of the participant’s intended hand velocity. The Kalman filter was initialized during a single “open-loop” filter calibration block (< 4 min) in which the participants were asked to imagine controlling the robotic arm as they watched it undergo a series of regular, pre-programmed movements while the accompanying neural activity was recorded. This open-loop filter was then iteratively updated during four to eight “closed-loop” calibration blocks while the participant actively controlled the robot under visual feedback, with gradually decreasing levels of computer-imposed error attenuation (see Methods). To discriminate an intended hand state, a linear discriminant classifier was built on signals from the same recorded units while the participant imagined squeezing his or her hand8. On average, the decoder calibration procedure lasted ~ 31 minutes (ranging from 20–48 minutes, exclusive of time between blocks).

After decoder calibration, we assessed whether each participant could use the robotic arm to reach for and grasp 6 cm diameter foam ball targets, presented in 3D space one at a time by motorized levers (Fig. 1a–c, and Supplementary Fig. 1b). Because hand aperture was not much larger than the target size (only 1.3× larger for DLR, and 1.8× larger for DEKA) and hand orientation was not under user control, grasping targets required the participant to maneuver the arm within a narrow range of approach angles with the hand open while avoiding the target support rod below. Targets were mounted on flexible supports; brushing them with the robotic arm resulted in target displacements. Together, these factors increased task difficulty beyond simple point-to-point movements and frequently required complex curved paths or corrective actions (Fig. 1d, Supplementary Movies 1–3). Trials were judged successful or unsuccessful by two independent visual inspections of video data (see Methods). A successful “touch” trial occurred when the participant contacted the target with the hand; a successful “grasp” trial occurred when the participant closed the hand while any part of the target or the top of its supporting cone was within the volume enclosed by the hand.

In the 3D reach-and-grasp task, S3 performed 158 trials across 4 sessions and T2 performed 45 trials in a single session (Table 1; Fig. 1e,f). S3 touched the target within the allotted time in 48.8% of the DLR and 69.2% of the DEKA trials, and T2 touched the target within the allotted time in 95.6% of trials (Supplementary Movies 1–3, Supplementary Fig. 2). Of the successful touches, S3 grasped the target 43.6% (DLR) and 66.7% (DEKA) of the time, while T2 grasped the target 65.1% of the time. Of all trials, S3 grasped the target 21.3% (DLR) and 46.2% (DEKA) of the time, and T2 grasped the target 62.2% of the time. In all sessions from both participants, performance was significantly higher than expected by chance alone (Supplementary Fig. 3). For S3, times to touch were approximately the same for both robotic arms (Fig. 1f, blue bars; median 6.2 ± 5.4 sec) and were comparable to times for T2 (6.1 ± 5.5 sec). The times for combined reach and grasp were similar for both participants (S3, 9.4 ± 6.2 sec; T2, 9.5 ± 5.5 sec), although for the first DLR session, times were about twice as long.

Table 1.

Summary of neurally-controlled robotic arm target acquisition trials

| | Trial Day 1952 S3 (DLR) | Trial Day 1959 S3 (DLR) | Trial Day 1974 S3 (DEKA) | Trial Day 1975 S3 (DEKA) | Trial Day 166 T2 (DEKA) |
|---|---|---|---|---|---|
| Number of Trials | 32 | 48 | 45 | 33 | 45 |
| Targets Contacted | 16 (50.0%) | 23 (47.9%) | 34 (75.6%) | 20 (60.6%) | 43 (95.6%) |
| Grasped | 7 (21.9%) | 10 (20.8%) | 21 (46.7%) | 15 (45.5%) | 28 (62.2%) |
| Grasped: Time to Touch (s) | 5.4 ± 6.9 | 5.4 ± 2.3 | 6.1 ± 4.9 | 6.8 ± 3.6 | 5.5 ± 4.7 |
| Grasped: Time to Grasp (s) | 18.2 ± 6.4 | 9.5 ± 4.5 | 8.2 ± 4.9 | 8.8 ± 8.0 | 9.5 ± 5.5 |
| Touched only | 9 (28.1%) | 13 (27.1%) | 13 (28.9%) | 5 (15.1%) | 15 (33.3%) |
| Touched only: Time to Touch (s) | 7.0 ± 6.2 | 4.6 ± 3.0 | 10.7 ± 6.5 | 9.4 ± 8.0 | 7.1 ± 6.8 |
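The pooled percentages quoted in the text can be recomputed from the per-session counts in Table 1. The following is a minimal sketch of that arithmetic; the dictionary layout and the function name `pooled_rates` are illustrative choices, not part of the study software.

```python
# Per-session counts transcribed from Table 1:
# (robot, number of trials, targets contacted, targets grasped).
SESSIONS = {
    "S3 day 1952": ("DLR", 32, 16, 7),
    "S3 day 1959": ("DLR", 48, 23, 10),
    "S3 day 1974": ("DEKA", 45, 34, 21),
    "S3 day 1975": ("DEKA", 33, 20, 15),
    "T2 day 166": ("DEKA", 45, 43, 28),
}


def pooled_rates(participant, robot):
    """Pool all sessions for one participant/robot pair and return percentages."""
    rows = [v for k, v in SESSIONS.items()
            if k.startswith(participant) and v[0] == robot]
    trials = sum(r[1] for r in rows)
    touched = sum(r[2] for r in rows)
    grasped = sum(r[3] for r in rows)
    return {
        "trials": trials,
        "touch_pct": 100 * touched / trials,              # touched / all trials
        "grasp_given_touch_pct": 100 * grasped / touched,  # grasped / touched
        "grasp_pct": 100 * grasped / trials,              # grasped / all trials
    }
```

For example, `pooled_rates("S3", "DLR")` pools the two DLR sessions (80 trials), and `pooled_rates("T2", "DEKA")` reproduces the single T2 session.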

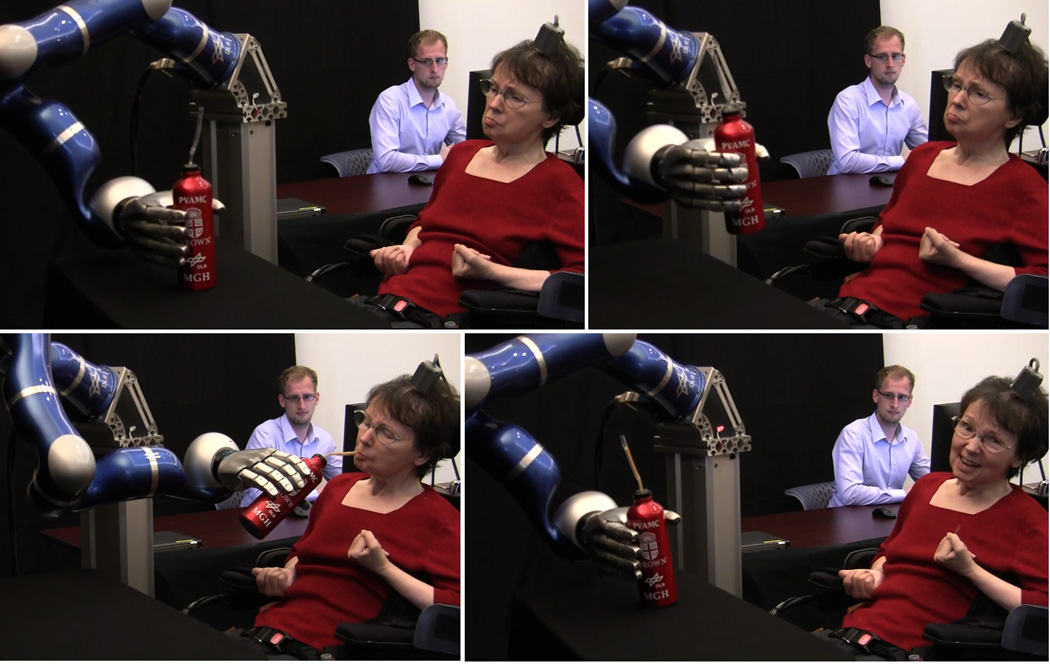

To explore the utility of NISs for facilitating activities of daily living for people with paralysis, we also assessed how well S3 could control the DLR arm as an assistive device. We asked her to reach for and pick up a bottle of coffee, and then drink from it through a straw and place it back on the table. For this task, we restricted velocity control to the 2D tabletop plane and we used the simultaneously decoded grasp state as a sequentially activated trigger for one of four different hand actions that depended upon the phase of the task and the position of the hand (see Methods). Because the 7.2 cm bottle diameter was 90% of the DLR hand aperture, grasping the bottle required even greater alignment precision than grasping the targets in the 3D task described above. Once triggered by the state switch, robust finger position and grasping of the object was achieved by automated joint impedance control. We familiarized the participant with the task for approximately 14 minutes (during which we made adjustments to the robot hand grip force, and the participant learned the physical space in which the state decode and directional commands would be effective in moving the bottle close enough to drink from a straw). After this period, the participant successfully grasped the bottle, brought it to her mouth, drank coffee from it through a straw, and replaced the bottle on the table, on 4 of 6 attempts over the next 8.5 minutes (Fig. 2, Supplementary Fig. 4 and Supplementary Movie 4). The two unsuccessful attempts (#2 and 5 in sequence) were aborted to prevent the arm from pushing the bottle off the table (because the hand aperture was not properly aligned with the bottle). This was the first time since the participant’s stroke more than 14 years earlier that she had been able to bring any drinking vessel to her mouth and drink from it solely of her own volition.

Figure 2.

Participant S3 drinking from a bottle using the DLR robotic arm. (a) Four sequential images from the first successful trial showing participant S3 using the robotic arm to grasp the bottle, bring it towards her mouth, drink coffee from the bottle through a straw (her standard method of drinking), and place the bottle back on the table. The researcher in the background was positioned to monitor the participant and robotic arm. (See Supplementary Movie 4, from which these frames are extracted.)

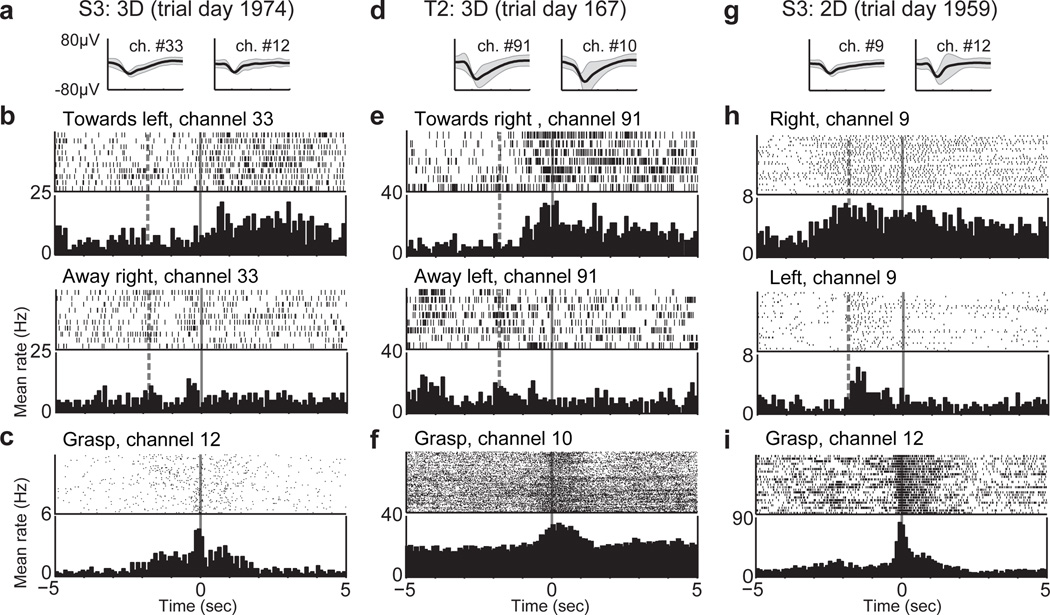

The use of NISs to restore functional movement will become practical only if chronically implanted sensors function for many years. It is thus notable that S3’s reach and grasp control was achieved using signals from an intracortical array implanted over 5 years earlier. This result, supported by multiple demonstrations of successful chronic recording capabilities in animals13–15, suggests that the goal of creating long-term intracortical interfaces is feasible. At the time of this study, S3 had lower recorded spike amplitudes and fewer channels contributing signals to the filter than during her first years of recording. Nevertheless, the units included in the Kalman filters were sufficiently directionally tuned and modulated to allow neural control of reach and grasp (Fig. 3 and Supplementary Figs. 5 and 6). S3 sometimes experiences stereotypic limb flexion. These movements did not appear to contribute in any way to her multidimensional reach and grasp control, and the neural signals used for this control exhibited waveform shapes and timing characteristics of unit spiking (Fig. 3 and Supplementary Fig. 7). Furthermore, T2 produced no consistent volitional movement during task performance, which further substantiates the intracortical origin of his neural control.

Figure 3.

Examples of neural signals from three sessions and two participants: a 3D reach and grasp session from S3 (a–c) and T2 (d–f), and the 2D drinking session from S3 (g–i). (a,d,g) Average waveforms (thick black lines) ± 2 standard deviations (grey shadows) from two units from each session with a large directional modulation of activity. (b,e,h) Rasters and histograms of threshold crossings showing directional modulation. Each row of tick marks represents a trial, and each tick mark represents a threshold crossing event. The histogram summarizes the average activity across all trials in that direction. Rasters are displayed for arm movements to and from the pair of opposing targets that most closely aligned with the selected units’ preferred directions. (b) and (e) include both closed-loop filter calibration trials and assessment trials and (h) includes only filter calibration trials. Time 0 indicates the start of the trial. The dashed vertical line 1.8 seconds before the start of the trial identifies the time when the target for the upcoming trial began to rise. Activity occurring before this time corresponded to the end of the previous trial, which often included a grasp, followed by the lowering of the previous target and the computer moving the hand to the next starting position if it wasn’t already there. (c,f,i) Rasters and histograms from calibration and assessment trials for units that modulated with intended grasp state. During closed-loop filter calibration trials, the hand automatically closed starting at time 0, cueing the participant to grasp; during assessment trials, the grasp state was decoded at time 0. Expanded data appear in Supplementary Fig 5.

We have shown that two people with no functional arm control due to brainstem stroke used the neuronal ensemble activity generated by intended arm and hand movements to make point-to-point reaches and grasps with a robotic arm across a natural human arm workspace. Moreover, S3 used these neurally-driven commands to perform an everyday task. These findings extend our previous demonstrations of point and click neural control by people with tetraplegia7,16 and show that neural spiking activity recorded from a small MI intracortical array contains sufficient information to allow people with longstanding tetraplegia to perform even more complex manual skills. This result suggests the feasibility of using cortically-driven commands to restore lost arm function for people with paralysis. In addition, we have demonstrated considerably more complex robotic control than previously demonstrated in able-bodied non-human primates (NHPs)9,17,18. Both participants operated human-scale arms in a 3D target task that required curved trajectories and precise alignments over a volume that was 1.4 to 7.7 times greater than has been used by NHPs. The drinking task, while only 2D + state control, required both careful positioning and correctly-timed hand state commands to accomplish the series of actions necessary to retrieve the bottle, drink from it, and return it to the table.

Both participants performed these multidimensional actions after longstanding paralysis. For S3, signals were adequate to achieve control 14 years and 11 months after her stroke, showing that MI neuronal ensemble activity remains functionally engaged despite subcortical damage of descending motor pathways. Future clinical research will be needed to establish whether more signals19–22, signals from additional or other areas2,23–25, better decoders, explicit participant training, or other advances (see Supplementary Materials) will provide more complex, flexible, independent, and natural control. In addition to the robotic assistive device shown here, MI signals might also be used by people with paralysis to reanimate paralyzed muscles using functional electrical stimulation (FES)27–29 or by people with limb loss to control prosthetic limbs. Whether MI signals are suitable for people with limb loss to control an advanced prosthetic arm (such as the device shown here) remains to be tested and compared to other control strategies11,26. Though further developments might enable people with tetraplegia to achieve rapid, dexterous actions under neural control, at present, for people who have no or limited volitional movement of their own arm, even the basic reach and grasp actions demonstrated here could be substantially liberating, restoring the ability to eat and drink independently.

Methods Summary

See Supplementary Information for additional Methods.

Permission for these studies was granted by the US Food and Drug Administration (Investigational Device Exemption; CAUTION: Investigational Device. Limited by Federal Law to Investigational Use Only) and the Partners Healthcare/Massachusetts General Hospital Institutional Review Board. Core elements of the investigational BrainGate system have been described previously6,7.

During each session, participants were seated in a wheelchair with their feet located near or underneath the edge of the table supporting the target placement system. The robotic arm was positioned to the participant’s right (Fig. 1a). Raw neural signals for each channel were sampled at 30 kHz and fed through custom Simulink (Mathworks Inc., Natick, MA) software in 100 ms bins (S3) or 20 ms bins (T2) to extract threshold crossing rates2,30; these threshold crossing rates were used as the neural features for real-time decoding and for filter calibration. Open- and closed-loop filter calibration was performed over several blocks, each of which was 3 to 6 minutes long and contained 18–24 trials. Targets were presented using a custom, automated target placement platform. On each trial, one of 7 servos placed its target (a 6 cm diameter foam ball supported by a spring-loaded wooden dowel rod attached to the servo) in the workspace by lifting it to its task-defined target location (Fig. 1b). Between trials, the previous trial’s target was returned to the table-top while the next target was raised. Due to variability in the position of the target-placing platform from session to session and changes in the angles of the spring-loaded rods used to hold the targets, visual inspection was used for scoring successful grasp and successful touch trials. Further details on session setup, signal processing, filter calibration, robot systems, and target presentations are given in Methods.

Methods

Permission for these studies was granted by the US Food and Drug Administration (Investigational Device Exemption; CAUTION: Investigational Device. Limited by Federal Law to Investigational Use) and the Partners Healthcare/Massachusetts General Hospital Institutional Review Board. The two participants in this study, S3 and T2, were enrolled in a pilot clinical trial of the BrainGate Neural Interface System (additional information about the clinical trial is available at http://www.clinicaltrials.gov/ct2/show/NCT00912041).

At the time of this study, S3 was a 58-year-old woman with tetraplegia caused by brainstem stroke that occurred nearly 15 years earlier. As previously reported7,31, she is unable to speak (anarthria) and has no functional use of her limbs. She has occasional bilateral or asymmetric flexor spasm movements of the arms that are intermittently initiated by any imagined or actual attempt to move. S3’s sensory pathways remain intact. She also retains some head movement and facial expression, has intact eye movement, and breathes spontaneously. On November 30, 2005, a 96-channel intracortical silicon microelectrode array (1.5mm electrode length, produced by Cyberkinetics Neurotechnology Systems, Inc, and now by its successor, Blackrock Microsystems, Salt Lake City, UT) was implanted in the arm area of motor cortex as previously described6,7. One month later, S3 began regularly participating in ~1–2 research sessions per week during which neural signals were recorded and tasks were performed toward the development, assessment, and improvement of the neural interface system. The data reported here are from S3’s trial days 1952 to 1975, more than 5 years after implant of the array. Participant S3 has provided permission for photographs, videos and portions of her protected health information to be published for scientific and educational purposes.

The second study participant, referred to as T2, is a 65 year-old ambidextrous man with tetraplegia and anarthria as a result of a brainstem stroke that occurred in 2006, five and a half years prior to the collection of the data presented in this report. He has a tracheostomy and percutaneous gastrostomy (PEG) tube; he receives supportive mechanical ventilation at night but breathes without assistance during the day, and receives all nutrition via PEG. He has a left abducens palsy with intermittent diplopia. He can rotate his head slowly over a limited range of motion. With the exception of unreliable and trace right wrist and index finger extension (but not flexion), he is without voluntary movement at and below C5. Occasional coughing results in involuntary hip flexion, and intermittent, rhythmic chewing movements occur without alteration in consciousness. Participant T2 also had a 96 channel Blackrock array with 1.5mm electrodes implanted into the dominant arm-hand area of motor cortex; the array was placed 5 months prior to the session reported here.

Setup

During each session, the participant was seated in her/his wheelchair with her/his feet located underneath the edge of the table supporting the target placement system. The robot arm was positioned to the participant’s right (Fig. 1a). A technician used aseptic technique to connect the 96-channel recording cable to the percutaneous pedestal and then viewed neural signal waveforms using commercial software (Cerebus Central, Blackrock Microsystems, Salt Lake City, UT). The waveforms were used to identify channels that were not recording signals and/or were contaminated with noise; for S3, those channels were manually excluded and remained off for the remainder of the recording session.

Robot systems

We used two robot systems with multi-joint arms and hands during this study. The first was the DLR Light-Weight Robot III10,32 with the DLR Five-Finger Hand33 developed at the German Aerospace Center (DLR). The arm weighs 14 kg and has 7 degrees of freedom (DoF). The hand has 15 active DoF, which were combined into a single DoF (hand open/close) to execute a grasp for these experimental sessions. Torque sensors are embedded in each joint of the arm and hand, allowing the system to operate under impedance control and enabling it to handle collisions safely, which is desirable for human-robot interactions34. The hand orientation was fixed in Cartesian space. The second robotic system was the DEKA Generation 2 prosthetic arm system, which weighs 3.64 kg and has 6 DoF in the arm (shoulder abduction, shoulder flexion, humeral rotation, elbow flexion, wrist flexion, and wrist rotation) and 4 DoF in the hand (also combined into a single DoF to execute a grasp for these experimental sessions). The DEKA hand orientation was kept fixed in joint space; therefore, it could change in Cartesian space depending upon the posture of the other joints derived from the inverse kinematics.

Both robotic arms were controlled in endpoint velocity space while a parallel state switch, also under neural control from the same cortical ensemble, controlled grasp. Virtual boundaries were placed in the workspace as part of the control software to avoid collisions with the tabletop, support stand, and participant. Of the 158 trials performed by S3, 80 were carried out during the first two sessions using the DLR arm and 78 during the two sessions using the DEKA arm.

Target presentation

Targets were defined using a custom, automated servo-based robotic platform. On each trial, one of the 7 servos placed its target (a 6 cm diameter foam ball attached to the servo via a spring-loaded wooden dowel rod) in the workspace by lifting it to its task-defined target location. Between trials, the previous target was returned to the table while the next target was raised to its position. The trials alternated between the lower right ‘home’ target and one of the other six targets. The targets spanned a volume measuring 30 cm from left to right, 52 cm in depth, and 23 cm vertically (see Supplementary Figs. 1 and 9).

Due to variability in the position of the target-placing platform from session to session and changes in the angles of the spring-loaded rods used to hold the targets, estimates of true target locations in physical space relative to the software-defined targets were not exact. This target placement error had no impact on the 3D reach and grasp task because the goal of the task was to grab the physical target regardless of its exact location. However, for this reason, it was not possible to use an automated method for scoring touches and grasps. Instead, scoring was performed by visual inspection of the videos: for S3, by a group of three investigators (N.Y.M., D.B., and B.J.) and independently by a fourth investigator (L.R.H.); for T2, independently by four investigators (J.D.S., D.B., B.J., and L.R.H.). Of 203 trials, there was initial concordance in scoring in 190. The remaining 13 were re-reviewed using a second video taken from a different camera angle; either a unanimous decision was reached (n = 10) or, when any discordance in voting remained unresolved, the more conservative score was assigned (n = 3).

Signal acquisition

Raw neural signals for each channel were sampled at 30 kHz and fed through custom Simulink (Mathworks Inc., Natick, MA) software in 100 ms bins (for participant S3) or 20 ms bins (for participant T2). For participant T2, coincident noise in the raw signal was reduced using common-average referencing: from the 50 channels with the lowest impedance, we selected the 20 with the lowest firing rates. The mean signal from these 20 channels was subtracted from all 96 channels.
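The common-average referencing step described for T2 can be sketched as follows. This is a minimal illustration assuming per-channel impedance and firing-rate estimates are already available as arrays; the function name and argument names are hypothetical and do not come from the study's Simulink software.

```python
import numpy as np


def common_average_reference(raw, impedance, firing_rate, n_low_z=50, n_ref=20):
    """Subtract a common reference from every channel.

    raw:         (n_channels, n_samples) raw signals
    impedance:   (n_channels,) electrode impedances
    firing_rate: (n_channels,) firing-rate estimates
    Returns the re-referenced signals and the indices of the reference channels.
    """
    raw = np.asarray(raw, dtype=float)
    firing_rate = np.asarray(firing_rate, dtype=float)
    # Step 1: the n_low_z channels with the lowest impedance.
    low_z = np.argsort(impedance)[:n_low_z]
    # Step 2: among those, the n_ref channels with the lowest firing rates.
    ref_channels = low_z[np.argsort(firing_rate[low_z])[:n_ref]]
    # Step 3: subtract their mean signal from all channels.
    reference = raw[ref_channels].mean(axis=0)
    return raw - reference, ref_channels
```

Choosing quiet, low-impedance channels for the reference presumably keeps genuine spiking activity out of the subtracted signal while still capturing noise shared across the array.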

To extract threshold crossing rates2,30, signals in each bin were then filtered with a 4th order Butterworth filter with corners at 250 and 5000 Hz, temporally reversed, and filtered again. Neural signals were buffered for 4 ms before filtering to avoid edge effects. This symmetric (non-causal) filter is better matched to the shape of a typical action potential35, and using this method led to better extraction of low-amplitude action potentials from background noise and higher directional modulation indices than would be obtained using a causal filter. Threshold crossings were counted as follows. For computational efficiency, signals were divided into 2.5 ms (for S3) or 0.33 ms (for T2) sub-bins, and in each sub-bin, the minimum value was calculated and compared to a threshold. For S3, this threshold was set at −4.5 times the filtered signal’s root-mean-square (RMS) value in the previous block. For T2, this threshold was set at −5.5 times the RMS of the distribution of minimum values collected from each sub-bin. (Offline analysis showed that these two methods produced similar threshold values relative to noise amplitude). To prevent large spike amplitudes from inflating the RMS estimate for both S3 and T2, signal values were capped between 40 µV and −40 µV before calculating this threshold for each channel. The number of minima that exceeded the channel’s threshold was then counted in each bin, and these threshold crossing rates were used as the neural features for real-time decoding and for closed-loop filter calibration.
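The sub-bin counting scheme above can be illustrated with a short sketch. It assumes the signal has already been band-pass filtered and is expressed in µV; the default of 75 samples per sub-bin corresponds to 2.5 ms at 30 kHz (S3), and the RMS-based threshold follows the S3 description. Function names are illustrative assumptions.

```python
import numpy as np


def rms_threshold(filtered_uv, multiplier=-4.5, cap_uv=40.0):
    """Threshold as a (negative) multiple of the RMS of the capped signal.

    In the text, the RMS for S3 came from the previous block; here it is
    computed from whatever samples are passed in.
    """
    clipped = np.clip(np.asarray(filtered_uv, dtype=float), -cap_uv, cap_uv)
    return multiplier * np.sqrt(np.mean(clipped ** 2))


def count_crossings(bin_uv, threshold, sub_bin_samples=75):
    """Count sub-bins whose minimum value falls below the threshold."""
    x = np.asarray(bin_uv, dtype=float)
    n_sub = len(x) // sub_bin_samples
    # One minimum per sub-bin; trailing samples that do not fill a sub-bin are dropped.
    minima = x[: n_sub * sub_bin_samples].reshape(n_sub, sub_bin_samples).min(axis=1)
    return int(np.sum(minima < threshold))
```

Because only one minimum is kept per sub-bin, two deflections inside the same sub-bin count as a single crossing, which is the price of the computational shortcut.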

Filter calibration

Filter calibration was performed at the beginning of each session using data acquired over several “blocks” of 18–24 trials (each block lasting approximately 3 to 6 minutes). The process began with one open-loop filter initialization block, in which the participant was instructed to imagine that s/he was controlling the movements of the robot arm as it performed pre-programmed movements along the cardinal axes. The trial sequence was a center-out-back pattern. Each block began with the endpoint of the robot arm at the “home” target in the middle of the workspace. The hand would then move to a randomly selected target (distributed equidistant from the home target on the cardinal axes), pause there for 2 seconds, and then move back to the home target. This pattern was repeated 2 to 3 times for each target. To initialize the Kalman filter12,36, a tuning function was estimated for each unit by regressing its threshold crossing rates against instantaneous target directions (see below). In participant T2, a 0.3 sec. exponential smoothing filter was applied to the threshold crossing rates before filter calibration.
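For orientation, one predict/update cycle of a standard Kalman filter for velocity decoding is sketched below. The text does not give the filter equations (it cites refs 12 and 36), so this is the textbook form under stated assumptions: the state `x` is the 3D endpoint velocity, the observation `z` is the vector of baseline-subtracted threshold crossing rates, `H` holds the tuning functions, and `A`, `W`, `Q` are assumed state-transition and noise-covariance matrices. All names are illustrative.

```python
import numpy as np


def kalman_step(x, P, z, A, W, H, Q):
    """One Kalman filter cycle.

    x: (3,) previous velocity estimate      P: (3, 3) its covariance
    z: (n_units,) baseline-subtracted rates
    A, W: state transition and process-noise covariance
    H, Q: observation (tuning) matrix and observation-noise covariance
    """
    # Predict the next state from the motion model.
    x_pred = A @ x
    P_pred = A @ P @ A.T + W
    # Update the prediction with the neural observation.
    S = H @ P_pred @ H.T + Q
    K = P_pred @ H.T @ np.linalg.inv(S)   # Kalman gain
    x_new = x_pred + K @ (z - H @ x_pred)
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new
```

In this formulation, the tuning functions estimated by regression during calibration would populate `H`, and the residuals of that regression would give an estimate of `Q`.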

Open-loop filter initialization was followed by several blocks of closed-loop filter calibration (adapted to the Kalman filter from Taylor et al.37 and Jarosiewicz et al.38), in which the participant actively controlled the robot to acquire targets, in a similar home-out-back paradigm, but with the home target at the right of the workspace (Supplementary Fig. 1). In each closed-loop filter calibration block, the error in the participant’s decoded trajectories was attenuated by scaling down decoded movement commands orthogonal to the instantaneous target direction by a fixed percentage, similar to the technique used by Velliste et al.9. The amount of error attenuation was decreased across filter calibration blocks until it was zero, giving the participant full 3D control of the robot.
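The error attenuation described above amounts to splitting the decoded velocity into a component along the instantaneous target direction and a component orthogonal to it, then shrinking only the orthogonal part. A minimal sketch, assuming `attenuation` is a fraction between 0 (full user control) and 1 (all orthogonal error removed); the function name is hypothetical:

```python
import numpy as np


def attenuate_error(v_decoded, endpoint, target, attenuation):
    """Scale down the velocity component orthogonal to the target direction."""
    v = np.asarray(v_decoded, dtype=float)
    to_target = np.asarray(target, dtype=float) - np.asarray(endpoint, dtype=float)
    dist = np.linalg.norm(to_target)
    if dist == 0.0:
        return v  # direction undefined at the target; leave the command unchanged
    u = to_target / dist                 # unit vector toward the target
    parallel = np.dot(v, u) * u          # component toward (or away from) the target
    orthogonal = v - parallel            # the "error" component
    return parallel + (1.0 - attenuation) * orthogonal
```

With `attenuation=0.5`, a decoded velocity of `[1, 1, 0]` toward a target lying along the first axis becomes `[1, 0.5, 0]`; lowering the attenuation to zero across blocks hands full 3D control to the participant.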

During each closed-loop filter calibration block, the participant’s intended movement direction at each moment was inferred to be from the current endpoint of the robot hand toward the center of the target. Time bins from 0.2 to 3.2 seconds after the trial start were used to calculate tuning functions and the baseline rates (see below) by regressing threshold crossing rates from each bin against the corresponding unit vector pointing in the intended movement direction; using this time period was meant to isolate the initial portion of each trial, during which the participant’s intended movement direction was less likely to be influenced by error correction. Times when the endpoint was within 6 cm of the target were also excluded, because angular error in the estimation of the intended direction is magnified as the endpoint gets closer to the target.
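The selection of calibration samples can be sketched as a mask over time bins together with the inferred unit direction vectors. This sketch assumes positions in cm and times in seconds relative to trial start; treating exactly 6 cm as included is an assumption, as are the function and argument names.

```python
import numpy as np


def calibration_samples(t, endpoint, target, t_min=0.2, t_max=3.2, min_dist_cm=6.0):
    """Return intended-direction unit vectors and a mask of usable bins.

    t:        (n_bins,) time since trial start (s)
    endpoint: (n_bins, 3) robot hand position (cm)
    target:   (3,) target center (cm)
    """
    t = np.asarray(t, dtype=float)
    to_target = np.asarray(target, dtype=float) - np.asarray(endpoint, dtype=float)
    dist = np.linalg.norm(to_target, axis=1)
    # Keep the early part of the trial and drop bins too close to the target.
    keep = (t >= t_min) & (t <= t_max) & (dist >= min_dist_cm)
    dirs = np.zeros_like(to_target)
    dirs[keep] = to_target[keep] / dist[keep, None]
    return dirs, keep
```

Only the rows where `keep` is true would enter the regression of threshold crossing rates against intended direction.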

The state decoder used to control the grasping action of the robot hand was also calibrated during the same open-loop and closed-loop blocks. During open-loop blocks, after each trial ending at the home target, the robot hand would close for 2 seconds. During this time, the participant was instructed to imagine that s/he was closing his/her own hand. State decoder calibration was similar during closed-loop calibration blocks: after each home target trial, the hand moved to the home target if the participant hadn’t already moved it there, and an auditory cue instructed the participant to imagine closing his/her own hand. In closed-loop grasp calibration blocks using the DLR arm, the robot hand would only close if the state decoder successfully detected a grasp intention from the participant’s neural activity. In closed-loop calibration blocks using the DEKA arm, the hand always closed during grasp calibration irrespective of the decoded grasp state.

Sequential activation of DLR robot hand actions during the drinking task

In the drinking task, when participant S3 activated a grasp state, one of four different hand/arm actions was activated, depending upon the phase of the task and the position of the hand: 1) close the hand around the bottle and raise it off the table, 2) stop arm movement and pronate the wrist to orient the bottle towards the participant, 3) supinate the wrist back to its original position and re-enable arm movement, or 4) lower the bottle to the table and withdraw the hand.
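
The sequencing of the four actions can be pictured as a small state machine. The sketch below is a simplified illustration only: it advances on task phase alone and omits the dependence on hand position mentioned above, and the class and attribute names are hypothetical.

```python
# The four actions, in the order listed in the text.
DRINK_ACTIONS = [
    "close hand around bottle and raise it off the table",
    "stop arm movement and pronate wrist toward participant",
    "supinate wrist to original position and re-enable arm movement",
    "lower bottle to table and withdraw hand",
]

class DrinkSequencer:
    """Maps each decoded grasp event to the next action in the sequence."""

    def __init__(self):
        self.phase = 0           # index of the next action to trigger
        self.arm_enabled = True  # whether velocity commands move the arm

    def on_grasp(self):
        """Call once per decoded grasp state; returns the triggered action."""
        action = DRINK_ACTIONS[self.phase]
        if self.phase == 1:
            self.arm_enabled = False  # arm is held still while drinking
        elif self.phase == 2:
            self.arm_enabled = True   # arm movement is re-enabled
        self.phase = (self.phase + 1) % len(DRINK_ACTIONS)
        return action
```

A single binary grasp signal is thereby reused for several distinct actions, with context deciding which one is executed.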

Tracking baseline firing rates

Endpoint velocity and grasp state were decoded based on the deviation of each unit’s neural activity from its baseline rate; thus, errors in estimating the baseline rate itself may create a bias in the decoded velocity or grasp state. To reduce such biases despite potential drifts in baseline rates over time, the baseline rates were re-estimated after every block using the previous block’s data.

During filter calibration, in which the participant was instructed to move the endpoint of the hand directly towards the target, we determined the baseline rate of a channel by modeling neural activity as a linear function of the intended movement direction plus the baseline rate. Specifically, the following equation was fit: $z = \text{baseline} + Hd$, where z is the threshold crossing rate, H is the channel’s preferred direction, and d is the intended movement direction. As described above for the filter calibration, only data during the initial portion of the trial, from 0.2 to 3.2 seconds after trial start, was used to fit the model. Only the last block’s data was used to estimate each unit’s baseline rate for use during decoding in the following block (unless the last block was aborted for a technical reason, in which case the baseline rates were taken from the last full block).
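
The linear model above can be fit by ordinary least squares with an intercept term. The following is a minimal sketch, assuming NumPy is available; the function name and array layout are choices made for this example rather than details from the study.

```python
import numpy as np

def fit_baseline_and_tuning(rates, directions):
    """Fit z = baseline + H d for every channel by least squares.

    rates:      (n_bins, n_channels) threshold crossing rates.
    directions: (n_bins, 3) unit vectors of intended movement direction.
    Returns baseline with shape (n_channels,) and H with shape (n_channels, 3).
    """
    rates = np.asarray(rates, dtype=float)
    directions = np.asarray(directions, dtype=float)
    # Design matrix: a column of ones (baseline) plus the three direction terms.
    X = np.column_stack([np.ones(len(directions)), directions])
    coef, *_ = np.linalg.lstsq(X, rates, rcond=None)
    baseline = coef[0]
    H = coef[1:].T
    return baseline, H
```

Fitting the intercept jointly with the directional terms keeps the baseline estimate from being skewed when the sampled directions are not balanced across the workspace.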

This method for baseline rate tracking was not used for S3’s drinking demonstration or for the blocks in which the participant was instructed to reach and grasp the targets because it could no longer be assumed that the participant was intending to move the endpoint of the hand directly towards the target (Fig. 1d). For these blocks, the mean threshold crossing rate of each unit across the entire block was used as a proxy for its baseline rate. Mean rates did not differ substantially from baseline rates calculated from the same block (data not shown).

Hand velocity and grasp filters

During closed-loop blocks, the endpoint velocity of the robot arm and the state of the hand were controlled in parallel by decoded neural activity, and were updated every 100 ms for S3, and every 20 ms for T2. The desired endpoint velocity was decoded using a Kalman filter7,8,12,36. The Kalman filter requires four sets of parameters, two of which were calculated based on the mean-subtracted (and for T2, smoothed with a 0.3 sec exponential filter) threshold crossing rate, $\bar{z}$, and the intended direction, d, while the other two parameters were hard coded. The first parameter was the directional tuning, H, calculated as $H = \bar{z} d^{T} (d d^{T})^{-1}$. The second parameter, Q, was the error variance in linearly reconstructing the neural activity, $Q = (\bar{z} - Hd)(\bar{z} - Hd)^{T}$. The two hard-coded parameters were the state transition matrix A, which predicts the intended direction given the previous estimate, $d(t) = A\,d(t-1)$, and the error in this model, $W = (d(t) - A\,d(t-1))(d(t) - A\,d(t-1))^{T}$.

These values were set to A = 0.965I for both S3 and T2, and W = 0.03I for S3 and W = 0.012I for T2, where I is the identity matrix (W was set to a lower value for T2 to achieve a similar endpoint “inertia” as for S3 despite the smaller bin size used for T2). From past experience, it was found that fitting these two parameters from the perfectly smoothed open-loop kinematics data produced too much inertia in the commanded movement to properly control the robot arm, though this may have been a function of the relative paucity of signals rather than a suboptimal component of the decoding algorithm.
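
To make the role of the four parameters concrete, the sketch below estimates H and Q from calibration data and applies one textbook Kalman predict/update step with hard-coded A and W. It is an illustration under stated assumptions, not the study's implementation: the function names are hypothetical, Q is normalised by the number of bins (the text does not state a normalisation), and NumPy is assumed to be available.

```python
import numpy as np

def calibrate(z_centered, d):
    """Estimate tuning H and observation noise Q.

    z_centered: (n_channels, n_bins) mean-subtracted threshold crossing rates.
    d:          (3, n_bins) intended direction for each bin.
    """
    H = z_centered @ d.T @ np.linalg.inv(d @ d.T)
    resid = z_centered - H @ d
    Q = resid @ resid.T / d.shape[1]  # assumption: divide by number of bins
    return H, Q

def kalman_step(x_prev, P_prev, z, H, Q, A, W):
    """One standard Kalman filter step.

    x_prev, P_prev: previous state estimate (3,) and its covariance (3, 3).
    z:              current mean-subtracted rates, shape (n_channels,).
    """
    # Predict from the state model d(t) = A d(t-1) with noise W.
    x_pred = A @ x_prev
    P_pred = A @ P_prev @ A.T + W
    # Update with the observation model z = H d with noise Q.
    S = H @ P_pred @ H.T + Q
    K = P_pred @ H.T @ np.linalg.inv(S)
    x_new = x_pred + K @ (z - H @ x_pred)
    P_new = (np.eye(len(x_prev)) - K @ H) @ P_pred
    return x_new, P_new
```

With A = 0.965I, each prediction shrinks the previous estimate slightly toward zero, and W sets how readily the estimate follows new observations, which is one way to read the “inertia” mentioned above.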

To select channels to be included in the filter, we first defined a “modulation index” as the magnitude of a unit’s modeled preferred direction vector (i.e., the amplitude of its cosine fit from baseline to peak rate), in Hz. When unit vectors are used for the intended movement direction in the filter calibration regression, this is equivalent to $\lVert H_i \rVert$, where $H_i$ is the row of the model matrix H that corresponds to channel i. We further defined a “normalized modulation index” as the modulation index normalized by the standard deviation of the residuals of the unit’s cosine fit. Thus, a unit with no directional tuning would have a normalized modulation index of 0, a unit whose directional modulation is equal to the standard deviation of its residuals would have a normalized modulation index of 1, and a unit whose directional modulation is larger than the standard deviation of its residuals would have a normalized modulation index greater than 1. We included all channels with baseline rates below 100 Hz and with normalized modulation indices above 0.1 for S3 and 0.05 for T2. For T2, we included a maximum of 50 channels; channels with the lowest normalized modulation indices were excluded if this limit was exceeded. Across the six sessions, the number of channels included in the Kalman filter ranged from 13 to 50 (see Supplementary Table 1 and Supplementary Fig. 8).
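
The channel selection rule can be written compactly. The sketch below is illustrative: the function name and argument names are hypothetical, and treating a channel with zero residual standard deviation as untuned is an assumption made to avoid division by zero.

```python
import math

def select_channels(H, residual_sd, baseline,
                    nmi_min=0.1, max_rate=100.0, max_channels=None):
    """Return indices of channels to include in the velocity filter.

    H:            per-channel preferred direction vectors (rows of H), in Hz.
    residual_sd:  per-channel standard deviation of cosine-fit residuals.
    baseline:     per-channel baseline rates, in Hz.
    nmi_min:      threshold on the normalized modulation index.
    max_channels: optional cap; the lowest-scoring channels are dropped.
    """
    scored = []
    for ch, (h, sd, base) in enumerate(zip(H, residual_sd, baseline)):
        modulation = math.sqrt(sum(c * c for c in h))  # modulation index
        nmi = modulation / sd if sd > 0 else 0.0       # assumed handling of sd == 0
        if base < max_rate and nmi > nmi_min:
            scored.append((nmi, ch))
    scored.sort(reverse=True)  # highest normalized modulation index first
    if max_channels is not None:
        scored = scored[:max_channels]
    return sorted(ch for _, ch in scored)
```

Under the thresholds quoted above, S3's sessions would correspond to `nmi_min=0.1` with no cap, and T2's session to `nmi_min=0.05` with `max_channels=50`.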

The state decoder used for hand grasp was built using similar methods, as previously described8. Briefly, threshold crossings were summed over the previous 300 ms, and linear discriminant analysis was used to separate threshold crossing counts arising when the participant was intending to close the hand from those arising when s/he was imagining moving the arm. For the state decoder, we used all channels that were not turned off at the start of the session (see Setup in Methods) and whose baseline threshold crossing rates, calculated from the previous block, were between 0.5 Hz and 100 Hz. Additionally for T2, we only included channels if the difference in mean rates during grasp vs. move states divided by the firing rate standard deviation (the d-prime score) was above 0.05. As for the Kalman filter, we included a maximum of 50 channels in the state decoder for T2; channels with the lowest d-prime scores were excluded if this limit was exceeded. Across the six sessions, the number of channels included in the state decoder ranged from 16 to 50 (see Supplementary Table 1). Immediately after a grasp was decoded, the Kalman prior was reset to zero. For both robot systems, at the end of a trial, velocity commands were suspended and the arm was repositioned under computer control to the software-expected position of the current target, in order to prepare the arm to enable the collection of metrics for the next 3D point-to-point reach. Additionally, during the DEKA sessions, 3D velocity commands were suspended during grasps (which lasted 2 sec).
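
A two-class linear discriminant of the kind described above can be sketched as follows. This is a generic illustration, assuming NumPy: the function names are hypothetical, the d-prime score uses the absolute mean difference divided by the standard deviation pooled over both states (the text does not specify which standard deviation was used), and the discriminant assumes equal class priors and a shared covariance.

```python
import numpy as np

def d_prime(grasp, move):
    """Per-channel separation between grasp and move states.

    grasp, move: (n_samples, n_channels) summed threshold crossing counts.
    """
    grasp = np.asarray(grasp, dtype=float)
    move = np.asarray(move, dtype=float)
    sd = np.vstack([grasp, move]).std(axis=0)
    diff = np.abs(grasp.mean(axis=0) - move.mean(axis=0))
    return np.divide(diff, sd, out=np.zeros_like(diff), where=sd > 0)

def fit_lda(grasp, move):
    """Fit a two-class linear discriminant; returns weights w and offset b."""
    grasp = np.asarray(grasp, dtype=float)
    move = np.asarray(move, dtype=float)
    mu_g, mu_m = grasp.mean(axis=0), move.mean(axis=0)
    centered = np.vstack([grasp - mu_g, move - mu_m])
    cov = centered.T @ centered / (len(centered) - 2)  # pooled covariance
    w = np.linalg.solve(cov, mu_g - mu_m)
    b = -0.5 * w @ (mu_g + mu_m)
    return w, b

def is_grasp(counts, w, b):
    """Classify one vector of summed counts; True means a grasp state."""
    return float(np.asarray(counts, dtype=float) @ w + b) > 0.0
```

In use, `counts` would hold each included channel's threshold crossings summed over the previous 300 ms, and channels would first be screened with `d_prime` as described for T2.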

Bias correction

For T2, a bias correction method was implemented to reduce biases in the decoded velocity caused by within-block nonstationarities in the neural signals. At each moment, the velocity bias was estimated by computing an exponentially-weighted running mean (with a 30 second time constant) of all decoded velocities whose speeds exceeded a predefined threshold. The threshold was set to the 66th percentile of the decoded speeds estimated during the most recent filter calibration, which was empirically found to be high enough to include movements caused by biases as well as “true” high-velocity movements, but importantly, to exclude low-velocity movements generated in an effort to counteract any existing biases. This exponentially-weighted running mean was subtracted from the decoded velocity signals to generate a bias-corrected velocity that commanded the endpoint of the DEKA arm.
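
The bias correction can be sketched as an exponentially weighted running mean that is updated only by above-threshold velocities. The code below is a minimal illustration with several assumptions: the class and function names are hypothetical, the bias estimate starts at zero, the estimate is updated before it is subtracted, the update interval defaults to the 20 ms used for T2, and the percentile uses a simple nearest-rank rule.

```python
import math

def speed_threshold(calibration_speeds, percentile=66):
    """Nearest-rank percentile of speeds decoded during filter calibration."""
    ordered = sorted(calibration_speeds)
    rank = max(1, math.ceil(percentile / 100 * len(ordered)))
    return ordered[rank - 1]

class BiasCorrector:
    """Tracks and removes a slowly varying bias in decoded 3D velocity."""

    def __init__(self, threshold, time_constant=30.0, dt=0.02):
        self.threshold = threshold
        # Per-step weight giving an exponential window with the given time constant.
        self.alpha = 1.0 - math.exp(-dt / time_constant)
        self.bias = [0.0, 0.0, 0.0]

    def correct(self, velocity):
        speed = math.sqrt(sum(v * v for v in velocity))
        if speed > self.threshold:
            # Only high-speed samples contribute to the bias estimate.
            self.bias = [b + self.alpha * (v - b)
                         for b, v in zip(self.bias, velocity)]
        return [v - b for v, b in zip(velocity, self.bias)]
```

Excluding low-speed samples from the running mean means that small corrective movements made by the participant do not themselves get absorbed into the bias estimate.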

Acknowledgements

We thank participants S3 and T2 for their dedication to this research. We thank Michael Black for initial guidance in the BrainGate-DLR research. We thank Erin Gallivan, Etsub Berhanu, David Rosler, Laurie Barefoot, Katherine Centrella, and Brandon King for their contributions to this research. We thank Kim Knoper for assistance with illustrations. We thank Dirk Van Der Merwe and DEKA Research and Development for their technical support. The contents do not represent the views of the Department of Veterans Affairs or the United States Government. Research supported by the Rehabilitation Research and Development Service, Office of Research and Development, Department of Veterans Affairs (Merit Review Awards: B6453R and A6779I; Career Development Transition Award B6310N). Support also provided by NIH: NINDS/NICHD (RC1HD063931), NIDCD (R01DC009899), NICHD-NCMRR (N01HD53403 and N01HD10018), NIBIB (R01EB007401), NINDS-Javits (NS25074); a Memorandum of Agreement between the Defense Advanced Research Projects Agency (DARPA) and the Department of Veterans Affairs; the Doris Duke Charitable Foundation; the MGH-Deane Institute for Integrated Research on Atrial Fibrillation and Stroke; Katie Samson Foundation; Craig H. Neilsen Foundation; the European Commission’s Seventh Framework Programme through the project The Hand Embodied (grant 248587). The pilot clinical trial into which participant S3 was recruited was sponsored in part by Cyberkinetics Neurotechnology Systems, Inc. (CKI).

JPD is a former Chief Scientific Officer and director of CKI; he held stocks and received compensation. LRH received research support from Massachusetts General and Spaulding Rehabilitation Hospitals, which in turn received clinical trial support from CKI. JDS received compensation as a consultant for CKI. CKI ceased operations in 2009, prior to the collection of data reported in this manuscript. The BrainGate pilot clinical trials are now administered by Massachusetts General Hospital.

Footnotes

Supplementary Information is linked to the online version of the paper at www.nature.com/nature.

Author Contributions: J.P.D. and L.R.H. conceived, planned and directed the BrainGate research and the DEKA sessions. J.P.D., L.R.H. and P.vdS. conceived, planned and directed the DLR robot control sessions. J.P.D. and P.vdS. are co-senior authors. D.B., B.J., N.Y.M., J.D.S., and J.V. contributed equally and are listed alphabetically. J.D.S., J.V., and D.B. developed the BrainGate-DLR interface. D.B., J.D.S., and J.L. developed the BrainGate-DEKA interface. D.B. and J.V. created the 3-D motorized target placement system. B.J., N.Y.M., and D.B. designed the behavioral task, the neural signal processing approach, the filter building approach, and the performance metrics. B.J., N.Y.M. and D.B. performed data analysis, further guided by L.R.H., J.D.S., and J.P.D. N.Y.M., L.R.H., and J.P.D. drafted the manuscript, which was further edited by all authors. D.B. and J.D.S. engineered the BrainGate Neural Interface System/Assistive Technology System. J.V., and S.H. developed the reactive planner for the LWR. S.H. developed the internal control framework of the LWR. The internal control framework of the DEKA arm was developed by DEKA. L.R.H. is principal investigator of the pilot clinical trial. S.S.C. is clinical co-investigator of the pilot clinical trial and assisted in the clinical oversight of these participants.

Author Information

Reprints and permissions information is available at www.nature.com/reprints.

The authors declare competing financial interests: details accompany the full-text HTML version of the paper at (url of journal website).

References

- 1. Donoghue JP. Bridging the brain to the world: a perspective on neural interface systems. Neuron. 2008;60:511–521. doi: 10.1016/j.neuron.2008.10.037.

- 2. Gilja V, et al. Challenges and Opportunities for Next-Generation Intra-Cortically Based Neural Prostheses. IEEE Trans Biomed Eng. 2011 doi: 10.1109/TBME.2011.2107553.

- 3. Schwartz AB, Cui XT, Weber DJ, Moran DW. Brain-controlled interfaces: Movement restoration with neural prosthetics. Neuron. 2006;52:205–220. doi: 10.1016/j.neuron.2006.09.019.

- 4. Nicolelis MAL, Lebedev MA. Principles of neural ensemble physiology underlying the operation of brain-machine interfaces. Nature Reviews Neuroscience. 2009;10:530–540. doi: 10.1038/nrn2653.

- 5. Green AM, Kalaska JF. Learning to move machines with the mind. Trends Neurosci. 2011;34:61–75. doi: 10.1016/j.tins.2010.11.003.

- 6. Hochberg LR, et al. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006;442:164–171. doi: 10.1038/nature04970.

- 7. Simeral JD, Kim SP, Black MJ, Donoghue JP, Hochberg LR. Neural control of cursor trajectory and click by a human with tetraplegia 1000 days after implant of an intracortical microelectrode array. J Neural Eng. 2011;8:025027. doi: 10.1088/1741-2560/8/2/025027.

- 8. Kim SP, et al. Point-and-click cursor control with an intracortical neural interface system by humans with tetraplegia. IEEE Trans Neural Syst Rehabil Eng. 2011;19:193–203. doi: 10.1109/TNSRE.2011.2107750.

- 9. Velliste M, Perel S, Spalding MC, Whitford AS, Schwartz AB. Cortical control of a prosthetic arm for self-feeding. Nature. 2008;453:1098–1101. doi: 10.1038/nature06996.

- 10. Albu-Schaffer A, et al. The DLR lightweight robot: design and control concepts for robots in human environments. Ind Robot. 2007;34:376–385.

- 11. Resnik L. Research update: VA Study to Optimize the DEKA Arm. Journal of Rehabilitation Research and Development. 2010;47:Ix–X.

- 12. Wu W, Gao Y, Bienenstock E, Donoghue JP, Black MJ. Bayesian population decoding of motor cortical activity using a Kalman filter. Neural Comput. 2006;18:80–118. doi: 10.1162/089976606774841585.

- 13. Suner S, Fellows MR, Vargas-Irwin C, Nakata GK, Donoghue JP. Reliability of signals from a chronically implanted, silicon-based electrode array in non-human primate primary motor cortex. IEEE Trans Neural Syst Rehabil Eng. 2005;13:524–541. doi: 10.1109/TNSRE.2005.857687.

- 14. Chestek CA, et al. Long-term stability of neural prosthetic control signals from silicon cortical arrays in rhesus macaque motor cortex. J Neural Eng. 2011;8:045005. doi: 10.1088/1741-2560/8/4/045005.

- 15. Kruger J, Caruana F, Volta RD, Rizzolatti G. Seven years of recording from monkey cortex with a chronically implanted multiple microelectrode. Front Neuroeng. 2010;3:6. doi: 10.3389/fneng.2010.00006.

- 16. Kim SP, Simeral JD, Hochberg LR, Donoghue JP, Black MJ. Neural control of computer cursor velocity by decoding motor cortical spiking activity in humans with tetraplegia. J Neural Eng. 2008;5:455–476. doi: 10.1088/1741-2560/5/4/010.

- 17. Burrow M, Dugger J, Humphrey DR, Reed DJ, Hochberg LR. International Conference on Rehabilitation Robotics; 1997. pp. 83–86.

- 18. Shin HC, Aggarwal V, Acharya S, Schieber MH, Thakor NV. Neural decoding of finger movements using Skellam-based maximum-likelihood decoding. IEEE Trans Biomed Eng. 2010;57:754–760. doi: 10.1109/TBME.2009.2020791.

- 19. Vargas-Irwin CE, et al. Decoding Complete Reach and Grasp Actions from Local Primary Motor Cortex Populations. Journal of Neuroscience. 2010;30:9659–9669. doi: 10.1523/JNEUROSCI.5443-09.2010.

- 20. Mehring C, et al. Inference of hand movements from local field potentials in monkey motor cortex. Nat Neurosci. 2003;6:1253–1254. doi: 10.1038/nn1158.

- 21. Stark E, Abeles M. Predicting movement from multiunit activity. J Neurosci. 2007;27:8387–8394. doi: 10.1523/JNEUROSCI.1321-07.2007.

- 22. Bansal AK, Vargas-Irwin CE, Truccolo W, Donoghue JP. Relationships among low-frequency local field potentials, spiking activity, and three-dimensional reach and grasp kinematics in primary motor and ventral premotor cortices. J Neurophysiol. 2011;105:1603–1619. doi: 10.1152/jn.00532.2010.

- 23. Musallam S, Corneil BD, Greger B, Scherberger H, Andersen RA. Cognitive control signals for neural prosthetics. Science. 2004;305:258–262. doi: 10.1126/science.1097938.

- 24. Mulliken GH, Musallam S, Andersen RA. Decoding Trajectories from Posterior Parietal Cortex Ensembles. Journal of Neuroscience. 2008;28:12913–12926. doi: 10.1523/JNEUROSCI.1463-08.2008.

- 25. Santhanam G, Ryu SI, Yu BM, Afshar A, Shenoy KV. A high-performance brain-computer interface. Nature. 2006;442:195–198. doi: 10.1038/nature04968.

- 26. Kuiken TA, et al. Targeted reinnervation for enhanced prosthetic arm function in a woman with a proximal amputation: a case study. Lancet. 2007;369:371–380. doi: 10.1016/S0140-6736(07)60193-7.

- 27. Moritz CT, Perlmutter SI, Fetz EE. Direct control of paralysed muscles by cortical neurons. Nature. 2008;456:639–642. doi: 10.1038/nature07418.

- 28. Pohlmeyer EA, et al. Toward the Restoration of Hand Use to a Paralyzed Monkey: Brain-Controlled Functional Electrical Stimulation of Forearm Muscles. Plos One. 2009;4 doi: 10.1371/journal.pone.0005924.

- 29. Chadwick EK, et al. Continuous neuronal ensemble control of simulated arm reaching by a human with tetraplegia. J Neural Eng. 2011;8:034003. doi: 10.1088/1741-2560/8/3/034003.

- 30. Fraser GW, Chase SM, Whitford A, Schwartz AB. Control of a brain-computer interface without spike sorting. Journal of Neural Engineering. 2009;6 doi: 10.1088/1741-2560/6/5/055004.
