Abstract

Motivated by recent work studying massive imaging data in the neuroimaging literature, we propose multivariate varying coefficient models (MVCM) for modeling the relation between multiple functional responses and a set of covariates. We develop several statistical inference procedures for MVCM and systematically study their theoretical properties. We first establish the weak convergence of the local linear estimate of coefficient functions, as well as its asymptotic bias and variance, and then we derive asymptotic bias and mean integrated squared error of smoothed individual functions and their uniform convergence rate. We establish the uniform convergence rate of the estimated covariance function of the individual functions and its associated eigenvalue and eigenfunctions. We propose a global test for linear hypotheses of varying coefficient functions, and derive its asymptotic distribution under the null hypothesis. We also propose a simultaneous confidence band for each individual effect curve. We conduct Monte Carlo simulation to examine the finite-sample performance of the proposed procedures. We apply MVCM to investigate the development of white matter diffusivities along the genu tract of the corpus callosum in a clinical study of neurodevelopment.

Keywords and phrases: Functional response, Global test statistic, Multivariate varying coefficient model, Simultaneous confidence band, Weak convergence

1. Introduction

With modern imaging techniques, massive imaging data can be observed over both time and space [41, 17, 37, 4, 19, 25]. Such techniques include functional magnetic resonance imaging (fMRI), electroencephalography (EEG), diffusion tensor imaging (DTI), positron emission tomography (PET), and single photon emission-computed tomography (SPECT), among many others. See, for example, a recent review of multiple biomedical imaging techniques and their applications in cancer detection and prevention in Fass [17]. Among them, predominant functional imaging techniques, including fMRI and EEG, have been widely used in behavioral and cognitive neuroscience to understand functional segregation and integration of different brain regions in a single subject and across different populations [19, 18, 29]. In DTI, multiple diffusion properties are measured along common major white matter fiber tracts across multiple subjects to characterize the structure and orientation of white matter in the human brain in vivo [2, 3, 54].

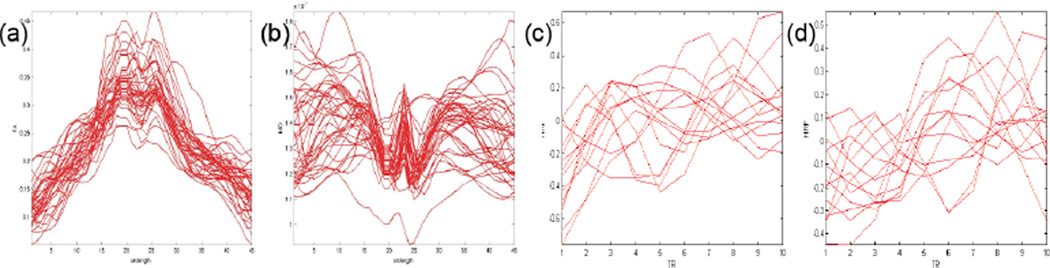

A common feature of many imaging techniques is that massive functional data are observed or calculated at the same design points, such as time for functional images (e.g., PET and fMRI). As an illustration, we present two sets of smoothed functional data that we encounter in neuroimaging studies; a real imaging data set is analyzed in Section 6. First, we plot two diffusion properties, called fractional anisotropy (FA) and mean diffusivity (MD), measured at 45 grid points along the genu tract of the corpus callosum (Figs. 1 (a) and (b)) from 40 randomly selected infants from a clinical study of neurodevelopment with more than 500 infants. Scientists are particularly interested in delineating the structure of the variability of these functional FA and MD data and their association with a set of covariates of interest, such as age. We will systematically investigate the development of FA and MD along the genu of the corpus callosum tract in Section 6. Secondly, we consider the BOLD fMRI signal, which is based on hemodynamic responses secondary to neural activity. We plot the estimated hemodynamic response functions (HRF) corresponding to two stimulus categories from 14 randomly selected subjects at a selected voxel of a common template space (Figs. 1 (c) and (d)) from a clinical study of Alzheimer’s disease with more than 100 subjects. Although the canonical form of the HRF is often used, when applying fMRI in a clinical population with possibly altered hemodynamic responses, using the subject’s own HRF in fMRI data analysis may be advantageous because HRF variability is greater across subjects than across brain regions within a subject [34, 1]. We are particularly interested in delineating the structure of the variability of the HRF and its association with a set of covariates of interest, such as diagnostic group [33].

Fig 1. Representative functional neuroimaging data: (a) and (b) FA and MD along the genu tract of the corpus callosum from 40 randomly selected infants; and (c) and (d) the estimated hemodynamic response functions (HRF) corresponding to two stimulus categories from 14 subjects.

A varying-coefficient model, which allows its regression coefficients to vary over some predictors of interest, is a powerful statistical tool for addressing these scientific questions. Since it was systematically introduced to the statistical literature by Hastie and Tibshirani [24], many varying-coefficient models have been studied and developed for longitudinal, time series, and functional data [13, 48, 12, 15, 44, 26, 38, 28, 27, 51, 23]. However, most varying-coefficient models in the existing literature are developed for a univariate response. Let yi(s) = (yi1(s), …, yiJ (s))T be a J-dimensional functional response vector for subject i, i = 1, …, n, and xi be its associated p × 1 vector of covariates of interest. Moreover, s varies in a compact subset of Euclidean space and denotes the design point, such as time for functional images and voxel for structural and functional images. For notational simplicity, we assume s ∈ [0, 1], but our results can be easily extended to higher dimensions. A multivariate varying coefficient model (MVCM) is defined as

$$y_{ij}(s) = x_i^T B_j(s) + \eta_{ij}(s) + \varepsilon_{ij}(s), \qquad j = 1, \ldots, J, \tag{1.1}$$

where Bj(s) = (bj1(s), …, bjp(s))T is a p × 1 vector of functions of s, εij(s) are measurement errors, and ηij(s) characterizes individual curve variations from $x_i^T B_j(s)$. Moreover, {ηij(s) : s ∈ [0, 1]} is assumed to be a stochastic process indexed by s ∈ [0, 1] and used to characterize the within-curve dependence. For image data, it is typical that the J functional responses yi(s) are measured at the same location for all subjects and exhibit both the within-curve and between-curve dependence structure. Thus, for ease of notation, it is assumed throughout this paper that yi(s) is measured at the same M location points s1 = 0 ≤ s2 ≤ … ≤ sM = 1 for all i.

Most varying coefficient models in the existing literature coincide with model (1.1) with J = 1 and without the within-curve dependence. Statistical inferences for these varying coefficient models have been relatively well studied. Particularly, Hoover et al. [26] and Wu, Chiang and Hoover [47] were among the first to introduce the time-varying coefficient models for analysis of longitudinal data. Recently, Fan and Zhang [15] gave a comprehensive review of various statistical procedures proposed for many varying coefficient models. It is of particular interest in data analysis to construct simultaneous confidence bands (SCB) for any linear combination of Bj instead of point-wise confidence intervals and to develop global test statistics for the general hypothesis testing problem on Bj. For univariate varying coefficient models without the within-curve dependence, Fan and Zhang [14] constructed SCB using the limit theory for the maximum of the normalized deviation of the estimate from its expected value. Faraway [16], Chiou, Müller and Wang [8], and Cardot [5] proposed several varying coefficient models and their associated estimators for a univariate functional response, but they did not give a functional central limit theorem or a simultaneous confidence band for their estimators. It has been technically difficult to carry out statistical inferences, including simultaneous confidence bands and global test statistics, on Bj in the presence of the within-curve dependence.

There have been several recent attempts to solve this problem in various settings. For time series data, which may be viewed as a case with n = 1 and M → ∞, asymptotic SCB for coefficient functions in varying coefficient models can be built by using local kernel regression and a Gaussian approximation result for non-stationary time series [52]. For sparse irregular longitudinal data, Ma, Yang and Carroll [35] constructed asymptotic SCB for the mean function of the functional regression model by using piecewise constant spline estimation and a strong approximation result. For functional data, Degras [9] constructed asymptotic SCB for the mean function of the functional linear model without considering any covariate, while Zhang and Chen [51] adopted the method of “smoothing first, then estimation” and proposed a global test statistic for testing Bj, but their results cannot be used for constructing SCB for Bj. Recently, Cardot et al. [7] and Cardot and Josserand [6] built asymptotic SCB for Horvitz-Thompson estimators for the mean function, but their models and estimation methods differ significantly from ours.

In this paper, we propose an estimation procedure for the multivariate varying coefficient model (1.1) by using local linear regression techniques, and derive a simultaneous confidence band for the regression coefficient functions. We further develop a test for linear hypotheses of coefficient functions. The major aim of this paper is to investigate the theoretical properties of the proposed estimation procedure and test statistics. The theoretical development is challenging but of great interest for carrying out statistical inferences on Bj. The major contributions of this paper are summarized as follows. We first establish the weak convergence of the local linear estimator of Bj, denoted by B̂j, by using advanced empirical process methods [42, 31]. We further derive the bias and asymptotic variance of B̂j. These results provide insight into how the direct estimation procedure for Bj using observations from all subjects outperforms the estimation procedure with the strategy of “smoothing first, then estimation.” After calculating B̂j, we reconstruct all individual functions ηij and establish their uniform convergence rates. We derive uniform convergence rates of the proposed estimate for the covariance matrix of ηij and its associated eigenvalues and eigenfunctions by using related results in Li and Hsing [32]. Using the weak convergence of the local linear estimator of Bj, we further establish the asymptotic distribution of a global test statistic for linear hypotheses of the regression coefficient functions, and construct an asymptotic SCB for each varying coefficient function.

The rest of this paper is organized as follows. In Section 2, we describe MVCM and its estimation procedure. In Section 3, we propose a global test statistic for linear hypotheses of the regression coefficient functions and construct an asymptotic SCB for each coefficient function. In Section 4, we discuss the theoretical properties of estimation and inference procedures. Two sets of simulation studies are presented in Section 5 with the known ground truth to examine the finite sample performance of the global test statistic and SCB for each individual varying coefficient function. In Section 6, we use MVCM to investigate the development of white matter diffusivities along the genu tract of the corpus callosum in a clinical study of neurodevelopment.

2. Estimation Procedure

Throughout this paper, we assume that εi(s) = (εi1(s), …, εiJ (s))T and ηi(s) = (ηi1(s), …, ηiJ (s))T are mutually independent, and ηi(s) and εi(s) are independent and identical copies of SP(0, Ση) and SP(0, Σε), respectively, where SP(μ, Σ) denotes a stochastic process vector with mean function μ(t) and covariance function Σ(s, t). Moreover, εi(s) and εi(t) are assumed to be independent for s ≠ t and Σε(s, t) takes the form of Sε(t)1(s = t), where Sε(t) = (sε,jj′(t)) is a J × J matrix of functions of t and 1(·) is an indicator function. Therefore, the covariance structure of yi (s), denoted by Σy(s, t), is given by

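$$\Sigma_y(s, t) = \mathrm{Cov}(y_i(s), y_i(t)) = \Sigma_\eta(s, t) + S_\varepsilon(t)\, 1(s = t). \tag{2.1}$$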

2.1. Estimating varying coefficient functions

We employ local linear regression [11] to estimate the coefficient functions Bj. Specifically, we apply the Taylor expansion for Bj(sm) at s as follows

$$B_j(s_m) \approx B_j(s) + \dot{B}_j(s)(s_m - s) = A_j(s)\, z_{h_{1j}}(s_m - s), \tag{2.2}$$

where zh (sm − s) = (1, (sm − s)/h)T and Aj(s) = [Bj(s) h1j Ḃj(s)] is a p × 2 matrix, in which Ḃj(s) = (ḃj1(s), …, ḃjp(s))T is a p × 1 vector and ḃjl(s) = dbjl(s)/ds for l = 1, …, p. Let K(s) be a kernel function and Kh(s) = h−1K(s/h) be the rescaled kernel function with a bandwidth h. We estimate Aj(s) by minimizing the following weighted least squares function:

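$$\sum_{i=1}^{n} \sum_{m=1}^{M} \left[ y_{ij}(s_m) - x_i^T A_j(s)\, z_{h_{1j}}(s_m - s) \right]^2 K_{h_{1j}}(s_m - s). \tag{2.3}$$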

Let us now introduce some matrix operators. Let a⊗2 = aaT for any vector a and C⊗D be the Kronecker product of two matrices C and D. For an M1 × M2 matrix C = (cjl), denote vec(C) = (c11, …, c1M2, …, cM11, …, cM1M2)T. Let Âj(s) be the minimizer of (2.3). Then

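$$\mathrm{vec}(\hat{A}_j(s)) = \Sigma(s, h_{1j})^{-1} \sum_{i=1}^{n} \sum_{m=1}^{M} K_{h_{1j}}(s_m - s) \left[ x_i \otimes z_{h_{1j}}(s_m - s) \right] y_{ij}(s_m), \tag{2.4}$$

where $\Sigma(s, h_{1j}) = \sum_{i=1}^{n} \sum_{m=1}^{M} K_{h_{1j}}(s_m - s) \left[ x_i^{\otimes 2} \otimes z_{h_{1j}}(s_m - s)^{\otimes 2} \right]$. Thus, we have

$$\hat{B}_j(s) = (\hat{b}_{j1}(s), \ldots, \hat{b}_{jp}(s))^T = \left[ I_p \otimes (1, 0) \right] \mathrm{vec}(\hat{A}_j(s)), \tag{2.5}$$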

where Ip is a p × p identity matrix.
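As a rough illustration of (2.3)–(2.5), the following Python sketch computes the local linear estimate of Bj(s) at a single point s for one response j. The function names, the NumPy dependency, and the choice of the Epanechnikov kernel are assumptions made for illustration only; the text does not prescribe an implementation or a particular kernel. The sketch relies on all subjects sharing the same grid, so that the weighted normal equations factor into a Kronecker product.

```python
import numpy as np

def epanechnikov(u):
    """Epanechnikov kernel: a symmetric density supported on [-1, 1] (illustrative choice)."""
    return np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u**2), 0.0)

def local_linear_B(s, grid, X, Y, h, kernel=epanechnikov):
    """Local linear estimate of B_j(s) for a single response j.

    s    : evaluation point in [0, 1]
    grid : (M,) common design points s_1, ..., s_M
    X    : (n, p) covariate matrix with rows x_i
    Y    : (n, M) responses y_ij(s_m)
    h    : bandwidth h_1j
    """
    n, p = X.shape
    u = (grid - s) / h
    w = kernel(u) / h                              # K_h(s_m - s)
    Z = np.column_stack([np.ones_like(u), u])      # rows z_h(s_m - s) = (1, (s_m - s)/h)
    ZWZ = Z.T @ (w[:, None] * Z)                   # sum_m K_h z z^T
    # With a common grid, sum_i sum_m K_h (x_i x_i^T) kron (z z^T) = (X^T X) kron (Z^T W Z).
    Sigma = np.kron(X.T @ X, ZWZ)
    # Right-hand side: sum_i sum_m K_h (x_i kron z) y_ij(s_m), stored row by row
    # to match the row-wise vec(.) convention used in the text.
    rhs = (X.T @ Y @ (w[:, None] * Z)).reshape(-1)
    vecA = np.linalg.solve(Sigma, rhs)
    # [I_p kron (1, 0)] vec(A) keeps the first column of A, i.e. B_j(s).
    return vecA.reshape(p, 2)[:, 0]
```

Evaluating `local_linear_B` over a set of points s traces out the estimated coefficient curves; the bandwidth must be large enough that at least two grid points receive positive weight, otherwise the linear system is singular.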

In practice, we may select the bandwidth h1j by using leave-one-curve-out-cross-validation. Specifically, for each j, we pool the data from all n subjects and select a bandwidth h1j, denoted by ĥ1j, by minimizing the cross-validation score given by

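$$\mathrm{CV}(h_{1j}) = (nM)^{-1} \sum_{i=1}^{n} \sum_{m=1}^{M} \left[ y_{ij}(s_m) - x_i^T \hat{B}_j(s_m, h_{1j})^{(-i)} \right]^2, \tag{2.6}$$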

where B̂j(s, h1j)(−i) is the local linear estimator of Bj(s) with the bandwidth h1j based on data excluding all the observations from the i-th subject.
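A minimal sketch of leave-one-curve-out cross-validation is given below. It assumes a common grid, an Epanechnikov kernel, and a user-supplied list of candidate bandwidths; the function names and the (nM)^{-1} normalization are illustrative assumptions. With a common grid, the local linear estimate equals the pointwise least squares coefficients smoothed across s by the local linear smoother; this identity follows from the Kronecker structure of the normal equations and is used here only to keep the loop cheap.

```python
import numpy as np

def smoother_matrix(grid, h):
    """Local linear smoother: S[k, m] is the equivalent-kernel weight of s_m at s_k."""
    M = grid.size
    S = np.empty((M, M))
    for k, s in enumerate(grid):
        u = (grid - s) / h
        w = np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u**2), 0.0) / h
        Z = np.column_stack([np.ones(M), u])
        ZWZ = Z.T @ (w[:, None] * Z)
        # First row of (Z^T W Z)^{-1} Z^T W gives the weights for the intercept.
        S[k] = np.linalg.solve(ZWZ, (w[:, None] * Z).T)[0]
    return S

def cv_score(grid, X, Y, h):
    """Leave-one-curve-out CV score for one response j and bandwidth h."""
    n = X.shape[0]
    S = smoother_matrix(grid, h)
    total = 0.0
    for i in range(n):
        keep = np.arange(n) != i
        # Pointwise least squares on the remaining subjects: (p, M).
        beta = np.linalg.solve(X[keep].T @ X[keep], X[keep].T @ Y[keep])
        B_hat = beta @ S.T                  # column k estimates B_j(s_k) without subject i
        total += np.sum((Y[i] - X[i] @ B_hat) ** 2)
    return total / (n * grid.size)

def select_bandwidth(grid, X, Y, candidates):
    """Return the candidate bandwidth with the smallest CV score, plus all scores."""
    scores = [cv_score(grid, X, Y, h) for h in candidates]
    return candidates[int(np.argmin(scores))], scores
```

The cost is one small least squares fit per left-out subject and one smoother matrix per candidate bandwidth, which keeps a grid search over a handful of bandwidths practical for moderate n and M.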

2.2. Smoothing individual functions

By assuming certain smoothness conditions on ηij(s), we also employ the local linear regression technique to estimate all individual functions ηij(s) [11, 43, 49, 38, 45, 51]. Specifically, we have the Taylor expansion for ηij(sm) at s:

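$$\eta_{ij}(s_m) \approx \eta_{ij}(s) + \dot{\eta}_{ij}(s)(s_m - s) = d_{ij}(s)^T z_{h_{2j}}(s_m - s), \tag{2.7}$$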

where dij(s) = (ηij(s), h2jη̇ij(s))T is a 2 × 1 vector. We develop an algorithm to estimate dij(s) as follows. For each i and j, we estimate dij(s) by minimizing the weighted least squares function.

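$$\sum_{m=1}^{M} \left[ y_{ij}(s_m) - x_i^T \hat{B}_j(s_m) - d_{ij}(s)^T z_{h_{2j}}(s_m - s) \right]^2 K_{h_{2j}}(s_m - s). \tag{2.8}$$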

Then, ηij(s) can be estimated by

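$$\hat{\eta}_{ij}(s) = (1, 0)\, \hat{d}_{ij}(s) = \sum_{m=1}^{M} \tilde{K}_{h_{2j}}(s_m - s) \left[ y_{ij}(s_m) - x_i^T \hat{B}_j(s_m) \right], \tag{2.9}$$

where $\tilde{K}_{h_{2j}}(s)$ are the empirical equivalent kernels and $\hat{d}_{ij}(s)$ is given by

$$\hat{d}_{ij}(s) = \left[ \sum_{m=1}^{M} K_{h_{2j}}(s_m - s)\, z_{h_{2j}}(s_m - s)^{\otimes 2} \right]^{-1} \sum_{m=1}^{M} K_{h_{2j}}(s_m - s)\, z_{h_{2j}}(s_m - s) \left[ y_{ij}(s_m) - x_i^T \hat{B}_j(s_m) \right].$$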

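Finally, letting Sij be the smoother matrix for the j-th measurement of the i-th subject [11], we can obtain

$$(\hat{\eta}_{ij}(s_1), \ldots, \hat{\eta}_{ij}(s_M))^T = S_{ij} R_{ij}, \tag{2.10}$$

where $R_{ij} = (y_{ij}(s_1) - x_i^T \hat{B}_j(s_1), \ldots, y_{ij}(s_M) - x_i^T \hat{B}_j(s_M))^T$.

A simple and efficient way to obtain h2j is to use the generalized cross-validation method. For each j, we pool the data from all n subjects and select the optimal bandwidth h2j, denoted by ĥ2j, by minimizing the generalized cross-validation score given by

$$\mathrm{GCV}(h_{2j}) = \sum_{i=1}^{n} \frac{R_{ij}^T (I_M - S_{ij})^T (I_M - S_{ij}) R_{ij}}{\left[ 1 - M^{-1} \mathrm{tr}(S_{ij}) \right]^2}. \tag{2.11}$$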

Based on ĥ2j, we can use (2.9) to estimate ηij(s) for all i and j.
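The smoothing step and its generalized cross-validation score can be sketched as follows. The sketch assumes a common grid (so one smoother matrix serves every subject), an Epanechnikov kernel, and residuals y_ij(s_m) − x_i^T B̂_j(s_m) already stored in an n × M array `R`; these names, and the form of the score as a residual sum of squares divided by [1 − tr(S)/M]^2, are assumptions for illustration.

```python
import numpy as np

def smoother_matrix(grid, h):
    """Local linear smoother: S[k, m] is the equivalent-kernel weight of s_m at s_k."""
    M = grid.size
    S = np.empty((M, M))
    for k, s in enumerate(grid):
        u = (grid - s) / h
        w = np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u**2), 0.0) / h
        Z = np.column_stack([np.ones(M), u])
        ZWZ = Z.T @ (w[:, None] * Z)
        S[k] = np.linalg.solve(ZWZ, (w[:, None] * Z).T)[0]
    return S

def smooth_individual(R, S):
    """Smoothed individual curves on the grid: row i is S applied to the residuals of subject i."""
    return R @ S.T

def gcv_score(R, S):
    """Pooled GCV score: sum_i ||(I - S) R_i||^2 / (1 - tr(S)/M)^2."""
    M = S.shape[0]
    resid = R - R @ S.T
    return np.sum(resid**2) / (1.0 - np.trace(S) / M) ** 2

def select_h2(grid, R, candidates):
    """Pick the candidate bandwidth minimizing the pooled GCV score."""
    scores = [gcv_score(R, smoother_matrix(grid, h)) for h in candidates]
    return candidates[int(np.argmin(scores))], scores
```

Because the local linear smoother reproduces straight lines exactly, residual curves that are linear in s are returned unchanged, which gives a quick sanity check for an implementation.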

2.3. Functional principal component analysis

We consider a spectral decomposition of Ση(s, t) = (Ση,jj′(s, t)) and its approximation. According to Mercer’s theorem [36], if Ση(s, t) is continuous on [0, 1] × [0, 1], then Ση,jj(s, t) admits a spectral decomposition. Specifically, we have

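$$\Sigma_{\eta,jj}(s, t) = \sum_{l=1}^{\infty} \lambda_{jl}\, \psi_{jl}(s)\, \psi_{jl}(t) \tag{2.12}$$

for j = 1, …, J, where λj1 ≥ λj2 ≥ … ≥ 0 are the ordered eigenvalues of a linear operator determined by Ση,jj with $\sum_{l=1}^{\infty} \lambda_{jl} < \infty$, and the ψjl(t)’s are the corresponding orthonormal eigenfunctions (or principal components) [32, 50, 22]. The eigenfunctions form an orthonormal system on the space of square-integrable functions on [0, 1], and ηij(t) admits the Karhunen-Loève expansion $\eta_{ij}(t) = \sum_{l=1}^{\infty} \xi_{ijl}\, \psi_{jl}(t)$, where $\xi_{ijl} = \int_0^1 \eta_{ij}(s)\, \psi_{jl}(s)\, ds$ is referred to as the (jl)-th functional principal component score of the i-th subject. For each fixed (i, j), the ξijl’s are uncorrelated random variables with E(ξijl) = 0 and $E(\xi_{ijl}^2) = \lambda_{jl}$. Furthermore, for j ≠ j′, we have

$$\Sigma_{\eta,jj'}(s, t) = \sum_{l=1}^{\infty} \sum_{l'=1}^{\infty} E(\xi_{ijl}\, \xi_{ij'l'})\, \psi_{jl}(s)\, \psi_{j'l'}(t).$$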

After obtaining η̂i(s) = (η̂i1(s), …, η̂iJ (s))T, we estimate Ση(s, t) by using the empirical covariance of the estimated η̂i(s) as follows:

$$\hat{\Sigma}_\eta(s, t) = (n - p)^{-1} \sum_{i=1}^{n} \hat{\eta}_i(s)\, \hat{\eta}_i(t)^T.$$

Following Rice and Silverman [39], we can calculate the spectral decomposition of Σ̂η,jj(s, t) for each j as follows:

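$$\hat{\Sigma}_{\eta,jj}(s, t) = \sum_{l=1}^{\infty} \hat{\lambda}_{jl}\, \hat{\psi}_{jl}(s)\, \hat{\psi}_{jl}(t), \tag{2.13}$$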

where λ̂j1 ≥ λ̂j2 ≥ … ≥ 0 are estimated eigenvalues and the ψ̂jl(t)’s are the corresponding estimated principal components. Furthermore, the (j, l)-th functional principal component scores can be computed using $\hat{\xi}_{ijl} = \sum_{m=1}^{M} \hat{\eta}_{ij}(s_m)\, \hat{\psi}_{jl}(s_m)(s_m - s_{m-1})$ for i = 1, …, n. We further show the uniform convergence rate of Σ̂η(s, t) and its associated eigenvalues and eigenfunctions. This result is useful for constructing the global and local test statistics for testing the covariate effects.
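The following sketch shows one way to carry out the spectral decomposition numerically for a single response j, given smoothed curves on the grid. It discretizes the covariance operator with trapezoid quadrature weights, which is an assumption of this sketch (the scores in the text may use a different Riemann sum), and the normalizing constant `denom` is left as a parameter that defaults to n. Function names are hypothetical.

```python
import numpy as np

def trapezoid_weights(grid):
    """Trapezoid quadrature weights for a (possibly uneven) grid on [0, 1]."""
    w = np.empty(grid.size)
    w[1:-1] = (grid[2:] - grid[:-2]) / 2.0
    w[0] = (grid[1] - grid[0]) / 2.0
    w[-1] = (grid[-1] - grid[-2]) / 2.0
    return w

def fpca(eta_hat, grid, n_components, denom=None):
    """Eigenvalues, eigenfunctions and scores of the empirical covariance of eta_hat.

    eta_hat : (n, M) smoothed individual curves for one response j
    denom   : normalizing constant of the empirical covariance (defaults to n)
    """
    n, M = eta_hat.shape
    denom = n if denom is None else denom
    cov = eta_hat.T @ eta_hat / denom            # covariance surface on the grid
    w = trapezoid_weights(grid)
    sw = np.sqrt(w)
    # Symmetrized discretization of the integral operator with kernel cov(s, t).
    evals, evecs = np.linalg.eigh(sw[:, None] * cov * sw[None, :])
    order = np.argsort(evals)[::-1][:n_components]
    lam = evals[order]
    psi = evecs[:, order] / sw[:, None]          # orthonormal under the quadrature rule
    scores = eta_hat @ (w[:, None] * psi)        # quadrature version of int eta * psi
    return lam, psi, scores
```

Working with the weighted, symmetrized matrix keeps the estimated eigenfunctions orthonormal with respect to the quadrature rule, so the scores and eigenfunctions reconstruct the input curves whenever the number of retained components matches their rank.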

3. Inference Procedure

In this section, we study global tests for linear hypotheses of coefficient functions and SCB for each varying coefficient function. They are essential for statistical inference on the coefficient functions.

3.1. Hypothesis test

Consider the linear hypotheses of B(s) as follows:

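$$H_0: C\,\mathrm{vec}(B(s)) = b_0(s) \ \text{for all } s \quad \text{vs.} \quad H_1: C\,\mathrm{vec}(B(s)) \neq b_0(s), \tag{3.1}$$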

where B(s) = [B1(s),…,BJ (s)], C is a r × Jp matrix with rank r, and b0(s) is a given r ×1 vector of functions. Define a global test statistic Sn as

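$$S_n = n \int_0^1 d(s)^T \left[ C \left( \hat{\Sigma}_\eta(s, s) \otimes \hat{\Omega}_X^{-1} \right) C^T \right]^{-1} d(s)\, ds, \tag{3.2}$$

where $\hat{\Omega}_X = n^{-1} \sum_{i=1}^{n} x_i^{\otimes 2}$ and d(s) = Cvec(B̂(s) − bias(B̂(s))) − b0(s).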

To calculate Sn, we need to estimate the bias of B̂j (s) for all j. Based on (2.5), we have

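$$\mathrm{bias}(\mathrm{vec}(\hat{A}_j(s))) = \Sigma(s, h_{1j})^{-1} \sum_{i=1}^{n} \sum_{m=1}^{M} K_{h_{1j}}(s_m - s) \left[ x_i \otimes z_{h_{1j}}(s_m - s) \right] x_i^T \left[ B_j(s_m) - A_j(s)\, z_{h_{1j}}(s_m - s) \right], \tag{3.3}$$

where Σ(s, h1j) is the matrix appearing in (2.4).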

By using Taylor’s expansion, we have

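$$B_j(s_m) - A_j(s)\, z_{h_{1j}}(s_m - s) \approx 0.5\, \ddot{B}_j(s)(s_m - s)^2 + \tfrac{1}{6}\, \dddot{B}_j(s)(s_m - s)^3,$$

where B̈j(s) = d2Bj(s)/ds2 and B⃛j(s) = d3Bj(s)/ds3. Following the preasymptotic substitution method of Fan and Gijbels [11], we replace $B_j(s_m) - A_j(s)\, z_{h_{1j}}(s_m - s)$ by $0.5\, \hat{\ddot{B}}_j(s)(s_m - s)^2 + \tfrac{1}{6}\, \hat{\dddot{B}}_j(s)(s_m - s)^3$, in which $\hat{\ddot{B}}_j(s)$ and $\hat{\dddot{B}}_j(s)$ are estimators obtained by using a local cubic fit with a pilot bandwidth selected in (2.6).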

It will be shown below that the asymptotic distribution of Sn is quite complicated and it is difficult to directly approximate the percentiles of Sn under the null hypothesis. Instead, we propose using a wild bootstrap method to obtain critical values of Sn. The wild bootstrap consists of the following three steps.

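Step 1. Fit model (1.1) under the null hypothesis H0, which yields $\hat{B}^*(s_m)$, $\hat{\eta}^*_{i,0}(s_m)$, and $\hat{\varepsilon}^*_{i,0}(s_m)$ for i = 1, …, n and m = 1, …, M.

Step 2. Generate random samples $\tau_i^{(g)}$ and $\tau_i(s_m)^{(g)}$ from a N(0, 1) generator for i = 1, …, n and m = 1, …, M, and then construct

$$\hat{y}_i(s_m)^{(g)} = \hat{B}^*(s_m)^T x_i + \tau_i^{(g)}\, \hat{\eta}^*_{i,0}(s_m) + \tau_i(s_m)^{(g)}\, \hat{\varepsilon}^*_{i,0}(s_m).$$

Then, based on ŷi(sm)(g), we recalculate B̂(s)(g), bias(B̂(s)(g)), and d(s)(g) = Cvec(B̂(s)(g) − bias(B̂(s)(g))) − b0(s). We also note that Cvec(B̂(s)(g)) ≈ b0 and Cvec(bias(B̂(s)(g))) ≈ 0. Thus, we can drop the term bias(B̂(s)(g)) in d(s)(g) for computational efficiency. Subsequently, we compute $S_n^{(g)}$ by replacing d(s) with d(s)(g) in (3.2).

Step 3. Repeat Step 2 G times to obtain $\{S_n^{(g)} : g = 1, \ldots, G\}$ and then calculate $p = G^{-1} \sum_{g=1}^{G} 1(S_n^{(g)} \geq S_n)$. If p is smaller than a pre-specified significance level α, say 0.05, then one rejects the null hypothesis H0.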
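The resampling loop in Steps 2 and 3 can be sketched as below. The sketch assumes that the null fit has already produced the fitted means, the estimated individual curves, and the residuals as arrays of shape (n, M, J), and that the bootstrap response is the fitted mean plus these two components multiplied by independent N(0, 1) draws (one per subject, and one per subject and grid point). The callable `stat_fn`, which maps a bootstrap response array to the test statistic, is hypothetical and must be supplied by the user.

```python
import numpy as np

def wild_bootstrap_pvalue(stat_fn, fitted0, eta0, eps0, s_obs, G=500, seed=0):
    """Wild bootstrap p-value for a global test statistic.

    stat_fn : callable taking an (n, M, J) response array and returning a float
    fitted0 : (n, M, J) fitted means under H0
    eta0    : (n, M, J) estimated individual curves under H0
    eps0    : (n, M, J) estimated measurement errors under H0
    s_obs   : observed value of the statistic
    """
    rng = np.random.default_rng(seed)
    n, M = fitted0.shape[:2]
    s_boot = np.empty(G)
    for g in range(G):
        tau_i = rng.standard_normal((n, 1, 1))    # one multiplier per subject
        tau_im = rng.standard_normal((n, M, 1))   # one multiplier per subject and grid point
        y_g = fitted0 + tau_i * eta0 + tau_im * eps0
        s_boot[g] = stat_fn(y_g)
    # Proportion of bootstrap statistics at least as large as the observed one.
    return float(np.mean(s_boot >= s_obs)), s_boot
```

Sharing each multiplier across the J responses keeps the between-response dependence of the resampled curves, and multiplying the whole curve η̂ by a single draw keeps its within-curve dependence.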

3.2. Simultaneous confidence bands

Construction of SCB for coefficient functions is of great interest in statistical inference for model (1.1). For a given significance level α, we construct SCB for each bjl(s) as follows:

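$$P\left( \hat{b}_{jl}^{L,\alpha}(s) < b_{jl}(s) < \hat{b}_{jl}^{U,\alpha}(s) \ \text{for all } s \in [0, 1] \right) = 1 - \alpha, \tag{3.4}$$

where $\hat{b}_{jl}^{L,\alpha}(s)$ and $\hat{b}_{jl}^{U,\alpha}(s)$ are the lower and upper limits of SCB. Specifically, it will be shown below that a 1 − α simultaneous confidence band for bjl(s) is given as follows: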

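$$\left( \hat{b}_{jl}(s) - \mathrm{bias}(\hat{b}_{jl}(s)) - \frac{C_{jl}(\alpha)}{\sqrt{n}},\ \ \hat{b}_{jl}(s) - \mathrm{bias}(\hat{b}_{jl}(s)) + \frac{C_{jl}(\alpha)}{\sqrt{n}} \right), \tag{3.5}$$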

where Cjl(α) is a scalar. Since the calculation of b̂jl(s) and bias(b̂jl(s)) has been discussed in (2.5) and (3.3), the next issue is to determine Cjl(α).

Although there are several methods of determining Cjl(α) including random field theory [46, 40], we develop an efficient resampling method to approximate Cjl(α) as follows [53, 30].

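(i) We calculate $\hat{r}_{ij}(s_m) = y_{ij}(s_m) - x_i^T \hat{B}_j(s_m)$ for all i, j, and m.

(ii) For g = 1, …, G, we independently simulate $\{\tau_i^{(g)} : i = 1, \ldots, n\}$ from N(0, 1) and calculate a stochastic process Gj(s)(g) given by

$$G_j(s)^{(g)} = \sqrt{n}\, \left[ I_p \otimes (1, 0) \right] \Sigma(s, h_{1j})^{-1} \sum_{i=1}^{n} \tau_i^{(g)} \sum_{m=1}^{M} K_{h_{1j}}(s_m - s) \left[ x_i \otimes z_{h_{1j}}(s_m - s) \right] \hat{r}_{ij}(s_m).$$

(iii) We calculate $\sup_{s \in [0, 1]} |e_l^T G_j(s)^{(g)}|$ for all g, where $e_l$ is a p × 1 vector with the l-th element 1 and all other elements 0, and use their 1 − α empirical percentile to estimate Cjl(α).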
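The resampling scheme for Cjl(α) can be sketched as follows for one response j. The sketch assumes a common grid, an Epanechnikov kernel, residuals stored in an n × M array `R`, and a √n-scaled multiplier process; the supremum over [0, 1] is approximated by the maximum over the grid points. With a common grid, the multiplier process reduces to (X^T X)^{-1} Σ_i τ_i x_i applied to the locally smoothed residual curves (up to the √n factor), which is the form used here. All function names are hypothetical.

```python
import numpy as np

def smoother_matrix(grid, h):
    """Local linear smoother: S[k, m] is the equivalent-kernel weight of s_m at s_k."""
    M = grid.size
    S = np.empty((M, M))
    for k, s in enumerate(grid):
        u = (grid - s) / h
        w = np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u**2), 0.0) / h
        Z = np.column_stack([np.ones(M), u])
        ZWZ = Z.T @ (w[:, None] * Z)
        S[k] = np.linalg.solve(ZWZ, (w[:, None] * Z).T)[0]
    return S

def scb_critical_values(grid, X, R, h, alpha=0.05, G=1000, seed=0):
    """Approximate C_jl(alpha), l = 1..p, by multiplier resampling.

    X : (n, p) covariates; R : (n, M) residuals y_ij(s_m) - x_i^T B_hat_j(s_m)
    Returns a length-p array of critical values.
    """
    n, p = X.shape
    rng = np.random.default_rng(seed)
    smoothed = R @ smoother_matrix(grid, h).T     # locally smoothed residual curves, (n, M)
    A = np.linalg.solve(X.T @ X, X.T)             # (p, n): (X^T X)^{-1} X^T
    sup = np.empty((G, p))
    for g in range(G):
        tau = rng.standard_normal(n)              # one N(0, 1) multiplier per subject
        proc = np.sqrt(n) * (A * tau) @ smoothed  # (p, M): process evaluated on the grid
        sup[g] = np.abs(proc).max(axis=1)         # grid approximation of the supremum
    return np.quantile(sup, 1.0 - alpha, axis=0)

def scb(b_hat, crit, n):
    """Band b_hat(s) -/+ crit / sqrt(n) for one coefficient curve (bias correction omitted)."""
    half = crit / np.sqrt(n)
    return b_hat - half, b_hat + half
```

A finer evaluation grid gives a better approximation to the supremum at the cost of a larger smoother matrix.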

4. Asymptotic Properties

In this section, we systematically examine the asymptotic properties of B̂(s), η̂ij(s), Σ̂η(s, t), and Sn developed in Sections 2 and 3. Let us first define some notation. Let ur(K) = ∫ tr K(t)dt and υr(K) = ∫ tr K2(t)dt, where r is any integer. For any smooth functions f(s) and g(s, t), define ḟ(s) = df(s)/ds, f̈(s) = d2f(s)/ds2, f⃛ (s) = d3f(s)/ds3, and g(a,b)(s, t) = ∂a+bg(s, t)/∂as∂bt, where a and b are any nonnegative integers. Let H = diag(h11, …, h1J), B(s) = [B1(s), …, BJ (s)], B̂ (s) = [B̂1(s), …, B̂J (s)] and B̈(s) = [B̈1(s), …, B̈J (s)], where B̈j(s) = (b̈j1(s), …, b̈jp(s))T is a p × 1 vector. Let S = {s1, …, sM}.

4.1. Assumptions

Throughout the paper, the following assumptions are needed to facilitate the technical details, although they may not be the weakest conditions. We need to introduce some notation. Let N(μ, Σ) be a normal random vector with mean μ and covariance Σ. Let Ω1(h, s) = ∫(1, h−1(u − s))⊗2 K(u − s, h)π(u)du. Moreover, we do not distinguish the differentiation and continuation at the boundary points from those in the interior of [0, 1]. For instance, a continuous function at the boundary of [0, 1] means that this function is left continuous at 0 and right continuous at 1.

Assumption (C1). For all j = 1, …, J, supsm E[|εij(sm)|q] < ∞ for some q > 4 and all grid points sm.

Assumption (C2). Each component of {η (s) : s ∈ [0, 1]}, {η(s)η(t)T : (s, t) ∈ [0, 1]2}, and {xηT (s) : s ∈ [0, 1]} are Donsker classes.

Assumption (C3). The covariate vectors xi are independently and identically distributed with Exi = μx and ‖xi‖∞ < ∞. Assume that $\Omega_X = E[x_i^{\otimes 2}]$ is positive definite.

Assumption (C4). The grid points 𝒮 = {sm, m = 1, …, M} are randomly generated from a density function π(s). Moreover, π(s) > 0 for all s ∈ [0, 1] and π(s) has continuous second-order derivative with the bounded support [0, 1].

Assumption (C4b). The grid points 𝒮 = {sm, m = 1, …, M} are prefixed according to π(s) such that $\int_0^{s_m} \pi(s)\, ds = m/M$ for M ≥ m ≥ 1. Moreover, π(s) > 0 for all s ∈ [0, 1] and π(s) has continuous second-order derivative with the bounded support [0, 1].

Assumption (C5). The kernel function K (t) is a symmetric density function with a compact support [−1, 1], and is Lipschitz continuous. Moreover, 0 < infh∈(0,h0],s∈[0, 1] det(Ω1(h, s)), where h0 > 0 is a small scalar and det(Ω1(h, s)) denotes the determinant of Ω1(h, s).

Assumption (C6). All components of B(s) have continuous second derivatives on [0, 1].

Assumption (C7). Both n and M converge to ∞, maxj h1j = o(1), Mh1j → ∞, and for j = 1, …, J, where q1 ∈ (2, 4).

Assumption (C7b). Both n and M converge to ∞, maxj h1j = o(1), Mh1j → ∞, and log(M) = o(Mh1j). There exists a sequence of γn > 0 such that γn → ∞, and n−1/2γn log(M) = o(1).

Assumption (C8). For all j, maxj(h2j)−4(log n/n)1−2/q2 = o(1) for q2 ∈ (2, ∞), maxj h2j = o(1), and Mh2j → ∞ for j = 1, …, J.

Assumption (C9a). The sample path of ηij(s) has continuous second-order derivative on [0, 1], and $E[\sup_{s \in [0,1]} \|\eta(s)\|_2^{r_1}] < \infty$ and E{sups∈[0,1][‖η̇ (s)‖2+‖η̈(s)‖2]r2} < ∞ for some r1, r2 ∈ (2,∞), where ‖·‖2 is the Euclidean norm.

Assumption (C9b). $E[\sup_{s \in [0,1]} \|\eta(s)\|_2^{r_1}] < \infty$ for some r1 ∈ (2, ∞), all components of Ση(s, t) have continuous second-order partial derivatives with respect to (s, t) ∈ [0, 1]2, and infs∈[0,1] Ση(s, s) > 0.

Assumption (C10). There is a positive fixed integer Ej < ∞ such that λj,1 > … > λj,Ej > λj,Ej+1 ≥ … ≥ 0 for j = 1, …, J.

Remark. Assumption (C1) requires the uniform bound on the high-order moment of εij(sm) for all grid points sm. Assumption (C2) avoids smoothness conditions on the sample path η(s), which are commonly assumed in the literature [9, 51, 22]. Assumption (C3) is a relatively weak condition on the covariate vector and the boundedness of ‖xi‖2 is not essential. Assumption (C4) is a weak condition on the random grid points. In many neuroimaging applications, M is often much larger than n and for such large M, a regular grid of voxels is fairly well approximated by voxels generated by a uniform distribution in a compact subset of Euclidean space. For notational simplicity, we only state the theoretical results for the random grid points throughout the paper. Assumption (C4b) is a weak condition on the fixed grid points. We will prove several key results for the fixed grid point case in Lemma 8 of the supplementary document. The bounded support restriction on K(·) in Assumption (C5) is not essential and can be removed if we put a restriction on the tail of K(·). Assumption (C6) is the standard smoothness condition on B(s) in the literature [13, 48, 12, 15, 44, 26, 38, 28, 27, 51, 23]. Assumptions (C7)–(C8) on bandwidths are similar to the conditions used in [32, 10]. Assumptions (C7b) is a weak condition on n, M, h1j, and γn for the fixed grid point case. For instance, if we set γn = n1/2 log(M)−1−c0 for a positive scalar c0 > 0, then we have and n−1/2γn log(M) = log(M)−c0 = o(1). As shown in Theorem 1 below, if h1j = O((nM)−1/5) and γn = n1/2 log(M)−1−c0, reduces to n6/5−q/2 log(M)(1+c0)(q−1)M1/5. For relatively large q in Assumption (C1), n6/5−q/2 log(M)(1+c0)(q−1)M1/5 can converge to zero. Assumptions (C9a) and (C3) are sufficient conditions of assumption (C2). Assumption (C9b) on the sample path is the same as Condition C6 used in [32]. 
Particularly, if we use the method for estimating Ση(s, s′) considered in Li and Hsing [32], then the differentiability of η(s) in Assumption (C9a) can be dropped. Assumption (C10) on simple multiplicity of the first Ej eigenvalues is only needed to investigate the asymptotic properties of eigenfunctions.

4.2. Asymptotic properties of B̂(s)

The following theorem establishes the weak convergence of {B̂(s), s ∈ [0, 1]}, which is essential for constructing global test statistics and SCB for B(s).

Theorem 1. Suppose that Assumptions (C1)–(C7) hold. The following results hold:

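(i) $\sqrt{n}\,\{\mathrm{vec}(\hat{B}(s) - B(s) - 0.5\, \ddot{B}(s)\, U_2(K; s, H)\, H^2 [1 + o_p(1)]) : s \in [0, 1]\}$ converges weakly to a centered Gaussian process G(·) with covariance matrix $\Sigma_\eta(s, s') \otimes \Omega_X^{-1}$, where ΩX = E[x⊗2] and U2(K; s, H) is a J × J diagonal matrix, whose diagonal elements will be defined in Lemma 5 in the Appendix.

(ii) The asymptotic bias and conditional variance of B̂j(s) given 𝒮 for s ∈ (0, 1) are given by $0.5\, h_{1j}^2\, u_2(K)\, \ddot{B}_j(s)[1 + o_p(1)]$ and $n^{-1}\, \Sigma_{\eta,jj}(s, s)\, \Omega_X^{-1} [1 + o_p(1)]$, respectively.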

Remarks. 1. The major challenge in proving Theorem 1 (i) is dealing with within-subject dependence. This is because the dependence between η(s) and η(s′) in the newly proposed multivariate varying coefficient model does not converge to zero due to the within-curve dependence. It is worth noting that for any given s, the corresponding asymptotic normality of B̂(s) may be established by using related techniques in Zhang and Chen [51]. However, the marginal asymptotic normality does not imply the weak convergence of B̂(s) as a stochastic process in [0, 1], since we need to verify the asymptotic continuity of {B̂(s) : s ∈ [0, 1]} to establish its weak convergence. In addition, Zhang and Chen [51] considered “smoothing first, then estimation”, which requires a stringent assumption such that n = O(M4/5). Readers are referred to Condition A.4 and Theorem 4 in Zhang and Chen [51] for more details. In contrast, directly estimating B(s) using local kernel smoothing avoids such stringent assumption on the numbers of grid points and subjects.

2. Theorem 1 (ii) only provides the asymptotic bias and conditional variance of B̂j(s) given 𝒮 for the interior points of (0, 1). The asymptotic bias and conditional variance at the boundary points 0 and 1 are given in Lemma 5. The asymptotic bias of B̂j(s) is of the order $h_{1j}^2$, as in the standard nonparametric regression setting. Moreover, the asymptotic conditional variance of B̂j(s) has a complicated form due to the within-curve dependence. The leading term in the asymptotic conditional variance is of order n−1, which is slower than the standard nonparametric rate (nMh1j)−1 with the assumption h1j → 0 and Mh1j → ∞.

3. Choosing an optimal bandwidth h1j is not a trivial task for model (1.1). Generally, any bandwidth h1j satisfying the assumption h1j → 0 and Mh1j → ∞ can ensure the weak convergence of {B̂(s) : s ∈ [0, 1]}. Based on the asymptotic bias and conditional variance of B̂(s), we can calculate an optimal bandwidth for estimating B(s), h1j = Op((nM)−1/5). In this case, $n^{-1} h_{1j}^2$ and (nM)−1h1j reduce to Op(n−7/5M−2/5) and (nM)−6/5, respectively, and their contributions depend on the relative size of n over M.
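The orders quoted in this remark can be checked by direct substitution. The short calculation below assumes h1j = (nM)^{-1/5} exactly, with constants dropped, and is meant only as a worked example.

```latex
\begin{align*}
n^{-1} h_{1j}^{2} &= n^{-1} (nM)^{-2/5} = n^{-7/5} M^{-2/5}, \\
(nM)^{-1} h_{1j} &= (nM)^{-1} (nM)^{-1/5} = (nM)^{-6/5}, \\
\frac{n^{-1} h_{1j}^{2}}{(nM)^{-1} h_{1j}} &= M h_{1j} = M^{4/5} n^{-1/5}.
\end{align*}
```

Under this bandwidth the ratio of the two terms is M^{4/5} n^{-1/5}, so the first term dominates when M^4 is large relative to n and the second when it is small.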

4.3. Asymptotic properties of η̂ij(s)

We next study the asymptotic bias and covariance of η̂ij(s) as follows. We distinguish between two cases. The first one is conditioning on the design points in 𝒮, X, and η. The other is conditioning on the design points in 𝒮 and X. We define K* ((s − t)/h) = ∫ K(u)K(u + (s − t)/h)du.

Theorem 2. Under Assumptions (C1) and (C3)–(C8), the following results hold for all s ∈ (0, 1).

(a) Conditioning on (𝒮, X, η), we have

(b) The asymptotic bias and covariance of η̂ij(s) conditioning on 𝒮 and X are given by

(c) The mean integrated squared error (MISE) of all η̂ij(s) is given by

| (4.1) |

(d) The optimal bandwidth for minimizing MISE (4.1) is given by

| (4.2) |

(e) The first order LPK reconstructions η̂ij(s) using ĥ2j in (4.2) satisfy

| (4.3) |

for i = 1, …, n.

Remark. Theorem 2 characterizes the statistical properties of smoothing individual curves ηij(s) after first estimating Bj(s). Conditioning on individual curves ηij(s), Theorem 2 (a) shows that Bias[η̂ij(s)|𝒮,X,η] is associated with , which is the bias term of B̂j(s) introduced in the estimation step, and is introduced in the smoothing individual functions step. Without conditioning on ηij(s), Theorem 2 (b) shows that the bias of η̂ij(s) is mainly controlled by the bias in the estimation step. The MISE of η̂ij(s) in Theorem 2 (c) is the sum of introduced by the estimation of Bj(s) and introduced by the reconstruction of ηij(s). The optimal bandwidth for minimizing the MISE of η̂ij(s) is a standard bandwidth for LPK. If we use the optimal bandwidth in Theorem 2 (d), then the MISE of η̂ij(s) can achieve the order of .

4.4. Asymptotic properties of Σ̂η(s, t)

In this section, we study the asymptotic properties of Σ̂η(s, t) and its spectral decomposition.

Theorem 3. (i) Under Assumptions (C1) and (C3)–(C9a), it follows that

(ii) Under Assumptions (C1) and (C3)–(C10), if the optimal bandwidths hmj for m = 1, 2 are used to reconstruct B̂j(s) and η̂ij(s) for all j, then for l = 1, …,Ej, we have the following results:

;

.

Remark. Theorem 3 characterizes the uniform weak convergence rates of Σ̂η(s, t), ψ̂jl, and λ̂jl for all j. It can be regarded as an extension of Theorems 3.3–3.6 in Li and Hsing [32], which established the uniform strong convergence rates of these estimates with the sole presence of intercept and J = 1 in model (1.1). Another difference is that Li and Hsing [32] employed all cross products yijyik for j ≠ k and then used the local polynomial kernel to estimate Ση(s, t). As discussed in Li and Hsing [32], their approach can relax the assumption on the differentiability of the individual curves. In contrast, following Hall, Müller and Wang [22] and Zhang and Chen [51], we directly fit a smooth curve to ηij(s) for each i and estimate Ση(s, t) by the sample covariance functions. Our approach is computationally simple and can ensure that all Σ̂η,jj(s, t) are positive semi-definite, whereas the approach in Li and Hsing [32] cannot. This is extremely important for high-dimensional neuroimaging data, which usually contain a large number of locations (called voxels) on a two-dimensional (2D) surface or in a 3D volume. For instance, M can range from tens of thousands to millions, and thus it can be numerically infeasible to directly operate on Σ̂η(s, s′).

We use Σ̃η(s, s′) to denote the local linear estimator of Ση(s, s′) proposed in Li and Hsing [32]. Following the arguments in Li and Hsing [32], we can easily obtain the following result.

Corollary 1. Under Assumptions (C1)–(C8) and (C9b), it follows that

4.5. Asymptotic properties of the inference procedures

In this section, we discuss the asymptotic properties of the global statistic Sn and the critical values of SCB. Theorem 1 allows us to construct SCB for coefficient functions bjl(s). It follows from Theorem 1 that

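$$\left\{ \sqrt{n} \left[ \hat{b}_{jl}(s) - b_{jl}(s) - \mathrm{bias}(\hat{b}_{jl}(s)) \right] : s \in [0, 1] \right\} \Rightarrow \left\{ G_{jl}(s) : s \in [0, 1] \right\}, \tag{4.4}$$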

where ⇒ denotes weak convergence of a sequence of stochastic processes and Gjl(s) is a centered Gaussian process indexed by s ∈ [0, 1]. Therefore, let XC(s) be a centered Gaussian process, we have

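$$\sup_{s \in [0, 1]} \left| \sqrt{n} \left[ \hat{b}_{jl}(s) - b_{jl}(s) - \mathrm{bias}(\hat{b}_{jl}(s)) \right] \right| \Rightarrow \sup_{s \in [0, 1]} |G_{jl}(s)|. \tag{4.5}$$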

We define Cjl(α) such that P(sups∈[0,1] |Gjl(s)| ≤ Cjl(α)) = 1 − α. Thus, the confidence band given in (3.5) is a 1 − α simultaneous confidence band for bjl(s).

Theorem 4. If Assumptions (C1)–(C9a) are true, then we have

| (4.6) |

Remark. Theorem 4 is similar to Theorem 7 of Zhang and Chen [51]. Both characterize the asymptotic distribution of Sn. In particular, Zhang and Chen [51] delineate this limiting distribution as a χ2-type mixture. All discussions associated with Theorem 7 of Zhang and Chen [51] are valid here and, therefore, we do not repeat them for the sake of space.

We consider conditional convergence for bootstrapped stochastic processes. We focus on the bootstrapped process {Gj(s)(g) : s ∈ [0, 1]} as the arguments for establishing the wild bootstrap method for approximating the null distribution of Sn and the bootstrapped process {Gj(s)(g) : s ∈ [0, 1]} are similar.

Theorem 5. If Assumptions (C1)–(C9a) are true, then Gj(s)(g)(s) converges weakly to Gj(s) conditioning on the data, where Gj(s) is a centered Gaussian process indexed by s ∈ [0, 1].

Remark. Theorem 5 validates the bootstrapped process of Gj(s)(g). An interesting observation is that the bias correction for B̂j(s) in constructing Gj(s)(g) is unnecessary. This leads to substantial computational savings.

5. Simulation Studies

In this section, we present two simulation examples to demonstrate the performance of the proposed procedures.

Example 1. This example is designed to evaluate the Type I error rate and power of the proposed global test Sn using Monte Carlo simulation. In this example, the data were generated from a bivariate MVCM as follows:

| (5.1) |

where sm ~ U[0, 1], (εi1(sm), εi2(sm))T ~ N((0, 0)T, , and xi = (1, xi1, xi2) for all i = 1, …, n and m = 1, …, M. Moreover, (xi1, xi2)T ~ N((0, 0)T, diag(1 − 2^{−0.5}, 1 − 2^{−0.5}) + 2^{−0.5}(1, 1)^{⊗2}) and ηij(s) = ξij1 ψj1(s) + ξij2 ψj2(s), where ξijl ~ N(0, λjl) for j = 1, 2 and l = 1, 2. Furthermore, sm, (xi1, xi2), ξi11, ξi12, ξi21, ξi22, εi1(sm), and εi2(sm) are independent random variables. We set and the functional coefficients and eigenfunctions as follows:

Then, except for (b13(s), b23(s)) for all s, we fixed all other parameters at the values specified above, whereas we assumed (b13(s), b23(s)) = c(4s(1 − s) − 0.4, 4s(1 − s) − 0.4), where c is a scalar specified below.

We want to test the hypotheses H0 : b13(s) = b23(s) = 0 for all s against H1 : b13(s) ≠ 0 or b23(s) ≠ 0 for at least one s. We set c = 0 to assess the Type I error rates for Sn, and set c = 0.1, 0.2, 0.3, and 0.4 to examine the power of Sn. We set M = 50 and n = 100 and 200. For each simulation, the significance levels were set at α = 0.05 and 0.01, and 100 replications were used to estimate the rejection rates.
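Two ingredients of this design can be written down without the parameter values omitted above: the covariate distribution and the coefficient function under the alternative. The sketch below is a minimal illustration, not the authors' simulation code. It reads (1, 1)⊗2 as the outer product of (1, 1) with itself, which is an assumption about the notation, and the helper names `draw_design` and `alternative_coefficient` are hypothetical.

```python
import numpy as np

# Covariance of (x_i1, x_i2): diag(1 - 2^{-0.5}) + 2^{-0.5} * (1, 1)(1, 1)^T,
# i.e. unit variances with correlation 2^{-0.5}.
root_half = 2.0 ** -0.5
ones = np.ones(2)
cov_x = np.diag([1.0 - root_half, 1.0 - root_half]) + root_half * np.outer(ones, ones)


def draw_design(n_subjects, rng):
    """Return the (n, 3) design matrix with rows x_i = (1, x_i1, x_i2)."""
    covariates = rng.multivariate_normal(np.zeros(2), cov_x, size=n_subjects)
    return np.column_stack([np.ones(n_subjects), covariates])


def alternative_coefficient(s, c):
    """b13(s) = b23(s) = c * (4 s (1 - s) - 0.4); c = 0 gives the null."""
    return c * (4.0 * s * (1.0 - s) - 0.4)


rng = np.random.default_rng(2)
design = draw_design(200, rng)
locations = np.sort(rng.uniform(size=50))  # s_m ~ U[0, 1], M = 50
b13_null = alternative_coefficient(locations, 0.0)
b13_alt = alternative_coefficient(locations, 0.4)
print(cov_x)
print(design.shape, float(np.abs(b13_alt).max()))
```

Under this reading the two covariates are standardized and positively correlated, and the scalar c controls how far the third coefficient function departs from zero.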

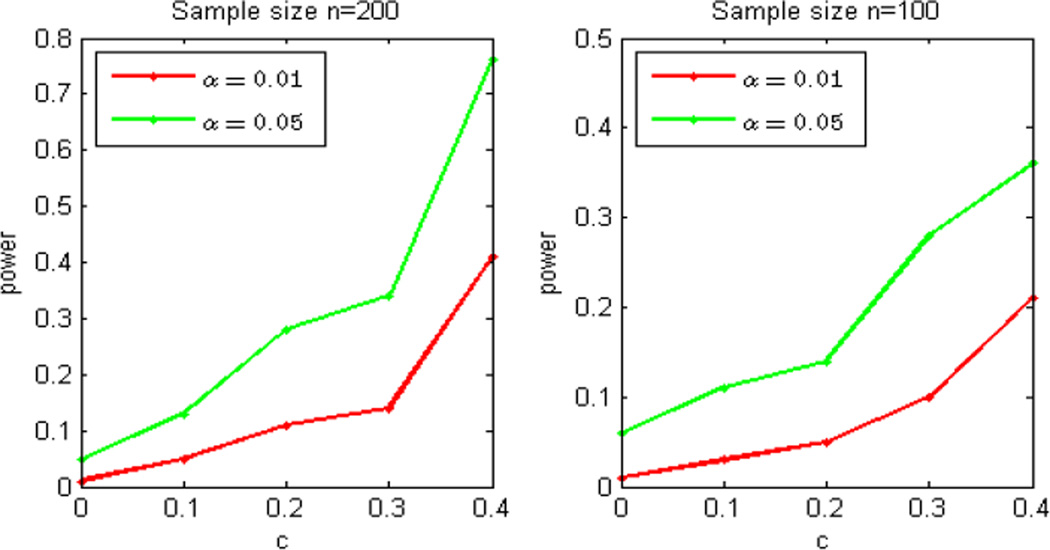

Fig. 2 depicts the power curves. It can be seen from Fig. 2 that the rejection rates for Sn based on the wild bootstrap method are accurate for moderate sample sizes (n = 100 or 200) at both significance levels (α = 0.01 or 0.05). As expected, the power increases with the sample size.

Fig 2.

Plot of Power Curves. Rejection rates of Sn based on the wild bootstrap method are calculated at five different values of c (0, 0.1, 0.2, 0.3, and 0.4) for two sample sizes of n (100 and 200) subjects at 5% (green) and 1% (red) significance levels.

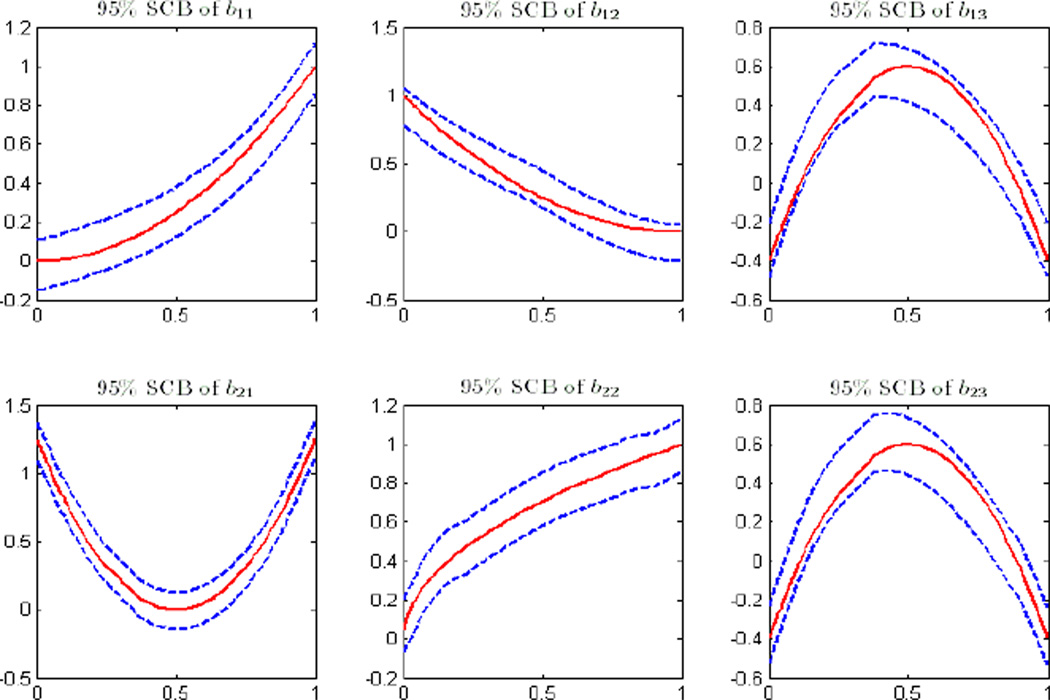

Example 2. This example is used to evaluate the coverage probabilities of SCB of the functional coefficients B(s) based on the wild bootstrap method. The data were generated from model (5.1) under the same parameter values. We set n = 500 and M = 25, 50, and 75 and generated 200 datasets for each combination. Based on the generated data, we calculated SCB for each component of B1(s) and B2(s). Table 1 summarizes the empirical coverage probabilities based on 200 simulations for α = 0.01 and α = 0.05. The coverage probabilities improve with the number of grid points M. When M = 75, the empirical coverage probabilities are close to the nominal confidence levels. The Monte Carlo errors are of size √(0.95 × 0.05/200) ≈ 0.015 for α = 0.05. Fig. 3 depicts typical simultaneous confidence bands, where n = 500 and M = 50. Additional simulation results are given in the supplementary document.

Table 1.

Empirical coverage probabilities of 1 − α SCB for all components of B1(·) and B2(·) based on 200 simulated data sets.

| α | M | b11 | b12 | b13 | b21 | b22 | b23 |
|---|---|---|---|---|---|---|---|
| 0.05 | 25 | 0.915 | 0.930 | 0.945 | 0.920 | 0.915 | 0.945 |
| 0.05 | 50 | 0.925 | 0.940 | 0.945 | 0.930 | 0.925 | 0.950 |
| 0.05 | 75 | 0.945 | 0.950 | 0.955 | 0.945 | 0.945 | 0.955 |
| 0.01 | 25 | 0.985 | 0.965 | 0.985 | 0.985 | 0.990 | 0.980 |
| 0.01 | 50 | 0.995 | 0.980 | 0.985 | 0.985 | 0.995 | 0.985 |
| 0.01 | 75 | 0.990 | 0.985 | 0.990 | 0.995 | 0.990 | 0.990 |
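As a rough guide for reading Table 1, the Monte Carlo error of an empirical coverage probability can be approximated by the binomial standard error at the nominal level. The short sketch below assumes independent simulated data sets; the function name `monte_carlo_standard_error` is illustrative.

```python
import math


def monte_carlo_standard_error(nominal_coverage, n_simulations):
    """Binomial standard error of an empirical coverage proportion."""
    return math.sqrt(nominal_coverage * (1.0 - nominal_coverage) / n_simulations)


se_95 = monte_carlo_standard_error(0.95, 200)  # alpha = 0.05
se_99 = monte_carlo_standard_error(0.99, 200)  # alpha = 0.01
print(round(se_95, 4), round(se_99, 4))
```

With 200 data sets the standard errors are about 0.015 and 0.007. On that scale, the M = 75 entries for α = 0.05 (0.945 to 0.955) are well within one standard error of 0.95, whereas the smallest M = 25 entry (0.915) lies more than two standard errors below it.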

Fig 3.

Typical simultaneous confidence bands with n = 500 and M = 50. The red solid curves are the true coefficient functions, and the blue dashed curves are the confidence bands.

6. Real Data Analysis

The data set consists of 128 healthy infants (75 males and 53 females) from the neonatal project on early brain development. The gestational ages of these infants range from 262 to 433 days and their mean gestational age is 298 days with standard deviation 17.6 days. The DTIs and T1-weighted images were acquired for each subject. For the DTIs, the imaging parameters were as follows: six non-collinear directions at a b-value of 1000 s/mm2 with a reference scan (b = 0), an isotropic voxel resolution of 2 mm, and an in-plane field of view of 256 mm in both directions. A total of five repetitions were acquired to improve the signal-to-noise ratio of the DTIs.

The DTI data were processed by two key steps including a weighted least squares estimation method [2, 54] to construct the diffusion tensors and a DTI atlas building pipeline [20, 55] to register DTIs from multiple subjects to create a study specific unbiased DTI atlas, to track fiber tracts in the atlas space, and to propagate them back into each subject’s native space by using registration information. Subsequently, diffusion tensors (DTs) and their scalar diffusion properties were calculated at each location along each individual fiber tract by using DTs in neighboring voxels close to the fiber tract. Fig. 1 (a) displays the fiber bundle of the genu of the corpus callosum (GCC), which is an area of white matter in the brain. The GCC is the anterior end of the corpus callosum, and is bent downward and backward in front of the septum pellucidum; diminishing rapidly in thickness, it is prolonged backward under the name of the rostrum, which is connected below with the lamina terminalis. It was found that neonatal microstructural development of GCC positively correlates with age and callosal thickness.

The two aims of this analysis are to compare diffusion properties including FA and MD along the GCC between the male and female groups and to delineate the development of fiber diffusion properties across time, which is addressed by including the gestational age at MRI scanning as a covariate. FA and MD, respectively, measure the inhomogeneous extent of local barriers to water diffusion and the averaged magnitude of local water diffusion. We fitted model (1.1) to the FA and MD values from all 128 subjects, in which xi = (1, G, Age)T, where G represents gender. We then applied the estimation and inference procedures to estimate B(s) and calculate Sn for each hypothesis test. We approximated the p-value of Sn using the wild bootstrap method with G = 1,000 replications. Finally, we constructed the 95% simultaneous confidence bands for the functional coefficients of Bj(s) for j = 1, 2.
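The final step of the bootstrap test can be sketched as follows. This is an illustration under an assumption that is not restated in this section: the p-value is taken as the proportion of bootstrapped statistics that are at least as large as the observed Sn. The bootstrapped statistics themselves would come from the wild bootstrap procedure. Here a deterministic toy array stands in for them, and the name `bootstrap_p_value` is hypothetical.

```python
import numpy as np


def bootstrap_p_value(observed_statistic, bootstrap_statistics):
    """Proportion of bootstrap statistics >= the observed statistic."""
    bootstrap_statistics = np.asarray(bootstrap_statistics, dtype=float)
    return float(np.mean(bootstrap_statistics >= observed_statistic))


# Toy stand-in for 1,000 bootstrapped statistics: the values 0, 1, ..., 999.
toy_bootstrap = np.arange(1000, dtype=float)
p_toy = bootstrap_p_value(950.0, toy_bootstrap)
print(p_toy)
```

With 1,000 replications the smallest nonzero p-value this estimator can return is 0.001, so an observed statistic that exceeds every bootstrapped value is naturally reported as p < 0.001.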

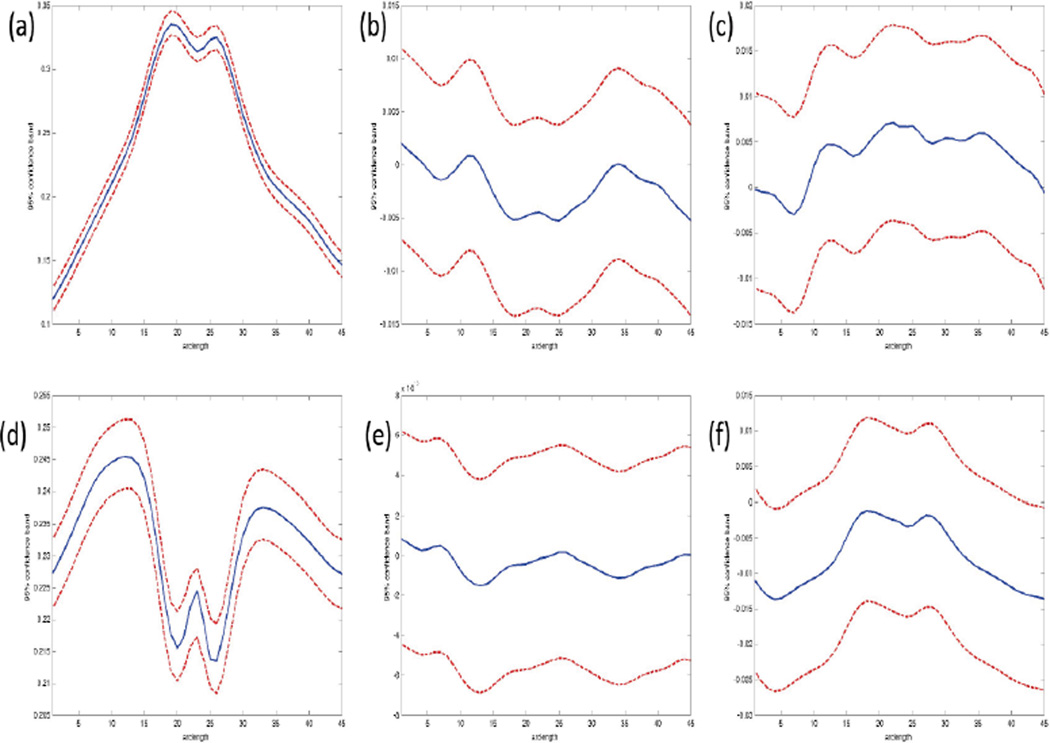

Fig. 4 presents the estimated coefficient functions corresponding to 1, G, and Age associated with FA and MD (blue solid lines in all panels of Fig. 4). The intercept functions (all panels in the first column of Fig. 4) describe the overall trend of FA and MD. The gender coefficients for FA and MD in the second column of Fig. 4 are negative at most of the grid points, which may indicate that compared with female infants, male infants have relatively smaller magnitudes of local water diffusivity along the genu of the corpus callosum. The gestational age coefficients for FA (panel (c) of Fig. 4) are positive at most grid points, indicating that FA measures increase with age in both male and female infants, whereas those corresponding to MD (panel (f) of Fig. 4) are negative at most grid points. This may indicate a negative correlation between the magnitudes of local water diffusivity and gestational age along the genu of the corpus callosum.

Fig 4.

Plot of estimated effects of intercept, gender, and age (from left to right) and their 95% confidence bands. The upper panels are for FA and the lower panels are for MD. The blue solid curves are the estimated coefficient functions and the red dashed curves are the confidence bands.

We statistically tested the effects of gender and gestational age on FA and MD along the GCC tract. To test the gender effect, we computed the global test statistic Sn = 144.63 and its associated p-value (p = 0.078), indicating a weakly significant gender effect, which agrees with the findings in panels (b) and (e) of Fig. 4. A moderately significant age effect was found with Sn = 929.69 (p-value < 0.001). This agrees with the findings in panel (f) of Fig. 4, indicating that MD along the GCC tract changes moderately with gestational age. Furthermore, for FA and MD, we constructed the 95% simultaneous confidence bands of the varying coefficients for Gi and agei (Fig. 4).

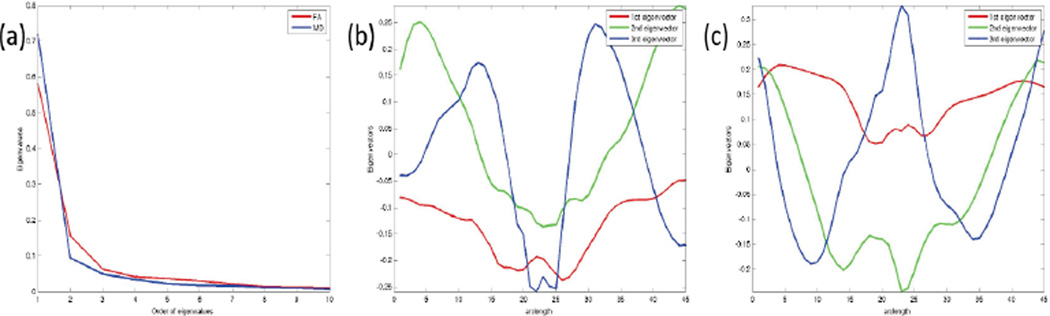

Fig. 5 presents the first 10 eigenvalues and 3 eigenfunctions of Σ̂η,jj(s, t) for j = 1, 2. The relative eigenvalues of Σ̂η,jj defined as the ratios of the eigenvalues of Σ̂η,jj(s, t) over their sum have similar distributional patterns (panel (a) of Fig. 5). We observe that the first three eigenvalues account for more than 90% of the total and the others quickly vanish to zero. The eigenfunctions of FA corresponding to the largest three eigenvalues (Fig. 5 (b)) are different from those of MD (Fig. 5 (c)).
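The relative eigenvalues described above are straightforward to compute once a covariance estimate is available on a grid. The sketch below is a generic illustration, not the authors' code: it assumes a symmetric (M, M) matrix, clips tiny negative eigenvalues caused by rounding, and uses a hypothetical diagonal matrix as input. The function names are illustrative.

```python
import numpy as np


def relative_eigenvalues(cov):
    """Eigenvalues in decreasing order, divided by their sum."""
    eigenvalues = np.linalg.eigvalsh(cov)[::-1]
    eigenvalues = np.clip(eigenvalues, 0.0, None)  # guard against rounding
    return eigenvalues / eigenvalues.sum()


def components_needed(cov, fraction):
    """Smallest number of leading eigenvalues whose share reaches `fraction`."""
    cumulative = np.cumsum(relative_eigenvalues(cov))
    count = int(np.searchsorted(cumulative, fraction)) + 1
    return min(count, cumulative.size)


# Hypothetical covariance with eigenvalues 6, 3, and 1.
toy_cov = np.diag([6.0, 3.0, 1.0])
toy_relative = relative_eigenvalues(toy_cov)
toy_count = components_needed(toy_cov, 0.8)
print(toy_relative, toy_count)
```

Applied to a matrix discretising Σ̂η,jj(s, t), `components_needed(cov, 0.9)` would return the number of leading components that account for at least 90% of the total.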

Fig 5.

Plot of the first 10 eigenvalues (a) and the first 3 eigenfunctions for FA (b) and MD (c).

In the supplementary document, we further illustrate the proposed methodology by an empirical analysis of another real data set.

Acknowledgments

The authors are grateful to the Editor Peter Bühlmann, the Associate Editor, and three anonymous referees for valuable suggestions, which have greatly helped to improve our presentation.

Appendix

We introduce some notation. We define

| (6.1) |

where . Throughout the proofs, the Ck’s stand for generic constants, which may vary from line to line.

The proofs of Theorems 1–5 rely on the following lemmas whose proofs are given in the supplementary document.

Lemma 1. Under Assumptions (C1), (C3)–(C5), and (C7), we have that for each j,

| (6.2) |

Lemma 2. Under Assumptions (C1), (C4), (C5), and (C7), we have that for any r ≥ 0 and j,

where ΠM(·) is the sampling distribution function based on 𝒮 = {s1,…, sM} and Π(·) is the distribution function of sm.

Lemma 3. Under Assumptions (C2)–(C5), we have

| (6.3) |

Lemma 4. If Assumptions (C1) and (C3)–(C6) hold, then we have

| (6.4) |

where en(s) = Op((Mh1j)−1/2) with E[en(s)] = 0.

Lemma 5. If Assumptions (C1) and (C3)–(C6) hold, then for s = 0 or 1, we have

| (6.5) |

Lemma 6. Under Assumptions (C1)–(C9a), we have

Lemma 7. Under Assumptions (C1)–(C9a), we have

We present only the key steps in the proof of Theorem 1 below.

Proof of Theorem 1. Define

According to the definition of vec(Âj(s)), it is easy to see that

| (6.6) |

| (6.7) |

The proof of Theorem 1 (i) consists of two parts.

Part 1 is to show that holds uniformly for all s ∈ [0, 1] and j = 1, …, J.

Part 2 is to show that converges weakly to a Gaussian process G(·) with mean zero and covariance matrix for each j.

In part 1, we show that

| (6.8) |

It follows from Lemma 1 that

hold uniformly for all s ∈ [0, 1]. It follows from Lemma 2 that

| (6.9) |

hold uniformly for all s ∈ [0, 1]. Based on these results, we can finish the proof of (6.8).

In part 2, we show the weak convergence of for j = 1, …, J. Part 2 consists of two steps. In Step 1, it follows from the standard central limit theorem that for each s ∈ [0, 1],

| (6.10) |

where →L denotes convergence in distribution.

Step 2 is to show the asymptotic tightness of . By using (6.9) and (6.1), can be approximated by the sum of three terms (I), (II), and (III) as follows:

| (6.11) |

We investigate the three terms on the right hand side of (6.11) as follows. It follows from Lemma 3 that the first term on the right hand side of (6.11) converges to zero uniformly. We prove the asymptotic tightness of (II) as follows. Define

Thus, we only need to prove the asymptotic tightness of X̂n,j(s). The asymptotic tightness of X̂n,j(s) can be proved using the empirical process techniques [42]. It follows that

Thus, X̂n,j(s) can be simplified as

We consider a function class . By Assumption (C2), ℰη is a P-Donsker class.

Finally, we consider the third term (III) on the right hand side of (6.11). It is easy to see that (III) can be written as

Using the same argument as for the second term (II), we can show the asymptotic tightness of . Therefore, for any h1j → 0,

| (6.12) |

It follows from Assumptions (C5) and (C7) and (6.12) that (III) converges to zero uniformly. Therefore, we can finish the proof of Theorem 1 (i). Since Theorem 1 (ii) is a direct consequence of Theorem 1 (i) and Lemma 4, we finish the proof of Theorem 1.

Proof of Theorem 2. The proofs of Parts (a)–(d) follow from straightforward calculations; detailed derivations are given in the supplementary document. Here we prove Part (e) only. Let K̃M,h(s) = K̃M(s/h)/h, where K̃M(s) is the empirical equivalent kernel for the first-order local polynomial kernel [11]. Thus, we have

| (6.13) |

We define

It follows from (6.13) that

| (6.14) |

It follows from Lemma 2 and a Taylor’s expansion that

Since weakly converges to a Gaussian process in ℓ∞([0, 1]) as n → ∞, is asymptotically tight. Thus, we have

Combining these results, we have

This completes the proof of Part (e).

Proof of Theorem 3. Recalling that η̂ij(s) = ηij(s) + Δi,j(s), we have

| (6.15) |

This proof consists of two steps. The first step is to show that the first three terms on the right hand side of (6.15) converge to zero uniformly for all (s, t) ∈ [0, 1]2 in probability. The second step is to show the uniform convergence of to Ση(s, t) over (s, t) ∈ [0, 1]2 in probability.
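The structure of this argument can be seen at the level of a single subject. Substituting η̂ij = ηij + Δi,j into a product of two smoothed curves gives four terms. The display below records only this algebraic identity; the averaging over i and the normalising constant used in (6.15) are not reproduced, and the ordering of the terms is an assumption. The index j′ is generic (take j′ = j for Σ̂η,jj).

```latex
\hat\eta_{ij}(s)\,\hat\eta_{ij'}(t)
  = \Delta_{i,j}(s)\,\Delta_{i,j'}(t)
  + \eta_{ij}(s)\,\Delta_{i,j'}(t)
  + \Delta_{i,j}(s)\,\eta_{ij'}(t)
  + \eta_{ij}(s)\,\eta_{ij'}(t).
```

The three terms involving Δ are those that the first step shows to be uniformly negligible, and the average of the last term is the quantity whose uniform convergence to Ση(s, t) is established in the second step.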

We first show that

| (6.16) |

Since

| (6.17) |

it is sufficient to focus on the three terms on the right-hand side of (6.17). Since

we have

Similarly, we have

It follows from Lemma 6 that . Similarly, we can show that .

We can show that

| (6.18) |

Note that

holds for any (s1, t1) and (s2, t2); hence the functional class {ηj(u)ηj(υ) : (u, υ) ∈ [0, 1]2} is a Vapnik–Chervonenkis (VC) class [42, 31]. Thus, (6.18) holds.

Finally, we can show that

| (6.19) |

With some calculations, for a positive constant C1, we have

It follows from Lemma 7 that

Since we have . Furthermore, since , we have

Note that the arguments for (6.16)–(6.19) hold for Σ̂η,jj′(·, ·) for any j ≠ j′. Thus, combining (6.16)–(6.19) leads to Theorem 3 (i).

To prove Theorem 3 (ii), we follow the same arguments as in Lemma 6 of Li and Hsing [32]. For completeness, we highlight several key steps below. We define

| (6.20) |

Following Hall and Hosseini-Nasab [21] and the Cauchy-Schwarz inequality, we have

which yields Theorem 3 (ii.a).

Using (4.9) in Hall, Müller and Wang [22], we have

which yields Theorem 3 (ii.b). This completes the proof.

Proof of Theorem 5. The proof of Theorem 5 is given in the supplementary material of this paper.

Footnotes

The research of Zhu and Kong was supported by NIH grants RR025747-01, P01CA142538-01, MH086633, EB005149-01 and AG033387.

Li’s research was supported by NSF grant DMS 0348869, NIH grants P50-DA10075 and R21-DA024260 and NNSF of China 11028103. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NSF or the NIH.

SUPPLEMENTARY MATERIAL

Supplement to “Multivariate Varying Coefficient Model and its Application to Neuroimaging Data”: (http://www.bios.unc.edu/research/bias/documents/MVMCSuplemental.pdf). This supplemental material includes the proofs of all theorems and lemmas.

Contributor Information

Hongtu Zhu, Email: hzhu@bios.unc.edu, Departments of Biostatistics and Biomedical Research Imaging Center, University of North Carolina at Chapel Hill, Chapel Hill, NC 27599, USA.

Runze Li, Email: rli@stat.psu.edu, Department of Statistics, The Pennsylvania State University, University Park, PA 16802.

Linglong Kong, Email: llkong@bios.unc.edu, Departments of Biostatistics and Biomedical Research Imaging Center, University of North Carolina at Chapel Hill, Chapel Hill, NC 27599, USA.

References

- 1. Aguirre GK, Zarahn E, D’Esposito M. The variability of human, BOLD hemodynamic responses. NeuroImage. 1998;8:360–369. doi: 10.1006/nimg.1998.0369.

- 2. Basser PJ, Mattiello J, LeBihan D. Estimation of the effective self-diffusion tensor from the NMR spin echo. Journal of Magnetic Resonance Ser. B. 1994a;103:247–254. doi: 10.1006/jmrb.1994.1037.

- 3. Basser PJ, Mattiello J, LeBihan D. MR diffusion tensor spectroscopy and imaging. Biophysical Journal. 1994b;66:259–267. doi: 10.1016/S0006-3495(94)80775-1.

- 4. Buzsaki G. Rhythms of The Brain. Oxford University Press; 2006.

- 5. Cardot H. Conditional functional principal components analysis. Scandinavian J. of Statistics. 2007;34:317–335.

- 6. Cardot H, Josserand E. Horvitz-Thompson estimators for functional data: asymptotic confidence bands and optimal allocation for stratified sampling. Biometrika. 2011;98:107–118.

- 7. Cardot H, Chaouch M, Goga C, Labruère C. Properties of design-based functional principal components analysis. J. of Statistical Planning and Inference. 2010;140:75–91.

- 8. Chiou J, Müller H, Wang J. Functional response models. Statistica Sinica. 2004;14:675–693.

- 9. Degras DA. Simultaneous confidence bands for nonparametric regression with functional data. Statistica Sinica. 2011;21:1735–1765.

- 10. Einmahl U, Mason DM. An empirical process approach to the uniform consistency of kernel-type function estimators. Journal of Theoretical Probability. 2000;13:1–37.

- 11. Fan J, Gijbels I. Local Polynomial Modelling and Its Applications. London: Chapman and Hall; 1996.

- 12. Fan J, Yao Q, Cai Z. Adaptive varying-coefficient linear models. J. R. Stat. Soc. Ser. B Stat. Methodol. 2003;65:57–80.

- 13. Fan J, Zhang W. Statistical estimation in varying coefficient models. Ann. Statist. 1999;27:1491–1518.

- 14. Fan J, Zhang W. Simultaneous confidence bands and hypothesis testing in varying-coefficient models. Scand J. Statist. 2000;27:715–731.

- 15. Fan J, Zhang W. Statistical methods with varying coefficient models. Stat. Interface. 2008;1:179–195. doi: 10.4310/sii.2008.v1.n1.a15.

- 16. Faraway JJ. Regression analysis for a functional response. Technometrics. 1997;39:254–261.

- 17. Fass L. Imaging and cancer: a review. Molecular Oncology. 2008;2:115–152. doi: 10.1016/j.molonc.2008.04.001.

- 18. Friston KJ. Statistical Parametric Mapping: the Analysis of Functional Brain Images. London: Academic Press; 2007.

- 19. Friston KJ. Modalities, modes, and models in functional neuroimaging. Science. 2009;326:399–403. doi: 10.1126/science.1174521.

- 20. Goodlett CB, Fletcher PT, Gilmore JH, Gerig G. Group analysis of DTI fiber tract statistics with application to neurodevelopment. NeuroImage. 2009;45:S133–S142. doi: 10.1016/j.neuroimage.2008.10.060.

- 21. Hall P, Hosseini-Nasab M. On properties of functional principal components analysis. Journal of the Royal Statistical Society B. 2006;68:109–126.

- 22. Hall P, Müller H-G, Wang J-L. Properties of principal component methods for functional and longitudinal data analysis. Ann. Statist. 2006;34:1493–1517.

- 23. Hall P, Müller H-G, Yao F. Modelling sparse generalized longitudinal observations with latent Gaussian processes. J. R. Stat. Soc. Ser. B Stat. Methodol. 2008;70:703–723.

- 24. Hastie TJ, Tibshirani RJ. Varying-coefficient models. J. Roy. Statist. Soc. B. 1993;55:757–796.

- 25. Heywood I, Cornelius S, Carver S. An Introduction to Geographical Information Systems. 3rd ed. Prentice Hall; 2006.

- 26. Hoover DR, Rice JA, Wu CO, Yang L-P. Nonparametric smoothing estimates of time-varying coefficient models with longitudinal data. Biometrika. 1998;85:809–822.

- 27. Huang JZ, Wu CO, Zhou L. Varying-coefficient models and basis function approximations for the analysis of repeated measurements. Biometrika. 2002;89:111–128.

- 28. Huang JZ, Wu CO, Zhou L. Polynomial spline estimation and inference for varying coefficient models with longitudinal data. Statist. Sinica. 2004;14:763–788.

- 29. Huettel SA, Song AW, McCarthy G. Functional Magnetic Resonance Imaging. London: Sinauer Associates, Inc; 2004.

- 30. Kosorok MR. Bootstraps of sums of independent but not identically distributed stochastic processes. J. Multivariate Anal. 2003;84:299–318.

- 31. Kosorok MR. Introduction to Empirical Processes and Semiparametric Inference. New York: Springer; 2008.

- 32. Li Y, Hsing T. Uniform Convergence Rates for Nonparametric Regression and Principal Component Analysis in Functional/Longitudinal Data. The Annals of Statistics. 2010;38:3321–3351.

- 33. Lindquist M. The Statistical Analysis of fMRI Data. Statistical Science. 2008;23:439–464.

- 34. Lindquist M, Loh JM, Atlas L, Wager T. Modeling the Hemodynamic Response Function in fMRI: Efficiency, Bias and Mis-modeling. NeuroImage. 2008;45:S187–S198. doi: 10.1016/j.neuroimage.2008.10.065.

- 35. Ma S, Yang L, Carroll RJ. A simultaneous confidence band for sparse longitudinal regression. Statistica Sinica. 2011;21:95–122. doi: 10.5705/ss.2010.034.

- 36. Mercer J. Functions of positive and negative type, and their connection with the theory of integral equations. Philos. Trans. Roy. Soc. London Ser. A. 1909;209:415–446.

- 37. Niedermeyer E, da Silva FL. Electroencephalography: Basic Principles, Clinical Applications, and Related Fields. Lippincott Williams & Wilkins; 2004.

- 38. Ramsay JO, Silverman BW. Functional Data Analysis. New York: Springer-Verlag; 2005.

- 39. Rice JA, Silverman BW. Estimating the mean and covariance structure nonparametrically when the data are curves. J. Roy. Statist. Soc. Ser. B. 1991;53:233–243.

- 40. Sun J, Loader CR. Simultaneous Confidence Bands for Linear Regression and Smoothing. The Annals of Statistics. 1994;22:1328–1345.

- 41. Towle VL, Bolaños J, Suarez D, Tan K, Grzeszczuk R, Levin DN, Cakmur R, Frank SA, Spire JP. The spatial location of EEG electrodes: locating the best-fitting sphere relative to cortical anatomy. Electroencephalogr Clin Neurophysiol. 1993;86:1–6. doi: 10.1016/0013-4694(93)90061-y.

- 42. van der Vaart AW, Wellner JA. Weak Convergence and Empirical Processes. Springer-Verlag Inc; 1996.

- 43. Wand MP, Jones MC. Kernel Smoothing. London: Chapman and Hall; 1995.

- 44. Wang L, Li H, Huang JZ. Variable selection in nonparametric varying-coefficient models for analysis of repeated measurements. J. Amer. Statist. Assoc. 2008;103:1556–1569. doi: 10.1198/016214508000000788.

- 45. Welsh AH, Yee TW. Local regression for vector responses. Journal of Statistical Planning and Inference. 2006;136:3007–3031.

- 46. Worsley KJ, Taylor JE, Tomaiuolo F, Lerch J. Unified univariate and multivariate random field theory. NeuroImage. 2004;23:189–195. doi: 10.1016/j.neuroimage.2004.07.026.

- 47. Wu CO, Chiang CT, Hoover DR. Asymptotic confidence regions for kernel smoothing of a varying-coefficient model with longitudinal data. J. Amer. Statist. Assoc. 1998;93:1388–1402.

- 48. Wu CO, Chiang C-T. Kernel smoothing on varying coefficient models with longitudinal dependent variable. Statist. Sinica. 2000;10:433–456.

- 49. Wu HL, Zhang JT. Nonparametric Regression Methods for Longitudinal Data Analysis. Hoboken, New Jersey: John Wiley & Sons, Inc; 2006.

- 50. Yao F, Lee TCM. Penalized spline models for functional principal component analysis. J. R. Stat. Soc. Ser. B Stat. Methodol. 2006;68:3–25.

- 51. Zhang J, Chen J. Statistical inference for functional data. The Annals of Statistics. 2007;35:1052–1079.

- 52. Zhou Z, Wu WB. Simultaneous inference of linear models with time varying coefficients. J. R. Statist. Soc. B. 2010;72:513–531.

- 53. Zhu HT, Ibrahim JG, Tang N, Rowe DB, Hao X, Bansal R, Peterson BS. A statistical analysis of brain morphology using wild bootstrapping. IEEE Trans Med Imaging. 2007a;26:954–966. doi: 10.1109/TMI.2007.897396.

- 54. Zhu HT, Zhang HP, Ibrahim JG, Peterson BG. Statistical analysis of diffusion tensors in diffusion-weighted magnetic resonance image data (with discussion). Journal of the American Statistical Association. 2007b;102:1085–1102.

- 55. Zhu HT, Styner M, Tang NS, Liu ZX, Lin WL, Gilmore JH. FRATS: Functional Regression Analysis of DTI Tract Statistics. IEEE Transactions on Medical Imaging. 2010;29:1039–1049. doi: 10.1109/TMI.2010.2040625.
