Abstract

Purpose

This study examined the effects of deafness and sign language use on the distribution of attention across the top and bottom halves of faces.

Methods

In a composite face task, congenitally deaf signers and typically hearing controls made same/different judgments of the top or bottom halves of faces presented with the halves aligned or spatially misaligned, while event-related potentials (ERPs) were recorded.

Results

Both groups were more accurate when judging misaligned than aligned faces, which indicates holistic face processing. Misalignment affected all ERP components examined, with effects on the N170 resembling those of face inversion. Hearing adults were similarly accurate when judging the top and bottom halves of the faces, but deaf signers were more accurate when attending to the bottom than the top. Attending to the top elicited faster P1 and N170 latencies for both groups; within the deaf group, this effect was greatest for individuals who produced the highest accuracies when attending to the top.

Conclusions

These findings dovetail with previous research by providing behavioral and neural evidence of increased attention to the bottom half of the face in deaf signers, and by documenting that these effects generalize to a speeded task, in the absence of gaze shifts, with neutral facial expressions.

Keywords: face perception, deafness, event-related potentials, N170

1. Introduction

Behavioral and neural mechanisms of face perception have a protracted developmental time course, maturing throughout childhood and into late adolescence (see Gauthier & Nelson, 2001, and Nelson, 2001, for reviews). They are also plastic over the course of development (Taylor, McCarthy, et al., 1999; Passarotti, Smith, et al., 2007; Scherf, Behrmann, et al., 2007) and throughout adulthood (Gauthier & Bukach, 2007; Tarr & Gauthier, 2000), as well as with explicit training (Rossion, Gauthier, et al., 2002; Tarr & Gauthier, 2000). The development of face perception can be affected by atypical visual sensory experience early in life, such as congenital cataracts in infancy (Le Grand, Mondloch, et al., 2004). Some research also documents effects of atypical auditory experience (i.e., congenital deafness) on face processing, indicating that face processing is a product of multimodal experience rather than a purely visual domain.

There are several reasons why deafness might affect basic mechanisms of face perception. In the absence of information from others’ voices, deaf individuals must pay particular attention to visual cues from faces and facial expressions in order to recognize individuals and their emotional states. In addition, for those who communicate via sign language, nonmanual markers articulated on the face are a crucial linguistic component of American Sign Language (ASL). Rapid changes in facial features, often involving changes in eyebrow or mouth shape, are used to demarcate clauses and signal prosodic changes, and often carry semantic meaning separate from manual signs (Baker-Shenk, 1985; Corina, 1989; Corina, Bellugi, et al., 1999). Thus, sensory, social, and linguistic factors may increase the salience of specific aspects of faces for deaf signers.

A number of studies have documented effects of deafness and sign language use on a variety of face perception tasks and techniques. Studies employing the Benton Test of Facial Recognition, which taps recognition of the same individual photographed from different angles, reported that deaf signing children and adults outperformed hearing nonsigners (Bellugi et al., 1990; Bettger, Emmorey, et al., 1997), as did hearing individuals who were native signers (born to deaf, signing parents; Bettger, Emmorey, et al., 1997). Additional research has shown that deaf signers outperformed hearing nonsigners on a delayed match-to-sample task in which the foil sample stimulus differed from the target on a single feature: the eyes, nose, or mouth (McCullough & Emmorey, 1997). As in the Benton task studies, hearing native signers outperformed their nonsigning counterparts. Finally, behavioral (Szelag & Wasilewski, 1992; Vargha-Khadem, 1983) and fMRI studies (McCullough, Emmorey, et al., 2005) showed that deafness and sign language use alter the typical left visual field/right hemispheric lateralization of responses to faces, resulting in a more bilateral distribution. Thus, empirical evidence supports the notion that deafness and sign language use induce changes in the behavioral and neural organization of face processing.

A highly systematic and robust finding in the literature for typically hearing individuals is the distribution of gaze and attention across the face. In Western cultures, typically hearing individuals fixate nearly exclusively on the top half of the face, particularly the eyes (Henderson, Williams, et al., 2005; Janik, Wellens, et al., 1978; Schwarzer, Huber, et al., 2005), even during speech-reading tasks that involve word identification from audio-visual face stimuli (Buchan, Pare, et al., 2007). This gaze pattern can vary when attention to emotional facial expressions is required, in which case some fixations are directed toward the mouth (Letourneau & Mitchell, 2008, 2011). These general gaze patterns are reflected in the relative salience of information in the top of the face as compared to the bottom. Typically hearing adults discriminate changes made to the eyes in photographs of faces more accurately than changes made to the mouth (O’Donnell & Bruce, 2001), and recognition performance drops when the eyes are masked or hidden (McKelvie, 1976; Schyns, Bonnar, et al., 2002). Further, studies using the “Bubbles” technique, which systematically limits the information and spatial extent of an image available to the viewer, show that information around the eyes is necessary for face identity judgments, while information around the mouth is necessary for emotion judgments (Schyns, Bonnar, et al., 2002).

Evidence suggests that deafness and sign language use change this distribution of gaze and attention across the face but do not result in face processing deficiencies. When deaf signers view a video clip of another signer from the waist up, they focus not on the hands but on the face, which is the site of important nonmanual markers in ASL (McCullough & Emmorey, 1997). Eye-tracking recordings during signed communication show that although most of deaf signers’ fixations are to the eye region, a reliable percentage of fixation time is also devoted to the lower half of the face (Agrafiotis et al., 2003; De Filippo & Lansing, 2006; Emmorey, Thompson, et al., 2009; Muir & Richardson, 2005; Siple, 1978). Importantly, this gaze pattern is not limited to viewing ASL. Studies employing still photographs of full faces document that when provided with 2-3 seconds of viewing time, deaf signers direct a slight majority of fixations to the top half of the face (generally the eyes) but devote nearly equal time and fixations to the bottom half of the face (generally the mouth; Letourneau & Mitchell, 2011; Watanabe, Matsuda, et al., 2011). This gaze pattern does not vary with task demands: it was observed both when deaf signers attended to face identity and when they attended to emotional expression (Letourneau & Mitchell, 2011). Finally, deaf signers’ fixation patterns do not appear to vary with culture as they do for hearing nonsigners. Deaf signers in both Eastern and Western cultures distribute their gaze between the top and bottom halves of the face. Compared with deaf signers, hearing nonsigners in Western cultures devote more gaze to the top half and less to the bottom, whereas hearing nonsigners in Eastern cultures devote less gaze to the top and more to the bottom (Letourneau & Mitchell, 2011; Watanabe et al., 2011). Thus, deafness and sign language use appear to induce a shift in gaze toward the bottom half of the face, and this shift generalizes beyond culture and beyond linguistic tasks to tasks requiring attention to face identity and facial expression.

In addition to directing a substantial proportion of fixations to the bottom half of the face, deaf signers devote relatively more attention to that region than hearing nonsigners do and, in some instances, less attention to information in the top half. In the eye-tracking study reviewed above (Letourneau & Mitchell, 2011), deaf signers and hearing nonsigners were also asked to judge identity and emotional expression in whole faces as well as in isolated top halves and isolated bottom halves. This manipulation allowed for an examination of the relative salience of information contained in each part of the face. Deaf signers and hearing nonsigners performed similarly when presented with full faces and with isolated bottom halves. However, when presented with isolated top halves, deaf signers were less accurate than hearing nonsigners. This reduced salience of information in the top half of the face for deaf signers was thus directly linked to their relative increase in fixations directed to the bottom half of whole faces.

The relative increase in salience of the bottom half of the face may be task-dependent for deaf signers. In a match-to-sample task described earlier (McCullough & Emmorey, 1997), deaf signers made fewer errors than either hearing signers or hearing nonsigners when the foil sample contained a differing mouth. However, they also made fewer errors than either group when detecting changes made to the eyes. The difference between these results and Letourneau and Mitchell (2011) may be due to the demands of the tasks in each study. McCullough and Emmorey (1997) required the discrimination of individual features within three highly similar faces that did not vary in expression, while Letourneau and Mitchell (2011) required recognition and labeling of identities or emotions in individual faces. The match-to-sample task may provide opportunities to compare stimuli in a manner not possible in a recognition/identification task with individual stimuli, which may have enabled deaf subjects to discriminate equally well across facial features. By contrast, the recognition and/or identification of a single face and its emotional expression may evoke less feature-based processing and may reveal differences in the relative salience of individual features or regions of the face.

Together, these studies indicate that deafness and/or sign language use have the potential to alter the salience of information contained in different parts of the face. It is not yet known whether the group differences documented in the literature are observed only when linguistic analysis of faces and facial gestures is required. The current study was therefore designed to examine effects of deafness and sign language use on the processing of faces posing neutral expressions devoid of social, emotional, or linguistic cues. The current study was also designed to determine whether increased salience of information in the bottom half of the face is observed when gaze shifts are controlled and stimulus durations are short, or whether it depends on gaze shifts directed specifically to that region of the face. Results of the current study will shed light on whether lifelong deafness and ASL experience produce plasticity in basic neural and behavioral mechanisms of face perception that extends beyond communicative interactions to a broad array of face processing tasks. Such a finding would provide important evidence for multimodal plasticity in the neurocognitive system devoted to face processing.

Deaf signers and hearing nonsigners in this study completed a composite face task (Young, Hellawell, et al., 1987) to examine holistic face processing and the relative salience of information in the top and bottom halves of the face in the two populations. The composite face task taps holistic processing, or the tendency to perceive and encode the face as a single unit rather than as a group of features (Tanaka & Farah, 1991, 1993). Participants are presented with a pair of composite faces, each created by combining the top half of one person’s face with the bottom half of another. These composites are presented either with the two halves spatially aligned as an intact whole, or with the top and bottom halves spatially misaligned. Participants must attend selectively to either the top or the bottom halves and judge whether those halves of the two composites are the same or different (Hole, 1994; Hole, George, et al., 1999). Because the unattended halves of the faces always differ, holistic processing manifests as reduced accuracy in detecting that the attended halves are in fact the same. The task is particularly challenging when the top and bottom halves are aligned as intact faces. In that case, holistic processing binds the two halves of the face together, which makes selective attention more difficult; when the halves are misaligned, holistic processing is disrupted and performance improves. The current study takes an unusual approach by also using this task to examine the relative salience of the top and bottom halves of the face, comparing performance when attention is directed to the top with performance when attention is directed to the bottom. If accuracies are higher when attending selectively to the bottom half of the face than to the top, for example, this suggests that information in the bottom half is relatively more salient and that participants are less able to limit their attention to the top half. We predicted that deaf signers would be more accurate when attending to the bottom half of the face than the top, while hearing nonsigners would not differ across the two conditions. We also predicted that both participant groups would process faces holistically. However, if deaf signers are better able than hearing nonsigners to attend selectively to parts of faces, they may show smaller alignment effects in this task.

Neural effects of deafness and sign language use during this task were examined with event-related potentials (ERPs). Previous research has shown that spatially misaligning the face halves in the composite task affects the N170 and N250 components in a way that resembles the effects of facial inversion; that is, misaligned faces elicited larger and later peaks than intact faces (Jacques & Rossion, 2009, 2010; Kuefner, Jacques, et al., 2010; Letourneau & Mitchell, 2008). We predicted that the two participant groups would produce similar effects of misalignment on face-sensitive ERPs. Further, in typically hearing adults, attending to the top half of the face during this task elicits faster P1 and N170 latencies than attending to the bottom half (Letourneau & Mitchell, 2008). This suggests that a processing advantage might be afforded to the eye region of the face, perhaps because of its salience during many face perception tasks. If deaf adults direct less attention to the top half of the face, they may show smaller ERP processing advantages for the eye region than the hearing group.

ERP data were also examined for effects of deafness and sign language use on the laterality of face processing. The effects of misalignment described above are greater in the right hemisphere than the left (Jacques & Rossion, 2009; Letourneau & Mitchell, 2008), so the composite task is likely to elicit a reliable right hemispheric asymmetry. In addition, the absence of linguistic facial expressions should reduce the involvement of the left hemisphere in the neural processing of the stimuli in this task. Thus, the current study poses a strong test of whether deafness and sign language use affect the fundamental right hemispheric asymmetry for face processing. If a reduction in the right hemispheric asymmetry in deaf signers is fundamental and present from very early stages of visual perception, even during the perception of static neutral faces, we should observe similar ERP amplitudes over the right and left hemispheres in the deaf group only.

2. Methods

2.1. Participants

Twelve hearing adults (6 female) aged 25-38 years (mean age 31.25) and 12 congenitally deaf adults (7 female) aged 23-51 years (mean age 32.5) participated in the study. Subjects completed a background questionnaire about their language background and experience; deaf individuals were also asked about their use of and experience with assistive devices. All subjects had normal or corrected-to-normal vision, as assessed by a “Tumbling-E” Snellen-type chart, and self-reported no neurological or psychological diagnoses. As shown in Table 1, deaf subjects were severely to profoundly deaf; most had been diagnosed within the first year of life, and all were exposed to ASL by age 5. None reported progressive hearing loss, and all but four deaf subjects were daily hearing aid users; of those four, two had used hearing aids until adolescence or adulthood and two had never used any assistive device. Hearing subjects self-reported normal hearing and no knowledge of ASL or experience communicating with deaf people. Subjects provided informed consent under a protocol approved by the University of Massachusetts Medical School and were paid for their participation. Instructions were given in ASL for all deaf participants.

Table 1.

Deaf participant demographics.

| # | Sex | Deaf Family Members | Cause of Deafness | Age when Diagnosed | Age of first exposure to ASL* |
|---|---|---|---|---|---|
| 1 | M | Parents, siblings (4th generation deaf family) | Genetic | <1 | Birth |
| 2 | F | Parents, siblings (4th generation deaf family) | Genetic | <1 | Birth |
| 3 | F | Father and siblings, hard of hearing mother | Genetic | <1 | Birth |
| 4 | F | Parents and other family members | Genetic | <1 | Birth |
| 5 | F | Parents and others (5th generation deaf family) | Genetic | <1 | Birth |
| 6 | F | None | Kniest dysplasia | <1 | 1 |
| 7 | M | None | Unknown | <1 | 1 |
| 8 | M | None | Unknown | 1 | 1 |
| 9 | F | None | Unknown | 3.5 | 4 |
| 10 | F | None | Unknown | 1.5 | SEE: 3, ASL: 5 |
| 11 | M | None | Unknown | <1 | 5 |
| 12 | M | One sibling | Unknown | <1 | 5 |

Deaf individuals with deaf parents or older siblings were exposed to ASL at home from birth. For deaf individuals without deaf family members, the age at which they began learning ASL is listed. This usually took place at deaf community centers and deaf schools. SEE=Signed Exact English. All deaf participants used ASL daily as their primary means of communication.

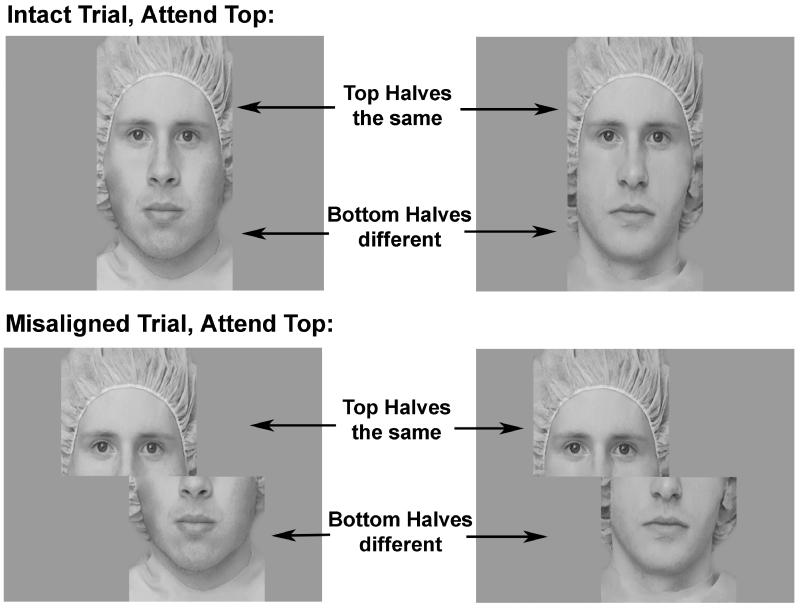

2.2. Stimuli

Fifty-two face composites were created from gray scale digitized images of adult Caucasian faces (see Le Grand, et al., 2004). Photographs were taken of male and female models wearing no jewelry, glasses or makeup, with a surgical cap covering their hair and ears. Using Adobe Photoshop, face composites were created by splitting face images in half horizontally across the middle of the nose, and then recombining the faces using the top and the bottom halves of different individuals. In the aligned condition, the top and bottom face segments were properly aligned to resemble a normal, intact face (see Figure 1, top panel). In the misaligned condition, the top half of each face was misaligned by shifting it horizontally to the left so that the right-most edge of the top half was aligned with the middle of the nose in the bottom half of the image (see Figure 1, bottom panel). A previous study found no effects of the direction (left/right) of misalignment on behavioral measures of holistic processing or any ERP components (Letourneau & Mitchell, 2008).

Figure 1.

Composite face stimuli.

The same face composites were used in the aligned and misaligned conditions, and stimuli in the attend top and attend bottom conditions were taken from images of the same individuals. Thus, stimulus identity was balanced across the aligned and misaligned blocks and across the attend top and attend bottom blocks. On all trials the unattended halves of the faces differed; on 50% of trials the attended halves also differed (“different” trials), and on the other 50% the attended halves were the same (“same” trials). Same and different trials were randomly intermixed within each block. Each block contained 48 trials.
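The trial constraints described above can be illustrated with a short Python sketch. It is not the stimulus-presentation code used in the study: `Trial`, `make_block`, and the integer identity pool are hypothetical, and the random draw of four distinct identities per trial is an assumption the text does not specify. The sketch encodes only the stated rules: the unattended halves always differ, and exactly half of the 48 trials in a block have matching attended halves, intermixed at random.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    trial_type: str     # "same" or "different" (refers to the attended half only)
    attended: tuple     # identities of the attended half in (first, second) face
    unattended: tuple   # identities of the unattended half in (first, second) face


def make_block(identities, n_trials=48, seed=None):
    """Hypothetical block builder: 50% same / 50% different, randomly intermixed.

    Assumption: each trial draws four distinct identities at random.
    The unattended halves always come from two different identities.
    """
    if n_trials % 2:
        raise ValueError("n_trials must be even for a 50/50 split")
    rng = random.Random(seed)
    trial_types = ["same"] * (n_trials // 2) + ["different"] * (n_trials // 2)
    trials = []
    for trial_type in trial_types:
        a, b, c, d = rng.sample(identities, 4)  # four distinct identities
        attended = (a, a) if trial_type == "same" else (a, d)
        trials.append(Trial(trial_type, attended, (b, c)))
    rng.shuffle(trials)  # intermix same and different trials within the block
    return trials


block = make_block(list(range(20)), seed=1)  # hypothetical pool of 20 identities
print(sum(t.trial_type == "same" for t in block))  # 24
```

Fixing the 24/24 split and then shuffling guarantees an exact balance within each block, unlike drawing each trial type independently with probability 0.5.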

Within each trial, faces were centered on the screen, and the location of the face halves remained constant. Stimuli in the aligned condition were 20 cm wide by 30 cm high presented on a 21” color CRT monitor, subtending a visual angle of 5.7 × 8.6 degrees at a distance of 200 cm. Stimuli in the misaligned condition were 30 cm wide by 30 cm high, subtending a visual angle of 8.6 × 8.6 degrees. Although the misaligned stimuli occupied a wider horizontal visual angle, the face halves were of identical size in both conditions.
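The reported visual angles follow from the standard formula θ = 2·arctan(s / 2d) for an object of size s viewed from distance d. Here is a minimal Python check, assuming the stimulus is centered on the line of sight:

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Full visual angle (degrees) subtended by an object centered on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


# Stimulus sizes (width, height in cm) and the 200 cm viewing distance from the text.
for label, (w, h) in {"aligned": (20, 30), "misaligned": (30, 30)}.items():
    print(label, round(visual_angle_deg(w, 200), 1), "x", round(visual_angle_deg(h, 200), 1))
# aligned 5.7 x 8.6
# misaligned 8.6 x 8.6
```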

2.3. Study Design & Procedure

The study used a 2 × 2 within-subjects design, crossing alignment (intact vs. misaligned faces) with attended half (top vs. bottom). Four blocks were presented, two with intact faces and two with misaligned faces, and the order of these conditions was counterbalanced across subjects. Within each alignment condition, subjects were instructed in one block to base their judgment only on the top halves and ignore the bottom, and in the other block to base their judgment only on the bottom halves and ignore the top. The order of the “attend top” and “attend bottom” blocks was also counterbalanced across subjects.

Subjects sat in a dimly lit, sound attenuating room 200 cm from a 21” CRT computer monitor. Prior to each block, subjects received nine practice trials with feedback. The faces in the first three practice trials were presented side by side for comparison, and remained on the screen until the response was made. The faces in the remaining six practice trials were presented sequentially, similar to the experimental trials. In the practice trials, the first face was presented for 360 milliseconds and the second face remained on the screen until the response was made. On all experimental trials the faces were presented sequentially. For the 192 total experimental trials, the first face was presented for 200 ms, followed by a 500 ms inter-stimulus interval, and the second face remained on the screen until the response. Subjects were asked to judge as quickly and accurately as possible whether the attended halves of the two faces were the same or different and were explicitly told to ignore the unattended half (the experimenter specified which half was to be attended prior to each block). Subjects used their right hand to press one button on a game pad to indicate the attended halves of the two faces were the same, and a different button to indicate that the attended halves were different. Accuracy and reaction times (RTs) were recorded. Short breaks between blocks were provided.

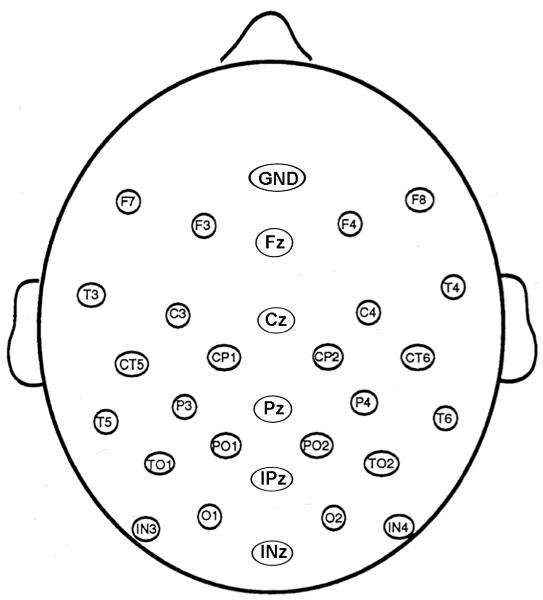

2.4. ERP Procedure and Data Acquisition Parameters

An appropriately fitting cap (ElectroCap, Int’l.) with tin electrodes sewn into it was placed on the participant’s head. Electroencephalographic (EEG) data were recorded throughout the task from 29 scalp electrodes in a montage, shown in Figure 2, composed of 10 sites from the International 10-20 system and an additional 19 sites interspersed between these to form a regular grid covering mostly the posterior portion of the head. An additional channel recorded from the right mastoid, and all channels were referenced to the left mastoid during recording. Vertical eye movements were monitored with one electrode under the left eye, also referenced to the left mastoid, and horizontal eye movements were recorded with a bipolar recording from single electrodes placed lateral to each eye. Impedances were maintained below 2 kΩ for mastoid electrodes, 5 kΩ for scalp electrodes, and 10 kΩ for face electrodes. The EEG from each channel was digitized at 250 Hz, recorded with a bandwidth of 0.1 to 100 Hz, and amplified 20,000 times. Data were low-pass filtered offline at 60 Hz. The EEG was divided into epochs time-locked to the presentation of the second face in each trial, extending from 100 ms before to 500 ms after stimulus onset. These epochs were then averaged according to alignment and attended half. Trials containing eye movement and/or muscle artifact were rejected using threshold-based software routines and by trial-by-trial visual inspection of the data. Approximately 5.7% of trials were lost during artifact rejection. ERP results reported below include only correct trials.
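The epoching and averaging steps can be sketched in Python with NumPy under explicit assumptions. This is not the software used in the study. Only the 250 Hz sampling rate and the -100 to 500 ms window come from the text. `epoch_and_average` is a hypothetical helper. Subtracting the mean of the pre-stimulus interval as a baseline is assumed. The 100 µV peak-to-peak rejection threshold is a placeholder, because the text does not report its threshold values and also relied on visual inspection.

```python
import numpy as np

FS = 250                       # sampling rate (Hz), from the text
PRE_MS, POST_MS = 100, 500     # epoch window around second-face onset, from the text
PRE = PRE_MS * FS // 1000      # 25 samples before onset
POST = POST_MS * FS // 1000    # 125 samples after onset


def epoch_and_average(eeg, onsets, keep, threshold_uv=100.0):
    """Average artifact-free epochs time-locked to stimulus onsets (hypothetical sketch).

    eeg: (n_channels, n_samples) array in microvolts.
    onsets: sample index of the second face on each trial.
    keep: one boolean per trial (e.g., correct response in the condition of interest).
    threshold_uv: placeholder peak-to-peak rejection criterion (not from the text).
    Returns the (n_channels, PRE + POST) average and the number of epochs kept.
    """
    epochs = []
    for onset, ok in zip(onsets, keep):
        if not ok or onset - PRE < 0 or onset + POST > eeg.shape[1]:
            continue  # skip unwanted trials and epochs that run off the recording
        ep = eeg[:, onset - PRE:onset + POST]
        ep = ep - ep[:, :PRE].mean(axis=1, keepdims=True)  # assumed baseline correction
        if np.ptp(ep, axis=1).max() > threshold_uv:
            continue  # reject if any channel exceeds the peak-to-peak threshold
        epochs.append(ep)
    if not epochs:
        raise ValueError("no epochs survived selection")
    return np.mean(epochs, axis=0), len(epochs)


# Usage with simulated noise: 29 channels, three trials, the third excluded.
rng = np.random.default_rng(0)
eeg = rng.normal(0.0, 5.0, size=(29, 10_000))
avg, n_kept = epoch_and_average(eeg, onsets=[500, 2000, 4000], keep=[True, True, False])
print(avg.shape, n_kept)
```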

Figure 2.

Montage of 29 scalp electrodes.

2.5. Data Analysis

Behavioral accuracy and reaction time (RT) were analyzed with mixed-model, repeated-measures ANOVAs with alignment (intact vs. misaligned), attended half (top vs. bottom), and trial type (attended halves the same vs. different) as within-subjects factors and group (deaf, hearing) as the between-subjects factor. Amplitudes and latencies of the ERP components of interest (P1, N170, P2, and N250; see Section 3.2) were analyzed at the selected electrodes with mixed-model, repeated-measures ANOVAs, with alignment, attended half, trial type, and hemisphere (left vs. right) as within-subjects factors and group as the between-subjects factor. For all dependent measures, additional ANOVAs were run within each of the two participant groups to examine group differences in main effects and interactions.

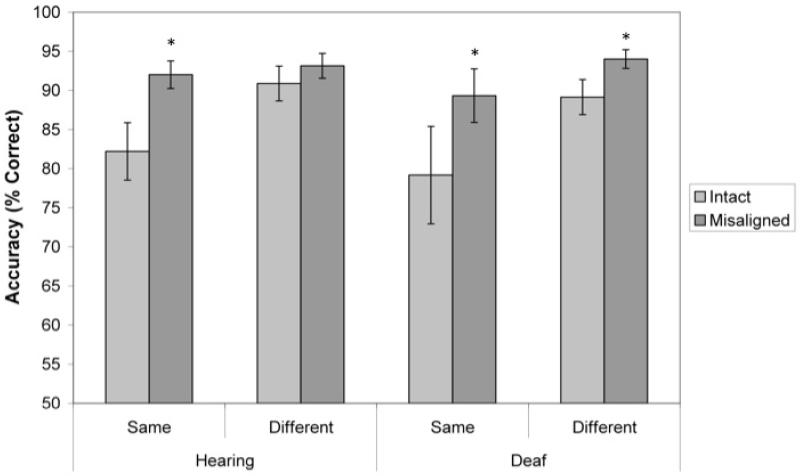

3. Results

3.1. Behavior

Means and standard errors of accuracies and RTs are presented in Tables 2 and 3, respectively. Holistic processing of composite stimuli should lead participants to make more errors and respond more slowly when the attended halves are actually the same, because the unattended halves of the faces always differed within each pair. These effects should be reduced by spatially misaligning the face halves. As expected, participants were faster (F (1, 22) = 28, p < .001) and more accurate (F (1, 22) = 22, p < .001) when judging misaligned faces than intact faces. These effects were also significant in separate analyses of the two groups for accuracy (Hearing: F(1,11)=17.314, p=0.002; Deaf: F(1,11)=9.392, p=0.011) and RT (Hearing: F(1,11)=20.380, p=0.001; Deaf: F(1,11)=10.087, p=0.009). Furthermore, an Alignment by Trial type interaction indicated that alignment of the face halves decreased accuracy specifically when the attended halves were the same (F (1, 22) = 5.4, p = .029; see Figure 3); for RT, this interaction was marginal (p = .07). Within-group analyses showed that the deaf group produced significant alignment effects for both trial types (same trials, p=0.010; different trials, p=0.009), while the hearing group showed significant alignment effects only for “same” trials (p=0.012; “different” trials, p=0.194).

Table 2.

Accuracies across conditions in hearing and deaf groups.

| Group | Attended half | Intact Mean (%) | Intact SE | Misaligned Mean (%) | Misaligned SE |
|---|---|---|---|---|---|
| Hearing | Attend Top | 85.50 | 2.53 | 91.75 | 1.29 |
| Hearing | Attend Bottom | 87.59 | 2.43 | 93.40 | 1.35 |
| Deaf | Attend Top | 81.86 | 3.59 | 89.41 | 1.88 |
| Deaf | Attend Bottom | 86.46 | 3.29 | 93.92 | 1.52 |

SE = Standard error of the mean

Table 3.

Reaction Times across conditions in hearing and deaf groups.

| Group | Attended half | Intact Mean (ms) | Intact SE | Misaligned Mean (ms) | Misaligned SE |
|---|---|---|---|---|---|
| Hearing | Attend Top | 943.12 | 56.89 | 788.58 | 45.93 |
| Hearing | Attend Bottom | 943.64 | 64.43 | 808.33 | 54.68 |
| Deaf | Attend Top | 936.55 | 90.09 | 755.68 | 49.53 |
| Deaf | Attend Bottom | 880.51 | 93.23 | 781.12 | 54.54 |

SE = Standard error of the mean

Figure 3.

Effects of misalignment and trial type on accuracy in hearing and deaf groups. Both hearing and deaf groups show higher accuracies for trials with misaligned than intact faces, especially when the attended halves are the same. Error bars indicate standard error of the mean. Asterisks indicate significant alignment effects (p<.05).

Overall accuracies were also higher when attending to the bottom than to the top half of the face (F (1, 22) = 6.7, p = .017), but within-group analyses showed that this effect was significant only for the deaf group (F(1,11)=7.657, p=0.018) and not for the hearing group (p = .3). Between-group differences in accuracy within each condition failed to reach significance.
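The size of these effects can be read off the cell means in Table 2 as simple difference scores. The Python sketch below computes them from the Table 2 means only. It is descriptive, not a re-analysis, and does not reproduce the ANOVA. Because Table 2 does not separate same and different trials, the differences are collapsed over trial type. `half_advantage` and `alignment_effect` are hypothetical helper names.

```python
# Cell means (% correct) from Table 2: {(group, half): (intact, misaligned)}
TABLE2 = {
    ("Hearing", "top"): (85.50, 91.75),
    ("Hearing", "bottom"): (87.59, 93.40),
    ("Deaf", "top"): (81.86, 89.41),
    ("Deaf", "bottom"): (86.46, 93.92),
}


def half_advantage(group):
    """Bottom minus top accuracy for (intact, misaligned); positive = bottom-half advantage."""
    top, bottom = TABLE2[(group, "top")], TABLE2[(group, "bottom")]
    return tuple(round(b - t, 2) for t, b in zip(top, bottom))


def alignment_effect(group, half):
    """Misaligned minus intact accuracy; positive = better performance when misaligned."""
    intact, misaligned = TABLE2[(group, half)]
    return round(misaligned - intact, 2)


for g in ("Hearing", "Deaf"):
    print(g, half_advantage(g), alignment_effect(g, "top"), alignment_effect(g, "bottom"))
# Hearing (2.09, 1.65) 6.25 5.81
# Deaf (4.6, 4.51) 7.55 7.46
```

The descriptive pattern matches the statistics reported above. Both groups gain several points from misalignment, and the bottom-half advantage is larger in the deaf group in both alignment conditions.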

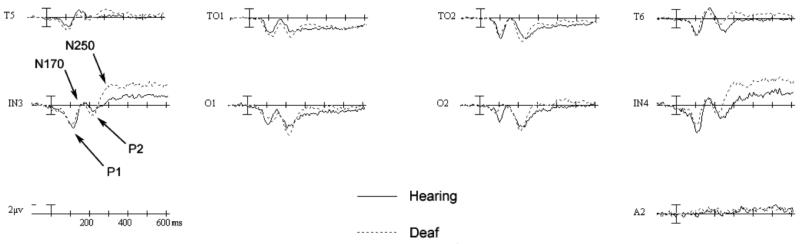

3.2. ERP Results

Peak amplitudes and latencies were measured for four ERP components of interest, which are labeled on Figure 4: the P1 (90-130ms post stimulus onset), N170 (140-200ms), P2 (200-275ms), and N250 (240-340ms). All peaks were measured in electrodes O1, O2, IN3, IN4, T5, T6, TO1, and TO2. Electrodes TO1 and TO2 are located 3 cm posterior to T5 and T6, respectively, on the same horizontal plane. Electrodes IN3 and IN4 are located 6 cm below the horizontal plane of T5 and T6, halfway between T5/O1 and T6/O2, respectively.
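Peak measurement within these windows can be sketched as a search for the most positive sample (P1, P2) or the most negative sample (N170, N250) in each component's window. The Python/NumPy sketch below assumes simple maximum/minimum picking on an electrode's averaged waveform. The text gives only the windows, not the exact peak-picking algorithm. `WINDOWS` and `measure_peak` are hypothetical names.

```python
import numpy as np

# Search windows (ms after stimulus onset) from the text, with expected polarity:
# +1 picks the most positive sample, -1 picks the most negative sample.
WINDOWS = {
    "P1": (90, 130, +1),
    "N170": (140, 200, -1),
    "P2": (200, 275, +1),
    "N250": (240, 340, -1),
}


def measure_peak(erp, times_ms, component):
    """Return (amplitude, latency_ms) of the most extreme sample in the component's window.

    erp: 1-D averaged waveform for one electrode (microvolts).
    times_ms: 1-D array of sample times relative to stimulus onset (ms).
    """
    lo, hi, polarity = WINDOWS[component]
    idx = np.flatnonzero((times_ms >= lo) & (times_ms <= hi))
    best = idx[np.argmax(polarity * erp[idx])]
    return float(erp[best]), float(times_ms[best])


# Usage: 250 Hz sampling gives 4 ms steps across the -100 to 500 ms epoch.
times = np.arange(-100, 500, 4)
erp = 3 * np.exp(-((times - 112) / 15) ** 2) - 6 * np.exp(-((times - 172) / 20) ** 2)
print(measure_peak(erp, times, "P1"), measure_peak(erp, times, "N170"))
```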

Figure 4.

Grand mean averages comparing hearing and deaf participants. ERPs shown are the averaged response to the second face in intact, attend top trials in lateral, posterior electrode sites. A2 = right mastoid.

Statistics of main effects are presented in tabular form, with alignment effects presented in Table 4, hemispheric asymmetries presented in Table 5, and attended half effects presented in Table 6. As with the behavioral results, the two participant groups were directly compared in a mixed-effects, repeated measures ANOVA and additional ANOVAs were performed within each of the two groups.

Table 4.

Alignment effects

| Peak | Measure | Both Groups | Hearing Group | Deaf Group |
|---|---|---|---|---|
| P1 | Amplitude | F(1,22)=4.723, p=0.041 | n.s. | n.s. |
| N170 | Amplitude | F(1,22)=41.342, p<0.001 | F(1,11)=35.162, p<0.001 | F(1,11)=16.584, p=0.002 |
| N170 | Latency | F(1,22)=21.439, p<0.001 | F(1,11)=6.765, p=0.025 | F(1,11)=14.834, p=0.003 |
| P2 | Amplitude | F(1,22)=4.953, p=0.037 | F(1,11)=3.909, p=0.074 | n.s. |
| N250 | Amplitude | F(1,22)=10.152, p=0.004 | F(1,11)=7.069, p=0.022 | F(1,11)=3.759, p=0.079 |
| N250 | Latency | F(1,22)=8.974, p=0.007 | n.s. | F(1,11)=9.507, p=0.010 |

n.s. = not significant. No significant alignment effects were observed for P1 or P2 latencies.

Table 5.

Right hemispheric asymmetry

| Peak | Measure | Both Groups | Hearing Group | Deaf Group |
|---|---|---|---|---|
| P1 | Amplitude | n.s. | F(1,11)=3.884, p=0.074 | n.s. |
| P1 | Latency | n.s. | n.s. | F(1,11)=11.711, p=0.006 (for bottom halves only) |
| N170 | Amplitude | F(1,22)=16.840, p<0.001 | F(1,11)=5.749, p=0.035 | F(1,11)=15.296, p=0.002 |
| N170 | Latency | F(1,22)=11.333, p=0.003 | n.s. | F(1,11)=12.093, p=0.005 |
| P2 | Amplitude | F(1,22)=3.990, p=0.058 | F(1,11)=4.442, p=0.059 | n.s. |

n.s. = Not significant. No significant hemisphere effects were observed for P2 latency, N250 amplitude, or N250 latency.

Table 6.

Attended half effects

| Peak | Measure | Both Groups | Hearing Group | Deaf Group |
|---|---|---|---|---|
| P1 | Latency | F(1,22)=6.329, p=0.020 | F(1,11)=9.815, p=0.010 | n.s. |
| N170 | Latency | F(1,22)=21.920, p<0.001 | F(1,11)=17.615, p=0.001 | F(1,11)=5.853, p=0.034 |
| P2 | Latency | F(1,22)=10.868, p=0.003 | n.s. | F(1,11)=11.284, p=0.006 |
| P2 | Amplitude | F(1,22)=6.507, p=0.018 | n.s. | F(1,11)=8.275, p=0.015 |
| N250 | Latency | F(1,22)=4.738, p=0.041 | n.s. | F(1,11)=11.337, p=0.006 |

n.s. = Not significant. No significant half effects were observed for P1, N170, and N250 amplitudes.

3.2.1. P1

Analysis of all participants indicated that the P1 was larger in response to misaligned than intact faces (see Table 4). However, this effect was significant only when the two groups were combined, not within either group individually. Therefore, alignment only weakly affected P1 amplitudes.

Hearing subjects showed marginally greater P1 amplitudes in the right hemisphere than in the left overall (see Table 5). This hemisphere effect was significant specifically during misaligned trials in which attention was directed to the bottom half of the face (Alignment × Half × Hemisphere interaction in the hearing group: F(1,11)=10.515, p=0.008; hemisphere effect for misaligned bottom halves: p=0.004). The deaf group did not show any effects of hemisphere, alignment, or attended half on P1 amplitude.

In the hearing group, the P1 was also faster when subjects attended to the top halves of faces than to the bottom halves (see Table 6 and Figure 5), especially when faces were misaligned (a marginal Alignment × Half interaction: F(1,11)=4.448, p=0.059) and during intact “same” trials (Alignment × Trial type interaction: F(1,11)=7.342, p=0.020). For the deaf group, the P1 was also faster for top halves than bottom halves, but only over the LH (Half × Hemisphere interaction: F(1,11)=11.711, p=0.006), and, unlike in the hearing group, this half effect did not vary by alignment.

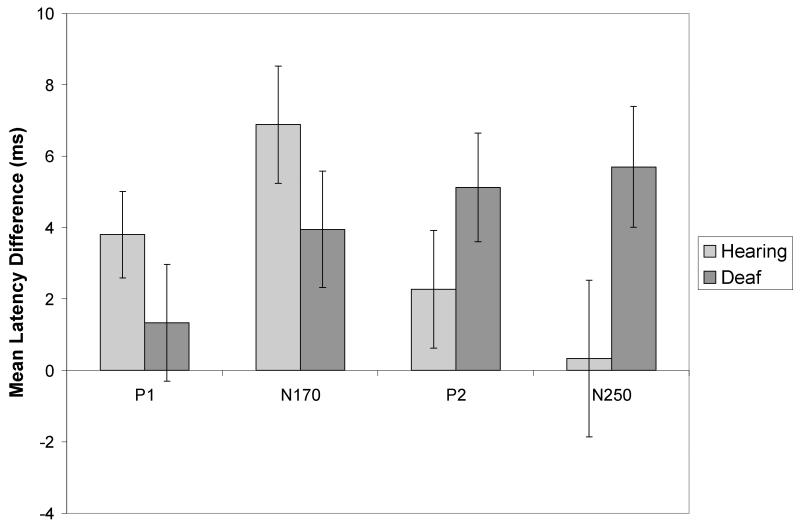

Figure 5.

Attended half effects in ERP components indicate processing advantages for the top half of the face at different perceptual stages in hearing and deaf groups. The mean latency differences shown are subtractions of the mean latency for the attend top conditions from the mean latency for the attend bottom conditions, such that positive values reflect a top half bias. Error bars are standard error of the mean.
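The sign convention in the Figure 5 caption can be made concrete with a short Python sketch. It is an illustrative assumption, not the authors' analysis code: the function names are hypothetical, the latencies are invented placeholders rather than data from this study, and the standard error is taken as the sample standard deviation divided by the square root of n.

```python
import math
from statistics import mean, stdev


def top_half_bias(attend_top_ms, attend_bottom_ms):
    """Per-participant latency difference: attend-bottom minus attend-top.

    Positive values mean the component peaked earlier when attention was
    directed to the top half (a top-half bias), as in the Figure 5 caption.
    """
    if len(attend_top_ms) != len(attend_bottom_ms):
        raise ValueError("need one attend-top and one attend-bottom latency per participant")
    return [b - t for t, b in zip(attend_top_ms, attend_bottom_ms)]


def mean_and_sem(values):
    """Group mean and standard error of the mean (sample SD / sqrt(n))."""
    n = len(values)
    if n < 2:
        raise ValueError("SEM needs at least two participants")
    return mean(values), stdev(values) / math.sqrt(n)


# Hypothetical N170 peak latencies (ms) for four participants.
# Illustrative only; these are NOT data from this study.
top = [158.0, 162.0, 160.0, 165.0]
bottom = [164.0, 166.0, 161.0, 171.0]

diffs = top_half_bias(top, bottom)  # one difference score per participant
m, sem = mean_and_sem(diffs)        # bar height and error bar for one group
```

Computing the difference within each participant before averaging means the error bar reflects variability in the bias itself rather than in absolute latencies.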

In sum, the P1 latencies were earlier for the top than the bottom halves overall in the hearing group, especially when the face halves were separated by misalignment. The effects of face half on P1 latencies in the deaf group were restricted to the left hemisphere and did not increase when the top half of the face was spatially separated from the bottom half. These results support an early processing advantage for the eye region that is stronger in the hearing group than the deaf group.

3.2.2. N170

The N170 for both groups was larger and appeared later when subjects were presented with misaligned rather than intact faces (see Table 4). Both groups also showed larger N170 amplitudes in the RH than the LH (see Table 5). When the two groups were combined, the N170 was also significantly faster in the RH than the LH, but this hemisphere effect was driven by the deaf group. Both groups also produced faster N170 latencies when attention was directed to the top of the face, relative to the bottom (see Table 6 and Figure 5).

The N170 response to the two trial types (same vs. different) varied by group. The deaf group showed a classic repetition effect for latencies, which were faster for “same” than “different” trials overall (F(1,11)=7.094, p=0.022). In contrast, the hearing group showed marginally slower latencies for “same” than “different” trials (the opposite of a typical repetition effect), but only over the right hemisphere and only when viewing misaligned faces (p=0.051). Finally, hearing participants produced a typical reduction in N170 amplitudes with repetition, but only when attending to the top half of the face (Half × Trial type interaction: F(1,11)=7.195, p=0.021). These results suggest that the overall patterns of holistic face perception are similar in the two groups at this stage of processing, with similar alignment effects, hemisphere asymmetries, and processing advantages for the eye region.

3.2.3. P2

The P2 was larger in response to misaligned than intact faces when both groups were combined (see Table 4). However, when the two groups were tested individually, this effect was marginally significant only for the hearing group. In addition, only the hearing group showed a marginal right hemisphere asymmetry in P2 amplitudes (see Table 5).

The deaf group showed unique effects of attended half on the P2, with larger amplitudes (F(1,11)=8.275, p=0.015) and faster latencies (see Table 6 and Figure 5) when attention was directed to the top half of the face than the bottom.

These results suggest that the processing advantage for the eyes may emerge later in processing in the deaf group than in the hearing group, and that the right hemisphere asymmetry may diminish in the deaf group after the N170.

3.2.4. N250

Misalignment of the face stimuli caused significant increases in amplitude of the N250 in the hearing group and similar but marginal increases in the deaf group (see Table 4). The deaf group showed an overall alignment effect on latency, with faster N250s for misaligned than intact stimuli. A similar latency alignment effect was found in the hearing group, but only over the right hemisphere (Alignment × hemisphere interaction: F(1,11)=5.656, p=0.037).

In addition, the latency of the N250 was affected by attended half only in the deaf group (see Figure 5). Specifically, it was faster for attend top than attend bottom conditions (see Table 6), especially during “different” trials (Half × Trial type interaction: F(1,11)=4.767, p=0.052).

Finally, the N250 was only marginally larger overall for deaf than hearing participants (p = .08).

3.2.5. Correlations between ERP measures and accuracy

The relationship between behavioral performance and ERPs was explored in correlational analyses. First, an index of the electrophysiological processing advantage for attending to the top half of the face was calculated by subtracting N170 latency recorded during attend top blocks from N170 latency recorded during attend bottom blocks; a higher score therefore indicated faster processing of the same composite stimulus when attention was devoted to the top half rather than the bottom half. This index was then correlated with percent correct on attend top blocks to examine whether the electrophysiological processing advantage is reflected in better behavioral outcomes. Participants who showed larger N170 processing advantages for the top half were also more accurate in misaligned attend top blocks (r=0.503, p=0.012). N170 half effects were also correlated with accuracies on “same” trials during intact (r=0.431, p=0.036) and misaligned (r=0.515, p=0.010) attend top blocks. These effects were driven by the deaf group, in large part because there was less variability in hearing participants’ accuracies. When the two groups were examined individually, these effects were significant or marginal for the deaf group (Intact top same: r=0.546, p=0.066; Misaligned top same: r=0.571, p=0.053; Misaligned top overall: r=0.701, p=0.011), but not for the hearing group (Intact top same: r=0.225, p>0.05; Misaligned top same: r=0.492, p>0.05; Misaligned top overall: r=0.026, p>0.05). Together, these results indicate that for deaf participants, larger N170 processing advantages for the top half were associated with higher accuracies during attend top blocks, especially when top halves were separated by misalignment.
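A minimal Python sketch of this kind of correlational analysis, assuming the sign convention of the Figure 5 caption (attend-bottom minus attend-top latency, so positive values mean a top-half advantage) and a standard Pearson correlation. The helper names and the per-participant values are hypothetical placeholders, not the authors' code or data. The last line checks one reported value: assuming n = 12 per group, as implied by the F(1,11) degrees of freedom, r = 0.701 corresponds to a t statistic with 10 degrees of freedom of about 3.11.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r, n):
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


# Hypothetical per-participant values (illustrative only, NOT study data):
# N170 top-half advantage in ms (attend-bottom minus attend-top latency)
# and percent correct on misaligned attend-top blocks.
advantage_ms = [6.0, 4.0, 1.0, 6.0, 2.0]
accuracy_pct = [92.0, 88.0, 75.0, 95.0, 80.0]
r = pearson_r(advantage_ms, accuracy_pct)

# Worked check on a reported value, assuming n = 12 deaf participants.
t_deaf = t_from_r(0.701, 12)
```

In practice, `scipy.stats.pearsonr(x, y)` returns both r and the two-tailed p-value; the hand-rolled version here only makes the arithmetic explicit.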

4. Discussion

This study was designed to examine effects of deafness and lifelong sign language use on holistic processing of face identity and on the relative salience of information in the top versus the bottom halves of the face. Participants completed a same/different task in which pairs of composite faces were presented and participants were asked to attend selectively to only the top or bottom half of the face. In addition to behavioral measures, ERPs were collected to examine effects of deafness and sign language use on neural signatures of face processing.

We hypothesized that both groups would show evidence of holistic processing in the composite task. Consistent with this idea, aligning top and bottom face halves interfered with both groups’ abilities to correctly identify when the attended halves of two faces were the same. However, alignment effects were larger in deaf participants than hearing participants, and this was true regardless of whether the attended halves were the same or different. In other words, even when both the top and bottom halves of the two faces within a trial were different, deaf signers were less accurate than hearing nonsigners in judging that the attended halves were different. Participants were not told that unattended halves of the stimuli differed on every trial, but they were told explicitly to ignore the unattended halves. Thus, deaf participants were less accurate than hearing participants in judging information in only one half of the face at a time, indicating greater effects of holistic binding of the differing unattended halves to the attended halves. These results lend support to the notion that deafness and sign language use do not attenuate holistic processing; on the contrary, they may strengthen the tendency to process faces as gestalt wholes.

This study was also designed to investigate whether the bottom half of the face was relatively more salient than the top for deaf individuals, as compared to hearing individuals. As in a previous report (Letourneau & Mitchell, 2008), alignment effects and overall accuracies in the hearing group did not vary when judging the top versus the bottom half. In contrast, deaf subjects were less accurate overall when judging the top halves of the composite faces compared to the bottom, and this was most pronounced when stimuli were presented as intact wholes, a condition that elicits holistic face processing. In other words, holistic processing bound the two halves of the face more strongly when attention was directed to the top. Why would holistic processing differ depending upon which half of the face is attended? We hypothesize that this pattern of results implies that the bottom half of the face is more salient for deaf signers than the top.

The hypothesis that the bottom half of the face is more salient for deaf signers is supported by previous research in our laboratory that documented increased attention as well as eye gaze to the bottom half of the face in deaf signers (Letourneau & Mitchell, 2011). In that study, eye gaze was recorded as deaf signers and hearing nonsigners viewed a single face for a full two seconds and judged either its identity or its emotional expression. Hearing nonsigners very rarely fixated on the bottom half, and tended to do so only when judging emotion. By contrast, deaf signers directed more fixations than hearing nonsigners to the bottom half of the face, generally the mouth region, regardless of the task. That study also assessed the relative salience of information in the top versus bottom halves by having subjects judge the identity or expression of isolated top and bottom face halves. Deaf signers and hearing nonsigners were similarly accurate in response to whole faces and isolated bottom halves, but deaf signers were less accurate when judging isolated top halves, especially for emotion. Thus, deaf signers devoted a significant portion of their fixations to the bottom half of whole faces, and their judgments, particularly of emotion, suffered when that information was absent. The current study demonstrates that these findings are observed not only with long stimulus exposures that encourage gaze shifts but also during a speeded-response task with short stimulus durations that do not allow for multiple fixations across the face. Because eye movements were recorded throughout the composite task in this study, we know that participants rarely made gaze shifts during the trials.

The present study also extends previous findings by documenting greater salience of information in the bottom half of the face in the absence of linguistic or emotional information. Our previous research both presented and required the identification of various emotional expressions (Letourneau & Mitchell, 2011). By contrast, the current study presented faces posing a neutral expression and required a simple same/different judgment, both manipulations that should have minimized the possibility that deaf signers assigned some linguistic value even to these emotionally and linguistically “neutral” stimuli. Together these results suggest that the increased salience of information in the bottom half of the face in deaf signers generalizes to a broader array of tasks and stimuli and is not dependent on the emotional or linguistic expressiveness of the face stimuli.

Electrophysiological results revealed both similarities and differences between the deaf and hearing groups. Misalignment of the face halves affected ERPs similarly for the deaf and hearing groups. In all subjects, spatial misalignment of face halves elicited larger and slower N170s, much like face inversion does. This result replicates previous findings from our laboratory and others (Jacques & Rossion, 2009, 2010; Kuefner, et al., 2010; Letourneau & Mitchell, 2008). These effects are hypothesized to arise because initial encoding of the face is a holistic process that is disrupted by spatial misalignment, requiring extra effort to “reassemble” the misaligned stimulus into an intact whole. The current findings suggest that initial structural encoding of the face is not affected by deafness or lifelong sign language use. Spatial misalignment also affected the N250 similarly for the two groups, but in this instance it elicited larger amplitudes and faster latencies. These effects have also previously been observed in an independent sample of hearing adults in our laboratory (Letourneau & Mitchell, 2008). The reduction of N250 latencies with misalignment paralleled the improvement in behavioral performance seen in response to misaligned faces.

We hypothesized that group differences in the salience of information in the top versus the bottom half of the face would be reflected in ERPs. Previous research documented a latency advantage for attending to the top in a composite task with a larger sample of hearing adults (Letourneau & Mitchell, 2008), and this effect was replicated in the current study. We predicted that this effect would not be observed in deaf signers; contrary to this prediction, attending to the top half of the face elicited reliably faster early ERP latencies than attending to the bottom in both groups. An index of this advantage was calculated by subtracting latencies recorded during attend top blocks from latencies recorded during attend bottom blocks. The deaf group showed sufficient variability in this index to yield a significant positive correlation with accuracy on attend-top trials. Thus, while all hearing subjects produced a large attend-top advantage in N170 latencies and high accuracy in the attend-top blocks, only some deaf subjects did.

The variability in the attend-top advantage for deaf signers could not be explained by age of exposure to ASL: two of the poorest performers, who had the smallest ERP top-half advantages, and two of the best performers, who had the largest advantages, were all at least second-generation deaf and had learned ASL natively. In addition, all deaf participants had been exposed to sign language by the time they were five years of age. The variability also could not be explained by level of hearing loss. Although audiograms were not obtained for the deaf sample, participants differed little in their self-reported degree of hearing loss. One possible explanation for the variability is experience with lipreading. While all the deaf signers reported that they communicated primarily by sign in their daily lives, it is unknown to what degree lipreading skill or practice may have varied in this sample. If lipreading increases attention to and processing of information in the bottom half of the face, it could affect behavioral performance on this task as well as the ERP latency advantage for attending to the top half of the face. Future studies contrasting the performance of “oral deaf” individuals, who rely exclusively on lipreading and speech, with that of deaf signers would shed light on the independent contributions of the two modes of communication to the distribution of attention across the top and bottom halves of the face.

A latency advantage for attending to the top half of the face extended later in the ERP epoch for deaf signers only. For them, both the P2 and N250 were faster when attention was directed to the top than the bottom. This finding is challenging to interpret. It is atypical, as it was not observed for hearing subjects, and it did not correlate with deaf signers’ behavioral data; rather, it was observed for all deaf subjects. It was not related to task difficulty, because conditions that elicit lower accuracies (in this instance, attending to the top) tend to elicit slower, not faster, latencies. Evidence from visual search paradigms indicates that faster ERP latencies can be linked to greater effort. In classic visual search paradigms, search for a simple feature is known to elicit faster and more accurate behavioral responses than search for a target defined by a conjunction of features, yet conjunctive targets elicit shorter P1 and N1 latencies than targets defined by a single feature (Lobaugh, Chevalier, et al 2005; Taylor, 2002). Thus, the neural response to a conjunctively defined target is facilitated by top-down binding of the multiple features, even though the behavioral responses are slower. The current results may similarly reflect effects of inhibition: faster P2 and N250 latencies may result from deaf signers expending additional effort to suppress attention to the bottom half of the face when attending to the top.

This study was also designed to test the hypothesis that deafness and sign language experience may reduce the RH asymmetry typically observed during face perception tasks. The importance of facial gestures in ASL may increase the involvement of LH language and face perception regions in a range of face processing tasks. Previous studies have documented bilateral hemispheric or visual field processing in deaf signers during the perception of expressive faces (McCullough, et al., 2005; Szelag & Wasilewski, 1992; Szelag, Wasilewski, et al 1992) or a right visual field (RVF) asymmetry (Vargha-Khadem, 1983), while other work has documented that laterality in this population depends more on the task than on the stimulus (Corina, 1989). We observed subtle differences in overall hemispheric asymmetry between deaf and hearing participants. Both groups produced the typical RH asymmetry in N170 amplitudes. However, the hearing group also produced marginal RH asymmetries in P1 and P2 amplitudes, while the deaf group produced no other amplitude asymmetries. On the other hand, deaf signers did produce faster P1 and N170 latencies in the RH than the LH, while hearing nonsigners produced no latency asymmetries. Thus, while RH amplitude asymmetries appeared in a greater number of ERP components, spanning a longer duration, in the hearing group than in the deaf group, the RH asymmetry in deaf signers may be manifest more in the speed of processing than in the magnitude of activity. These results suggest that the documented reduction in the right hemisphere/left visual field (RH/LVF) asymmetry for face processing in deaf signers is driven by the presence of linguistically relevant stimuli (McCullough, et al., 2005; Szelag & Wasilewski, 1992; Szelag, et al., 1992) or task demands (Corina, 1989). In the absence of a linguistic context, faces elicited an RH asymmetry that was similar but not identical to that observed in hearing nonsigners.

5. Summary/Conclusions

In the typically hearing population, the eyes have a special status in face processing (Emery, 2000). This special status does not mean that attention cannot be directed away from this region, but it does mean that when attention is directed toward the eyes, fast and efficient processes appear to be elicited. The current study demonstrates that this special status of the eye region may be altered by a lifetime of deafness and/or sign language use. Based on our previous work (Letourneau & Mitchell, 2011), we hypothesize that these perceptual and experiential factors might increase the salience of information in the bottom half of the face, resulting in deaf signers devoting more attention and eye gaze toward that region than hearing nonsigners. The current study shows that the bottom half of the face is more salient for deaf signers even when exposure to the face is extremely short and in the absence of gaze shifts. Furthermore, ERP findings from this study raise the possibility that deaf signers may expend additional effort to attend selectively to the top of the face, resulting in a unique pattern of neural activity. On the basis of this work and previous behavioral and eye tracking findings (Letourneau & Mitchell, 2011), we hypothesize that information in the bottom half of the face may increase in salience over developmental time as a product of a lifelong tendency to distribute relatively more fixations and attention to the bottom half of the face and less to the top.

The current study cannot address whether the increased attention and gaze to the bottom half of the face in deaf signers is driven by auditory deprivation or by ASL experience. Some face perception studies have compared deaf signers and hearing nonsigners with a third group of hearing adults who were native sign language users, born to deaf parents. This crucial third group allows for a separation of effects of sign language use from auditory deprivation itself. In a delayed match-to-sample procedure with two sample faces, deaf signers outperformed both hearing signers and nonsigners when detecting changes made to the mouth (McCullough & Emmorey, 1997). In contrast, both deaf and hearing signers performed slightly better than hearing nonsigners when detecting changes made to the eyes. These results from hearing signers suggest that ASL experience is associated with better discrimination of changes within the eye region while deafness itself is associated with improved discrimination of the mouth. Thus, the increased salience of information in the bottom half of the face in deaf signers may be due to auditory deprivation and may stem from the need to rely on the face for emotional and social information that is typically contained in vocal tone and affect, or from aspects of language perception that are not specific to ASL, including lipreading and other facial cues during conversation. If this is true, then deaf individuals who rely on oral communication should produce evidence of increased salience of the bottom of the face, as well. Further, if ASL experience does not drive attention and gaze to the bottom of the face, then the effect should not be observed in hearing native signers.

The role that lipreading may play in these processes is also unknown. All of the deaf participants in the current study relied primarily on ASL to communicate in their daily lives, but it is likely that many had formal or informal experience with lipreading as well. Studies of deaf individuals who do not know or use any form of sign language would help to clarify the relative impact that lipreading skills might have on face perception mechanisms. In either case, we hypothesize that this is a developmental effect; the need to extract more and/or different information from the face may impose pressure on the neurocognitive system devoted to face processing, causing it to assume additional functions that are normally subsumed by other systems (Mitchell, 2008; Mitchell & Maslin, 2007). These “functional pressures” may induce changes in the behavioral and neural organization of face processing that highlight its inherent plasticity.

References

- Agrafiotis D, Canagarajah N, Bull DR, Dye M, Twyford H, Kyle J, et al. Optimised sign language video coding based on eye-tracking analysis. Visual Communications and Image Processing. 2003;5150:1244–1252.

- Baker-Shenk C. The facial behavior of deaf signers: evidence of a complex language. Am Ann Deaf. 1985;130(4):297–304. doi: 10.1353/aad.2012.0963.

- Bellugi U, O’Grady L, Lillo-Martin D, O’Grady Hynes M, van Hoek K, Corina D. Enhancement of spatial cognition in deaf children. In: Volterra V, Erting CJ, editors. From Gesture to Language in Hearing and Deaf Children. Springer-Verlag; New York: 1990. pp. 278–298.

- Bettger J, Emmorey K, McCullough S, Bellugi U. Enhanced facial discrimination: Effects of expertise with American Sign Language. Journal of Deaf Studies and Deaf Education. 1997;2(4):223–233. doi: 10.1093/oxfordjournals.deafed.a014328.

- Buchan JN, Pare M, Munhall KG. Spatial statistics of gaze fixations during dynamic face processing. Soc Neurosci. 2007;2(1):1–13. doi: 10.1080/17470910601043644.

- Corina DP. Recognition of affective and noncanonical linguistic facial expressions in hearing and deaf subjects. Brain & Cognition. 1989;9(2):227–237. doi: 10.1016/0278-2626(89)90032-8.

- Corina DP, Bellugi U, Reilly J. Neuropsychological studies of linguistic and affective facial expressions in deaf signers. Lang Speech. 1999;42(Pt 2-3):307–331. doi: 10.1177/00238309990420020801.

- De Filippo CL, Lansing CR. Eye Fixations of Deaf and Hearing Observers in Simultaneous Communication Perception. Ear and Hearing. 2006;27(4):331–352. doi: 10.1097/01.aud.0000226248.45263.ad.

- Emery NJ. The eyes have it: the neuroethology, function and evolution of social gaze. Neuroscience & Biobehavioral Reviews. 2000;24(6):581–604. doi: 10.1016/s0149-7634(00)00025-7.

- Emmorey K, Thompson R, Colvin R. Eye Gaze During Comprehension of American Sign Language by Native and Beginning Signers. Journal of Deaf Studies and Deaf Education. 2009;14(2):237–243. doi: 10.1093/deafed/enn037.

- Gauthier I, Bukach C. Should we reject the expertise hypothesis? Cognition. 2007;103(2):322–330. doi: 10.1016/j.cognition.2006.05.003.

- Gauthier I, Nelson CA. The development of face expertise. Current Opinion in Neurobiology. 2001;11(2):219–224. doi: 10.1016/s0959-4388(00)00200-2.

- Henderson JM, Williams CC, Falk RJ. Eye movements are functional during face learning. Memory & Cognition. 2005;33(1):98–106. doi: 10.3758/bf03195300.

- Hole GJ. Configurational factors in the perception of unfamiliar faces. Perception. 1994;23(1):65–74. doi: 10.1068/p230065.

- Hole GJ, George PA, Dunsmore V. Evidence for holistic processing of faces viewed as photographic negatives. Perception. 1999;28(3):341–359. doi: 10.1068/p2622.

- Jacques C, Rossion B. The initial representation of individual faces in the right occipito-temporal cortex is holistic: Electrophysiological evidence from the composite face illusion. Journal of Vision. 2009;9(6). doi: 10.1167/9.6.8.

- Jacques C, Rossion B. Misaligning face halves increases and delays the N170 specifically for upright faces: Implications for the nature of early face representations. Brain Research. 2010;1318:96–109. doi: 10.1016/j.brainres.2009.12.070.

- Janik SW, Wellens AR, Goldberg ML, Dell’Osso LF. Eyes as the center of focus in the visual examination of human faces. Perceptual & Motor Skills. 1978;47(3 Pt 1):857–858. doi: 10.2466/pms.1978.47.3.857.

- Kuefner D, Jacques C, Prieto EA, Rossion B. Electrophysiological correlates of the composite face illusion: Disentangling perceptual and decisional components of holistic face processing in the human brain. Brain and Cognition. 2010;74(3):225–238. doi: 10.1016/j.bandc.2010.08.001.

- Le Grand R, Mondloch C, Maurer D, Brent H. Impairment in holistic face processing following early visual deprivation. Psychological Science. 2004;15(11):762–768. doi: 10.1111/j.0956-7976.2004.00753.x.

- Letourneau SM, Mitchell TV. Behavioral and ERP measures of holistic face processing in a composite task. Brain and Cognition. 2008;67(2):234–245. doi: 10.1016/j.bandc.2008.01.007.

- Letourneau SM, Mitchell TV. Gaze patterns during identity and emotion judgments in hearing adults and deaf users of American Sign Language. Perception. 2011;40(5):563–575. doi: 10.1068/p6858.

- Lobaugh NJ, Chevalier H, Batty M, Taylor MJ. Accelerated and amplified neural responses in visual discrimination: Two features are processed faster than one. NeuroImage. 2005;26(4):986–995. doi: 10.1016/j.neuroimage.2005.03.007.

- McCullough S, Emmorey K. Face processing by deaf ASL signers: evidence for expertise in distinguishing local features. Journal of Deaf Studies & Deaf Education. 1997;2:212–222. doi: 10.1093/oxfordjournals.deafed.a014327.

- McCullough S, Emmorey K, Sereno M. Neural organization for recognition of grammatical and emotional facial expressions in deaf ASL signers and hearing nonsigners. Cognitive Brain Research. 2005;22(2):193–203. doi: 10.1016/j.cogbrainres.2004.08.012.

- McKelvie SJ. The role of eyes and mouth in the memory of a face. The American Journal of Psychology. 1976;89(2):311–323.

- Mitchell TV. Cross-modal plasticity in development: the case of deafness. In: Nelson CA, Luciana M, editors. Handbook of Developmental Cognitive Neuroscience. 2nd Edition. MIT Press; Cambridge, MA: 2008. pp. 439–452.

- Mitchell TV, Maslin MT. How vision matters for individuals with hearing loss. International Journal of Audiology. 2007;46:500–511. doi: 10.1080/14992020701383050.

- Muir LJ, Richardson IE. Perception of sign language and its application to visual communications for deaf people. J Deaf Stud Deaf Educ. 2005;10(4):390–401. doi: 10.1093/deafed/eni037.

- Nelson CA. The development and neural bases of face recognition. Infant and Child Development. 2001;10(1-2):3–18. doi: 10.1002/icd.239.

- O’Donnell C, Bruce V. Familiarisation with faces selectively enhances sensitivity to changes made to the eyes. Perception. 2001;30(6):755–764. doi: 10.1068/p3027.

- Schwarzer G, Huber S, Dummler T. Gaze behavior in analytical and holistic face processing. Memory & Cognition. 2005;33(2):344–354. doi: 10.3758/bf03195322.

- Schyns PG, Bonnar L, Gosselin F. Show me the features! Understanding recognition from the use of visual information. Psychol Sci. 2002;13(5):402–409. doi: 10.1111/1467-9280.00472.

- Siple P. Visual constraints for sign language communication. Sign Language Studies. 1978;19:95–110.

- Szelag E, Wasilewski R. The effect of congenital deafness on cerebral asymmetry in the perception of emotional and non-emotional faces. Acta Psychologica. 1992;79(1):45–57. doi: 10.1016/0001-6918(91)90072-8.

- Szelag E, Wasilewski R, Fersten E. Hemispheric differences in the perceptions of words and faces in deaf and hearing children. Scandinavian Journal of Psychology. 1992;33(1):1–11. doi: 10.1111/j.1467-9450.1992.tb00807.x.

- Tanaka JW, Farah MJ. Second-order relational properties and the inversion effect: testing a theory of face perception. Percept Psychophys. 1991;50(4):367–372. doi: 10.3758/bf03212229.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. Quarterly Journal of Experimental Psychology: Human Experimental Psychology. 1993;46A(2):225–245. doi: 10.1080/14640749308401045.

- Tarr MJ, Gauthier I. FFA: a flexible fusiform area for subordinate-level visual processing automatized by expertise. Nat Neurosci. 2000;3(8):764–769. doi: 10.1038/77666.

- Taylor MJ. Non-spatial attentional effects on P1. Clinical Neurophysiology. 2002;113(12):1903–1908. doi: 10.1016/s1388-2457(02)00309-7.

- Vargha-Khadem F. Visual field asymmetries in congenitally deaf and hearing children. British Journal of Developmental Psychology. 1983;1(4):375–387.

- Watanabe K, Matsuda T, Nishioka T, Namatame M. Eye Gaze during Observation of Static Faces in Deaf People. PLoS ONE. 2011;6(2):e16919. doi: 10.1371/journal.pone.0016919.

- Young AW, Hellawell D, Hay DC. Configurational information in face perception. Perception. 1987;16(6):747–759. doi: 10.1068/p160747.