Abstract

Computer Decision Support Tools (CDSTs) can support clinicians at various stages of the care process and improve healthcare, but implementing these tools has proven difficult. In this study we examine the need for, the use of, and the barriers and facilitators to the use of a CDST from a human factors perspective. The results show that there is a need for CDSTs and that physicians do use well-developed CDSTs. However, there are also barriers to their use, such as usability issues and difficulty fitting the tools into the clinical workflow.

Keywords: Health Information Technology, Computer Decision Support Tool, Usefulness, Usability, Workflow

1. Introduction

Health information technology shows great promise for improving the efficiency, quality, and safety of medical care. Electronic health records (EHRs) have the potential to facilitate patient care and benefit clinicians by improving access to information at various service points within the system. Computerized provider order entry (CPOE) with clinical decision support tools (CDSTs) has been shown to decrease medication ordering errors [1]. CDSTs take several forms, such as computerized alerts and reminders, clinical guidelines, order sets, patient data reports and dashboards, documentation templates, diagnostic support, and clinical workflow tools.

CDSTs can support clinicians at various stages of the care process, from preventive care through diagnosis and treatment to monitoring and follow-up, but the results of CDST implementation have been mixed. CDSTs have been found to improve measures of clinician performance in the diagnosis [2, 3], prevention, and management [4] of a number of different health problems. CDST systems can also improve clinical practice and prevent adverse drug events [5]. Despite these benefits, there are many barriers to successful CDST implementation [6, 7]. Although many different CDSTs exist in health care, most have not been implemented successfully in clinical practice [6]. Beyond the intrinsic characteristics of the CDST itself, incorporation and integration into the clinical workflow remain among the largest barriers to success [8].

In this study we examine a CDST in family practices from a human factors perspective. We examine the need for a CDST that assesses the risk of a cardiac event in the next 10 years; we assess the current use of such tools; and we examine barriers, facilitators, and possible improvements for a specific tool for assessing cardiac risk, HeartDecision (HD).

HD is an easy-to-use online assessment tool, available both as a stand-alone version and as part of an EHR system. The tool consists of 6 web pages: (1) a Data Entry Page (automatically populated when HD is used as part of the EHR); (2) a Risk Page that displays the risk of a cardiac event in the next 10 years; (3) a Goal Page that summarizes the specific risks (e.g., high triglycerides) and possible causes of these risks (e.g., elevated triglycerides are associated with elevated body weight, a diet high in carbohydrates, including alcohol, and genetic disorders); (4) an Ideal Graph Page that graphically represents the risk over the next 10 years and allows several of the variables used to calculate the risk (e.g., blood pressure, triglycerides) to be manipulated; (5) a Hand Out Screen on which several hand-outs, such as information on fish oil capsules, can be selected, printed, and handed to the patient; and (6) a Summary Page that summarizes the information for patient and physician.

2. Methods

2.1. Setting

The study was performed with the aid of the Wisconsin Research and Education Network (WREN), a network of primary care clinicians in the state of Wisconsin; the Department of Family Medicine (DFM); and the Department of Medicine (DOM) at the University of Wisconsin. WREN consists of more than 40 practices and over 100 clinicians in 24 communities, from 17 different healthcare organizations distributed across Wisconsin in a pattern that closely mirrors the distribution of Wisconsin’s population.

2.2. Design

Data were collected in a cross-sectional design, using a time study, observations, and interviews during a standardized patient visit in the clinics, together with a web-based questionnaire. Usage data were also collected but are not reported in this paper.

2.3. Sample

The time study, observation, and interview data were collected in 8 clinics of the WREN network. Questionnaire data are being collected in the DFM and the DOM. In this paper we use preliminary data from the 130 physicians who have completed the survey (response rate 31%): 78 faculty, 33 residents, 13 interns, and 5 others. Questionnaire data collection is still ongoing.
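The number of physicians invited to the survey is not reported, only the 130 respondents and the 31% response rate. As a rough consistency check, the sketch below assumes the rate was computed as respondents divided by invitees and rounded to the nearest whole percent, and lists every invitation count compatible with both reported figures. The function name `consistent_invitation_counts` is hypothetical and not part of the study.

```python
def consistent_invitation_counts(respondents: int, rate_pct: int) -> list[int]:
    """List invitation counts n for which respondents / n rounds to rate_pct %.

    Assumption (not stated in the paper): the reported response rate is
    respondents / invited, rounded to the nearest whole percent.
    """
    # Any rate that rounds to 1% or more needs n <= 200 * respondents,
    # so this range covers every candidate invitation count.
    return [
        n
        for n in range(respondents, 200 * respondents + 1)
        if round(100 * respondents / n) == rate_pct
    ]


counts = consistent_invitation_counts(130, 31)
print(counts[0], counts[-1])  # 413 426
```

Under that rounding assumption, the survey would have gone to somewhere between 413 and 426 physicians; the actual figure is not given.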

2.4. Data collection instruments

A stopwatch was used to measure the time physicians spent on the different pages of the tool. Physicians were observed by both a human factors specialist and a medical specialist. At the end of the standardized patient visit, the physicians were asked several questions about their use of the tool.

2.4.1. Questionnaire

We developed a questionnaire based partly on existing questionnaires that have been shown to be valid and reliable in previous research [9, 10], and partly on the results of the observations and interviews, to collect additional information about the need for such tools, use of the tool, barriers to and facilitators of its use, and possible improvements.

3. Results

3.1 Time study

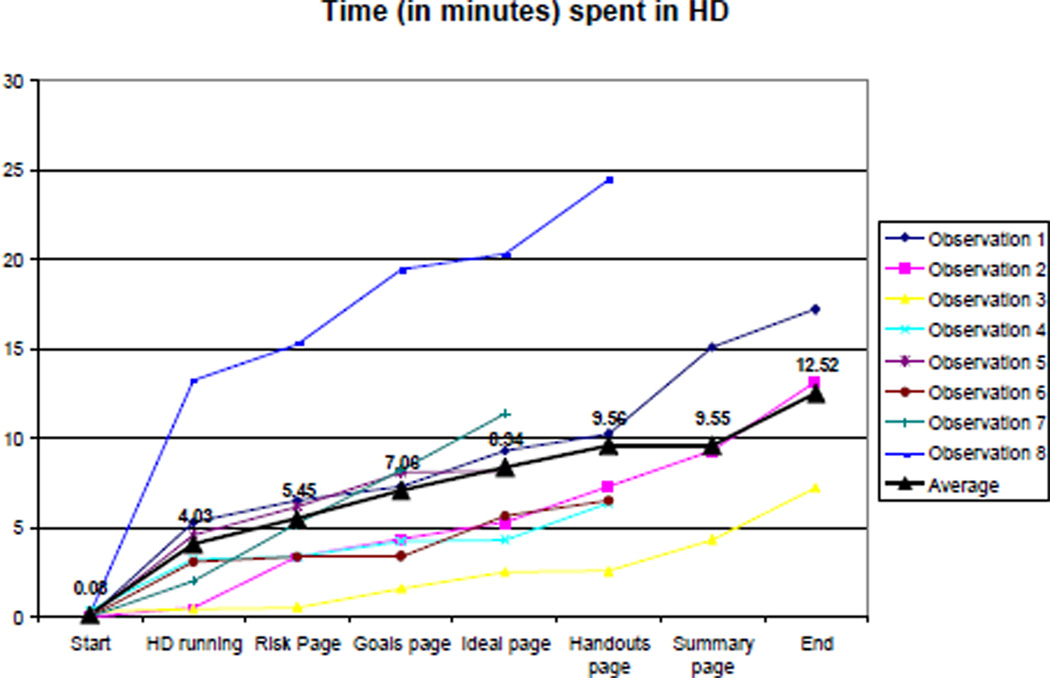

Results of the time study in the 8 clinics are shown in Figure 1. During the standardized patient visit, the physicians spent on average nearly 13 minutes using the tool.

Figure 1. Results of the time study

3.2. Observation and Interviews

Most primary care physicians found the tool easy to use and apparently had few problems navigating it. They commented on aspects of the tool that they liked and did not like: “It’s useful. The graphic display I think is useful. It is a little slow. I mean, we had to wait maybe 15, 20 seconds for it to come up. It’s very nice that it sort of incorporates the existing values, kind of, you know, so I don’t have to re-enter data”. “Each time I use it I discover sort of new things about it, which is, is kind of neat, so I think it's one of those things you get as much out of it as you put into it. You know, the more, the more you work with, with it and with patients using it, you kind of just go wow”.

However, physicians also described barriers to using the program: “Ninety percent easy and then the 10% that wasn’t easy being things like, if I wanted to go back and put in a cholesterol level of 300, I didn’t quite know how to do that”; “Yes. I mean that is what I have found is usually a tax on more time”; and “So, the one part is the data. Why can’t the blood sugar just flow in? Why do I have to go look for it? Okay, that drives me a little crazy”.

3.3. Questionnaire

Most respondents use the HD tool, either as part of the EHR or online (see Table 1; respondents could check more than one answer). Twenty-nine percent use another online tool. Some respondents calculate the risks on paper (7%), and others never calculate these risks (15%).

Table 1.

Do you regularly calculate your patient’s cardiovascular risk manually or with the aid of an online tool (Please check all that apply)?

| Response | Respondents |
| --- | --- |
| No | 15% |
| Yes, manually on paper | 7% |
| Yes, with an online tool other than HeartDecision | 29% |
| Yes, with the HD tool as part of the EHR | 53% |
| Yes, with the HD online (website) | 8% |
| Other | 2% |
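Because respondents could check all answers that apply, the percentages in Table 1 are not mutually exclusive and sum to more than 100%. The sketch below, which assumes every percentage is taken over all respondents, checks that total and bounds the share who use HD in either form. The overlap between the EHR and website groups is not reported, so only a range can be derived.

```python
# Table 1 percentages as reported (multiple answers allowed).
table1 = {
    "No": 15,
    "Yes, manually on paper": 7,
    "Yes, with an online tool other than HeartDecision": 29,
    "Yes, with the HD tool as part of the EHR": 53,
    "Yes, with the HD online (website)": 8,
    "Other": 2,
}

# The total exceeds 100 because answers overlap.
total = sum(table1.values())

hd_ehr = table1["Yes, with the HD tool as part of the EHR"]
hd_web = table1["Yes, with the HD online (website)"]

# Share using HD in either form: the union of two overlapping groups lies
# between the larger group (complete overlap) and their sum (no overlap).
hd_any_min = max(hd_ehr, hd_web)
hd_any_max = min(hd_ehr + hd_web, 100)

print(total, hd_any_min, hd_any_max)  # 114 53 61
```

Even with complete overlap, at least 53% of respondents use HD, which is consistent with the statement that most respondents use the tool.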

Many respondents use the tool relatively frequently: 42% use it at least 2–3 times per month, although 38% never use it (see Table 2).

Table 2.

How frequently do you use the HeartDecision tool?

| Frequency | Respondents |
| --- | --- |
| Never | 38% |
| Less than once a month | 14% |
| Once a month | 7% |
| 2–3 Times a month | 18% |
| Once a week | 8% |
| 2–3 Times a week | 14% |
| Daily | 2% |
| Total | 100% |
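The 42% figure for use at least 2–3 times per month is the sum of the four most frequent categories in Table 2. The sketch below reproduces that arithmetic from the reported percentages; it also shows that the individual rows add up to 101%, presumably because each percentage was rounded separately.

```python
# Table 2 percentages as reported, ordered from least to most frequent use.
table2 = [
    ("Never", 38),
    ("Less than once a month", 14),
    ("Once a month", 7),
    ("2–3 Times a month", 18),
    ("Once a week", 8),
    ("2–3 Times a week", 14),
    ("Daily", 2),
]

labels = [label for label, _ in table2]

# Every category from "2–3 Times a month" onward counts as
# "at least 2–3 times per month".
start = labels.index("2–3 Times a month")
at_least_2_3_per_month = sum(pct for _, pct in table2[start:])

# Rows of a rounded percentage table need not add up to exactly 100.
row_sum = sum(pct for _, pct in table2)

print(at_least_2_3_per_month, row_sum)  # 42 101
```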

3.3.1. Facilitators, barriers and improvements

The questionnaire included 35 questions about possible facilitators of and barriers to CDST use and possible improvements to the tool. Overall, respondents like the tool, especially the fact that data are automatically populated (Table 3). The biggest barriers to using the tool are that physicians sometimes have to go back to the EHR for additional data, that they experience time pressure when using the tool, that the tool is occasionally delayed, and that they forget to use it (Table 4). Finally, respondents suggested improvements (Table 5), such as alerts, the ability to print the information on the different web pages, and treatment or medication recommendations.

Table 3.

Facilitators, average score on a scale from 1 (strongly disagree) to 5 (strongly agree)

| Facilitator | Mean score |
| --- | --- |
| 1. It is convenient that all patient data are automatically populated into the tool. | 4.81 |
| 2. I understand the purpose of the HeartDecision tool. | 4.76 |
| 3. I like using the HeartDecision tool. | 4.73 |
| 4. I like that you can use the (ideal) graph to show patients what happens if for example they reduce their cholesterol | 4.54 |
| 5. I like the hand-outs (information on diets, exercise, smoking cessation, etc.) in the tool. | 4.41 |

Table 4.

Barriers, average score on a scale from 1 (strongly disagree) to 5 (strongly agree)

| Barrier | Mean score |
| --- | --- |
| 1. I often have to go back to the EHR to access the patient's list of medications. | 3.48 |
| 2. I feel like I am under time pressure when I assess a patient with the HeartDecision tool. | 3.25 |
| 3. Often I have to go back to the EHR to obtain additional information when I use the tool. | 3.17 |
| 4. When I open up the tool, the tool is occasionally delayed. | 3.11 |
| 5. I often forget to use this tool with appropriate patients. | 2.90 |

Table 5.

Improvements, average score on a scale from 1 (strongly disagree) to 5 (strongly agree)

| Suggested improvement | Mean score |
| --- | --- |
| 1. It would be helpful to print out the information on the Summary Page. | 4.20 |
| 2. It would be helpful if the (Ideal) Graph could be printed. | 4.10 |
| 3. It would be helpful to add medication recommendations to treat cholesterol in patients with certain risk in the tool. | 4.05 |
| 4. There should be an alert in the EHR to notify me that a patient has an increased risk of a cardiac event. | 3.50 |
| 5. It would be helpful if the EHR had an alert noting a high risk of a cardiac event, or cholesterol above goal. | 3.50 |

4. Discussion

The results of this study show that there is a need for Computer Decision Support Tools such as HeartDecision (HD). Most physicians in the sample calculate these risks, either on paper or with a tool such as HD, and most respondents have used the HD tool, either as part of the EHR or in its online version. The time study showed that physicians spent on average 13 minutes using the tool, which is too long for a regular patient visit, since such visits last 10 minutes on average [11].

The observations showed that, overall, physicians had little trouble using the tool, although they were not always sure about all of the functionality on the different web pages. The interviews confirmed the observations. A large majority of physicians indicated that they liked using the tool (98%) and that it was easy to use (94%). However, they also pointed out barriers to using the tool, such as the lack of a list of the patient's current medications and the need to go back to the EHR to find additional data.

Based on the observations and interviews, we developed a questionnaire to quantify the results of the small-scale (N = 8) observations and interviews. The questionnaire results confirmed most of the findings of the observations and interviews.

Overall, the questionnaire results show that most respondents liked using the tool. The tool is relatively easy to use and avoids duplicate data entry, because data are automatically populated from the EHR. However, there are also several barriers to using the tool. One main barrier is that the tool is relatively time-consuming and is therefore difficult to fit into a physician’s workflow. Second, although data are automatically populated from the EHR, physicians sometimes need to go back to obtain additional information. Third, the tool is occasionally delayed. Finally, respondents made several suggestions for improvement, such as making the different pages of the tool printable, providing automated alerts in the EHR when a patient has a certain risk of a cardiac event, and adding treatment recommendations.

4.1. Improvements

One of the main barriers to use of the tool is that it is relatively time-consuming: physicians have only about 10 minutes to see a patient, unless it is a follow-up visit. Therefore, based on the results of this study, the designers of the tool have decided to redesign it. The redesigned tool will be simpler, and the necessary information will be displayed on a single page. The underlying structure of the tool will not change dramatically: physicians will still have access to the different pages, but only if they choose to navigate to them by clicking a link on the main page. More recommendations will also be added to the tool.

4.2. Study limitations

The sample size for the time study, the observations, and the interviews was small (N = 8). However, by using a questionnaire to quantify the qualitative findings, we were able to confirm most of the results.

The response rate for the questionnaire was relatively low (31%), particularly in the DFM (24%). The literature shows that physician response rates in survey research are often low. Based on a review of the literature, Asch et al. [12] calculated an average response rate of 54% across 68 such studies. A second review by Asch et al. [13] showed that response rates are especially low for staff-model HMO physicians and solo-practice physicians (38% and 35%, respectively).

4.3. Conclusion

The results of this study show that Computer Decision Support Tools can play a role in healthcare, provided that they are adapted to the needs of their users. The tools need to be useful and easy to use, and they have to fit into physicians’ workflow. In this study, medical specialists and human factors specialists teamed up to test a tool, and based on the results, the tool will be redesigned.

Acknowledgements

This research was made possible by funding from the Department of Medicine at UW-Madison. PI: Jon Keevil, MD. We would like to thank the Wisconsin Research & Education Network (WREN) and the clinicians who participated in this study. This investigation was supported by the National Institutes of Health, under Ruth L. Kirschstein National Research Service Award T32 HL 07936 from the National Heart, Lung, and Blood Institute to the University of Wisconsin-Madison Cardiovascular Research Center. The project described was supported by the Clinical and Translational Science Award (CTSA) program through the National Center for Research Resources (NCRR) grant 1UL1RR025011. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

References

1. Ammenwerth E, et al. The effect of electronic prescribing on medication errors and adverse drug events: a systematic review. J Am Med Inform Assoc. 2008;15:585–600. doi: 10.1197/jamia.M2667.

2. Selker HP, et al. Use of the acute cardiac ischemia time-insensitive predictive instrument (ACI-TIPI) to assist with triage of patients with chest pain or other symptoms suggestive of acute cardiac ischemia. A multicenter, controlled clinical trial. Ann Intern Med. 1998 Dec 1;129:845–855. doi: 10.7326/0003-4819-129-11_part_1-199812010-00002.

3. Holt TA, et al. Automated electronic reminders to facilitate primary cardiovascular disease prevention: randomised controlled trial. Br J Gen Pract. 2010 Apr;60:e137–e143. doi: 10.3399/bjgp10X483904.

4. Demakis JG, et al. Improving residents' compliance with standards of ambulatory care: results from the VA Cooperative Study on Computerized Reminders. JAMA. 2000 Sep 20;284:1411–1416. doi: 10.1001/jama.284.11.1411.

5. Kawamoto K, et al. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005 Apr 2;330:765. doi: 10.1136/bmj.38398.500764.8F.

6. Payne TH. Computer decision support systems. Chest. 2000 Aug;118:47S–52S. doi: 10.1378/chest.118.2_suppl.47s.

7. Hoonakker PLT, et al. Drug safety alerts override from a human factors perspective. In: Healthcare Systems Ergonomics and Patient Safety (HEPS) 2011. Oviedo, Spain; 2011. pp. 367–371.

8. Bertoni AG, et al. Impact of a multifaceted intervention on cholesterol management in primary care practices: guideline adherence for heart health randomized trial. Arch Intern Med. 2009 Apr 13;169:678–686. doi: 10.1001/archinternmed.2009.44.

9. Lee F, et al. Implementation of physician order entry: user satisfaction and self-reported usage patterns. J Am Med Inform Assoc. 1996 Jan 1;3:42–55. doi: 10.1136/jamia.1996.96342648.

10. Hoonakker P, et al. Measurement of CPOE end-user satisfaction among ICU physicians and nurses. Applied Clinical Informatics. 2010;1:268–285. doi: 10.4338/ACI-2010-03-RA-0020.

11. Yawn B, et al. Time use during acute and chronic illness visits to a family physician. Family Practice. 2003 Aug 1;20:474–477. doi: 10.1093/fampra/cmg425.

12. Asch DA, et al. Response rates to mail surveys published in medical journals. Journal of Clinical Epidemiology. 1997;50:1129–1136. doi: 10.1016/s0895-4356(97)00126-1.

13. Asch DA, et al. Problems in recruiting community-based physicians for health services research. Journal of General Internal Medicine. 2000;15:591–599. doi: 10.1046/j.1525-1497.2000.02329.x.