Abstract

Background

The federal government is promoting adoption of electronic health records (EHRs) through financial incentives for EHR use and implementation support provided by regional extension centers. Small practices have been slow to adopt EHRs.

Objectives

Our objective was to measure time to EHR implementation and identify factors associated with successful implementation in small practices receiving financial incentives and implementation support. This study is unique in exploiting quantitative implementation time data collected prospectively as part of routine project management.

Methods

This mixed-methods study includes interviews of key informants and a cohort study of 544 practices that had worked with the Primary Care Information Project (PCIP), a publicly funded organization that since 2007 has subsidized EHRs and provided implementation support similar to that supplied by the new regional extension centers. Data from a project management database were used for a cohort study to assess time to implementation and predictors of implementation success.

Results

Four hundred and thirty practices (79%) implemented EHRs within the analysis period, with a median project time of 24.7 weeks (95% CI: 23.3-26.4). Factors associated with implementation success were: fewer providers, practice sites, and patients; fewer Medicaid and uninsured patients; having previous experience with scheduling software; enrolling in 2010 rather than earlier; and selecting an integrated EHR plus practice management product rather than two products. Interviews identified positive attitude toward EHRs, resources, and centralized leadership as additional practice-level predictors of success.

Conclusions

A local initiative similar to current federal programs successfully implemented EHRs in primary care practices by offsetting software costs and providing implementation assistance. Nevertheless, implementation success was affected by practice size and other characteristics, suggesting that the federal programs can reduce barriers to EHR implementation but may not eliminate them.

Keywords: Electronic health records, ambulatory care/primary care, implementation and deployment, facilitators and barriers, quantitative methods, mixed methods

1. Background

Electronic health records (EHRs) are expected to improve the quality and efficiency of healthcare, yet adoption rates have lagged in small ambulatory practices and among organizations that serve low-income populations (1-7). Two major barriers to EHR adoption with particular relevance to the small practice setting – implementation costs and lack of technical expertise in selection and implementation – are being addressed in the US by the federal Health Information Technology for Economic and Clinical Health (HITECH) Act of 2009. The act provides financial incentives for providers who adopt EHRs and meet "meaningful use" requirements, which are intended to promote the use of EHRs to improve the quality of healthcare. The act also establishes regional extension centers (RECs) to provide skilled implementation support (8-10). Nationwide, the 62 RECs are expected to help an estimated 100,000 providers adopt EHRs and achieve "meaningful use" by 2014 (8, 9).

These HITECH initiatives are rapidly changing the context in which small ambulatory practices implement EHRs in the US. Rather than being required to provide all the expertise and bear the costs for EHR selection and implementation, many providers will have the opportunity to receive REC implementation support and to offset some of their startup and implementation costs through the "meaningful use" incentives. As a result, previous research on EHR implementation in the ambulatory setting needs to be revisited. The literature on lessons learned about EHR implementation in large and small ambulatory settings largely predates the era of EHR subsidies and REC implementation support (11-18), although Torda et al. have described EHR adoption lessons provided by leaders of RECs and similar programs (19) and Fleurant and colleagues have published survey data on factors perceived to be associated with difficult EHR implementations (20). In addition, the EHR implementation literature does not yet provide quantitative data about time to implementation and predictors of implementation success in small ambulatory settings under the conditions established by the HITECH Act of 2009.

We sought to address these gaps through an analysis of quantitative and qualitative data from the Primary Care Information Project (PCIP) of the New York City Department of Health and Mental Hygiene, a publicly funded organization that has served as an extension center to provide subsidized EHRs and EHR implementation support to primary care practices since 2007 (21). PCIP, one of the earliest programs to provide EHR subsidies and support, has been cited as an influence on the development of the REC initiative (8, 21, 22). To date, the organization has assisted 2900 providers in more than 600 practices, with a focus on providers in medically underserved areas.

2. Objectives

Our objective was to measure time to EHR implementation and predictors of successful implementation among small private physician practices receiving EHR subsidies and extension center support. We conducted a mixed-methods study to integrate qualitative findings with a cohort study of participating practices. This cohort study is unique in exploiting quantitative implementation time data collected prospectively as part of routine project management.

3. Methods

3.1 Setting and participants

The New York City Department of Health and Mental Hygiene has been providing implementation support to New York City primary care practices through PCIP since 2007, in a program developed by Farzad Mostashari, MD, MPH, before he assumed his current position as National Coordinator for Health Information Technology (21). During the period of this study, private primary care practices, federally qualified health centers, and outpatient practices affiliated with small community hospitals in New York City were eligible for EHR support through this organization if a minimum of 10% of their patients were either uninsured or covered by Medicaid (the public insurance program for low-income individuals); this policy was meant to ensure that implementation assistance went primarily to practices serving the poorest populations, which might otherwise have insufficient resources to transition to EHRs. Small practices were those with 10 or fewer physicians. For participating practices, PCIP purchased EHR licenses, subsidized maintenance and support costs for 2 years, provided guidance on hardware purchases and IT support needs, assisted with workflow redesign, managed implementation in cooperation with the EHR vendor, and supervised EHR training (8 hours for every staff member and 16 hours for each provider). During the study period, PCIP worked exclusively with one EHR vendor (eClinicalWorks), although additional products were added subsequently. In 2009, the department, in collaboration with the Fund for Public Health in New York, won a REC award and established the NYC Regional Electronic Adoption Center for Health (REACH).

3.2 Methods overview

We conducted in-person semistructured interviews to (A) understand PCIP processes and identify qualitative themes associated with implementation success, and (B) identify the potential value of structured data available in PCIP’s project management database. We then exported data from the project management database for a cohort study to assess time to implementation and predictors of implementation success.

3.3 Human subjects protections

Our university Institutional Review Board approved the study, and all interview participants provided informed consent.

3.4 Qualitative data collection and analysis

Implementation staff and managers at PCIP who worked with small practices were eligible for interviews as key informants (n = 10). Two researchers developed a codebook using a grounded theory approach (23), coded each transcript independently, and met to reach consensus on all transcripts. To elicit feedback on the validity and comprehensiveness of the findings, we performed member checking by presenting the qualitative findings back to participants (24, 25). The list of final themes did not change after member checking, but in a few cases participants suggested clearer wording to describe these themes.

3.5 Quantitative data collection and data remediation

Details on EHR implementation were routinely collected by PCIP staff in a project management database (www.salesforce.com). The several hundred variables in the database included names and contact information for practices; names and contact information of physicians and staff associated with each practice; practice characteristics such as size and payer mix; readiness questionnaire results; dates of e-mail, telephone, and in-person visits by implementation staff; dates of implementation and contracting milestones; task lists and free-text note fields; and questionnaires.

The interviews conducted in the qualitative study revealed that different implementation staff tended to use different subsets of these variables to support their own workflows and priorities, that only a very few data points were considered required by all implementation staff, and that even when data were collected from the practices (such as the readiness assessment questionnaires) it was acceptable for the practices to skip questions. As a result, missing data were common (26).

The interviews also suggested potential outcome variables related to EHR go-live, a milestone recorded in the database at the completion of the implementation and training process described above; it was contractually obligatory to record this data point, and there were no missing data. Our primary endpoint was project time, defined as the time between a practice joining PCIP and its EHR go-live. Although implementation time (time from the start of EHR implementation to EHR go-live) would have been an appropriate endpoint, the start date for implementation time had been collected only on an optional basis and as a result had a high rate of missing data, and in a few instances multiple dates were recorded. Where possible, the correct start date was confirmed by manual review of records by a team consisting of two PCIP staff members and one EHR vendor employee.

This manual review included reading free-text notes in the records and checking records against data held by the EHR vendor. Nevertheless, implementation start dates remained frequently missing, so implementation time was treated as a secondary outcome. To address missing data in the explanatory variables, we manually reviewed the PDF readiness assessment questionnaires attached to each practice's database record; where responses from a PDF did not appear in the structured fields of the project management database, we entered them manually.
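The fill rule applied during this remediation can be stated compactly. The sketch below is illustrative only: the remediation in the study was done by hand, and the record layout assumed here (a flat dictionary mapping a field name to an answer, with `None` for a blank) as well as the field names in the usage example are hypothetical, not the actual database schema.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical record layout: field name -> answer, None meaning "blank".
Record = Dict[str, Optional[str]]


def fill_missing_fields(structured: Record, questionnaire: Record) -> Tuple[Record, List[str]]:
    """Fill blank structured fields from transcribed questionnaire answers.

    Values already present in the structured record are never overwritten.
    Returns the merged record and the list of fields that were filled,
    which can serve as an audit trail of the remediation.
    """
    merged = dict(structured)
    filled: List[str] = []
    for field, answer in questionnaire.items():
        # Only copy an answer when the structured field is blank or absent
        # and the questionnaire actually contains a response.
        if merged.get(field) is None and answer is not None:
            merged[field] = answer
            filled.append(field)
    return merged, filled
```

For example, a record with a blank `scheduling_software` field and a questionnaire answer of `"completed"` for that field would have only that field filled; an existing structured value for `billing_software` would be kept even if the PDF disagreed. Keeping structured values authoritative is a design choice made for this sketch, not a rule stated by the study.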

3.6 Quantitative analysis

For each explanatory variable, median project time and 95% confidence intervals were calculated using product-limit (Kaplan-Meier) estimates of the survivor function. In addition, a subset analysis of implementation time was performed on the practices for which the relevant data were available. As a secondary analysis, we also constructed a multivariate Cox proportional hazards model of project time. We used a 4-step screening process to select explanatory variables for the model. Potential explanatory variables had to (A) have less than 30% missing data; (B) be associated with at least a 2-week difference in median project time (the interviews suggested that a difference of this size was more meaningful than a statistical significance threshold); and (C) not be collinear with another explanatory variable (by Pearson correlation or chi-squared test). After all variables meeting these criteria were identified, (D) a sensitivity analysis compared project time between practices with complete data and practices with missing data to evaluate whether the data were missing at random; variables with statistically significant differences were excluded from the multivariate model. In the multivariate model, all explanatory variables were tested for the proportional hazards assumption. All met this assumption except one: whether the practice selected the vendor EHR product alone or the combined EHR/practice management system (PMS) (►Table 1). An interaction term between this variable and time was therefore added. The Akaike information criterion (AIC) (27) was used to evaluate whether any variables could be dropped from the multivariate model without impairing fit. Analyses were performed in SAS version 9.2 (SAS Institute Inc., Cary, NC).

Table 1.

Characteristics of 544 practices in the process of implementing EHRs

| Characteristic | All practices (N = 544), n | % | Implemented (N = 430), n | % | Median project time, weeks | 95% CI |
|---|---|---|---|---|---|---|
| Practice characteristics | | | | | | |
| Number of practice sites | | | | | | |
| One | 423 | 77.8 | 345 | 80.2 | 24.1 | 22.7-25.1 |
| Two or more | 116 | 21.3 | 83 | 19.3 | 30.3 | 25.3-32.9 |
| Missing | 5 | 0.9 | 2 | 0.5 | 27.1 | 22.3-31.9 |
| Number of providers | | | | | | |
| One | 293 | 53.9 | 246 | 57.1 | 22.6 | 21.4-24.3 |
| Two or more | 196 | 36.0 | 175 | 40.7 | 25.0 | 23.1-27.3 |
| Missing | 55 | 10.1 | 9 | 2.1 | N/A¹,² | |
| Number of patients | | | | | | |
| <1000 | 55 | 10.1 | 41 | 9.5 | 23.1 | 19.0-31.4 |
| 1000 to <2000 | 100 | 18.4 | 80 | 18.6 | 24.1 | 22.0-27.9 |
| 2000 to <3000 | 81 | 14.9 | 64 | 14.9 | 24.4 | 21.7-29.0 |
| ≥3000 | 139 | 25.6 | 119 | 27.7 | 25.6 | 22.9-27.1 |
| Missing | 169 | 31.1 | 126 | 29.3 | 25.1 | 22.4-27.7 |
| Percent of patients covered by Medicaid or uninsured | | | | | | |
| <28% | 130 | 23.9 | 107 | 24.9 | 23.1 | 21.3-25.1 |
| 28% to <50% | 123 | 22.6 | 99 | 23.0 | 23.3 | 21.7-27.3 |
| 50% to <80% | 140 | 25.7 | 101 | 23.5 | 27.1 | 23.9-29.4 |
| ≥80% | 136 | 25.0 | 113 | 26.3 | 26.3 | 23.4-28.3 |
| Missing | 15 | 2.8 | 10 | 2.3 | 21.4 | 9.9-31.1 |
| Previous technology and billing experience | | | | | | |
| Billing software | | | | | | |
| Completed or in progress | 171 | 31.4 | 119 | 27.7 | 24.4 | 22.7-27.0 |
| Planned or none | 159 | 29.2 | 122 | 28.4 | 24.4 | 22.3-28.3 |
| Missing | 214 | 39.3 | 189 | 44.0 | 25.0 | 23.1-27.3 |
| Scheduling software | | | | | | |
| Completed or in progress | 148 | 27.2 | 105 | 24.4 | 23.1 | 21.4-25.6 |
| Planned or none | 176 | 32.4 | 131 | 30.5 | 26.3 | 23.3-28.4 |
| Missing | 220 | 40.4 | 194 | 45.1 | 25.0 | 23.1-27.0 |
| Previous EHR | | | | | | |
| Completed or in progress | 50 | 9.2 | 43 | 10.0 | 23.1 | 18.3-27.3 |
| Planned or none | 364 | 66.9 | 279 | 64.9 | 24.6 | 23.1-26.6 |
| Missing | 130 | 23.9 | 108 | 25.1 | 25.1 | 22.9-28.3 |
| Billing clearinghouse | | | | | | |
| Completed or in progress | 161 | 29.6 | 157 | 36.5 | 24.3 | 22.0-25.9 |
| Planned or none | 383 | 70.4 | 273 | 63.5 | 25.3 | 23.3-27.3 |
| Missing | 0 | 0.0 | 0 | 0.0 | | |
| Front desk e-mail | | | | | | |
| Completed or in progress | 193 | 35.5 | 156 | 36.3 | 25.0 | 23.3-27.4 |
| Planned or none | 239 | 43.9 | 181 | 42.1 | 23.1 | 22.0-25.9 |
| Missing | 112 | 20.6 | 93 | 21.6 | 25.7 | 23.3-28.3 |
| Clinician e-mail | | | | | | |
| Completed or in progress | 200 | 36.8 | 156 | 36.3 | 24.7 | 23.1-27.3 |
| Planned or none | 232 | 42.7 | 181 | 42.1 | 24.3 | 22.3-26.4 |
| Missing | 112 | 20.6 | 93 | 21.6 | 25.0 | 22.4-28.1 |
| Implementation process characteristics | | | | | | |
| EHR system architecture | | | | | | |
| Remote hosting by vendor | 253 | 46.5 | 181 | 42.1 | 25.0 | 23.1-27.3 |
| Local hosting | 257 | 47.2 | 235 | 54.7 | 23.4 | 23.1-27.3 |
| Missing | 34 | 6.3 | 14 | 3.3 | 122.6 | 31.9-NE²,³ |
| Implemented vendor practice management system (PMS) | | | | | | |
| Yes | 432 | 79.4 | 380 | 88.4 | 23.3 | 22.4-24.7 |
| No | 112 | 20.6 | 50 | 11.6 | 43.0 | 32.4-NE³ |
| Missing | 0 | 0.0 | 0 | 0.0 | | |
| Year agreement signed | | | | | | |
| 2007 | 87 | 16.0 | 84 | 19.5 | 23.3 | 19.6-25.0 |
| 2008 | 167 | 30.7 | 147 | 34.2 | 27.0 | 24.7-28.7 |
| 2009 | 202 | 37.1 | 171 | 39.8 | 25.4 | 23.1-28.3 |
| 2010 | 88 | 16.2 | 28 | 6.5 | 19.7 | 16.4-23.3 |
| Missing | 0 | 0.0 | 0 | 0.0 | | |
| Post-implementation characteristics | | | | | | |
| Quality improvement pay-for-performance initiative | | | | | | |
| Yes | 138 | 25.4 | 138 | 32.1 | 21.4 | 20.1-23.9 |
| No | 406 | 74.6 | 292 | 67.9 | 27.0 | 24.6-28.3 |
| Missing | 0 | 0.0 | 0 | 0.0 | | |

¹ Did not reach median.

² Not missing at random.

³ NE = not estimable because, after the median project time, more practices were censored than reached go-live.

4. Results

By August 2010, 544 small practices had signed agreements to join PCIP. Of these, 430 (79%) had achieved go-live by the time of data collection, with a median project time of 24.7 weeks (95% CI: 23.3-26.4).

4.1 Qualitative Findings

Seven implementation specialists, representing the majority (70%) of the PCIP implementation staff, participated in semistructured interviews about facilitators and barriers to implementation. The major findings from the qualitative analysis were grouped into 4 themes: the practice's attitude, money and resources, organizational leadership, and other barriers.

Theme 1 Practice staff attitude

All implementation staff repeatedly mentioned the practice's attitude toward the potential benefits of the EHR. Successful practices were those that showed "engagement" or "excitement," or were "motivated," "gung ho," or "on board." Positive attitudes helped practices cope with the inevitable inconveniences associated with implementation. Positive attitudes were generally associated with a clinical champion as well as with the expectation that the EHR would bring long-term quality and efficiency improvements. Conversely, implementation was slowed by "resistance" or by staff who were "apprehensive" or even "belligerent" or displayed "technophobia."

Theme 2 Money and resources

Despite the EHR software subsidies, lack of resources posed a barrier. Practices encountered delays when they were unable to afford hardware, high-quality Internet service and IT support, or the cost of reducing patient load during training. A related barrier mentioned by all participants was poor physical infrastructure. Small practices encountered problems when old buildings could not be wired for Internet access or had offices too small for the computers.

Theme 3 Organizational leadership

Practices were more successful if they displayed strong clinical leadership, along with unambiguous division of responsibilities, centralized communication, and enforcement of deadlines. One respondent said, "If you go in and you see ... they already have workflow sheets, they have a point person for every single process or processes, you know it’s going to go well." Some of the perceived advantages of strong leaders were that they helped enforce the EHR training requirements among the staff and reduced patient load during training.

Theme 4 Barriers

In addition to these three themes, participants listed specific challenges.

Billing

Retrieving data from previous billing systems and updating electronic billing capabilities were both time-consuming.

IT support

Lack of responsive and timely IT support was common, and practices that relied upon IT support from family or friends were frequently disappointed in its quality.

Undiscovered work and changes in project plan

Disruptive changes to the project plan sometimes stemmed from newly discovered problems (e.g., unreliable Internet connections) or an unexpected client decision to switch from the EHR product alone to a combined EHR/PMS product.

Unexpected events

Unexpected events that hampered implementation included vacation, illness, seasonal workflow (e.g., start of school year for a pediatrician), dissolution of a practice partnership, a move to new quarters, and renovations.

Implementers’ procedures

Implementation workflow sometimes became bottlenecked when multiple practices enrolled simultaneously. On the other hand, PCIP developed more effective procedures over time.

4.2. Application of qualitative findings to quantitative analysis

After analyzing the qualitative data, we reviewed the project management database for quantitative variables associated with these themes (►Table 1). These included descriptive characteristics, responses to a pre-implementation questionnaire about use of technologies such as e-mail and billing systems, and implementation process variables (e.g., whether the practice chose to access an EHR hosted remotely by the vendor or install it on a server in-house). Staff attitude and organizational leadership could not be mapped to any specific quantitative variables. However, one available variable seemed potentially related to both themes: whether the practice enrolled in a voluntary quality improvement project after EHR go-live. (The voluntary quality improvement project, known as the eHearts project, was initiated by the New York City Department of Health to focus on cardiac care quality measurement among primary care practices.)

4.3. Quantitative results

We analyzed data from 544 practices that had joined PCIP by August 2010, 430 of which (79%) had achieved go-live during the study period. The practices were small, with a median of 1 healthcare provider and 1 site (►Table 1). The proportion of patients who were uninsured or covered by Medicaid was high: in about half of the practices (276 of 544; 50.7%), 50% or more of patients were uninsured or covered by Medicaid (►Table 1).

The product-limit estimate of median project time across all 544 practices, 430 of which had reached go-live, was 24.7 weeks (95% CI: 23.3-26.4). As shown in ►Table 1, several variables were associated with a difference of more than 2 weeks in median project time: having fewer practice sites; having fewer providers; having fewer patients; having a smaller proportion of Medicaid or uninsured patients; having previous experience with scheduling software; joining PCIP in 2010 (compared with previous years); and selecting an integrated EHR plus practice management product rather than two separate products. ►Table 1 also shows that practices that enrolled in a voluntary quality improvement initiative after EHR implementation had shorter EHR project times.

Subset analysis on implementation time

Implementation time was available in only 212 of the 430 practices. Among these practices, median implementation time was 17.4 weeks (95% CI: 17.0-19.0). Project time did not differ significantly between the practices with complete implementation time data (25.3 weeks; 95% CI: 23.1-27.4) and those for whom implementation time could not be computed (24.7 weeks; 95% CI: 22.4-26.4).

Multivariate model

As a secondary analysis, a multivariate model of project time was constructed that included number of practice sites, percent of patients covered by Medicaid or uninsured, implementation of the vendor practice management system, the interaction of the practice management system with time, and year the agreement was signed. In this model, which included 525 of the 544 practices, year was a statistically significant predictor of implementation success: the hazard of achieving go-live was about twice as high for practices that joined in 2010 as for those that joined in 2007 (HR = 2.24; 95% CI: 1.42-3.53). Practices with 2 or more sites were somewhat less likely to achieve go-live than practices with one site, an effect that approached but did not reach statistical significance (HR = 0.81; 95% CI: 0.64-1.04; p = 0.10). Although the PMS main effect was not significant, the PMS × time interaction term was statistically significant. This suggests that choosing the integrated EHR/PMS system made little difference at baseline but increased the chances of implementation success as each implementation project progressed. The AIC for the multivariate model was 4408.22, and removing any variable increased the AIC, suggesting that dropping variables would worsen rather than improve model fit.
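The model described above can be written, as a sketch, in standard Cox form. The coding is an assumption, not reported in the paper: the categorical variables (percent Medicaid or uninsured, year of enrollment) are represented as indicator vectors against a reference category (2007 for year, which matches the hazard ratio reported above), and $g(t)$ is an unspecified function of time used for the PMS interaction; common choices are $t$ or $\ln t$, and which one was used is not stated.

$$
h_i(t) = h_0(t)\,\exp\!\left(\beta_{s}\,x_i^{\mathrm{sites}} + \boldsymbol{\beta}_{m}^{\top}\mathbf{x}_i^{\mathrm{Mcd}} + \beta_{p}\,x_i^{\mathrm{PMS}} + \gamma\,x_i^{\mathrm{PMS}}\,g(t) + \boldsymbol{\beta}_{y}^{\top}\mathbf{x}_i^{\mathrm{year}}\right)
$$

Here $h_i(t)$ is the hazard of go-live for practice $i$ at week $t$ and $h_0(t)$ is the unspecified baseline hazard. Under this form, the reported hazard ratios read as

$$
\mathrm{HR}_{2010\ \mathrm{vs}\ 2007} = e^{\beta_{y,2010}} = 2.24,
\qquad
\mathrm{HR}_{\mathrm{PMS}}(t) = e^{\beta_{p} + \gamma\,g(t)},
$$

so, assuming $g$ is increasing, a non-significant $\beta_p$ together with a significant positive $\gamma$ corresponds to an integrated EHR/PMS effect near 1 early in a project that grows as the project proceeds. For model comparison,

$$
\mathrm{AIC} = -2\ln L_p(\hat{\boldsymbol{\beta}}) + 2k,
$$

with $L_p$ the Cox partial likelihood and $k$ the number of estimated coefficients. Dropping a variable with $q$ coefficients lowers the penalty by $2q$, so the AIC rises only if $-2\ln L_p$ increases by more than $2q$.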

5. Discussion

This mixed-methods analysis produced estimates of implementation time and identified facilitators and barriers to EHR implementation among small practices receiving software subsidies and extension center support under conditions similar to those established by the HITECH Act of 2009.

Among these practices, the median EHR project time was 24.7 weeks; analysis of a subset of practices suggested that the actual implementation process involved about 17 of these weeks. The qualitative findings suggested that EHR implementation was facilitated by a positive attitude toward EHRs among providers and staff, stemming from clinical leadership and an expectation that the EHR would bring long-term quality benefits (a factor that has been called "computer optimism" (28)). The extension center matured over time as improved procedures were developed to facilitate implementation, a result reflected both qualitatively and quantitatively. Smaller practices (measured in terms of practice sites, number of providers, or number of patients) tended to implement more quickly than larger ones. Practices with smaller proportions of Medicaid and uninsured patients had shorter project times, a quantitative finding that was reflected in the qualitative data suggesting that material resources posed continuing challenges for small practices even in a setting where software and implementation support were subsidized. In addition, we found a relationship between implementation success and choice of an integrated EHR/PMS system rather than two systems from different vendors. The qualitative data also suggested other predictors, such as strong organizational leadership, that had no parallel in the database and thus could not be evaluated quantitatively.

Although other researchers have identified factors that influence adoption of or interest in adopting EHRs (1, 2, 6, 7) as well as successful EHR implementation strategies (28, 29), our work is novel in presenting detailed quantitative data on implementation time among small practices receiving implementation support. The Massachusetts eHealth Collaborative (MAeHC), an early extension program that influenced the federal REC initiative, recently reported that implementation time among 138 practices ranged from 16 weeks to 36 weeks (21). No more detailed descriptive statistics were published, although 16 weeks was described as more typical for a small office practice (22). This appears similar to our median implementation time of 17 weeks, in a data set dominated by very small office practices. However, comparing the MAeHC statistic to the PCIP data in this study is challenging, as it is not clear whether start and end dates of implementation were defined in the same way. In particular, at PCIP, the implementation process required a relatively lengthy training period before the EHR was considered live, which may have lengthened the total project time. Shorter project times might be achieved by shortening EHR training requirements, although this may not be advisable in light of widespread consensus about the importance of training to the successful implementation and use of health IT (8, 12, 15, 17, 18). Overall, the goal of the current paper was not to identify ways to reduce project times but rather to provide estimates of project times and identify potential hurdles for planning purposes.

This research is important because it was conducted in a setting very similar to that established by HITECH, in which small practices can receive both financial and technical assistance. The cost of the package offered by PCIP during the period covered by the current study averaged about $41,500 (software licenses, training, support and maintenance, and interfaces), plus extensive professional staff support for the implementation and quality improvement process. As a result, the PCIP program created an environment similar to that later created by the HITECH Act, combining financial offsets for the EHR with technical implementation support. Interestingly, however, our finding that resources remained a barrier echoes pre-HITECH research linking financial concerns about health IT to small practices (3). Our findings suggest that even with the financial incentives provided by the "meaningful use" program, financial barriers may continue to be problematic for small practices adopting EHRs.

The importance of clinical champions and of widespread buy-in throughout the organization has also been reported by others describing small-practice EHR implementations (16, 19), as well as in a rich literature from the perspective of diffusion of innovation theory (30) and change management (18, 31). Lack of buy-in among providers and non-provider staff can be a significant barrier to implementation among small practices (31, 32). In the words of one expert, members of an organization who resist implementation can bring even an excellent system "to its knees" (31). Disruption, complexity, and expense associated with EHR implementation are often higher than expected, so strong leadership and commitment are important in helping the organization weather the disruptions (18, 31, 32). Our findings are also consistent with literature showing that strong implementation and workflow redesign support are needed for small practices to ensure success in implementation (18, 22, 32).

5.1 Limitations

The study was conducted in a single EHR implementation organization focusing on small urban practices with a large proportion of Medicaid and uninsured patients, and using a standard process to implement a single EHR product. Unique features of this process, such as the length of the required training period, may have affected implementation time. Quantitative data were not collected for research purposes but rather from a database populated by implementation staff as part of their daily project management responsibilities and thus had a large amount of missing data (26). In particular, missing data limited our ability to calculate implementation time, so we performed our primary analysis on total project time, defined as starting with the date that the practice joined PCIP.

In this work, EHR go-live was defined as having occurred as soon as the technical install was complete, interfaces with payers were tested, and staff training had been accomplished. This does not ensure that all features of the EHR (such as decision-support) are being used to maximum impact, nor that practices have undergone transformation to maximize the quality impacts of EHRs. Following practices as they accomplish the multiple stages of the "meaningful use" program, or accomplish other goals such as patient-centered medical home status, may be necessary in order to capture the potential quality impacts of health IT-supported transformation.

5.2 Conclusions and implications

The current study provides a unique set of qualitative and quantitative data from a local initiative, which, like programs created by the HITECH Act of 2009, offered small private healthcare providers financial assistance to offset the cost of EHRs plus expert implementation assistance from an extension center. This initiative was successful in promoting EHR implementation in a large number of independent primary care practices serving relatively poor populations. These types of practices have previously been among the least likely to adopt EHRs. Even with this level of assistance, however, financial barriers and characteristics of the practices influenced the chance of implementation success. This implies that the HITECH Act programs have the potential to markedly reduce barriers to EHR adoption and implementation but may not completely eliminate them.

Clinical Relevance Statement

A local initiative anticipated the HITECH Act of 2009 by offering small private healthcare providers financial assistance to offset the cost of EHRs plus expert implementation assistance from an extension center. Findings from this initiative suggest that the HITECH Act programs will likely markedly reduce barriers to EHR adoption and implementation but may not completely eliminate them.

Conflict of Interest

Two of the co-authors were employed at PCIP when this study was being conducted. One of the coauthors is an employee of the EHR vendor described in this study. The authors have no other potential conflict of interest to report.

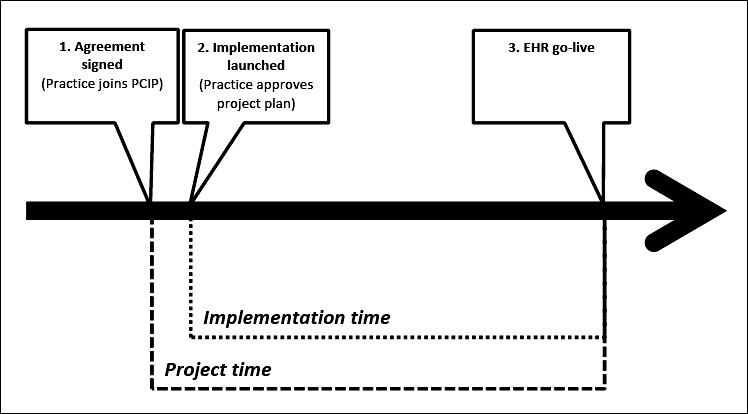

Fig. 1.

PCIP Implementation Timeline and Milestones. The EHR project began on the day that the medical practice signed the agreement to join PCIP. Shortly afterwards (generally within 2 weeks), the practice's stakeholders participated in a teleconference to review and approve a project plan developed by eClinicalWorks and PCIP, and the EHR implementation was then considered to have begun. The PCIP implementation process included: a review of the practice's current hardware; recommendations for additional hardware and software purchases (including Internet service); review of available IT support and recommendations for IT support services if needed; integration of the EHR with an existing practice management system (PMS) or import of data from the existing PMS into the EHR; testing of electronic billing with private and public payers; and training (8 hours for every staff member and 16 hours for each healthcare provider). EHR go-live (transition to the EHR by all healthcare providers) was scheduled immediately after training was completed for all staff and providers. During the time covered in the current study, the PCIP implementation process did not include the integration of laboratory results delivery interfaces. PCIP staff continued to be involved with the practices after EHR go-live to provide technical support, help with problem tickets, and assist with quality improvement and other initiatives.

References

1. DesRoches CM, Campbell EG, Rao SR, Donelan K, Ferris TG, Jha A, et al. Electronic health records in ambulatory care: A national survey of physicians. New England Journal of Medicine 2008; 359(1): 50-60.

2. Bramble JD, Galt KA, Siracuse MV, Abbott AA, Drincic A, Paschal KA, et al. The relationship between physician practice characteristics and physician adoption of electronic health records. Health Care Management Review 2010; 35(1): 55-64.

3. Rao SR, DesRoches C, Donelan K, Campbell E, Miralles P, Jha A. Electronic health records in small physician practices: Availability, use, and perceived benefits. Journal of the American Medical Informatics Association 2011; 18: 271-275.

4. Jha AK, DesRoches CM, Campbell EG, Donelan K, Rao SR, Ferris TG, et al. Use of electronic health records in U.S. hospitals. New England Journal of Medicine 2009; 360(16): 1628-1638. PMID: 19321858.

5. Gans D, Kralewski J, Hammons T, Dowd B. Medical groups' adoption of electronic health records and information systems. Health Affairs (Millwood) 2005; 24(5): 1323-1333.

6. Simon SR, Kaushal R, Cleary PD, Jenter CA, Volk LA, Poon EG, et al. Correlates of electronic health record adoption in office practices: A statewide survey. Journal of the American Medical Informatics Association 2007; 14(1): 110-117. PMID: 17068351.

7. Simon SR, Kaushal R, Cleary PD, Jenter CA, Volk LA, Orav EJ, et al. Physicians and electronic health records: A statewide survey. Archives of Internal Medicine 2007; 167(5): 507-512. PMID: 17353500.

8. Maxson E, Jain S, Kendall M, Mostashari F, Blumenthal D. The regional extension center program: Helping physicians meaningfully use health information technology. Annals of Internal Medicine 2010; 153: 666-670.

9. Blumenthal D, Tavenner M. The "meaningful use" regulation for electronic health records. New England Journal of Medicine 2010; 363: 501-504.

10. Medicare and Medicaid Programs; Electronic Health Record Incentive Program; Final Rule. 75 Federal Register 144 (28 July 2010).

11. Scott J, Rundall T, Vogt T, Hsu J. Kaiser Permanente's experience of implementing an electronic medical record: A qualitative study. British Medical Journal 2005; 331: 1313-1316.

12. McAlearney A, Song P, Robbins J. Moving from good to great in ambulatory electronic health record implementation. Journal of Healthcare Quality 2010; 32(5): 41-50.

13. Yoon-Flannery K, Zandieh S, Kuperman GJ, Langsam D, Hyman D, Kaushal R. A qualitative analysis of an electronic health record (EHR) implementation in an academic ambulatory setting. Informatics in Primary Care 2008; 16: 277-284.

14. Fullerton C, Aponte P, Hopkins R, Bragg D, Ballard DJ. Lessons learned from pilot site implementation of an ambulatory electronic health record. Proceedings of the Baylor University Medical Center 2006; 19: 303-310.

15. Baron RJ, Fabens EL, Schiffman M, Wolf E. Electronic health records: Just around the corner? Or over the cliff? Annals of Internal Medicine 2005; 143(3): 222-226.

16. Miller RH, Sim I, Newman J. Electronic medical records: Lessons from small physician practices. Oakland, CA: California HealthCare Foundation; 2003.

17. Ludwick D, Manca D, Doucette J. Primary care physicians' experiences with electronic medical records: Implementation experience in community, urban, hospital, and academic family medicine. Canadian Family Physician 2010; 56: 40-47.

18. Lorenzi NM, Kouroubali A, Detmer DE, Bloomrosen M. How to successfully select and implement electronic health records (EHR) in small ambulatory practice settings. BMC Medical Informatics and Decision Making 2009; 9: 15.

19. Torda P, Han ES, Scholle SH. Easing the adoption and use of electronic health records in small practices. Health Affairs (Millwood) 2010; 29(4): 668-675.

20. Fleurant M, Kell R, Jenter C, Volk LA, Zhang F, Bates DW, et al. Factors associated with difficult electronic health record implementation in office practice. Journal of the American Medical Informatics Association 2012.

21. Mostashari F, Tripathi M, Kendall M. A tale of two large community electronic health record extension projects. Health Affairs (Millwood) 2009; 28(2): 345-356. PMID: 19275989.

22. Safran C, Bloomrosen M, Hammond WE, Labkoff S, Markel-Fox S, Tang PC, et al. Toward a national framework for the secondary use of health data: An American Medical Informatics Association white paper. Journal of the American Medical Informatics Association 2007; 14(1): 1-9.

23. Strauss A, Corbin J. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. 2nd ed. Thousand Oaks, CA: Sage; 1998.

24. Lincoln YS, Guba EG. Naturalistic Inquiry. Newbury Park, CA: Sage Publications; 1985.

25. Crabtree BF, Miller WL. Doing Qualitative Research. 2nd ed. Thousand Oaks, CA: Sage Publications; 1999.

26. Ancker JS, Shih S, Singh MP, Snyder A, Edwards A, Kaushal R, et al. Root causes underlying challenges to secondary use of data. AMIA Annual Symposium Proceedings 2011; 2011: 57-62.

27. Akaike H. A new look at the statistical model identification. IEEE Transactions on Automatic Control 1974; 19(6): 716-723.

28. Dennehy P, White MP, Hamilton A, Pohl JM, Tanner C, Onifade TJ, et al. A partnership model for implementing electronic health records in resource-limited primary care settings: Experiences from two nurse-managed health centers. Journal of the American Medical Informatics Association 2011; 18(6): 820-826.

29. Goldberg DG, Kuzel AJ, Feng LB, DeShazo JP, Love LE. EHRs in primary care practices: Benefits, challenges, and successful strategies. American Journal of Managed Care 2012; 18(2): e48-e54.

30. Rogers EM. Diffusion of Innovations. New York: Free Press; 1962/2003.

31. Lorenzi NM, Riley RT. Managing change: An overview. Journal of the American Medical Informatics Association 2000; 7: 116-124.

32. Prokosch H-U, Ganslandt T. Reusing the electronic medical record for clinical research. Methods of Information in Medicine 2009; 48: 38-44.