Abstract

Facial expressions serve as cues that encourage viewers to learn about their immediate environment. In studies assessing the influence of emotional cues on behavior, fearful and angry faces are often combined into one category, such as “threat-related,” because they share similar emotional valence and arousal properties. However, these expressions convey different information to the viewer. Fearful faces indicate the increased probability of a threat, whereas angry expressions embody a certain and direct threat. This conceptualization predicts that a fearful face should facilitate processing of the environment in order to gather information to disambiguate the threat. Here, we tested whether fearful faces facilitated processing of neutral information presented in close temporal proximity to the faces. In Experiment 1, we demonstrated that compared to neutral faces, fearful faces enhanced memory for neutral words presented in the experimental context, whereas angry faces did not. In Experiment 2, we directly compared the effects of fearful and angry faces on subsequent memory for emotional faces vs. neutral words. We replicated the findings of Experiment 1 and extended them by showing that participants remembered more faces from the angry face condition relative to the fear condition, consistent with the notion that anger differs from fear in that it directs attention towards the angry individual. Because these effects cannot be attributed to differences in arousal or valence processing, we suggest they are best understood in terms of differences in the predictive information conveyed by fearful and angry facial expressions.

Keywords: facial expressions, memory, attention, emotion

A wealth of literature suggests that emotional cues can modulate memory by enhancing perception and attention during encoding (Christianson & Loftus, 1987; Easterbrook, 1959; McGaugh, 2004; Phelps, Ling, & Carrasco, 2006; Talmi, Anderson, Riggs, Caplan, & Moscovitch, 2008; Tulving, 2002). While many experiments probing emotional enhancement of memory employ highly arousing negative stimuli that elicit strong emotional responses from viewers, other experiments demonstrate that subtle stimuli communicating emotional states, such as pictures of facial expressions, can also enhance memory (Sergerie, Lepage, & Armony, 2007; Vuilleumier, 2002). Many of these studies employ a dimensional approach to assessing emotion (see Hamann, 2009), emphasizing the effects of either emotional valence (e.g., Maratos, Dolan, Morris, Henson, & Rugg, 2001; Sergerie, et al., 2007) or emotional arousal (e.g., Anderson, Wais, & Gabrieli, 2006; Bradley, Greenwald, Petry, & Lang, 1992; Cahill & McGaugh, 1998) on memory.

Recent research has gone beyond examining the modulatory effects of valence and arousal on information processing to consider the communicative value of specific emotions such as fear. Fearful facial expressions are social cues that signal to the viewer that there is a potential threat in the environment. Consistent with this notion, viewing pictures of fearful faces facilitates sensory processing by enhancing participants’ ability to discriminate other visual stimuli present in the experimental context (Becker, 2009; Phelps, et al., 2006). Interestingly, the act of displaying a fearful expression also modulates an individual's own sensory intake: Susskind and colleagues (2008) showed that the physical facial feature changes that comprise a fearful expression (i.e., expanding the eyes and nostrils) enhance one's ability to gather information from the environment.

While fearful facial expressions serve as a warning signal to viewers, they provide no information about the source of threat, that is, what in the immediate environment may have elicited the expression. We have suggested previously that fearful expressions should facilitate processing of the immediate context (Whalen, 1998), defined broadly as information spatially or temporally proximal to the fearful expression. The current study evaluated the extent to which the communicative value of fear, beyond its negative and arousing qualities, facilitates contextual information processing. To do so, we compared the effects of fearful and angry facial expressions on memory for contextual information. Fearful and angry faces are equally negative and arousing, but they convey divergent communicative signals. Specifically, in contrast to fearful faces, angry faces embody the very threat that they predict: an individual expressing anger communicates aggression toward the target of their gaze (e.g., the viewer). Previous research has shown that static pictures of fearful and angry facial expressions can be equated in terms of subjective reports of negative valence (Ekman, 1997; Johnsen, Thayer, & Hugdahl, 1995; Matsumoto, Kasri, & Kooken, 1999), and in terms of both subjective reports and objective measures (e.g., electrodermal activity) of the emotional arousal elicited by the face presentations (Johnsen, et al., 1995).

The present experiments evaluated the extent to which the presence of fearful and angry facial expressions would modulate information processing in accordance with the differential communicative signals they express to the viewer. To this end, neutral words were embedded within blocks of fearful and angry facial expressions. Though context is commonly manipulated in a concurrent, spatial domain (e.g., Easterbrook, 1959), contextual information presented immediately before and after the expression is consistent with the manner in which information gathering occurs in real life. For example, observing a fearful facial expression might lead to immediately surveying the environment, whereas observing an angry face might lead one to focus their attention on the individual producing the expression.

In Experiment 1, we assessed memory for neutral words presented within separate blocks of fearful, angry and neutral faces. We hypothesized that in comparison to neutral face blocks, memory for contextual words would be enhanced during fearful face blocks but not during angry face blocks. We predicted that angry faces would not facilitate memory for neutral words in the same way as fearful faces; moreover, because previous research has shown that angry faces capture attention (Fox, Russo, Bowles, & Dutton, 2001; Fox, Russo, & Dutton, 2002; Holmes, Bradley, Kragh Nielsen, & Mogg, 2009; Maratos, Mogg, & Bradley, 2008; Mogg & Bradley, 1999; Schupp, et al., 2004; Williams, Moss, Bradshaw, & Mattingley, 2005), we reasoned that their presence might even attenuate memory for neutral words. In Experiment 2, we directly tested this idea by comparing the effects of fearful and angry facial expressions on memory for the contextual words versus the faces themselves. We hypothesized that memory would be facilitated for information present in the temporal context (i.e., the neutral words) embedded in fearful face blocks, and that memory would be facilitated for the faces in the angry face blocks.

Experiment 1

Method

Eighty healthy adults (ages 18-45, 40 males) participated in this experiment. Participants were screened for psychiatric illness using an abbreviated version of the Non-Patient edition of the Structured Clinical Interview for DSM Disorders (First, Spitzer, Williams, & Gibbon, 1995), which assessed current and past history of major depressive disorder, dysthymia, hypomania, bipolar disorder, specific phobia, social anxiety disorder, generalized anxiety disorder, and obsessive compulsive disorder. Informed consent was obtained in accordance with the guidelines of the Human Subjects Committee of the University of Wisconsin-Madison.

Stimuli

Face stimuli (Ekman & Friesen, 1976) consisted of 10 angry, 10 fearful, and 10 neutral faces as defined by high normative consensus ratings associated with this stimulus set. To control for gender, attractiveness, and other lower-level aspects of the face stimuli, the same 10 identities (50% male) comprised each of the emotion categories. Faces were aligned vertically with the middle of the pupils and horizontally with the nose in the center, and matched on contrast and brightness.

Word stimuli were chosen from the MRC Psycholinguistic Database (www.psy.uwa.edu.au/MRCDataBase/mrc2.html#CONC) and consisted of 20 neutral words split into two lists of 10. Word lists were matched on concreteness, familiarity, imageability, written frequency, number of letters, and number of syllables (all p's > 0.30), and were counterbalanced with respect to face conditions across participants. Stimuli were presented in grayscale on a black background using MacStim software (http://www.brainmapping.org/WhiteAnt/).

Task structure

An experimental block consisted of alternating presentations of face and word stimuli, with a given block containing a single emotion type. Modeled after prior neuroimaging studies by this group (Whalen, et al., 2001), each block contained a 200 ms face presentation immediately followed by an 800 ms word presentation, with faces and words alternating continuously until all 10 faces and 10 words had been displayed (block duration: 10 s) (Figure 1). Emotion (angry or fearful) and neutral blocks each contained unique sets of words so that participants saw a particular word embedded only within one type of block, and word lists were counterbalanced so that all words appeared in both the emotional and neutral face blocks across participants. A 5 s fixation period was presented between blocks. The type of emotion block (angry or fearful) was varied between subjects such that half (40) of the participants viewed only angry and neutral face blocks, and the other half viewed only fearful and neutral face blocks. Each block was presented twice, meaning that participants viewed each face and word stimulus twice during the experiment. Thus, each participant viewed a total of four blocks of faces and words in an ABBA design that was counterbalanced across participants (i.e., Emotion/Neutral/Neutral/Emotion or Neutral/Emotion/Emotion/Neutral), where all stimuli were repeated in the final two blocks.
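The block structure above can be made concrete with a short schedule generator. This is a hypothetical Python sketch, not the MacStim script used in the study: the names `abba_order` and `build_schedule`, the stimulus labels, and the fixed within-block order are illustrative assumptions (the source does not say whether trial order within a block was randomized). It lays out 10 face (200 ms)/word (800 ms) pairs per block, a 5 s fixation between consecutive blocks, and the ABBA ordering, so one block lasts 10 × (200 + 800) ms = 10 s.

```python
from __future__ import annotations

from dataclasses import dataclass

# Timing parameters taken from the Task structure description.
FACE_MS = 200        # face presentation per trial
WORD_MS = 800        # word presentation per trial
FIXATION_MS = 5000   # fixation period between consecutive blocks
N_PAIRS = 10         # face/word pairs per block


@dataclass
class Event:
    kind: str        # "face", "word", or "fixation"
    label: str       # hypothetical stimulus identifier
    onset_ms: int
    duration_ms: int


def abba_order(emotion_first: bool, emotion: str) -> list[str]:
    """ABBA block order: Emotion/Neutral/Neutral/Emotion or the reverse."""
    a, b = (emotion, "neutral") if emotion_first else ("neutral", emotion)
    return [a, b, b, a]


def build_schedule(order: list[str], faces: dict, words: dict):
    """Lay out alternating face/word events; repeated blocks reuse the same stimuli.

    Within-block order is kept fixed here (an assumption; randomization is not specified).
    """
    events: list[Event] = []
    t = 0
    for i, cond in enumerate(order):
        if i > 0:  # fixation only between blocks, not before the first
            events.append(Event("fixation", "+", t, FIXATION_MS))
            t += FIXATION_MS
        for face, word in zip(faces[cond], words[cond]):
            events.append(Event("face", face, t, FACE_MS))
            t += FACE_MS
            events.append(Event("word", word, t, WORD_MS))
            t += WORD_MS
    return events, t


# Example: a participant in the fear group who starts with an emotion block.
conds = ("fear", "neutral")
faces = {c: [f"{c}_face{i}" for i in range(N_PAIRS)] for c in conds}
words = {c: [f"{c}_word{i}" for i in range(N_PAIRS)] for c in conds}
order = abba_order(True, "fear")
events, total_ms = build_schedule(order, faces, words)
```

With these settings the four-block session comprises 4 × 10 s of stimulation plus 3 × 5 s of fixation, i.e., 55 s in total.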

Figure 1.

Experiment 1 paradigm design. In a between-subjects design, 50% of participants viewed blocks of fearful faces alternating with neutral words, and blocks of neutral faces alternating with neutral words. The other 50% of participants viewed blocks of angry faces alternating with neutral words, and blocks of neutral faces alternating with neutral words. Faces were presented for 200ms, and words were presented for 800ms. A five-second fixation period separated blocks.

Procedure

Participants were told that they would passively view a series of words and faces on a computer screen, and that following the task they would be asked to complete some questionnaires. Participants then completed the Edinburgh Handedness Inventory (Oldfield, 1971) as a brief distracter. Finally, they were given a surprise free recall test, where they were asked to write down all of the words they remembered from the computer task. This recall task was self-paced, and the time that elapsed between the study and test phases was approximately 10 minutes.

Upon completion of the experiment, participants provided explicit valence (“How positive or negative is the expression on this face?” rated on a scale from 1, “Very Positive” to 9, “Very Negative”) and arousal (“How much emotional arousal do you feel when looking at this face?” 1, “The least amount of emotional arousal I have ever felt” to 9, “The most emotional arousal I have ever felt”) ratings for the faces.

Data Analysis

Valence and arousal ratings were evaluated using a 2 (Group: Fear, Anger; Between) X 2 (Condition: Emotional, Neutral; Within) ANOVA. We predicted no main effect of Group, indicating that participants who viewed the fearful face blocks did not rate any of the faces as more positive or negative than participants who viewed the angry face blocks. In contrast, we predicted a main effect of Condition, with participants rating the emotional faces as both more arousing and more negative than the neutral faces.

Recall data were corrected for intrusions by calculating (hits − false alarms)/10, where 10 is the number of old (studied) words in each condition. A 2 (Group: Fear, Anger; Between) X 2 (Condition: Emotional, Neutral; Within) ANOVA was computed to evaluate differences in recall for words. Directionality of significant interactions was evaluated using post hoc paired-samples t tests (1-tailed). We predicted an interaction in which word recall would be enhanced in fearful compared to neutral face blocks, but not in angry compared to neutral face blocks.
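The intrusion correction and the Group × Condition test can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors' analysis code: `corrected_recall` and `mixed_interaction_F` are hypothetical names, and because the source does not say how free-recall intrusions were assigned to conditions, the false-alarm count is simply passed in. The second function relies on a standard identity: in a 2 (between) × 2 (within) design, the interaction F(1, n1 + n2 − 2) equals the square of a pooled-variance independent-samples t computed on each participant's Emotional − Neutral difference score.

```python
from math import sqrt
from statistics import mean, variance

N_OLD = 10  # studied (old) words per condition in Experiment 1


def corrected_recall(hits: int, false_alarms: int, n_old: int = N_OLD) -> float:
    """Intrusion-corrected recall: (hits - false alarms) / number of old items."""
    return (hits - false_alarms) / n_old


def mixed_interaction_F(group1, group2):
    """Group x Condition interaction for a 2 (between) x 2 (within) design.

    group1, group2: lists of (emotional, neutral) corrected scores, one pair per
    participant. Returns (F, (df1, df2)), where F is the squared pooled-variance
    t on the Emotional - Neutral difference scores.
    """
    d1 = [e - n for e, n in group1]
    d2 = [e - n for e, n in group2]
    n1, n2 = len(d1), len(d2)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(d1) + (n2 - 1) * variance(d2)) / df
    t = (mean(d1) - mean(d2)) / sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t * t, (1, df)


# Toy example (made-up numbers, not the study's data).
print(corrected_recall(hits=4, false_alarms=1))  # 0.3
fear_group = [(0.5, 0.3), (0.4, 0.3), (0.6, 0.3)]
anger_group = [(0.3, 0.3), (0.2, 0.3), (0.4, 0.3)]
F, dfs = mixed_interaction_F(fear_group, anger_group)
```

With 40 participants per group, as in Experiment 1, the degrees of freedom come out as (1, 78), matching those of the reported interaction test.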

Results

Stimulus Ratings

There was a main effect of Condition (Emotional vs. Neutral faces) for both the valence, F(1,78) = 529.712, p < 0.001 and arousal, F(1,78) = 156.411, p < 0.001 ratings, but no interaction between Group and Condition for either the valence, F(1,78) = 0.967, p = 0.328 or arousal, F(1,78) = 2.722, p = 0.103 ratings. On average, participants rated the emotional faces as more negative and more emotionally arousing than the neutral faces [fearful mean (standard error) valence=6.692 (0.110); arousal=3.995 (0.217); angry valence=6.813 (0.107), arousal=4.153 (0.261); neutral valence (for participants who saw fearful and neutral faces)=4.873 (0.053); arousal=2.256 (0.167); neutral valence (for participants who saw angry and neutral faces)=4.830 (0.078), arousal=2.820 (0.177)].

Consistent with fearful and angry faces being equated on valence and arousal, there was no main effect of Group (Fear vs. Anger) for valence, F(1,78) = 0.161, p = 0.689 or arousal, F(1,78) = 1.797, p = 0.184 ratings. Direct comparison of valence and arousal ratings of fearful and angry faces further indicated that the stimuli were matched on valence (t(78) = -0.783, p = 0.436) and arousal level (t(78) = -0.464, p = 0.644). The variability of ratings was also comparable for fearful and angry stimuli, as indicated by nonsignificant Levene's tests for equality of variance (valence: p > .9; arousal: p > .12).
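Levene's test compares groups on the absolute deviations of each score from its group mean. A minimal Python sketch of the original (mean-centered) statistic follows; the function name is hypothetical, and whether the authors' software used mean- or median-centered deviations is not stated, so treat the centering as an assumption. The statistic W is referred to an F(k − 1, N − k) distribution, which for the two independent rating groups here (40 participants each) would be F(1, 78).

```python
from statistics import mean


def levene_W(*groups):
    """Mean-centered Levene statistic; compare with F(k - 1, N - k)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Absolute deviation of every score from its own group mean.
    z = [[abs(y - mean(g)) for y in g] for g in groups]
    z_means = [mean(zi) for zi in z]
    z_grand = sum(sum(zi) for zi in z) / n_total
    # One-way ANOVA on the deviations: between- and within-group sums of squares.
    between = sum(len(zi) * (zm - z_grand) ** 2 for zi, zm in zip(z, z_means))
    within = sum((zij - zm) ** 2 for zi, zm in zip(z, z_means) for zij in zi)
    return (n_total - k) / (k - 1) * between / within, (k - 1, n_total - k)


# Toy check: the second group has twice the spread of the first.
W, dfs = levene_W([1, 2, 3], [2, 4, 6])
```

A shift in location alone leaves W at zero, which is the property that makes the test about spread rather than means.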

Memory

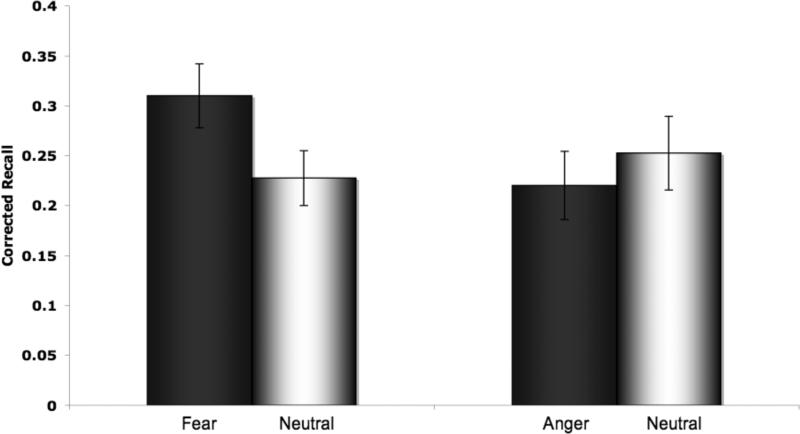

See Table 1 for the full descriptive statistics of Experiment 1 memory findings. A 2 (Group; Fear, Anger) X 2 (Condition; Emotional, Neutral) ANOVA revealed a significant interaction between Group and Condition, F(1,78) = 4.189, p = 0.044, η2 = 0.050. (The interaction remained significant when hits uncorrected for false alarms were used as the dependent variable.) There was no main effect of Group, F(1,78) = 0.774, p = 0.382, η2 = 0.010 and no main effect of Condition, F(1,78) = 0.792, p = 0.376, η2 = 0.010 on corrected word recall. Paired samples t tests indicated that subjects recalled more words from the fearful compared to neutral blocks t(39) = 2.196, p = 0.018, dz = 0.343, and that there was no difference in the number of words recalled in the angry compared to neutral blocks, t(39) = -0.786, p = .219, dz = -0.124 (Figure 2).

Table 1.

Raw proportion of words recalled by block type in Experiment 1.

| | Fear Sample: Fear | Fear Sample: Neutral | Fear Sample: False Alarms (#) | Anger Sample: Anger | Anger Sample: Neutral | Anger Sample: False Alarms (#) |
|---|---|---|---|---|---|---|
| Mean | .34 | .26 | .33 | .27 | .30 | .5 |
| Standard Dev | .17 | .21 | .53 | .18 | .21 | .78 |

Mean values for Fear, Neutral and Anger represent proportion correctly recalled. Mean values for False Alarm represent mean number of false alarms.

Figure 2.

Experiment 1. Participants recalled more words from the fearful compared to neutral face blocks, and there was no difference in number of words recalled in the angry compared to neutral face blocks. Error bars represent standard error of the mean.

Experiment 2

Experiment 1 provided initial evidence supporting the prediction that fearful faces enhance processing of contextual information. Interestingly, angry faces did not facilitate memory for contextual information compared to neutral faces, though they were rated equally with fearful faces on emotional valence and arousal. Indeed, since angry faces provide direct information about the source of threat (i.e., the person making the facial expression), they might call for more direct attention toward the threatening information itself, compared to the diffuse attention for contextual information called for by fearful expressions. This pattern of results motivates the hypothesis that angry faces should encourage people to attend to and learn about the faces themselves, while fearful expressions should encourage people to learn about the immediate context.

In Experiment 2, we expanded the number of face identities in a within-subject design to directly address word and face memory in fearful compared to angry face blocks. We predicted that participants would recognize more words in the fearful compared to angry face blocks, but more faces in the angry compared to fearful face blocks.

Method

Twenty-seven healthy adults (11 males, ages 18-25) participated in this experiment. Participants were screened for psychiatric illness as in Experiment 1. Of these, 24 completed the valence and arousal ratings. Informed consent was obtained in accordance with the guidelines of the Committee for Protection of Human Subjects at Dartmouth College.

Stimuli

Face stimuli consisted of 20 angry (10 targets, 10 foils) and 20 fearful (10 targets, 10 foils) faces with unique identities compiled from four stimulus sets (Ekman & Friesen, 1976; Lundqvist, Flykt, & Ohman, 1998; Matsumoto & Ekman, 1988; Tottenham, et al., 2009), and counterbalanced so that identity was not repeated between conditions (i.e., fear/anger). Gender of faces was equal (50% male) within and across conditions. Word stimuli consisted of 40 neutral words split into four groups of 10 (20 targets and 20 foils). Word lists were matched on concreteness, familiarity, imageability, written frequency, number of letters, and number of syllables as in Experiment 1 (all p's > 0.30), and were counterbalanced across conditions. Stimuli were presented in the center of the screen in grayscale on a black background using E-Prime software (Psychology Software Tools, Pittsburgh, PA).

Procedure

During a block of trials, participants viewed 10 fearful or angry faces (200ms) interleaved with 10 neutral words (800ms) as described in Experiment 1 with the following key differences. In contrast to Experiment 1, the face identities in the Fearful and Angry conditions were different (the words presented in the Fearful and Angry face blocks were also different, as in Experiment 1). Each block was repeated once, so that participants viewed each stimulus twice during the experiment (Figure 3). Thus, each participant viewed four blocks of faces (10 faces per condition) and words that were counterbalanced across participants (Fear/Angry/Angry/Fear or Angry/Fear/Fear/Angry). Participants then completed the State-Trait Anxiety Inventory (Spielberger, Gorsuch, & Lushene, 1988) as a brief distracter, followed by surprise word and face recognition tasks. In the recognition test, subjects were presented with 20 pseudorandomly intermixed target stimuli and 20 foils and were asked to indicate whether they had seen the stimulus earlier in the experiment. Face stimuli were presented one at a time in the center of the screen in grayscale on a black background using E-Prime software (Psychology Software Tools, Pittsburgh, PA) and participants pressed one of two keys indicating whether the face was “Old” or “New.” Word stimuli were all presented on one sheet of paper and participants were asked to circle the words they saw during the experiment. Both the face and word recognition tasks were self-paced. Order of the recognition tasks was counterbalanced across participants, and no feedback was provided. The time that elapsed between the study and test phases was approximately 10 minutes.

Figure 3.

Experiment 2 design. In a within-subjects design, participants viewed blocks of fearful faces alternating with neutral words, and blocks of angry faces alternating with neutral words. Faces were presented for 200ms, and words were presented for 800ms. A five-second fixation period separated blocks.

Upon completion of the experiment, participants provided explicit valence (“How positive or negative is the expression on this face?” on a scale from 1, “Very Positive” to 9, “Very Negative”) and arousal (“How much emotional arousal do you feel when looking at this face?” on a scale from 1, “The least amount of emotional arousal I have ever felt” to 9, “The most emotional arousal I have ever felt”) ratings for the faces.

Data Analysis

Recognition data were corrected for false alarms by calculating (hits − false alarms)/(number of old stimuli, words or faces). A 2 (Stimulus: Faces, Words) X 2 (Emotion: Fear, Anger) within-subjects ANOVA was computed to evaluate the predicted interaction, and post hoc contrasts were evaluated using paired-samples t tests (1-tailed). Valence and arousal ratings were evaluated using paired-samples t tests.
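For a fully within-subjects 2 × 2 design, the Stimulus × Emotion interaction can be checked without ANOVA software: the interaction F(1, n − 1) equals the squared one-sample t on each participant's interaction contrast, and each paired follow-up test is a one-sample t on difference scores. The Python sketch below assumes the corrected recognition scores are already computed and keyed by hypothetical (stimulus, emotion) tuples; the function names are illustrative, not the authors' code.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values):
    """t testing mean(values) against 0; df = n - 1."""
    n = len(values)
    return mean(values) / (stdev(values) / sqrt(n)), n - 1


def paired_t(a, b):
    """Paired-samples t as a one-sample t on the differences a - b."""
    return one_sample_t([x - y for x, y in zip(a, b)])


def within_interaction_F(scores):
    """Stimulus x Emotion interaction for a 2 x 2 within-subjects design.

    scores: one dict per participant, keyed by (stimulus, emotion) tuples such as
    ("face", "anger"). Returns (F, (1, n - 1)), F being the squared one-sample t on
    (face_anger - face_fear) - (word_anger - word_fear).
    """
    contrast = [
        (s[("face", "anger")] - s[("face", "fear")])
        - (s[("word", "anger")] - s[("word", "fear")])
        for s in scores
    ]
    t, df = one_sample_t(contrast)
    return t * t, (1, df)


# Toy example: three participants whose interaction contrasts are 1, 2 and 3.
toy = [
    {("face", "anger"): c, ("face", "fear"): 0.0,
     ("word", "anger"): 0.0, ("word", "fear"): 0.0}
    for c in (1.0, 2.0, 3.0)
]
F, dfs = within_interaction_F(toy)
```

With 27 participants, as in Experiment 2, the interaction degrees of freedom are (1, 26), matching the reported test.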

Results

Stimulus Ratings

As predicted, there were no significant differences in valence, t(23) = -0.017, p=0.987 or arousal, t(23) = -0.230, p = 0.820 ratings for fearful [average (standard error) valence=5.583 (0.161), arousal=3.60 (0.303)] and angry [average (standard error) valence=5.585 (0.153), arousal=3.565 (0.293)] faces. The variability of ratings was also comparable for fearful and angry stimuli, as indicated by nonsignificant Pitman tests for equality of variance among correlated samples (valence: p > .6; arousal: p > .6). Note that both the valence and arousal ratings for fearful and angry faces were lower than in Experiment 1, perhaps because the absence of neutral faces in the present paradigm rendered these expressions less distinctive (see Dewhurst & Parry, 2000; Rajaram, 1996).
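The Pitman test compares the variances of two correlated samples, as here, where the same participants rated both fearful and angry faces. It rests on the identity cov(X + Y, X − Y) = var(X) − var(Y), so equal variances imply a zero correlation between the paired sums and differences; that correlation is then tested with the usual t for a correlation on n − 2 degrees of freedom. A minimal Python sketch with hypothetical function names (p-values would need a t-distribution CDF, omitted to keep the example dependency-free):

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation computed from centered cross-products."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def pitman_morgan_t(x, y):
    """Pitman(-Morgan) test for equal variances of paired samples x and y.

    Correlates the paired sums with the paired differences; returns (t, df),
    df = n - 2. Positive t means var(x) > var(y).
    """
    sums = [a + b for a, b in zip(x, y)]
    diffs = [a - b for a, b in zip(x, y)]
    r = pearson_r(sums, diffs)
    n = len(x)
    return r * sqrt(n - 2) / sqrt(1 - r * r), n - 2


# Toy check: y is a reordering of x, so the two variances are identical.
t_equal, df_equal = pitman_morgan_t([1, 2, 3, 4], [2, 1, 4, 3])
```

For the 24 participants who completed the ratings, the test would have 22 degrees of freedom.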

Memory

See Table 2 for descriptive recognition and error rate data. A 2 (Stimulus: Word, Face) X 2 (Emotion: Fear, Anger) within-subjects ANOVA revealed a significant main effect of Stimulus, F(1,26) = 23.982, p < 0.001, η2 = 0.332, where words were better remembered than faces, and no main effect of Emotion, F(1,26) = 0.109, p = 0.743, η2 = 0.0004. The interaction between Stimulus and Emotion was significant, F(1,26) = 6.371, p = 0.015, η2 = 0.038. Paired samples t tests indicated that participants recognized more faces from the Anger compared to Fear blocks, t(26) = 2.076, p = 0.024, dz = 0.399, and more words from the Fear compared to Anger blocks, t(26) = 1.749, p = 0.046, dz = 0.337 (Figure 4).
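Assuming dz was computed in the usual way, as the mean paired difference divided by the standard deviation of the differences (the source does not state the formula), it can be recovered from a paired t and the sample size as t/√n, because t = mean/(sd/√n). A short Python check with hypothetical helper names; with n = 27 it reproduces the reported Experiment 2 values to within about .001.

```python
from math import sqrt
from statistics import mean, stdev


def dz_from_differences(diffs):
    """Cohen's d_z: mean of paired differences / their standard deviation."""
    return mean(diffs) / stdev(diffs)


def dz_from_paired_t(t: float, n: int) -> float:
    """Equivalent form: t = mean / (sd / sqrt(n)) implies d_z = t / sqrt(n)."""
    return t / sqrt(n)


# Reported Experiment 2 contrasts (n = 27 participants).
faces_dz = dz_from_paired_t(2.076, 27)   # roughly 0.40
words_dz = dz_from_paired_t(1.749, 27)   # roughly 0.34
```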

Table 2.

Raw recognition rates by block type in Experiment 2.

| | Fear Blocks: Face Hits | Fear Blocks: Face FA | Fear Blocks: Word Hits | Anger Blocks: Face Hits | Anger Blocks: Face FA | Anger Blocks: Word Hits | Word FA (#) |
|---|---|---|---|---|---|---|---|
| Mean | .25 | .15 | .34 | .27 | .13 | .30 | 1.59 |
| Standard Dev | .11 | .11 | .09 | .09 | .08 | .11 | 1.62 |

Mean values for Hits represent proportion correctly recognized. Mean values for false alarms (FA) represent mean number of false alarms.

Figure 4.

Experiment 2. Participants recognized more words from the fearful face blocks, and more faces from the angry face blocks. Error bars represent standard error of the mean.

Discussion

Though fearful and angry facial expressions both convey negative valence and elicit equivalent levels of emotional arousal from viewers, their communicative signals vary in terms of the source of threat they imply. The studies presented here tested the hypothesis that fearful and angry facial expressions would differentially modulate memory in accordance with their ecological signal value. Whereas fearful expressions call for diffuse attentional modulation to quickly and nonspecifically gather information to identify the source of threat, angry facial expressions communicate a clear threat signal and draw focused attention to the individual expressing anger. In Experiment 1, fearful faces facilitated memory for information in the immediate temporal context (i.e., words), while angry faces did not. In Experiment 2, we extended these findings by directly comparing the effects of fearful and angry expressions on memory for the expressors' identities as well as for words in the temporal context. Results replicated the initial finding that fearful expressions enhanced memory for contextual word information, and additionally demonstrated that angry facial expressions enhanced memory for the faces themselves. Moreover, false alarms were numerically lower for angry than for fearful face recognition (.13 vs. .15; Table 2), suggesting that participants may have encoded the angry faces more accurately. Given that these expression categories were matched for emotional valence and arousal, we propose that these subtle but significant effects on memory resulted from the fact that fearful and angry facial expressions promote different foci of attention within the environment in accordance with their communicative value.

Fearful and angry facial expressions both predict negative outcomes, but differ in the amount of information they provide regarding the source of threat in the environment. Specifically, angry facial expressions provide certain information about the source of threat, whereas the context-dependence of fearful expressions leaves the source of threat more uncertain. The dimensions of emotional valence and arousal traditionally used to categorize facial expressions do not capture these differences in predictive information conveyed by fearful and angry expressions. Instead, a certainty-uncertainty dimension might prove useful in characterizing such differences, and in making predictions about differential behaviors encouraged by these expressions.

The idea that the fearful expressions of others call for greater contextual monitoring (Whalen, 1998) is consistent with previous studies showing that fearful expressions enhance contextual perceptual discrimination (Phelps, et al., 2006; see also Bocanegra & Zeelenberg, 2009) and contextual target detection (Becker, 2009). Whereas most experiments manipulate context in the spatial domain, comparing centrally versus peripherally presented information, the present work considers context in a temporal domain, that is, information (i.e., words) presented immediately prior or subsequent to the facial expressions. Temporal context is a meaningful manipulation because in the real world, it is likely that individuals look to the environment after encountering an emotional expression in order to disambiguate its source. Therefore, the present data showing that fearful faces also produce better memory for information within a close temporal context are consistent with the idea that fear calls for greater attention to the environment, perhaps to gather clues about the source of threat. Conversely, better memory for the angry faces themselves is consistent with the idea that, relative to fear, these expressions call for greater attention to the individual producing the expression (Whalen, 1998; Whalen et al., 2001) because the source of the threat is clear and localized to that individual.

Prior research has demonstrated differential brain response patterns elicited by viewing fearful and angry faces despite their uniformly negative and arousing properties. In one study, a ventral portion of the amygdala showed responses of equal magnitude to fearful and angry faces when compared to neutral faces, consistent with their equal negative valence. In contrast, a more dorsal portion of the amygdala showed greater responses to fearful faces when directly compared to angry faces, potentially consistent with their uncertain vs. certain information value (Whalen et al., 2001). Given that the amygdala has also been consistently implicated in orchestrating modulatory influences on perception, attention, and memory (Cahill & McGaugh, 1998; Phelps, Ling, & Carrasco, 2006; Vuilleumier, 2005), we predicted that the differences in amygdala response to fearful and angry faces observed by Whalen and colleagues (2001) would manifest as different behavioral responses to these faces.

Though the reported effects were observed in memory performance, the mechanism underlying these effects could potentially lie at numerous stages in the information-processing stream. One interesting possibility is that the information conveyed by angry and fearful faces directs attention toward different aspects of the environment, differentially modulating the focus of attentional networks on expression (i.e., focused) versus contextual (i.e., diffuse) features of the environment.

While the neural systems supporting emotion and attention can act independently, they are also interactive. It has been suggested that one function of emotion is to direct our attention in a way that shapes perception rather than simply imbuing our experiences with meaning (Vuilleumier, 2009). Several lines of evidence suggest that emotional stimuli, and threat stimuli in particular, preferentially capture visual attention. For example, in visual search tasks, subjects are faster at detecting negative targets compared to positive or emotionally neutral targets (Eastwood, Smilek, & Merikle, 2001; Fox, et al., 2000; Fox, et al., 2002; Hansen & Hansen, 1988; Lucas & Vuilleumier, 2008; Ohman, Flykt, & Esteves, 2001; Tipples, Atkinson, & Young, 2002; Williams, Moss, Bradshaw, & Mattingley, 2005). Emotional versions of the Stroop paradigm also support the idea that emotional stimuli capture attention preferentially (Algom, Chajut, & Lev, 2004; Mathews & MacLeod, 1985; Pratto & John, 1991; Watts, McKenna, Sharrock, & Trezise, 1986). Visual search and continuous flash suppression tasks indicate that negative facial expressions of emotion, particularly fearful faces, capture early attention (Ohman, et al., 2001; West, Anderson, & Pratt, 2009; Yang, Zald, & Blake, 2007) and an emotional go-nogo task suggests that this capture of early attention has important influences on top-down control of behavior (Hare, et al., 2008). Additional research suggests that physiological arousal associated with viewing positive and negative emotional stimuli plays a large role in emotional capture of attention (Anderson, 2005; Bradley, et al., 2003; Keil & Ihssen, 2004; Schimmack & Derryberry, 2005; Schupp, et al., 2004), and that in some cases emotional capture of attention can disrupt encoding of neutral stimuli (Angelini, Capozzoli, Lepore, Grossi, & Orsini, 1994; Detterman, 1975; Hurlemann, et al., 2005; Strange, Hurlemann, & Dolan, 2003; Tulving, 1969). 

Here, we extend this body of work to show that although threat-related facial expressions capture early attention (Ohman, et al., 2001; West, et al., 2009; Yang, et al., 2007), different expressions may subsequently modulate attention and memory in ways consistent with their ecological meaning.

The present study garners its strength from the a priori nature of these predictions (Whalen, 1998), but these findings also call for further experimentation. Future behavioral and neuroimaging studies could shed light on whether differential brain activations to fearful vs. angry faces implicate perceptual, attentional, encoding and/or memory consolidation circuitries. Such studies might also test alternative explanations for the present effects, including a) differential habituation rates for fearful vs. angry faces, b) differential attentional blink effects for anger and neutral faces compared to fear, and/or c) differential sensory intake for angry vs. fearful faces (Susskind & Anderson, 2008; Susskind, et al., 2008). Moreover, previous research shows that changeable aspects of the facial expressions themselves, such as eye gaze, can impact behavioral and neural responses to facial expressions (Adams, Gordon, Baird, Ambady, & Kleck, 2003; Adams & Kleck, 2003) and may have important influences on the way that information is encoded or remembered.

Conclusion

The facial expressions of fear and anger, though matched here for intensity of negative valence and arousal ratings, are social cues that communicate divergent messages. While fearful expressions signal that the expressor has detected something threatening in the surrounding environment, an angry face suggests that the expressor himself or herself is the direct source of threat. Here we demonstrated that fearful and angry facial expressions influence memory differentially, in a manner consistent with this characterization. Fearful expressions enhanced memory for contextual information, whereas angry expressions enhanced memory for the faces themselves. We propose that these differential effects on memory result from the fact that the information conveyed by angry and fearful facial expressions differs along a certainty (anger) versus uncertainty (fear) dimension, eliciting focused versus diffuse attention from viewers, respectively.

Acknowledgements

The authors would like to thank George Wolford for statistical consultation and Alison Duffy for assistance in data collection. Supported by MH069315 and MH080716.

References

- Adams RB, Gordon HL, Baird AA, Ambady N, Kleck RE. Effects of gaze on amygdala sensitivity to anger and fear faces. Science. 2003;300(5625):1536. doi: 10.1126/science.1082244.

- Adams RB, Kleck RE. Perceived gaze direction and the processing of facial displays of emotion. Psychol Sci. 2003;14:644–647. doi: 10.1046/j.0956-7976.2003.psci_1479.x.

- Algom D, Chajut E, Lev S. A rational look at the emotional Stroop phenomenon: a generic slowdown, not a Stroop effect. J Exp Psychol Gen. 2004;133(3):323–338. doi: 10.1037/0096-3445.133.3.323.

- Anderson AK. Affective influences on the attentional dynamics supporting awareness. J Exp Psychol Gen. 2005;134(2):258–281. doi: 10.1037/0096-3445.134.2.258.

- Anderson AK, Wais PE, Gabrieli JD. Emotion enhances remembrance of neutral events past. Proc Natl Acad Sci U S A. 2006;103(5):1599–1604. doi: 10.1073/pnas.0506308103.

- Angelini R, Capozzoli F, Lepore P, Grossi D, Orsini A. “Experimental amnesia” induced by emotional items. Percept Mot Skills. 1994;78(1):19–28. doi: 10.2466/pms.1994.78.1.19.

- Becker MW. Panic Search: Fear Produces Efficient Visual Search for Nonthreatening Objects. Psychol Sci. 2009. doi: 10.1111/j.1467-9280.2009.02303.x.

- Bocanegra BR, Zeelenberg R. Emotion Improves and Impairs Early Vision. Psychol Sci. 2009. doi: 10.1111/j.1467-9280.2009.02354.x.

- Bradley MM, Greenwald MK, Petry MC, Lang PJ. Remembering pictures: pleasure and arousal in memory. J Exp Psychol Learn Mem Cogn. 1992;18(2):379–390. doi: 10.1037//0278-7393.18.2.379.

- Bradley MM, Sabatinelli D, Lang PJ, Fitzsimmons JR, King W, Desai P. Activation of the visual cortex in motivated attention. Behav Neurosci. 2003;117(2):369–380. doi: 10.1037/0735-7044.117.2.369.

- Cahill L, McGaugh JL. Mechanisms of emotional arousal and lasting declarative memory. Trends Neurosci. 1998;21(7):294–299. doi: 10.1016/s0166-2236(97)01214-9.

- Christianson SA, Loftus EF. Memory for traumatic events. Applied Cognitive Psychology. 1987;1:225–239.

- Detterman DK. The von Restorff effect and induced amnesia: production by manipulation of sound intensity. J Exp Psychol Hum Learn. 1975;1(5):614–628.

- Dewhurst SA, Parry LA. Emotionality, distinctiveness, and recollective experience. European Journal of Cognitive Psychology. 2000;12(4):541–551.

- Easterbrook JA. The effect of emotion on cue utilization and the organization of behavior. Psychol Rev. 1959;66(3):183–201. doi: 10.1037/h0047707.

- Eastwood JD, Smilek D, Merikle PM. Differential attentional guidance by unattended faces expressing positive and negative emotion. Percept Psychophys. 2001;63(6):1004–1013. doi: 10.3758/bf03194519.

- Ekman P. Should we call it expression or communication? Innovations in Social Science Research. 1997;10:333–344.

- Ekman P, Friesen WV. Pictures of facial affect. Consulting Psychologists Press; Palo Alto: 1976.

- First M, Spitzer M, Williams J, Gibbon M. Structured Clinical Interview for DSM-IV (SCID). American Psychiatric Association; Washington, D.C.: 1995.

- Fox E, Lester V, Russo R, Bowles RJ, Pichler A, Dutton K. Facial Expressions of Emotion: Are Angry Faces Detected More Efficiently? Cogn Emot. 2000;14(1):61–92. doi: 10.1080/026999300378996.

- Fox E, Russo R, Bowles R, Dutton K. Do threatening stimuli draw or hold visual attention in subclinical anxiety? J Exp Psychol Gen. 2001;130(4):681–700.

- Fox E, Russo R, Dutton K. Attentional Bias for Threat: Evidence for Delayed Disengagement from Emotional Faces. Cogn Emot. 2002;16(3):355–379. doi: 10.1080/02699930143000527.

- Hamann S. The human amygdala and memory. In: Whalen PJ, Phelps EA, editors. The Human Amygdala. The Guilford Press; New York, NY: 2009. pp. 177–203.

- Hansen CH, Hansen RD. Finding the face in the crowd: an anger superiority effect. J Pers Soc Psychol. 1988;54(6):917–924. doi: 10.1037//0022-3514.54.6.917.

- Hare TA, Tottenham N, Galvan A, Voss HU, Glover GH, Casey BJ. Biological substrates of emotional reactivity and regulation in adolescence during an emotional go-nogo task. Biol Psychiatry. 2008;63(10):927–934. doi: 10.1016/j.biopsych.2008.03.015.

- Holmes A, Bradley BP, Kragh Nielsen M, Mogg K. Attentional selectivity for emotional faces: Evidence from human electrophysiology. Psychophysiology. 2009;46(1):62–68. doi: 10.1111/j.1469-8986.2008.00750.x.

- Hurlemann R, Hawellek B, Matusch A, Kolsch H, Wollersen H, Madea B, et al. Noradrenergic modulation of emotion-induced forgetting and remembering. J Neurosci. 2005;25(27):6343–6349. doi: 10.1523/JNEUROSCI.0228-05.2005.

- Johnsen BH, Thayer JF, Hugdahl K. Affective judgment of the Ekman faces: A dimensional approach. Journal of Psychophysiology. 1995;9:193–202.

- Keil A, Ihssen N. Identification facilitation for emotionally arousing verbs during the attentional blink. Emotion. 2004;4(1):23–35. doi: 10.1037/1528-3542.4.1.23.

- Lucas N, Vuilleumier P. Effects of emotional and non-emotional cues on visual search in neglect patients: evidence for distinct sources of attentional guidance. Neuropsychologia. 2008;46(5):1401–1414. doi: 10.1016/j.neuropsychologia.2007.12.027.

- Lundqvist D, Flykt A, Ohman A. The Karolinska Directed Emotional Faces (KDEF). CD ROM from the Department of Clinical Neuroscience, Psychology section, Karolinska Institutet; 1998.

- Maratos EJ, Dolan RJ, Morris JS, Henson RN, Rugg MD. Neural activity associated with episodic memory for emotional context. Neuropsychologia. 2001;39(9):910–920. doi: 10.1016/s0028-3932(01)00025-2.

- Mathews A, MacLeod C. Selective processing of threat cues in anxiety states. Behav Res Ther. 1985;23(5):563–569. doi: 10.1016/0005-7967(85)90104-4.

- Matsumoto D, Ekman P. Japanese and Caucasian Facial Expressions of Emotion (JACFEE) and Neutral Faces (JACNeuF). Department of Psychology, San Francisco State University; San Francisco, CA: 1988.

- Matsumoto D, Kasri F, Kooken K. American-Japanese cultural differences in judgments of expression intensity and subjective experience. Cognition and Emotion. 1999;13:201–218.

- McGaugh JL. The amygdala modulates the consolidation of memories of emotionally arousing experiences. Annu Rev Neurosci. 2004;27:1–28. doi: 10.1146/annurev.neuro.27.070203.144157.

- Mogg K, Bradley BP. Orienting of Attention to Threatening Facial Expressions Presented Under Conditions of Restricted Awareness. Cognition and Emotion. 1999;13(6):713–740.

- Ohman A, Flykt A, Esteves F. Emotion drives attention: detecting the snake in the grass. J Exp Psychol Gen. 2001;130(3):466–478. doi: 10.1037/0096-3445.130.3.466.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- Phelps EA, Ling S, Carrasco M. Emotion facilitates perception and potentiates the perceptual benefits of attention. Psychol Sci. 2006;17(4):292–299. doi: 10.1111/j.1467-9280.2006.01701.x.

- Pratto F, John OP. Automatic vigilance: the attention-grabbing power of negative social information. J Pers Soc Psychol. 1991;61(3):380–391. doi: 10.1037//0022-3514.61.3.380.

- Rajaram S. Perceptual effects on remembering: recollective processes in picture recognition memory. J Exp Psychol Learn Mem Cogn. 1996;22(2):365–377. doi: 10.1037//0278-7393.22.2.365.

- Schimmack U, Derryberry D. Attentional interference effects of emotional pictures: threat, negativity, or arousal? Emotion. 2005;5(1):55–66. doi: 10.1037/1528-3542.5.1.55.

- Schupp HT, Ohman A, Junghofer M, Weike AI, Stockburger J, Hamm AO. The facilitated processing of threatening faces: an ERP analysis. Emotion. 2004;4(2):189–200. doi: 10.1037/1528-3542.4.2.189.

- Sergerie K, Lepage M, Armony JL. Influence of emotional expression on memory recognition bias: a functional magnetic resonance imaging study. Biol Psychiatry. 2007;62(10):1126–1133. doi: 10.1016/j.biopsych.2006.12.024.

- Spielberger CD, Gorsuch RL, Lushene RE. STAI - Manual for the state trait anxiety inventory. 3rd ed. Consulting Psychologists Press; Palo Alto, CA: 1988.

- Strange BA, Hurlemann R, Dolan RJ. An emotion-induced retrograde amnesia in humans is amygdala- and beta-adrenergic-dependent. Proc Natl Acad Sci U S A. 2003;100(23):13626–13631. doi: 10.1073/pnas.1635116100.

- Susskind JM, Anderson AK. Facial expression form and function. Commun Integr Biol. 2008;1(2):148–149. doi: 10.4161/cib.1.2.6999.

- Susskind JM, Lee DH, Cusi A, Feiman R, Grabski W, Anderson AK. Expressing fear enhances sensory acquisition. Nat Neurosci. 2008;11(7):843–850. doi: 10.1038/nn.2138.

- Talmi D, Anderson AK, Riggs L, Caplan JB, Moscovitch M. Immediate memory consequences of the effect of emotion on attention to pictures. Learn Mem. 2008;15(3):172–182. doi: 10.1101/lm.722908.

- Tipples J, Atkinson AP, Young AW. The eyebrow frown: a salient social signal. Emotion. 2002;2(3):288–296. doi: 10.1037/1528-3542.2.3.288.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, et al. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Res. 2009;168(3):242–249. doi: 10.1016/j.psychres.2008.05.006.

- Tulving E. Retrograde amnesia in free recall. Science. 1969;164(3875):88–90. doi: 10.1126/science.164.3875.88.

- Tulving E. Episodic memory: From mind to brain. Annual Review of Psychology. 2002;53:1–25. doi: 10.1146/annurev.psych.53.100901.135114.

- Vuilleumier P. Facial expression and selective attention. Current Opinion in Psychiatry. 2002;15:291–300.

- Vuilleumier P. Cognitive science: staring fear in the face. Nature. 2005;433(7021):22–23. doi: 10.1038/433022a.

- Vuilleumier P. The role of the human amygdala in perception and attention. In: Whalen PJ, Phelps EA, editors. The human amygdala. Vol. 1. The Guilford Press; New York, NY: 2009. pp. 220–249.

- Watts FN, McKenna FP, Sharrock R, Trezise L. Colour naming of phobia-related words. Br J Psychol. 1986;77(Pt 1):97–108. doi: 10.1111/j.2044-8295.1986.tb01985.x.

- West GL, Anderson AA, Pratt J. Motivationally significant stimuli show visual prior entry: evidence for attentional capture. J Exp Psychol Hum Percept Perform. 2009;35(4):1032–1042. doi: 10.1037/a0014493.

- Whalen PJ. Fear, vigilance, and ambiguity: initial neuroimaging studies of the human amygdala. Current Directions in Psychological Science. 1998;7(6):177–188.

- Whalen PJ, Shin LM, McInerney SC, Fischer H, Wright CI, Rauch SL. A functional MRI study of human amygdala responses to facial expressions of fear versus anger. Emotion. 2001;1(1):70–83. doi: 10.1037/1528-3542.1.1.70.

- Williams M, Moss S, Bradshaw J, Mattingley J. Look at me, I'm smiling: Visual Search for Threatening and Nonthreatening Facial Expressions. Visual Cognition. 2005;12(1):29–50.

- Yang E, Zald DH, Blake R. Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion. 2007;7(4):882–886. doi: 10.1037/1528-3542.7.4.882.