Abstract

Gestures are common when people convey spatial information, for example, when they give directions or describe motion in space. Here we examine the gestures speakers produce when they explain how they solved mental rotation problems (Shepard & Metzler, 1971). We asked whether speakers gesture differently when describing their solutions as a function of their spatial abilities. We found that low-spatial individuals (as assessed by a standard paper-and-pencil measure) gestured more than high-spatial individuals when explaining their solutions. Although this finding may seem surprising, finer-grained analyses showed that low-spatial participants used gestures more often than high-spatial participants to convey “static only” information but less often to convey dynamic information. Furthermore, the groups differed in the types of gestures used to convey static information: high-spatial individuals were more likely than low-spatial individuals to use gestures that captured the internal structure of the block forms. Our gesture findings thus suggest that encoding block structure may be as important as rotating the blocks in mental spatial transformation.

Keywords: Mental rotation, individual differences, gesture

The gestures speakers spontaneously produce when they talk offer a window onto their unspoken thoughts (Goldin-Meadow, 2003). Gestures, taken together with speech, can reveal people's mental representations of a task, offering a good method for examining their problem-solving strategies on a wide range of tasks, for example, mathematical equivalence (Perry, Church, & Goldin-Meadow, 1988), conservation of quantity (Church & Goldin-Meadow, 1986; Ping & Goldin-Meadow, 2010), the Tower of Hanoi (Garber & Goldin-Meadow, 2002; Beilock & Goldin-Meadow, 2010), block placement in a puzzle (Emmorey & Casey, 2001), mental rotation (Chu & Kita, 2008, 2011; Hostetter, Alibali, & Bartholomew, 2011; Ehrlich, Levine & Goldin-Meadow, 2006), mechanical reasoning (Hegarty, Mayer, Kriz, & Keehner, 2005), and rotating gears (Alibali, Spencer, Knox, & Kita, 2011; Perry & Elder, 1997; Schwartz & Black, 1996, 1999).

Gesture occurs particularly often when speakers talk about space (Feyereisen & Havard, 1999). For example, Lavergne and Kimura (1987) found that adults used twice as many gestures when asked to talk about space (e.g., describe a route between two points) as when asked to talk about other topics (e.g., describe a story in a favorite book, or a school-day routine). Similarly, speakers produce more gestures when their speech contains many spatial prepositions than when it does not (Alibali, Heath, & Myers, 2001). In addition, when speakers' gestures are restricted, their speech is slower and more dysfluent when they are asked to convey spatial information than when they are asked to convey non-spatial information (Rauscher, Krauss, & Chen, 1996).

Although many studies have explored gesture's role in spatial thinking (Alibali, Spencer, Knox, & Kita, 2011; Chu & Kita, 2008, 2011; Hegarty, Mayer, Kriz, & Keehner, 2005; Hostetter, Alibali, & Bartholomew, 2011), few have examined individual differences in the rate and types of gestures speakers use when describing spatial problems. One exception is Hostetter and Alibali (2007), who found that individuals with low verbal skills and high-spatial visualization skills gestured more than individuals with high verbal skills and low-spatial skills (see also Vanetti & Allen, 1988). Good spatial ability and/or poor verbal ability may lead problem-solvers to approach problems spatially, which may, in turn, be reflected in the speakers' high gesture rate. However, gesturing could also encourage spatial problem solving and lead to improved spatial skills (Alibali, 2005; Alibali, Spencer, Knox, & Kita, 2011).

Mental rotation is defined as the ability to imagine rotating 2-D or 3-D representations of objects (Shepard & Metzler, 1971) and is a key spatial skill that has been widely investigated for group-level patterns (e.g., Cooper, 1976; Frick, Daum, Walser, & Mast, 2009; Just, Carpenter, Maguire, Diwadkar, & McMains, 2001; Kosslyn, Margolis, Barrett, Goldknopf, & Daly, 1990) and for individual differences (e.g., Casey, Nuttall, Pezaris, & Benbow, 1995; Collins & Kimura, 1997; Jansen-Osmann & Heil, 2007; Peters, Lehmann, Takaira, Takeuchi, & Jordan, 2006; Shepard & Cooper, 1982). A great deal of research has focused on the link between motor processes (e.g., hand movements on a joystick, knob, or stick that rotates an object) and mentally rotating the object (e.g., Schwartz & Holton, 2000; Sekiyama, 1982; Wexler, Kosslyn, & Berthoz, 1998; Wohlschlager & Wohlschlager, 1998). For example, Wexler and colleagues (1998) asked adults to mentally rotate 2-D geometric figures while holding a joystick in their right hand. The participants were instructed to turn the joystick clockwise or counterclockwise as they solved each problem. Error rates were lower and reaction times faster when the direction of the hand movement and the direction in which participants mentally rotated the figure were congruent (see also Wohlschlager & Wohlschlager, 1998).

Gesture is an action and, as such, is a natural medium for reflecting mental representations involving action (Goldin-Meadow & Beilock, 2010). For example, Hostetter, Alibali and Bartholomew (2011) found that adults produce more co-speech gestures when they imagine a rotated arrow in motion than when they imagine the arrow in its static end state. Chu and Kita (2008) explored gestures produced without speech (i.e., co-thought gestures) in a mental rotation study, and found that participants produced gestures representing a hand manipulating an object as it moved (e.g., the index finger and thumb held as if holding and moving the object) early in the experiment. However, over the course of the experiment, the type, frequency, and location of the gestures changed; participants began producing gestures that represented only the movement of the object (e.g., a flat hand rotating as the object would) and not the agent of the action. Chu and Kita argue that the change in gestures over time reflects a change in motor strategy, from one involving the agent's hand moving the object to one involving only the moving object (i.e., a deagentivized motor strategy). Analysis of the verbal descriptions produced during the mental rotation task supported this hypothesis – people who produced many agentivized gestures also produced sentences with active transitive verbs and agent-explicit descriptions; in contrast, people who produced deagentivized gestures or who did not gesture at all produced sentences with intransitive verbs that omitted references to the agent.

The aim of our study was to examine whether individuals who differ in their ability to perform a mental transformation task also differ in the gestures they produce when describing how they solved the mental rotation task. Our study thus differs from that of Chu and Kita (2008), which focused on how gesturing changes over the course of a mental transformation experiment independent of the gesturer's spatial abilities. Our goal was to explore the gestures people produce as a function of their spatial abilities.

A mental rotation task involves four main phases: visually encoding the objects, rotating one object, comparing the two objects, and responding (Cooper & Shepard, 1973; Wright, Thompson, Ganis, Newcombe, & Kosslyn, 2008; see also Xu & Franconeri, in press). A recent training study by Wright and colleagues (2008) on spatial transformation skills showed that adults were able to transfer skills learned on a mental rotation task and a mental paper-folding task to novel stimuli. Crucially, the training effects were evident only for the reaction time intercepts and not for the slopes, which reflect spatial transformation processes. Wright et al. suggest that training has its greatest impact on the initial phase of the mental transformation process – encoding object structure (which is reflected in the intercepts) – and not on later phases – rotating the objects, or comparing the objects. Given these findings, individuals might be expected to differ not only in rotating or comparing objects, but also in encoding object structure. We might then expect to find differences between low- and high-spatial individuals in the initial phase of the mental transformation task (i.e., in object encoding), differences that might be reflected in their gestures.

If, as the findings from the Wright et al. (2008) study suggest, the way objects are encoded is a key feature that differentiates low- and high-spatial ability individuals, high-spatial ability individuals should represent static aspects of objects (specifically, those involving the parts of the blocks relevant to the later comparison) better than low-spatial ability individuals. If this difference is reflected in gesture, we might expect the high-spatial group to use more object-property gestures reflecting the detailed structure of the blocks used in the Shepard and Metzler (1971) task. In contrast, if high- and low-spatial ability groups differ in the second phase (rotating the object) and those differences are reflected in gesture, high-spatial ability individuals might use more gestures reflecting the dynamic aspects of mental transformation involved in block rotation than low-spatial ability individuals.

Method

Participants

Thirty-three monolingual English-speaking adults participated in this study for course credit. All participants had normal or corrected-to-normal vision and ranged in age from 18 to 25 years. Five participants produced no gestures; their data were therefore excluded from the analyses.1

Stimuli

Mental Rotations Test – A (MRT-A)

We used the Mental Rotations Test form-A (Peters, Laeng, Latham, Jackson, Zaiyouna, & Richardson, 1995), redrawn from the Vandenberg and Kuse Mental Rotation Test (Vandenberg & Kuse, 1978) to assess the spatial ability of individuals. This paper and pencil test contains figures taken from the Shepard and Metzler (1971) task. Each test item contains 5 figures: one target object and four test objects. Two of the test objects represent figures that are rotated versions of the target object. Participants need to find and mark the two figures that match the target figure. Answers were considered correct only if both figures were correctly identified. There were a total of 24 test items.
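The all-or-nothing scoring rule above can be made concrete with a short Python sketch. Two details are assumptions rather than statements from the test manual: that scores are expressed as the percentage of the 24 items answered correctly (inferred from the 0 to 87.5 range reported in the Results, since 21/24 × 100 = 87.5), and that marking any figure besides the two correct matches makes the item wrong. The function name and answer-key format are hypothetical.

```python
def score_mrt_a(responses, answer_key):
    """Hypothetical MRT-A scorer.

    responses, answer_key: one collection of figure labels per item.
    An item counts as correct only if the chosen figures are exactly the
    two correct matches (assumption: extra marks make the item wrong).
    Returns the percentage of items correct.
    """
    if len(responses) != len(answer_key):
        raise ValueError("one response is required per item")
    correct = sum(set(chosen) == set(key)
                  for chosen, key in zip(responses, answer_key))
    return 100.0 * correct / len(answer_key)

# Usage with a hypothetical 24-item key: 21 fully correct items,
# then one missing match, one wrong match, and one extra mark.
KEY = [("A", "C")] * 24
RESPONSES = [("A", "C")] * 21 + [("A",), ("A", "B"), ("A", "B", "C")]
print(score_mrt_a(RESPONSES, KEY))
```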

Mental Rotation Block Test

The objects used in this study were based on the stimuli in Shepard and Metzler (1971) and were created by gluing together 2.5 cm wooden cubes (visual angle 1.6°). The visual angle of the individual parts of the figures ranged from 3.2° to 6.4°, depending on the number of blocks (2, 3, or 4) each part contained. Ten different objects, their corresponding isomorphic matches (mirror reflections), and their identical matches were constructed (see Figure 1 for sample objects). On each trial, a pair of solid wooden objects was presented. Each participant received 10 same and 10 different pairs of objects. For both same and different trials, one of the objects was rotated 0°, 45°, 90°, 135°, or 180° around the y-axis; each rotation angle was thus presented twice in same pairs and twice in different pairs, yielding 20 trials in total.
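The factorial structure of the trial list (2 pair types × 5 angles × 2 repetitions = 20 trials, shown in a randomized order) can be sketched in Python as follows. Which specific objects appear on each trial is not modeled, and whether the order was reshuffled for every participant is not stated in the text, so the `seed` parameter and the function name are hypothetical.

```python
import itertools
import random

ANGLES_DEG = (0, 45, 90, 135, 180)
PAIR_TYPES = ("same", "different")
REPETITIONS = 2  # each angle appears twice per pair type

def build_trials(seed=None):
    """Return a shuffled list of 20 trial specifications (hypothetical helper)."""
    trials = [
        {"pair": pair, "angle": angle}
        for pair, angle, _ in itertools.product(PAIR_TYPES, ANGLES_DEG,
                                                range(REPETITIONS))
    ]
    # A seeded generator makes a "preset" randomized order reproducible.
    random.Random(seed).shuffle(trials)
    return trials

for trial in build_trials(seed=1)[:3]:
    print(trial)
```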

Figure 1.

Sample stimuli from the Mental Rotation Block Test

The objects were presented on a 36 cm × 15 cm × 28 cm platform. The platform subtended 22.6° of visual angle in width and 9.5° of visual angle in height. A 49.5 cm × 51 cm × 36 cm screen was constructed to block the presentation platform from view between trials.
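The visual angles reported in this section follow from the standard relation θ = 2·atan(size / (2·distance)). The viewing distance itself is not reported, so the Python sketch below infers it, as an assumption, from the 2.5 cm cube subtending 1.6°. That gives roughly 89.5 cm, which reproduces the 3.2° and 6.4° part sizes and comes within about 0.15° of the platform values (treating the 15 cm dimension as the platform's height is also an assumption).

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by an object of size_cm at distance_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))

def distance_for_angle(size_cm: float, angle_deg: float) -> float:
    """Viewing distance (cm) at which an object of size_cm subtends angle_deg."""
    return size_cm / (2.0 * math.tan(math.radians(angle_deg) / 2.0))

# Assumption: infer the unreported viewing distance from the cube (2.5 cm at 1.6 deg).
distance = distance_for_angle(2.5, 1.6)
print(f"inferred viewing distance: {distance:.1f} cm")

for label, size in [("2-block part", 5.0), ("4-block part", 10.0),
                    ("platform width", 36.0), ("platform height", 15.0)]:
    print(f"{label}: {visual_angle_deg(size, distance):.1f} deg")
```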

Procedure

Each participant was tested individually in a quiet room. After completing the consent forms and the MRT-A, participants were seated at a table where the objects were presented on a platform, which was approximately at the participant's eye level. The experimenter stood behind the platform across from the participant. Before each trial, a screen blocked the presentation from the participant's view. During this interval, the experimenter placed two objects on the platform according to a preset trial order. Objects were positioned using previously prepared cardboard templates, which were placed on top of the platform and indicated the exact position of each object for that trial. The experimenter then removed the screen to reveal the pair of objects. Each object pair was visible for ten seconds. The participant's task was to determine whether the objects were the same or not. After ten seconds, with the objects still visible, the experimenter asked the participant, “Can you explain to me how you solved this problem?” No feedback was given to the participants regarding the accuracy of their responses. The same procedure was repeated for 20 trials, which were presented in a randomized order.

Coding

Speech

Native English speakers transcribed all speech verbatim for participants' responses to each trial. Speech for each trial was then coded for the type of information (static vs. dynamic) participants used when describing their solution strategy. Static components involved object (e.g., blocks, arms) and location (e.g., right side) information; dynamic components involved information about the movement of the objects, either rotation (e.g., rotate, turn) or direction (e.g., from left to right). Table 1 shows samples of speech coding.

Table 1.

Sample coding of two individuals' speech and gesture (one individual from each spatial group). Each sentence was transcribed and coded for the type of information (static vs. dynamic) it conveyed. The location of each gesture is marked by a number in the speech transcript, and each gesture was classified according to type (pointing vs. iconic), referent (static vs. dynamic), and its relationship to speech (same or different information).

| Group | Speech | Speech Coding | Gesture | Gesture Coding | Gesture-Speech Match |
|---|---|---|---|---|---|
| Low-spatial Ability | I just moved [1 the one on the right] [2 a little bit] to [3 more downward] and they look the same | Static: the one on the right; Dynamic: a little bit to more downward | | | |
| High-spatial Ability | Flipped [1 the left one] again, [2 rotated it] about 45 degrees, [3] put them together in my head and found that they're the same | Static: the left one; Dynamic: rotated it about 45 degrees | | | |

Gesture

Each participant's gestures were transcribed for each trial. A change in hand shape or in hand motion signaled the end of a gesture. Two types of gestures were coded: (1) pointing gestures, which involved extending the index finger to indicate an object or location (e.g., using the index finger to point to the right block); and (2) iconic gestures, which indicated the shape of an object, its placement in space, and/or its motion (e.g., moving the index finger in a clockwise circle).

Gestures were then classified according to the component of the mental rotation task captured by the hands. Static gestures referred to objects, either by pointing to one of the blocks or by illustrating a property of a block (e.g., making a curved hand shape with the palm facing up); dynamic gestures referred to the rotation (e.g., a circular movement) or direction (e.g., a movement along one axis, from left to right or back and forth) of the objects' movement. Although static gestures could have been used to represent the endpoint of a rotation, they were not used for this purpose in our sample. Static iconic gestures were further categorized according to whether they referred to the whole object or to a piece of the object. We coded a static gesture as referring to the whole object when all four fingers of the hand were used (e.g., four fingers curved to represent the entire curved block), and as referring to a piece of the object when a subset of fingers was used (e.g., an L shape, or the index finger and thumb held together to refer to the top part of the object). Table 1 presents two examples of participants' gestures.
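The coding was done by hand, but the decision rules described above can be summarized as a small Python function, which may help when checking how a given gesture should be coded. The record fields (`points_at_target`, `motion`, `fingers_in_shape`) are hypothetical summaries of what a coder observes, and treating any gesture that depicts movement as a dynamic iconic gesture is a simplifying assumption based on the examples in the text.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class GestureObservation:
    """Hypothetical summary of one hand-coded gesture (field names are illustrative)."""
    points_at_target: bool          # index finger extended toward an object or location
    motion: Optional[str] = None    # None, "rotation", "direction", or "both"
    fingers_in_shape: int = 0       # fingers forming an iconic hand shape (0-4)

def code_gesture(g: GestureObservation) -> dict:
    """Apply the static/dynamic and whole/piece rules to one gesture."""
    if g.motion is not None:
        # Depicting the objects' movement (rotation and/or direction): dynamic iconic.
        return {"type": "iconic", "referent": "dynamic", "detail": g.motion}
    if g.points_at_target:
        # Pointing at one of the blocks: static pointing gesture.
        return {"type": "pointing", "referent": "static", "detail": None}
    # Static iconic gesture: all four fingers = whole object; a subset = a piece.
    scope = "whole object" if g.fingers_in_shape == 4 else "piece of object"
    return {"type": "iconic", "referent": "static", "detail": scope}

print(code_gesture(GestureObservation(points_at_target=False, fingers_in_shape=2)))
```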

Reliability

The first author coded all speech and gesture. To test the reliability of the coding system, a second coder coded a randomly chosen 14% of participants' trials. Agreement between coders was 96% (κ = .93, n = 93 trials) for speech and 88% (κ = .82, n = 65 trials) for gesture.
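Percent agreement and Cohen's kappa differ in that kappa discounts the agreement two coders would reach by chance given how often each uses each category. A minimal Python sketch of both statistics follows; the label lists in the usage example are hypothetical and are not this study's reliability data.

```python
from collections import Counter

def percent_agreement(coder_a, coder_b):
    """Percentage of items on which two coders assigned the same category."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two non-empty label lists of equal length")
    matches = sum(x == y for x, y in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)

def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(coder_a)
    p_observed = percent_agreement(coder_a, coder_b) / 100.0
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    categories = counts_a.keys() | counts_b.keys()
    # Chance agreement: product of the two coders' marginal proportions, summed.
    p_expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1.0 - p_expected)

# Hypothetical example: 3 of 4 trials agree, but chance agreement is 0.5.
a = ["static", "static", "dynamic", "dynamic"]
b = ["static", "dynamic", "dynamic", "dynamic"]
print(percent_agreement(a, b), cohens_kappa(a, b))
```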

Results

We divided participants into low-scoring and high-scoring spatial groups using a median split on the MRT-A scores. The average MRT-A score for the whole group was 37.64 (n = 28, SD = 18.42) with a median of 35.5 (range: 0 – 87.5). The mean MRT-A scores were 23.49 (SD = 9.16) and 51.79 (SD = 13.75) for low- and high-scoring groups, respectively.2
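A median split of this kind can be sketched in a few lines of Python. How scores exactly at the median were assigned is not stated; the sketch assumes they go to the high group. With 28 participants, an even split into two groups of 14 (implied by the 280 trials per group reported below) requires that the two middle scores differ. The participant IDs and scores in the usage example are hypothetical.

```python
from statistics import median

def median_split(scores):
    """Split {participant_id: score} into low and high groups at the median.

    Assumption: scores equal to the median are placed in the high group.
    Returns (median, low_group, high_group).
    """
    m = median(scores.values())
    low = {pid: s for pid, s in scores.items() if s < m}
    high = {pid: s for pid, s in scores.items() if s >= m}
    return m, low, high

m, low, high = median_split({"p1": 10.0, "p2": 20.0, "p3": 30.0, "p4": 40.0})
print(m, sorted(low), sorted(high))
```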

Do the two spatial groups differ in their spoken explanations?

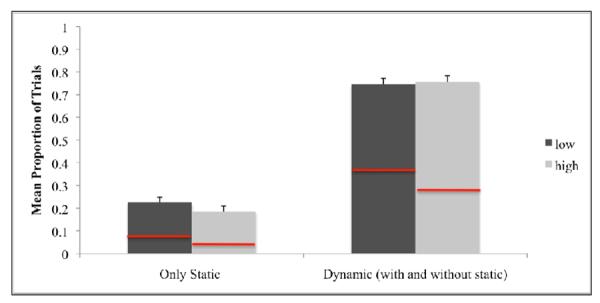

First, we analyzed the type of information low- and high-spatial groups produced in their speech. We found that whenever participants mentioned dynamic information in a trial, they also tended to mention static information (fewer than 1% of trials contained dynamic only information without any static information), although the converse was not the case. As a result, we calculated the mean proportion of static only information in speech and the mean proportion of dynamic information (with or without static information) in speech for each participant. There were no differences in how often the two groups conveyed static alone or dynamic with or without static information in speech, F(1, 26) = .198, p > .05. Figure 2 presents the mean proportion of trials produced by each spatial group, categorized by whether the speech conveyed static only information or dynamic information (with or without static information).
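Because dynamic information almost never appeared without static information, each trial falls into one of two analysed categories: static only, or dynamic (with or without static). The Python sketch below shows that classification and the per-participant proportions. It assumes the denominator is all of a participant's trials, which the text does not state explicitly; the function names and the example data are hypothetical.

```python
from collections import Counter

def categorize_trial(mentions_static: bool, mentions_dynamic: bool) -> str:
    """Classify one trial's speech by the static-only vs. dynamic scheme."""
    if mentions_dynamic:
        return "dynamic"          # dynamic information, with or without static
    if mentions_static:
        return "static only"
    return "neither"

def category_proportions(trials):
    """trials: (mentions_static, mentions_dynamic) pairs for one participant.

    Returns the proportion of that participant's trials in each analysed
    category (assumption: denominator = all of the participant's trials).
    """
    counts = Counter(categorize_trial(s, d) for s, d in trials)
    n = len(trials)
    return {cat: counts[cat] / n for cat in ("static only", "dynamic")}

# Hypothetical participant: 12 static-only trials, 7 static + dynamic,
# and 1 rare dynamic-only trial.
example = [(True, False)] * 12 + [(True, True)] * 7 + [(False, True)]
print(category_proportions(example))
```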

Figure 2.

The proportion of trials on which low-spatial (dark grey bars) and high-spatial (light grey bars) participants produced only static information or dynamic (with or without static) information in speech. The portion below the red line indicates the proportion of trials that were accompanied by a gesture.

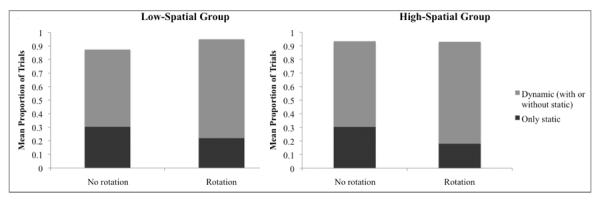

Second, we analyzed whether rotation angle affected the type of information conveyed in speech. Preliminary analyses indicated no differences in the type of speech used across the angles that involved rotation (45°, 90°, 135°, and 180°), F(3, 78) = 2.24, p > .05, so we collapsed speech across these rotation angles. We then compared how often speech contained static-only information vs. dynamic information (with or without static information) for no rotation (0°) vs. rotation (all non-zero angles) in the two groups. Results showed a main effect of angle, F(1, 26) = 10.84, p < .01, but no interaction between angle and spatial group, p > .05 (see Figure 3): individuals in both spatial groups produced more dynamic language when there was some rotation than when there was no rotation.

Figure 3.

The proportion of trials on which low-spatial (left panel) and high-spatial (right panel) participants produced only static information or dynamic (with or without static) information in speech as a function of rotation angle (i.e., no rotation vs. rotation).

Do the two spatial groups differ in their co-speech gestures?

Overall, participants produced gestures on 239 of the 560 trials (42.7%; M = 8.53 trials per participant, SD = 6.23, range: 1–20 trials). Thus, participants gestured on fewer than half the trials. The low-spatial group produced gestures on more trials than the high-spatial group: the low scorers gestured on 139 of their 280 trials (50%), compared to 100 of 280 trials (36%) for the high scorers, t(558) = 4.71, p < .001.

On 215 of the 239 trials containing gesture (90%), participants conveyed the same information in gesture as in speech; of the remaining 24 trials, 14 were produced by the high scorers and 10 by the low scorers. On these 24 trials, gesture added specificity to the static or dynamic information participants presented in speech. For example, one participant conveyed the direction of rotation (counterclockwise or clockwise) in gesture while indicating only the movement in speech (“I turned the object”); another conveyed a specific part of the block with her hand shape (e.g., a “3” handshape representing the top three pieces of the block) while referring to the whole object in speech (“The right block”).

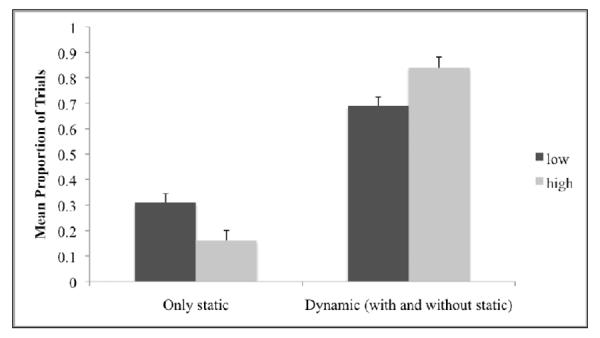

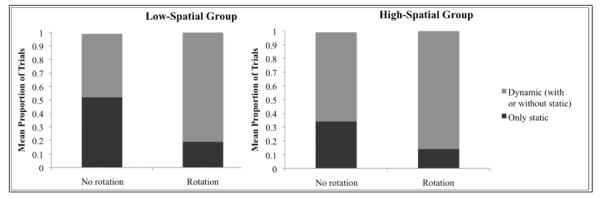

As in our speech analyses, we examined how often the low- and high-spatial groups conveyed static-only information and dynamic information (with or without static information) in gesture. Figure 4 displays the mean proportion of gesture trials produced by each spatial group, classified according to the information conveyed in gesture. The low-scoring group produced a higher proportion of static-only information in gesture (e.g., pointing to the blocks when talking about blocks), and consequently a lower proportion of dynamic information (e.g., producing a turning gesture when talking about turning a block), than the high-scoring group, F(1, 26) = 4.10, p < .05, ηp² = .14. In addition, dynamic gestures were produced more frequently when there was an angle of rotation than when there was no rotation, F(1, 18) = 7.91, p < .05, ηp² = .31, and this effect did not differ between the two groups (see Figure 5).
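The partial eta-squared values reported above can be recovered from the F ratios and their degrees of freedom alone, using the standard identity ηp² = F·df_effect / (F·df_effect + df_error). The short Python check below applies it to the two reported tests; both results round to the reported .14 and .31.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return (f * df_effect) / (f * df_effect + df_error)

# The two F tests reported for the gesture analyses.
for f, df1, df2 in [(4.10, 1, 26), (7.91, 1, 18)]:
    print(f"F({df1}, {df2}) = {f}: partial eta^2 = {partial_eta_squared(f, df1, df2):.2f}")
```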

Figure 4.

The proportion of trials on which low-spatial (dark grey bars) and high-spatial (light grey bars) participants produced only static or dynamic (with or without static) information in gesture.

Figure 5.

The proportion of trials on which low-spatial (left panel) and high-spatial (right panel) scorers produced only static or dynamic (with or without static) information in gesture as a function of rotation angle (i.e., no rotation vs. rotation).

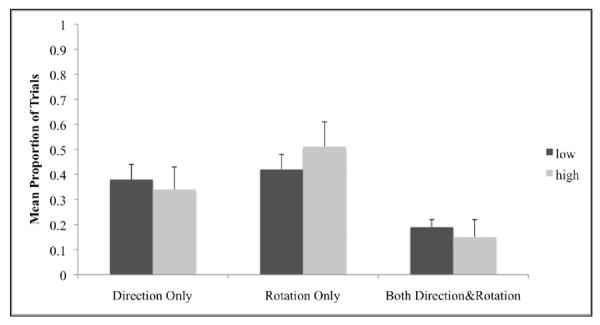

Having found a difference in how much static vs. dynamic information the two spatial groups conveyed in gesture, we then analyzed the types of dynamic gestures (direction, rotation, or both) and static gestures (pointing, iconic, or both) produced by individuals in each group. For each speech + gesture trial conveying dynamic information, we determined whether low- and high-spatial individuals used direction (e.g., a left-to-right movement of the hand), rotation (e.g., a circular movement of the hand), or both in gesture. There were no reliable differences between the groups in the mean proportion of direction and rotation gestures, ts < .72, ps > .05 (see Figure 6). Not surprisingly, given that participants rarely conveyed information in gesture that differed from the accompanying speech, the types of dynamic information in speech paralleled the gesture patterns: the groups did not differ in the mean proportion of direction or rotation information conveyed in speech, ts < 1.25, ps > .05.

Figure 6.

The proportion of trials containing dynamic gestures, categorized according to the type of dynamic information (direction only, rotation only, both direction & rotation), conveyed by participants in the low (dark grey bars) and high (light grey bars) spatial ability groups.

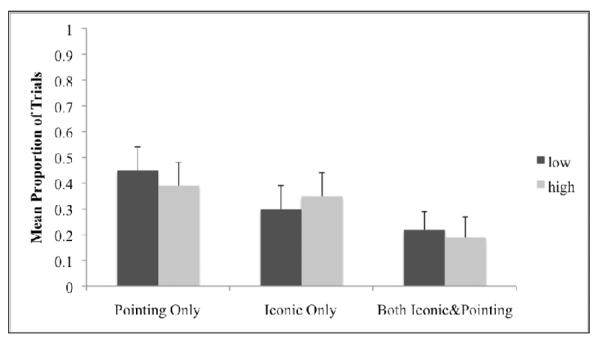

For each speech + gesture trial conveying static information, we calculated whether low and high-spatial individuals used pointing gestures, iconic gestures, or both pointing and iconic gestures. There were no reliable differences in the mean proportion of iconic and pointing gestures the groups produced, ts < .72, ps > .05 (Figure 7).

Figure 7.

The proportion of trials containing static gestures, categorized according to the type of static information (pointing only, iconic only, both iconic & pointing), conveyed by participants in the low (dark grey bars) and high (light grey bars) spatial ability groups.

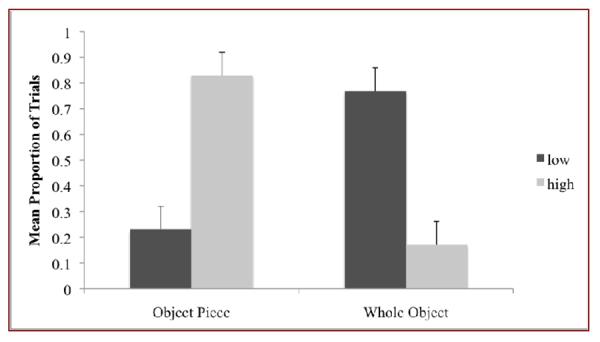

To examine whether the groups differed in the types of object gestures they produced, we calculated the proportion of static iconic gestures that each participant used to represent whole objects vs. pieces of the object and then, after subjecting the proportions to an arcsine transformation, used a t-test to compare the low- and high-spatial groups. The high-scoring group produced proportionally more gestures highlighting object parts (e.g., an L-handshape representing the arms of the blocks), and consequently fewer whole-object gestures, than the low-scoring group, t(23) = 4.24, p < .01. As shown in Figure 8, the low-scoring group generally gestured about the whole block (e.g., a curved handshape representing the left object); only 22% (15 of 67) of their iconic gestures specified a part of an object or a spatial relation within it. In contrast, 83% (35 of 42) of the high-spatial group's static gestures referred to a part or relation in an object. Here again we found a parallel pattern in speech: the high-scoring group used a greater proportion of words conveying static information to refer to specific object parts, and consequently a smaller proportion to refer to the whole object, than the low-scoring group, t(23) = 3.87, p < .01.
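The arcsine transformation stabilizes the variance of proportions near 0 and 1 before a t-test. The Python sketch below uses the common form 2·asin(√p) and a pooled-variance (Student) independent-samples t-test; the text does not say which arcsine variant or t-test form was used, so both are assumptions (rescaling every value by the factor 2 leaves t unchanged). The per-participant proportions are hypothetical, not this study's data.

```python
import math
from statistics import mean, variance

def arcsine_transform(p: float) -> float:
    """Variance-stabilizing transform for a proportion p in [0, 1]: 2*asin(sqrt(p))."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a proportion between 0 and 1")
    return 2.0 * math.asin(math.sqrt(p))

def pooled_t(x, y):
    """Independent-samples Student t with pooled variance; returns (t, df)."""
    nx, ny = len(x), len(y)
    df = nx + ny - 2
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / df
    t = (mean(x) - mean(y)) / math.sqrt(pooled_var * (1 / nx + 1 / ny))
    return t, df

# Hypothetical per-participant proportions of part-of-object iconic gestures.
HIGH_GROUP = [0.9, 0.75, 1.0, 0.8, 0.85]
LOW_GROUP = [0.2, 0.35, 0.1, 0.25, 0.3]
t, df = pooled_t([arcsine_transform(p) for p in HIGH_GROUP],
                 [arcsine_transform(p) for p in LOW_GROUP])
print(f"t({df}) = {t:.2f}")
```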

Figure 8.

The proportion of trials containing iconic gestures, categorized according to whether the gesture represents the whole object or a piece of the object, produced by participants in the low (dark grey bars) and high (light grey bars) spatial ability groups.

Discussion

This study investigated differences in how individuals describe the solution strategies they used on a mental rotation task. More specifically, we asked whether the information conveyed in gesture varied as a function of the spatial abilities of the speaker.

Speakers of low- vs. high-spatial ability differed in the number and types of gestures they produced on the task. On average, low-spatial ability individuals actually produced more gestures than high-spatial ability individuals. However, the low-spatial participants frequently used gestures simply to convey static information and gestured less often about dynamic information than the high-spatial participants. Furthermore, the low-spatial participants' static gestures were often iconic gestures that highlighted the entire structure of the block (e.g., a curved handshape gesture), whereas the high-spatial participants' iconic gestures emphasized the internal structure of the blocks (e.g., an L shaped gesture). These findings thus provide support for the two hypotheses with which we began our study: (1) Low- vs. high-spatial individuals differed in the types of static gestures they used to represent objects, thus reflecting a difference between groups in the first phase of the mental rotation task (visually encoding properties of the objects); (2) Low- vs. high-spatial individuals differed in the proportion of dynamic gestures they used, thus reflecting a difference between groups in the second phase of the mental rotation task (dynamically moving the objects).

Wright and colleagues (2008) demonstrated that training on a mental rotation task had a more substantial impact on the initial process of mental transformation (encoding the object structure) than on later processes (i.e., rotating or comparing the objects). Our results align nicely with the idea that encoding the object structure is crucial to successful performance on a mental transformation task. Low- vs. high-spatial individuals differed in the types of object property static gestures they used, but not in the types of dynamic gestures, even though, overall, high-spatial individuals produced more dynamic gestures. The specific-feature object gestures that the high-spatial group preferentially used may reflect their processing of important spatial information in preparation for transformation; encoding the block structure may be crucial to rotating the blocks in an informative way (Amorim, Isableu, & Jarraya, 2006; Hyun & Luck, 2007). In other words, referring to the bottom three pieces of the whole block in gesture, and thus representing the detailed structure of the object, might make it easier to mentally rotate that object. The fact that the low-spatial individuals preferentially use whole-object gestures suggests that their representation of the object's structure may not contain the spatial detail necessary to successfully perform the transformation task. We speculate that the improvement in Wright's study may have been in low-spatial individuals who, as a result of training, began encoding the internal structure of the forms during the first phase of the task.

A recent developmental study by Ehrlich and colleagues (2006) on spatial transformations is consistent with our findings. Five-year-olds were tested on a mental transformation task that included items requiring rotation: the children were shown a two-dimensional shape divided into two pieces and asked to choose, out of four possibilities, the whole shape that the two pieces would make if they were moved together. The children were also asked to describe how they solved the problem, and most gestured spontaneously during their descriptions. Children who performed better on this spatial task typically represented the movement of the pieces in dynamic gesture. Thus, as with the high-spatial adults in our study, who were more likely to gesture about dynamic information, encoding movement in gesture (phase two of a mental transformation task) was associated with better performance in young children. In fact, requiring a different group of children to produce these dynamic gestures led to improvement on the same mental transformation task, although letting them observe an adult producing dynamic gestures did not (Goldin-Meadow et al., in press). This developmental pattern also aligns with findings in adults, who improved on a mental transformation task when instructed to gesture (Chu & Kita, 2011).

Our results do, however, appear to differ from those of Hostetter and Alibali (2007), who found that individuals with high spatial visualization skills gestured more than individuals with low spatial skills. The two studies are difficult to compare directly, for two reasons. First, the coding schemes differed: Hostetter and Alibali focused exclusively on the number of representational gestures their participants produced, whereas we analyzed the types as well as the numbers of gestures. Second, Hostetter and Alibali (2007) assessed spatial skills in relation to verbal fluency and found that individuals with high spatial skills and low verbal skills gestured more than individuals with low spatial skills and high verbal skills; we assessed only spatial abilities.

People's actions can influence how they think (e.g., Barsalou, 1999; Beilock et al., 2008; Wilson, 2002), and gesture, which is a type of action, is no exception. Gesturing has been found to have a causal effect on solving spatial problems by adding action information to mental representations (Beilock & Goldin-Meadow, 2010; Goldin-Meadow & Beilock, 2010), and to improve spatial reasoning by aiding spatial working memory (e.g., Chu & Kita, 2011). For example, both children and adults remember more verbal and visuospatial items if they gesture while explaining their solutions to a math problem than if they do not gesture (Goldin-Meadow, Nusbaum, Kelly, & Wagner, 2001; Ping & Goldin-Meadow, 2010; Wagner, Nusbaum, & Goldin-Meadow, 2004).

In order to make causal claims about gesture's function, it is necessary to manipulate gesture. Our study did not manipulate the gestures that participants produced; in fact, participants produced their gestures after solving the problem. Thus, although the gestures the participants produced may have reflected their problem-solving skills, those gestures could not have played a role in the application of those skills (but see Goldin-Meadow et al., in press, who did manipulate gesturing in children and found that producing gesture improved children's performance on a mental rotation task). One interesting question for future research is whether encouraging low-spatial individuals to use their gestures to represent the internal structure of blocks (as our high-spatial group did) would change their performance on a mental rotation task.

Lastly, our study found a tight coupling between the information conveyed in gesture and in speech on a task involving mental transformation, consistent with the findings of Chu and Kita (2008). Of the 239 trials on which participants gestured, only 12 (5%) contained gestures produced without speech (i.e., gesture almost always occurred with speech). Moreover, participants' gestures conveyed the same information as the accompanying speech on all but 24 of the 239 trials (10%). The speech-gesture pattern in our study thus differs from the pattern found in many previous studies of spatial tasks, for example, Emmorey and Casey's (2001) study of adults telling another person where to place blocks, and Sauter and colleagues' (2012) study of children and adults describing the layout of a simple space to another person. In both of these studies, speakers often conveyed spatial information in gesture that was not found in the accompanying speech. Future work is needed to understand when gesture does, or does not, go beyond speech.
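For reference, the two proportions reported above are computed over the 239 trials on which participants gestured. The counts come from the text; the rounding shown here is only for illustration.

$$
\frac{12}{239} \approx 0.050 = 5\% \;\;\text{(gesture without speech)}, \qquad
\frac{24}{239} \approx 0.100 = 10\% \;\;\text{(gesture and speech differ)}, \qquad
\frac{239 - 24}{239} = \frac{215}{239} \approx 0.900 = 90\% \;\;\text{(gesture and speech match)}
$$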

In conclusion, we examined the gestures used in a mental rotation task by both low- and high-spatial ability adults. High-spatial individuals produced more dynamic gestures and fewer static gestures overall than low-spatial individuals, but their static gestures were more likely to convey the structure of objects. These findings suggest that encoding object structure may be as important as rotating the object to succeed on a mental spatial transformation task.

Acknowledgement

This research was supported in part by a grant to the Spatial Intelligence and Learning Center, funded by the National Science Foundation (grant numbers SBE-0541957 and SBE-1041707), by NICHD R01 HD47450 and NSF BCS-0925595 (to SGM), and by a National Science Foundation Fostering Interdisciplinary Research on Education grant (DRL-1138619). We would like to thank Shannon Fitzhugh and Dominique Dumay for their assistance in collecting data, Michelle Chery for reliability coding, and the RISC group at Temple University for their helpful comments on this research.

Footnotes

Two of these five individuals were in the low-spatial group based on their MRT-A scores.

Although some researchers using the MRT-A classify individuals as high ability if they score above 50% and as low ability if they score at or below 50% (e.g., Geiser, Lehmann, & Eid, 2006), we used a median split to divide participants into low- and high-scoring groups because of the distribution of scores in this sample (range: 0–87.5).
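The contrast between a median split and a fixed 50% cutoff can be sketched as follows. This is a minimal illustration, not the authors' analysis code: the function names, the example scores, and the choice to place scores tied with the median in the low group are all assumptions (the footnote does not say how ties at the median were handled). The fixed cutoff follows the convention attributed above to Geiser, Lehmann, and Eid (2006).

```python
from statistics import median

# Hypothetical MRT-A scores (percent correct); these are NOT data from this study.
EXAMPLE_SCORES = [0, 12.5, 25, 37.5, 40, 62.5, 87.5]


def median_split(scores):
    """Label each score 'low' or 'high' relative to the sample median.

    Assumption: a score equal to the median is labeled 'low'; the
    footnote does not state how ties at the median were handled.
    Returns the labels and the median used as the cut point.
    """
    cut = median(scores)
    labels = ["high" if s > cut else "low" for s in scores]
    return labels, cut


def fixed_split(scores, threshold=50.0):
    """Fixed cutoff: 'high' if the score is above the threshold, else 'low'."""
    return ["high" if s > threshold else "low" for s in scores]


if __name__ == "__main__":
    labels, cut = median_split(EXAMPLE_SCORES)
    print("median cut:", cut)
    print("median split:", labels)
    print("fixed 50% split:", fixed_split(EXAMPLE_SCORES))
```

With scores that cluster below 50%, as in these hypothetical values, the fixed cutoff places only two of seven people in the high group, whereas the median split yields groups of four and three. Producing groups of comparable size regardless of where the scores fall is the usual reason for preferring a median split in such a sample.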

References

- Alibali MW, Heath DC, Myers HJ. Effects of visibility between speaker and listener on gesture production: Some gestures are meant to be seen. Journal of Memory and Language. 2001;44:169–188.

- Alibali MW. Gesture in spatial cognition: Expressing, communicating and thinking about spatial information. Spatial Cognition and Computation. 2005;5:307–331.

- Alibali MW, Spencer RC, Knox L, Kita S. Spontaneous gestures influence strategy choices in problem solving. Psychological Science. 2011;22:1138–1144. doi: 10.1177/0956797611417722.

- Amorim M-A, Isableu B, Jarraya M. Embodied spatial transformations: “Body analogy” for the mental rotation of objects. Journal of Experimental Psychology: General. 2006;135:327–347. doi: 10.1037/0096-3445.135.3.327.

- Barsalou LW. Perceptual symbol systems. Behavioral and Brain Sciences. 1999;22:577–660. doi: 10.1017/s0140525x99002149.

- Beilock SL, Lyons IM, Mattarella-Micke A, Nusbaum HC, Small SL. Sports experience changes the neural processing of action language. Proceedings of the National Academy of Sciences USA. 2008;105:13269–13273. doi: 10.1073/pnas.0803424105.

- Beilock SL, Goldin-Meadow S. Gesture changes thought by grounding it in action. Psychological Science. 2010;21:1605–1611. doi: 10.1177/0956797610385353.

- Casey MB, Nuttall R, Pezaris E, Benbow CP. The influence of spatial ability on gender differences in mathematics college entrance test scores across diverse samples. Developmental Psychology. 1995;31:697–705.

- Chu M, Kita S. Spontaneous gestures during mental rotation tasks: Insights into the microdevelopment of the motor strategy. Journal of Experimental Psychology: General. 2008;137:706–723. doi: 10.1037/a0013157.

- Chu M, Kita S. The nature of gestures' beneficial role in spatial problem solving. Journal of Experimental Psychology: General. 2011;140:102–116. doi: 10.1037/a0021790.

- Church RB, Goldin-Meadow S. The mismatch between gesture and speech as an index of transitional knowledge. Cognition. 1986;23:43–71. doi: 10.1016/0010-0277(86)90053-3.

- Collins DW, Kimura D. A large sex difference on a two-dimensional mental rotation task. Behavioral Neuroscience. 1997;111:845–849. doi: 10.1037//0735-7044.111.4.845.

- Cooper LA. Demonstration of a mental analog of an external rotation. Perception & Psychophysics. 1976;19:296–302.

- Cooper LA, Shepard RN. Chronometric studies of the rotation of mental images. In: Chase WG, editor. Visual information processing. Academic Press; New York: 1973. pp. 75–176.

- Emmorey K, Casey S. Gesture, thought and spatial language. Gesture. 2001;1:35–50.

- Feyereisen P, Havard I. Mental imagery and production of hand gestures while speaking in younger and older adults. Journal of Nonverbal Behavior. 1999;23:153–171.

- Frick A, Daum MM, Walser S, Mast FW. Motor processes in children's mental rotation. Journal of Cognition and Development. 2009;10:18–40.

- Garber P, Goldin-Meadow S. Gesture offers insight into problem solving in adults and children. Cognitive Science. 2002;26:817–831.

- Geiser C, Lehmann W, Eid M. Separating 'Rotaters' from 'Non-Rotaters' in the Mental Rotations Test: A multigroup latent class analysis. Multivariate Behavioral Research. 2006;41:261–293. doi: 10.1207/s15327906mbr4103_2.

- Goldin-Meadow S. Hearing gesture: How our hands help us think. Harvard University Press; Cambridge, MA: 2003.

- Goldin-Meadow S, Beilock SL. Action's influence on thought: The case of gesture. Perspectives on Psychological Science. 2010;5:664–674. doi: 10.1177/1745691610388764.

- Goldin-Meadow S, Levine SC, Zinchenko E, Yip TK-Y, Hemani N, Factor L. Doing gesture promotes learning a mental transformation task better than seeing gesture. Developmental Science. In press. doi: 10.1111/j.1467-7687.2012.01185.x.

- Goldin-Meadow S, Nusbaum H, Kelly SD, Wagner S. Explaining math: Gesturing lightens the load. Psychological Science. 2001;12:516–522. doi: 10.1111/1467-9280.00395.

- Hegarty M. Mechanical reasoning as mental simulation. Trends in Cognitive Sciences. 2004;8:280–285. doi: 10.1016/j.tics.2004.04.001.

- Hegarty M, Mayer S, Kriz S, Keehner M. The role of gestures in mental animation. Spatial Cognition and Computation. 2005;5:333–35.

- Hostetter AB, Alibali MW. Raise your hand if you're spatial: Relations between verbal and spatial skills and gesture production. Gesture. 2007;7:73–95.

- Hostetter AB, Alibali MW, Bartholomew AE. Gesture during mental rotation. In: Carlson L, Hoelscher C, Shipley TF, editors. Proceedings of the 33rd Annual Conference of the Cognitive Science Society. Cognitive Science Society; Austin, TX: 2011. pp. 1448–1453.

- Hyun J-S, Luck SJ. Visual working memory as the substrate for mental rotation. Psychonomic Bulletin & Review. 2007;14:154–158. doi: 10.3758/bf03194043.

- Jansen-Osmann P, Heil M. Suitable stimuli to obtain (no) gender differences in the speed of cognitive processes involved in mental rotation. Brain and Cognition. 2007;64:217–227. doi: 10.1016/j.bandc.2007.03.002.

- Just MA, Carpenter PA, Maguire M, Diwadkar V, McMains S. Mental rotation of objects retrieved from memory: A functional MRI study of spatial processing. Journal of Experimental Psychology: General. 2001;130:493–504. doi: 10.1037//0096-3445.130.3.493.

- Kosslyn SM, Margolis JA, Barrett AM, Goldknopf EJ, Daly PF. Age differences in imagery abilities. Child Development. 1990;61:995–101.

- Lavergne J, Kimura D. Hand movement asymmetry during speech: No effect of speaking topic. Neuropsychologia. 1987;25:689–693. doi: 10.1016/0028-3932(87)90060-1.

- McNeill D. Hand and mind. University of Chicago Press; Chicago: 1992.

- Perry M, Church RB, Goldin-Meadow S. Transitional knowledge in the acquisition of concepts. Cognitive Development. 1988;3:359–400.

- Perry M, Elder AD. Knowledge in transition: Adults' developing understanding of a principle of physical causality. Cognitive Development. 1997;12:131–157.

- Peters M, Laeng B, Latham K, Jackson M, Zaiyouna R, Richardson C. A redrawn Vandenberg and Kuse Mental Rotations Test: Different versions and factors that affect performance. Brain and Cognition. 1995;28:39–58. doi: 10.1006/brcg.1995.1032.

- Peters M, Lehmann W, Takaira S, Takeuchi Y, Jordan K. Mental rotation test performance in four cross-cultural samples (N=3367): Overall sex differences and the role of academic program in performance. Cortex. 2006;42:1005–1014. doi: 10.1016/s0010-9452(08)70206-5.

- Ping R, Goldin-Meadow S. Gesturing saves cognitive resources when talking about nonpresent objects. Cognitive Science. 2010;34:602–619. doi: 10.1111/j.1551-6709.2010.01102.x.

- Rauscher FB, Krauss RM, Chen Y. Gesture, speech, and lexical access: The role of lexical movements in speech production. Psychological Science. 1996;7:226–231.

- Sauter M, Uttal D, Alman AS, Goldin-Meadow S, Levine SC. Learning what children know about space from looking at their hands: The added value of gesture in spatial communication. Journal of Experimental Child Psychology. 2012;111:587–606. doi: 10.1016/j.jecp.2011.11.009.

- Schwartz DL, Black JB. Shuttling between depictive models and abstract rules: Induction and fallback. Cognitive Science. 1996;20:457–497.

- Schwartz DL, Black JB. Inferences through imagined actions: Knowing by simulated doing. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1999;25:116–136.

- Schwartz DL, Holton DL. Tool use and the effect of action on the imagination. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2000;26:1655–1665. doi: 10.1037//0278-7393.26.6.1655.

- Sekiyama K. Kinesthetic aspects of mental representations in the identification of left and right hands. Perception & Psychophysics. 1982;32:89–95. doi: 10.3758/bf03204268.

- Shepard RN, Cooper LA. Mental images and their transformations. MIT Press; Cambridge, MA: 1982.

- Shepard RN, Metzler J. Mental rotation of three-dimensional objects. Science. 1971;171:701–703. doi: 10.1126/science.171.3972.701.

- Vandenberg SG, Kuse AR. Mental rotations, a group test of three-dimensional spatial visualization. Perceptual and Motor Skills. 1978;47:599–604. doi: 10.2466/pms.1978.47.2.599.

- Vanetti EJ, Allen GL. Communicating environmental knowledge: The impact of verbal and spatial abilities on the production and comprehension of route directions. Environment and Behavior. 1988;20:667–682.

- Wexler M, Kosslyn SM, Berthoz A. Motor processes in mental rotation. Cognition. 1998;68:77–94. doi: 10.1016/s0010-0277(98)00032-8.

- Wilson M. Six views of embodied cognition. Psychonomic Bulletin & Review. 2002;9:625–636. doi: 10.3758/bf03196322.

- Wohlschlager A, Wohlschlager A. Mental and manual rotation. Journal of Experimental Psychology: Human Perception and Performance. 1998;24:397–412. doi: 10.1037//0096-1523.24.2.397.

- Wright R, Thompson WL, Ganis G, Newcombe NS, Kosslyn SM. Training generalized spatial skills. Psychonomic Bulletin & Review. 2008;15:763–771. doi: 10.3758/pbr.15.4.763.

- Xu L, Franconeri SL. The head of the table: The location of the spotlight of attention may determine the 'front' of ambiguous objects. Journal of Neuroscience. In press.