Abstract

This paper investigates a rapid and accurate detection system for spoilage in meat. We use unsupervised feature learning techniques (stacked restricted Boltzmann machines and auto-encoders) that consider only the transient response from undoped zinc oxide, manganese-doped zinc oxide, and fluorine-doped zinc oxide in order to classify three categories: the type of thin film used, the type of gas, and the approximate ppm level of the gas. The main advantage of these models is that features are learned from data instead of being hand-designed. We compare our results to a feature-based approach using samples with various ppm levels of ethanol and trimethylamine (TMA), which are good markers for meat spoilage. The results show that deep networks give better and faster classification than the feature-based approach, and we thus conclude that fine-tuning our deep models is more efficient for this kind of multi-label classification task.

Keywords: electronic nose, sensor material, representational learning, fast multi-label classification

1. Introduction

Nanostructured zinc oxide (ZnO) thin films show increasing potential as sensing components in electronic nose instruments. As described in [1–3], these materials have been successfully applied to the detection of volatile organic compounds, particularly those associated with markers of meat spoilage. For certain markers such as ethanol, nanostructured ZnO thin films have shown detection limits at the ppb level, thus outperforming traditional metal oxide semiconductors based on SnO2. However, as illustrated in [3], a potential drawback is the longer settling time required; traditional methods that rely on sensors reaching a steady state are therefore less suitable for applications requiring a fast response. In this paper, we propose to circumvent this shortcoming with a fast classification algorithm that does not require the sensors to reach a steady state, but instead uses transient information from the sensor response when exposed to an analyte. The application area we consider is food safety; in particular, we aim to develop an instrument that can be used in situ for rapid identification of meat spoilage [4–8].

In the past two decades, awareness of food safety, particularly with respect to specific pathogenic bacteria, has increased. This is especially true for meat and fish, where microbial spoilage can be dangerous to humans and where there is a clear need for a rapid and accurate detection system [9–11]. Traditionally, fish and meat quality is assessed by examining the structure of the food (texture, tenderness, flavor, juiciness, color), by detecting and counting microorganisms, or by detecting the gases these microorganisms generate. A number of techniques have been used to examine meat quality, namely instrumental mechanical methods [12,13], ultrasound [14,15], optical spectroscopy [16,17], microscopy [18,19], and magnetic resonance [20,21]. These techniques have several disadvantages: they are destructive, they require complex sample preparation and data analysis, and they can be quite costly. Electronic nose technologies, that is, an array of partially selective gas sensors combined with pattern recognition techniques, enable rapid quality discrimination with high accuracy and efficiency [22–26]. For applications regarding meat spoilage, two typical markers of interest are ethanol and trimethylamine [27–31].

Discrimination based on the transient response has been shown to be successful in previous investigations with SnO2 semiconductors [32]. Works that consider the transient response can be divided into those that use only the transient response and those that use features extracted from both the transient and the steady-state phases. In the latter case, the features include multi-exponential models of the signal, features extracted using polynomial and exponential functions, ARX models, wavelets, and simple heuristics. In these works, classification performance improves when both the transient and the steady-state properties of the signal response are taken into account. Works that use only the transient response have mainly been applied to mobile robotic olfaction, where so-called open sampling systems are used. These systems have an exposed sensor array that interacts directly with the environment while the robot is moving [33–35]. In these works, only transient information is available, and successful classification has been obtained using various feature extraction techniques (fast Fourier transform, discrete wavelet transform) together with a support vector machine classifier [35,36].

In this paper, we investigate the use of transient analysis on nanostructured zinc oxide thin films. Furthermore, to avoid relying on hand-designed features when extracting relevant information from the signal response, this paper investigates unsupervised feature learning, namely deep networks consisting of stacked restricted Boltzmann machines and stacked auto-encoders. It has previously been demonstrated that a stack of restricted Boltzmann machines can be used to discriminate a typical three-phase signal (baseline, exposure, and recovery) from commercially available tin dioxide semiconductor sensors [37]. In contrast, this paper investigates the possibility of applying unsupervised feature learning using only information from the transient response of nanostructured ZnO thin films, both undoped and doped with Mn and F. Our results show that it is possible to reduce the time needed for classification from 14 min (in some cases) to less than 30 s.

2. Materials & Methods

2.1. Materials

Nanostructured undoped ZnO, Mn-doped ZnO, and F-doped ZnO thin films were deposited by spray pyrolysis onto ultrasonically cleaned glass substrates under optimized deposition conditions. The structural, morphological, optical, and electrical properties of these films, and their sensing characteristics (transient response, response and recovery times) towards ethanol and trimethylamine at levels of a few ppm, are reported in [1–3]. Based on an investigation of the influence of precursor concentration on the structural, morphological, optical, and electrical properties of ZnO thin films, films deposited with the following precursor concentrations were selected for the sensing studies: 0.05 M zinc acetate dihydrate; 0.004 M manganese acetate in 0.05 M zinc acetate dihydrate; 0.002 M ammonium fluoride in 0.05 M zinc acetate dihydrate; and 0.06 M cadmium acetate dihydrate in 0.04 M zinc acetate dihydrate. In this investigation, these sensing elements were used to collect sensing data for various concentrations of ethanol and trimethylamine (TMA) at the optimized operating temperature, using the methodology reported in [1–3].

Mn-doped ZnO is an n-type semiconducting material. When it is exposed to the atmosphere, oxygen molecules react with its surface and capture electrons from its conduction band. This decreases the electron concentration and hence increases the surface resistance until equilibrium is reached. The stabilized surface resistance forms the baseline for the sensing studies. When reducing vapours such as ethanol or TMA are introduced to the sensing element, the vapour reacts with the surface-adsorbed oxygen species and increases the electron concentration at the surface. As a result, the surface resistance decreases from the stabilized baseline until it saturates. This change in surface resistance correlates strongly with the concentration of ethanol/TMA under dry-air conditions [3].

In the case of F-doped ZnO, baseline formation is very similar to the Mn-doped case. However, the response towards ethanol and TMA is opposite to that of undoped and Mn-doped ZnO (see Figure 1). This is because the highly electronegative fluorine sites restrict the flow of the electrons injected by the reducing ethanol/TMA, which in turn enhances the scattering of electrons at the grain boundaries. As a result, the surface resistance increases from the baseline until it saturates [1].

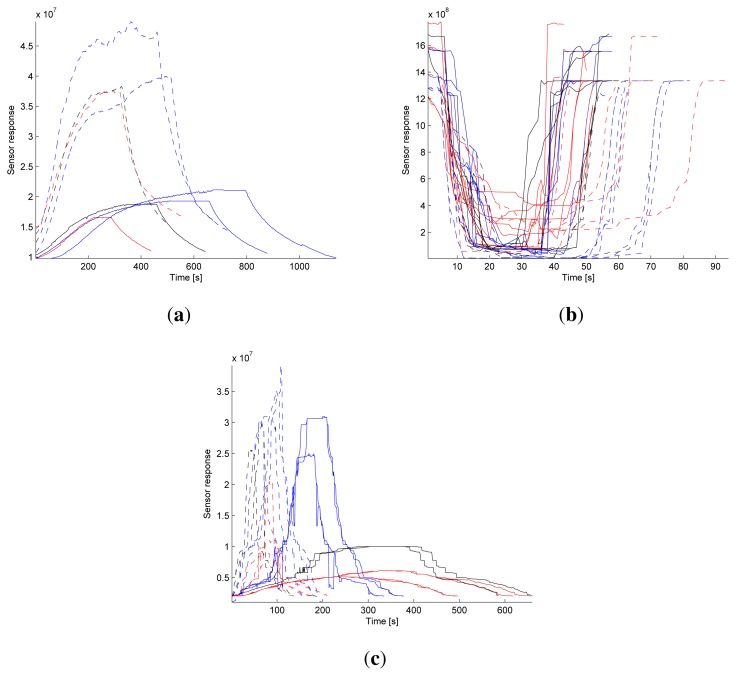

Figure 1.

Sensor response for (a) undoped ZnO, (b) Mn-doped ZnO, and (c) F-doped ZnO. The solid line is the response towards ethanol and the dotted line is the response towards trimethylamine. The color indicates the ppm level: red, black, and blue represent low, medium, and high ppm levels, respectively. Best viewed in color.

2.2. Sample Collection

Spray-deposited nanostructured thin films measuring 1 cm × 1 cm were used as the sensing elements. Electrical contacts were made on the film using copper wire and silver paste to obtain Ohmic contacts. The response of the selected films was measured at an optimized operating temperature in a homemade volatile organic compound (VOC) testing chamber of 5 L capacity, equipped with a digital thermostat coupled to a compact heater and with a septum through which the desired concentration of VOC was injected using a micro-syringe. Changes in the electrical resistance of the films were recorded as a function of time during injection and venting using an electrometer (Model 6517A, Keithley, Germany).

Once the resistance had stabilized in a dry-air atmosphere, the baseline was fixed. The desired concentration of the target gas was then injected into the glass chamber using a micro-syringe. Once the change in resistance became stable (saturated) in the presence of the target gas, the target gas was evacuated using a vacuum pump.

2.3. Data Preprocessing

A total of 64 acquisitions, each consisting of a baseline, exposure, and recovery phase, were obtained. They cover three surface materials (undoped ZnO, Mn-doped ZnO, and F-doped ZnO, with 8, 36, and 20 acquisitions, respectively), two gases (ethanol and trimethylamine, with 34 and 30 acquisitions, respectively), and three ppm intervals (<20 ppm, 20–50 ppm, and >50 ppm, with 24, 16, and 24 acquisitions, respectively). Figure 1 shows the raw, un-normalized sensor responses. The signal amplitude and response time vary greatly: the response from undoped ZnO reaches its maximum after 6–14 min, F-doped ZnO after 1–5 min, and Mn-doped ZnO after just a few seconds. Table 1 summarizes all 64 acquisitions.

Table 1.

Summary of all 64 acquisitions.

| Material | Gas | Low (<20 ppm) | Medium (20–50 ppm) | High (>50 ppm) | Total |
|---|---|---|---|---|---|
| ZnO | ethanol | 1 | 1 | 2 | 4 |
| ZnO | TMA | 1 | 1 | 2 | 4 |
| MnZnO | ethanol | 8 | 6 | 6 | 20 |
| MnZnO | TMA | 6 | 4 | 6 | 16 |
| FZnO | ethanol | 4 | 2 | 4 | 10 |
| FZnO | TMA | 4 | 2 | 4 | 10 |
| All | | 24 | 16 | 24 | 64 |

Preprocessing is done by subtracting the baseline value from each acquisition and dividing by the maximum value over all acquisitions of that material.
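The preprocessing step can be sketched as follows. This is a minimal plain-Python illustration, not the authors' code: the function name `normalize_material` is hypothetical, the baseline value is passed in explicitly, and the sketch assumes that "the maximum value" means the largest absolute baseline-subtracted value over all acquisitions of one material, so that the falling responses of undoped and Mn-doped ZnO and the rising responses of F-doped ZnO are both scaled into [-1, 1].

```python
from typing import List, Sequence


def normalize_material(acquisitions: Sequence[Sequence[float]],
                       baselines: Sequence[float]) -> List[List[float]]:
    """Baseline-subtract every acquisition of ONE material, then divide all of
    them by a single shared scale factor.

    Assumption: the scale factor is the largest absolute baseline-subtracted
    value over that material's acquisitions.
    """
    if len(acquisitions) != len(baselines):
        raise ValueError("one baseline value per acquisition is required")
    shifted = [[x - b for x in acq] for acq, b in zip(acquisitions, baselines)]
    scale = max((abs(x) for acq in shifted for x in acq), default=0.0)
    if scale == 0.0:
        return shifted  # flat signals: nothing to rescale
    return [[x / scale for x in acq] for acq in shifted]


# Two hypothetical acquisitions of the same material, both with baseline 10.
print(normalize_material([[10, 12, 14], [10, 11, 12]], [10, 10]))
# [[0.0, 0.5, 1.0], [0.0, 0.25, 0.5]]
```

Sharing one scale factor per material keeps the relative amplitudes of the different ppm levels intact, which is the information the ppm-level classifier relies on.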

2.4. Dimension Reduction and Classification

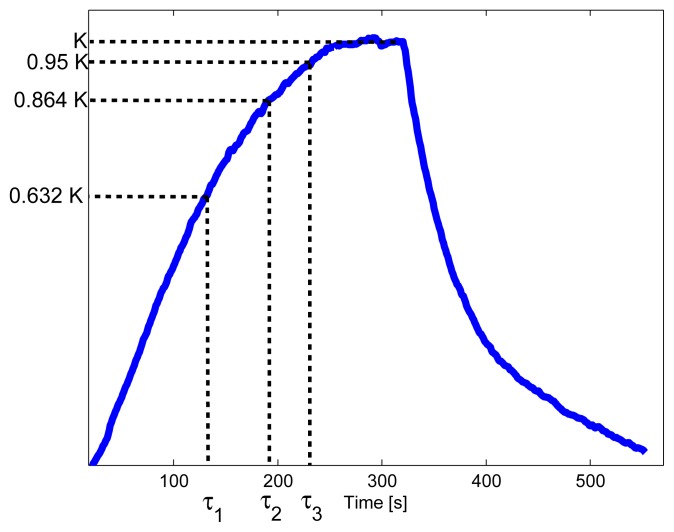

For each acquisition, a set of features is extracted and used with three individually trained support vector machines (SVMs) to classify the acquisition by material, gas, and ppm level. A total of seven features [38] are used: the maximum response (K); the first, second, and third time constants (τ1, τ2, and τ3, respectively); and the areas under the response between 0 and τ1, between τ1 and τ2, and between τ2 and τ3 (see Figure 2). Each feature is normalized by subtracting its mean and dividing by its standard deviation. Note that these features require knowing the maximum response, K.

Figure 2.

Depiction of feature extraction from one acquisition. See text for details.
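A sketch of the seven-feature extraction and the z-score normalization follows. The time constants are only defined graphically in Figure 2, so the rule used here (τk is the first time the response reaches (1 − e^(−k))K) is an assumption, as is the trapezoidal integration; `transient_features` and `standardize` are hypothetical names. The sketch also makes explicit why these features require K: every threshold is a fraction of the maximum response.

```python
import math
from typing import List, Sequence


def transient_features(y: Sequence[float], dt: float = 1.0) -> List[float]:
    """Seven features of one normalized response y sampled every dt seconds:
    [K, tau1, tau2, tau3, area(0..tau1), area(tau1..tau2), area(tau2..tau3)].

    Assumption: tau_k is the first time the response reaches (1 - e^-k) * K.
    The response is assumed to rise towards K (flip falling signals first).
    """
    K = max(y)
    tau_idx = []
    for k in (1, 2, 3):
        level = (1.0 - math.exp(-k)) * K
        tau_idx.append(next(i for i, v in enumerate(y) if v >= level))

    def area(i0: int, i1: int) -> float:
        # Trapezoidal rule between sample indices i0 and i1.
        return float(sum((y[i] + y[i + 1]) * dt / 2.0 for i in range(i0, i1)))

    bounds = [0] + tau_idx
    areas = [area(bounds[j], bounds[j + 1]) for j in range(3)]
    return [K] + [i * dt for i in tau_idx] + areas


def standardize(rows: Sequence[Sequence[float]]) -> List[List[float]]:
    """Z-score each feature column: subtract its mean, divide by its std."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    stds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / n) or 1.0
            for j in range(d)]  # constant columns keep std 1 to avoid /0
    return [[(r[j] - means[j]) / stds[j] for j in range(d)] for r in rows]


print(transient_features([0, 1, 2, 3, 4, 5, 5, 5]))
# [5, 4.0, 5.0, 5.0, 8.0, 4.5, 0.0]
```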

An SVM with a Gaussian kernel is used, and the model parameters C and γ are selected by cross-validation. A comparison with Naive Bayes and a softmax classifier showed that the SVM gave the best classification accuracy of the three.
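The model selection step can be sketched as a grid search over C and γ with k-fold cross-validation. To keep the example self-contained, the SVM itself is delegated to a hypothetical callback `fit_and_score`; the exponentially spaced grid and the contiguous folds are assumptions, and the dummy scorer in the usage example merely stands in for a real classifier.

```python
import math
from itertools import product
from typing import Callable, List, Sequence, Tuple


def gaussian_kernel(x: Sequence[float], z: Sequence[float], gamma: float) -> float:
    """RBF kernel k(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def kfold_indices(n: int, k: int = 5) -> List[List[int]]:
    """Split indices 0..n-1 into k contiguous folds (shuffle the data first in practice)."""
    folds, start = [], 0
    for f in range(k):
        size = n // k + (1 if f < n % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def grid_search(n: int,
                fit_and_score: Callable[[List[int], List[int], float, float], float],
                Cs: Sequence[float], gammas: Sequence[float],
                k: int = 5) -> Tuple[Tuple[float, float], float]:
    """Return the (C, gamma) pair with the highest mean validation score.

    fit_and_score(train_idx, val_idx, C, gamma) is a hypothetical callback that
    trains an RBF-kernel SVM on train_idx and returns its accuracy on val_idx.
    """
    folds = kfold_indices(n, k)
    best, best_score = (Cs[0], gammas[0]), -math.inf
    for C, gamma in product(Cs, gammas):
        scores = []
        for i, val_idx in enumerate(folds):
            train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
            scores.append(fit_and_score(train_idx, val_idx, C, gamma))
        mean = sum(scores) / len(scores)
        if mean > best_score:
            best, best_score = (C, gamma), mean
    return best, best_score


def dummy_score(train_idx: List[int], val_idx: List[int], C: float, gamma: float) -> float:
    # Stand-in scorer that peaks at C = 8, gamma = 0.5.
    return -abs(math.log2(C) - 3) - abs(math.log2(gamma) + 1)


Cs = [2.0 ** e for e in range(-2, 6)]
gammas = [2.0 ** e for e in range(-4, 2)]
print(grid_search(64, dummy_score, Cs, gammas))  # ((8.0, 0.5), 0.0)
```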

2.5. Unsupervised Feature Learning

Deep neural networks (stacked restricted Boltzmann machines and auto-encoders) are employed in this work primarily because they can be pre-trained without labels: features are learned from the data instead of being hand-designed, which can be a challenging task for gas sensor responses. Another possible advantage is the use of self-taught learning [39] or transfer learning [40], frameworks for training the model with additional examples that are not necessarily drawn from the same distribution as the samples of the classification task. This is especially useful when there are few labeled examples.

2.5.1. Stacked Restricted Boltzmann Machine

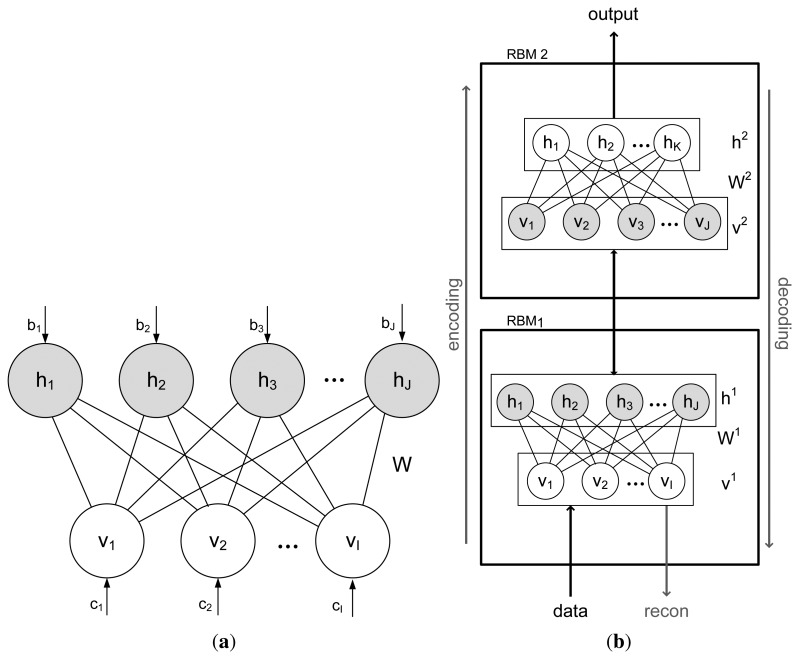

A Restricted Boltzmann Machine (RBM) is obtained by restricting the interactions in the Boltzmann energy function [41] to connections between the visible (input) units and the hidden units only; that is, there are no visible-to-visible or hidden-to-hidden connections (see Figure 3).

Figure 3.

Graphical depiction of (a) RBM and (b) stacked RBM.

Modules of RBMs can be stacked on top of each other to form a deep belief network (DBN) [42]. A DBN is a probabilistic generative model whose parameters are initialized by unsupervised greedy layer-wise training, in which the hidden layer of a lower-level RBM serves as the visible layer of the next-level RBM. In each RBM, the layer of visible units $\mathbf{v}$ (which represents the data) and the layer of hidden units $\mathbf{h}$, with bias vectors $\mathbf{b}$ and $\mathbf{c}$, respectively, are connected by a weight matrix $W$. The energy function and the joint distribution for a given visible and hidden vector are:

$$E(\mathbf{v}, \mathbf{h}) = -\mathbf{b}^\top \mathbf{v} - \mathbf{c}^\top \mathbf{h} - \mathbf{v}^\top W \mathbf{h}$$

$$P(\mathbf{v}, \mathbf{h}) = \frac{1}{Z} \exp\big(-E(\mathbf{v}, \mathbf{h})\big)$$

where Z is the partition function that ensures that the distribution is normalized.

To feed our sensor data to an RBM, we choose a window width, $w$, which equals the number of visible units, and let visible unit $v_i$ represent the data point $x(t + i)$, $i = 1, \ldots, w$, for $t = 0, \ldots, T - w$, where $x$ is the data vector and $T$ is the length of $x$.
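The windowing described above amounts to a sliding window with stride 1. In this minimal sketch (hypothetical function name, 0-based indices), each returned list is one visible vector for the RBM.

```python
from typing import List, Sequence


def sliding_windows(x: Sequence[float], w: int) -> List[List[float]]:
    """Visible vectors for an RBM with w visible units.

    Window t (0-based, t = 0..T-w) holds x[t], ..., x[t + w - 1], i.e. the
    1-based x(t + 1), ..., x(t + w) of the text.
    """
    if not 1 <= w <= len(x):
        raise ValueError("window width must satisfy 1 <= w <= len(x)")
    return [list(x[t:t + w]) for t in range(len(x) - w + 1)]


print(sliding_windows([0.1, 0.2, 0.4, 0.7], w=2))
# [[0.1, 0.2], [0.2, 0.4], [0.4, 0.7]]
```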

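For a Bernoulli–Bernoulli RBM (which assumes visible and hidden units in the range [0, 1], requires less manipulation of our data, and is simpler to implement and explain than a Gaussian–Bernoulli RBM), the feed-forward and feed-backward passes are given by:

$$P(h_j = 1 \mid \mathbf{v}) = \sigma\Big(c_j + \sum_i W_{ij} v_i\Big)$$

$$P(v_i = 1 \mid \mathbf{h}) = \sigma\Big(b_i + \sum_j W_{ij} h_j\Big)$$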

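where $\sigma(x) = 1/(1 + e^{-x})$ is the sigmoid activation function, and $\mathbf{b}$ and $\mathbf{c}$ are the visible and hidden bias vectors. The parameters $W$, $\mathbf{b}$, and $\mathbf{c}$ are trained using contrastive divergence [42], which approximates the gradient of the log-likelihood of $\mathbf{v}$. The learning rule for the RBM is

$$\Delta W_{ij} \propto \langle v_i h_j \rangle_{\text{data}} - \langle v_i h_j \rangle_{\text{recon}}, \qquad \Delta b_i \propto \langle v_i \rangle_{\text{data}} - \langle v_i \rangle_{\text{recon}}, \qquad \Delta c_j \propto \langle h_j \rangle_{\text{data}} - \langle h_j \rangle_{\text{recon}}$$

where $\langle \cdot \rangle_{\text{data}}$ and $\langle \cdot \rangle_{\text{recon}}$ denote averages over all training samples, computed with the data and with their reconstructions, respectively.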
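The Bernoulli–Bernoulli RBM and its contrastive divergence update can be sketched in plain Python as below. This is an illustrative one-step contrastive divergence (CD-1) implementation under common textbook choices (sampled hidden states in the positive phase, mean-field reconstruction in the negative phase, small Gaussian initial weights), not the authors' training code; the toy binary patterns and the learning rate are hypothetical.

```python
import math
import random
from typing import List

Vector = List[float]


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


class BernoulliRBM:
    """Minimal Bernoulli-Bernoulli RBM trained with one-step contrastive divergence."""

    def __init__(self, n_visible: int, n_hidden: int, seed: int = 0) -> None:
        self.rng = random.Random(seed)
        self.W = [[self.rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v: Vector) -> Vector:
        # P(h_j = 1 | v) = sigmoid(c_j + sum_i W_ij v_i)
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(len(v))))
                for j in range(len(self.c))]

    def visible_probs(self, h: Vector) -> Vector:
        # P(v_i = 1 | h) = sigmoid(b_i + sum_j W_ij h_j)
        return [sigmoid(self.b[i] + sum(self.W[i][j] * h[j] for j in range(len(h))))
                for i in range(len(self.b))]

    def cd1_update(self, batch: List[Vector], lr: float = 0.1) -> None:
        nv, nh = len(self.b), len(self.c)
        dW = [[0.0] * nh for _ in range(nv)]
        db, dc = [0.0] * nv, [0.0] * nh
        for v0 in batch:
            ph0 = self.hidden_probs(v0)                                 # positive phase
            h0 = [1.0 if self.rng.random() < p else 0.0 for p in ph0]  # sample hidden states
            v1 = self.visible_probs(h0)                                 # mean-field reconstruction
            ph1 = self.hidden_probs(v1)                                 # negative phase
            for i in range(nv):
                for j in range(nh):
                    dW[i][j] += v0[i] * ph0[j] - v1[i] * ph1[j]
                db[i] += v0[i] - v1[i]
            for j in range(nh):
                dc[j] += ph0[j] - ph1[j]
        n = len(batch)  # dividing by n turns the sums into <.>_data - <.>_recon
        for i in range(nv):
            for j in range(nh):
                self.W[i][j] += lr * dW[i][j] / n
            self.b[i] += lr * db[i] / n
        for j in range(nh):
            self.c[j] += lr * dc[j] / n

    def reconstruction_error(self, batch: List[Vector]) -> float:
        total = 0.0
        for v in batch:
            r = self.visible_probs(self.hidden_probs(v))
            total += sum((a - b) ** 2 for a, b in zip(v, r)) / len(v)
        return total / len(batch)


data = [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]] * 4
rbm = BernoulliRBM(n_visible=4, n_hidden=3, seed=0)
before = rbm.reconstruction_error(data)
for _ in range(500):
    rbm.cd1_update(data, lr=0.3)
after = rbm.reconstruction_error(data)
print(f"reconstruction error: {before:.3f} -> {after:.3f}")
```

Stacking, as in a DBN, would train a second `BernoulliRBM` on the `hidden_probs` of the first.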

After the initial layer-wise pre-training, the whole network is fine-tuned with backpropagation, both unsupervised, by minimizing the reconstruction error from the input up to the top RBM and back down, and supervised, by minimizing the classification error on the training data. The fine-tuning objective is heavily regularized to prevent overfitting and to reduce the effective model complexity.

A Conditional Restricted Boltzmann Machine (cRBM) [43] is similar to an RBM, except that the bias vectors of the visible and hidden layers are dynamic and depend on previous visible layers. While RBMs are used to learn representations of data, the cRBM can model temporal dependencies and is usually used for making predictions [44], in particular for multivariate data. The dynamic bias vectors are defined as:

$$\mathbf{b}^*(t) = \mathbf{b} + \sum_{i=1}^{n} A_i \mathbf{v}(t - i)$$

$$\mathbf{c}^*(t) = \mathbf{c} + \sum_{i=1}^{n} B_i \mathbf{v}(t - i)$$

where $\mathbf{b}$ and $\mathbf{c}$ are the static visible and hidden biases, $A_i$ are the autoregressive weights connecting the visible layer at time $t - i$ to the current visible layer, and $B_i$ is the weight matrix connecting the visible layer at time $t - i$ to the current hidden layer. The model order is defined by the constant $n$.
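The dynamic biases of the cRBM can be computed as in the sketch below. It assumes dense row-major weight matrices, with each A_i of size n_visible × n_visible and each B_i of size n_hidden × n_visible; the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matvec(M: Matrix, x: Sequence[float]) -> List[float]:
    """Dense matrix-vector product."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def dynamic_biases(b: Sequence[float], c: Sequence[float],
                   A: Sequence[Matrix], B: Sequence[Matrix],
                   history: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """cRBM biases at time t for model order n = len(history).

    history[i - 1] is the visible vector v(t - i); A[i - 1] and B[i - 1] are
    the weights for lag i. Returns b* = b + sum_i A_i v(t - i) and
    c* = c + sum_i B_i v(t - i).
    """
    if not len(A) == len(B) == len(history):
        raise ValueError("need one A_i, one B_i and one past visible vector per lag")
    b_star, c_star = list(b), list(c)
    for A_i, B_i, v_past in zip(A, B, history):
        b_star = [x + y for x, y in zip(b_star, matvec(A_i, v_past))]
        c_star = [x + y for x, y in zip(c_star, matvec(B_i, v_past))]
    return b_star, c_star


# Order-1 example with 2 visible units and 1 hidden unit.
print(dynamic_biases(b=[0.0, 0.0], c=[1.0],
                     A=[[[1.0, 0.0], [0.0, 1.0]]], B=[[[1.0, 1.0]]],
                     history=[[2.0, 3.0]]))
# ([2.0, 3.0], [6.0])
```

The resulting b* and c* simply replace b and c in the RBM conditionals for that time step.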

2.5.2. Auto Encoder

An auto-encoder [45] consists of a layer of visible units, $\mathbf{v}$, a layer of hidden units, $\mathbf{h}$, and a layer that reconstructs the visible units, $\hat{\mathbf{v}}$. The layers are connected via weight matrices $W_1$ and $W_2$, and the hidden and reconstruction layers have bias vectors $\mathbf{b}_1$ and $\mathbf{b}_2$, respectively. The hidden layer has a non-linear activation function, $\sigma$, in this case the sigmoid function $\sigma(x) = 1/(1 + e^{-x})$. The reconstruction layer uses a linear (identity) activation function, which allows the reconstruction to take values below 0 and above 1. A feed-forward step in the network thus becomes

$$\mathbf{h} = \sigma(W_1 \mathbf{v} + \mathbf{b}_1)$$

$$\hat{\mathbf{v}} = W_2 \mathbf{h} + \mathbf{b}_2$$

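The objective function for an auto-encoder is

$$J = \frac{1}{2N} \sum_{n=1}^{N} \big\| \hat{\mathbf{v}}^{(n)} - \mathbf{v}^{(n)} \big\|^2 + \frac{\lambda}{2} \Big( \|W_1\|_F^2 + \|W_2\|_F^2 \Big) + \beta \sum_j \left[ \rho \log \frac{\rho}{p_j} + (1 - \rho) \log \frac{1 - \rho}{1 - p_j} \right]$$

where $p_j = \frac{1}{N} \sum_{n=1}^{N} h_j(\mathbf{v}^{(n)})$ is the mean activation of hidden unit $j$ and $N$ is the number of training examples. The first term is the squared-error term, the second term is the weight decay term, and the third term is the sparsity penalty term [46], which penalizes mean activations that deviate from the target sparsity $\rho$. The hyperparameters ($\lambda$, $\beta$, and $\rho$) are set by examining the model parameters, the layer activations, and the classification accuracy on a validation set for randomly sampled hyperparameter settings [47].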
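The forward pass and the sparse auto-encoder objective can be sketched as follows, assuming that the sparsity penalty is the Kullback–Leibler divergence between the target sparsity ρ and the mean activation p_j (a common choice for sparse auto-encoders). The function names are hypothetical and gradients are omitted; p_j must lie strictly between 0 and 1 for the logarithms to be defined.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def affine(W: Matrix, x: Sequence[float], b: Sequence[float]) -> List[float]:
    """W x + b for a dense row-major W."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def forward(W1: Matrix, b1: Sequence[float], W2: Matrix, b2: Sequence[float],
            v: Sequence[float]) -> Tuple[List[float], List[float]]:
    h = [sigmoid(z) for z in affine(W1, v, b1)]  # sigmoid hidden layer
    v_hat = affine(W2, h, b2)                     # linear reconstruction layer
    return h, v_hat


def kl(rho: float, p: float) -> float:
    """KL divergence between Bernoulli(rho) and Bernoulli(p); zero when p == rho."""
    return rho * math.log(rho / p) + (1.0 - rho) * math.log((1.0 - rho) / (1.0 - p))


def sparse_ae_cost(W1: Matrix, b1: Sequence[float], W2: Matrix, b2: Sequence[float],
                   data: Sequence[Sequence[float]],
                   lam: float, beta: float, rho: float) -> float:
    N = len(data)
    hidden, sq = [], 0.0
    for v in data:
        h, v_hat = forward(W1, b1, W2, b2, v)
        hidden.append(h)
        sq += sum((a - t) ** 2 for a, t in zip(v_hat, v))
    reconstruction = sq / (2.0 * N)                                   # squared-error term
    decay = lam / 2.0 * sum(w * w for W in (W1, W2) for row in W for w in row)
    p = [sum(h[j] for h in hidden) / N for j in range(len(b1))]      # mean activations p_j
    sparsity = beta * sum(kl(rho, pj) for pj in p)
    return reconstruction + decay + sparsity


# All-zero parameters: h = [0.5, 0.5] and v_hat = [0, 0] for any input.
zeros = [[0.0, 0.0], [0.0, 0.0]]
print(sparse_ae_cost(zeros, [0.0, 0.0], zeros, [0.0, 0.0], [[1.0, 0.0]],
                     lam=1e-3, beta=3.0, rho=0.5))  # 0.5
```

For supervised fine-tuning, only the squared-error term changes: the network output is compared with the label vector instead of the input.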

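The cost function for supervised fine-tuning is the same as for unsupervised training, except that the squared-error term becomes

$$\frac{1}{2N} \sum_{n=1}^{N} \big\| \mathbf{y}^{(n)} - \hat{\mathbf{y}}^{(n)} \big\|^2$$

where $\mathbf{y}^{(n)}$ is the network output for training example $n$ and $\hat{\mathbf{y}}^{(n)}$ is the corresponding training label vector.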

The auto-encoder can be modified to resemble the structure of a cRBM in order to make it more suitable for multivariate time-series data. This is done by setting the new visible layer to the concatenation of the current and previous visible layers. For the first layer, this is equivalent to using a window of data as input. For the second layer, however, this is equivalent to using a sequence of first-layer hidden activations as input. More precisely, with model order $n_i$ at layer $i$ and signal data $\mathbf{s}(t)$ at time $t$, the visible layer in the first layer becomes

$$\mathbf{v}^{(1)}(t) = \big[\mathbf{s}(t - n_1 + 1), \ldots, \mathbf{s}(t - 1), \mathbf{s}(t)\big], \quad t = n_1, \ldots, T_1 \tag{1}$$

where $T_1$ is the number of samples in the data.

For the second layer, the visible layer is the concatenation of previous hidden layers and becomes

$$\mathbf{v}^{(2)}(t) = \big[\mathbf{h}^{(1)}(t - n_2 + 1), \ldots, \mathbf{h}^{(1)}(t - 1), \mathbf{h}^{(1)}(t)\big], \quad t = n_2, \ldots, \tilde{T}_1 \tag{2}$$

where $\tilde{T}_1$ is the number of hidden layer 1 samples.
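The concatenation of current and previous layers can be sketched with a single helper applied once per layer. How far apart in time the concatenated first-layer hidden vectors are is not stated in the text, so the helper takes a hypothetical `stride` argument. With stride 1, a model with orders 5 and 5 would see only 9 raw samples; with a stride equal to n1 (the first layer evaluated on non-overlapping windows), the spans for the orders 5-5, 5-2, 3-2, and 2-2 are 25, 10, 6, and 4 samples, which would match the input lengths in Table 2 if one sample is taken per second. Both the stride and the sampling rate are assumptions; `concat_frames` and `receptive_field` are hypothetical names.

```python
from typing import List, Sequence


def concat_frames(frames: Sequence[Sequence[float]], order: int,
                  stride: int = 1) -> List[List[float]]:
    """Concatenate `order` frames spaced `stride` apart, oldest first.

    Output row t holds frames[t - (order - 1) * stride], ..., frames[t].
    With stride=1 this is the plain cRBM-style concatenation of the current
    and previous visible (or hidden) vectors.
    """
    span = (order - 1) * stride
    out = []
    for t in range(span, len(frames)):
        row: List[float] = []
        for k in range(order - 1, -1, -1):
            row.extend(frames[t - k * stride])
        out.append(row)
    return out


def receptive_field(n1: int, n2: int, stride2: int) -> int:
    """Raw samples seen by one second-layer visible vector."""
    return (n2 - 1) * stride2 + n1


signal = [[1.0], [2.0], [3.0], [4.0]]            # one sensor, four samples
print(concat_frames(signal, order=2))            # [[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]]
print(concat_frames(signal, order=2, stride=2))  # [[1.0, 3.0], [2.0, 4.0]]
```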

3. Results and Discussion

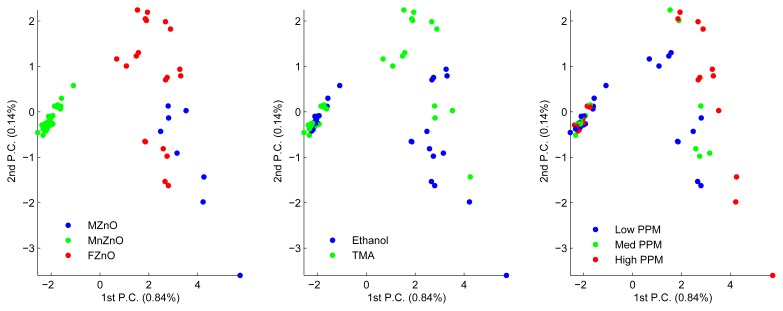

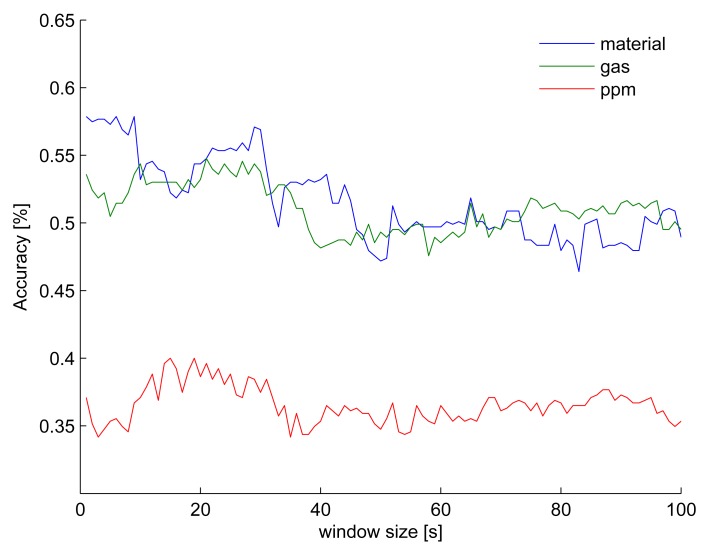

Figure 4 shows three principal component analysis (PCA) plots of the same data, colored according to the three classification tasks: material, gas, and ppm level. These plots indicate that material is the easiest category to classify, followed by gas and, finally, ppm level. Figure 5 shows the classification accuracy when a support vector machine (SVM) is trained on (normalized) raw data with increasing window size. Using around 25 s of raw data with an SVM gives the best accuracy for the most difficult classification task. Therefore, a deep belief network (DBN) and an auto-encoder are first trained on a 25 s window of input data, and the window is then decreased incrementally.

Figure 4.

PCA of the data with the seven extracted features. Each point belongs to three categories, namely sensor material, type of gas, and ppm level of the gas. For visualization purposes, three plots are shown, in which the data points are colored according to category membership.

Figure 5.

Classification accuracy when training a SVM on raw data with variable window size. The optimal window size is around 25 s.

Table 2 shows the classification accuracies for a number of experiments. An SVM with seven features reaches 89.0%, 60.1%, and 42.9% accuracy for classifying material, gas, and ppm level, respectively. However, this method requires knowledge of the maximum response to extract the features, and for some materials the maximum is only reached after up to 14 min.

Table 2.

Classification accuracy (mean ± standard deviation) [%] with five-fold cross-validation for the task of classifying material, gas, and ppm level using different set-ups. The number after DBN defines the window width (number of visible units) and the numbers after auto-encoder define the model order in the first and second layer.

| Method | Material | Gas | ppm level | Required input data |
|---|---|---|---|---|
| SVM, 7 features | 89.0 ± 6.3 | 60.1 ± 11.1 | 42.9 ± 7.6 | 15 s to 14 min |
| SVM, 25 s raw data | 55.5 ± 3.7 | 53.8 ± 7.3 | 38.8 ± 4.1 | 25 s |
| DBN, 25 s | 86.8 ± 4.6 | 83.7 ± 4.1 | 49.5 ± 5.6 | 25 s |
| DBN, 10 s | 83.7 ± 1.9 | 61.4 ± 4.7 | 41.7 ± 6.8 | 10 s |
| DBN, 5 s | 72.4 ± 3.5 | 60.0 ± 4.5 | 33.0 ± 3.3 | 5 s |
| Auto-encoder, 5-5 | 93.2 ± 3.6 | 84.3 ± 3.8 | 61.2 ± 6.8 | 25 s |
| Auto-encoder, 5-2 | 95.7 ± 1.3 | 80.8 ± 6.6 | 51.1 ± 1.6 | 10 s |
| Auto-encoder, 3-2 | 91.8 ± 3.2 | 71.1 ± 5.8 | 45.3 ± 4.5 | 6 s |
| Auto-encoder, 2-2 | 61.7 ± 1.5 | 45.4 ± 4.7 | 36.5 ± 3.5 | 4 s |

When using a two-layer DBN (unsupervised pre-training followed by supervised fine-tuning) with the first 25 s of the response, the classification accuracy is 86.8%, 83.7%, and 49.5% for material, gas, and ppm level, respectively. This is better at classifying gas and ppm level, and slightly worse at classifying material, than the SVM with seven features. As the input window is decreased, the classification accuracy decreases as well. Before applying the DBN, the data in the first 25 s were normalized to keep the values between 0 and 1. Additional training examples were obtained by shifting existing training examples.
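The text does not specify how the existing examples were shifted. A minimal sketch, assuming a time shift of each input window by a few samples with edge padding and an unchanged label, is shown below; `shift`, `augment`, and the default shift set are hypothetical.

```python
from typing import List, Sequence, Tuple


def shift(x: Sequence[float], k: int) -> List[float]:
    """Shift a window by k samples while keeping its length.

    k > 0 delays the response (pads the start with the first value);
    k < 0 advances it (pads the end with the last value).
    """
    if abs(k) >= len(x):
        raise ValueError("shift must be smaller than the window length")
    if k == 0:
        return list(x)
    if k > 0:
        return [x[0]] * k + list(x[:-k])
    return list(x[-k:]) + [x[-1]] * (-k)


def augment(windows: Sequence[Sequence[float]], labels: Sequence[int],
            shifts: Sequence[int] = (-2, -1, 1, 2)) -> Tuple[List[List[float]], List[int]]:
    """Append shifted copies of every training window, each keeping its label."""
    xs = [list(w) for w in windows]
    ys = list(labels)
    for w, y in zip(windows, labels):
        for k in shifts:
            xs.append(shift(w, k))
            ys.append(y)
    return xs, ys


print(augment([[0.0, 0.2, 0.5]], [1], shifts=(-1, 1)))
# ([[0.0, 0.2, 0.5], [0.2, 0.5, 0.5], [0.0, 0.0, 0.2]], [1, 1, 1])
```

Shifted copies should be generated only from the training folds, so that near-duplicates of a validation example never appear in training.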

A two-layer auto-encoder, unsupervised pre-trained and supervised fine-tuned, achieves 93.2%, 84.3%, and 61.2% with the first 25 s of the response, which is already better than the feature-based approach in all three categories. This model uses a model order of 5 in both the first and second layers. With 10 s of input data (model order 5 in the first layer and 2 in the second), the accuracy becomes 95.7%, 80.8%, and 51.1%, which is still higher than that of the SVM with seven features. Further decreasing the model order of the first layer shows that a model order of 3 still outperforms the feature-based approach, whereas a model order of 2 does not. Hence, the fastest run-time classification that still gives results comparable to an approach where the maximum value is known uses 6 s of input data. Comparing the auto-encoder with the DBN, both given 25 s of input data, the auto-encoder performs better on all three tasks, most clearly on material (93.2% vs. 86.8%) and ppm level (61.2% vs. 49.5%). Even with model orders of 3 and 2 (6 s of data), the auto-encoder classifies material better than the DBN that uses 25 s of data (91.8% vs. 86.8%), although it is less accurate for gas (71.1% vs. 83.7%) and ppm level (45.3% vs. 49.5%). We attribute the advantage of the auto-encoder mainly to its modification to resemble a conditional RBM, which is more suitable for multivariate time-series data than a standard DBN.

4. Conclusion

In this work, two deep network models, namely a DBN and an auto-encoder with concatenated previous visible layers, have been trained on multi-labeled data. The results have been compared to a feature-based approach in which the maximum response is known. It was shown that the auto-encoder can achieve results comparable to the feature-based approach using only 6 s of input data. The DBN also achieves results comparable to the feature-based approach, though it requires more input data than the auto-encoder. In sum, this paper has shown that a deep network is able to give better and faster classification. Training a deep network also brings additional benefits. The unsupervised greedy layer-wise pre-training and the unsupervised fine-tuning of the whole network are performed only once; only the supervised fine-tuning step is repeated to specialize the model to each classification task. With an SVM, by contrast, training has to be restarted for every classification task. Therefore, an unsupervised feature learning step is efficient for this kind of multi-label classification task.

Clearly, for electronic nose applications, the selection of the materials used in the array is important to achieve good discrimination for the intended application. However, selectivity in gas-sensing applications remains a major challenge. In this context, the different sensing behavior of F- and Mn-doped ZnO thin films towards ethanol and TMA is encouraging and may help to address the selectivity problem. Although both F- and Mn-doped films are n-type semiconductors and both ethanol and TMA are reducing gases, the presence of grain-boundary scattering during sensing in the F-doped films, and its absence in the Mn-doped films, yields sensing elements with an inherently selective nature. Adding such sensing elements to the sensor array of an electronic nose will provide new possibilities for detecting specific markers. Further, using these materials together with deep network models has been shown to provide a classification performance (in accuracy and time) that is suitable for real-world deployment and comparable to instruments currently on the market.

Acknowledgments

The authors wish to express their sincere thanks to the Department of Science & Technology, India, and VINNOVA, Sweden, for their financial support (Project ID: INT/SWD/VINN/P-04/2011). They also wish to acknowledge SASTRA University, Thanjavur, India, and Örebro University, Örebro, Sweden, for providing infrastructural support to carry out the study.

References

1. Sivalingam D., Rayappan J., Gandhi S., Madanagurusamy S., Sekar R., Krishnan U. Ethanol and trimethyl amine sensing by ZnO-based nanostructured thin films. Int. J. Nanosci. 2011;10:1161–1165.

2. Sivalingam D., Gopalakrishnan J., Rayappan J.B. Influence of precursor concentration on structural, morphological and electrical properties of spray deposited ZnO thin films. Crystal Res. Technol. 2011;46:685–690.

3. Sivalingam D., Gopalakrishnan D., Rayappan R. Structural, morphological, electrical and vapour sensing properties of Mn doped nanostructured ZnO thin films. Sens. Actuators B Chem. 2012;166–167:624–631.

4. Danilo E., Federica R., Villani F. Mesophilic and psychrotrophic bacteria from meat and their spoilage potential in vitro and in beef. Appl. Environ. Microbiol. 2009;75:1990–2001. doi: 10.1128/AEM.02762-08.

5. Yu V., Musatov V., Sysoev M., Sommer M., Kiselev I. Assessment of meat freshness with metal oxide sensor microarray electronic nose: A practical approach. Sens. Actuators B Chem. 2010;144:99–103.

6. Hongsith N., Choopun S. Enhancement of ethanol sensing properties by impregnating platinum on surface of ZnO tetrapods. IEEE Sens. J. 2010;10:34–38.

7. Wei A. Recent progress in the ZnO nanostructure-based sensors. Mater. Sci. Eng. B Solid State Mater. Adv. Technol. 2011;176:1409–1421.

8. Janotti A., Walle C.V.D. Fundamentals of zinc oxide as a semiconductor. Rep. Progr. Phys. 2009;72:126501–126529.

9. Haugen J., Chanie E., Westad F., Jonsdottir R., Bazzo S., Labreche S., Marcq P., Lundby F., Olafsdottir G. Rapid control of smoked Atlantic salmon (Salmo salar) quality by electronic nose: Correlation with classical evaluation methods. Sens. Actuators B Chem. 2006;116:72–77.

10. Schlundt J. Emerging food-borne pathogens. Biomed. Environ. Sci. 2001;14:44–52.

11. Frost J.A. Current epidemiological issues in human campylobacteriosis. J. Appl. Microbiol. 2001;90:85s–95s. doi: 10.1046/j.1365-2672.2001.01357.x.

12. Timm R.R., Unruh J.A., Dikeman M.E., Hunt M.C., Lawrence T.E., Boyer J.E. Mechanical measures of uncooked beef longissimus muscle can predict sensory panel tenderness and Warner-Bratzler shear force of cooked steaks. J. Anim. Sci. 2003;81:1721–1727. doi: 10.2527/2003.8171721x.

13. Shackelford S., Wheeler T., Koohmaraie M. Evaluation of slice shear force as an objective method of assessing beef longissimus tenderness. J. Anim. Sci. 1999;77:2693–2699. doi: 10.2527/1999.77102693x.

14. Stephens J.W., Unruh J.A., Dikeman M.E., Hunt M.C., Lawrence T.E., Loughin T.M. Mechanical probes can predict tenderness of cooked beef longissimus using uncooked measurements. J. Anim. Sci. 2004;82:2077–2086. doi: 10.2527/2004.8272077x.

15. Ferguson D. Objective Evaluation of Meat-Quality Characteristics. Proceedings of the Australian Meat Industry Research Conference; Gold Coast, Australia. 11–13 October 1993; pp. 1–8.

16. Abouelkaram S., Suchorski K., Buquet B., Berge P., Culioli P., Delachartre P., Basset O. Effects of muscle texture on ultrasonic measurements. Food Chem. 2000;69:447–455.

17. Ophir J., Miller R.K., Ponnekanti H., Cespedes I., Whittaker A.D. Elastography of beef muscle. Meat Sci. 1994;36:239–250. doi: 10.1016/0309-1740(94)90043-4.

18. Kempen T.V. Infrared technology in animal production. World Poultry Sci. J. 2001;57:29–48.

19. Meullenet J.F., Jonville E. Prediction of the texture of cooked poultry pectoralis major muscles by near-infrared reflectance analysis of raw meat. J. Texture Stud. 2004;35:573–585.

20. Herrero A. Raman spectroscopy: a promising technique for quality assessment of meat and fish: A review. Food Chem. 2008;107:1642–1651.

21. Xing J., Ngadi M., Gunenc A., Prasher S., Gariepy C. Use of visible spectroscopy for quality classification of intact pork meat. J. Food Eng. 2007;82:135–141.

22. Egelandsdal B., Wold J., Sponnich A., Neegard S., Hildrum K. On attempts to measure the tenderness of Longissimus dorsi muscles using fluorescence emission spectra. J. Food Eng. 2002;60:187–202. doi: 10.1016/s0309-1740(01)00121-8.

23. Oshima I., Iwamoto H., Tabata S., Ono Y., Shiba N. Comparative observations on the growth changes of the histochemical property and collagen architecture of the Musculus pectoralis from Silky, layer-type and meat-type cockerels. J. Food Eng. 2007;78:619–630.

24. Yarmand M., Baumgartner P. Environmental scanning electron microscopy of raw and heated veal semimembranosus muscle. J. Agric. Sci. Technol. 2000;2:217–214.

25. Venturi L., Rocculi P., Cavani C., Placucci G., Rosa M., Cremonini M. Water absorption of freeze-dried meat at different water activities: A multianalytical approach using sorption isotherm, differential scanning calorimetry, and nuclear magnetic resonance. J. Agric. Food Chem. 2007;55:10572–10578. doi: 10.1021/jf072874b.

26. Swatland H. Effect of connective tissue on the shape of reflectance spectra obtained with a fibre-optic fat-depth probe in beef. Meat Sci. 2001;57:209–213. doi: 10.1016/s0309-1740(00)00095-4.

27. Haugen J., Chanie E., Westad F., Jonsdottir R., Bazzo S., Labreche S., Marcq P., Lundby F., Olafsdottir G. Rapid control of smoked Atlantic salmon (Salmo salar) quality by electronic nose: Correlation with classical evaluation methods. Sens. Actuators B Chem. 2006;116:72–77.

28. Edwards R., Dainty R., Hibbard C. Volatile compounds produced by meat pseudomonads and related reference strains during growth on beef stored in air at chill temperatures. J. Appl. Bacteriol. 1987;62:403–412. doi: 10.1111/j.1365-2672.1987.tb02669.x.

29. Wilson A., Baietto M. Applications and advances in electronic-nose technologies. Sensors. 2009;9:5099–5148. doi: 10.3390/s90705099.

30. Mayr D., Margesin R., Klingsbichel E., Hartungen E., Jenewein D., Schinner F., Mark T.D. Rapid detection of meat spoilage by measuring volatile organic compounds by using proton transfer reaction mass spectrometer. Appl. Environ. Microbiol. 2003;69:4697–4705. doi: 10.1128/AEM.69.8.4697-4705.2003.

31. Barbri N.E., Mirhisse J., Ionescu R., Bari N.E., Correig X., Bouchikhi B., Llobet E. An electronic nose system based on a micro-machined gas sensor array to assess the freshness of sardines. Sens. Actuators B Chem. 2009;141:538–543.

32. Trincavelli M., Coradeschi S., Loutfi A. Odour classification system for continuous monitoring applications. Sens. Actuators B Chem. 2009;139:265–273.

33. Loutfi A., Coradeschi S. Relying on an Electronic Nose for Odor Localization. Proceedings of the IEEE International Symposium on Virtual and Intelligent Measurement Systems; Anchorage, AK, USA. 19–20 May 2002; pp. 46–50.

34. Lilienthal A., Loutfi A., Duckett T. Airborne chemical sensing with mobile robots. Sensors. 2006;6:1616–1678.

35. Loutfi A., Coradeschi S., Lilienthal A., Gonzalez J. Gas distribution mapping of multiple odour sources using a mobile robot. Robotica. 2009;27:311–319.

36. Trincavelli M., Coradeschi S., Loutfi A. Classification of Odours with Mobile Robots Based on Transient Response. Proceedings of the International Conference on Intelligent Robots and Systems; Nice, France. 22–26 September 2008; pp. 4110–4115.

37. Längkvist M., Loutfi A. Unsupervised Feature Learning for Electronic Nose Data Applied to Bacteria Identification in Blood. Proceedings of the NIPS 2011 Workshop on Deep Learning and Unsupervised Feature Learning; Granada, Spain. 3 December 2011.

38. Carmel L., Levy S., Lancet D., Harel D. A feature extraction method for chemical sensors in electronic noses. Sens. Actuators B Chem. 2003;93:67–76.

39. Raina R., Battle A., Lee H., Packer B., Ng A.Y. Self-taught learning: Transfer learning from unlabeled data. Proceedings of the 24th International Conference on Machine Learning; Corvallis, OR, USA. 21–23 June 2007.

40. Pan S.J., Yang Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010;22:1345–1359.

41. Ackley D.H., Hinton G.E., Sejnowski T.J. A learning algorithm for Boltzmann machines. Cognit. Sci. 1985;9:147–169.

42. Hinton G., Osindero S., Teh Y. A fast learning algorithm for deep belief nets. Neural Comput. 2006;18:1527–1554. doi: 10.1162/neco.2006.18.7.1527.

43. Taylor G., Hinton G.E., Roweis S. Modeling human motion using binary latent variables. Adv. Neural Inform. Process. Syst. 2007;17:1345–1352.

44. Mnih V., Larochelle H., Hinton G. Conditional Restricted Boltzmann Machines for Structured Output Prediction. Proceedings of the Uncertainty in Artificial Intelligence; Barcelona, Spain. 14–17 July 2011.

45. Bengio Y., Lamblin P., Popovici D., Larochelle H. Greedy layer-wise training of deep networks. Adv. Neural Inform. Process. Syst. 2006;19:153–160.

46. Bengio Y., Courville A., Vincent P. Unsupervised Feature Learning and Deep Learning: A Review and New Perspectives. Available online: http://arxiv.org/pdf/1206.5538v2.pdf (accessed on 17 January 2013).

47. Bengio Y. Practical Recommendations for Gradient-Based Training of Deep Architectures. Available online: http://arxiv.org/pdf/1206.5533v2.pdf (accessed on 17 January 2013).