Abstract

Automated surveillance utilizing electronically available data has been found to be accurate and save time. An automated CDI surveillance algorithm was validated at four CDC Prevention Epicenters hospitals. Electronic surveillance was highly sensitive, specific, and showed good to excellent agreement for hospital-onset; community-onset, study facility associated; indeterminate; and recurrent CDI.

Keywords: Clostridium difficile, surveillance, algorithm

It is recommended that all US hospitals track Clostridium difficile infection (CDI) (1). At a minimum, surveillance should be conducted for hospital-onset CDI, but tracking CDI with onset in the community may have important epidemiological and prevention implications (1–2). However, surveillance for community-onset CDI is much more labor intensive than surveillance for hospital-onset CDI. Given the increased demand for patient safety, coupled with an emphasis on adopting and implementing electronic health records, automated surveillance systems for tracking nosocomial infections need to be investigated to make the most of limited resources while maximizing patient safety (3–4). The goal of this study was to develop and validate an automated CDI surveillance algorithm utilizing electronically available data at multiple healthcare facilities.

METHODS

The study population included all adult patients ≥ 18 years of age admitted to four US hospitals participating in the CDC Epicenters Program from July 1, 2005 to June 30, 2006. These hospitals included Barnes-Jewish Hospital (St. Louis, MO), Brigham and Women’s Hospital (Boston, MA), The Ohio State University Medical Center (Columbus, OH), and University Hospital (Salt Lake City, UT).

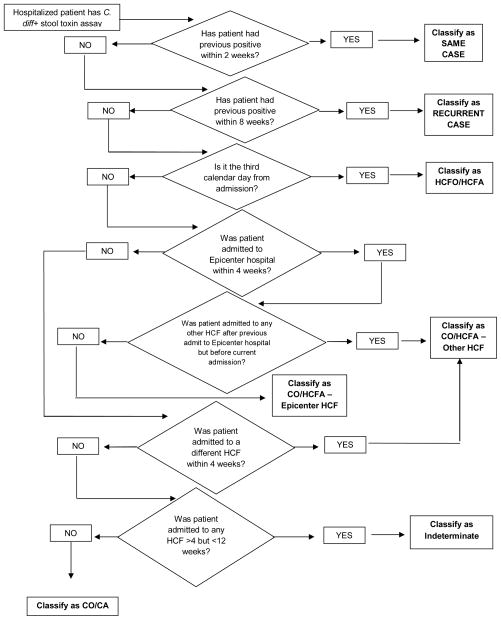

A conceptual automated CDI surveillance algorithm was created based on recommended surveillance definitions (Figure 1) (1). Each center worked with its medical informatics department to apply the algorithm to its local databases. CDI case categorizations by the algorithm were compared to categorizations previously determined by chart review (5). A second chart review was performed for discordant results. The gold standard comprised all concordant cases plus, for discordant cases, the categorization determined to be correct on re-review. The algorithms were modified as needed to improve accuracy. Sensitivities and specificities were calculated for each CDI surveillance definition. Kappa (κ) statistics were also calculated. Statistical analyses were performed with SPSS for Windows, version 19.0 (SPSS, Inc., Chicago, IL).
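As an illustration of how the agreement measures can be computed, the following is a minimal Python sketch that treats one case definition at a time as a binary outcome (that category versus all others) and derives sensitivity, specificity, and Cohen's kappa from the resulting 2×2 table. The function name `binary_agreement` and the one-versus-rest framing are assumptions made for this example; the study's analyses were run in SPSS, and the exact procedure used there is not described.

```python
from collections import Counter


def binary_agreement(algorithm_labels, reference_labels, category):
    """One-vs-rest sensitivity, specificity, and Cohen's kappa for one category.

    algorithm_labels: categories assigned by the automated algorithm
    reference_labels: gold standard categories for the same cases, same order
    category: the case definition being evaluated (hypothetical label strings)
    """
    # Tally the 2x2 table: (algorithm says category, reference says category)
    counts = Counter(
        (a == category, r == category)
        for a, r in zip(algorithm_labels, reference_labels)
    )
    tp = counts[(True, True)]
    fp = counts[(True, False)]
    fn = counts[(False, True)]
    tn = counts[(False, False)]
    n = tp + fp + fn + tn

    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")

    # Cohen's kappa: observed agreement corrected for chance agreement
    observed = (tp + tn) / n
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (observed - expected) / (1 - expected) if expected != 1 else float("nan")
    return sensitivity, specificity, kappa
```

Calling the function once per case definition and per hospital would yield one triplet for each cell of a facility-by-definition summary.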

Figure 1.

Conceptual automated CDI surveillance algorithm.
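The categorization logic can be sketched in Python as follows. This is a simplified illustration, not the algorithm deployed at any study hospital: the function name, parameters, and label strings are hypothetical, and the cut-offs (48 hours approximated as 2 calendar days for hospital onset, 4 and 12 weeks since last discharge, 8 weeks for recurrence) are assumptions based on the general surveillance recommendations (1) rather than values stated in this report.

```python
from datetime import date, timedelta

# Assumed cut-offs for illustration only; each site tuned its own rules.
RECENT_DISCHARGE = timedelta(weeks=4)
INDETERMINATE_LIMIT = timedelta(weeks=12)
RECURRENCE_WINDOW = timedelta(weeks=8)


def categorize_cdi(admit_date, stool_date, last_discharge_date=None,
                   last_stay_at_study_facility=True,
                   previous_positive_date=None, hospital_onset_days=2):
    """Assign one surveillance category to a positive toxin result."""
    # Recurrent: a prior positive result within the recurrence window
    if previous_positive_date is not None:
        if stool_date - previous_positive_date <= RECURRENCE_WINDOW:
            return "recurrent"

    # Hospital-onset: stool collected after the onset cut-off
    if (stool_date - admit_date).days > hospital_onset_days:
        return "hospital-onset"

    # Community-onset: split by time since the last healthcare discharge
    if last_discharge_date is None:
        return "community-onset, community-associated"
    since_discharge = stool_date - last_discharge_date
    if since_discharge <= RECENT_DISCHARGE:
        if last_stay_at_study_facility:
            return "community-onset, study facility associated"
        return "community-onset, other healthcare facility associated"
    if since_discharge <= INDETERMINATE_LIMIT:
        return "indeterminate"
    return "community-onset, community-associated"
```

Exposing `hospital_onset_days` as a parameter reflects the fact that a site may need to adjust the onset cut-off to match its own data.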

RESULTS

There were 1767 patients with stool positive for C. difficile toxins identified. After the initial comparison of the algorithm’s categorization of CDI cases to categorizations determined by chart review, hospital A had 204 discordant cases (27.1%), hospital B had 77 (18.7%), hospital C had 55 (22.4%), and hospital D had 104 (29.1%). Data on discordant cases were submitted back to the appropriate hospitals for re-review.

The overall sensitivities, specificities, and kappa values of the algorithm by CDI onset compared to the gold standard were as follows: hospital-onset: 92%, 99%, and 0.90; community-onset, study facility associated: 91%, 98%, and 0.84; community-onset, other healthcare facility associated: 57%, 99%, and 0.65; community-onset, community-associated: 96%, 94%, and 0.69; indeterminate cases: 80%, 98%, and 0.76; and recurrent cases: 94%, 99%, and 0.94 (Table 1). Similar sensitivity, specificity, and kappa values were seen at all individual hospitals for community-onset, study facility associated and recurrent CDI (Table 1). The algorithm had excellent agreement for hospital-onset CDI at each hospital except hospital B. Community-onset, other healthcare facility associated CDI showed a wide range of sensitivities (16% to 96%) and kappa values (0.25 to 0.93). Similar trends were seen for community-onset, community-associated and indeterminate CDI.

Table 1.

Sensitivities, specificities, and kappa values by CDI onset and facility

Cell values are sensitivity (%), specificity (%) (kappa value).

| Case definition | Facility A | Facility B | Facility C | Facility D | Total |
|---|---|---|---|---|---|
| Healthcare facility-onset | 99, 98 (.97) | 75, 99 (.66) | 94, 100 (.93) | 100, 99 (.99) | 92, 99 (.90) |
| Community-onset, study center-associated | 93, 96 (.83) | 100, 97 (.86) | 84, 99 (.83) | 81, 100 (.88) | 91, 98 (.84) |
| Community-onset, other healthcare facility associated | 16, 99 (.25) | 82, 98 (.61) | 53, 98 (.59) | 96, 98 (.93) | 57, 99 (.65) |
| Community-associated, community-onset | 91, 95 (.71) | 100, 87 (.63) | 100, 92 (.44) | 100, 99 (.91) | 96, 94 (.69) |
| Indeterminate | 83, 98 (.80) | 73, 98 (.63) | 63, 97 (.48) | 84, 99 (.88) | 80, 98 (.76) |
| Recurrent | 99, 99 (.97) | 88, 99 (.85) | 64, 100 (.77) | 97, 100 (.98) | 94, 99 (.94) |

Each hospital had to individualize the algorithm to its facility. Hospitals A, B, and C did not have discrete data on where a patient was admitted from (e.g. admit from home, long term care facility), whereas hospital D did. Therefore, categorization of community-onset cases at hospitals A, B, and C depended on the discharge status (e.g. discharge to home, long term care facility) if the patient had a prior hospitalization in the previous 12 weeks. Hospital A has a code for patients with frequent outpatient visits called “recurring patients,” which has a start date of the first visit and an end date of December 31. Many “recurring patients” with CDI were misclassified as hospital-onset CDI. To correct this problem, the medical informatics team created a new table within the database containing the visit type associated with a given encounter. Hospital B made minor modifications to the hospital-onset time cut-off to improve accuracy. Hospital C was not able to modify its algorithm because some data were available only through free text fields. Hospital D initially included only patients who were admitted to one particular building, missing those patients who were admitted to the other three buildings of its medical center. This was corrected. Three other issues were identified and resolved after the initial review of discordant cases: outpatient encounters were included when determining case categorization rather than only inpatient encounters; only the first positive C. difficile toxin result per patient was evaluated, so subsequent episodes of CDI were missed; and stool collection date was used to identify patients instead of the admit date.
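Two of the corrections described above, restricting categorization to inpatient encounters and evaluating every positive toxin result rather than only the first, can be illustrated with a short Python sketch. The record types, field names, and `visit_type` values below are hypothetical and stand in for whatever each hospital's database actually provides.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Encounter:
    patient_id: str
    visit_type: str       # hypothetical values: "inpatient", "outpatient"
    admit_date: date
    discharge_date: date


@dataclass(frozen=True)
class ToxinResult:
    patient_id: str
    collection_date: date
    positive: bool


def positives_with_inpatient_stay(encounters, results):
    """Pair every positive toxin result with the inpatient stay containing it."""
    # Drop outpatient encounters (e.g. long-running "recurring patient" records)
    inpatient = [e for e in encounters if e.visit_type == "inpatient"]
    pairs = []
    # Walk through all positives per patient, not just the first one
    for r in sorted(results, key=lambda r: (r.patient_id, r.collection_date)):
        if not r.positive:
            continue
        for e in inpatient:
            if (e.patient_id == r.patient_id
                    and e.admit_date <= r.collection_date <= e.discharge_date):
                pairs.append((r, e))
                break
    return pairs
```

Each returned pair supplies the admit date and stool collection date needed to categorize that episode, and a later positive for the same patient remains available for assessment as a possible recurrence.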

DISCUSSION

The goal of this study was to develop and validate an automated CDI surveillance algorithm utilizing existing electronically available data. Previous research indicates electronic surveillance is more accurate and reliable than manual surveillance (3, 6). Automated surveillance also requires less time as it eliminates the need to do chart review. This study found automated CDI surveillance to be feasible and reliable with overall good to excellent agreement for hospital-onset; community-onset, study facility associated; indeterminate; and recurrent CDI case categorizations.

Each hospital worked with its own information technology team to apply the general automated CDI surveillance algorithm to the data available at its facility. In this study, data availability and type of data varied from hospital to hospital, thus impacting the accuracy of the automated algorithm. This issue is illustrated by hospital D, which performed the best at categorizing community-onset CDI because it had a discrete variable that captured where patients were admitted from.

There are potential limitations to the use of an automated CDI surveillance algorithm. Electronic surveillance requires access to an electronic health record (EHR) system. Only about 12% of US hospitals have an EHR system (7). To develop an automated algorithm, surveillance rules need to be translated into electronic algorithm rules. This can lead to algorithms that vary from site to site based on data availability. As a result, each center can potentially have different rules for the same infection, resulting in different rates and making inter-hospital comparisons difficult (3).

Another limitation of using an automated CDI surveillance algorithm is that chart review is not performed. While the lack of chart review is mitigated by enforcing toxin testing of only diarrheal stool, misclassification is still possible. It is possible that a true community-onset CDI case could be misclassified as a hospital-onset CDI case if stool was collected after the hospital-onset cut-off date. In addition, patients with a positive assay for C. difficile may not have clinically significant diarrhea, and therefore do not truly have CDI. This may be especially problematic at hospitals that use nucleic acid amplification tests (8).

This study found automated electronic CDI surveillance to be highly sensitive and specific for identifying cases of hospital-onset; community-onset, study center-associated; and recurrent CDI. Automated CDI surveillance will allow infection preventionists to devote more time to infection prevention efforts. In addition, automated CDI surveillance may facilitate a healthcare facility’s ability to track community-onset CDI. Community-onset CDI likely contributes to hospital-onset CDI because patients admitted to a healthcare facility with CDI are a source of C. difficile transmission to other patients. Understanding the burden of community-onset CDI may allow for targeting of CDI prevention efforts (2). Implementing an automated algorithm utilizing electronically available data is feasible and reliable.

Acknowledgments

This work was supported by grants from the Centers for Disease Control and Prevention (UR8/CCU715087-06/1 and 5U01C1000333 to Washington University, 5U01CI000344 to Eastern Massachusetts, 5U01CI000328 to The Ohio State University, and 5U01CI000334 to University of Utah) and the National Institutes of Health (K23AI065806, K24AI06779401, K01AI065808 to Washington University).

Footnotes

DISCLOSURES

ERD: research: Optimer, Merck; consulting: Optimer, Merck, Sanofi-Pasteur, and Pfizer

HAN, DSY, JM, KBS, JEM, YMK, VJF: no disclosures

Findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention. Preliminary data were presented in part at the 21st Annual Society of Healthcare Epidemiology of America, Dallas, TX (Apr 1 – 4, 2011), abstract number 157.

References

- 1. McDonald LC, Coignard B, Dubberke E, et al. Recommendations for Surveillance of Clostridium difficile-Associated Disease. Infect Control Hosp Epidemiol. 2007;28:140–145. doi: 10.1086/511798.

- 2. Dubberke ER, Butler AM, Hota B, et al. Multicenter study of the impact of community-onset Clostridium difficile infection on surveillance for C. difficile infection. Infect Control Hosp Epidemiol. 2009;30:518–525. doi: 10.1086/597380.

- 3. Hota B, Lin M, Doherty JA, et al. Formulation of a model for automating infection surveillance: algorithmic detection of central-line associated bloodstream infection. J Am Med Inform Assoc. 2010;17:42–48. doi: 10.1197/jamia.M3196.

- 4. CDC. Multidrug-Resistant Organism (MDRO) and Clostridium difficile-Associated Disease (CDAD) Module. PowerPoint Slides. 2008. Retrieved from http://www.cdc.gov/nhsn/wc_MDRO_CDAD_ISlabID.html.

- 5. Dubberke ER, Butler AM, Yokoe DS, et al. Multicenter study of Clostridium difficile infection rates from 2000 to 2006. Infect Control Hosp Epidemiol. 2010;31:1030–1037. doi: 10.1086/656245.

- 6. Klompas M, Kleinman K, Platt R. Development of an algorithm for surveillance of ventilator-associated pneumonia with electronic data and comparison of algorithm results with clinician diagnoses. Infect Control Hosp Epidemiol. 2008;29:31–37. doi: 10.1086/524332.

- 7. Jha AK, DesRoches CM, Kralovec PD, Joshi MS. A progress report on electronic health records in U.S. hospitals. Health Affairs. 2010;29:1951–1957. doi: 10.1377/hlthaff.2010.0502.

- 8. Dubberke ER, Han Z, Bobo L, Lawrence B, Copper S, Hoppe-Bauer J, Burnham CA, Dunne WM Jr. Impact of Clinical Symptoms on Interpretation of Diagnostic Assay for Clostridium difficile Infections. J Clin Microbiol. 2011;49:2887–2893. doi: 10.1128/JCM.00891-11.