Abstract

Study Objective:

To determine variations in interobserver and intraobserver agreement of drug-induced sleep endoscopy (DISE) in a cohort of experienced versus nonexperienced ear, nose, and throat (ENT) surgeons.

Design:

Prospective, blinded agreement study.

Setting:

Ninety-seven ENT surgeons (90 nonexperienced with DISE; seven experienced) observed six different DISE videos and were asked to score the upper airway (UA) level (palate, oropharynx, tongue base, hypopharynx, epiglottis), direction (anteroposterior, concentric, lateral), and degree of collapse (none; partial or complete collapse). Findings were collected and analyzed, determining interobserver and intraobserver agreement [overall agreement (OA), specific agreement (SA)] and kappa values per UA level.

Measurement and Results:

In the nonexperienced group, overall interobserver agreement on presence of tongue base collapse (OA = 0.63; kappa = 0.33) was followed by the agreement on epiglottis (OA = 0.57; kappa = 0.23) and oropharynx collapse (OA = 0.45; kappa = 0.09). Low overall interobserver agreement in this group was found for hypopharyngeal collapse (OA = 0.33; kappa = 0.08). A similar ranking was found for degree of collapse. For direction of collapse, high interobserver agreement was found for the palate (OA = 0.57; kappa = 0.16). Among the experienced observers, overall interobserver agreement was highest for presence of tongue base collapse (OA = 0.93; kappa = 0.71), followed by collapse of the palate (OA = 0.80; kappa = 0.51). In this group, lowest agreement was also found for hypopharyngeal collapse (OA = 0.47; kappa = 0.03). Interobserver agreement on direction of collapse was highest for epiglottis collapse (OA = 0.97; kappa = 0.97). Concerning the degree of collapse, highest agreement was found for degree of oropharyngeal collapse (OA = 0.82; kappa = 0.66). Among the experienced observers, statistically significantly higher interobserver agreement was obtained for presence, direction, and degree of oropharyngeal collapse, as well as for presence of tongue base collapse and degree of epiglottis collapse. Among the nonexperienced observers, high intraobserver agreement was found in particular for tongue base and epiglottis collapse. Among the experienced observers, high agreement was found for all levels but to a lesser extent for hypopharyngeal collapse. Intraobserver agreement was statistically significantly higher in the experienced group for all UA levels except for the hypopharynx.

Conclusion:

This study indicates that both interobserver and intraobserver agreement were higher in experienced versus nonexperienced ENT surgeons. Agreement ranged from poor to excellent in both groups. The current results suggest that experience in performing DISE is necessary to obtain reliable observations.

Citation:

Vroegop AVMT; Vanderveken OM; Wouters K; Hamans E; Dieltjens M; Michels NR; Hohenhorst W; Kezirian EJ; Kotecha BT; de Vries N; Braem MJ; Van de Heyning PH. Observer variation in drug-induced sleep endoscopy: experienced versus nonexperienced ear, nose, and throat surgeons. SLEEP 2013;36(6):947-953.

Keywords: Drug-induced sleep endoscopy, interobserver agreement, intraobserver agreement, obstructive sleep apnea, sleep disordered breathing, sleep related breathing disorders

INTRODUCTION

Obstructive sleep apnea (OSA) is a syndrome characterized by recurrent episodes of apnea and hypopnea during sleep that are caused by repetitive upper airway (UA) collapse and often result in decreased oxygen blood levels and arousal from sleep.1 Continuous positive airway pressure (CPAP) therapy is considered the gold standard treatment for moderately severe OSA, but in patients with mild to moderate OSA or in cases of CPAP failure, oral appliance therapy and/or UA surgery can be considered.2–4 Identification of predictors of treatment outcome is important in selecting patients who might benefit from non-CPAP therapies.5–7 However, the ability to preselect suitable patients for non-CPAP treatment in daily clinical practice is limited by criteria based on individual elements associated with UA behavior.

The aim of UA evaluation is not only to gain better insight into the complex pathophysiology of UA collapse but also to improve treatment success rates by selecting the most appropriate therapeutic option for the individual patient.8,9 Sleep nasendoscopy or drug-induced sleep endoscopy (DISE), first described by Croft and Pringle in 1991,10 uses a flexible nasopharyngoscope to visualize the UA under sedation. DISE enables evaluation of the localization of flutter and collapse in patients with sleep disordered breathing. During the procedure, UA collapse patterns can be assessed when alternatives to CPAP are considered. It has shown its value in optimizing patient selection for surgical UA interventions and can also be helpful in selecting patients for oral appliance therapy.11,12 Its validity and reliability in experienced observers have been demonstrated in the literature13,14; however, assessment is subjective and may vary based on clinical experience. Although several scoring systems have been introduced over the years,15–23 no standard approach toward assessment and classification of DISE findings has been universally adopted.

DISE is a relatively new procedure to most general ear, nose, and throat (ENT) surgeons. Because further development and implementation of this procedure in the interdisciplinary field of sleep medicine is expected, assessment of the evaluation technique could be of interest to improve its application. The purpose of the current study was to assess differences in DISE evaluation by experienced versus nonexperienced observers. Therefore, observer agreement values were calculated in a cohort of 97 ENT surgeons, of whom seven were experienced with DISE.

METHODS

Study Design

This prospective study comprised a blinded multiobserver assessment of six DISE videos. These specific videos were selected because of the distinctive UA collapse patterns recorded. The DISE videos were shown to the observers twice in a randomized order, at two different time points.

Patients

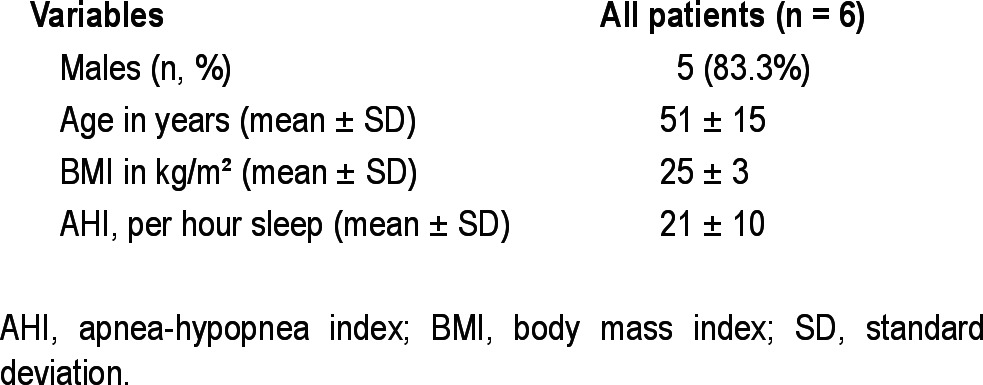

The DISE videos originated from six different patients with OSA, who were reluctant about or non-responding to CPAP, or who had failed to use CPAP and underwent DISE (baseline characteristics shown in Table 1). Each DISE video represented one unique OSA patient. DISE was performed by an experienced ENT surgeon in a semidark and silent operating room with the patient lying supine in a hospital bed. Artificial sleep was induced by intravenous administration of midazolam (bolus injection of 1.5 mg) and propofol through a target-controlled infusion system (2.0-2.5 μg/mL). During the procedure, electrocardiography and oxygen saturation were continuously monitored. A fiberlaryngoscope (Olympus ENF-GP, diameter 3.7 mm, Olympus Europe GmbH, Hamburg, Germany) was used for videoscopic imaging, and simultaneous sound recording was performed.

Table 1.

Baseline characteristics of patients

Observers

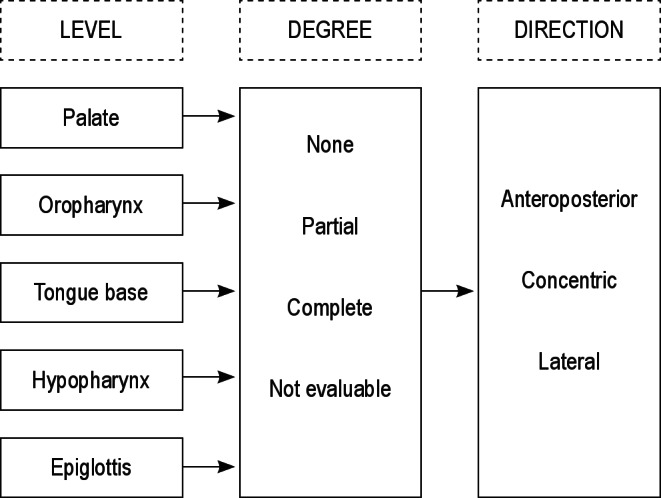

The cohort consisted of 97 observers, who were invited to participate in this project during a 2-day conference. Ninety observers were general ENT surgeons with diverse clinical interests, who were nonexperienced with DISE. The other seven observers were experienced with DISE, as they perform the procedure on a regular basis in specialized sleep clinics and also conduct clinical research on this topic. A subset of observers was able to assess the DISE videos twice, as these observers were available on both days of the conference. None of the observers had prior knowledge of patient history, including previous CPAP and non-CPAP treatment, polysomnography results, and findings on clinical examination or planned treatment. Each observer was asked to note his or her conclusions as to the level(s) of collapse and the degree and direction thereof, using a uniform scoring system (Figure 1). The scoring system consisted of anatomic UA landmarks and was based on key elements of scoring systems recently proposed in the literature.21–24

Figure 1.

Reporting of the assessment of the level, the corresponding degree, and direction of upper airway collapse patterns.

Statistical Analysis

Data analysis was performed using R version 2.15.0 (R Foundation for Statistical Computing, Vienna, Austria). Descriptive statistics for clinical characteristics of patients were presented as means ± standard deviation (SD). Raw agreement among the observers was calculated by dividing the number of actual agreements by the total number of possible agreements. This was done for global agreement per UA level [overall agreement (OA)] and per specific response option [specific agreement (SA)].25 Additionally, Fleiss kappa values were determined to correct for chance agreement. For the intraobserver agreement, raw agreement and kappa values were calculated per observer and averaged over all observers. For both interobserver and intraobserver agreement, 95% confidence intervals were obtained using the bootstrap with 1,000 samples on the level of the observer.26,27 For the nonexperienced observers this means that 1,000 samples, containing 90 × 6 videos, were taken with replacement from the 90 observers. Similarly, for the experienced observers, 1,000 samples were taken from seven observers. Bootstrap P values for the differences between experienced and nonexperienced raters were computed by pooling the experienced and nonexperienced raters together. Observers were then repeatedly artificially classified into experienced and nonexperienced in the same ratio as the original sample, and the number of times the difference exceeded the observed value was counted.
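The agreement measures described above can be illustrated with a minimal Python sketch. It is not the R code used in the study. The data layout (`ratings[o][v]` holding the category that observer `o` assigned to video `v` for one scoring item), all function names, the percentile method for the confidence interval, and the toy data are assumptions made purely for illustration.

```python
import random
from collections import Counter


def category_counts(ratings):
    """Return one Counter per video: category -> number of observers choosing it."""
    n_videos = len(ratings[0])
    return [Counter(obs[v] for obs in ratings) for v in range(n_videos)]


def overall_agreement(ratings):
    """Agreeing observer pairs divided by all possible observer pairs.
    Ordered pairs are counted in both numerator and denominator."""
    n = len(ratings)
    counts = category_counts(ratings)
    agreeing = sum(c * (c - 1) for video in counts for c in video.values())
    return agreeing / (len(counts) * n * (n - 1))


def specific_agreement(ratings, category):
    """Agreement restricted to one response option (for example "yes")."""
    n = len(ratings)
    counts = category_counts(ratings)
    num = sum(video[category] * (video[category] - 1) for video in counts)
    den = sum(video[category] * (n - 1) for video in counts)
    return num / den if den else float("nan")


def fleiss_kappa(ratings):
    """Chance-corrected agreement for multiple observers (Fleiss kappa)."""
    n = len(ratings)
    counts = category_counts(ratings)
    p_obs = overall_agreement(ratings)
    totals = Counter()
    for video in counts:
        totals.update(video)
    # Expected agreement from the marginal proportion of each category.
    p_exp = sum((t / (n * len(counts))) ** 2 for t in totals.values())
    return (p_obs - p_exp) / (1 - p_exp) if p_exp < 1 else float("nan")


def bootstrap_ci(ratings, statistic, n_samples=1000, alpha=0.05, seed=0):
    """Percentile interval (assumed method) from resampling observers with
    replacement; `statistic` must return finite values for every resample."""
    rng = random.Random(seed)
    n = len(ratings)
    stats = sorted(
        statistic([ratings[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_samples)
    )
    lower = stats[int(alpha / 2 * n_samples)]
    upper = stats[int((1 - alpha / 2) * n_samples) - 1]
    return lower, upper


# Hypothetical toy data: 4 observers scoring presence of collapse on 3 videos.
toy = [
    ["yes", "yes", "no"],
    ["yes", "no", "no"],
    ["yes", "yes", "no"],
    ["yes", "no", "yes"],
]
print(round(overall_agreement(toy), 3))  # 0.611
print(round(fleiss_kappa(toy), 3))       # 0.2
print(bootstrap_ci(toy, overall_agreement))
```

In this toy example, 22 of the 36 ordered observer pairs agree, which gives OA = 0.61, while the chance correction reduces this to kappa = 0.20.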

RESULTS

Interobserver Variation

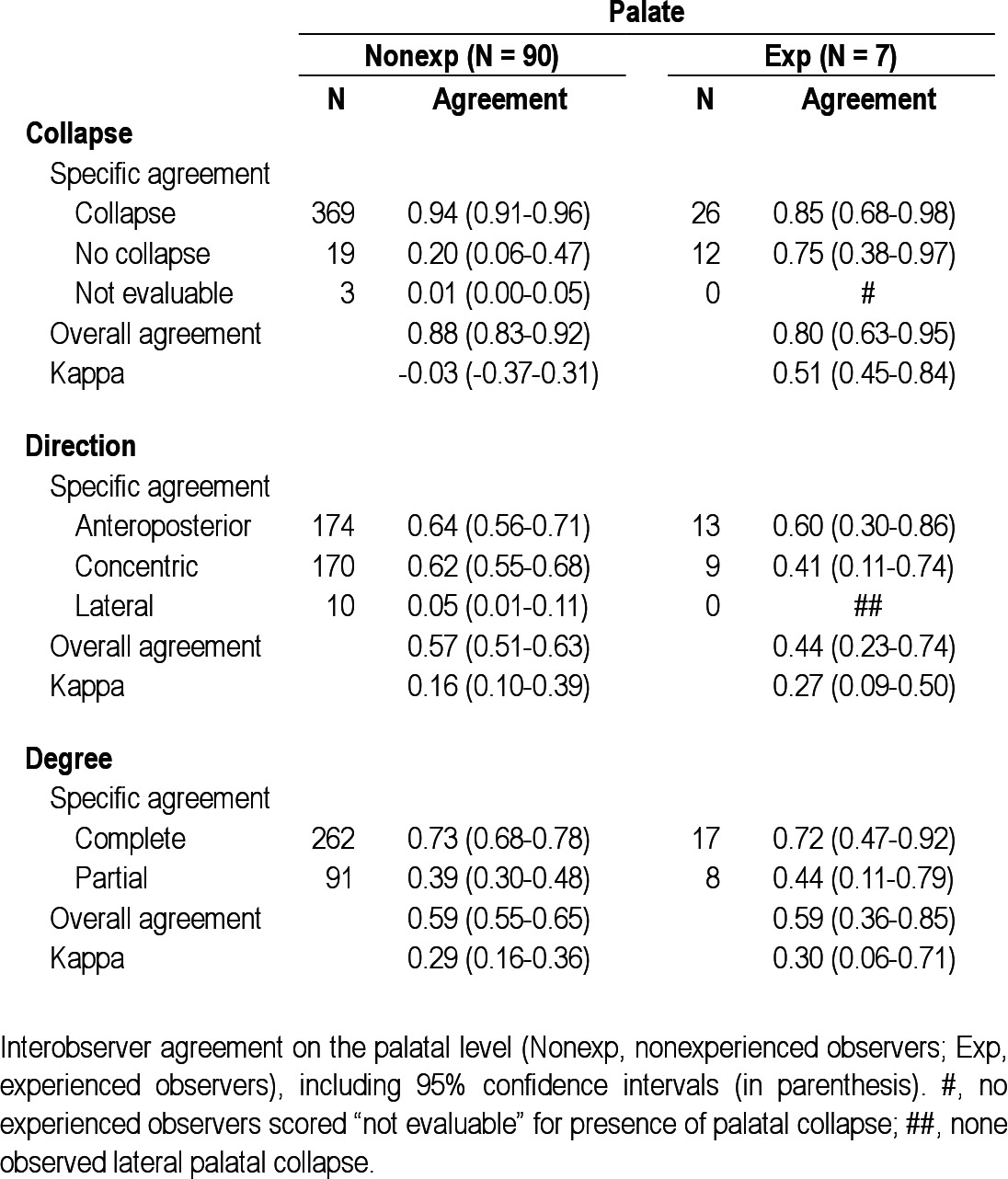

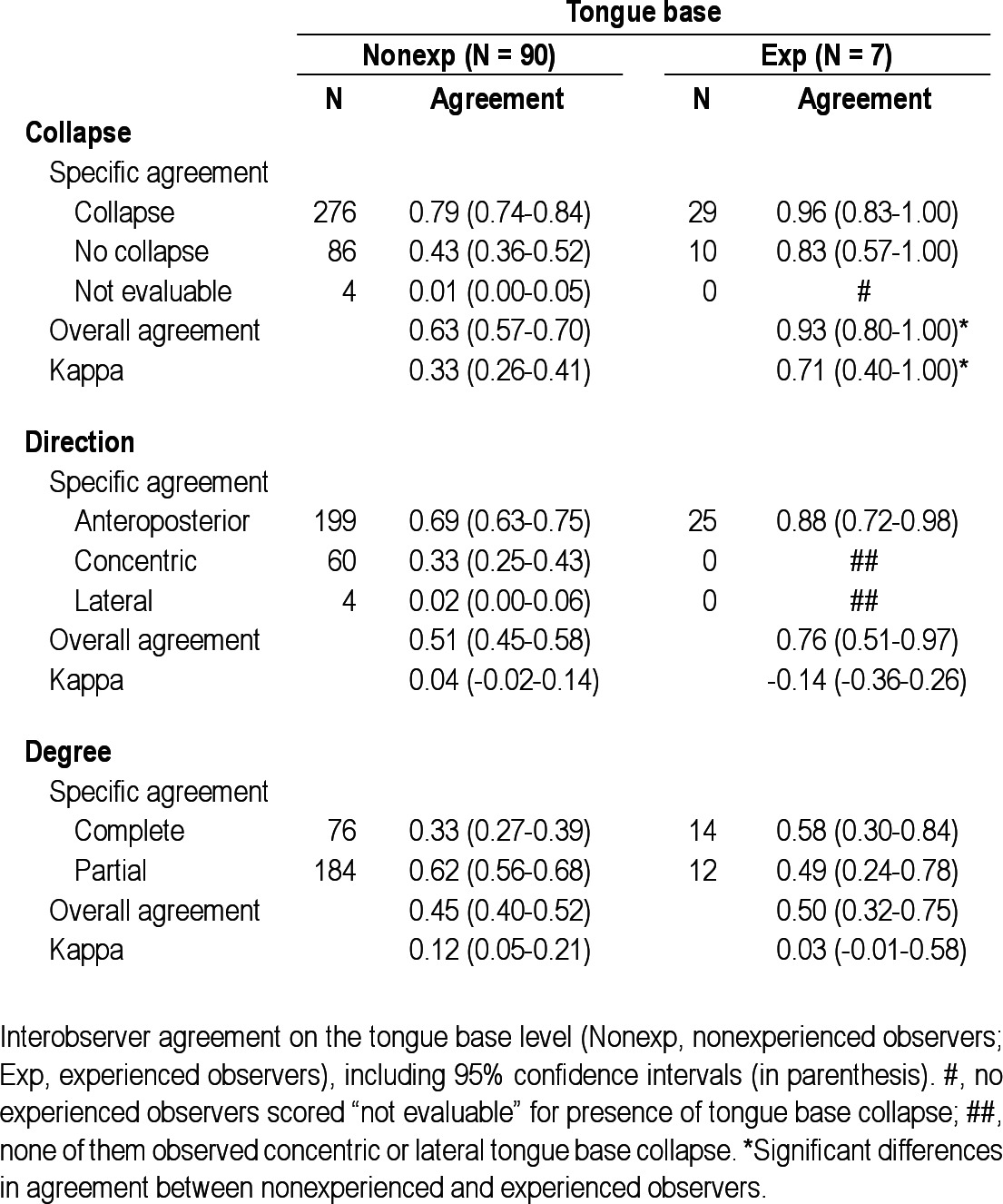

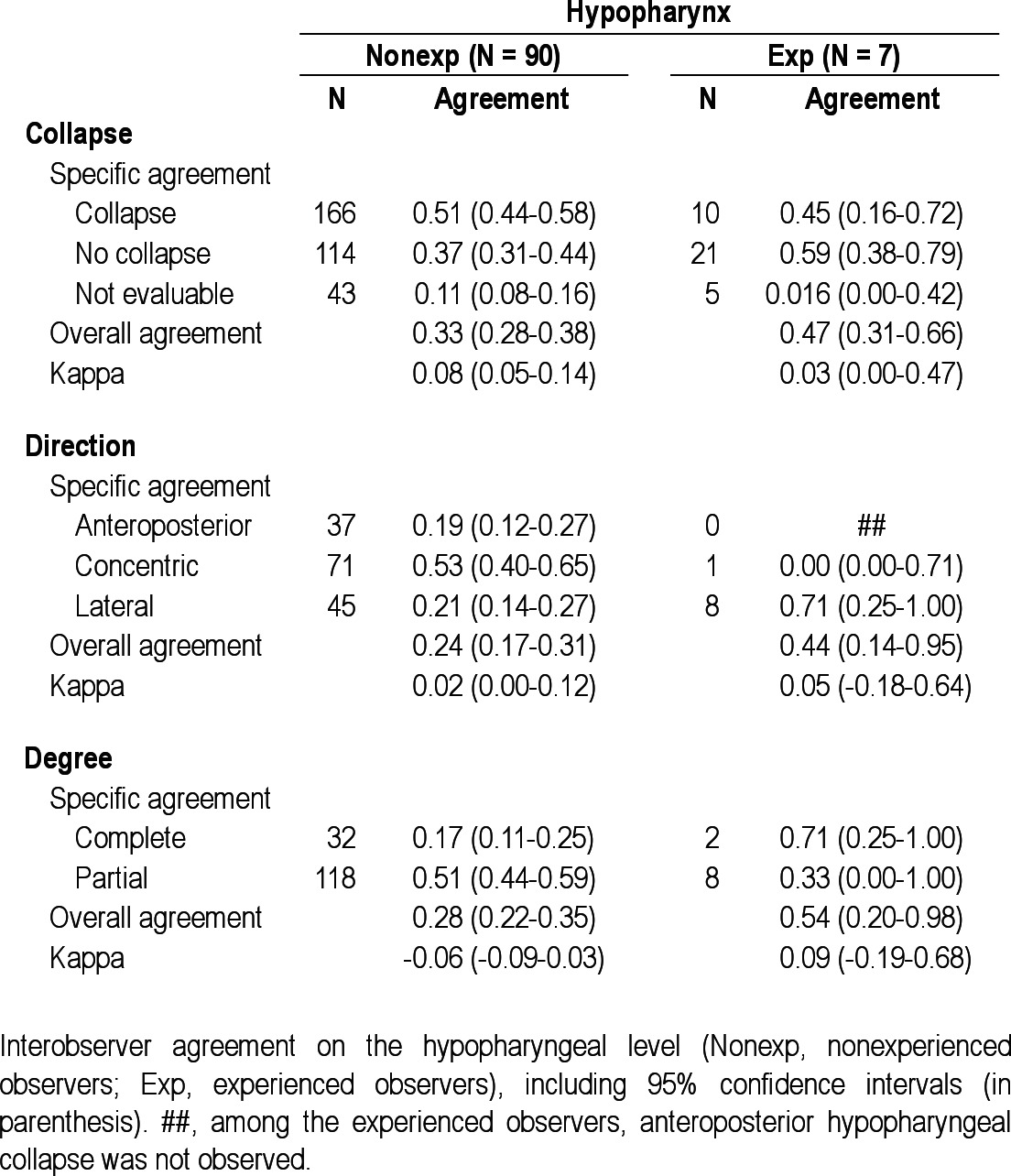

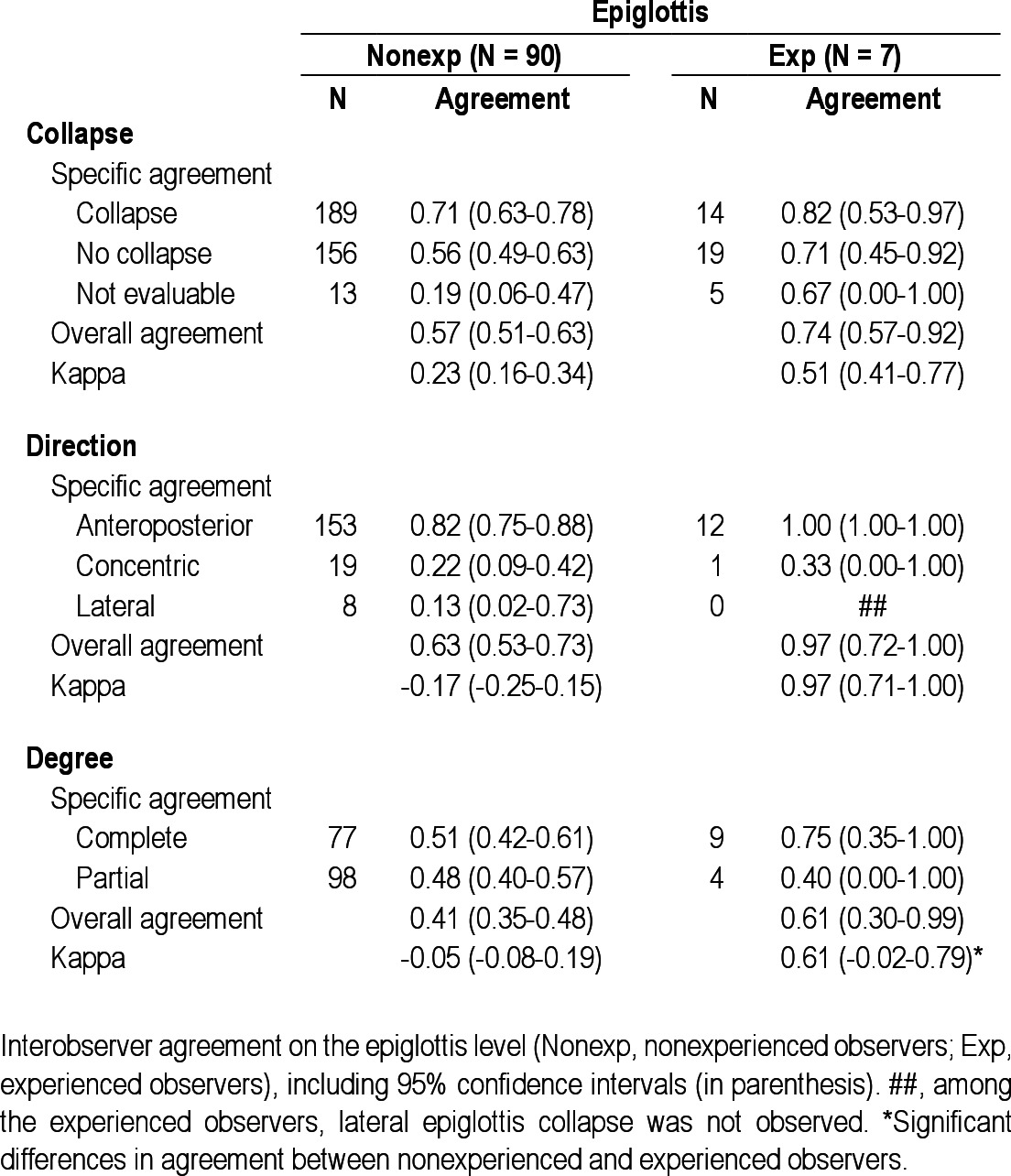

Specific and overall interobserver agreement values per UA level are shown in Tables 2–6. In the nonexperienced group (n = 90), overall interobserver agreement was highest for presence of palatal collapse (OA = 0.88), although the kappa value for the presence of palatal collapse was lowest (-0.03). The agreement on the presence of tongue base collapse (OA = 0.63; kappa = 0.33) was followed by the agreement on epiglottis (OA = 0.57; kappa = 0.23) and oropharynx collapse (OA = 0.45; kappa = 0.09). Low overall interobserver agreement in this group was found for hypopharyngeal collapse (OA = 0.33; kappa = 0.08). A similar ranking was found for degree of collapse. For direction of collapse, high interobserver agreement was found for the palate (OA = 0.57; kappa = 0.16).

Table 2.

Interobserver agreement on the palatal level

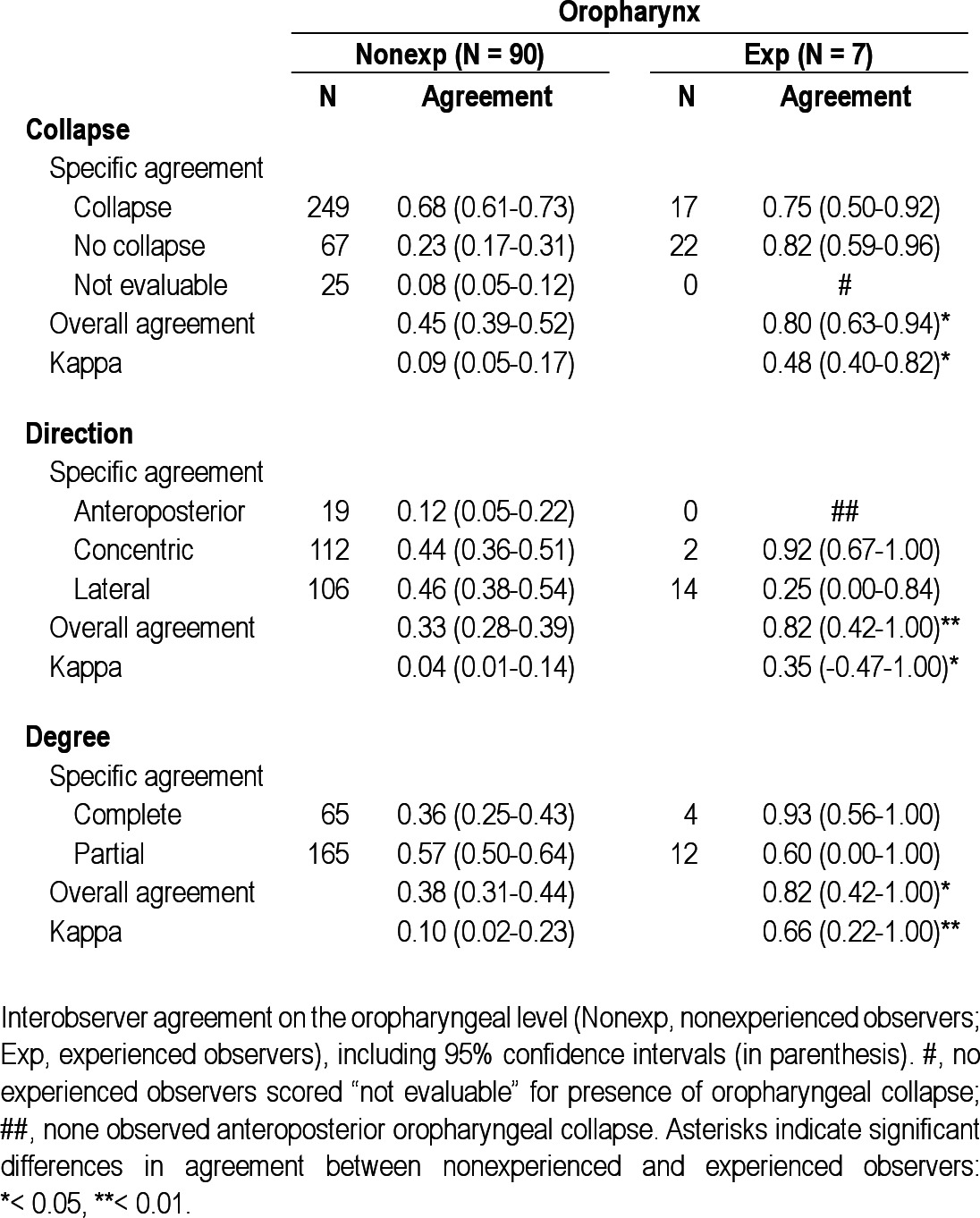

Table 3.

Interobserver agreement on the oropharyngeal level

Table 4.

Interobserver agreement on the tongue base level

Table 5.

Interobserver agreement on the hypopharyngeal level

Table 6.

Interobserver agreement on the epiglottis level

In the experienced group (n = 7), no observers scored “not evaluable” for presence of collapse at the palate, oropharynx, or tongue base (marked with a number sign (#) in Tables 2 through 6). Overall interobserver agreement was highest for presence of tongue base collapse (OA = 0.93; kappa = 0.71), followed by collapse of the palate (OA = 0.80; kappa = 0.51). In this group, lowest agreement was also found for hypopharyngeal collapse (OA = 0.47; kappa = 0.03). Interobserver agreement on direction of collapse was highest for epiglottis collapse (OA = 0.97; kappa = 0.97), followed by oropharynx (OA = 0.82; kappa = 0.35). Among the experienced observers, several specific structure-configurations for direction of collapse were not observed (marked with double number signs (##) in Tables 2 through 6). Concerning the degree of collapse, highest agreement was found for degree of oropharyngeal collapse (OA = 0.82; kappa = 0.66).

Among the experienced observers, statistically significantly higher interobserver agreement was obtained for presence, direction, and degree of oropharyngeal collapse, as well as for presence of tongue base collapse and degree of epiglottis collapse.

Intraobserver Variation

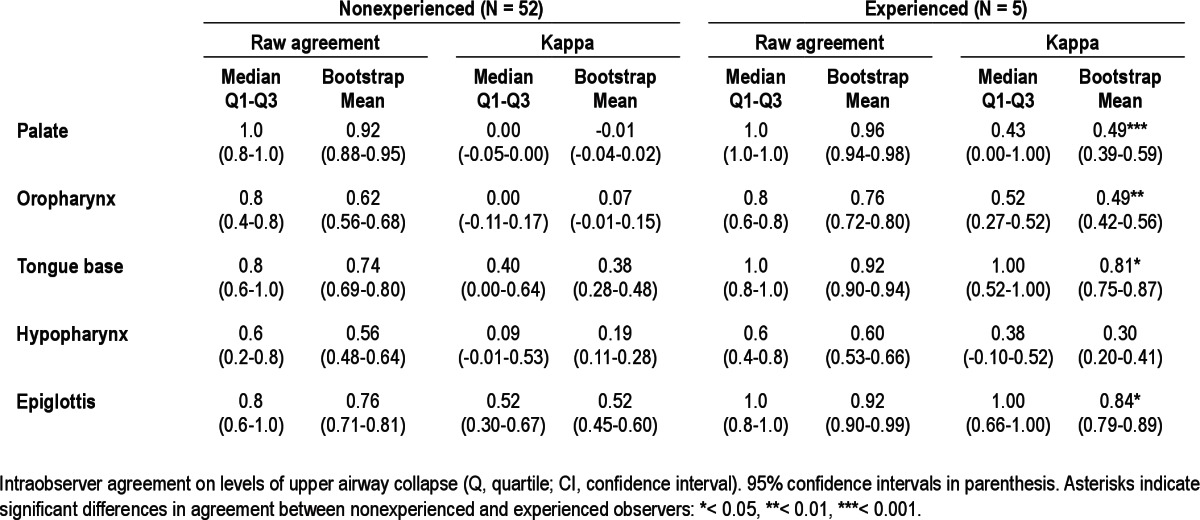

The intraobserver agreement per UA level is presented in Table 7. Among the nonexperienced observers, high intraobserver agreement was found in particular for tongue base and epiglottis collapse. Among the experienced observers, high agreement was found for all levels but to a lesser extent for hypopharyngeal collapse. Intraobserver agreement was statistically significantly higher in the experienced group, for all UA levels except for the hypopharynx. In total, 52 nonexperienced observers and five experienced observers were available to score the videos twice.
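The intraobserver comparison between groups, as outlined in the Statistical Analysis section, can be sketched as follows. This is an illustrative Python sketch rather than the study's own R code. The function names, the two-sided comparison of absolute differences, and the example values are assumptions; the source only states that observers were repeatedly reclassified in the original ratio and that the number of times the difference exceeded the observed value was counted.

```python
import random


def intraobserver_agreement(first, second):
    """Fraction of videos that one observer scored identically at both viewings."""
    return sum(a == b for a, b in zip(first, second)) / len(first)


def mean(values):
    return sum(values) / len(values)


def resampling_p_value(experienced, nonexperienced, n_samples=1000, seed=0):
    """experienced / nonexperienced: per-observer agreement values.
    Observers are pooled and repeatedly relabeled in the original group ratio."""
    rng = random.Random(seed)
    observed = mean(experienced) - mean(nonexperienced)
    pooled = experienced + nonexperienced
    k = len(experienced)
    exceed = 0
    for _ in range(n_samples):
        rng.shuffle(pooled)
        diff = mean(pooled[:k]) - mean(pooled[k:])
        # Assumption: two-sided test on the absolute difference.
        if abs(diff) >= abs(observed):
            exceed += 1
    return exceed / n_samples


# Hypothetical example: one observer scoring three videos on two days.
print(intraobserver_agreement(["yes", "no", "yes"], ["yes", "yes", "yes"]))
```

Per-observer values from `intraobserver_agreement` would be averaged within each group, and `resampling_p_value` would then be applied to the two lists of per-observer values (five experienced and 52 nonexperienced observers in this study).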

Table 7.

Intraobserver agreement

DISCUSSION

This study describes variations in the interobserver and intraobserver agreement of DISE in a large cohort of ENT surgeons, consisting of both experienced and non-experienced DISE observers. The current results indicate that as a rule, agreement is higher among experienced observers, and therefore experience in performing DISE is necessary to obtain reliable results. Training could be beneficial to ENT surgeons new to this emerging technique. Higher interobserver agreement was found in particular for oropharyngeal, tongue base, and epiglottis collapse. Intraobserver agreement was higher among the experienced raters, also in particular for the oropharynx, tongue base, and epiglottis (Table 7).

The difference in interobserver agreement on palatal collapse between the nonexperienced and experienced ENT surgeons might be due to a higher tendency of nonexperienced observers to see a collapse at this specific level, as it is the most common site of snoring,19 which does not necessarily imply collapse. The differences in overall interobserver agreement for this specific UA level might thus be explained by an artificially high agreement among the nonexperienced observers, rather than a substantially lower agreement among the experienced raters. The low kappa value for presence of collapse of this specific UA level in the nonexperienced group might endorse this explanation. Least interobserver agreement was found for hypopharyngeal collapse in both the nonexperienced and experienced groups. General ENT surgeons regularly perform endoscopic UA procedures as part of their routine clinical practice but might be less accustomed to evaluation of the hypopharynx as an isolated entity of the UA tract. The low agreement for hypopharyngeal collapse in the experienced group might be attributed to the use of different scoring systems in specific specialized centers,13,15,21 in which qualification of the hypopharynx is particularly subject to different approaches. Also, evaluation of the hypopharyngeal level in general can be hindered by collapse at a higher UA level where the tip of the nasopharyngoscope is positioned to observe the hypopharynx. This phenomenon is not present when assessing the palate, as the nasopharyngoscope is then positioned in the nasopharynx, which is not part of the collapsible segment of the UA. Furthermore, the hypopharynx has less defined structural boundaries, compared with other UA levels, e.g., the palate.

The experienced observers appeared to find identification of the presence of UA collapse at the level of the palate, oropharynx, and tongue base less cumbersome, having not scored “not evaluable” for these levels, unlike the nonexperienced observers. Also, the absence of certain structure-configurations in the ratings of the experienced observers is prominent. This is particularly true for the absence of lateral palatal collapse, anteroposterior oropharyngeal collapse, concentric or lateral tongue base collapse, anteroposterior hypopharyngeal collapse, and lateral epiglottis collapse.

This study is the first to specifically describe differences in interobserver and intraobserver agreement between nonexperienced and experienced ENT surgeons. The current results on interobserver agreement in our subgroup of experienced ENT surgeons are in accordance with previous findings in the field, although the study designs are only roughly comparable.13,14 Kezirian et al.13 found that the reliability of global assessment of obstruction was somewhat higher than the degree of obstruction, especially for the hypopharynx. Rodriguez-Bruno et al.14 found high interobserver and intraobserver agreement for obstruction at the level of the tonsils, followed by the epiglottis. There are major differences with the current study: the number of experienced observers (Kezirian et al. study: two; Rodriguez-Bruno et al. study: two; current study: seven), number of video segments shown (Kezirian et al. study: 108; Rodriguez-Bruno et al. study: 32; current study: six), and the type of scoring system used. Furthermore, Kezirian et al.13 found that among experienced surgeons, assessment of the palate, tongue, and epiglottis showed greater reliability than for other structures, whereas in the current study, higher agreement was found for palatal, oropharyngeal, and tongue base collapse. As the palate and tongue base are better-defined UA structures, this might partially explain the findings of consistently better reliability on these specific levels.

The current study provides important additional insights into what is known of interobserver and intraobserver variability in DISE, as it was conducted in a large cohort of general ENT surgeons, most of whom were nonexperienced with DISE and not yet specifically trained to assess DISE findings. All observers were blinded to baseline characteristics of the study participants, so no confounding effects of variables (such as the apnea-hypopnea index or body mass index) were to be expected. In recent literature on the value of DISE, it was suggested that DISE is most relevant when considering tongue base surgery or oral appliance therapy9; therefore, the high interobserver and intraobserver agreement found for tongue base collapse among the experienced ENT surgeons in the current study might be of particular importance. Furthermore, results on interobserver and intraobserver agreement in a cohort of both experienced and nonexperienced observers may help to detect differences in assessment for varying levels of training. A possible solution to the unreliability of DISE findings on particular levels could be training ENT surgeons with a set of standardized DISE videos to foster consistency for scoring the UA level variables. To do so, the use of a standardized and universally accepted DISE scoring system will also be essential.

There are several limitations to this study. Ideally, intraobserver agreement is to be determined based on more ratings, which was practically not feasible in the study setting. A fuller set of DISE samples, potentially including more different patterns, would have allowed more robust reliability testing of the scoring system. Furthermore, there were only seven observers in the experienced group. However, the current setting was considered sufficient to demonstrate and verify differences in agreement in experienced versus nonexperienced observers, which was the main goal of this study. As for the statistical analysis, it is to be mentioned that kappa coefficients have an important drawback in the current study design, because the kappa value is heavily dependent on the observed marginal frequencies. Some specific UA collapse configurations (i.e., lateral palatal collapse, anteroposterior oropharyngeal collapse) are generally rare or unlikely to be observed. This leads to lower kappa values and can therefore be severely misleading in interpreting the agreement among the observers. The observers participating in the current study were possibly acquainted with different DISE scoring systems at their home clinics. Using an unfamiliar uniform study scoring system while assessing the DISE video segments may have been cumbersome. Furthermore, only segments of the DISE videos were shown, although this was considered a minor limitation, as the main goal was to assess interobserver and intraobserver variation and not to provide a treatment plan. The heterogeneity of the observer group is to be considered relative in this context. Indeed, there are differences in levels of experience within the subcategories of nonexperienced and experienced. However, this aspect reflects clinical reality and may help to emphasize the need for specific training in DISE assessment in daily clinical practice.
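The dependence of kappa on the marginal frequencies can be made concrete with a small worked example. The Python sketch below uses invented count tables (10 observers, 6 videos) rather than study data, and the function name is hypothetical. Both tables have the same raw agreement, but in the first nearly every rating is "yes", whereas in the second the two response options are equally frequent.

```python
def agreement_and_kappa(count_table):
    """count_table: one dict per video, mapping category -> number of observers.
    Returns (overall agreement, Fleiss kappa); every video needs the same
    number of observers."""
    n = sum(count_table[0].values())
    n_videos = len(count_table)
    p_obs = sum(c * (c - 1) for row in count_table for c in row.values())
    p_obs /= n_videos * n * (n - 1)
    categories = {cat for row in count_table for cat in row}
    # Expected chance agreement from the marginal proportion of each category.
    p_exp = sum(
        (sum(row.get(cat, 0) for row in count_table) / (n * n_videos)) ** 2
        for cat in categories
    )
    return p_obs, (p_obs - p_exp) / (1 - p_exp)


# Hypothetical skewed marginals: 9 of 10 observers score "yes" on every video.
skewed = [{"yes": 9, "no": 1}] * 6
# Hypothetical balanced marginals: "yes" dominates on 3 videos, "no" on 3.
balanced = [{"yes": 9, "no": 1}] * 3 + [{"yes": 1, "no": 9}] * 3

for name, table in (("skewed", skewed), ("balanced", balanced)):
    oa, kappa = agreement_and_kappa(table)
    print(name, round(oa, 2), round(kappa, 2))
# skewed 0.8 -0.11
# balanced 0.8 0.6
```

With identical overall agreement (OA = 0.80), kappa falls from 0.60 to -0.11 once one response option dominates. This is the same pattern as the high OA with a near-zero kappa reported for presence of palatal collapse in the nonexperienced group.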

In conclusion, the reported findings on interobserver and intraobserver agreement in a cohort of ENT surgeons, both nonexperienced and experienced with DISE, demonstrate that overall observer agreement was higher in experienced versus nonexperienced ENT surgeons, suggesting that experience in performing DISE is necessary to obtain reliable observations. Training under the guidance of an experienced surgeon might be helpful for those nonexperienced with DISE. These findings are new to the field and add insights into the validity of the technique. If DISE is to be extended into general ENT practice, the need for a standardized scoring system increases.

DISCLOSURE STATEMENT

This was not an industry supported study. Dr. Hamans has consulted for Philips Healthcare. Dr. Hohenhorst is investigator for Inspire Medical Systems, Inc. Dr. Kezirian is on the Medical Advisory Board of Apnex Medical and ReVENT Medical and has consulted for Apnex Medical, ArthroCare, Medtronic, Pavad Medical, and Magnap (Intellectual Property Rights). Dr. de Vries is a medical advisor for MSD, ReVENT, and Night-Balance; investigator for Inspire Medical Systems, Inc.; consultant for Philips; and has stock options in ReVENT. The other authors indicated no financial conflicts of interest. No product was used for an indication not in the approved labeling, and no investigational products were used.

ACKNOWLEDGMENTS

The authors express their gratitude to all ENT surgeons who collaborated in this project. Parts of this study were presented as a poster at the SLEEP 2012 26th Annual Meeting of the Associated Professional Sleep Societies (APSS). The work was supported by the Agency for Innovation by Science and Technology (IWT) of the Flemish Government (Belgium), project number 090864.

ABBREVIATIONS

- CPAP: continuous positive airway pressure

- DISE: drug-induced sleep endoscopy

- ENT: ear, nose, and throat

- OSA: obstructive sleep apnea

- OA: overall agreement

- SA: specific agreement

- SD: standard deviation

- UA: upper airway

REFERENCES

- 1. Sleep-related breathing disorders in adults: recommendations for syndrome definition and measurement techniques in clinical research. The Report of an American Academy of Sleep Medicine Task Force. Sleep. 1999 Aug 1;:667–89.

- 2. Sullivan CE, Issa FG, Berthon-Jones M, Eves L. Reversal of obstructive sleep apnoea by continuous positive airway pressure applied through the nares. Lancet. 1981;1:862–5. doi: 10.1016/s0140-6736(81)92140-1.

- 3. Epstein LJ, Kristo D, Strollo PJ, Jr, et al. Clinical guideline for the evaluation, management and long-term care of obstructive sleep apnea in adults. J Clin Sleep Med. 2009;5:263–76.

- 4. Kushida CA, Morgenthaler TI, Littner MR, et al. Practice parameters for the treatment of snoring and Obstructive Sleep Apnea with oral appliances: an update for 2005. Sleep. 2006;29:240–3. doi: 10.1093/sleep/29.2.240.

- 5. Kezirian EJ, Goldberg AN. Hypopharyngeal surgery in obstructive sleep apnea: an evidence-based medicine review. Arch Otolaryngol Head Neck Surg. 2006;132:206–13. doi: 10.1001/archotol.132.2.206.

- 6. Vanderveken OM, Vroegop AV, Van de Heyning P, Braem MJ. Drug-induced sleep endoscopy completed with a simulation bite approach for the prediction of the outcome of treatment of obstructive sleep apnea with mandibular repositioning appliances. Operative Techniques in Otolaryngology - head and neck surgery. 2011;22:175–82.

- 7. Johal A, Battagel JM, Kotecha BT. Sleep nasendoscopy: a diagnostic tool for predicting treatment success with mandibular advancement splints in obstructive sleep apnoea. Eur J Orthod. 2005;27:607–14. doi: 10.1093/ejo/cji063.

- 8. Vanderveken OM. Drug-induced sleep endoscopy (DISE) for non-CPAP treatment selection in patients with sleep-disordered breathing. Sleep Breath. 2012 doi: 10.1007/s11325-012-0671-9. (Epub ahead of print)

- 9. Eichler C, Sommer JU, Stuck BA, Hormann K, Maurer JT. Does drug-induced sleep endoscopy change the treatment concept of patients with snoring and obstructive sleep apnea? Sleep Breath. 2012 doi: 10.1007/s11325-012-0647-9. (Epub ahead of print)

- 10. Croft CB, Pringle M. Sleep nasendoscopy: a technique of assessment in snoring and obstructive sleep apnoea. Clin Otolaryngol Allied Sci. 1991;16:504–9. doi: 10.1111/j.1365-2273.1991.tb01050.x.

- 11. Johal A, Hector MP, Battagel JM, Kotecha BT. Impact of sleep nasendoscopy on the outcome of mandibular advancement splint therapy in subjects with sleep-related breathing disorders. J Laryngol Otol. 2007;121:668–75. doi: 10.1017/S0022215106003203.

- 12. Hessel NS, de Vries N. Results of uvulopalatopharyngoplasty after diagnostic workup with polysomnography and sleep endoscopy: a report of 136 snoring patients. Eur Arch Otorhinolaryngol. 2003;260:91–5. doi: 10.1007/s00405-002-0511-9.

- 13. Kezirian EJ, White DP, Malhotra A, Ma W, McCulloch CE, Goldberg AN. Interrater reliability of drug-induced sleep endoscopy. Arch Otolaryngol Head Neck Surg. 2010;136:393–7. doi: 10.1001/archoto.2010.26.

- 14. Rodriguez-Bruno K, Goldberg AN, McCulloch CE, Kezirian EJ. Test-retest reliability of drug-induced sleep endoscopy. Otolaryngol Head Neck Surg. 2009;140:646–51. doi: 10.1016/j.otohns.2009.01.012.

- 15. Pringle MB, Croft CB. A grading system for patients with obstructive sleep apnoea-based on sleep nasendoscopy. Clin Otolaryngol Allied Sci. 1993;18:480–4. doi: 10.1111/j.1365-2273.1993.tb00618.x.

- 16. Camilleri AE, Ramamurthy L, Jones PH. Sleep nasendoscopy: what benefit to the management of snorers? J Laryngol Otol. 1995;109:1163–5. doi: 10.1017/s0022215100132335.

- 17. Sadaoka T, Kakitsuba N, Fujiwara Y, Kanai R, Takahashi H. The value of sleep nasendoscopy in the evaluation of patients with suspected sleep-related breathing disorders. Clin Otolaryngol Allied Sci. 1996;21:485–9. doi: 10.1111/j.1365-2273.1996.tb01095.x.

- 18. Higami S, Inoue Y, Higami Y, Takeuchi H, Ikoma H. Endoscopic classification of pharyngeal stenosis pattern in obstructive sleep apnea hypopnea syndrome. Psychiatry Clin Neurosci. 2002;56:317–8. doi: 10.1046/j.1440-1819.2002.00960.x.

- 19. Quinn SJ, Daly N, Ellis PD. Observation of the mechanism of snoring using sleep nasendoscopy. Clin Otolaryngol Allied Sci. 1995;20:360–4. doi: 10.1111/j.1365-2273.1995.tb00061.x.

- 20. Iwanaga K, Hasegawa K, Shibata N, et al. Endoscopic examination of obstructive sleep apnea syndrome patients during drug-induced sleep. Acta Otolaryngol Suppl. 2003;550:36–40. doi: 10.1080/0365523031000055.

- 21. Kezirian EJ, Hohenhorst W, de Vries N. Drug-induced sleep endoscopy: the VOTE classification. Eur Arch Otorhinolaryngol. 2011;268:1233–6. doi: 10.1007/s00405-011-1633-8.

- 22. Bachar G, Nageris B, Feinmesser R, et al. Novel grading system for quantifying upper-airway obstruction on sleep endoscopy. Lung. 2012;190:313–8. doi: 10.1007/s00408-011-9367-3.

- 23. Vicini C, De Vito A, Benazzo M, et al. The nose oropharynx hypopharynx and larynx (NOHL) classification: a new system of diagnostic standardized examination for OSAHS patients. Eur Arch Otorhinolaryngol. 2012;269:1297–300. doi: 10.1007/s00405-012-1965-z.

- 24. Hamans E, Meeus O, Boudewyns A, Saldien V, Verbraecken J, Van de Heyning P. Outcome of sleep endoscopy in obstructive sleep apnoea: the Antwerp experience. B-ENT. 2010;6:97–103.

- 25. Uebersax JS. A design-independent method for measuring the reliability of psychiatric diagnosis. J Psychiatr Res. 1982;17:335–42. doi: 10.1016/0022-3956(82)90039-5.

- 26. Efron B. Nonparametric estimates of standard error: the jackknife, the bootstrap and other methods. Biometrika. 1981;68:589–99.

- 27. Efron B, Tibshirani R. An introduction to the bootstrap. Boca Raton, FL: Chapman & Hall / CRC; 1993.