
Abstract

Background:

As adjuvant treatment moves to outpatient settings, the required reporting of that treatment to hospital tumor registries has become problematic. We undertook a solutions-focused exercise to identify reporting barriers and devise a pilot improvement intervention.

Methods:

We convened a multidisciplinary group of community-based oncologists, tumor registry (TR) staff, and hospital leadership. The group identified three key barriers to reporting: (1) inability to identify the correct managing physician, (2) poor communication, and (3) the burden of manual reporting. Our intervention addressed the first two barriers and involved correcting physician contact information, simplifying contact forms, ascertaining cases in real time, and priming physician office staff to respond to TR requests.

Results:

Preintervention, the TR had identified none of the pilot patients' managing medical oncologists and little of their adjuvant treatment. During the April-May 2012 intervention, 22 patients with breast cancer listed our volunteer surgeon as managing physician. The TR sent 22 treatment letters to the surgeon's office and received 19 (86%) responses identifying the managing medical oncologist. Nine of the 19 cases (47%) were closed. Closing a case required an average of 5.9 contacts, and 28 minutes for electronic medical record–based cases and 38.9 minutes for community oncology cases. Sixty-four percent of required treatment was reported. Surgical staff spent approximately 0.5 hours per case identifying the oncologist prescribing adjuvant treatment.

Conclusion:

The solutions-focused exercise improved identification of managing oncologists from 0% to 86% for patients treated by community oncologists. Treatment reporting increased from 2.6% to 64%. The pilot did not address the burden of reporting, which remains substantial. Electronic records can reduce this burden, but this approach is not currently feasible for many oncologists.

Introduction

In 2007, the American College of Surgeons' Commission on Cancer took the bold step of requiring accredited programs to submit adjuvant treatment data for breast and colon cancer cases.1,2 This requirement makes hospitals accountable for reporting treatments delivered beyond their walls and fiscal jurisdiction. Tumor registries (TRs), both hospital-based and Surveillance, Epidemiology, and End Results (SEER) registries, have historically been unreliable sources of data on treatment delivered outside the hospital.3–5 In fact, the TR at an academic medical center found that only 12% to 32% of postlumpectomy radiation, 8% to 29% of chemotherapy, and 0% to 3% of hormonal treatments were reported, with the lower figure in each range for community-based oncologists and the higher for hospital-based oncologists.6 Yet such data are critical because they form the foundation for future quality improvement efforts.7

The basic challenge in improving treatment reporting is the disincentive created by the staff time and money required to identify cases and treatments and to complete and return forms. Additional barriers to treatment reporting include limited awareness of TR reporting requirements, competing priorities, and a lack of supporting information technology (IT). Furthermore, many community-based oncologists mistrust hospitals' motives, suspecting that treatment reporting requests are thinly veiled efforts to acquire their patients.6

On the other hand, some oncologists believe the TR could provide useful data, such as survival statistics based on their prescribing protocols.6 However, it is unclear whether this potential benefit is sufficient to overcome the aforementioned barriers. Because reporting was so poor at our institution, we used a solutions-focused exercise to devise a pilot intervention to improve reporting of breast cancer adjuvant treatment from community oncology practices.

Methods

Our study was conducted at a hospital with a high breast cancer volume that was seeking American College of Surgeons accreditation. The hospital's staff includes 19 medical, three radiation, and 23 surgical oncologists who treat breast cancer; 75% work in community-based solo and group practices, and 25% are providers in hospital-based faculty practices and resident clinics. These practices treat patients ranging from the indigent to the very wealthy. The study was approved by the institutional review board.

The solutions-focused exercise, facilitated by an expert in quality improvement methods, identified reporting barriers and facilitators7 and used discussion to determine which barriers the intervention would address. Exercise participants included one surgical and two medical community-based oncologists, one hospital radiation oncologist, the hospital tumor registrar, her assistant, the administrator overseeing the TR, and the deputy chief medical officer. We focused on the community-based practices because their reporting rates were lower than those of hospital-based practices. First, we informed participants of the rationale for and importance of treatment reporting, the scope of the problem (Table 1), and the barriers identified during a series of interviews previously conducted with oncologists, their office staff, and hospital leadership. The deputy chief medical officer then emphasized the importance of this effort to the hospital overall and challenged the group to develop strategies to improve current practice.

Table 1.

Baseline Performance of Tumor Registry Requests and Treatments Reported

| Adjuvant Treatment Needed | Need Treatment Follow-Up Information (No.) | Letters Sent (No.) | Letters Sent (%) | Letters Received (No.) | Letters Received (%) |
|---|---|---|---|---|---|
| Breast-conserving surgery patients | 187 | 134 | 72 | 4 (3 with treatment info) | 3 |
| Chemotherapy | 20 | 17 | 85 | 2 | 12 |
| Hormonal therapy | 180 | 133 | 74 | 5 | 3 |

The solutions-focused exercise facilitator worked with participants to create a process map of the current flow of information. Using time-and-date stamp data collected by the TR, delay and completion data were shared with the group. Participants brainstormed potential reasons for process failures, then organized the reasons by theme using an affinity diagramming facilitation tool. The group then voted on which themes were most likely to be responsible for poor returns to the TR, thus identifying the “critical few.” They further prioritized issues using an in scope/out of scope tool followed by an ease/impact grid. The in scope/out of scope tool separates issues within the group's control from those outside it. The group then placed the issues it defined as within its control on the ease/impact grid, which rates each issue's potential impact from low to high and its ease of address from difficult to easy. The issues the group rated as high impact and easy to address became the focus of the pilot intervention.
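The two-stage prioritization described above (a scope screen, then the ease/impact grid) can be sketched as a simple filter. The 1-to-5 scores, the cutoff of 3, and the issue labels are assumptions for illustration only; the group's actual ratings are not reported.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    in_scope: bool  # within the group's control (in scope/out of scope tool)
    impact: int     # potential impact, 1 (low) to 5 (high); hypothetical scale
    ease: int       # ease of address, 1 (difficult) to 5 (easy); hypothetical scale


def quadrant(issue, cutoff=3):
    """Place an issue in one quadrant of the ease/impact grid."""
    impact = "high impact" if issue.impact >= cutoff else "low impact"
    ease = "easy" if issue.ease >= cutoff else "difficult"
    return f"{impact}, {ease}"


def pilot_targets(issues, cutoff=3):
    """Screen with the scope tool first, then keep high-impact, easy-to-address issues."""
    return [i.name for i in issues
            if i.in_scope and quadrant(i, cutoff) == "high impact, easy"]


# Illustrative scores only; not the group's actual ratings
issues = [
    Issue("Burden of manual reporting", in_scope=False, impact=5, ease=1),
    Issue("Identifying the managing physician", in_scope=True, impact=4, ease=4),
    Issue("TR-practice communication", in_scope=True, impact=4, ease=3),
]
print(pilot_targets(issues))
# prints: ['Identifying the managing physician', 'TR-practice communication']
```

Applying the scope screen before the grid means an out-of-scope issue is dropped even if it would score as high impact, which mirrors how the burden of reporting was set aside.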

Results

The group identified three key barriers to treatment reporting: (1) the burden of manual reporting, (2) inability to identify the correct managing physician, and (3) poor communication between the TR and physician practices. They then devised two pilot studies to target key barriers: one focused on the TR's ability to identify the correct managing physician, and the second focused on improving communication between the TR and a practice. These barriers were felt to be under participants' control, relatively easy to address, and potentially high in impact. Although the burden of reporting was felt to be critical, the group deemed the steps needed to improve reporting processes as largely beyond their control.

Two community oncology practices volunteered to be test sites: one surgical group and one solo medical oncology practice. Each practice had access to hospital-based electronic data (eg, pathology and laboratory test results), used paper charts, and had electronic billing.

For the first pilot, each oncologist submitted a list of 10 of their patients to the TR. The TR correctly identified the surgeon as the managing physician in 9 of her 10 cases, but identified the medical oncologist in only 1 of his or her 10 cases. We focused the second pilot on the surgical oncologist because, although the surgeon was correctly identified as the managing physician, none of her patients had adjuvant treatment reported.

The steps of the second pilot, conducted from April 1 through May 31, 2012, are listed in Table 2. The TR monitored the amount of time spent per case on case identification, on communicating with physician practices, and on tracking managing physician contacts and patient treatment information. She began by requesting treatment information from the managing surgeon and then followed up with the referral oncologists whose contact information the surgeon's office provided.
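The tracking workflow, which starts with the managing surgeon and follows the oncologists her office names until every needed treatment is reported, can be sketched as a small case record. The `RegistryCase` class and its fields are hypothetical; the closure rule (a case stays open while any needed treatment is missing) follows the description of open cases in the Results.

```python
from dataclasses import dataclass, field


@dataclass
class RegistryCase:
    """Hypothetical record of one patient's case as tracked by the tumor registrar."""
    patient_id: str
    needed: set                                    # adjuvant treatments the case requires
    reported: set = field(default_factory=set)     # needed treatments the TR has received
    contacts: list = field(default_factory=list)   # physicians who replied, in order
    referrals: list = field(default_factory=list)  # oncologists named by earlier replies

    def log_response(self, physician, treatments=(), referrals=()):
        """Record one practice's reply: treatments reported and oncologists it named."""
        self.contacts.append(physician)
        self.reported |= set(treatments) & self.needed
        self.referrals.extend(referrals)

    def missing(self):
        return self.needed - self.reported

    def is_closed(self):
        # A case closes only once every needed treatment has been reported
        return not self.missing()


# Hypothetical case: the surgeon's office names the oncologists to follow up with
case = RegistryCase("P001", needed={"chemotherapy", "radiation"})
case.log_response("surgeon", referrals=["medical oncologist", "radiation oncologist"])
case.log_response("medical oncologist", treatments=["chemotherapy"])
print(case.is_closed(), sorted(case.missing()))  # prints: False ['radiation']
```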

Table 2.

Intervention Steps


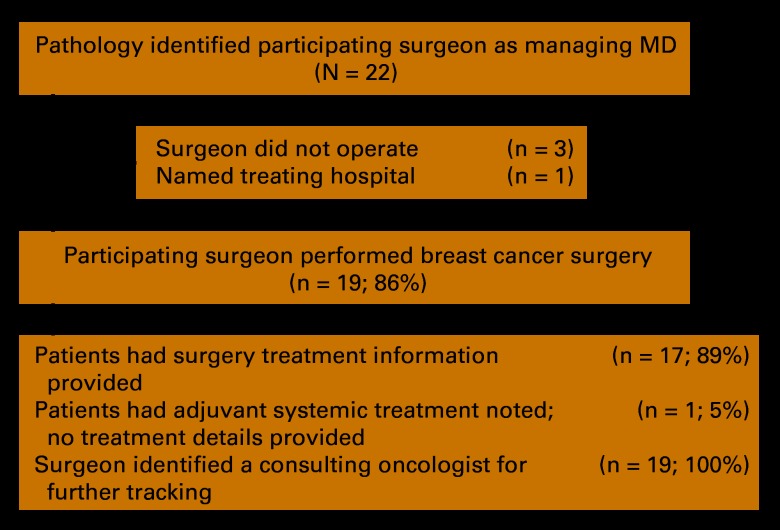

A total of 22 patients with breast cancer who listed our participating surgeon as the managing physician were identified from pathology reports (Appendix Figure A1, online only). The surgeon's office responded for 19 (86%) of them, confirming the surgeon as managing physician. Of these 19, 17 (89%) provided surgical treatment information, 1 (5%) noted adjuvant systemic treatment but provided no details, and all 19 (100%) identified a consulting oncologist for further tracking. At the pilot's end, 9 (47%) of the 19 cases were closed. The remaining open cases were missing treatment data: 24% of needed systemic therapy and 61% of radiotherapy information. All five patients treated by hospital-based oncologists had treatment data in the electronic medical record (EMR), and their cases were closed.

The mean number of contacts needed to close a case was 5.9 (standard deviation [SD] = 1.9). At the close of the pilot test, an average of 8.2 (SD = 3.3) contacts had been made per open case. The tumor registrar estimated that she spent an average of 6 minutes per e-mail, 5 minutes per fax, 3 minutes per letter, and 8 minutes per phone call tracking treatment information. On average, closing a case took 28.1 minutes (SD = 2.4) for a patient whose data were in the EMR and 38.9 minutes (SD = 9.0) for a patient receiving adjuvant treatment in the community. Open cases had taken 48.4 minutes (SD = 18.7). The pilot's surgeon reported that each case took one half hour of her staff's time and noted that the competing priorities of running a busy practice made reporting difficult. Treatment reporting increased significantly, from 2.6% to 64% (Table 3).
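The registrar's per-contact estimates translate into case-level time as a simple sum over the contacts made. The sketch below uses the reported minutes per contact mode; the contact mix for the example case is hypothetical, not pilot data.

```python
# Registrar's estimated minutes per contact, by mode (as reported for the pilot)
MINUTES_PER_CONTACT = {"email": 6, "fax": 5, "letter": 3, "phone": 8}


def case_minutes(contacts):
    """Total estimated registrar minutes for one case, given its list of contact modes."""
    return sum(MINUTES_PER_CONTACT[mode] for mode in contacts)


# Hypothetical six-contact case (close to the 5.9-contact mean for closed cases)
example_case = ["letter", "fax", "email", "phone", "fax", "email"]
print(len(example_case), case_minutes(example_case))  # prints: 6 33
```

A mix of this size lands in the same range as the 28- to 39-minute averages for closed cases, which suggests that per-contact time, rather than any single slow step, drives the registrar's workload.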

Table 3.

Change in Adjuvant Treatments Reported to the Tumor Registry

| Adjuvant Treatment | Preintervention*: No. Needing Follow-Up Treatment | Preintervention*: Reported Treatment (No.) | Preintervention*: Reported Treatment (%) | Postintervention: No. Needing Follow-Up Treatment | Postintervention: Reported Treatment (No.) | Postintervention: Reported Treatment (%) | P |
|---|---|---|---|---|---|---|---|
| RT post-BCS | 187 | 3 | 1.6 | 8 | 3 | 37.5 | < .001 |
| Systemic treatment | 200 | 7 | 3.5 | 17 | 13 | 76 | < .001 |
| Overall† | 387 | 10 | 2.6 | 25 | 16 | 64 | < .001 |

Abbreviations: BCS, breast-conserving surgery; RT, radiation treatment.

*Data obtained from the tumor registry for calendar years 2010-2011.

†Episodes of needed care (not individual patients).
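The article does not state which test produced the P values in Table 3. As a rough check under that uncertainty, the sketch below assumes a two-sided Fisher's exact test on each pre- versus postintervention 2 × 2 table (reported vs not reported), implemented with only the Python standard library; the function name is illustrative.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2 x 2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x, margins fixed
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum every table no more probable than the observed one (small tolerance for rounding)
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))


# Table 3 counts: (reported pre, needing pre, reported post, needing post)
TABLE_3 = {
    "RT post-BCS": (3, 187, 3, 8),
    "Systemic treatment": (7, 200, 13, 17),
    "Overall": (10, 387, 16, 25),
}

for label, (rep_pre, n_pre, rep_post, n_post) in TABLE_3.items():
    p = fisher_exact_two_sided(rep_pre, n_pre - rep_pre, rep_post, n_post - rep_post)
    print(f"{label}: {rep_pre / n_pre:.1%} -> {rep_post / n_post:.1%}, P = {p:.2g}")
```

Under this assumption each comparison also falls below .001, consistent with the table. An exact test is a reasonable default here because the postintervention cells are small; a chi-square test could give somewhat different values.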

Discussion

Reporting adjuvant treatments to the hospital TR is impeded by difficulty identifying the treating oncologist, practical IT issues, competing priorities, poor communication, and mistrust.7,8 A pilot intervention devised through a solutions-focused exercise increased identification of the treating oncologist from 0% to 86% and improved adjuvant treatment reporting from less than 3% to 64%. The steps taken to improve physician identification and communication were relatively easy to implement. The process focused on the surgeon, who was easily identified from pathology reports. We educated surgeons about reporting requirements, encouraged them to ensure staff compliance, updated their contact information and preferred mode of communication with the registry, obtained the privacy officer's approval to allow multiple modes of treatment reporting, and reached out biweekly to the surgeon to encourage staff participation.

The practical IT challenge differs between hospital- and community-based oncologists. For patients treated in a hospital with an EMR, the tumor registrar can easily access treatment data but must manually enter the data into a TR program. Software interfaces between the EMR and tumor registry software can enable direct data transfer, yet few sites have this capability. Until these interfaces are improved, the registrar will have to continue entering treatment data manually.

The greater challenge is accessing community-based treatment data, because community practices vary in whether they use EMRs and in which EMRs they use. Many practices still use paper charts, making case and treatment identification quite burdensome. For high-volume community practices with paper charts and electronic billing, hospitals should consider supporting a billing-based reporting process that details the chemotherapies administered and can be sent to the TR monthly. For offices with different EMRs, software that can interface with the TR is needed. The growth of accountable care organizations may help overcome some of the technical connectivity and trust issues between the hospital and community physicians. However, because accountable care organizations focus more on primary care than on cancer care delivery, it is unlikely that the emergence of such entities will improve cancer treatment reporting in the short term.
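A monthly billing report of the kind suggested above could take many forms. The sketch below assumes a hypothetical billing extract with one record per administered agent (patient identifier, agent name, service date) and groups one month's records by patient for the TR. The record layout, identifiers, and example records are illustrative, not drawn from any real billing system.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class BillingRecord:
    patient_id: str    # hypothetical identifier shared by the practice and the TR
    agent: str         # chemotherapy agent as billed
    service_date: date


def monthly_tr_report(records, year, month):
    """Group one month's billed chemotherapy agents by patient for submission to the TR."""
    report = defaultdict(set)
    for r in records:
        if r.service_date.year == year and r.service_date.month == month:
            report[r.patient_id].add(r.agent)
    # Sorted patients and agents give the registrar a stable, easy-to-scan layout
    return {pid: sorted(agents) for pid, agents in sorted(report.items())}


# Hypothetical billing extract
records = [
    BillingRecord("P001", "docetaxel", date(2012, 4, 3)),
    BillingRecord("P001", "cyclophosphamide", date(2012, 4, 3)),
    BillingRecord("P002", "paclitaxel", date(2012, 4, 17)),
    BillingRecord("P001", "docetaxel", date(2012, 4, 24)),
    BillingRecord("P002", "paclitaxel", date(2012, 5, 1)),
]
print(monthly_tr_report(records, 2012, 4))
```

Collapsing repeat administrations into one entry per agent keeps the report short, since the registry needs to know which adjuvant treatment was given rather than every billed dose.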

None of these approaches, however, resolves the basic conundrum facing both community practices and hospitals: treatment reporting poses a burden to busy clinicians and staff whose priority is patient care. Hospitals facing increasing financial strain and budget cuts often reduce tumor registry resources. Such reductions, combined with increasing numbers of cancer cases, heighten the pressure on registry staff. There are few incentives to encourage treatment reporting. Pay for performance may incentivize practices to improve treatment delivery, but not treatment reporting to the hospital TR.

In summary, a simple pilot intervention successfully increased identification of the treating oncologist and improved treatment reporting. Broadly increasing treatment reporting from community-based practices will require interventions beyond the scope of this study, namely increased electronic connectivity; interfaces linking disparate billing programs, EMRs, and TR software; and educational outreach about reporting requirements. However, until there is a simplified approach that reduces the burden of reporting, it is unlikely that hospitals will be able to provide an accurate measure of the quality of their cancer care.

Acknowledgment

Supported by National Cancer Institute Grant R21CA132773.

Appendix

Figure A1.

Pilot study population.

Authors' Disclosures of Potential Conflicts of Interest

The author(s) indicated no potential conflicts of interest.

Author Contributions

Conception and design: Nina A. Bickell, Jill Wellner, Ann Scheck McAlearney

Financial support: Nina A. Bickell

Administrative support: Nina A. Bickell, Rebeca Franco

Provision of study materials or patients: Nina A. Bickell, Jill Wellner, Rebeca Franco

Collection and assembly of data: Nina A. Bickell, Jill Wellner, Rebeca Franco, Ann Scheck McAlearney

Data analysis and interpretation: Nina A. Bickell, Jill Wellner, Ann Scheck McAlearney

Manuscript writing: Nina A. Bickell, Ann Scheck McAlearney, Jill Wellner

Final approval of manuscript: All authors

References

1. American College of Surgeons. CoC quality of care measures: National Quality Forum endorsed commission on cancer measures for quality of cancer care for breast and colorectal cancers. www.facs.org/cancer/qualitymeasures.html.

2. Williams RT, Stewart AK, Winchester DP. Monitoring the delivery of cancer care: Commission on Cancer and National Cancer Data Base. Surg Oncol Clin N Am. 2012;21:377–388, vii. doi: 10.1016/j.soc.2012.03.005.

3. German RR, Wike JM, Bauer KR, et al. Quality of cancer registry data: Findings from CDC-NPCR's Breast and Prostate Cancer Data Quality and Patterns of Care Study. J Registry Manage. 2011;38:75–86.

4. Malin JL, Kahn KL, Adams J, et al. Validity of cancer registry data for measuring the quality of breast cancer care. J Natl Cancer Inst. 2002;94:835–844. doi: 10.1093/jnci/94.11.835.

5. Caldarella A, Amunni G, Angiolini C, et al. Feasibility of evaluating quality cancer care using registry data and electronic health records: A population-based study. Int J Qual Health Care. 2012;24:411–418. doi: 10.1093/intqhc/mzs020.

6. Bickell NA, McAlearney AS, Wellner J, et al. Understanding the challenges of adjuvant treatment measurement and reporting in breast cancer: Cancer treatment measuring and reporting. Med Care. doi: 10.1097/MLR.0b013e3182422f7b. [Epub ahead of print December 30, 2011]

7. Edwards BK, Brown ML, Wingo PA, et al. Annual report to the nation on the status of cancer, 1975-2002, featuring population-based trends in cancer treatment. J Natl Cancer Inst. 2005;97:1407–1427. doi: 10.1093/jnci/dji289.

8. McAlearney AS, Wellner J, Bickell NA. How to improve breast cancer care measurement and reporting: Suggestions from a complex urban hospital setting. J Healthc Manage. 2013;58:205–223.