This study establishes a baseline IAA for QOPI abstraction performed at a single academic institution over 2 consecutive years, and demonstrates the feasibility of conducting an IAA study of medical data abstraction without knowledge of the abstractors.

Abstract

Purpose:

The Quality Oncology Practice Initiative (QOPI) relies on the accuracy of manual abstraction of clinical data from paper-based and electronic medical records (EMRs). Although there is no “gold standard” to measure manual abstraction accuracy, measurement of inter-annotator agreement (IAA) is a commonly agreed-on surrogate. We quantified the IAA of QOPI abstractions on a cohort of cancer patients treated at Beth Israel Deaconess Medical Center.

Methods:

The EMR charts of 49 patients (20 colorectal cancer; 18 breast cancer; 11 non-Hodgkin lymphoma) were abstracted by separate physician abstractors in the fall 2010 and fall 2011 QOPI abstraction rounds. Cohen's kappa (κ) was calculated for encoded data; raw levels of agreement and magnitude of discrepancies were calculated for numeric and dated data.

Results:

One hundred two data elements with 2,035 paired entries were analyzed. Overall IAA for the 1,496 coded entries was κ = 0.75; median IAA for n = 85 individual coded elements was κ = 0.84 (interquartile range, 0.30 to 1.00). Overall IAA for the 421 dated entries was 73%; median IAA for n = 17 individual dated elements was 67% (interquartile range, 61% to 86%).

Conclusion:

This study establishes a baseline level of IAA for a complex medical abstraction task with clear relevance for the oncology community. Given that the observed κ is considered only fair IAA, and that the rate of date discrepancy is high, caution is necessary in interpreting the results of QOPI and other manual abstractions of clinical oncology data. The accuracy of automated data extraction efforts, possibly including a future evolution of QOPI, will also need to be carefully evaluated.

Introduction

Quality reporting on the basis of clinical information has been undergoing a rapid evolution in recent years, driven primarily by the transition to electronic medical records (EMRs). Whereas the limiting factor in the interpretation of a handwritten medical chart was often the legibility of the scribe, this factor has now been removed from the equation in many cases.1 Abstraction accuracy can now be assessed against the veracity of the underlying data.

The Quality Oncology Practice Initiative (QOPI) is a large manual abstraction effort that occurs twice yearly across the United States.2–4 QOPI was conceived after the release of the Institute of Medicine report, Ensuring Quality Cancer Care,5 and was opened to all oncology practices in the United States in 2006. Since then, participation has increased yearly, with 272 practices participating in fall 2012.6 Because QOPI currently relies on the manual abstraction of clinical data from charts (handwritten and/or EMR-based), the possibility of transcription and interpretation errors exists. To date, no study has assessed the accuracy of manual abstraction for QOPI.

Inter-annotator agreement (IAA), also called inter-rater reliability, is the extent to which two independent manual abstractors agree on their measurements. The use of IAA metrics to assess the reliability of abstraction decisions based on unstructured text has been widely studied in the context of annotating natural language texts with hand-coded data for use in training machine-learned classifiers.7,8 It is not uncommon to use IAA as part of an annotation development cycle designed to ascertain the complexity of the task and to fine-tune annotators' instructions before data collection begins in earnest.9,10 A recent in-depth study of manual abstraction from clinical records revealed that even apparently simple decisions can be much more cognitively complex than experimenters expect.11 For example, the question “Is the patient a smoker?” can be subject to numerous nuances of interpretation if the medical chart talks about giving up smoking, the time of cessation, a related prescription, or even smoked substances other than tobacco. Annotators tend to invent idiosyncratic ad hoc rules in order to accommodate ambiguities in either the clinical text or the questions themselves. Capturing IAA can serve to flag such discrepancies, exposing complexities overlooked by the designers of the task. Yet, as Kottner et al point out, within the medical domain, “the level of reliability and agreement among users of scales, instruments, or classifications in many different areas is largely unknown.”12(p103) Therefore, we undertook a blinded trial to establish IAA for a cohort of patients with cancer treated at Beth Israel Deaconess Medical Center (BIDMC) whose charts were analyzed in two QOPI abstraction rounds.

Methods

QOPI rules allow for chart abstraction of patients who were included in a prior QOPI round, as long as they continue to meet the core criteria of (1) an original diagnosis within 2 years of the beginning of the new abstraction period, and (2) at least two practitioner visits in the 6 months before the beginning of the new abstraction period. We screened all patients abstracted in the fall 2010 QOPI round for reabstraction eligibility in the fall 2011 QOPI round. Eligible patients were assigned to a different clinician abstractor from the pool of participating hematology/oncology fellows and faculty. The fall 2011 abstractors were unaware that the charts had been previously abstracted. Each fall 2011 abstractor was responsible for between five and 10 charts total; no more than two or three were reabstraction charts.
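The two core criteria above can be expressed as a simple screening rule. The following is a minimal sketch only, not the procedure used in this study: the `ChartSummary` structure, its field names, and the day-count approximations of "2 years" and "6 months" are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class ChartSummary:
    """Hypothetical per-patient summary; field names are illustrative."""
    diagnosis_date: date
    visit_dates: List[date]


def is_reabstraction_eligible(chart: ChartSummary, period_start: date) -> bool:
    """Apply the two core criteria relative to the start of the new abstraction period."""
    # Criterion 1: original diagnosis within 2 years before the period start.
    # Assumption: "2 years" is approximated as 730 days.
    two_years = timedelta(days=730)
    diagnosed_recently = (
        period_start - two_years <= chart.diagnosis_date <= period_start
    )

    # Criterion 2: at least two practitioner visits in the preceding 6 months.
    # Assumption: "6 months" is approximated as 183 days.
    six_months = timedelta(days=183)
    recent_visits = [
        v for v in chart.visit_dates
        if period_start - six_months <= v < period_start
    ]
    return diagnosed_recently and len(recent_visits) >= 2
```

A real screen would need the exact QOPI definitions of the abstraction period and of a qualifying practitioner visit, which are not reproduced here.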

Subsequently, we determined the set of QOPI data elements that were common to both rounds, had paired data entry for both rounds, and did not contain information expected to change after the fall 2010 round. Coded, numeric, and dated elements were analyzed. Overall IAA across all encoded elements was determined by using Cohen's kappa (κ); IAA was also determined on an element-wise basis. Percentage agreement, as well as date discrepancy magnitudes (in days), were calculated for dated data. This study was determined to be exempt from institutional review board approval, and all investigators completed appropriate human subjects research training.
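For readers less familiar with the statistic, Cohen's κ compares the observed proportion of agreement, p_o, with the agreement expected by chance from each abstractor's marginal code frequencies, p_e, as κ = (p_o − p_e)/(1 − p_e). A minimal Python sketch follows; the function name and the handling of the degenerate case are illustrative assumptions, not the software used in this study.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(first: Sequence[Hashable], second: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters' paired categorical entries."""
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(first)

    # Observed agreement: fraction of pairs where both raters chose the same code.
    p_observed = sum(a == b for a, b in zip(first, second)) / n

    # Chance agreement: product of the raters' marginal proportions, summed over codes.
    counts_first = Counter(first)
    counts_second = Counter(second)
    p_expected = sum(
        (counts_first[code] / n) * (counts_second[code] / n)
        for code in counts_first.keys() | counts_second.keys()
    )

    if p_expected == 1.0:
        # Both raters used one identical code throughout, so kappa is undefined (0/0).
        # Returning 1.0 is a convention chosen for this sketch only.
        return 1.0
    return (p_observed - p_expected) / (1.0 - p_expected)
```

For example, `cohens_kappa(["yes", "yes", "no", "no"], ["yes", "no", "no", "no"])` gives 0.5: observed agreement is 0.75 and chance agreement is 0.5. Element-wise IAA would call the function once per data element; how entries were pooled for the overall κ is not described in the text, so pooling is deliberately left out of the sketch.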

Results

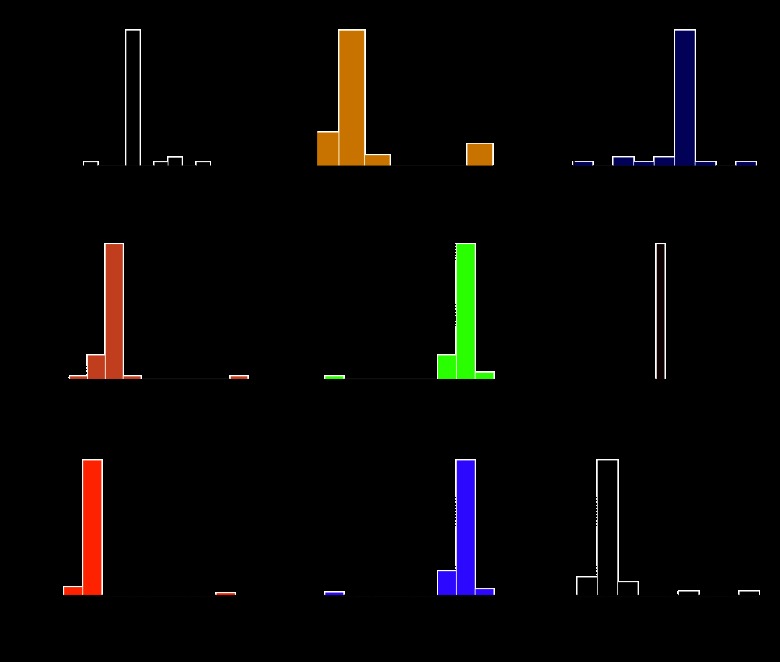

A total of 49 patients met the QOPI core criteria in both years and were thus eligible for dual abstraction. The breakdown of the cases by cancer type and the profile of the abstracting clinicians are shown in Table 1. In 2010 and 2011, there were 244 and 362 discrete QOPI elements, respectively. Two hundred thirty-five elements were common to both years, and 152 had at least one paired data entry. After exclusion of duplicates, metadata (eg, QOPI version number), and “most recent” elements (eg, most recent clinic visit), 107 data elements remained. Of these, five were narrative and were excluded; the remainder were encoded (n = 85) and dated (n = 17). Across these, there were 2,035 paired data entries. Overall IAA for the encoded entries was κ = 0.75; when analyzed by individual data element, median IAA for the coded elements was κ = 0.84 (interquartile range, 0.30 to 1.00). Some examples of coded elements with low IAA are “chemotherapy intent discussed with patient” (κ = 0.18); “specify whether pain intensity quantified, first 2 visits” (κ = 0.29); and “documented chemotherapy intent” (κ = 0.57). Overall IAA for the dated entries was 73%; median IAA for the individual dated elements was 67% (interquartile range, 61% to 86%). These results are summarized in Table 2. The median discrepancy for the 113 discrepant dated entries was +6 days (range, −217 to +391 days). Figure 1 shows date discrepancy histograms of those elements with paired data entry for ≥ 33% of patients. IAA for all individual analyzed elements is shown in Appendix Table A1 (online only).
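The percentage agreement and signed discrepancy for dated entries reported above can be computed along the following lines. This is a sketch under stated assumptions: the function name is hypothetical, and the sign convention (later round minus earlier round) is assumed because the text does not specify it.

```python
from datetime import date
from typing import List, Tuple


def date_agreement(pairs: List[Tuple[date, date]]) -> Tuple[float, List[int]]:
    """Return percent agreement and the signed discrepancies (days) of discrepant pairs."""
    if not pairs:
        raise ValueError("need at least one paired entry")
    # Signed difference in days; assumption: later round minus earlier round.
    diffs = [(later - earlier).days for earlier, later in pairs]
    # Only entries that differ contribute to the discrepancy distribution.
    discrepant = [d for d in diffs if d != 0]
    percent_agree = 100.0 * (len(diffs) - len(discrepant)) / len(diffs)
    return percent_agree, discrepant
```

The returned list of discrepancies can then be passed to `statistics.median`, `min`, and `max` to obtain a median and range of the kind reported here.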

Table 1.

Summary Statistics of Patients and Abstractors

| Cancer Type | Patients, No. | Patients, % | Abstractor | 2010, No. | 2010, % | 2011, No. | 2011, % |
|---|---|---|---|---|---|---|---|
| Colorectal | 20 | 41 | First-year H/O clinical fellow | 15 | 30 | 2 | 4 |
| Breast | 18 | 37 | Second-year H/O clinical fellow | 19 | 39 | 14 | 29 |
| Non-Hodgkin lymphoma | 11 | 22 | Third-year H/O clinical fellow | 14 | 29 | 24 | 49 |
| | | | H/O clinical attending | 1 | 2 | 9 | 18 |

Abbreviation: H/O, hematology and oncology.

Table 2.

Overall IAA for Coded and Dated Elements

| Element Type | Overall IAA | Median IAA | IQR |
|---|---|---|---|
| Coded (n = 1,496) | κ = 0.75 | κ = 0.84 | 0.30-1.00 |
| Dated (n = 421) | 73% | 67% | 61%-86% |

NOTE. Overall IAA is calculated for the group; median IAA is calculated element-wise.

Abbreviations: IAA, inter-annotator agreement; IQR, interquartile range.

Figure 1.

Histograms of date discrepancies for commonly measured temporal features: (A) first chemotherapy date, (B) initial chemotherapy end date, (C) date that chemotherapy was recommended, (D) diagnosis date, (E) diagnosis date 2, (F) date of birth, (G) date of first office visit, (H) date of diagnostic pathology report, (I) date stage was documented. Only one of these elements, date of birth, has 100% agreement.

Discussion

There is no established standard to define what level of IAA is considered sufficient for a complicated medical abstraction task such as QOPI, although some authors consider κ ≤ 0.75 to be a fair to good IAA and κ more than 0.75 an excellent IAA.13 On the basis of our single-institution study, physician expert abstractors appear to have only fair IAA overall. In comparison, the Veterans Affairs Surgical Quality Improvement Program nurse reviewers had a reported IAA ranging from κ = 0.60 (fair) for postoperative myocardial infarction to κ = 0.89 (excellent) for postoperative pulmonary embolism.14 Although hematologist/oncologists are not trained for precise manual abstraction tasks, the daily care of patients with cancer requires accurate interpretation of many documents from a variety of sources. Therefore, physician experts are thought to represent a group of accurate data abstractors. This study is, to our knowledge, one of the first to objectively evaluate this assumption. Although the 2011 abstractors had more subspecialty experience, it is not clear that this would necessarily influence abstraction accuracy. Objective measures with clear EMR representation (eg, date of birth) had nearly 100% IAA; subjective measures (eg, date of cancer staging) had lower IAA.

There are several possible explanations for the fair IAA observed in our study. A simple source of disagreement is a data entry error, also known as a keystroke error.15 This may explain several of the 365-day discrepancies (Figure 1). In similar fashion, cognitive misperception errors may result in incorrect entry; these two errors cannot always be distinguished easily.15,16 Some of the discrepancies may be explained by changes in patient condition between year 1 and year 2, despite our efforts to exclude all elements that might have been time dependent, such as the “most recent” elements. A more likely source of disagreement is the subjectivity inherent in clinical progress notes, which are the “ground truth” for many of the QOPI data elements.17 For example, cancer stage documentation may not be coherent from one note to another. This may be due to down-staging/upstaging as a result of new information, or may be an erroneous entry by the note author. As another example, it may be difficult to discern whether chemotherapy intent was “discussed with the patient” versus merely “documented.” IAA was low for both of these categories but lower for the former, likely due to increased subjectivity. Finally, some discrepancies may be explained by the possibility that the QOPI instructions were ambiguous or unclear for certain elements, particularly those with a great deal of subjectivity inherent to their description.
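As an illustration of how candidate keystroke errors of this kind might be surfaced for manual review, the sketch below flags date discrepancies that fall within a small tolerance of one calendar year. The function name and the 1-day tolerance are hypothetical choices, and a flagged value only suggests a mistyped year; it cannot distinguish a keystroke error from a cognitive error.

```python
from typing import Iterable, List


def flag_possible_year_errors(
    discrepancies_days: Iterable[int], tolerance: int = 1
) -> List[int]:
    """Return discrepancies whose magnitude is within `tolerance` days of 365."""
    flagged = []
    for d in discrepancies_days:
        # 364 to 366 days covers a one-year shift with or without a leap day
        # when tolerance is 1 (an assumed cutoff, not one taken from the study).
        if abs(abs(d) - 365) <= tolerance:
            flagged.append(d)
    return flagged
```

Discrepancies of other sizes, such as the observed extremes of −217 and +391 days, would not be flagged by this rule and would need separate review.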

Future plans envision the capture of QOPI data elements directly from EMRs (“eQOPI”). This would reduce the need for time-consuming manual annotation and possibly improve accuracy. Subjective elements that are not well encapsulated in structured data format will likely not be within the scope of eQOPI. In addition, it is necessary to validate the accuracy of any automated extraction system against the “gold standard” of human annotator abstraction. For example, a recent study focused on the accuracy of electronic reporting as part of meaningful use of EMRs (as mandated by the US Department of Health and Human Services) found wide variations in accuracy.18 Given the importance of accurate capture of demographic, treatment, and outcome metrics for the realization of a rapid-learning system for cancer care, these considerations are not trivial.19,20

In conclusion, this study establishes a baseline IAA for QOPI abstraction performed at a single academic institution over 2 consecutive years. This study also demonstrates that it is feasible to conduct an IAA study of medical data abstraction in a real-world setting, without knowledge of the abstractors. Although similar studies at other academic institutions or community practices may yield different results, our findings have implications for the accuracy of expert knowledge extraction; these concerns are likely to extend to automated knowledge extraction.

Acknowledgment

Presented as a poster at the ASCO Quality Care Symposium, San Diego, CA, November 30-December 1, 2012.

Appendix

Table A1.

IAA for Each Individual Analyzed Element

| QOPI Element Code | QOPI Description | Agreement |

|---|---|---|

| qopi_ajcc_breast | AJCC stage (0-IV) at breast cancer diagnosis | 0.33 |

| qopi_ajcc_crc | AJCC/Dukes' stage at colon or rectal cancer diagnosis | 0.71 |

| qopi_analgesic | Narcotic analgesic prescription written in past 6 months | 0.35 |

| qopi_antiemetics_1 | Antiemetics prescribed or administered on date of first administration of moderate emetic risk chemotherapy | 1 |

| qopi_antiemetics_2 | Antiemetics prescribed or administered on date of first administration of moderate emetic risk chemotherapy | 1 |

| qopi_aprepitant | Aprepitant prescribed or administered on date of first administration of high emetic risk chemotherapy | 0 |

| qopi_bonemetastases | Bone metastases | 1 |

| qopi_cancerstage | Cancer stage documented | 0.55 |

| qopi_cd20_nhl | CD-20 antigen expression | 1 |

| qopi_chemoadmin | Chemotherapy administered during initial treatment course | 1 |

| qopi_chemoadmin_bc | Multiagent chemotherapy administered during initial treatment course (breast cancer) | 0.84 |

| qopi_chemodiscussed | Chemotherapy intent discussed with patient | 0.18 |

| qopi_chemodt | Enter the date the chemotherapy was initiated | 0.86 |

| qopi_chemoend | Initial chemotherapy end | 0.08 |

| qopi_chemoenddt | Date chemotherapy ended | 0.56 |

| qopi_chemorcvd | Patient ever received chemotherapy for this diagnosis | 0.88 |

| qopi_chemorec_bc | Chemotherapy recommended during initial treatment course (breast cancer) | 1 |

| qopi_chemorecdt | Enter the date the chemotherapy was first recommended | 0.61 |

| qopi_chemorecinitial | Chemotherapy recommended during initial treatment course | 0.84 |

| qopi_chopadmin | CHOP administered | 0.77 |

| qopi_chopdt | Date of first CHOP administration | 0.8 |

| qopi_cigarette | Cigarette smoking status assessed, first two office visits | 0.61 |

| qopi_clinicaltrial | Care on clinical trial | 0.38 |

| qopi_consentdoc_1 | Consent documentation | 1 |

| qopi_consentdoc_2 | Consent documentation | 1 |

| qopi_consentdoc_3 | Consent documentation | 1 |

| qopi_constipationdisc | Constipation discussed when prescription written | 0 |

| qopi_curative_1 | Curative chemotherapy provided | 1 |

| qopi_curative_2 | Curative chemotherapy provided | 1 |

| qopi_curative_3 | Curative chemotherapy provided | 1 |

| qopi_cytologydt | Cytology report date | 1 |

| qopi_deceased2 | Deceased | 0 |

| qopi_diagnosisdt | Date of diagnosis | 0.61 |

| qopi_diagnosisdt2 | Diagnosis date 2 | 0.63 |

| qopi_dob | Patient date of birth | 1 |

| qopi_egfr | Anti-EGFR monoclonal antibody therapy | 0 |

| qopi_emoprobaddress | Emotional well-being addressed | 0 |

| qopi_er | ER status | 0.83 |

| qopi_esa_3 | ESAs initiated in the past 6 months | 1 |

| qopi_fertility | Fertility preservation | 0.82 |

| qopi_firstvisit | First office visit | 0.86 |

| qopi_gender | Patient gender | 0.95 |

| qopi_growthdateunk | Date of first granulocytic growth factor administration unknown | 1 |

| qopi_growthfactor | Granulocytic growth factor administered | 0 |

| qopi_growthfactordt | Date of first granulocytic growth factor administration | 0.67 |

| qopi_hepb | Hepatitis B surface antigen expression | 0.78 |

| qopi_her2neutumor | HER2/neu status | 0.72 |

| qopi_highestpain | Enter highest pain intensity | 0 |

| qopi_hormone | Hormonal therapy recommendation | 0.85 |

| qopi_hormonedt | Hormone administration start date | 1 |

| qopi_hormonerecdt | Date hormonal therapy first recommended | 0.46 |

| qopi_hormonetheradmin | Hormonal therapy administered | 0.25 |

| qopi_icd9 | ICD-9-CM Code | Not analyzed |

| qopi_initialadmin_br | Chemotherapy administered during initial treatment course (breast cancer) | 0.84 |

| qopi_initialtrasadmin | Trastuzumab (Herceptin) administered during initial treatment course | 0.81 |

| qopi_initialtrasrec | Trastuzumab (Herceptin) recommended during initial treatment course | 0.84 |

| qopi_intent | Documented chemotherapy intent | 0.57 |

| qopi_kras | KRAS gene mutation testing | 0.27 |

| qopi_margins | CEA following curative resection | 0 |

| qopi_metastases | Metastases | 0.6 |

| qopi_mstage_breast | AJCC M stage at breast cancer diagnosis | 1 |

| qopi_mstage_crc | AJCC M stage at colon or rectal cancer diagnosis | 0 |

| qopi_muscularis | For rectal cancer only: penetration through the muscularis propria of the rectum into the subserosa or non-peritonealized pericolic or perirectal ti | 1 |

| qopi_neoadjrcvd2 | Neoadjuvant radiation received | 1 |

| qopi_nicassess | Narcotic-induced constipation assessed on visit following prescription | 0 |

| qopi_nodes | Number of nodes examined by pathologist | Not analyzed |

| qopi_nohormoneadmin | Select reason hormonal therapy not administered | 1 |

| qopi_notadmin | Select reason chemotherapy not administered | 0.58 |

| qopi_notadmin_bc | Select reason multiagent chemotherapy not administered (breast cancer) | 0.08 |

| qopi_notation | Effectiveness of narcotic assessed on visit following prescription | −0.2 |

| qopi_notrasadmin | Select the reason trastuzumab not administered | 1 |

| qopi_nstage_breast | AJCC N stage at breast cancer diagnosis | 0 |

| qopi_nstage_crc | AJCC N stage at colon or rectal cancer diagnosis | 1 |

| qopi_pain | Pain assessed, first two office visits | 0.29 |

| qopi_painintensity | Specify whether pain intensity quantified, first two visits | 0.29 |

| qopi_pathcyt_1 | Pathology/hemato-pathology report or cytology report confirming malignancy | 1 |

| qopi_pathcyt_2 | Pathology/hemato-pathology report or cytology report confirming malignancy | 1 |

| qopi_patientofage_1 | If patient of reproductive age, check all that apply | 1 |

| qopi_patientofage_2 | If patient of reproductive age, check all that apply | 1 |

| qopi_patientofage_5 | If patient of reproductive age, check all that apply | 1 |

| qopi_peri | For rectal cancer only: peri-rectal lymph node involvement | 1 |

| qopi_phdt | Pathology/hemato-pathology report date | 0.65 |

| qopi_plan_1 | Plan documented | 1 |

| qopi_plan_2 | Plan documented | 1 |

| qopi_plan_3 | Plan documented | 1 |

| qopi_plan_4 | Plan documented | 1 |

| qopi_plan_5 | Plan documented | 1 |

| qopi_pr | PR status | 0.86 |

| qopi_reasnochemo | Enter documented reason not recommended (optional) | Not analyzed |

| qopi_reasnochemoadmin | Enter other documented reason chemotherapy not administered | Not analyzed |

| qopi_reasnochemoadmin_bc | Enter other documented reason not administered (optional) (breast cancer) | Not analyzed |

| qopi_reasnohormonerec | Enter documented reason hormonal therapy not recommended (optional) | Not analyzed |

| qopi_resectiondt | Date of surgical resection | 1 |

| qopi_results | If patient had a surgical resection: Surgical resection results | 1 |

| qopi_rituximab | Rituximab administered | 1 |

| qopi_smokecounseling | Smoking cessation counseling recommended | 0.5 |

| qopi_stagedt | Cancer stage documented date | 0.66 |

| qopi_stop | Reason for stopping treatment | 0.65 |

| qopi_surgery | Surgery for primary tumor/cancer | 0.49 |

| qopi_transferin | Transfer-in status | 0 |

| qopi_tstage_breast | AJCC T stage at breast cancer diagnosis | 0.56 |

| qopi_tstage_crc | AJCC T stage at colon or rectal cancer diagnosis | 1 |

| qopi_txphysician | Date treatment summary provided or communicated to practitioner(s) | 0.5 |

| qopi_txsummary | Chemotherapy treatment summary completed | 0.06 |

| qopi_txsummarydt | Date completed-treatment summary | 0.67 |

| qopi_txsummprovphys | Treatment summary provided or communicated to practitioner(s) providing continuing care | 1 |

| qopi_txsummprovpt | Treatment summary provided to patient | 0 |

| qopi_wellbeing | Emotional well-being assessed | 0.31 |

NOTE. The Quality Oncology Practice Initiative (QOPI) element name and description are as provided by QOPI. IAA is κ for coded elements and percent agreement for dated elements. Elements that were not analyzed are denoted as such. Note: the elements qopi_chemodt_bc and qopi_chemorecdt_bc were combined with qopi_chemodt and qopi_chemorecdt, respectively; there were no overlapping data between the breast-specific and general elements.

Abbreviations: AJCC, American Joint Committee on Cancer; CEA, carcinoembryonic antigen; CHOP, cyclophosphamide, doxorubicin, vincristine, prednisone; EGFR, epidermal growth factor receptor; ER, estrogen receptor; ESA, erythropoietin-stimulating agent; HER2, human epidermal growth factor receptor 2; ICD-9-CM, International Classification of Diseases, Ninth Revision, Clinical Modification; PR, progesterone receptor.

Authors' Disclosures of Potential Conflicts of Interest

Although all authors completed the disclosure declaration, the following author(s) and/or an author's immediate family member(s) indicated a financial or other interest that is relevant to the subject matter under consideration in this article. Certain relationships marked with a “U” are those for which no compensation was received; those relationships marked with a “C” were compensated. For a detailed description of the disclosure categories, or for more information about ASCO's conflict of interest policy, please refer to the Author Disclosure Declaration and the Disclosures of Potential Conflicts of Interest section in Information for Contributors.

Employment or Leadership Position: Jeremy L. Warner, American Society of Clinical Oncology, HIT and eQOPI Work Groups (U)

Consultant or Advisory Role: None

Stock Ownership: None

Honoraria: None

Research Funding: None

Expert Testimony: None

Other Remuneration: None

Author Contributions

Conception and design: Jeremy L. Warner

Administrative support: Reed E. Drews

Provision of study materials or patients: Reed E. Drews

Collection and assembly of data: Jeremy L. Warner, Reed E. Drews

Data analysis and interpretation: All authors

Manuscript writing: Jeremy L. Warner, Peter Anick

Final approval of manuscript: All authors

References

- 1. Berwick DM, Winickoff DE. The truth about doctors' handwriting: A prospective study. BMJ. 1996;313:1657–1658. doi: 10.1136/bmj.313.7072.1657.

- 2. Campion FX, Larson LR, Kadlubek PJ, et al. Advancing performance measurement in oncology: Quality Oncology Practice Initiative participation and quality outcomes. J Oncol Pract. 2011;7(suppl):31s–35s. doi: 10.1200/JOP.2011.000313.

- 3. Neuss M, Gilmore TR, Kadlubek P. Tools for measuring and improving the quality of oncology care: The Quality Oncology Practice Initiative (QOPI) and the QOPI certification program. Oncology. 2011;25:880–883, 886–887.

- 4. Neuss MN, Desch CE, McNiff KK, et al. A process for measuring the quality of cancer care: The Quality Oncology Practice Initiative. J Clin Oncol. 2005;23:6233–6239. doi: 10.1200/JCO.2005.05.948.

- 5. Hewitt ME, Simone JV. Ensuring Quality Cancer Care. Washington, DC: National Academies Press; 1999.

- 6. American Society of Clinical Oncology. Quality Oncology Practice Initiative. http://qopi.asco.org.

- 7. Hripcsak G, Rothschild AS. Agreement, the f-measure, and reliability in information retrieval. J Am Med Inform Assoc. 2005;12:296–298. doi: 10.1197/jamia.M1733.

- 8. Uzuner O, Solti I, Xia F, et al. Community annotation experiment for ground truth generation for the i2b2 medication challenge. J Am Med Inform Assoc. 2010;17:519–523. doi: 10.1136/jamia.2010.004200.

- 9. Pustejovsky J, Stubbs A. Natural Language Annotation for Machine Learning. Sebastopol, CA: O'Reilly; 2012.

- 10. Fort K, Nazarenko A, Rosset S. Modeling the complexity of manual annotation tasks: A grid of analysis. International Conference on Computational Linguistics; 2012. pp. 895–910.

- 11. Campbell EM, Sittig DF, Chapman WW, et al. Understanding inter-rater disagreement: A mixed methods approach. AMIA Ann Symp Proc. 2010;2010:81–85.

- 12. Kottner J, Audige L, Brorson S, et al. Guidelines for Reporting Reliability and Agreement Studies (GRRAS) were proposed. J Clin Epidemiol. 2011;64:96–106. doi: 10.1016/j.jclinepi.2010.03.002.

- 13. Fleiss JL. Statistical Methods for Rates and Proportions. ed 2. New York, NY: Wiley; 1981.

- 14. Davis CL, Pierce JR, Henderson W, et al. Assessment of the reliability of data collected for the Department of Veterans Affairs National Surgical Quality Improvement Program. J Am Coll Surg. 2007;204:550–560. doi: 10.1016/j.jamcollsurg.2007.01.012.

- 15. Powell SG, Baker KR, Lawson B. A critical review of the literature on spreadsheet errors. Decision Supp Syst. 2008;46:128–138.

- 16. Rosenbloom ST, Crow AN, Blackford JU, et al. Cognitive factors influencing perceptions of clinical documentation tools. J Biomed Inform. 2007;40:106–113. doi: 10.1016/j.jbi.2006.06.006.

- 17. Rosenbloom ST, Denny JC, Xu H, et al. Data from clinical notes: A perspective on the tension between structure and flexible documentation. J Am Med Inform Assoc. 2011;18:181–186. doi: 10.1136/jamia.2010.007237.

- 18. Kern LM, Malhotra S, Barron Y, et al. Accuracy of electronically reported “meaningful use” clinical quality measures: A cross-sectional study. Ann Intern Med. 2013;158:77–83. doi: 10.7326/0003-4819-158-2-201301150-00001.

- 19. Abernethy AP, Etheredge LM, Ganz PA, et al. Rapid-learning system for cancer care. J Clin Oncol. 2010;28:4268–4274. doi: 10.1200/JCO.2010.28.5478.

- 20. Roth BJ, Krilov L, Adams S, et al. Clinical cancer advances 2012: Annual report on progress against cancer from the American Society of Clinical Oncology. J Clin Oncol. 2013;31:131–161. doi: 10.1200/JCO.2012.47.1938.