Abstract

A desirable goal of functional MRI (fMRI), both clinically and for basic research, is to produce detailed maps of cortical function in individual subjects. Single-subject mapping of the somatotopic hand representation in the human primary somatosensory cortex (S1) has been performed using both phase-encoding and block/event-related designs. Here, we review the theoretical strengths and limits of each method and empirically compare high-resolution (1.5 mm isotropic) somatotopic maps obtained using fMRI at ultrahigh magnetic field (7 T) with phase-encoding and event-related designs in six subjects in response to vibrotactile stimulation of the five fingertips. Results show that the phase-encoding design is more efficient than the event-related design for mapping fingertip-specific responses and in particular allows us to describe an additional somatotopic representation of fingertips on the precentral gyrus. However, with sufficient data, both designs yield very similar fingertip-specific maps in S1, which confirms that the assumption of local representational continuity underlying phase-encoding designs is largely valid at the level of the fingertips in S1. In addition, we show that the event-related design allows the mapping of overlapping cortical representations that are difficult to estimate using the phase-encoding design. The event-related data show a complex pattern of overlapping cortical representations for different fingertips within S1 and demonstrate that regions of S1 responding to several adjacent fingertips can incorrectly be identified as responding preferentially to one fingertip in the phase-encoding data.

Keywords: high-resolution functional MRI, somatosensory cortex, tactile perception, human, high-field MRI

The ability to measure reliable and detailed cortical maps in individual subjects is key to applying functional MRI (fMRI) in the clinical setting. This ability has been compellingly demonstrated in early visual cortex, where fMRI at fields of 3 T and below has provided robust maps of retinotopically organized areas in single subjects with high sensitivity and spatial resolution (e.g., Baseler et al. 2011; for review, see Wandell et al. 2007). In the somatosensory cortex, mapping the cortical representation of the fingertips could be particularly important for understanding and characterizing abnormal sensory-motor cortical function or cortical reorganization, for example following disruption of normal developmental periods, traumatic brain injury, stroke, peripheral nerve damage (Tecchio et al. 2002), recovery from hand surgery, or in dystonia (Hinkley et al. 2009). In addition, accurate localization of brain areas subserving sensory-motor function could be of significant benefit before neurosurgery such as the resection of a tumor or epileptogenic tissue.

Retinotopic mapping of early visual cortex is most often performed using a phase-encoding paradigm design because of the efficiency and power that this approach provides (Engel 2012). Phase-encoding designs in the visual modality take advantage of the spatial correspondence between visual/retinotopic space and cortical space to create a travelling wave of activity in the cortex by stimulating adjacent parts of the visual field successively in time (using expanding ring and rotating wedge stimuli). With appropriate timing of the stimulus presentation, the delay and temporal dispersion of the resulting hemodynamic response mean that each voxel that responds preferentially to a location in visual space shows a quasisinusoidal response whose peak occurs at a given phase, allowing the phase value to be associated directly with a location in visual space. Several groups have applied this experimental design to map other topographically organized sensory cortices (as reviewed in Engel 2012).

In the tactile modality, the phase-encoding design has been used to map the somatotopic representation of the arm (Servos et al. 1998), face (Huang and Sereno 2007; Sereno and Huang 2006), multiple fingertips (Sanchez-Panchuelo et al. 2010), and within a single finger (Overduin and Servos 2004, 2008; Sanchez-Panchuelo et al. 2012). However, there are potential problems in applying this paradigm to sensory modalities other than visual since the phase-encoding analysis makes the assumption of locally continuous (or conformal) mapping: i.e., adjacent cortical locations are assumed to represent adjacent locations in external space (Engel 2012; Warnking et al. 2002). This assumption is largely valid in the visual cortex in that there are: 1) no known discontinuities within cortical retinotopic maps (except between hemispheres); and 2) no discontinuities between visual areas since most adjoining retinotopic maps represent the same, mirrored part of the visual field at their border (Wandell et al. 2005, 2007). Discontinuities within somatotopic maps have been reported in primate electrophysiological mapping studies. One well-described discontinuity is between the adjacent representations of the hand and the face (e.g., Nelson et al. 1980). These discontinuities represent a potential limitation of phase-encoding designs because spurious phase values can arise at the interface of adjacent representations of discontinuous body parts (map discontinuities) if they coexist within the same voxel or even within the spatial spread of the hemodynamic response, potentially resulting in localization errors.

Another important feature of phase-encoding designs, whatever the sensory modality being studied, is that they are relatively insensitive to overlapping representations (Dumoulin and Wandell 2008; see, however, Smith et al. 2001). This is because regions that respond to stimulation of multiple sites will display an attenuated quasisinusoidal response for which the phase reflects the weighted average location of the representation. Although this characteristic can be useful for eliminating nonspecific responses resulting from draining veins, spatial overlap can arise from genuinely overlapping neural representations, including representations of adjacent fingers (Iwamura et al. 1983a, 1985). Phase-encoding designs are not well-suited to investigate such overlapping neural representations.
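The averaging argument can be made concrete with a small numerical sketch. It treats the stimulation-frequency component of a voxel as the linear sum of fingertip-specific responses, each already corrected for hemodynamic delay so that a response to fingertip Dn falls at the center of its phase bin; linearity, equal amplitudes and the function names are simplifying assumptions for illustration only. For two adjacent fingertips the phase falls between their bins and the amplitude is attenuated; for two nonadjacent fingertips at opposite ends of the cycle (D1 and D5), the phase falls on the border between their bins, which is the mislocalization risk described above for map discontinuities.

```python
import cmath
import math

BIN_WIDTH = 2 * math.pi / 5  # five fingertip phase bins spanning 0 to 2*pi


def bin_center(finger):
    """Phase at the center of the bin for fingertip 1 (D1) to 5 (D5)."""
    return (finger - 0.5) * BIN_WIDTH


def summed_response(weights):
    """Amplitude and phase of the stimulation-frequency component of a voxel
    whose response is assumed to be the linear sum of fingertip responses.

    weights maps fingertip number -> response amplitude (hypothetical values).
    """
    z = sum(w * cmath.exp(1j * bin_center(f)) for f, w in weights.items())
    return abs(z), cmath.phase(z) % (2 * math.pi)


# Adjacent fingertips (D2 + D3): amplitude ~1.618 instead of 2.0, phase ~4*pi/5
print(summed_response({2: 1.0, 3: 1.0}))
# Nonadjacent fingertips (D1 + D5): phase lands on the D5/D1 bin border (0 or 2*pi)
print(summed_response({1: 1.0, 5: 1.0}))
```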

An alternative approach to mapping that has often been used in the somatosensory modality is the independent estimation of the response to stimulation of different locations on the body surface using either a block (e.g., Nelson and Chen 2008; Schweisfurth et al. 2011; Stringer et al. 2011) or event-related (ER) design (e.g., Deuchert et al. 2002; J. Besle, R. M. Sanchez-Panchuelo, R. Bowtell, S. T. Francis, and D. Schluppeck, unpublished observations). Activation is then estimated for each body part, usually in the generalized linear model (GLM) framework. Advantages of this independent estimation over phase-encoding designs are that it allows overlapping hemodynamic response patterns to be mapped and does not rely on the assumption of local continuity: a voxel located at a representational discontinuity, responding to two nonadjacent locations, is activated only by these locations and not by intermediate locations, avoiding mislocalization. However, because GLM mapping does not include prior knowledge of the expected maps (in the form of the local continuity assumption), it is likely to be less powerful than phase-encoding for a given scanning time (Engel 2012) and, as a consequence, more sensitive to the choice of statistical threshold.

In this study, we evaluate the use of phase-encoding and ER paradigm designs to map the representation of the five fingertips of the left hand in individual subjects at high spatial resolution (1.5 mm isotropic) using ultrahigh magnetic field (7 T). We demonstrate that the phase-encoding design needs less data than the ER design to produce maps of equivalent quality. We also show that, when overlapping cortical responses are ignored, phase-encoding and ER paradigm designs produce very similar maps of S1 as well as additional somatotopic representations in the motor cortex, suggesting that the assumption of spatial continuity is a good approximation at the level of representation measured with high-resolution fMRI. However, ER maps provide additional information on the overlapping cortical responses (J. Besle, R. M. Sanchez-Panchuelo, R. Bowtell, S. T. Francis, and D. Schluppeck, unpublished observations) and aid the interpretation of features of the maps obtained using the phase-encoding design.

MATERIALS AND METHODS

Subjects

Six subjects who were experienced in fMRI experiments participated in this study (aged 28.3 ± 4.3 yr, 2 females). Approval for the study was obtained from the University of Nottingham Medical School's Ethics Committee, and all subjects gave full written consent. Each subject participated in two scanning sessions: one functional session at 7 T and one structural session at 3 T. The latter was used to obtain a T1-weighted image of the whole brain for image segmentation and cortical unfolding.

Stimuli and Task

The fingertips of each subject's left hand were stimulated using five independently controlled, piezo-electric devices to deliver suprathreshold vibrotactile stimuli (50 Hz) to ∼1 mm2 of the skin (http://www.dancerdesign.co.uk; Dancer Design, St. Helens, United Kingdom).

Somatosensory stimuli were presented using two different paradigms to assess the somatotopic representation in S1: 1) a phase-encoding localizer (Sanchez-Panchuelo et al. 2010); and 2) an ER design (Fig. 1).

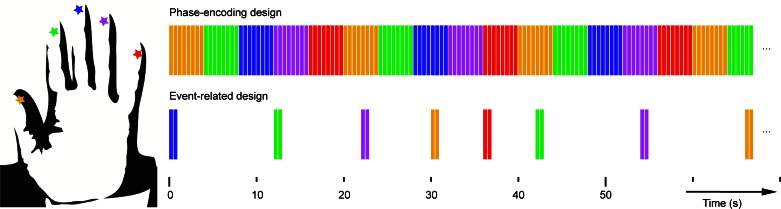

Fig. 1.

Timing of stimuli and experimental designs. Top: phase-encoding design in which the fingertips are stimulated sequentially either from thumb (D1) to little finger (D5) or in the reverse order (data not shown). Each color bar represents 0.4 s of continuous 50-Hz fingertip stimulation (yellow, D1; green, D2; blue, D3; magenta, D4; and red, D5). Note that, in the phase-encoding design, there are no periods of rest between consecutive fingertip stimulations. Bottom: event-related (ER) design with intertrial intervals of 4–12 s. Each vibrotactile event consisted of 2 periods of 50-Hz vibrotactile stimulation lasting 0.4 s, separated by 0.1 s.

Phase-Encoding Design

Data were collected during 2–4 runs of the phase-encoding design in which individual fingertips were stimulated for 4-s periods in either a forward (fingertip 1 to 5) or reverse order (fingertip 5 to 1) over a 20-s cycle (equal number of forward and reverse runs collected per subject). The 4-s stimulation period of each fingertip was subdivided into 8 periods of continuous, 50-Hz stimulation of 0.4-s duration separated by 0.1-s gaps so as to limit habituation effects. Two subjects (subjects 4 and 5) were presented with 2 phase-encoding runs of 12 cycles (4 min; 1 forward and 1 reverse) immediately before the ER runs. The 4 other subjects were presented with 2 runs of 9 cycles (3 min) before and 2 identical runs after the ER runs. For subject 1, all runs were shortened to 8 cycles (2 min 40 s) due to an error during the acquisition of 1 of the runs. To control attentional state, subjects performed a visual, 2-interval, forced-choice discrimination on the fixation point to assess which interval had lower luminance (Gardner et al. 2008); see next section for full details.
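The run timing described above can be checked with a short sketch that builds the fingertip label for each 2-s TR and the onsets of the 0.4-s pulses within each 4-s block. The function name and the way the schedule is represented are illustrative assumptions, not the stimulation code used in the study.

```python
import numpy as np

TR = 2.0                 # s
BLOCK = 4.0              # s of stimulation per fingertip
PULSE, GAP = 0.4, 0.1    # s; 8 pulse/gap pairs fill each 4-s block
N_FINGERS = 5


def phase_encoding_schedule(n_cycles, reverse=False):
    """Return (fingertip label per TR, pulse onsets in s) for one phase-encoding run."""
    order = list(range(N_FINGERS, 0, -1)) if reverse else list(range(1, N_FINGERS + 1))
    trs_per_block = int(BLOCK / TR)
    fingertip_per_tr = np.repeat(np.tile(order, n_cycles), trs_per_block)
    n_pulses = int(round(BLOCK / (PULSE + GAP)))
    block_onsets = np.arange(n_cycles * N_FINGERS) * BLOCK
    pulse_onsets = (block_onsets[:, None] + np.arange(n_pulses) * (PULSE + GAP)).ravel()
    return fingertip_per_tr, pulse_onsets


labels, onsets = phase_encoding_schedule(12)
print(labels.size * TR / 60)  # 4.0 min for 12 cycles of 20 s
```

The same function gives 180 s for the 9-cycle runs and 160 s (2 min 40 s) for the 8-cycle runs.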

ER Design

Six to eight runs of the ER design were carried out (4 min per run). Each run comprised 30 stimulation trials (6 per fingertip), with each trial consisting of 2 periods of stimulation of 0.4-s duration separated by a 0.1-s gap. The onset of each trial was synchronized to the start of an MRI volume acquisition, with a random intertrial interval ranging from 4 to 12 s (in 2-s steps). The order in which fingertips were stimulated was block-randomized [i.e., 6 randomized sequences of (1 … 5)]. To keep the attentional state constant throughout each run, subjects performed a 2-interval, forced-choice task. In alternate runs, we used either a visual, fixation-point dimming task (which interval had lower luminance; see Gardner et al. 2008) or a somatosensory, amplitude-discrimination task (choosing in which interval there was a slight increase in stimulus amplitude). In both tasks, the stimulus difference was continuously adjusted using a three-down, one-up staircase procedure. Subjects reported their responses by pressing one of two buttons with their nonstimulated, right hand. We found no significant main effect of attention or interaction between task and finger stimulation in five out of six subjects, implying that the relatively coarse attentional manipulation had no appreciable differential effect on the fingertip maps in S1. We therefore pooled runs using the visual and somatosensory attentional tasks for all ER analyses. Subjects rested for several tens of seconds between consecutive runs.
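A trial sequence with these properties (block-randomized fingertip order, intertrial intervals drawn from 4, 6, 8, 10 or 12 s, onsets locked to the 2-s TR) can be generated as sketched below. Drawing the intervals uniformly and starting the first trial at 0 s are assumptions; the text does not say how the random intervals were constrained to fit the 4-min run, and this sketch does not enforce the run length.

```python
import numpy as np

TR = 2.0
ITI_CHOICES = np.arange(4, 13, 2)  # 4, 6, 8, 10, 12 s
N_FINGERS, N_REPEATS = 5, 6


def er_sequence(rng):
    """Block-randomized fingertip order and TR-locked trial onsets (s) for one run."""
    # 6 independent random permutations of fingertips 1..5
    order = np.concatenate([rng.permutation(N_FINGERS) + 1 for _ in range(N_REPEATS)])
    itis = rng.choice(ITI_CHOICES, size=order.size)
    onsets = np.concatenate([[0.0], np.cumsum(itis[:-1])])
    return order, onsets.astype(float)


order, onsets = er_sequence(np.random.default_rng(1))
print(order[:10], onsets[:5])
```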

Image Acquisition

MR data were collected on a 7-T system (Achieva; Philips) using a volume-transmit coil and a 16-channel receive coil (Nova Medical, Wilmington, MA). Participants' heads were stabilized with a customized MR-compatible vacuum pillow (B.u.W. Schmidt, Garbsen, Germany) and foam padding so as to minimize head motion.

Functional data were acquired using T2*-weighted, multislice, single-shot gradient echo, echoplanar imaging (EPI) with the following parameters: echo time (TE) = 25 ms, SENSE reduction factor of 3 in the right-left (RL) direction for parallel imaging, flip angle (FA) = 75°, repetition time (TR) = 2,000 ms. The spatial resolution was 1.5 mm isotropic with a reduced field of view (FOV) of 156 × 192 × 42 mm in RL, anterior-posterior (AP), and foot-head directions, respectively. Magnetic field inhomogeneity was minimized using an image-based shimming approach (Poole and Bowtell 2008; Wilson et al. 2002) as described in detail in Sanchez-Panchuelo et al. (2010).
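As a quick arithmetic check of these parameters, the reduced FOV and 1.5-mm voxels imply the acquisition matrix below, and a 4-min run at TR = 2 s contains 120 volumes. The sketch assumes contiguous slices with no slice gap, which the text does not state explicitly.

```python
FOV_MM = {"RL": 156, "AP": 192, "FH": 42}  # reduced field of view from the text
VOXEL_MM = 1.5
TR_S = 2.0

matrix = {axis: round(size / VOXEL_MM) for axis, size in FOV_MM.items()}
volumes_per_er_run = int(4 * 60 / TR_S)
print(matrix)              # {'RL': 104, 'AP': 128, 'FH': 28}
print(volumes_per_er_run)  # 120
```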

The functional runs were followed by the acquisition of high-resolution, T2*-weighted axial images (0.25 × 0.25 × 1.5 mm3 resolution; TE/TR = 9.3/457 ms, FA = 32°, SENSE factor = 2) with the same slice prescription and coverage as the functional data. These data allowed registration with the whole-head anatomic T1-weighted images acquired at 3 T.

In addition, a high-resolution, three-dimensional (3-D) magnetization-prepared rapid acquisition with gradient echo (MPRAGE) data set [1-mm isotropic resolution, linear phase-encoding order, TE/TR = 3.7/8.13 ms, FA = 8°, inversion time (TI) = 960 ms] was collected at 3 T. These data were acquired at 3 T for ease of segmentation before flattening, as images acquired at 3 T display less B1-inhomogeneity-related intensity variation than 7-T data.

Data Analysis

Preprocessing steps were carried out using tools in FSL (Smith et al. 2004), and statistical analysis of functional imaging data was performed using mrTools (http://www.cns.nyu.edu/heegerlab) in MATLAB (The MathWorks, Natick, MA).

Preprocessing

The functional data were all aligned (motion-corrected) to the last volume of the functional data set, acquired closest in time to the high-resolution T2*-weighted volume (the reference EPI frame). To account for scanner drift and other low-frequency signals, all time series were high-pass filtered (0.01-Hz cutoff), and data were then converted to percent signal change for subsequent statistical analysis.
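The text specifies only the 0.01-Hz cutoff, not the filter itself. The sketch below swaps in one common choice, regression of the time series onto a discrete cosine basis whose frequencies lie below the cutoff (the approach used in SPM), followed by conversion to percent signal change relative to the run mean. It is not the mrTools filter, and the function names are hypothetical.

```python
import numpy as np


def dct_basis(n_vols, tr, cutoff_hz=0.01):
    """Discrete cosine regressors with frequency k / (2 * n_vols * tr) at or below the cutoff.

    Column 0 is the constant term.
    """
    t = np.arange(n_vols)
    n_basis = int(np.floor(2 * n_vols * tr * cutoff_hz)) + 1
    k = np.arange(n_basis)
    return np.cos(np.pi * np.outer(2 * t + 1, k) / (2 * n_vols))


def highpass_percent_change(ts, tr=2.0, cutoff_hz=0.01):
    """Remove slow drifts by least-squares regression on the DCT basis, then scale to %."""
    basis = dct_basis(ts.size, tr, cutoff_hz)
    beta, *_ = np.linalg.lstsq(basis, ts, rcond=None)
    mean_signal = ts.mean()
    residual = ts - basis @ beta  # drift-free, zero-mean time series
    return 100.0 * residual / mean_signal
```

For a 4-min run (120 volumes at TR = 2 s), the basis has five columns, so the 0.05-Hz phase-encoding frequency is left essentially untouched while slower drifts are removed.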

Statistical Analysis

Phase-encoding design.

Time series from reverse-order stimulation runs were 1st reversed and then shifted forward by 1 TR period (2 s). The phase/amplitude and coherence of the best-fitting sinusoid at the stimulation frequency were estimated for each forward-order run and each shifted, time-reversed, reverse-order run, and then averaged across runs. We show in the appendix and Fig. 2 that averaging the phase of forward-order and time-reversed, reverse-order runs cancels the hemodynamic response function (HRF) delay in the estimation of the phase and that shifting the reverse-order runs by 1 TR corrects for slice acquisition time and discrete time sampling effects, resulting in a direct relationship between the phase value and the location of stimulation. This ensures that the phase of a hypothetical voxel responding to only one fingertip would fall exactly in the middle of the phase bin corresponding to that fingertip (e.g., a voxel responding only to the index finger would have a phase of 3π/5, which is the center of the [2π/5, 4π/5] phase bin).

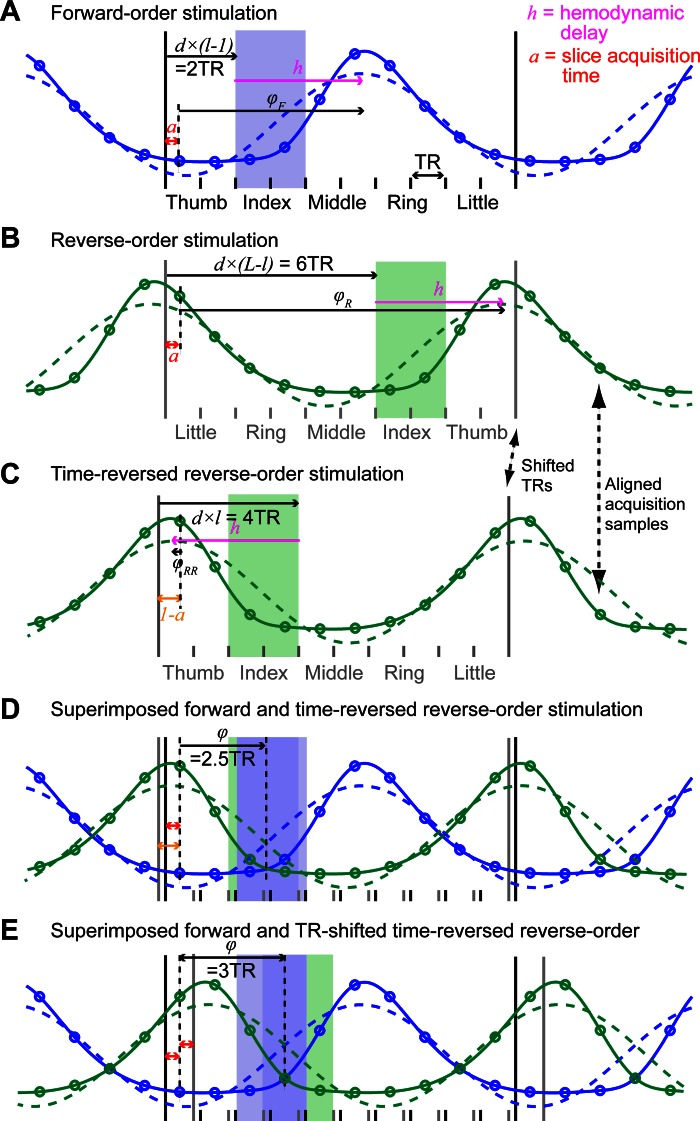

Fig. 2.

Phase computation and independence from slice acquisition time and hemodynamic response function (HRF) delay for phase-encoding data (see appendix for details). A: solid blue line models the time series of activation of a hypothetical voxel responding only to the index finger (2nd location, l = 2), in a phase-encoding run in which fingertips are stimulated from the thumb to the little finger (total number of locations, L = 5). Only 1 cycle of the stimulation is illustrated. The fingertip that is being stimulated changes every 2nd repetition time (TR), at the start of the TR period, as described in the main text. Each circle on the curve represents a time point sampled at an unknown acquisition time, a, after the start of each TR period. The activation corresponding to the stimulation of an individual fingertip is modeled as a boxcar function with a period of 10 TR and an on period d = 2 TR, convolved with a double-γ HRF model. Dashed blue line: best-fitting cosine function. φF, phase of the cosine function (relative to the acquisition time of the 1st sample in the stimulation cycle, expressed in TR), as measured in the phase-encoding analysis described in the text. The best-fitting cosine peaks after an unknown delay, h, relative to the start of stimulation, due to the hemodynamic delay. B: as for A but for reverse-order stimulation. C: in the phase-encoding analysis, reverse-order stimulation runs are time-reversed relative to forward-order runs. However, this reversal is limited by the acquisition sampling (1 sample every TR). This is illustrated by the fact that acquisition samples, but not the actual start of TR/stimulation, are aligned between B and C, as highlighted by the arrows. D: superimposed model activations for forward-order stimulation and time-reversed, reverse-order stimulation. The 2 model activations (and corresponding best-fitting cosines) are mirror images of each other.
In the phase-encoding analysis, the phase is computed as the complex average of the phases of both acquired time series (the amplitude is ignored) and therefore corresponds to the point of symmetry of the forward-order and time-reversed, reverse-order time series. Because the phase is computed relative to acquisition sample times (circles), the point of symmetry is independent of both a and h (see appendix for details) and corresponds to exactly 2.5 TR. E: to make the phase values easier to interpret, we shift the time-reversed, reverse-order time series by an additional TR so that the point of symmetry is moved forward by half a TR. Now the phase for a voxel activated uniquely by the index fingertip is predicted to be exactly (3 TR/10 TR) × 2π = 3π/5, which is the center of the [2π/5, 4π/5] phase bin.
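The symmetry argument can be checked numerically. The sketch below simulates the steady-state response of a voxel driven only by one fingertip, using a hypothetical gamma-shaped response t²e^(−t) (in TR units) rather than the double-γ model of the figure, and samples it at an arbitrary acquisition offset a within each TR. Time-reversing the reverse-order run, delaying it by one TR and complex-averaging the two phases gives 3 TR out of a 10-TR cycle for the index fingertip, i.e., 3π/5, independently of a, whereas the forward run alone is shifted by the hemodynamic delay. The sketch also makes one property of complex averaging explicit: the two phases average to the point of symmetry only while the response delay relative to the center of the stimulation block, minus a, stays below a quarter cycle (2.5 TR); beyond that, the averaged phase flips by π. All names and the response shape are assumptions for illustration.

```python
import numpy as np

PERIOD = 10            # TRs per cycle: 5 fingertips x 2 TRs
N_CYCLES = 8
N = PERIOD * N_CYCLES  # samples per run
STEPS = 100            # fine time steps per TR, approximating continuous time
OMEGA = 2 * np.pi / PERIOD


def gamma_hrf(t_tr):
    """Hypothetical gamma-shaped response t^2 exp(-t), peaking at 2 TR (4 s at TR = 2 s)."""
    return np.where(t_tr > 0, t_tr ** 2 * np.exp(-t_tr), 0.0)


def simulate_run(first_tr, acq_step):
    """Sampled steady-state response to a 2-TR stimulus starting at first_tr in each cycle.

    acq_step is the acquisition offset a within each TR, in fine steps (0 <= a < STEPS).
    """
    idx = np.arange(N * STEPS)
    pos = idx % (PERIOD * STEPS)
    stim = ((pos >= first_tr * STEPS) & (pos < (first_tr + 2) * STEPS)).astype(float)
    kernel = gamma_hrf(idx / STEPS)
    # circular convolution gives the periodic (steady-state) response
    resp = np.real(np.fft.ifft(np.fft.fft(stim) * np.fft.fft(kernel)))
    return resp[np.arange(N) * STEPS + acq_step]


def cycle_phase(x):
    """Phase phi of the best-fitting A*cos(OMEGA*k - phi), in [0, 2*pi)."""
    coef = np.fft.fft(x - x.mean())[N_CYCLES]  # DFT bin at the stimulation frequency
    return np.mod(-np.angle(coef), 2 * np.pi)


def combined_phase(forward, reverse):
    """Time-reverse the reverse-order run, delay it by one TR, complex-average the phases."""
    flipped = np.roll(reverse[::-1], 1)
    z = np.exp(1j * cycle_phase(forward)) + np.exp(1j * cycle_phase(flipped))
    return np.mod(np.angle(z), 2 * np.pi)


# Index fingertip: TRs 2-3 of each forward cycle, TRs 6-7 of each reverse cycle.
for a in (60, 90):
    print(a, combined_phase(simulate_run(2, a), simulate_run(6, a)), 3 * np.pi / 5)
```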

To delineate regions of interest (ROIs) for each fingertip, coherence values were converted into t statistics using the formula t = c × √[(n − 2)/(1 − c²)], where c is the coherence and n is the number of time points in the time series. The uncorrected P values corresponding to these t-statistic values were then false discovery rate (FDR)-adjusted using a step-up method (Benjamini et al. 2006). ROIs corresponding to each fingertip were formed by dividing phase values into five bins of 2π/5 width (spanning the range of phase values from 0 to 2π) and selecting spatially contiguous regions of voxels from the same bin that were above a coherence threshold equivalent to an FDR-adjusted P value of 0.05 (corresponding to coherence thresholds ranging from 0.29 to 0.49 depending on the subject). ROI selection was restricted to voxels within the cortical gray matter by first transforming the phase map into the structural whole-head space using nonlinear transformation coefficients (see below) and then converting the ROI voxel coordinates back into the functional volume space. These fingertip ROIs were subsequently used as independent ROIs (Kriegeskorte et al. 2009) in the analysis of the ER data so as to 1) limit the number of multiple comparisons and 2) allow group-level inference tests to be conducted.
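The thresholding and binning steps can be sketched as follows, assuming the standard correlation-to-t conversion t = c√((n − 2)/(1 − c²)). The FDR step, the spatial-contiguity selection and the restriction to gray matter are omitted, and the coherence threshold passed in is a placeholder for the subject-specific values quoted above. Function names are hypothetical.

```python
import numpy as np

N_FINGERS = 5
BIN_WIDTH = 2 * np.pi / N_FINGERS


def coherence_to_t(c, n):
    """t statistic for coherence c estimated from a time series of n points."""
    c = np.asarray(c, dtype=float)
    return c * np.sqrt((n - 2) / (1.0 - c ** 2))


def phase_to_fingertip(phase):
    """Fingertip label 1 (D1) to 5 (D5) from phase bins of width 2*pi/5 over [0, 2*pi)."""
    wrapped = np.mod(phase, 2 * np.pi)
    # the final modulo guards against a rounding edge case exactly at 2*pi
    return (np.floor(wrapped / BIN_WIDTH).astype(int) % N_FINGERS) + 1


def fingertip_labels(coherence, phase, coherence_threshold):
    """0 where coherence is below threshold, otherwise the fingertip label."""
    labels = phase_to_fingertip(phase)
    return np.where(np.asarray(coherence) >= coherence_threshold, labels, 0)
```

With this binning, the index-fingertip phase of 3π/5 falls in bin 2, and D1 to D5 occupy consecutive bins starting at a phase of 0.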

When there appeared to be several separate somatotopic maps in the same subject on the flattened cortical view, we checked that they represented distinct maps and were not an artifact of blood oxygen level-dependent (BOLD) signal spreading across a sulcus due to either extensive extracortical BOLD activity or segmentation errors. Our criterion for distinguishing independent somatotopic maps was that the cluster of contiguous voxels corresponding to each individual finger (phase bin of width 2π/5) in one map should not be contiguous, in the volumetric data, with the cluster for the same finger in the other map.
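This criterion can be expressed as a voxel-adjacency test: dilate each fingertip cluster of one map by one voxel and check whether it touches the cluster for the same fingertip in the other map. The sketch assumes 26-connectivity (face, edge and corner neighbors) and labeled volumes in which 0 means unassigned; the text does not state which neighborhood was used, and the function names are hypothetical.

```python
import numpy as np


def dilate26(mask):
    """One-voxel binary dilation with 26-connectivity (no wrap-around at the edges)."""
    mask = np.asarray(mask, dtype=bool)
    padded = np.pad(mask, 1)
    out = np.zeros_like(mask)
    nx, ny, nz = mask.shape
    for dx in range(3):
        for dy in range(3):
            for dz in range(3):
                out |= padded[dx:dx + nx, dy:dy + ny, dz:dz + nz]
    return out


def maps_are_distinct(labels_a, labels_b, n_fingers=5):
    """True if no fingertip cluster of map A touches the same-fingertip cluster of map B."""
    for finger in range(1, n_fingers + 1):
        if np.any(dilate26(labels_a == finger) & (labels_b == finger)):
            return False
    return True
```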

ER design: statistical analysis.

Data were fitted using a GLM in which each fingertip stimulation sequence was modeled separately. For each fingertip, the 1-s stimulation was modeled as a 1-s boxcar convolved with a canonical HRF model and with its orthogonalized temporal derivative, resulting in a GLM of 10 regressors (5 fingertips × 2 basis functions). To simplify the inference tests while still taking into account differences in the hemodynamic response delay across subjects, we maximized the variance explained by the 1st component (magnitude) of each fingertip condition and minimized the contribution of the derivative component. This was achieved by fitting the GLM in 2 steps. In the 1st fitting step, identical HRF model parameters were used for all subjects (a canonical HRF with a time to peak of 6 s for the 1st, positive γ-function and of 16 s for the 2nd, undershoot γ-function), and the GLM was fitted using ordinary least-squares. We then estimated in each phase-encoding-defined ROI the times to the maximum positive and negative peaks of the estimated HRF for the corresponding fingertip condition. We used these estimates, averaged across the 5 ROIs, as the parameters of the γ-functions in the 2nd step. In this 2nd step, time series were fitted using generalized least-squares to account for temporal autocorrelation of the noise (Burock and Dale 2000; Wicker and Fonlupt 2003), which would otherwise bias inference test outcomes (Smith et al. 2007). The noise correlation matrix was estimated at each voxel using Tukey tapers (Woolrich et al. 2001) from the time series averaged across within-slice, square regions of 20 × 20 voxels, excluding voxels outside of the brain.
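A minimal sketch of how such a design matrix can be assembled. It assumes a double-γ HRF built from two unit-scale gamma densities whose modes equal the stated times to peak, an SPM-style undershoot ratio of 1/6, and a 0.1-s convolution grid; the ratio, the grid, the column order (magnitudes first) and the function names are assumptions. The 2nd fitting step would call the same function with the subject-specific peak times; the generalized least-squares prewhitening is not shown.

```python
import math

import numpy as np

TR = 2.0
DT = 0.1  # s, fine grid used for the convolution


def gamma_pdf(t, shape, scale=1.0):
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = t[pos] ** (shape - 1) * np.exp(-t[pos] / scale) / (math.gamma(shape) * scale ** shape)
    return out


def double_gamma(t, peak=6.0, undershoot=16.0, ratio=1 / 6):
    """Double-gamma HRF; with unit scale, each gamma's mode equals its shape minus 1."""
    return gamma_pdf(t, peak + 1) - ratio * gamma_pdf(t, undershoot + 1)


def design_matrix(onsets_by_finger, n_vols, peak=6.0, undershoot=16.0, duration=1.0):
    """Canonical and orthogonalized-derivative regressors (n_vols x 10, magnitudes first)."""
    steps_per_tr = int(round(TR / DT))
    t_fine = np.arange(n_vols * steps_per_tr) * DT
    t_hrf = np.arange(int(round(32.0 / DT))) * DT
    hrf = double_gamma(t_hrf, peak, undershoot)
    dhrf = np.gradient(hrf, DT)
    sample = np.arange(n_vols) * steps_per_tr
    canon, deriv = [], []
    for onsets in onsets_by_finger:
        box = np.zeros_like(t_fine)
        for onset in onsets:
            box[(t_fine >= onset) & (t_fine < onset + duration)] = 1.0
        c = np.convolve(box, hrf)[: t_fine.size][sample]
        d = np.convolve(box, dhrf)[: t_fine.size][sample]
        d = d - (d @ c) / (c @ c) * c  # remove the part of d explained by c
        canon.append(c)
        deriv.append(d)
    return np.column_stack(canon + deriv)
```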

For each fingertip condition, we tested on a voxel-by-voxel basis whether the magnitude parameter estimate was: 1) greater than the average of the four other conditions; and 2) greater than zero. The former contrast enhances voxels that show a larger response to the stimulation of one fingertip and was used to facilitate comparison with the phase-encoding results. All contrasts were tested using a one-sided t-test taking into account the noise correlation matrix to reduce bias (Burock and Dale 2000; Wicker and Fonlupt 2003; Woolrich et al. 2001). To reduce the number of inference tests, the analysis was restricted to voxels in the ROIs that were identified using the phase-encoding localizer (dilated by 5 voxels to ensure complete coverage). The average number of voxels analyzed per subject was 7,425 ± 1,396, which is equivalent to a volume of 25 ± 4.7 cm3.
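The two contrasts can be written as weight vectors over the 10 regressors. The sketch assumes that the five magnitude regressors come first and computes an ordinary least-squares t statistic under white noise, i.e., without the Tukey-taper prewhitening applied in the study, so it illustrates the contrast logic only; the one-sided test then compares this t value with the upper-tail critical value. The final line reproduces the conversion of the analyzed voxel count into volume for 1.5-mm isotropic voxels. Function names are hypothetical.

```python
import numpy as np

N_FINGERS = 5


def contrast_vectors(finger):
    """Specificity and activation contrasts for finger index 0 (D1) to 4 (D5).

    Assumes columns ordered as 5 magnitude regressors, then 5 derivative regressors.
    """
    specificity = np.zeros(2 * N_FINGERS)
    specificity[:N_FINGERS] = -1.0 / (N_FINGERS - 1)  # minus the average of the others
    specificity[finger] = 1.0
    activation = np.zeros(2 * N_FINGERS)
    activation[finger] = 1.0
    return specificity, activation


def ols_t(X, y, c):
    """t statistic for c @ beta under OLS with white noise (no prewhitening)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = resid @ resid / dof
    var_c = sigma2 * (c @ np.linalg.pinv(X.T @ X) @ c)
    return (c @ beta) / np.sqrt(var_c)


# 7,425 voxels of 1.5 x 1.5 x 1.5 mm correspond to about 25.06 cm3
print(7425 * 1.5 ** 3 / 1000)
```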

FDR adjustment (Benjamini and Hochberg 1995) was performed using an adaptive step-up method (Benjamini et al. 2006). All adjusted P values were converted to quantiles of the standard normal distribution (z-values).
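The sketch below shows the Benjamini–Hochberg linear step-up adjustment, the two-stage adaptive step-up procedure described by Benjamini, Krieger and Yekutieli (2006) as a rejection rule, and a one-sided conversion of P values to z = Φ⁻¹(1 − P). Which adaptive variant was used, and how adjusted P values were derived from it, is not stated, so the z conversion is applied here to standard BH-adjusted values as an assumption. Function names are hypothetical.

```python
from statistics import NormalDist

import numpy as np


def bh_adjust(p):
    """Benjamini-Hochberg (linear step-up) adjusted P values."""
    p = np.asarray(p, dtype=float)
    m = p.size
    order = np.argsort(p)
    ranked = p[order] * m / np.arange(1, m + 1)
    ranked = np.minimum.accumulate(ranked[::-1])[::-1]  # enforce monotonicity
    adjusted = np.empty(m)
    adjusted[order] = np.minimum(ranked, 1.0)
    return adjusted


def two_stage_reject(p, q=0.05):
    """Two-stage adaptive linear step-up (Benjamini, Krieger and Yekutieli 2006)."""
    p = np.asarray(p, dtype=float)
    m = p.size
    q1 = q / (1.0 + q)
    stage1 = bh_adjust(p) <= q1
    r1 = int(stage1.sum())
    if r1 == 0 or r1 == m:
        return stage1
    # step up again at a level scaled by the estimated number of true nulls, m - r1
    return bh_adjust(p) <= q1 * m / (m - r1)


def p_to_z(p):
    """One-sided conversion of P values to standard-normal quantiles."""
    nd = NormalDist()
    clipped = np.clip(np.asarray(p, dtype=float), 1e-15, 1 - 1e-15)
    return np.array([nd.inv_cdf(1.0 - v) for v in clipped.ravel()]).reshape(clipped.shape)
```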

Alignment and Projection of Statistical Maps onto Surfaces and Flattened Patches

Automated cortical segmentation of the anatomic images and reconstruction of the white/gray and gray/pial cortical surfaces were performed using Freesurfer (http://surfer.nmr.mgh.harvard.edu/; Dale et al. 1999). The mrFlatMesh algorithm, part of the VISTA software (http://white.stanford.edu/software/), was used to create flattened representations of the cortical regions surrounding the central sulcus and postcentral gyrus of the right hemisphere.

To render the results on surface and flattened representations, statistical maps were moved from functional data acquisition space into the anatomic space, in which cortical surface reconstruction had been performed. First, we estimated the linear alignment matrix between the undistorted, partial FOV, high-resolution T2*-weighted volume and the T1-weighted whole-head anatomic images using an iterative, multiresolution robust estimation method (Nestares and Heeger 2000). Second, we estimated the alignment between the (distorted) reference EPI frame (see Preprocessing) and the undistorted, partial FOV, high-resolution T2*-weighted volume. Ultrahigh-field MRI is susceptible to relatively large field inhomogeneities that can cause significant geometric distortions in EPI data (Poole and Bowtell 2008). Even though image-based shimming was used here to minimize such inhomogeneities, residual distortions remain, prohibiting the use of a rigid-body registration between functional EPI data and anatomic images. To address this problem, nonlinear alignment estimation was performed using the FSL nonlinear registration tool (FNIRT; Andersson et al. 2007). Note that ROI definition depended on this nonlinear registration because it was restricted to the cortical surface. All other analyses were performed in the space of the original data (after motion correction), and only the resulting statistical maps were linearly and nonlinearly transformed for display on the cortical surface.

To project the statistical maps onto the 3-D reconstruction of the cortical surface, we applied the alignments described above: functional maps were first nonlinearly transformed into the space of the structural T2* volume using FSL applywarp (with the FNIRT warp) and then linearly transformed from the structural T2* to the whole-head volume space. Statistical values were sampled (using nearest-neighbor interpolation) at the coordinates of the inner (white/gray) and outer (gray/pial) surfaces and at nine intermediate, equally spaced cortical depths. Values at a given coordinate (and cortical depth) in the original whole-head anatomic space were then displayed on the inflated surface or flattened patch using the known direct correspondence between each point of the original inner/outer surface and each point of the inflated/flattened surface.
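The depth sampling can be sketched as below: 11 coordinate sets (inner surface, nine intermediate depths, outer surface) obtained by linear interpolation between corresponding white and pial vertices, followed by nearest-neighbor lookup. The sketch assumes the vertex coordinates have already been mapped into the voxel grid of the transformed statistical map, and returns NaN for points outside the partial field of view; interpolating linearly between paired vertices and the function names are assumptions.

```python
import numpy as np


def depth_coordinates(white, pial, n_depths=11):
    """Coordinates at n_depths equally spaced depths from white (0) to pial (1) surface.

    white, pial: (n_vertices, 3) vertex coordinates in the voxel space of the map.
    Returns an array of shape (n_depths, n_vertices, 3).
    """
    fractions = np.linspace(0.0, 1.0, n_depths)[:, None, None]
    return white[None] + fractions * (pial - white)[None]


def sample_nearest(volume, coords):
    """Nearest-neighbor lookup; points outside the (partial) field of view give NaN."""
    idx = np.rint(coords).astype(int)
    shape = np.array(volume.shape)
    inside = np.all((idx >= 0) & (idx < shape), axis=-1)
    values = np.full(idx.shape[:-1], np.nan)
    values[inside] = volume[tuple(idx[inside].T)]
    return values
```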

For coherence values from the phase-encoding analysis and parameter estimate maps from the ER GLM analysis, the values displayed correspond to the maximum intensity projection of the relevant surface points across the 11 cortical depths. For phase maps in the phase-encoding data, values were computed as the phase of the complex average across cortical depths (using amplitude and phase values at each depth).
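The two projections can be sketched as follows, with depth along axis 0. The complex average matters for phase because phase wraps around: two depths with phases of 0.1 and 2π − 0.1 rad should project to about 0 rad, whereas an arithmetic mean would give π, the opposite side of the cycle. NaN marks depths without data; the function names are hypothetical.

```python
import numpy as np


def project_coherence(co_by_depth):
    """Maximum intensity projection across cortical depths (axis 0), ignoring NaN."""
    return np.nanmax(co_by_depth, axis=0)


def project_phase(amp_by_depth, phase_by_depth):
    """Phase of the amplitude-weighted complex sum (equivalently, average) across depths."""
    w = amp_by_depth * np.exp(1j * phase_by_depth)
    z = np.where(np.isnan(w), 0, w).sum(axis=0)
    return np.mod(np.angle(z), 2 * np.pi)
```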

To convey the size and statistical significance of parameters generated using the canonical HRF GLM analysis, parameter estimates were color-coded, and the transparency α-value of each pixel was set to reflect the z-value in the corresponding inference test. When superimposing two fingertip activation maps, colors were combined according to the formula C = CA × (1 − CB) + CB, where CA and CB are RGB vectors between 0 and 1 premultiplied by their respective α-values. When superimposing more than two maps, the formula was applied iteratively, effectively resulting in a whitish hue for any voxels that were activated by more than two conditions.
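The blending rule can be sketched directly. Per channel, C = CA × (1 − CB) + CB equals CA + CB − CA·CB, so the result does not depend on the order in which maps are combined, and combining saturated colors that together span red, green and blue tends toward white. The linear mapping from z-value to α below is a hypothetical example; the text states only that α reflects the z-value.

```python
import numpy as np


def alpha_from_z(z, z_threshold, z_max):
    """Hypothetical linear mapping of z-values to opacity, zero below threshold."""
    return np.clip((np.asarray(z, dtype=float) - z_threshold) / (z_max - z_threshold), 0.0, 1.0)


def blend(colors, alphas):
    """Iteratively combine premultiplied RGB colors with C = CA * (1 - CB) + CB."""
    premultiplied = [np.asarray(c, dtype=float) * a for c, a in zip(colors, alphas)]
    out = premultiplied[0]
    for cb in premultiplied[1:]:
        out = out * (1.0 - cb) + cb
    return out


red, green, blue = [1, 0, 0], [0, 1, 0], [0, 0, 1]
print(blend([red, green, blue], [1, 1, 1]))  # [1. 1. 1.], i.e., white
```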

RESULTS

Phase-Encoding Results

Analysis of data from the phase-encoding experiment reveals cortical areas showing high coherence values (high fingertip specificity) that are located on the right postcentral gyrus and posterior bank of the central sulcus, corresponding to the primary somatosensory cortex (S1) contralateral to the stimulated left hand, consistent with our previous study (Sanchez-Panchuelo et al. 2010). Figure 3A shows the coherence and phase values projected on the cortical surface for an example subject (subject 1). The phase map in the high-coherence region shows an orderly phase pattern ranging from low values (thumb) to high values (little finger) following the main inferior/superior direction of the postcentral gyrus (and lateral/medial direction given the orientation of the gyrus in the coronal plane). This pattern is indicative of an organized cortical representation of fingertips in S1 (thin black outline). Results are similar for the other five subjects (Fig. 4A) with some notable variations, both from the expected somatotopic pattern and between subjects. The five subjects in Fig. 4 show an additional region responding preferentially to the index finger directly inferior to the thumb area (black dotted arrow in Fig. 4A). One of these subjects (subject 5, Fig. 4A) shows an additional area responding to the thumb, inferior to the additional area responding to the index finger. In four of the subjects, there is a second, smaller area of high fingertip specificity on the precentral gyrus that also displays an orderly phase pattern in the inferior/superior direction (black dotted circles: subject 1, Fig. 3A and subjects 2, 3, and 6, Fig. 4A). We verified for each subject that this additional map is not contiguous with the main S1 somatotopic map in the volumetric data and therefore does not reflect a spread of activity from S1 through the central sulcus (due to segmentation errors or extracortical vascular BOLD contributions). 
In subjects 2 and 4–6, we observed ordered phase patterns just anterior to S1, in the anterior bank of the central sulcus. However, these patterns were often contiguous with the S1 or precentral gyrus maps, and we therefore could not ascertain that they represent independent somatotopic maps.

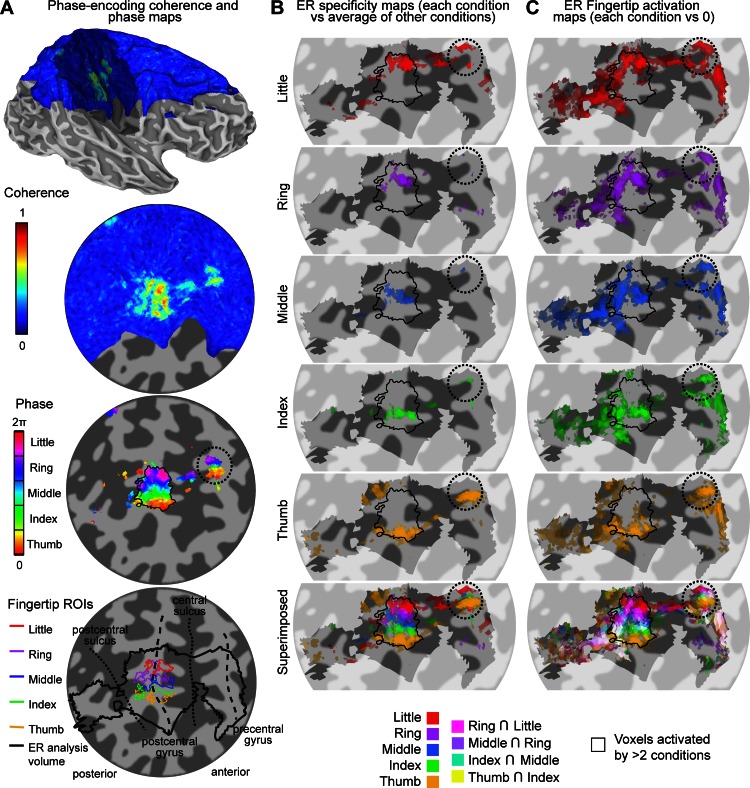

Fig. 3.

Procedures to obtain somatotopic maps from the phase-encoding and ER data in subject 1. A: phase-encoding design. Coherence maps, phase maps, and regions of interest (ROIs) are displayed on an inflated 3-dimensional (3-D) model of the right hemisphere cortical surface (top) and on a flattened cortical patch (bottom 3 maps). Dark gray, areas of negative curvature (sulci); light gray, areas of positive curvature (gyri); shaded area on the 3-D model, location of the cortical flat patch. Coherence values are the maximum intensity projection of coherence across coordinates corresponding to different cortical depths. Note that not all surface points of the patch have an associated value because of the partial field of view (FOV) of the functional images, as shown in blue. Phase maps for the corresponding data set are thresholded at a coherence value equivalent to a false discovery rate (FDR)-adjusted P value of 0.05. Phase values (in radians) represent the corresponding preferred stimulus location (fingertip) and are complex-averaged across cortical depths using phase and amplitude values at each depth. Colored outline in bottom map, fingertip-specific ROIs defined as all contiguous voxels within the cortical sheet with significant coherence values (P < 0.05, FDR-adjusted). Thick black outline, volume in which the ER analysis was performed to limit the number of multiple comparisons (constructed by expanding the 5 fingertip ROIs by 5 voxels in 3-D space). Thin black outline in phase map, union of the 5 fingertip-specific ROIs. B: fingertip specificity maps (each stimulation condition compared with the average of the 4 other stimulation conditions), displayed on a flattened cortical patch. The saturation of each color map represents the amplitude of the contrast estimate. Transparency represents the corresponding statistical significance (thresholded at P < 0.05, FDR-adjusted). Shaded area, voxels included in the analysis.
Bottom map: superimposed maps of all fingertip conditions using the color blending scheme described in materials and methods (Alignment and Projection of Statistical Maps onto Surfaces and Flattened Patches). C: same as B but for fingertip activation (each stimulation condition compared with 0).

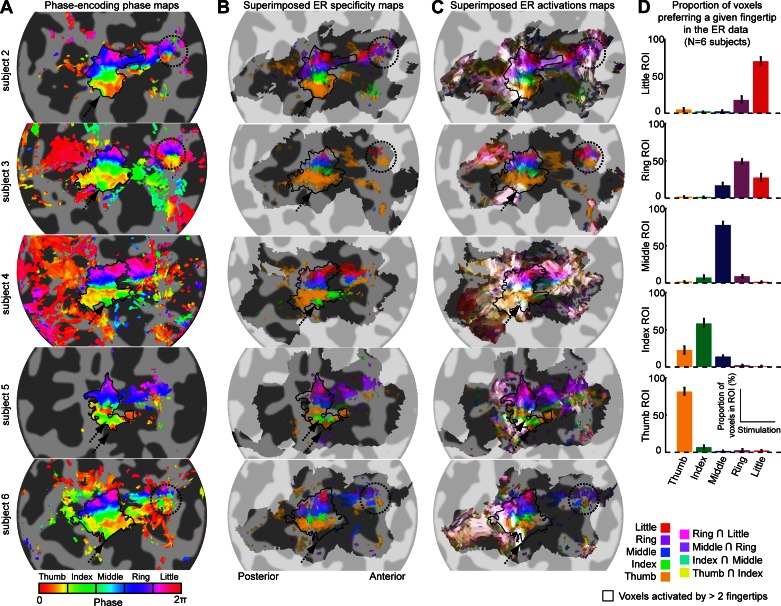

Fig. 4.

Comparison of phase-encoding and ER data in subjects 2–6. Each row shows data from 1 subject. A: somatotopic (phase) maps from phase-encoding experiment (see Fig. 3A for details). B: superimposition of all fingertip specificity maps (see Fig. 3B for details) shows tight correspondence with fingertip-specific maps from phase-encoding experiment (A). C: superimposition of all fingertip activation maps (see Fig. 3C for details) shows substantial overlap of activation in the posterior part of the somatotopic representation in S1. D: proportion of voxels in each phase-encoding ROI that respond maximally in each of the 5 fingertip stimulation conditions of the ER design. In each ROI, the majority of voxels responded maximally to the finger expected from the phase-encoding design.

For the main S1 phase map, we defined fingertip-specific ROIs corresponding to each fingertip stimulation by dividing the range of possible phase values into five equally spaced intervals and selecting contiguous cortical voxels within the same phase interval. This procedure yields five adjacent ROIs in all subjects, each corresponding to one fingertip (shown for subject 1, Fig. 3A, bottom). In five subjects, we also defined an additional index fingertip ROI inferior to the thumb ROI. We analyzed the ER data only in the vicinity of these ROIs, as depicted in the bottom map of Fig. 3A.
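The ROI definition above can be sketched in a few lines. This is a minimal illustration, not the authors' code: it assumes phases lie on [0, 2π) with fingertips D1 to D5 mapped to increasing phase, combines per-depth estimates by amplitude-weighted complex averaging (as described for Fig. 3A), and leaves out the contiguity step. The function names and the example voxel are hypothetical.

```python
import cmath
import math

N_FINGERTIPS = 5  # D1 (thumb) .. D5 (little finger); assumed to map to increasing phase

def complex_average_phase(phases, amplitudes):
    """Amplitude-weighted circular mean of per-depth phase estimates, in [0, 2*pi)."""
    z = sum(a * cmath.exp(1j * p) for p, a in zip(phases, amplitudes))
    return cmath.phase(z) % (2 * math.pi)

def phase_bin(phase, n_bins=N_FINGERTIPS):
    """0-based index of the equally spaced phase interval that contains `phase`."""
    width = 2 * math.pi / n_bins
    return int((phase % (2 * math.pi)) // width) % n_bins

# Hypothetical voxel sampled at three cortical depths
depth_phases = [1.4, 1.6, 1.9]
depth_amplitudes = [0.8, 1.0, 0.5]
voxel_phase = complex_average_phase(depth_phases, depth_amplitudes)
print(phase_bin(voxel_phase))  # 1: second interval, i.e. the index fingertip
```

Complex averaging keeps phases near the 0/2π boundary from being averaged to the wrong side of the cycle, which a plain arithmetic mean would do.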

ER Results

We constructed two types of maps from the ER data: the first emphasizing voxels that respond preferentially to a particular fingertip stimulation (referred to here as specificity maps) and the second displaying voxels that respond to any (and thus, possibly, multiple) fingertip stimulations (activation maps). Figure 3B illustrates the specificity maps for an example subject (subject 1). The first five maps display only voxels whose response in a given fingertip stimulation condition was significantly larger than the average of the responses elicited by the four other conditions (thresholded at P < 0.05, FDR-adjusted), and the bottom map is a superimposition of these five fingertip-specific maps. For each of the five fingertip stimulations, the main cluster extends from the posterior bank of the central sulcus to the postcentral gyrus. This superimposition map shows a somatotopic organization that is in good agreement with the phase-encoding results (black outline) and also displays little overlap between fingertip-specific clusters. A second orderly set of fingertip-specific clusters can also be seen on the precentral gyrus, at the same location as in the phase-encoding maps, although some clusters consist of only 1 or 2 voxels. Figure 3C illustrates the activation maps constructed from the ER design. Each of the first five maps displays only voxels that responded significantly more than baseline in a given fingertip stimulation condition (thresholded at P < 0.05, FDR-adjusted), and the bottom map is a superimposition of these fingertip maps (with whiter hues indicating overlapping activations). In contrast with the fingertip specificity maps of Fig. 3B, the fingertip activation maps of Fig. 3C show multiple activation clusters, some of them present in several or all five of the fingertip stimulation conditions. The superimposition map (bottom map of Fig. 3C) shows that the overlap between fingertip stimulation conditions (white voxels) is fairly limited in the posterior bank of the central sulcus but is widespread on and posterior to the postcentral gyrus.
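The two map types differ only in the contrast applied to the five fingertip parameter estimates of the GLM. The sketch below shows the contrast weights implied by the text (one condition against the mean of the other four, or one condition against baseline) applied to hypothetical β values. It computes contrast estimates only; the variance estimate and t statistic needed for the FDR-thresholded maps are left out, and the helper names are illustrative.

```python
N_CONDITIONS = 5  # fingertip stimulation conditions D1..D5

def specificity_contrast(k, n=N_CONDITIONS):
    """Condition k versus the average of the other n - 1 conditions (weights sum to 0)."""
    return [1.0 if j == k else -1.0 / (n - 1) for j in range(n)]

def activation_contrast(k, n=N_CONDITIONS):
    """Condition k versus the implicit baseline."""
    return [1.0 if j == k else 0.0 for j in range(n)]

def contrast_estimate(betas, weights):
    """Weighted sum of the parameter estimates."""
    return sum(b * w for b, w in zip(betas, weights))

# Hypothetical voxels: one responding equally to all fingertips, one mostly to D2
broad = [2.0, 2.0, 2.0, 2.0, 2.0]
d2_selective = [0.2, 2.0, 0.3, 0.1, 0.1]

for name, betas in [("broad", broad), ("D2-selective", d2_selective)]:
    spec = [contrast_estimate(betas, specificity_contrast(k)) for k in range(N_CONDITIONS)]
    act = [contrast_estimate(betas, activation_contrast(k)) for k in range(N_CONDITIONS)]
    print(name, [round(s, 3) for s in spec], act)
```

The broad voxel has a zero specificity estimate for every fingertip but a large activation estimate for all five, which is why it can only show up as a white (overlapping) voxel in the activation maps.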

Comparison of Phase-Encoding and ER Results

Figure 4 compares the phase-encoding phase maps with the superimposed ER specificity and ER activation maps for the other five subjects. As for the example subject 1, the phase-encoding maps (Fig. 4A) and the ER specificity maps (Fig. 4B) are very similar. The main somatotopic representation in S1 is clearly visible on both types of maps. For subjects showing a second, smaller somatotopic representation on the precentral gyrus (subjects 2, 3, and 6, dotted black circles), this feature is more clearly visible on the phase-encoding maps than on the ER specificity maps. In contrast, the additional index-specific and thumb-specific areas seen on the phase-encoding maps in subjects 2–6 can only be seen on the ER specificity maps of subjects 4 and 5. The correspondence of the phase-encoding and ER data in the main postcentral somatotopic representation was quantified by plotting, for each phase-encoding-defined fingertip ROI, the proportion of voxels responding maximally to each fingertip stimulation in the ER design (Fig. 4D, bar plots). Between 50 and 82% of the voxels in a given phase-encoding-defined ROI responded maximally to stimulation of the predicted fingertip. A substantial proportion of voxels responded maximally to the finger directly adjacent to the predicted finger, but very few voxels responded maximally to nonadjacent fingers (between 3 and 10% depending on the ROI). This pattern does not change when the analysis is restricted to voxels that reach the statistical threshold (P < 0.05, FDR-adjusted) in the ER design.
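The bar plots of Fig. 4D amount to a per-voxel winner-take-all assignment followed by a tally within each phase-encoding ROI. A minimal sketch, assuming each voxel is represented by its five ER β estimates in D1 to D5 order; ties go to the lower-numbered fingertip, and the data layout and names are hypothetical.

```python
from collections import Counter

N_FINGERTIPS = 5

def preferred_fingertip(betas):
    """0-based index of the largest ER estimate (ties resolved toward D1)."""
    return max(range(len(betas)), key=lambda k: betas[k])

def proportions_by_roi(roi_voxel_betas, n=N_FINGERTIPS):
    """Map each ROI label to the fraction of its voxels preferring each fingertip."""
    result = {}
    for roi, voxels in roi_voxel_betas.items():
        counts = Counter(preferred_fingertip(b) for b in voxels)
        result[roi] = [counts.get(k, 0) / len(voxels) for k in range(n)]
    return result

# Hypothetical D1 ROI with four voxels
example = {"D1": [[3.0, 1.0, 0.0, 0.0, 0.0],
                  [1.0, 2.0, 0.0, 0.0, 0.0],
                  [2.0, 1.0, 0.5, 0.0, 0.0],
                  [0.0, 0.2, 1.0, 0.0, 0.0]]}
print(proportions_by_roi(example))  # {'D1': [0.5, 0.25, 0.25, 0.0, 0.0]}
```

Restricting the tally to voxels that pass the ER threshold, as the text also reports, only requires filtering each voxel list before calling the function.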

Activation maps showing overlapping responses to different fingertip stimulations (Fig. 4C) give a different picture. In all subjects except subject 3, the main somatotopically organized activation pattern can only be seen in the anterior part of S1 (mainly in the posterior bank of the central sulcus, although for some subjects, it extends onto the postcentral gyrus), whereas in its posterior part (on the postcentral gyrus), voxels generally respond to more than two fingertip stimulations (white voxels). In four subjects, overlapping activation (white voxels) clearly extends posteriorly to the main somatotopic representation seen in the phase-encoding data (subject 1, Fig. 3C, and subjects 2, 4, and 6, Fig. 4C) and sometimes also anteriorly (subjects 2 and 4). The smaller precentral somatotopic representation can also be seen on ER overlapping activation maps (in subjects 1, 3, and 6 but not subject 2), although not as clearly as on the phase-encoding maps.
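The white voxels of the activation maps are voxels in which more than one fingertip condition survives the threshold against baseline. The small sketch below, which assumes a per-voxel list of five booleans (D1 to D5) taken from the thresholded activation maps, summarizes how many conditions activate a voxel and whether they form a run of neighboring fingertips, the distinction drawn in the Discussion between adjacent-fingertip overlap and responses to all five fingertips. The function name is hypothetical.

```python
def overlap_profile(significant):
    """Return (number of activating fingertips, whether they are adjacent in D1..D5 order).

    `significant` holds five booleans, True where the activation contrast
    (condition vs. baseline) passed the threshold for this voxel.
    """
    idx = [k for k, s in enumerate(significant) if s]
    count = len(idx)
    # adjacent: the activating fingertips form one unbroken run, e.g. D2-D3-D4
    contiguous = count > 0 and idx[-1] - idx[0] + 1 == count
    return count, contiguous

print(overlap_profile([False, True, True, False, False]))  # (2, True): D2 and D3
print(overlap_profile([True, False, True, False, False]))  # (2, False): D1 and D3
print(overlap_profile([True] * 5))                         # (5, True): all fingertips
```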

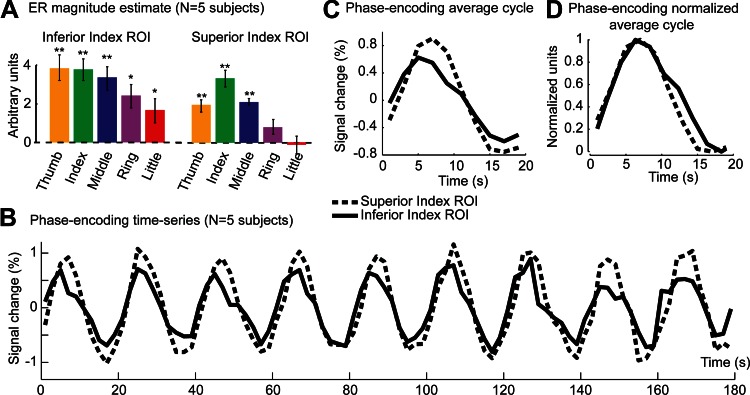

Finally, for all five subjects in Fig. 4C, the location corresponding to the additional inferior index area is activated not only by stimulation of the index fingertip but also by stimulation of other fingertips (dotted black arrow). We plotted the magnitude estimates averaged across voxels of the additional index fingertip ROI for each fingertip stimulation condition and compared them with the magnitude estimates averaged across voxels of the superior index-specific ROI (Fig. 5A). The inferior index area was significantly activated by all fingertip stimulation conditions, although more strongly by stimulation of the first three fingers than of the ring or little finger. In contrast, the superior index ROI was activated maximally by stimulation of the index fingertip and, to a lesser extent, by stimulation of the fingers adjacent to the index finger. To see how these differences manifest in the phase-encoding design, we analyzed the BOLD response in that design averaged across voxels of both ROIs. Figure 5B plots the time series for each ROI (averaged across forward-order and time-reversed, reverse-order runs). Even though the inferior index ROI responds more strongly than the superior index ROI in the ER design [β-values averaged across fingertip stimulation conditions: 3.01 vs. 1.63, t(4) = 4.75, P < 10⁻⁴], the amplitude of the signal fluctuation in the phase-encoding design is smaller for the inferior ROI [amplitude of the best-fitting sine wave: 0.60 vs. 0.87, t(5) = 2.52, P < 0.07]. As a consequence, the inferior index ROI shows lower coherence values than the superior index ROI [0.85 vs. 0.93, t(5) = 2.58, P < 0.07]. The time series (Fig. 5B) also suggests that the response peaks earlier in the inferior than in the superior index ROI, which is more clearly seen in a single cycle of response averaged across cycles of stimulation (Fig. 5C). This effect does not, however, reach significance at the group level [phase of the best-fitting sine wave: 0.26 vs. 0.08, t(5) = 2.058, P = 0.109]. Last, we estimated the width of the response in each ROI by normalizing the amplitude of a single cycle between 0 and 1 (Fig. 5D) and measuring the width at an amplitude of 0.5. The response was significantly wider in the inferior index ROI than in the superior index ROI [10.2 s vs. 8.9 s, t(5) = 5.06, P < 0.01].
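The amplitude, phase, and coherence of the best-fitting sine wave, and the width at half amplitude, can be sketched as below. This is an illustration under stated assumptions, not the authors' pipeline: the time series is assumed to span a whole number of stimulation cycles, coherence is taken as the commonly used ratio of the amplitude at the stimulation frequency to the square root of the summed squared amplitudes at all nonzero frequencies, and no detrending is applied. The function names are hypothetical.

```python
import cmath
import math

def dft_component(x, k):
    """k-th discrete Fourier coefficient of the sequence x."""
    n = len(x)
    return sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(x))

def sine_fit(ts, n_cycles):
    """Amplitude, phase lag and coherence of ts at the stimulation frequency.

    Assumes ts spans exactly n_cycles stimulation cycles (n_cycles < len(ts) / 2);
    the fitted model is mean + amplitude * cos(2*pi*n_cycles*t/len(ts) - phase).
    """
    n = len(ts)
    mean = sum(ts) / n
    x = [v - mean for v in ts]
    f = dft_component(x, n_cycles)
    amplitude = 2 * abs(f) / n
    phase = (-cmath.phase(f)) % (2 * math.pi)
    # coherence: stimulus-frequency amplitude relative to all nonzero frequencies
    total = math.sqrt(sum(abs(dft_component(x, k)) ** 2 for k in range(1, n // 2 + 1)))
    return amplitude, phase, abs(f) / total

def width_at_half_max(cycle, tr):
    """Width (s) of a single-peaked cycle response at 0.5 after 0-1 normalization.

    Assumes the response falls below half maximum on both sides of the peak
    within the cycle; crossings are found by linear interpolation between samples.
    """
    lo, hi = min(cycle), max(cycle)
    y = [(v - lo) / (hi - lo) for v in cycle]
    peak = y.index(1.0)
    i = peak
    while y[i - 1] >= 0.5:   # walk left to the last sample at or above 0.5
        i -= 1
    left = (i - 1) + (0.5 - y[i - 1]) / (y[i] - y[i - 1])
    j = peak
    while y[j + 1] >= 0.5:   # walk right to the last sample at or above 0.5
        j += 1
    right = j + (y[j] - 0.5) / (y[j] - y[j + 1])
    return (right - left) * tr

ts = [2 + 1.5 * math.cos(2 * math.pi * 3 * t / 30 - 0.7) for t in range(30)]
print(sine_fit(ts, 3))  # approximately (1.5, 0.7, 1.0)
```

Noise or response energy at other frequencies lowers the coherence without changing the amplitude estimate, which is how an ROI can respond strongly yet show lower coherence.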

Fig. 5.

ER and phase-encoding responses in inferior and superior “index-specific” ROIs. A: ER parameter estimates for each of the 5 fingertip stimulation regressors (magnitude component) averaged across voxels in the inferior and superior index-specific ROIs and across 5 subjects. Error bars, standard error across subjects. *P < 0.05, **P < 0.01, statistical significance compared with 0, corrected for multiple comparisons using Hommel's modified Bonferroni procedure. B: phase-encoding time series for the inferior and superior index-specific ROIs averaged across 5 subjects, across voxels, and across forward-order and time-reversed, reverse-order runs. C: response to a single cycle of the phase-encoding design averaged across cycles. D: as for C after normalization of each subject's time series between 0 and 1 and shifting the data of the inferior index ROI by 2/3 of a TR (1.66 s) to align the rising edges of the responses in the two ROIs.

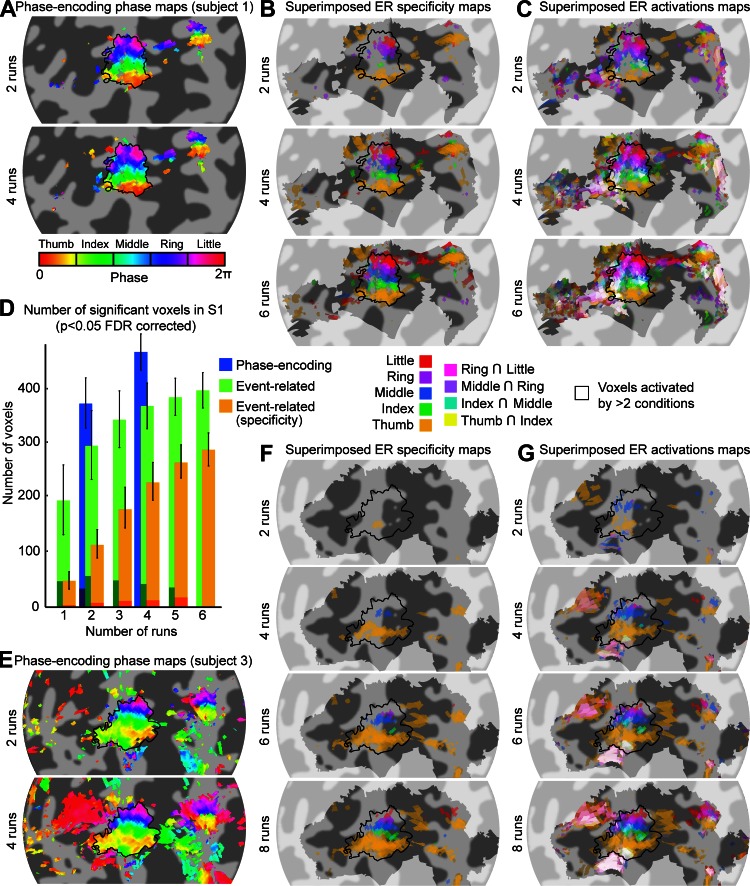

We also compared the efficiency of the phase-encoding and ER designs for obtaining somatotopic maps. Figure 6 illustrates the maps obtained for 2 subjects (subject 1, A–C, and subject 3, E–G) as an increasing number of runs is included in the analysis. These maps show that 2 runs (6 min) of the phase-encoding design were sufficient to obtain good maps of both the postcentral and the precentral somatotopic cortical representations (including data from an additional 2 runs did not qualitatively improve the maps), whereas 6–8 runs (24–32 min) of the ER design were necessary to visualize the corresponding specificity maps. To compare quantitatively the ability of both designs to map the somatotopic representation, we computed the number of voxels within S1 that were significant in the phase-encoding data and in the ER data as a function of the number of runs included in the analysis. Figure 6D shows that 6 runs of the ER specificity data (orange) did not identify as many voxels as 2 runs of the phase-encoding design (blue), whereas for the ER activation maps, 4 runs were sufficient to reach the same number of significant voxels. Only a small number of voxels changed their preferred fingertip as a function of the number of runs included (shaded area in Fig. 6D).
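Counting significant voxels depends on the multiple-comparison correction. A minimal sketch of the Benjamini-Hochberg step-up procedure (Benjamini and Hochberg 1995) follows; it is an illustration only, and the exact FDR variant used for the maps may differ (the reference list also includes the adaptive procedure of Benjamini et al. 2006). Function names are hypothetical.

```python
def benjamini_hochberg(pvals, q=0.05):
    """Return booleans marking tests significant at FDR level q (BH step-up)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    # largest rank r with p_(r) <= r * q / m; every test ranked at or below r passes
    cutoff = 0
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= rank * q / m:
            cutoff = rank
    significant = [False] * m
    for rank, i in enumerate(order, start=1):
        significant[i] = rank <= cutoff
    return significant

def count_significant(pvals, q=0.05):
    """Number of voxels surviving the FDR threshold."""
    return sum(benjamini_hochberg(pvals, q))

print(benjamini_hochberg([0.001, 0.015, 0.025, 0.2, 0.5]))  # [True, True, True, False, False]
print(count_significant([0.04, 0.041, 0.042, 0.043, 0.044]))  # 5
```

The second call shows the step-up behavior: each P value exceeds the strictest per-rank threshold, yet all five pass because the largest one meets its rank-5 threshold. Adding runs lowers voxel P values, which is why the count of significant voxels grows with the amount of data.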

Fig. 6.

Effect of the number of scans included in the analysis. A: phase maps formed from the phase-encoding data acquired in 2 or 4 runs, thresholded at a coherence value of 0.25. Phase values (in radians) represent the corresponding preferred stimulus location (fingertip). Phase values are complex-averaged across cortical depths using the phase and amplitude values at each depth. Runs of the phase-encoding design were 3 min long for this subject. The maps constructed using 2 or 4 scans are very similar, suggesting that 2 runs (6 min) are sufficient to yield robust maps. B: superimposed fingertip specificity maps (see Fig. 3B for details) for 2, 4, and 6 runs. Runs of the ER design were 4 min long. The maps improve with each additional 2 runs, up to 6 runs (24 min). C: same as B for superimposed activation maps (each stimulation condition compared with 0). D: number of voxels in the S1 ROI (black outline in other panels) responding significantly (P < 0.05, FDR-adjusted) to at least 1 fingertip as a function of the number of runs for each analysis [Phase-encoding, Event-related, and Event-related (specificity)] averaged across 6 subjects. Voxel count was restricted to the cortical surface. The darker shaded area at the base of each bar is the number of voxels for which the preferred fingertip differed from the preferred fingertip when all runs were included. Error bars indicate the standard error across 6 subjects. E: same as A for subject 3. Runs of the phase-encoding design were 3 min long for this subject. As for subject 1, somatotopic maps were very similar using 2 or 4 scans. F: same as B for subject 3. Subject 3 underwent 8 runs of the ER design. For this subject, 8 runs (32 min) were necessary to obtain a fingertip specificity map including all 5 fingertips. G: same as C for subject 3.

DISCUSSION

Here, we have compared the use of ER and phase-encoding designs in mapping the somatotopic representations of fingertips in S1 in individual subjects at 7 T. Both designs revealed essentially the same somatotopic maps when considering only differential responses. This was the case despite the fact that the phase-encoding strategy is based on the assumption that local discontinuities in body representation are absent. Whereas the phase-encoding design was predicted and observed to be more efficient for this purpose, the ER design allowed the additional assessment of overlapping activations in response to different fingertip stimulations (J. Besle, R. M. Sanchez-Panchuelo, R. Bowtell, S. T. Francis, and D. Schluppeck, unpublished observations). This detailed analysis led us to describe some previously unreported features of the somatotopic representations of the hand in the central region: there exists an area inferior to the main postcentral somatotopic representation responding to stimulation of each of the fingertips (but mainly dominated by thumb, index, and middle finger) and an additional complete somatotopic representation of the fingertips on the precentral gyrus.

Phase-Encoding and ER Designs Yield Comparable Somatotopic Maps

To compare phase-encoding and ER results, we have used a differential contrast in the GLM analysis of the ER data and only displayed voxels that show significantly larger responses for stimulation of a specific fingertip (specificity maps). This effectively eliminates voxels that respond to stimulation of several fingertips, and thus eliminates overlapping responses. When superimposing the specificity maps of different fingertips, we obtain somatotopic maps that are essentially identical to those obtained using the phase-encoding design, although with less power. Both mapping techniques showed cortical bands extending in the anterior-posterior (AP) direction responding preferentially to the same fingertip, which also show somatotopic organization as described in previous fMRI studies (Sanchez-Panchuelo et al. 2010) and are in accordance with primate electrophysiological studies (Kaas et al. 1979). Unexpectedly, the phase-encoding data and, to a lesser extent, the ER data also showed a reversal of the somatotopy around the inferior thumb representation into an additional index finger cortical representation.

It is important to show that phase-encoding designs yield the same maps as more conventional designs based on independent sequences of stimulation (as in the ER design), as an important assumption in the phase-encoding analysis is that there is local continuity in the topological correspondence between cortical maps and peripheral sensory receptive fields. Although this continuity is clearly present in retinotopic and tonotopic cortical maps, discontinuities exist in somatotopic maps, for example at the interface of the face and hand representations (e.g., Nelson et al. 1980). These discontinuities could result in spurious phase values in the phase-encoding analysis if the same voxels contain neurons responding to noncontiguous body parts. At the scale of representation of the fingertips, however, electrophysiological primate studies have shown that the order of fingertip representation is respected (e.g., Merzenich et al. 1987), and here we have empirically confirmed that the assumption of local continuity is valid for measuring fingertip cortical representation with fMRI in humans even at a spatial resolution of 1.5 mm.

Using both designs, we identified some variability of somatotopy across subjects in this study. Most notably, in subjects 4 and 5, the majority of index fingertip-specific voxels were found inferior/lateral rather than superior/medial to the thumb area, even though some index fingertip-specific voxels were found between the thumb and middle finger areas. In subject 5, additional thumb-specific voxels were found at the medial border of the somatotopic map, resulting in two alternate stripes corresponding to the representations of the thumb and index finger. Idiosyncratic variations in somatotopic maps have been observed previously in monkeys using electrophysiology (Merzenich et al. 1987). Imaging at higher spatial resolution and in more subjects will be necessary to form a clear picture of the exact anatomic variations in humans across a population.

In four out of six subjects, we also observed a second, more anterior, somatotopically organized cortical region on the precentral gyrus in both the phase-encoding and ER designs. This second somatotopic map was smaller than the one found in S1. Precentral activation during tactile stimulation has been reported previously (e.g., Schweizer et al. 2008) but was found in the anterior bank of the central sulcus, facing the S1 area, and did not reflect somatotopic organization in this region. The location of the map at the crown of the precentral gyrus is compatible both with the primary motor cortex (area 4) and the premotor cortex (area 6), the location of the border between these areas being highly variable between individuals (Geyer 2004). The primary motor cortex in monkeys is known to respond to somatosensory input (e.g., Strick and Preston 1982); however, we do not know of any report of fingertip somatotopy of tactile responses in the motor or the premotor cortex. At this point, we cannot exclude the explanation that small movements of the fingers in reaction to the vibrotactile stimulation might be responsible for this anterior map (e.g., Olman et al. 2012).

As predicted, the phase-encoding design was found to be more efficient than the ER design (Engel 2012), allowing S1 to be mapped in 6 min, compared with at least 24 min for the ER design. In particular, the phase-encoding design allowed the identification of a small somatotopic map in the precentral gyrus that would have been difficult to identify based on the ER data.

Had we used a block design, this difference in power might not have been so great, since block designs are more efficient than ER designs for detecting activations (Friston et al. 1999). It is, however, an open question how much overlap can be uncovered using block designs, as previous studies based on block designs have reported limited overlap in S1 (Schweizer et al. 2008; Stringer et al. 2011). Part of this failure could be attributed to a lack of power in these studies, as we discuss elsewhere (J. Besle, R. M. Sanchez-Panchuelo, R. Bowtell, S. T. Francis, and D. Schluppeck, unpublished observations).

ER Designs Provide Additional Information About Cortical Somatotopic Representations

Phase-encoding designs are intrinsically differential in that they usually do not include a baseline condition (Dumoulin and Wandell 2008). As a result, overlapping responses to different fingertips are difficult to estimate (see, however, Smith et al. 2001). In contrast, the ER design allowed us to detect voxels that responded to stimulation of two or more different fingertips by comparing magnitude estimates with baseline instead of with the average of the other stimulation conditions. The ER activation maps obtained using this contrast for each fingertip stimulation condition are best described as a patchwork of clusters, some showing fingertip specificity and others showing significant activation in response to stimulation of any fingertip. We did not see any clear indication of distinct clusters of activation corresponding to areas 3b, 1, and 2, in contrast with what has been reported by others (Nelson and Chen 2008; Stringer et al. 2011).

However, the posterior part of the somatotopic map in S1 (postcentral gyrus) clearly showed more overlap (white voxels in Figs. 3C and 4C) than its anterior part (posterior bank of the central sulcus). In a companion paper (J. Besle, R. M. Sanchez-Panchuelo, R. Bowtell, S. T. Francis, and D. Schluppeck, unpublished observations), we quantitatively show that there is some overlap between the responses to adjacent fingertips in the anterior part of S1, with many voxels responding to two adjacent fingertips, but that in the posterior part of S1, overlap increases and many voxels respond to all five fingertips. The limited overlap in the central sulcus could be explained by the spatial spread of the BOLD response (Parkes et al. 2005; Shmuel et al. 2007) even if the underlying cortical representations of adjacent fingertips do not overlap, as would be predicted from electrophysiological measurements in area 3b. In contrast, the increase in overlap in the posterior part of S1 must reflect a genuine increase in the size/overlap of cell receptive fields, compatible with the larger receptive fields in areas 1 and 2 compared with area 3b (Iwamura et al. 1983a,b, 1985; Pons et al. 1987).

We also found that the overlap extends posterior to the somatotopic representation defined from the phase-encoding maps and the ER specificity maps. Since phase-encoding designs fail to detect voxels whose population receptive fields become so large as to encompass all fingertips (Dumoulin and Wandell 2008), this suggests that cells in these posterior areas have receptive fields encompassing all five fingertips, yielding no significant differences between the responses to stimulation of different fingertips.

Activation estimates from the ER data also helped interpret a previously unreported feature of S1 fingertip somatotopic maps: in five subjects, we found on both the phase maps and the ER specificity maps that the somatotopic representation seems to reverse inferiorly/laterally into an additional index finger representation. Examination of ER magnitude estimates revealed that this area is in fact activated by stimulation of all five fingertips, although more strongly for the first three fingertips than for the ring or little finger. This could correspond to the activation cluster reported in a previous study (Nelson and Chen 2008) and labeled as area 1i (for inferior) by these authors. It is an open question whether this cluster corresponds to actual cortical subregions tied to cytoarchitecture or rather arises from the signal from veins (draining deoxygenated blood preferentially from areas responding to the thumb, index, and middle finger). This illustrates one of the shortcomings of the phase-encoding designs (and of differential designs in general). Although they are useful for reducing the common, nonspecific responses from large draining veins (Grinvald et al. 2000; Uğurbil et al. 2003), they can miss potentially important areas of activation that respond to all stimulation conditions.

To benefit from the advantages of both overlap estimation and efficiency, alternative mapping techniques relying on the assumption of conformal mapping but sensitive to overlap, such as population receptive field mapping (Dumoulin and Wandell 2008), could be used in further mapping studies of S1.

Differences in Phase-Encoding and ER Design Implementation and Their Potential Impact on Our Results

There were slight differences in the way we implemented the details of our phase-encoding and ER designs that could result in differences in statistical power and potentially have consequences for the validity of our comparison. However, we believe that these differences are unlikely to have significantly influenced our conclusions for the reasons outlined below.

Since we expected the phase-encoding design to be more powerful than the ER design, we did not equate the number of runs of each experimental design. Consequently, all subjects performed fewer runs of the phase-encoding design than the ER design. Since two runs of the phase-encoding design consistently resulted in better somatotopic maps than six or eight runs of the ER design, adding more phase-encoding runs would not have changed this result.

Similarly, we used localization information from the phase-encoding analysis to decrease the number of statistical tests in our ER analysis and thus to increase its statistical power. Had we not used this information, statistical results from the ER data would have been less significant. However, this would not have changed our conclusion that the ER design is less powerful than the phase-encoding design.

Finally, the task performed by the subjects was different in half of the ER runs (whereas in the other half, it was identical to the visual task performed in the phase-encoding runs), resulting in different attentional states between the two designs that could potentially affect the amplitude of sensory responses and therefore the obtained maps. However, we found that the task manipulation (visual vs. tactile) did not significantly affect the way most voxels responded to the stimulation of different fingertips in the ER data (see ER Design in materials and methods). It is therefore unlikely that this difference could have affected our comparison of the ER and phase-encoding designs.

Conclusion

Phase-encoding and ER designs are complementary mapping techniques that can both be used to map the fingertip representation in S1 of individual subjects at 7 T. We have demonstrated a close correspondence between the maps generated using phase-encoding designs and the specificity maps from the ER design. We also highlight the additional benefit of ER designs in assessing the spatial overlap of responses. We suggest that phase encoding may be used as a rapid localizer rather than as an accurate tool for studying the functional organization of the somatosensory cortex per se. Ultrahigh-field imaging allows the use of less sensitive ER designs, opening up the possibility of assessing more subtle transient changes in the responses of somatosensory cortex in individual subjects (potentially even for diagnostic purposes), such as those related to cognitive demand, attention, or neural plasticity.

GRANTS

This study was supported by the Biotechnology and Biological Sciences Research Council (Grant BB/G008906/1 to D. Schluppeck and S. Francis). D. Schluppeck is a Research Councils UK Academic Fellow.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

J.B., R.-M.S.-P., S.F., and D.S. designed the experiments; R.-M.S.-P., R.B., and S.F. designed the scanning sequences; J.B., R.-M.S.-P., and D.S. collected the data; J.B., R.-M.S.-P., S.F., and D.S. analyzed and interpreted the data; J.B. drafted the manuscript; J.B., R.-M.S.-P., R.B., S.F., and D.S. edited and revised the manuscript.

APPENDIX

Here, we show that 1) averaging the phase of forward-order and time-reversed, reverse-order runs of a phase-encoding experiment cancels the HRF delay in the estimation of the phase, and 2) shifting the reverse-order runs by 1 TR corrects for slice acquisition time and discrete time sampling effects, resulting in a direct relationship between the phase value and the location of stimulation such that the phase of a hypothetical voxel responding to only one fingertip would fall exactly in the middle of the phase bin corresponding to that fingertip. This demonstration holds for any number of stimulated locations and any duration of stimulation at a given location. However, we limit our demonstration to cases where locations are stimulated in succession without overlap and stimulation duration is an integer number of TR periods. We express all durations in TRs. Figure 2 illustrates the main steps of the demonstration.

Let L be the number of different stimulation locations (L = 5 in our case) and d the duration of stimulation at any given location (d = 2 TRs in our case).

For any particular location, l, the phase of the best-fitting cosine for a hypothetical voxel responding only to that location (relative to the start of a cycle of stimulation) is given by:

where h is the phase delay introduced by the hemodynamic response (see Fig. 2A). However, it is important to take into account the fact that the phase actually measured in our analysis is not the phase relative to the start of the stimulation cycle but the phase relative to the first acquired volume after the start of the stimulation cycle so that the phase actually depends on both the hemodynamic delay and the slice acquisition time, a (expressed as a fraction of the TR):

Similarly, the phase relative to the first acquired volume for a reverse-order run (Fig. 2B) is given by:

Time-reversing this reverse-order run has three effects (Fig. 2C): 1) it shifts the start of the stimulation [d × (L − l) TRs] so that it becomes its complement within the stimulation cycle (d × l TRs); 2) it replaces the positive hemodynamic delay by a negative delay; and 3) it replaces the acquisition time (a TRs) by its complement within a TR period [(1 − a) TRs]. The phase for a time-reversed, reverse-order run relative to the last acquired volume in the cycle is thus given by:

If we now average the phase of the forward-order and time-reversed, reverse-order runs (assuming that the shape of the hemodynamic response is identical in both types of runs), this gives:

We can see that the resulting phase depends on neither the delay introduced by the hemodynamic response, h, nor the slice acquisition time, a (Fig. 2D). However, whereas h is simply eliminated in the average, differences in slice acquisition time phase delays (a vs. 1 − a) between the forward-order and time-reversed, reverse-order runs result in a phase that is not exactly the center of the phase bin corresponding to location l and differs from it by an amount inversely proportional to the duration of stimulation and the number of stimulated locations. (Note: this is true only because we have been careful to express the phase relative to the acquisition samples and not to the start of the stimulation cycle.)
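Because the equations of this appendix did not survive extraction, the steps up to this point are restated below as a hedged reconstruction, not the authors' typeset formulas. It assumes that locations are numbered l = 1, …, L; that location l is stimulated during [d(l − 1), dl) TRs of the forward-order cycle and during [d(L − l), d(L − l + 1)) TRs of the reverse-order cycle; that φ is the phase lag of the best-fitting cos(2πt/(Ld) − φ); that h is the hemodynamic phase delay; and that sample k is acquired at k + a TRs. All phases are taken modulo 2π.

```latex
\begin{aligned}
\varphi_l &= \frac{2\pi\, d\,(l-\tfrac{1}{2})}{Ld} + h
           = \frac{2\pi\,(l-\tfrac{1}{2})}{L} + h
  && \text{forward, relative to cycle start}\\
\varphi_F &= \frac{2\pi\,\bigl[d\,(l-\tfrac{1}{2}) - a\bigr]}{Ld} + h
  && \text{forward, relative to first sample}\\
\varphi_R &= \frac{2\pi\,\bigl[d\,(L-l+\tfrac{1}{2}) - a\bigr]}{Ld} + h
  && \text{reverse order}\\
\tilde{\varphi}_R &= \frac{2\pi\,\bigl[d\,(l-\tfrac{1}{2}) - (1-a)\bigr]}{Ld} - h
  && \text{reverse order, time-reversed}\\
\bar{\varphi} &= \tfrac{1}{2}\bigl(\varphi_F + \tilde{\varphi}_R\bigr)
  = \frac{2\pi\,(l-\tfrac{1}{2})}{L} - \frac{\pi}{Ld}
\end{aligned}
```

Under these conventions the residual offset, −π/(Ld), is independent of h and a, is inversely proportional to d and L, and equals half the angular width of one TR sample.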

One simple way to obtain the desired phase value is to shift the time-reversed, reverse-order runs by 1 TR. The corresponding phase thus becomes:

and the averaged phase is now:

which is the center of the phase bin corresponding to location l.
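The argument can be checked numerically. The sketch below is an illustration under assumed conventions, not the authors' analysis code: the voxel's response is modeled by its fundamental only (a cosine peaking a fixed delay after the centre of its stimulation block), sample k is acquired at k + a TRs, and the 1-TR shift moves every sample of the time-reversed run one TR later. The values of N_CYCLES, a, and the delay are hypothetical; L = 5 and d = 2 follow the text.

```python
import cmath
import math

L, D = 5, 2            # stimulated locations and TRs per location, as in the appendix
N_CYCLES = 4           # hypothetical number of stimulation cycles per run
CYCLE = L * D          # cycle length (TRs)
N = N_CYCLES * CYCLE   # samples per run

def phase_lag(x):
    """Phase lag phi of the best-fitting cos(2*pi*k/CYCLE - phi), in [0, 2*pi)."""
    f = sum(v * cmath.exp(-2j * math.pi * N_CYCLES * k / N) for k, v in enumerate(x))
    return (-cmath.phase(f)) % (2 * math.pi)

def simulated_run(l, reverse, a, delay):
    """Fundamental of the response of a voxel driven only by location l (1-based).

    Sample k is acquired at k + a TRs; the response peaks `delay` TRs after the
    centre of the block during which location l is stimulated.
    """
    start = D * (L - l) if reverse else D * (l - 1)
    peak = start + D / 2 + delay
    return [math.cos(2 * math.pi * (k + a - peak) / CYCLE) for k in range(N)]

def averaged_phase(l, a, delay, shift_trs):
    fwd = phase_lag(simulated_run(l, False, a, delay))
    rev = simulated_run(l, True, a, delay)[::-1]   # time-reverse the reverse-order run
    if shift_trs:
        # circular shift so that each sample moves `shift_trs` TRs later
        rev = rev[-shift_trs:] + rev[:-shift_trs]
    # circular mean of the two phases (valid while they differ by less than pi)
    z = cmath.exp(1j * fwd) + cmath.exp(1j * phase_lag(rev))
    return cmath.phase(z) % (2 * math.pi)

def bin_centre(l):
    return 2 * math.pi * (l - 0.5) / L

def circ_diff(x, y):
    return abs(cmath.phase(cmath.exp(1j * (x - y))))

for l in range(1, L + 1):
    unshifted = averaged_phase(l, a=0.3, delay=1.5, shift_trs=0)
    shifted = averaged_phase(l, a=0.3, delay=1.5, shift_trs=1)
    print(l, round(circ_diff(unshifted, bin_centre(l)), 6), round(circ_diff(shifted, bin_centre(l)), 6))
```

For every location, the unshifted average should sit π/(L·d) away from the bin centre, and the 1-TR shift should remove that offset, whatever the values of a and of the delay (as long as the two averaged phases stay within π of each other).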

REFERENCES

- Andersson JL, Jenkinson M, Smith SM. Non-Linear Registration aka Spatial Normalisation. 2007

- Baseler HA, Gouws A, Haak KV, Racey C, Crossland MD, Tufail A, Rubin GS, Cornelissen FW, Morland AB. Large-scale remapping of visual cortex is absent in adult humans with macular degeneration. Nat Neurosci 14: 649–655, 2011

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Series B Stat Methodol 57: 289–300, 1995

- Benjamini Y, Krieger AM, Yekutieli D. Adaptive linear step-up procedures that control the false discovery rate. Biometrika 93: 491–507, 2006

- Burock MA, Dale AM. Estimation and detection of event-related fMRI signals with temporally correlated noise: a statistically efficient and unbiased approach. Hum Brain Mapp 11: 249–260, 2000

- Dale AM, Fischl B, Sereno MI. Cortical surface-based analysis. I. Segmentation and surface reconstruction. Neuroimage 9: 179–194, 1999

- Deuchert M, Ruben J, Schwiemann J, Meyer R, Thees S, Krause T, Blankenburg F, Villringer K, Kurth R, Curio G, Villringer A. Event-related fMRI of the somatosensory system using electrical finger stimulation. Neuroreport 13: 365–369, 2002

- Dumoulin SO, Wandell BA. Population receptive field estimates in human visual cortex. Neuroimage 39: 647–660, 2008

- Engel SA. The development and use of phase-encoded functional MRI designs. Neuroimage 62: 1195–1200, 2012

- Friston KJ, Zarahn E, Josephs O, Henson RN, Dale AM. Stochastic designs in event-related fMRI. Neuroimage 10: 607–619, 1999

- Gardner JL, Merriam EP, Movshon JA, Heeger DJ. Maps of visual space in human occipital cortex are retinotopic, not spatiotopic. J Neurosci 28: 3988–3999, 2008

- Geyer S. The microstructural border between the motor and the cognitive domain in the human cerebral cortex. Adv Anat Embryol Cell Biol 174: I–VIII, 1–89, 2004

- Grinvald A, Slovin H, Vanzetta I. Non-invasive visualization of cortical columns by fMRI. Nat Neurosci 3: 105–107, 2000

- Hinkley LB, Webster RL, Byl NN, Nagarajan SS. Neuroimaging characteristics of patients with focal hand dystonia. J Hand Ther 22: 125–134; quiz 135, 2009

- Huang RS, Sereno MI. Dodecapus: an MR-compatible system for somatosensory stimulation. Neuroimage 34: 1060–1073, 2007

- Iwamura Y, Tanaka M, Sakamoto M, Hikosaka O. Diversity in receptive field properties of vertical neuronal arrays in the crown of the postcentral gyrus of the conscious monkey. Exp Brain Res 58: 400–411, 1985

- Iwamura Y, Tanaka M, Sakamoto M, Hikosaka O. Converging patterns of finger representation and complex response properties of neurons in area 1 of the first somatosensory cortex of the conscious monkey. Exp Brain Res 51: 327–337, 1983a

- Iwamura Y, Tanaka M, Sakamoto M, Hikosaka O. Functional subdivisions representing different finger regions in area 3 of the first somatosensory cortex of the conscious monkey. Exp Brain Res 51: 315–326, 1983b

- Kaas JH, Nelson RJ, Sur M, Lin CS, Merzenich MM. Multiple representations of the body within the primary somatosensory cortex of primates. Science 204: 521–523, 1979

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI. Circular analysis in systems neuroscience: the dangers of double dipping. Nat Neurosci 12: 535–540, 2009

- Merzenich MM, Nelson RJ, Kaas JH, Stryker MP, Jenkins WM, Zook JM, Cynader MS, Schoppmann A. Variability in hand surface representations in areas 3b and 1 in adult owl and squirrel monkeys. J Comp Neurol 258: 281–296, 1987

- Nelson AJ, Chen R. Digit somatotopy within cortical areas of the postcentral gyrus in humans. Cereb Cortex 18: 2341–2351, 2008

- Nelson RJ, Sur M, Felleman DJ, Kaas JH. Representations of the body surface in postcentral parietal cortex of Macaca fascicularis. J Comp Neurol 192: 611–643, 1980

- Nestares O, Heeger DJ. Robust multiresolution alignment of MRI brain volumes. Magn Reson Med 43: 705–715, 2000

- Olman CA, Pickett KA, Schallmo MP, Kimberley TJ. Selective BOLD responses to individual finger movement measured with fMRI at 3T. Hum Brain Mapp 33: 1594–1606, 2012

- Overduin SA, Servos P. Distributed digit somatotopy in primary somatosensory cortex. Neuroimage 23: 462–472, 2004

- Overduin SA, Servos P. Symmetric sensorimotor somatotopy. PLoS One 3: e1505, 2008

- Parkes LM, Schwarzbach JV, Bouts AA, Deckers RH, Pullens P, Kerskens CM, Norris DG. Quantifying the spatial resolution of the gradient echo and spin echo BOLD response at 3 Tesla. Magn Reson Med 54: 1465–1472, 2005

- Pons TP, Wall JT, Garraghty PE, Cusick CG, Kaas JH. Consistent features of the representation of the hand in area 3b of macaque monkeys. Somatosens Res 4: 309–331, 1987

- Poole M, Bowtell R. Volume parcellation for improved dynamic shimming. MAGMA 21: 31–40, 2008

- Sanchez-Panchuelo RM, Besle J, Beckett A, Bowtell R, Schluppeck D, Francis S. Within-digit functional parcellation of Brodmann areas of the human primary somatosensory cortex using functional magnetic resonance imaging at 7 tesla. J Neurosci 32: 15815–15822, 2012

- Sanchez-Panchuelo RM, Francis S, Bowtell R, Schluppeck D. Mapping human somatosensory cortex in individual subjects with 7T functional MRI. J Neurophysiol 103: 2544–2556, 2010

- Schweisfurth MA, Schweizer R, Frahm J. Functional MRI indicates consistent intra-digit topographic maps in the little but not the index finger within the human primary somatosensory cortex. Neuroimage 56: 2138–2143, 2011

- Schweizer R, Voit D, Frahm J. Finger representations in human primary somatosensory cortex as revealed by high-resolution functional MRI of tactile stimulation. Neuroimage 42: 28–35, 2008

- Sereno MI, Huang RS. A human parietal face area contains aligned head-centered visual and tactile maps. Nat Neurosci 9: 1337–1343, 2006

- Servos P, Zacks J, Rumelhart DE, Glover GH. Somatotopy of the human arm using fMRI. Neuroreport 9: 605–609, 1998

- Shmuel A, Yacoub E, Chaimow D, Logothetis NK, Ugurbil K. Spatio-temporal point-spread function of fMRI signal in human gray matter at 7 Tesla. Neuroimage 35: 539–552, 2007

- Smith AT, Singh KD, Balsters JH. A comment on the severity of the effects of non-white noise in fMRI time-series. Neuroimage 36: 282–288, 2007

- Smith AT, Singh KD, Williams AL, Greenlee MW. Estimating receptive field size from fMRI data in human striate and extrastriate visual cortex. Cereb Cortex 11: 1182–1190, 2001

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TE, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM. Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage 23, Suppl 1: S208–S219, 2004

- Strick PL, Preston JB. Two representations of the hand in area 4 of a primate. II. Somatosensory input organization. J Neurophysiol 48: 150–159, 1982

- Stringer EA, Chen LM, Friedman RM, Gatenby C, Gore JC. Differentiation of somatosensory cortices by high-resolution fMRI at 7T. Neuroimage 54: 1012–1020, 2011

- Tecchio F, Padua L, Aprile I, Rossini PM. Carpal tunnel syndrome modifies sensory hand cortical somatotopy: a MEG study. Hum Brain Mapp 17: 28–36, 2002

- Uğurbil K, Toth L, Kim DS. How accurate is magnetic resonance imaging of brain function? Trends Neurosci 26: 108–114, 2003

- Wandell BA, Brewer AA, Dougherty RF. Visual field map clusters in human cortex. Philos Trans R Soc Lond B Biol Sci 360: 693–707, 2005

- Wandell BA, Dumoulin SO, Brewer AA. Visual field maps in human cortex. Neuron 56: 366–383, 2007

- Warnking J, Dojat M, Guérin-Dugué A, Delon-Martin C, Olympieff S, Richard N, Chéhikian A, Segebarth C. fMRI retinotopic mapping–step by step. Neuroimage 17: 1665–1683, 2002

- Wicker B, Fonlupt P. Generalized least-squares method applied to fMRI time series with empirically determined correlation matrix. Neuroimage 18: 588–594, 2003

- Wilson JL, Jenkinson M, De Araujo I, Kringelbach ML, Rolls ET, Jezzard P. Fast, fully automated global and local magnetic field optimization for fMRI of the human brain. Neuroimage 17: 967–976, 2002

- Woolrich MW, Ripley BD, Brady M, Smith SM. Temporal autocorrelation in univariate linear modeling of FMRI data. Neuroimage 14: 1370–1386, 2001