Abstract

In this article we review, and discuss the clinical implications of, five projects currently underway in the Cochlear Implant Laboratory at Arizona State University. The projects are (1) norming the AzBio sentence test, (2) comparing the performance of bilateral and bimodal cochlear implant (CI) patients in realistic listening environments, (3) accounting for the benefit provided to bimodal patients by low-frequency acoustic stimulation, (4) assessing localization by bilateral hearing aid patients and the implications of that work for hearing preservation patients, and (5) studying heart rate variability as a possible measure for quantifying the stress of listening via an implant.

The long-term goals of the laboratory are to improve the performance of patients fit with cochlear implants and to understand the mechanisms, physiological or electronic, that underlie changes in performance. We began our work with cochlear implant patients in the mid-1980s and received our first grant from the National Institutes of Health (NIH) for work with implanted patients in 1989. Since that date our work with cochlear implant patients has been funded continuously by the NIH. In this report we describe some of the research currently being conducted in our laboratory.

Keywords: Cochlear implants, diagnostic techniques, hearing science, pediatric audiology, speech perception

A LITTLE HISTORY

Our early work focused on the performance of patients fit with the Ineraid cochlear implant, a four-channel, analog device manufactured by Symbion Inc. in Salt Lake City. The device was unique because it was attached to the patient by a percutaneous plug, it contained no internal electronics, and it employed simultaneous, analog stimulation to four of six electrodes in the scala tympani. For more than 10 yr the first author commuted between Phoenix, Arizona, and Salt Lake City, Utah, to conduct research, first at Symbion Inc. and then in the Department of Otolaryngology, Head and Neck Surgery at the University of Utah School of Medicine. The head of that program was James Parkin, MD, one of the pioneers of cochlear implant surgery and research. Some of the early experiments on multichannel electrical stimulation (e.g., Eddington et al, 1978) and the efficacy of multichannel cochlear implants (Eddington, 1980) were conducted with Parkin’s patients. Eddington’s 1978 paper is an extraordinary one and should be required reading for students of cochlear implants.

In the middle to late 1980s the principal questions driving our research were (1) What level of performance could be achieved with a few (four) channels of simultaneous, analog stimulation? and (2) How could high levels of performance, for example, a 73% consonant-nucleus-consonant (CNC) word score, be achieved with such a sparse input? Our early work on outcomes and mechanisms underlying those outcomes can be found in Dorman et al (1988, 1989). The performance of our highest functioning patients may have influenced one implant company to offer a simultaneous analog stimulation (SAS) option, similar to that used by the Ineraid, for their implant (Osberger and Fisher, 1999).

In 1990 we began a long-running collaboration with Blake Wilson and colleagues at the Research Triangle Institute (RTI) in North Carolina by sending our Ineraid patients to RTI. Wilson’s group had been developing new processing strategies for cochlear implants since the early 1980s under contracts from the Neural Prosthesis Program of the National Institutes of Health (NIH). Because our Ineraid patients had a percutaneous plug instead of an implanted receiver, Wilson could test new signal processing strategies without the limitations imposed by the electronics in the implanted receiver. Several of our Ineraid patients participated in the development and initial tests of the continuous interleaved sampling (CIS) strategy (Wilson et al, 1991), which is now either the default strategy or an option for all implant systems. The CIS strategy gets its name from the manner in which pulses are directed to the electrodes: the pulse trains for the different channels and corresponding electrodes are interleaved in time so that the pulses across channels and electrodes are nonsimultaneous. This strategy allows definition of the amplitude envelopes in each of the channels without encountering current interactions among simultaneously stimulated electrodes, which was a major problem for simultaneous channel output devices like the Ineraid.

Following one of our many visits to Wilson’s laboratory to test his novel signal processors, we described the improvement in frequency resolution that could be obtained with an extra channel produced by a “current steering” or “virtual channel” processor (Wilson et al, 1992; Dorman et al, 1996). This type of processing strategy is now implemented in the Advanced Bionics HiRes 120 device.

A major change in the work of the laboratory was introduced by Philip Loizou, a postdoctoral fellow in 1995–1996. Our project was motivated by the work of Robert Shannon, who developed a noise-band simulation of a cochlear implant for research with normal hearing listeners. For this simulation, signals are processed in the same manner as a cochlear implant; for example, the input signal is divided into frequency bands, and the energy in each band is estimated. However, instead of outputting a pulse with an amplitude equal to that of the envelope in a channel, a narrow band of noise with an appropriate amplitude is output. We realized the potential usefulness of this tool; we could conduct experiments with normal hearing listeners and use the results to understand and, hopefully, improve the performance of cochlear implants. Loizou developed a variant of this simulation, one which output a sine wave at the center frequency of each input filter instead of a noise band. Our motivation for this processor was that, in our experience, no implant patient had ever reported that his or her implant sounded like a hissy, gravelly, noise-band simulation. Instead, patients usually reported a “thin,” tonal (sometimes “mechanical” or “electronic”) percept. Loizou’s sine wave simulation captured that sound quality to some degree and allowed us to conduct many experiments (e.g., Dorman et al, 1997a, 1997b; Dorman and Loizou, 1997). Simulations, both noise band and sine wave, have become a very useful tool for researchers because factors that normally vary across patients (for example, depth of insertion, number of active electrodes, the length of auditory deprivation) can be held constant in a simulation, and the effect of an independent variable can be seen without uncontrolled interactions.

In 2003, Anthony Spahr initiated a series of experiments that set the stage for some of the work described in this report. Following years of claims that all widely used, multichannel, cochlear implant systems allowed similar levels of speech understanding (in spite of different signal processing), we decided to put the claim to the test (Spahr et al, 2007). All of the manufacturers were concerned, quite reasonably, that, no matter what sentence material we used, the patients of the “other” manufacturer would have heard the sentences more often than their patients and that familiarity would bias the results of the experiment. To get around this problem we developed a new set of sentence materials—the AzBio sentences. The development of these materials is described in the first of the five reports that follow.

In 2005, prompted by the first reports of the success of hearing preservation surgery for cochlear implant patients in Frankfurt, Warsaw, and at the University of Iowa (von Ilberg et al, 1999; Gantz and Turner, 2004; Skarzynski et al, 2004), Anthony Spahr and Rene Gifford were dispatched to the International Center for Hearing and Speech in Warsaw to obtain estimates of cochlear function in the region of preserved hearing for the hearing preservation patients. In hearing preservation surgery, the surgeon attempts to preserve low-frequency hearing apical to the tip of an electrode array that is inserted 10 to 20 mm into the cochlea. The patients in Warsaw had 20 mm insertions. At issue were the reports of nearly perfect conservation of residual hearing in several of the patients. We were able to confirm the reports (Gifford et al, 2008a), and a part of our current research portfolio includes research with hearing preservation patients in the United States.

In the following sections we describe five projects currently underway in our laboratory that are being carried out with the collaboration of colleagues at Vanderbilt University and the University of Ottawa Faculty of Medicine. Those projects are (1) norming the AzBio sentence test, (2) comparing the performance of bilateral and bimodal cochlear implant (CI) patients in realistic listening environments, (3) accounting for the benefit provided to bimodal patients by low-frequency acoustic stimulation, (4) assessing localization by bilateral hearing aid patients and the implications of that work for hearing preservation patients, and (5) studying heart-rate variability as a possible measure for quantifying the stress of listening via an implant.

AZBIO SENTENCE TEST

As noted above, the AzBio sentences were developed so that we could test patients who had extensive familiarity with conventional sentence test materials. The development of the sentences was enabled by a small grant from the Arizona Biomedical Institute at Arizona State University. In appreciation, the resulting speech materials were dubbed the “AzBio” sentences.

Sentence Recordings

Four speakers, two male (aged 32 and 56 yr) and two female (aged 28 and 30 yr), each recorded 250 sentences. Each speaker was instructed to speak at a normal pace and volume. Across speakers, the speaking rate ranged from 4.4 to 5.1 syllables per second, consistent with normal speaking rates (Goldman-Eisler, 1968), and the root mean square (RMS) level of individual sentences had a standard deviation of 1.5 dB and a range of 9.6 dB.

Sentence Intelligibility Estimation

The 1000 sentence files were processed through a five-channel cochlear implant simulation (Dorman et al, 1997b) and presented to 15 normal hearing listeners. Sentences were presented at a comfortable level using Sennheiser HD 20 Linear II headphones. Each sentence was scored as the number of words correctly repeated by each listener. The mean percent correct score for each sentence (total words repeated correctly/total words presented) was used as the estimate of intelligibility. Scores for sentences from each speaker ranged from <20% to 100% correct.

Sentence Selection and List Formation

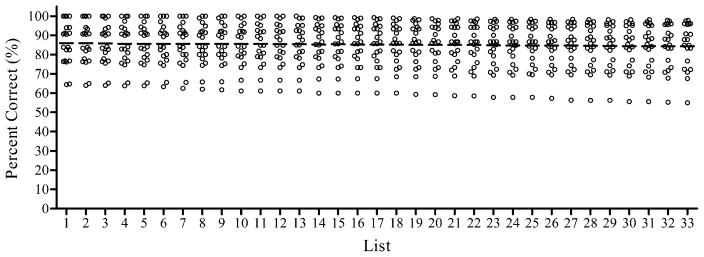

It was determined that each list would consist of five sentences from each speaker and that the average level of intelligibility for each speaker would be held constant across lists. For each speaker, sentences were rank ordered by mean percent correct scores. Sentences were then sequentially assigned to lists, with the first 33 sentences assigned, in order, to lists from 1 to 33 and the next 33 sentences assigned, in order, to lists from 33 to 1. As shown in Figure 1, this sentence-to-list assignment produced 33 lists of 20 sentences with a mean score of 85% correct (SD = 0.5). The average intelligibility of individual talkers across lists was 90.4% (SD = 0.5) and 86.0% (SD = 0.5) for the two female talkers and 87.0% (SD = 0.4) and 77.2% (SD = 0.7) for the two male talkers. Lists had an average of 142 words (SD = 6.4, range = 133 to 159).
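The back-and-forth assignment described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' procedure: the function name and parameters are hypothetical, the input is assumed to be one speaker's sentences already selected and rank ordered, and the sketch assumes the alternation between ascending and descending passes continues beyond the first two passes that the text describes.

```python
def assign_to_lists(ranked_sentences, n_lists=33):
    """Distribute rank-ordered sentences across lists in a back-and-forth order.

    ranked_sentences: one speaker's sentences, sorted by mean percent correct.
    Returns a list of n_lists lists (index 0 corresponds to list 1).
    """
    lists = [[] for _ in range(n_lists)]
    for i, sentence in enumerate(ranked_sentences):
        pass_index, position = divmod(i, n_lists)
        # Even passes run from list 1 up to list n_lists;
        # odd passes run from list n_lists back down to list 1 (assumed pattern).
        if pass_index % 2 == 0:
            target = position
        else:
            target = n_lists - 1 - position
        lists[target].append(sentence)
    return lists


# Hypothetical input: 165 ranked sentence indices (5 per list x 33 lists).
example = assign_to_lists(list(range(165)))
```

Reversing direction on each pass pairs a high-ranked sentence with a low-ranked one in the same list, which is what keeps a speaker's average intelligibility roughly constant across lists.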

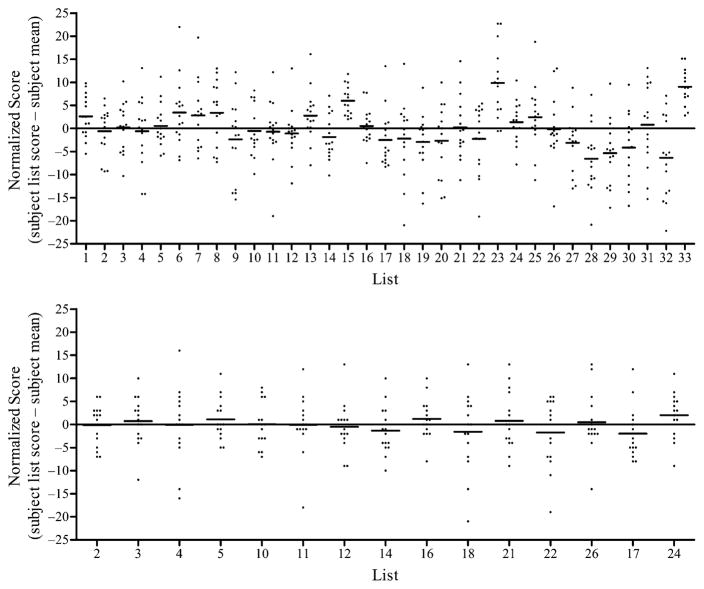

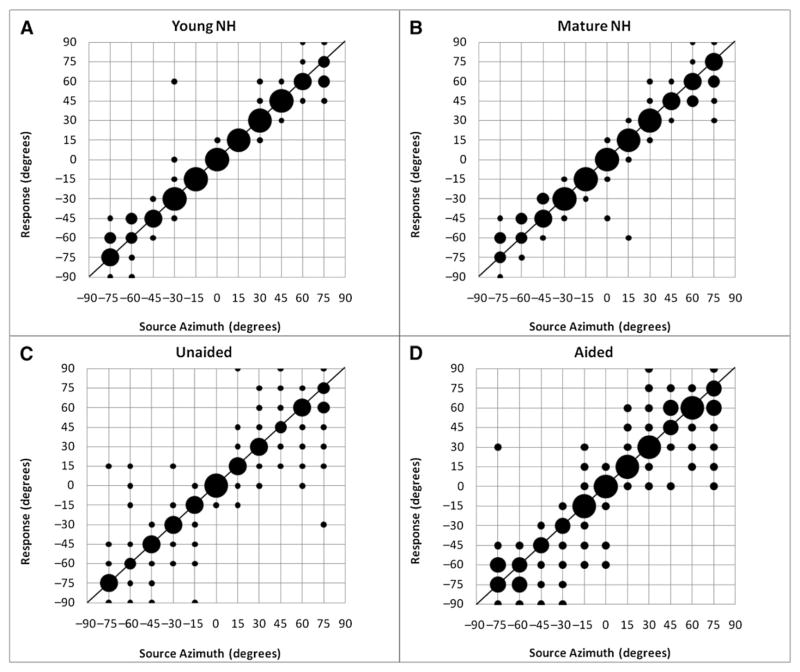

Figure 1.

Percent correct scores for 33 lists of the AzBio sentences for normal hearing subjects listening to a five-channel simulation of a cochlear implant. Each data point is the mean score for 15 listeners for one of the 20 sentences in the list. The horizontal bar is the mean percent correct score for that list.

List Equivalency Validation

Validation of the newly formed sentence lists was accomplished by testing 15 cochlear implant users on all sentence lists. Participants had monosyllabic word scores of 36–88% correct (avg=61%, SD=16). To avoid ceiling effects, sentence lists were presented at +5 dB signal-to-noise ratio (SNR) (multitalker noise) for subjects with word scores of 85% or greater, +10 dB SNR for subjects with word scores between 65 and 84%, and in quiet for subjects with word scores below 65%.
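The rule for choosing a listening condition from a subject's word score can be written as a small function. This is only a sketch: the function name is hypothetical, and treating every score from 65% up to (but not including) 85% as the middle band is an assumption, since the text gives that band as 65 to 84%.

```python
def presentation_condition(word_score_percent):
    """Return the listening condition for a subject's monosyllabic word score."""
    if word_score_percent >= 85:
        return "+5 dB SNR"
    if word_score_percent >= 65:
        # Assumed to cover the stated 65-84% band, including fractional scores.
        return "+10 dB SNR"
    return "quiet"
```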

Sentences were presented at 60 dB SPL in the sound field from a single loudspeaker at 0° azimuth. Subjects were instructed to repeat back each sentence and to guess when unsure of any word. Each sentence was scored as the number of words correctly repeated and a percent correct score was calculated for each list.

Averaged across all subjects, individual list scores ranged from 62 to 79% correct, with an average of 69% correct (SD = 3.8). The individual results of the 15 subjects were used to identify lists that were not of equal difficulty. For this analysis, list scores from each subject were normalized by subtracting the subject’s mean percent correct score from the individual list score. The mean scores and distribution of normalized scores for all 33 lists are shown in Figure 2 (top).
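The normalization step can be illustrated as follows. The function names and the data layout (a dictionary mapping each subject to that subject's list scores, in list order) are assumptions made for the sketch; the scores in the usage line are invented.

```python
def normalize_list_scores(scores_by_subject):
    """Subtract each subject's mean score from that subject's list scores."""
    normalized = {}
    for subject, scores in scores_by_subject.items():
        subject_mean = sum(scores) / len(scores)
        normalized[subject] = [score - subject_mean for score in scores]
    return normalized


def mean_normalized_by_list(normalized):
    """Average the normalized scores across subjects, list by list."""
    columns = zip(*normalized.values())  # one column of scores per list
    return [sum(column) / len(column) for column in columns]


# Hypothetical scores for two subjects on three lists.
norm = normalize_list_scores({"S1": [60, 70, 80], "S2": [40, 50, 60]})
list_means = mean_normalized_by_list(norm)
```

A list whose mean normalized score sits well above or below zero is easier or harder than the subject's typical list, regardless of the subject's overall level of performance.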

Figure 2.

Top: Normalized score for each patient (list score minus mean score for all lists) for 33 lists of the AzBio sentences. The horizontal bar is the mean normalized score for that list. Bottom: Normalized scores and mean score for 15 commercially available lists of the AzBio sentences.

AzBio Sentence Test

It was decided that a subset of the AzBio sentence lists would be released in CD format for clinical evaluation of hearing-impaired listeners. The 15 lists with scores nearest to the group mean and the smallest distribution of individual scores were selected for this subset. The distribution of normalized scores for the 15 lists (2, 3, 4, 5, 10, 11, 12, 14, 16, 17, 18, 21, 22, 24, and 26) included in the commercial version of the AzBio Sentence Test is shown in Figure 2 (bottom).

Clinical Relevance

The goals for these materials were to provide an evaluation of individuals with extensive exposure to traditional sentence materials, to allow for evaluation of performance in a large number of within- or between-subjects test conditions by creating multiple lists with similar levels of difficulty, and to provide an estimate of performance that was consistent with the patient’s perception of his or her performance in everyday listening environments. We have created a test of sentence intelligibility, using both male and female speakers, which, according to Gifford et al (2008b), is more difficult than the Hearing in Noise Test (HINT; Nilsson et al, 1994) sentence test. We have created 15 lists of sentences with very similar mean list intelligibility that will allow clinicians to track performance over time, or over different signal processor settings, and have some confidence that large differences in performance are not due to differences in list intelligibility. Finally, patients have reported that their scores on the relatively difficult AzBio sentences are consistent with their own estimation of performance in at least some real-world environments.

We are currently working on two new sentence tests for use with children. One is in English and one is in Spanish. We have used the same organization principles to create these lists as we used to create the AzBio sentence lists and are now in the normative phase of testing these materials with CI patients.

THE PERFORMANCE OF BILATERAL AND BIMODAL CI PATIENTS

Until recently most CI patients heard with one “ear,” the implanted ear. In the near future most CI patients will hear with two “ears,” either with two cochlear implants or with a cochlear implant and a hearing aid in the opposite ear (bimodal hearing). A chart review from two large CI clinics indicates that slightly over half of the patients have potentially useable, low-frequency hearing in the ear opposite the implant (Dorman and Gifford, 2010). The clinical issue is whether these patients would benefit more from aiding that ear or from providing a second cochlear implant (for recent reviews see Ching et al, 2007; Dorman and Gifford, 2010).

Clinical decision making will depend critically on the nature of the tests and test environments used to assess benefit. Standard clinical test environments can only approximate the natural environment of sound surrounding the listener. Laboratory environments with multiple, spatially separated speakers can simulate the natural environment and are sensitive to the potentially synergistic effects of head shadow, squelch, and diotic summation. However, this environment cannot be duplicated, for reasons of time, space, and cost, in most clinics.

In this project we are testing bimodal and bilateral CI patients in both standard and simulated realistic test environments with the goal of creating a decision matrix that links data that can be easily collected in the clinic (for example, CNC scores in quiet and the amount of residual hearing) with data that cannot be collected in the clinic (for example, performance data collected with multiple, spatially separated loudspeakers). We are testing bilateral CI patients and bimodal patients (grouped by degree of residual hearing in the ear contralateral to the implant) in two realistic test environments using an eight-speaker surround-sound system. This system can simulate, with high fidelity, a restaurant environment and a cocktail party environment with competing sentence material as the noise—a situation of “informational” masking. At issue is the bilateral benefit for bimodal patients and for bilateral cochlear implant patients in these “real world” environments and the relationship of bilateral benefit in these environments to performance on standard clinical tests.

Results and Clinical Relevance

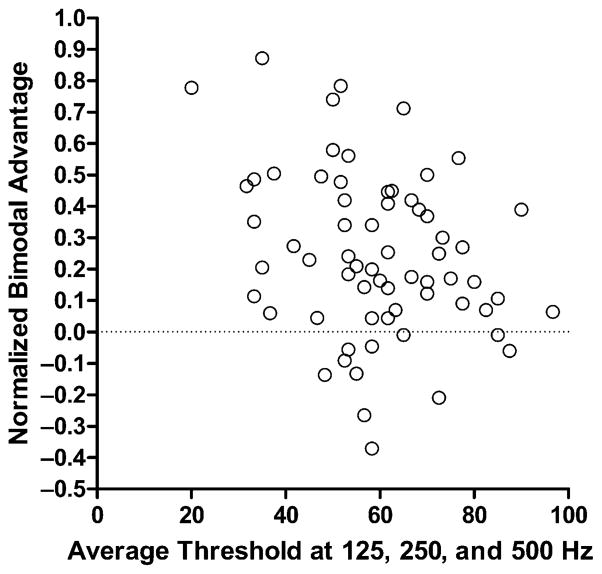

Our initial work suggests that the audiogram will not be a completely useful predictor of success with bimodal stimulation. Figure 3 shows normalized bimodal benefit for 66 patients as a function of the average threshold at 125, 250, and 500 Hz in the nonimplanted ear. The patients were drawn from populations at Arizona State University, Mayo Clinic, Rochester, and the University of Ottawa Faculty of Medicine, Department of Otolaryngology. We compute normalized benefit as the difference between the bimodal score and the electric-only score, divided by the difference between 100 and the electric-only score. This computation helps adjust for the electric-only starting level of performance. The sentence materials were the AzBio sentences presented at either +10 or +5 dB SNR. Each open symbol on the plot represents the performance of one patient. Inspection of Figure 3 indicates that for averaged hearing losses from 30 to 70 dB, benefit can range up to about 90% of the potential benefit. However, as shown over the range of 50–60 dB loss, there is a very large degree of variability in benefit for a similar degree of hearing loss. Most generally, the data suggest that the hearing loss in the nonimplanted ear has only a limited relationship to bimodal benefit. Motivated by this outcome, we have looked to other measures that might be related to the benefit that low-frequency hearing adds to electric stimulation. This work is described in the next section.

Figure 3.

Normalized bimodal advantage, (bimodal score − CI score)/(100 − CI score), as a function of the averaged threshold at 125, 250, and 500 Hz for 66 bimodal patients. Each open circle indicates the performance of a single patient. The sentence material was the AzBio sentences presented at +10 or +5 dB signal-to-noise ratio.
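As a worked example of the normalized benefit computation, the following sketch expresses the result as a percentage of the improvement still available above the electric-only score. The function name and the scores in the usage line are hypothetical.

```python
def normalized_benefit(bimodal_score, electric_only_score):
    """Bimodal gain as a percentage of the headroom above the electric-only score.

    Both scores are percent correct (0-100).
    """
    headroom = 100 - electric_only_score
    if headroom <= 0:
        raise ValueError("electric-only score must be below 100% correct")
    return 100 * (bimodal_score - electric_only_score) / headroom


# Hypothetical patient: 60% correct with the CI alone, 80% correct bimodally.
benefit = normalized_benefit(80, 60)  # 20 points gained out of 40 available
```

The same 20-point gain would count for less in a patient who started at 20% correct (25% of the available headroom), which is the sense in which the computation adjusts for the electric-only starting level.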

SPECTRAL PROCESSING AND THE BENEFITS OF ACOUSTIC HEARING IN BIMODAL PATIENTS

As noted in the previous section, auditory thresholds over the range 30–70 dB HL in the nonimplanted ear do not account for the gain in speech understanding in noise when acoustic and electric stimulation are combined. Moreover, we have found no significant relationship between bimodal benefit and psychophysical measures of auditory function including nonlinear cochlear processing (Schroeder-phase effect), frequency resolution (auditory filter shape at 500 Hz), and temporal resolution (temporal modulation detection) (Gifford et al, 2007a, 2007b, 2008a).

Here we describe the relationship between another measure of auditory function in the region of low-frequency hearing: spectral modulation detection (e.g., Henry et al, 2005; Litvak et al, 2007) and the gain in intelligibility, for speech in noise, when both acoustic and electric stimulation are available. The bimodal patients had similar audiograms. At issue was whether a measure of spectral resolution in the region of low-frequency acoustic hearing was related to the gain in speech understanding when electric and acoustic signals were both available.

Subjects

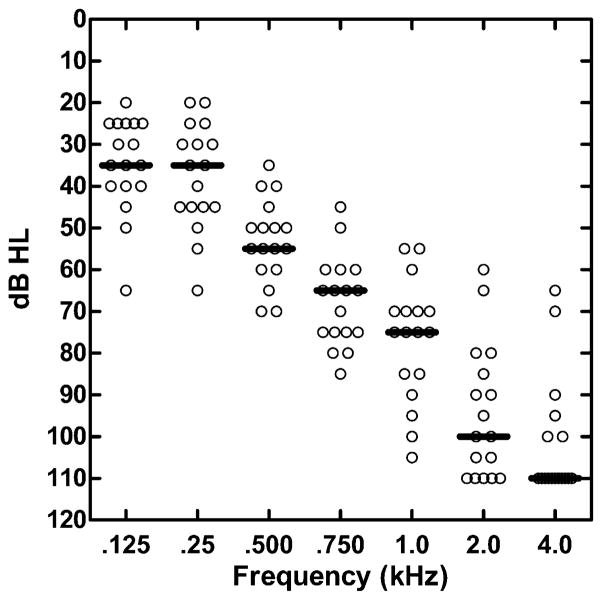

The patients were 17 adults who used a CI and who had residual hearing in the ear opposite the implant. The thresholds for these patients are shown in Figure 4. For all patients the average pure tone threshold over the range 125–750 Hz was less than 70 dB HL.

Figure 4.

Unaided hearing thresholds for 17 bimodal patients. Each open circle indicates the audiometric threshold of a single patient. The horizontal bars are the median thresholds.

Stimuli

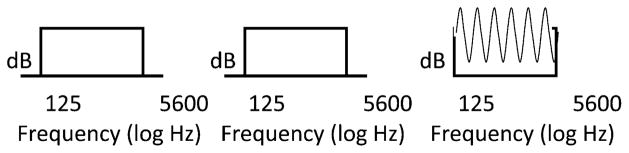

Rippled noise stimuli of 125–5600 Hz bandwidth were generated using MATLAB software. The spectral modulation frequency was 1 cycle/octave. The stimuli differed in spectral contrast, the difference (dB) between spectral peaks and valleys (see Fig. 5). The stimuli were of 400 msec duration and were gated with each 400 msec observation interval. The minimum spectral contrast (dB) needed to discriminate the spectrally modulated stimuli from the unmodulated stimuli was the spectral modulation detection threshold (SMDT).

Figure 5.

Stimulus sequence for spectral modulation detection task.

The spectral modulation detection thresholds in the nonimplanted ear were estimated using a cued, two-interval, two-alternative, forced-choice (2IFC) paradigm. For each set of three intervals (a cue followed by two observation intervals), the first interval always contained the standard stimulus with a flat spectrum. The test interval, chosen at random from the other two intervals, contained the comparison stimulus. The comparison was always a modulated signal with variable spectral contrast (see Fig. 5). A run consisted of 60 trials. Each run began with the comparison stimulus (modulation depth with a peak-to-valley ratio of approximately 20 dB) clearly different from the reference. The modulation depth of the comparison was reduced after three correct responses and was increased after one incorrect response to track 79.4% correct responses (Levitt, 1971). The initial step size of the change of the modulation depth was 2 dB and was 0.5 dB after three reversals. All threshold estimates were based on the average of the last even number of reversals, excluding the first three. Signals were presented via headphones (Sennheiser HD250 Linear II) at a comfortable listening level depending on the individual’s residual acoustic hearing. A larger spectral modulation detection threshold suggests poorer spectral resolution.
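The tracking rule can be illustrated with a short sketch of a three-down, one-up staircase. It is not the laboratory's software: the function names are hypothetical, three consecutive correct responses are assumed to be required before a decrease, and the smaller step is assumed to apply from the third reversal onward. The 79.4% target follows from the rule itself: the track settles where three consecutive correct responses are as likely as not, so p³ = 0.5 and p ≈ 0.794.

```python
TARGET_PROPORTION_CORRECT = 0.5 ** (1 / 3)  # about 0.794 for three-down, one-up


def run_staircase(responses, start_depth=20.0, big_step=2.0, small_step=0.5):
    """Track modulation depth (dB) from a sequence of correct/incorrect responses.

    Returns (depth presented on each trial, depths at which reversals occurred).
    """
    depth, step = start_depth, big_step
    correct_in_a_row = 0
    last_direction = 0  # -1 after a decrease, +1 after an increase, 0 at start
    depths, reversals = [], []
    for correct in responses:
        depths.append(depth)
        if correct:
            correct_in_a_row += 1
            if correct_in_a_row < 3:
                continue  # no change until three consecutive correct responses
            direction = -1
        else:
            direction = +1
        correct_in_a_row = 0
        if last_direction != 0 and direction != last_direction:
            reversals.append(depth)
            if len(reversals) >= 3:
                step = small_step  # assumed: small step from the third reversal on
        last_direction = direction
        depth = max(0.0, depth + direction * step)
    return depths, reversals


def threshold_from_reversals(reversals, n_discard=3):
    """Average the last even number of reversals after discarding the first three."""
    usable = reversals[n_discard:]
    if len(usable) % 2:
        usable = usable[1:]  # drop the earliest one to keep an even count
    if not usable:
        raise ValueError("not enough reversals to estimate a threshold")
    return sum(usable) / len(usable)


# Hypothetical listener: three correct responses, then one error, repeated.
trial_depths, revs = run_staircase(([True] * 3 + [False]) * 4)
estimate = threshold_from_reversals(revs)
```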

The AzBio sentences were presented via a loudspeaker at 70 dB SPL with a +5 or +10 dB SNR. Patients used their CIs and hearing aids with their “everyday” settings for all testing.

Results

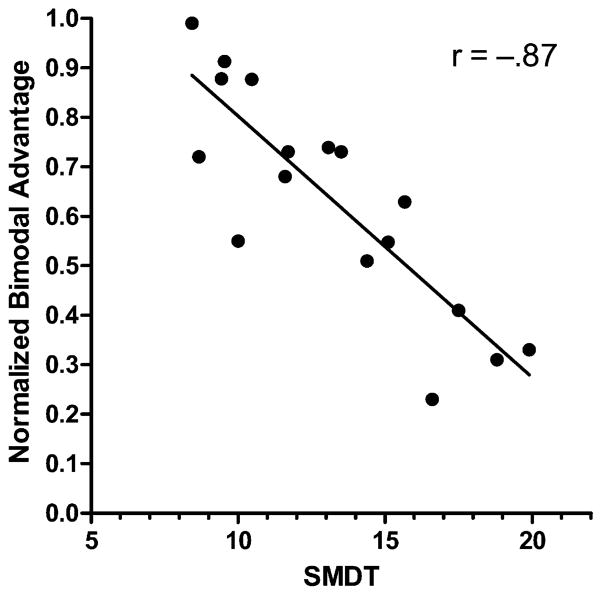

Figure 6 shows normalized benefit (see “Results and Clinical Relevance” above) from adding the acoustic signal to the electric signal as a function of the spectral modulation detection threshold. We find a significant negative correlation between the two variables (r=−.87, p < 0.0001). Recall that a larger spectral modulation detection threshold indicates poorer spectral resolution. Figure 6 suggests that the larger the detection threshold (and the poorer the spectral resolution of residual acoustic hearing), the less the acoustic signal adds to the intelligibility provided by the electric signal.

Figure 6.

Normalized bimodal advantage as a function of the spectral modulation detection threshold for 17 bimodal patients.

Clinical Relevance

It is possible that a relatively simple psychophysical measurement of spectral modulation detection may provide clinical guidance when making a decision about either aiding or implanting a “second” ear, when that ear has at least potentially usable hearing for bimodal stimulation. Given two ears with similar audiometric thresholds, an ear with good spectral resolution would be appropriate for bimodal stimulation, but an ear with poor spectral resolution would be a candidate for a second (bilateral) cochlear implant.

LOCALIZATION BY BILATERAL CI PATIENTS: IMPLICATIONS FOR SPATIAL HEARING IN HEARING PRESERVATION PATIENTS

The long-term goal of this project is to compare the performance of bilateral CI patients and hearing preservation patients on a task of localization in the horizontal plane and on speech understanding in surround-sound environments, that is, in “restaurant” and “cocktail” test environments. At issue is whether performance on the localization task is related to performance on the speech perception tasks in the surround-sound environments. We assume that performance in the surround-sound environments will show the combined effects of head shadow, summation, and squelch. We also assume that spatial release from masking will play a role in this environment. In these environments binaural cues are essential. Critically, nature has provided us with two groups of CI patients with different access to binaural cues.

Bilateral CI patients have access to one set of binaural cues, interaural level difference (ILD) cues, but not to interaural time difference (ITD) cues (Grantham et al, 2007). This is reasonable given the design of CI signal processors. These processors recover the overall temporal changes in amplitude, that is, the envelope, but not the subtle, instant-by-instant amplitude changes, or temporal fine structure. The ability to use ITDs as a cue for sound localization is based primarily on the temporal fine structure of sound. Bilateral CI patients can localize sounds on the horizontal plane using ILDs, but their ability is poorer (approximately 25° of error) than normal (approximately 7–8°) (Grantham et al, 2007).

Hearing preservation patients (patients with a CI and preserved low-frequency [under 750 Hz] hearing in both the implanted and contralateral ears) should have access to ITD cues but not to ILD cues. This follows from ILDs being most effective for frequencies above 1500 Hz (Yost, 2000), a frequency region where most hearing preservation patients have no acoustic hearing. Dunn et al (2010) report that hearing preservation patients fit with the Cochlear Corporation 10 mm array can localize with errors similar to those found for bilateral CI patients, approximately 25° of error versus 7–8° for normal hearing listeners. That study did not report the frequency composition of the sound sources used.

To have a reference for the localization performance of hearing preservation patients and their access to ITD cues, we tested the localization ability of bilateral hearing-aid patients using low-frequency (under 500 Hz) noise bands. The research questions were (1) do hearing-impaired listeners show sensitivity to ITD cues, as measured by localization performance using low-frequency stimuli, and (2) do bilateral hearing aids alter localization ability? These are relevant questions because many hearing preservation patients use a hearing aid in both ears.

Lorenzi et al (1999) reported that hearing-impaired listeners have poorer localization abilities than normal hearing listeners. Aiding hearing-impaired listeners has been shown to improve (Boymans et al, 2008), impair (Van den Bogaert et al, 2006), and not affect localization thresholds (Köbler and Rosenhall, 2002).

Methods

Twenty-two young (aged 21–40 yr) and 10 mature (aged 50–70 yr) normal hearing listeners were tested on a task of localization in the horizontal plane. In addition, ten bilateral hearing-aid patients with symmetrical mild-to-severe sensorineural hearing loss were tested in both aided and unaided conditions.

Three 200 msec, filtered (48 dB/octave) noise stimuli with different spectral content were presented in random order. Noise stimuli consisted of low-pass (LP) noise filtered from 125–500 Hz, high-pass (HP) noise filtered from 1500–6000 Hz, and wideband (WB) noise filtered from 125–6000 Hz. Here we present only the data collected with the LP noise.

The stimuli were presented from a 13-loudspeaker array with an arc of 180° in the frontal horizontal plane. The signals were presented at 65 dBA; level was adjusted in 5 dB increments as necessary to make it audible in the unaided conditions. Overall level was randomly roved 2 dB from presentation to presentation to ensure that the level of the loudspeakers was not a cue.

Results

RMS error in degrees was calculated for the young and mature normal hearing listeners (Fig. 7, top). There were no differences in RMS error between the two groups (8° and 9°, respectively). Moreover, there was no significant correlation between age and localization over the range 21–70 yr (r = .21). As shown in Figure 7 (bottom), errors for the hearing-impaired group were larger than those for the normal hearing listeners in both the unaided and aided conditions. The RMS error for the unaided condition was 15°. For the aided condition it was 16°.
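For readers unfamiliar with the measure, RMS localization error can be computed as in the sketch below. The function name is hypothetical, the trial data are invented, and the evenly spaced loudspeaker positions (15° apart from −90° to +90°) are an assumption; the text gives only the number of loudspeakers and the extent of the arc.

```python
import math

# Assumed layout: 13 loudspeakers evenly spaced over a 180-degree frontal arc.
SPEAKER_AZIMUTHS = [-90 + 15 * i for i in range(13)]


def rms_error(target_azimuths, response_azimuths):
    """Root mean square difference (degrees) between source and response angles."""
    if len(target_azimuths) != len(response_azimuths):
        raise ValueError("targets and responses must have the same length")
    squared = [(r - t) ** 2 for t, r in zip(target_azimuths, response_azimuths)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical trials: one correct response and two that miss by one loudspeaker.
error = rms_error([0, 15, -30], [0, 30, -45])
```

Because the errors are squared before averaging, a few large misses raise the RMS error more than many small ones.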

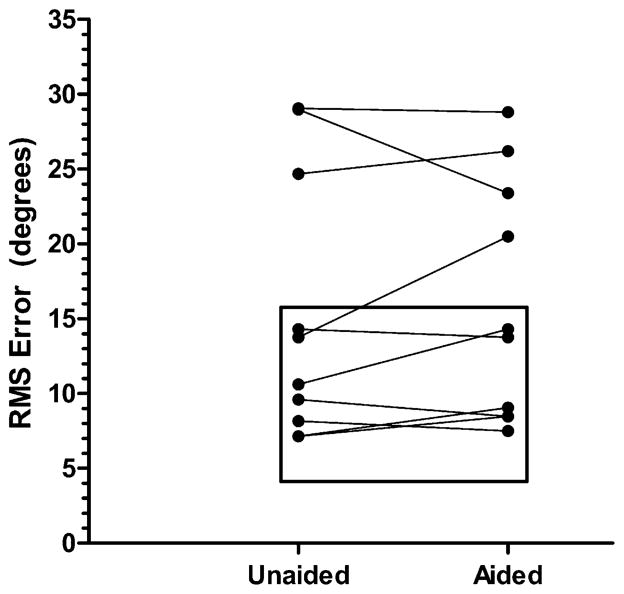

Figure 7.

Localization accuracy for young and mature normal hearing listeners and bilateral hearing-aid patients in unaided and aided test conditions. The larger the balloon the higher the percent response. The smallest balloon indicates 0 to 25% response; the largest balloon indicates greater than 75% response. The mean error for the young normal hearing listeners was 8° while for the mature listeners the mean error was 9°. For the hearing-aid patients the mean error was 15° in the unaided condition and 16° in the aided condition.

Individual responses for the hearing-impaired listeners are shown in Figure 8 along with a rectangle showing the range of responses from normal hearing listeners. The hearing-impaired listeners show a range of outcomes, from within the range of normal to errors twice that of normal.

Figure 8.

Localization error for bilateral hearing-aid patients in aided and unaided test conditions. The box indicates the range of errors for normal hearing listeners.

Clinical Relevance

Overall, our results show that (1) some bilateral hearing-aid users demonstrate localization abilities comparable to normal hearing listeners for low-pass stimuli, (2) some bilateral hearing-aid users have errors two times those of normal hearing listeners, and (3) amplification has no effect on localization for most of the bilateral hearing-aid users. Based on our results with bilateral hearing-aid patients responding to low-frequency noise bands, we can expect hearing preservation patients to show a range of localization abilities, from near normal to clearly impaired. However, all should be able to at least lateralize stimuli. At issue in our current work is whether localization ability has any relationship to speech recognition performance in realistic listening environments with multiple, spatially separated loudspeakers.

HEART RATE VARIABILITY: A POSSIBLE MEASURE FOR QUANTIFYING THE STRESS OF LISTENING VIA AN IMPLANT

The goal of this project is to quantify the overall effort involved in speech perception as indicated by a physiological measure of stress—heart rate variability. Hearing-impaired listeners commonly report feelings of anxiety, stress, and mental fatigue in response to communication demands in social settings and are significantly more likely to be withdrawn, depressed, and insecure than their normal hearing peers. The effects of hearing loss can be reversed, to some extent, by an intervention such as a hearing aid or a cochlear implant. Following treatment, individuals commonly report a general reduction of stress-related symptoms and improved social interactions.

These outcomes linking hearing loss and stress are compelling. However, they are largely ignored in clinical practice. Questionnaires are sometimes employed to describe the degree of hearing impairment experienced before and after intervention. More commonly, objective success with an intervention is measured with a score on a test of speech understanding. Though there is general agreement between these subjective and objective measures, the relationship is not highly predictable. For example, cochlear implant users comparing bilateral performance to performance with only the better ear commonly demonstrate small improvements (i.e., approximately 10 percentage points) in speech understanding for CNC words but report significant improvements in health-related quality of life. This pattern suggests that large changes in speech understanding are not necessary to effect meaningful change in the day-to-day lives of these individuals. Unfortunately, clinical intervention protocols and insurance reimbursement policies are largely determined by objective, not subjective, outcome measures. For that reason, it would be valuable to have an objective measure of stress that could be used to assess the value of auditory interventions (e.g., hearing aids, cochlear implants, or auditory training).

Heart rate variability (HRV) can be measured and interpreted as a physiological measure of the stress response. HRV measurements are derived from electrocardiogram (ECG) data and reflect beat-to-beat variation in the natural heartbeat, created by the actions of the sympathetic and parasympathetic branches of the autonomic nervous system. Berntson and Cacioppo (2004) suggest that even mild stress from arithmetic problems incites changes in baroreceptor reflexes (neurons that maintain blood pressure) significant enough to produce parasympathetic and sympathetic nervous system changes and alter HRV. Under stressful conditions, in which sympathetic nervous system activity is higher, heart rate variability decreases. In the absence of a stressor, there is greater parasympathetic nervous system activity and HRV increases.

This project was a pilot experiment to determine whether listening in noise leads to a stress response, that is, a reduction in beat-to-beat variability.

Subjects

The subjects were six normal hearing undergraduates at Arizona State University.

Measuring Heart Rate Variability

ECG activity was recorded using a BIOPAC Student Laboratories (BSL) Pro MP35 recording unit with BSLPro software. The R-wave component of each heart beat was identified, and R-to-R intervals were calculated using commercial software (Allen et al, 2007). HRV was defined as the standard deviation of the R-to-R intervals observed over a 4 min recording period.
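The HRV definition above (the standard deviation of R-to-R intervals) can be illustrated with a minimal Python sketch. This is not the commercial software used in the study; the function names and the R-peak times are hypothetical, and the use of the sample (n − 1) standard deviation is an assumption, since the text does not say which form was used.

```python
import statistics

def rr_intervals_ms(r_peak_times_s):
    """Convert R-wave peak times (seconds) to successive R-to-R intervals (ms)."""
    return [(b - a) * 1000.0 for a, b in zip(r_peak_times_s, r_peak_times_s[1:])]

def hrv_sd(rr_ms):
    """HRV as the standard deviation of R-to-R intervals.

    Assumption: sample standard deviation (n - 1 in the denominator).
    """
    return statistics.stdev(rr_ms)

# Hypothetical R-peak times in seconds; not data from the study.
r_peaks = [0.00, 0.80, 1.62, 2.40, 3.25, 4.05]
rr = rr_intervals_ms(r_peaks)   # five intervals from six peaks
hrv = hrv_sd(rr)                # spread of those intervals, in ms
```

In practice the same calculation would run over all R-to-R intervals in a 4 min recording rather than a handful of beats.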

Listening Conditions

The AzBio sentences were presented in six listening conditions: quiet, +3, 0, −3, −6, and −9 dB SNR. The order of the conditions was randomized across subjects.

For each test condition the protocol was as follows: four minutes of silence (baseline), four minutes of AzBio sentences in quiet (subject repeated the sentences), a two minute break, four minutes of noise (no sentences), four minutes of AzBio sentences in one of the noise conditions described above, and finally two minutes of quiet.
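The timeline for one test condition can be laid out as a short sketch. The segment labels paraphrase the protocol above; the names `PROTOCOL` and `schedule` are introduced here only for illustration.

```python
from itertools import accumulate

# (segment label, duration in minutes), in the order given in the text
PROTOCOL = [
    ("silence (baseline)", 4),
    ("AzBio sentences in quiet", 4),
    ("break", 2),
    ("noise only", 4),
    ("AzBio sentences in noise", 4),
    ("quiet", 2),
]

def schedule(protocol):
    """Return (label, start_min, end_min) for each segment."""
    ends = list(accumulate(d for _, d in protocol))
    return [(label, end - d, end) for (label, d), end in zip(protocol, ends)]

timeline = schedule(PROTOCOL)
total_minutes = sum(d for _, d in PROTOCOL)
```

Summing the segments gives 20 minutes per test condition, with each 4 min segment matching the 4 min HRV recording period.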

RESULTS

Sentence Understanding in Noise

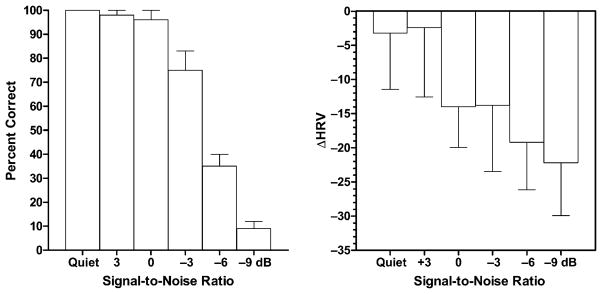

The average sentence understanding scores are shown in Figure 9 (left). A repeated measures ANOVA revealed a significant main effect for conditions (F(5, 25) = 589.5, p < 0.001). A posttest indicated significant drops in performance as the SNR was decreased to −3, −6, and −9 dB. Thus, as expected, sentence understanding scores decreased with increasing noise.

Figure 9.

Left: Percent correct AzBio score for normal hearing listeners as a function of signal-to-noise ratio. Right: The change in heart rate variability (relative to no sound condition) as a function of signal-to-noise ratio. Error bars indicate ± 1 SEM.
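For readers unfamiliar with the statistic, the following is a minimal sketch of a one-way repeated measures ANOVA. It is not the analysis software used in the study, and the scores are hypothetical. It shows where the degrees of freedom in F(5, 25) come from: six conditions give 6 − 1 = 5, and six subjects give (6 − 1)(6 − 1) = 25 for the error term. The p-value is not computed here because that requires the F distribution from a statistics library.

```python
def rm_anova(scores):
    """One-way repeated measures ANOVA.

    scores[i][j] is the score of subject i in condition j.
    Returns (F, df_conditions, df_error).
    """
    n = len(scores)        # number of subjects
    k = len(scores[0])     # number of conditions
    grand = sum(map(sum, scores)) / (n * k)
    cond_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subj_means = [sum(row) / k for row in scores]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    # Error is what remains after removing condition and subject effects.
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error

# Hypothetical percent-correct scores (6 subjects x 6 conditions);
# not data from the study.
scores = [
    [98, 95, 88, 70, 45, 20],
    [97, 93, 85, 72, 40, 18],
    [99, 96, 90, 68, 48, 25],
    [96, 94, 86, 75, 42, 15],
    [98, 92, 89, 71, 50, 22],
    [97, 95, 84, 66, 44, 19],
]
f_value, df_cond, df_error = rm_anova(scores)
```

Because each subject serves as their own control, between-subject variability is removed from the error term, which is why a small sample can still yield a large F when the condition effect is consistent across subjects.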

Heart Rate Variability

Baseline HRV measures were subtracted from average HRV measures during each task to yield the mean change in HRV, or ΔHRV. A repeated measures ANOVA revealed no significant main effect of noise on ΔHRV (F(5, 25) = 0.57, p < 0.72). Visual inspection of the individual data indicated that one subject behaved in a manner opposite that of the other subjects. For five of the six subjects, HRV generally decreased as the SNR became poorer; for one subject, HRV increased systematically as the SNR became poorer. When this subject was removed from the analyses, we found a significant effect of conditions on HRV and, relative to the quiet, resting baseline, a mean 3.2 point decrease in HRV when listening in quiet and a mean 22.2 point decrease in HRV when listening at −9 dB SNR (see Fig. 9, right). To put this change in context, Allen et al (2007) report a 6.3 point change in HRV during a difficult mental arithmetic task.
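The baseline subtraction described above can be sketched as follows. The function name, the data layout, and the values are hypothetical; the `exclude` argument only illustrates how an analysis could be rerun without a given subject.

```python
from statistics import mean

def mean_delta_hrv(baseline, task, exclude=()):
    """Mean change in HRV (task minus baseline) for each condition.

    baseline: {subject: HRV at rest}
    task:     {condition: {subject: HRV during the task}}
    exclude:  subjects to leave out of the average
    """
    return {
        condition: mean(
            hrv - baseline[subject]
            for subject, hrv in by_subject.items()
            if subject not in exclude
        )
        for condition, by_subject in task.items()
    }

# Hypothetical values for two subjects; not data from the study.
baseline = {"S1": 60.0, "S2": 50.0}
task = {
    "quiet": {"S1": 58.0, "S2": 46.0},
    "-9 dB SNR": {"S1": 40.0, "S2": 26.0},
}
deltas = mean_delta_hrv(baseline, task)
```

A negative value means HRV fell relative to the resting baseline, which the article interprets as a stress response.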

Clinical Relevance

Hearing-impaired listeners commonly report that understanding speech in noisy environments requires significant effort, creates internal stress, and is physically draining. This pilot experiment suggests that changes in heart rate variability may reflect changes in stress, or listening effort, in different listening environments. Our current NIH-sponsored project (Spahr, PI) evaluates changes in heart rate variability (i.e., stress or listening effort) in different levels of background noise and in different listening configurations (e.g., with and without hearing aids, unilateral and bilateral cochlear implants, cochlear implant alone and bimodal). The goal is to determine the effect of various treatment options on the level of stress experienced by the listener. The results of this study could provide valuable counseling information for clinicians about the potential benefits of hearing aid use or cochlear implantation.

Acknowledgments

This research was supported by grants from the NIDCD to authors M. Dorman and R. Gifford (R01 DC 010821), T. Spahr (R03 DC 011052), and T. Zhang (F32 DC010937).

Abbreviations

- CI: cochlear implant
- CNC: consonant-nucleus-consonant
- ECG: electrocardiogram
- HRV: heart rate variability
- ILD: interaural level difference
- ITD: interaural time difference
- NIH: National Institutes of Health
- RMS: root mean square
- SNR: signal-to-noise ratio

References

- Allen JJ, Chambers AS, Towers DN. The many metrics of cardiac chronotropy: a pragmatic primer and a brief comparison of metrics. Biol Psychol. 2007;74:243–262. doi: 10.1016/j.biopsycho.2006.08.005.

- Berntson GG, Cacioppo JT. Heart rate variability: stress and psychiatric conditions. In: Malik M, Camm AJ, editors. Dynamic Electrocardiography. New York: Furtuna; 2004. pp. 57–64.

- Boymans M, Goverts T, Kramer S, Festen J, Dreschler W. A prospective multi-centre study of the benefits of bilateral hearing aids. Ear Hear. 2008;29(6):930–941. doi: 10.1097/aud.0b013e31818713a8.

- Ching TY, Van Wanrooy E, Dillon H. Binaural-bimodal fitting or bilateral implantation for managing severe to profound deafness: a review. Trends Amplif. 2007;11:161–192. doi: 10.1177/1084713807304357.

- Dorman MF, Dankowski K, Smith L, McCandless G. Word recognition by 50 patients fitted with the Symbion multichannel cochlear implant. Ear Hear. 1989;10:44–49. doi: 10.1097/00003446-198902000-00008.

- Dorman M, Gifford R. Combining acoustic and electric stimulation in the service of speech recognition. Int J Audiol. 2010;49(12):912–919. doi: 10.3109/14992027.2010.509113.

- Dorman MF, Hannley M, McCandless G, Smith L. Acoustic/phonetic categorization with the Symbion multichannel cochlear implant. J Acoust Soc Am. 1988;84:501–510. doi: 10.1121/1.396828.

- Dorman MF, Loizou P. Speech intelligibility as a function of the number of channels of stimulation for normal-hearing listeners and for patients with cochlear implants. Am J Otol. 1997;18(6 Suppl):113–114.

- Dorman MF, Loizou P, Rainey D. Speech intelligibility as a function of the number of channels of stimulation for signal processors using sine-wave and noise-band outputs. J Acoust Soc Am. 1997a;102(4):2403–2411. doi: 10.1121/1.419603.

- Dorman MF, Loizou P, Rainey D. Simulating the effect of cochlear-implant electrode insertion depth on speech understanding. J Acoust Soc Am. 1997b;102(5 Pt 1):2993–2996. doi: 10.1121/1.420354.

- Dorman MF, Smith L, Smith M, Parkin J. Frequency discrimination and speech recognition by patients who use the Ineraid and CIS cochlear-implant signal processors. J Acoust Soc Am. 1996;99:1174–1184. doi: 10.1121/1.414600.

- Dunn C, Perreau A, Gantz B, Tyler R. Benefits of localization and speech perception with multiple noise sources in listeners with a short-electrode cochlear implant. J Am Acad Audiol. 2010;21:44–51. doi: 10.3766/jaaa.21.1.6.

- Eddington DK. Speech discrimination in deaf patients with cochlear implants. J Acoust Soc Am. 1980;68(3):885–891. doi: 10.1121/1.384827.

- Eddington D, Dobelle W, Brackmann D, Mladejovsky M, Parkin J. Auditory prosthesis research with multiple channel intracochlear stimulation in man. Ann Otol Rhinol Laryngol. 1978;87(6, Pt 2, Suppl 53):1–39.

- Gantz BJ, Turner CW. Combining acoustic and electrical speech processing: Iowa/Nucleus hybrid implant. Acta Otolaryngol. 2004;124:334–347. doi: 10.1080/00016480410016423.

- Gifford R, Dorman M, McKarns S, Spahr A. Combined electric and contralateral acoustic hearing: word and sentence intelligibility with bimodal hearing. J Speech Lang Hear Res. 2007a;50:835–843. doi: 10.1044/1092-4388(2007/058).

- Gifford R, Dorman M, Spahr A, Bacon S. Auditory function and speech understanding in listeners who qualify for EAS Surgery. Ear Hear. 2007b;28(2 Suppl):114S–118S. doi: 10.1097/AUD.0b013e3180315455.

- Gifford R, Dorman M, Spahr A, Bacon S, Skarzynski H, Lorens A. Hearing preservation surgery: psychophysical estimates of cochlear damage in recipients of a short electrode array. J Acoust Soc Am. 2008a;124(4):2164–2173. doi: 10.1121/1.2967842.

- Gifford R, Shallop J, Peterson A. Speech recognition materials and ceiling effects: considerations for cochlear implant programs. Audiol Neurootol. 2008b;13:193–205. doi: 10.1159/000113510.

- Goldman-Eisler F. Psycholinguistics: experiments in spontaneous speech. New York: Academic Press; 1968.

- Grantham DW, Ashmead DH, Ricketts TA, Labadie RF, Haynes DS. Horizontal-plane localization of noise and speech signals by postlingually deafened adults fitted with bilateral cochlear implants. Ear Hear. 2007;28(4):524–541. doi: 10.1097/AUD.0b013e31806dc21a.

- Henry BA, Turner CW, Behrens A. Spectral peak resolution and speech recognition in quiet: normal hearing, hearing impaired, and cochlear implant listeners. J Acoust Soc Am. 2005;118:1111–1121. doi: 10.1121/1.1944567.

- Köbler S, Rosenhall U. Horizontal localization and speech intelligibility with bilateral and unilateral hearing aid amplification. Int J Audiol. 2002;41:395–400. doi: 10.3109/14992020209090416.

- Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1971;49(2, Suppl 2):467.

- Litvak LM, Spahr AJ, Saoji AA, Fridman GY. Relationship between perception of spectral ripple and speech recognition in cochlear implant and vocoder listeners. J Acoust Soc Am. 2007;122:982–991. doi: 10.1121/1.2749413.

- Lorenzi C, Gatehouse S, Lever C. Sound localization in noise in hearing-impaired listeners. J Acoust Soc Am. 1999;105(6):3454–3463. doi: 10.1121/1.424672.

- Nilsson M, Soli S, Sullivan J. Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am. 1994;95:1085–1099. doi: 10.1121/1.408469.

- Osberger MJ, Fisher L. SAS-CIS preference study in postlingually deafened adults implanted with CLARION cochlear implant. Ann Otol Rhinol Laryngol. 1999;177:74–79. doi: 10.1177/00034894991080s415.

- Skarzynski H, Lorens A, Piotrowska A. Preservation of low-frequency hearing in partial deafness cochlear implantation. Int Congr Ser. 2004;1273:239–242. doi: 10.1080/00016480500488917.

- Spahr A, Dorman M, Loiselle L. Performance of patients fit with different cochlear implant systems: effects of input dynamic range. Ear Hear. 2007;28(2):260–275. doi: 10.1097/AUD.0b013e3180312607.

- Van den Bogaert T, Klasen T, Moonen M, van Deun L, Wouters J. Horizontal localization with bilateral hearing aids: without is better than with. J Acoust Soc Am. 2006;119(1):515–526. doi: 10.1121/1.2139653.

- von Ilberg C, Kiefer J, Tillein J, et al. Electric-acoustic stimulation of the auditory system. ORL J Otorhinolaryngol Relat Spec. 1999;61:334–340. doi: 10.1159/000027695.

- Wilson BS, Finley CC, Lawson DT, Wolford FD, Eddington DK, Rabinowitz WM. Better speech recognition with cochlear implants. Nature. 1991;352:236–238. doi: 10.1038/352236a0.

- Wilson BS, Lawson DT, Zerbi M, et al. First Quarterly Progress Report NIH Contract N01-DC-2-2401. Bethesda, MD: Neural Prosthesis Program, National Institutes of Health; 1992. Speech Processors for Auditory Prostheses: Virtual Channel Interleaved Sampling (VCIS) Processors.

- Yost WA. Fundamentals of Hearing: An Introduction. San Diego: Academic; 2000.