Abstract

Science is strengthened not by research alone, but by the publication of original research articles in international scientific journals that are read by a global scientific community. Research publication is the ‘heart’ of a journal and the ‘soul’ of science, the outcome of the collective efforts of authors, editors and reviewers. The publication process involves author-editor interaction, for which both receive credit once the article is published: the author directly, the editor indirectly. However, the remote reviewer, who also plays a key role in the process, remains anonymous and largely unrecognised. Many potential reviewers, therefore, stay away from this ‘highly honorary’ task. Appropriate peer review controls the quality of an article and thereby ensures the quality and integrity of the journal. Recognising and rewarding the role of the reviewer is therefore vital. In this article, we propose a novel idea of a Reviewer Index (RI), Reviewer Index Directory (RID) and Global Reviewer Index Directory (GRID), which will strengthen science by focussing on the reviewer as well as the author. By adopting this innovative Reviewer Centric Approach, a new breed of well-trained reviewers of high quality and sufficient quantity will be available on a lasting basis. Moreover, RI, RID and GRID would also enable the grading and ethical rewarding of reviewers.

Keywords: Author, Editor, Global reviewer index directory, Journal, Reviewer, Reviewer index, Reviewer index directory, Reward, Science

Introduction

Science and knowledge have progressed considerably through the many articles published in peer-reviewed journals, which help build a sound worldwide network of scientific knowledge. At present, the contribution of authors is the main focus of a scientific paper. Although the editor is the final judge, a published paper would not have seen the light of day without some sort of ‘Go Ahead’ from at least one reviewer.

Review of literature

The internet has made fast and effective peer review possible; peer review filters articles and thereby improves the quality of a journal (Altman, 2002[1]). Although it is now well accepted that peer review is crucial to the selection and publication of high-quality scientific work, how to identify and motivate high-quality peer reviewers remains an unsolved problem. Of course, there are reviewers who take their task too seriously, make it their duty to decimate an article or become quasi-authors themselves, and thus become a menace. But they are not our focus here. We concentrate, rather, on how to solve the problem of reluctant and tardy reviewers, and how to streamline and standardise the whole peer review process.

To find out what improves peer review skills, Callaham and Tercier (2007[3]) surveyed the previous training and experience of 306 experienced reviewers and found that it was difficult to identify any type of formal training or experience that predicted a reviewer's performance. They also highlighted that skill in scientific peer review was as ill-defined and hard to impart as “common sense”. They therefore recommended that every journal implement a review ratings system to routinely monitor the quality of its reviews.

Efforts have also been made to train peer reviewers so as to improve the quality of their subsequent reviews (Callaham et al., 2012[4]). One such effort paired newly recruited peer reviewers with senior reviewers acting as mentors, who gave them structured training while both reviewed the same article. However, the results showed no immediate improvement in the quality of the new reviewers' subsequent reviews.

Grainger (2007[7]) argues that those who seek to publish should also freely and voluntarily share responsibility as reviewers, providing timely, unbiased, quality feedback that improves the contributions to the system they serve. He therefore stresses that peer review should be considered a professional responsibility, because it is a quality control system that is only as good as its participants.

Getting good reviewers is difficult. Why is this so? Let us see what reviewers get from the existing system. At present, the author receives help from the journal in the form of language and statistical support, early recognition through AOP (Ahead of Print) online publication, etc. But very few journals extend support to reviewers, and even then only as a limited-period online subscription for searching references. In practically all other respects, reviewers are left to fend for themselves.

To be fair, reviewers are not totally ignored. Most journals now publicly acknowledge their reviewers, and some even give awards to those who provide the most careful and constructive reviews (Siemens, 2012[9]). Peer review workshops held during annual meetings, as part of periodic group education activities, are another way of encouraging reviewers (Siemens, 2012[9]). But all this is small recompense for the work reviewers do.

Although most journals send an e-mail thanking reviewers for their valuable time and effort, very few let them know the status and fate of the articles they reviewed. Thus, while authors get due acknowledgement and reward for their contribution to the pool of scientific knowledge, reviewers largely remain in oblivion.

As a result, many capable intellectuals either stay away from reviewing or, if they do agree, give this work the lowest priority. Some do not even keep their promise to review the manuscript, so that the article's publication is delayed, or it is not published at all. This breaks the author's trust in the journal system and tarnishes the image of the journal in the scientific community.

In the past, some journals have addressed this issue by reviewing the reviewers, using a fictitious manuscript to evaluate peer reviewer performance (Baxt et al., 1998[2]). More recently, FOSE (Framework for Open Science Evaluation), a novel concept based on social networking, has been proposed to complement the existing system and ensure valid and transparent reviews (Walther and van den Bosch, 2012[11]). “Peer-reviewed” peer review is another newly proposed system with a wide scientific community base (Wicherts et al., 2012[12]). In this system, reviews are first published online and then rated by peers. Reviewers thus become more accountable, which should ultimately improve the scientific quality of the article. But all these novel ideas can succeed only if reviewers come forward and participate actively, which at the moment appears unlikely.

Some reviewers are swift, while others are laggards, yet a journal treats both alike. Hence the process of accurate and timely reviewing is disrupted and needs an urgent fix. Efforts have been made to make reviewers visible by bringing in openness, accountability and credit (Godlee, 2002[6]), and even by giving them CME points for their hard work (Frank et al., 2009[5]). Wicherts et al. (2012[12]) have put forward the Broad Daylight Publication Model, which emphasises openness at three levels: transparency of the editorial process, accountability of reviewers, and openness with respect to data. In their model, reviews are published alongside the manuscripts, so that the reputation of reviewers, along with that of authors, keeps climbing in the scientific community, and reviewers have better chances of higher appointments [such as permanent membership of the reviewing board, followed by membership of the editorial board, associate editorship and, ultimately, even appointment as editor of a scientific journal].

Hauser and Fehr (2007[8]) argue that human beings are highly sensitive to rewards and punishments, and so propose a solution based on the logic of economic incentives and the evolutionary origins of human nature. In their model, journals should reward timely reviewers by sending their own manuscripts out for review as early as possible and, if a paper is accepted for publication, by moving it up the publication queue. For every manuscript that a reviewer refused to review, a one-week delay would be added to the review of their own next submission. For reviewers who were late after agreeing to review a manuscript, for every day since receipt of the manuscript for review plus the number of days past the deadline, the reviewer's next personal submission to the journal would be held in editorial limbo for twice as long before it was sent for review.
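The penalty rules attributed above to Hauser and Fehr reduce to simple arithmetic. The sketch below is a hypothetical illustration, not their implementation: it assumes that penalties for separate lapses simply add up, and it reads the lateness rule literally as two days of hold for every day the manuscript was held plus every day past the deadline. The function name and parameters are our own.

```python
def hauser_fehr_hold_days(declined_requests: int,
                          late_reviews: list[tuple[int, int]]) -> int:
    """Days an author's next submission is held before review (hypothetical sketch).

    declined_requests: review invitations the author declined.
    late_reviews: one (days_held, days_past_deadline) pair per late review,
        where days_held counts days from receipt of the manuscript to return
        of the review (an assumption about how "days since receipt" is measured).
    """
    # One week of delay for each declined review request.
    hold = 7 * declined_requests
    # Literal reading: twice the sum of days held and days past the deadline.
    for days_held, days_past_deadline in late_reviews:
        hold += 2 * (days_held + days_past_deadline)
    return hold


# A reviewer who declined one request and returned one review
# 30 days after receipt, 5 days past the deadline:
print(hauser_fehr_hold_days(1, [(30, 5)]))  # 77
```

Under this literal reading the days past the deadline are effectively counted twice (once inside days_held), which makes lateness expensive; a journal adopting the idea would need to settle the exact formula.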

Tite and Schroter (2007[10]) surveyed peer reviewers from five biomedical journals to determine why reviewers decline to review and to obtain their opinions on reviewer incentives. They found that the most important factors in accepting to review a paper were the contribution of the submitted paper to their subject area [mean 3.67 (standard deviation (SD) 0.86)], the relevance of the topic to their own work [mean 3.46 (SD 0.99)], and the opportunity to learn something new [mean 3.41 (SD 0.96)]. Conflict with other workload [mean 4.06 (SD 1.31)] was a prime reason for declining to review. Many reviewers felt that financial incentives would not be effective when time constraints were prohibitive [mean 3.59 (SD 1.01)]. Non-financial incentives were noted as likely to encourage reviewers to accept requests to review, for example free subscription to journal content [mean 3.72 (SD 1.04)], annual acknowledgement on the journal's website [mean 3.64 (SD 0.90)], more feedback about the outcome of the submission [mean 3.62 (SD 0.88)] and about the quality of the review [mean 3.60 (SD 0.89)], and appointment of reviewers to the journal's editorial board [mean 3.57 (SD 0.99)].

To date, however, these laudable proposals have not led to any significant concrete action in the scientific community. For that, a two-pronged solution is necessary: first, supporting reviewers with their research, statistical and language needs; and second, putting in place a proper reviewer reward programme.

We propose such a proper reviewer reward programme in the form of a Reviewer Index, which will strengthen science by focussing on the reviewer, as well as the author.

The 4-step reviewer centric approach

The innovation we propose is adopting a four-step Reviewer Centric Approach, an incentive scheme that would enable and encourage reviewers to do their best.

Step 1: Compiling the preliminary database

For a given speciality, reviewers are selected after carefully going through their academic and research performance and portfolios (which can be requested from, or accessed through, their respective institutions). These candidates are compiled into a database, from which a list four times the number of required reviewers is chosen. This groundwork can be done by the Research and Development or Human Resource Development support team of a journal [if the journal is independent and financially sound] or, more appropriately, by scientific journal publishing houses. Given the rapid advances in information and telecommunication technology, software companies and even social media platforms might also be willing to create this central pool.

Step 2: Making the offer

The chosen candidates are first contacted by letter, e-mail, telephone, etc. Their preferred method of communication is confirmed, and an offer of, or request for, reviewership is officially sent. From those who respond positively, a list three times the number of required reviewers is chosen. This task has to be done by the same journal team, publishing house or social media site that was involved in Step 1. At this stage, candidates can be introduced to the concepts of the Reviewer Index and the Global Reviewer Index Directory, which are described in detail later in this article. The stepwise benefits of this system should be sufficient to convince them that they would be adequately rewarded for their efforts. Success stories of prior achievers may be highlighted so that candidates understand the concept well.
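The selection funnel in Steps 1 and 2 is simple arithmetic: the shortlist is four times the number of reviewers a journal needs, and the group chosen after the offer is three times that number. A minimal sketch, assuming only the multipliers given in the text; the function names and the example figure of 10 required reviewers are hypothetical.

```python
def funnel_sizes(required: int) -> dict[str, int]:
    """Target list sizes for Steps 1 and 2 of the reviewer-centric approach (hypothetical helper)."""
    if required <= 0:
        raise ValueError("required must be positive")
    return {
        "shortlisted_step1": 4 * required,  # Step 1: four times the number required
        "selected_step2": 3 * required,     # Step 2: three times, drawn from positive responders
    }


def enough_positive_responses(positive: int, required: int) -> bool:
    """Step 2 can fill its list only if at least 3x the required number responded positively."""
    return positive >= 3 * required


print(funnel_sizes(10))                   # {'shortlisted_step1': 40, 'selected_step2': 30}
print(enough_positive_responses(28, 10))  # False
```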

Step 3: ‘Ideal reviewer programme’

Their commitment is ensured by sending them a link to an ‘Ideal Reviewer Programme’ developed in-house by the publisher, and requesting them to go through it at their convenience and answer some relevant questions. The question arises: although this is a good idea, how do we ensure that prospective reviewers are motivated enough to take part in this programme? As described in Step 2, success stories of academic or research advancement in the scientific community by prior participants, or by individuals who followed a similar, if not the same, path, may be highlighted to motivate prospective reviewers. Initially, until the proposed system produces enough examples of its own, the achievements of persons of high scientific calibre in top academic positions across the globe, such as deans or heads of institutions, editors of reputed scientific journals and heads of corporate firms engaged in scientific activities, may be displayed [with their prior permission]. Active participation in this step would separate the wheat from the chaff and identify capable candidates with an academic inclination to become future reviewers.

Step 4: Offering tailor-made support

The committed reviewers are then offered tailor-made statistical and language support, as well as complete access to the references cited in the submitted article. The reviewer has to return the review within the stipulated time. Positive feedback from at least two out of three (or more) reviewers should enable the article to be accepted.
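The acceptance rule in Step 4 can be stated as a small decision function. This sketch assumes each returned review is recorded simply as positive or not, and reads "at least two out of three (or more)" as: at least three reviews returned and at least two of them positive. Both the encoding and that reading are our assumptions, and the editor's final judgement is not modelled.

```python
def step4_accepts(reviews: list[bool]) -> bool:
    """True if the article may be accepted under the Step 4 rule (hypothetical sketch).

    reviews: one entry per returned review; True means positive feedback.
    Assumption: at least three reviews must be in, and at least two must be positive.
    """
    return len(reviews) >= 3 and sum(reviews) >= 2


print(step4_accepts([True, True, False]))   # True
print(step4_accepts([True, False, False]))  # False
print(step4_accepts([True, True]))          # False: only two reviews returned
```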

Having laid the background, let us now come to the concrete proposal.

Reviewer index, reviewer index directory and global reviewer index directory: Rewards to the reviewer

The reviewer index

Introducing this concept to the scientific community is the need of the hour. Just as various indices are available for comparing authors, we feel that a Reviewer Index should be tagged to a reviewer throughout his or her career. Further details are given below under ‘The working’.

Reviewer index directory and global reviewer index directory

A directory of RIs, called the Reviewer Index Directory [RID], should be created for each publishing house/journal, and these directories should then be compiled into a single Global Reviewer Index Directory [GRID], in which a reviewer's RI values from different journals are added together. The help of the World Health Organization or other global scientific organisations may be sought in this process.
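One way to picture the RID and GRID is as per-journal tables of reviewer scores that are merged by summation. The sketch below assumes each journal's RID maps a reviewer identifier to that reviewer's RI at the journal, that a GRID total is the sum across journals, and that positions are ranked highest RI first; the identifiers and journal names are invented for illustration.

```python
from collections import Counter


def build_grid(rids: dict[str, dict[str, int]]) -> Counter:
    """Sum each reviewer's RI over all journal-level RIDs (hypothetical sketch)."""
    grid: Counter = Counter()
    for rid in rids.values():
        grid.update(rid)  # Counter.update adds counts rather than replacing them
    return grid


def rank_of(reviewer: str, table: dict[str, int]) -> int:
    """1-based position of a reviewer in a directory, highest RI first (ties share a rank)."""
    score = table[reviewer]
    return 1 + sum(1 for other in table.values() if other > score)


rids = {
    "Journal A": {"rev-001": 6, "rev-002": 3},
    "Journal B": {"rev-001": 2, "rev-003": 9},
}
grid = build_grid(rids)
print(dict(grid))  # {'rev-001': 8, 'rev-002': 3, 'rev-003': 9}
print(rank_of("rev-001", rids["Journal A"]), rank_of("rev-001", grid))  # 1 2
```

Summation rewards reviewers who serve many journals; a weighted total would be one alternative if journals differ in review workload.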

The working

How does RI work?

Each article that is accepted or rejected on the basis of a proper review by a reviewer adds 1 point to his RI. If a reviewer fails to submit his review within the given time after having agreed to do so, the first time he receives a notification; if this is repeated, one point is deducted from his RI. Whenever a point is added or deducted, an e-mail is sent to the reviewer stating his total RI, his RID position and his GRID position.
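The scoring rules above can be sketched as a small record kept for each reviewer. The class and method names are hypothetical, and the e-mail is represented only by a message string; the sketch assumes that "if it is repeated" means every lapse after the first costs one point, and that RID and GRID positions are supplied by the directory rather than computed here.

```python
from dataclasses import dataclass


@dataclass
class ReviewerRecord:
    """Per-reviewer Reviewer Index state (hypothetical sketch of the rules above)."""
    name: str
    ri: int = 0
    lapses: int = 0  # reviews agreed to but not submitted on time

    def record_review(self) -> None:
        # +1 for each article accepted or rejected on the basis of a proper review.
        self.ri += 1

    def record_lapse(self) -> str:
        # First lapse: notification only. Assumption: every later lapse costs 1 point.
        self.lapses += 1
        if self.lapses == 1:
            return "notification"
        self.ri -= 1
        return "deduction"

    def notice(self, rid_position: int, grid_position: int) -> str:
        # Text of the e-mail sent whenever a point is added or deducted.
        return (f"Dear {self.name}, your RI is now {self.ri}; "
                f"RID position {rid_position}, GRID position {grid_position}.")


r = ReviewerRecord("Dr X")
for _ in range(3):
    r.record_review()
print(r.record_lapse(), r.ri)  # notification 3
print(r.record_lapse(), r.ri)  # deduction 2
```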

All reviewers with an RI > 5 receive a personal letter from the publisher/journal on its official stationery, acknowledging them as High Quality Reviewers of Good Standing.

Reviewers with an RI > 5 - For every additional article reviewed after reaching an RI of 5, the reviewer's name could be mentioned in the published article just below the line listing the authors. Thereby, whenever the article is read, the name of the reviewer(s) is read too, and everybody who has contributed to the article gets due recognition.

Reviewers with an RI > 10 - The journal/publishing house could write an official letter to the head of the institute where the reviewer works, and to the scientific societies/organisations of which the reviewer is a member. This letter should recommend the reviewer's further progress on the institutional/organisational academic ladder. In addition, their names would continue to appear in the published articles.

Reviewers with an RI > 15 - Their names appear on the journal website and in the journal under a ‘Reviewers Hall of Fame’ section. They also get free access to the e-journal, if this has not been provided earlier.

Reviewers with an RI > 20 - They are sponsored to attend academic activities nationally as well as internationally every time they cross an RI of 20.

Reviewers with an RI between 15 and 20 are used specifically for articles that have been unduly delayed for one reason or another, and for exceptional articles of prime importance that need urgent publication. The eligible RI values may differ between journals/publishers, according to their own decisions.
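The tiers above can be summarised as a lookup from an RI value to the benefits it unlocks. This is a sketch under stated assumptions: the thresholds are the strict inequalities given in the text, benefits are cumulative, the rule about crossing an RI of 20 is read as one sponsorship per multiple of 20 exceeded, and the 15 to 20 band for delayed or urgent articles is taken as 15 < RI ≤ 20. The benefit labels are our paraphrases, and journals may set different thresholds.

```python
def ri_benefits(ri: int) -> list[str]:
    """Benefits unlocked at a given Reviewer Index (hypothetical, cumulative tiers)."""
    benefits = []
    if ri > 5:
        benefits.append("letter of good standing")
        benefits.append("name printed below authors on further reviewed articles")
    if ri > 10:
        benefits.append("recommendation letter to institution and societies")
    if ri > 15:
        benefits.append("Reviewers Hall of Fame and free e-journal access")
    if ri > 20:
        benefits.append("sponsored academic activity")
    if 15 < ri <= 20:
        benefits.append("eligible for delayed or urgent articles")
    return benefits


def sponsorships_earned(ri: int) -> int:
    """Assumption: one sponsorship for each multiple of 20 that the RI has exceeded."""
    return max(ri - 1, 0) // 20


print(len(ri_benefits(12)))     # 3
print(sponsorships_earned(45))  # 2
```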

The proposed Reviewer Index [RI] thus enables the grading of reviewers and entitles them to different benefits. It is an action plan whose time has come. Interested researchers and experts in the field can, of course, suggest suitable modifications.

Quality, feasibility, benefits and limitations

Quality

By adopting a Reviewer Centric Approach, a new breed of well-trained reviewers of high quality and sufficient quantity will be made available on a lasting basis. [The pool will remain available even when individual reviewers are no longer active.]

Feasibility

We feel that adopting a Reviewer Centric Approach is highly feasible if the four steps outlined earlier are followed. Moreover, it will ensure that everyone who contributes to a published article gets due recognition.

The journals/editors/publishers have to take the lead and approach individuals, institutions and global organisations for this. Even if not all join initially, a sincere effort by a single journal/editor/publisher will surely make a difference and will be noticed and commended, so that others will soon follow in its footsteps. Once the process is under way, it will ensure a satisfied and committed scientific community of reviewers, authors and editors.

Benefits

How will this concept benefit those involved in the process (editors, reviewers, authors and publishers) and, equally important, readers?

With prompt and efficient review, the author gets the best outcome, as the quality of his submission is enhanced. The RI duly rewards the efforts of the reviewers. The journal/editor gets a strong, scientifically bonded author-reviewer force that takes the journal to new heights and benefits scientific advancement itself. Finally, the ultimate beneficiary of all this is the reader, who gets the best product in a timely manner.

Limitations or drawbacks

This concept is new and hence, like most new concepts, not all of its ramifications have been worked out. For example, we may need a formula, as for the Impact Factor [IF], rather than the simple linear calculation of RI described here. This we leave to future development.

Moreover, it is likely to meet criticism, cynicism and initial inertia due to a status quo mindset. As it is implemented, its flaws can be found and fixed, and only time will tell whether the system is efficient. But first of all, it is necessary to accept that there is an urgent need for it, and prima facie the suggested action plan makes practical sense.

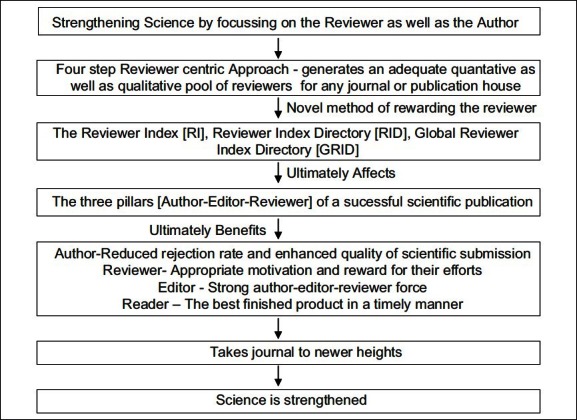

Concluding Remarks [See also Figure 1: Flowchart of Paper]

Figure 1.

Flowchart of the paper

In conclusion, the idea of RI, RID and GRID has wide scope, as it can be applied to all journals irrespective of their speciality. It offers the following benefits to all stakeholders involved in scientific publication and the advancement of science:

For a reviewer, implementation of this idea will enable him to respond to submissions promptly and efficiently, bring out the best in him, and leave him feeling amply rewarded for doing so.

For authors, this idea will ensure that their submission is properly and promptly reviewed, verified and appropriately modified to qualify as a high quality publication.

For editors, as the quality of input from authors (researchers) and reviewers improves and promptness is ensured, the standard of the journal rises markedly.

For readers, the benefit is the best finished product, delivered in a timely manner.

Take home message

This concept of rewarding the reviewer by introducing the Reviewer Index [RI], the Reviewer Index Directory [RID], and finally the Global Reviewer Index Directory [GRID] is suitable for all subject areas.

It will solve the current problems of a tardy peer review process and insufficient reviewer availability.

It will allow reviewers to be rewarded within ethically permissible limits.

It will ensure that a greater number of papers are reviewed, ultimately benefiting science itself.

Questions that this Paper Raises

Are reclusive reviewers responsible for the rejection of original articles and/or their delayed publication?

Should reviewers be rewarded appropriately for their time and efforts in reviewing a publication?

What measures can be adopted to reward the reviewers to encourage their commitment towards time-bound scientific reviewership?

Is the concept of Reviewer Index [RI], the Reviewer Index Directory [RID] and the Global Reviewer Index Directory [GRID] suitable for all subject areas of science?

Can the concepts of RI, RID and GRID really solve the perennial problem of a tardy, demotivated peer review process that plagues every journal at one time or another?

Can we work out a formula, like in the case of IF, for RI?

About the Author

Dr. Sushil Ghanshyam Kachewar is a Radiologist by profession. He has completed his MD and DNB in Radio-diagnosis and a fellowship in Vascular and Interventional Radiology, and is currently pursuing a PhD in the Medical faculty. He is a full-time Associate Professor in the Radio-diagnosis Department of Rural Medical College, Pravara Institute of Medical Sciences (DU), LONI, Maharashtra, India. He has successfully completed basic as well as advanced courses in Medical Education Technology. Apart from having written five dozen scientific articles and about half a dozen textbooks pertaining to his speciality, he has for the past couple of years been an active reviewer for 3 national and 6 international journals from publishing houses in Asia, Europe and the US. His current area of research is the non-invasive evaluation of the foetal middle cerebral artery by colour Doppler ultrasound imaging.

Dr. Smita Balwant Sankaye completed her M.B.B.S. at LTMMC Sion, Mumbai, India, and is currently pursuing post-graduation in Pathology at Rural Medical College, Pravara Institute of Medical Sciences (DU), LONI, Maharashtra, India. She has published about a dozen scientific articles in national as well as international journals and has co-authored a scientific textbook on cervical lymphadenopathy. She also has two full-length chapters to her credit as an independent author in a soon-to-be-published scientific book entitled Calcifications: Processes, Determinants and Health Impacts, from an international publishing house. She also serves as an honorary reviewer for national as well as international journals. Her current area of research is the role of fine needle aspiration cytology in evaluating various breast pathologies.

Acknowledgement

I gratefully acknowledge the help of the reviewers and the editor towards shaping of this paper.

Footnotes

Conflict of interest None declared.

Declaration This is our original unpublished work not under consideration for publication elsewhere.

CITATION: Kachewar SG, Sankaye SB. Reviewer Index: A New Proposal Of Rewarding The Reviewer. Mens Sana Monogr 2013;11:274-84

Peer reviewers for this paper: Elizabeth Wager PhD; Harvey Markovitch MD; Andrew Burd MD.

References

1. Altman DG. Poor-quality medical research: What can journals do? JAMA. 2002;287:2765–7. doi: 10.1001/jama.287.21.2765.

2. Baxt WG, Waeckerle JF, Berlin J, Callaham ML. Who reviews the reviewers? Feasibility of using fictitious manuscript to evaluate peer reviewer performance. Ann Emerg Med. 1998;32:310–7. doi: 10.1016/s0196-0644(98)70006-x.

3. Callaham ML, Tercier J. The relationship of previous training and experience of journal peer reviewers to subsequent review quality. PLoS Med. 2007;4:e40. doi: 10.1371/journal.pmed.0040040.

4. Callaham M, Green S, Houry D. Does mentoring new peer reviewers improve review quality? A randomized trial. BMC Med Educ. 2012;12:83. doi: 10.1186/1472-6920-12-83.

5. Frank JD, Caldamone A, Woodward M, Mouriquand P. This is the reason why we are exploring the possibility of rewarding courageous contributors and reviewers by giving them CME points for their hard work. J Pediatr Urol. 2009;5:1. doi: 10.1016/j.jpurol.2008.11.009.

6. Godlee F. Making reviewers visible. Openness, accountability and credit. JAMA. 2002;287:2762–5. doi: 10.1001/jama.287.21.2762.

7. Grainger DW. Peer review as professional responsibility: A quality control system only as good as the participants. Biomaterials. 2007;28:5199–203. doi: 10.1016/j.biomaterials.2007.07.004.

8. Hauser M, Fehr E. An incentive solution to the peer review problem. PLoS Biol. 2007;5:e107. doi: 10.1371/journal.pbio.0050107.

9. Siemens DR. A time for review. Can Urol Assoc J. 2012;6:11. doi: 10.5489/cuaj.12035.

10. Tite L, Schroter S. Why do peer reviewers decline to review? A survey. J Epidemiol Community Health. 2007;61:9–12. doi: 10.1136/jech.2006.049817.

11. Walther A, van den Bosch JJ. FOSE: A framework for open science evaluation. Front Comput Neurosci. 2012;6:32. doi: 10.3389/fncom.2012.00032.

12. Wicherts JM, Kievit RA, Bakker M, Borsboom D. Letting the daylight in: Reviewing the reviewers and other ways to maximize transparency in science. Front Comput Neurosci. 2012;6:20. doi: 10.3389/fncom.2012.00020.