Abstract

Motivated by the analysis of genetical genomic data, we consider the problem of estimating a high-dimensional sparse precision matrix while adjusting for a possibly large number of covariates that can affect the mean of the random vector. We develop a two-stage estimation procedure that first identifies the relevant covariates affecting the means by joint ℓ1 penalization. The estimated regression coefficients are then used to estimate the mean values in a multivariate sub-Gaussian model, and the sparse precision matrix is estimated through an ℓ1-penalized log-determinant Bregman divergence. Under the multivariate normal assumption, the precision matrix has the interpretation of a conditional Gaussian graphical model. We show that under some regularity conditions, the estimates of the regression coefficients are consistent in element-wise ℓ∞ norm, Frobenius norm and also spectral norm even when p ≫ n and q ≫ n. We also show that, with probability converging to one, the estimate of the precision matrix correctly specifies the zero pattern of the true precision matrix. We illustrate our theoretical results via simulations and demonstrate that the method can lead to improved estimates of the precision matrix. We apply the method to the analysis of a yeast genetical genomics data set.

Keywords: Estimation bounds, Graphical Model, Model selection consistency, Oracle property

1. Introduction

Estimation of high-dimensional covariance and precision matrices has attracted a great deal of interest in recent years [1, 2, 3, 4, 5, 6]. The problem is related to sparse Gaussian graphical modeling, where the precision matrix encodes the conditional independence relationships among a large set of variables. Applications of precision matrix estimation include the analysis of gene expression data, spectroscopic imaging, fMRI data and numerical weather forecasting. Under the assumption of sparsity and some regularity conditions on the underlying precision matrix, regularization methods have been proposed to estimate such precision matrices, and explicit rates of convergence of the resulting estimates have been obtained [1, 2, 7, 6]. Furthermore, [4] and [3] have studied the optimal convergence rates of the estimates in the Frobenius and operator norms, as well as in the matrix ℓ1 norm.

Almost all current methods for precision matrix estimation or Gaussian graphical model estimation assume that the random vector has zero or constant mean. However, in many real applications it is important to adjust for covariate effects on the mean of the random vector in order to obtain more precise and interpretable estimates of the precision matrix. One such example is the analysis of genetical genomic data, where high-dimensional genetic marker data and high-dimensional gene expression data are measured on the same set of samples in a segregating population. One important goal is to study the conditional independence structure among a set of genes at the expression level, which amounts to estimating the precision matrix when the data are assumed to be normally distributed. However, genetic markers are known to affect the mean expression levels of many genes [8]. It is therefore important to adjust for the marker effects on gene expression when the conditional independence structure is studied.

In this paper, we consider the problem of adjusting for high-dimensional covariates in precision matrix estimation by ℓ1 penalization. The problem can be formulated as a sparse multivariate regression with correlated errors, in which both the regression coefficient matrix and the covariance matrix are high-dimensional. Estimation of such multivariate regressions with correlated errors has been studied in the literature. [9] focused on estimating the regression coefficient matrix and presented several algorithms based on ℓ1 penalization, but provided no theoretical results. [10] developed an estimation procedure that iteratively estimates the regression coefficients and the precision matrix based on ℓ1 penalization, and provided asymptotic results on estimation bounds and consistency; however, the computation is quite intensive.

We propose a two-stage ℓ1 penalization procedure that first jointly estimates the multiple regression coefficients to obtain a sparse estimate of the regression coefficient matrix. We extend the results of [11] on sharp recovery and convergence rates for sparsity in single regression to the multiple regression setting. The estimated regression coefficients are then used to adjust for the means when estimating the precision matrix. Under a matrix version of the irrepresentable condition [12, 11] on the covariate matrix, together with a matrix version of the irrepresentable condition on the precision matrix, we obtain consistency results. We also obtain explicit convergence rates for the estimates of both the regression coefficient matrix and the precision matrix in element-wise ℓ∞ norm, and hence also in the spectral and Frobenius norms. Our theoretical analysis relies on the primal-dual witness construction [11, 5]: if the construction succeeds, it certifies that the solution of the restricted problem coincides with the solution of the original problem. When further conditions on the minimum absolute values of the non-zero entries of the true coefficient matrix and the true precision matrix are assumed, we also establish sign consistency of the estimates.

2. Model and notation

For a random vector Y ∈ ℝp and a deterministic covariate vector X ∈ ℝq, we assume that

$$Y = \Gamma X + \varepsilon, \qquad (1)$$

where Γ is the p × q regression coefficient matrix and ε is a mean-zero sub-Gaussian error vector with covariance matrix Σ = Θ⁻¹ and precision matrix Θ. Specifically, we assume that each component εj of ε = (ε1, …, εp)⊤ is sub-Gaussian with parameter σ. A zero-mean random variable Z is sub-Gaussian if there exists a constant σ ∈ (0, ∞) such that E[exp(tZ)] ≤ exp(σ²t²/2) for all t ∈ ℝ. By the Chernoff bound, this upper bound on the moment generating function implies the two-sided tail bound pr(|Z| > z) ≤ 2 exp(−z²/(2σ²)). If every element of the vector ε is sub-Gaussian, we call the vector ε sub-Gaussian.
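A quick numerical illustration of the sub-Gaussian definition may help; it is not part of the original analysis. A Rademacher variable Z, equal to ±1 with probability 1/2 each, satisfies E[exp(tZ)] = cosh(t) ≤ exp(t²/2), so it is sub-Gaussian with σ = 1, and a standard normal variable attains the bound exp(t²/2) with equality. The Python sketch below checks the first inequality on a grid of t values and compares empirical tail frequencies of simulated N(0, 1) draws with the tail bound 2 exp(−z²/(2σ²)). The grid, seed, sample size and helper names are arbitrary illustrative choices.

```python
import math
import random

def rademacher_mgf(t):
    # E[exp(tZ)] for Z = +1 or -1 with probability 1/2 each
    return math.cosh(t)

def subgaussian_mgf_bound(t, sigma):
    # Sub-Gaussian bound on the moment generating function: exp(sigma^2 t^2 / 2)
    return math.exp(sigma ** 2 * t ** 2 / 2)

def chernoff_tail_bound(z, sigma):
    # Two-sided tail bound implied by the MGF bound: 2 exp(-z^2 / (2 sigma^2))
    return 2 * math.exp(-z ** 2 / (2 * sigma ** 2))

def empirical_tail(samples, z):
    # Fraction of samples with |x| > z
    return sum(abs(x) > z for x in samples) / len(samples)

# Rademacher variable: cosh(t) <= exp(t^2 / 2), i.e. sub-Gaussian with sigma = 1
grid = [k / 10 for k in range(-50, 51)]
mgf_ok = all(rademacher_mgf(t) <= subgaussian_mgf_bound(t, 1.0) for t in grid)

# Standard normal draws (sub-Gaussian with sigma = 1): empirical tails versus the bound
rng = random.Random(0)
draws = [rng.gauss(0.0, 1.0) for _ in range(100_000)]
tail_checks = [(z, empirical_tail(draws, z), chernoff_tail_bound(z, 1.0)) for z in (1.0, 2.0, 3.0)]

print("MGF bound holds on grid:", mgf_ok)
for z, emp, bound in tail_checks:
    print(f"z = {z}: empirical {emp:.4f}, bound {bound:.4f}")
```

The bound is conservative: for z = 2 the empirical normal tail is close to 0.046, while 2 exp(−2) is about 0.27.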

Given n independent and identically distributed observations of (Y | X), we propose to estimate the regression coefficient matrix Γ and the precision matrix Θ in model (1) by a two-step ℓ1 penalization procedure. To simplify the problem, we assume that the Xi are fixed observations for i = 1, ⋯, n. Denote by X = (X1, ⋯, Xn)⊤ = (X(1), ⋯, X(q)) the n × q design matrix, by ε = (ε1, ⋯, εn)⊤ the n × p realized noise matrix, and let Y = (Y1, ⋯, Yn)⊤. We further denote $C_X = X^\top X / n$.

We first introduce notation related to vector and matrix norms. We write A ≻ 0 when the matrix A is positive definite, and A̅ = vec(A) for the vectorization of a matrix A obtained by stacking its columns. Define $\|A\|_1 = \sum_{i,j} |A_{ij}|$ as the element-wise ℓ1 norm of A and $\|A\|_{1,\mathrm{off}} = \sum_{i \neq j} |A_{ij}|$ as its off-diagonal ℓ1 norm. We denote by $\|A\|_\infty = \max_{i,j} |A_{ij}|$ and $|||A|||_\infty = \max_i \sum_j |A_{ij}|$ the element-wise ℓ∞ norm and the matrix ℓ∞ norm (maximum absolute row sum) of A, respectively. Furthermore, ‖A‖F denotes the Frobenius norm, the square root of the sum of the squares of the entries of A, and ‖A‖2 the spectral norm, the largest singular value of A. Finally, we use Γ*, Σ* and Θ* to denote the true matrix parameters in model (1), and Γ̂, Σ̂ and Θ̂ to denote their estimates.
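As a concrete reference for the notation above, the following Python/NumPy sketch computes each norm and the column-stacking vectorization vec(A) for a small matrix. The helper names `vec` and `matrix_norms` are hypothetical and introduced only for illustration.

```python
import numpy as np

def vec(A):
    # vec(A): stack the columns of A into one long vector
    return np.asarray(A, dtype=float).flatten(order="F")

def matrix_norms(A):
    """Norms from Section 2 for a matrix A."""
    A = np.asarray(A, dtype=float)
    abs_A = np.abs(A)
    off_diag = ~np.eye(A.shape[0], A.shape[1], dtype=bool)
    return {
        "l1": abs_A.sum(),                       # ||A||_1: element-wise l1 norm
        "l1_off": abs_A[off_diag].sum(),         # ||A||_{1,off}: off-diagonal l1 norm
        "max_abs": abs_A.max(),                  # ||A||_inf: element-wise l_inf norm
        "matrix_linf": abs_A.sum(axis=1).max(),  # |||A|||_inf: maximum absolute row sum
        "frobenius": np.linalg.norm(A, "fro"),   # ||A||_F
        "spectral": np.linalg.norm(A, 2),        # ||A||_2: largest singular value
    }

A = np.array([[1.0, -2.0], [3.0, 4.0]])
print(vec(A))           # columns stacked: [1, 3, -2, 4]
print(matrix_norms(A))
```

For this A, the element-wise ℓ∞ norm is 4 while the matrix ℓ∞ norm is 7, which illustrates why the two norms must be kept apart in the bounds that follow.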

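As is common for Gaussian graphical models, we associate the non-zero off-diagonal elements of the precision matrix Θ* with edges between pairs of variables, and define the support (edge set) of the precision matrix as

$$E(\Theta^*) = \{(i, j) : i \neq j,\ \Theta^*_{ij} \neq 0\},$$

and the maximum degree or row cardinality of Θ* as

$$\max_{1 \le i \le p} \big|\{ j : \Theta^*_{ij} \neq 0 \}\big|.$$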

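Similarly, for the regression coefficient matrix, let T(Γ*) be the support of Γ*, defined as

$$T(\Gamma^*) = \{(i, j) : \Gamma^*_{ij} \neq 0\}.$$

Also define $T(i) = \{ j : \Gamma^*_{ij} \neq 0 \}$, the support of the regression coefficients for the ith response variable. We define the maximum degree or row cardinality of Γ* as

$$\max_{1 \le i \le p} |T(i)|,$$

which is the maximum number of non-zeros in any row of Γ*. Denote the cardinality of T(Γ*) by kn = |T(Γ*)|. Finally, we define the extended sign matrix of Γ* as

$$[S_{\pm}(\Gamma^*)]_{ij} = \begin{cases} \operatorname{sign}(\Gamma^*_{ij}), & \Gamma^*_{ij} \neq 0, \\ 0, & \Gamma^*_{ij} = 0. \end{cases}$$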
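The support sets, row cardinalities and the extended sign matrix can be computed directly from a matrix. The sketch below uses hypothetical helper names, 0-based indices (unlike the 1-based indices in the text) and an optional threshold `tol` for treating tiny numerical values as zero; the threshold is an added convenience, not part of the definitions.

```python
import numpy as np

def edge_set(Theta, tol=0.0):
    """E(Theta): off-diagonal positions (i, j) with |Theta_ij| > tol (0-based)."""
    p = Theta.shape[0]
    nz = np.abs(Theta) > tol
    return {(i, j) for i in range(p) for j in range(p) if i != j and nz[i, j]}

def max_row_cardinality(M, tol=0.0):
    """Largest number of non-zero entries in a row (diagonal included)."""
    return int((np.abs(M) > tol).sum(axis=1).max())

def coefficient_support(Gamma, tol=0.0):
    """T(Gamma) as a set of (i, j) pairs, and T(i) as one set per row i."""
    nz = np.abs(Gamma) > tol
    T = {(int(i), int(j)) for i, j in zip(*np.nonzero(nz))}
    T_rows = [set(np.flatnonzero(row).tolist()) for row in nz]
    return T, T_rows

def extended_sign(Gamma, tol=0.0):
    """S_pm(Gamma): sign(Gamma_ij) on the support, 0 elsewhere."""
    return np.where(np.abs(Gamma) > tol, np.sign(Gamma), 0.0)

Theta = np.array([[1.0, 0.5, 0.0], [0.5, 1.0, 0.0], [0.0, 0.0, 1.0]])
Gamma = np.array([[0.0, 2.0, -1.0], [0.0, 0.0, 0.0]])
print(edge_set(Theta), max_row_cardinality(Theta))
print(coefficient_support(Gamma), max_row_cardinality(Gamma))
print(extended_sign(Gamma))
```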

3. Two-stage Penalized log-Determinant Bregman Divergence Estimation

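We propose a two-stage penalized procedure for estimating the regression coefficient matrix Γ and the precision matrix Θ: in the first stage, we estimate Γ by penalized joint least squares, and in the second stage, we estimate Θ by minimizing a penalized log-determinant Bregman divergence after plugging in the regression coefficient estimates. The algorithm can be summarized as follows:

- Step 1. Estimate Γ by minimizing a joint penalized residual sum of squares,
  $$\hat\Gamma = \arg\min_{\Gamma \in \mathbb{R}^{p \times q}} \Big\{ \frac{1}{2n} \sum_{i=1}^n \|Y_i - \Gamma X_i\|_2^2 + \rho_n \|\Gamma\|_1 \Big\}, \qquad (2)$$
  where ρn is a tuning parameter.
- Step 2. Compute the plug-in residual covariance matrix
  $$\hat\Sigma_{\hat\Gamma} = \frac{1}{n} \sum_{i=1}^n (Y_i - \hat\Gamma X_i)(Y_i - \hat\Gamma X_i)^\top. \qquad (3)$$
- Step 3. Solve the optimization problem
  $$\hat\Theta_{\hat\Gamma} = \arg\min_{\Theta \succ 0} \big\{ \operatorname{tr}(\Theta \hat\Sigma_{\hat\Gamma}) - \log\det\Theta + \lambda_n \|\Theta\|_{1,\mathrm{off}} \big\}, \qquad (4)$$
  where λn is a tuning parameter.
- Step 4. Output the solution (Γ̂, Θ̂Γ̂).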
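Because the squared-error loss in Step 1 is a sum over the p responses and the ℓ1 penalty is element-wise, Step 1 separates into p independent lasso problems that share the design matrix X, one for each row of Γ. The following NumPy sketch solves each of them by cyclic coordinate descent with soft-thresholding and then forms the plug-in covariance of Step 2. It assumes the Step 1 objective is (1/(2n)) ∑i ‖Yi − ΓXi‖²₂ + ρn‖Γ‖1 with no intercept; the function names, iteration cap and tolerance are hypothetical choices, and this is not the authors' implementation.

```python
import numpy as np

def soft_threshold(z, t):
    # S(z, t) = sign(z) * max(|z| - t, 0)
    return np.sign(z) * max(abs(z) - t, 0.0)

def lasso_cd(X, y, rho, max_iter=1000, tol=1e-10):
    """Minimise (1/(2n)) ||y - X b||^2 + rho ||b||_1 by cyclic coordinate descent."""
    n, q = X.shape
    b = np.zeros(q)
    col_sq = (X ** 2).sum(axis=0) / n        # x_k'x_k / n for each column
    r = np.array(y, dtype=float)             # residual y - X b, with b = 0 at the start
    for _ in range(max_iter):
        max_change = 0.0
        for k in range(q):
            if col_sq[k] == 0.0:
                continue                     # an all-zero column keeps b_k = 0
            old = b[k]
            z = X[:, k] @ r / n + col_sq[k] * old   # correlation with the partial residual
            new = soft_threshold(z, rho) / col_sq[k]
            if new != old:
                r -= X[:, k] * (new - old)   # keep the residual in sync with b
                b[k] = new
                max_change = max(max_change, abs(new - old))
        if max_change < tol:
            break
    return b

def two_stage_steps_1_2(X, Y, rho):
    """Step 1: one lasso per response (rows of Gamma); Step 2: residual covariance."""
    n = X.shape[0]
    Gamma_hat = np.vstack([lasso_cd(X, Y[:, j], rho) for j in range(Y.shape[1])])  # p x q
    R = Y - X @ Gamma_hat.T                  # row i is (Y_i - Gamma_hat X_i)'
    Sigma_hat = R.T @ R / n                  # p x p plug-in estimate of Sigma
    return Gamma_hat, Sigma_hat

rng = np.random.default_rng(1)
n, p, q = 200, 3, 5
X = rng.standard_normal((n, q))
Gamma_true = np.array([[1.0, 0.0, 0.0, -0.5, 0.0],
                       [0.0, 0.8, 0.0, 0.0, 0.0],
                       [0.0, 0.0, 0.0, 0.0, 0.3]])
Y = X @ Gamma_true.T + 0.5 * rng.standard_normal((n, p))
Gamma_hat, Sigma_hat = two_stage_steps_1_2(X, Y, rho=0.1)
print(np.round(Gamma_hat, 2))
```

Two sanity checks follow from the objective: with ρ = 0 and a full-rank design the sketch reproduces ordinary least squares, and a very large ρ sets Γ̂ = 0, so that Σ̂Γ̂ reduces to Y⊤Y/n.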

Note that in Step 1, we ignore the correlation among the Y variables when estimating the multiple regression coefficients. [9] showed that incorporating such dependence leads to increased efficiency in estimating Γ only when the errors are highly correlated. Theorem 1 in the next section shows that the maximum estimation error of Γ̂ is controlled at an explicit rate. The estimate Σ̂Γ̂ in Step 2 is a plug-in estimate based on the estimated residuals, which leads to our two-stage estimate Θ̂Γ̂ of the precision matrix in Step 3, formulated as an ℓ1-penalized log-determinant divergence problem [5]. Efficient coordinate descent algorithms can be applied to solve the optimization problems in Step 1 and Step 3 [13]. The tuning parameters can be chosen based on the BIC. The convergence rate of this estimate in element-wise ℓ∞ norm is established in Theorem 2, followed by rates in other norms.
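Step 3 is a graphical-lasso problem in which only the off-diagonal entries are penalized. The sketch below follows the block coordinate descent idea of the graphical lasso [13]: it cycles over columns, solves a lasso subproblem for each column by coordinate descent, and recovers Θ̂ from the working covariance W ≈ Θ̂⁻¹ at the end. Leaving the diagonal unpenalized keeps diag(W) equal to diag(Σ̂Γ̂). It assumes the Step 3 objective tr(ΘΣ̂Γ̂) − log det Θ + λn‖Θ‖1,off and p ≥ 2; the function name, sweep limits and tolerance are hypothetical, and the code omits the speed-ups of production implementations.

```python
import numpy as np

def soft_threshold(z, t):
    # S(z, t) = sign(z) * max(|z| - t, 0)
    return np.sign(z) * max(abs(z) - t, 0.0)

def glasso_offdiag(S, lam, max_sweeps=200, tol=1e-10):
    """Minimise tr(Theta S) - log det Theta + lam * sum_{i != j} |Theta_ij| (sketch, p >= 2)."""
    S = np.asarray(S, dtype=float)
    p = S.shape[0]
    W = S.copy()                     # working estimate of Theta^{-1}; diag(W) stays diag(S)
    B = np.zeros((p, p))             # column j stores the lasso coefficients for variable j
    for _ in range(max_sweeps):
        W_old = W.copy()
        for j in range(p):
            idx = np.arange(p) != j
            W11 = W[np.ix_(idx, idx)]
            s12 = S[idx, j]
            beta = B[idx, j]         # boolean indexing returns a copy (warm start)
            # Lasso subproblem: min_beta 0.5 beta' W11 beta - s12' beta + lam ||beta||_1
            for _ in range(1000):
                beta_old = beta.copy()
                for k in range(p - 1):
                    partial = s12[k] - W11[k] @ beta + W11[k, k] * beta[k]
                    beta[k] = soft_threshold(partial, lam) / W11[k, k]
                if np.max(np.abs(beta - beta_old)) < tol:
                    break
            B[idx, j] = beta
            W[idx, j] = W11 @ beta   # update the off-diagonal column and row of W
            W[j, idx] = W[idx, j]
        if np.max(np.abs(W - W_old)) < tol:
            break
    Theta = np.zeros((p, p))
    for j in range(p):
        idx = np.arange(p) != j
        beta = B[idx, j]
        Theta[j, j] = 1.0 / (W[j, j] - W[idx, j] @ beta)   # block-inverse formula
        Theta[idx, j] = -beta * Theta[j, j]
    return (Theta + Theta.T) / 2

S = np.array([[2.0, 0.5, 0.2, 0.0],
              [0.5, 1.5, 0.3, 0.1],
              [0.2, 0.3, 1.0, 0.2],
              [0.0, 0.1, 0.2, 1.2]])
print(np.round(glasso_offdiag(S, 0.1), 3))
```

As a sanity check, λ = 0 returns the inverse of a positive definite input, and a λ at least as large as every off-diagonal |(Σ̂Γ̂)ij| returns the diagonal matrix with entries 1/(Σ̂Γ̂)jj.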

4. Theoretical properties

4.1. Estimation bound and sign consistency of Γ̂

Let T ≡ T(Γ*), T(i) and CX be defined as above, and denote by Ip the p × p identity matrix. In addition, for any matrix A, let $A_{S,T}$ be the submatrix with rows indexed by the set S and columns indexed by the set T. When T indexes a Kronecker product such as CX ⊗ Ip, each pair (i, j) ∈ T is identified with the position of Γij in vec(Γ). We first make several assumptions on the covariate matrix X.

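Assumption 1. There exists a γ ∈ (0, 1] such that

$$|||(C_X \otimes I_p)_{T^c, T} \, [(C_X \otimes I_p)_{T, T}]^{-1}|||_\infty \le 1 - \gamma. \qquad (5)$$

This is the matrix extension of the irrepresentable condition used in the ℓ1-penalized regression setting [12]. Because CX ⊗ Ip is, up to a permutation of coordinates, block diagonal with p copies of CX, the assumption is equivalent to the lasso irrepresentable condition for each of the p components of the response, i.e., $|||(C_X)_{T(i)^c, T(i)} [(C_X)_{T(i), T(i)}]^{-1}|||_\infty \le 1 - \gamma$ for i = 1, ⋯, p. This can be written more compactly as

$$\max_{1 \le i \le p} |||(C_X)_{T(i)^c, T(i)} [(C_X)_{T(i), T(i)}]^{-1}|||_\infty \le 1 - \gamma.$$

The assumption implicitly requires each $(C_X)_{T(i),T(i)}$ to be invertible, so the number of non-zero elements in each row of Γ* cannot exceed n.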
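Assumption 1 can be checked numerically for a given design and support. The sketch below computes, for each row i of Γ, the matrix ℓ∞ norm of (CX)T(i)c,T(i)[(CX)T(i),T(i)]⁻¹ and returns the maximum over rows; Assumption 1 then holds with γ = 1 − value whenever the value is below 1. It assumes CX = X⊤X/n, uses 0-based indices and hypothetical function names, and returns 0 when a support is empty or covers all covariates, where the condition is vacuous.

```python
import numpy as np

def irrepresentable_value(C, support):
    """|||C_{T^c,T} (C_{T,T})^{-1}|||_inf for one response with support T (0-based)."""
    q = C.shape[0]
    T = sorted(support)
    Tc = [k for k in range(q) if k not in support]
    if not T or not Tc:
        return 0.0                       # the condition is vacuous in these cases
    # C_{T^c,T} C_{T,T}^{-1} computed by a linear solve; C_{T,T} is symmetric
    M = np.linalg.solve(C[np.ix_(T, T)], C[np.ix_(Tc, T)].T).T
    return float(np.abs(M).sum(axis=1).max())

def matrix_irrepresentable(X, Gamma, tol=0.0):
    """Maximum over rows i of the per-response value; Assumption 1 asks for <= 1 - gamma."""
    n = X.shape[0]
    C_X = X.T @ X / n
    return max(
        irrepresentable_value(C_X, set(np.flatnonzero(np.abs(row) > tol).tolist()))
        for row in Gamma
    )

C = np.array([[1.0, 0.3, 0.0], [0.3, 1.0, 0.0], [0.0, 0.0, 1.0]])
print(irrepresentable_value(C, {0}))     # covariate 1 is correlated 0.3 with covariate 0
```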

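Assumption 2. There exists a constant Cmax such that the largest eigenvalue satisfies

$$\lambda_{\max}\Big( [(C_X \otimes I_p)_{T,T}]^{-1} (C_X \otimes \Sigma)_{T,T} [(C_X \otimes I_p)_{T,T}]^{-1} \Big) \le C_{\max}. \qquad (6)$$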

This condition assumes an upper bound on the operator norm of the matrix [(CX ⊗ Ip)T,T]−1(CX ⊗ Σ)T,T[(CX ⊗ Ip)T,T]−1, which is a combination of the assumptions (26b) and (26c) in [11]. It is easy to check that this assumption holds if

Since CX ⊗ Σ is no longer block diagonal (even after permuting coordinates), we cannot obtain an equivalent assumption for each of the p components of the response and then take the supremum over all p components.
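The Kronecker products in Assumption 2 are indexed by positions in vec(Γ). With column stacking, vec(ΓXi) = (Xi⊤ ⊗ Ip) vec(Γ), so Γij sits at position i + (j − 1)p, and, assuming CX = X⊤X/n and the (1/(2n)) scaling of the Step 1 loss, CX ⊗ Ip is the Hessian of that loss. The sketch below, with hypothetical names and 0-based indices (position i + jp), builds the submatrices and returns the largest eigenvalue appearing in Assumption 2. It forms the full pq × pq Kronecker products, so it is meant only for small illustrative examples.

```python
import numpy as np

def vec_index(i, j, p):
    """0-based position of Gamma_ij in vec(Gamma) (columns of the p x q matrix stacked)."""
    return i + j * p

def assumption2_value(C_X, Sigma, support):
    """Largest eigenvalue in Assumption 2 for a support given as (i, j) pairs of Gamma."""
    p = Sigma.shape[0]
    T = sorted(vec_index(i, j, p) for i, j in support)
    A = np.kron(C_X, np.eye(p))[np.ix_(T, T)]    # (C_X kron I_p)_{T,T}
    B = np.kron(C_X, Sigma)[np.ix_(T, T)]        # (C_X kron Sigma)_{T,T}
    A_inv = np.linalg.inv(A)
    M = A_inv @ B @ A_inv
    return float(np.linalg.eigvalsh((M + M.T) / 2).max())

print(assumption2_value(np.eye(2), np.diag([2.0, 3.0]), {(0, 0), (1, 1)}))
```

With an orthonormal design (CX = I) the quantity reduces to the largest error variance among the responses that have a non-zero coefficient, which is what the example shows.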

Assumption 3. For all n, the largest eigenvalue of CX has a common upper bound Λmax, that is,

$$\lambda_{\max}(C_X) \le \Lambda_{\max}.$$

This is also a commonly used assumption in sparse high-dimensional regression analysis ([12] and [11]).

Theorem 1. Suppose that the design matrix X satisfies Assumptions 1 and 2 and that X is column-standardized such that

| (7) |

If the sequence of regularization parameters {ρn} satisfies

| (8) |

then for some constant C1 > 0, the following properties hold with probability greater than ,

- The minimization of Step 1 of the algorithm has a unique solution Γ̂ ∈ ℝp×q with its support contained within the true support, i.e. T(Γ̂) ⊆ T(Γ*). In addition, the element-wise ℓ∞ norm and the Frobenius norm have the following bounds

If the minimum absolute value of the non-zero entries of Γ* is bounded below as |Γ*|min > ρnMn(X, T, Σ*), then Γ̂ has the correct signed support, i.e., S±(Γ̂) = S±(Γ*).

Theorem 1 extends the single-regression results of [11] to the setting where the coefficients of multiple regressions are estimated simultaneously. A lower bound on the minimum absolute value of the non-zero elements of Γ* is required for sign consistency. Such an estimation bound on the regression coefficient matrix is needed to establish the theoretical properties of Θ̂Γ̂.

4.2. Estimation bound and sign consistency of Θ̂

We next present results on the estimate of the precision matrix Θ̂ = Θ̂Γ̂. Define Ω* = Θ*⁻¹ ⊗ Θ*⁻¹, the Hessian of the log-determinant objective function with respect to Θ, evaluated at Θ* [5]. Since , it can be viewed as an edge-based counterpart to the usual covariance matrix Σ* [5]. Let S(Θ*) = E(Θ*) ∪ {(1, 1), ⋯, (p, p)} be the augmented set that includes the diagonal. With slight abuse of notation, we also use S and Sc to denote S(Θ*) and its complement. We further define

as the matrix ℓ∞ norm of the true covariance matrix Σ*, and

Before presenting the theorem on Θ̂Γ̂, we need one assumption on the Hessian matrix Ω*.

Assumption 4. There exists an α ∈ (0, 1] such that

$$|||\Omega^*_{S^c S} (\Omega^*_{SS})^{-1}|||_\infty \le 1 - \alpha.$$

This assumption is the mutual incoherence or irrepresentable condition introduced in [5], which controls the influence of the non-edge terms on the edge-based terms.
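For small p, Assumption 4 can be evaluated directly from Θ*. The sketch below forms Ω* = Σ* ⊗ Σ* with Σ* = Θ*⁻¹, indexes the pair (j, k) at position jp + k (0-based; since Θ* is symmetric, row- and column-major ordering give the same set S), and returns the matrix ℓ∞ norm of Ω*ScS(Ω*SS)⁻¹; Assumption 4 then holds with α = 1 − value whenever the value is below 1. The function name and threshold `tol` are hypothetical, and building the p² × p² matrix restricts this to toy examples.

```python
import numpy as np

def incoherence_value(Theta, tol=0.0):
    """|||Omega_{S^c S} (Omega_{SS})^{-1}|||_inf with Omega = Theta^{-1} kron Theta^{-1}."""
    p = Theta.shape[0]
    Sigma = np.linalg.inv(Theta)
    Omega = np.kron(Sigma, Sigma)            # entry ((j,k),(l,m)) = Sigma_jl * Sigma_km
    nz = (np.abs(Theta) > tol) | np.eye(p, dtype=bool)   # augmented support S includes the diagonal
    flat = nz.flatten()                      # pair (j, k) sits at position j * p + k
    S_idx = np.flatnonzero(flat)
    Sc_idx = np.flatnonzero(~flat)
    if Sc_idx.size == 0:
        return 0.0                           # complete graph: no non-edges to control
    M = Omega[np.ix_(Sc_idx, S_idx)] @ np.linalg.inv(Omega[np.ix_(S_idx, S_idx)])
    return float(np.abs(M).sum(axis=1).max())

chain = np.array([[1.0, 0.3, 0.0], [0.3, 1.0, 0.3], [0.0, 0.3, 1.0]])
print(incoherence_value(chain))
```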

Define for some τ > 2, where . We then have the following main theorem on the estimation error bound and edge selection.

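Theorem 2. Under the model of Theorem 1 and additional Assumptions 3 and 4, assume that ε is a sub-Gaussian random vector with parameter σ². Let Γ̂ be the estimate of Γ from Step 1 of the two-stage procedure and Θ̂Γ̂ be the unique solution in Step 3 of the procedure, that is,

$$\hat\Theta_{\hat\Gamma} = \arg\min_{\Theta \succ 0} \big\{ \operatorname{tr}(\Theta \hat\Sigma_{\hat\Gamma}) - \log\det\Theta + \lambda_n \|\Theta\|_{1,\mathrm{off}} \big\},$$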
where Σ̂Γ̂ is defined in (3). Suppose that d2 in Γ* satisfies the following upper bound

where , and tuning parameter ρn satisfies

where C2 is some constant. Choosing the regularization parameter

If the sample size exceeds the lower bound

| (9) |

where , then with probability greater than

where

we have:

- The estimate Θ̂Γ̂ satisfies the element-wise ℓ∞-bound:

- The edge set E(Θ̂) is a subset of the true edge set E(Θ*) and includes all edges (i, j) with

The proof of this theorem is based on the primal-dual witness method used in [5]. The key difference between our approach and that of [5] lies in controlling the sampling noise. Define U ≔ Σ̂Γ̂ − Σ*, where . Our proof mainly concerns the control of ‖U‖∞. As part of the proof, Lemma 2 in the Appendix gives a new result on controlling the sampling noise in our setting, taking into account that Γ has to be estimated. By contrast, [5] considered the model with zero mean and only had to consider the noise control for . Theorem 2 indicates that we obtain the same bound on the element-wise ℓ∞ norm of the discrepancy between the estimate and the truth as in [5], but the probability with which the bound holds converges to one more slowly, which is the price we pay for estimating Γ.

Based on the element-wise ℓ∞ bound, we can derive bounds in the Frobenius and spectral norms. Denote by sn = |E(Θ*)| the total number of off-diagonal non-zeros in Θ*. We have the following corollary:

Corollary 1 (Rates in Frobenius and spectral norm). Under the same assumptions as Theorem 2, with probability at least , the estimator Θ̂Γ̂ satisfies

where .
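The step from the element-wise ℓ∞ bound to Frobenius and spectral bounds rests on elementary inequalities. When E(Θ̂) ⊆ E(Θ*), as in Theorem 2, the error Δ has at most sn + p non-zero entries and each row has at most as many non-zeros as the maximum degree of Θ*, so ‖Δ‖F ≤ √(sn + p) ‖Δ‖∞ and, for symmetric Δ, ‖Δ‖2 ≤ ‖|Δ|‖∞ ≤ (maximum row cardinality) × ‖Δ‖∞. The NumPy sketch below checks these inequalities for a random symmetric sparse matrix; it illustrates the argument and does not reproduce the constants of Corollary 1. The helper name is hypothetical.

```python
import numpy as np

def norm_inequalities(Delta, tol=0.0):
    """Check ||D||_F <= sqrt(nnz) ||D||_inf and ||D||_2 <= |||D|||_inf <= deg ||D||_inf."""
    nz = np.abs(Delta) > tol
    linf = np.abs(Delta).max()
    nnz = int(nz.sum())                      # plays the role of s_n + p
    deg = int(nz.sum(axis=1).max())          # maximum row cardinality
    frob = np.linalg.norm(Delta, "fro")
    spec = np.linalg.norm(Delta, 2)
    mat_linf = np.abs(Delta).sum(axis=1).max()
    eps = 1e-12                              # floating-point slack
    return (frob <= np.sqrt(nnz) * linf + eps,
            spec <= mat_linf + eps,          # valid because Delta is symmetric
            mat_linf <= deg * linf + eps)

rng = np.random.default_rng(0)
p = 30
mask = np.triu(rng.random((p, p)) < 0.1, k=1)
mask = mask | mask.T | np.eye(p, dtype=bool)       # symmetric sparsity pattern with diagonal
Delta = np.where(mask, rng.uniform(-1.0, 1.0, (p, p)), 0.0)
Delta = (Delta + Delta.T) / 2                       # symmetric, still supported on the mask
print(norm_inequalities(Delta))
```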

Our final theoretical result is on sign consistency, which requires a lower bound on the minimum absolute value of the non-zero entries of Θ*. Define and the sign recovery event . We have the following theorem on sign consistency:

Theorem 3. Under the same conditions as in Theorem 2, suppose that the sample size satisfies the lower bound

then the estimator is model selection sign consistent with high probability,

5. Monte Carlo simulations

5.1. Models for comparisons and generation of data

We present results from Monte Carlo simulations to examine the performance of the proposed two-stage estimates. We simulated data to mimic genetical genomic data, with both binary genetic marker data and continuous gene expression data. We compare our estimate with several other procedures in terms of estimating the precision matrix and selecting neighbors: the standard Gaussian graphical model implemented as GLASSO [13] using only the gene expression data; a procedure that iteratively updates the regression coefficient matrix and the precision matrix [9, 10]; and the neighbor-based graphical model selection procedure of [14]. In the last procedure, each gene is regressed on the other genes and on the genetic markers using ℓ1-regularized regression, and a link between genes i and j is declared if gene i is selected for gene j and gene j is also selected for gene i. Note that in our setting, the neighbor-based procedure does not provide an estimate of the precision matrix. For each simulated data set, we chose the tuning parameters ρ and λ based on the BIC.

To compare the performance of different estimators of the precision matrix, we use the quadratic loss function LOSS(Θ, Θ̂) = tr[(Θ⁻¹Θ̂ − I)²], where Θ̂ is an estimate of the true precision matrix Θ. We also compare ‖Δ‖∞, ‖|Δ|‖∞, ‖Δ‖2 and ‖Δ‖F, where Δ = Θ − Θ̂ is the difference between the true precision matrix and its estimate. To compare how well different methods recover the true graphical structure, we consider the specificity (SPE), sensitivity (SEN) and Matthews correlation coefficient (MCC), defined as

$$\mathrm{SPE} = \frac{TN}{TN + FP}, \qquad \mathrm{SEN} = \frac{TP}{TP + FN}$$

and

$$\mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}},$$

where TP, TN, FP and FN are the numbers of true positives, true negatives, false positives and false negatives in identifying the non-zero elements of the precision matrix. Here a non-zero entry of the sparse precision matrix is treated as a “positive.”
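The evaluation criteria can be computed as follows. The sketch counts each unordered off-diagonal pair (i, j) with i < j once and sets MCC to 0 when its denominator vanishes; both conventions are assumptions, since the text does not state how pairs were counted. The threshold `tol` for declaring an estimated entry non-zero and the function names are likewise hypothetical.

```python
import math
import numpy as np

def quadratic_loss(Theta, Theta_hat):
    """LOSS(Theta, Theta_hat) = tr[(Theta^{-1} Theta_hat - I)^2]."""
    M = np.linalg.solve(Theta, Theta_hat) - np.eye(Theta.shape[0])
    return float(np.trace(M @ M))

def support_scores(Theta, Theta_hat, tol=1e-8):
    """SPE, SEN and MCC for recovering the off-diagonal non-zero pattern."""
    p = Theta.shape[0]
    iu = np.triu_indices(p, k=1)              # each unordered pair counted once
    truth = np.abs(Theta[iu]) > tol
    est = np.abs(Theta_hat[iu]) > tol
    tp = int(np.sum(truth & est))
    tn = int(np.sum(~truth & ~est))
    fp = int(np.sum(~truth & est))
    fn = int(np.sum(truth & ~est))
    spe = tn / (tn + fp) if tn + fp else float("nan")
    sen = tp / (tp + fn) if tp + fn else float("nan")
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn, "SPE": spe, "SEN": sen, "MCC": mcc}

Theta = np.array([[1.0, 0.3, 0.0], [0.3, 1.0, 0.2], [0.0, 0.2, 1.0]])
Theta_hat = np.array([[1.0, 0.25, 0.1], [0.25, 1.0, 0.0], [0.1, 0.0, 1.0]])
print(support_scores(Theta, Theta_hat))
print(quadratic_loss(Theta, Theta_hat))
```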

In the following simulations, we consider a general sparse precision matrix, where a link (i.e., a non-zero element of the precision matrix, indicated by δij) between variables i and j is randomly generated with success probability proportional to 1/p. Similar to the simulation setups of Li and Gui [15], Fan et al. [16] and Peng et al. [17], for each link the corresponding entry of the precision matrix is generated uniformly over [−1, −0.5] ∪ [0.5, 1]. Then, in each row, every off-diagonal entry is divided by 1.5 times the sum of the absolute values of the off-diagonal entries in that row. Finally, the matrix is symmetrized and the diagonal entries are fixed at 1. To generate the p × q coefficient matrix Γ = (γij), we first generated a p × q sparse indicator matrix (δij), where δij = 1 with probability proportional to 1/q. If δij = 1, we generated γij from Unif([υm, 1] ∪ [−1, −υm]), where υm is the minimum absolute non-zero value of the generated Θ.

After Γ and Θ were generated, we generated the marker genotypes X = (X1, ⋯, Xq) by assuming Xi ~ Bernoulli(1/2), for i = 1, ⋯, q. Finally, given X, we generated Y from the multivariate normal distribution Y | X ~ 𝒩(ΓX, Σ). For each model and each simulation, we generated a data set of n independent and identically distributed random vectors (X, Y). The simulations were repeated 50 times.
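The data-generating recipe above can be sketched in NumPy as follows. Several details are assumptions rather than statements from the text: edges are drawn independently for each pair with probability `edge_prob` (for example 2/p as in Table 1), symmetrization is done by averaging Θ and Θ⊤, and positive definiteness is checked explicitly before sampling, because the row-scaling recipe does not by itself guarantee it for every random graph. All function names are hypothetical.

```python
import numpy as np

def simulate_theta(p, edge_prob, rng):
    """Sparse precision matrix following the recipe described in the text (sketch)."""
    links = np.triu(rng.random((p, p)) < edge_prob, k=1)
    vals = rng.choice([-1.0, 1.0], size=(p, p)) * rng.uniform(0.5, 1.0, size=(p, p))
    upper = np.where(links, vals, 0.0)
    A = upper + upper.T                       # symmetric links, zero diagonal
    row_sums = np.abs(A).sum(axis=1)
    scale = np.where(row_sums > 0, 1.5 * row_sums, 1.0)
    A = A / scale[:, None]                    # divide each row by 1.5 x its absolute off-diagonal sum
    Theta = (A + A.T) / 2                     # symmetrize (averaging is an assumption)
    np.fill_diagonal(Theta, 1.0)
    return Theta

def min_abs_offdiag(Theta):
    """v_m: smallest absolute non-zero off-diagonal entry of Theta."""
    off = Theta[~np.eye(Theta.shape[0], dtype=bool)]
    return float(np.abs(off[off != 0]).min())

def simulate_gamma(p, q, coef_prob, v_min, rng):
    """Sparse p x q coefficients drawn from Unif([v_min, 1] U [-1, -v_min])."""
    delta = rng.random((p, q)) < coef_prob
    vals = rng.choice([-1.0, 1.0], size=(p, q)) * rng.uniform(v_min, 1.0, size=(p, q))
    return np.where(delta, vals, 0.0)

def simulate_data(n, Theta, Gamma, rng):
    """Binary markers X_ij ~ Bernoulli(1/2) and Y_i | X_i ~ N(Gamma X_i, Theta^{-1})."""
    if np.linalg.eigvalsh(Theta).min() <= 0:
        raise ValueError("Theta is not positive definite; regenerate it")
    X = rng.binomial(1, 0.5, size=(n, Gamma.shape[1])).astype(float)
    E = rng.multivariate_normal(np.zeros(Theta.shape[0]), np.linalg.inv(Theta), size=n)
    return X, X @ Gamma.T + E

rng = np.random.default_rng(3)
p, q = 50, 40
Theta = simulate_theta(p, 2 / p, rng)
v_min = min_abs_offdiag(Theta)
Gamma = simulate_gamma(p, q, 3 / q, v_min, rng)
print(int((np.abs(np.triu(Theta, k=1)) > 0).sum()), "edges;", int((Gamma != 0).sum()), "non-zero coefficients")
```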

5.2. Simulation results

We first consider the setting where the sample size n is larger than both the number of genes p and the number of genetic markers q. We simulated data from three models with different values of p and q (Models 1–3 in Table 1) and present the results in Table 2. The two-stage procedure performs very similarly to the iterative procedure, and both provide much improved estimates of the precision matrix over the Gaussian graphical model for all three models and in all measures. This is expected, since the Gaussian graphical model assumes a constant mean for the multivariate vector and is therefore misspecified. In addition, the two-stage procedure resulted in sensitivities, specificities and MCC that are equal to or higher than those of the Gaussian graphical model and the neighbor-based method. We observed that the Gaussian graphical model often resulted in much denser graphs than the true graphs. This is partly because some of the links identified by the Gaussian graphical model can be explained by shared common genetic variants: by assuming constant means, the Gaussian graphical model tends to compensate for the misspecification by identifying many non-zero elements in the precision matrix. The results indicate that by adjusting for the effects of the covariates on the means, we can reduce both false positives and false negatives in identifying the non-zero elements of the precision matrix. The neighbor-based selection procedure using multiple lasso regressions accounts for the genetic effects in modeling the relationships among the genes. It performed better than the Gaussian graphical model in graph structure selection, but worse than the two-stage procedure. This procedure, however, does not provide an estimate of the precision matrix.

Table 1.

Six models considered in simulations, where p is the number of the variables, q is the number of covariates and n is the sample size. pr(Θij ≠ 0) and pr(Γij ≠ 0) specify the sparsity of the model.

| Model | (p, q, n) | pr(Θij ≠ 0) | pr(Γij ≠ 0) |
|---|---|---|---|
| 1 | (100, 100, 250) | 2/p | 3/q |
| 2 | (50, 50, 250) | 2/p | 4/q |
| 3 | (25, 10, 250) | 2/p | 3.5/q |
| 4 | (1000, 200, 250) | 1.5/p | 20/q |
| 5 | (800, 200, 250) | 1.5/p | 25/q |
| 6 | (400, 200, 250) | 2.5/p | 20/q |

Table 2.

Comparison of the performances on estimating the precision matrix Θ by the two-stage procedure, the iterative selection procedure of [10], a neighbor-based selection procedure [14] and the Gaussian graphical model using glasso [13], where Δ = Θ − Θ̂.

| Method | AUC | SPE | SEN | MCC | ‖Δ‖∞ | ‖|Δ|‖∞ | ‖Δ‖2 | ‖Δ‖F |
|---|---|---|---|---|---|---|---|---|
| Model 1: (p, q, n) = (100, 100, 250) | | | | | | | | |
| Two-stage | 0.91 | 0.99 | 0.49 | 0.56 | 0.32 | 1.18 | 0.68 | 3.24 |
| Iterative | 0.91 | 0.99 | 0.48 | 0.56 | 0.33 | 1.17 | 0.67 | 3.18 |
| glasso | 0.81 | 0.97 | 0.24 | 0.21 | 0.69 | 1.89 | 1.12 | 5.19 |
| Neighbor | 0.86 | 0.99 | 0.38 | 0.48 | | | | |
| Model 2: (p, q, n) = (50, 50, 250) | | | | | | | | |
| Two-stage | 0.91 | 0.97 | 0.69 | 0.65 | 0.35 | 1.31 | 0.73 | 2.43 |
| Iterative | 0.92 | 0.98 | 0.69 | 0.66 | 0.37 | 1.30 | 0.72 | 2.36 |
| glasso | 0.74 | 0.87 | 0.37 | 0.18 | 0.75 | 2.12 | 1.20 | 4.57 |
| Neighbor | 0.88 | 0.95 | 0.60 | 0.48 | | | | |
| Model 3: (p, q, n) = (25, 10, 250) | | | | | | | | |
| Two-stage | 0.89 | 0.91 | 0.76 | 0.62 | 0.23 | 0.90 | 0.51 | 1.20 |
| Iterative | 0.89 | 0.91 | 0.76 | 0.62 | 0.24 | 0.90 | 0.52 | 1.21 |
| glasso | 0.57 | 0.43 | 0.73 | 0.12 | 0.65 | 1.99 | 1.12 | 2.77 |
| Neighbor | 0.85 | 0.84 | 0.68 | 0.44 | | | | |
| Model 4: (p, q, n) = (1000, 200, 250) | | | | | | | | |
| Two-stage | 0.93 | 1 | 0.32 | 0.51 | 0.46 | 1.77 | 0.91 | 13.42 |
| Iterative | 0.90 | 1 | 0.31 | 0.47 | 0.59 | 1.81 | 0.97 | 13.48 |
| glasso | 0.88 | 0.98 | 0.08 | 0.02 | 0.71 | 2.86 | 1.31 | 19.82 |
| Neighbor | 0.87 | 1 | 0.12 | 0.16 | | | | |
| Model 5: (p, q, n) = (800, 200, 250) | | | | | | | | |
| Two-stage | 0.93 | 1 | 0.21 | 0.45 | 0.48 | 1.80 | 0.97 | 12.58 |
| Iterative | 0.89 | 1 | 0.21 | 0.34 | 0.75 | 2.30 | 1.20 | 12.82 |
| glasso | 0.87 | 0.97 | 0.07 | 0.02 | 0.76 | 2.97 | 1.40 | 18.39 |
| Neighbor | 0.87 | 0.96 | 0.61 | 0.19 | | | | |
| Model 6: (p, q, n) = (400, 200, 250) | | | | | | | | |
| Two-stage | 0.79 | 1 | 0.05 | 0.20 | 0.39 | 1.56 | 0.79 | 7.13 |
| Iterative | 0.75 | 1 | 0.05 | 0.21 | 0.44 | 1.55 | 0.77 | 6.86 |
| glasso | 0.71 | 0.95 | 0.03 | −0.01 | 0.69 | 2.72 | 1.22 | 11.01 |
| Neighbor | 0.73 | 0.99 | 0.08 | 0.10 | | | | |

We next consider the setting p > n and simulated data from three models with different values of n, p and q (Models 4–6 in Table 1). Note that for all three models the graph structure is very sparse because of the large number of genes considered. The performances over 50 replications, with the tuning parameters chosen by the BIC, are reported in Table 2. For all three models, the proposed two-stage procedure gave much improved estimates of the precision matrix compared with the Gaussian graphical model, as reflected by smaller norms of the difference between the true and estimated precision matrices. In terms of graph structure selection, when p is larger than the sample size the sensitivities of all four procedures are much lower than in the settings with larger sample sizes, indicating that recovering the graph structure in a high-dimensional setting is statistically difficult. However, the specificities are generally very high, consistent with our theoretical result that the estimated edge set is contained in the true edge set with high probability.

Finally, Table 3 compares the estimates of Γ from three different procedures. Overall, the two-stage and iterative procedures give broadly similar estimates of Γ, and both generally perform better than the neighbor-based procedure.

Table 3.

Comparison of the performances on estimating the regression coefficient matrix Γ from the two-stage procedure, an iterative selection procedure of [10] and a neighbor-based procedure [14], where Δ = Γ − Γ̂.

| Algorithm | AUC | SPE | SEN | MCC | ‖Δ‖∞ | ‖|Δ|‖∞ | ‖Δ‖F |
|---|---|---|---|---|---|---|---|
| Model 1: (p, q, n) = (100, 100, 250) | | | | | | | |
| Two-stage | 0.98 | 0.99 | 0.87 | 0.77 | 0.38 | 1.03 | 2.39 |
| Iterative | 0.98 | 0.98 | 0.90 | 0.64 | 0.36 | 1.01 | 2.16 |
| Neighbor | 0.97 | 0.99 | 0.87 | 0.78 | 0.38 | 1.06 | 2.39 |
| Model 2: (p, q, n) = (50, 50, 250) | | | | | | | |
| Two-stage | 0.98 | 0.99 | 0.89 | 0.84 | 0.37 | 1.65 | 2.48 |
| Iterative | 0.98 | 0.98 | 0.90 | 0.81 | 0.36 | 1.70 | 2.32 |
| Neighbor | 0.97 | 0.96 | 0.91 | 0.75 | 0.35 | 1.48 | 2.21 |
| Model 3: (p, q, n) = (25, 10, 250) | | | | | | | |
| Two-stage | 0.98 | 0.75 | 0.98 | 0.68 | 0.24 | 0.74 | 0.97 |
| Iterative | 0.97 | 0.81 | 0.98 | 0.74 | 0.25 | 0.75 | 1 |
| Neighbor | 0.98 | 0.90 | 0.95 | 0.81 | 0.31 | 1.02 | 1.30 |
| Model 4: (p, q, n) = (1000, 200, 250) | | | | | | | |
| Two-stage | 0.96 | 1 | 0.82 | 0.82 | 0.48 | 1.90 | 11.86 |
| Iterative | 0.96 | 1 | 0.83 | 0.79 | 0.62 | 2.98 | 11.98 |
| Neighbor | 0.83 | 1 | 0.65 | 0.80 | 0.81 | 3.51 | 18.75 |
| Model 5: (p, q, n) = (800, 200, 250) | | | | | | | |
| Two-stage | 0.97 | 1 | 0.83 | 0.82 | 0.48 | 2.49 | 11.69 |
| Iterative | 0.96 | 1 | 0.81 | 0.79 | 0.89 | 6.52 | 12.75 |
| Neighbor | 0.79 | 0.97 | 0.77 | 0.46 | 0.76 | 4.21 | 14.48 |
| Model 6: (p, q, n) = (400, 200, 250) | | | | | | | |
| Two-stage | 0.96 | 1 | 0.82 | 0.82 | 0.45 | 2.03 | 7.29 |
| Iterative | 0.96 | 0.99 | 0.86 | 0.65 | 0.44 | 2.27 | 6.40 |
| Neighbor | 0.86 | 1 | 0.78 | 0.83 | 0.56 | 2.64 | 8.35 |

6. Real data analysis

To demonstrate the proposed method, we present results from the analysis of a data set generated by [18], in which 112 yeast segregants, one from each tetrad, were grown from a cross between the parental strain BY4716 and the wild isolate RM11-1A, and the expression levels of 6,216 genes were measured. These 112 segregants were individually genotyped at 2,956 marker positions throughout the genome. Since many of these markers are in high linkage disequilibrium, we combined the markers into 585 blocks, where the markers within a block differed by at most one sample. For each block, we chose the marker with the fewest missing values as the representative marker.
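The text does not spell out how the markers were grouped. One possible reading, sketched below in plain Python, scans the markers in genome order and adds a marker to the current block only if it differs from every marker already in the block in at most one sample, ignoring samples where either genotype is missing (`None`); the representative of each block is the marker with the fewest missing values, with ties going to the first such marker. This is a hypothetical reconstruction with made-up helper names, not the authors' procedure.

```python
from typing import List, Optional, Sequence

Genotype = Optional[int]   # 0/1 allele call, or None when missing

def n_differences(a: Sequence[Genotype], b: Sequence[Genotype]) -> int:
    """Number of samples where both markers are observed and disagree."""
    return sum(1 for x, y in zip(a, b) if x is not None and y is not None and x != y)

def block_markers(markers: List[Sequence[Genotype]], max_diff: int = 1) -> List[List[int]]:
    """Greedily group consecutive markers whose pairwise differences are <= max_diff."""
    blocks: List[List[int]] = []
    for idx, marker in enumerate(markers):
        if blocks and all(n_differences(markers[k], marker) <= max_diff for k in blocks[-1]):
            blocks[-1].append(idx)
        else:
            blocks.append([idx])          # start a new block
    return blocks

def representatives(markers: List[Sequence[Genotype]], blocks: List[List[int]]) -> List[int]:
    """Within each block, the marker with the fewest missing values (first on ties)."""
    return [min(block, key=lambda k: sum(g is None for g in markers[k])) for block in blocks]

markers = [
    [0, 1, 1, 0, None],
    [0, 1, 1, 1, 0],
    [1, 0, 0, 1, 0],
    [1, 0, 0, 1, 1],
]
blocks = block_markers(markers)
reps = representatives(markers, blocks)
print(blocks, reps)
```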

We focused our analysis on a set of genes in the protein-protein interaction (PPI) network obtained from a previously compiled set by [19], combined with the protein physical interactions deposited in the Munich Information Center for Protein Sequences (MIPS). We further selected 1,207 genes with variance greater than 0.05. According to the most recent yeast protein-protein interaction database, BioGRID [20], there are a total of 7,619 links among these 1,207 genes. Our goal is to construct a conditional independence network among these genes based on the sparse Gaussian graphical model, adjusting for possible genetic effects on gene expression levels.

Table 4 summarizes the results of the three procedures, including the numbers of edges and the degrees of the estimated graphs. The neighbor-based method resulted in the sparsest graph, the standard Gaussian graphical model without adjustment for the effects of genetic markers resulted in the densest graph, and the two-stage procedure was in between, in agreement with what we observed in the simulation studies. Among the links identified only by the Gaussian graphical model, 476 pairs are associated with at least one common genetic marker according to the two-stage procedure, suggesting that some of the links identified from gene expression data alone can be due to shared common genetic variants. The neighbor-based selection procedure identified only 1,917 edges, of which 1,880 were identified by the two-stage procedure and 1,916 by the Gaussian graphical model. A common set of 1,749 links was identified by all three procedures.

Table 4.

Comparison of the results of the two-stage procedure, the neighbor-based procedure [14] and the Gaussian graphical model using glasso [13] for the yeast protein-protein interaction data where n = 112, p = 1207, q = 578.

| | Two-stage | Neighbor | Gaussian graph |
|---|---|---|---|
| No. of edges in Θ̂ | 13522 | 7518 | 18987 |
| No. of links in Γ̂ | 1030 | 330 | NA |
| Tuning parameter | (0.326, 0.362) | 0.324 | 0.224 |
| Mean degree | 27.16 | 3.18 | 31.5 |
| Max degree | 53 | 12 | 60 |

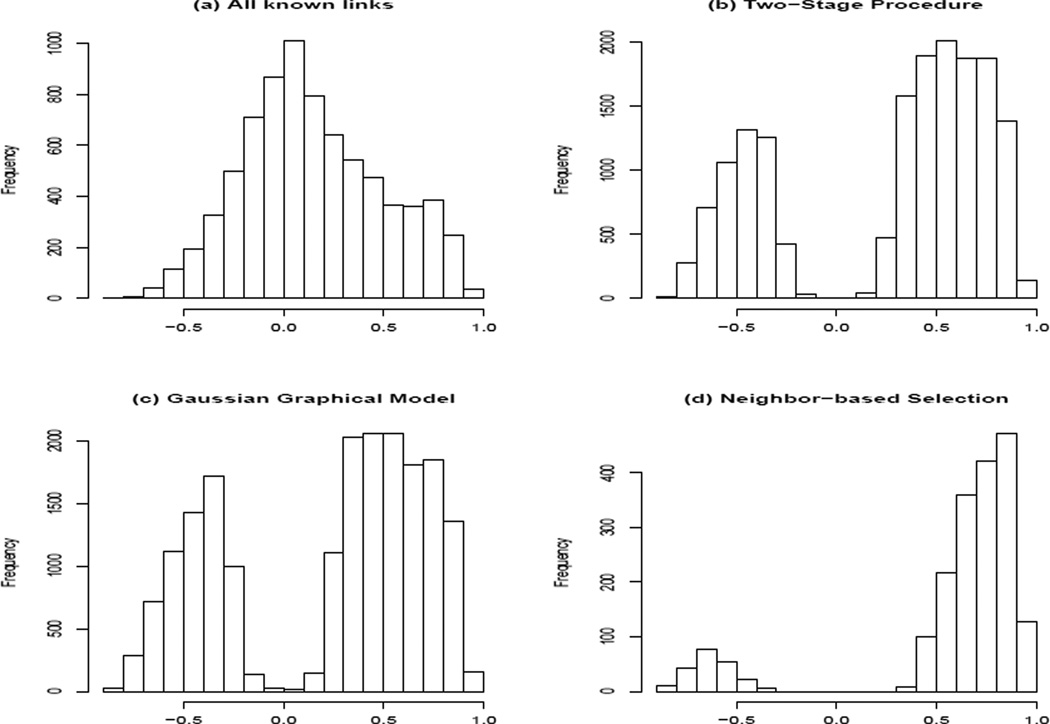

If we treat the PPI network in the BioGRID database as the true network among these genes, the true positive rates of the two-stage procedure, the Gaussian graphical model and the neighbor-based selection procedure were 0.068, 0.071 and 0.019, respectively, and the false positive rates were 0.018, 0.026 and 0.0025, respectively. One reason for the low true positive rates is that many protein-protein interactions are not reflected at the gene expression level. Figure 1(a) shows the histogram of the marginal correlations of genes that are linked in the BioGRID PPI network, indicating that many linked gene pairs have very small marginal correlations; Gaussian graphical models are not able to recover these links. Figure 1(b)–(d) show the marginal correlations of the gene pairs identified by the two-stage procedure, the Gaussian graphical model and the neighbor-based procedure, indicating that the linked genes identified by the two-stage procedure have higher marginal correlations. In contrast, some linked genes identified by the Gaussian graphical model have quite small marginal correlations.

Figure 1.

Histograms of marginal correlations for pairs of linked genes based on BioGRID (a) and linked genes identified by the two-stage procedure (b), the Gaussian graphical model (c) and a neighbor-based selection procedure (d).

7. Discussion

The proposed two-stage procedure is computationally efficient through coordinate descent algorithm and can be applied to high dimensional settings. Our simulation results show that this two-stage procedure performs very similarly to the iterative procedure of [9, 10]. To ensure model selection consistency and to derive the estimation bounds, our main theoretical assumption is an irrepresentable or mutual incoherence condition on both the covariates matrix and the true precision matrix. These conditions are similar to those required for model selection consistency of the LASSO or precision matrix estimation. Compared to the asymptotic results in [10], the results in this paper provide more explicit bounds in different matrix norms and present conditions for correct sign support. Our theoretical results on the estimate of the precision matrix parallel to those in [5]. However, the proofs are more difficult since the estimation biases of the regression coefficients have to be accounted for when studying the properties of the estimate of the precision matrix. This is achieved by proving an important lemma on control of sampling noise.

Partly for computational reasons, we used ℓ1 penalization to obtain sparse estimates of both the regression coefficient matrix and the precision matrix. Non-convex penalty functions could also be used in our two-stage algorithm, although the resulting optimization problems are computationally more challenging. Alternatively, one could extend the Dantzig selector [21] to estimate the regression coefficient matrix and use the constrained ℓ1 minimization of [22] to estimate the precision matrix. Finally, one could impose a low-rank structure in stage 1 of the estimation by using a penalty proportional to the rank of Γ [23]; this approach yields a closed-form solution and different rates of convergence. It would be interesting to compare the performance of these alternatives with that of the proposed approach.

Acknowledgement

This research is supported by NIH grants R01CA127334 and R01GM097505 and National Natural Science Foundation of China (grant No. 11201479).

Appendix

We present the proofs of the theorems in this Appendix. The proof of Theorem 1 extends that of [11] to the setting of multiple regressions with a coefficient matrix. The key to the proof of Theorem 2 is a lemma on the control of sampling noise, for which we give a detailed proof. Given this lemma, the proof of Theorem 2 relies mainly on the primal-dual witness technique [5].

Proof of Theorem 1.

From equation (2) and the model Yi = Γ*Xi+εi, the estimation equation becomes

where B is an element of the sub-differential of ‖Γ‖1, that is, Bij = sign(Γij) if Γij ≠ 0 and Bij ∈ [−1, 1] if Γij = 0. With these definitions, we have the following lemma.

Lemma 1. (a) A matrix Γ̂ ∈ ℝp×q is optimal for the ℓ1 penalization problem (2) if and only if there exists an element B̂ of the sub-differential ∂‖Γ̂‖1 such that

(b) Suppose that the sub-differential matrix satisfies the strict dual feasibility condition |B̂ij| < 1 for all (i, j) ∉ T(Γ̂). Then any optimal solution Γ̃ to the ℓ1 penalization problem (2) satisfies Γ̃ij = 0 for all (i, j) ∉ T(Γ̂).

(c) Under the condition of part (b), if the |T(Γ̂)|×|T(Γ̂)| matrix (CX⊗Ip)T(Γ̂),T(Γ̂) is invertible, then Γ̂ is the unique optimal solution of the ℓ1 penalization problem (2).

A technique similar to that in [11] can be used to prove this lemma. By Lemma 1, strict dual feasibility, together with the invertibility condition in part (c), is sufficient to ensure the uniqueness of Γ̂. We construct the primal-dual witness solution (Γ̃, B̃) as follows:

- First, we determine the matrix Γ̃ by solving the restricted LASSO problem

(.1)

- Second, we choose B̃T as an element of the sub-differential of the regularizer ‖ · ‖1, evaluated at Γ̃.

- Third, we set B̃Tc to satisfy the zero sub-differential condition (.1), and check whether the dual feasibility condition |B̃ij| ≤ 1 holds for all (i, j) ∈ Tc. To ensure uniqueness, we check for strict dual feasibility, |B̃ij| < 1 for all (i, j) ∈ Tc.

- Fourth, we check whether the sign consistency condition is satisfied.
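The four steps above can be traced numerically. This is a minimal sketch under the same assumed objective as a stand-in for (2), namely (1/(2n))‖Y − ΓX‖²_F + ρ‖Γ‖1. It solves the restricted problem on the true support T by coordinate descent with the entries outside T held at zero. It then sets B̃ from the zero sub-differential condition, B̃ = (1/(nρ))(Y − Γ̃X)Xᵀ, and reports strict dual feasibility and sign consistency. The names `lasso_cd` and `primal_dual_witness` and the simulated data are hypothetical. When strict dual feasibility holds and the restricted Gram matrix is invertible, Lemma 1 says the unrestricted solution coincides with Γ̃, which the example compares.

```python
import numpy as np

def soft_threshold(z, lam):
    return np.sign(z) * np.maximum(np.abs(z) - lam, 0.0)

def lasso_cd(Y, X, rho, support=None, n_iter=2000, tol=1e-10):
    """Coordinate descent for (1/(2n))||Y - Gamma X||_F^2 + rho*||Gamma||_1.

    If `support` (boolean p x q) is given, entries outside it stay at zero,
    which gives the restricted problem of the primal-dual witness construction.
    """
    p, n = Y.shape
    q = X.shape[0]
    if support is None:
        support = np.ones((p, q), dtype=bool)
    Gamma = np.zeros((p, q))
    R = Y.copy()                                   # residual Y - Gamma X
    col_sq = np.sum(X ** 2, axis=1) / n
    for _ in range(n_iter):
        max_change = 0.0
        for k in range(q):
            z = R @ X[k] / n + col_sq[k] * Gamma[:, k]
            new_col = np.where(support[:, k], soft_threshold(z, rho) / col_sq[k], 0.0)
            delta = new_col - Gamma[:, k]
            R -= np.outer(delta, X[k])
            Gamma[:, k] = new_col
            max_change = max(max_change, np.max(np.abs(delta)))
        if max_change < tol:
            break
    return Gamma

def primal_dual_witness(Y, X, rho, Gamma_star):
    """Steps 1-4: restricted solve, dual from the zero sub-differential, checks."""
    T = Gamma_star != 0                                   # true support T
    Gamma_tilde = lasso_cd(Y, X, rho, support=T)          # step 1: restricted problem
    n = Y.shape[1]
    B_tilde = (Y - Gamma_tilde @ X) @ X.T / (n * rho)     # steps 2-3: zero sub-differential
    strict_dual = bool(np.all(np.abs(B_tilde[~T]) < 1))   # strict dual feasibility on T^c
    sign_ok = bool(np.all(np.sign(Gamma_tilde[T]) == np.sign(Gamma_star[T])))  # step 4
    return Gamma_tilde, B_tilde, strict_dual, sign_ok

rng = np.random.default_rng(2)
p, q, n = 3, 10, 400
Gamma_star = np.zeros((p, q))
Gamma_star[0, 1], Gamma_star[1, 4], Gamma_star[2, 7] = 1.0, -1.0, 0.8
X = rng.normal(size=(q, n))
Y = Gamma_star @ X + 0.3 * rng.normal(size=(p, n))
rho = 0.1
Gamma_tilde, B_tilde, strict_dual, sign_ok = primal_dual_witness(Y, X, rho, Gamma_star)
Gamma_hat = lasso_cd(Y, X, rho)                           # unrestricted solution
print(strict_dual, sign_ok, np.max(np.abs(Gamma_hat - Gamma_tilde)))
```

The witness uses the unknown true support only as a proof device; it is not an estimator one could compute in practice.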

PROOF OF THEOREM 1. From the primal-dual witness construction, denote Λ = Γ̃ − Γ*, where Γ̃ is the solution to (.1) and Γ* is the true parameter. The equation (.1) can be rewritten as:

(.2)

(.3)

Since Λ̅Tc = 0, in order to establish strict dual feasibility, we need to check whether . From (.2), we have

substituting this into (.3) leads to

(.4)

For the second term (II) of (.4), from Assumption 1 and , we have

From the sub-Gaussian (sG for short) distribution assumption on ε, . Denote the projection matrix as

Choosing a particular element (j, i) in the first term (I) of (.4),

with and ei the ith row of the identity matrix Ip; then

where, using the fact that Inp − A is a projection matrix and condition (7) of the theorem, we have

By applying the Chernoff bound, we have,

where (I)(j,i) is the (j, i)th element in the first term (I) in (.4) and kn is the number of nonzero elements in the true parameter Γ*. Setting t = γ/2 yields

Putting together the pieces and using our choice (8) of ρn, we have

for some constant c1. Therefore, by Lemma 1, the estimated support T̂(Γ̂) is contained in the support T̃, and hence in the true support T*(Γ*), with probability at least .
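The probability statements in this part of the proof combine a sub-Gaussian Chernoff bound for each entry with a union bound over entries. In its standard form (the exact constants in the missing displays are an assumption here), a variable Z ~ sG(0, σ²) satisfies P(|Z| > t) ≤ 2 exp{−t²/(2σ²)}. The maximum of m such variables therefore exceeds t with probability at most 2m exp{−t²/(2σ²)}, whether or not the variables are independent. The simulation below illustrates this with Gaussian variables, a special case of sub-Gaussian ones. The values of m, t and σ are arbitrary choices.

```python
import numpy as np

# Each N(0, sigma^2) variable satisfies P(|Z| > t) <= 2 exp(-t^2 / (2 sigma^2));
# a union bound over m of them gives P(max_k |Z_k| > t) <= 2 m exp(-t^2 / (2 sigma^2)).
rng = np.random.default_rng(0)
sigma, m, t, n_rep = 1.0, 50, 3.5, 20000
Z = rng.normal(0.0, sigma, size=(n_rep, m))          # n_rep draws of m variables
empirical = np.mean(np.max(np.abs(Z), axis=1) > t)   # Monte Carlo P(max |Z_k| > t)
union_bound = 2 * m * np.exp(-t ** 2 / (2 * sigma ** 2))
print(f"empirical {empirical:.4f} <= bound {union_bound:.4f}")
```

The bound is loose by roughly a factor of ten here. In the proofs, what matters is only that it decays exponentially in t² while growing linearly in the number of entries.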

Next we establish the ℓ∞ bounds. From (.4) we know that

where B̂T is in the sub-differential of ‖Γ̂‖1. So

Note that the second term in (.4) is a fixed term. Since , then

where

Denote the first term in (.4) by ξ. Then the (j, i)th element of ξ with j ∈ T(i) is sub-Gaussian with parameter σ², that is, ξ(j,i) ~ sG(0, σ²), with σ² ≤ 1/nCmax, where Cmax is defined in Assumption 2. Again by the Chernoff bound,

Setting , then . Since ρn satisfies (8), . So vanishes at the rate at least , where c2 is a constant. Overall, we conclude that

with probability greater than where C1 is a constant (for example, C1 can be chosen as min{c1, c2}). This proves assertion (1) of Theorem 1; assertion (2) follows directly from (1). This completes the proof of Theorem 1.

Proof of Theorem 2:

We define

and Cmax is the constant in Assumption 2. Define U ≔ Σ̂Γ̂ − Σ*, where . The proof focuses on controlling ‖U‖∞, which is the main difference between our Theorem 2 and Theorem 1 of [5]. We state this noise control result in the following lemma:

Lemma 2 (Control of Sampling Noise). Under the assumptions that log pn = o(n), , d2 = o(qn) and furthermore, for some real number τ > 2,

where and Λmax is the constant in Assumption 3 and σ is the parameter in the tail condition on εi. Choose a constant C2 > 1, such that

(.5)

Assume that the conditions of Theorem 1 are satisfied and that, in addition to condition (8), the tuning parameter ρn satisfies

where . Under this condition, denote

(.6)

then

where C1 and C2 are constants.

PROOF OF LEMMA 2. From the definition of U, we have

We want to bound the element-wise ℓ∞ norm ‖U‖∞,

Let 𝒜 be the event that T̂(Γ̂) ⊆ T(Γ*) and . Then . Under event . So

So under event 𝒜,

where

From Lemma 1 of [5] on the sub-Gaussian tail condition, we have

(.7)

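Since λmax(CX) ≤ Λmax, we have , because ‖|A ⊗ B|‖∞ ≤ ‖|A|‖∞‖|B|‖∞ (in fact equality holds, since each absolute row sum of A ⊗ B is the product of an absolute row sum of A and an absolute row sum of B). Hence II ≤ Λmax(d2‖Γ̂ − Γ*‖∞)². Under event 𝒜, T̂(Γ̂) ⊆ T(Γ*) and . So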

Next we bound (III). We know that under event 𝒜, ‖(Γ̂ − Γ*)T‖∞ ≤ ρnMn(X, T, Σ*). We further need to bound the ℓ1 norm of each row of [(W⊤X/n) ⊗ Ip]·,T. Since is a pnqn × 1 random vector with mean zero and covariance matrix CX ⊗ Σ*/n, for a given index pair (i, j), the (i, j)th row of [(W⊤X/n) ⊗ Ip]·,T is , where ei, ej ∈ ℝp are standard basis vectors for i, j = 1, ⋯, p. Since has mean zero and covariance matrix

The non-zero elements in are , so has mean zero and covariance matrix , and ‖|[(W⊤X/n) ⊗ Ip]·,T|‖∞ equals the maximum, over all pairs (i, j), of the ℓ1 norm of . Clearly, the entries of the vector are sub-Gaussian. In the next lemma, we bound the ℓ1 norm of sub-Gaussian vectors of this type.

Lemma 3. For any j ∈ {1, ⋯, p}, let T(j) be defined as before, and suppose that |T(j)| ≥ 1. If y ∈ ℝ|T(j)| is a random vector with mean zero and covariance matrix , and every component of y is sub-Gaussian, then

PROOF OF LEMMA 3: First we have:

Note that yk is sub-Gaussian with parameter , for k ∈ {1, ⋯, |T(j)|}. By the Chernoff bound,

which completes the proof.

Since f(x) = x exp{−a/x²} with a > 0 is increasing in x > 0 (its derivative f′(x) = exp{−a/x²}(1 + 2a/x²) is positive), and |T(j)| ≤ d2 for all j ∈ {1, ⋯, p}, we have

(.8)

If we choose , we have

From the choice of ρn in (8), we can see

so

and, from the condition d2 = o(qn), the exponential factor in (.8) dominates and converges to zero at an exponential rate. On the other hand, the factor multiplying the exponential is bounded by for some constant . For any event B, denote ; then

So

That is

(.9)

where C2 is defined above and C1 is defined in Theorem 1.

Denote

where . Define

and

So

from the choice of C2 in (.5) and inequality (.6). We have

Choosing the parameter in (.7) accordingly, we obtain from (.7)

So

Note that

and

This proves Lemma 2.

Based on Lemma 2, the rest of the proof closely follows the proof of Theorem 1 in [5], so we only outline it here.

Lemma 4. For any λn > 0 and any sample covariance matrix Σ̂Γ̂ of the εi, computed from the estimate Γ̂, with strictly positive diagonal, the ℓ1-penalized log-determinant problem (4) has a unique solution Θ̂Γ̂ ≻ 0 characterized by

(.10)

where Ẑ is an element of the sub-differential ∂‖Θ̂Γ̂‖1,off.
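The characterization in Lemma 4 can be checked numerically. This sketch assumes that problem (4) takes the form used in [5], namely minimizing tr(ΘΣ̂Γ̂) − log det Θ + λn‖Θ‖1,off over Θ ≻ 0. Under that assumption, the optimality condition reads Σ̂Γ̂ − Θ̂Γ̂⁻¹ + λnẐ = 0. The sketch solves such a problem with the block coordinate descent of the graphical lasso [13], leaving the diagonal unpenalized, and then checks three properties. First, the diagonal of Θ̂⁻¹ matches that of Σ̂. Second, the off-diagonal entries of Θ̂⁻¹ − Σ̂ are at most λn in absolute value. Third, these entries equal λn sign(Θ̂ij) wherever Θ̂ij ≠ 0. The function name `glasso_offdiag`, the tridiagonal Θ* and the Gaussian sample are illustrative assumptions; S stands in for Σ̂Γ̂.

```python
import numpy as np

def soft_threshold(z, lam):
    return np.sign(z) * np.maximum(np.abs(z) - lam, 0.0)

def glasso_offdiag(S, lam, n_outer=500, n_inner=500, tol=1e-10):
    """Block coordinate descent (graphical lasso) for
    min_{Theta > 0} tr(Theta S) - log det Theta + lam * sum_{i != j} |Theta_ij|.
    Returns W (the estimate of Theta^{-1}) and Theta."""
    p = S.shape[0]
    W = S.copy()                        # diag(W) = diag(S): the diagonal is unpenalized
    B = np.zeros((p, p))                # column j: lasso coefficients for node j
    for _ in range(n_outer):
        W_old = W.copy()
        for j in range(p):
            idx = np.arange(p) != j
            W11, s12 = W[np.ix_(idx, idx)], S[idx, j]
            beta = B[idx, j]            # warm start (fancy indexing returns a copy)
            # Coordinate descent for min_b 0.5 b'W11 b - b's12 + lam ||b||_1.
            for _ in range(n_inner):
                beta_prev = beta.copy()
                for k in range(p - 1):
                    r = s12[k] - W11[k] @ beta + W11[k, k] * beta[k]
                    beta[k] = soft_threshold(r, lam) / W11[k, k]
                if np.max(np.abs(beta - beta_prev)) < tol:
                    break
            B[idx, j] = beta
            W[idx, j] = W[j, idx] = W11 @ beta
        if np.max(np.abs(W - W_old)) < tol:
            break
    Theta = np.zeros((p, p))
    for j in range(p):                  # recover Theta column by column from W and B
        idx = np.arange(p) != j
        Theta[j, j] = 1.0 / (W[j, j] - W[idx, j] @ B[idx, j])
        Theta[idx, j] = -B[idx, j] * Theta[j, j]
    return W, (Theta + Theta.T) / 2

rng = np.random.default_rng(3)
p, n, lam = 5, 400, 0.1
Theta_star = 2 * np.eye(p) + np.diag(np.full(p - 1, -0.8), 1) + np.diag(np.full(p - 1, -0.8), -1)
E = rng.multivariate_normal(np.zeros(p), np.linalg.inv(Theta_star), size=n)
S = E.T @ E / n                         # plays the role of Sigma_hat_{Gamma_hat}
W, Theta_hat = glasso_offdiag(S, lam)
off = ~np.eye(p, dtype=bool)
print(np.max(np.abs(W - S)[off]), np.round(Theta_hat, 2))
```

Because the diagonal is unpenalized, W is initialized at S rather than at S + λI as in the penalized-diagonal version of [13].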

This lemma is a slightly revised version of Lemma 3 in [5], so we omit its proof. Based on this lemma, we construct the primal-dual witness solution (Θ̃, Z̃) as follows:

- (a) Determine the matrix Θ̃ by solving the restricted log-determinant problem

(.11)

Note that, by construction, Θ̃ ≻ 0 and Θ̃Sc = 0.

- (b) Choose Z̃S as a member of the sub-differential of the regularizer ‖·‖1,off, evaluated at Θ̃.

- (c) Set Z̃Sc as

where Σ̂ is short for Σ̂Γ̂, so that the constructed pair (Θ̃, Z̃) satisfies the optimality condition (.10).

- (d) Verify the strict dual feasibility condition

If the primal-dual witness construction succeeds, it certifies that the solution Θ̃ of the restricted problem (.11) is equal to the solution Θ̂ of the original unrestricted problem (4) [5]. The proof proceeds as follows. We first show that the primal-dual witness construction succeeds with high probability, so that the support of the optimal solution Θ̂ is contained in the support of the true Θ*. In addition, the characterization of Θ̂ provided by the primal-dual witness construction yields the element-wise ℓ∞ bounds claimed in Theorem 2. Note that we define the "effective noise" in the sample covariance matrix Σ̂Γ̂ as U ≔ Σ̂Γ̂ − (Θ*)⁻¹, and we use Δ ≔ Θ̃ − Θ* to measure the discrepancy between the restricted estimate Θ̃ in (.11) and the truth Θ*. We define R(Δ) ≔ Θ̃⁻¹ − (Θ*)⁻¹ + (Θ*)⁻¹Δ(Θ*)⁻¹.
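R(Δ) is the remainder of the first-order expansion of the matrix inverse around Θ*. Writing Σ* = (Θ*)⁻¹, a Neumann-series expansion gives R(Δ) = Σ*ΔΣ*ΔΣ* + O(‖Δ‖³) for small Δ. This second-order behavior is the intuition behind bounding ‖R(Δ)‖∞ by αλn/8 once Δ is small. The check below uses an arbitrary positive definite Θ* and a symmetric direction Δ, both made up for illustration, and a hypothetical helper `remainder`. The size of R(tΔ) should shrink by about a factor of four each time t is halved, and R(tΔ) should approach t²Σ*ΔΣ*ΔΣ*.

```python
import numpy as np

def remainder(Theta_star, Delta):
    """R(Delta) = (Theta* + Delta)^{-1} - Theta*^{-1} + Theta*^{-1} Delta Theta*^{-1}."""
    Sigma_star = np.linalg.inv(Theta_star)
    return np.linalg.inv(Theta_star + Delta) - Sigma_star + Sigma_star @ Delta @ Sigma_star

rng = np.random.default_rng(4)
p = 5
A = rng.normal(size=(p, p))
Theta_star = A @ A.T + p * np.eye(p)       # a generic positive definite "truth"
D = rng.normal(size=(p, p))
Delta = (D + D.T) / 2                      # symmetric perturbation direction
Sigma_star = np.linalg.inv(Theta_star)
second_order = Sigma_star @ Delta @ Sigma_star @ Delta @ Sigma_star

for t in [1e-2, 5e-3, 2.5e-3]:
    R = remainder(Theta_star, t * Delta)
    rel_err = np.max(np.abs(R - t ** 2 * second_order)) / np.max(np.abs(t ** 2 * second_order))
    print(f"t={t:.4f}  max|R|={np.max(np.abs(R)):.3e}  rel. error vs t^2 term={rel_err:.2e}")
```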

PROOF OF THEOREM 2. We first show that, with high probability, the witness matrix Θ̃ equals the solution Θ̂ of the original log-determinant problem (4), by showing that the primal-dual witness construction succeeds with high probability. Let ℬ denote the event that where . Condition (9) on the sample size n implies , which means that the sub-Gaussian tail condition can be used in our control of the sampling noise. Lemma 2 guarantees .

Conditional on the event ℬ, the remaining analysis follows that of [5]. The choice of the regularization parameter λn implies ‖U‖∞ ≤ (α/8)λn. Following the same steps as in [5], we can show that ‖R(Δ)‖∞ ≤ αλn/8. We can then show that the matrix Z̃Sc constructed in step (c) satisfies ‖Z̃Sc‖∞ < 1, and therefore Θ̃ = Θ̂. The estimator Θ̂ then satisfies the ℓ∞ bound claimed in Theorem 2 (1); moreover, Θ̂Sc = Θ̃Sc = 0, as claimed in the first part of Theorem 2 (2). The second part of Theorem 2 (2) follows directly from (1). Since the above is conditional on the event ℬ, these statements hold with probability

This proves Theorem 2.

Proof of Theorem 3:

The proof of Theorem 3 depends on the following lemma.

Lemma 5 (Sign Consistency). Suppose the minimum absolute value θmin of nonzero entries in the true precision matrix Θ* is bounded from below by

(.12)

then holds.

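Proof of Lemma 5. This claim follows from the bound (.12), which guarantees that, for all (i, j) ∈ S, the estimate Θ̃ij cannot differ from Θ*ij enough to change sign: whenever |Θ̃ij − Θ*ij| ≤ θmin/2, we have |Θ*ij| ≥ θmin > θmin/2, so Θ̃ij is nonzero and has the same sign as Θ*ij.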

Proof of Theorem 3. Using the notation , where , the lower bound on n implies

As in the proof of Theorem 2, with probability greater than

we have Θ̃Γ̂ = Θ̂Γ̂ and ‖Θ̃Γ̂ − Θ*‖∞ ≤ θmin/2. Consequently, Lemma 5 implies that for all (i, j) ∈ E(Θ*). Overall, we can conclude that with probability greater than

the sign consistency condition holds for all (i, j) ∈ E(Θ*). This proves the theorem.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

1. Bickel P, Levina E. Regularized estimation of large covariance matrices. Annals of Statistics. 2008a;36(1):199–227.

2. Bickel P, Levina E. Covariance regularization by thresholding. Annals of Statistics. 2008b;36(6):2577–2604.

3. Cai T, Zhou H. Minimax estimation of large covariance matrices under ℓ1 norm. Technical Report. 2010.

4. Cai T, Zhang C-H, Zhou H. Optimal rates of convergence for covariance matrix estimation. The Annals of Statistics. 2010;38:2118–2144.

5. Ravikumar P, Wainwright M, Raskutti G, Yu B. High-dimensional covariance estimation by minimizing ℓ1-penalized log-determinant divergence. Electronic Journal of Statistics. 2011;5:935–980.

6. Lam C, Fan J. Sparsistency and rates of convergence in large covariance matrices estimation. The Annals of Statistics. 2009;37:4254–4278. doi: 10.1214/09-AOS720.

7. El Karoui N. Operator norm consistent estimation of large dimensional sparse covariance matrices. The Annals of Statistics. 2008;36:2717–2756.

8. Cheung V, Spielman R. The genetics of variation in gene expression. Nature Genetics. 2002:522–525. doi: 10.1038/ng1036.

9. Rothman A, Levina E, Zhu J. Sparse multivariate regression with covariate estimation. Journal of Computational and Graphical Statistics. 2010;19(4):947–962. doi: 10.1198/jcgs.2010.09188.

10. Yin J, Li H. A sparse conditional gaussian graphical model for analysis of genetical genomics data. Annals of Applied Statistics. 2011;5:2630–2650. doi: 10.1214/11-AOAS494.

11. Wainwright MJ. Sharp thresholds for noisy and high-dimensional recovery of sparsity using ℓ1-constrained quadratic programming (lasso). IEEE Transactions on Information Theory. 2009;55:2183–2202.

12. Zhao P, Yu B. On model selection consistency of lasso. Journal of Machine Learning Research. 2006;7:2541–2567.

13. Friedman J, Hastie T, Tibshirani R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics. 2008;9:432–441. doi: 10.1093/biostatistics/kxm045.

14. Meinshausen N, Bühlmann P. High-dimensional graphs and variable selection with the lasso. Annals of Statistics. 2006;34.

15. Li H, Gui J. Gradient directed regularization for sparse gaussian concentration graphs, with applications to inference of genetic networks. Biostatistics. 2006;7:302–317. doi: 10.1093/biostatistics/kxj008.

16. Fan J, Feng Y, Wu Y. Network exploration via the adaptive lasso and scad penalties. The Annals of Applied Statistics. 2009;3:521–541. doi: 10.1214/08-AOAS215SUPP.

17. Peng J, Wang P, Zhou N, Zhu J. Partial correlation estimation by joint sparse regression models. Journal of American Statistical Association. 2009;104:735–746. doi: 10.1198/jasa.2009.0126.

18. Brem R, Kruglyak L. The landscape of genetic complexity across 5,700 gene expression traits in yeast. Proceedings of National Academy of Sciences. 2005;102:1572–1577. doi: 10.1073/pnas.0408709102.

19. Steffen M, Petti A, Aach J, D’Haeseleer P, Church G. Automated modelling of signal transduction networks. BMC Bioinformatics. 2002;3:34. doi: 10.1186/1471-2105-3-34.

20. Stark C, Breitkreutz B, Chatr-Aryamontri A, Boucher L, Oughtred R, Livstone M, Nixon J, Van Auken K, Wang X, Shi X, Reguly T, Rust J, Winter A, Dolinski K, Tyers M. The biogrid interaction database: 2011 update. Nucleic Acids Research. 2011;39:D698–D704. doi: 10.1093/nar/gkq1116.

21. Candes E, Tao T. The dantzig selector: Statistical estimation when p is much larger than n. Annals of Statistics. 2007;35:2313–2351.

22. Cai T, Liu W, Luo X. A constrained l1 minimization approach to sparse precision matrix estimation. Journal of American Statistical Association. 2011;106:594–607.

23. Bunea F, She Y, Wegkamp M. Optimal selection of reduced rank estimators of high-dimensional matrices. Annals of Statistics. 2011;39(2):1282–1309.