Abstract

Background

Radiation therapy (RT), an essential treatment in the management of many patients with cancer, is prepared and delivered through a complex process, and safety initiatives in the United States are still working to provide guidance on how the average practitioner can make that process safer for patients. Quality control measures can uncover certain specific errors, such as machine dose miscalibration or misalignment of the patient in the radiation treatment beam. However, they are less effective at uncovering less common errors that can occur anywhere along the treatment planning and delivery process, and even when the process is functioning as intended, errors still occur.

Prioritizing Risks and Implementing Risk-Reduction Strategies

Activities undertaken in the radiation oncology department at the Johns Hopkins Hospital (Baltimore) include Failure Mode and Effects Analysis (FMEA), risk-reduction interventions, and a voluntary error and near-miss reporting system. A visual process map portrayed 269 RT steps across four subprocesses: consult, simulation, treatment planning, and treatment delivery. Two FMEAs revealed 127 and 159 possible failure modes, respectively. Risk-reduction interventions were implemented for 15 “top-ranked” failure modes. Since the department implemented its own error and near-miss reporting system in 2007, 253 events have been logged. A department-specific system was needed because the hospital-wide reporting system may be insufficient for radiation oncology, which requires a greater level of practice-specific information to fully understand each event.

Conclusions

The “basic science” of radiation treatment has received considerable support and attention in developing novel therapies to benefit patients. The time has come to apply the same focus and resources to ensuring that patients safely receive the maximal benefits possible.

Radiation therapy (RT) is an essential treatment used in the management of many patients with cancer. Based on statistics from the National Cancer Institute, approximately 50% of cancer patients receive radiation during the course of their cancer treatment. Although the vast majority of these treatments are safe and effective, when treatment errors do occur, they can have serious consequences, as highlighted in a series of popular press articles.1,2 Catastrophic errors are rare, but the safe delivery of therapeutic radiation is an inherently complex and high-risk process. Physicians prescribe and patients receive potentially curative treatments that cannot be seen or felt, and a substantial safety infrastructure has been developed to ensure that these treatments are delivered appropriately.

Despite these efforts, much progress remains to be made. We recently published an assessment estimating that the rate of radiation misadministrations may approach 0.2% per patient (1 in 500).3 This estimate is consistent with error rates reported by other authors in the radiation oncology literature.4–9 For example, Mans et al. at the Netherlands Cancer Institute used a novel, unbiased, objective radiation measurement technique to uncover treatment errors.8 Among 4,227 patients treated, they found 17 errors, a rate (0.4%) of the same order as that found in our analysis.3 These error rates compare unfavorably with those of other ultrasafe industries, such as commercial aviation and nuclear power generation, and with other areas of medicine, such as modern anesthesiology and blood transfusion.10–14 Although the scale of the challenge is increasingly clear and the need for improvement is well recognized, few resources exist to guide the average radiation oncology practice in implementing processes to avoid errors.15 In this article, we (1) discuss the landscape of nationwide safety efforts within radiation oncology in the United States; (2) explore the implications and opportunities created by the radiation oncology work flow; and (3) describe our institutional experience with performing a Failure Mode and Effects Analysis (FMEA), implementing a voluntary intradepartmental error and near-miss reporting system, and using data from both efforts to prioritize risks and implement risk-reduction strategies with the goal of making the patients we treat safer.

Nationwide Efforts to Improve Patient Safety in Radiation Oncology

Identifying and implementing practical solutions derived from the experiences of radiation oncology providers, therapists, and patients is feasible. Radiation oncology can learn from the airline industry, whose experience is frequently cited as the gold standard for demonstrating that high-risk, intricate, advanced technology can be operated safely. Although the airlines have implemented many interventions to improve safety, radiation oncology has yet to adopt two of their most effective: (1) a national error-reporting system, the Aviation Safety Reporting System (ASRS), and (2) the Commercial Aviation Safety Team (CAST), a public-private partnership seeking to “design risks out of airplanes.”

The need for a national radiation oncology voluntary event-reporting system to collect information about errors and near misses is well recognized in the field, and related efforts by both the American Society for Therapeutic Radiology and Oncology (ASTRO) and the American Association of Physicists in Medicine (AAPM) are under way, as reflected in recent conferences.15–17 However, little tangible progress toward a national event-reporting system has yet been made. Internationally, a model reporting system for RT, the Radiation Oncology Safety Information System (ROSIS), does exist, and experience with this system could inform the development of a national reporting system in the United States. Equally important is the development of consensus definitions of errors and near misses and of a methodology to understand and categorize “clinical impact.” Although a national reporting system will allow us to better understand the patterns of radiation-related errors, without better methodology this information will not clarify the extent to which these events cause patient harm. This important distinction was highlighted in a study published by a group in Toronto, which suggested that 94% of 555 errors identified in a five-year period were of “little or no clinical significance.”6

CAST members collaborate to prioritize their greatest risks, investigate them thoroughly, and design and implement interventions that work. This approach addresses ubiquitous errors that are beyond the ability of any single entity to solve. Since its founding in 1997, CAST has helped the aviation industry improve an already admirable safety record: between 1994 and 2006, the average rate of fatal accidents decreased from 0.05 to 0.022 per 100,000 departures. Given the heavy dependence on computer-based systems and the technical and potentially systematic nature of treatment errors in radiation oncology, a similar approach would seem appropriate for the field. To date, such a systematic approach is lacking.14,18,19

Any effort to address safety gaps must also acknowledge the staggering technological advancements that have taken place in radiation oncology during the last 15 years, a point that was central to discussions at the AAPM and ASTRO meetings in June 2010. There has been a growing dependence on computer systems and software interlocks for both the design of radiation plans and treatment delivery. In this setting, the control and “double-check” of actual treatments by the personnel trained to deliver them (radiation therapists) has decreased, and treatment plan complexity often makes it impossible for an individual to independently verify that the treatment is delivered exactly as intended. Crowded workstations, ready Internet access, interruptions by multiple staff members, and inadequate alert systems when a treatment has gone awry were all cited in these discussions as factors that could undermine a safe work environment.20 In addition, the groups cited the absence of specific policies and procedures defining the role of each team member, and the lack of empowerment of staff to challenge those higher in the hierarchy, as potential impediments to safety efforts. To begin addressing these issues, ASTRO has developed a six-point action plan for safety improvement. The importance of organizing radiation safety efforts has also led to U.S. Congressional hearings and to testimony before the Food and Drug Administration (FDA).21,22

Radiation Oncology Work Flow and Safety Strategies

National safety initiatives are still working to provide guidance on how the average practitioner might make patients safer in the face of the complex process by which RT is prepared and delivered. Our own process-mapping work at the Johns Hopkins Hospital (Baltimore) has shown the scale of this complexity. A typical treatment involves many steps, such as acquiring a computer-assisted tomography (CT) scan (and sometimes images from other modalities), delineating the tumor target and the normal tissues to be spared on a treatment planning computer, generating a radiation dose plan, and then executing this plan on another set of computers. Treatment delivery also requires accurate alignment of the patient to the radiation treatment beam, an error-prone process. Our external beam RT service process typically involves about 270 steps from start to finish; our process map contained 269.23 Even if each step were error-proof at the 99.9% level, the cumulative probability of an error would be approximately 25%. That the observed error rates are much lower speaks to the measures already in place to prevent errors. Given the number of steps involved, our error rates compare favorably with those for, for example, dispensing prescription drugs in an inpatient setting, where there are “only” 150 steps and yet error rates of approximately 5% have been reported.24 However, there is still substantial room to improve the safety processes already in place.
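The cumulative-error arithmetic in the preceding paragraph can be checked with a short sketch. It assumes, as the 99.9% illustration implicitly does, that every step fails independently with the same probability; real process steps are neither independent nor equally reliable, and the function names are illustrative only. The inverse function shows the per-step error rate implied by an observed overall rate, which is one way to make the comparison with inpatient drug dispensing concrete.

```python
def cumulative_error_probability(p_step_error: float, n_steps: int) -> float:
    """Probability that at least one of n_steps fails.

    Assumes each step fails independently with the same probability.
    """
    return 1.0 - (1.0 - p_step_error) ** n_steps


def implied_step_error(p_total_error: float, n_steps: int) -> float:
    """Per-step error probability that would produce p_total_error over n_steps.

    This is the inverse of cumulative_error_probability under the same
    independence and equal-reliability assumptions.
    """
    return 1.0 - (1.0 - p_total_error) ** (1.0 / n_steps)


if __name__ == "__main__":
    # 269 steps, each error-proof at the 99.9% level (p_step_error = 0.001).
    print(f"RT, 99.9% per step: {cumulative_error_probability(0.001, 269):.3f}")
    # Per-step error rates implied by the overall rates quoted in the text.
    print(f"RT, 0.2% over 269 steps: {implied_step_error(0.002, 269):.2e} per step")
    print(f"Drug dispensing, 5% over 150 steps: {implied_step_error(0.05, 150):.2e} per step")
```

Under these assumptions the 99.9% illustration gives about 0.236, consistent with the roughly 25% figure, and the reported overall rates correspond to roughly 7.4e-6 errors per step for RT versus about 3.4e-4 per step for inpatient drug dispensing.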

Radiation oncology departments across the United States make significant efforts to maintain quality in care delivery and to monitor patient safety using multiple methods. Detailed quality assurance programs have been developed by national and international organizations to reduce the potential for errors and to increase the likelihood that errors will be addressed appropriately and in a timely manner.25 These strategies include weekly peer review conferences of patient cases, verification of patient setup using treatment films (x-ray or cone-beam CT images of patient position), and radiation dose checks with radiation detectors such as diodes. These intradepartmental quality control (quality assurance) measures can uncover certain specific errors, such as machine dose miscalibration or misalignment of the patient in the radiation treatment beam. However, they are less effective at identifying less common errors that can occur anywhere along the treatment planning and delivery process, and even when the process is functioning as intended, errors still occur.25 Before our work, a systematic processwide risk analysis had not been performed in radiation oncology, despite its proven use in multiple health care fields.26,27 We now discuss activities undertaken at the Johns Hopkins Hospital to improve RT safety.

Prioritizing Risks and Implementing Risk-Reduction Strategies in a Radiation Oncology Department

1. Failure Mode and Effects Analysis

Vulnerabilities in a medical process may be identified by proactive risk assessment (PRA) methods, which are used to quantitatively analyze possible errors that may occur in a particular setting.28 PRA can be used to determine, prospectively, the ways in which a process might break down. One PRA method is Failure Mode and Effects Analysis (FMEA), which has been used successfully by other medical groups.10,12,28 FMEA relies on staff to identify errors that could potentially occur throughout a particular process. Unlike error reporting, which is retrospective, FMEA is prospective and seeks to identify hazards before patients are harmed. Clinicians and administrators can then prioritize the identified hazards and implement interventions to reduce the risk that patients will be harmed.

As reported elsewhere, FMEA was performed in the Department of Radiation Oncology and Molecular Radiation Sciences at the Johns Hopkins Hospital in 2006–2007 and again in 2009 as part of an ongoing effort of prospective safety improvement.23 A 14-member committee was commissioned with representatives from each section of the department, including administrators, nurses, physicists, physicians, radiation therapists, and dosimetrists. A visual process map was generated, and the complex process was divided into four subprocesses (consult, simulation, treatment planning, and treatment delivery) comprising the 269 nodes noted earlier.

The potential failure modes and their causes were identified for each subprocess by a group of four people during three one-hour sessions and were then reviewed by the full committee for final inclusion in the analysis. Each failure mode was scored by 10 individuals and assigned a risk probability number (RPN) on the basis of its severity, frequency, and detectability, each scored on a 10-point scale. The committee used consensus to produce summary scores, and the failure modes within the simulation subprocess were likewise scored by consensus. A higher RPN indicated a more important failure mode.

A total of 127 and 159 possible failure modes were identified in the 2006–2007 and 2009 analyses, respectively. In the 2009 analysis, 29 failure modes were identified within the consult subprocess, 39 in simulation, 49 in treatment planning, and 42 in treatment delivery (unpublished data). The mean severity scores ranged from 3.0 to 8.2, with an overall mean of 5.3. The mean RPN scores ranged from 16.2 to 217.1, with an overall mean of 80.4. The mean RPN score for treatment delivery was significantly greater (p < .003) than that for all other subprocesses. The RPN variability, calculated for each failure mode as the standard deviation of RPN scores among participants, was large: the mean RPN variability was 59.6 (range, 15.4–202.2), suggesting wide variation among committee members in their perceptions of severity, frequency, and detectability. Overall, severity scores correlated with RPN scores.
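The scoring described in the two paragraphs above can be sketched as follows. The sketch assumes the conventional FMEA definition of the RPN as the product of the severity, frequency (occurrence), and detectability scores, each on a 1–10 scale; the text does not spell out the formula. It computes each rater's RPN for a failure mode, then the mean across raters and the standard deviation used here as the "RPN variability" (a sample standard deviation is assumed). The failure-mode names and scores are hypothetical, not the committee's data.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass(frozen=True)
class Rating:
    """One rater's scores for one failure mode, each on a 1-10 scale."""
    severity: int
    frequency: int
    detectability: int  # conventional FMEA reading: higher = harder to detect

    def rpn(self) -> int:
        # Assumed conventional formula: RPN = severity x frequency x detectability.
        for score in (self.severity, self.frequency, self.detectability):
            if not 1 <= score <= 10:
                raise ValueError(f"score out of range: {score}")
        return self.severity * self.frequency * self.detectability


def summarize(ratings: dict[str, list[Rating]]) -> list[tuple[str, float, float]]:
    """Return (failure mode, mean RPN, SD of RPN across raters), highest mean first."""
    rows = []
    for mode, rater_scores in ratings.items():
        rpns = [r.rpn() for r in rater_scores]
        # Sample standard deviation across raters; requires at least two ratings.
        rows.append((mode, mean(rpns), stdev(rpns)))
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Hypothetical scores from three raters for two failure modes.
example = {
    "Wrong CT scan used for planning": [Rating(8, 3, 5), Rating(9, 2, 6), Rating(7, 4, 4)],
    "Incorrect patient file created": [Rating(6, 4, 4), Rating(5, 3, 6), Rating(6, 5, 3)],
}
for mode, avg, sd in summarize(example):
    print(f"{mode}: mean RPN {avg:.1f}, variability (SD) {sd:.1f}")
```

A standard deviation that is large relative to the mean, as with the committee's mean variability of 59.6 against a mean RPN of 80.4, signals that raters disagree about a failure mode and that its rank should be read cautiously.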

2. Risk-Reduction Interventions

After failure modes were identified and their effects characterized, mitigation strategies were considered and prioritized. Possible interventions were evaluated in terms of their likely effectiveness, cost, and ease of execution. As guidance, we also used Grout’s four approaches to reducing human error: mistake prevention, mistake detection, designing processes to fail safely, and designing the work environment to prevent errors.29

On the basis of the FMEA, we identified numerous high-risk failure modes distributed throughout the entire treatment preparation and delivery process. The 15 “top-ranked” failure modes by RPN score were identified, and the FMEA committee met to brainstorm solutions to these potential failures (Table 1). The solutions were then scored and ranked according to feasibility and effectiveness. Seventy-nine possible solutions were identified for the top 15 failure modes, and 33 of them were considered possible targets for implementation. Action plans were then developed to implement the solutions chosen for immediate execution. Sample failure modes from this top-ranked list, and the ways in which the processes were redesigned to eliminate their potential causes, are described in Sidebar 1.
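The prioritization described above amounts to two rankings: failure modes by RPN, then candidate solutions by feasibility and effectiveness. A minimal sketch follows, assuming each solution receives hypothetical 1–10 feasibility and effectiveness scores combined by multiplication; the text does not state how the two criteria were combined. The RPN values are taken from Table 1, but the candidate solutions and their scores are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Solution:
    """A candidate intervention for one failure mode (hypothetical scoring)."""
    failure_mode: str
    description: str
    feasibility: int    # hypothetical 1-10 score
    effectiveness: int  # hypothetical 1-10 score

    @property
    def score(self) -> int:
        # Assumption: the two criteria are combined by multiplication.
        return self.feasibility * self.effectiveness


def top_failure_modes(rpn_by_mode: dict[str, int], n: int) -> list[str]:
    """Return the n failure modes with the highest RPN (ties keep input order)."""
    return sorted(rpn_by_mode, key=rpn_by_mode.__getitem__, reverse=True)[:n]


def shortlist(solutions: list[Solution], targets: list[str], min_score: int) -> list[Solution]:
    """Solutions for the targeted failure modes that meet a threshold, best first."""
    chosen = [s for s in solutions if s.failure_mode in targets and s.score >= min_score]
    return sorted(chosen, key=lambda s: s.score, reverse=True)


# RPN values from Table 1; candidate solutions and their scores are invented.
rpn = {
    "Incorrect beams and energy are chosen": 160,
    "Simulation therapist creates incorrect patient file": 96,
    "Immobilization for patient is incorrect": 75,
}
candidates = [
    Solution("Incorrect beams and energy are chosen", "Template-based beam setup", 6, 7),
    Solution("Incorrect beams and energy are chosen", "Second-person energy check", 9, 4),
    Solution("Simulation therapist creates incorrect patient file", "Scan ID card at simulation", 5, 8),
    Solution("Immobilization for patient is incorrect", "Photograph immobilization setup", 8, 6),
]
targets = top_failure_modes(rpn, n=2)
for s in shortlist(candidates, targets, min_score=40):
    print(f"{s.score:>3}  {s.description}  ({s.failure_mode})")
```

A single product score is only one reasonable way to combine the two criteria; a weighted sum or a committee vote would slot into the `score` property just as easily.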

Table 1.

Risk Probability Number (RPN) Scores for Selected “Top-Ranked” Failure Modes

| Failure Mode | RPN |
|---|---|
| Incorrectly exporting films to record and verify system | 160 |
| Needing to re-mark patient after treatment shifts are made | 160 |
| Incorrect beams and energy are chosen | 160 |
| Inability to verify patient’s marks are valid for treatment | 128 |
| Incorrect treatment plan generated because of incorrect physician contours | 100 |
| Treatment plan and film are exported incorrectly to treatment machine | 96 |
| Simulation therapist creates incorrect patient file | 96 |
| Radiation therapist writes patient setup information on paper, leading to incorrect information | 96 |
| Therapists incorrectly re-mark patient because of shifts that were written incorrectly on paper | 96 |
| Incorrect verification by radiation therapists of paper setup instructions written at the time of simulation | 80 |
| Incorrect treatment plan generated because of incorrect beam placement by dosimetry | 80 |
| Dosimetrists export dose information to dose calculation program incorrectly | 80 |
| Radiation therapist incorrectly shifts the patient’s position based on the treatment plan | 80 |
| Incorrect instructions on contrast administered to patient | 75 |
| Immobilization for patient is incorrect | 75 |

Sidebar 1. Sample Failure Modes and the Methods for Redesigning the Processes to Eliminate the Potential Causes.

Radiation Treatment Plans Designed Using the Wrong Set of Planning Information

One of the first steps in the radiation process is planning: the tumor to be treated and any normal structures to be spared are outlined, or contoured, on a special computer-assisted tomography (CT) scan acquired in the radiation oncology department during a process called “simulation.” After the physicians contour the tumor and normal tissues, the scan and the contours are transferred to radiation planners. These planners, called dosimetrists, determine the radiation beam arrangement that will best deliver radiation to the tumor while sparing normal organs as much as possible. Sometimes more than one CT scan is acquired for a patient during simulation, so a radiation plan could be created using the wrong scan. To reduce the likelihood of this error, several solutions were identified: deleting unused scans, using site-specific plan names to identify the relevant CT scan, and locking plans that are no longer required.

Patient Aligned to the Incorrect Treatment Setup Radiograph

Failure modes were also identified in the transfer of information from radiation planning to radiation treatment. On the first day of treatment, radiation therapists and physicians compare a setup radiograph from treatment planning with images acquired in real time. The setup radiograph is exported into the computer system that verifies the radiation treatment, and during this export the wrong setup film can accidentally be attached to a patient’s treatment plan. Seven solutions were identified as possible measures to reduce the likelihood of this event. After extensive discussion, the measures adopted included color-coding the center point of the radiograph to confirm that the film shows the correct positioning, printing the center-point coordinates, and adding an explicit checkbox for radiation therapists to indicate that they have verified the correct film. These measures were judged to be systematic changes that would reduce the likelihood of this adverse event.

The Incorrect Radiation Treatment Plan is Brought Up at the Computer Console for a Particular Patient

Another failure mode involved inadvertently calling up the wrong patient’s treatment plan on the computer that executes radiation treatment delivery. This failure mode was considered severe, and it arose because radiation therapists were responsible for manually pulling up individual treatment plans. To address it, we implemented a system in which a unique bar code is printed on the back of the plastic patient identification card. Before each daily treatment, the card is given to the radiation therapist, who scans the bar code into the treatment computer; the computer then automatically pulls up that patient’s treatment plan. In addition, the patient’s photo is now displayed on the treatment screen while the therapists align the patient for treatment, as an identification double-check. Together, these two interventions made this failure mode less likely and, if the error still occurred, easier to detect.
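The bar-code intervention works as a forcing function: the therapist no longer chooses a plan from a list, so the plan that loads is determined by the card that was scanned, and the displayed photo gives an independent identity check. The sketch below is hypothetical; it does not reflect the record-and-verify software actually used, and the class names, field names, and patient IDs are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TreatmentPlan:
    patient_id: str
    plan_name: str
    photo_path: str  # patient photo shown on the treatment screen


class PlanLookupError(Exception):
    """Raised when a scanned bar code does not map to an active plan."""


def load_plan_from_barcode(barcode: str, active_plans: dict[str, TreatmentPlan]) -> TreatmentPlan:
    """Return the active plan for the patient whose ID card was scanned.

    The scanned value is the only input, so there is no manual plan
    selection step that could pick another patient's plan.
    """
    plan = active_plans.get(barcode.strip())  # strip scanner line endings
    if plan is None:
        raise PlanLookupError(f"no active plan for bar code {barcode!r}; do not treat")
    return plan


# Hypothetical active plans keyed by the ID encoded on each patient's card.
plans = {
    "MRN0001": TreatmentPlan("MRN0001", "Prostate IMRT", "photos/MRN0001.jpg"),
    "MRN0002": TreatmentPlan("MRN0002", "Left breast tangents", "photos/MRN0002.jpg"),
}
plan = load_plan_from_barcode("MRN0002\n", plans)
print(f"Loaded {plan.plan_name}; display {plan.photo_path} for visual ID check")
```

An unknown bar code stops the workflow with an explicit error rather than falling back to manual selection, which is what keeps the interlock meaningful.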

Incorrect Patient Positioning with Respect to the Treatment Beams (Table Setup)

Failure modes associated with positioning the patient on the treatment table were also identified as potentially frequent and severe. For example, during the radiation treatment planning session (simulation), marks are placed on the patient’s skin to align the radiation beam with the area to be treated. Sometimes these marks must be changed or moved after radiation planning has been completed, and updating them, or replacing marks that have rubbed off, is error-prone. As a result, a patient could be treated in the wrong location if the radiation beam is aligned to the wrong marks. The Failure Mode and Effects Analysis team therefore proposed documenting the marks by photographing them to confirm their location, and the photos were placed in the patient’s chart.

These prospectively identified sources of potential error, and the interventions we designed and implemented to prevent them, were one important part of our department’s systemic patient safety improvements. The validated FMEA tool systematically and quantitatively identified vulnerabilities in our system, and we were able to use relative RPN scores to rank the risk of system failures in our complex department. This ranking was particularly useful in providing a structure for prioritizing safety improvements, given that resources will always be limited. This analysis could be applied in the radiation oncology departments of other institutions and in other process-intensive oncologic areas. Moreover, radiation oncology departments can share FMEAs or conduct site visits to learn together how to reduce risks to patients.

However, the FMEA approach has its limitations, and PRAs have been criticized for several reasons.28 The analysis requires substantial effort and time from the participants.30,31 For example, we estimate that 170 hours of staff time were required to complete the analysis from January through June 2009. Habraken et al. examined 13 FMEAs, which required an average of 69 person-hours to complete.30,32 Given the staff resources required, the FMEA process may be difficult to accomplish in a smaller clinic. In addition, as we discovered in our own case, the extensive process map and large number of nodes made it difficult to keep track of the information and keep it up to date.23 The potential events identified are limited to those drawn from the experiences of committee members. Similarly, the scoring of each event is highly variable and influenced by the outlook of the participants. Because the ranking of events depends on RPN scores, it is ultimately subjective. Despite such criticisms, we have found FMEA a valuable approach to identifying and mitigating errors in a team-oriented, prospective manner. As a result, FMEA is currently being rolled out to other radiation oncology sites within the Johns Hopkins Medical Institutions.

3. Voluntary Error and Near-Miss Reporting Systems

The goal of FMEA is to identify potential errors before they occur. Another method of safety analysis relies on error-reporting systems. Unlike FMEA, error-reporting systems collect information on errors and near-miss incidents that have already occurred in the clinical setting.33,34 When used correctly, these systems provide valuable data on actual errors and near misses in day-to-day operations. Such voluntary reporting has been a cornerstone of safety improvement efforts in the airline industry, through the Aviation Safety Action Program (ASAP), as well as in other medical fields such as anesthesiology.

The utility of voluntary reporting systems for capturing near misses and errors is also recognized in radiation oncology. Marks et al. used data from such a system to quantify the effects of introducing advanced technology into the radiotherapy clinic.9 Errors in radiation beam shaping decreased with the introduction of computer-controlled beam-shaping devices. Such results demonstrate the need to capture incidents in order to understand how our changing work environment affects patient safety.

To improve safety and quality, we implemented an incident-reporting system in our department in July 2007. This system is Web-based and uses a computerized database. Use of the system is voluntary, and any member of the department can submit a report. Any event deviating from the expected course, from consultation to treatment delivery, can be logged. The system was created to gather information on actual events in order to understand specific weaknesses in our departmental processes. Like other institutions, our hospital operates a Web-based patient safety reporting system, the patient safety network (PSN). However, we believe that the PSN is insufficient for the specific needs of radiation oncology, because a greater level of practice-specific information is required to fully understand each event. Such information includes the radiation dose and fractionation prescribed, the type of image guidance used, the disease being treated, and the anatomic sites in the treated radiation field. Although this information can be entered in the PSN, doing so relies on users to remember which details are required, and the data would not be stored in a database structure that could be readily analyzed.
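The case for a radiation oncology-specific system is essentially a data-structure argument: practice-specific fields should be required and stored in analyzable form rather than left to free text and user memory. A minimal sketch of such a record follows, assuming Python dataclasses and an invented field list based on the examples in the paragraph above (dose and fractionation, image guidance, disease, anatomic site). It is a hypothetical illustration, not the department's actual schema.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from enum import Enum


class Stage(Enum):
    CONSULT = "consult"
    SIMULATION = "simulation"
    PLANNING = "treatment planning"
    DELIVERY = "treatment delivery"


@dataclass(frozen=True)
class IncidentReport:
    """Hypothetical structured record for one RT error or near miss."""
    reported_on: date
    reporter_role: str      # e.g., "radiation therapist", "physicist"
    stage: Stage            # subprocess in which the event was detected
    reached_patient: bool   # False for a near miss
    total_dose_gy: float    # prescribed dose
    fractions: int          # prescribed number of fractions
    image_guidance: str     # e.g., "cone-beam CT", "kV films"
    disease: str
    anatomic_site: str
    narrative: str = ""

    def __post_init__(self) -> None:
        # Required RT-specific fields are validated at entry,
        # instead of relying on the reporter to remember them.
        if self.total_dose_gy <= 0 or self.fractions <= 0:
            raise ValueError("dose and fraction count must be positive")
        if not self.anatomic_site.strip():
            raise ValueError("anatomic site is required")


def count_by_stage(reports: list[IncidentReport]) -> Counter:
    """Structured fields make simple analyses possible without parsing free text."""
    return Counter(r.stage for r in reports)


reports = [
    IncidentReport(date(2009, 9, 14), "radiation therapist", Stage.DELIVERY, False,
                   50.4, 28, "cone-beam CT", "prostate cancer", "pelvis",
                   "Missing beam modifier caught before treatment"),
    IncidentReport(date(2009, 10, 2), "dosimetrist", Stage.PLANNING, False,
                   60.0, 30, "kV films", "glioblastoma", "brain"),
]
print(count_by_stage(reports))
```

Because `reporter_role` is a structured field, the same records would also support the reporting-bias analysis discussed below (for example, counting reports by professional group).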

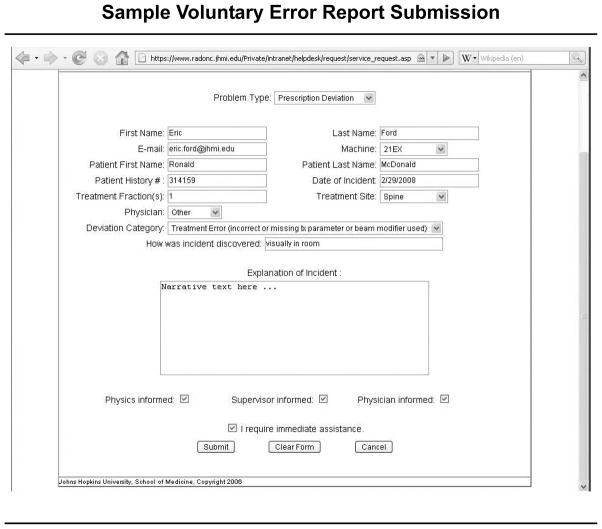

Since the implementation of the incident-reporting system in our department, 253 events have been logged. To compare the incidents logged in our voluntary reporting system with the failure modes identified in the FMEA, we reviewed and analyzed the incident reports for a three-month period (August to November 2009); a sample report is shown in Figure 1. A total of 24 prescription deviations were recorded during this period. All events identified were corrected before treatment was affected. Although all members of the department have access to the system, most events in the log were reported by radiation therapists, and no errors were reported by physicians. This reporting pattern reveals an inherent bias in the types of errors logged. The number of event reports varies considerably from month to month, and because only a nonrandom minority of actual events are reported, the system’s usefulness for estimating incident rates is limited.35 Nevertheless, reviewing real-time incidents occurring in the clinic yields valuable information that appears to complement the results of the FMEA.

Figure 1.

This sample report logged into the voluntary reporting system at the Johns Hopkins Hospital cites a treatment error involving an incorrect or missing treatment parameter or beam modifier.

In comparing the errors reported in our departmental log with the results of the FMEA, we found that 42% of the actual errors in the reporting system had not been identified as potential errors in the FMEA. This finding indicates that although FMEA can help to identify and prioritize a multitude of potential errors, it cannot be used exclusively. There are a number of possible explanations for this finding, which may suggest that improvements to the methodology used in conducting the FMEA should be explored. It is also possible that, because FMEA depends on the participants’ ability to imagine all possible errors, the failure to identify them all is actually a “failure of imagination”: our capacity to anticipate all possible outcomes is limited. This finding also reinforces Croteau’s suggestion that we should continually “risk assess” our “risk assessment process.”36 The process of shepherding a patient from consultation to successful completion of RT is highly complex. Given the number of steps required to ensure that radiation treatment is delivered precisely and without error, it is not surprising that not all possible events were conceptualized or anticipated using FMEA in only 170 staff hours. Although the staff time invested was substantial, it was still unlikely to be sufficient, as our data suggest, to predict all possible failure modes.
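The 42% figure is a coverage comparison between two sets: the failure modes the FMEA anticipated and the events actually reported. The sketch below shows that calculation, assuming each reported event has already been mapped by a reviewer to an FMEA failure-mode ID, or to `None` when no failure mode matches; that mapping is the judgment-laden part and is not automated here. All IDs and data are hypothetical.

```python
from typing import Optional


def unanticipated_fraction(event_to_mode: dict[str, Optional[str]],
                           fmea_modes: set[str]) -> float:
    """Fraction of reported events with no matching FMEA failure mode.

    event_to_mode maps a report ID to the failure-mode ID a reviewer matched
    it to, or None if the reviewer found no match.
    """
    if not event_to_mode:
        raise ValueError("no reported events to compare")
    unmatched = sum(
        1 for mode in event_to_mode.values() if mode is None or mode not in fmea_modes
    )
    return unmatched / len(event_to_mode)


# Hypothetical FMEA failure-mode IDs and reviewer-matched incident reports.
fmea_modes = {"FM-SIM-07", "FM-PLAN-12", "FM-TX-03"}
reviewed = {
    "R1": "FM-TX-03",
    "R2": None,
    "R3": "FM-PLAN-12",
    "R4": None,
    "R5": "FM-TX-03",
}
share = unanticipated_fraction(reviewed, fmea_modes)
print(f"{share:.0%} of reported events were not anticipated by the FMEA")
```

Running the comparison in the other direction, listing FMEA failure modes that never appear in the reports, is a natural companion check, although with a short review window an unobserved mode may simply be rare.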

With regard to improvement of the robustness of the voluntary error and near-miss reporting system, attention must be paid to the potential bias in reporting patterns that comes from nonuniform participation. Although this is a challenging task, we believe that it can be accomplished through the right mix of system design and implementation. Clearly, both prospective (FMEA) and retrospective (error reporting) approaches are needed, and our analysis suggests that they may provide complementary information that together can be used to improve patient safety.

One other global area identified by both FMEA and the event reporting system as deserving of further attention was communication between providers, especially at key handoff points in a patient’s care. Within our department, these handoffs frequently occur between practice groups that include physicians, nurses, radiation therapists, physicists, and dosimetrists at multiple points as a patient moves through the system from consultation to simulation to radiation treatment planning to radiation treatment delivery and clinical on-treatment care. When we evaluated the events in our voluntary incident reporting system, we noted that a number of the near-miss incidents occurred as a result of communication errors between groups. This is not unique to our field.37–41

Because these handoffs, and the data to be included at each step, are clearly defined, we are in the process of coupling our reporting system to an electronic checklist required for handoffs. Checklists are now widely used in radiation oncology and other complex areas of medicine because they can standardize a process to ensure that all elements needed for the delivery of safe care are completed.37–41 Checklists also create and use standardized language agreed upon by all parties involved in treating the patient. Studies have shown that checklist implementation can improve the quality of care by reducing central line-associated bloodstream infections, reducing wrong-site surgery, and enhancing communication when patient care plans are changed.42–47 The uniformity of a checklist can help regulate and enhance the quality of care delivered, particularly when a number of tasks are being completed by a number of people. Checklists also reduce reliance on individual human cognition and memory, which is especially important in complex processes that involve multiple professional groups.

This handoff checklist will be embedded within our current electronic work flow such that, at any point, if a near miss or error is identified, an easy-to-use required link will automatically populate the relevant patient information and capture the deviation or near miss. Such a forcing function should yield more accurate data while minimizing the burden on staff. This approach, which will be rigorously evaluated, may also help address bias in who currently participates in the voluntary reporting system. Once events are recognized using this methodology, mitigation strategies will be implemented.
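The planned handoff checklist combines two ideas: a forcing function (the handoff cannot be closed while items are unchecked) and a deviation report whose context fields are filled in automatically. The sketch below illustrates both with hypothetical checklist items, group names, and field names; the department's electronic work flow system is not described in enough detail to model, so this is an assumption-laden illustration rather than its design.

```python
from dataclasses import dataclass, field


@dataclass
class HandoffChecklist:
    """Hypothetical electronic checklist for one handoff between practice groups."""
    patient_id: str
    from_group: str   # e.g., "dosimetry"
    to_group: str     # e.g., "physics"
    items: dict[str, bool] = field(default_factory=dict)  # item -> completed?
    deviations: list[dict[str, str]] = field(default_factory=list)

    def check(self, item: str) -> None:
        if item not in self.items:
            raise KeyError(f"unknown checklist item: {item}")
        self.items[item] = True

    def report_deviation(self, description: str) -> dict[str, str]:
        """Log a near miss or error; patient and handoff context are prefilled."""
        report = {
            "patient_id": self.patient_id,
            "handoff": f"{self.from_group} -> {self.to_group}",
            "description": description,
        }
        self.deviations.append(report)
        return report

    def complete(self) -> None:
        """Forcing function: refuse to close the handoff while any item is open."""
        missing = [name for name, done in self.items.items() if not done]
        if missing:
            raise RuntimeError(f"handoff blocked; incomplete items: {missing}")


handoff = HandoffChecklist(
    "MRN0002", "dosimetry", "physics",
    items={"Correct CT scan referenced": False, "Prescription matches plan": False},
)
handoff.check("Correct CT scan referenced")
handoff.report_deviation("Prescription fraction count differed from plan; corrected")
try:
    handoff.complete()
except RuntimeError as err:
    print(err)
handoff.check("Prescription matches plan")
handoff.complete()
```

Keeping the deviation log on the checklist object is what lets the report inherit the handoff context without the user retyping it, which is the burden reduction the paragraph describes.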

Recommendations for Action

The risk identification and mitigation strategies that we have described above are effective but time-consuming. Because errors are rare overall, more efficient and effective learning is likely to result from coordination across multiple radiation oncology departments. To this end, we make four recommendations to broadly improve the safety of radiation therapy.

First, radiation oncology should develop a national safety reporting system, as we stated earlier. The creation of a national reporting system and an associated robust database for radiation-related errors should help identify systematic challenges and suggest solutions, as well as provide baseline information against which future safety initiatives may be assessed for efficacy.

Second, RT should be the focus of an entity such as a health care version of CAST, as described earlier. Many RT hazards are potential design flaws that could be mitigated with the involvement of human factors engineers. Industry has strong economic incentives to bring these devices to market, but human factors principles cannot be overlooked. A health care version of CAST, the Public Private Partnership Promoting Patient Safety (P5S),19 should address RT safety, with a focus on identifying design flaws that require correction.

Third, radiation oncology departments should consider creating a peer-to-peer review system for sharing best practices, similar to the one used by the World Association of Nuclear Operators.48 Internal peer review, such as chart rounds, is extremely important for maintaining physician quality. However, peer-to-peer assessment, in which one organization confidentially evaluates another using valid assessment tools, anonymously shares the lessons learned with others, and focuses on continuous learning, is also important for improving safety. Organizations such as the ACR and AAPM have begun to facilitate peer-to-peer review. Approximately 10% of clinics are currently accredited through the ACR/ASTRO process, and emphasis on the importance of accreditation is growing.

Fourth, radiation oncology departments need to ensure that they have staff with the expertise and resources to lead their patient safety efforts. Few departments have staff skilled in systems or human factors engineering, and few facilities provide physicians and engineers with time to monitor and improve patient safety. In our department, safety and quality issues are discussed at our weekly quality assurance conferences and at biweekly executive safety meetings. In addition, a dedicated department safety coordinator has been appointed to investigate the events logged in the reporting system and to oversee mitigation strategies. Usability testing, a human factors engineering approach to error-proofing that incorporates feedback from typical users of the technology,11 could also be used to analyze the interface between health care workers and the complex technology used in radiation oncology.

Conclusions

We described how we used prospective and retrospective risk identification strategies to inform and guide patient safety efforts within our radiation oncology department. The “basic science” of radiation treatment has received considerable support and attention in developing novel therapies to benefit patients. The time has come to apply the same focus and resources to ensuring that patients safely receive the maximal benefits possible.

Contributor Information

Stephanie A. Terezakis, Department of Radiation Oncology and Molecular Radiation Sciences, Sidney Kimmel Comprehensive Cancer Center at Johns Hopkins, Johns Hopkins School of Medicine, Baltimore.

Peter Pronovost, Quality and Safety Research Group, Department of Anesthesiology and Critical Care Medicine, and Department of Surgery, The Johns Hopkins University School of Medicine; and Department of Health Policy and Management, The Johns Hopkins Bloomberg School of Public Health, Baltimore; and a member of The Joint Commission Journal on Quality and Patient Safety’s Editorial Advisory Board.

Kendra Harris, Department of Radiation Oncology and Molecular Radiation Sciences, Johns Hopkins School of Medicine.

Theodore DeWeese, Department of Radiation Oncology and Molecular Radiation Sciences, Johns Hopkins School of Medicine.

Eric Ford, Department of Radiation Oncology and Molecular Radiation Sciences, Johns Hopkins School of Medicine.

References

- 1. Bogdanich W. Radiation offers new cures, and ways to do harm. New York Times. 2010 Jan 23. http://www.nytimes.com/2010/01/24/health/24radiation.html. [last accessed May 24, 2011]

- 2. Bogdanich W, Rebelo K. A pinpoint beam strays invisibly, harming instead of healing. New York Times. 2010 Dec 28. http://www.nytimes.com/2010/12/29/health/29radiation.html. [last accessed May 24, 2011]

- 3. Ford EC, Terezakis S. How safe is safe? Risk in radiotherapy. Int J Radiat Oncol Biol Phys. 2010 Oct 1;78:321–322. doi: 10.1016/j.ijrobp.2010.04.047.

- 4. World Health Organization (WHO). Radiotherapy Risk Profile: Technical Manual. Geneva, Switzerland; 2008. http://www.who.int/patientsafety/activities/technical/radiotherapy_risk_profile.pdf. [last accessed May 24, 2011]

- 5. Fraass BA. Errors in radiotherapy: Motivation for development of new radiotherapy quality assurance paradigms. Int J Radiat Oncol Biol Phys. 2008;71(1 Suppl):S162–S165. doi: 10.1016/j.ijrobp.2007.05.090.

- 6. Huang G, et al. Error in the delivery of radiation therapy: Results of a quality assurance review. Int J Radiat Oncol Biol Phys. 2005 Apr 1;61:1590–1595. doi: 10.1016/j.ijrobp.2004.10.017.

- 7. Macklis RM, Meier T, Weinhous MS. Error rates in clinical radiotherapy. J Clin Oncol. 1998 Feb;16:551–556. doi: 10.1200/JCO.1998.16.2.551.

- 8. Mans A, et al. Catching errors with in vivo EPID dosimetry. Med Phys. 2010 Jun;37:2638–2644. doi: 10.1118/1.3397807.

- 9. Marks LB, et al. The impact of advanced technologies on treatment deviations in radiation treatment delivery. Int J Radiat Oncol Biol Phys. 2007 Dec 1;69:1579–1586. doi: 10.1016/j.ijrobp.2007.08.017.

- 10. Burgmeier J. Failure mode and effect analysis: An application in reducing risk in blood transfusion. Jt Comm J Qual Improv. 2002 Jun;28:331–339. doi: 10.1016/s1070-3241(02)28033-5.

- 11. Israelski EW, Muto WH. Human factors risk management as a way to improve medical device safety: A case study of the Therac 25 radiation therapy system. Jt Comm J Qual Saf. 2004 Dec;30:689–695. doi: 10.1016/s1549-3741(04)30082-1.

- 12. Moss J. Reducing errors during patient-controlled analgesia therapy through failure mode and effects analysis. Jt Comm J Qual Patient Saf. 2010 Aug;36:359–364. doi: 10.1016/s1553-7250(10)36054-5.

- 13. Berenholtz SM, et al. Eliminating catheter-related bloodstream infections in the intensive care unit. Crit Care Med. 2004 Oct;32:2014–2020. doi: 10.1097/01.ccm.0000142399.70913.2f.

- 14. Logan TJ. Error prevention as developed in airlines. Int J Radiat Oncol Biol Phys. 2008;71(1 Suppl):S178–S181. doi: 10.1016/j.ijrobp.2007.09.040.

- 15. Williamson JF, et al. Quality assurance needs for modern image-based radiotherapy: Recommendations from 2007 interorganizational symposium on “Quality assurance of radiation therapy: Challenges of advanced technology.” Int J Radiat Oncol Biol Phys. 2008;71(1 Suppl):S2–S12. doi: 10.1016/j.ijrobp.2007.08.080.

- 16. American Association of Physicists in Medicine. 52nd Annual Meeting; Philadelphia. Jul 18–22, 2010. http://www.aapm.org/meetings/2010AM/MeetingProgramInfo.asp. [last accessed May 24, 2011]

- 17. American Association of Physicists in Medicine. Safety in Radiation Therapy: A Call to Action. Miami: Jun 24–25, 2010. http://www.aapm.org/meetings/2010srt/. [last accessed May 24, 2011]

- 18. Pronovost P. Radiation hazards indicates need for industrywide response. Health Aff (Millwood) Blog. 2011 Mar 10. http://healthaffairs.org/blog/author/pronovost/. [last accessed May 24, 2011]

- 19. Pronovost PJ, et al. Reducing health care hazards: Lessons from the Commercial Aviation Safety Team. Health Aff (Millwood). 2009;28:w479–w489. doi: 10.1377/hlthaff.28.3.w479. Epub 2009 Apr 7.

- 20. Hendee WR, Herman MG. Improving patient safety in radiation oncology. Med Phys. 2011 Jan;38:78–82. doi: 10.1118/1.3522875.

- 21. American Association of Physicists in Medicine. Statement of Michael G. Herman, Ph.D., FAAPM, FACMP, on behalf of the American Association of Physicists in Medicine (AAPM) before the Subcommittee on Health of the House Committee on Energy and Commerce. 2010 Feb 26. http://www.aapm.org/publicgeneral/StatementBeforeCongress.asp. [last accessed May 24, 2011]

- 22. U.S. Food and Drug Administration. Public Meeting: Device Improvements to Reduce the Number of Under-Doses, Over-Doses, and Misaligned Exposures From Therapeutic Radiation. 2010 Jun 9–10. http://www.fda.gov/MedicalDevices/NewsEvents/WorkshopsConferences/ucm211110.htm. [last accessed May 24, 2011]

- 23. Ford EC, et al. Evaluation of safety in a radiation oncology setting using failure mode and effects analysis. Int J Radiat Oncol Biol Phys. 2009 Jul 1;74:852–858. doi: 10.1016/j.ijrobp.2008.10.038.

- 24. Reason JT. Managing the Risks of Organizational Accidents. Aldershot, U.K.: Ashgate Publishing; 1997.

- 25. Shafiq J, et al. An international review of patient safety measures in radiotherapy practice. Radiother Oncol. 2009 Jul;92:15–21. doi: 10.1016/j.radonc.2009.03.007.

- 26. Duwe B, Fuchs BD, Hansen-Flaschen J. Failure mode and effects analysis application to critical care medicine. Crit Care Clin. 2005 Jan;21:21–30. doi: 10.1016/j.ccc.2004.07.005.

- 27. Sheridan-Leos N, Schulmeister L, Hartranft S. Failure mode and effect analysis: A technique to prevent chemotherapy errors. Clin J Oncol Nurs. 2006 Jan;10:393–398. doi: 10.1188/06.CJON.393-398.

- 28. Faye H, et al. Involving intensive care unit nurses in a proactive risk assessment of the medication management process. Jt Comm J Qual Patient Saf. 2010 Aug;36:376–384. doi: 10.1016/s1553-7250(10)36056-9.

- 29. Grout JR. Mistake proofing: Changing designs to reduce error. Qual Saf Health Care. 2006 Dec;15(Suppl 1):s44–s49. doi: 10.1136/qshc.2005.016030.

- 30. Ashley L, et al. A practical guide to failure mode and effects analysis in health care: Making the most of the team and its meetings. Jt Comm J Qual Patient Saf. 2010 Aug;36:351–358. doi: 10.1016/s1553-7250(10)36053-3.

- 31. Riehle MA, Bergeron D, Hyrkas K. Improving process while changing practice: FMEA and medication administration. Nurs Manage. 2008 Feb;39:28–33. doi: 10.1097/01.NUMA.0000310533.54708.38.

- 32. Habraken MM, et al. Prospective risk analysis of health care processes: A systematic evaluation of the use of HFMEA in Dutch health care. Ergonomics. 2009 Jul;52:809–819. doi: 10.1080/00140130802578563.

- 33. Cooke DL, Dunscombe PB, Lee RC. Using a survey of incident reporting and learning practices to improve organisational learning at a cancer care centre. Qual Saf Health Care. 2007 Oct;16:342–348. doi: 10.1136/qshc.2006.018754.

- 34. Williams MV. Improving patient safety in radiotherapy by learning from near misses, incidents and errors. Br J Radiol. 2007 May;80(953):297–301. doi: 10.1259/bjr/29018029.

- 35. Pronovost PJ, Miller MR, Wachter RM. Tracking progress in patient safety: An elusive target. JAMA. 2006 Aug 9;296:696–699. doi: 10.1001/jama.296.6.696.

- 36. Croteau RJ. Risk assessing risk assessment. Jt Comm J Qual Patient Saf. 2010 Aug;36:348–350. doi: 10.1016/s1553-7250(10)36052-1.

- 37. Pronovost PJ, et al. Toward learning from patient safety reporting systems. J Crit Care. 2006 Dec;21:305–315. doi: 10.1016/j.jcrc.2006.07.001.

- 38. Gawande A. The checklist: If something so simple can transform intensive care, what else can it do? New Yorker. 2007 Dec 10:86–101.

- 39. Gawande AA, et al. Analysis of errors reported by surgeons at three teaching hospitals. Surgery. 2003 Jun;133:614–621. doi: 10.1067/msy.2003.169.

- 40. Lingard L, et al. Communication failures in the operating room: An observational classification of recurrent types and effects. Qual Saf Health Care. 2004 Oct;13:330–334. doi: 10.1136/qshc.2003.008425.

- 41. Sutcliffe KM, Lewton E, Rosenthal MM. Communication failures: An insidious contributor to medical mishaps. Acad Med. 2004 Feb;79:186–194. doi: 10.1097/00001888-200402000-00019.

- 42. Pronovost P, et al. An intervention to decrease catheter-related bloodstream infections in the ICU. N Engl J Med. 2006 Dec 28;355:2725–2732. doi: 10.1056/NEJMoa061115.

- 43. Lingard L, et al. Evaluation of a preoperative checklist and team briefing among surgeons, nurses, and anesthesiologists to reduce failures in communication. Arch Surg. 2008 Jan;143:12–17. doi: 10.1001/archsurg.2007.21.

- 44. Verdaasdonk EG, et al. Can a structured checklist prevent problems with laparoscopic equipment? Surg Endosc. 2008 Oct;22:2238–2243. doi: 10.1007/s00464-008-0029-3.

- 45. Makary MA, et al. Operating room briefings and wrong-site surgery. J Am Coll Surg. 2007 Feb;204:236–243. doi: 10.1016/j.jamcollsurg.2006.10.018.

- 46. Clay AS, et al. Debriefing in the intensive care unit: A feedback tool to facilitate bedside teaching. Crit Care Med. 2007 Mar;35:738–754. doi: 10.1097/01.CCM.0000257329.22025.18.

- 47. Levy MM, et al. Sepsis change bundles: Converting guidelines into meaningful change in behavior and clinical outcome. Crit Care Med. 2004 Nov;32(11 Suppl):S595–S597. doi: 10.1097/01.ccm.0000147016.53607.c4.

- 48. Revuelta R. Operational experience feedback in the World Association of Nuclear Operators (WANO). J Hazard Mater. 2004 Jul 26;111:67–71. doi: 10.1016/j.jhazmat.2004.02.025.