Abstract

Purpose

To prepare public systems to implement evidence-based prevention programs for adolescents, it is necessary to have accurate estimates of programs’ resource consumption. When evidence-based programs are implemented through a specialized prevention delivery system, additional costs may be incurred during cultivation of the delivery infrastructure. Currently, there is limited research on the resource consumption of such delivery systems and programs. In this article, we describe the resource consumption of implementing the PROSPER (PROmoting School–Community–University Partnerships to Enhance Resilience) delivery system for a period of 5 years in one state, and how the financial and economic costs of its implementation affect local communities as well as the Cooperative Extension and University systems.

Methods

We used a six-step framework for cost analysis based on the Cost–Procedure–Process–Outcome Analysis model (Yates, Analyzing costs, procedures, processes, and outcomes in human services: An introduction, 1996; Yates, 2009). This method entails defining the delivery system; bounding cost parameters; identifying, quantifying, and valuing systemic resource consumption; and conducting sensitivity analysis of the cost estimates.

Results

Our analyses estimated both the financial and economic costs of the PROSPER delivery system. Evaluation of PROSPER illustrated how costs vary over time depending on the primacy of certain activities (e.g., team development, facilitator training, program implementation). Additionally, this work describes how the PROSPER model cultivates a complex resource infrastructure and provides preliminary evidence of systemic efficiencies.

Conclusions

This work highlights the need to study the costs of diffusion across time and broadens definitions of what is essential for successful implementation. In particular, cost analyses offer innovative methodologies for analyzing the resource needs of prevention systems.

Keywords: Prevention delivery systems, Economic analysis, Diffusion, Sustainability, Implementation science

It is increasingly clear that evidence-based preventive interventions (EBPIs) need to be implemented on a much greater scale and sustained over time to achieve a significant public health impact [1,2,3]. This recognition has spurred the development of community-based delivery systems capable of widely diffusing EBPIs [4,5]. Such delivery systems are instituted to cultivate community capacity for effective EBPI implementation as well as to achieve local buy-in and sustainability. Despite growing evidence of the effectiveness of such delivery methods [6,7], little research has explored the financial and economic impact of these prevention systems. To install such systems in the existing social service infrastructure, it is important to have accurate estimates of their resource consumption, to plan effectively for their introduction, and to assess their efficiency in real-world contexts (e.g., whether program benefits outweigh their costs). Specifically, by evaluating the resource consumption of formal systems that deliver EBPIs, a more precise accounting of a given program’s resource needs can be obtained. This article presents the results of a financial and economic cost analysis of one such delivery system, known as PROSPER (PROmoting School–Community–University Partnerships to Enhance Resilience).

Although considerable work has evaluated the economic impact of some EBPIs, most reports include only a limited evaluation of program costs and tend to focus exclusively on the resources directly required for programming (i.e., “day-of-implementation” costs). What has not been examined are the system-level resources that may be necessary for program adoption, implementation, and sustainability. These include the costs of planning, recruitment, technical assistance, resource generation, and so forth. Such additional expenditures, which make up prevention system costs, may account for a substantial proportion of programming costs, and their inclusion is necessary for accurate estimates.

Diffusing Sustained Programming: Prevention Systems

Prevention systems may be used to cultivate local community ownership and decision making to promote diffusion of EBPIs [2,5,8]. The PROSPER delivery system focuses not only on program implementation but also on nurturing sustainable prevention efforts that serve community needs [2,9]. This approach contrasts with traditional implementation models that initiate prevention efforts with limited seed funding but do not sustain support after the earliest stages of planning, often leaving communities unable to maintain the effort [10]. Instead, the PROSPER delivery system uses early resource streams to foster self-sustaining local efforts, so that programs reach a larger proportion of the population for a longer period [3].

The PROSPER delivery system accomplishes this by linking stakeholders from the state Cooperative Extension System (CES) with local public schools to deliver school- and family-based EBPIs to adolescents in the sixth and seventh grades (e.g., Life Skills Training, Strengthening Families Program 10–14). The CES is a network for diffusing research-based knowledge from land-grant universities to local extension agents in more than 3,000 U.S. counties [11]. These agents partner with a local school administrator to lead prevention teams, which include representatives of a variety of local interests, with the goal of delivering EBPIs within their communities. University faculty support the teams’ delivery by providing training and technical assistance through the CES’s existing infrastructure [12]. A randomized controlled trial of the PROSPER delivery system (24 communities, 17,701 adolescent participants) has previously demonstrated the system’s effectiveness in promoting EBPI adoption, implementation, and sustainability [13,14] and in significantly reducing rates of adolescent substance abuse [7,15,16].

Evaluating Financial and Economic Costs of Prevention Systems

To better understand the value of the PROSPER model, we consider system-level resource consumption as well as both its financial and economic costs. Resource consumption refers to the use of capital (e.g., knowledge, equipment, participant incentives, sustainability funding) as well as space and labor resources [17]. In turn, the resources consumed by a particular activity may be valued in terms of their financial costs (i.e., the monetary outlays to secure the capital, space, and labor needed for an activity). Resource consumption may also be evaluated in terms of its economic cost (i.e., the value of all resources consumed by an activity, as measured by the cost of not using these resources for another purpose, known as an opportunity cost [15,18–20]).

Whether a cost analysis evaluates financial or economic costs depends on the perspective, scope, and period of the analysis. For instance, cost analysis perspectives vary by payer, with some perspectives being more comprehensive than others (societal costs vs. participant costs). A financial cost analysis tends to focus only on the costs incurred by the organization (e.g., company, school) overseeing program distribution. Financial cost estimates can be used to identify and estimate the resource needs of different activities and procedures within a system. Alternatively, an economic cost analysis considers the total societal impact of an activity. From this perspective, any cost incurred by an individual or organization that would not otherwise have been incurred is considered an economic cost.
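To make the distinction concrete, here is a minimal Python sketch, assuming each cost item is tagged with who bears it and whether it is an actual monetary outlay. The `CostItem` fields, payer labels, and dollar amounts are hypothetical and are not drawn from PROSPER data; transfer payments, which a societal analysis would exclude, are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostItem:
    description: str
    payer: str        # who bears the cost, e.g., "extension" or "participant"
    amount: float     # dollar value of the resource consumed
    is_outlay: bool   # True if it is a budgeted monetary expenditure


def financial_cost(items, organization):
    """Financial perspective: monetary outlays borne by one organization."""
    return sum(i.amount for i in items if i.payer == organization and i.is_outlay)


def economic_cost(items):
    """Societal perspective: value of every resource consumed, including unpaid time."""
    return sum(i.amount for i in items)


# Hypothetical items for illustration; not PROSPER data.
items = [
    CostItem("facilitator wages", "extension", 12_000.0, True),
    CostItem("curriculum purchase", "extension", 3_000.0, True),
    CostItem("volunteer team member time", "team member", 4_500.0, False),
    CostItem("family time attending sessions", "participant", 6_000.0, False),
]

print(financial_cost(items, "extension"))  # 15000.0
print(economic_cost(items))                # 25500.0
```

The gap between the two totals is the unpaid time that never appears in a budget, which is why the economic estimate is the larger of the two.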

These varied perspectives imply differences in which costs should be considered (scope) and how long consumption should be tracked (period). Consequently, financial and economic cost analyses answer different questions about resource consumption and, used together, may provide estimates that have both real-world utility and generalizability. Financial analyses can inform planning for future field implementation, whereas analyses of economic costs provide assessments of total program resource requirements and are essential for considering costs in the context of outcomes (e.g., cost-effectiveness and cost-benefit analyses [16]).

Although evaluations of the costs and benefits of school and family prevention programs demonstrate that sizeable savings can occur from the delivery of EBPIs [21], researchers have yet to assess the costs of prevention systems themselves (i.e., the costs needed to facilitate successful adoption, implementation, and sustainability), much less assess their economic benefit. One factor hindering this work is insufficient tracking of resources used, which is essential for accurate cost analyses. Consequently, the PROSPER randomized controlled trial was designed to prospectively track resource consumption to study prevention system costs.

We describe the financial and economic costs of installing the PROSPER delivery system within the seven rural Pennsylvania communities involved in this trial [12]. First, we outline our methodological framework for evaluating systemic costs. We then present the results of the analysis, including longitudinal estimates of PROSPER’s infrastructure expenditures and financial cost estimates for adopting, implementing, and sustaining the delivery system. Finally, we compare the cost of delivering EBPIs with and without PROSPER and discuss the value of estimating program diffusion costs.

Methods

Procedure

We evaluated the resources consumed by the PROSPER trial using a six-step framework for conducting financial and economic cost analyses of health initiatives (Table 1; [17,19]). Using this approach, we (1) defined the project; (2) bounded the perspective, period, and scope; (3) identified, (4) quantified, and (5) valued the resources essential to installing the delivery system; and finally, (6) evaluated estimate uncertainty using sensitivity analyses. Using these estimates, we valued the cost of delivering different EBPIs within the PROSPER model. Additionally, we used the financial estimates to assess the evolution of the system’s infrastructure as well as the costs of adopting, implementing, and sustaining EBPIs within PROSPER. Costs were estimated from budgetary, sustainability, and volunteer-time data that tracked both expenditures from the parent grant and inputs from any outside sources. Because these evaluations are complex, additional discussion of the approaches used to calculate the estimates is provided in the Appendix.

Table 1.

Analytic steps for PROSPER cost analysis

| Step | Method | Result |
|---|---|---|
| 1. Definition | Integrate PROSPER sustainability and CPPOA models: project goals and purpose → outcomes; project objectives and strategies → processes; project procedures → procedures; project activity costs → costs | Analytic CPPOA structure |
| 2. Bounding | Delineate bounds of analysis. Perspectives: local team, Cooperative Extension, University team, and societal; analytic horizon: project years 1–5 (2002–2006); scope: costs directly related to sustaining quality programming and well-functioning teams | Boundaries of analysis |
| 3. Identification | Conduct qualitative cost analysis: classification of 16 project procedures; classification of 116 project activities | Integrative Cost Matrix (ICM) |
| 4. Quantification | Account for project financial costs and resource consumption: removal of first-copy and research expenditures; application of activity-based costing (ABC) procedure; allocation of resource consumption to team- and program-level procedures | Estimates of project expenditures by activity |
| 5. Valuation | Estimate opportunity costs and adjust for market imperfections. Opportunity costs: team co-leader, team member, participants; market adjustments: inflation | Estimate of economic costs |
| 6. Sensitivity analysis | Employ extreme-scenario and one-way sensitivity analyses. Assumption 1: allocation rate of university personnel costs to implementation activities; Assumption 2: allocation rate of university overhead to implementation activities; Assumption 3: allocation rate of university expenses to implementation activities; Assumption 4: cost of family curriculum | Final cost estimate |

Definition

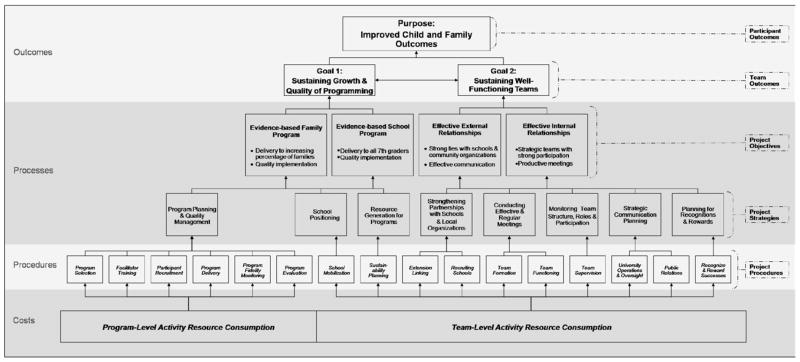

To define this analysis, we adopted the Costs → Procedures → Processes → Outcomes Analysis model (CPPOA) for the evaluation of human service systems [22]. The CPPOA model conceptualizes a service system hierarchically: system costs go toward specific system procedures (i.e., coordinated system activities used for a specific purpose) that, in turn, support broader processes, which are believed to fulfill systemic goals (i.e., these processes represent the larger constructs that mediate an intervention and its subsequent outcomes). The CPPOA model is thus predicated on the assumption that by providing the necessary resources and using the appropriate procedures, desired outcomes can be achieved. To guide this cost analysis, we applied this framework to the PROSPER model by expanding the system’s “Sustainability Model,” which articulates the delivery system’s processes and outcomes, to include system procedures and costs (Figure 1; [3]).

Figure 1.

PROSPER Costs → Procedures → Processes → Outcomes.

PROSPER’s sustainability model illustrates that the delivery system’s purpose is to improve child and family well-being, which may be achieved by fulfilling two goals: (1) sustaining growth of EBPIs, and (2) sustaining well-functioning teams. These two goals represent the outcomes for PROSPER, whereas the improvement of child and family well-being represents the ultimate public health impact desired. To achieve these team and participant outcomes, four objectives were described, which were further broken down into eight project strategies. These objectives and strategies represent the processes believed to produce the desired system outcomes (Figure 1). The sustainability model thus represents the system’s outcomes and processes, but does not articulate the specific procedures and costs necessary to enact them. Consequently, to assess resource consumption, we ultimately sought to identify the costs of the project procedures and activities that are required to enact each procedure (Figure 1, bottom row).

Bounding

The process of bounding further defines specific parameters of the analysis (perspective, period, scope) and provides the consistent inclusion criteria necessary to obtain accurate estimates. As noted earlier, the perspective of a cost analysis guides the selection of which costs to include in the evaluation (e.g., intervention curriculum represents a cost to the program provider, but generally not a cost to the participant [20]). This analysis evaluated four cost perspectives (Table 1). These perspectives provide insight into the resource inputs required from each respective group to support PROSPER. We estimated costs over the first 5 years of the PROSPER delivery system’s randomized controlled trial (period). Additionally, we limited inclusion of resource inputs to those directly related to the two goals articulated in the sustainability model (scope).

The next four steps (identification, quantification, valuation, and sensitivity analysis) describe how we identified, quantified, and valued PROSPER’s costs and assessed the uncertainty of the resulting estimates, using qualitative cost analysis, activity-based costing, economic valuation models, and sensitivity analyses, respectively.

Identification

First, all assets and activities that consume resources were identified through a qualitative cost analysis to capture the wide variety of inputs essential to programming goals [23]. The qualitative cost analysis mapped the infrastructure expenditures of the PROSPER system by identifying resource-consuming activities across system levels (i.e., Local Team ⇔ CES-System ⇔ University Team). For instance, nine general resource-consuming activities related to sustainability planning were identified (e.g., grant writing, fund-raising), which in turn informed the quantification and valuation steps. Further description of this process is available in the Appendix, Section B.
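The article does not say how its Integrative Cost Matrix was stored. As an illustration only, the sketch below assumes a mapping from (procedure, system level) pairs to resource-consuming activities, which makes it easy to see which procedures consume resources at more than one level. Apart from grant writing, fund-raising, and the procedure names, the activities and level assignments are hypothetical.

```python
from collections import defaultdict

# Hypothetical Integrative Cost Matrix: (procedure, system level) -> activities.
icm = defaultdict(list)


def record(procedure, level, activity):
    """Register one resource-consuming activity under a procedure and system level."""
    icm[(procedure, level)].append(activity)


record("sustainability planning", "Local Team", "grant writing")
record("sustainability planning", "Local Team", "fund-raising")
record("sustainability planning", "University Team", "technical assistance on grant writing")
record("facilitator training", "University Team", "training workshops")
record("facilitator training", "CES", "travel for trainees")


def levels_by_procedure(matrix):
    """Return, for each procedure, the set of system levels where it consumes resources."""
    spans = defaultdict(set)
    for procedure, level in matrix:  # keys are (procedure, level) tuples
        spans[procedure].add(level)
    return dict(spans)


spans = levels_by_procedure(icm)
shared = sorted(p for p, levels in spans.items() if len(levels) > 1)
print(shared)  # ['facilitator training', 'sustainability planning']
```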

Quantification

We then quantified the resource consumption of PROSPER model to estimate the system’s financial costs. We used activity-based costing to assign expenditures to specific project procedures (i.e., strategies). For instance, the costs of PROSPER’s resource generation process were estimated by considering the nine identified activities that support the “sustainability planning” procedure (Figure 1). In turn, by monetizing the time, equipment, travel, and space requirements to successfully carry out each sustainability planning activity, we obtained an aggregate estimate of the delivery system’s expenditures on resource generation.
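Activity-based costing rolls activity-level resource use up to the procedures those activities support. Here is a minimal sketch, assuming labor is valued as hours times an hourly wage and that equipment, travel, and space are already expressed in dollars; the `Activity` class, hours, wages, and amounts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Activity:
    name: str
    procedure: str
    hours: float
    hourly_wage: float
    equipment: float = 0.0
    travel: float = 0.0
    space: float = 0.0

    def cost(self) -> float:
        # Monetize labor time, then add the other direct resource requirements.
        return self.hours * self.hourly_wage + self.equipment + self.travel + self.space


def cost_by_procedure(activities):
    """Sum activity costs within each procedure they support."""
    totals = {}
    for a in activities:
        totals[a.procedure] = totals.get(a.procedure, 0.0) + a.cost()
    return totals


# Hypothetical activities for illustration; not PROSPER data.
activities = [
    Activity("grant writing", "sustainability planning", hours=40, hourly_wage=30.0, space=100.0),
    Activity("fund-raising", "sustainability planning", hours=25, hourly_wage=30.0, travel=150.0),
    Activity("training workshop", "facilitator training", hours=16, hourly_wage=45.0, equipment=200.0),
]
print(cost_by_procedure(activities))
# {'sustainability planning': 2200.0, 'facilitator training': 920.0}
```

Keeping costs at the activity level means the same records can later be regrouped, for example by year or by adoption, implementation, and sustainability phase.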

Valuation

After quantification, we estimated PROSPER’s economic costs by valuing different measures of resource consumption (e.g., financial costs, participant time) to determine the opportunity cost of PROSPER’s operation. Opportunity costs represent the difference in costs between using the same resources for PROSPER and using them for the next best alternative (i.e., program delivery without a prevention system [17]), and they differentiate economic costs from financial costs [24]. Including these costs is important for valuing resource consumption that is not generally captured in project budgets (e.g., volunteer time, in-kind donations, participant time, sustainability funding) but is essential to the system’s success [25]. Finally, costs were discounted at a 3% annual rate to the first year of system operations (2004) and adjusted for inflation to 2010 dollars.
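The discounting and inflation adjustments can be sketched as follows, under stated assumptions: the price-index values and annual expenditures are hypothetical (not CPI data or PROSPER figures), and converting to 2010 dollars before discounting to the 2004 base year is an assumed ordering that the article does not specify. Discounting divides a cost incurred in year t by (1 + r)^(t − t0).

```python
def to_constant_dollars(amount, year, price_index, target_year=2010):
    """Re-express a nominal amount in target_year dollars using a price index."""
    return amount * price_index[target_year] / price_index[year]


def discount(amount, year, reference_year, rate=0.03):
    """Present value, as of reference_year, of an amount incurred in a later year."""
    return amount / (1 + rate) ** (year - reference_year)


# Hypothetical index values and expenditures, for illustration only.
price_index = {2004: 90.0, 2005: 93.0, 2006: 96.0, 2010: 100.0}
nominal_costs = {2004: 100_000.0, 2005: 120_000.0, 2006: 110_000.0}

adjusted = {
    year: discount(to_constant_dollars(cost, year, price_index), year, reference_year=2004)
    for year, cost in nominal_costs.items()
}
print({year: round(value, 2) for year, value in adjusted.items()})
print(round(sum(adjusted.values()), 2))
```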

Sensitivity analyses

Sensitivity analyses were used to evaluate uncertainty in the estimates [20]. This involved varying certain assumptions about programming costs to gauge the variability of the estimates, so that a range of estimates is obtained. To accomplish this, we conducted extreme-scenario analyses to describe the best- and worst-case cost estimates [26], whereby the minimum and maximum costs of an activity are used to represent uncertainty in actual resource consumption. Within this analysis, uncertainty arose mainly from implementation within a university research setting. Each year, personnel time was disaggregated into time spent installing the PROSPER system and time spent conducting research (share spent on installation: M = 41.1%, SD = 5.1%). Consequently, range estimates for personnel time and expenses (e.g., equipment, space) were evaluated at approximately two standard deviations from the mean (30%–50%). University overhead was allocated at a low estimate common within nonuniversity settings and a high estimate that reflected the full proportion of personnel time expended on nonresearch activities (15%–41.1%; [17]). Finally, a range of allocation rates for program curriculum costs was considered to account for the possibility of no or varying expenditures to procure curriculum (0%–100%; further discussion available in the Appendix, p. 11).
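A minimal sketch of extreme-scenario and one-way analyses follows. The allocation ranges are those stated above; treating each assumption as a share of a separate cost pool, and the pool and fixed-cost amounts themselves, are simplifying assumptions for illustration.

```python
# Allocation-rate ranges (low, high) from the text; cost pools are hypothetical.
RANGES = {
    "personnel": (0.30, 0.50),   # university personnel costs allocated to implementation
    "expenses": (0.30, 0.50),    # university expenses (equipment, space)
    "overhead": (0.15, 0.411),   # university overhead allocation rate
    "curriculum": (0.0, 1.0),    # share of curriculum cost actually incurred
}
POOLS = {"personnel": 1_000_000.0, "expenses": 200_000.0,
         "overhead": 300_000.0, "curriculum": 50_000.0}
FIXED = 500_000.0  # hypothetical costs not subject to any allocation assumption


def total_cost(rates):
    """Fixed costs plus each cost pool scaled by its allocation rate."""
    return FIXED + sum(POOLS[k] * rates[k] for k in POOLS)


def extreme_scenarios():
    """Best case: every assumption at its low end; worst case: every one at its high end."""
    best = total_cost({k: lo for k, (lo, _) in RANGES.items()})
    worst = total_cost({k: hi for k, (_, hi) in RANGES.items()})
    return best, worst


def one_way(base_rates):
    """Vary one assumption across its range while holding the others at base values."""
    return {
        k: (total_cost({**base_rates, k: lo}), total_cost({**base_rates, k: hi}))
        for k, (lo, hi) in RANGES.items()
    }


best, worst = extreme_scenarios()
base = {k: (lo + hi) / 2 for k, (lo, hi) in RANGES.items()}
print(best, worst)
print(one_way(base))
```

The extreme-scenario pair brackets the total, while the one-way results show which single assumption moves the estimate most.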

Results

On the basis of the six-step cost analysis of PROSPER’s resource consumption, we first provide the total financial and economic costs of the system. We then break down the financial estimates of cultivating PROSPER’s infrastructure as well as the resource requirements for adoption, implementation, and sustainability of EBPIs within the delivery system.

Financial and economic costs of PROSPER

This analysis was conducted during a randomized controlled trial of PROSPER’s implementation within a university context. Consequently, it was uncertain whether implementing PROSPER in a nonresearch context would require the same level of university-level expenditures, which are expected to be lower outside a research setting. This uncertainty is reflected in our results as range estimates. The total financial costs of implementing the PROSPER trial over 5 years ranged from $2,663,434 (“best case”) to $3,529,196 (“worst case”). The total economic costs for the entire PROSPER system, which include all volunteer and participant opportunity costs as well as resources generated by local sustainability efforts, were estimated at between $4,343,346 and $5,209,135 (Table 2).

Table 2.

Cost estimates of PROSPER delivery system

| | Low estimate | High estimate | Inclusion criteria |
|---|---|---|---|
| Cost perspective | | | |
| Total economic costs | $4,343,346 | $5,209,135 | Total opportunity cost of implementing the PROSPER project to partner universities, the Cooperative Extension System, and local communities (i.e., includes team co-leader, team member, and participant costs); excludes systemic transfer costs |
| Total financial costs | $2,663,434 | $3,529,196 | All resources consumed by the PROSPER system (i.e., University, Cooperative Extension, and local teams); excludes team co-leader, team member, and participant opportunity costs |
| Financial cost of resources consumed by University prevention team (n = 1) | $1,435,959 | $1,871,851 | All resources consumed by the University Prevention Team, including all prevention personnel salary and wage expenditures as well as all operations costs (including university overhead); excludes Cooperative Extension and local costs |
| Cooperative Extension System (n = 1) | $1,030,876 | $1,343,803 | All resources consumed by the Cooperative Extension System, including all extension faculty, prevention coordinator, and local team leader salaries as well as direct expenditures by the Cooperative Extension System for operations (e.g., travel, copying, and printing); excludes university and local community costs |
| Local prevention team (n = 7) | $81,488 | $106,224 | All resources consumed by the Local Prevention Teams (per team), including team functioning and direct costs of program implementation (e.g., facilitators, materials, meals), but not program-level capacity-building expenditures (i.e., curriculum and training) |
| Average costs of program delivery | | | |
| “Day-of-implementation” family program costs (per family attending) (n = 1,127) | $278 | $348 | All nonresearch incentives (recruitment, prizes, meals, child care), curriculum, and facilitator costs (training and implementation) (high estimate also includes curriculum and supplies), divided by the number of families graduating from the family program |
| “Day-of-implementation” school program costs (per student) (n = 8,049) | $9 | $27 | All curriculum and training costs (high estimate also includes facilitator, incentives, and program supplies), divided by the number of students participating in the school program |
| Total financial costs (per youth served) (n = 9,176) | $311 | $405 | All resources consumed by the PROSPER system (i.e., University, Cooperative Extension, and local teams), excluding team co-leader, team member, and participant opportunity costs, divided by the total number of youth served |

Using these estimates, we assessed the average costs of delivering EBPIs and supporting the local teams. First, we estimated the “day-of-implementation” costs, as calculated in previous analyses [27,28]. The average cost per family that attended the family intervention (n = 1,127) was between $278 and $348, and the cost of delivering the school program (n = 8,049) ranged from $9 to $27 per student (Table 2). We then calculated the average costs of program delivery including all team-level costs. These financial costs ranged between $311 and $405 per youth served, and the average economic cost ranged from $486 to $580 per youth served. Finally, we considered the average financial and economic costs of supporting a local team. The financial cost of supporting a local team for a year ranged from $81,488 to $106,224, whereas the economic cost ranged from $129,485 to $154,221 (Table 2).
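Per-unit averages divide a cost total by the number of units served. In Table 2, the per-youth denominator equals the family and school counts combined (1,127 + 8,049 = 9,176). The sketch below shows the calculation with a hypothetical cost total; it is not intended to reproduce the published per-youth figures.

```python
# Participant counts from Table 2; the cost total below is hypothetical.
FAMILIES_GRADUATED = 1_127   # family program
STUDENTS_SERVED = 8_049      # school program


def average_cost(total_cost: float, units_served: int) -> float:
    """Average (per-unit) cost: total resources consumed divided by units served."""
    if units_served <= 0:
        raise ValueError("units_served must be positive")
    return total_cost / units_served


youth_served = FAMILIES_GRADUATED + STUDENTS_SERVED  # 9,176: per-youth denominator in Table 2
hypothetical_total = 3_000_000.0  # illustrative system-wide cost, not a PROSPER estimate
print(youth_served)
print(round(average_cost(hypothetical_total, youth_served), 2))
```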

Cost estimate breakdown for decision makers

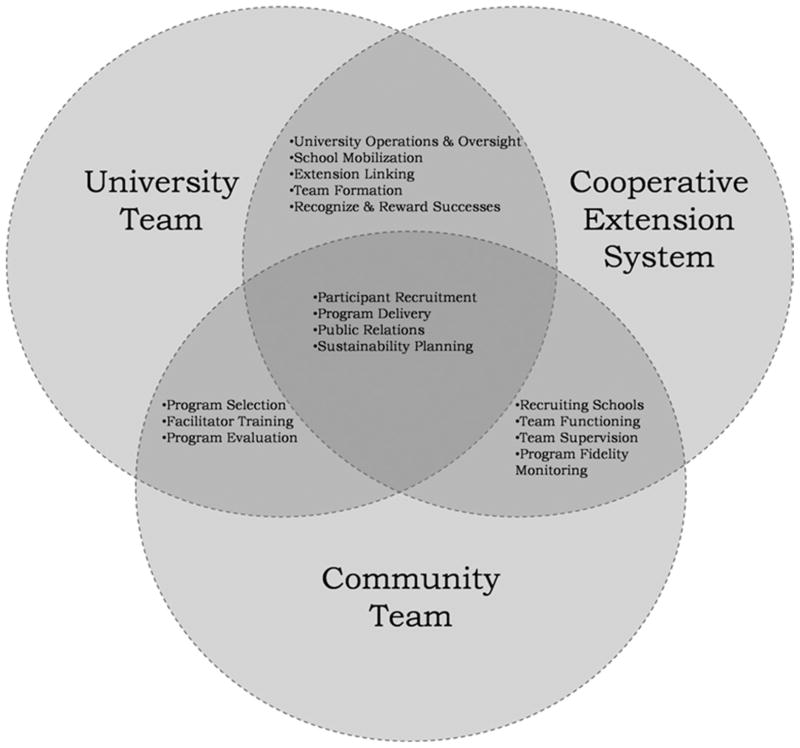

Financial cost estimates for the PROSPER model illustrate the complex infrastructure that developed during the first 5 years (Figure 2). These estimates also provide insight into the differing resource needs of using PROSPER to adopt, implement, and sustain EBPIs.

Figure 2.

Intra-system overlap of PROSPER project procedures. Note: This figure denotes overlap between the three project systems (i.e., Local Team ↔ Cooperative Extension System ↔ University Team) in the project procedures used to implement PROSPER. To estimate the systemic costs of implementation, procedures were further disaggregated into specific activities to differentiate resource consumption among the project systems.

Financial costs of installing PROSPER’s infrastructure

This analysis of PROSPER’s installation costs illustrated the developmental process of cultivating new health service systems. We provide proportions of the total financial resources consumed by different system procedures on an annual basis in the following paragraphs (Figures A8, A9).

In the first year of PROSPER’s implementation, we found that resources were allocated to facilitating partnerships between extension and prevention faculty and to identifying community sites (University Operations and Oversight: 22.3% of annual expenditures). This nascent infrastructure channeled resources toward cultivating extension capacity; resources were then used to recruit local extension agents (extension linking: 4.9%) and school administrators to lead the community teams (school recruitment: 1%). As extension and team leaders increased membership (team formation: 3.8%), resources were allocated to strengthening partnerships between the local team and school district (school mobilization: 6.0%). After teams selected their family program (program selection: 1.9%), the university partners organized training opportunities (facilitator training: 24.8%) and began to provide technical assistance regarding family recruitment (participant recruitment: 3.9%).

During the second and third years, an increasing share of resources went to sustainability planning (Y2 = 1.2%, Y3 = 5.8%). Expenditures to facilitate team formation declined from year 2 (2.7%) to year 3 (0.5%). At the program level, facilitator training continued (Y2 = 11.3%, Y3 = 10.2%) as teams selected their school program (Y2 = 0.8%). Increased emphasis was placed on participant recruitment (Y1 = 3.9%, Y2 = 11.3%, Y3 = 13.9%) as more resources were expended on the family program. Expenditures on program delivery more than tripled in the second year as programming ramped up and the school program began (22.6%). With the rise in delivery, the share of resources expended on program fidelity monitoring also increased (Y1 = 3.6%, Y2 = 5.4%).

Overall, expenditures on team-level activities decreased in year 4, except for sustainability planning, which increased (Y4 = 10.4%, Y5 = 11.8%). Program-level activity costs also began to decrease during year 4. In particular, expenditures on program delivery were lower during the last 2 years of the analysis (Y4 = 4.4%, Y5 = 7.1%) than in year 2 (22.6%).

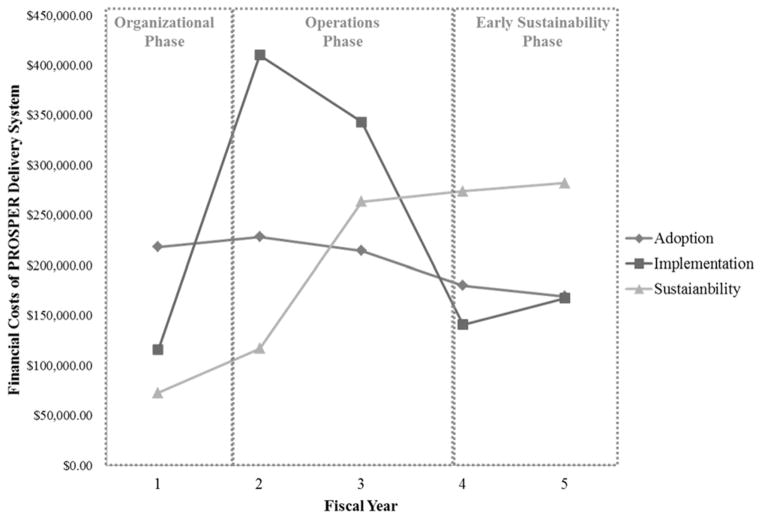

Adoption, implementation and sustainability costs

PROSPER activity cost estimates were aggregated to determine the financial costs of adopting, implementing, and sustaining EBPIs within PROSPER. These costs are presented in Figure 3 and show variation over time that is consistent with our developmental model for achieving team goals (Appendix, Section B). These estimates move beyond “day-of-implementation” costs and represent the financial costs of building, growing, and maintaining the PROSPER model.

Figure 3.

Financial costs of adopting, implementing, and sustaining EBPIs within the PROSPER delivery system.

Discussion

This cost analysis represents one of the first formal evaluations of prevention system resource consumption. These estimates compare favorably with costs previously projected for the standard implementation of the family EBPI (“Strengthening Families Program 10–14”) and the school EBPIs (“Life Skills Training,” “Project ALERT,” and “All Stars”) used by the PROSPER communities. Specifically, previous evaluations estimated the cost of the “Strengthening Families Program 10–14” at approximately $851 per family, with an estimated societal benefit of $6,656 [21]. In addition, the costs of the three school programs implemented in PROSPER (“Life Skills Training,” “Project ALERT,” and “All Stars”) were estimated to be $49, $3, and $29 per student, respectively, with societal benefits of approximately $746, $58, and $169, respectively [21]. When delivered within PROSPER, the “day-of-implementation” costs of the “Strengthening Families Program 10–14” were between $502 and $572 per family less than implementation outside of PROSPER. Assuming the program was as effective as in previous implementations, this investment would represent a societal net benefit of between $6,307 and $6,377 per family. Reductions in school program delivery costs were less clear: costs decreased by between $22 and $40 per student for “Life Skills Training” and by between $2 and $20 for “All Stars,” but increased by between $5 and $23 for “Project ALERT.” Assuming the school programs were as effective as in previous implementations, this investment would represent a per-student societal net benefit of between $719 and $737 for “Life Skills Training,” between $142 and $160 for “All Stars,” and between $31 and $49 for “Project ALERT” (Table A5).
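The comparison above is simple arithmetic: cost savings equal the prior per-unit cost minus the PROSPER “day-of-implementation” cost, and net benefit equals the prior societal benefit estimate minus the PROSPER cost. The Python sketch below recomputes these from the rounded figures quoted in the text (the dictionary and function names are illustrative). It yields $503 to $573 in savings and $6,308 to $6,378 in net benefit for the family program, and a $6 to $24 cost increase for Project ALERT; the $1 differences from the reported values presumably reflect rounding of the underlying per-unit costs.

```python
# Per-unit figures quoted in the text: (prior cost estimate, estimated societal benefit) [21].
PRIOR = {
    "Strengthening Families Program 10-14": (851, 6_656),  # per family
    "Life Skills Training": (49, 746),                     # per student
    "Project ALERT": (3, 58),
    "All Stars": (29, 169),
}
# PROSPER "day-of-implementation" cost ranges (low, high) from Table 2;
# the single school-program range is applied to each of the three school EBPIs.
PROSPER_DAY_OF = {
    "Strengthening Families Program 10-14": (278, 348),
    "Life Skills Training": (9, 27),
    "Project ALERT": (9, 27),
    "All Stars": (9, 27),
}


def compare(program):
    """Return ((min savings, max savings), (min net benefit, max net benefit)) per unit.

    Negative savings mean the program cost more when delivered within PROSPER.
    """
    prior_cost, benefit = PRIOR[program]
    low, high = PROSPER_DAY_OF[program]
    savings = (prior_cost - high, prior_cost - low)
    net_benefit = (benefit - high, benefit - low)
    return savings, net_benefit


for name in PRIOR:
    print(name, compare(name))
```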

Conclusions

Although this analysis has not assessed specific gains in efficiency, it does provide preliminary evidence that the costs of program delivery within PROSPER may be lower than without the support of a delivery system. Specifically, we see a decrease in the “day-of-implementation” costs of delivering the family EBPI and two of the school EBPIs (“Life Skills Training” and “All Stars”) within PROSPER. These lower costs are masked by the higher systemic and societal costs of the delivery system; in particular, team-level resource consumption appears to increase the costs of implementing EBPIs. This poses interesting questions for researchers studying the economic impact of prevention. Are we concerned with understanding the cost of a program as it “sits on the shelf,” or the cost of getting the program to communities who would benefit from its sustained implementation? When we estimate the costs of EBPIs, are we doing enough to consider the total costs of adoption, implementation, and sustainability?

Prevention systems, such as PROSPER, not only deliver EBPIs but also attempt to ensure that facilitators maintain high implementation quality. These systems spread the word about available programs and focus on getting participants “in the door.” Perhaps of greatest importance is the ability of prevention systems to cultivate local capacity so that community efforts achieve a lasting public health impact. Yet the resources required for these activities are rarely included in program cost estimates. The neglect of these costs is, in many ways, analogous to the child who receives his or her most desired present during the holidays, only to learn that his or her parents forgot the batteries. As we conduct further research on program diffusion, it may be time to make evaluation of diffusion efficiency a higher priority, if only so that we can allocate the resources to put the batteries in the box.

Supplementary Material

Appendix. Supplementary data

Supplementary data associated with this article can be found, in the online version, at doi:10.1016/j.jadohealth.2011.07.001.

References

1. Glasgow RE, Klesges LM, Dzewaltowski DA, Bull SS, Estabrooks P. The future of health behavior change research: What is needed to improve translation of research into health promotion practice? Ann Behav Med. 2004;27:3–12. doi: 10.1207/s15324796abm2701_2.

2. Spoth RL, Greenberg MT. Toward a comprehensive strategy for effective practitioner–scientist partnerships and larger-scale community health and well-being. Am J Community Psychol. 2005;35:107–26. doi: 10.1007/s10464-005-3388-0.

3. Spoth R, Greenberg M. Impact challenges in community science-with-practice: Lessons from PROSPER on transformative practitioner–scientist partnerships and prevention infrastructure development. Am J Community Psychol. 2011;48:106–19. doi: 10.1007/s10464-010-9417-7.

4. Backer T. The failure of success: Challenges of disseminating effective substance abuse prevention programs. J Community Psychol. 2000;28:363–73.

5. Hawkins JD, Catalano RF, Arthur MW. Promoting science-based prevention in communities. Addict Behav. 2002;27:951–76. doi: 10.1016/s0306-4603(02)00298-8.

6. Hawkins JD, Oesterle S, Brown EC, et al. Results of a type 2 translational research trial to prevent adolescent drug use and delinquency: A test of Communities That Care. Arch Pediatr Adolesc Med. 2009;163:789–93. doi: 10.1001/archpediatrics.2009.141.

7. Spoth R, Redmond C, Shin C, et al. Substance use outcomes at 18 months past baseline from the PROSPER community–university partnership trial. Am J Prev Med. 2007;32:395–402. doi: 10.1016/j.amepre.2007.01.014.

8. Chinman M, Imm P, Wandersman A. Getting To Outcomes 2004: Promoting Accountability Through Methods and Tools for Planning, Implementation, and Evaluation. Santa Monica, CA: RAND Corporation; 2004. http://www.rand.org/pubs/technical_reports/TR101.

9. Shediac-Rizkallah MC, Bone LR. Planning for sustainability of community health programs: Conceptual frameworks and future directions for research, practice and policy. Health Educ Res. 1998;13:87–108. doi: 10.1093/her/13.1.87.

10. Adelman HS, Taylor L. Creating school and community partnerships for substance abuse prevention programs. Commissioned by SAMHSA’s Center for Substance Abuse Prevention. J Prim Prev. 2003;23:331–69.

11. Molgaard V. The extension service as key mechanism for research and services delivery for prevention of mental health disorders in rural areas. Am J Community Psychol. 1997;25:515–44. doi: 10.1023/a:1024611706598.

12. Spoth R, Greenberg M, Bierman K, Redmond C. PROSPER community–university partnership model for public education systems: Capacity-building for evidence-based, competence-building prevention. Prev Sci. 2004;5:31–9. doi: 10.1023/b:prev.0000013979.52796.8b.

13. Spoth R, Guyll M, Lillehoj CJ, Redmond C, Greenberg MT. PROSPER study of evidence-based intervention implementation quality by community–university partnerships. J Community Psychol. 2007;35:981–9. doi: 10.1002/jcop.20207.

14. Spoth R, Guyll M, Redmond C, Greenberg MT, Feinberg ME. Sustainability of evidence-based intervention implementation quality by community–university partnerships: The PROSPER study. Am J Community Psychol. (in press). doi: 10.1007/s10464-011-9430-5.

15. Spoth R, Redmond C, Clair S, et al. Preventing substance misuse through community–university partnerships and evidence-based interventions: PROSPER outcomes 4.5 years past baseline. Am J Prev Med. 2011;40:440–7. doi: 10.1016/j.amepre.2010.12.012.

16. Spoth R, Redmond C, Clair S, et al. Preventing substance misuse through community health partnerships and evidence-based interventions: PROSPER RCT outcomes 4.5 years past baseline. Am J Prev Med. 2011;40:440–7. doi: 10.1016/j.amepre.2010.12.012.

17. Drummond M, O’Brien B, Stoddart G, Torrance G. Methods for economic evaluation of health care programmes. Oxford, NY: Oxford Medical Publishing Group; 1997.

18. Foster EM. How economists think about family resources and child development. Child Dev. 2002;73:1904–14. doi: 10.1111/1467-8624.00513.

19. Foster EM, Porter M, Ayers T, Kaplan D, Sandler I. Estimating the costs of preventive interventions. Eval Rev. 2007;31:261–86. doi: 10.1177/0193841X07299247.

20. Haddix A, Teutsch S, Corso P. Prevention effectiveness: A guide to decision analysis and economic evaluation. 2nd ed. Oxford, NY: Oxford University Press; 2003.

21. Aos S, Lieb R, Mayfield J, Miller M, Pennucci A. Benefits and costs of prevention and early intervention programs for youth. Washington State Institute for Public Policy; 2004. Available at http://www.wsipp.wa.gov/rptfiles/04-07-3901.pdf.

22. Yates BT. Analyzing costs, procedures, processes, and outcomes in human services: An introduction. Thousand Oaks, CA: Sage Publications; 1996.

23. Rogers PJ, Stevens K, Boymal J. Qualitative cost–benefit evaluation of complex, emergent programs. Eval Program Plann. 2009;32:83–90. doi: 10.1016/j.evalprogplan.2008.08.005.

24. Foster EM, Dodge KA, Jones D. Issues in the economic evaluation of prevention programs. Appl Dev Sci. 2003;7:76–86. doi: 10.1207/S1532480XADS0702_4.

25. Gold M, Siegel J, Russell L, Weinstein M. Cost-effectiveness in health and medicine. Oxford, NY: Oxford University Press; 1996.

26. Briggs AH. A Bayesian approach to stochastic cost-effectiveness analysis. Health Econ. 1999;8:257–61. doi: 10.1002/(SICI)1099-1050(199905)8:3<257::AID-HEC427>3.0.CO;2-E.

27. Mihalopoulos C, Sanders MR, Turner KM, Murphy-Brennan M, Carter R. Does the Triple P-Positive Parenting Program provide value for money? Aust N Z J Psychiatry. 2007;41:239–46. doi: 10.1080/00048670601172723.

28. Spoth R, Guyll M, Day S. Universal family-focused interventions in alcohol-use disorder prevention: Cost-effectiveness and cost-benefit analyses of two interventions. J Stud Alcohol. 2002;63:219–28. doi: 10.15288/jsa.2002.63.219.
