Abstract

In primary care settings, follow-up regarding the outcome of acute outpatient visits is largely absent. We sought to develop an automated interactive voice response system (IVRS) for patient follow-up, with feedback to providers, capable of interfacing with multiple pre-existing electronic medical records (EMRs). A system was designed to extract data from EMRs, integrate with the IVRS, call patients for follow-up, and provide a feedback report to providers. Challenges during the development process were analyzed and summarized. The components of the technological solution and details of its implementation are reported. Lessons learned include: (1) modular utilization of system components is often needed to adapt to specific clinic workflow and patient population needs; (2) understanding the local telephony environment greatly impacts development and is critical to success; and (3) ample time for development of the IVRS questionnaire (mapping all branching paths) and speech recognition tuning (sensitivity, use of barge-in tuning, use of “known voice”) is needed. With proper attention to design and development, modular follow-up and feedback systems can be integrated into existing EMR systems, providing the benefits of IVRS follow-up to patients and providers across diverse practice settings.

Keywords: Interactive voice response system, Primary care, Follow-up, Feedback

Introduction

In high-volume contemporary primary care settings, follow-up regarding the outcome of acute outpatient visits is not systematically collected for all patients. As a result, reports of negative patient outcomes often come to light during subsequent care visits, or reach providers at a later time in the form of reports from other practitioners in the local health system. In many cases, once the patient leaves the clinic there is no feedback to providers as to the resolution of the health care issue addressed. The implementation of routine patient contact after each visit could potentially capture unexpected outcomes early and allow providers the opportunity to intervene in a timely fashion to prevent, or mitigate, negative outcomes. However, the existing time demands on clinic staff make the prospect of routinely contacting patients for follow-up a daunting task. Interactive voice response technology with automated calling can provide a feasible, low cost alternative to contact patients for routine follow-up in ambulatory care settings. [1–6]

Over the past decade, multiple studies have demonstrated the benefits of integrating interactive voice response systems (IVRS) to screen and deliver interventions for a broad variety of medical conditions in the outpatient care setting. [3, 7–14] These technologies provide cost effective alternatives for gathering additional patient data used to enhance subsequent care. Many IVRS initiatives in the extant literature refer to stand alone research projects that target specific medical conditions and are not integrated into existing routine outpatient care services. [5, 14–17]

Proponents often struggle with integration of IVRS into the routine care process. [3] As part of a larger study where we evaluated the impact of strategies for patient follow-up and reporting outcomes to providers, we developed an automated system to provide routine post-acute visit follow-up. The system was designed to make telephone calls and interview patients after their visits without adding demands on clinic staff or disrupting established clinic workflow. Specifically, we: (1) designed and developed a technologically flexible, interoperable IVRS as a modular attachment capable of interfacing with multiple electronic medical record (EMR) products without requiring alteration of their underlying source code; (2) integrated this system successfully into various clinic operations without disrupting existing clinic workflow; and finally (3) used the system to report timely feedback regarding patient outcomes to providers at the point of care, thus closing the feedback loop. The present manuscript focuses on the development of the systems for post-visit follow-up and the lessons learned in developing these systems. The overall outcomes of use of the system, including the number of calls made and patients' responses, are described in a separate manuscript.

Materials and methods

Settings

The Closing the Feedback Loop (CFL) project was funded by the Agency for Healthcare Research and Quality (AHRQ). Three primary care clinics using EMRs for patient care participated in this study. Two of the clinics are a part of the University of Alabama at Birmingham (UAB) Health System. Both UAB clinics provide longitudinal primary care to patients; one is a general Family Practice clinic, and the other provides care for individuals with human immunodeficiency virus (HIV) infection. The third clinic site provides primary and specialty care to patients with cerebral palsy.

The Family Practice site utilized Allscripts™ TouchWorks, a commercially available EMR. The clinic serving HIV infected patients utilized an internally developed EMR where clinical, diagnostic and medication data were available to providers. The clinic serving patients with cerebral palsy used an open source outpatient oriented EMR known as World-VistA, based on the EMR used by the U.S. Department of Veterans Affairs.

Design meetings took place at the University of Alabama at Birmingham where software requirements were finalized by the study investigators and collaborators from each site. Software development was carried out in collaboration with Physician Innovations, LLC, a healthcare software development and consulting firm. This study was approved by the UAB Institutional Review Board.

Workflow for automated feedback loop

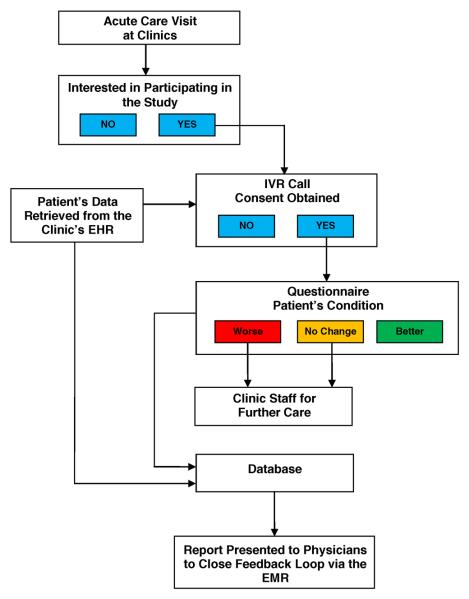

In planning the development and implementation of our automated feedback loop, we outlined the following processes for initiation and completion of patient follow-up after acute care visits:

1. Following acute care visits, patients would be provided an opportunity to receive a follow-up phone call in order for clinic staff to subsequently assess their status.

2. Patients choosing to participate would fill out a paper consent form at the clinic. A clinical coordinator would then update the patient's record in the study database to indicate consent along with contact details (i.e. preferred phone number and time/day to be called). Only those patients who consented would have their data uploaded from their clinic's EMR to enable an IVRS follow-up phone call one week after their acute care visit. The uploaded data included basic demographic, diagnostic, and medication data as well as the aforementioned contact details. A second verbal informed consent would be obtained from patients at the time of the IVRS follow-up call and once consent was recorded, the IVRS would query patients for information on their current health status using a standardized questionnaire. The data would be stored in a HIPAA-compliant environment.

3. Patients reporting continued or worsening symptoms during the IVRS follow-up call would be automatically directed/triaged to appropriate clinic staff to assist in their care.

4. Data collected by the IVRS, along with data extracted (pre-populated) from the EMR, would be stored in a database created to interact with both the IVRS and EMR systems.

5. Using information stored in the database, patient responses would be summarized in a standardized report form given to providers via their EMR. The report included data for both individual patients as well as a summary of aggregate data for all patients seen by the providers during the study period.

6. Finally, providers would acknowledge receipt of the report. The report would not require immediate action on the part of the physician because urgent problems would have already been handled at the time of the follow-up call via the usual clinic triage protocol (see step 3 above).

Figure 1 illustrates these steps.

Fig. 1. Workflow for automated feedback loop

The successful implementation of these processes required the development of multiple interacting systems including:

1. An automatic scheduling system for the follow-up call.

2. A centralized database housed in a secure server to store the data collected by the IVRS during the follow-up call.

3. An interface between this centralized database and multiple EMR products that could be used to extract pertinent clinical data used for patient follow-up.

4. An application to aggregate the data into a report for the providers and to display the report at the point of care during subsequent provider log-in to their clinic's EMR.

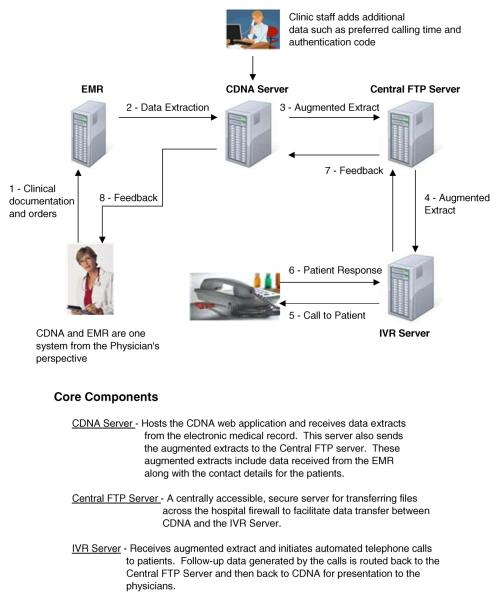

Clinical documentation and notification application (CDNA©)

In order to address the aims above and to interface with three different EMRs, without altering the underlying source codes, we developed an intermediate application, known as CDNA, to which data from each EMR could be reported via automated data extracts (Fig. 2).

Fig. 2. Overall schematic of data collection and data sharing between project components

CDNA provides a user interface to serve two groups of users: 1) clinical coordinators who augment the data extracts with patient contact details (i.e. preferred call time and phone number); 2) physicians who view follow-up data on their patients. By implementing the project's user interface changes in a physically separate, but carefully interfaced application, we circumvented the issue of modifying each organization's core EMR system.

Flexible data entry was crucial in the design of CDNA as it would have to accommodate different data sources, including standardized extracts from an EMR and manual data entry. CDNA was designed to: (1) automatically extract select clinical data from the EMR that either a human or an automated IVRS could use to conduct post-visit follow-up telephone interviews; (2) allow the interview data to be recorded; and (3) provide individual patient reports to providers on the outcomes of the interview. CDNA was designed as a universal add-on module capable of achieving interoperability with any existing EMR. The product is a web-based application created with open-source technology. CDNA can receive data from extract files from EMR systems (i.e. demographics and other data on consenting patients) in a variety of formats including comma separated value (csv) files. Available EMR products that can produce data extracts in virtually any structured text-based format can achieve integration with CDNA. Our approach to launching the CDNA application for specific users involved login and patient context synchronization (i.e., user authentication through the EMR automatically resulted in authentication into CDNA; similarly, selecting a given patient in the EMR automatically caused the same patient to be selected in CDNA). Our approach achieves both data and desktop interoperability with EMRs from the standpoint of the end user (who perceives the EMR and CDNA to be a single application).

Upon provider sign-on to their local EMR, the CDNA application concurrently displayed a summary report of the outcomes of that provider's patients enrolled in the study. CDNA was designed to provide intuitive visual cues to physicians in order to facilitate efficient feedback that could be processed “at a glance.” Color coding was used in the summary report to illustrate individual and aggregate data and was modeled after the widely understood traffic-light coloring scheme (red for patients who have developed new or worsening issues, yellow for patients without improvement and green for those with resolved or improving issues). A pie-chart was used to show patient outcomes from the entire clinic population for benchmarking purposes. In addition, a listing of patients sorted by status was provided to draw attention to those presumably needing the most attention (i.e. red first, followed by yellow and green). Through bolded text, providers were prompted to process the individual feedback, resulting in a “clearing” of this feedback information.
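The traffic-light summary described above can be illustrated with a minimal sketch. The outcome codes, the `FollowUp` record, and the function names are hypothetical (the coded IVRS responses are not listed in this manuscript); only the red/yellow/green mapping and the red-first ordering come from the text.

```python
from collections import Counter
from dataclasses import dataclass

# Display order from the text: red first, followed by yellow and green.
STATUS_ORDER = {"red": 0, "yellow": 1, "green": 2}

# Hypothetical outcome codes mapped onto the traffic-light scheme.
OUTCOME_TO_STATUS = {
    "worse": "red",
    "new_problem": "red",
    "no_change": "yellow",
    "improving": "green",
    "resolved": "green",
}


@dataclass
class FollowUp:
    patient_id: str
    outcome: str


def status_for(follow_up):
    """Translate a coded outcome into its traffic-light color."""
    return OUTCOME_TO_STATUS[follow_up.outcome]


def build_report(follow_ups):
    """Return (patient rows sorted by urgency, counts per color for the pie chart)."""
    ordered = sorted(follow_ups, key=lambda f: STATUS_ORDER[status_for(f)])
    rows = [(f.patient_id, status_for(f)) for f in ordered]
    counts = Counter(status for _, status in rows)
    return rows, counts
```

Because Python's sort is stable, patients with the same color keep their original relative order, so a secondary ordering (for example by call date) could be applied beforehand if desired.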

The add-on module approach we employed for CDNA eliminates the need to modify a facility's core EMR. This was a critical point as modification of a core EMR carries the risk of introducing defects as well as voiding a product's Certification Commission for Health Information Technology (CCHIT) certification. [18] Additionally, the modification of a core EMR would almost certainly require assistance from the EMR vendor which adds a great deal of cost and complexity to a project. Lastly, by designing CDNA to interface with existing EMRs we achieved significant project cost savings by leveraging a single development effort that could be used across multiple EMRs.

Interfacing data between EMRs and CDNA

Only those patients who had agreed to be called were entered into CDNA. To optimize operational efficiency, CDNA was pre-loaded with basic patient demographic information to reduce the data entry burden on clinic staff. To accomplish the preloading we developed custom data interfaces between CDNA and the respective EMRs of the cerebral palsy and HIV clinics. In both cases, at scheduled times each night, new patient data were extracted from the EMRs and sent directly to the CDNA server via secure file transmission. CDNA was configured to import this data 30 min later (to accommodate any unexpected transmission delays). Because CDNA's web interface made missing or incomplete demographic data easy to detect, discrepancies could be corrected efficiently in collaboration with clinic staff. In the case of the Family Practice clinic, data queries were completed through the EMR's reporting functions and data were then uploaded to CDNA.
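As an illustration of the import step, the following minimal sketch reads a csv extract and separates complete rows from rows with missing demographic fields that would need correction by clinic staff. The column names and the `load_extract` function are assumptions made for this example; the actual extract layout is not given here.

```python
import csv
import io

# Hypothetical column names; the real extract layout is not specified in the text.
REQUIRED_FIELDS = ("patient_id", "last_name", "first_name", "date_of_birth", "visit_date")


def load_extract(csv_text):
    """Split extract rows into complete rows and (patient_id, missing fields) pairs."""
    complete, incomplete = [], []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # A field counts as missing if it is absent, empty, or only whitespace.
        missing = [f for f in REQUIRED_FIELDS if not (row.get(f) or "").strip()]
        if missing:
            incomplete.append((row.get("patient_id") or "", missing))
        else:
            complete.append(row)
    return complete, incomplete
```

Reporting which fields are missing, rather than silently rejecting the row, mirrors the workflow described above in which discrepancies are surfaced and then corrected with clinic staff.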

Once the data were in CDNA, extracts were produced in a format intended for import by an IVRS. In this scenario, CDNA essentially serves as an intermediary between the EMR and the IVRS accepting data extracts, and also allows for the manual addition of any needed data not present in the EMRs (e.g. preferred contact phone numbers, preferred day/time to call, authentication codes, etc.).

Interactive voice response system

The IVRS we developed ran on a Dell PowerEdge R200 server running the Windows Server 2003 R2 operating system. The server had a Dialogic telephony card with four analog ports to facilitate calling up to four patients concurrently. The telephony card served as the interface between the server and the physical telephone lines. The proprietary IVRS software application drove the hardware and used speech recognition and text-to-speech technology as well as traditional use of touch buttons on the phone to interpret responses. For our application we used the speech recognition features to improve usability and eliminate the need to touch buttons on the phone. This is a significant advantage for recipients of calls utilizing mobile phones with small or difficult to press buttons and facilitates response for users whose hands are occupied.

We were concerned that computer-generated speech might result in increased hang-ups, and limited its use to variable components of the questionnaire such as patient names. We utilized high-quality pre-recorded audio from a clinic representative as much as possible. Thus, the initial verbal introduction was indistinguishable from a live human, and call recipients heard a “familiar voice” from the specific clinic. Complete sentences were formed by playing pre-recorded speech and computer-generated speech in a continuous stream. For example, “Hi, may I speak with” (pre-recorded) “John Smith?” (computer generated). Both open ended and multiple choice questions were used.
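The mixing of pre-recorded and computer-generated speech can be sketched as a list of prompt segments. This is an illustration only: the class names, the audio file name, and the `("play", ...)`/`("speak", ...)` instruction tuples are hypothetical and do not describe the proprietary IVRS software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recorded:
    audio_file: str  # clip recorded by the clinic representative (hypothetical file name)


@dataclass(frozen=True)
class Synthesized:
    text: str  # rendered by text-to-speech at call time


def greeting(patient_name):
    """Fixed wording is pre-recorded; only the patient name is synthesized."""
    return [Recorded("hi_may_i_speak_with.wav"), Synthesized(patient_name + "?")]


def playback_plan(segments):
    """Flatten segments into ordered instructions for a (hypothetical) audio player."""
    plan = []
    for segment in segments:
        if isinstance(segment, Recorded):
            plan.append(("play", segment.audio_file))
        else:
            plan.append(("speak", segment.text))
    return plan
```

Keeping the variable part as its own segment makes it easy to see how little of each prompt depends on synthesized speech.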

Automated data transfers and imports

Data were exchanged between the EMR, CDNA, and the IVRS via a series of precisely timed automated uploads. The nightly EMR extracts to the CDNA included basic demographic, diagnostic, and medication data. Sixty minutes after the EMR extract, CDNA sent data to a central secure server (ftp server) that served as a university-wide resource for secure data exchange. CDNA data were uploaded to the IVRS from the central server, while IVRS data containing patient responses to the administered surveys were uploaded to the central server and subsequently accessed by CDNA. Through CDNA, IVRS data reached clinicians as part of standard reports when they logged on to their local EMR. These standard reports were shown immediately upon login to the EMR since the CDNA application is automatically launched in parallel as noted previously (and the reports specific to that user are displayed in the CDNA window). The central ftp server functioned as a secure bridge that allowed data to be sent bi-directionally across firewalls in encrypted form, ensuring the safety of sensitive patient information.
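The timing of the nightly chain can be written down as fixed offsets from the extract time. In this minimal sketch the 30 and 60 minute offsets come from the text, while the step names, the function, and any specific start time are assumptions (the actual nightly schedule is not stated).

```python
from datetime import datetime, timedelta

IMPORT_DELAY = timedelta(minutes=30)  # CDNA imports the extract 30 min after it is sent
UPLOAD_DELAY = timedelta(minutes=60)  # CDNA sends data to the central server 60 min after the extract


def nightly_timeline(extract_time):
    """Return the ordered (step, time) pairs for one night's automated transfers."""
    return [
        ("emr_extract_sent", extract_time),
        ("cdna_import", extract_time + IMPORT_DELAY),
        ("cdna_upload_to_central_server", extract_time + UPLOAD_DELAY),
    ]
```

For example, `nightly_timeline(datetime(2010, 3, 2, 1, 0))` would place the CDNA import at 01:30 and the upload to the central server at 02:00; the 01:00 start is purely illustrative.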

The IVRS also produced an anonymous extract twice daily for use by the research team. The extract was fully HIPAA compliant and excluded all identifiable patient data. Most data from the IVRS were discrete coded responses, but in cases where the patient had to explain something, the research extract included recordings of patient responses to the IVRS-administered questionnaire in the WAV file format.
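One way to build such an extract is with an allow-list, so that only explicitly approved fields are copied and anything else is dropped by default. The sketch below is illustrative only: the field names and the `research_extract` function are hypothetical, and filtering fields in this way is not by itself sufficient to establish regulatory compliance.

```python
# Hypothetical allow-list of coded, non-identifying fields.
RESEARCH_FIELDS = ("site", "call_completed", "symptom_status", "taking_medications")


def research_extract(ivrs_records):
    """Copy only allow-listed fields; any field not listed is left out."""
    return [
        {field: record.get(field) for field in RESEARCH_FIELDS}
        for record in ivrs_records
    ]
```

An allow-list fails safe: if a new identifying field is later added to the IVRS records, it stays out of the research extract until someone deliberately adds it to the list.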

Summary of patient safety and security measures

As noted above, we took several steps to protect patient safety and confidentiality and the security of the data:

- Patients who indicated that they were no better or were worse were automatically routed directly to their clinic where a clinic staff member could address their concerns.

- CDNA and the IVRS were housed in a HIPAA-compliant environment approved by the institution's Chief of Data Security.

- The project protocol was approved by the Institutional Review Board of the University of Alabama at Birmingham.

- Patients were given information about the project and voluntarily provided phone numbers by which they could be reached. To assure we reached the patient and not another member of the household, patients provided a four digit code that they used to authenticate themselves when the IVRS called. Formal verbal consent for the data collection was obtained at this time.

- The questionnaires were structured to avoid asking for identifiable patient information, and there were no instances in which the voice files contained this type of information.
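The four digit authentication step mentioned above can be sketched as follows. The retry limit and function names are assumptions for illustration; the manuscript does not state how many attempts the IVRS allowed or how codes were compared.

```python
import hmac

MAX_ATTEMPTS = 3  # assumption: the actual retry limit is not stated


def is_valid_code(entered, expected):
    """Accept only exactly four ASCII digits that match the patient's code."""
    entered = entered.strip()
    if len(entered) != 4 or not (entered.isascii() and entered.isdigit()):
        return False
    # Constant-time comparison avoids leaking how many digits matched.
    return hmac.compare_digest(entered, expected)


def authenticate(attempts, expected):
    """Return True if any of the first MAX_ATTEMPTS entries matches the code."""
    for entered in attempts[:MAX_ATTEMPTS]:
        if is_valid_code(entered, expected):
            return True
    return False
```

Limiting the number of attempts matters here because a four digit code has only 10,000 possible values.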

Results and discussion

In the following paragraphs we will discuss key challenges that arose during design and implementation of our IVRS. These challenges led to several important inferences that will be of assistance to others considering IVRS development and implementation.

Modular use of the system

Because of the different populations and workflows in the three settings, different combinations of the components of the full system were used at each site. The family practice setting used the IVRS to make follow-up phone calls, but no direct interface between its EMR and CDNA was built. Rather, an extract generated with the existing reporting functions of its commercial EMR was sent to CDNA. Additionally, it imported the data collected and provided feedback reports to providers within its own system. The cerebral palsy clinic used CDNA to schedule phone calls and present feedback reports to providers, but because of the limitations of its patient population, it followed up in person with patients rather than using the IVRS. The HIV clinic used all three combined systems: CDNA to automatically extract data from the EMR, the IVRS for the phone calls, and CDNA to present the reports to the providers.

Lesson: When developing an automated system for use with multiple products within diverse settings, it is important to consider how to integrate new with existing technology. Both clinic workflow and the impact of pre-existing conditions on successful communication with the targeted patient population must be considered. Designing and developing a technologically flexible system while understanding both clinic workflow and patient limitations is necessary for widespread integration of the system across multiple settings.

Local telephony environment

Decisions about both hardware and programming needed to take into account the local telephony environment where the system was implemented as well as where call recipients reside. As an example of call-recipient-dependent logic, we needed to program the IVRS to automatically add an area code and/or other prefixes depending on the recipient's phone number even if not provided by the patient. This was necessary to minimize long-distance charges. We tuned a Dialogic® card's technical parameters to our telephony environment. The local environment is determined by the telephony switch in use (in our case a Nortel® CS2100 switch). Additionally, the specific protocols supported also play a key role (e.g., ISDN versus analog). In our case, seeking optimal audio quality, we preferred IVRS technology that supported ISDN (digital) communication. However, the local environment dictated an analog approach, necessitating a change in our plans. Utilization of an analog or digital approach impacts multiple parameters including recognition of hang-ups, the initiation of call transfers, and the speech volume level (i.e. excessive volume creates echoes while low volume is difficult to hear).
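The call-recipient-dependent dialing logic can be illustrated with a minimal sketch for ten-digit North American numbers. The local area code, the outside-line prefix, and the specific rules below are assumptions chosen for the example; the actual rules depend on the local switch and carrier and are not detailed here.

```python
import re

LOCAL_AREA_CODE = "205"    # assumption for illustration
OUTSIDE_LINE_PREFIX = "9"  # assumption: digit some switches require for an outside line


def dial_string(raw_number):
    """Build the digits to dial from a phone number as entered by staff or patient."""
    digits = re.sub(r"\D", "", raw_number)  # drop spaces, dashes, parentheses
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # strip a leading country/long-distance "1"
    if len(digits) == 7:
        digits = LOCAL_AREA_CODE + digits  # patient omitted the area code
    if len(digits) != 10:
        raise ValueError(f"cannot normalize phone number: {raw_number!r}")
    if digits.startswith(LOCAL_AREA_CODE):
        return OUTSIDE_LINE_PREFIX + digits  # local call, no long-distance prefix
    return OUTSIDE_LINE_PREFIX + "1" + digits  # long-distance call
```

Normalizing to ten digits first and deciding on prefixes last keeps the switch-specific part of the logic in one place, which is where most site-to-site variation would be expected.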

Lesson: In order to obtain optimal outcomes, a complete understanding of the local (e.g., healthcare facility) and broader (e.g., patient's communication devices, connectivity, telephone environment/local networks, etc.) telephony environment is critical. Local communication departments' insight into the existing telephony infrastructure will provide a valuable resource throughout development and implementation. Early contact and engagement of these experts in IVRS development initiatives is strongly recommended. Allowing adequate planning time to fully address such issues will ultimately save time and false starts.

Testing the IVRS questionnaire

Questions asked by the IVRS are often guided by responses to prior queries (e.g., if a patient is not taking medications, then medication adherence questions are skipped). Due to the variety of branching paths possible through the IVRS questionnaire, comprehensive testing was necessary. Initially, investigators created a flow chart that outlined all potential logical paths and we tested these paths. The IVRS was then programmed to call selected team members for further testing. In the process, we tested male and female speakers, slow and fast speakers, soft and loud voices, and foreign and US-born speakers. We also made sure the IVRS responded properly to errors or no answers. In case testing team members were not available at the pre-specified testing call times, and in order to provide additional flexibility to our testers, we created a call-in phone number for IVRS testing. The call-in line gave testers the ability to spend as much time as needed with the IVRS and to replay scenarios as necessary to outline discrepancies in the logical paths of the questionnaire.
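Enumerating every branching path, as the flow chart did, can be sketched by treating the questionnaire as a small graph. The questions and answers below are a hypothetical miniature, not the study questionnaire, and the sketch assumes the graph has no loops (a retry loop would need a depth limit).

```python
# Hypothetical miniature questionnaire: question -> {answer: next question}.
# A next question of None means the call ends.
QUESTIONNAIRE = {
    "consent": {"yes": "status", "no": None},
    "status": {"better": "taking_meds", "same": "transfer", "worse": "transfer"},
    "taking_meds": {"yes": "adherence", "no": "goodbye"},
    "adherence": {"yes": "goodbye", "no": "goodbye"},
    "transfer": {"done": None},
    "goodbye": {"done": None},
}


def all_paths(graph, node="consent", prefix=()):
    """Return every path through the questionnaire as a tuple of (question, answer) steps."""
    paths = []
    for answer, next_node in graph[node].items():
        step = prefix + ((node, answer),)
        if next_node is None:
            paths.append(step)
        else:
            paths.extend(all_paths(graph, next_node, step))
    return paths
```

Each returned path can serve as one test script for a tester on the call-in line; in this miniature, a patient who answers "no" to `taking_meds` never reaches `adherence`, matching the skip logic described above.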

In addition to testing out the questionnaire logic, we also found that we needed to modify some of the text-to-speech features of the system. Text-to-speech was used for patient names. The IVRS software was programmed to recognize the text for common abbreviations and say the full word or expression. Names that could be mistaken for abbreviations could result in inappropriate translations, as we found out in our testing. A humorous example is that when one of the authors whose first name is “Eta” tested the system, the system asked, “Is this Estimated Time of Arrival Berner?”
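The abbreviation problem can be reproduced, and one possible guard shown, in a few lines. The abbreviation table and the `is_name` flag are hypothetical; the manuscript does not describe how the IVRS software was actually adjusted.

```python
# Hypothetical abbreviation table of the kind a text-to-speech front end might use.
ABBREVIATIONS = {
    "ETA": "estimated time of arrival",
    "DR": "doctor",
    "ST": "street",
}


def expand_for_speech(text, is_name=False):
    """Expand known abbreviations, except in fields flagged as personal names."""
    if is_name:
        return text  # never rewrite a patient's name
    words = [ABBREVIATIONS.get(word.upper().rstrip("."), word) for word in text.split()]
    return " ".join(words)
```

With this sketch, `expand_for_speech("Eta Berner")` reproduces the unwanted expansion, while `expand_for_speech("Eta Berner", is_name=True)` leaves the name untouched.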

Upon completion of this initial testing phase, we initiated user-acceptance testing by creating outbound calls to clinic coordinators using specific testing scenarios, subsequently reviewing and validating the resulting data collected and ensuring the data propagated back into CDNA for clinician viewing (at the time of log-in to local EMR) was consistent with that captured in the IVRS.

Lesson: To facilitate the meticulous user testing necessary to map out all logical branching paths, we provided our team members with multiple ways to test, including waiting for pre-specified call times or calling in to a specific number. This increased flexibility and both facilitated and expedited testing.

Speech recognition and patient response tuning

We chose to use speech recognition because we felt it would be easier for patients. However, this adds complexity compared to relying on telephone keypad responses as speech recognition tuning requires careful consideration of multiple variables. These include the adjustment of speech recognition sensitivity (i.e. the probability threshold setting necessary to recognize a response) as well as the specific responses accepted (e.g., “yes”, “sure”, “okay”). All speech recognition systems utilize probabilistic models such as the Hidden Markov Model to recognize speech. In essence this means a computer can never be 100% certain that a given word was spoken, only that a word was spoken with a certain probability. During IVRS development, establishing speech recognition sensitivity thresholds required much trial and error to achieve optimal results. Low settings, while more permissive, carry the risk of misrecognizing words, while high settings might lead to a correct word not being recognized. This trial and error testing needs to involve subjects of both genders as well as individuals with a variety of accents. In our testing, we included a variety of respondents with different voices and accents to make these adjustments.
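The interaction between the sensitivity threshold and the list of accepted responses can be illustrated with a minimal sketch. The `(phrase, confidence)` hypothesis format, the threshold values, and the word "nope" are assumptions for this example; they do not describe the recognizer used in the study.

```python
# Accepted spoken responses mapped to coded answers ("nope" is a hypothetical addition).
ACCEPTED = {"yes": "yes", "sure": "yes", "okay": "yes", "no": "no", "nope": "no"}


def interpret(hypotheses, threshold):
    """Return the coded answer, or None if nothing acceptable clears the threshold.

    hypotheses: list of (phrase, confidence) pairs from a recognizer, confidence in [0, 1].
    """
    if not hypotheses:
        return None
    phrase, confidence = max(hypotheses, key=lambda h: h[1])
    if confidence < threshold:
        return None  # too uncertain: the caller would be asked to repeat
    return ACCEPTED.get(phrase.lower().strip())
```

With a threshold of 0.5, a best hypothesis of "sure" at 0.62 is accepted as "yes"; raising the threshold to 0.8 rejects the same utterance, which is the trade-off between misrecognition and missed responses described above.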

“Barge-in” tuning represents another critical aspect of speech recognition tuning. This term refers to whether or not the patient can interrupt the IVRS's questioning by speaking. In some cases, we wanted the IVRS to be interruptible (e.g., as soon as the correct option is heard, the patient should be able to select it). In other situations, we needed an entire phrase, sentence, or paragraph to be heard before allowing interruptions (e.g. if performing informed consent for study participation through the IVRS). Establishing which parts of the IVRS script are permissible to “barge-in” and which are not will shorten the time burden on participants and ensure that information provided to users by the IVRS is complete and accurate. Other aspects that required testing included how much time delay to allow for patient responses, how to handle situations where patients appear not to understand the question, and other similar contingencies.
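Per-prompt barge-in and response-delay settings can be sketched as a small configuration. The prompt names, timeout values, and the `accept_speech` rule are hypothetical; they illustrate the idea of marking some prompts as interruptible and others (such as informed consent) as not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prompt:
    name: str
    barge_in: bool             # may the patient interrupt while the prompt plays?
    response_timeout_s: float  # how long to wait for an answer after the prompt ends


# Hypothetical script fragment with illustrative values.
SCRIPT = {
    "informed_consent": Prompt("informed_consent", barge_in=False, response_timeout_s=5.0),
    "symptom_status_menu": Prompt("symptom_status_menu", barge_in=True, response_timeout_s=4.0),
}


def accept_speech(prompt, speech_start_s, prompt_duration_s):
    """Decide whether speech starting at speech_start_s counts as a response."""
    if speech_start_s < prompt_duration_s:
        return prompt.barge_in  # speech during playback counts only if barge-in is allowed
    return speech_start_s <= prompt_duration_s + prompt.response_timeout_s
```

Speech that begins three seconds into the consent prompt is ignored, while the same interruption during the symptom menu is accepted as an answer.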

Finally, we opted for using prerecorded speech of a voice familiar to patients (a representative from one of the clinics) because we hoped it would reduce hang-ups. However, some individualized aspects, such as the patients' names, could not be prerecorded. Trying to prerecord as much as possible also required us to be clear and concise with our questions and actually simplified our questionnaire.

Lesson: Setting aside ample time in the project schedule for speech recognition sensitivity, “barge-in” tuning and other aspects will pay large dividends in the project implementation phase and subsequent user satisfaction. We received positive feedback from study participants regarding the use of recorded speech from a clinic representative (“known voice”) rather than computer-generated speech. Despite the extensive testing that should be done during the development phase, it is also important to monitor the actual results from patients and be prepared to revisit the decisions if problems arise.

Strengths and limitations

Based on our experience, one of the strengths of our approach is the flexibility of integrating the modular feedback application into three different practices that serve distinct primary care populations. Thus, the lessons learned from the implementation into the workflow of these varied settings may contribute to the work of other teams.

Our findings are limited by comprising the results of one experience within three distinct clinics in the Southeastern United States. In addition, our study was limited to English-speaking patients, so we did not test our IVRS scripts in other languages. Although our questions were intentionally very simple and were spoken, it is possible that patients with cognitive limitations might still have problems with the IVRS. The use of select technologies and products may also limit the applicability to other settings.

Our design lacks real-time data interfacing as feedback obtained via the IVRS is relayed back to clinicians in a batch manner (twice per day). This approach inevitably results in a potential delay in receiving relevant alerts. We made this design decision to reduce system complexity in conjunction with our knowledge that most clinicians in the study were reviewing electronic patient records only sporadically throughout the day. However, as EMR access via wireless mobile devices becomes ubiquitous, expectations of real-time feedback increase and our design will need to evolve accordingly. It is important to note that this decision did not affect patients with immediate needs getting immediate attention, since patients with those needs were transferred to the clinic immediately at the end of the call.

A final limitation is that although we fine-tuned the system with extensive formative evaluation we did not do formal testing of the reliability of the voice recognition.

Conclusion

IVRS technology has been used in behavioral, medication, and preventive care adherence as well as in the ambulatory monitoring and care of multiple chronic conditions. [3, 5–7, 9–12, 14, 15, 19–29] The accumulating body of evidence on the benefits of such technology in the management of chronic conditions must contend with a convoluted health informatics landscape where multiple proprietary EMRs are utilized. As part of this AHRQ-funded study, we developed a flexible, modular approach and were able to successfully work with three distinct EMR systems, including commercial, open source and “home grown” EMRs, making IVRS follow-up of patients feasible for routine care in different outpatient care settings serving different populations. By creating an intermediary CDNA application that was able to adjust to different EMRs' data extraction specifications, we were able both to upload data to our IVRS and to return the results of IVRS surveys to clinicians. These results were delivered within the context of routine clinic care, in a standardized report that was automatically available when providers logged on to their clinic's specific EMR. Flexible strategies such as ours will be needed to successfully bring the benefits of IVRSs to diverse practices in our current fragmented national health informatics landscape where interoperability with a diverse array of EMRs is required. Through strategies such as ours, the increasingly reported benefits of IVRS interventions can be brought to bear across the spectrum of chronic medical conditions affecting our populace.

Acknowledgments

This project was supported by grant number HS017060 from the Agency for Healthcare Research and Quality (Eta S. Berner, EdD, Principal Investigator).

Integrity of Research and Reporting Ethical Standards: The experiments/research conducted and discussed herein comply with the current laws of the United States of America, the country in which the research was conducted. This study was approved by the Institutional Review Board of the University of Alabama at Birmingham.

Mark Cohen is now retired from United Cerebral Palsy where this study was conducted.

Footnotes

Conflicts of Interest: CDNA is copyrighted by the University of Alabama at Birmingham. James Willig, Marc Krawitz, Anantachai Panjamapirom, Midge Ray and Eta Berner are inventors of CDNA. The other co-authors declare that they have no conflicts of interest.

Authors' Contributions: All authors contributed to aspects of the design and implementation of the IVRS system, participated in revision of key intellectual content in the present manuscript and approved the final submitted version.

References

- 1. Fewer bells and whistles, but IVR (interactive voice response) gets the job done. Dis. Manag. Advis. 2001;7(9):129–133. [No authors listed]

- 2. Abu-Hasaballah K, James A, Aseltine RH Jr. Lessons and pitfalls of interactive voice response in medical research. Contemp. Clin. Trials. 2007;28(5):593–602. doi: 10.1016/j.cct.2007.02.007.

- 3. Oake N, Jennings A, van Walraven C, Forster AJ. Interactive voice response systems for improving delivery of ambulatory care. Am. J. Manag. Care. 2009;15(6):383–391.

- 4. Pierce G. IVR (interactive voice response) me ASAP. Four hot applications of interactive voice response. Healthc. Inform. 1998;15(2):147–148, 150, 152.

- 5. Schroder KE, Johnson CJ. Interactive voice response technology to measure HIV-related behavior. Curr. HIV/AIDS Rep. 2009;6(4):210–216. doi: 10.1007/s11904-009-0028-6.

- 6. Shaw WS, Verma SK. Data equivalency of an interactive voice response system for home assessment of back pain and function. Pain Res. Manag. 2007;12(1):23–30. doi: 10.1155/2007/185863.

- 7. Andersson C, Soderpalm Gordh AH, Berglund M. Use of real-time interactive voice response in a study of stress and alcohol consumption. Alcohol. Clin. Exp. Res. 2007;31(11):1908–1912. doi: 10.1111/j.1530-0277.2007.00520.x.

- 8. Artinian NT. Telehealth as a tool for enhancing care for patients with cardiovascular disease. J. Cardiovasc. Nurs. 2007;22(1):25–31. doi: 10.1097/00005082-200701000-00004.

- 9. Bender BG, Apter A, Bogen DK, Dickinson P, Fisher L, Wamboldt FS, Westfall JM. Test of an interactive voice response intervention to improve adherence to controller medications in adults with asthma. J. Am. Board. Fam. Med. 2010;23(2):159–165. doi: 10.3122/jabfm.2010.02.090112.

- 10. Cranford JA, Tennen H, Zucker RA. Feasibility of using interactive voice response to monitor daily drinking, moods, and relationship processes on a daily basis in alcoholic couples. Alcohol. Clin. Exp. Res. 2010;34(3):499–508. doi: 10.1111/j.1530-0277.2009.01115.x.

- 11. Haas JS, Iyer A, Orav EJ, Schiff GD, Bates DW. Participation in an ambulatory e-pharmacovigilance system. Pharmacoepidemiol. Drug. Saf. 2010;19(9):961–969. doi: 10.1002/pds.2006.

- 12. Kaminer Y, Litt MD, Burke RH, Burleson JA. An interactive voice response (IVR) system for adolescents with alcohol use disorders: a pilot study. Am. J. Addict. 2006;15(Suppl 1):122–125. doi: 10.1080/10550490601006121.

- 13. Kashner TM, Trivedi MH, Wicker A, Fava M, Greist JH, Mundt JC, Shores-Wilson K, Rush AJ, Wisniewski SR. Voice response system to measure healthcare costs: a STAR*D report. Am. J. Manag. Care. 2009;15(3):153–162.

- 14. Reidel K, Tamblyn R, Patel V, Huang A. Pilot study of an interactive voice response system to improve medication refill compliance. BMC Med. Inform. Decis. Mak. 2008;8:46. doi: 10.1186/1472-6947-8-46.

- 15. Oake N, van Walraven C, Rodger MA, Forster AJ. Effect of an interactive voice response system on oral anticoagulant management. CMAJ. 2009;180(9):927–933. doi: 10.1503/cmaj.081659.

- 16. Piette JD. Interactive voice response systems in the diagnosis and management of chronic disease. Am. J. Manag. Care. 2000;6(7):817–827.

- 17. Sinadinovic K, Wennberg P, Berman AH. Population screening of risky alcohol and drug use via Internet and Interactive Voice Response (IVR): A feasibility and psychometric study in a random sample. Drug Alcohol Depend. 2010;114(1):55–60. doi: 10.1016/j.drugalcdep.2010.09.004.

- 18. Certification Commission for Health Information Technology. http://www.cchit.org/about. Accessed 13 March 2011.

- 19. Crawford AG, Sikirica V, Goldfarb N, Popiel RG, Patel M, Wang C, Chu JB, Nash DB. Interactive voice response reminder effects on preventive service utilization. Am. J. Med. Qual. 2005;20(6):329–336. doi: 10.1177/1062860605281176.

- 20. Farzanfar R, Stevens A, Vachon L, Friedman R, Locke SE. Design and development of a mental health assessment and intervention system. J. Med. Syst. 2007;31(1):49–62. doi: 10.1007/s10916-006-9042-z.

- 21. Kobak KA, Greist JH, Jefferson JW, Mundt JC, Katzelnick DJ. Computerized assessment of depression and anxiety over the telephone using interactive voice response. MD Comput. 1999;16(3):64–68.

- 22. Levin E, Levin A. Evaluation of spoken dialogue technology for real-time health data collection. J. Med. Internet. Res. 2006;8(4):e30. doi: 10.2196/jmir.8.4.e30.

- 23. Moore HK, Wohlreich MM, Wilson MG, Mundt JC, Fava M, Mallinckrodt CH, Greist JH. Using daily interactive voice response assessments: to measure onset of symptom improvement with duloxetine. Psychiatry (Edgmont). 2007;4(3):30–38.

- 24. Mundt JC, Bohn MJ, King M, Hartley MT. Automating standard alcohol use assessment instruments via interactive voice response technology. Alcohol. Clin. Exp. Res. 2002;26(2):207–211.

- 25. Mundt JC, Ferber KL, Rizzo M, Greist JH. Computer-automated dementia screening using a touch-tone telephone. Arch. Intern. Med. 2001;161(20):2481–2487. doi: 10.1001/archinte.161.20.2481.

- 26. Mundt JC, Greist JH, Gelenberg AJ, Katzelnick DJ, Jefferson JW, Modell JG. Feasibility and validation of a computer-automated Columbia-Suicide Severity Rating Scale using interactive voice response technology. J. Psychiatr. Res. 2010;44(16):1224–1228. doi: 10.1016/j.jpsychires.2010.04.025.

- 27. Mundt JC, Moore HK, Bean P. An interactive voice response program to reduce drinking relapse: a feasibility study. J. Subst. Abuse Treat. 2006;30(1):21–29. doi: 10.1016/j.jsat.2005.08.010.

- 28. Piette JD, Kerr E, Richardson C, Heisler M. Veterans Affairs research on health information technologies for diabetes self-management support. J. Diabetes Sci. Technol. 2008;2(1):15–23. doi: 10.1177/193229680800200104.

- 29. Stuart GW, Laraia MT, Ornstein SM, Nietert PJ. An interactive voice response system to enhance antidepressant medication compliance. Top Health Inf. Manage. 2003;24(1):15–20.