Abstract

Objective. To evaluate pharmacy students' self-assessment skills with an electronic portfolio program using mentor evaluators.

Design. First-year (P1) and second-year (P2) pharmacy students used online portfolios that required self-assessments of specific graded class assignments. Using a rubric, faculty and alumni mentors evaluated students' self-assessments and provided feedback.

Assessment. Eighty-four P1 students, 74 P2 students, and 59 mentors participated in the portfolio program during 2010-2011. Both student groups performed well overall, with only a small number of resubmissions required. P1 students showed significant improvements across semesters for 2 of the self-assessment questions; P2 students' scores did not differ significantly. The P1 scores were significantly higher than P2 scores for 3 questions during spring 2011. Mentors and students had similar levels of agreement with the extent to which students put forth their best effort on the self-assessments.

Conclusion. An electronic portfolio using mentors based inside and outside the school provided students with many opportunities to practice their self-assessment skills. This system represents a useful method of incorporating self-assessments into the curriculum that allows for feedback to be provided to the students.

Keywords: portfolio, assessment, self-assessment, professional development, mentor

INTRODUCTION

Healthcare professionals are responsible for continuing their education far beyond what they learn in the classroom. Self-directed, lifelong learning is essential to maintain and broaden the healthcare professional’s knowledge base. Mandatory continuing education (CE) for pharmacist licensure is designed to maintain and enhance competence and promote problem-solving and critical-thinking skills.1 Although these aspects of CE are important, CE is not always successful in achieving these goals. Continuing professional development (CPD) is a strategic approach to lifelong professional learning.2 It is not a replacement for CE but rather includes CE as a fundamental component.2,3 The CPD framework itself is cyclical and includes the following components: reflect, plan, act, evaluate.2 At the center of the cycle is record, which refers to the documentation of information from the surrounding components into a portfolio. The CPD framework can provide more structure to post–pharmacy school education compared with the current system of CE.

The most recent Accreditation Council for Pharmacy Education (ACPE) doctor of pharmacy (PharmD) curriculum standards emphasize the need for student self-directed learning. According to guideline 11.1, “…the college or school should encourage and assist students to assume responsibility for their own learning,” throughout the curriculum from beginning to end.4 Because the CPD process is grounded in lifelong and self-directed learning, its use should be explored as a means to help achieve these goals beginning in the classroom. Key steps in the CPD process could be presented to students early in their pharmacy degree program and reinforced throughout to allow students the opportunity to better prepare for self-directed learning.5

Self-assessment, the first step in the process of self-directed learning, is critical for subsequent CPD. Despite its integral role in both of these processes, the accuracy of self-assessments has been called into question.6-8 A link between competency and self-assessment accuracy has been suggested.6 That is, if a student lacks the relevant content knowledge, the student may overrate his or her performance. On the other hand, students who excel on an assignment may underrate their performance on a self-assessment.7,8 Given the potential for such inconsistencies, the results of self-assessments may be compromised. Several strategies have been suggested to address this potential issue. Directed self-assessment (ie, self-assessment guided by external sources such as preceptors, mentors, or practice guidelines and standards) has been recommended as an approach to enhance professional performance and improve the ability to self-assess.9 Directed self-assessment might be of particular benefit when used for student self-assessments.9 Giving feedback to students has allowed them to improve not only their performance but also their ability to accurately self-assess,7 providing a means of confirming both knowledge and competence.10

Determining how to develop self-assessment and self-directed learning skills as part of the pharmacy school curriculum is a challenge for pharmacy educators. Guideline 15.4 of the ACPE accreditation standards states that portfolios should be used throughout the curriculum to measure the achievement of professional competencies; self-assessment should be included in the portfolio along with faculty and peer assessment.4 Therefore, a portfolio program might be of value for integrating self-assessment assignments and the CPD process into the PharmD curriculum to progressively develop students' self-assessment and lifelong learning skills.

The educational benefits of portfolios, particularly in pharmacy education, have not been thoroughly documented. Briceland and Hamilton found the use of electronic portfolios successful in demonstrating achievement of ability-based outcomes in pharmacy students’ advanced pharmacy practice experiences (APPEs).11 However, this study did not examine the use of portfolios during the earlier years and did not focus on self-assessment skills.11 Factors to consider when developing a portfolio program include student buy-in (ie, student understanding of the relevance of the portfolio program to the learning process), faculty buy-in (ie, faculty understanding of the competencies upon which the portfolios are based), and presence of a student-faculty link (ie, use of a mentor to assist in student progress).12 The type of assessment method, whether quantitative or qualitative, also must be taken into consideration. Plaza and colleagues suggested that qualitative assessment methods might be the more appropriate choice because of the inherently qualitative nature of portfolios. In a review of the use of portfolios in undergraduate education, Buckley and colleagues also described several key aspects important in portfolio development, including reasonable time demands, specific aims and objectives that align with course outcomes, and support for students to build their reflective skills.13 With these considerations in mind, a portfolio program was implemented at the West Virginia University School of Pharmacy with a goal of developing students’ self-assessment skills using directed self-assessment and guidance from a mentor while introducing students to the CPD process.

No information was found in the literature describing the combined use of portfolios and mentors in pharmacy education for the purpose of evaluating students’ self-assessment skills and providing feedback. The objective of this study was to determine the effect on pharmacy students’ self-assessment skills of an electronic portfolio involving assigned mentors and multiple required self-assessments of completed and graded course assignments.

DESIGN

The electronic portfolio program at the West Virginia University (WVU) School of Pharmacy was initiated in fall 2009 with P1 students (class of 2013) and was phased in with each subsequent entering class. LiveText (LiveText, Inc., La Grange, IL) was selected as the Web-based electronic portfolio platform after a review of the electronic programs available at the time. Although no formal grade was assigned for the portfolio, completion of the portfolio assignments each year was a curricular requirement for all students.

Mentors for each year’s class were identified from among current/former faculty members, alumni, and preceptors. Serving as a mentor was strictly voluntary with no compensation provided for these services. All full-time faculty members along with selected alumni and preceptors were sent a note from the portfolio program coordinator (director of assessment) asking if they were interested in volunteering to serve as a mentor. The alumni and preceptors were identified based upon previous interactions with faculty members or the experiential coordinator and/or by achievements since graduation. Those who volunteered to participate as mentors were asked how many students (ranging from 1 to a maximum of 5) they were interested in mentoring. They were told that they would be a mentor to the same student(s) throughout pharmacy school. In fall 2010, individuals who were already serving as mentors for the P2 students were asked if they were interested in adding any students from the new incoming P1 class.

Once mentors were identified, the portfolio program coordinator assigned each student to a mentor. To the extent possible, students were assigned a portfolio mentor based on geographic location (eg, student’s hometown and mentor’s location). Geographic matching was designed to facilitate possible face-to-face meetings between students and mentors, especially for mentors who resided at a distant location from the school. Students and mentors were asked to contact each other, at a minimum, at the beginning and end of each semester simply to talk and determine if the students had any concerns or questions and to have face-to-face or phone conversations in addition to e-mail correspondence when possible. At the beginning of the school year, the P1 students were asked to write a brief autobiographical sketch for their mentors, and the mentors were asked to share information about themselves with their mentees. Students were also encouraged to ask their mentors non–portfolio-related questions and to obtain advice beyond the portfolio assignments.

At the start of the fall 2010 semester, all students and mentors were provided training/education about the portfolio requirements. Student training consisted of a live presentation at the beginning of the school year describing the purpose of the portfolio, the program requirements for that semester, and instructions for the use of LiveText. The training was conducted during class time and attendance was required. Students were also provided with examples of (de-identified) outstanding student self-assessments from the previous year; the students involved were asked permission to use their work. A presentation to the students was repeated at the beginning of the following semester to review the portfolio requirements for that semester. Additionally, all materials and instructions were posted on the health science center's electronic course management system for continued student access whenever desired. Mentors were provided with a description of the portfolio’s purpose, written program and semester requirements, instructions for using LiveText, and instructions for grading that included the examples of outstanding self-assessments provided to the students. Mentors were also given examples of unacceptable student self-assessment responses (ie, composites from unacceptable responses that were further modified so they did not represent any individual student’s work). Mentors were asked and encouraged to review all training materials, but because of their diverse physical locations and other work responsibilities, a formal, required training session was not held for mentors.

Based on student and mentor feedback received following the first year of portfolio use (ie, end of spring 2010), several changes were made in the program prior to the start of the fall 2010 semester. These changes included a decrease in the number of self-assessments (from 6 to 3 per semester), use of a single due date at the end of the semester for all self-assessments instead of the multiple due dates throughout the semester used the first year, slight revisions in the self-assessment questions to improve clarity, and improved training materials to serve as guides (ie, the addition of examples of excellent self-assessments for students and excellent and poor self-assessments for mentors). For comparative purposes, this evaluation focuses on the 2010-2011 academic year because it allows for analysis of 2 different classes (ie, 2014 and 2013) that included the modifications implemented.

During the 2010-2011 academic year, the portfolio consisted of the following assignments for both the class of 2013 (P2 students) and the class of 2014 (P1 students): 3 self-assessments of course-related assignments per semester (2 prechosen for all students and 1 chosen by each student from a list of options); and 1 professionalism submission per semester. Because the professionalism submission used different questions and a different rubric for grading, it was not evaluated as part of the current study.

To identify the assignments to use as the basis for student self-assessments each semester, the faculty coordinators of each required pharmacy course for that semester were asked to identify 1 or more assignments from their course that they felt would allow for appropriate self-assessment by students. Desired attributes of these assignments included that: (1) they had been graded/scored (regardless of whether formative, summative, or pass/fail), (2) feedback had been provided to the student about his/her performance, and (3) the assignment had required more than simple memorization/recall by the student. Each faculty coordinator was also asked to indicate whether the assignment(s) should be required or optional for the portfolio self-assessment and to identify the educational outcomes and general abilities that the assignment(s) had helped students to achieve. Educational outcomes and general abilities are school-level competencies that students are expected to fulfill by graduation. Educational outcomes are pharmacy focused, such as “Apply an evidence-based approach to care provision and pharmacy practice,” and the general abilities represent general skills, such as verbal and written communication skills and self-learning. Using this information, the school’s Educational Outcomes Assessment Committee (EOAC) identified the 2 course assignments each semester that the P2 and P1 students would be required to self-assess and those assignments from which the students could select their third self-assessment.

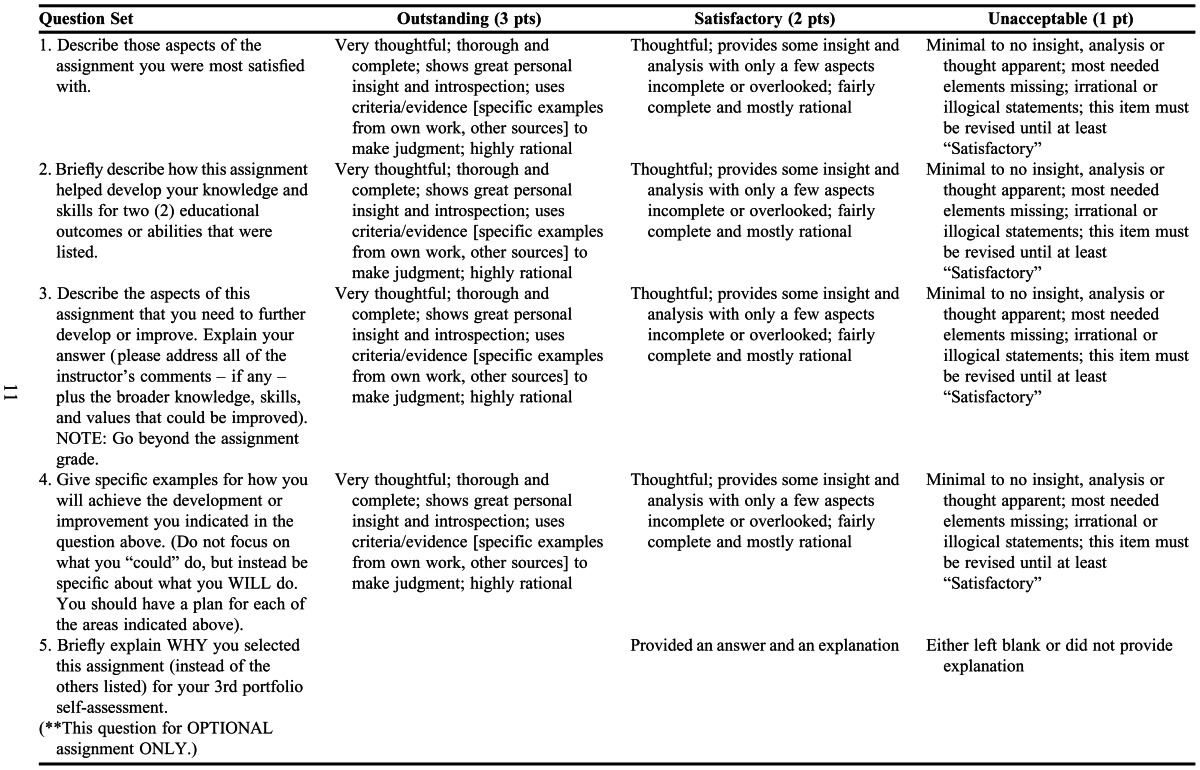

The students used a question template (Appendix 1) to self-assess their performance on the above-mentioned assignments. The template was designed and approved by the EOAC in keeping with the reflect and plan steps of the CPD cycle. Also, as part of question 2 (“Briefly describe how this assignment helped develop your knowledge and skills for 2 educational outcomes or abilities that were listed”), students chose 2 of the educational outcomes and/or general abilities listed for each assignment (relevant educational outcomes and/or general abilities were specified by the involved instructor) and were asked how the assignment helped them achieve those educational outcomes and/or general abilities. The self-assessments were completed in written form and submitted to the assigned mentor at the end of the fall and spring semesters through LiveText, along with an electronic copy of the respective graded assignment. The school’s director of assessment and a portfolio subcommittee of the EOAC managed the overall portfolio program, including necessary communications with mentors and students throughout the semester to answer questions and resolve any problems, and monitoring of assignment submissions and mentor grading. At the end of the fall 2010 and spring 2011 semesters, mentors evaluated the self-assessments submitted by their assigned students. The mentors did not grade the actual course assignments but rather evaluated the quality of the self-assessments. Mentors completed their evaluation using a rubric in which each self-assessment response was scored on a 3-point scale: outstanding=3, satisfactory=2, and unacceptable=1 (Appendix 1). Students receiving a score of 1 (unacceptable) on any question were required to resubmit the assignment to reflect changes suggested by their respective mentors. Each mentor would then review the assignment to ensure that the revisions were at least satisfactory (ie, score of 2). 
Students were considered to have successfully completed the portfolio requirement for each semester only when all assignments were submitted and all rubric scores were at least satisfactory. Students were told that failure to comply with the portfolio requirements each semester would result in their name being forwarded to the school’s academic standards committee for possible disciplinary action. Students were also told that remediation, in the form of a presentation, would be necessary if portfolio self-assessments were not submitted by the designated due dates.
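The resubmission and completion rules described above can be expressed as a short sketch. This is only an illustration of the stated rules, not the program's actual implementation (grading was done by mentors within LiveText); the function names and the list-of-scores layout are hypothetical, and the sketch covers the self-assessments only, not the professionalism submission.

```python
# Rubric levels as stated in the text: outstanding=3, satisfactory=2, unacceptable=1.
UNACCEPTABLE, SATISFACTORY, OUTSTANDING = 1, 2, 3


def needs_resubmission(question_scores):
    """Return True if any question on one self-assessment was scored unacceptable."""
    return any(score == UNACCEPTABLE for score in question_scores)


def semester_complete(self_assessments, required=3):
    """Return True when all required self-assessments are submitted and every
    rubric score is at least satisfactory.

    `self_assessments` is a list of per-question score lists, one list per
    submitted self-assessment (a hypothetical data layout).
    """
    if len(self_assessments) < required:
        return False
    return all(
        score >= SATISFACTORY
        for scores in self_assessments
        for score in scores
    )
```

For example, a self-assessment scored `[3, 2, 1, 2]` on the 4 questions would be returned to the student for revision, and the semester requirement would remain unmet until the revised response was scored at least 2.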

Mentors could type comments directly on the submitted self-assessments to provide students with their own perspective, as well as to give guidance for improvement. LiveText allowed mentors to easily insert comments by simply clicking the part of the text they wished to comment on or by typing feedback into an existing comment box. Completion of evaluations by mentors was readily verified within LiveText. Reminder e-mails were sent to the mentors when the student assignments were due, with a second reminder sent closer to the mentor evaluation due date.

EVALUATION AND ASSESSMENT

At the end of spring 2011, mentors and students were asked to complete a 5-point Likert scale questionnaire to obtain feedback about the portfolio program and ideas for improvement. Findings from these voluntary mentor and student questionnaires were shared with the EOAC. All statistical analyses were performed using SAS software, version 9.2 (SAS Institute Inc., Cary, North Carolina). The rubric scores were analyzed within groups using the Wilcoxon signed rank test and between groups using the Mann-Whitney U test. Questionnaire scores between student groups and between students and mentors were analyzed using the Mann-Whitney U test. Approval for this project was obtained from the West Virginia University Institutional Review Board.
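As an illustration of the between-group comparison named above, the following is a minimal standard-library sketch of a two-sided Mann-Whitney U test. It is not the SAS procedure used in the study: it assumes a normal approximation with a tie correction and no continuity correction, so its p values may differ slightly from SAS output. The function names are hypothetical, and the within-group Wilcoxon signed rank test is not shown.

```python
import math
from collections import Counter


def midranks(values):
    """Return 1-based ranks; tied values receive the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U for sample x, two-sided p value) via a normal approximation."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    combined = list(x) + list(y)
    ranks = midranks(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    tie_term = sum(t ** 3 - t for t in Counter(combined).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:  # every observation identical: no evidence of a difference
        return u1, 1.0
    z = (u1 - mean_u) / math.sqrt(var_u)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return u1, p_two_sided
```

Because rubric scores take only the values 1, 2, and 3, ties are the norm rather than the exception, which is why the sketch uses midranks and a tie-corrected variance rather than the simpler untied formula.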

The entire classes of P2 students (n=74) and P1 students (n=84) participated in the portfolio program during the 2010-2011 academic year. Fifty-nine mentors participated in the program: 18 were mentors for P2 students only, 26 were mentors for P1 students only, and 15 were mentors for both P2 and P1 students. About half of the mentors (31; 51%) were full or jointly funded faculty members, with the remainder consisting of alumni preceptors, alumni practicing out of state, and a former faculty member. Thirty individuals were located in the Morgantown, West Virginia, area, with the remainder outside the local area, including 7 out-of-state residents. An average of 2 students were assigned per mentor, with a range of 1 to 5 students per mentor.

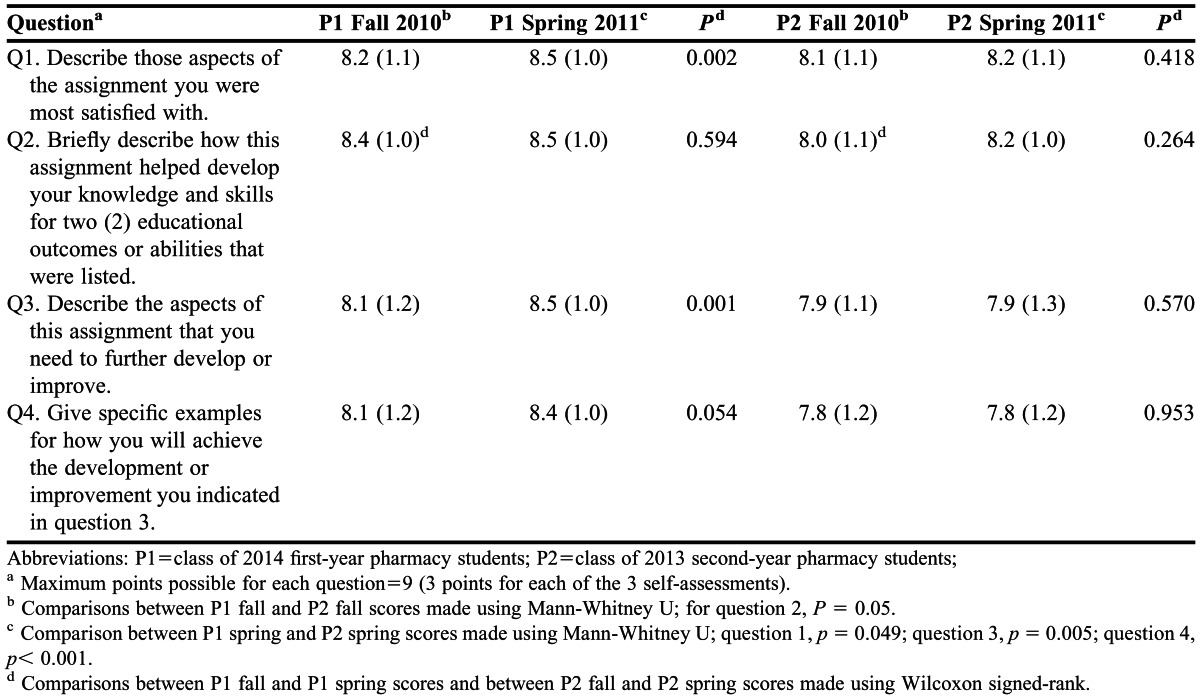

The P1 and P2 students’ fall and spring semester rubric scores for their self-assessments are shown in Table 1. Significant differences were found for the P1 student scores between the fall and spring semesters for self-assessment question 1 (“Describe those aspects of the assignment you were most satisfied with”) and question 3 (“Describe the aspects of this assignment that you need to further develop or improve”) (p=0.002 and p=0.001, respectively). No significant differences were found between the P1 fall and spring scores for self-assessment question 2 (“Briefly describe how this assignment helped develop your knowledge and skills for 2 educational outcomes or abilities that were listed.”) or question 4 (“Give specific examples for how you will achieve the development or improvement you indicated in question 3.”) (p=0.59 and p=0.05, respectively). When fall and spring semester self-assessment question scores were compared for the P2 students, no significant differences were found (p>0.05 for all questions).

Table 1.

Comparisons of Rubric Scores From Mentor Evaluations

Spring rubric scores represented 1 year of portfolio use for P1 students and 2 years of use for P2 students. Spring scores for questions 1, 3, and 4 were significantly higher for the P1 students than for the P2 students (p=0.049, p=0.005, and p<0.0001, respectively). No significant difference was found between the classes’ spring scores for question 2 (p=0.071). Comparison of fall rubric scores between classes showed no significant differences (p≥0.05 for all questions).

To help eliminate the possible effect that different mentors might have had on the differences in scores between the P1 and P2 students, a separate analysis was conducted using only students from the 15 mentors who were assigned students from both classes. Twenty P1 students and 34 P2 students were assigned to these 15 mentors. Comparisons of the scores for these P1 and P2 students for each question found no significant differences between groups for either the fall or spring semesters (p value range 0.246-1.000; Mann-Whitney U).

In both classes, there were fewer total resubmissions requested in the spring than in the fall semester. During fall 2010, 22 self-assessments were resubmitted (13 for P1 students; 9 for P2 students), compared with 11 self-assessments (5 for P1 students; 6 for P2 students) during spring 2011. The difference in the number of resubmissions between spring 2011 and fall 2010 was significant for the P1 class (p=0.04) but not for the P2 class (p=0.41).

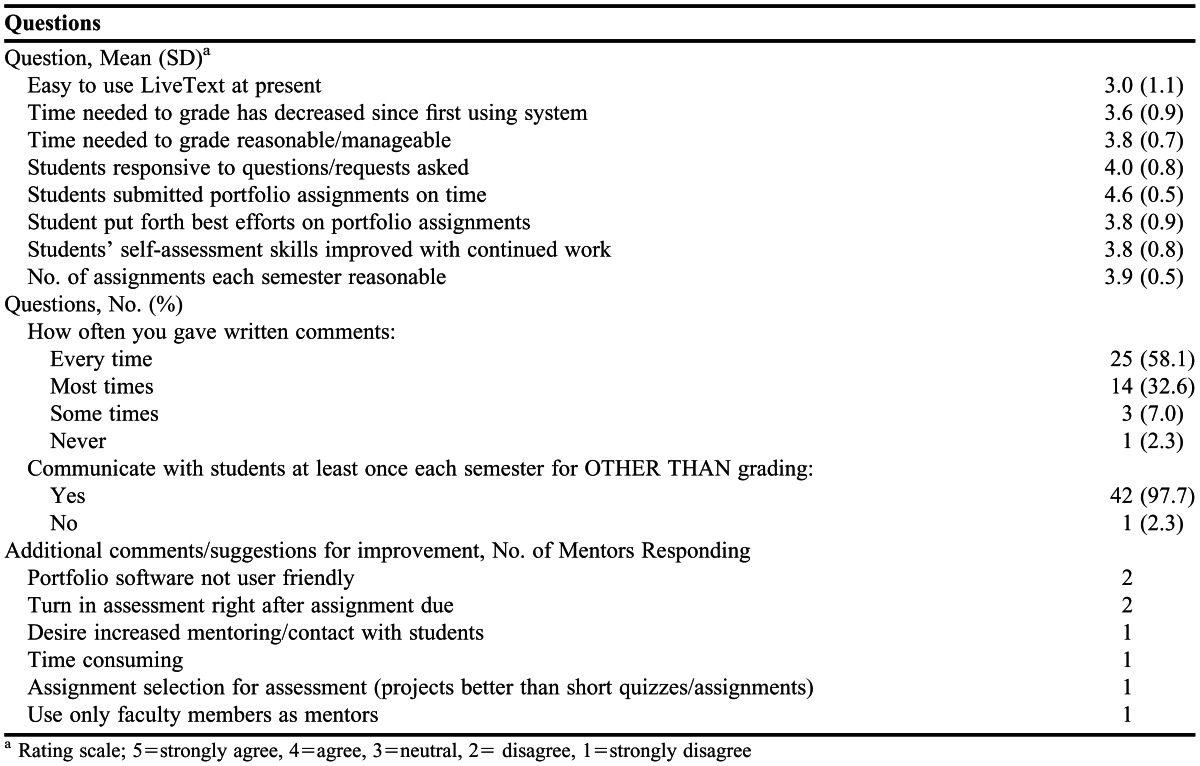

At the end of the spring 2011 semester, 43 mentors (72.9%), 43 P1 students (51.2%), and 27 P2 students (36.5%) completed the voluntary questionnaire. The mentor questionnaire responses are shown in Table 2. Approximately 90% of the mentors indicated they provided written comments to their students for most to all of the self-assessments. The mentors agreed that students were responsive to their comments (mean and median=4.0) and generally agreed that the time needed to grade the self-assessments was reasonable and manageable (mean=3.8; median=4). The overwhelming majority of the responding mentors also stated that they communicated with the students at least once a semester for purposes other than grading.

Table 2.

Mentor Feedback on the Portfolio Program (N=43)

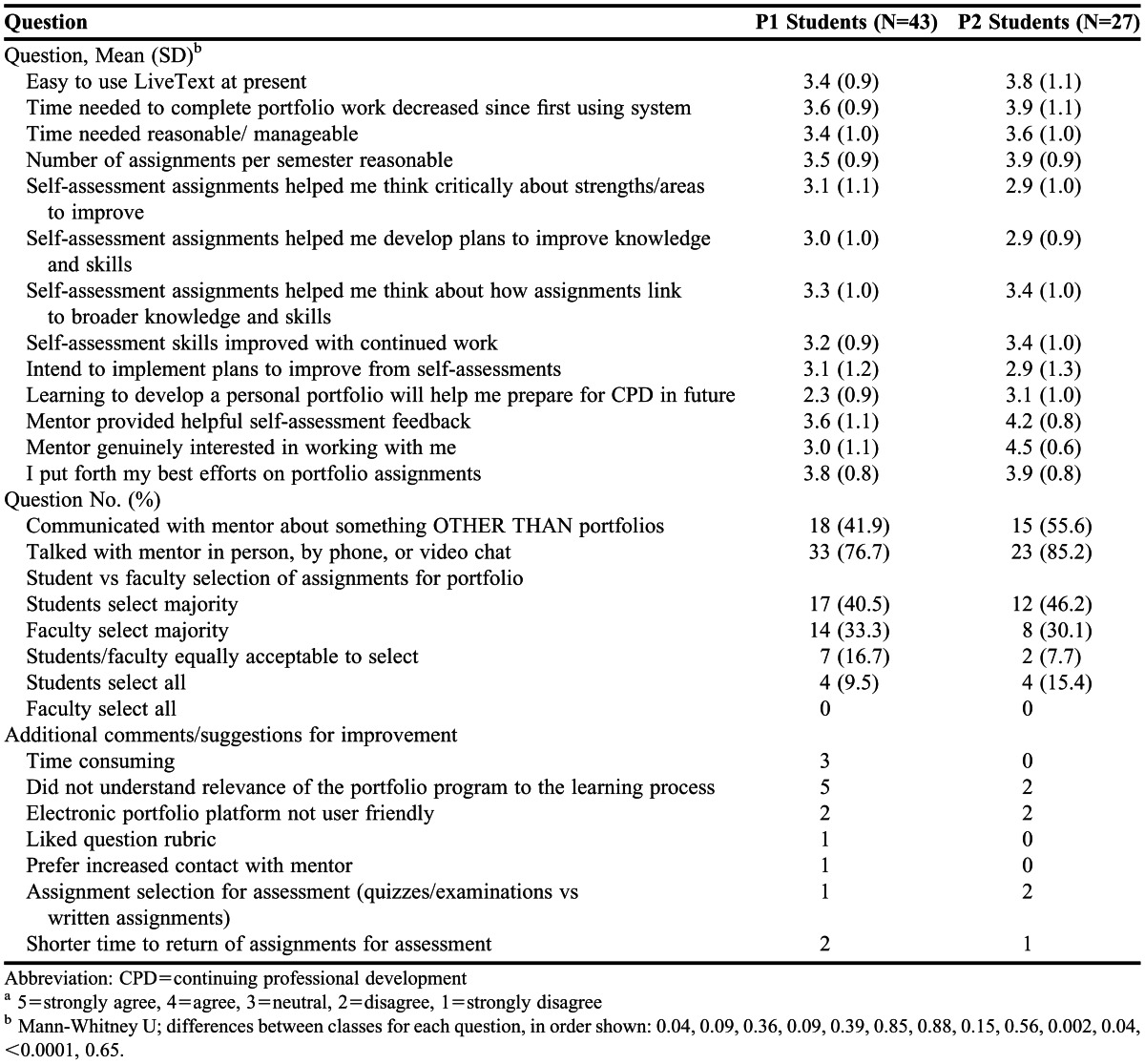

The student questionnaire results are shown in Table 3. When student responses were compared by class, the P2 students were slightly but significantly more positive than were the P1 students (p=0.04) regarding LiveText being easy to use. Compared with the P1 students, the P2 students felt more strongly that the mentors provided helpful feedback (p=0.04) and were genuinely interested in working with them (p<0.0001). Although P2 students’ mean response regarding whether the program helped prepare them for CPD in the future was neutral, it was still significantly more positive than that of P1 students, who generally disagreed with this statement (p=0.002). Identical or similar percentages of students and mentors, respectively, strongly agreed (18.6% vs 18.6%), agreed (58.6% vs 55.8%), and disagreed (8.6% vs 11.6%) with the statement that students put forward their best efforts on the self-assessments.

Table 3.

Student Feedback on Portfolio Program

DISCUSSION

Historically, the focus of the portfolios in our program has been on the development of self-assessment skills, even though several therapeutics case studies have also been submitted and graded through LiveText using a standardized rubric. The self-assessment portfolio assignments have been developed in a manner that mimics the initial steps of the CPD cycle (ie, reflect and plan2) during the first 3 classroom-based years of the curriculum.

Because mentors have been identified as important to the development of students’ self-assessment skills with portfolio use,12,13 mentors were assigned to students. A relatively large number of mentors was desirable to maintain a fairly small and manageable workload for all participants, and the use of electronic portfolios allowed for these individuals to be based anywhere in the nation, which meant that alumni practitioners could be invited to participate. Based on responses to the feedback questionnaire, a manageable workload for mentors was successfully maintained. Mentors tended to agree that both the time required for grading and the number of assignments each semester were reasonable.

There were some challenges with involving a large number of mentors in the portfolio program. These included identifying potential mentors with an interest in participating, training/educating the mentors to be effective evaluators of the self-assessments, the need for ongoing communications with a large pool of mentors, and the potential for inconsistent grading of the student self-assessments across mentors (although this was not raised as a concern by those students completing the questionnaire). Although the initial set-up and continual management of the program with a large pool of mentors is somewhat time-consuming, it is much less time-intensive than having only 1 or a small number of faculty members review all the portfolio self-assessments. For example, assuming each self-assessment review takes about 20 minutes (a reasonable estimate based on the investigators’ experience) with 3 self-assessments per semester and approximately 80 students per class with 3 concurrent classes of students using the portfolios, those few reviewers would need to invest 240 hours each semester (or 480 hours per year) in the evaluations. In contrast, the director of assessment and portfolio subcommittee members estimated they spent about 40 hours total each year identifying and managing mentors and preparing training materials.
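The workload estimate above can be reproduced with a few lines of arithmetic. The sketch below uses only the figures stated in the text; the constant and function names are illustrative.

```python
# Figures taken from the estimate in the text.
MINUTES_PER_REVIEW = 20             # investigators' estimate per self-assessment
SELF_ASSESSMENTS_PER_SEMESTER = 3   # per student
STUDENTS_PER_CLASS = 80             # approximate class size
CONCURRENT_CLASSES = 3              # classes using the portfolio at one time
SEMESTERS_PER_YEAR = 2


def centralized_review_hours_per_semester():
    """Hours needed if a small group of faculty reviewed every self-assessment."""
    total_minutes = (
        MINUTES_PER_REVIEW
        * SELF_ASSESSMENTS_PER_SEMESTER
        * STUDENTS_PER_CLASS
        * CONCURRENT_CLASSES
    )
    return total_minutes / 60


def centralized_review_hours_per_year():
    """Annual total across both semesters."""
    return centralized_review_hours_per_semester() * SEMESTERS_PER_YEAR
```

With these inputs, 20 × 3 × 80 × 3 = 14,400 minutes, or 240 hours per semester and 480 hours per year, compared with the roughly 40 hours per year reported for coordinating the mentor pool.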

Use of assignments, exercises, quizzes, etc., which had already been graded and returned by the involved course instructor as the basis for the portfolio self-assessment assignments was consistent with the definition of directed self-assessment.9 The students knew the instructors’ expectations and the aspects of the assignments they missed. Because mentors could view the graded students’ work attached to each self-assessment, their evaluations of the self-assessments and suggestions for identifying and improving students’ weaknesses served to guide and direct students’ skills in this area.

Grades on the self-assessments were generally good across classes, as indicated by the relatively small number of resubmissions requested each semester. The decrease in the number of resubmissions from the fall to spring semesters in both classes reflects a general improvement in self-assessment skills throughout the year. The difference was significant in the P1 class, although smaller numbers of P2 students were asked to resubmit self-assessments in both semesters. When each question was examined individually, the P2 students tended to have more difficulty with the questions that asked them to identify areas for improvement and then to give specific examples of how they would achieve these improvements.

Although the P1 students showed significant improvements from the fall to the spring semester as well as higher scores than the P2 class during the spring semester, a few points must be considered when analyzing these differences. The P2 students were using portfolios for their second year, and changes had been made in their portfolio requirements from the previous year (ie, the initial year of portfolio use). Examples of excellent self-assessments (for both students and mentors) and poor self-assessments (for mentors only) were not provided in the initial year because there had been no prior experience to draw upon. Mentors may have graded more leniently during the initial year and then more rigorously during the following year after reviewing the examples, so that some improvement in students’ self-assessment scores during year 2 was counterbalanced by more rigorous mentor grading. However, P1 mentors were given the same examples and grading instructions, and improvement was still seen in their students’ work between the fall and spring semesters for some of the self-assessment questions. Because the mentors were not identical for both class years, several of the P1 mentors may have been “easier” graders compared with P2 mentors. This hypothesis is supported to an extent by the finding of no significant difference in the P1 and P2 scores when comparing only the students of mentors who had both P1 and P2 students. However, given the relatively small number of these mentors involved (15), there was likely insufficient power to detect small differences in scores.

Students on average tended to agree that the time needed to use the electronic portfolio decreased as they used the system, the number of portfolio assignments per semester was reasonable, and they put forth their best efforts on the self-assessments. The responding students were generally neutral regarding the portfolio self-assessments helping them think more critically about strengths and weaknesses, develop plans for improvement, and prepare for CPD. These responses may be attributable to inconsistent student buy-in for portfolio use, an important factor to consider when developing a portfolio program.12 Other possible factors include a lack of knowledge and appreciation by early-level students regarding the need for continuing professional education and the CPD process and/or a disconnect between self-assessment and actual need for improvement, which has been demonstrated across a variety of health professionals and health professions students.6-8,14,15 A lack of understanding about CPD by newer students is consistent with the finding of significantly greater agreement (although still neutral) among P2 students regarding the statement that self-assessments helped to prepare them for future CPD.

A potential disadvantage of using a large number of mentors, several of whom were at distant locations from the school, was the difficulty of ensuring that there were sufficient and beneficial mentor-student interactions from the perspective of both individuals. On average, the P2 students responded significantly more positively than did P1 students to statements regarding the helpfulness of mentor feedback, although overall most students “agreed” that their mentor had a genuine interest in working with them. In reviewing the comments made by mentors on the students’ self-assessments, the investigators noted that many of the mentors from both years provided thoughtful comments that were encouraging and/or instructional. The mentors for the P2 students included the first faculty members to express interest in and to volunteer for the program, so they may have had greater interest in the program than some of the faculty mentors for the P1 students. Different groups of alumni and preceptors were contacted regarding their interest in serving as mentors for each class. Prior to the start of the 2011-2012 academic year, all current mentors were asked if they wanted to continue serving as mentors. Only 2 of the 33 individuals (6%) who had served as mentors for the P2 students were either not interested or unable to continue serving, compared with 10 of the 46 mentors (22%) for the P1 students. The reasons mentors gave for wishing to discontinue their service were varied and primarily involved work-related or personal issues that decreased their available time (eg, switching jobs, short-staffed at work, other personal time demands, illness). Thus, more of the P1 mentors might have been less able to work with their students compared with the P2 mentors. Alternatively, the mentors for the P2 students had an extra year to practice their mentoring skills, which could also explain some of the differences in student opinions.

A few mentors and students from both classes felt that the electronic portfolio platform was not user friendly, although this was not a comment made by the majority of participants, and ease of use generally improved with time. An important question was the extent to which students and mentors felt that the students put forth their best efforts for the self-assessments, as opposed to writing self-assessments that met the bare minimum requirements. Mentor feedback was positive overall, and nearly identical numbers of students and mentors agreed or strongly agreed that best efforts were put forth. When asked who should select the assignments to include for the self-assessments, the largest number of responding students indicated that students should select the majority of those assignments to include. During the first year that portfolios were used, the EOAC selected all the portfolio self-assessment assignments. This practice was changed to 2 selected by the EOAC and 1 by the student for the 2010-2011 year, when the number of self-assessments was decreased to 3 a semester. Whether to reverse this change (2 student-selected assignments, 1 faculty-selected assignment) with further portfolio use will be considered in the future.

One problem with portfolio use that frustrated some students and portfolio subcommittee members occurred when course instructors did not return graded assignments to students until shortly before the self-assessment submission deadline. At least 2 portfolio-related course assignments per semester fell into this category. Efforts have been made this year to ensure that assignments selected for the portfolio are those that are due earlier in the semester to allow sufficient time for faculty grading and assignment return to the students. Also, the importance of timely grading and feedback provision was emphasized to course instructors.

Several future activities based on the portfolio experiences thus far are planned. Students’ self-assessment scores will continue to be monitored to determine if they improve as they progress through the classroom curriculum. Beginning in 2011-2012, the presentation covering the portfolio purposes, contributions to CPD skill development, and expectations given to the P1 students at the start of the fall semester was repeated in its entirety in the spring for the P1 students and also provided to the P2 students in the fall as reinforcement. In the P4 experiential year, students will be asked to complete all the steps in the CPD cycle for 1 or more self-assessments to illustrate how all the steps fit together to improve knowledge and skills. Feedback will continue to be obtained from students and mentors to strengthen the portfolio program.

Students’ responses in the self-assessments will also be used for broad-based student-learning assessment and program assessment. Student analyses of the areas they need to improve upon for specific assignments will be examined to help identify common themes that might indicate the need for curricular changes. Students’ self-assessments of certain course assignments and activities will be shared with the involved faculty member(s) to allow instructors to identify aspects of the assignments they might wish to change in the future.

SUMMARY

Development of students’ self-assessment skills is emphasized in the ACPE guidelines for the PharmD curriculum and is also critical for continuing professional development as pharmacy practitioners. In addition to their use for other assessment and evaluation purposes, portfolios can provide many opportunities for students to practice and develop their self-assessment skills throughout the curriculum in a manner that mimics the CPD cycle. Regardless of their physical location, preceptors and alumni can successfully serve as mentors and guides for student electronic portfolio self-assessments in the pharmacy program. Potential advantages of this model include the distribution of mentoring responsibilities among a large number of individuals while giving students the vantage point of external practitioners. However, care must be taken to recruit mentors who are interested in working with the students and to ensure that appropriate training and education are provided to all involved.

ACKNOWLEDGEMENTS

We thank the Educational Outcomes Assessment Committee members, with special thanks to the portfolio subcommittee members Carla See, Mary Stamatakis, and Ginger Scott for their assistance in setting up the portfolios for student use. The ideas expressed in this manuscript are those of the authors and in no way are intended to represent the position of Mylan Pharmaceuticals, Inc.

Appendix 1.

Self-Assessment Evaluation Rubric

REFERENCES

- 1. Accreditation Council for Pharmacy Education. Definition of continuing education for the profession of pharmacy. http://www.acpe-accredit.org/ceproviders/standards.asp. Accessed March 25, 2011.

- 2. Rouse MJ. Continuing professional development in pharmacy. Am J Health-Syst Pharm. 2004;61:2069–2076. doi: 10.1093/ajhp/61.19.2069.

- 3. Accreditation Council for Pharmacy Education. Continuing professional development (CPD). http://www.acpe-accredit.org/ceproviders/CPD.asp. Accessed March 25, 2011.

- 4. Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree. http://www.acpe-accredit.org/deans/standards.asp. Accessed March 25, 2011.

- 5. Janke KK. Continuing professional development: don’t miss the obvious. Am J Pharm Educ. 2010;74(2):Article 31. doi: 10.5688/aj740231.

- 6. Austin Z, Gregory PAM, Galli M. “I just don’t know what I am supposed to know:” Evaluating self-assessment skills of international pharmacy graduates in Canada. Res Social Adm Pharm. 2008;4(2):115–124. doi: 10.1016/j.sapharm.2007.03.002.

- 7. Colthart I, Bagnall G, Evans A, et al. The effectiveness of self-assessment on the identification of learner needs, learner activity, and impact on clinical practice: BEME Guide no. 10. Med Teach. 2008;30(2):124–145. doi: 10.1080/01421590701881699.

- 8. Austin Z, Gregory PAM, Chiu S. Evaluating the accuracy of pharmacy students’ self-assessment skills. Am J Pharm Educ. 2007;71(5):Article 89. doi: 10.5688/aj710589.

- 9. Dornan T. Self-assessment in CPD: lessons from the UK undergraduate and postgraduate education domains. J Contin Educ Health Prof. 2008;28(1):32–37. doi: 10.1002/chp.153.

- 10. Motycka CA, Rose RL, Ried D, Brazeau G. Self-assessment in pharmacy and health science education and professional practice. Am J Pharm Educ. 2010;74(5):Article 85. doi: 10.5688/aj740585.

- 11. Briceland LL, Hamilton RA. Electronic reflective student portfolios to demonstrate achievement of ability-based outcomes during advanced pharmacy practice experiences. Am J Pharm Educ. 2010;74(5):Article 79. doi: 10.5688/aj740579.

- 12. Plaza CM, Draugalis JR, Slack MK, Skrepnek GH, Sauer KA. Use of reflective portfolios in health sciences education. Am J Pharm Educ. 2007;71(2):Article 34. doi: 10.5688/aj710234.

- 13. Buckley S, Coleman J, Davison I, et al. The educational effects of portfolios on undergraduate student learning: a Best Evidence Medical Education (BEME) systematic review: BEME Guide No. 11. Med Teach. 2009;31(4):340–355. doi: 10.1080/01421590902889897.

- 14. Davis DA, Mazmanian PE, Fordis M, Harrison RV, Thorpe KE, Perrier L. Accuracy of physician self-assessment compared with observed measures of competence. JAMA. 2006;296(9):1094–1102. doi: 10.1001/jama.296.9.1094.

- 15. Liaw SY, Scherpbier A, Rethans JJ, Klainin-Yobas P. Assessment for simulation learning outcomes: a comparison of knowledge and self-reported confidence with observed clinical performance. Nurse Educ Today. 2012;32(6):e35–e39. doi: 10.1016/j.nedt.2011.10.006.