ABSTRACT

BACKGROUND

Transition to a Patient-Centered Medical Home (PCMH) is challenging in primary care, especially for smaller practices.

OBJECTIVE

To test the effectiveness of providing external supports, including practice redesign, care management and revised payment, compared with no support, in the transition to PCMH among solo (fewer than two providers) and small (two to ten providers) primary care practices over 2 years.

DESIGN

Randomized Controlled Trial.

PARTICIPANTS

Eighteen supported practices (intervention) and 14 control practices (controls).

INTERVENTIONS

Intervention practices received 6 months of intensive, and 12 months of less intensive, practice redesign support; 2 years of revised payment, including the cost of National Committee for Quality Assurance (NCQA) Physician Practice Connections®─Patient-Centered Medical Home™ (PPC®-PCMH™) submissions; and 18 months of care management support. Controls received yearly participation payments plus the cost of PPC®-PCMH™ submissions.

MAIN MEASURES

PPC®-PCMH™ at baseline and 18 months for all practices, plus 7 months for intervention practices.

KEY RESULTS

At 18 months, 5 % of intervention practices and 79 % of control practices were not recognized by NCQA; 10 % of intervention practices and 7 % of controls achieved PPC®-PCMH™ Level 1; 5 % of intervention practices and 0 % of controls achieved PPC®-PCMH™ Level 2; and 80 % of intervention practices and 14 % of controls achieved PPC®-PCMH™ Level 3. Intervention practices had 27 times the odds of controls of moving into a higher category of PPC®-PCMH™ improvement, irrespective of practice size (odds ratio 27; 95 % CI 5–157; p < 0.001). Among intervention practices, a multilevel ordinal piecewise model of change showed a significant and rapid 7-month effect (p = 0.01), which was twice as large as the sustained effect over the subsequent 12 months (p = 0.02). Doubly multivariate analysis of variance showed significant differential change by condition across PPC®-PCMH™ standards over time (time × group interaction, p = 0.03). Intervention practices improved on eight of nine standards; controls improved on three of nine (PPC-1, p = 0.009; PPC-2, p = 0.005; PPC-3, p = 0.007).

CONCLUSIONS

Irrespective of size, practices can make rapid and sustained transition to a PCMH when provided external supports, including practice redesign, care management and payment reform. Without such supports, change is slow and limited in scope.

KEY WORDS: patient centered care, care management, randomized trials, primary care, health care delivery

INTRODUCTION

Transition to a Patient-Centered Medical Home (PCMH) has become a priority for primary care practices as a means of improving patient outcomes while reducing costs.1,2 The evidence base for evaluating the effectiveness of this model is growing as demonstration projects begin to report outcomes3 showing reduced hospitalizations and/or emergency room visits,4–6 improved quality,1,4,7 and the benefits of change facilitators.8 Despite promising reports, there is scarce evidence from demonstrations conducted in generalizable settings, using robust evaluation methods, and employing detailed analyses of the pace and ease with which components of the model are being adopted.9

The majority of primary care practices in the US are of small or medium size, and are not part of an integrated health system.10 There is growing concern about the capacity of small practices to make the PCMH transition.11–14 Early evaluation reports on successful transitions, while informative,5,7,8,15 lack relevance for small practices. As others have reported,11 smaller practices lack many of the characteristics associated with successful change in some demonstrations, such as a history of successful change.6,7,15 They also lack the organizational resources available in large integrated systems5,16 or statewide organizations.1,4 Thus, it is unclear whether small, independent practices can successfully transition and at what pace.

The use of rigorous study designs, while often difficult in the context of routine clinical practice, is important. Such designs allow validation of earlier non-controlled evaluations, and they distinguish change due to the intervention itself from change due to practice characteristics or the passage of time alone. Demonstrations have rarely used randomized designs; most have either lacked comparison sites,17 been implemented in older, sicker or specialized populations,18 used comparisons of convenience,5 or lacked revised payment arrangements,19 thought to be essential to the transition.2

While the number of practices recognized as PCMH by the National Committee for Quality Assurance (NCQA) is growing daily,14,20 it is challenging for practices to change the wide range of practice components8,21–24 required by the multidimensional PCMH model. Such reports include little detail on change along the PCMH dimensions17 of enhanced access to a physician-led team, continuity and coordination of comprehensive care, promotion of patient self-management, and use of evidence-based medicine facilitated by registries, health information technology and exchange.2,25 Detail is especially lacking for practices that are unsuccessful in their attempt to achieve recognition.14 Thus, the evidence required to inform decisions about where to apply resources to facilitate or accelerate change is lacking.23

This paper reports on a study, conducted by EmblemHealth, the largest health insurer based in New York,26 that addresses many of the current limitations in the growing evidence base. The study provides a unique view of the implementation of the PCMH in adult primary care, using the first randomized, controlled, longitudinal trial that operationalizes the key principles of the PCMH model, including revised payment.2 The demonstration posits a rapid transition (after 6 months of support), even among solo and small, independent practices, and tracks change over time in intervention versus control practices.

The research questions addressed in this paper are:

Can independent practices of varying sizes successfully transition to PCMH, with or without external support, and in what time frame?

What components of the PCMH model are more or less amenable to change, with or without external support?

METHODS

Design

This two-year, randomized, controlled trial was conducted between January 2008 and December 2010. Practices were solo (fewer than two providers), small (two to ten providers), or multisite (solo or small). Participating practitioners were specialists in internal, general, or family medicine, and their practices served a mix of HMO, PPO, Medicaid and Medicare patients. The University of Connecticut Health Center Institutional Review Board determined that the study did not constitute human subjects research.

Recruitment

Practices were recruited and enrolled by EmblemHealth in two waves from January to June 2008, based on a starting list of 831 practices in the five primary counties of New York City. After rank-ordering by EmblemHealth’s data on patient panel size, 243 solo and small practices were targeted; recruitment was planned to end once the goal of 50 enrolled practices was reached. Wave One used mailings, calls, and provider informational sessions. Wave Two added outreach and site visits with eligible, interested practices, followed by signed enrollment consent.

Randomization

Practices were randomly assigned to condition (intervention or control) by external evaluators (JAB) by use of 1:1 urn randomization, stratified on four factors: 1) number of participating providers at the practice (one versus two or more); 2) Federally Qualified Health Center status (yes or no); 3) median household income by practice zip code; 4) electronic health record status at baseline (yes or under contract versus none).
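The stratified urn allocation described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the evaluators' procedure: the urn parameters (one starting ball per arm, one ball of the opposite arm added after each draw), the dichotomization of zip-code median household income at a cutoff, and the names `Urn`, `stratum`, and `randomize` are all hypothetical. One urn is kept per stratum, so balance is pursued within each combination of the four stratification factors.

```python
import random

ARMS = ("intervention", "control")


class Urn:
    """Urn design UD(alpha, beta) for two arms (illustrative parameters).

    The urn starts with `alpha` balls per arm. Each draw assigns the drawn
    arm, then adds `beta` balls of the *other* arm, so the arm that is
    behind becomes more likely to be drawn next.
    """

    def __init__(self, alpha: int = 1, beta: int = 1):
        self.beta = beta
        self.balls = {arm: alpha for arm in ARMS}

    def draw(self, rng: random.Random) -> str:
        r = rng.randrange(sum(self.balls.values()))
        chosen = ARMS[-1]
        for arm in ARMS:
            if r < self.balls[arm]:
                chosen = arm
                break
            r -= self.balls[arm]
        for arm in ARMS:
            if arm != chosen:
                self.balls[arm] += self.beta
        return chosen


def stratum(practice: dict, income_cutoff: float) -> tuple:
    """Stratum key from the four factors; the income cutoff is an assumption."""
    return (
        practice["providers"] >= 2,               # one vs two or more providers
        practice["fqhc"],                         # FQHC status (yes/no)
        practice["zip_income"] >= income_cutoff,  # zip-code income, dichotomized
        practice["ehr"],                          # EHR (yes/under contract) vs none
    )


def randomize(practices: list, income_cutoff: float, seed: int = 0) -> dict:
    """Assign each practice to an arm, using a separate urn per stratum."""
    rng = random.Random(seed)
    urns: dict = {}
    assignments = {}
    for practice in practices:
        urn = urns.setdefault(stratum(practice, income_cutoff), Urn())
        assignments[practice["id"]] = urn.draw(rng)
    return assignments


# Hypothetical practices, for illustration only
demo = [
    {"id": "A", "providers": 1, "fqhc": False, "zip_income": 48_000, "ehr": False},
    {"id": "B", "providers": 4, "fqhc": False, "zip_income": 72_000, "ehr": True},
    {"id": "C", "providers": 1, "fqhc": False, "zip_income": 51_000, "ehr": False},
]
print(randomize(demo, income_cutoff=60_000, seed=42))
```

Compared with simple coin-flip allocation, an urn design tends to keep arms closer to 1:1 within small strata while leaving each individual assignment unpredictable.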

Time Line and Protocol

After randomization, intervention practices received a three-part intervention package, delivered over 2 years.

Practice Redesign Support

Intervention practices received 6 months of intensive, and 12 months of less intensive, practice redesign support. Support was tailored to the individual needs of each practice, and was provided by consultants from Enhanced Care Initiatives, Inc., under contract with EmblemHealth. Each intervention practice was assigned a practice redesign facilitator who engaged providers on-site at the practice, and assisted in conducting the PPC®-PCMH™ self-assessment, including the identification of practices’ top three diagnoses to operationalize into guideline-driven care. Practice redesign facilitators also: provided hands-on education about the PCMH model using a standardized manual; enhanced or initiated the use of an electronic health record; developed practice-specific policies, procedures, and work plans/flows; reviewed coding and payer contracts to optimize reimbursement; developed practice-specific care management protocols; engaged staff in health and cultural literacy; and procured and implemented patient communication portals.

Care Management Support

Intervention practices received 18 months of care management support provided by nurse care managers embedded in the practice team (1.0 full-time equivalent (FTE) care manager per 1,000 EmblemHealth patients, regardless of severity). Nurse care managers worked with practices to: identify, contact, and engage complex EmblemHealth patients; educate and provide guideline-based care including medication reconciliation; organize self-care group visits; provide care management pre-appointment and post-appointment; and facilitate transitions after inpatient stays. Nurse care managers educated practices on population management, monitoring of chronic conditions, developing patient care plans, coordinating care with other providers, and developing open access scheduling.

Revised Payment

Intervention practices received 2 years of a revised payment plan, including the cost of NCQA’s Physician Practice Connections®─Patient-Centered Medical Home™ (PPC®-PCMH™) application fee, and a Pay-For-Performance plan (P4P), in addition to their EmblemHealth negotiated fee. P4P was based on maximums of $2.50 per member per month for improvements in 'medical homeness' (achieving and/or increasing the level of PPC®-PCMH™ Recognition), and $2.50 per member per month for improvements in predetermined clinical quality and patient experience targets. Controls received participation payments of $5,000/year, if compliant with data submission requirements.

To incentivize practice retention, both conditions received a proportion of projected first year payments in advance. For improvements in medical homeness, intervention practices were paid a mean (standard deviation, SD) of $13,166 ($12,468) in Year 1, ranging from $1,416 to $37,520; Year 2 payments were similar, with a mean (SD) of $10,690 ($9,167), ranging from $0 to $34,800. All control practices received their annual $5,000 participation payment during Year 1, although only 36 % received it during Year 2.

Measures

Data

As the primary outcome, PPC®-PCMH™ data were collected at baseline (enrollment), 7 months after baseline (completion of intensive support) and 18 months after baseline (completion of less-intensive support) for intervention practices, and at baseline and 18 months for controls.

Medical Homeness

The extent to which participating practices achieved medical homeness over time was assessed using NCQA’s 2008 PPC®-PCMH™ recognition program.27 The PPC®-PCMH™ evaluates practices on nine PCMH standards that assess many, but not all, of the components of the PCMH model: PPC-1) patient access and communication; PPC-2) patient tracking; PPC-3) care management; PPC-4) patient self-management; PPC-5) e-prescribing; PPC-6) test tracking; PPC-7) referral tracking; PPC-8) performance reporting; and PPC-9) advanced electronic communication. Standards are composed of multiple elements providing detail-level data, ten of which are considered “Must Pass”. Practices completed a self-study following published PPC®-PCMH™ Guidelines27 before the online submission process. Recognition followed NCQA procedures, based on an overall score (0–100 point scale, higher scores being better) and on ten “Must Pass” elements, each requiring a minimum score of 50 %. NCQA recognition requires: Level 1: 25–49 points and 5/10 “Must Pass” elements; Level 2: 50–74 points and 10/10 “Must Pass” elements; and Level 3: 75–100 points and 10/10 “Must Pass” elements. Multisite practices could choose to submit PPC®-PCMH™ scores at the location level. Therefore, the N for PPC®-PCMH™ data throughout reflects the number of practice locations actually submitted, which differs slightly from the number of practices enrolled before PPC®-PCMH™ submission. PPC®-PCMH™ recognition levels are publicly available on the NCQA website; data on the detail scores require a release from NCQA. Six practices did not sign data releases (one intervention and five controls).
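The recognition rules above can be expressed as a small lookup. This is a sketch under one assumption not stated in the text: a practice whose points fall in a higher level's range but which misses that level's “Must Pass” requirement is assigned the highest level whose requirements it does meet. The function names are hypothetical.

```python
def count_must_pass(must_pass_scores: list) -> int:
    """Count "Must Pass" elements scored at or above the 50 % minimum.

    Scores are fractions of each element's available points (0.0-1.0).
    """
    return sum(1 for s in must_pass_scores if s >= 0.5)


def ppc_pcmh_level(score: float, must_pass_met: int) -> int:
    """Map an overall score (0-100) and the number of Must Pass elements met
    (0-10) to an NCQA 2008 PPC-PCMH recognition level; 0 = not recognized."""
    if not (0 <= score <= 100 and 0 <= must_pass_met <= 10):
        raise ValueError("score must be 0-100 and must_pass_met 0-10")
    if score >= 75 and must_pass_met == 10:
        return 3
    if score >= 50 and must_pass_met == 10:
        return 2
    if score >= 25 and must_pass_met >= 5:
        return 1
    return 0


print(ppc_pcmh_level(82, 10))  # 3
print(ppc_pcmh_level(82, 8))   # 1: Level 3 points, but not 10/10 Must Pass (assumed fallback)
print(ppc_pcmh_level(40, 4))   # 0
```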

Analytical Approach

Descriptive statistics (means, standard deviations, medians, and minimum and maximum values) and univariate tests of significance were produced. To test baseline differences between study conditions, independent samples t-tests were conducted for normally distributed data, Mann–Whitney U tests for non-parametric data, and Pearson chi-square tests for proportional data. At 18 months, differences in PPC®-PCMH™ level distributions between study conditions were assessed with the Kolmogorov–Smirnov test. The unit of analysis for descriptive and inferential statistics was the practice.

Ordinal Logistic Regression

To test whether change in PPC®-PCMH™ level from baseline to 18 months differed significantly by condition, an ordinal logistic regression was conducted.28 Change in PPC®-PCMH™ level from baseline to 18 months was categorized as no increase, an increase of one or two levels, or an increase of three levels. The ordinal logistic regression28 predicted membership in one of these three outcome categories, controlling for practice size (number of providers in the practice, range one to ten). Because practice size was not a significant predictor, the model without size is reported.
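The outcome coding and the meaning of the condition effect can be illustrated with a proportional-odds (cumulative logit) model, the usual form of ordinal logistic regression. The sketch does not fit the study's model: the threshold values are hypothetical, the sign convention logit P(Y > j) = βx − θⱼ is one common choice, and β = ln 27 is set only to mirror the reported odds ratio. It shows that the same odds ratio applies to every cumulative split, which is why the effect is read as odds rather than probability.

```python
import math


def change_category(baseline_level: int, final_level: int) -> int:
    """Outcome coding: 0 = no increase, 1 = up one or two levels, 2 = up three levels."""
    gain = final_level - baseline_level
    if gain <= 0:
        return 0
    return 1 if gain <= 2 else 2


def expit(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def category_probs(x: int, beta: float, thresholds: tuple) -> list:
    """Proportional-odds model with logit P(Y > j | x) = beta * x - theta_j.

    x is 1 for intervention, 0 for control; thresholds must be increasing.
    """
    exceed = [expit(beta * x - t) for t in thresholds]  # P(Y > 0), P(Y > 1)
    return [1.0 - exceed[0], exceed[0] - exceed[1], exceed[1]]


def cumulative_odds_ratios(beta: float, thresholds: tuple) -> list:
    """Intervention-vs-control odds ratio for each split 'Y > j'."""
    ratios = []
    for j in range(len(thresholds)):
        p1 = sum(category_probs(1, beta, thresholds)[j + 1:])
        p0 = sum(category_probs(0, beta, thresholds)[j + 1:])
        ratios.append((p1 / (1 - p1)) / (p0 / (1 - p0)))
    return ratios


BETA = math.log(27)      # chosen to mirror the reported odds ratio
THRESHOLDS = (0.5, 2.0)  # hypothetical cut-points, for illustration only
print([round(r, 6) for r in cumulative_odds_ratios(BETA, THRESHOLDS)])  # [27.0, 27.0]
```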

Hierarchical Ordinal Piecewise Model

To assess whether change in PPC®-PCMH™ levels among intervention practices occurs rapidly during the first 6 months of support (baseline to 7 months), and whether change over the next 12 months (7 months to 18 months) differs from the initial change, a piecewise random intercept ordinal logistic regression analysis was conducted in SAS 9.2 PROC NLMIXED.29,30 The slope for the period from baseline to 7 months was assessed for significance, and the piecewise slopes for the two periods were compared using a chi-square difference test of the −2 log likelihoods of the model with one slope and the model with two slope segments.31
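A common way to code a two-segment (piecewise) time effect is to split time at a knot, here 7 months. The sketch below shows that coding and the time part of each practice's cumulative logit; it is an assumption that the study used this exact parameterization, and the function names and coefficient values are hypothetical. Setting both slopes equal recovers the single-slope model that the chi-square difference test compares against.

```python
KNOT = 7.0  # months; end of intensive practice redesign support


def piecewise_time(month: float, knot: float = KNOT) -> tuple:
    """Code time as two segments joined at the knot.

    seg1 rises from baseline to the knot, then stays flat;
    seg2 is zero until the knot, then rises.
    """
    return (min(month, knot), max(month - knot, 0.0))


def linear_predictor(month: float, b1: float, b2: float, u_i: float = 0.0) -> float:
    """Time part of the cumulative logit for practice i (u_i: random intercept).

    The ordinal thresholds enter separately; with b1 == b2 this collapses to
    the single-slope model.
    """
    seg1, seg2 = piecewise_time(month)
    return b1 * seg1 + b2 * seg2 + u_i


for m in (0, 7, 18):  # the three assessment points for intervention practices
    print(m, piecewise_time(m))
```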

Doubly Multivariate Analysis of Variance

To analyze change in medical homeness beyond that indicated by the PPC®-PCMH™ level achieved, we examined the individual standards. A doubly multivariate analysis of variance was conducted to examine overall change in the points earned for each of the nine PPC®-PCMH™ standards over time by condition, and to examine which standards differed significantly over time within each condition.32

RESULTS

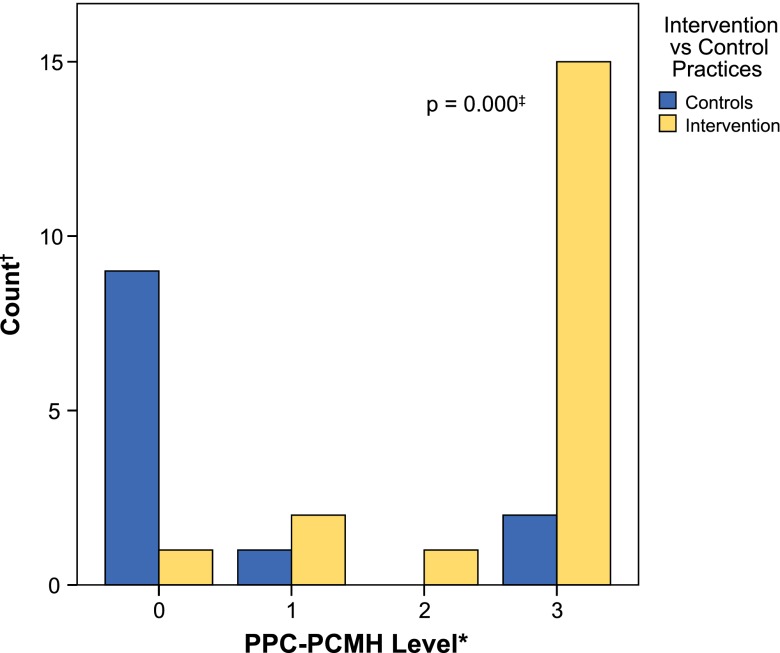

Figure 1 shows the enrollment and retention of practices by condition. Of 243 practices targeted for recruitment, many (162) declined to participate, citing lack of providers’ time, reluctance to collaborate with a payer-sponsored study, hesitation to participate in a randomized, controlled trial, and generally low awareness of the benefits of PCMH at the time of recruitment (2008). Fifteen percent (37/243) of targeted practices enrolled in the study, 74 % of the recruitment goal (37/50). Five practices (14 %) withdrew before providing study data, citing the project as too much work (2), lack of approval by the practice’s board (2), and lack of interest (1). Of the 31 practices available for analysis, two completed multisite PPC®-PCMH™ surveys (a two-location and a three-location practice); thus, 34 locations were included in the recognition-level analysis. An additional six practices (16 %) declined to release detailed PPC®-PCMH™ data to evaluators; thus, detailed standards analysis was conducted on 28/34 locations. Three controls did not resubmit PPC®-PCMH™ documentation at 18 months, reporting no changes; hence, their 18-month data were imputed from baseline values.

Figure 1.

Enrollment and retention of practices.

Practices were primarily small, were not federally qualified health centers, and were located in zip codes with modest household income (approximately $60,000/household), with an average of two primary care physicians and 400 EmblemHealth patients per practice. The two multisite intervention practices were of modest size; one was a two-location practice with four physicians and an average of 103 patients per provider; the other was a multi-specialty practice with three locations, six physicians and an average of 84 patients per provider. Table 1 shows that at baseline, intervention practices had significantly more outpatient visits for all procedure types (median = 3,037) than controls (median = 2,346) (p = 0.02).

Table 1.

Baseline Characteristics of Intervention Versus Control Practices (N = 32)

| Variables | Intervention (N = 18) | Controls (N = 14) | P-value |
|---|---|---|---|
| Physicians per Practice, Median (IQR) | 2 (9) | 2 (5) | 0.73 |
| Locations per Practice, Median (IQR) | 1 (2) | 1 (2) | 0.71 |
| EmblemHealth Patients per Practice, Mean (SD) | 407 (221) | 409 (185) | 0.78 |
| EmblemHealth Patients per Physician, Mean (SD) | 203 (89) | 246 (151) | 0.36 |
| Household Income of Practice Zip Code, Mean (SD) | $61,752 ($15,873) | $61,746 ($24,196) | 1.00 |
| Percentage of Practices that are Federally Qualified Health Centers, % (n) | 6 % (1) | 0 % (0) | 1.00 |
| Percentage of Practices with Electronic Health Record, % (n) | 50 % (9) | 50 % (7) | 1.00 |
| Emergency Room Visits for All Procedure Types | | | |
| Mean (SD) | 81 (43) | 68 (52) | |
| Median*, †, ‡ | 75 | 45 | 0.13 |
| Hospital Admission Visits for All Procedure Types | | | |
| Mean (SD) | 32 (17) | 29 (21) | |
| Median*, †, ‡ | 32 | 25 | 0.07 |
| Outpatient Visits for All Procedure Types | | | |
| Mean (SD) | 4,161 (2,685) | 2,750 (1,581) | |
| Median*, †, ‡ | 3,037 | 2,346 | 0.02 |
| Emergency Room Visits for Cardiovascular Procedure Types | | | |
| Mean (SD) | 11 (7) | 11 (9) | |
| Median*, †, ‡, § | 8 | 8 | 0.49 |
| Hospital Admission Visits for Cardiovascular Procedure Types | | | |
| Mean (SD) | 9 (5) | 9 (10) | |
| Median*, †, ‡, § | 9 | 6 | 0.12 |
| Outpatient Visits for Cardiovascular Procedure Types | | | |
| Mean (SD) | 571 (451) | 496 (259) | |
| Median*, †, ‡, § | 368 | 452 | 0.54 |

* Count of visits over baseline year, 2007

† Independent Samples Mann–Whitney U test for non-parametric data

‡ Patient level data aggregated to practice level and weights applied to adjust for volume of episodes at each practice

§ Limited to cardiovascular procedure type episodes only

Transition to PCMH

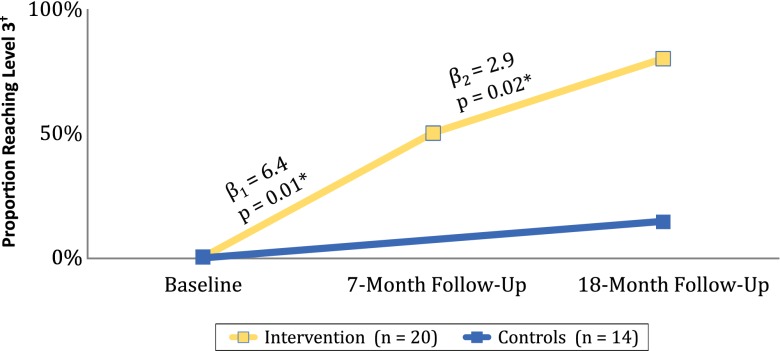

Examining PPC®-PCMH™ levels achieved shows that only 8.8 % of practices (15.0 % of intervention practices and 0.0 % of controls) achieved any level of recognition at baseline, with no significant difference between intervention practices and controls. From baseline to 18 months, among the 14 controls, 78.6 % (n = 11) remained unrecognized by NCQA, 7.1 % (n = 1) achieved Level 1 and 14.3 % (n = 2) achieved Level 3. Among the 20 intervention practices, only 5.0 % (n = 1) remained unrecognized, 10.0 % (n = 2) achieved Level 1, 5.0 % (n = 1) achieved Level 2 and 80.0 % (n = 16) achieved Level 3. Intervention practices had 27 times the odds of controls of moving into a higher category of PPC®-PCMH™ improvement, irrespective of practice size (odds ratio 27; 95 % CI 5–157; p < 0.001). Figure 2 shows the significantly different distribution of PPC®-PCMH™ levels between study conditions at 18 months (p < 0.001).

Figure 2.

Distribution of PPC®-PCMH™ levels at 18-month follow-up by study condition. * Zero values represent practices that did not achieve NCQA recognition. † Number of practices at each PPC®-PCMH™ level at 18-month follow-up. ‡ Kolmogorov–Smirnov (K–S) statistic p-value of significant differences in distributions.
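The Kolmogorov–Smirnov statistic for Figure 2 is the largest gap between the two conditions' cumulative distributions over the ordered levels. The sketch below recomputes only the statistic, using level counts reconstructed from the percentages reported in the text (20 intervention and 14 control locations); the p-value is not reproduced. `scipy.stats.ks_2samp` would give both the statistic and a p-value from raw samples, but the test assumes continuous data, so with only four ordered levels its p-value is generally conservative.

```python
from itertools import accumulate

LEVELS = (0, 1, 2, 3)  # 0 = not recognized by NCQA
# Locations at each level at 18 months, reconstructed from the reported percentages
INTERVENTION = {0: 1, 1: 2, 2: 1, 3: 16}  # n = 20
CONTROLS = {0: 11, 1: 1, 2: 0, 3: 2}      # n = 14


def ecdf(counts: dict) -> list:
    """Empirical cumulative distribution evaluated at each level."""
    n = sum(counts.values())
    return [c / n for c in accumulate(counts[level] for level in LEVELS)]


def ks_statistic(a: dict, b: dict) -> float:
    """Two-sample K-S statistic: largest gap between the two ECDFs."""
    return max(abs(fa - fb) for fa, fb in zip(ecdf(a), ecdf(b)))


print(round(ks_statistic(INTERVENTION, CONTROLS), 3))  # 0.736
```

The largest gap falls at level 0: about 79 % of control locations versus 5 % of intervention locations were unrecognized.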

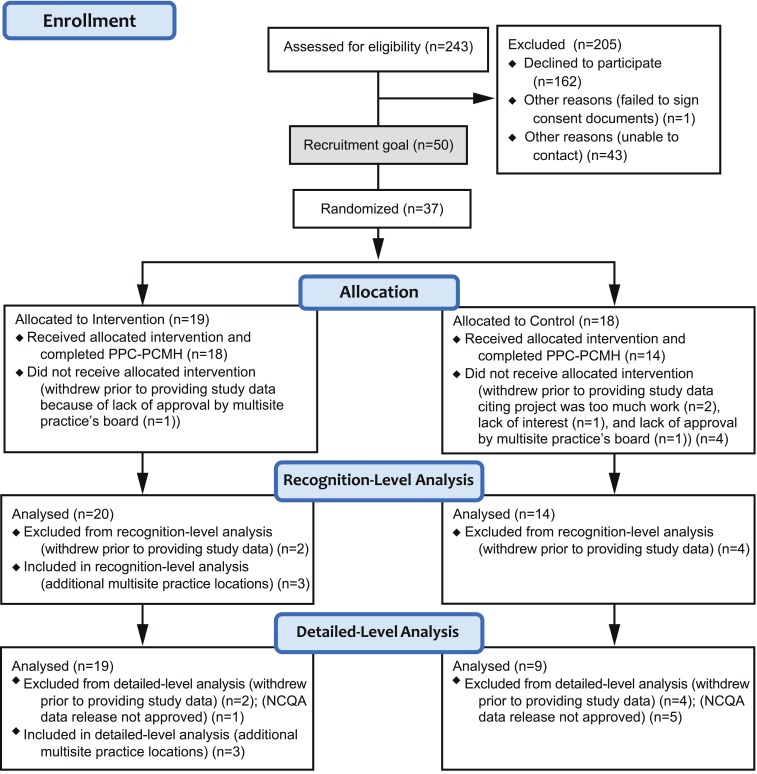

How Quickly Can Change Occur Among Intervention Practices?

Figure 3 depicts the observed proportions of practices reaching PPC®-PCMH™ Level 3 by 18-month follow-up, and compares the piecewise slopes from baseline to 7 months and from 7 months to 18 months among intervention practices only. The chi-square difference test shows that the model with two separate slopes fits better than the single-slope model (χ²(1) = 96.6 − 92.7 = 3.9, p < 0.05). Each slope segment is significant, and the two segments differ significantly from each other, indicating different rates of change in the two follow-up periods: change is twice as fast from baseline to 7 months as it is from 7 months to 18 months.

Figure 3.

Observed proportion of practices reaching PPC®-PCMH™ Level 3 by 18-month follow-up, and piecewise ordinal multilevel model predicted slope estimates and P-values. * Piecewise ordinal multilevel model coefficients and p-values for intervention practices. † PPC®-PCMH™ level.
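The chi-square difference test reported above can be checked from the two −2 log likelihoods. For one added parameter (the second slope), the difference follows a chi-square distribution with 1 degree of freedom, whose upper tail equals erfc(√(x/2)); this needs only Python's standard `math` module. The function name is hypothetical.

```python
import math


def lr_test_1df(neg2ll_restricted: float, neg2ll_full: float) -> tuple:
    """Chi-square difference (likelihood-ratio) test for one extra parameter.

    neg2ll_restricted: -2 log likelihood of the single-slope model.
    neg2ll_full: -2 log likelihood of the two-segment model.
    """
    chi2 = neg2ll_restricted - neg2ll_full
    # Upper tail of a chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2))
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


chi2, p = lr_test_1df(96.6, 92.7)
print(f"chi2(1) = {chi2:.1f}, p = {p:.3f}")  # chi2(1) = 3.9, p = 0.048
```

The result sits just under the conventional 0.05 cut-off (the 1-df critical value is about 3.84), consistent with the reported p < 0.05.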

Change Across PCMH Components as Measured by the PPC®-PCMH™

Table 2 shows that over 18 months, intervention practices improved significantly on eight of the nine PCMH standards. PPC-9 (utilizing advanced electronic communications) remained a barrier even to the intervention practices, with only 11–26 % of practices accruing any points within the standard. In contrast, controls made significant improvements on only the first three standards. Only 44 % of the control practices accrued any points across the first three standards, whereas 100 % of the intervention practices accrued points in these standards.

Table 2.

Mean Difference in PPC®-PCMH™ Standards Scores between Baseline and 18-Month Follow-up within Each Study Condition (N = 28)*

| Standard (maximum points) | Mean of difference† | SE of difference† | Differential change (95 % CI)‡, § | P-value§ |
|---|---|---|---|---|
| PPC 1: Access and Communication (9) | | | | |
| Intervention‖ | 5.59 | 0.66 | 3.15 (0.99, 5.30) | 0.006 |
| Controls‖ | 2.44 | 0.96 | | |
| PPC 2: Patient Tracking and Registry Functions (21) | | | | |
| Intervention‖ | 14.51 | 1.68 | 7.82 (2.32, 13.32) | 0.007 |
| Controls‖ | 6.69 | 2.44 | | |
| PPC 3: Care Management (20) | | | | |
| Intervention‖ | 14.08 | 1.54 | 8.22 (3.18, 13.26) | 0.002 |
| Controls‖ | 5.86 | 2.24 | | |
| PPC 4: Patient Self-Management Support (6) | | | | |
| Intervention‖ | 4.21 | 0.48 | 2.99 (1.42, 4.56) | <0.001 |
| Controls | 1.22 | 0.70 | | |
| PPC 5: Electronic Prescribing (8) | | | | |
| Intervention‖ | 3.83 | 0.64 | 2.52 (0.46, 4.59) | 0.02 |
| Controls | 1.31 | 0.92 | | |
| PPC 6: Test Tracking (13) | | | | |
| Intervention‖ | 7.47 | 1.21 | 4.20 (0.14, 8.25) | 0.04 |
| Controls | 3.28 | 1.76 | | |
| PPC 7: Referral Tracking (4) | | | | |
| Intervention‖ | 2.10 | 0.39 | 1.44 (0.13, 2.74) | 0.03 |
| Controls | 0.67 | 0.56 | | |
| PPC 8: Performance Reporting and Improvement (15) | | | | |
| Intervention‖ | 8.96 | 1.12 | 6.24 (2.48, 10.00) | 0.002 |
| Controls | 2.72 | 1.63 | | |
| PPC 9: Advanced Electronic Communications (4) | | | | |
| Intervention | 0.08 | 0.06 | −0.03 (−0.24, 0.17) | 0.75 |
| Controls | 0.11 | 0.09 | | |

Multivariate test of study condition × time: F(9, 18) = 2.79, p = 0.03.

* Intervention practices (n = 19), Control practices (n = 9).

† Final PPC minus Baseline PPC; positive values reflect improvement in PPC scores.

‡ Differential PPC®-PCMH™ standards score change from Baseline to 18-month follow-up between intervention and control practices.

§ Between-within effects using doubly Multivariate Analysis of Variance (MANOVA).

‖ Significant simple slope test within study condition (p < 0.05).
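As a consistency check on Table 2, the differential change column equals the intervention mean change minus the control mean change, up to rounding (PPC 6 and PPC 7 differ by 0.01), and the nine standards' maximum points sum to the 100-point PPC®-PCMH™ scale. The sketch reproduces this arithmetic only, using values copied from the table; it does not reproduce the doubly multivariate analysis of variance, its confidence intervals, or its p-values. The names `TABLE2` and `differential_change` are hypothetical.

```python
# standard: (maximum points, intervention mean diff, control mean diff, reported differential change)
TABLE2 = {
    "PPC 1": (9, 5.59, 2.44, 3.15),
    "PPC 2": (21, 14.51, 6.69, 7.82),
    "PPC 3": (20, 14.08, 5.86, 8.22),
    "PPC 4": (6, 4.21, 1.22, 2.99),
    "PPC 5": (8, 3.83, 1.31, 2.52),
    "PPC 6": (13, 7.47, 3.28, 4.20),
    "PPC 7": (4, 2.10, 0.67, 1.44),
    "PPC 8": (15, 8.96, 2.72, 6.24),
    "PPC 9": (4, 0.08, 0.11, -0.03),
}


def differential_change(row: tuple) -> float:
    """Intervention mean change minus control mean change for one standard."""
    _, intervention, controls, _ = row
    return intervention - controls


for standard, row in TABLE2.items():
    print(f"{standard}: {differential_change(row):+.2f} (reported {row[3]:+.2f})")

print("total points:", sum(row[0] for row in TABLE2.values()))  # 100
```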

DISCUSSION

This paper is the first to report on outcomes from a randomized, controlled trial of a demonstration operationalizing the key principles of the PCMH, including revised payment,2 among solo and small independent adult primary care practices. Thus, it provides important insights for the majority of primary care practices in the U.S.10 In contrast to previous reports,11–14 we found that practice size posed no disadvantage in making the transition to a PCMH over time. However, the key to success was clearly the provision of support in the form of revised payment, on-site practice redesign expertise, and on-site care management personnel. Solo and small practices without access to support were much less successful in making the transition. This paper also adds to the growing evidence base by using robust analytical methods as well as a detailed focus on the pace of change. Finally, it sheds light on where practices experience delays in passing standards across the PPC®-PCMH™, both with and without external support, including those that achieve the highest level of recognition.9,17

Despite the strengths of this study, there are limitations. First, because of the small number of practices recruited, some attrition, and missing data, the final sample has less than optimal power and may reflect some self-selection bias. Second, while our inclusion of solo and small practices increases the external validity of this study, the generalizability of our findings may be limited by the urban setting of our practices. Third, although our study was randomized, we could not achieve tight control in the context of routine practice, and both conditions were exposed to potential confounding factors. Anecdotal reports suggest that both conditions received some non-study assistance with electronic health record selection and implementation. However, we are unaware of any non-study, on-site care management assistance that was provided. Both conditions were exposed to the potentially behavior-changing effect of completing the PPC®-PCMH™, which points to where practices need to improve, and the controls’ participation fee could have been an incentive to change. Nevertheless, because these factors applied to both conditions, the magnitude of the difference in outcomes between the conditions and the consistency of change within each group suggest that none of them (electronic health record advice, completing the PPC®-PCMH™, incentive payments) accounts for the differential change we observed. Fourth, we are confident that the internal validity of this study is high, because: we are unaware of any unmeasured change-producing event experienced solely by the intervention practices; our primary outcome measure did not change during the study period; sites were not selected for extreme scores or past histories of successful change; there was no bias on the part of EmblemHealth in site selection; and there were no significant differences between conditions at baseline other than the higher volume of outpatient visits among intervention practices.

Given our findings that solo and small practices can become medical homes in a short time frame, planning and allocation of resources by policy makers and practice leaders can now be guided by a realistic time line. They can also see where, even with embedded support, practices need to put more effort to produce change, such as using electronic prescribing, instituting referral tracking, and using advanced electronic communications. Among unsupported practices, numerous areas need change, with the biggest lags in instituting patient self-management support and in performance reporting and improvement.

Our intervention was intensive and included embedding personnel at sites for months, raising the issue of how to pay for such support. Accountable Care Organizations, States, and others seeking to create broad-scale change might consider the public utility model.33 The public utility model would provide centrally held PCMH support services to enrolled practices on a contractual basis. Such a model would allow policy makers to compare the benefits of publicly-held supports and services versus a private payer model such as ours, or a distributed model where practices are independently responsible for developing and supporting their PCMH transition.

Acknowledgments

The Commonwealth Fund supported the independent evaluation of this demonstration by the Ethel Donaghue Center for Translating Research into Practice and Policy (TRIPP Center) at the University of Connecticut Health Center. The full trial protocol can be accessed by contacting EmblemHealth.

Conflict of Interest

Judith Fifield, PhD, reports having received two honoraria from Zimmer in the last 3 years. All other authors declare they have no conflict of interest.

REFERENCES

- 1.Gabbay RA, Bailit MH, Mauger DT, Wagner EH, Siminerio L. Multipayer patient-centered medical home implementation guided by the chronic care model. Joint Comm J Qual Patient Saf. 2011;37(6):265–273. doi: 10.1016/s1553-7250(11)37034-1. [DOI] [PubMed] [Google Scholar]

- 2.American Academy of Family Physicians, American Academy of Pediatrics, American College of Physicians, American Osteopathic Association. Joint Principles of the Patient-Centered Medical Home. 2007 Feb [cited 2012 July 17]. Available from: URL: www.aafp.org/pcmh/principles.pdf

- 3.Bitton A, Martin C, Landon B. A nationwide survey of patient centered medical home demonstration projects. J Gen Intern Med. 2010;25(6):584–592. doi: 10.1007/s11606-010-1262-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Steiner BD, Denham AC, Ashkin E, Newton WP, Wroth T, Dobson LA. Community care of North Carolina: improving care through community health networks. Ann Fam Med. 2008;6(4):361–367. doi: 10.1370/afm.866. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Steele GD, Haynes JA, Davis DE, et al. How Geisinger’s advanced medical home model argues the case for rapid-cycle innovation. Health Aff. 2010;29(11):2047–2053. doi: 10.1377/hlthaff.2010.0840. [DOI] [PubMed] [Google Scholar]

- 6.Reid RJ, Coleman K, Johnson EA, et al. The group health medical home at year two: cost savings, higher patient satisfaction, and less burnout for providers. Health Aff. 2010;29(5):835–843. doi: 10.1377/hlthaff.2010.0158. [DOI] [PubMed] [Google Scholar]

- 7.Reid RJ, Fishman PA, Onchee Y, et al. Patient-centered medical home demonstration: a prospective, quasi-experimental, before and after evaluation. Am J Manage Care. 2009;15(9):e71–e87. [PubMed] [Google Scholar]

- 8.Nutting PA, Miller WL, Crabtree BF, Jaen CR, Stewart EE, Stange KC. Initial lessons from the first national demonstration project on practice transformation to a patient-centered medical home. Ann Fam Med. 2009;7(3):254–260. doi: 10.1370/afm.1002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Barr MS. The need to test the patient-centered medical home. JAMA. 2008;300(7):834–835. doi: 10.1001/jama.300.7.834. [DOI] [PubMed] [Google Scholar]

- 10.Hing E, Burt CW. Office-based medical practices: methods and estimates from the national ambulatory medical care survey. Advanced data from vital and health statistics. Hyattsville (MD): National Center for Health Statistics; 2007. p. 383. [PubMed] [Google Scholar]

- 11.Rittenhouse DR, Casalino LP, Shortell SM, McClellan SR, Alexander JA, Drum ML. Small and medium-size physician practices use few patient-centered medical home processes. Health Aff. 2011;30(8):1575–1584. doi: 10.1377/hlthaff.2010.1210. [DOI] [PubMed] [Google Scholar]

- 12.Friedberg MW, Safran DG, Coltin KL, Dresser M, Schneider EC. Readiness for the patient-centered home: structural capabilities of Massachusetts primary care practices. J Gen Intern Med. 2009;24(2):162–169. doi: 10.1007/s11606-008-0856-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Wolfson D, Bernabeo E, Leas B, Sofaer S, Pawlson LG, Pilliterre D. Quality improvement in small office settings: an examination of successful practices. BMC Fam Pract. 2009;10(14):1–12. doi: 10.1186/1471-2296-10-14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Scholle SH, Saunders RC, Tirodkar MA, Torda P, Pawlson LG. Patient-centered medical homes in the United States. J Ambul Care Manage. 2011;34(1):20–32. doi: 10.1097/JAC.0b013e3181ff7080.

- 15.Stewart EE, Nutting PA, Crabtree BF, Stange KC, Miller WL, Jaen CR. Implementing the patient-centered medical home: observation and description of the national demonstration project. Ann Fam Med. 2010;8(Suppl 1):S21–S32. doi: 10.1370/afm.1111.

- 16.Paulus RA, Davis K, Steele GD. Continuous innovation in health care: implications of the Geisinger experience. Health Aff. 2008;27(5):1235–1245. doi: 10.1377/hlthaff.27.5.1235.

- 17.Berenson RA, Devers KJ, Burton RA. Will the Patient-Centered Medical Home Transform the Delivery of Health Care? Timely Analysis of Immediate Health Policy Issues 2011 Aug [cited 2012 July 16]. Princeton (NJ): Robert Wood Johnson Foundation. Available from: URL: http://www.rwjf.org/files/research/20110805quickstrikepaper.pdf

- 18.Peikes D, Zutshi A, Genevro J, Smith K, Parchman M, Meyers D. Early Evidence on the Patient-Centered Medical Home. Final Report. Rockville (MD): Agency for Healthcare Research and Quality; 2012 Feb. Contract No.: HHSA290200900019I/HHSA29032002T and HHSA290200900019I/HHSA29032005T. Sponsored by: Agency for Healthcare Research and Quality.

- 19.Jaén CR, Crabtree BF, Palmer RF, et al. Methods for evaluating practice change toward a patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):S9–S20. doi: 10.1370/afm.1108.

- 20.National Committee for Quality Assurance. New NCQA Standards Take Patient-Centered Medical Homes to the Next Level. NCQA 2011 Jan 28 [cited 2012 July 17]. Available from: URL: http://www.ncqa.org/tabid/1300/Default.aspx

- 21.Crabtree BF, Nutting PA, Miller WL, Stange KC, Stewart EE, Jaen CR. Summary of the national demonstration project and recommendations for the patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):S80–S90. doi: 10.1370/afm.1107.

- 22.Luxford K, Safran DG, Delbanco T. Promoting patient-centered care: a qualitative study of facilitators and barriers in healthcare organizations with a reputation for improving the patient experience. Int J Qual Health Care. 2011;23(5):510–515. doi: 10.1093/intqhc/mzr024.

- 23.Berenson R, Hammons T, Gans D, et al. A house is not a home: keeping patients at the center of practice redesign. Health Aff. 2008;27(5):1219–1230. doi: 10.1377/hlthaff.27.5.1219.

- 24.O’Malley AS, Peikes D, Ginsburg PB. Qualifying a Physician Practice as a Medical Home. Policy Perspectives 2008 Dec [cited 2012 July 17]; 1:1, 3-8. Available from: URL: http://hschange.org/CONTENT/1030/1030.pdf

- 25.American Academy of Family Physicians, American Academy of Pediatrics, American College of Physicians, American Osteopathic Association. Guidelines for Patient-Centered Medical Home (PCMH) Recognition and Accreditation Programs. 2011 Feb [cited 2012 July 17]. Available from: URL: http://www.aafp.org/online/etc/medialib/aafp_org/documents/membership/pcmh/pcmhtools/pcmhguidelines.Par.0001.File.tmp/GuidelinesPCMHRecognitionAccreditationPrograms.pdf

- 26.EmblemHealth. It’s Who We Are: Emblemhealth 2010 Annual Report. 2010 [cited 2012 July 17]. Available from: URL: http://www.emblemhealth.com/pdf/2010_annual_report.pdf

- 27.National Committee for Quality Assurance. Standards and Guidelines for Physician Practice Connections – Patient-Centered Medical Home Version. 2008 [cited 2012 July 17]. Available from: URL: http://www.usafp.org/PCMH-Files/NCQA-Files/PCMH_Overview_Apr01.pdf

- 28.SPSS [computer program]. Version 18.0. Somers (NY): IBM; 2011.

- 29.Hedeker D, Gibbons RD. Longitudinal data analysis. Hoboken (NJ): John Wiley & Sons, Inc; 2006. pp. 187–218.

- 30.Raudenbush SW, Bryk AS. Hierarchical linear models. Thousand Oaks (CA): Sage Publications, Inc; 2002. pp. 178–179.

- 31.McCoach DB, Kaniskan B. Using time-varying covariates in multilevel growth models. Front Psychol. 2010;1(17):1–12. doi: 10.3389/fpsyg.2010.00017.

- 32.Tabachnick BG, Fidell LS. Profile analysis: the multivariate approach to repeated measures. In: Hartman S, editor. Using multivariate statistics. Boston (MA): Pearson Education, Inc; 2007. pp. 311–374.

- 33.Villagra VG. Accelerating the adoption of medical homes in Connecticut: a chronic-care support system modeled after public utilities. Conn Med. 2012;76(3):173–178.