Abstract

Introduction

In 2001, a Cochrane review of mammography screening questioned whether screening reduces breast cancer mortality, and a more comprehensive review in Lancet, also in 2001, reported considerable overdiagnosis and overtreatment. This led to a heated debate and, recently, to a review of the evidence by UK experts intended to be independent.

Objective

To explore whether general medical and specialty journals differed in accepting the results and methods of three Cochrane reviews on mammography screening.

Methods

We identified articles citing the Lancet review from 2001 or updated versions of the Cochrane review (last search 20 April 2012). We explored which results were quoted, whether the methods and results were accepted (explicit agreement or quoted without caveats), differences between general and specialty journals, and change over time.

Results

We included 171 articles. The results for overdiagnosis were not quoted in 87% (148/171) of included articles, and the results for breast cancer mortality were not quoted in 53% (91/171). 11% (7/63) of articles in general medical journals accepted the results for overdiagnosis compared with 3% (3/108) in specialty journals (p=0.05). 14% (9/63) of articles in general medical journals accepted the methods of the review compared with 1% (1/108) in specialty journals (p=0.001). Specialty journals were more likely to explicitly reject the estimated effect on breast cancer mortality: 26% (28/108) compared with 8% (5/63) in general medical journals (p=0.02).

Conclusions

Articles in specialty journals were more likely to explicitly reject results from the Cochrane reviews, and less likely to accept the results and methods, than articles in general medical journals. Several specialty journals are published by interest groups and some authors have vested interests in mammography screening.

Keywords: Public Health, Qualitative Research

Introduction

In October 2001, the Nordic Cochrane Centre published a Cochrane review of mammography screening, which questioned whether screening reduces breast cancer mortality.1 In the same month, the Centre published a more comprehensive review in Lancet that also reported on the harms of screening and found considerable overdiagnosis and overtreatment (a 30% increase in the number of mastectomies and tumourectomies).2 This resulted in a heated debate, which is still ongoing.3 The Cochrane review was updated in 2006 to include overdiagnosis,4 and again in 2009.5

Recently, several studies have questioned whether screening is as beneficial as originally claimed,6–8 and confirmed that overdiagnosis is a major harm of breast cancer screening.9–11 The US Preventive Services Task Force published updated screening recommendations in November 2009 and asserted that the benefit is smaller than previously thought and that the harms include overdiagnosis and overtreatment, but it did not quantify these harms.12 The task force changed its previous recommendations and now recommends that women aged 40–49 years discuss with their physician whether breast screening is right for them, and it further recommends biennial screening instead of annual screening for all age groups.12 These recommendations were repeated in the 2011 Canadian guidelines for breast screening.13

Screening is likely to miss aggressive cancers because they grow fast, leaving little time to detect them in their preclinical phases.6 Further, the basic assumption that finding and treating early-stage disease will prevent late-stage or metastatic disease may not be correct, as breast cancer screening has not reduced the occurrence of large breast cancers14 or late-stage breast cancers,11 despite the large and sustained increases in early invasive cancers and ductal carcinoma in situ with screening.

A systematic review from 2009 showed that the rate of overdiagnosis in organised breast screening programmes was 52%, which means that one in three cancers diagnosed in a screened population is overdiagnosed.9 It is quite likely that many screen-detected cancers would have regressed spontaneously in the absence of screening.15 16
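The step from an overdiagnosis rate of 52% to "one in three" can be made explicit. Assuming the 52% is excess incidence expressed relative to the incidence expected without screening (our reading of how the one-in-three figure follows), the overdiagnosed share of all cancers diagnosed in a screened population is 0.52/1.52, or about 34%. A minimal Python sketch of that arithmetic; the function name is ours:

```python
def overdiagnosed_share(excess_rate: float) -> float:
    """Convert an overdiagnosis rate (assumed to be excess incidence relative
    to the incidence expected without screening) into the share of all
    cancers diagnosed in the screened population that are overdiagnosed."""
    expected = 1.0                      # incidence without screening, normalised to 1
    observed = expected + excess_rate   # incidence with screening
    return excess_rate / observed


share = overdiagnosed_share(0.52)
print(f"{share:.1%}")  # 34.2%, i.e. about one in three
```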

We explored how the first comprehensive systematic review on mammography screening ever performed, the one from 2001 published in Lancet,2 and the subsequent systematic Cochrane reviews from 2006 and 2009,4 5 have been cited from 2001 to April 2012. We investigated whether general medical journals and specialty journals differed regarding which results were mentioned and how overdiagnosis, overtreatment, breast cancer mortality, total mortality, and the methods of the reviews were described. Vested interests on the part of both journals and contributing authors may be more pronounced in specialty journals, and this may influence views on specific interventions, such as mammography screening.

Methods

We searched for articles quoting one of the three versions of the review2 4 5 (date of last search 20 April 2012). We used the ‘source titles function’ in the Institute for Scientific Information (ISI) Web of Knowledge to count the number of times each review had been cited in individual journals. We only included journals in which four or more articles had cited one of the three versions of the review. This criterion led to the exclusion of specialty journals of little relevance for our study, for example, Nephrology and Research in Gerontological Nursing. Articles written by authors affiliated with the Nordic Cochrane Centre were also excluded.

We could not include the 2001 Cochrane review1 because it was not indexed by the ISI Web of Knowledge, and even if it had been indexed, we would have excluded it. This version of the review1 is not comparable to the other three versions,2 4 5 as the editors of the Cochrane Breast Cancer Group had refused to publish the data on overdiagnosis and overtreatment in that version.

A journal was classified as a general medical journal if it did not preferentially publish papers from a particular medical specialty. A journal was classified as a specialty journal if it preferentially published articles from a particular medical specialty or topic.

When we rated how the papers cited the review, we looked for statements applicable to the following categories:

Overdiagnosis

Overtreatment

Breast cancer mortality

Total mortality

Methods used in the review

We rated the quoting articles’ general opinions about the results and methods of the review as accept, neutral, reject, unclear or not applicable, using the following definitions:

Accept: the authors explicitly agreed with the results or methods, or quoted the numerical results without comments.

Neutral: the results or methods were mentioned and the author presented arguments both for and against them.

Reject: the authors explicitly stated that the results or methods were flawed, wrong, or false, or only presented arguments against them. Reporting only a result from a favourable subgroup analysis was also classified as a rejection.

Unclear: the results or methods were mentioned, but it was not possible to tell whether the authors agreed with them, or the results were only mentioned qualitatively. Texts presenting several conflicting opinions were also classified as unclear.

Not applicable: the review was quoted for something other than its results or methods.

The articles quoting the review were assessed in relation to the five categories (overdiagnosis, overtreatment, breast cancer mortality, total mortality and methods) separately, and no overall assessment of the articles’ general opinion about the review was made.

Texts classified as not applicable for all five categories were reread to determine and note which topics were discussed.
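Because each article was rated separately for each category, the ratings form a mapping from (article, category) to a label rather than one overall verdict per article. The Python sketch below illustrates that structure, a table 2 style tally by journal group, and the check for texts that were not applicable for all five categories. The type names, field names and the three example articles are hypothetical and only illustrate the scheme described above.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field
from enum import Enum


class Rating(Enum):
    ACCEPT = "accept"
    NEUTRAL = "neutral"
    REJECT = "reject"
    UNCLEAR = "unclear"
    NOT_APPLICABLE = "not applicable"


CATEGORIES = (
    "overdiagnosis",
    "overtreatment",
    "breast cancer mortality",
    "total mortality",
    "methods",
)


@dataclass
class RatedArticle:
    journal_group: str  # "general" or "specialty"
    # One independent rating per category; missing categories are not applicable.
    ratings: dict[str, Rating] = field(default_factory=dict)

    def rating(self, category: str) -> Rating:
        return self.ratings.get(category, Rating.NOT_APPLICABLE)


def tally(articles: list[RatedArticle], category: str) -> dict[str, Counter]:
    """Count the ratings for one category separately for each journal group."""
    counts: dict[str, Counter] = {}
    for article in articles:
        counts.setdefault(article.journal_group, Counter())[article.rating(category)] += 1
    return counts


def all_not_applicable(article: RatedArticle) -> bool:
    """True if the text was not applicable for all five categories."""
    return all(article.rating(c) is Rating.NOT_APPLICABLE for c in CATEGORIES)


# Three made-up articles, for illustration only.
articles = [
    RatedArticle("general", {"overdiagnosis": Rating.ACCEPT, "methods": Rating.ACCEPT}),
    RatedArticle("specialty", {"breast cancer mortality": Rating.REJECT}),
    RatedArticle("specialty"),
]
print(tally(articles, "breast cancer mortality"))
print([all_not_applicable(a) for a in articles])  # [False, False, True]
```

No overall score is derived from the per-category ratings, mirroring the decision not to assess each article's general opinion of the review.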

Two researchers (KR and Andreas Brønden Petersen, see Acknowledgements) assessed the texts independently. Disagreements were settled by discussion.

To ensure blinded data extraction, an assistant not involved with data extraction (Mads Clausen, see Acknowledgements) identified the text sections citing one of the three review versions and copied them into a Microsoft Word document. Only this text was copied, so the two data extractors were unaware of the author and journal names, the time of publication and the title of the article. The copied text was converted to Times New Roman font, saved in a new document and labelled with a random number generated with the ‘Rand function’ in Microsoft Excel. The key linking the texts to the articles was not available to the data extractors until the assessments had been completed. The person responsible for copying the text made sure it did not contain any information that might reveal which of the three versions of the review had been cited. When the copied text contained more than one reference, the reference to the review was highlighted to make it clear which statements referred to the review.
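The labelling step can be sketched in Python as follows. The authors used Excel's RAND function; this sketch instead uses the standard library's `random` module to give each extract a unique random label, and keeps the label-to-article key in a separate mapping that would be withheld from the data extractors until rating is complete. The function name, identifiers and example texts are hypothetical.

```python
from __future__ import annotations

import random


def blind_extracts(extracts: dict[str, str], seed: int | None = None):
    """Give each copied extract a unique random label.

    extracts: hypothetical mapping of article identifier -> citing text.
    Returns (blinded, key):
      blinded maps label -> text only, so raters see no author, journal,
      year or title;
      key maps label -> article identifier and is withheld from raters
      until all assessments are complete.
    """
    rng = random.Random(seed)
    # Draw distinct six-digit labels, so no two extracts share a label.
    labels = rng.sample(range(100_000, 1_000_000), k=len(extracts))
    key = {label: article_id for label, article_id in zip(labels, extracts)}
    # Ordering by the random label hides the original (e.g. journal or date) order.
    blinded = {label: extracts[key[label]] for label in sorted(key)}
    return blinded, key


extracts = {
    "article-A": "Text citing the review in article A",
    "article-B": "Text citing the review in article B",
    "article-C": "Text citing the review in article C",
}
blinded, key = blind_extracts(extracts, seed=2012)
for label, text in blinded.items():
    print(label, text)  # raters work from this list only
```

Sampling without replacement guarantees unique labels, which a column of independent RAND values only does with high probability.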

All article types, including letters to the editor, were included and were classified as research papers, systematic reviews, editorials, letters, guidelines or narrative articles.

p Values were calculated using two-tailed Fisher's exact tests (http://www.swogstat.org/stat/public/fisher.htm).
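As a cross-check on the online calculator, a two-sided Fisher's exact test can be written with the Python standard library. This sketch uses the common convention (also used by R's `fisher.test`) of summing the probabilities of all tables with the same margins that are no more probable than the observed table; that the online calculator used the same two-sided convention is an assumption, and the helper names are ours. Applied to two comparisons reported in the Results, it gives p≈0.018 and p≈0.0002, in line with the reported p=0.02 and p=0.0002.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table

                 event   no event
        group 1    a        b
        group 2    c        d

    With all margins fixed, the count a follows a hypergeometric distribution.
    The p value sums the probabilities of every possible table that is no more
    probable than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    total = comb(n, col1)

    def prob(x: int) -> float:
        # P(group 1 has x events | margins)
        return comb(row1, x) * comb(n - row1, col1 - x) / total

    observed = prob(a)
    lowest, highest = max(0, col1 - (n - row1)), min(row1, col1)
    # A tiny relative tolerance keeps tables tied with the observed one.
    return sum(
        p for p in map(prob, range(lowest, highest + 1)) if p <= observed * (1 + 1e-7)
    )


def compare(x1: int, n1: int, x2: int, n2: int) -> float:
    """p value for x1 of n1 articles versus x2 of n2 articles."""
    return fisher_exact_two_sided(x1, n1 - x1, x2, n2 - x2)


# Acceptance of the breast cancer mortality results, 2002 (0/42) vs 2010 (3/16)
print(round(compare(0, 42, 3, 16), 4))  # 0.0181
# Same category, articles citing the 2001 version (0/140) vs the 2009 version (3/10)
print(round(compare(0, 140, 3, 10), 4))  # 0.0002
```

Because the test conditions on both margins, it remains valid for the small cells common in the comparisons below (for example, 0 versus 3 acceptances), where a χ² approximation would be unreliable.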

Results

In total, 523 articles cited one of the three versions of the review: 360 cited the 2001 Lancet review,2 123 the 2006 Cochrane review4 and 40 the 2009 Cochrane review.5 Three articles cited both the 2001 and the 2006 versions of the review; for these, we only used information related to the 2001 citation.

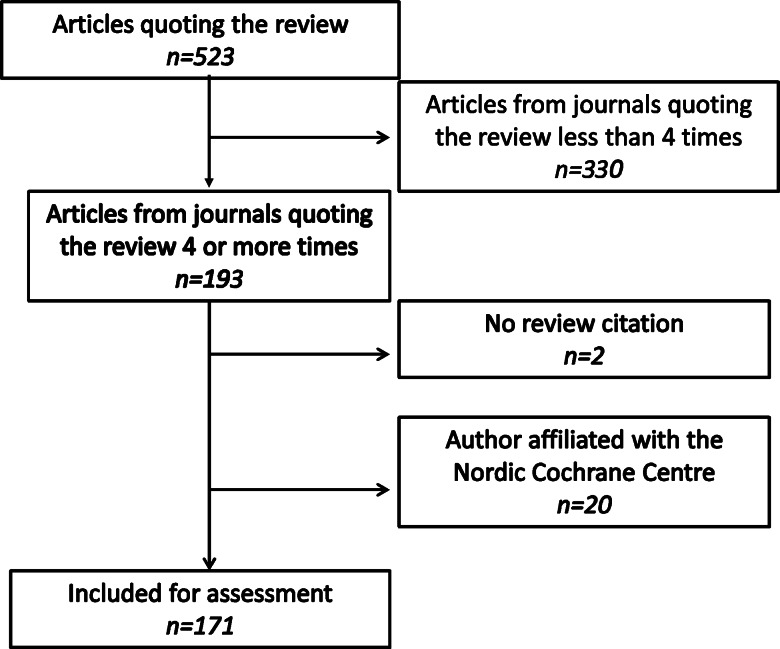

When we included only journals that had published at least four articles citing one or more of the three versions of the review, the search identified 151, 27 and 15 articles citing the 2001, 2006 and 2009 versions, respectively (193 in total, or 37% of all 523 citing articles). A flow chart is shown in figure 1.

Figure 1.

Flow diagram of article exclusion.

We excluded 22 additional articles, two because there was no reference to the review in the text, even though the review was listed as a reference,17 18 and 20 (10, 5 and 5 citing the 2001, 2006 and 2009 versions, respectively) because they had one or more authors affiliated with the Nordic Cochrane Centre.

Thus, 171 articles were included for assessment. In total, 63 articles (37%) were from general medical journals and 108 (63%) from specialty journals. A total of 80 (47%) were from European journals and 91 (53%) from North American journals. No journals from other regions contained at least four articles citing the review.
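The counts in the flow from 523 citing articles to 171 included articles can be checked with simple arithmetic, sketched here in Python. The text does not say which versions the two articles without an in-text reference cited; assigning one to the 2001 version and one to the 2006 version is an assumption, chosen because it matches the per-version denominators implied by the percentages in table 4 (for example, 4/21 = 19% for the 2006 version).

```python
# Counts reported in the Results, by review version.
cited = {"2001": 360, "2006": 123, "2009": 40}
in_eligible_journals = {"2001": 151, "2006": 27, "2009": 15}
nordic_cochrane_authors = {"2001": 10, "2006": 5, "2009": 5}
# ASSUMPTION: the text does not say which versions the two articles without an
# in-text reference cited; one 2001 and one 2006 is inferred from table 4.
no_in_text_reference = {"2001": 1, "2006": 1, "2009": 0}

assert sum(cited.values()) == 523
eligible_total = sum(in_eligible_journals.values())
print(eligible_total, f"{eligible_total / 523:.0%}")  # 193 37%

included = {
    v: in_eligible_journals[v] - nordic_cochrane_authors[v] - no_in_text_reference[v]
    for v in in_eligible_journals
}
print(included, sum(included.values()))  # {'2001': 140, '2006': 21, '2009': 10} 171
```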

The general medical journals included were Lancet (21 articles), BMJ (13 articles), Annals of Internal Medicine (13 articles), Journal of the American Medical Association (7 articles), New England Journal of Medicine (5 articles) and International Journal of Epidemiology (4 articles). The specialty journals included Journal of the National Cancer Institute (13 articles), Cancer (13 articles), American Journal of Roentgenology (7 articles) and 15 others (see box 1). Most of the included articles were either research papers (n=63, 37%) or narrative articles (n=44, 26%; table 1).

Box 1. The specialty journals included in this study (number of included articles in brackets).

Specialty journals included

Journal of the National Cancer Institute (13)

Cancer (13)

European Journal of Cancer (7)

British Journal of Cancer (7)

American Journal of Roentgenology (7)

Cancer Causes and Control (6)

Annals of Oncology (6)

European Journal of Surgical Oncology (6)

Journal of Medical Screening (5)

Cancer Epidemiology, Biomarkers and Prevention (5)

CA: a Cancer Journal for Clinicians (5)

Journal of Clinical Oncology (5)

Radiologic Clinics of North America (5)

Oncologist (4)

Breast Cancer Research and Treatment (4)

Breast (4)

Radiology (3)

Journal of Surgical Oncology (3)

Table 1.

The article types included in this study

| Journal group | Research | Letter | Editorial | Guideline | Narrative | Review |
|---|---|---|---|---|---|---|
| General EU | 8 | 17 | 1 | 0 | 12 | 1 |
| General NA | 8 | 5 | 4 | 1 | 5 | 2 |
| Special EU | 27 | 4 | 4 | 0 | 6 | 0 |
| Special NA | 20 | 4 | 14 | 2 | 21 | 5 |
| Total | 63 | 30 | 23 | 3 | 44 | 8 |

EU, European; NA, North American.

The text of 32 of the 171 included articles (19%) was rated as not applicable for all five categories (overdiagnosis, overtreatment, breast cancer mortality, total mortality and methods). Of these 32 articles, 15 discussed the controversy that followed publication of the first review without specifically mentioning any of the categories. Other subjects discussed were screening of women under the age of 50 (two articles) and benefits of breast cancer screening other than those in our categories (two articles; see online supplementary appendix 1 for a full list of topics).

The review’s results for overdiagnosis were not quoted in 87% (148/171) of the included articles, and the results for breast cancer mortality were not quoted in 53% (91/171).

Articles in general medical journals were more likely than articles in specialty journals to accept the results or methods of the reviews. For example, the overdiagnosis results were classified as accepted in 11% (7/63) of articles in general medical journals but in only 3% (3/108) of articles in specialty journals (p=0.05), and the methods were accepted in 14% (9/63) of articles in general medical journals but in only 1% (1/108) of articles in specialty journals (p=0.001). Articles in specialty journals were also more likely to reject the results for breast cancer mortality: 26% (28/108) compared with 8% (5/63) in general medical journals (p=0.02). The differences between general medical and specialty journals in the proportions rejecting overdiagnosis, overtreatment, total mortality and methods were small (table 2).

Table 2.

General medical journals (n=63) compared with specialty journals (n=108). Column percentages in brackets

| | Overdiagnosis | | | Overtreatment | | | Breast cancer mortality | | | Total mortality | | | Methods | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | General | Special | p Value | General | Special | p Value | General | Special | p Value | General | Special | p Value | General | Special | p Value |
| Accept | 7 (11%) | 3 (3%) | 0.05 | 7 (11%) | 1 (1%) | 0.01 | 4 (6%) | 2 (2%) | 0.20 | 6 (10%) | 0 | <0.01 | 9 (14%) | 1 (1%) | <0.01 |
| Neutral | 1 (2%) | 0 | 0.37 | 2 (3%) | 0 | 0.14 | 4 (6%) | 3 (3%) | 0.43 | 1 (2%) | 0 | 0.37 | 3 (5%) | 1 (1%) | 0.15 |
| Reject | 0 | 0 | 1.00 | 1 (2%) | 4 (4%) | 0.65 | 5 (8%) | 28 (26%) | 0.02 | 3 (5%) | 6 (6%) | 1.00 | 10 (16%) | 19 (17%) | 1.00 |
| Unclear | 2 (3%) | 10 (9%) | 0.22 | 4 (6%) | 11 (10%) | 0.58 | 12 (19%) | 23 (21%) | 0.85 | 9 (14%) | 19 (18%) | 0.68 | 10 (16%) | 13 (12%) | 0.65 |
| Not applicable | 53 (84%) | 95 (88%) | 0.91 | 49 (78%) | 92 (85%) | 0.72 | 38 (60%) | 53 (49%) | 0.43 | 44 (70%) | 83 (77%) | 0.72 | 31 (49%) | 74 (69%) | 0.24 |

Two-tailed p values are used.

Articles in European and North American journals were about equally likely to reject or accept the review's methods or results (data not shown).

The number of citations of the three versions of the review varied considerably over time (table 3). The fewest citations were in 2012 and 2006, with only 1 and 6, respectively; the 2012 figure covers only part of the year, as the last search was on 20 April 2012. The most citations were in 2002 (42). There were no clear trends over time in the number of articles accepting or rejecting the methods and conclusions of the reviews, although the breast cancer mortality results may have received greater acceptance in recent years. For example, none of the 42 articles from 2002 accepted the breast cancer mortality results, whereas 19% (3/16) of those from 2010 explicitly accepted them (p=0.02; data not shown).

Table 3.

Number of citations of one of the three reviews per year

| Year | Number of citations |
|---|---|
| 2001 | 8 |
| 2002 | 42 |
| 2003 | 28 |
| 2004 | 22 |
| 2005 | 13 |
| 2006 | 6 |
| 2007 | 9 |
| 2008 | 10 |
| 2009 | 10 |
| 2010 | 15 |
| 2011 | 7 |
| 2012 | 1 |

The 2001 version of the review had more categories rejected and fewer categories accepted than the 2006 and 2009 versions. For example, 30% (3/10) of articles citing the 2009 version accepted its results for breast cancer mortality, compared with 0% (0/140) of those citing the 2001 version (p=0.0002; table 4).

Table 4.

Comparison of the three versions of the review in terms of accepting or rejecting the five categories

| | Overdiagnosis | | | Overtreatment | | | Breast cancer mortality | | | Total mortality | | | Methods | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Comparing 2001–2006 | 2001 | 2006 | p | 2001 | 2006 | p | 2001 | 2006 | p | 2001 | 2006 | p | 2001 | 2006 | p |
| Accepted | 4 (3%) | 4 (19%) | 0.02 | 5 (4%) | 2 (10%) | 0.25 | 0 | 3 (14%) | <0.01 | 4 (3%) | 0 | 1.00 | 8 (6%) | 1 (5%) | 1.00 |
| Rejected | 0 | 0 | 1.00 | 4 (3%) | 0 | 1.00 | 29 (21%) | 2 (10%) | 0.38 | 9 (6%) | 0 | 0.60 | 29 (21%) | 0 | 0.05 |
| Comparing 2001–2009 | 2001 | 2009 | p | 2001 | 2009 | p | 2001 | 2009 | p | 2001 | 2009 | p | 2001 | 2009 | p |
| Accepted | 4 (3%) | 3 (30%) | 0.01 | 5 (4%) | 2 (20%) | 0.09 | 0 | 3 (30%) | <0.01 | 4 (3%) | 2 (20%) | 0.07 | 8 (6%) | 1 (10%) | 0.48 |
| Rejected | 0 | 0 | 1.00 | 4 (3%) | 1 (10%) | 0.31 | 29 (21%) | 2 (20%) | 1.00 | 9 (6%) | 0 | 1.00 | 29 (21%) | 0 | 0.37 |
| Comparing 2006–2009 | 2006 | 2009 | p | 2006 | 2009 | p | 2006 | 2009 | p | 2006 | 2009 | p | 2006 | 2009 | p |
| Accepted | 4 (19%) | 3 (30%) | 0.67 | 2 (10%) | 2 (20%) | 0.59 | 3 (14%) | 3 (30%) | 0.64 | 0 | 2 (20%) | 0.13 | 1 (5%) | 1 (10%) | 1.00 |
| Rejected | 0 | 0 | 1.00 | 0 | 1 (10%) | 0.34 | 2 (10%) | 2 (20%) | 0.59 | 0 | 0 | 1.00 | 0 | 0 | 1.00 |

Percentages in brackets are calculated from the total number of included articles citing that version of the review: 140 for the 2001 version, 21 for the 2006 version and 10 for the 2009 version. Two-tailed p values are shown.

Discussion

Although we deliberately reduced the sample size by requiring at least four citing articles per included journal, we had enough articles quoting the review for our comparisons.

Articles in specialty journals were more likely to reject the estimate of the effect of screening on breast cancer mortality than articles in the six general medical journals we included.

Articles in general medical journals were also more likely than articles in specialty journals to accept the results or methods in all five categories we assessed, and the difference was statistically significant for four of them (overdiagnosis, overtreatment, total mortality and methods) but not for breast cancer mortality (table 2).

We have previously found that scientific articles on breast screening tend to emphasise the major benefits of mammography screening over its major harms, and that overdiagnosis was more often downplayed or rejected in articles written by authors affiliated with screening by specialty or funding than in articles by authors unrelated to screening.19 Recommendations in guidelines for breast screening are also influenced by the authors’ medical specialty.20

The difference we found between general medical and specialty journals could be explained by conflicts of interest, which are likely to be more prevalent in specialty journals owned by political interest groups such as the American Cancer Society or by medical societies whose members' income may depend on the intervention. All six general medical journals, but only 22% (4/18) of the specialty journals, follow the International Committee of Medical Journal Editors’ (ICMJE) Uniform Requirements for Manuscripts Submitted to Biomedical Journals.21 Even when journals have policies on reporting conflicts of interest, the conflicts reported are not always reliable.22

All the general medical journals included are members of the World Association of Medical Editors (WAME), but only 22% (4/18) of the specialty journals included are. WAME aims to improve editorial standards and, among other things, to ensure a balanced debate on controversial issues.23 WAME membership promotes transparency through its guidance on conflicts of interest, and it also reminds editors to ensure that their journals cover both sides of a debate.

Development over time

The results and conclusions on breast cancer mortality and overdiagnosis were more often accepted in 2010 than in any other year (data not shown). This may reflect that criticism of breast screening is becoming more widespread. The ongoing independent review of the National Health Service (NHS) Breast Screening Programme, announced in October 2011 by Mike Richards, the UK National Clinical Director for Cancer and End of Life Care, Department of Health, is a further indication of this development.24 Also, the US Preventive Services Task Force changed its recommendations for breast screening in 2009.12 Although our data did not show strong time trends, we believe that these developments indicate a growing acceptance of the results and conclusions of our systematic review. In support of this, the 2009 version of the Cochrane review has received more approval than disapproval; for example, 30% (3/10) of articles citing the 2009 version accepted its results for breast cancer mortality, compared with 0% (0/140) of those citing the 2001 version.25–31 The US Preventive Services Task Force was heavily criticised after the publication of its new recommendations in 2009,29 32 but the criticism came from people with vested interests, and in 2011 the independent Canadian Task Force13 supported the conclusions of the US Preventive Services Task Force and of the 2009 Cochrane review.5

The 2001 review published in Lancet was by far the most cited of the three reviews. This is not simply because it is older: it was published 5 years before the 2006 Cochrane review, but the vast majority of its citations came within the first year after publication. It was unique at the time, as it questioned whether mammography screening was effective based on a thorough quality assessment of all the randomised controlled trials, and it was the first systematic review to quantify overdiagnosis.

Limitations

A minority of the included articles (19%, 32/171) did not refer to any of our five specified categories. In nearly half of these cases (47%, 15/32), the article referred only to the debate that followed the first review,33 and not to its results or methods. Other texts dealt with topics such as false positives or screening of women under the age of 50 years, and some simply stated that mammography screening was beneficial without further specification. For each of our categories, the most frequent classification was not applicable, in articles in both general medical and specialty journals and in both European and North American journals. A text typically dealt with only one or two of our categories, for example overdiagnosis, and did not mention overtreatment or the other categories.

None of the articles rejected the overdiagnosis results (0 of 171 articles), which could be because most of them (148/171) did not mention the issue at all. This was also the case in 76% of scientific articles on breast screening in a previous study by Jørgensen et al.19

Our definition of rejection required the author to state explicitly that the review's estimate was flawed, wrong or false, or to argue against it in some way. With this strict definition, we did not capture authors who have consistently stated over the years, in articles other than those we included, that they do not believe overdiagnosis is a problem, and we did not present their views on the subject.

Numerous articles were classified as unclear for one or more of our categories. The texts in question did not allow an interpretation in any direction, and we did not rate articles as accepting or rejecting the review’s results and methods unless it was perfectly clear what the authors meant. This reflects that authors often do not present clear opinions on the interventions they discuss. An additional explanation for the many articles rated as unclear could be that we did not assess the entire article, and arguments could have been presented elsewhere in the text.

Letters were included in this study, which could explain why some of the articles were classified as not applicable in all five categories. Specialists who read and write letters to their own specialty journals might be more likely to react negatively towards the review because of conflicts of interest.19 Specialists with a connection to mammography screening also reply to articles in general medical journals when these concern mammography screening. Therefore, it is quite likely that the difference in accepting and rejecting the results and methods between specialists involved with screening programmes and doctors not involved in breast cancer screening is greater than the difference we found in this study.

Conclusion

Articles in specialty journals were less approving of the results and methods of the systematic review of breast screening than those in general medical journals. This may be explained by conflicts of interest, as several specialty journals were published by groups with vested interests in breast screening, and several articles had authors with vested interests.

Supplementary Material


Acknowledgments

We would like to thank Andreas Brønden Petersen and Mads Clausen for assisting us in preparing the text and extracting data.

Footnotes

Contributors: KR participated in the design of the study, carried out data analysis, performed statistical analysis and drafted the manuscript. KJJ and PCG both participated in the design of the study and helped to draft the manuscript. All authors read and approved the final manuscript.

Competing interests: None.

Open Access: This is an Open Access article distributed in accordance with the Creative Commons Attribution Non Commercial (CC BY-NC 3.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited and the use is non-commercial. See: http://creativecommons.org/licenses/by-nc/3.0/

References

- 1. Olsen O, Gøtzsche PC. Screening for breast cancer with mammography. Cochrane Database Syst Rev 2001;CD001877.

- 2. Olsen O, Gøtzsche PC. Cochrane review on screening for breast cancer with mammography. Lancet 2001;358:1340–2.

- 3. Gøtzsche PC. Mammography screening: truth, lies and controversy. London: Radcliffe Publishing, 2012.

- 4. Gøtzsche PC, Nielsen M. Screening for breast cancer with mammography. Cochrane Database Syst Rev 2006;CD001877.

- 5. Gøtzsche PC, Nielsen M. Screening for breast cancer with mammography. Cochrane Database Syst Rev 2009;CD001877.

- 6. Esserman L, Shieh Y, Thompson I. Rethinking screening for breast cancer and prostate cancer. JAMA 2009;302:1685–92.

- 7. Jørgensen KJ, Zahl PH, Gøtzsche PC. Breast cancer mortality in organised mammography screening in Denmark: comparative study. BMJ 2010;340:c1241.

- 8. Autier P, Boniol M, Gavin A, et al. Breast cancer mortality in neighbouring European countries with different levels of screening but similar access to treatment: trend analysis of WHO mortality database. BMJ 2011;343:d4411.

- 9. Jørgensen KJ, Gøtzsche PC. Overdiagnosis in publicly organised mammography screening programmes: systematic review of incidence trends. BMJ 2009;339:b2587.

- 10. Morrell S, Barratt A, Irwig L, et al. Estimates of overdiagnosis of invasive breast cancer associated with screening mammography. Cancer Causes Control 2010;21:275–82.

- 11. Kalager M, Adami HO, Bretthauer M, et al. Overdiagnosis of invasive breast cancer due to mammography screening: results from the Norwegian screening program. Ann Intern Med 2012;156:491–9.

- 12. Nelson HD, Tyne K, Naik A, et al. Screening for breast cancer: an update for the U.S. Preventive Services Task Force. Ann Intern Med 2009;151:727–37.

- 13. Canadian Task Force on Preventive Health Care; Tonelli M, Gorber SC, et al. Recommendations on screening for breast cancer in average-risk women aged 40–74 years. CMAJ 2011;183:1991–2001.

- 14. Autier P, Boniol M, Middleton R, et al. Advanced breast cancer incidence following population-based mammographic screening. Ann Oncol 2011;22:1726–35.

- 15. Zahl PH, Maehlen J, Welch HG. The natural history of invasive breast cancers detected by screening mammography. Arch Intern Med 2008;168:2311–16.

- 16. Zahl PH, Gøtzsche PC, Mæhlen J. Natural history of breast cancers detected in the Swedish mammography screening programme: a cohort study. Lancet Oncol 2011;12:1118–24.

- 17. Añorbe E, Aisa P. Screening mammography. Radiology 2003;227:903–4.

- 18. Edwards QT, Li AX, Pike MC, et al. Ethnic differences in the use of regular mammography: the multiethnic cohort. Breast Cancer Res Treat 2009;115:163–70.

- 19. Jørgensen KJ, Klahn A, Gøtzsche PC. Are benefits and harms in mammography screening given equal attention in scientific articles? A cross-sectional study. BMC Med 2007;5:12.

- 20. Norris SL, Burda BU, Holmer HK, et al. Author's specialty and conflicts of interest contribute to conflicting guidelines for screening mammography. J Clin Epidemiol 2012;65:725–33.

- 21. International Committee of Medical Journal Editors, 2012. http://www.icmje.org/journals.html#I (accessed 24 Apr 2013).

- 22. Riva C, Biollaz J, Foucras P, et al. Effect of population-based screening on breast cancer mortality. Lancet 2012;379:1296.

- 23. World Association of Medical Editors, 2013. http://www.wame.org/about (accessed 24 Apr 2013).

- 24. Richards M. An independent review is under way. BMJ 2011;343:d6843.

- 25. Woloshin S, Schwartz LM. The benefits and harms of mammography screening: understanding the trade-offs. JAMA 2010;303:164–5.

- 26. Nelson HD, Naik A, Humphrey L, et al. The background review for the USPSTF recommendation on screening for breast cancer. Ann Intern Med 2010;152:538–9.

- 27. Dickersin K, Tovey D, Wilcken N, et al. The background review for the USPSTF recommendation on screening for breast cancer. Ann Intern Med 2010;152:537.

- 28. Sherman ME, Howatt W, Blows FM, et al. Molecular pathology in epidemiologic studies: a primer on key considerations. Cancer Epidemiol Biomarkers Prev 2010;19:966–72.

- 29. The Annals of Internal Medicine Editors. When evidence collides with anecdote, politics, and emotion: breast cancer screening. Ann Intern Med 2010;152:531–2.

- 30. McCartney M. Selling health to the public. BMJ 2010;341:c6639.

- 31. Lyratzopoulos G, Barbiere JM, Rachet B, et al. Changes over time in socioeconomic inequalities in breast and rectal cancer survival in England and Wales during a 32-year period (1973–2004): the potential role of health care. Ann Oncol 2011;22:1661–6.

- 32. Stein R. Federal panel recommends reducing number of mammograms. The Washington Post, 17 November 2009.

- 33. Horton R. Screening mammography—an overview revisited. Lancet 2001;358:1284–5.
