Abstract

Sign languages display remarkable crosslinguistic consistencies in the use of handshapes. In particular, handshapes used in classifier predicates display a consistent pattern in finger complexity: classifier handshapes representing objects display more finger complexity than those representing how objects are handled. Here we explore the conditions under which this morphophonological phenomenon arises. In Study 1, we ask whether hearing individuals in Italy and the United States, asked to communicate using only their hands, show the same pattern of finger complexity found in the classifier handshapes of two sign languages: Italian Sign Language (LIS) and American Sign Language (ASL). We find that they do not: gesturers display more finger complexity in handling handshapes than in object handshapes. The morphophonological pattern found in conventional sign languages is therefore not a codified version of the pattern invented by hearing individuals on the spot. In Study 2, we ask whether continued use of gesture as a primary communication system results in a pattern that is more similar to the morphophonological pattern found in conventional sign languages or to the pattern found in gesturers. Homesigners have not acquired a signed or spoken language and instead use a self-generated gesture system to communicate with their hearing family members and friends. We find that homesigners pattern more like signers than like gesturers: their finger complexity in object handshapes is higher than that of gesturers (indeed as high as signers); and their finger complexity in handling handshapes is lower than that of gesturers (but not quite as low as signers). Generally, our findings indicate two markers of the phonologization of handshape in sign languages: increasing finger complexity in object handshapes, and decreasing finger complexity in handling handshapes. 
These first indicators of phonology appear to be present in individuals developing a gesture system without benefit of a linguistic community. Finally, we propose that iconicity, morphology and phonology each play an important role in the system of sign language classifiers to create the earliest markers of phonology at the morphophonological interface.

Keywords: Sign language, phonology, morphology, homesign, gesture, language evolution, historical change, handshape, classifier predicates

1. INTRODUCTION

This paper has two goals. The goal of Study 1 is to establish clear descriptions, using an experimental probe, of the handshapes that two target groups use when communicating with their hands. We compare the classifier predicates produced by users of conventional sign languages with the gestures produced by hearing people asked to communicate without using their voices. We then use these descriptions in Study 2 to frame an analysis of gestures produced by a third group: deaf adults whose hearing losses have prevented them from learning a spoken language and who have not been exposed to a sign language. These deaf individuals, called homesigners, use gesture to communicate. The goal of Study 2 is therefore to determine whether the gestures produced by homesigners more closely resemble the classifier predicates produced by signers or the gestures produced by gesturers. These observations will then allow us to frame some tentative hypotheses about the historical development of phonology in sign language.

Not surprisingly, hearing individuals who are asked to create gestures on the spot produce handshapes that are transparently related to the objects they represent and, in this sense, are iconic (Singleton et al. 1993, Goldin-Meadow et al. 1996). In addition, in order for a homesigner’s handshapes to be intelligible to friends and acquaintances who do not know the system, they too need to be iconic (although they are morphologically structured within this iconicity, Goldin-Meadow et al. 1995; Goldin-Meadow et al. 2007). In order to examine comparable handshapes in gesturers, homesigners, and signers, we focus on a heavily iconic component of sign languages—the classifier system (Padden 1988, Brentari & Padden 2001, Aronoff et al. 2003, 2005).

The handshapes investigated here are part of the verbal complex in sign languages and are associated with the class of verbs known as classifier predicates.1 Sign language classifiers are closest in function to verbal classifiers in spoken languages. The handshape is an affix on the verb that “classifies” an argument of the verb as one of several types (e.g., a vehicle, an animal, a round object). In (1), from the Papuan language Waris, which is a verbal classifier language (Brown 1981, p. 95ff.; cf. Benedicto & Brentari 2004), the classifier “put” encodes the fact that the direct object of the verb is a round object. In (2), from ASL, also a verbal classifier language, the classifier “1-CLz” encodes the fact that the direct object of the verb is a flat object. Despite the apparent iconicity of the handshapes found in ASL classifiers, these handshapes have morphological structure: they are discrete, meaningful, productive forms that are stable across related contexts (Supalla 1982, Emmorey & Herzig 2003, Eccarius 2008).

(1) a verbal classifier in Waris

sa ka-m put-ra-ho-o

coconut 1sg-dat class[round]-get-benefactive-imperative

‘Give me a coconut.’

(2) a verbal classifier in ASL

BOOK location-1(signer) 1-CLz-GIVE-sweeping arc movement

BOOK 1sg handle-flat-object-give-3pl

‘I give them the book(s).’

In sign languages, classifier handshapes that represent objects, i.e., Object Classifiers (Object-CLs), show higher finger complexity than handshapes that represent how objects are manipulated, i.e., Handling Classifiers (Handling-CLs). The question we address here is: How did this conventionalized pattern of finger complexity in sign language phonology arise? One possibility is that this pattern is a natural way of gesturing about objects and how they are handled. The high finger complexity in Object-CLs might be an outgrowth of the fact that objects have many different, sometimes complex, shapes that require a wide variety of finger groups to represent them. For example, it is plausible that a pen might be represented by one finger B because it is thin, and a ruler by two fingers T because it is slightly wider (3ai-3aii). In contrast, in terms of the fingers used, there are fewer, and less complex, ways that these objects would be handled; the pen and the ruler might both be placed on a table using a “power grip” handshape 3 (3bi-3bii). Moreover, the number of fingers used is rarely even mentioned in taxonomies of handgrips. Indeed, Napier (1956) describes a “power grip” and a “precision grip” that both use all of the fingers (the whole hand). Other options for finger selection, such as the one in 3biii that uses only the thumb and index finger, are not discussed. Following this reasoning, the handshapes required to reflect those manipulations might be expected to be simpler and less varied. In Study 1, we address the hypothesis that the conventionalized pattern of finger complexity found in sign language phonology reflects natural ways of gesturing about objects and how they are handled. We do so by analyzing the handshapes that native signers use when describing a set of vignettes, and comparing them to the handshapes used by hearing speakers who are asked to describe the vignettes using only their hands and no speech. If iconicity is the only factor motivating this pattern in sign languages, gesturers should show it as well.

Alternatively, the conventionalized finger complexity pattern found across many sign languages might be a product of two types of linguistic reorganization: one related to the general stability and productivity of form-meaning pairings (morphology), and one related to the specific patterns of features found in the handshape forms (phonology). Although the system may have had iconic roots, changes over time could have moved the system away from those roots. If so, the handshapes that signers and non-signing gesturers use in describing the vignettes might not resemble each other at all. In this event, we will have to look elsewhere for precursors to the sign language pattern, which we do in Study 2.

We begin with a description of the role that handshape plays in the grammar of sign languages, particularly in classifier predicates, and the phenomenon under investigation. The next three sections review the evidence that classifier handshapes, despite being iconic, have morphological, syntactic, and phonological properties.

1.1. Morphology: Two types of handshapes

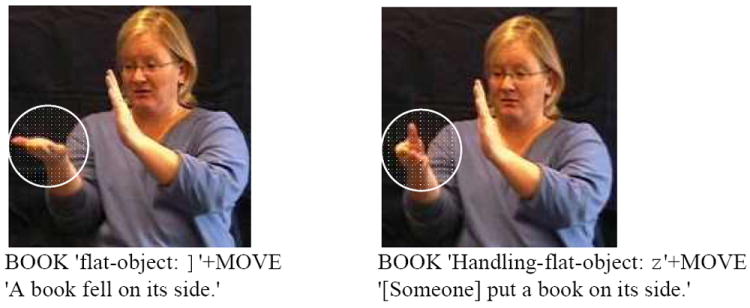

Despite the apparent iconicity of Object-CLs and Handling-CLs, previous work has shown that these handshapes are productive morphological affixes of the predicate that are stable across related contexts (Supalla 1982, Emmorey & Herzig 2003, Eccarius 2008). Moreover, the iconicity found in these handshapes has been reduced to some extent; for example, only three handshapes representing three widths are possible for Object-CLs (w T B) even though one could imagine more finger combinations (Supalla 1982, Eccarius 2008). The Object-CL w in Figure 1a (4 fingers extended, thumb tucked) represents an attribute of the whole object (Engberg-Pedersen 1993, Benedicto & Brentari 2004): the extended fingers reflect the fact that the book is a ‘flat object’. The Handling-CL z in Figure 1b (4 fingers extended, thumb opposed) represents how the object is manipulated (Supalla 1982, Schick 1987): the hand carves out space for a flat object of medium depth, a book. Handling-CLs represent the object indirectly by allowing the empty space carved out by the hand to capture the shape of the object being manipulated. Examples of other Object-CLs and Handling-CLs are given in (3).2

Figure 1.

Example of Object and Handling classifier handshapes in ASL. The circled hand in the left panel (a) is an Object classifier representing ‘book’; the circled hand in the right panel (b) is a Handling classifier representing ‘Handling book’. The hand not circled in both examples represents a second book on the shelf.

(3)

a. Examples of other Object-CLs


b. Examples of other Handling-CLs


The morphological categories for Object and Handling might or might not be paralleled by a corresponding phonological pattern. Most morphological categories in signed and spoken languages are not associated with particular phonological shapes, but some are. A widely attested case in spoken languages is the templatic morphology of Semitic languages, where the root is composed of consonants and the inflectional morphology is composed (primarily) of the intervening vowels (McCarthy 1979). In such a language the vowels are recognizable as grammatical affixes simply by being vowels. A parallel case in a sign language would be if the following set of handshapes (w &2 T P B N n) were to comprise a particular classifier type. Here the set would form a phonological class because the handshapes in the set share a phonological property; they are all fully extended. This morphological set of classifiers would be recognizable by the phonological property of having extended fingers, and new handshapes that enter the set would be predicted to be fully extended as well. In contrast, if the following handshapes (w < & L T A) were to comprise the classifier type, the set would not form a phonological class, as there is no common property that the handshapes share. In this event, the handshapes would constitute a morphological but not a phonological class. In Section 1.3, we will argue that the inventories of Object and Handling classifier handshapes in sign languages not only have morphological structure but also phonological structure.

1.2. Syntax: The two types of handshapes show distinct syntactic distributions

In addition to having differences in meaning, Object-CLs and Handling-CLs are also characterized by syntactic alternations. Benedicto and Brentari (2004) have shown that Object-CLs vs. Handling-CLs display an intransitive vs. transitive opposition. They developed syntactic tests that are sensitive to the presence of agents/subjects and objects that establish this opposition in ASL (see also Kegl 1990, Janis 1992, Zwitserlood 2003, Mazzoni 2008). One of the tests is sensitive to the presence of an agent and involves the “negative imperative” construction demonstrated in (4)—the verb followed by the sign FINISH!. MELT in (4a) is an unaccusative verb. It subcategorizes for an object/theme only; in such sentences, adding the sign FINISH is ungrammatical. LAUGH in (4b) is an unergative verb. It subcategorizes for a subject/agent only; in such sentences, adding FINISH is grammatical. The sentence with an Object-CL in (4c) obtains an ungrammatical result, like that of the unaccusative verb; therefore this structure contains no agent. The sentence with the Handling-CL in (4d) obtains a grammatical result, like that of the unergative verb; therefore this structure contains an agent.

(4) Test for agents applied to ASL lexical verbs, Object-CLs and Handling-CLs ([verb], FINISH!)

| Example | Translation | Predicate type |
| --- | --- | --- |
| a. *MELT FINISH | ‘Stop melting!’ | [unaccusative lexical verb] |
| b. LAUGH FINISH | ‘Stop laughing!’ | [unergative lexical verb] |
| c. *BOOK ‘object classifier]’+MOVE FINISH | ‘Book, stop falling on your side!’ | [object classifier predicate] |
| d. BOOK ‘handling classifier z’+MOVE FINISH | ‘Stop putting the book on its side!’ | [handling classifier predicate] |

Another test is sensitive to the presence of a syntactic object. This test uses the distributive movement affix, which is an additional movement produced on the verb that means “to each”. This test is demonstrated in (5). The unaccusative verb MELT in (5a) obtains a grammatical result. The unergative verb LAUGH in (5b) obtains a semantically anomalous result; the correct interpretation of that sentence would be “the woman laughed at each ‘x’”. Both the sentence with the Object-CL in (5c) and the Handling-CL in (5d) obtain a grammatical result, showing the presence of an object/theme in both types of classifier predicates.

(5) Test for objects applied to lexical verbs, Object-CLs and Handling-CLs ([verb]+distributive)

| Example | Translation | Predicate type |
| --- | --- | --- |
| a. BUTTER MELT+distributive | ‘Each of the butters melted.’ | [unaccusative lexical verb] |
| b. #WOMAN LAUGH+distributive | ‘Each woman laughed.’ | [unergative lexical verb] |
| c. BOOK ‘object classifier]’+MOVE+distributive | ‘Each book fell on its side.’ | [object classifier predicate] |
| d. BOOK ‘handling classifier z’+MOVE+distributive | ‘[Someone] put each book on its side.’ | [handling classifier predicate] |

These tests demonstrate that Object-CLs are associated with intransitive predicates and Handling-CLs with transitive ones. The relevant point for our purposes is that the sentences have identical structures in (4c-d) and (5c-d), except for the classifiers. The difference in grammaticality is thus attributed to them, and demonstrates that these classifiers carry syntactic alternations. We mention this syntactic alternation to underscore the fact that the iconicity of these forms in no way prevents them from having syntactic properties; however, the analyses in this paper will be concerned exclusively with morphological and phonological patterns.

1.3. Phonology: The two types of handshapes show distinct phonological patterns

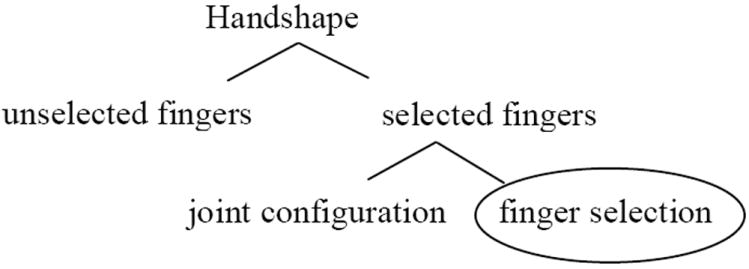

We turn next to the phonology of handshape, in general, and the pattern under investigation, in particular.3 Handshapes can be characterized by fingers that are active, i.e., fingers that can move or contact the body during articulation—selected fingers—and fingers that are not active—unselected fingers. There are two further distinctions that can be made for selected fingers—finger selection, which is the focus of this paper, and joint configuration (see Figure 2).

Figure 2.

A schematized hierarchical representation of handshape (cf. Brentari 1998). The handshape node branches into selected fingers and unselected fingers feature classes, and the selected fingers node further branches into joint complexity and finger complexity feature classes.

Finger selection and joint configuration in sign language handshapes have distinct sets of features that can vary independently from one another (Brentari 1998, Sandler & Lillo-Martin 2006). A parallel case in spoken languages might be coronal and voiced sounds, which also have specific sets of features that can vary independently from one another. For example, the handshapes in (6a) have different fingers selected, but they all have the same joint configuration; namely, the fingers are extended (i.e., the fingers are open, straight, and not curved or bent in any way). In contrast, the handshapes in (6b) have the same fingers selected (all four fingers), but they have different joint configurations (bent, curved, flat, or closed). We concentrate here on finger selection (i.e., categories of the sort shown in (6a), the node circled in Figure 2) and its complexity.

(6) Examples of finger and joint features in two sets of handshapes

a. Different selected fingers/same joint configuration: v B 2 n s h Y

b. Different joint configuration/same selected fingers: w 6 A z ; ( 0

The central claim of this paper is that the distribution of finger complexity in Object-CLs vs. Handling-CLs in sign languages is phonological as well as morphological. We begin by describing the phenomenon; we then lay out the reasons we consider the phenomenon to be phonological.

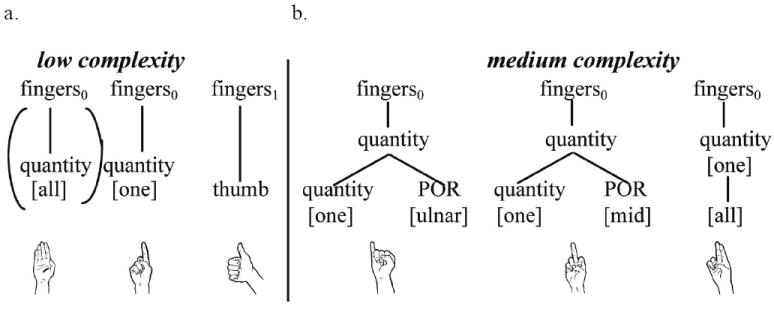

The phenomenon we study involves the distribution of finger complexity in the two types of handshapes introduced above—Object-CL handshapes and Handling-CL handshapes. Handshapes can be divided into three levels of finger complexity, based on a number of different criteria. Low complexity handshapes have the simplest phonological representation (Brentari 1998), are the most frequent handshapes crosslinguistically (Hara 2003, Eccarius and Brentari 2007), and are the earliest handshapes acquired by native signers (Boyes Braem 1981). These three criteria converge on the finger groups represented in Figure 3a: all fingers (finger group w), the index finger (finger group B), and the thumb (finger group 2).4 Interestingly, these three handshapes have been found to be frequent in the spontaneous gestures that accompany speech (Singleton et al. 1993, Goldin-Meadow et al. 1996) and in child homesign (Goldin-Meadow et al. 1995). Medium complexity handshapes include one additional elaboration of the representation of a [one]-finger handshape. This elaboration can take two forms (Figure 3b). In the first two examples, the elaboration indicates that the single selected digit is not on the ‘radial’ (thumb) side of the hand, the default position for all finger groups, e.g., in finger group P the selected finger is on the ‘ulnar’ (pinky) side of the hand, and in the finger group N the selected finger is in the ‘middle’ of the hand. In the third example, the elaboration indicates that there is an additional finger selected, as in finger group T, where two fingers are selected rather than one. High complexity handshapes include all other finger groups, e.g., n, j and g.

Figure 3.

Finger groups with low and medium finger complexity (Brentari 1998). The low complexity finger groups (a) are characterized by a single, non-branching elaboration of the fingers node. The medium complexity finger groups (b) have one more elaboration, either a branching structure or an extra association line. The parentheses around the l- finger group indicate that it is the default finger group in the system.

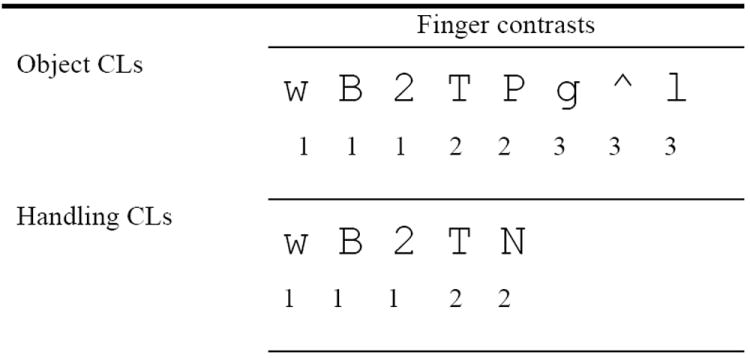

Eccarius (2008) and Brentari and Eccarius (2010) demonstrated using archival data and grammaticality judgments that Object-CLs and Handling-CLs differ in their distribution of finger complexity. Object-CLs have a larger set of finger distinctions and more finger complexity than Handling-CLs in three historically unrelated sign languages (ASL, Swiss German Sign Language [DSGS], and Hong Kong Sign Language [HKSL]), as shown in Figure 4. Despite the fact that finger groups of medium complexity are used when objects are handled in real world situations (e.g., to handle a baseball or to pick up a teacup by its small handle), handshapes with high finger complexity were not attested in the Handling-CLs of any of the sign languages studied; higher finger complexity was found only in Object-CLs.

Figure 4.

Finger groups found in Object and Handling classifiers in ASL, HKSL, and DSGS (cf. Eccarius 2008). The finger complexity score for each handshape is listed below it. Note that finger complexity is, on average, higher for the Object-CLs than for the Handling-CLs.

Spoken languages also exhibit cases that are both phonological and morphological. Take, for example, Arapeshan (Fortune 1942, Dobrin 1997), where gender is phonological as well as morphological. There are thirteen gender classes in which the singular and plural forms are generally paired with one another, and the plural form typically has more phonological complexity than the singular form. This is especially evident in the three gender classes shown in bold in (7): by (sg.) vs. bys (pl.), n (sg.) vs. nab (pl.), and t (sg.) vs. tog (pl.). This phenomenon is comparable to the Object-CL vs. Handling-CL distinction we are studying: the Object-CL has more finger complexity than the Handling-CL.

(7) Arapeshan gender marking (cf. Dobrin 1997)

| Gender | singular | plural |
| --- | --- | --- |
| I | ahory**by** (‘knee’) | ahory**bys** (‘knees’) |
| VI | lawa**n** (‘tree snake’) | lawa**nab** (‘tree snakes’) |
| XI | ali**t** (‘shelf’) | ali**tog** (‘shelves’) |

We are arguing that the finger complexity phenomenon in classifier handshapes is phonological as well as morphological (in addition to being iconic). We present three types of evidence supporting the claim that the phenomenon is phonological in the sections below.

First, the building blocks of finger complexity are the features of the finger selection node. These same features generate contrast throughout the system and therefore constitute a class of phonological features.5 Selected finger features can generate minimal pairs. For example, in ‘core’ vocabulary items (i.e., dictionary entries) and ‘foreign’ forms (i.e., items including a letter of the manual alphabet) in ASL, a difference in selected fingers is distinctive, since it creates two unrelated lexical items (minimal pairs). The sign READ with two spread fingers selected (Y) vs. BARCELONA with four spread fingers selected (v) are distinctively contrastive, as are the letters of the manual alphabet ‘U’ (T) vs. ‘B’ (w). The contrast in both sign pairs is between finger group T and finger group w. The important point is that finger selection generates contrasts through the entire phonological system in a sign language, dictionary forms as well as the classifier predicates considered here. For example, the classifier predicate ‘cut-with-knife’ with two fingers selected (T) vs. ‘cut-with-saw’ with four fingers selected (w) are contrastive. In this case, however, the two items are morphologically related (they vary with respect to the width of the blade). Nevertheless, the same class of features that generates contrast throughout the system is responsible for the forms that these two signs assume in the classifier system, and, in this sense, can be considered phonological.6 Finger complexity is based on the number of selected finger features and so it too is a phonological phenomenon.

Second, we are expressing a new generalization about finger complexity across two sets of forms, in this case Object-CLs and Handling-CLs. The only way to capture this generalization is in phonological terms via the features. A nearly parallel case in spoken languages is the generalization that sounds with higher complexity (i.e., secondary articulations) are more likely to occur in syllable onsets than in codas (Goldsmith 1990). The only difference between the generalization about finger complexity in sign languages and the case of sound complexity in spoken languages is that the finger complexity case holds across two types of morphological affixes and the sound complexity case holds across two positions in the syllable. Other generalizations using selected fingers in sign languages have also been formulated. For example, Eccarius (2008) used the set of selected finger features and phonological principles found in Dispersion Theory (Flemming 2002, Padgett 2003) to explain handshape inventories found in ASL, DSGS, and HKSL.7 She found that finger selection is not arbitrarily distributed but is instead systematically generated subject to pressures of iconicity, markedness (articulatory ease, perceptual distinctiveness), and maximal dispersion. The set of features associated with finger selection and principles of Dispersion Theory allowed Eccarius (2008) to account not only for the similarities among the unrelated sign languages, but also for the language-particular differences. The generalization concerning finger complexity is best expressed in phonological terms and is, in this sense, phonological.

Finally, the fact that a generalization refers to morphological classes does not mean that it is not, at the same time, phonological. Consider English Trisyllabic Laxing as an example from spoken language. The alternations seen in pairs such as op[ei]que–op[æ]city, ser[i]ne–ser[ε]nity, and gr[ei]de–gr[æ]dual occur only in the context of specific, Class 1 morphological suffixes (e.g., -ity, -ous, -ize, -ual, -ify, Kiparsky 1982). But the alternations are still phonological. Similarly, the systematic distribution of finger complexity in sign languages that we are investigating here is restricted to specific morphological classes (Object-CLs and Handling-CLs). It is, nevertheless, a phonological process.

We designed a task to elicit handshapes that represent either an object or a hand manipulating an object with two goals in mind. Our first goal was to replicate, using an experimental probe, the finger complexity patterns found by Eccarius (2008) and Brentari and Eccarius (2010) in signers. Our second goal was to use the same probe to determine whether hearing individuals asked to describe objects using their hands and no speech would display the same or different finger complexity patterns.

2. STUDY 1: FINGER COMPLEXITY IN SIGNERS VS. GESTURERS

2.1. Participants

The participants in this study come from 4 language groups (ASL, LIS, spoken English, and spoken Italian) and 2 countries (US and Italy). The three American gesturers were native English speakers and students at Purdue University (22, 23 and 24 years of age). The three native ASL signers were from the greater Chicago area (33, 41, and 56 years of age). The three Italian gesturers were native Italian speakers and students at the Università di Firenze (20, 22, and 22 years of age). The three native LIS signers were from the greater Milan metropolitan area (23, 35, and 37 years of age).8

2.2. Stimuli and Procedures

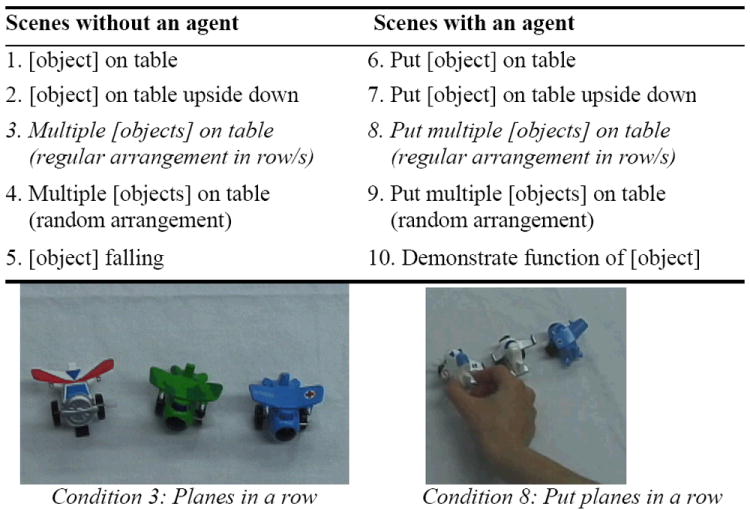

The stimuli consisted of 131 photographs or short movie clips (henceforth vignettes). Eleven objects were used in the vignettes: airplanes, books, cigars, lollipops, marbles, pens, strings, tapes, television sets, and tweezers. The objects depicted in the stimulus clips exhibited a range of colors, shapes, and sizes. Each object was portrayed in 10 conditions: 5 depicted a stationary object or an object moving on its own without an agent, and 5 depicted an object being moved by the hand of an agent (Figure 5).9 The gesturers were instructed to “describe what you see using your hands and without using your voice.” Signers were instructed in sign to “describe what you see.”10 Data collection sessions were videotaped, then captured using iMovie and clipped into individual files, one file for each vignette description. The video files containing the participants’ responses were transcribed using ELAN (EUDICO Linguistic Annotator), a tool developed at the Max Planck Institute for Psycholinguistics, Nijmegen, for the analysis of language, sign language, and gesture.

Figure 5.

Description of the 10 conditions in which each of the 11 objects appeared (top), and examples of two airplane vignettes (a frame from the ‘no agent’ condition #3; a frame from the corresponding ‘agent’ condition #8).

Three data sets were selected for analysis: the ‘falling’ condition (#5) for all 11 objects, and the ‘airplane’ and ‘lollipop’ objects for all 10 conditions. The falling condition provides opportunities to produce a construction depicting a theme moving without an agent. ‘Airplanes’ and ‘lollipops’ were chosen because they tend to elicit high complexity handshapes in both sign and gesture.

Most signers labeled the object in a vignette with a noun before producing a verb (containing a classifier affix) to describe the event. As our focus is on verbal classifiers, we did not include the noun labels in our analyses. To make the comparable cut for the gesturers, we segmented their responses into gestures used to label the object (typically gestures produced on the body or without reference to a specific location in neutral space) and gestures used to describe the event or spatial arrangement shown in the event (gestures that moved or were situated at a particular location). We analyzed only the event gestures.

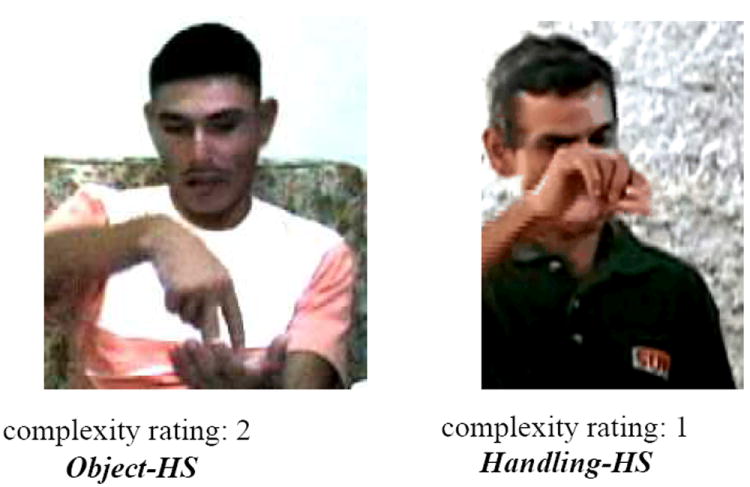

Each handshape was first coded in terms of the class of handshape to which it belonged, regardless of the stimulus: (1) Object Handshapes (Object-HSs) represented the whole object, part of the object, or physical characteristics, such as size and shape, of the object. (2) Handling Handshapes (Handling-HSs) represented the manipulation or handling of the object. In order to be able to use the same categories for our signers and gesturers, from this point on, we use the structurally neutral terms Object-HSs and Handling-HSs rather than Object-CLs and Handling-CLs. Participants produced a number of handshapes that were not relevant to our analyses and thus were excluded from the database: the index finger or neutral handshape used to trace the object’s path or indicate its location; the whole body used to substitute for an object in motion (e.g., falling off the chair to indicate the object falling); and a lexical verb, rather than a classifier predicate (relevant only to signers). We included in the analyses only handshape types that matched the intent of the stimulus; that is, Object-HSs that were produced in response to ‘no agent’ stimulus events, and Handling-HSs that were produced in response to ‘agent’ stimulus events.11 These criteria resulted in 595 handshapes across the 4 language groups. Importantly, if we include all Object-HSs and Handling-HSs regardless of the type of stimulus that elicited them (i.e., Object-HSs produced in response to both types of stimuli, and Handling-HSs produced in response to both types of stimuli), the results described below are unchanged.
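The inclusion criterion described above can be pictured as a simple filter over coded handshapes. The sketch below is illustrative only: the class name `CodedHandshape`, its field names, and the string labels are hypothetical and are not part of the original coding scheme.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodedHandshape:
    """One coded handshape (hypothetical record layout, for illustration)."""
    handshape_type: str  # "object", "handling", or "irrelevant"
    stimulus_type: str   # "no_agent" or "agent"


# Handshape types that match the intent of each stimulus type.
MATCHING_PAIRS = {("object", "no_agent"), ("handling", "agent")}


def matches_stimulus(handshape: CodedHandshape) -> bool:
    """True if the handshape type matches the intent of the stimulus."""
    return (handshape.handshape_type, handshape.stimulus_type) in MATCHING_PAIRS


def select_for_analysis(handshapes):
    """Keep only handshapes whose type matches the stimulus that elicited them."""
    return [h for h in handshapes if matches_stimulus(h)]
```

Under these assumptions, an Object-HS produced for an ‘agent’ stimulus would be dropped from the main analysis, while irrelevant handshapes are dropped regardless of stimulus.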

Each handshape was then transcribed and assigned a finger complexity score using the criteria described in Figure 3: Low complexity finger groups were w, B, or 2, and were assigned a score of 1; the thumb did not count towards finger complexity unless it was the only finger selected. Medium complexity finger groups were P, N, or T, and were assigned a score of 2; high complexity handshapes were all remaining forms, and were assigned a score of 3. All joint configurations were included in their appropriate finger group (see footnote 4). A handshape was given an extra point for complexity if there was a change in the fingers used over the course of the gesture or sign, e.g., the gesture began using all of the fingers and ended in a handshape using only one finger (w B). Thus, finger complexity values ranged from 1 to 4. Reliability was assessed on the coding of handshape type (Object-HS, Handling-HS, irrelevant); agreement between coders was 90%.
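The scoring rule above can be summarized as a small function. This is a minimal sketch, not the authors' coding tool: the function name and the `fingers_change` flag are hypothetical, the finger-group labels are the transcription symbols as they appear in the text, and the sketch assumes the coder has already decided which finger group a handshape belongs to (including thumb handling and, for a changing handshape, which finger group is scored, which the text does not specify).

```python
# Finger-group labels copied from the transcription symbols used in the text.
LOW_COMPLEXITY = {"w", "B", "2"}     # score 1
MEDIUM_COMPLEXITY = {"P", "N", "T"}  # score 2


def finger_complexity(finger_group: str, fingers_change: bool = False) -> int:
    """Return a finger complexity score between 1 and 4.

    finger_group: transcription symbol of the selected-finger group.
    fingers_change: True if the selected fingers change during the
        gesture or sign, which adds one point (assumed flag name).
    """
    if finger_group in LOW_COMPLEXITY:
        score = 1
    elif finger_group in MEDIUM_COMPLEXITY:
        score = 2
    else:
        # All remaining finger groups count as high complexity.
        score = 3
    if fingers_change:
        score += 1
    return score
```

For instance, a handshape in finger group T scores 2, and a high complexity handshape whose selected fingers change during the sign reaches the maximum of 4.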

2.3 Results

2.3.1. Finger complexity in Object and Handling handshapes: a phonological pattern

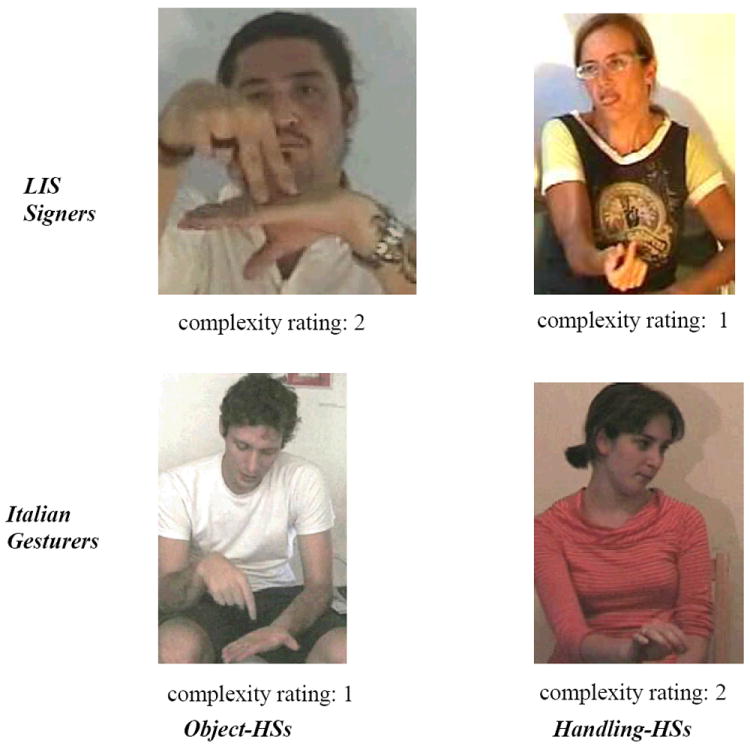

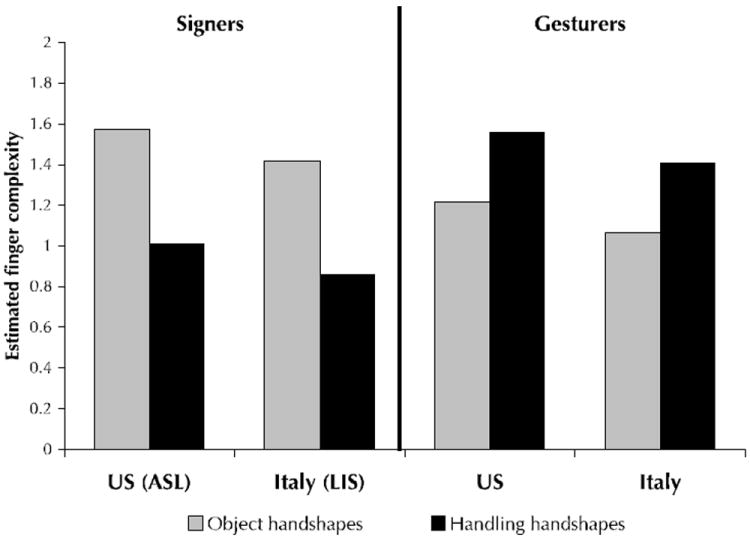

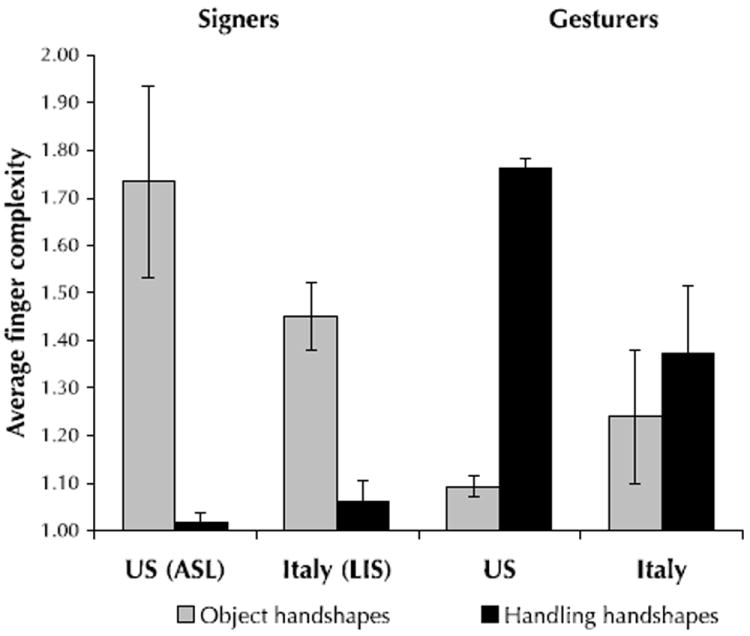

Finger complexity was averaged for each participant and, then again, for each language group. There are two sources of random variation in our finger complexity data: variation stemming from different assessments of the same participant, and variation among stimulus items (e.g., airplane, lollipop). Thus we used a Mixed Linear Model that allows grouping of interdependent samples in which Group (Sign/Gesture), Country (US/Italy), and Handshape Type (Object/Handling) were treated as fixed effects, and Participant and Stimulus Item were treated as random effects. Figure 6 displays the estimated mean finger complexity for Object-HSs and Handling-HSs for signers and gesturers by Country.

Figure 7.

Example handshapes from the Italian group illustrating the sign pattern and the gesture pattern. Replicating previously found cross-linguistic patterns, LIS signers showed higher finger complexity in Object-HSs than in Handling-HSs. Italian gesturers showed the opposite pattern, higher finger complexity in Handling-HSs than in Object-HSs.

We first examined finger complexity in the signing participants; signers of ASL and LIS showed significantly higher finger complexity for Object-HSs than for Handling-HSs, t(5) = 7.35, p < .001. These results replicate previously found patterns (Eccarius 2008, Brentari and Eccarius 2010), and illustrate the morphophonological distinction in finger complexity for Object-HSs vs. Handling-HSs described in Section 1.1.3. All six of the signers, regardless of country, displayed a higher finger complexity in Object-HSs than in Handling-HSs. This result also validates our experimental methodology, demonstrating that the vignette materials effectively elicited the contrast of interest: Object-HSs for stimulus items without an agent, and Handling-HSs for items with an agent. We have thus succeeded in creating a reliable experimental task that can be used to probe handshape use in groups who cannot provide grammaticality judgments.

We next asked whether hearing people who are not signers would show the signers’ pattern when responding to the same vignettes using only their hands and no speech. The model revealed a significant interaction between Group and Handshape Type, indicating that the signers and gesturers differed in their patterns of finger complexity for Object-HSs vs. Handling-HSs (see Figure 6). Gesturers showed the opposite pattern from signers: lower finger complexity for Object-HSs than for Handling-HSs, t(5) = -3.81, p < .05.12

Figure 6.

Estimated mean finger complexity for Object-HSs and Handling-HSs in signers and gesturers by Country. Signers in both countries replicated the previous cross-linguistic findings (higher finger complexity for Object-HSs than for Handling-HSs). Gesturers in both countries showed the opposite pattern (higher finger complexity for Handling-HSs than for Object-HSs). The estimated values provided by the model reflect the effects of removing covariates (such as stimulus item and participant) and, in this sense, provide a more accurate picture of the underlying patterns in the dataset than the observed values (which can be found in Appendix A). Because these are estimated values provided by the model, their values can be less than 1, which was the minimum finger complexity value assigned in our system.

We then compared finger complexity for Object-HSs and Handling-HSs across groups. Post-hoc contrasts indicated significant differences between signers and gesturers in both Handshape Types: signers showed higher finger complexity for Object-HSs than gesturers, t(10) = 4.16, p < .05, and lower finger complexity for Handling-HSs, t(10) = -5.05, p < .001, than gesturers. There were no other significant effects.

Finally, we examined individual patterns in each group, and found that the gesture group displayed more variability than the sign group. Each of the six signers, but only four of the six gesturers, showed their group pattern (displayed in Figure 6). In particular, all of the signers from both countries displayed more finger complexity in their Object-HSs than in their Handling-HSs. All three of the American gesturers showed the opposite pattern: higher finger complexity in their Handling-HSs than in their Object-HSs. However, the Italian gesturers showed more variability: one Italian gesturer looked like the American gesturers, one displayed equal finger complexity for both handshape types, and one looked like the signers (higher finger complexity in Object-HSs than in Handling-HSs).

Figure 7 displays examples of the handshapes produced by participants in the Italian groups. In the top row, we see an Object-HS, T, with a medium complexity score, contrasted with a Handling-HS, B, with a low complexity score, both produced by LIS signers. In the bottom row, we see the opposite pattern: an Object-HS, B, with a low complexity score, contrasted with a Handling-HS, T, with a medium complexity score, both produced by Italian gesturers.13

2.3.2 Is the phonological pattern embedded within a morphology?

We have found that finger complexity varies across two types of handshapes (Object-HSs and Handling-HSs). Our next question was whether these two handshape types constitute morphological classes, as we would expect if variation in finger complexity is a phonological process embedded within a morphological system. To address this question, we examined the number of different handshapes used by an individual signer or gesturer, on the assumption that the range of forms within a morphological class should not vary freely but rather should be relatively constrained.14 We used the responses from the non-agentive conditions with a single object for ‘airplane’ and ‘lollipop’ (conditions 1, 2, and 5 from Figure 6) to calculate this measure.15 We limited our analyses to these conditions in order to make sure that the handshapes produced for a given item referred to the object and not to other aspects of meaning portrayed in the vignettes (e.g., “many”, “row-of”, or “random arrangement,” which were features of the stimulus items containing more than one item). We thus analyzed seven items per participant—four for airplane and three for lollipop.

We chose type-token ratio as a measure of the variability of the handshapes a participant used for the two items (airplane and lollipop). We expected that signers would use at least two different handshapes, one to represent airplane and one to represent lollipop. If participants used only these two handshapes across the seven items, their type-token ratio would be .29: two types (1 per object) divided by 7 tokens (one production for each item; many of the participants produced more than one sign or gesture per item, thus increasing the number of tokens and providing more opportunities for variability). We found that the signers’ data approached the predicted value: their mean type-token ratio was .29 (range: .17 – .38), indicating relatively low variability.16 In contrast, the mean type-token ratio for the gesturing group was .52 (range: .30 – .80), indicating relatively high variability (Table 1; Mann-Whitney, p = .002). Thus, the finger complexity variation displayed in Figure 6 seems to be operating on morphological classes in the signers, but not in the gesturers. In this sense, the finger complexity variation can be considered a phonological process for the signers, but not for the gesturers.
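The predicted value of .29 follows directly from the definition of the type-token ratio. The sketch below works through that arithmetic; the function name `type_token_ratio` and the handshape labels in the example are hypothetical and stand in for whatever transcription a coder would record.

```python
def type_token_ratio(handshapes):
    """Number of distinct handshapes (types) divided by number of productions (tokens)."""
    tokens = list(handshapes)
    if not tokens:
        raise ValueError("need at least one handshape token")
    return len(set(tokens)) / len(tokens)


# Hypothetical participant: one handshape for all four airplane items and
# a second handshape for all three lollipop items (2 types, 7 tokens).
predicted = type_token_ratio(["B"] * 4 + ["T"] * 3)
print(round(predicted, 2))  # 0.29
```

A participant who used a different handshape for every production would reach the maximum ratio of 1, so lower values indicate a more constrained set of forms.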

Table 1.

The average type-token ratio and average finger complexity values for Object-HSs and Handling-HSs for each group of signers and gesturers by country.

| | Signers: ASL | Signers: LIS | Gesturers: US | Gesturers: IT |
|---|---|---|---|---|
| Morphology | | | | |
| Type-token ratio | .29 | .30 | .53 | .52 |
| Phonology | | | | |
| Finger complexity: Object-HSs | 1.86 | 1.53 | 1.11 | 1.23 |
| Finger complexity: Handling-HSs | 1.08 | 1.24 | 1.30 | 1.67 |

2.4 Discussion

The results of Study 1 are interesting for several reasons. First, the signers’ data demonstrate that a well-constructed laboratory task can replicate results obtained by more traditional linguistic methodology. We found that the signers’ handshapes displayed the stability characteristics of a morphological system and, within that system, showed the expected phonological pattern—more finger complexity in Object-HSs than in Handling-HSs (as opposed to equal levels of finger complexity in the two categories).

Second, our analysis allowed us to evaluate whether non-signers, asked to use gesture in the same contexts that signers use their signs, display the same handshape patterns as signers. We found that they do not. Thus, the pattern previously reported for sign languages is not inevitable every time the hands are used to communicate—the gesturers in our study did not show it and, in fact, displayed the opposite pattern: more finger complexity in Handling-HSs than in Object-HSs. Moreover, the gesturers’ pattern cannot be interpreted as phonological because it is not embedded in a morphological system (their type-token ratio indicates more variability in handshape forms than is typically found in signers; see also Goldin-Meadow et al. 1994, 1995; Emmorey & Herzig 2003, Schembri et al. 2005). Importantly, this result makes it clear that the morphophonological pattern found in conventional sign languages is not a simple elaboration or codified version of the pattern invented by hearing individuals on the spot. Of the three major manual parameters of a sign language—handshape, location and movement—the handshape parameter has been shown to differ most noticeably between ‘first-time’ gesturers and signers (Singleton et al. 1993, Goldin-Meadow et al. 1996). Our task confirmed this result and uncovered an additional way in which this difference is manifested.

Third, the different patterns found in gesturers vs. signers underscore the fact that different processes can be recruited when using the manual modality to describe objects involved in an event. When gesturers view the vignettes containing an agent, they replicate to a large extent the actual configuration of the hand in the vignette, and, as a result, they display a fair amount of finger complexity in these Handling handshapes. They are using the hand to represent the hand (hand-as-hand iconicity), and thus are relying on an accessible mimetic process; i.e., participants see a hand in the vignette and mimic it with their own hand. But this direct mimetic process does not work when the task is to represent the object itself. The hand can no longer represent the hand in the event (as there is none), but must instead capture and display features of the object (hand-as-object iconicity). What gesturers do in this case is fall back on the simplest handshapes, handshapes that are routinely used in co-speech gesture (Goldin-Meadow et al., 1996). As a result, the gesturers’ Object-HSs capture few of the properties of the objects they stand for and display little finger complexity. Thus, the gesturers’ reliance on the mimetic hand-as-hand iconicity may be responsible for the relatively high finger complexity they display in Handling but not Object handshapes.

In contrast, the signers rely on classifiers that make good use of hand-as-object iconicity, which allows them to capture many properties of the objects they are describing and leads naturally to relatively high levels of finger complexity in Object handshapes. On this view, it is not surprising that signers display more finger complexity in their Object-HSs than gesturers. But why do signers display less finger complexity in their Handling-HSs than gesturers?17 We propose that the finger complexity is lost in Handling-HSs because sign languages have undergone a process of phonological reorganization in order to maximize the difference between the two morphological categories. Frishberg (1975) was the first to observe that, over historical time, sign languages become less motivated by iconicity and more motivated by other pressures; her work highlighted the pressures of ease of articulation and ease of perception. In the particular case under discussion here, the contrast between Object-CLs and Handling-CLs, we suggest that the pressure is morphological. Once the system had differentiated and regularized the meanings of these two morphemes, their phonological forms (in particular, the possible choices for selected fingers) may have become differentiated as well. The system would then more closely conform to the universal tendency to have one form for one meaning. This idea has a long and complex history in linguistic theory (see Wurzel 1989 for a good overview). A recent account using Optimality Theory (Xu 2007) captures the tendency in two complementary constraints: *FEATURE SPLIT, which bans the realization of a meaning by more than one form, and *FEATURE FUSION, which bans a form realizing more than one meaning. The ways in which these constraints are violated have given rise to a wide range of morphophonological phenomena attested in natural languages. But sign languages are very young (less than 300 years old) compared to spoken languages. As a result, sign languages may express the one form-one meaning tendency more directly than spoken languages, which have undergone thousands of years of historical change. This reorganization might require a linguistic community (that is, users of a system may negotiate and ultimately settle on forms that maximize differences between these two morphological categories), or it might happen within a single individual.

In Study 2, we ask whether continued use of gesture as a primary communication system—gesture use in homesign—leads to the morphophonological pattern found in sign languages: (1) hand-as-object iconicity resulting in higher finger complexity in Object-HSs and (2) phonological reorganization resulting in lower finger complexity in Handling-HSs. If we find that the homesigners do not display the signers’ pattern, we will have evidence that conventionalization within a community may be a necessary factor in triggering the reorganization from gesture to sign. If, however, the homesigners do display the signers’ pattern, we will have evidence that factors internal to an individual system (perhaps the markedness factors mentioned above) may be responsible for the reorganization.

3. STUDY 2: FINGER COMPLEXITY IN HOMESIGNERS

The field of sign language phonology can make an important contribution to our understanding of how a phonological system takes shape. New sign languages are constantly emerging, thus allowing insight into the steps that may have led to the morphophonological handshape patterns found in current day sign languages. The circumstances favorable to sign language genesis are a critical mass of primary users who interact with each other in the manual modality and who can transmit the language to new users. These characteristics converge in two general types of situations: (1) existing communities of hearing people who experience a high incidence of deafness, be it genetic or acquired18; and (2) new Deaf communities, in which deaf individuals come together and are free to communicate manually, such as a school for special education or other gathering places for deaf people. Nicaraguan Sign Language (NSL) is one such sign language that has been studied closely as it has developed from its origins (Kegl et al. 1999, Senghas 1995, 2003; Senghas and Coppola 2001; Senghas et al. 2004).19

The deaf children and adolescents present in the early stages of the formation of NSL had not previously been exposed to, nor acquired, a spoken, written, or signed language. However, like deaf individuals in similar situations in other cultures, they had likely invented gestures to communicate with their hearing family members and neighbors, called homesigns (see Goldin-Meadow, 2003, for comprehensive background on homesign). Thus, what began as an assortment of different homesign systems eventually converged into a single, common system that became NSL. The historical record suggests that homesign systems formed part of the early raw materials available for sign language genesis in ASL (Supalla 2008), and historical and empirical data support a relationship between adult homesign systems in Nicaragua and NSL (Coppola and Senghas 2010). In accord with other researchers (e.g., Cuxac 2005, Fusellier-Souza 2006), we can therefore view homesign systems as a precursor to sign language structure.

Previous analyses of homesigns in Nicaragua have uncovered several types of linguistic structure: the grammatical category Subject (Coppola & Newport 2005); techniques to indicate arguments, including spatial modulations, combinations of nouns and points, and person-classifier-like forms (Coppola 2002; Coppola & So 2005); and techniques to represent locations and nominals (Coppola and Senghas 2010). We focus here on finger complexity, which has not been previously analyzed in Nicaraguan homesign.

3.1 Participants

Adult homesigners are rare in developed countries such as the United States and Italy because of the educational policies in these countries; deaf children are either exposed to sign language as early as possible, or they are given cochlear implants and intensive training in speech. However, adult homesigners do exist in developing countries, such as Nicaragua, where resources for, and access to, education are limited for both hearing and deaf individuals. Four homesigners in Nicaragua participated in our study. At the time of the study, they were 20, 24, 29, and 29 years old, and they displayed no apparent cognitive deficits. The homesigners did not know each other, did not interact regularly with other deaf people, and were not part of the Deaf community in Nicaragua that uses Nicaraguan Sign Language (Coppola 2002). The homesigners were tested on the same materials as the signers and gesturers in Study 1.

3.2. Stimuli and Procedures

The procedure was the same as in Study 1, except that the instructions to the homesigners involved pointing to the computer screen with a questioning face and shoulder shrug. All of the participants understood by this gesture that we were asking them to describe what they saw using their homesign system. Homesigners were given only the no-instruction condition, the condition that was analyzed for the signers and gesturers in Study 1. The homesigners produced 156 handshapes to describe the target events that met the criteria for inclusion described in Study 1.

3.3. Results

3.3.1. Finger complexity in Object and Handling handshapes: a phonological pattern

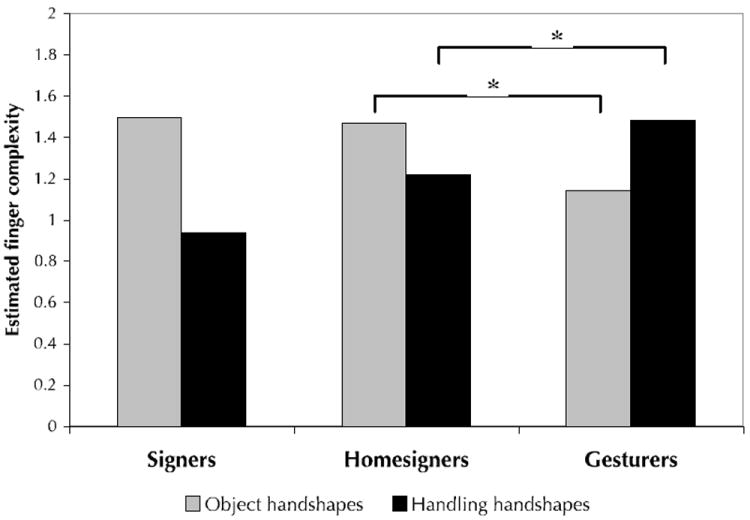

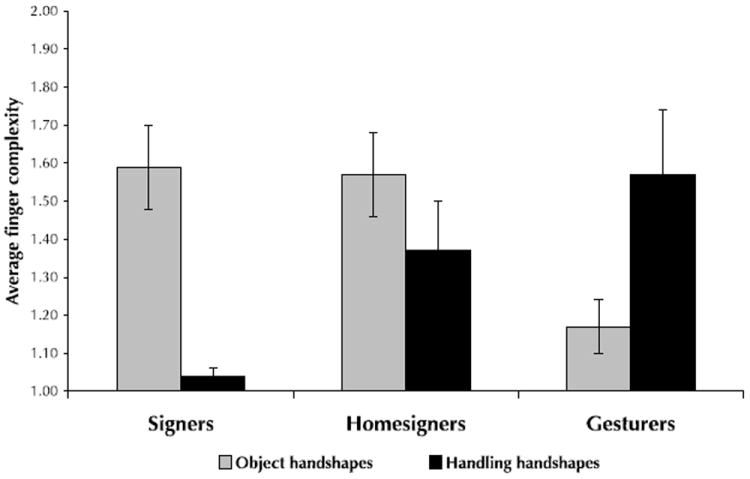

To compare the homesigners’ performance to the performances of the signers and gesturers, we contrasted the 156 observations from the Nicaraguan homesigners to the 595 observations from the native signers and gesturers described in Study 1 (a total of 751 observations) using the Mixed Linear Model previously described. The model considered the fixed effects of Group (Sign, Gesture, Homesign) and Handshape Type (Handling, Object), and treated Participant and Stimulus Item as random effects. Because the factor Country (US/Italy) revealed no significant interaction with Group (Sign/Gesture) in Study 1, this factor was ignored in this analysis.

Figure 8 shows the estimated mean finger complexity for Object-HSs and Handling-HSs in homesigners, signers, and gesturers (collapsing across country). To situate the homesigners’ levels of finger complexity within the levels for the other two groups, we compared their finger complexity to finger complexity in the signers and the gesturers. Post-hoc pairwise comparisons showed that homesigners’ finger complexity differed from gesturers’: they displayed significantly lower finger complexity than gesturers in Handling-HSs, t(8) = -2.62, p < .05, and marginally higher finger complexity than gesturers in Object-HSs, t(8) = 2.24, p = .055. Moreover, homesigners’ finger complexity did not differ significantly from signers’ in either Handling-HSs, t(8) = 1.6, p = .15, or Object-HSs, t(8) = -1.02, p = .34. Thus, the homesigners’ finger complexity levels were closer to the signers’ levels than to the gesturers’ levels.

Figure 8.

Estimated mean finger complexity for Object-HSs and Handling-HSs in signers, homesigners, and gesturers. Signers displayed significantly higher finger complexity in Object-HSs than in Handling-HSs. Gesturers displayed significantly higher finger complexity in Handling-HSs than in Object-HSs. The homesigners’ pattern resembled the signers’ pattern and was significantly different from the gesturers’ pattern. The estimated values provided by the model reflect the effects of removing covariates (such as stimulus item and participant) and, in this sense, provide a more accurate picture of the underlying patterns in the dataset than the observed values (which can be found in Appendix B). Because these are estimated values provided by the model, their values can be less than 1, which was the minimum finger complexity value assigned in our system.

In terms of individual patterns, three of the four homesigners displayed the signers’ pattern: higher finger complexity in Object-HSs than in Handling-HSs (1.87 vs. 1.46; 1.55 vs. 1.00; and 1.50 vs. 1.43). However, the fourth homesigner displayed the gesturers’ pattern, producing higher finger complexity in Handling-HSs (1.58) than in Object-HSs (1.36).

Figure 9 displays examples of the handshapes produced by the homesigners: an Object-HS, T, with a medium complexity score, contrasted with a Handling-HS, B, with a low complexity score. The homesigners’ pattern provides an important link between the high finger complexity in Object-HSs and a decrease in finger complexity in Handling-HSs, and thus represents a potentially important intermediate stage between gesture and sign.

Figure 9.

Example handshapes illustrating the pattern found in three of the four homesigners: higher finger complexity in Object-HSs than in Handling-HSs.

3.3.2 Is the phonological pattern embedded within a morphology?

To determine whether the homesigners’ handshapes constituted morphological categories (and thus whether the finger complexity variation we see in the homesigners’ gestures was embedded within a morphological system), we conducted a type-token analysis on the homesign productions of the single, non-agentive ‘plane’ and ‘lollipop’ items (conditions 1, 2, and 5), 55 tokens in all. Homesigners 1, 2 and 3 had relatively low type-token ratios (.38, .33, .28) that were in the same range as the signers’ (.29, see the portion of Table 2 in bold). However, homesigner 4’s type-token ratio (.50) was much closer to the gesturers’ mean (.52, see the portion of Table 2 in italics).

Table 2.

The type-token ratio and average finger complexity values for Object-HSs and Handling-HSs for each homesigner.

| | Signers | HS1 | HS2 | HS3 | HS4 | Gesturers |
|---|---|---|---|---|---|---|
| Morphology | | | | | | |
| Type-token ratio | **.29** | **.38** | **.33** | **.28** | *.50* | *.52* |
| Phonology | | | | | | |
| Finger complexity: Object-HSs | **1.69** | **1.87** | **1.55** | **1.50** | *1.36* | *1.17* |
| Finger complexity: Handling-HSs | **1.16** | **1.46** | **1.00** | **1.43** | *1.58* | *1.48* |

Note that homesigners 1, 2, and 3 display morphological and phonological patterns reminiscent of the signers’ patterns (in bold), whereas homesigner 4’s patterns resemble the gesturers’ (in italics, cf. Table 1).

Interestingly, Homesigners 1, 2, and 3 also displayed a finger complexity pattern that resembled the signers’ pattern, whereas homesigner 4’s finger complexity pattern more closely resembled the gesturers’ pattern (Table 2). Thus, the three homesigners who displayed a more tightly constrained morphological system also displayed the phonological pattern closely aligned with conventional sign language, suggesting that the two patterns go hand-in-hand.20

4. GENERAL DISCUSSION AND CONCLUSIONS

Our goal was to explore the roots of the morphophonological pattern that characterizes the handshape inventories of two types of classifiers in sign languages—higher finger complexity in Object-HSs than in Handling-HSs. This phenomenon has an iconic base and therefore might be one that hearing individuals would invent if asked to create gestures on the spot. We found, however, that it is not. When hearing individuals use gesture to represent objects, they show less finger complexity in their Object-HSs, and more finger complexity in their Handling-HSs, than do signers. Thus, the finger complexity pattern found in signers, despite its iconicity, is not a codified version of the gestures hearing individuals invent on the spot. In addition, the finger complexity pattern found in signers differs from the gesturers’ pattern in one other important respect—it is embedded in a morphological system. Signers used a constrained set of forms to express object and handling meanings; the forms the gesturers used for the same meanings displayed significantly more variability and, in this sense, did not constitute a morphological system. Because it was not situated within a morphological system, the contrast that the gesturers displayed in their use of selected fingers cannot be considered phonological.

But perhaps continued use of gesture as a primary communication system would result in the morphophonological pattern found in sign languages. To explore this hypothesis, we asked whether homesigners—deaf individuals who have not acquired a signed or spoken language and use their own gestures to communicate—use handshapes that are more similar to signers’ conventionalized handshapes than to gesturers’ spontaneously generated handshapes. As a group, the homesigners’ patterns were closer to the signers’ pattern than to the gesturers’. Homesigners displayed the same level of finger complexity in Object handshapes that signers do and, like signers, less finger complexity in Handling handshapes than gesturers do (although not quite as low as the signers’). Moreover, like signers (and unlike gesturers), homesigners’ finger complexity variation was embedded within a morphological system (they used a relatively constrained set of forms to express object and handling meanings). Interestingly, the three homesigners whose handshape forms displayed the least variability (i.e., a morphological pattern) also displayed the finger complexity pattern characteristic of signers (i.e., a phonological pattern), suggesting that the two may go hand-in-hand.

The discontinuity in finger complexity between gesturers on the one hand and homesigners and signers on the other provides clues to the sequence of changes that might have taken place in the evolution of the morphophonological system underlying handshape in sign languages. Although gesturers have had a lifetime of experience with co-speech gesture, communicating in a gesture-only mode is a new experience. As a result, gesturers rely on an accessible mimetic process when they use their hand to represent what the hand is actually doing in the vignettes. We suggest that because of this mimetic process they display high levels of finger complexity in these hand-as-hand representations. In contrast, a more abstract process is called for when the hand is used to represent properties of the object in an event. Gesturers use relatively little finger complexity in these hand-as-object representations. If we assume that the handshapes homesigners used when first fashioning their gesture systems are similar to the handshapes used by the gesturers in our study, we can make guesses about the process of reorganization and change that led to the homesigners’ current gesture systems.21 We see the first indicators of a phonologization process in the homesigners’ Object-HSs: Homesigners increase finger complexity in their Object-HSs from the gesturers’ level to the signers’ level. We also see changes in the homesigners’ Handling-HSs: homesigners decrease finger complexity in Handling-HSs from the gesturers’ level, although not yet to the signers’ level.

Although homesigners have achieved sign-like levels of finger complexity in their Object handshapes, their Handling-HSs still appear to be in transition. Homesigners’ finger complexity levels in Handling-HSs are significantly lower than the gesturers’ levels, but they have not yet fully decreased to the sign level. It is possible that achieving the sign level of finger complexity in Handling-HSs, which has the effect of maximizing the distinction between Object-HSs and Handling-HSs, may require a linguistic community in which some sort of negotiation of form takes place. The homesigners’ daily communication partners are their hearing family members and friends, none of whom uses the homesign system as a primary means of communication. Thus, it is difficult to characterize homesigners as having access to a shared linguistic community. Moreover, the homesigners do not know or interact with one another. There is, consequently, no pressure to arrive at the most efficient solution for a whole community. The fact that homesigners’ complexity level is closer to the gesture level for Handling handshapes than for Object handshapes suggests that a shared linguistic community may play a role in “losing” the hand-as-hand iconicity displayed so robustly by the gesturers.

Along these lines, Aronoff and Sandler (2009) have argued that the emergence of phonology in Al-Sayyid Bedouin Sign Language (ABSL) arises within families, as it is there that they first find evidence of word-level processes (handshape assimilation in compound-like structures). They suggest that use within a social group, coupled with frequency of use, are essential factors in the conventionalization of form. However, it is important to point out that, even without a shared linguistic community, the homesigners in our study used handshape forms in a relatively stable and arbitrary way (displaying a pattern that was different from the gesturers’ pattern) and, in this sense, had begun to develop both a morphological and phonological component in their gesture systems. Our findings thus raise the possibility that the social community does not play an essential role in the early stages of the emergence of morphological or phonological components, although a linguistic community is undoubtedly necessary to stabilize and fully conventionalize these components.22 We are not suggesting that the homesigners have fully established morphological or phonological systems, but rather that their gesture systems represent a historical step in the conventionalization process. The interesting result is how far the homesigners have been able to go toward developing a sign-like morphophonological pattern without support from a linguistic community.

What then accounts for the sign-like pattern observed in the homesigners, but not in the gesturers? There are two factors that distinguish homesigners from gesturers: They have been using their gestures to communicate over a long period of time, and gesture is their primary means of communication. Although intertwined, these two factors can be distinguished. To explore the hypothesis that prolonged use contributes to the sign-like pattern, we can ask gesturers to describe events using only their hands over a relatively long period of time. If continued use is the root of the sign-like pattern, we should see gesturers begin to move toward this pattern (i.e., to develop more finger complexity in Object handshapes and less in Handling handshapes). To explore the hypothesis that using the system as a primary means of communication contributes to the sign-like pattern, we can examine young homesigners as they develop their gesture systems. If using gesture as a primary communication system is the root of the sign-like pattern, then we should see this pattern early in homesign development. In other words, even in the absence of a conventional language model or prolonged experience, when gesture takes the place of speech or sign, morphophonological properties would begin to emerge. Pilot data on 4-year-old gesturers and signers suggest that the adult-like patterns seen in Study 1 may already be present at this young age (Jung 2008), and we are currently conducting cross-sectional and longitudinal analyses of child homesign systems in Nicaragua to determine whether homesigning children display the same pattern as the homesigning adults.

We began with a set of crosslinguistic facts about morphophonology in sign language classifier systems (Eccarius 2008, Brentari & Eccarius 2010). This type of systematicity is inter-componential; that is, it is not a purely phonological phenomenon. It is part of the interface between phonology and morphology, with a strong link to iconicity. Similar phenomena have been found in spoken languages.23 For example, in Standard Italian, there is iconicity between the size of an object described by a spoken form and the size of the space in the oral cavity used to express the form (Nobile 2008); the [round, -high, back] vowel /o/ is associated with large objects in the suffix –one, and the [high, -back] vowel /i/ is associated with small objects in the suffix –ino. These vowels are, at the same time, part of the phonological and morphological systems of Italian.

Because the homesigners’ handshapes are different from the handshapes that hearing individuals create on the spot, we can argue that handshape in homesign has already undergone the reorganization evident in sign languages. The systematic relation between handshape meaning and form, along with the relative stability of handshape form within a category, makes these structures in homesign morphological. But it is the particular reorganization of iconicity (more finger complexity in Object handshapes than in Handling handshapes) that makes these homesign patterns phonological. We suggest that the cross-componential type of grammar building seen here may be the first step that evolving sign languages take in acquiring a phonological component. Minimal pairs are not abundant in sign languages (van der Kooij 2002, van der Hulst & van der Kooij 2006, Brentari in press a, in press b) and, thus far, there have been no minimal pairs reported in homesign (nor in Al-Sayyid Bedouin Sign Language, the young sign language currently evolving in Israel; Sandler et al. 2005). But, as we have shown here, homesign does display a sign-like pattern in finger complexity distribution and, in this sense, can be said to display morphophonological structure.

In terms of the role that iconicity plays in sign language grammar, we would argue that iconicity is one factor, along with other pressures, such as ease of articulation, ease of perception, and maximal dispersion, that influences the shape of the system. In other words, phonology and iconicity are not mutually exclusive (see also Meir 2002, van der Kooij 2003, Eccarius 2008, Padden et al. in press). In this way, iconicity is organized, conventionalized, and systematized in sign language grammar, but it is not eliminated. Although there are cases of a sign form going completely against iconicity, more often the form is simply more rigidly constrained than would be predicted by iconicity, as we have seen in the finger complexity levels analyzed here. Sign languages do exploit iconicity but, ultimately, iconicity in signs has to assume a distribution that takes on arbitrary dimensions.

To conclude, we can learn a great deal about how language emerges by employing the method used here. We used an elicitation methodology to replicate results obtained using grammaticality judgments in established languages, licensing us to use the methodology with other populations. By extracting a carefully defined subset of forms and analyzing them with tools available from our knowledge of sign language phonological universals, we have the potential to trace both continuities and discontinuities from gesture to homesign to sign language.

Acknowledgments

This research was supported by NSF grants BCS 0112391 and BCS 0547554 to Brentari and R01DC00491 to Goldin-Meadow. We would also like to extend special thanks to Angela Righi of the Università di Firenze for help with data collection and to Myoungji Lee of the Statistics Consulting Service in the Department of Statistics at the University of Chicago.

APPENDIX A

Observed values for average finger complexity and standard error for Object-HSs and Handling-HSs for signers and gesturers by Country with error bars indicated.

APPENDIX B

Observed values for average finger complexity for signers, homesigners, and gesturers, collapsing across country, with error bars indicated.

Footnotes

The term ‘classifier’ has been called into question as an accurate label for these structures in sign languages (see Emmorey 2003), but the type of spoken language morphology that most parallels the sign language phenomenon is traditionally called “classifier”; we therefore use it here.

There are other types of classifiers in sign languages represented by handshape, also affixed to the verb (e.g., body part classifiers, extension classifiers), which are not the focus of our investigation (see Supalla 1982, Emmorey & Herzig 2003, Fish et al. 2003, Benedicto & Brentari 2004, Eccarius 2008).

We have adopted a single model of phonological representation for our work, the Prosodic Model (Brentari 1998). However, it is important to point out that other models of sign language phonology agree on the constructs that are central to our handshape analyses (e.g., Liddell & Johnson 1989; Sandler 1989; Brentari 1990, 1998; van der Hulst 1993, 1995; van der Kooij 2002; Sandler & Lillo-Martin 2006). As a result, using a different model to frame the study would not affect the pattern or significance of the findings.

Rather than use unfamiliar notation or features, we use the handshape font (e.g., T) to indicate handshapes. When this font is used for individual handshapes, the image is a picture of a particular hand. When this font is used to represent finger groups, the image stands for a category of handshapes. In these cases, the term “finger group” will precede the image (i.e., finger group T), and the image will picture a handshape with unspread, extended fingers (without the thumb). For example, the finger group T in Figure 3 represents the set of handshapes that includes the whole range of joint configurations Z, {, X, @, b, Y, and d.

For an overview of the role of selected fingers in sign language phonology see Brentari (1995, in press a, in press b).

Advances in phonological theory in recent decades have demonstrated that the inventory of phonological forms includes not only distinctive features that create unrelated lexemes, but also features used in phonological operations, particularly features that qualify as autosegmental tiers. Autosegmental phonology (Goldsmith 1976, 1990), Underspecification Theory (Steriade 1995), and recent work on Feature Theory (Hall 2001) address these issues. Along these lines, Clements (2001) proposes three types of contrasts, all of which can be considered phonological: “distinctive”, “active” (those involved in phonological operations), and “prominent” (those that qualify as an autosegmental tier). Tone in spoken languages—like finger complexity in sign languages—can be used to create all three types of contrasts. For example, in Ga’anda tone is needed not only for distinctive contrast and in phonological rules, but also to express “noun of noun” (associative) constructions, which are realized exclusively by tone (Ma Newman 1971, cf. Kenstowicz 1994).

Even though these sign languages are historically unrelated, sign languages form a typological class (Brentari 1995, 2002). It is therefore not surprising to find similarities across them.

A native signer has at least one parent who was a signer of the language in question. With one exception, the experimenter was a native user of the language under investigation.

The handshape that the actor used when handling an object in the agentive vignettes was difficult to see, particularly without slow motion. It therefore would have been difficult for participants to veridically copy the actor’s handshape in their descriptions of the Agent vignettes.

Signers and gesturers were asked to describe the vignette under four different conditions: (1) no instruction (other than to use only the hands); (2) as if they were addressing a deaf child who does not sign; (3) as if they were addressing someone located at a distance; and (4) as precisely as possible. The data reported here are drawn from the no-instruction condition, which was the first condition all participants performed.

The proportion of Object-HSs and Handling-HSs that did not match the intent of the stimulus, and were therefore excluded, was .16.

The main effect for Country was marginally significant, indicating that signers and gesturers from the US tended to produce higher finger complexity than signers and gesturers from Italy, t(10) = -2.02, p = .064. However, the lack of interaction between Country and Handshape Type (t = -0.915, p = .38) indicates that the overall pattern in finger complexity for the different Handshape Types did not differ by Country.

We also explored whether the sign and gesture groups differed in the way they used joint complexity in Object-HSs and Handling-HSs. We analyzed joint configurations in the singular agentive and nonagentive vignettes (conditions 1 and 6). Joint complexity was divided into four levels based on frequency in the ASL system (Hara 2003) and on the complexity of the phonological representation: fully open and fully closed handshapes were assigned to Level 1 (e.g., 6 w); flat and spread handshapes were assigned to Level 2 (e.g., z. v); bent and curved handshapes were assigned to Level 3 (e.g., - <); stacked and crossed handshapes were assigned to Level 4 (e.g., X d). An average joint complexity value was calculated for each participant and then for each group. All groups showed greater joint complexity in Handling-HSs than in Object-HSs. The difference between sign and gesture groups thus appears to be confined to selected finger complexity.
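The two-step averaging described in this footnote (first within each participant, then across participants in a group) can be illustrated with a minimal sketch. The joint-configuration labels, the function names, and the data below are hypothetical and were invented for illustration; they are not the coding scheme or the data of the study, and only the four-level assignment follows the text.

```python
from statistics import mean

# Four joint-complexity levels as described in the footnote.
# The string labels are illustrative assumptions, not the study's codes.
JOINT_LEVEL = {
    "open": 1, "closed": 1,      # Level 1: fully open / fully closed
    "flat": 2, "spread": 2,      # Level 2: flat / spread
    "bent": 3, "curved": 3,      # Level 3: bent / curved
    "stacked": 4, "crossed": 4,  # Level 4: stacked / crossed
}

def participant_average(joints):
    """Average joint-complexity level over one participant's handshapes."""
    return mean(JOINT_LEVEL[j] for j in joints)

def group_average(productions):
    """Average of the per-participant averages within one group.

    productions maps a participant id to a list of joint labels.
    """
    return mean(participant_average(j) for j in productions.values())

# Hypothetical productions for two participants (not real data).
example_group = {
    "P1": ["open", "flat", "curved"],  # levels 1, 2, 3
    "P2": ["closed", "crossed"],       # levels 1, 4
}

print(group_average(example_group))
```

Averaging within participants before averaging across them gives each participant equal weight in the group value, regardless of how many handshapes that participant produced.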

A difference in joints or finger complexity constituted a different variant.

The object ‘airplane’ had two types of falling conditions, 5a and 5b.

We emphasize that the signers approached the ideal type-token ratio because there was some variability within an individual’s productions, as the range indicates.

The handshape inventories in Object-CLs and Handling-CLs are not entirely non-overlapping; there are a few handshapes that exist in both categories, namely those with an opposed thumb.

The languages that arise in this context are called village sign languages and often have a high proportion of hearing users; examples include the sign language used on Martha’s Vineyard (Groce 1985), Al-Sayyid Bedouin Sign Language, ABSL (Sandler et al. 2005; Meir et al. 2007, 2010; Padden et al. 2010), Adamarobe Sign Language (Nyst 2010), and Kata Kolok (Branson et al. 1996).

Prior to 1977, deaf individuals in Nicaragua had few opportunities to interact with one another. Between 1977 and 1983, two educational programs were established that served more than 400 deaf students; although instruction was not in sign, students were able to freely communicate with each other using gestures.

Like the signers and gesturers, the homesigners displayed greater average joint complexity in Handling-HSs than in Object-HSs.

It is possible that the sign-like pattern in homesigners’ handshapes is the result of influence from Nicaraguan Sign Language (NSL). We think this possibility unlikely because none of the homesigners had regular contact with individuals who knew and used NSL.

Exactly how many people constitute a community of users is an empirical question; e.g., could more than one homesigner in a family (using the system as a primary communication medium) constitute a “community”?

See Hinton, Nichols and Ohala (1994) for an excellent overview of the topic of sound symbolism.

Contributor Information

Diane Brentari, Email: dbrentari@uchicago.edu, Department of Linguistics, University of Chicago, 1010 East 59th Street, Chicago, IL 60637-1512, USA.

Marie Coppola, Departments of Psychology and Linguistics, University of Connecticut, Storrs, CT, USA.

Laura Mazzoni, Linguistics Department, University of Pisa, Pisa, Italy.

Susan Goldin-Meadow, Departments of Psychology and Comparative Human Development, University of Chicago, Chicago, IL, USA.

References

- Aronoff Mark, Sandler Wendy. Al-Sayyid Bedouin Sign Language: An Autochthonous Sign Language of the Negev Desert. Paper presented at the American Association for the Advancement of Science; February 15, 2009; Chicago, IL.

- Aronoff Mark, Meir Irit, Sandler Wendy. The Paradox of Sign Language Morphology. Language. 2005;81(2):301–344. doi: 10.1353/lan.2005.0043.

- Aronoff Mark, Meir Irit, Padden Carol, Sandler Wendy. Classifier Constructions and Morphology in Two Sign Languages. In: Emmorey K, editor. Perspectives On Classifier Constructions In Sign Languages. Lawrence Erlbaum Associates; Mahwah, NJ: 2003. pp. 53–86.

- Benedicto Elena, Brentari Diane. Where did all the arguments go?: Argument-changing Properties of Classifiers in ASL. Natural Language and Linguistic Theory. 2004;22(4):1–68.

- Boyes Braem Penny. Ph D dissertation. University of California; Berkeley, California: 1981. Distinctive Features of the Handshapes of American Sign Language.

- Branson Jan, Miller Don, Marsaja I Gede. Everyone Here Speaks Sign Language, Too: A Deaf Village in Bali, Indonesia. In: Lucas C, editor. Multicultural Aspects of Sociolinguistics in Deaf Communities. Gallaudet University Press; Washington, DC: 1996. pp. 39–57.

- Brentari Diane. Sign Language Phonology. In: Goldsmith J, Riggle J, Yu A, editors. Handbook of Phonological Theory. Blackwells; New York/Oxford: in press a.

- Brentari Diane. Handshape in Sign Language Phonology. In: van Oostendorp M, Ewen C, Hume E, Rice K, editors. Companion to Phonology. Wiley-Blackwells; New York/Oxford: in press b.

- Brentari Diane. Modality Differences in Sign Language Phonology and Morphophonemics. In: Meier R, Quinto-Pozos D, Cormier K, editors. Modality in Language and Linguistic Theory. Cambridge University Press; Cambridge, UK: 2002. pp. 35–64.

- Brentari Diane. A Prosodic Model of Sign Language Phonology. MIT Press; Cambridge, MA: 1998.

- Brentari Diane. Sign Language Phonology: ASL. In: Goldsmith J, editor. Handbook of Phonological Theory. Basil Blackwell; Oxford, UK: 1995. pp. 615–39.