Abstract

This paper presents a real-time implementation of an intent recognition system tested on one transfemoral (TF) amputee. Surface electromyographic (EMG) signals recorded from residual thigh muscles and the ground reaction forces/moments collected from the prosthetic pylon were fused to identify three locomotion modes (level-ground walking, stair ascent, and stair descent) and two tasks (sitting and standing). The designed system, based on neuromuscular-mechanical fusion, accurately identified the task being performed and predicted the intended task transitions of the patient with a TF amputation in real time. The overall recognition accuracy in static states (i.e., the states in which the subject continuously performed the same task) was 98.36%. All task transitions were correctly recognized 80–323 ms before the defined critical timing for safely switching the prosthesis control mode. These promising results indicate the potential of the designed intent recognition system for neural control of computerized, powered prosthetic legs.

I. INTRODUCTION

Lower limb amputation has a profound impact on the basic activities of a leg amputee's daily life. Recent advancements in microcomputer-controlled, powered artificial legs have increased the number of functions that a prosthetic leg can perform [1–3]. The control of a powered artificial leg is mode-based [1, 3–4] because the dynamics of the prosthesis depend on the user's locomotion intent, such as level-ground walking and stair ascent/descent. Without knowing the user's intent, the prosthetic leg cannot select the appropriate control mode or transition smoothly from one activity to another. Currently, users switch the control mode of an artificial leg manually, either by performing extra body motions or by using a remote key fob [5]; both methods are cumbersome. Accurate recognition of the leg amputee's locomotion intent is therefore required to achieve smooth and seamless control of prosthetic legs.

A recent study reported an intent recognition approach based on mechanical measurements from a powered prosthetic leg [6]. Task transitions among level walking, sitting, and standing were investigated and tested on one patient with a transfemoral (TF) amputation. The study reported 100% accuracy in recognizing the mode transitions and only 6 misclassifications during a 570 s testing period. However, a system delay of over 500 ms was reported. In addition, no locomotion modes other than level walking were tested. Mechanical information alone may not allow prompt recognition of transitions between different locomotion modes because this type of information may not be directly associated with the user's intent. Alternatively, utilizing neural control signals could enable truly intuitive control of artificial limbs.

Surface electromyographic (EMG) signals are one of the major neural control sources for powered prostheses [7–10]. A phase-dependent EMG pattern recognition strategy was developed in our previous study [11]. The method was tested on eight able-bodied subjects and two subjects with TF amputations. About 90% accuracy was obtained when recognizing seven locomotion modes. The accuracy of user intent recognition was further improved by fusing EMG signals measured from the residual thigh muscles with the ground reaction forces/moments collected from the prosthetic pylon, an approach called neuromuscular-mechanical fusion [12]. The algorithm was tested in real time to recognize three locomotion modes (level walking, stair ascent, and stair descent) on one able-bodied subject, with a recognition accuracy of 99.73%.

Although the previous real-time testing on the able-bodied subject demonstrated promising results, it remains unclear whether the designed intent recognition system can be used for neural control of artificial legs. Because of the muscle loss in patients with leg amputations, there might not be enough EMG recording sites available for neuromuscular information extraction, which may make the accuracy of user intent recognition inadequate for robust prosthetic control. Therefore, in order to evaluate the potential of our designed intent recognition system for prosthetic legs, it is essential to test the system on leg amputees in real time. In the present study, the designed system was tested and evaluated on one TF amputee subject. In addition to the previously tested tasks, two further tasks, sitting and standing, were included in this study. The results of this study will aid the further development of neural-controlled artificial legs.

II. METHODS

A. Architecture of Intent Recognition System

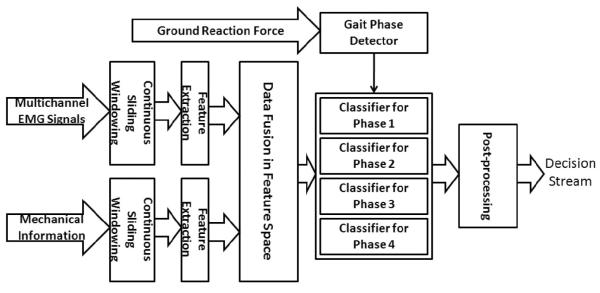

The architecture of the intent recognition system based on neuromuscular-mechanical fusion is shown in Fig. 1. The multichannel EMG signals and the mechanical measurements from a 6-degree-of-freedom (DOF) load cell are simultaneously sent into the system and then segmented into continuous, overlapped analysis windows. In each analysis window, EMG features are extracted from each channel, and mechanical features are computed from each individual degree of freedom. The EMG and mechanical features are then concatenated into one feature vector. The fused feature vector is sent into a phase-dependent classifier, which consists of multiple sub-classifiers, each of which is built from the data in one defined gait phase. The corresponding sub-classifier is switched on based on the output of the designed gait phase detector. A post-processing algorithm is applied to the decision stream to produce a continuous stream of smoothed decisions.

Fig. 1.

Architecture of locomotion intent recognition system based on neuromuscular-mechanical fusion.

B. Signal Pre-processing and Feature Extraction

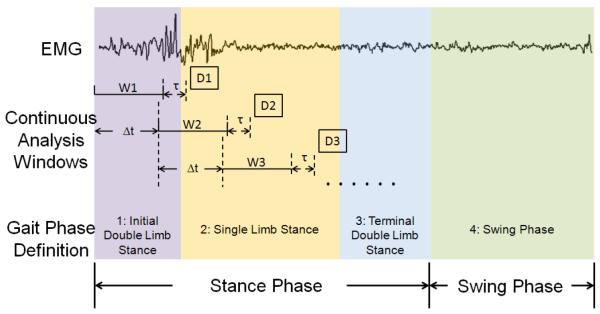

The raw EMG signals are band-pass filtered between 25 and 450 Hz by an eighth-order Butterworth filter. The mechanical forces/moments are recorded from a 6-DOF load cell mounted on the prosthetic pylon and low-pass filtered with a 50 Hz cutoff frequency. Then, the signal streams are segmented by sliding analysis windows as shown in Fig. 2. In this study, the length of the analysis window is 150 ms and the window increment is 50 ms.

Fig. 2.

Continuous windowing scheme for real-time pattern recognition and definition of gait phases. W1, W2 and W3 are continuous analysis windows. For each analysis window, a classification decision (D1, D2 and D3) is made Δt (the window increment) seconds later. τ is the processing time required by the classifier, where τ is no larger than Δt.
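The overlapped windowing described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `sliding_windows` and its parameters are hypothetical, the defaults use the 1000 Hz sampling rate reported in the experimental setup, and the sketch works on a stored signal length rather than the live stream that the real-time system processes.

```python
def sliding_windows(n_samples, fs_hz=1000, window_ms=150, increment_ms=50):
    """Return (start, end) sample indices of overlapped analysis windows.

    Hypothetical helper: the defaults mirror the 150 ms window length and
    50 ms increment stated in the text, at an assumed 1000 Hz sampling rate.
    """
    win = window_ms * fs_hz // 1000   # samples per analysis window
    inc = increment_ms * fs_hz // 1000  # samples between window starts
    return [(start, start + win)
            for start in range(0, n_samples - win + 1, inc)]


# One second of data sampled at 1000 Hz.
windows = sliding_windows(1000)
print(windows[:3])   # [(0, 150), (50, 200), (100, 250)]
print(len(windows))  # 18
```

With these settings, consecutive windows share 100 ms of data, and one decision becomes available for every 50 ms increment.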

Four time-domain (TD) features were extracted from the EMG signals: (1) the mean absolute value, (2) number of zero crossings, (3) number of slope sign changes, and (4) waveform length as described in [9]. For mechanical signals, the mean, minimum, and maximum values in each analysis window were extracted as the features.
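As a rough illustration of the feature set, the sketch below computes the four TD features for one EMG channel and the three mechanical features for one load-cell degree of freedom, then concatenates them into a fused vector. The function names and the `noise_threshold` parameter are assumptions made for this example; the exact thresholds and implementation details used in the study are those of [9] and are not reproduced here.

```python
def td_features(x, noise_threshold=0.0):
    """Four time-domain features of one EMG channel in one analysis window.

    noise_threshold is a hypothetical parameter that suppresses counts
    caused by low-amplitude noise; 0.0 disables it.
    """
    pairs = list(zip(x, x[1:]))
    triples = list(zip(x, x[1:], x[2:]))
    mav = sum(abs(v) for v in x) / len(x)            # mean absolute value
    zc = sum(1 for a, b in pairs                     # zero crossings
             if a * b < 0 and abs(a - b) >= noise_threshold)
    ssc = sum(1 for a, b, c in triples               # slope sign changes
              if (b - a) * (b - c) > 0
              and max(abs(b - a), abs(b - c)) >= noise_threshold)
    wl = sum(abs(b - a) for a, b in pairs)           # waveform length
    return [mav, zc, ssc, wl]


def mech_features(x):
    """Mean, minimum, and maximum of one load-cell signal in one window."""
    return [sum(x) / len(x), min(x), max(x)]


def fuse_features(emg_channels, mech_channels):
    """Concatenate EMG and mechanical features into one feature vector."""
    vector = []
    for channel in emg_channels:
        vector.extend(td_features(channel))
    for channel in mech_channels:
        vector.extend(mech_features(channel))
    return vector


# Toy window: 10 EMG channels and 6 load-cell signals.
emg = [[1, -1, 2, -2, 1]] * 10
mech = [[3, 1, 2]] * 6
fused = fuse_features(emg, mech)
print(td_features(emg[0]))  # [1.4, 4, 3, 12]
print(len(fused))           # 58
```

If all ten EMG channels and all six load-cell signals are used, the fused vector has 10 × 4 + 6 × 3 = 58 elements per analysis window.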

C. Phase-dependent Classification Strategy

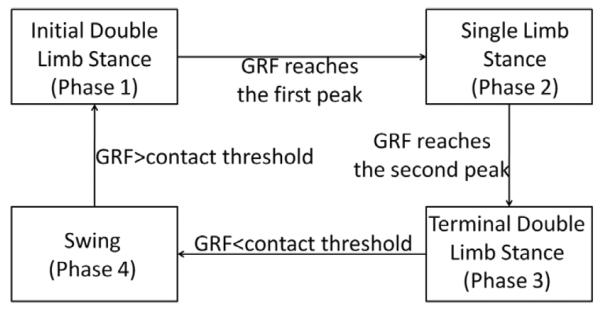

The phase-dependent classification strategy was applied in order to deal with the non-stationary EMG signals. Unlike the gait phase cutting strategy employed in our previous study [11], in which discrete gait phases with a constant 200 ms duration were used, continuous gait phases were used in this study. Four clinical gait phases are defined and applied (shown in Fig. 2). The real-time gait phase detection is implemented by monitoring the vertical ground reaction force (GRF) measured from the 6-DOF load cell mounted on the prosthetic leg. The detection criteria are shown in Fig. 3. The applied contact threshold is 2% of the subject's weight. If an analysis window spans two defined gait phases (e.g., the window W2 in Fig. 2), the activated classifier is the one associated with the gait phase that contains more than half of the window length (e.g., the classifier associated with phase 2 should be used for the data in W2).

Fig. 3.

The real-time gait phase detection criteria.
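The classifier selection rule for windows that span two gait phases can be sketched as follows. This is a minimal example, not the detector used in the study: the full detection criteria are given in Fig. 3 and are not reproduced here, so the sketch assumes a per-sample gait phase label is already available. The function names are hypothetical, and the behaviour on an exact tie (earliest phase wins) is an assumption.

```python
from collections import Counter


def foot_contact(vertical_grf_n, body_weight_n, threshold_ratio=0.02):
    """True when the vertical GRF exceeds the contact threshold.

    threshold_ratio=0.02 reflects the 2% body-weight threshold in the text.
    """
    return vertical_grf_n > threshold_ratio * body_weight_n


def phase_for_window(phase_labels):
    """Pick the gait phase that covers most of one analysis window.

    phase_labels holds one phase label (1-4) per sample in the window.
    On an exact tie the phase seen first is returned (an assumption).
    """
    phase, _count = Counter(phase_labels).most_common(1)[0]
    return phase


# A 150-sample window with 60 samples in phase 1 and 90 in phase 2.
w2_labels = [1] * 60 + [2] * 90
print(phase_for_window(w2_labels))   # 2
print(foot_contact(20.0, 700.0))     # True (threshold is 14 N)
```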

D. Pattern Recognition Algorithm

A Support Vector Machine (SVM) with a nonlinear kernel was used in the design of the classifier to identify the locomotion intent of the subject. The nonlinear-kernel SVM was chosen for two reasons: (1) a nonlinear classifier might classify the data accurately when linear boundaries among classes are difficult to define, and (2) SVM is more computationally efficient than other nonlinear classifiers such as artificial neural networks (ANN), which makes real-time implementation feasible. In this study, a multiclass SVM with the "one-against-one" (OAO) scheme [13] and C-Support Vector Classification (C-SVC) [14] was used to identify the locomotion intent. The applied kernel function was the radial basis function (RBF). More details about the SVM algorithm can be found in [13–14]. A 5-point majority vote scheme is applied to eliminate erroneous decisions from the classifier.
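The majority vote post-processing can be illustrated with a short sketch. It assumes a causal vote over the current decision and the previous four decisions; the paper does not state whether the 5-point window is causal or centered, so this choice, the function name `majority_vote`, and the tie handling are assumptions.

```python
from collections import Counter, deque


def majority_vote(decisions, n_points=5):
    """Smooth a decision stream with a causal n-point majority vote.

    Each output is the most frequent label among the current decision and
    up to n_points - 1 previous ones. Ties go to the label seen first in
    the vote buffer (an assumption of this sketch).
    """
    recent = deque(maxlen=n_points)
    smoothed = []
    for decision in decisions:
        recent.append(decision)
        smoothed.append(Counter(recent).most_common(1)[0][0])
    return smoothed


# An isolated "SA" error during walking, followed by a real W -> SA transition.
raw = ["W", "W", "W", "SA", "W", "W", "SA", "SA", "SA", "SA"]
print(majority_vote(raw))
# ['W', 'W', 'W', 'W', 'W', 'W', 'W', 'SA', 'SA', 'SA']
```

In this example the isolated error is removed, while the real transition appears in the smoothed stream one decision later than in the raw stream, which shows the trade-off between smoothing and response time.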

E. Participant and Experimental Setup

This study was conducted with Institutional Review Board (IRB) approval and informed consent of the subject. One female patient with unilateral transfemoral (TF) amputation was recruited.

Ten channels of surface EMG signals from the residual thigh muscles were monitored. A 16-channel EMG system (Motion Lab System, US) was used to collect EMG signals from the subject. The EMG electrodes were embedded in customized gel liners (Ohio Willow Wood, US) for both comfort and reliable electrode-skin contact and were placed at locations where strong EMG signals could be recorded. A ground electrode was placed on the bony area near the anterior iliac spine. The EMG system band-pass filtered the signals between 20 Hz and 450 Hz with a pass-band gain of 1000, and the signals were then sampled at 1000 Hz. Mechanical ground reaction forces and moments were measured by a six-degree-of-freedom (DOF) load cell (Bertec Corporation, OH, US) mounted on the prosthetic pylon. The six measurements from the load cell were also sampled at 1000 Hz. All data recordings were synchronized and streamed into a PC through a data collection system. The PC was a Dell Precision 690 with a 1.6 GHz Xeon CPU and 2 GB of RAM. The real-time algorithm was implemented in MATLAB, and the real-time locomotion predictions were displayed on a flat plasma TV. In addition, the states of sitting and standing were indicated by a pressure measuring mat attached to the gluteal region of the subject. The experiment was videotaped to provide the ground truth for the evaluation of the recognition system.

F. Experimental Protocol

During the experiment, the TF subject wore a hydraulic passive knee. Experimental sockets were duplicated from the subject's ischial containment socket with suction suspension. The subject received instructions and practiced the tasks several times prior to the experiment.

Three locomotion modes, level-ground walking (W), stair ascent (SA), and stair descent (SD), and two tasks, sitting (S) and standing (ST), were investigated in this study. The mode transitions included W→SA, SA→W, W→SD, SD→W, S→ST, ST→W, W→ST, and ST→S. The experiment consisted of two sessions: a training session and a testing session. The training session was conducted before the testing session to collect the training data for building the classifier. For each locomotion task (class), the training data were collected in an individual trial. At least three training trials for each task were required in order to collect enough training data. During the real-time testing session, the subject was asked to transition continuously among the five tasks. Each trial lasted about 1 minute. In total, 15 real-time testing trials were conducted. For the subject's safety, she was allowed to use a hand railing. Rest periods were allowed between trials to avoid fatigue.

G. Real-time Performance Evaluation

The real-time performance of the intent recognition system was evaluated using the following parameters.

1) Classification Accuracy (CA) in the Static States

The static state is defined as the state in which the subject continuously walks on the same type of terrain (level ground or stairs) or performs the same task (sitting or standing). The classification accuracy in the static state is quantified by

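$$\mathrm{CA} = \frac{\text{number of correctly classified observations}}{\text{total number of observations}} \times 100\% \qquad (1)$$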

2) The Number of Missed Mode Transitions

For a transition between different locomotion modes, the transition period starts at the initial prosthetic heel contact (phase 1 in Fig. 2) before the terrain switch and ends at the end of the single stance phase (phase 2 in Fig. 2) after the terrain switch. For a transition between tasks such as sitting and standing, the transition period begins when the subject starts to switch the task and ends when the subject is completely seated or standing. A transition is missed if no correct transition decision is made within the defined transition period.

3) Prediction Time of the Transitions

The prediction time of a transition is defined as the elapsed time from the moment when the classifier's decision changes to the new locomotion mode to the critical timing of the investigated task transition. For the transitions between level-ground walking and stair negotiation (W→SA, SA→W, W→SD, and SD→W), the critical timing is defined as the beginning of the swing phase in the transition period; for the transition ST→W, the critical timing is the beginning of the swing phase; for the transition W→ST, it is the beginning of the initial double limb stance phase; for the transitions S→ST and ST→S, it is the moment at which the pressure under the gluteal region of the subject starts to drop to zero or starts to rise above zero, respectively.
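The two transition metrics defined above can be sketched together. This is a hedged example: the function name `prediction_time_ms` and its arguments are hypothetical, the decision timestamps and transition boundaries are made-up values for illustration, and the sketch assumes the ground-truth transition period and critical timing have already been extracted (in the study they come from the load cell, pressure mat, and video).

```python
def prediction_time_ms(decision_times_ms, decisions, target_mode,
                       period_start_ms, period_end_ms, critical_time_ms):
    """Return the prediction time of one transition, or None if missed.

    The prediction time is the critical timing minus the time of the first
    decision of target_mode inside the transition period. A positive value
    means the transition was recognized before the critical timing.
    """
    for t, decision in zip(decision_times_ms, decisions):
        if period_start_ms <= t <= period_end_ms and decision == target_mode:
            return critical_time_ms - t
    return None  # no correct decision in the period: a missed transition


# Illustrative W -> SA transition with one decision every 50 ms.
times = list(range(0, 500, 50))
stream = ["W"] * 4 + ["SA"] * 6
hit = prediction_time_ms(times, stream, "SA", 100, 400, 350)
miss = prediction_time_ms(times, stream, "SD", 100, 400, 350)
print(hit)   # 150
print(miss)  # None
```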

III. RESULTS

The locomotion intent recognition system was tested on one TF amputee subject in real time. The classification accuracy in static states was calculated across the 15 real-time testing trials. The overall accuracy for recognizing level-ground walking, stair ascent, stair descent, sitting, and standing was 98.36%. In all 15 trials, every mode transition was correctly identified within the defined transition period. The prediction times for the eight types of transitions are shown in Table I. These results show that the user's intent for mode transitions was predicted about 80–323 ms before the critical timing for switching the control mode of the prosthesis.

Table I.

Prediction Time of Mode Transitions before Critical Timing

| Transition | W→SA | SA→W | W→SD | SD→W | W→ST | ST→W | ST→S | S→ST |
|---|---|---|---|---|---|---|---|---|
| Prediction Time (ms) | 135.6±23.2 | 143.3±36.8 | 123.2±26.4 | 92.9±43.7 | 80.4±48.1 | 152.8±36.2 | 323.2±50.3 | 90.0±28.9 |

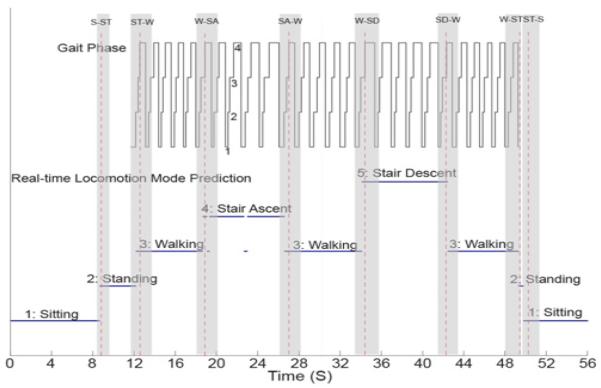

The real-time performance of the intent recognition system in one representative trial is shown in Fig. 4. During the 56-second real-time test, a total of three decision errors in static states were observed, all while the subject performed stair ascent. In all three cases, stair ascent was misclassified as level-ground walking. All the transitions were correctly recognized before the defined critical timing within the transition period.

Fig. 4.

Real-time system performance in one representative testing trial. The white areas denote the static-state periods (level walking, stair ascent, stair descent, sitting, and standing); the gray areas represent the transition periods. The red dashed line indicates the critical timing for each transition.

IV. DISCUSSION

In this study, an intent recognition system based on neuromuscular-mechanical fusion was tested and evaluated in real time to recognize the locomotion intent of a patient with a transfemoral (TF) amputation. Although the tested amputee had a residual limb length of only 84%, the designed system still produced a 98.36% recognition accuracy in static states and a 92–143 ms transition prediction time (for W→SA, SA→W, W→SD, and SD→W). These values are similar to the static-state accuracy (99.73%) and transition prediction time (112–197 ms) obtained in our previous real-time testing on one able-bodied subject. This implies that the muscles in the amputee's residual limb still present distinct activation patterns among the studied locomotion modes. These preliminary results suggest the potential of the designed system for neural control of artificial legs.

A phase-dependent classification strategy was applied in the designed system. Unlike the previous study [11], in which discrete gait phases with a constant 200 ms duration were used, continuous gait phases were used in this study. The continuous gait phase strategy makes the real-time implementation of the designed system feasible and practical. In addition, it is noteworthy that the gait phase is determined from the vertical ground reaction force measured by a load cell mounted on the prosthetic pylon. This design of the gait phase detector enables the system to be self-contained, which makes it possible to integrate the intent recognition system into prosthetic legs.

Our current and future efforts include (1) investigating the information carried by each sensor, (2) quantifying the performance of the designed intent recognition system on more TF amputees with different residual limb lengths, and (3) studying the effects of intent recognition errors on prosthetic leg control.

V. CONCLUSION

In this study, an intent recognition system based on neuromuscular-mechanical fusion was implemented in real time on one patient with a transfemoral amputation. The real-time results showed that the designed system can recognize the locomotion mode being performed with high accuracy and predict the mode transitions of the patient with a TF amputation. The system achieved 98.36% accuracy in identifying the locomotion modes in static states and showed a fast response time (80–323 ms) in predicting the task transitions. These promising preliminary results demonstrate the potential of the designed intent recognition system to aid the future design of neural-controlled artificial legs and thereby improve the quality of life of leg amputees.

ACKNOWLEDGMENT

The authors thank Andrew Burke, Chi Zhang, Yuhong Liu, and Ming Liu at the University of Rhode Island, and Becky Blaine at the Nunnery Orthotic and Prosthetic Technology, LLC, for their suggestions and assistance in this study.

This work was partly supported by DoD/TATRC #W81XWH-09-2-0020, NIH #RHD064968A, and NSF #0931820.

REFERENCES

- [1] Au S, Berniker M, Herr H. Powered ankle-foot prosthesis to assist level-ground and stair-descent gaits. Neural Netw. 2008;21(4):654–66. doi: 10.1016/j.neunet.2008.03.006.

- [2] Martinez-Villalpando EC, Herr H. Agonist-antagonist active knee prosthesis: a preliminary study in level-ground walking. J Rehabil Res Dev. 2009;46(3):361–73.

- [3] Sup F, Bohara A, Goldfarb M. Design and Control of a Powered Transfemoral Prosthesis. Int J Rob Res. 2008;27(2):263–273. doi: 10.1177/0278364907084588.

- [4] Popovic D, Oğuztöreli MN, Stein RB. Optimal control for the active above-knee prosthesis. Ann Biomed Eng. 1991;19:131–149. doi: 10.1007/BF02368465.

- [5] Bedard S, Roy P. Actuated leg prosthesis for above-knee amputees. Vol. 7. U. S.: 2003.

- [6] Varol HA, Sup F, Goldfarb M. Multiclass real-time intent recognition of a powered lower limb prosthesis. IEEE Trans Biomed Eng. 2010;57(3):542–51. doi: 10.1109/TBME.2009.2034734.

- [7] Williams TW 3rd. Practical methods for controlling powered upper-extremity prostheses. Assist Technol. 1990;2(1):3–18. doi: 10.1080/10400435.1990.10132142.

- [8] Parker PA, Scott RN. Myoelectric control of prostheses. Crit Rev Biomed Eng. 1986;13(4):283–310.

- [9] Hudgins B, Parker P, Scott RN. A new strategy for multifunction myoelectric control. IEEE Trans Biomed Eng. 1993;40(1):82–94. doi: 10.1109/10.204774.

- [10] Englehart K, Hudgins B. A robust, real-time control scheme for multifunction myoelectric control. IEEE Trans Biomed Eng. 2003;50(7):848–54. doi: 10.1109/TBME.2003.813539.

- [11] Huang H, Kuiken TA, Lipschutz RD. A strategy for identifying locomotion modes using surface electromyography. IEEE Trans Biomed Eng. 2009;56(1):65–73. doi: 10.1109/TBME.2008.2003293.

- [12] Zhang F, DiSanto W, Ren J, Dou Z, Yang Q, Huang H. A Novel CPS System for Evaluating a Neural-Machine Interface for Artificial Legs. Proceedings of the 2nd ACM/IEEE International Conference on Cyber-Physical Systems; 2011. To be published.

- [13] Oskoei MA, Hu H. Support vector machine-based classification scheme for myoelectric control applied to upper limb. IEEE Trans Biomed Eng. 2008;55(8):1956–65. doi: 10.1109/TBME.2008.919734.

- [14] Crawford B, et al. Real-Time Classification of Electromyographic Signals for Robotic Control. Proceedings of the 20th National Conference on Artificial Intelligence; 2005.

- [15] Hargrove LJ, Englehart K, Hudgins B. A comparison of surface and intramuscular myoelectric signal classification. IEEE Trans Biomed Eng. 2007;54(5):847–53. doi: 10.1109/TBME.2006.889192.