Abstract

Estimation of high-dimensional covariance matrices is known to be a difficult problem, has many applications, and is of current interest to the larger statistics community. In many applications including so-called the “large p small n” setting, the estimate of the covariance matrix is required to be not only invertible, but also well-conditioned. Although many regularization schemes attempt to do this, none of them address the ill-conditioning problem directly. In this paper, we propose a maximum likelihood approach, with the direct goal of obtaining a well-conditioned estimator. No sparsity assumption on either the covariance matrix or its inverse are are imposed, thus making our procedure more widely applicable. We demonstrate that the proposed regularization scheme is computationally efficient, yields a type of Steinian shrinkage estimator, and has a natural Bayesian interpretation. We investigate the theoretical properties of the regularized covariance estimator comprehensively, including its regularization path, and proceed to develop an approach that adaptively determines the level of regularization that is required. Finally, we demonstrate the performance of the regularized estimator in decision-theoretic comparisons and in the financial portfolio optimization setting. The proposed approach has desirable properties, and can serve as a competitive procedure, especially when the sample size is small and when a well-conditioned estimator is required.

Keywords: covariance estimation, regularization, convex optimization, condition number, eigenvalue, shrinkage, cross-validation, risk comparisons, portfolio optimization

1 Introduction

We consider the problem of regularized covariance estimation in the Gaussian setting. It is well known that, given n independent samples x1, …, xn ∈ ℝp from a zero-mean p-variate Gaussian distribution, the sample covariance matrix

maximizes the log-likelihood as given by

| (1) |

where det A and tr(A) denote the determinant and trace of a square matrix A respectively. In recent years, the availability of high-throughput data from various applications has pushed this problem to an extreme where in many situations, the number of samples, n, is often much smaller than the dimension of the estimand, p. When n < p the sample covariance matrix S is singular, not positive definite, and hence cannot be inverted to compute the precision matrix (the inverse of the covariance matrix), which is also needed in many applications. Even when n > p, the eigenstructure tends to be systematically distorted unless p/n is extremely small, resulting in numerically ill-conditioned estimators for Σ; see Dempster (1972) and Stein (1975). For example, in mean-variance portfolio optimization (Markowitz, 1952), an ill-conditioned covariance matrix may amplify estimation error because the optimal portfolio involves matrix inversion (Ledoit and Wolf, 2003; Michaud, 1989). A common approach to mitigate the problem of numerical stability is regularization.

In this paper, we propose regularizing the sample covariance matrix by explicitly imposing a constraint on the condition number.1 Instead of using the standard estimator S, we propose to solve the following constrained maximum likelihood (ML) estimation problem

| (2) |

where cond(M) stands for the condition number, a measure of numerical stability, of a matrix M (see Section 1.1 for details). The matrix M is invertible if cond(M) is finite, ill-conditioned if cond(M) is finite but high (say, greater than 103 as a rule of thumb), and well-conditioned if cond(M) is moderate. By bounding the condition number of the estimate by a regularization parameter κmax, we directly address the problem of invertibility or ill-conditioning. This direct control is appealing because the true covariance matrix is in most situations unlikely to be ill-conditioned whereas its sample counterpart is often ill-conditioned. It turns out that the resulting regularized matrix falls into a broad family of Steinian-type shrinkage estimators that shrink the eigenvalues of the sample covariance matrix towards a given structure (James and Stein, 1961; Stein, 1956). Moreover, the regularization parameter κmax is adaptively selected from the data using cross validation.

Numerous authors have explored alternative estimators for Σ (or Σ−1) that perform better than the sample covariance matrix S from a decision-theoretic point of view. Many of these estimators give substantial risk reductions compared to S in small sample sizes, and often involve modifying the spectrum of the sample covariance matrix. A simple example is the family of linear shrinkage estimators which take a convex combination of the sample covariance matrix and a suitably chosen target or regularization matrix. Notable in the area is the seminal work of Ledoit and Wolf (2004) who study a linear shrinkage estimator toward a specified target covariance matrix, and choose the optimal shrinkage to minimize the Frobenius risk. Bayesian approaches often directly yield estimators which shrink toward a structure associated with a pre-specified prior. Standard Bayesian covariance estimators yield a posterior mean Σ that is a linear combination of S and the prior mean. It is easy to show that the eigenvalues of such estimators are also linear shrinkage estimators of Σ; see, e.g., Haff (1991). Other nonlinear Steinian-type estimators have also been proposed in the literature. James and Stein (1961) study a constant risk minimax estimator and its modification in a class of orthogonally invariant estimators. Dey and Srinivasan (1985) provide another minimax estimator which dominates the James-Stein estimator. Yang and Berger (1994) and Daniels and Kass (2001) consider a reference prior and hierarchical priors, that respectively yield posterior shrinkage.

Likelihood-based approaches using multivariate Gaussian models have provided different perspectives on the regularization problem. Warton (2008) derives a novel family of linear shrinkage estimators from a penalized maximum likelihood framework. This formulation enables cross-validation of the regularization parameter, which we discuss in Section 3 for the proposed method. Related work in the area include Sheena and Gupta (2003), Pourahmadi et al. (2007), and Ledoit and Wolf (2012). An extensive literature review is not undertaken here, but we note that the approaches mentioned above (and the one proposed in this paper) fall in the class of covariance estimation and related problems which do not assume or impose sparsity, on either the covariance matrix, or its inverse (for such approaches either in the frequentist, Bayesian, or testing frameworks, the reader is referred to Banerjee et al. (2008); Friedman et al. (2008); Hero and Rajaratnam (2011, 2012); Khare and Rajaratnam (2011); Letac and Massam (2007); Peng et al. (2009); Rajaratnam et al. (2008)).

1.1 Regularization by shrinking sample eigenvalues

We briefly review Steinian-type eigenvalue shrinkage estimators in this subsection. Dempster (1972) and Stein (1975) noted that the eigenstructure of the sample covariance matrix S tends to be systematically distorted unless p/n is extremely small. They observed that the larger eigenvalues of S are overestimated whereas the smaller ones are underestimated. This observation led to estimators which directly modify the spectrum of the sample covariance matrix and are designed to “shrink” the eigenvalues together. Let li, i = 1, …, p, denote the eigenvalues of the sample covariance matrix (sample eigenvalues) in nonincreasing order (l1 ≥ … ≥ lp ≥ 0). The spectral decomposition of the sample covariance matrix is given by

| (3) |

where diag(l1, …, lp) is the diagonal matrix with diagonal entries li and Q ∈ ℝp×p is the orthogonal matrix whose i-th column is the eigenvector that corresponds to the eigenvalue li. As discussed above, a large number of covariance estimators regularizes S by modifying its eigenvalues with the explicit goal of better estimating the eigenspectrum. In this light Stein (1975) proposed the class of orthogonally invariant estimators of the following form:

| (4) |

Typically, these estimators shrink the sample eigenvalues so that the modified eigenspectrum is less spread than that of the sample covariance matrix. In many estimators, the shrunk eigenvalues are required to maintain the original order as those of S: λ̂1 ≥ ··· ≥ λ̂p.

One well-known example of Steinian-type shrinkage estimators is the linear shrinkage estimator as given by

| (5) |

where the target matrix F = cI for some c > 0 (Ledoit and Wolf, 2004; Warton, 2008). For the linear estimator the relationship between the sample eigenvalues li and the modified eigenvalues λ̂i is affine:

Another example, Stein’s estimator (Stein, 1975, 1986), denoted by Σ̂Stein, is given by λ̂i = li/di, i = 1, …, p, with di = (n − p + 1 + 2li Σj≠i(li − lj)−1)/n. The original order in the estimator is preserved by applying isotonic regression (Lin and Perlman, 1985).

1.2 Regularization by imposing a condition number constraint

Now we proceed to introduce the condition number-regularized covariance estimator proposed in this paper. Recall that the condition number of a positive definite matrix Σ is defined as

where λmax(Σ) and λmin(Σ) are the maximum and the minimum eigenvalues of Σ, respectively. (Understand that cond(Σ) = ∞ if λmin(Σ) = 0.) The condition number-regularized covariance estimation problem (2) can therefore be formulated as

| (6) |

An implicit condition is that Σ be symmetric and positive definite2.

The estimation problem (6) can be reformulated as a convex optimization problem in the matrix variable Σ−1 (see Section 2). Standard methods such as interior-point methods can solve the convex problem when the number of variables (i.e., entries in the matrix) is modest, say, under 1000. Since the number of variables is about p(p + 1)/2, the limit is around p = 45. Such a naive approach is not adequate for moderate to high dimensional problems. One of the main contributions of this paper is a significant improvement on the solution method for (6) so that it scales well to much larger sizes. In particular, we show that (6) reduces to an unconstrained univariate optimization problem. Furthermore, the solution to (6), denoted by Σ̂cond, has a Steinian-type shrinkage of the form as in (4) with eigenvalues given by

| (7) |

for some τ★ > 0. Note that the modified eigenvalues are nonlinear functions of the sample eigenvalues, meet the order constraint of Section 1.1, and even when n < p, the nonlinear shrinkage estimator Σ̂cond is well-conditioned. The quantity τ★ is determined adaptively from the data and the choice of the regularization parameter κmax. This solution method was first considered by two of the authors of this paper in a previous conference proceeding (Won and Kim, 2006). In this paper, we give a formal proof of the assertion that the matrix optimization problem (6) reduces to an equivalent unconstrained univariate minimization problem. We further elaborate on the proposed method by showing rigorously that τ★ can be found exactly and easily with computational effort of order O(p) (Section 2.1).

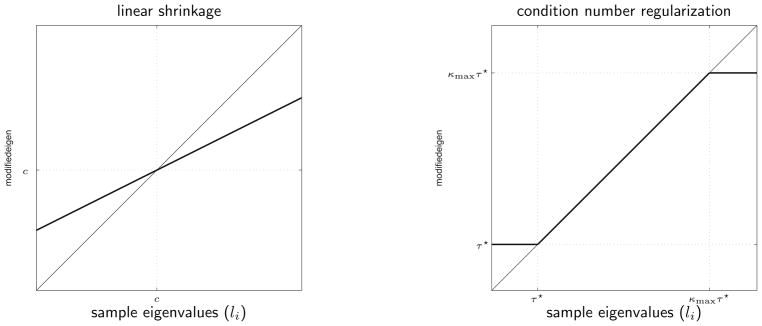

The nonlinear shrinkage in (7) has a simple interpretation: the eigenvalues of the estimator Σ̂cond are obtained by truncating the sample eigenvalues larger than κmaxτ★ to κmaxτ★ and those smaller than τ★ to τ★. Figure 1 illustrates the functional form of (7) in comparison with that of the linear shrinkage estimator. This novel shrinkage form is rather surprising, because the original motivation of the regularization in (6) is to numerically stabilize the covariance estimate. Note that this type of shrinkage better preserves the eccentricity of the sample estimate than the linear shrinkage which shrinks it toward a spherical covariance matrix.

Figure 1.

Comparison of eigenvalue shrinkage of the linear shrinkage estimator (left) and the condition number-constrained estimator (right).

Other major contributions of the paper include a detailed analysis of the regularization path of the shrinkage enabled by a geometric perspective of the condition number-regularized estimator (Section 2.2), and an adaptive selection procedure for choosing the regularization parameter κmax under the maximum predictive likelihood criterion (Section 3) and properties thereof.

We also undertake a Bayesian analysis of the condition number constrained estimator (Section 4). A detailed application of the methodology to real data, which demonstrates the usefulness of the method in a practical setting, is also provided (Section 6). In particular, Section 6 studies the use of the proposed estimator in the mean-variance portfolio optimization setting. Section 5 undertakes a simulation study in order to compare the risk of the condition number-regularized estimator to those of others. Risk comparisons in higher dimensional settings (as compared to those given in the preliminary conference paper) are provided in this Section. Asymptotic properties of the estimator are also investigated in Section 5.

2 Condition number-regularized covariance estimation

2.1 Derivation and characterization of solution

This section gives the derivation of the solution (7) of the condition number-regularized covariance estimation problem as given in (6) and shows how to compute τ★ given κmax. Note that it suffices to consider the case κmax < l1/lp = cond(S), since otherwise, the condition number constraint is already satisfied and the solution to (6) simply reduces to the sample covariance matrix S.

It is well known that the log-likelihood (1) of a multivariate Gaussian covariance matrix is a convex function of Ω = Σ−1. Note that Ω is the canonical parameter for the (Wishart) natural exponential family associated with the likelihood in (1). Since cond(Σ) = cond(Ω), it can be shown that the condition number constraint on Ω is equivalent to the existence of u > 0 such that uI ⪯ Ω ⪯ κmaxuI, where A ⪯ B denotes that B − A is positive semidefinite (Boyd and Vandenberghe, 2004, Chap. 7). Therefore the covariance estimation problem (6) is equivalent to

| (8) |

where the variables are a symmetric positive definite p×p matrix Ω and a scalar u > 0. The above problem in (8) is a convex optimization problem with p(p + 1)/2 + 1 variables, i.e., O(p2).

The following lemma shows that by exploiting structure of the problem it can be reduced to a univariate convex problem, i.e., the dimension of the system is only of O(1) as compared to O(p2).

Lemma 1

The optimization problem (8) is equivalent to the unconstrained univariate convex optimization problem

| (9) |

where

and

| (10) |

in the sense that the solution to (8) is a function of the solution u★ to (9), as follows.

with Q defined as in (3).

Proof

The proof is given in the Appendix.

Characterization of the solution to (9) is given by the following theorem.

Theorem 1

Given κmax ≤ cond(S), the optimization problem (9) has a unique solution given by

| (11) |

where α★ ∈ {1, …, p} is the largest index such that 1/lα★ < u★ and β★ ∈ {1, …, p} is the smallest index such that 1/lβ★ > κmaxu★. The quantities α★ and β★ are not determined a priori but can be found in O(p) operations on the sample eigenvalues l1 ≥ … ≥ lp. If κmax > cond(S), the maximizer u★ is not unique but Σ̂cond = S for all the maximizing values of u.

Proof

The proof is given in the Appendix.

Comparing (10) to (7), it is immediately seen that

| (12) |

Note that the lower cutoff level τ★ is an average of the (scaled and) truncated eigenvalues, in which the eigenvalues above the upper cutoff level κmaxτ★ are shrunk by a factor of 1/κmax.

We highlight the fact that a reformulation of the original minimization into a univariate optimization problem makes the proposed methodology very attractive in high dimensional settings. The method is only limited by the complexity of spectral decomposition of the sample covariance matrix (or the singular value decomposition of the data matrix). The approach proposed here is therefore much faster than using interior point methods. We also note that the condition number-regularized covariance estimator is orthogonally invariant: if Σ̂cond is the estimator of the true covariance matrix Σ, then UΣ̂condUT is the estimator of the true covariance matrix UΣUT, for U orthogonal.

2.2 A geometric perspective on the regularization path

In this section, we shall show that a simple relaxation of the optimization problem (8) provides an intuitive geometric perspective on the condition number-regularized estimator. Consider the function

| (13) |

defined as the minimum of the objective of (8) over a range uI ⪯Ω ⪯ vI, where 0 < u ≤ v. Note that the relaxation in (13) above differs from the original problem in the sense that the optimization is no longer with respect to the variable u. In particular, u and v are fixed in (13). By fixing u, the problem has now been significantly simplified. Paralleling Lemma 1, it is easily shown that

Recall that the truncation range of the sample eigenvalues is therefore given by (1/v, 1/u). Now for given u, let α ∈ {1, …, p} be the largest index such that lα > 1/u and β ∈ {1,…, p} be the smallest index such that lβ < 1/v, i.e., the set of indexes where truncation at either end of the spectrum first starts to become a binding constraint. With this convention it is easily shown that J(u, v) can now be expressed in simpler form:

| (14) |

| (15) |

where

| (16) |

Comparing (16) to (10), we observe that (Ω*)−1, the covariance estimate whose inverse achieves the minimum in (13), is obtained by truncating the eigenvalues of S greater than 1/u to 1/u, and less than 1/v to 1/v.

Furthermore, note that the function J(u, v) has the following properties:

J does not increase as u decreases and v increases simultaneously. This follows from noting that simultaneously decreasing u and increasing v expands the domain of the minimization in (13).

J(u, v) = J(1/l1, l/lp) for u ≤ 1/l1 and v ≥ 1/lp. Hence J(u, v) is constant on this part of the domain. For these values of u and v, (Ω★)−1 = S.

J(u, v) is almost everywhere differentiable in the interior of the domain {(u, v) : 0 < u ≤ v}, except for on the lines u = 1/l1,…, 1/lp and v = 1/l1,…, 1/lp. This follows from noting the the indexes α and β changes their values only on these lines. Hence the contribution to the three summands in (14) changes at these values.

We can now see the following obvious relation between the function J(u, v) and the original problem (8): the solution to (8) as given by u★ is the minimizer of J(u, v) on the line v = κmaxu, i.e., the original univariate optimization problem (9) is equivalent to minimizing J(u, κmaxu). We denote this minimizer by u★(κmax) and investigate how u★(κmax) behaves as κmax varies. The following proposition proves that u★(κmax) is monotone in κmax. This result sheds light on the regularization path, i.e., the solution path of u★ as κmax varies.

Proposition 1

u★(κmax) is nonincreasing in κmax and v★(κmax), ≜ κmaxu★(κmax) is nondecreasing, both almost surely.

Proof

The proof is given in the Appendix.

Remark

The above proposition has a very natural and intuitive interpretation: when the constraint on the condition number is relaxed to allow higher value of κmax, the gap between u★ and v★ widens so that the ratio of v★ to u★ remains at κmax. This implies that as κmax is increased, the lower truncation value u★ decreases and the higher truncation value v★ increases. Proposition 1 can be equivalently interpreted by noting that the optimal truncation range τ★(κmax), κmaxτ★(κmax) of the sample eigenvalues is nested.

In light of the solution to the condition number-regularized covariance estimation problems in (7), Proposition 1 also implies that once an eigenvalue li is truncated for κmax = ν0, then it remains truncated for all κmax < ν0. Hence the regularized eigenvalue estimates are not only continuous, but they are also monotonic in the sense that they approach either end of the truncation spectrum as the regularization parameter κmax is decreased to 1. This monotonicity of the condition number-regularized estimates gives a desirable property that is not always enjoyed by other regularized estimators, such as the lasso for instance (Personal communications: Jerome Friedman, Department of Statistics, Stanford University).

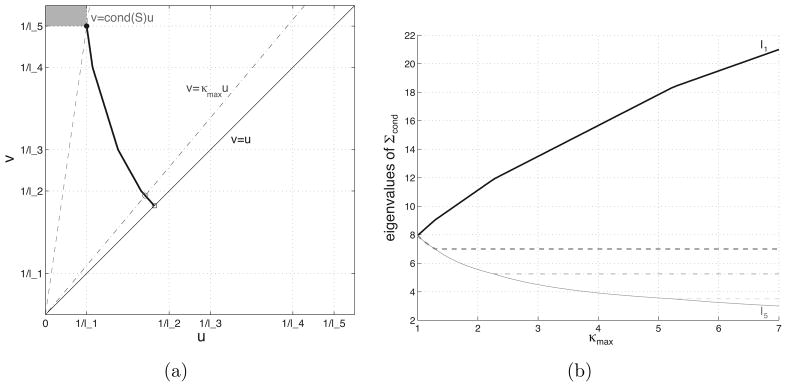

With the above theoretical knowledge on the regularization path of the sample eigenvalues, we now provide an illustration of the regularization path; see Figure 2. More specifically, consider the plot of the path of the optimal point (u★(κmax), v★(κmax)) on the u–v plane from (u★(1), u★(1)) to (1/l1, 1/lp) when varying κmax from 1 to cond(S). The left panel shows the path of (u★(κmax), v★(κmax)) on the u–v plane for the case where the sample eigenvalues are (21, 7, 5.25, 3.5, 3). Here a point on the path represents the minimizer of J(u, v) on a line v = κmaxu (hollow circle). The path starts from a point on the solid line v = u (κmax = 1, square) and ends at (1/l1, 1/lp), where the dashed line v = cond(S) · u passes (κmax = cond(S), solid circle). Note that the starting point (κmax = 1) corresponds to Σ̂cond = γI for some data-dependent γ > 0 and the end point (κmax = cond(S)) to Σ̂cond = S. When κmax > cond(S), multiple values of u★ are achieved in the shaded region above the dashed line, yielding nevertheless the same estimator S. The right panel of Figure 2 shows how the eigenvalues of the estimated covariance vary as a function of κmax. Here we see that as the constraint is made stricter the eigenvalue estimates decrease monotonically. Furthermore, the truncation range of the eigenvalues simultaneously decreases and remains nested.

Figure 2.

Regularization path of the condition number constrained estimator. (a) Path of (u★(κmax), v★(κmax)) on the u-v plane, for sample eigenvalues (21, 7, 5.25, 3.5, 3) (thick curve). (b) Regularization path of the same sample eigenvalues as a function of κmax. Note that the estimates decreases monotonically as the condition number constraint κmax decreases to 1.

3 Selection of regularization parameter κmax

Sections 2.1 and 2.2 have already discussed how the optimal truncation range (τ★, κmaxτ★) is determined for a given regularization parameter κmax, and how it varies with the value of κmax. This section proposes a data-driven (or adaptive) criterion for selecting the optimal κmax and undertakes an analysis of this approach.

3.1 Predictive risk selection procedure

A natural approach is to select κmax that minimizes the predictive risk, or the expected negative predictive likelihood as given by

| (17) |

where Σ̂ν is the estimated condition number-regularized covariance matrix from independent samples x1, ···, xn, with the value of the regularization parameter κmax set to ν, and X̃ ∈ ℝp is a random vector, independent of the given samples, from the same distribution. We approximate the predictive risk using K-fold cross validation. The K-fold cross validation approach divides the data matrix into K groups so that with nk observations in the k-th group, k = 1, …, K. For the k-th iteration, each observation in the k-th group Xk plays the role of X̃ in (17), and the remaining K − 1 groups are used together to estimate the covariance matrix, denoted by . The approximation of the predictive risk using the k-th group reduces to the predictive log-likelihood

The estimate of the predictive risk is then defined as

| (18) |

The optimal value for the regularization parameter κmax is selected as ν that minimizes (18), i.e.,

Note that the outer infimum is taken since is constant for ν ≥ cond(S[−k]), where S[−k] is the k-th fold sample covariance matrix based on the remaining K − 1 groups.

3.2 Properties of the optimal regularization parameter

We proceed to investigate the behavior of the selected regularization parameter κ̂max, both theoretically and in simulations. We first note that κ̂max is a consistent estimator for the true condition number κ. This fact is expressed below as one of the several properties of κ̂max:

-

(P1)

For a fixed p, as n increases, κ̂max approaches κ in probability, where κ is the condition number of the true covariance matrix Σ.

This statement is stated formally below:

Theorem 2

For a given p,

Proof

The proof is given in Supplemental Section A.

We further investigate the behavior of κmax in simulations. To this end, consider iid samples from a zero-mean p-variate Gaussian distribution with the following covariance matrices:

Identity matrix in ℝp.

diag(1, r, r2, …, rp), with condition number 1/rp = 5.

diag(1, r, r2, …, rp), with condition number 1/rp = 400.

Toeplitz matrix whose (i, j)th element is 0.3|i−j| for i, j = 1, …, p.

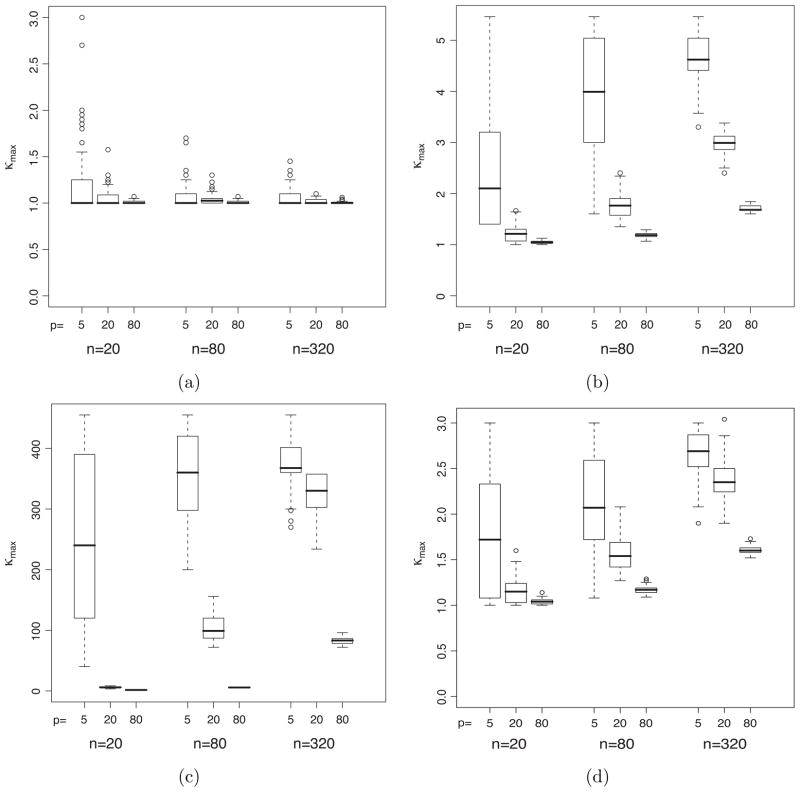

We consider different combinations of sample sizes and dimensions of the problem as given by n = 20, 80, 320 and p = 5, 20, 80. For each of these cases 100 replicates are generated and κ̂max computed with 5-fold cross validation. The behavior of κ̂max is plotted in Figure 3 and leads to insightful observations. A summary of the properties of the κ̂max is given in (P2)–(P4) below:

Figure 3.

Box plots summarizing the distribution of κ̂max for dimensions p = 5, 20, 80 and for sample sizes n = 20, 80, 320 for the following covariance matrices (a) identity (b) diagonal exponentially decreasing, condition number 5, (c) diagonal exponentially decreasing, condition number 400, (d) Toeplitz matrix whose (i, j)th element is 0.3|i−j| for i, j = 1, 2,…, p.

-

(P2)

If the condition number of the true covariance matrix remains finite as p increases, then for a fixed n, κ̂max decreases.

-

(P3)

If the condition number of the true covariance matrix remains finite as p increases, then for a fixed n, κ̂max converges to 1.

-

(P4)

The variance of κ̂max decreases as either n or p increases.

These properties are analogous to those of the optimal regularization parameter δ̂ for the linear shrinkage estimator (5), found using a similar predictive risk criterion (Warton, 2008).

4 Bayesian interpretation

In the same spirit as the Bayesian posterior mode interpretation of the LASSO (Tibshirani, 1996), we can draw parallels for the condition number regularized covariance estimator. The condition number constraint given by λ1(Σ)/λp(Σ) ≤ κmax is similar to adding a penalty term gmax(λ1(Σ)/λp(Σ)) to the likelihood equation for the eigenvalues:

The above expression allows us to qualitatively interpret the condition number-regularized estimator as the Bayes posterior mode under the following prior

| (19) |

for the eigenvalues, and an independent Haar measure on the Stiefel manifold, as the prior for the eigenvectors. The aforementioned prior on the eigenvalues has useful interesting properties which help to explain the type of eigenvalue truncation described in previous sections. We note that the prior is improper but the posterior is always proper.

Proposition 2

The prior on the eigenvalues in (19) is improper, whereas the posterior yields a proper distribution. More formally,

and

where λ = (λ1,…, λp) and C = {λ : λ1 ≥ ··· ≥ λp > 0}.

Proof

The proof is given in Supplemental Section A.

The prior above puts the greatest mass around the region {λ ∈ ℝp : λ1 = ··· = λp} which consequently encourages “shrinking” or “pulling” the eigenvalues closer together. Note that the support of both the prior and the posterior is the entire space of ordered eigenvalues. Hence the prior simply by itself does not immediately yield a hard constraint on the condition number. Evaluating the posterior mode yields an estimator that satisfies the condition number constraint.

A clear picture of the regularization achieved by the prior above and its potential for “eigenvalue shrinkage” emerges when compared to the other types of priors suggested in the literature and the corresponding Bayes estimators. The standard MLE implies of course a completely flat prior on the constrained space C. A commonly used inverse Wishart conjugate prior Σ−1 ~ Wishart(m, cI) yields a posterior mode which is a linear shrinkage estimator (5) with δ = m/(n + m). Note however that the coefficients of the combination do not depend of the data X, and are a function only of the sample size n and the degrees of freedom or shape parameter from the prior, m. A useful prior for covariance matrices that yields a data-dependent posterior mode is the reference prior proposed by Yang and Berger (1994). For this prior, the eigenvalues are inversely proportional to the determinant of the the covariance matrix, as given by , and also encourages shrinkage of the eigenvalues. The posterior mode using this reference prior can be formulated similarly to that of condition number regularization:

An examination of the penalty implied by the reference prior suggests that there is no direct penalty on the condition number. In Supplemental Section B we illustrate the density of the priors discussed above in the two-dimensional case. In particular, the density of the condition number regularization prior places more emphasis on the line λ1 = λ2 thus “squeezing” the eigenvalues together. This is in direct contrast with the inverse Wishart or reference priors where this shrinkage effect is not as pronounced.

5 Decision-theoretic risk properties

5.1 Asymptotic properties

We now show that the condition number-regularized estimator Σ̂cond has asymptotically lower risk than the sample covariance matrix S with respect to entropy loss. Recall that the entropy loss, also known as Stein’s loss, is defined as follows:

| (20) |

Let λ1, …, λp, with λ1 ≥ ··· ≥ λp, denote the eigenvalues of the true covariance matrix Σ and Λ = diag(λ1, …, λp). Define λ = (λ1, …, λp), , and κ = λ1/λp.

First consider the trivial case when p > n. In this case, the sample covariance matrix S is singular regardless of the singularity of Σ, and

(S, Σ) = ∞, whereas the loss and therefore the risk of Σ̂cond are always finite. Thus, Σ̂cond has smaller entropy risk than S.

(S, Σ) = ∞, whereas the loss and therefore the risk of Σ̂cond are always finite. Thus, Σ̂cond has smaller entropy risk than S.

For p ≤ n, if the true covariance matrix has a finite condition number, it can be shown that for a properly chosen κmax, the condition number-regularized estimator asymptotically dominates the sample covariance matrix. This assertion is formalized below.

Theorem 3

Consider a class of covariance matrices

(κ, ω), whose condition numbers are bounded above by κ and with minimum eigenvalue bounded below by ω > 0, i.e.,

(κ, ω), whose condition numbers are bounded above by κ and with minimum eigenvalue bounded below by ω > 0, i.e.,

Then, the following results hold.

-

Consider the quantity Σ̃(κmax, ω) = Qdiag(λ̃1, …, λ̃p)QT, where

and the sample covariance matrix given as S = Qdiag(l1, …, lp)QT, Q orthogonal, l1 ≥ … ≥ lp. If the true covariance matrix Σ ∈

(κmax, ω), then ∀ n, Σ̂(κmax, ω) has a smaller entropy risk than S.

(κmax, ω), then ∀ n, Σ̂(κmax, ω) has a smaller entropy risk than S. -

Consider a true covariance matrix Σ whose condition number is bounded above by κ, i.e., Σ ∈ ∪ω>0

(κ, ω). If

, then as p/n → γ ∈ (0, 1),

(κ, ω). If

, then as p/n → γ ∈ (0, 1),

where τ★ = τ★(κmax) is given in (12).

Proof

The proof is given in Supplemental Section A.

Combining the two results above, we conclude that the estimator Σ̂cond = Σ̂(κmax, τ★(κmax)) asymptotically has a lower entropy risk than the sample covariance matrix.

5.2 Finite sample performance

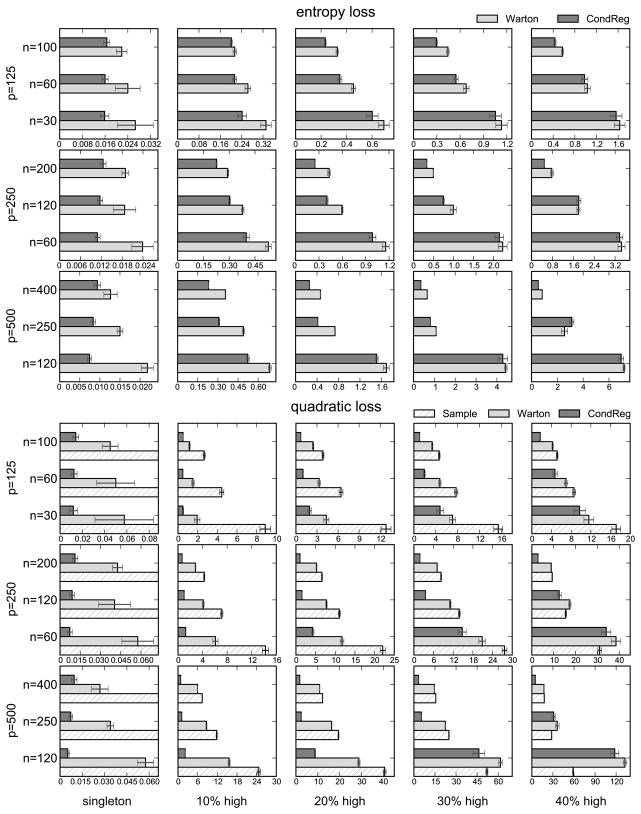

This section undertakes a simulation study in order to compare the finite-sample risks of the condition number-regularized estimator Σ̂cond with those of the sample covariance matrix (S) and the linear shrinkage estimator (Σ̂LS) in the “large p, small n” setting. The regularization parameter δ for Σ̂LS is chosen as prescribed in Warton (2008). The optimal Σ̂cond is calculated using the adaptive parameter selection method outlined in Section 3. Since Σ̂cond and Σ̂LS both select the regularization parameters similarly, i.e., by minimizing the empirical predictive risk (18), a meaningful comparison between two estimators can be made. We consider two loss functions traditionally used in covariance estimation risk comparisons: (a) entropy loss as given in (20) and (b) quadratic loss .

Condition number regularization applies shrinkage to both ends of the sample eigenvalue spectrum and does not affect the middle part, whereas linear shrinkage does this to the entire spectrum uniformly. Therefore, it is expected that Σ̂cond works well when a small proportion of eigenvalues are found at the extremes. Such situations rise very naturally when only a few eigenvalues explain most of the variation in data. To understand the performance of the estimators in this context the following scenarios were investigated. We consider diagonal matrices of dimensions p = 120, 250, 500 as true covariance matrices. The eigenvalues (diagonal elements) are dichotomous, where the “high” values are (1 − ρ)+ ρp and the “low” values are 1 − ρ. For each p, we vary the composition of the diagonal elements such that the high values take only one (singleton), 10%, 20%, 30%, and 40% of the total number of p eigenvalues. The sample size n is chosen so that γ = p/n is approximately 1.25, 2, or 4. Note that for a given p, the condition number of the true covariance matrices is held fixed at 1 + pρ/(1 − ρ) regardless of the composition of eigenvalues. For each of the simulation scenarios, we generate 1000 data sets and compute 1000 estimates of the true covariance matrix. The risks are calculated by averaging the losses over these 1000 estimates.

Figure 4 presents the results for ρ = 0.1, and represents a large condition number. It is observed that in general Σ̂cond has less risk than Σ̂LS, which in turn has less risk than the sample covariance matrix (entropy loss is not defined for the sample covariance matrix). This phenomenon is most clear when the eigenvalue spectrum has a singleton in the extreme. In this case, Σ̂cond gives a risk reduction between 27 % and 67 % for entropy loss, and between 67 % and 91 % for quadratic loss. The risk reduction tends to be more pronounced in high dimensional scenarios, i.e., for p large and n small. The performance of Σ̂cond over Σ̂LS is maintained until the “high” eigenvalues compose up to 30 % of the eigenvalue spectrum. Comparing the two loss functions, risk reduction of Σ̂cond is more distinct in quadratic loss. We note that for quadratic loss with large p and large proportion of “high” eigenvalues, there are cases that the sample covariance matrix can perform well.

Figure 4.

Average risks (with error bars) over 1000 runs with respect to two loss functions when ρ= 0.1. sample=sample covariance matrix, Warton=linear shrinkage (Warton, 2008), CondReg=condition number regularization. Risks are normalized by the dimension (p).

As an example of a moderate condition number, results for the ρ = 0.5 case is given in Supplemental Section C. General trends are the same as with the ρ = 0.1 case.

In summary, the risk comparison study provides a numerical evidence that condition number regularization has merit when the true covariance matrix has a bimodal eigenvalue distribution and/or the true condition number is large.

6 Application to portfolio selection

This section illustrates the merits of the condition number regularization in the context of financial portfolio optimization, where a well-conditioned covariance estimator is necessary. A portfolio refers to a collection of risky assets held by an institution or an individual. Over the holding period, the return on the portfolio is the weighted average of the returns on the individual assets that constitutes the portfolio, in which the weight associated with each asset corresponds to its proportion in monetary terms. The objective of portfolio optimization is to determine the weights that maximize the return on the portfolio. Since the asset returns are stochastic, a portfolio always carries a risk of loss. Hence the objective is to maximize the overall return subject to a given level of risk, or equivalently to minimize risk for a given level of return. Mean-variance portfolio (MVP) theory (Markowitz, 1952) uses the standard deviation of portfolio returns to quantify the risk. Estimation of the covariance matrix of asset returns thus becomes critical in the MVP setting. An important and difficult component of MVP theory is to estimate the expected return on the portfolio (Luenberger, 1998; Merton, 1980). Since the focus of this paper lies in estimating covariance matrices and not expected returns, we shall focus on determining the minimum variance portfolio which only requires an estimate of the covariance matrix; see, e.g., Chan et al. (1999). For this we shall use the condition number regularization, linear shrinkage and the sample covariance matrix, in constructing a minimum variance portfolio. We compare their respective performance over a period of more than 14 years.

6.1 Minimum variance portfolio rebalancing

We begin with a formal description of the minimum variance portfolio selection problem. The universe of assets consists of p risky assets, denoted 1,…, p. We use ri to denote the return of asset i over one period; that is, its change in price over one time period divided by its price at the beginning of the period. Let Σ denote the covariance matrix of r = (r1,…, rp). We employ wi to denote the weight of asset i in the portfolio held throughout the period. A long position in asset i corresponds to wi > 0, and a short position corresponds to wi < 0. The portfolio is therefore unambiguously represented by the vector of weights w = (w1,…, wp). Without loss of generality, the budget constraint can be written as 1Tw = 1, where 1 is the vector of all ones. The risk of a portfolio is measured by the standard deviation (wTΣw)1/2 of its return.

Now the minimum variance portfolio selection problem can be formulated as

| (21) |

This is a simple quadratic program that has an analytic solution w★ = (1TΣ−11)−1Σ−11. In practice, the parameter Σ has to be estimated.

The standard portfolio selection problem described above assumes that the returns are stationary, which is of course not realistic. As a way of dealing with the nonstationarity of returns, we employ a minimum variance portfolio rebalancing (MVR) strategy as follows. Let , t = 1, …, Ntot, denote the realized returns of assets at time t (the time unit under consideration can be a day, a week, or a month). The periodic minimum variance rebalancing strategy is implemented by updating the portfolio weights every L time units, i.e., the entire trading horizon is subdivided into blocks each consisting of L time units. At the start of each block, we determine the minimum variance portfolio weights based on the past Nestim observations of returns. We shall refer to Nestim as the estimation horizon size. The portfolio weights are then held constant for L time units during these “holding” periods, i.e., during each of these blocks, and subsequently updated at the beginning of the following one. For simplicity, we shall assume the entire trading horizon consists of Ntot = Nestim + KL time units, for some positive integer K, i.e., there will be K updates. (The last rebalancing is done at the end of the entire period, and so the out-of-sample performance of the rebalanced portfolio for this holding period is not taken into account.) We therefore have a series of portfolios w(j) = (1T(Σ̂(j))−11)−1(Σ̂(j))−11 over the holding periods of [Nestim +1+(j − 1)L, Nestim + jL], j = 1,…, K. Here Σ̂(j) is the covariance matrix of the asset returns estimated from those over the jth holding period.

6.2 Empirical out-of-sample performance

In this empirical study, we use the 30 stocks that constituted the Dow Jones Industrial Average as of July 2008 (Supplemental Section D.1 lists these 30 stocks). We used the closing prices adjusted daily for all applicable splits and dividend distributions downloaded from Yahoo! Finance (http://finance.yahoo.com/). The whole period considered in our numerical study is from the trading date of December 14, 1992 to June 6, 2008 (this period consists of 4100 trading days). We consider weekly returns: the time unit is 5 consecutive trading days. We take

To estimate the covariance matrices, we use the last Nestim weekly returns of the constituents of the Dow Jones Industrial Average.3 The entire trading horizon corresponds to K = 48 holding periods, which span the dates from February 18, 1994 to June 6, 2008. In what follows, we compare the MVR strategy where the covariance matrices are estimated using the condition number regularization with those that use either the sample covariance matrix or linear shrinkage. We employ two linear shrinkage schemes: that of Warton (2008) of Section 5.2 and that of Ledoit and Wolf (2004). The latter is widely accepted as a well-conditioned estimator in the financial literature.

Performance metrics

We use the following quantities in assessing the performance of the MVR strategies. For precise formulae of these metrics, refer to Supplemental Section D.3.

Realized return. The realized return of the portfolio over the trading period.

Realized risk. The realized risk (return standard deviation) of the portfolio over the trading period.

Realized Sharpe ratio. The realized excess return, with respect to the risk-free rate, per unit risk of the portfolio.

Turnover. Amount of new portfolio assets purchased or sold over the trading period.

Normalized wealth growth. Accumulated wealth yielded by the portfolio over the trading period when the initial budget is normalized to one, taking the transaction cost into account.

Size of the short side. The proportion of the short side (negative) weights to the sum of the absolute weights of the portfolio.

We assume that the transaction costs are the same for the 30 stocks and set them to 30 basis points. The risk-free rate is set at 5% per annum.

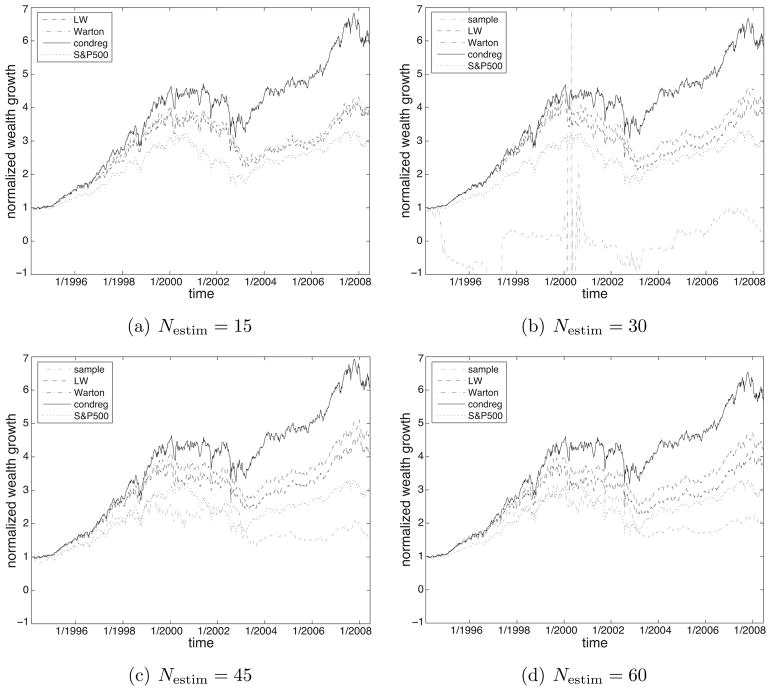

Comparison results

Figure 5 shows the normalized wealth growth over the trading horizon for four different values of Nestim. The sample covariance matrix failed in solving (21) for Nestim = 15 because of its singularity and hence is omitted in this figure. The MVR strategy using the condition number-regularized covariance matrix delivers higher growth as compared to using the sample covariance matrix, linear shrinkage or index tracking in this performance metric. The higher growth is realized consistently across the 14 year trading period and is regardless of the estimation horizon. A trading strategy based on the condition number-regularized covariance matrix consistently performs better than the S&P 500 index and can lead to profits as much as 175% more than its closest competitor.

Figure 5.

Normalized wealth growth results of the minimum variance rebalancing strategy for various estimation horizon sizes over the trading period from February 18, 1994 through June 6, 2008. sample=sample covariance matrix, LW=linear shrinkage (Ledoit and Wolf, 2004), Warton=linear shrinkage (Warton, 2008), condreg=condition number regularization. For comparison, the S&P 500 index for the same period (i.e., index tracking), with the initial price normalized to 1, is also plotted.

A useful result appears after further analysis. There is no significant difference between the condition number regularization approach and the two linear shrinkage schemes in terms of the realized return, risk, and Sharpe ratio. Supplemental Section D.4 summarizes these metrics for each estimator respectively averaged over the trading period. For all values of Nestim, the average differences of the metrics between the two regularization schemes are within two standard errors of those. Hence the condition number regularized estimator delivers better normalized wealth growth than the other estimators but without compromising on other measures such as volatility.

The turnover of the portfolio seems to be one of the major driving factors of the difference in wealth growth. In particular, the MVR strategy using the condition number-regularized covariance matrix gives far lower turnover and thus more stable weights than when using the linear shrinkage estimator or the sample covariance matrix. (See Supplemental Section D.5 for plots.) A lower turnover also implies less transaction costs, thereby also partially contributing to the higher wealth growth. Note that there is no explicit limit on turnover. The stability of the MVR portfolio using the condition number regularization appears to be related to its small size of the short side (reported in Supplemental Section D.6). Because stock borrowing is expensive, the condition number regularization based strategy can be advantageous in practice.

Supplementary Material

Acknowledgments

We thank the editor and the associate editor for useful comments that improved the presentation of the paper. J. Won was partially supported by the US National Institutes of Health (NIH) (MERIT Award R37EB02784) and by the US National Science Foundation (NSF) grant CCR 0309701. J. Lim’s research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education, Science and Technology (grant number: 2010-0011448). B. Rajaratnam was supported in part by NSF under grant nos. DMS-09-06392, DMS-CMG 1025465, AGS-1003823, DMS-1106642 and grants NSA H98230-11-1-0194, DARPA-YFA N66001-11-1-4131, and SUWIEVP10-SUFSC10-SMSCVISG0906.

Appendix

Proof of Lemma 1

Recall the spectral decomposition of the sample covariance matrix S = QLQT, with L = diag(l1,…, lp) and l1 ≥ … ≥ lp ≥ 0. From the objective function in (8), suppose the variable Ω has the spectral decomposition RMRT, with R orthogonal and M = diag(μ1,…, μp), μ1 ≤ … ≤ μp. Then the objective function in (8) can be written as

with equality in the last line when R = Q (Farrell, 1985, Ch. 14). Hence (8) amounts to

| (22) |

with the optimization variables μ1, …, μp and u.

For the moment we shall ignore the order constraints among the eigenvalues. Then problem (22) becomes separable in μ1,…, μp. Call this related problem (22*). For a fixed u, the minimizer of each summand of the objective function in (22) without the order constraints is given as

| (23) |

Note that (23) however satisfies the order constraints. In other words, for all u. Therefore (22*) is equivalent to (22). Plugging (23) in (22) removes the constraints and the objective function reduces to a univariate one:

| (24) |

where

The function (24) is convex, since each is convex in u.

Proof of Theorem 1

The function is convex and is constant on the interval [1/(κmaxli), 1/li]. Thus, the function has a region on which it is a constant if and only if

or equivalently, κmax > cond(S). Therefore, provided that κmax ≤ cond(S), the convex function Jκmax (u) does not have a region on which it is constant. Since Jκmax (u) is strictly decreasing for 0 < u < 1/(κmaxl1) and strictly increasing for u > 1/lp, it has a unique minimizer u★. If κmax > cond(S), the maximizer u★ may not be unique because Jκmax (u) has a plateau. However, since the condition number constraint becomes inactive, Σ̂cond = S for all the maximizers.

Now assume that κmax ≤ cond(S). For α ∈ {1,…, p − 1} and β ∈ {2, …, p}, define the following two quantities.

By construction, uα,β coincides with u★ if and only if

| (25) |

Consider a set of rectangles {Rα,β} in the uv-plane such that

Then condition (25) is equivalent to

| (26) |

in the uv-plane. Since {Rα,β} partitions {(u, v) : 1/l1 < u ≤ 1/lp and 1/l1 ≤ v < 1/lp} and (uα,β, vα,β) is on the line v = κmaxu, an obvious algorithm to find the pair (α, β) that satisfies the condition (26) is to keep track of the rectangles Rα,β that intersect this line. To understand that algorithm takes O(p) operations, start from the origin of the uv-plane, increase u and v along the line v = κmaxu. Since κmax > 1, if the line intersects Rα,β, then the next intersection occurs in one of the three rectangles: Rα+1,β, Rα,β+1, and Rα+1,β+1. Therefore after finding the first intersection (which is on the line u = 1/l1), the search requires at most 2p tests to satisfy condition (26). Finding the first intersection takes at most p tests.

Proof of Proposition 1

Recall that, for κmax = ν0,

and

where α = α(ν0) ∈ {1,…, p} is the largest index such that 1/lα < u★(ν0) and β = β(ν0) ∈ {1,…, p} is the smallest index such that 1/lβ > ν0u★(ν0). Then

and

The lower and upper bounds u★(ν0) and v★(ν0) of the reciprocal sample eigenvalues can be divided into four cases:

-

1/lα < u★(ν0) < 1/lα+1 and 1/lβ−1 < v★(ν0) < 1/lβ.

We can find ν > ν0 such thatandTherefore,and -

u★(ν0) = 1/lα+1 and 1/lβ−1 < v★(ν0) < 1/lβ.

Suppose u★(ν) > u★(ν0). Then we can find ν > ν0 such that α(ν) = α(ν0) + 1 = α + 1 and β(ν) = β(ν0) = β. Then,Therefore,orwhich is a contradiction. Therefore, u★(ν) ≤ u★(ν0).

Now, we can find ν > ν0 such that α(ν) = α(ν0) = α and β(ν) = β(ν0) = β. This reduces to case 1.

-

1/lα < u★(ν0) < 1/lα+1 and v★(ν0) = 1/lβ−1.

Suppose v★(ν) < v★(ν0). Then we can find ν > ν0 such that α(ν) = α(ν0) = α and β(ν) = β(ν0) − 1 = β − 1. Then,Therefore,orwhich is a contradiction. Therefore, v★(ν) ≥ v★(ν0).

Now, we can find ν > ν0 such that α(ν) = α(ν0) = α and β(ν) = β(ν0) = β. This reduces to case 1.

u★(ν0) = 1/lα+1 and v★(ν0) = 1/lβ−1. 1/lα+1 = u★(ν0) = v★(ν0)/ν0 = 1/(ν0lβ−1). This is a measure zero event and does not affect the conclusion.

Footnotes

A preliminary version of the paper has appeared in an unrefereed conference proceedings previously.

This procedure was first considered by two of the authors of this paper in a previous conference paper and is further elaborated in this paper (see Won and Kim (2006)).

This problem can be considered a generalization of the problem studied by Sheena and Gupta (2003), who consider imposing either a fixed lower bound or a fixed upper bound on the eigenvalues. Their approach is however different from ours in a fundamental sense in that it is not designed to control the condition number. Hence such a solution does not correct for the overestimation of the largest eigenvalues and underestimation of the small eigenvalues simultaneously.

Supplemental Section D.2 shows the periods determined by the choice of the parameters.

Supplemental materials Accompanying supplemental materials contain additional proofs of Theorems 2 and 3, and Proposition 2; additional figures illustrating Bayesian prior densities; additional figure illustrating risk simulations; details of the empirical minimum variance rebalancing study.

Contributor Information

Joong-Ho Won, School of Industrial Management Engineering, Korea University, Seoul, Korea.

Johan Lim, Department of Statistics, Seoul National University, Seoul, Korea.

Seung-Jean Kim, Citi Capital Advisors, New York, NY, USA.

Bala Rajaratnam, Department of Statistics, Stanford University, Stanford, CA, USA.

References

- Banerjee O, El Ghaoui L, D’Aspremont A. Model Selection Through Sparse Maximum Likelihood Estimation for Multivariate Gaussian or Binary Data. Journal of Machine Learning Research. 2008;9:485–516. [Google Scholar]

- Boyd S, Vandenberghe L. Convex Optimization. Cambridge University Press; 2004. [Google Scholar]

- Chan N, Karceski N, Lakonishok J. On portfolio optimization: Forecasting covariances and choosing the risk model. Review of Financial Studies. 1999;12(5):937–974. [Google Scholar]

- Daniels M, Kass R. Shrinkage estimators for covariance matrices. Biometrics. 2001;57:1173–1184. doi: 10.1111/j.0006-341x.2001.01173.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dempster AP. Covariance Selection. Biometrics. 1972;28(1):157–175. [Google Scholar]

- Dey DK, Srinivasan C. Estimation of a covariance matrix under Stein’s loss. The Annals of Statistics. 1985;13(4):1581–1591. [Google Scholar]

- Farrell RH. Multivariate calculation. Springer-Verlag; New York: 1985. [Google Scholar]

- Friedman J, Hastie T, Tibshirani R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics. 2008;9(3):432–441. doi: 10.1093/biostatistics/kxm045. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haff LR. The variational form of certain Bayes estimators. The Annals of Statistics. 1991;19(3):1163–1190. [Google Scholar]

- Hero A, Rajaratnam B. Large-scale correlation screening. Journal of the American Statistical Association. 2011;106(496):1540–1552. [Google Scholar]

- Hero A, Rajaratnam B. Hub discovery in partial correlation graphs. Information Theory, IEEE Transactions on. 2012;58(9):6064–6078. [Google Scholar]

- James W, Stein C. Estimation with quadratic loss. Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability; Stanford, California, United States. 1961. pp. 361–379. [Google Scholar]

- Khare K, Rajaratnam B. Wishart distributions for decomposable covariance graph models. The Annals of Statistics. 2011;39(1):514–555. [Google Scholar]

- Ledoit O, Wolf M. Improved estimation of the covariance matrix of stock returns with an application to portfolio selection. Journal of Empirical Finance. 2003 Dec;10(5):603–621. [Google Scholar]

- Ledoit O, Wolf M. A well-conditioned estimator for large-dimensional covariance matrices. Journal of Multivariate Analysis. 2004;88:365–411. [Google Scholar]

- Ledoit O, Wolf M. Nonlinear shrinkage estimation of large-dimensional covariance matrices. The Annals of Statistics. 2012 Jul;40(2):1024–1060. [Google Scholar]

- Letac G, Massam H. Wishart distributions for decomposable graphs. The Annals of Statistics. 2007;35(3):1278–1323. [Google Scholar]

- Lin S, Perlman M. A Monte-Carlo comparison of four estimators of a covariance matrix. Multivariate Analysis. 1985;6:411–429. [Google Scholar]

- Luenberger DG. Investment science. Oxford University Press; New York: 1998. [Google Scholar]

- Markowitz H. Portfolio selection. Journal of Finance. 1952;7(1):77–91. [Google Scholar]

- Merton R. On estimating expected returns on the market: An exploratory investigation. Journal of Financial Economics. 1980;8:323–361. [Google Scholar]

- Michaud RO. The Markowitz Optimization Enigma: Is Optimized Optimal. Financial Analysts Journal. 1989;45(1):31–42. [Google Scholar]

- Peng J, Wang P, Zhou N, Zhu J. Partial correlation estimation by joint sparse regression models. Journal of the American Statistical Association. 2009;104(486):735–746. doi: 10.1198/jasa.2009.0126. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pourahmadi M, Daniels MJ, Park T. Simultaneous modelling of the cholesky decomposition of several covariance matrices. Journal of Multivariate Analysis. 2007 Mar;98(3):568–587. [Google Scholar]

- Rajaratnam B, Massam H, Carvalho C. Flexible covariance estimation in graphical Gaussian models. The Annals of Statistics. 2008;36(6):2818–2849. [Google Scholar]

- Sheena Y, Gupta A. Estimation of the multivariate normal covariance matrix under some restrictions. Statistics & Decisions. 2003;21:327–342. [Google Scholar]

- Stein C. Technical Report 6. Dept. of Statistics, Stanford University; 1956. Some problems in multivariate analysis Part I. [Google Scholar]

- Stein C. Estimation of a covariance matrix. Reitz Lecture, IMS-ASA Annual Meeting.1975. [Google Scholar]

- Stein C. Lectures on the theory of estimation of many parameters (English translation) Journal of Mathematical Sciences. 1986;34(1):1373–1403. [Google Scholar]

- Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society Series B (Methodological) 1996;58(1):267–288. [Google Scholar]

- Warton DI. Penalized Normal Likelihood and Ridge Regularization of Correlation and Covariance Matrices. Journal of the American Statistical Association. 2008;103(481):340–349. [Google Scholar]

- Won JH, Kim S-J. Maximum Likelihood Covariance Estimation with a Condition Number Constraint. Proceedings of the Fortieth Asilomar Conference on Signals, Systems and Computers; 2006. pp. 1445–1449. [Google Scholar]

- Yang R, Berger JO. Estimation of a covariance matrix using the reference prior. The Annals of Statistics. 1994;22(3):1195–1211. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.