Abstract

Background/Study Context

Older adults, especially those with reduced hearing acuity, can make good use of linguistic context in word recognition. Less is known about the effects of the weighted distribution of probable target and non-target words that fit the sentence context (response entropy). The present study examined the effects of age, hearing acuity, linguistic context, and response entropy on spoken word recognition.

Methods

Participants were 18 older adults with good hearing acuity (M age = 74.3 years), 18 older adults with mild-to-moderate hearing loss (M age = 76.1 years) and 18 young adults with age-normal hearing (M age = 19.6 years). Participants heard sentence-final words using a word-onset gating paradigm, in which words were heard with increasing amounts of onset information until they could be correctly identified. Degrees of context varied from a neutral context to a high context condition.

Results

Older adults with poor hearing acuity required a greater amount of word onset information for recognition of words when heard in a neutral context compared to older adults with good hearing acuity and young adults. This difference progressively decreased with an increase in words’ contextual probability. Unlike the young adults, both older adult groups’ word recognition thresholds were sensitive to response entropy. Response entropy was not affected by hearing acuity.

Conclusions

Increasing linguistic context mitigates the negative effect of age and hearing loss on word recognition. The effect of response entropy on older adults’ word recognition is discussed in terms of an age-related inhibition deficit.

Speech rates in everyday conversation average between 140 and 180 words per minute (wpm), and can often exceed 210 wpm as in the case of a radio or television newsreader speaking from a prepared script (Miller, Grosjean & Lomanto, 1984; Stine, Wingfield, & Myers, 1990). One reason why speech can be comprehended in spite of such rapid input rates is that words in fluent discourse can often be recognized before their full acoustic duration has been completed. This was first formally observed by Marslen-Wilson (1975), who found that listeners asked to “shadow” recorded speech (i.e., repeating the words aloud as they are being heard) often corrected, without awareness, errors in pronunciation or grammar in the recording before the erroneous word had been fully completed.

An attempt to explore this process has employed word-onset gating, a paradigm in which a listener is presented with increasing amounts of a word’s onset duration until the word can be correctly identified (Cotton & Grosjean, 1984; Grosjean, 1980, 1985). Using this paradigm the listener might, for example, hear the first 50 ms of a recorded word, then the first 100 ms of that word, then the first 150 ms, and so on, until the word can be correctly identified. Studies of word-onset gating have confirmed that words within a sentence context can often be recognized within as little as 200 ms of their onset, or when half, or less than half, of their full acoustic duration has been heard (Grosjean, 1980; Marslen-Wilson, 1984). Interestingly, words heard even without a linguistic context require on average only 130 ms more. To put these figures in perspective, 202 ms represents the mean duration of a word-initial consonant-vowel cluster in English (Sorensen, Cooper, & Paccia, 1978).

This rapid identification of words even without a constraining linguistic context is made possible by the dramatic reduction in the number of possible word candidates that share the same initial sounds as the target word when the word-onset duration is progressively increased (Marslen-Wilson & Tyler, 1980; Tyler, 1984; Wayland, Wingfield, & Goodglass, 1989). This number of lexical possibilities is further reduced when word prosody (e.g., syllabic stress) is taken into account in matching a word onset with potential lexical candidates (Lindfield, Wingfield, & Goodglass, 1999).

As might be expected, studies of word-onset gating have shown that the amount of word-onset required for correct word identification is increased when speech quality or signal clarity is poor (Grosjean, 1985; Nooteboom & Doodeman, 1984). Given these findings, one would expect that (1) an individual with hearing loss, even in the milder ranges, would require a larger gate size for correct word recognition relative to a person with normal hearing acuity, and (2) the negative effect of hearing loss on word recognition would progressively decrease as the transitional probability of a word within a linguistic context is incrementally increased.

In contrast to word-onset gating, the predominant paradigm for the study of spoken word recognition has involved presentation of an entire word masked by background noise, with the level of the background noise progressively diminished until the word can be correctly identified. Such studies have verified that a more favorable signal-to-noise ratio is required for word recognition by older relative to younger adults and for those with hearing impairment relative to those with better hearing. Further, as would be expected, these differences are reduced when stimulus words are heard within a linguistic context relative to when the same words are heard in the absence of any linguistic constraints (e.g., Benichov, Cox, Tun, & Wingfield, 2012; Dubno, Ahlstrom, & Horwitz, 2000; Hutchinson, 1989; Pichora-Fuller, Schneider & Daneman, 1995; Sommers & Danielson, 1999).

A critical finding in the Benichov et al. (2012) study, which included participants ranging in age from 19 to 89 years, was that chronological age contributed significant variance to word-recognition thresholds even when hearing acuity and measures of cognitive function were taken into account. This is consistent with a number of studies using word-onset gating that have shown that older adults, even those with relatively good hearing acuity, require a larger word onset duration for correct word recognition than do young adults (Craig, 1992; Craig, Kim, Rhyner, & Chirillo, 1993; Elliott, Hammer, & Evan, 1987; Perry & Wingfield, 1994; Wingfield, Aberdeen, & Stine, 1991). To the extent that correct word identification requires the inhibition of lexical possibilities that no longer match the input (i.e., as onset duration or signal-to-noise ratios are increased), this age-related deficit would be consistent with an argument that lexical discrimination is hampered by an inhibitory deficit in older adults (Hasher & Zacks, 1988; Sommers & Danielson, 1999). This latter point motivated us to examine the effects of response competition on ease of word recognition by older adults with good and poor hearing acuity. Such competition can arise from the number of words that share phonology with a target word (e.g., Sommers, 1996; Tyler, 1984; Wayland et al., 1989). Our interests are in the effects on word recognition of the likelihood of encountering a target word in a particular semantic context, and of the potential competition from the distribution of lexical alternatives that may also fit this context.

Target Expectancy Versus Response Entropy

It is a well-established principle of perception that the more probable a stimulus, the less sensory information will be needed for its correct identification (Howes, 1954; Morton, 1969). Numerous studies, such as those previously cited, have demonstrated this principle in young and older adults, with an artificially or naturally degraded stimulus word, when a preceding linguistic context has been used to manipulate the expectancy of the target word. For example, hearing the sentence, “He wondered if the storm had done much…” will lead one to expect a highly probable word, such as damage. This can be compared to the less constraining sentence, “He was soothed by the gentle…” which would have a greater degree of response uncertainty. When a large sample of adults was asked to complete the first sentence with a likely ending, 97% of respondents gave damage. The most frequent response to the second example was music, but given by only 23% of respondents (Bloom & Fischler, 1980; see also Block & Baldwin, 2010; Lahar, Tun, & Wingfield, 2004). Based on such norms, it can be shown that the amount of word onset duration (Wingfield et al., 1991) or signal-to-noise ratio (Benichov et al., 2012) necessary for the correct recognition of sentence-final words is inversely proportional to their expectancy based on their preceding linguistic context.

A feature of the Bloom and Fischler (1980) norms is that, in addition to listing the dominant sentence completion responses, the norms also list the full range of alternative responses that were given, along with the percentage of respondents giving these responses. In the first example above, the sentence-final word harm was also given, although by very few respondents. By contrast, the second example produced over 12 different responses, with varying degrees of popularity. These data allow one to know not only the transitional probability of a target word in a sentence context, but also the uncertainty implied by the number and probability distribution of alternative possibilities drawn from various respondents’ sentence completions. We refer to the former as target expectancy and the uncertainty determined by the number and strength of potential responses as response entropy (cf., Shannon & Weaver, 1949; Treisman, 1965; van Rooij & Plomp, 1991). Maximum entropy occurs within a given situation when all possible responses are equally likely. When some possibilities are more predictable than the others, entropy is reduced.

In the present experiment we tested three groups of participants. The first was a group of older adults with a mild-to-moderate hearing loss. This degree of loss is of special interest as it represents the most common category of loss among hearing impaired older adults (Morrell, Gordon-Salant, Pearson, Brant, & Fozard, 1996). The second group was composed of older adults who were matched for age, vocabulary knowledge and years of formal education with the first group, but who had retained good hearing acuity for their age. A final group consisted of young adults with age-normal hearing. The task was the previously described gating paradigm, in which a word is heard in a series of presentations, each time with an increasing amount of word onset duration, until it can be correctly identified.

Extrapolating from data obtained when signal-to-noise ratio is used as a measure of ease of word recognition (e.g., Benichov et al., 2012), one would expect that older adults, and older adults with hearing loss, would require a larger word onset duration to identify a spoken word in the absence of a constraining linguistic context relative to young adults with age-normal hearing. One would also expect this difference to be reduced, or potentially eliminated, by presenting the target word as the sentence-final word in a constraining linguistic context (cf., Benichov et al., 2012; Dubno et al., 2000; Pichora-Fuller et al., 1995; Wingfield et al., 1991). Our primary question in this study, however, is the extent to which response entropy, calculated as the number of potential competitors and the uniformity of their probability distributions (Shannon, 1948), may affect the onset duration needed to correctly identify a target word.

To the extent that older adults have a special difficulty in suppressing interference from potential competitors, as a reflection of a more general inhibitory deficit (Hasher & Zacks, 1988; Sommers & Danielson, 1999), one would expect to see a greater negative effect of response entropy on word recognition for older adults relative to the younger-adult group. Following the same logic, however, there would be no reason, a priori, to expect older adults with poor hearing, relative to age-matched older adults with better hearing, to suffer a greater negative effect on word recognition due to the word’s presence in a semantic environment with high response entropy. By contrast, target expectancy (but not response entropy) would be expected to have a differentially greater impact on word recognition for those with poor hearing than those with good acuity, relative to each group’s recognition baseline for words heard in a neutral, non-constraining context.

Method

Participants

Participants were 36 older adults, 18 (13 females, 5 males) with good hearing acuity, and 18 (9 females, 9 males) with a mild-to-moderate hearing loss. Audiometric assessment was conducted using a GSI 61 clinical audiometer (Grason-Stadler, Inc., Madison, WI) using standard audiometric techniques in a sound attenuating testing room.

The good-hearing group had a mean better-ear pure tone average (PTA) of 20.3 dB HL (SD = 6.2) averaged over 1,000, 2,000 and 4,000 Hz, a frequency range known to be important for the perception of speech (Humes, 1996). Their mean speech recognition threshold (SRT) using recorded Central Institute for the Deaf (CID) W-1 spondee words (Auditec, St. Louis, MO) was 18.8 dB HL (SD = 3.2). Although above the level for young adults with age-normal hearing acuity, these values lie within a range typically considered to be clinically normal for speech (viz., PTA < 25 dB HL; Katz, 2002). The participants in the hearing-loss group had a mean better-ear PTA of 38.9 dB HL (SD = 6.9) and mean SRT of 38.3 dB HL (SD = 9.1), placing them in a mild-to-moderate hearing loss range (Katz, 2002). Fifteen of the 18 good-hearing older adults and 13 of the 18 poor-hearing adults had symmetrical hearing defined as an inter-aural difference of less than or equal to 15 dB HL (Korsten-Meijer, Wit, & Albers, 2006). The remaining older good- and poor-hearing participants had inter-aural asymmetries less than or equal to 20 dB HL. None of the participants in the hearing loss group were regular users of hearing aids (Kochkin, 1999).

The good- and poor-hearing older adults were similar in age (good-hearing, M = 74.3 years, SD = 5.2; poor-hearing, M = 76.1 years, SD = 5.0; t[34] = 1.05, n.s.), years of formal education (good-hearing, M = 16.2 years, SD = 1.6; poor-hearing, M = 17.3 years, SD = 2.0; t[34] = 1.87, n.s.), and verbal ability, as estimated by Shipley vocabulary scores (Zachary, 1986) (good-hearing, M = 16.2, SD = 2.7; poor-hearing, M = 17.3, SD = 2.1; t[34] = 1.30, n.s.). All participants reported themselves to be in good health, with no history of stroke, Parkinson’s disease, or other neuropathology that might compromise their ability to carry out the experimental task.

For purposes of comparison we also included a group of 18 young adults (13 females, 5 males; M age = 19.6 years, SD = 1.2) all of whom had age-normal hearing, as measured by PTA (M = 5.7 dB HL, SD = 2.8) and SRT (M = 7.1 dB HL, SD = 4.3). At time of testing, the young adults had completed fewer years of formal education (M = 13.5 years, SD = 1.0) than either the good-hearing, t(34) = 6.19, p < 0.001, or poor-hearing, t(34) = 7.38, p < 0.001, older adults. As is common (e.g., Verhaeghen, 2003), the young adults had somewhat lower vocabulary scores (M = 14.3, SD = 1.7) than either the good-hearing, t(34) = 2.59, p < 0.05, or poor-hearing, t(34) = 4.65, p < 0.001, older adults. All participants in the three groups were native speakers of American English.

Stimulus Materials

The stimuli, taken from Bloom and Fischler’s (1980) norms, consisted of 20 one- and two-syllable words. Each word was recorded as the final word in three different sentence contexts that varied in the degree to which they would affect a listener’s expectation for hearing the target word.

These expectancies were based on the responses of 100 young adults who were given sentence frames that had the final word missing. The instructions were to give a single word that would complete each sentence with a likely ending. This procedure produces so-called “cloze” (Taylor, 1953) norms presumed to reflect the combined influence of the syntactic and semantic constraints imposed by the sentence contexts (e.g., Treisman, 1965). The Bloom and Fischler (1980) norms list the full range of responses given by their participants for each sentence frame, and the frequency (probability) with which each of these responses was given. These are given for all responses with a probability greater than 0.01. Although based on responses by young adults, these norms have been shown to be predictive of word-selection by children (Stanovich, Nathan, West, & Vala-Rossi, 1985), university undergraduates (Block & Baldwin, 2010), and young, middle-aged and older adults in the United States and Canada (Lahar et al., 2004).
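As a rough illustration of how cloze norms of this kind can be tabulated, the following Python sketch converts a list of sentence completions into response probabilities and keeps only those above the 0.01 reporting cutoff. The function name `cloze_norms` and the raw response list are hypothetical; the counts are simply chosen to reproduce one distribution reported later in the text, and this is not a description of how Bloom and Fischler (1980) processed their data.

```python
from collections import Counter

def cloze_norms(responses, min_prob=0.01):
    """Return {word: probability} for completions given by more than
    `min_prob` of respondents, ordered from most to least frequent."""
    counts = Counter(word.strip().lower() for word in responses)
    total = sum(counts.values())
    return {w: c / total for w, c in counts.most_common() if c / total > min_prob}

# Hypothetical raw data: 100 respondents completing a single sentence frame.
responses = ["hour"] * 54 + ["break"] * 41 + ["period"] * 5
norms = cloze_norms(responses)

for word, prob in norms.items():
    print(f"{word}: {prob:.2f}")
```

The probability attached to the target word corresponds to what the text calls target expectancy; the full set of probabilities is the input to the response entropy measure.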

Based on the above-cited cloze values, the sentence and target-word sets chosen for the study ranged from a low cloze probability of 0.02 to a high of 0.85. Stimuli were selected such that each target word could be heard in one of three categories of target expectancy: low expectancy (probability range 0.02 to 0.05; M = 0.03), medium expectancy (probability range 0.09 to 0.21; M = 0.13) or high expectancy (probability range 0.23 to 0.85; M = 0.52). There was also a neutral context condition, in which the target word was preceded by the carrier phrase, “The word is….” Target words were 16 one-syllable and 4 two-syllable common nouns and adjectives with high frequencies of occurrence in English; mean log-transformed Hyperspace Analogue to Language (HAL) frequencies ranged from 9.31 to 13.58 (Balota et al., 2007; Lund & Burgess, 1996).

The sentences, neutral carrier phrases, and target words were spoken by a female speaker of American English at a natural speaking rate, and recorded onto computer sound files using SoundEdit software (Macromedia, Inc., San Francisco, CA) that digitized at a sampling rate of 22 kHz. In order to ensure that recognition thresholds would be affected only by the linguistic context, and not by accidental differences in the way the target word was spoken, a single recording of each target word was made and inserted via speech editing into the sentence-final position of each of its three sentence contexts and the neutral carrier phrase. The sentence-final words were spliced on to the ends of the sentences and neutral carrier phrases without an artificial pause so as to sound like a natural continuation of the sentence. Stimuli were equated for root mean square amplitude across conditions.

Procedure

Each participant heard each of the 20 words in only one of its four context conditions (neutral, low, medium, high context). Sentences and target words were counterbalanced across participants such that, by the end of the experiment, each target word had been heard an equal number of times in each of its sentence contexts.

Each target word plus sentence frame was presented in a series of successive presentations with the gate size of the target word increased in 50 ms increments until the target word was correctly identified. The recognition threshold was defined as the gate size (in ms) at which the participant first gave the correct response. Stimuli were presented binaurally over Eartone 3A (E-A-R Auditory Systems, Aero Company, Indianapolis, IN) insert earphones via a Grason Stadler GS-61 clinical audiometer at 25 dB above each individual’s SRT for his or her better ear (25 dB Sensation Level [SL]). The mean duration of the recorded target words was 605.3 ms, representing an average of 12.6 50-ms gates for full word inclusion. One word, “good,” was removed from analysis in all its context conditions because it was inadvertently uttered as encouragement on several occasions during the course of the experiment.
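The gating procedure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the software used in the study: the function names, the `respond` callback, and the simulated listener are all hypothetical, and the sketch assumes that the final gate is capped at the full word duration.

```python
import math

GATE_STEP_MS = 50  # gate increment reported in the Procedure

def gate_sizes(word_duration_ms, step_ms=GATE_STEP_MS):
    """Successive onset durations (ms): 50, 100, 150, ...
    Assumption: the last gate is capped at the full word duration."""
    n_gates = math.ceil(word_duration_ms / step_ms)
    return [min(k * step_ms, word_duration_ms) for k in range(1, n_gates + 1)]

def recognition_threshold(target, word_duration_ms, respond):
    """Gate size (ms) at which `respond` first returns the target word,
    or None if the word is never identified."""
    for gate_ms in gate_sizes(word_duration_ms):
        if respond(gate_ms) == target:
            return gate_ms
    return None

# Hypothetical listener who identifies the word once 230 ms of onset is heard.
def simulated_listener(gate_ms):
    return "damage" if gate_ms >= 230 else "dam"

threshold = recognition_threshold("damage", 600, simulated_listener)
print(threshold)
```

Because gates advance in fixed 50-ms steps, a threshold measured this way is always rounded up to the next gate boundary, which is why the number of gates needed for full word inclusion is the ceiling of the word duration divided by the step size.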

Results

Target Expectancy and Recognition Thresholds

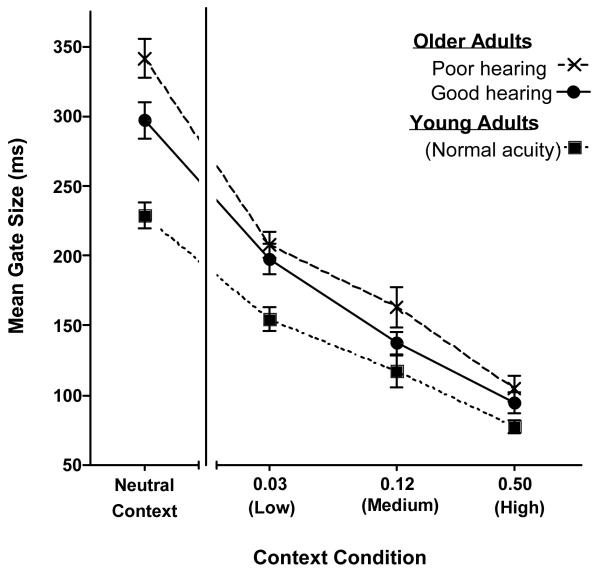

Figure 1 shows the mean gate size (ms) needed by the three participant groups for correct recognition of target words when heard with a non-constraining neutral context (“The word is…”) and when heard with low, medium and high contextual constraints. Mean cloze probabilities for the low, medium and high contextual constraints are plotted on a logarithmic scale.

Figure 1.

Mean gate size required for correct word recognition when a word was heard with a neutral context or with low, medium or high degrees of contextual constraint based on prior linguistic context. Numbers on the abscissa are mean cloze values for target words. Error bars represent standard errors for the mean.

As would be expected from prior work using word-onset gating (Wingfield et al., 1991) and presentation of words in background noise (Benichov et al., 2012), recognition thresholds decrease progressively with increasing degrees of contextual constraint on a log-linear scale for all three participant groups. This appearance was confirmed by a 4 (Context: neutral, low, medium, high) X 3 (Group: poor-hearing older adults, good-hearing older adults, good-hearing young adults) mixed-design analysis of variance (ANOVA), with context as a within-participants variable and group as a between-participants variable. A Greenhouse-Geisser adjustment to degrees of freedom was used when Mauchly’s test for sphericity was found to be significant. The progressive reduction in the mean gate size needed for word identification with increasing context as seen in Figure 1 was confirmed by a significant main effect of context, F(2.04,104.14) = 251.13, p < 0.001, ηp² = 0.83. There was also a significant main effect of participant group, F(2,51) = 19.99, p < 0.001, ηp² = 0.44, and a significant Context X Group interaction, F(4.08,104.14) = 4.36, p < 0.01, ηp² = 0.15.

This two-way interaction was due primarily to the differences in recognition thresholds for the three participant groups in the neutral context condition. As can be seen on the left side of Figure 1, the older adults with poor hearing acuity required a significantly greater amount of word onset for correct word recognition than did the older adults with better hearing acuity, t(34) = 2.28, p < 0.05. As also might be expected, the young adults with age-normal hearing required significantly fewer gates for correct recognition than did either the older adults with good hearing acuity, t(34) = 4.22, p < 0.001, or poor hearing acuity, t(34) = 6.67, p < 0.001. A 3 (Context) X 3 (Group) ANOVA conducted on the data for the low, medium and high context conditions, with the neutral context condition excluded, confirmed main effects of context, F(1.46,74.45) = 89.48, p < 0.001, ηp² = 0.64, and participant group, F(2,51) = 10.35, p < 0.001, ηp² = 0.29, but no Context X Group interaction, F(2.92,74.45) = 1.06, n.s.

Effects of Response Entropy

Response entropy was calculated for each of the target words in each of the three context conditions (low, medium and high context), with entropy (H) calculated from the total number of different responses given in the Bloom and Fischler (1980) norms and the probability distribution of the responses. This is shown in the equation,

$$H(X) = -\sum_{i=1}^{n} p(x_i) \log_b p(x_i)$$

where $x$ is a response, for which there are $n$ possible responses ($x_1, x_2, \ldots, x_n$). For each $x_i$, there is a probability $p(x_i)$ that $x_i$ will occur. The subscript $b$ represents the base of the logarithm used, where we use base 2 in keeping with the traditional measurement of statistical information represented in bits (Shannon, 1948). For example, given the stem, “Bob would often sleep during his lunch…,” Bloom and Fischler (1980) report that participants gave the responses, “hour,” “break,” and “period” with probabilities of 0.54, 0.41, and 0.05, respectively. Applying the above equation, we find this sentence has a response entropy of 1.22 bits, calculated as: $H = -[(0.54 \log_2 0.54) + (0.41 \log_2 0.41) + (0.05 \log_2 0.05)]$. This can be compared to a higher entropy situation where the distribution of probabilities is more uniform, such as “My aunt likes to read the daily…” where “paper,” “newspaper,” and “news” were produced with probabilities of 0.47, 0.31, and 0.22, respectively, which yields an entropy of 1.52 bits.
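The two worked examples can be checked with a short Python sketch of the entropy calculation. The function name `response_entropy` is an illustrative choice, not something taken from the study. The sketch uses the listed probabilities as given, on the assumption that they are not renormalized; because the norms report only responses with probabilities above 0.01, the listed values need not sum exactly to 1.

```python
import math

def response_entropy(probabilities, base=2):
    """Shannon entropy, -sum(p * log_b(p)), of a response distribution.
    Zero-probability responses contribute nothing and are skipped."""
    return -sum(p * math.log(p, base) for p in probabilities if p > 0)

# Probabilities reported in the text for the two example sentence frames.
lunch = response_entropy([0.54, 0.41, 0.05])  # "…during his lunch…"
daily = response_entropy([0.47, 0.31, 0.22])  # "…read the daily…"

# Upper bound for three responses: all equally likely, log2(3) bits.
uniform = response_entropy([1 / 3, 1 / 3, 1 / 3])

print(f"{lunch:.2f} {daily:.2f} {uniform:.2f}")
```

The uniform case shows why the second sentence frame has higher entropy than the first: its three probabilities lie closer to the equal-likelihood distribution, which sets the maximum for any three-response set.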

The upper part of Table 1 shows two Pearson product-moment zero-order correlations (r) for each of the three participant groups. The first row shows the correlations between recognition thresholds (a; the mean gate sizes required for correct recognition) and the probability of the target word based on the Bloom and Fischler (1980) norms (b; target expectancy). As would be inferred from the data shown in Figure 1, this correlation was significant for all three participant groups. The direction of the correlations for all three groups is negative, reflecting an increase in target expectancy being associated with a decrease in the gate sizes necessary for correct recognition.

Table 1.

Zero-order and partial correlations for recognition thresholds (a), target expectancy (b), and response entropy (c)

| Predictive Factors | Young Adults | Older Adults, Good Acuity | Older Adults, Poor Acuity |
|---|---|---|---|
| Zero-order correlations (r) | | | |
| ab | −0.41** | −0.53*** | −0.42*** |
| ac | 0.23 | 0.47*** | 0.35** |
| Partial correlations (r) | | | |
| ab.c | −0.37** | −0.46*** | −0.35** |
| ac.b | 0.13 | 0.39** | 0.27* |

Note. *p < 0.05, **p < 0.01, ***p < 0.001

The second row in the upper part of Table 1 shows the zero-order correlations between recognition thresholds and calculated response entropy (c), where the two older adult groups show a significant positive correlation, reflecting an increase in the gate sizes needed for correct recognition being associated with an increase in response entropy. Unlike the results for target expectancy, in this case only the older adults show a significant correlation; the young adults do not.

Because target expectancy and response entropy will generally be inter-related, as was true for these data, r(55) = −0.29, p < 0.05, a series of partial correlations was calculated in order to confirm the above pattern of zero-order correlations when this mutual relationship is removed (Bruning & Kintz, 1977). The partial correlations shown in the upper row of the lower portion of Table 1 confirm that the relationship between recognition thresholds (a) and target expectancy (b) remains significant for all three participant groups when response entropy (c) is held constant (ab.c). Similarly, the correlations in the lower row between recognition thresholds (a) and response entropy (c) for the two older adult groups remain significant when target expectancy is partialed out (ac.b). As was seen with the zero-order correlations, the young adults do not show this same relationship between recognition thresholds and response entropy.
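For readers who wish to see how a first-order partial correlation follows from the zero-order values, the Python sketch below applies the standard textbook formula to the young-adult entries in Table 1. It assumes that the expectancy-entropy correlation reported in the text (−0.29) is the value that enters the calculation; the function name is illustrative, and because the inputs are rounded to two decimals the output can differ from the tabled values in the second decimal place.

```python
import math

def partial_correlation(r_xy, r_xz, r_yz):
    """First-order partial correlation r_xy.z computed from the three
    zero-order correlations among x, y and z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz**2) * (1 - r_yz**2))

# Young adults, Table 1: a = threshold, b = expectancy, c = entropy.
r_ab, r_ac = -0.41, 0.23
r_bc = -0.29  # assumption: the expectancy-entropy correlation given in the text

r_ab_c = partial_correlation(r_ab, r_ac, r_bc)  # expectancy with entropy held constant
r_ac_b = partial_correlation(r_ac, r_ab, r_bc)  # entropy with expectancy held constant

print(f"ab.c = {r_ab_c:.2f}, ac.b = {r_ac_b:.2f}")
```

With these inputs the sketch returns values that round to the tabled −0.37 and 0.13 for the young adults. Applied to the two older groups, the same rounded inputs give results within about 0.01 of the tabled entries.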

Discussion

Sentence-final completion norms, such as those developed by Bloom and Fischler (1980), have served a valuable function for examining the facilitating effects of target expectancy on word recognition in a range of participant populations for both spoken and written stimuli (cf., Benichov et al., 2012; Morton, 1964, 1969; Nebes, Boller, & Holland, 1986; Perry & Wingfield, 1994; Wingfield et al., 1991). This build-up of expectancy determined by a sentence context is presumed to occur rapidly and contemporaneously even as the words of the sentence are arriving at a normally rapid speech rate. Support for this position can be seen in latencies to word-corrections in shadowing studies (Marslen-Wilson, 1975), latencies in cross-modal lexical priming (Marslen-Wilson & Zwitserlood, 1989), and an increase in the amplitude of the N400 event-related potential (ERP) recorded from scalp electrodes when a sentence-final word is heard that violates contextually-determined expectations based on cloze norms (Block & Baldwin, 2010).

The microstructure of the facilitative effects of linguistic context on word recognition has remained an issue for research, with two positions dominating the literature. The first is that a linguistic context increases the level of activation of words in the mental lexicon that fit that context, but importantly, that this activation (or priming) occurs before the target word has been presented (e.g., Morton, 1964, 1969). An alternative model assumes that when the initial phoneme is heard all words beginning with that sound (the word-initial cohort) are activated, with the constraining effects of linguistic context coming into play only after this initial phonological cohort has been activated (e.g., Marslen-Wilson & Zwitserlood, 1989). At issue is not whether a linguistic context facilitates recognition, but where in the time course of the context-phonology sequence the effect of context comes into play. Of special note in our present data is the finding that even a low level of linguistic constraint yields a significant reduction in the mean gate size needed for correct word recognition relative to words heard in a neutral context. This beneficial effect of even a small degree of linguistic context is not unique to the gating paradigm, but appears also for spoken words presented in background noise (Benichov et al., 2012) and in a measure of tachistoscopic duration thresholds for written words (Morton, 1964). This would not be revealed in studies that simply contrast words heard in a neutral context with words heard in a high-constraint sentence context (Dubno et al., 2000; Grant & Seitz, 2000; Pichora-Fuller et al., 1995).

Ease of recognition of individual words is known to be affected by features such as the relative frequency of occurrence of the word in the language (Grosjean, 1980), as well as the number of words that share overall phonology with the target word (the word’s “neighborhood density”). This latter factor is embodied in the influential neighborhood activation model (NAM) of word recognition (Luce & Pisoni, 1998). A variation on this model, a so-called onset-cohort model, gives special weight to the discriminative value of word onsets (Marslen-Wilson, 1984; Tyler, 1984). It is the case that word onsets are especially effective in “triggering” an elusive word when word-finding fails in both normal aging and dementia (Nicholas, Barth, Obler, Au, & Albert, 1997), as well as in patients with aphasia consequent to focal brain damage (Goodglass et al., 1997; Wingfield, Goodglass, & Smith 1990).

Gating studies with normal-hearing young adults have affirmed that the identity of a word can be more readily established from hearing the onset phonology of a word than phonology from a non-initial position. It has been argued that this advantage is due to the greater ease of alignment of a heard fragment with possible word candidates as the perceptual system attempts to match a stimulus input with stored sound-forms in the mental lexicon (cf., Nooteboom & van der Vlugt, 1988; Wingfield, Goodglass & Lindfield, 1997). Neither the neighborhood density nor the onset cohort models of word recognition has emphasized a role for word-prosody in narrowing the number of potential word candidates (cf., Luce, 1986; Luce, Pisoni & Goldinger, 1990; Marslen-Wilson & Tyler, 1980; Marslen-Wilson & Zwitserlood, 1989). It can be shown, however, that recognition performance gains significant benefit from the presence of information about syllabic stress that is often contained in word onsets (Lindfield et al., 1999). Further, this ability is similar for both young and older adults, and especially so when one takes into account differences in hearing sensitivity that can affect the extraction of complex acoustic information in word onsets that can sometimes signal the syllabic stress pattern of the entire word (Wingfield, Lindfield & Goodglass, 2000).

Studies of lexical decisions primed by different portions of word phonology have supported a word-onset priority (Marslen-Wilson & Zwitserlood, 1989), as have studies using eye-tracking while listening to spoken instructions, with these latter studies finding this pattern to hold across age groups (Ben-David et al., 2011). Other studies have supported overall goodness-of-fit models (Connine, Blasko, & Titone, 1993; Slowiaczek, Nusbaum, & Pisoni, 1987). We cannot adjudicate between these two models with these present data, as both models accommodate the finding that word recognition in connected speech reflects an interaction between bottom-up information, represented by the sensory input, and top-down information based on linguistic knowledge, with the latter taking on greater importance when the sensory information is weak (Rönnberg, Rudner, & Lunner, 2011). This compensatory influence of top-down input was seen in the way in which the negative effect of hearing loss on recognition of words preceded only by a neutral carrier phrase was mitigated when the same words were heard preceded by a linguistic context.

Unlike most studies that have examined effects of the expectancy of a target word on word identification, the present study also considered potential interference from the number and probability strengths of the lexical alternatives that, along with the target word, fit the semantic context introduced by the stimulus sentence. Following Shannon and Weaver (1949), we have referred to this as response entropy.
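For reference, Shannon's entropy for the set of words that complete a given sentence context can be written as follows. The notation is an illustrative assumption rather than notation taken from this section: $p_i$ is the proportion of norming responses that give word $i$ as the sentence ending, $N$ is the number of distinct responses (target included), and the base-2 logarithm expresses entropy in bits.

```latex
H = -\sum_{i=1}^{N} p_i \log_2 p_i
```

Under this definition, $H$ is zero when a single word accounts for all responses and reaches its maximum, $\log_2 N$, when all $N$ responses are equally probable.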

We did not, in this experiment, have an independent measure of inhibition, although the case for an inhibition deficit in older adults is a strong one that appears in a number of domains, including, but not limited to, word recognition (cf., Hasher & Zacks, 1988; Lindfield, Wingfield, & Bowles, 1994; Sommers, 1996; Sommers & Danielson, 1999; Zacks, Hasher, & Li, 1999). Our examination of the influence of response entropy on word recognition can be seen as consistent with the notion of an age-related inhibition deficit. This was observed in the finding that high response entropy had a stronger negative influence on the older adults’ recognition thresholds than on those of the young adults. That both good- and poor-hearing older adults showed a significant positive relationship between their recognition thresholds and response entropy, while the young adults with normal hearing acuity did not, suggests in turn that the entropy effect was dependent on age rather than hearing acuity. Our findings are in line with those of Rogers, Jacoby, and Sommers (2012), who found a robust age-related pattern of contextual reliance that led to false hearing after controlling for hearing acuity.

Our results differ from those obtained by Stine and Wingfield (1994), who found no age effects in relative susceptibility to response competition while using procedures similar to those used in this study. In that study, competition was manipulated by the presence of a single competitor that was strongly or weakly predictable from a sentence context. We attribute this discrepancy in results to our choice of metric. Entropy is driven not by a single competitor but by the number of competitors and the uniformity of their probability distribution; the presence of a single highly predictable competitor could, in some cases, reduce entropy. It can be argued that the entropy measure used in the present paper better captures the totality of semantic uncertainty than does a single competitor, and was thus more sensitive to the effect of adult aging as reported here.
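The point that a single strong competitor can lower entropy may be easier to see with numbers. The following is a minimal sketch, assuming the standard Shannon formula in bits; the function name `response_entropy` and both response distributions are hypothetical illustrations, not values from the norms or the computation used in this study.

```python
import math


def response_entropy(probabilities):
    """Shannon entropy, in bits, of a set of sentence-completion probabilities.

    Assumption: inputs are non-negative response proportions; they are
    renormalized to sum to 1, and zero-probability responses are ignored.
    """
    total = sum(probabilities)
    if total <= 0:
        raise ValueError("at least one response must have positive probability")
    normalized = [p / total for p in probabilities if p > 0]
    return -sum(p * math.log2(p) for p in normalized)


# Hypothetical context A: target at .40 plus six weak competitors at .10 each.
many_weak = [0.4] + [0.1] * 6

# Hypothetical context B: same target at .40, one strong competitor at .50,
# and one weak competitor at .10.
one_strong = [0.4, 0.5, 0.1]

print(f"many weak competitors: {response_entropy(many_weak):.2f} bits")
print(f"one strong competitor: {response_entropy(one_strong):.2f} bits")
```

In this made-up case the target word has the same probability in both contexts, yet the context with one dominant competitor yields the lower entropy (about 1.36 bits versus about 2.52 bits), because its probability mass is spread over fewer, less evenly weighted alternatives.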

Acknowledgments

This research was supported by NIH Grant R01 AG019714 from the National Institute on Aging. We also acknowledge support from training grants T32 AG000204 (A.L.) and T32 NS007292 (C.R.), and support from the W.M. Keck Foundation.

References

- Balota DA, Yap MJ, Cortese MJ, Hutchison KA, Kessler B, Loftis B, Treiman R. The English Lexicon project. Behavior Research Methods. 2007;39:445–459. doi: 10.3758/bf03193014.

- Ben-David BM, Chambers C, Daneman M, Pichora-Fuller MK, Reingold E, Schneider BA. Effects of aging and noise on real-time spoken word recognition: Evidence from eye movements. Journal of Speech, Language and Hearing Research. 2011;54:243–262. doi: 10.1044/1092-4388(2010/09-0233).

- Benichov J, Cox LC, Tun PA, Wingfield A. Word recognition within a linguistic context: Effects of age, hearing acuity, verbal ability, and cognitive function. Ear and Hearing. 2012;33:250–256. doi: 10.1097/AUD.0b013e31822f680f.

- Block CK, Baldwin CL. Cloze probability and completion norms for 498 sentences: Behavioral and neural validation using event-related potentials. Behavior Research Methods. 2010;42:665–670. doi: 10.3758/BRM.42.3.665.

- Bloom PA, Fischler I. Completion norms for 329 sentence contexts. Memory & Cognition. 1980;8:631–642. doi: 10.3758/bf03213783.

- Bruning JL, Kintz BL. Computational handbook of statistics. 2nd ed Scott, Foresman; Glenview, IL: 1977.

- Connine CM, Blasko DG, Titone D. Do the beginnings of spoken words have a special status in auditory word recognition? Journal of Memory and Language. 1993;32:193–210.

- Cotton S, Grosjean F. The gating paradigm: A comparison of successive and individual presentation formats. Perception & Psychophysics. 1984;35:41–48. doi: 10.3758/bf03205923.

- Craig CH. Effects of aging on time-gate isolated word-recognition performance. Journal of Speech and Hearing Research. 1992;35:234–238. doi: 10.1044/jshr.3501.234.

- Craig CH, Kim BW, Rhyner PMP, Chirillo TKB. Effects of word predictability, child development, and aging on time-gated speech recognition performance. Journal of Speech and Hearing Research. 1993;36:832–841. doi: 10.1044/jshr.3604.832.

- Dubno JR, Ahlstrom JB, Horwitz AR. Use of context by young and aged adults with normal hearing. Journal of the Acoustical Society of America. 2000;107:538–546. doi: 10.1121/1.428322.

- Elliott LL, Hammer M, Evan K. Perception of gated highly familiar spoken monosyllabic nouns by children, teenagers and older adults. Perception and Psychophysics. 1987;42:150–157. doi: 10.3758/bf03210503.

- Goodglass H, Wingfield A, Hyde MR, Gleason JB, Bowles NL, Gallagher RE. The importance of word-initial phonology: Error patterns in prolonged naming efforts by aphasic patients. Journal of the International Neuropsychological Society. 1997;3:128–138.

- Grant KW, Seitz PF. The recognition of isolated words and words in sentences: Individual variability in the use of sentence context. Journal of the Acoustical Society of America. 2000;107:1000–1011. doi: 10.1121/1.428280.

- Grosjean F. Spoken word recognition processes and the gating paradigm. Perception and Psychophysics. 1980;28:267–283. doi: 10.3758/bf03204386.

- Grosjean F. The recognition of words after their acoustic offset: Evidence and implications. Perception and Psychophysics. 1985;38:299–310. doi: 10.3758/bf03207159.

- Hasher L, Zacks RT. Working memory, comprehension and aging: A review and a new view. The Psychology of Learning and Motivation. 1988;22:193–225.

- Howes D. On the interpretation of word frequency as a variable affecting speed of recognition. Journal of Experimental Psychology. 1954;48:106–112.

- Humes LE. Speech understanding in the elderly. Journal of the American Academy of Audiology. 1996;7:161–167.

- Hutchinson KM. Influence of sentence context on speech perception in young and older adults. Journals of Gerontology. 1989;44:P36–P44. doi: 10.1093/geronj/44.2.p36.

- Katz J. Handbook of Clinical Audiology. 5th ed Lippincott Williams & Wilkins; Philadelphia, PA: 2002.

- Kochkin S. “Baby Boomers” spur growth in potential market, but penetration rate decline. Hearing Journal. 1999;52:33–48.

- Korsten-Meijer AG, Wit HP, Albers FW. Evaluation of the relation between audiometric and psychometric measures of hearing after tympanoplasty. European archives of oto-rhino-laryngology. 2006;263:256–262. doi: 10.1007/s00405-005-0983-5.

- Lahar CJ, Tun PA, Wingfield A. Sentence-final word completion norms for young, middle-aged, and older adults. Journal of Gerontology: Psychological Sciences. 2004;59B:P7–P10. doi: 10.1093/geronb/59.1.p7.

- Lane H, Grosjean F. Perception of reading rate by speakers and listeners. Journal of Experimental Psychology. 1973;97:141–147. doi: 10.1037/h0033869.

- Lindfield KC, Wingfield A, Bowles NL. Identification of fragmented pictures under ascending versus fixed presentation in young and elderly adults: Evidence for the inhibition-deficit hypothesis. Aging and Cognition. 1994;1:282–291.

- Lindfield KC, Wingfield A, Goodglass H. The contribution of prosody to spoken word recognition. Applied Psycholinguistics. 1999;20:395–405.

- Luce PA. A computational analysis of uniqueness points in auditory word recognition. Perception and Psychophysics. 1986;39:155–158. doi: 10.3758/bf03212485.

- Luce PA, Pisoni DB. Recognizing spoken words: The neighborhood activation model. Ear and Hearing. 1998;19:1–36. doi: 10.1097/00003446-199802000-00001.

- Luce PA, Pisoni DB, Goldinger SD. Similarity neighborhoods of spoken words. In: Altmann GT, editor. Cognitive models of speech processing: Psycholinguistic and computational perspectives. MIT Press; Cambridge, MA: 1990. pp. 122–147.

- Lund K, Burgess C. Producing high-dimensional semantic spaces from lexical co-occurrence. Behavior Research Methods, Instruments & Computers. 1996;28:203–208.

- Marslen-Wilson WD. Sentence perception as an interactive parallel process. Science. 1975;189:226–228. doi: 10.1126/science.189.4198.226.

- Marslen-Wilson WD. Function and process in spoken word recognition. In: Bouma H, Bouwhuis DG, editors. Attention and performance X. Erlbaum; Hillsdale, NJ: 1984.

- Marslen-Wilson WD, Tyler LK. The temporal structure of spoken language understanding. Cognition. 1980;8:1–71. doi: 10.1016/0010-0277(80)90015-3.

- Marslen-Wilson WD, Zwitserlood P. Accessing spoken words: The importance of word onsets. Journal of Experimental Psychology: Human Perception and Performance. 1989;15:576–585.

- Miller JL, Grosjean F, Lomanto C. Articulation rate and its variability in spontaneous speech: A reanalysis and some implications. Phonetica. 1984;41:215–225. doi: 10.1159/000261728.

- Morrell C, Gordon-Salant S, Pearson J, Brant L, Fozard J. Age- and gender-specific reference ranges for hearing level and longitudinal changes in hearing level. Journal of the Acoustical Society of America. 1996;100:1949–1967. doi: 10.1121/1.417906.

- Morton J. The effects of context on the visual duration threshold for words. British Journal of Psychology. 1964;55:165–180. doi: 10.1111/j.2044-8295.1964.tb02716.x.

- Morton J. Interaction of information in word recognition. Psychological Review. 1969;76:165–178.

- Nebes RD, Boller F, Holland A. Use of semantic context by patients with Alzheimer’s disease. Psychology and Aging. 1986;1:261–269. doi: 10.1037//0882-7974.1.3.261.

- Nicholas M, Barth C, Obler LK, Au R, Albert ML. Naming in normal aging and dementia of the Alzheimer’s type. In: Goodglass H, Wingfield A, editors. Anomia: Neuroanatomical and cognitive correlates. Academic Press; San Diego, CA: 1997. pp. 166–188.

- Nooteboom SG, Doodeman GJN. Speech quality and the gating paradigm. In: van den Broeke MPR, Cohen A, editors. Proceedings of the Tenth International Congress on Phonetic Sciences. Foris; Dordrecht: 1984.

- Nooteboom SG, van der Vlugt MJ. A search for a word beginning superiority effect. Journal of the Acoustical Society of America. 1988;84:2018–2032.

- Perry AR, Wingfield A. Contextual encoding by young and elderly adults as revealed by cued and free recall. Aging and Cognition. 1994;1:120–139.

- Pichora-Fuller M, Schneider BA, Daneman M. How young and old adults listen to and remember speech in noise. Journal of the Acoustical Society of America. 1995;97:593–608. doi: 10.1121/1.412282.

- Rogers CS, Jacoby LL, Sommers MS. Frequent false hearing in older adults: the role of age differences in metacognition. Psychology and Aging. 2012;27:33–45. doi: 10.1037/a0026231.

- Rönnberg J, Rudner M, Lunner T. Cognitive hearing science: The legacy of Stuart Gatehouse. Trends in Amplification. 2011;20:1–9. doi: 10.1177/1084713811409762.

- Shannon CE. A mathematical theory of communication. The Bell System Technical Journal. 1948;27:379–423, 623–656.

- Shannon CE, Weaver W. The mathematical theory of communication. The University of Illinois Press; Urbana, IL: 1949.

- Slowiaczek LM, Nusbaum HC, Pisoni DB. Phonological priming in auditory word recognition. Journal of Experimental Psychology: Learning, Memory and Cognition. 1987;13:64–75. doi: 10.1037//0278-7393.13.1.64.

- Sommers MS. The structural organization of the mental lexicon and its contribution to age-related declines in spoken-word recognition. Psychology and Aging. 1996;11:333–341. doi: 10.1037//0882-7974.11.2.333.

- Sommers MS, Danielson SM. Inhibitory processes and spoken word recognition in young and older adults: The interaction of lexical competition and semantic context. Psychology and Aging. 1999;14:458–472. doi: 10.1037//0882-7974.14.3.458.

- Sorensen JM, Cooper WE, Paccia JE. Speech timing of grammatical categories. Cognition. 1978;6:135–153. doi: 10.1016/0010-0277(78)90019-7.

- Stanovich KE, Nathan RG, West R, Vala-Rossi M. Children’s word recognition in context: Spreading activation, expectancy, and modularity. Child Development. 1985;56:1418–1428.

- Stine EAL, Wingfield A. Older adults can inhibit high-probability competitors in speech recognition. Aging and Cognition. 1994;1:152–157.

- Stine EAL, Wingfield A, Myers SD. Age differences in processing information from television news: The effects of bisensory augmentation. Journal of Gerontology: Psychological Sciences. 1990;45:P1–P8. doi: 10.1093/geronj/45.1.p1.

- Tyler LK. The structure of the initial cohort: evidence from gating. Perception and Psychophysics. 1984;36:417–427. doi: 10.3758/bf03207496.

- Taylor WL. “Cloze” procedure: A new tool for measuring readability. Journalism Quarterly. 1953;30:415–433.

- Treisman AM. Effect of verbal context on latency of word selection. Nature. 1965;206:218–219. doi: 10.1038/206218a0.

- van Rooij JCGM, Plomp R. The effect of linguistic entropy on speech perception in noise in young and elderly listeners. Journal of the Acoustical Society of America. 1991;90:2985–2991. doi: 10.1121/1.401772.

- Verhaeghen P. Aging and vocabulary score: A meta-analysis. Psychology and Aging. 2003;18:332–339. doi: 10.1037/0882-7974.18.2.332.

- Wayland SC, Wingfield A, Goodglass H. Recognition of isolated words: The dynamics of cohort reduction. Applied Psycholinguistics. 1989;10:475–487.

- Wingfield A, Aberdeen JS, Stine EAL. Word onset gating and linguistic context in spoken word recognition by young and elderly adults. Journal of Gerontology: Psychological Sciences. 1991;46:127–129. doi: 10.1093/geronj/46.3.p127.

- Wingfield A, Goodglass H, Lindfield KC. Word recognition from acoustic onsets and acoustic offsets: Effects of cohort size and syllabic stress. Applied Psycholinguistics. 1997;18:85–100.

- Wingfield A, Goodglass H, Smith KL. Effects of word-onset cuing on picture naming in aphasia: A reconsideration. Brain and Language. 1990;39:373–390. doi: 10.1016/0093-934x(90)90146-8.

- Wingfield A, Lindfield KC, Goodglass H. Effects of age and hearing sensitivity on the use of prosodic information in spoken word recognition. Journal of Speech, Language, and Hearing Research. 2000;43:915–925. doi: 10.1044/jslhr.4304.915.

- Zacks RT, Hasher L, Li KZH. Human memory. In: Craik FIM, Salthouse TA, editors. Handbook of aging and cognition. Erlbaum; Mahwah, NJ: 1999. pp. 200–230.

- Zachary RA. Shipley Institute of Living Scale: Revised Manual. Western Psychological Services; Los Angeles: 1986.