Abstract

The HEALTHY Study was a 3-year school-based intervention designed to change the behaviors of middle school students to reduce their risk for developing type 2 diabetes mellitus. This report examines the relation between exposure to communications campaign materials and behavior change among students in the HEALTHY intervention schools. Using data from campaign tracking logs and student interviews, the authors examined communications campaign implementation and exposure to the communications campaign as well as health behavior change. Campaign tracking documents revealed variability across schools in the quantity of communications materials disseminated. Student interviews confirmed that there was variability in the proportion of students who reported receiving information from the communication campaign elements. Correlations and regression analysis controlling for semester examined the association between campaign exposure and behavior change across schools. There was a significant association between the proportion of students exposed to the campaign and the proportion of students who made changes in health behavior commensurate with study goals. The results suggest that, in the context of a multifaceted school-based health promotion intervention, schools that achieve a higher rate of exposure to communication campaign materials among the students may stimulate greater health behavior change.

The relatively recent dramatic rise in the prevalence of type 2 diabetes mellitus among adolescents and young adults has stimulated innovative efforts to identify effective means to treat and prevent this potentially debilitating metabolic disorder (Alberti et al., 2004; Kaufman, 2002; Rosenbloom, Joe, Young, & Winter, 1999). As an efficient environment within which to deliver preventive interventions, schools continue to attract attention from researchers interested in demonstrating the potential for ameliorating the current trend in diabetes mellitus (Dobbins, De Corby, Robeson, Husson, & Tirilis, 2009; Cook-Cottone, Casey, Feeley, & Baran, 2009). One of the most comprehensive school-based diabetes prevention studies was the HEALTHY Study (Hirst et al., 2009), a multisite intervention trial funded by the National Institute of Diabetes and Digestive and Kidney Diseases within the National Institutes of Health that targeted at-risk middle school students over a 3-year period.

The HEALTHY Study

The HEALTHY Study was a multifaceted school-based intervention designed to change the behaviors and school environment of middle school students to reduce their risk for developing type 2 diabetes mellitus. The immediate goals of the intervention were to improve dietary and physical activity behaviors with the intention of promoting a healthful body composition, as reflected in body mass index. Students were recruited into the study at the beginning of the sixth grade and were followed until the end of the eighth grade. There were no statistically significant differences between the control and intervention students on any of the baseline measures, including gender, ethnicity, body mass index, waist circumference, blood pressure, and fasting insulin and glucose (The HEALTHY Study Group, 2009). Between the sixth and eighth grades, half of the 42 participating schools received the intervention, which permeated the school through changes in physical education, nutrition services, and classroom instruction. Considerable effort was expended to promote standard and consistent delivery of (a) a physical education–based intervention that encouraged more effective instruction to increase students’ moderate to vigorous physical activity; (b) a systematic modification of foods served throughout the total school environment; and (c) a classroom-based educational component that provided students with the rationale for the environmental changes occurring in the schools.

Primary outcome analyses for the HEALTHY Study (HEALTHY Study Group, 2010) revealed significantly greater reductions in intervention schools compared with controls in body mass index z score, waist circumference >90 percentile, and fasting insulin (all ps < .05). There also was a trend for a lower prevalence of obesity (p = .05) in intervention schools. The purpose of this report is to examine the degree to which the communications campaign, which functioned to integrate the various intervention elements and promote visibility of the intervention as a whole, was implemented across the intervention schools and to examine the relation between exposure to the communications campaign and self-reported behavior change among students.

The HEALTHY Study Communications Campaign

A brief description of the communications campaign is presented here, focusing on the elements of the campaign that were consistent across all five semesters. A full description of the communications campaign is available elsewhere (DeBar et al., 2009). Throughout the intervention, which spanned five school semesters (spring of students’ sixth-grade year through spring of students’ eighth-grade year), each intervention school received a centrally mandated set of communications campaign elements. One constant was the use of posters to visibly disseminate the behavioral theme of the intervention, which changed each semester, and to expose students to images of adolescents engaging in and promoting healthful dietary and physical activity behaviors. Study posters decorated the walls in the halls, cafeterias, gymnasiums, and other locations where students were likely to congregate. Each intervention school also prominently displayed a large canvas banner with the study logo throughout the intervention. In addition to these images, promotional messages were periodically broadcast to the students through the public address system or, in a few schools, through closed-circuit television. Theme-relevant activities were delivered each semester in the form of cafeteria learning labs (interactive instructional activities delivered in conjunction with the meal service) and events-in-a-box (prepackaged one-time events that involved the whole school and promoted the study themes). These latter one-time events integrated the communications campaign with the other ongoing elements of the intervention and so promoted a cohesive study identity across the various intervention pieces. Students were recruited to act as intervention ambassadors (also known as student peer communicators), and these students delivered many of the public address announcements and assisted with cafeteria learning labs. 
During the last three intervention semesters, all students were invited to participate in photo shoots, from which school-specific posters were produced. These student-generated media replaced some of the centrally produced images featuring professional models.

The shift to student-generated media reflected an ongoing challenge to the communication campaign, which was to create images that would be relevant to all of the students at all of the participating schools. To be eligible for the study, schools had to have a student body composed of at least 50% minority students and/or at least 50% students eligible for free or reduced-price meals. Between sites, the relative representation of specific subgroups varied considerably, from sites in Pennsylvania and North Carolina, where African Americans predominated, to sites in California and Texas, where Hispanics were more strongly represented. Throughout the study, Spanish-language versions of campaign materials were available, but some schools had policies in place that prevented implementing the Spanish-language materials. Over time, the demographic differences across sites necessitated a decentralized approach to the communications campaign. Thus, the student-generated media phase was a response to the realities of the existing differences in target populations across the seven study field centers.

Process Evaluation of the HEALTHY Study

Because the intervention took place in 21 intervention middle schools distributed among seven sites across the United States, considerable effort was expended to ensure a high degree of uniformity in intervention delivery. A prominent feature of this effort was the extensive process evaluation data collection that occurred throughout the study (Schneider et al., 2009). Interviews, observations, and logs were rigorously implemented and regularly summarized to track and monitor the intervention implementation, including the activities of the communication campaign.

Despite the staff’s best efforts at standardization, some variability in implementation across intervention sites was inevitable, given the differences in geography, architecture, demographics, weather, administrative restrictions, and other variables outside of study control. These differences across study sites led to a change in the design of the communications campaign from early in the study, when all materials were centrally designed and produced, to later in the study, when more control over communications campaign materials was ceded to local sites. Although still required to adhere to a standard set of image and content guidelines, later in the study sites had flexibility in determining the specific content, size, and number of print materials displayed. Consequently, a highly structured data collection process documented the variability in implementation across time and across schools, thus yielding a detailed picture of the degree to which the HEALTHY Study was successful in achieving consistent fidelity to the study protocol.

The attention paid to documenting the intervention implementation in the HEALTHY Study reflects a growing recognition in the scientific community that process evaluation is an essential component of meaningful evaluations of community-based interventions (Armstrong et al., 2008). A 2005 Cochrane Review of school-based interventions designed to reduce obesity (Summerbell et al., 2005) notes that the majority of the interventions reviewed lacked a process evaluation. In more recent studies, the inclusion of process monitoring in school-based research is more common. Process evaluation enables investigators to open up the “black box” of interventions (Steckler & Linnan, 2002). By doing so, process evaluation may identify weak links in an intervention that account for a lack of effect and/or highlight intervention components that are especially critical to the intervention's success. In addition, tracking intervention implementation yields valuable information about the feasibility of planned programs and results in lessons learned that offer guidance to future program planners (Murtagh et al., 2007).

Using Process Evaluation to Assess the Effect of the Communications Campaign

As one component of a multifaceted intervention, the communications campaign was intended to “set the agenda” (McCombs & Shaw, 1973) within the schools. By directing students’ attention to healthy lifestyles, the communications campaign was expected to create a context within which students would be receptive to the changes to nutrition services and physical education. As such, the HEALTHY communications campaign was an example of the use of health communication, which has been defined as “the production and exchange of information to inform, influence or motivate individual, institutional and public audiences about health issues” (Maibach, Abroms, & Marosits, 2007, p. 4). The HEALTHY Study used health communication to (a) inform students about the benefits of nutritious eating and physical activity participation and (b) encourage and motivate students to engage in these healthy behaviors. It was assumed, however, that the communications campaign would succeed in its objectives only to the extent that students were exposed to, and attended to, the campaign elements. The study investigators therefore included an assessment of exposure to communication campaign components within the process evaluation design.

The present report serves both a descriptive and an analytic purpose. Our descriptive intent was to provide data on the consistency of communications campaign implementation across the 21 intervention schools included in the study. Our analytic intent was to examine whether differences in exposure to the campaign between schools might be associated with differences in the proportion of students who report behavior changes. We investigated the hypothesis that a greater proportion of students would report behavior change within those schools that achieved the higher rates of exposure.

Method

Subjects

The characteristics of the study cohort are detailed in Hirst and colleagues’ (2009) study. In brief, the students in the intervention schools comprised a sample of 2,307 children that was 54.8% Hispanic, 20.3% African American, 17.1% White, and 7.8% other. At baseline, 50.3% of the students in the intervention school sample were determined to be at or above the 85th percentile for body mass index, and 30.1% of the students in the intervention schools were at or above the 95th percentile for body mass index.

Instruments and Procedures

This report draws on two components of the process evaluation for the HEALTHY Study. Other elements of the process evaluation are described elsewhere (Schneider et al., 2009). All procedures were reviewed and approved by the institutional review boards at all seven participating study sites as well as the Institutional Review Board of the Coordinating Center. Students who participated in the data collection and/or acted as models for the student-generated media portion of the intervention signed an assent form and parents provided signed consent for their child’s participation.

Campaign Tracking Log

A tracking log was used to document the timing and number of each of the communication campaign elements introduced into the school environment. The HEALTHY Study’s staff completed worksheets to document each time a campaign element was introduced into the school (e.g., posters, public address announcement, cafeteria learning lab). A worksheet was filled out each time a staff member hung new posters, each time a student delivered a public address announcement, and each time a cafeteria learning lab or event-in-a-box was conducted in the school. At the end of each semester, the information from the worksheets was compiled in a summary document used to track the communications campaign activities for that semester. As an additional source of information, study research assistants, who were specifically excluded from participating in intervention activities, conducted periodic walk-through observations of each school site to document visible campaign elements. These data were transferred to the summary document at the end of the semester. The worksheets and walk-through observations complemented one another, since there were intervening events outside of study control that affected whether materials introduced into the school environment remained visible (e.g., vandalism, weather damage, removal of materials by school custodial staff).

For those communication campaign elements that were one-time events, such as the cafeteria learning labs and the events-in-a-box, the campaign tracking log documented their implementation (i.e., delivered/not delivered) each semester. Using the information gathered by the worksheets and posted to the summary document at the end of each semester, campaign elements that were continuous and incremental, including the display of posters and the delivery of public address announcements, were quantified as the number introduced to the school in a semester. The presence or absence of the exterior study banner, intended to be prominently hung throughout the 3 years of the study, was documented each time the research assistants conducted a walk-through, and was represented by the proportion of observations per semester on which the banner was observed.
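As a minimal sketch of how the banner measure described above could be computed, the following Python snippet takes the walk-through observations for one school in one semester and returns the proportion on which the banner was seen. The function name and the example observations are hypothetical; the source does not describe the study's data handling at this level of detail.

```python
from typing import Sequence


def banner_proportion(observations: Sequence[bool]) -> float:
    """Proportion of a semester's walk-through observations on which the banner was seen.

    Each entry is True if the banner was observed on that walk-through.
    """
    if not observations:
        raise ValueError("at least one walk-through observation is required")
    return sum(observations) / len(observations)


# Hypothetical semester: banner observed on 3 of 4 walk-throughs.
example = banner_proportion([True, True, False, True])
```

The continuous elements (posters, public address announcements) need no such transformation, since they were quantified simply as the number introduced in a semester.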

Student Interviews

At each intervention school, a convenience sample of consented students was interviewed every semester. The interview participants were selected from the roster of consented students at the school. Each study site was permitted to use a system of selection that was feasible within the given constraints of their particular environment as long as an effort was made to select students without any systematic bias. A minimum of 20 students or 10% of the consented students (whichever was larger) at each school was interviewed each semester. The interviews were conducted by research assistants and/or study coinvestigators trained in qualitative data collection techniques, and took place at each intervention school. Each interview lasted approximately 10 minutes, and interviewers took detailed notes to record as accurately as possible students’ responses.
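The minimum sample size rule stated above (20 students or 10% of consented students, whichever was larger) can be sketched in Python as follows. Rounding the 10% figure up to a whole student is an assumption, as is the function name; the source does not say how fractional values were handled or what happened at schools with fewer than 20 consented students.

```python
def minimum_interviews(consented: int) -> int:
    """Minimum number of students to interview at one school in one semester.

    Returns the larger of 20 or 10% of consented students. The 10% figure is
    rounded up using integer arithmetic (an assumption, not stated in the source).
    """
    if consented < 0:
        raise ValueError("consented must be non-negative")
    ten_percent_rounded_up = (consented + 9) // 10
    return max(20, ten_percent_rounded_up)
```

Under this rule the 10% criterion only takes effect at schools with more than 200 consented students; below that, the floor of 20 applies.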

The interview comprised a checklist as well as yes/no (3 items) and open-ended (14 items) questions eliciting students’ recall and perceptions of multiple intervention components. Students were asked to recall any sources of study information that they had seen that semester in answer to the question: “Where in your school did you see or hear what the HEALTHY program was saying?” Responses were coded on a checklist to indicate whether a student was exposed to study information from posters, classmates, the cafeteria, cafeteria learning labs, and public address announcements.

In addition to items assessing the students’ exposure to the communications campaign, the interviewer also asked about any changes in health behavior that might have resulted from the study. Specifically, students were asked, “Has the HEALTHY program changed choices you make outside of school?” (yes/no) and “If YES, how so?” Responses to the latter question were coded by interviewers as being relevant to the following behaviors emphasized in the intervention: eating; exercise; reducing time spent watching television or sitting around (less TV); drinking more water; and drinking less soda. Interviewers were instructed to check the affirmative for a behavioral category if the student respondent reported a healthful change in a behavior related to that goal.

Data Analysis

To assess fidelity to the communications campaign protocol, the frequency of one-time events reported in the campaign tracking summaries was compared to the frequency prescribed by the study’s Manual of Procedures. Fidelity to the protocol with respect to the number of posters hung in the school was assessed by comparing the number hung to the minimum recommended in the Manual of Procedures. For each semester, each school was categorized as having met, missed, or exceeded the minimum recommendation.
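The met/missed/exceeded categorization described above can be illustrated with a short Python sketch. It assumes that "met" means a count exactly equal to the recommended minimum; the function names and the example counts are hypothetical and are not taken from the study data.

```python
from collections import Counter
from typing import Dict, Sequence


def classify_dose(count: int, recommended_minimum: int) -> str:
    """Label one school's semester count relative to the recommended minimum."""
    if count < recommended_minimum:
        return "missed"
    if count == recommended_minimum:
        return "met"  # assumption: "met" means exactly the minimum
    return "exceeded"


def category_proportions(counts: Sequence[int], recommended_minimum: int) -> Dict[str, float]:
    """Proportion of schools falling in each category for one semester."""
    if not counts:
        raise ValueError("at least one school count is required")
    tally = Counter(classify_dose(c, recommended_minimum) for c in counts)
    return {label: tally[label] / len(counts) for label in ("missed", "met", "exceeded")}


# Hypothetical poster counts for four schools against a minimum of 60.
example = category_proportions([55, 60, 75, 90], recommended_minimum=60)
```

Applying `category_proportions` once per semester and per campaign element would yield proportions of the kind summarized in a missed/met/exceeded chart.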

Following the completion of each round of student interviews, a trained research assistant summarized the data from each school into a key point summary. This summary, in which the proportion of students responding in the affirmative to each survey item was reported, was sent to the Qualitative Data Core at the University of North Carolina, Chapel Hill, and was entered into a computerized database. This resulted in 105 summaries over the 3 years of the study. Thus, data that were analyzed for this report reflect summary data from each wave of interviews at each school; individual student responses were not retained. For example, for a single semester at a single school, the dataset contains one summary report in which the proportion of students who responded “yes” or “no” to each item is documented. The data from these summaries were used to create a measure of overall campaign exposure formed by averaging the proportions of students who reported being exposed to each campaign element. The resulting variable, representing the average proportion of students exposed to campaign elements, was used in subsequent analyses.
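The overall exposure measure described above is an unweighted average of per-element proportions within one school summary. A minimal Python sketch follows; the dictionary keys and the example values are hypothetical, and the equal weighting of elements simply follows the description of "averaging the proportions."

```python
from statistics import mean
from typing import Mapping


def exposure_index(element_proportions: Mapping[str, float]) -> float:
    """Average proportion of interviewed students reporting exposure across campaign elements."""
    if not element_proportions:
        raise ValueError("at least one campaign element is required")
    return mean(element_proportions.values())


# Hypothetical key point summary for one school in one semester (percentages).
summary = {
    "posters": 70.0,
    "classmates": 10.0,
    "cafeteria_messaging": 50.0,
    "cafeteria_learning_labs": 20.0,
    "public_address": 30.0,
}
index = exposure_index(summary)  # (70 + 10 + 50 + 20 + 30) / 5
```

Because only school-level summaries were retained, an index of this kind describes the school in a given semester and cannot be attributed to any individual student.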

In addition to examining descriptive statistics (means and standard deviations) for exposure to communications campaign information and self-reported behavior changes, the association between exposure and behavior change was investigated. For each behavior, the correlation between the index of exposure and the proportion of students reporting that behavior change was examined by semester. In addition, regression equations controlling for semester were used to investigate the overall association between exposure and behavior change across the whole study.
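The per-semester correlations described above can be sketched in plain Python as a Pearson correlation computed separately within each semester. The row layout, function names, and example numbers below are hypothetical; the source does not name the software used.

```python
from math import sqrt
from typing import Dict, Iterable, List, Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length of at least 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable has zero variance")
    return sxy / sqrt(sxx * syy)


def correlations_by_semester(
    rows: Iterable[Tuple[int, float, float]]
) -> Dict[int, float]:
    """rows holds (semester, exposure_index, behavior_proportion), one per school summary."""
    grouped: Dict[int, Tuple[List[float], List[float]]] = {}
    for semester, exposure, behavior in rows:
        xs, ys = grouped.setdefault(semester, ([], []))
        xs.append(exposure)
        ys.append(behavior)
    return {semester: pearson_r(xs, ys) for semester, (xs, ys) in grouped.items()}


# Hypothetical summaries: three schools in each of two semesters.
rows = [
    (1, 40.0, 30.0), (1, 50.0, 40.0), (1, 60.0, 50.0),
    (2, 40.0, 50.0), (2, 50.0, 40.0), (2, 60.0, 30.0),
]
result = correlations_by_semester(rows)
```

In the study each semester would contribute 21 school summaries, so every within-semester correlation rests on 21 data points.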

Results

Communications Campaign Dose

Schoolwide Events and Materials

Examination of the campaign tracking log summaries demonstrated that all intervention schools implemented the one-time events mandated by the study protocol each semester. Thus, there was no variance between schools in the dose of cafeteria learning labs and/or events-in-a-box delivered within the schools.

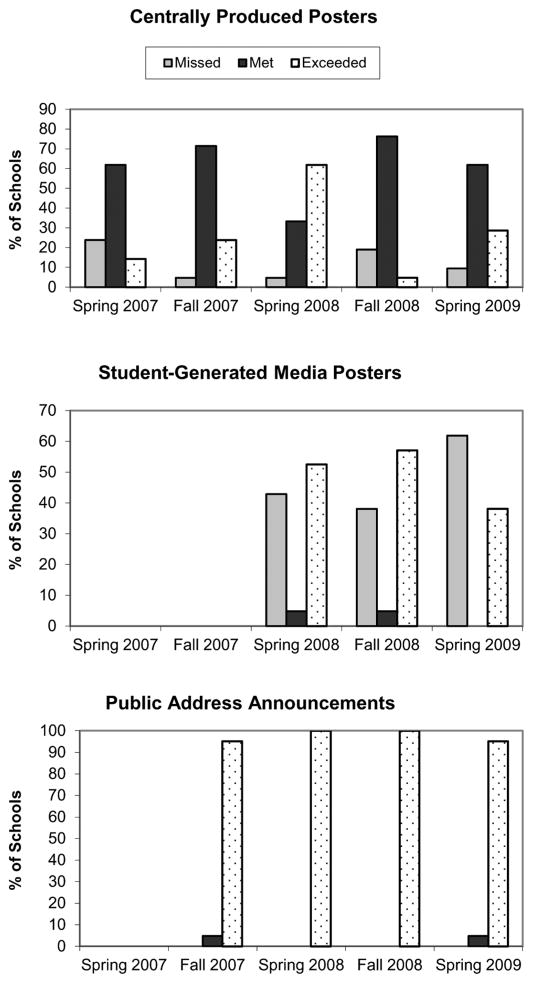

Figure 1 shows the proportion of schools that missed, met, and exceeded the recommended doses of centrally produced posters, student-generated media, and public address announcements across the five semesters of the intervention. Table 1 provides the means and standard deviations for each campaign element. Omitting the first intervention semester, during which production issues delayed deployment of the posters, the proportion of schools that met or exceeded the minimum recommended doses for centrally produced posters was quite high (more than 90% for three out of four semesters; more than 80% for the fourth semester). The implementation of centrally produced posters followed a curvilinear pattern, with the proportion of schools exceeding the minimum increasing from 14.3% in the first semester to 61.9% in the third semester and then decreasing to 28.3% in the final semester. Greater variability emerged in implementation of the student-generated media. Across the three semesters during which this component was introduced, close to 50% of the intervention schools missed the minimum recommended dose. Reflecting the greater flexibility afforded to sites in deploying the student-generated media posters, schools were almost evenly divided between those that missed and those that exceeded the recommended minimum. Almost all schools exceeded the minimum in terms of the number of public address announcements delivered each semester. Thus, the poster component of the communications campaign was the most variable element in terms of implementation across schools.

Figure 1.

Proportion of schools (n = 21) that missed, met, or exceeded recommended doses of communication campaign elements.

Table 1.

Recommended minimums,ᵃ means, and standard deviations for posters and public address announcements

| | Spring 2007 | Fall 2007 | Spring 2008 | Fall 2008 | Spring 2009 |
|---|---|---|---|---|---|
| Centrally produced posters | | | | | |
| Recommended minimum | 90 | 120 | 60 | 60 | 60 |
| Mean | 87.2 | 121.3 | 77.2 | 60.7 | 65.2 |
| Standard deviation | 13.0 | 4.7 | 34.6 | 8.2 | 12.7 |
| Student-generated media posters | | | | | |
| Recommended minimum | N/A | N/A | 240 | 100 | 100 |
| Mean | | | 224.4 | 214.9 | 231.0 |
| Standard deviation | | | 63.7 | 138.0 | 182.1 |
| Public address announcements | | | | | |
| Recommended minimum | N/A | 8 | 8 | 8 | 8 |
| Mean | | 15.6 | 16.8 | 22.6 | 17.1 |
| Standard deviation | | 10.1 | 12.5 | 19.7 | 10.4 |

ᵃ Recognizing the wide variability in setting characteristics across schools, the study protocol mandated a minimum number of campaign elements to be implemented at each school; sites were permitted to exceed that minimum to accommodate specific school sizes, layouts, and/or policies.

N/A = not applicable; these elements were introduced later in the intervention.

All schools broadcast at least the recommended number of public address announcements each semester, but variability in the dose was introduced by schools that exceeded the recommended number. Thus, whereas fidelity to the Manual of Procedures was very high in terms of schools implementing the minimum requirements of the protocol, there was a considerable range across schools in the quantity of public address announcements that were deployed.

Self-Reported Exposure to Communications Campaign

Using the student interviews that were conducted each semester, we obtained an estimate of how salient each communications campaign element was to the students (Table 2). When the data were aggregated across all five intervention semesters and all 21 intervention schools, the most commonly reported source of information about the HEALTHY Study was posters (66%), followed by the cafeteria messaging (49%). The least reported sources of study information were cafeteria learning labs (17%) and classmates (17%).

Table 2.

Mean proportion of students interviewed who reported exposure to study information by source and semester from the HEALTHY intervention schools (N = 21 schools)

| Semester | Posters M | Posters SD | Classmates M | Classmates SD | Cafeteria messaging M | Cafeteria messaging SD | Cafeteria learning labs M | Cafeteria learning labs SD | Public address announcements M | Public address announcements SD |
|---|---|---|---|---|---|---|---|---|---|---|
| Spring 2007 | 73 | 21 | 42 | 28 | 65 | 18 | 42 | 29 | 44 | 23 |
| Fall 2007 | 51 | 16 | 7 | 4 | 42 | 22 | 13 | 18 | 13 | 16 |
| Spring 2008 | 62 | 16 | 13 | 13 | 37 | 16 | 10 | 19 | 18 | 16 |
| Fall 2008 | 76 | 12 | 14 | 8 | 54 | 20 | 12 | 19 | 20 | 12 |
| Spring 2009 | 67 | 17 | 9 | 7 | 46 | 15 | 7 | 7 | 18 | 18 |
| Mean across semesters | 66 | 19 | 17 | 19 | 49 | 20 | 17 | 22 | 22 | 18 |

Some interesting patterns emerged when the data were examined by semester. Across all categories, the proportion of students who reported being exposed to study information was highest in the first semester. The majority reported being exposed to information from posters (73%) and the cafeteria messaging (65%), while around 40% of students reported receiving information from classmates, the cafeteria learning lab, or public address announcements. Over time, exposure decreased in all categories, with the least decline in the category of posters, which stayed nearly constant throughout the study. There was a considerable drop in reports of getting information from classmates (from 42% in the first semester to 9% in the last) and in exposure to the cafeteria learning lab (from 42% to 7%).

As suggested by the patterns in Table 2, there was a correlation between semester and self-reported exposure to the communications campaign elements. Using all 105 survey summaries for all intervention schools across all five semesters, the proportion of students reporting exposure to each source of information was correlated with semester (coded as 1 to 5). The correlations were significant for classmates (r = −.42, p < .001), cafeteria learning labs (r = −.44, p < .001), and public address announcements (r = −.34, p < .001). Exposure overall declined with time as well (r = −.32, p < .001). The negative correlations indicate that as the intervention progressed the proportion of students reporting exposure to each campaign element decreased. Reports of exposure to posters and cafeteria messaging did not drop significantly over time, suggesting that these communications elements may have retained their salience over time better than the other program elements.

Reported Behavior Change

Table 3 shows the means and standard deviations of the self-reported changes in behavior outside of school. For most behaviors, the highest proportion of students reported changing behavior in the first semester. The one exception was changes in eating behavior (i.e., modifications that were consistent with the study goals), which peaked in the third and fourth semesters. Looking at the combined data across all five intervention semesters, the most frequently reported behavior change was in eating consistent with study goals (66%), and the least frequently reported was a reduction in time spent watching TV (21%). The differences across semesters, however, were substantial; for example, 74% of respondents indicated that they had increased their water intake in the first semester (during which the intervention theme targeted choosing water over sugared beverages), compared with 29% in the last semester.

Table 3.

Proportion of students interviewed who reported changing behavior outside of school (N = 21 schools)

| Semester | Theme | Eating M | Eating SD | Exercise M | Exercise SD | Less TV M | Less TV SD | Water M | Water SD | Soda M | Soda SD |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Spring 2007 | Water versus added-sugar beverages | 59 | 19 | 60 | 19 | 34 | 20 | 74 | 11 | 57 | 19 |
| Fall 2007 | Physical activity versus sedentary behavior | 54 | 14 | 55 | 17 | 23 | 11 | 36 | 15 | 18 | 12 |
| Spring 2008 | High-quality food versus low-quality food | 77 | 10 | 38 | 14 | 12 | 11 | 27 | 15 | 15 | 8 |
| Fall 2008 | Energy balance | 77 | 15 | 49 | 17 | 22 | 11 | 25 | 17 | 14 | 10 |
| Spring 2009 | Strength balance and choice for life | 64 | 13 | 42 | 16 | 13 | 11 | 29 | 14 | 15 | 9 |
| Average across semesters | | 66 | 17 | 49 | 18 | 21 | 15 | 38 | 23 | 24 | 20 |

Note. Eating refers to changes in dietary practices consistent with study goals, exercise refers to increased exercise participation, less TV refers to reduced time spent watching TV and/or sitting around, water refers to increased water intake, and soda refers to decreased soda intake.

The correlations between semester (coded as 1 to 5) and the proportion of students reporting each behavior change were significant for all behaviors. The only behavior change that was reported with greater frequency toward the end of the study than at baseline was eating behavior consistent with study goals (r = .28, p < .01). Negative trends were found for exercise (r = −.32, p < .01), less TV (r = −.40, p < .001), water (r = −.62, p < .001), and soda (r = −.59, p < .001). The negative correlations indicate that fewer students reported these behavioral changes as the intervention progressed.

Association Between Self-Reported Exposure and Behavior Change

Data from the student interviews were used to examine the association between exposure to campaign elements and self-reported change in behavior outside of school (see Table 4). Simple correlations, computed separately for each semester, revealed strong positive correlations between self-reported exposure to intervention information and all of the behavioral categories assessed (eating, exercise, less TV, water, and soda) during the first semester of the intervention. Several of the correlations were nonsignificant in later semesters, although it is interesting to note that the association between reported changes in exercise behavior and exposure to the communications campaign was significant in every semester except the spring of 2008, when the emphasis was on nutrition (see Table 3). It is also noteworthy that all of the behaviors except eating were positively associated with exposure to the campaign in the last intervention semester. When the significance levels were adjusted using the Bonferroni correction for multiple comparisons, significant associations remained between exposure to the campaign and exercise behavior change for the first, second, and last semester and between exposure and both eating and soda drinking for the first semester.
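The Bonferroni adjustment mentioned above compares each p value with the nominal alpha divided by the number of tests in the family. The Python sketch below illustrates the rule on hypothetical p values. The source does not state the family size the authors used; if the family were taken to be all 25 per-semester correlations (5 behaviors by 5 semesters), the threshold would be .05/25 = .002, but that is an assumption.

```python
from typing import List, Sequence


def bonferroni_significant(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Flag each test as significant if its p value is at or below alpha / number of tests."""
    n_tests = len(p_values)
    if n_tests == 0:
        return []
    threshold = alpha / n_tests
    return [p <= threshold for p in p_values]


# Hypothetical family of five tests: the adjusted threshold is 0.05 / 5 = 0.01.
flags = bonferroni_significant([0.0005, 0.004, 0.03, 0.2, 0.001])
```

Tests that pass the unadjusted .05 level can fail the adjusted one, as the third hypothetical p value (0.03) does here.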

Table 4.

Associations of self-reported exposure to communications campaign with change in behavior across semesters: Simple correlations and regression analysis controlling for semester (N = 21 schools)

| Semester | Theme | Eating | Exercise | Less TV | Water | Soda |
|---|---|---|---|---|---|---|
| Spring 2007 | Water versus added-sugar beverages | 0.82***† | 0.69***† | 0.59** | 0.64** | 0.79***† |
| Fall 2007 | Physical activity versus sedentary behavior | 0.27 | 0.72***† | 0.47† | 0.53* | 0.19 |
| Spring 2008 | High-quality food versus low-quality food | 0.28 | 0.34 | 0.17 | 0.42 | 0.02 |
| Fall 2008 | Energy balance | 0.43* | 0.65** | 0.39 | 0.06 | 0.43 |
| Spring 2009 | Strength balance and choice for life | 0.26 | 0.68***† | 0.51* | 0.41* | 0.46* |
| Regression analysis | | | | | | |
| F | | 16.31 | 28.77 | 32.00 | 63.29 | 95.59 |
| ΔR² | | 0.16 | 0.26 | 0.22 | 0.17 | 0.30 |
| B (SE) | | 0.45 (0.09) | 0.61 (0.09) | 0.48 (0.08) | 0.63 (0.10) | 0.74 (0.08) |

Note. Eating refers to changes in dietary practices consistent with study goals, exercise refers to increased exercise participation, less TV refers to reduced time spent watching TV and/or sitting around, water refers to increased water intake, and soda refers to decreased soda intake. All regression analyses are significant at p < .001.

*p < .05; **p < .01; ***p < .001.

†Remained statistically significant using the Bonferroni correction for multiple tests.

To control for the effect of semester, linear regression analyses were conducted separately for each behavior. The outcomes of these analyses (see Table 4) show that, when the intervention as a whole is considered, there was a significant positive association between exposure to the communications campaign and behavior change for all five behaviors assessed. All of the regressions were statistically significant even when adjusted for multiple comparisons.
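One way to read the ΔR² and B values in Table 4 is as the result of adding exposure to a model that already contains semester. The sketch below illustrates that idea under stated assumptions: semester is entered as a single linear covariate coded 1 to 5, each observation is one school in one semester, and all data values and the names `ols` and `delta_r2` are hypothetical. The article does not specify the exact model, so this is not a reproduction of the authors' analysis.

```python
from statistics import mean


def ols(X, y):
    """Least-squares fit of y on the columns of X plus an intercept.

    Returns (coefficients, R^2), with the intercept first.
    Assumes the predictors are not perfectly collinear.
    """
    rows = [[1.0] + list(r) for r in X]
    k = len(rows[0])
    # Build the normal equations A b = c
    A = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    c = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    # Gaussian elimination with partial pivoting
    for col in range(k):
        piv = max(range(col, k), key=lambda idx: abs(A[idx][col]))
        A[col], A[piv] = A[piv], A[col]
        c[col], c[piv] = c[piv], c[col]
        for row in range(col + 1, k):
            f = A[row][col] / A[col][col]
            for j in range(col, k):
                A[row][j] -= f * A[col][j]
            c[row] -= f * c[col]
    # Back substitution
    b = [0.0] * k
    for i in reversed(range(k)):
        b[i] = (c[i] - sum(A[i][j] * b[j] for j in range(i + 1, k))) / A[i][i]
    fitted = [sum(bi * xi for bi, xi in zip(b, r)) for r in rows]
    y_bar = mean(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return b, 1.0 - ss_res / ss_tot


def delta_r2(semester, exposure, behavior):
    """Return (gain in R^2 from adding exposure, unstandardized B for exposure)."""
    _, r2_reduced = ols([[s] for s in semester], behavior)
    coefs, r2_full = ols([[s, e] for s, e in zip(semester, exposure)], behavior)
    return r2_full - r2_reduced, coefs[2]


# Hypothetical school-by-semester proportions (not study data)
semester = [1, 1, 2, 2, 3, 3, 4, 4, 5, 5]
exposure = [0.90, 0.60, 0.70, 0.50, 0.80, 0.40, 0.60, 0.30, 0.70, 0.50]
behavior = [0.66, 0.47, 0.52, 0.45, 0.55, 0.33, 0.44, 0.30, 0.43, 0.37]

gain, b_exposure = delta_r2(semester, exposure, behavior)
print(f"delta R^2 = {gain:.2f}, B = {b_exposure:.2f}")
```

In this framing, a positive B with a nonzero ΔR² means that exposure accounts for variation in behavior change beyond what the semester trend alone explains.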

Discussion

The purpose of this study was to report on the process evaluation of the communications campaign portion of the HEALTHY Study and to examine associations between self-reported exposure to sources of information in the school and behavior change. The results indicate that fidelity to the study protocol was quite high across all 21 schools in the study, with all schools receiving the one-time events planned in the protocol and virtually all schools exceeding the minimum requirements every semester for public address announcements. There was, however, considerable variability between schools in the magnitude of their deployment of posters.

The interviews with students conducted every semester suggest variability across semesters in the proportion of students who reported being exposed to study information from the communications campaign. Variability was also apparent in the proportion of students claiming to have made a behavioral change outside of school related to the HEALTHY Study goals. In most cases, exposure and behavior change were most common during the first intervention semester. This finding may be related to the novelty of the study during the first semester. Over time, there may have been some habituation to the messaging from the study, despite attempts to keep the images fresh and to change the intervention theme. Moreover, the students themselves matured over time and may have been more receptive to study information in the sixth grade as compared with the eighth grade. In terms of the decline in the proportion of students reporting behavior change, some portion of this trend could be related to a ceiling effect in that students who changed behavior during the first semester may in subsequent semesters report having made no additional changes.

It should be noted that the reliability and validity of the measures obtained through the student interviews have not been documented. These assessments are likely to be subject to recall bias, self-presentation bias, and other sources of error that tend to influence self-report information. It is possible that the same students who would be likely to recall multiple sources of exposure to study information would also be likely to report having made behavioral changes. We attempted to minimize this threat to internal validity by asking about exposure first and then, when asking about behavioral change, requiring students to provide specific examples of how they had changed their behavior.

Results of the analyses indicate that schools in which a greater proportion of students reported being exposed to the communications campaign achieved higher rates of self-reported behavior change. This evidence is consistent with the hypothesis that greater exposure to the health communication messages facilitated greater adoption of the health behaviors being promoted by the physical education, nutrition services and classroom components of the intervention. Similar findings have emerged from a study of exposure to sun protection messages among skiers (Walkosz et al., 2008), but a review of the literature failed to identify any school-based studies that have conducted similar analyses. Our findings suggest that the communications campaign was effective in that students recalled being exposed to the campaign components. Moreover, schools in which a higher proportion of students reported exposure to the communications campaign also had a higher proportion of students reporting behavioral change commensurate with study goals.

There are, however, alternative explanations, such as the possibility that students who already were predisposed to adopt healthy behaviors would be more likely to attend to the study messages. The relation between anticipated agreement and exposure has been noted in the political arena (Iyengar, Hahn, Krosnick, & Walker, 2008). The correspondence between exposure and behavior change might also be a function of a positive response bias, in which students who had a higher desire to provide socially desirable responses would be more likely to respond in the affirmative to exposure and behavior change questions. It should be noted, however, that in the present study interviewers asked students to provide specific examples of the behaviors that they claimed to have changed, and that only students who were able to do so were recorded as having made a change.

Data from the campaign tracking logs demonstrated very high fidelity to the portions of the communications campaign that represented one-time events or items that were intended to be present in the school environment consistently. Records showed that all intervention schools received the planned cafeteria learning labs and events-in-a-box. These events represented discrete activities that were mandated and scheduled centrally, and the rigorous monitoring and reporting of event implementation essentially left no room for error in the implementation. Similarly, each school was required to display a large exterior banner with the study logo throughout the life of the project. Presence of the banner was regularly documented in all 21 intervention schools. Consequently, there was high uniformity in the implementation of these school-wide events and materials.

The variability in deployment of other communications campaign elements was reflected in the range of self-reported exposure obtained from interviews with the students. It should be noted that these differences were likely a function not only of how many materials were displayed in the school environment but how effectively the items were exhibited and how much competing material was present in the environment. Anecdotal reports suggest that smaller numbers of posters may have had greater effect at some schools because the administration maintained strict controls on the overall amount of visual material posted in these schools. Similarly, some schools had closed-circuit television delivered directly to classrooms, and were thus able to provide the public address announcements in an audio and visual format, which was likely to be far more attention-grabbing than the audio-only messages delivered at most schools.

There were some patterns over time that suggested the students may have been responsive to the changes in study theme. The proportion of students who reported changing their eating behavior rose from 59% in the first semester to 77% in the third and fourth semesters, and then dropped back down to 66%. This pattern is especially interesting in light of the behavioral themes of the HEALTHY study, which focused on nutrition in semesters three and four. The rise in the proportion of students who reported making dietary changes during the semesters that the intervention focused on nutrition is encouraging, and suggests that students were responding to the information being disseminated by the intervention. The data also indicate a correspondence between study theme and exercise behavior. We found a significant positive correlation between self-reported change in exercise and exposure to the communications campaign for each semester except when the theme was high-quality food versus low-quality food.

This study has several strengths, including the rigorous study design employing standardized data collection methods across 3 years of the intervention and the diversity of the participating research sites. Moreover, the intervention schools were selected on the basis of the high proportion of students qualified for subsidized meals and were therefore heavily populated by children from families with relatively low socioeconomic status, many of whom were from minority ethnic groups. The results may therefore be generalizable to the groups most at risk for type 2 diabetes mellitus.

Limitations

Despite its considerable strengths, the study does have some limitations. The primary weakness of the study is the use of self-report as the method for obtaining data on both exposure to the campaign and behavior change. Self-report data are subject to recall bias as well as unintended biases related to a desire for positive self-presentation. Students may have been motivated to give socially desirable responses to research assistants who, although not involved with the intervention, were frequently present on the school grounds and were visibly identified with the program through branded tee shirts and other study materials. It is also possible that the same students who would be most likely to report remembering the communication campaign materials would also be most likely to report making changes in their health behavior. The analytic method used, in which data within each school were aggregated to provide an overall gauge of campaign exposure and behavior at the school level, may have attenuated the effect of any unintentional bias in reporting, but the limitation should be noted nevertheless.
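The school-level aggregation described above can be sketched as follows. This is a minimal illustration assuming each interview yields a yes/no answer for exposure and for behavior change; the function name `school_proportions`, the record layout, and the example records are hypothetical and do not reflect the study's actual data files.

```python
from collections import defaultdict


def school_proportions(responses):
    """Aggregate student-level yes/no answers to school-level proportions.

    responses: iterable of (school_id, exposed, changed) tuples, where
    exposed and changed are booleans for one interviewed student.
    Returns {school_id: (proportion exposed, proportion changed)}.
    """
    counts = defaultdict(lambda: [0, 0, 0])  # [n students, n exposed, n changed]
    for school_id, exposed, changed in responses:
        tally = counts[school_id]
        tally[0] += 1
        tally[1] += bool(exposed)
        tally[2] += bool(changed)
    return {s: (e / n, c / n) for s, (n, e, c) in counts.items()}


# Hypothetical interview records for two schools in one semester
records = [
    ("school_A", True, True),
    ("school_A", False, True),
    ("school_B", True, False),
]
print(school_proportions(records))
```

Because the analysis then uses one pair of proportions per school, an individual student's tendency to answer both questions affirmatively contributes only a small share to each school-level value.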

Beyond the standard issues with self-report, limitations inherent in the specific methods and measures used should be acknowledged, as they may weaken the internal validity of the present study. The approaches used to assess exposure to campaign elements and behavior change have not been previously validated. Moreover, the sample of students recruited for the interviews was not randomly selected. It is unlikely there was any systematic bias in sample selection across sites, as each site developed a recruitment method suited to its own local constraints. However, there may have been some self-selection operating to influence the student interview samples (e.g., students who were frequently absent from school would have been less likely to be interviewed), and it is unknown how this process may have affected the data.

Another limitation was the nonsystematic variability in intervention implementation across sites. Owing to contextual issues, site-to-site differences in the quantity of communications campaign materials displayed did emerge. For example, some school administrators approved only a small number of images for display, whereas others were less discriminating. These unplanned differences between schools enabled our analysis, because completely uniform implementation would have minimized the variability in exposure necessary to examine the association between exposure and behavior. Nevertheless, it should be noted that these unmeasured sources of influence on intervention implementation may limit the conclusions that can be drawn from our analyses.

An unavoidable limitation in the case of multicomponent intervention studies is the difficulty in separating the effects of the communication campaign on behavior from the effects of the other campaign elements. The use of process evaluation data to identify an association between intervention component fidelity and behavior change is one way of attempting to disentangle the effect of a single intervention component. It should be noted, however, that the observed associations took place within the context of the integrated intervention. We therefore caution against concluding that a communications campaign alone, without the supporting environmental changes, would result in meaningful behavior change.

Summary of Lessons Learned

- The greatest effect was in the first semester. After that, the novelty of the communications campaign began to decline.

- Fidelity to planned communications campaign activities was high.

- The data suggest that in intervention schools with higher rates of communication campaign exposure, more students reported changing their health behavior. Thus, communication campaign elements may be a valuable adjunct to school-based health promotion efforts.

- Studies that span multiple sites that differ demographically should consider enabling local customization of communications materials to make them relevant to the intended participants.

Conclusions

In conclusion, our findings suggest that a communications campaign may be a valuable adjunct to a school-based lifestyle change program. Schools in which a greater proportion of students reported being exposed to the communications campaign elements also had a greater proportion of students who reported making health behavior changes consistent with the study goals. Although we saw some decline in students’ reported awareness of communication elements across the course of this 3-year intervention, it is instructive that those elements (student-generated media) that were revamped midway through the study to allow students greater participation in their creation and display may have resulted in better sustained awareness of the campaign among students. Overall, the results suggest that future school-based interventions should consider the potential usefulness of a communications campaign as a means of enhancing intervention impact.

Acknowledgments

The authors thank the administration, faculty, staff, students, and their families at the middle schools and school districts that participated in the HEALTHY study. This work was completed with funding from National Institute of Diabetes and Digestive and Kidney Diseases/National Institutes of Health (NIDDK/NIH) grant numbers U01-DK61230, U01-DK61249, U01-DK61231, and U01-DK61223 to the STOPP-T2D collaborative group.

The following individuals and institutions constitute the HEALTHY Study Group (*indicates principal investigator or director): Study Chair: Children’s Hospital Los Angeles: F.R. Kaufman. Field Centers: Baylor College of Medicine: T. Baranowski,* L. Adams, J. Baranowski, A. Canada, K.T. Carter, K.W. Cullen, M.H. Dobbins, R. Jago, A. Oceguera, A.X. Rodriguez, C. Speich, L.T. Tatum, D. Thompson, M.A. White, C.G. Williams; Oregon Health & Science University: L. Goldberg,* D. Cusimano, L. DeBar, D. Elliot, H.M. Grund, S. McCormick, E. Moe, J.B. Roullet, D. Stadler; Temple University: G. Foster* (Steering Committee Chair), J. Brown, B. Creighton, M. Faith, E.G. Ford, H. Glick, S. Kumanyika, J. Nachmani, L. Rosen, S. Sherman, S. Solomon, A. Virus, S. Volpe, S. Willi; University of California at Irvine: D. Cooper,* S. Bassin, S. Bruecker, D. Ford, P. Galassetti, S. Greenfield, J. Hartstein, M. Krause, N. Opgrand, Y. Rodriguez, M. Schneider; University of North Carolina at Chapel Hill: J. Harrell,* A. Anderson, T. Blackshear, J. Buse, J. Caveness, A. Gerstel, C. Giles, A. Jessup, P. Kennel, R. McMurray, A-M. Siega-Riz, M. Smith, A. Steckler, A. Zeveloff; University of Pittsburgh: M.D. Marcus,* M. Carter, S. Clayton, B. Gillis, K. Hindes, J. Jakicic, R. Meehan, R. Noll, J. Vanucci, E. Venditti; University of Texas Health Science Center at San Antonio: R. Treviño,* A. Garcia, D. Hale, A. Hernandez, I. Hernandez, C. Mobley, T. Murray, J. Stavinoha, K. Surapiboonchai, Z. Yin. Coordinating Center: George Washington University: K. Hirst,* K. Drews, S. Edelstein, L. El Ghormli, S. Firrell, M. Huang, P. Kolinjivadi, S. Mazzuto, T. Pham, A. Wheeler. Project Office: National Institute of Diabetes and Digestive and Kidney Diseases: B. Linder,* C. Hunter, M. Staten. Central Biochemistry Laboratory: University of Washington Northwest Lipid Metabolism and Diabetes Research Laboratories: S.M. Marcovina.* HEALTHY intervention materials are available for download at http://www.healthystudy.org/.

Past and present HEALTHY study group members on the social-marketing based communications committee on whose work this report was based were: Lynn DeBar (Chair), Tara Blackshear, Jamie Bowen, Sarah Clayton, Tamara Costello, Kimberly Drews, Eileen Ford, Angela Garcia, Katie Giles, Bonnie Gillis, Healthy Murphy Grund, Art Hernandez, Ann Jessup, Megan Krause, Barbara Linder, Jeff McNamee, Esther Moe, Chris Nichols, Margaret Schneider, Brenda Showell, Sara Solomon, Diane Stadler, Mamie White, and Alissa Wheeler. The committee was supported by the creative teams at the Academy for Educational Development and Planit Agency.

All parties that contributed significantly to this research have been included in the above acknowledgements.

Contributor Information

MARGARET SCHNEIDER, University of California at Irvine, Irvine, California, USA.

LYNN DEBAR, Kaiser Permanente Center for Health Research, Portland, Oregon, USA.

ASHLEY CALINGO, University of North Carolina at Chapel Hill, Chapel Hill, North Carolina, USA.

WILL HALL, University of North Carolina at Chapel Hill, Chapel Hill, North Carolina, USA.

KATIE HINDES, University of Pittsburgh Graduate School of Public Health, Pittsburgh, Pennsylvania, USA.

ADRIANA SLEIGH, Oregon Health & Science University, Portland, Oregon, USA.

DEBBE THOMPSON, United States Department of Agriculture/Agriculture Research Service (USDA/ARS) Children’s Nutrition Research Center, Baylor College of Medicine, Houston, Texas, USA.

STELLA L. VOLPE, Division of Biobehavioral and Health Sciences, School of Nursing, University of Pennsylvania, Philadelphia, Pennsylvania, USA.

ABBY ZEVELOFF, University of North Carolina at Chapel Hill, Chapel Hill, North Carolina, USA.

TRANG PHAM, Biostatistics Center, George Washington University, Rockville, Maryland, USA.

ALLAN STECKLER, University of North Carolina at Chapel Hill, Chapel Hill, North Carolina, USA.

References

- Alberti G, Zimmet P, Shaw J, Bloomgarden Z, Kaufman F, Silink M. Type 2 diabetes in the young: The evolving epidemic. Diabetes Care. 2004;27:1798–1811. doi: 10.2337/diacare.27.7.1798.

- Armstrong R, Waters E, Moore L, Riggs E, Cuervo LG, Lumbiganon P, Hawe P. Improving the reporting of public health intervention research: Advancing TREND and CONSORT. Journal of Public Health. 2008;30:103–109. doi: 10.1093/pubmed/fdm082.

- Cook-Cottone C, Casey CM, Feeley TH, Baran J. A meta-analytic review of obesity prevention in the schools. Psychology in the Schools. 2009;46:SI695–SI719.

- DeBar LL, Schneider M, Ford EG, Hernandez A, Showell B, Drews K, White M. Social marketing-based communications to integrate and support the HEALTHY study intervention. International Journal of Obesity (London) 2009;33(Suppl 4):S52–S59. doi: 10.1038/ijo.2009.117.

- Dobbins M, De Corby K, Robeson P, Husson H, Tirilis D. School-based physical activity programs for promoting physical activity and fitness in children and adolescents aged 6. Cochrane Database of Systematic Reviews. 2009;(1):Art. No. CD007651. doi: 10.1002/14651858.CD007651.

- The HEALTHY Study Group. HEALTHY study rationale, design and methods: Moderating risk of type 2 diabetes in multi-ethnic middle school students. International Journal of Obesity. 2009;33:S4–S20. doi: 10.1038/ijo.2009.112.

- Foster GD, Linder B, Baranowski T, Cooper DM, Goldberg L, Hirst K The HEALTHY Study Group. A school-based intervention for diabetes risk reduction. New England Journal of Medicine. 2010;363:443–453. doi: 10.1056/NEJMoa1001933.

- Hirst K, Baranowski T, DeBar L, Foster GD, Kaufman F, Kennel P, Yin Z. HEALTHY study rationale, design and methods: Moderating risk of type 2 diabetes in multi-ethnic middle school students. International Journal of Obesity (London) 2009;33(Suppl 4):S4–S20. doi: 10.1038/ijo.2009.112.

- Iyengar S, Hahn KS, Krosnick JA, Walker J. Selective exposure to campaign communication: The role of anticipated agreement and issue public membership. Journal of Politics. 2008;70(1):186–200.

- Kaufman F. Type 2 diabetes mellitus in children and youth: A new epidemic. Journal of Pediatric Endocrinology and Metabolism. 2002;15:737–744. doi: 10.1515/JPEM.2002.15.s2.737.

- Maibach EW, Abroms LC, Marosits M. Communication and marketing as tools to cultivate the public’s health: A proposed “people and places” framework. BMC Public Health. 2007;7:88. doi: 10.1186/1471-2458-7-88.

- McCombs M, Shaw DL. The agenda-setting function of the mass media. Public Opinion Quarterly. 1973;37:62–75.

- Mutagh MJ, Thompson RG, May CR, Rapley T, Heaven BR, Graham RH, Eccles MP. Qualitative methods in a randomized controlled trial: The role of an integrated qualitative process evaluation in providing evidence to discontinue the intervention in one arm of a trial of a decision support tool. Quality and Safety in Health Care. 2007;16:224–229. doi: 10.1136/qshc.2006.018499.

- Rosenbloom AL, Joe JR, Young RS, Winter WE. Emerging epidemic of type 2 diabetes in youth. Diabetes Care. 1999;22:345–354. doi: 10.2337/diacare.22.2.345.

- Schneider M, Hall W, Hernandez A, Hindes K, Montez G, Pham T, Steckler A on behalf of the HEALTHY Study Group. Rationale, Design and Methods of the HEALTHY Process Evaluation. International Journal of Obesity. 2009 doi: 10.1038/ijo.2009.118.

- Steckler A, Linnan L. Process evaluations for public health interventions and research. San Francisco, CA: Jossey-Bass; 2002.

- Summerbell CD, Waters E, Edmunds LD, Kelly S, Brown T, Campbell KJ. Interventions for preventing obesity in children. Cochrane Database of Systematic Reviews. 2005;(3):Art No. CD001871. doi: 10.1002/14651858.CD001871.pub2.

- Walkosz BJ, Buller DB, Andersen PA, Scott MD, Dignan MB, Cutter GR, Maloy JA. Increasing sun protection in winter outdoor recreation: A theory-based health communication program. American Journal of Preventive Medicine. 2008;34:502–509. doi: 10.1016/j.amepre.2008.02.011.