Abstract

Objective

To develop an empirically based framework of the aspects of randomised controlled trials addressed by qualitative research.

Design

Systematic mapping review of qualitative research undertaken with randomised controlled trials and published in peer-reviewed journals.

Data sources

MEDLINE, PreMEDLINE, EMBASE, the Cochrane Library, Health Technology Assessment, PsycINFO, CINAHL, British Nursing Index, Social Sciences Citation Index and ASSIA.

Eligibility criteria

Articles published between 2008 and September 2010 reporting qualitative research undertaken with trials; health research, reported in English.

Results

296 articles met the inclusion criteria. Articles focused on 22 aspects of the trial within five broad categories. Some articles focused on more than one aspect of the trial, totalling 356 examples. The qualitative research focused on the intervention being trialled (71%, 254/356); the design, process and conduct of the trial (15%, 54/356); the outcomes of the trial (1%, 5/356); the measures used in the trial (3%, 10/356); and the target condition for the trial (9%, 33/356). A minority of the qualitative research was undertaken at the pretrial stage (28%, 82/296). The value of the qualitative research to the trial itself was not always made explicit within the articles. The potential value included optimising the intervention and trial conduct, facilitating interpretation of the trial findings, helping trialists to be sensitive to the human beings involved in trials, and saving money by steering researchers towards interventions more likely to be effective in future trials.

Conclusions

A large amount of qualitative research undertaken with specific trials has been published, addressing a wide range of aspects of trials, with the potential to improve the endeavour of generating evidence of effectiveness of health interventions. Researchers can increase the impact of this work on trials by undertaking more of it at the pretrial stage and being explicit within their articles about the learning for trials and evidence-based practice.

Keywords: STATISTICS & RESEARCH METHODS, QUALITATIVE RESEARCH, Clinical trials < THERAPEUTICS

Article summary

Article focus

Qualitative research is undertaken with randomised controlled trials.

A systematic review of journal articles identified 296 articles, published between 2008 and September 2010, reporting qualitative research undertaken with trials.

The 22 ways in which qualitative research is used in trials are reported, with examples.

Key messages

Qualitative research addressed a wide range of aspects of trials focusing on the intervention being trialled (71%); the design, process and conduct of the trial (15%); the outcomes of the trial (1%); the measures used in the trial (3%); and the target condition for the trial (9%).

A minority of the qualitative research was undertaken at the pretrial stage (28%, 82/296).

The value of the qualitative research to the trial itself was not always made explicit within the articles.

Strengths and limitations of this study

One strength of the framework developed here is that it was based on published international research which is available to those making use of evidence of effectiveness.

One limitation is that not all qualitative research undertaken with trials is published in peer-reviewed journals.

Background

Qualitative research is often undertaken with randomised controlled trials (RCTs) to understand the complexity of interventions, and the complexity of the social contexts in which interventions are tested, when generating evidence of the effectiveness of treatments and technologies. In the 2000s, the UK Medical Research Council framework for the development and evaluation of complex interventions highlighted the utility of using a variety of methods at different phases of the evaluation process, including qualitative research.1–3 For example, qualitative research can be used with RCTs, either alone or as part of a mixed methods process evaluation, to consider how interventions are delivered in practice.4 The potential value of understanding how actual implementation differs from planned implementation includes the ability to explain null trial findings or to identify issues important to the transferability of an effective intervention outside experimental conditions. Excellent examples exist of the use of qualitative research with RCTs which explicitly identify the value of the qualitative research to the trial with which it was undertaken. These include its use in facilitating interpretation of pilot trial findings,5 and improving the conduct of a feasibility trial by highlighting both the reasons for poor recruitment and the solutions that increased recruitment.6 That is, qualitative research is undertaken with RCTs in order to enhance the evidence of effectiveness produced by the trial or to facilitate the feasibility or efficiency of the trial itself.

Researchers have discussed the variety of possible ways in which qualitative research can be used with trials, presenting these within a temporal framework of qualitative research undertaken before, during and after a trial.7–9 However, qualitative research may be used quite differently in practice, and it is important to consider how qualitative research is actually used in trials, as well as its value in terms of contributing to the generation of evidence of effectiveness of treatments and services to improve health and healthcare. Consideration of how qualitative research is being used can identify ways of improving this endeavour and help future researchers maximise its value. For example, an excellent study of how qualitative research was used in trials of interventions to change professional practice or the organisation of care identified methodological shortcomings of the qualitative research and a lack of integration of findings from the qualitative research and trial.7 Additionally, systematic organisation of the range of ways in which researchers use qualitative research with trials, such as the temporal framework, can help to educate researchers new to this endeavour about the possible uses of qualitative research, and help experienced researchers to decide how qualitative research can best be used when designing and undertaking trials. A review of practice also offers an opportunity for the research community to reflect on how they practise this endeavour. Our objective was to develop an empirically based framework to map the aspects of trials addressed by qualitative research in current international practice, and to identify the potential value of this contribution to the generation of evidence of effectiveness of health interventions.

Methods

We undertook a ‘systematic mapping review’ of published journal articles reporting qualitative research undertaken with specific trials rather than qualitative research undertaken about trials in general. The aim of this type of review, also called a ‘mapping review’ or ‘systematic map’, is to map out and categorise existing literature on a particular topic, with further review work expected.10 Formal quality appraisal is not expected and synthesis is graphical or tabular. This mapping review involved a systematic search for published articles of qualitative research undertaken with trials. The aim was not to synthesise the findings from these articles but to categorise them into an inductively developed framework.

The search strategy

We searched the following databases for articles published between 2001 and September 2010: MEDLINE, PreMEDLINE, EMBASE, the Cochrane Library, Health Technology Assessment, PsycINFO, CINAHL, British Nursing Index, Social Sciences Citation Index and ASSIA. We used two sets of search terms to identify articles using qualitative research in the context of a specific trial. We adapted the Cochrane Highly Sensitive Search Strategy for identifying randomised trials in MEDLINE.11 The search terms for qualitative research were more challenging. We started with a qualitative research filter,12 but this returned many articles which were not relevant to our study. We made decisions about the terms to use for the final search in an iterative manner, balancing the need for comprehensiveness and relevance13 (see online supplementary appendix 1 for search terms). We identified 15 208 references, reduced to 10 822 after electronic removal of duplicates. We downloaded these references to a reference management software program (EndNote X5).

Inclusion and exclusion criteria

Our inclusion criteria were articles published in English between 2001 and September 2010, reporting the findings of empirical qualitative research studies undertaken before, during or after a specific RCT in the field of health. These could include qualitative research published as a stand-alone article or reported within a mixed methods article. We undertook the search in October 2010 and searched up to September 2010, which was the last month of the publications available. Our exclusion criteria were that an article was not a journal article (eg, conference proceedings and book chapter), no abstract available, not a specific trial (eg, qualitative research about hypothetical trials or trials in general), not qualitative research (qualitative data collection and analysis were required for inclusion), not health (eg, education), not a report of findings of empirical research (eg, published protocol, methodological paper or editorial), not reported in English and not human research.

Screening references and abstracts

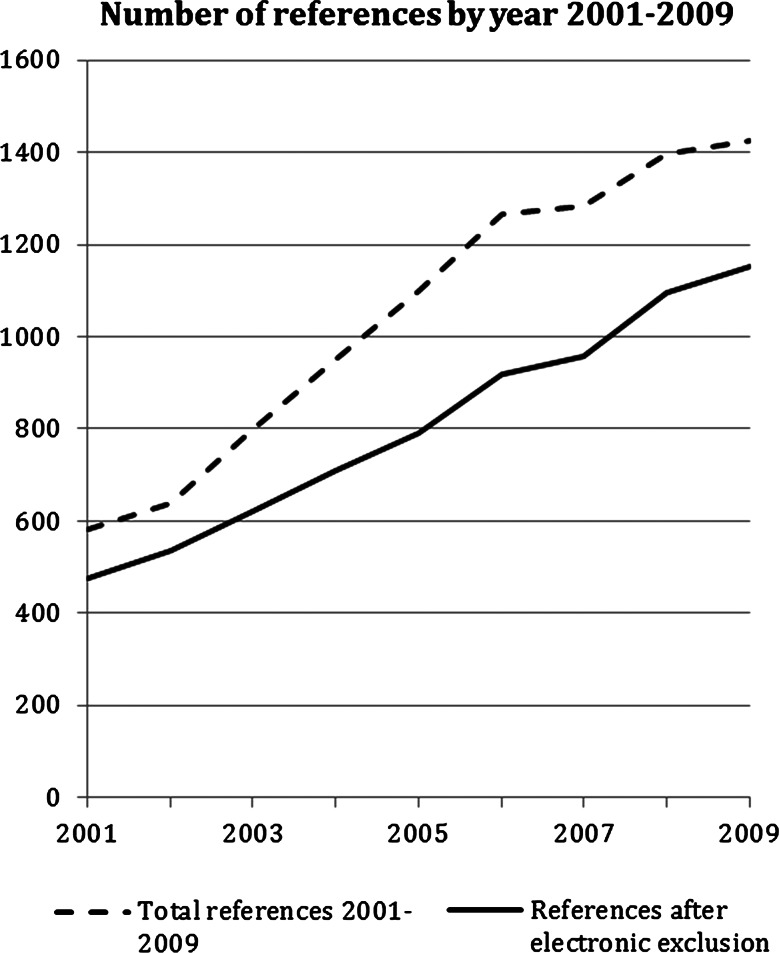

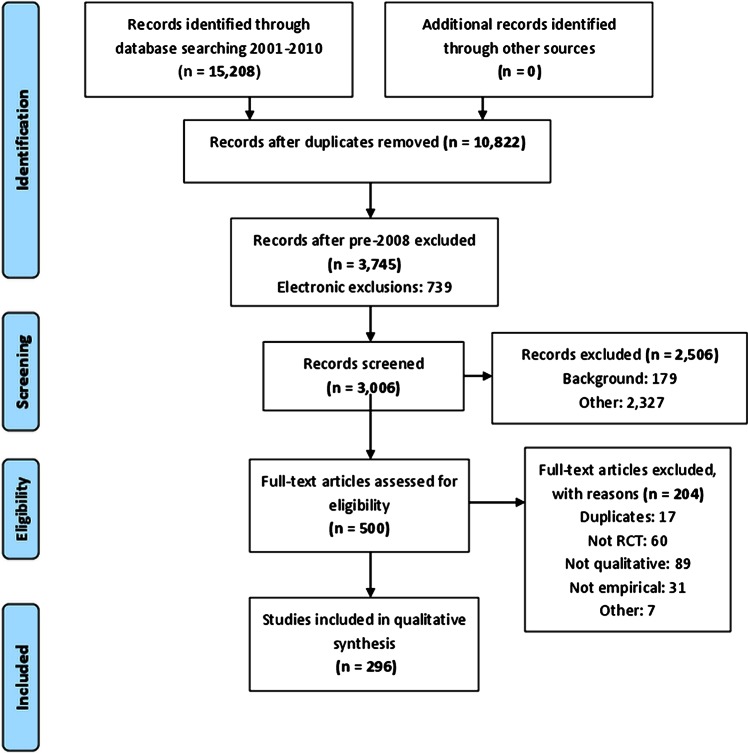

We applied the exclusion criteria electronically to the 10 822 references and abstracts by searching for terms using EndNote. The number of references identified by us increased steadily between 2001 and 2009 (figure 1). The year 2010 is not reported in figure 1 because we did not search the full year. Owing to the large number of references identified, and the need to read abstracts and full articles for further selection and categorisation, we made the decision to focus on articles published between January 2008 and September 2010. In this short time period, there were 3745 references and abstracts, of which 739 were excluded by electronic application of exclusion criteria. One of the research team (SJD) read the abstracts of the remaining 3006 references and excluded a further 2506. A sample of 100 exclusions was checked by AOC and KJT and there was full agreement with the exclusion decisions made by SJD. The most common reasons for exclusion were that the abstract did not refer to an RCT, did not use qualitative research or did not report empirical research (figure 2). Five hundred abstracts remained after this screening process.
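The screening counts reported above can be checked with simple arithmetic. The following is a minimal sketch in Python, not part of the original review; the variable names are illustrative, and the 204 full-text exclusions are taken from the framework development section.

```python
# Counts reported in the text for January 2008 to September 2010.
identified = 3745              # references and abstracts in the window
excluded_electronically = 739  # removed by electronic application of criteria
excluded_on_abstract = 2506    # removed after reading abstracts
excluded_on_full_text = 204    # removed after reading full articles

after_electronic = identified - excluded_electronically
after_abstract = after_electronic - excluded_on_abstract
included = after_abstract - excluded_on_full_text

print(after_electronic, after_abstract, included)
```

Each intermediate figure should match the number quoted in the text at the corresponding screening stage.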

Figure 1.

Number of references identified for qualitative research undertaken with randomised controlled trials between 2001 and 2009.

Figure 2.

The PRISMA flow diagram for articles 2008–2010. PRISMA, Preferred Reporting Items for Systematic Reviews and Meta-Analyses.

Framework development

We could not use the temporal framework of before, during and after the trial7–9 to categorise the qualitative research because we could not distinguish between ‘during the trial’ and ‘after the trial’ with any confidence. Authors of articles rarely described when the qualitative data collection or the analysis was undertaken in relation to the availability of the trial findings. We could only report the percentage undertaken before the trial. To develop a new framework, we undertook a process similar to ‘framework analysis’ for the analysis of qualitative data.14 As a starting point, we read about 100 abstracts and listed the stated aim of the qualitative research within the abstract to identify categories and subcategories of the focus of the articles. After team discussions, we finalised our preliminary framework and one team member (SJD) applied it to the stated aim of the qualitative research in our 500 abstracts, remaining open to emergent categories, which were then added to the framework. The team members then selected different categories to lead on and read the full articles within their categories, meeting weekly with the team to discuss exclusions (we excluded another 204 articles at this stage) and recategorisation of articles, and to add or collapse categories, subcategories and the relationships between categories. At this stage, we felt that the preliminary categorisation based on the stated aim of the article did not describe the actual focus of the qualitative research. For example, articles which were originally categorised as ‘exploring patients’ views of the intervention’ were put into new categories based on the focus of the qualitative research reported, such as ‘identifying the perceived value and benefits of the intervention’. Most articles were allocated to one subcategory, but some were categorised into two or more subcategories because the qualitative research focused on more than one issue within the article.

Data extraction

We developed 22 subcategories from reading the 296 abstracts and articles. We extracted descriptive data on all 296 articles, including country of first author and qualitative research undertaken prior to the trial. We undertook further detailed data extraction on up to six articles within each subcategory, totalling 104 articles. These articles were selected randomly for most subcategories, although in the large intervention subcategories, we selected six which showed the diversity of content of the subcategory. We extracted further descriptive information about the methods used. During data extraction, we identified the value of the qualitative research for generating evidence of effectiveness and documented this. For example, if the focus of the qualitative research was to identify the acceptability of an intervention in principle, then the value might have been that a planned trial was not started, because it became clear that it would have failed to recruit due to patients finding the intervention unacceptable. However, the value of the qualitative research was rarely articulated explicitly by the authors of these articles. We identified potential value based on the framing of the article in the introduction section, issues alluded to in the discussion section, and our own subjective assessment of potential value. We recognise that qualitative research has value in its own right and that we adopted a particular perspective here: the potential value of qualitative research undertaken with trials to the generation of evidence of effectiveness, viewing its utility within an ‘enhancement model’.15 That is, we identified where it enhanced the trial endeavour rather than made an independent contribution to knowledge.

The process was time consuming and resource intensive. It took 30 months from testing search terms to completion of analysis and write-up as part of a wider study which included interviews with researchers, surveys of lead investigators and a document review.

Results

Size of the evidence base

We identified 296 articles published between 2008 and September 2010. There was no evidence of increasing numbers per year in this short time period: 113 articles in 2008, 105 in 2009 and 78 in the first 9 months of 2010 (equivalent to 104 in a full year). For the 104 articles included in the data extraction, most of the first authors were based in North America (40) and the UK (30), with others based in Scandinavian countries (9), Australia and New Zealand (9), South Africa (6), and a range of other countries in Africa, Asia and Europe (10).
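The full-year equivalent for 2010 is a simple pro rata scaling of the 9-month count. The sketch below, which is illustrative and not part of the original analysis, reproduces that figure and checks that the yearly and regional counts add up to the reported totals; the dictionary keys are labels chosen here for convenience.

```python
# Articles per year as reported; 2010 covers January to September only.
articles_by_year = {2008: 113, 2009: 105, 2010: 78}
months_searched_2010 = 9

total_articles = sum(articles_by_year.values())
# Scale the 9-month count to a 12-month equivalent.
annualised_2010 = articles_by_year[2010] * 12 / months_searched_2010

# Location of first author for the articles in the detailed data extraction.
first_author_region = {
    "North America": 40,
    "UK": 30,
    "Scandinavian countries": 9,
    "Australia and New Zealand": 9,
    "South Africa": 6,
    "Other countries in Africa, Asia and Europe": 10,
}
extracted_articles = sum(first_author_region.values())

print(total_articles, annualised_2010, extracted_articles)
```

The scaling assumes that articles are published at a constant monthly rate throughout 2010, which is a simplification.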

Framework of the focus of the qualitative research

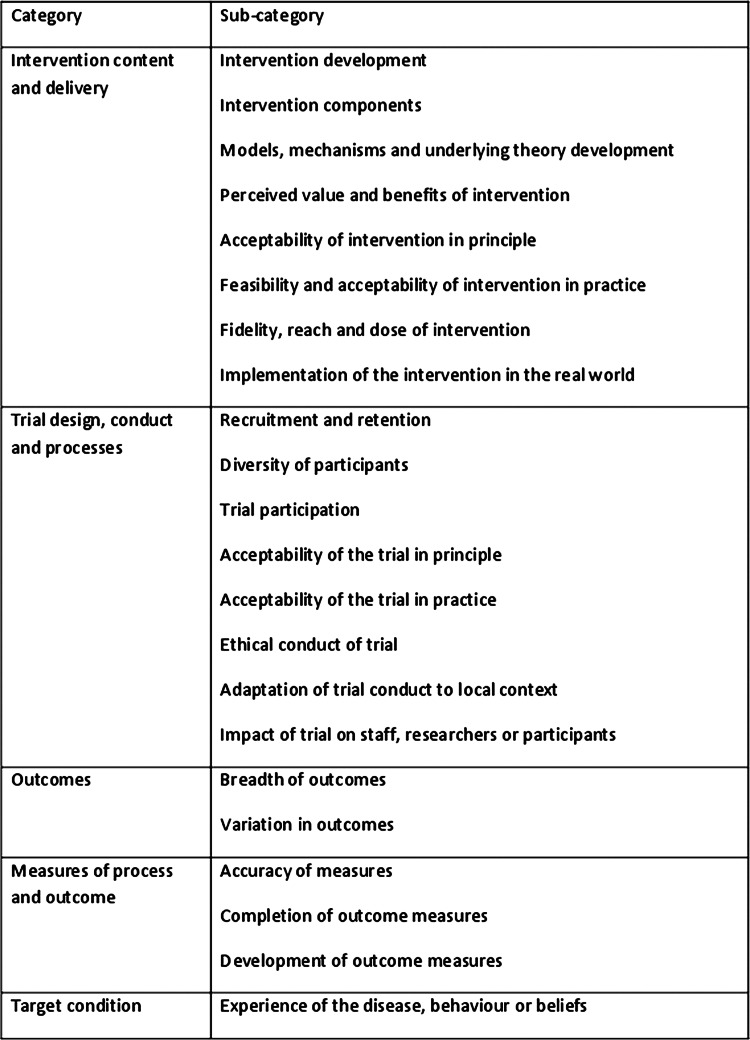

The final framework consisted of 22 subcategories within five broad categories related to different aspects of the trial in terms of the intervention being tested, how the trial was designed and conducted, the outcomes of the trial, outcome and process measures used in the trial, and the health condition the intervention was aimed at (figure 3).

Figure 3.

Framework of the focus of qualitative research used in trials.

Distribution of recent practice

Sometimes articles focused on more than one aspect of the trial, with a total of 356 aspects identified in the 296 articles. The qualitative research in these articles mainly related to the content or delivery of the intervention (table 1), particularly focusing on the feasibility and acceptability of the intervention in practice. The next largest category was the design and conduct of the trial, particularly focusing on how to improve recruitment and the ethical conduct of trials. Almost 1 in 10 articles focused on the health condition being treated within the trial. Few articles focused on outcomes and measures. This imbalance between categories may reflect practice, or may arise because some types of qualitative research undertaken with trials are not published or were not identified by our search strategy. We selected an example of research undertaken in each subcategory, summarised in table 1. Where a subcategory contained an article whose authors were explicit about the impact of the qualitative research on the specific trial, we selected that article as the example.
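The category percentages use the 356 aspects as the denominator, not the 296 articles. As a hedged illustration (the variable names are ours, and rounding to whole percentages is an assumption about how the figures were reported), the distribution can be recomputed from the category counts:

```python
# Number of aspects per broad category, as reported in table 1.
category_counts = {
    "Intervention content and delivery": 254,
    "Trial design, conduct and processes": 54,
    "Outcomes": 5,
    "Measures of process and outcome": 10,
    "Target condition": 33,
}

total_aspects = sum(category_counts.values())

# Percentage of all aspects, rounded to the nearest whole number.
category_share = {
    name: round(100 * count / total_aspects)
    for name, count in category_counts.items()
}

for name, share in category_share.items():
    print(f"{name}: {category_counts[name]}/{total_aspects} ({share}%)")
```

Because each percentage is rounded independently, the rounded shares need not sum to exactly 100.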

Table 1.

Description, distribution, timing and examples of different uses of qualitative research with trials

| Category | Subcategory | Description | Frequency, N (%) of 356 aspects in 296 articles | Timing: percentage of subcategory undertaken at pretrial stage | Example |
|---|---|---|---|---|---|
| Intervention content and delivery | | | 254 (71%) | | |
| | Intervention development | Pretrial development work relating to intervention content and delivery | 48 (13%) | 100 | Gulbrandsen et al (2008)16 planned to undertake a pragmatic RCT of ‘four habits’, a clinical communication tool designed and evaluated in the USA, for use in Norway. They used mixed methods research to identify ways to tailor the intervention content to meet the needs of local healthcare practice. They undertook 3 focus groups with local physicians who had been given the intervention training. They confirmed cultural alignment and informed elements of the training programme for use in the planned trial. |
| | Intervention components | Exploring individual components of a complex intervention as delivered in a specific trial | 10 (3%) | 0 | Romo et al17 undertook an RCT of hospital-based heroin prescription compared with methadone prescription for long-term socially-excluded opiate addicts for whom other treatments had failed. The aim of the qualitative research was to explore patients’ and relatives’ experience of the intervention as delivered within the trial. They undertook in-depth semi-structured interviews with 21 patients receiving the intervention and paired family members. They identified the resulting medicalisation of addiction as a separate component of the intervention. |
| | Models, mechanisms and underlying theory development | Developing models, mechanisms of action and underlying theories or concepts relating to an intervention in the context of a specific trial | 23 (6%) | 4 | Byng et al (2008)18 as part of a cluster RCT of a multifaceted facilitation process to improve care of patients with long-term mental illness undertook interviews with 46 practitioners and managers from 12 cluster sites to create 12 case studies. They investigated how a complex intervention led to developments in shared care for people with long-term mental illness. They identified core functions of shared care and developed a theoretical model linking intervention specific, external and generic mechanisms to improved healthcare. |
| | Perceived value and benefits of intervention | Exploring accounts of perceived value and benefits of intervention given by recipients and providers of the intervention | 42 (12%) | 7 | Dowrick et al (2008)19 as part of an RCT of reattribution training in general practice for use with patients with medically unexplained symptoms undertook semi-structured interviews with 12 practitioners participating in the trial to explore attitudes to reattribution training among practitioners. They identified perceived direct and indirect benefits, for example, increased confidence in working with this group of patients and crossover into chronic disease management. Understanding what GPs valued about the intervention was seen as a potential mechanism for increasing the successful implementation of the intervention. |
| | Acceptability of intervention in principle | Exploring stakeholder perceptions of the ‘in principle’ acceptability of an intervention | 32 (9%) | 25 | Zhang et al (2010)20 undertook a pretrial study in preparation for a community-based RCT of reduction of risk of diabetes through long-term dietary change from white to brown rice. They undertook a mixed methods study with focus groups of 32 non-trial participants to explore cultural acceptability and prior beliefs about brown rice consumption among potential intervention recipients. They identified the beliefs held about brown rice that made it an unacceptable intervention. The results provided valuable insights to guide the design of patient information for the planned trial. |
| | Feasibility and acceptability of intervention in practice | Exploring stakeholder perceptions of the feasibility and acceptability of an intervention in practice | 83 (23%) | 24 | Pope et al (2010)21 as part of a cluster RCT of provider-initiated HIV counselling and testing of tuberculosis patients in South Africa undertook focus groups involving 18 trial intervention providers after the trial results were known to explore the structural and personal factors that might have reduced the acceptability or feasibility of the intervention delivery by the clinic nurses. The RCT showed a smaller than expected effect and the qualitative research provided insights into contextual factors that could have reduced the uptake of HIV testing and counselling, including a lack of space and privacy within the clinic itself. |
| | Fidelity, reach and dose of intervention | Describing the fidelity, reach and dose of an intervention as delivered in a specific trial | 12 (3%) | 0 | Mukoma et al (2009)22 as part of a school-based cluster RCT of an HIV education programme to delay onset of sexual intercourse and increase appropriate condom use undertook direct classroom observations (26 in 13 intervention schools), 25 semi-structured interviews with teachers (intervention deliverers) and 12 focus groups with pupils (recipients). They explored whether the intervention was implemented as planned, assessed quality and variation of intervention at a local level, and explored the relationship between fidelity of implementation and observed outcomes. They showed that the intervention was not implemented with high fidelity at many schools, and that the quality of delivery, and therefore the extent to which students were exposed to the intervention (dose), varied considerably. Observation and interview data did not always concur with quantitative assessment of fidelity (teachers’ logs). |
| | Implementation of the intervention in the real world | Identifying lessons for ‘real world’ implementation based on delivery of the intervention in the trial | 4 (1%) | 0 | Carnes et al (2008)23 as part of an RCT comparing advice to use topical or oral NSAIDs for knee pain in older people undertook telephone interviews with 30 trial participants to explore patient reports of adverse events and expressed preferences for using one mode of analgesia administration over the other. The trial showed equivalence of effect of topical and oral NSAIDs for knee pain. In the light of these findings, the qualitative research provided a model incorporating trial findings and patient preferences into decision-making advice for use in practice, and contributed to an empirically-informed lay model for understanding the use of NSAIDs as pain relief. |
| Trial design, conduct and processes | | | 54 (15%) | | |
| | Recruitment and retention | Identifying ways of increasing recruitment and retention | 11 (3%) | 18 | Dormandy et al (2008)24 as part of a cluster trial of screening for haemoglobinopathies interviewed 20 GPs in the trial to explore why general practices joined the trial and stayed in it. They identified how to overcome barriers to recruitment in future trials in primary care. |
| | Diversity of participants | Identifying ways of broadening participation in a trial to improve diversity of population | 7 (2%) | 14 | Velott et al (2008)25 as part of a trial of a community-based behavioural intervention in interconceptional women undertook 2 focus groups with 4–6 facilitators and 13 interviews with trial recruitment facilitators to document strategies used and offer perceptions of success of strategies to recruit low income rural participants. They ensured inclusion of a hard-to-reach group in the trial. |
| | Trial participation | Improving understanding of how participants join trials and experience of participation | 4 (1%) | 25 | Kohara and Inoue (2010)26 as part of a cancer phase I clinical trial of an anticancer drug used qualitative research to reveal the decision-making processes of patients participating in or declining a trial. They undertook interviews with 25 people who did and did not participate and observation of six recruitments and identified how recruiters could be more sensitive to patients. |
| | Acceptability of the trial in principle | Exploring stakeholders’ views of acceptability of a trial design | 5 (1%) | 60 | Campbell et al (2010)27 in relation to a proposed trial of arthroscopic lavage vs a placebo-surgical procedure for osteoarthritis of the knee undertook focus groups and 21 interviews with health professionals and patients to describe attitudes of stakeholders to a trial. In principle, the trial was acceptable, but placebo trials were not acceptable to some stakeholders. |
| | Acceptability of the trial in practice | Exploring stakeholders’ views of acceptability of a trial design in practice | 4 (1%) | 25 | Tutton and Gray (2009)28 as part of a feasibility trial of fluid optimisation after hip fracture undertook two focus groups with 17 staff and an interview with the research nurse to increase knowledge of implementation of the intervention and feasibility of the trial. They identified difficulty in recruiting for the trial in a busy healthcare environment. |
| | Ethical conduct | Strengthening the ethical conduct of a trial, for example, informed consent procedures | 16 (4%) | 12 | Penn and Evans (2009)29 as part of a multisite trial of community-based vs clinic-based antiretroviral medication in South Africa undertook observation and interviews with 13 recruiters and 19 students going through two different informed consent processes in order to understand the effectiveness of using a modified informed consent process rather than a standard one. They identified ways of improving ethics and reducing anxiety when enrolling people in such trials. |
| | Adaptation of trial conduct to local context | Addressing local issues which may impact on the feasibility of a trial | 2 (1%) | 50 | Shagi et al (2008)30 as part of a feasibility study for an efficacy and safety phase III trial of vaginal microbicide undertook participatory action research, including interviews and workshops, to explore the feasibility of a community liaison system. They reported on improving the ethical conduct, recruitment and retention for the main trial. |
| | Impact of trial on staff, researchers or participants | Understanding how the trial affects different stakeholders, for example, workload | 5 (1%) | 20 | Grbich et al (2008)31 as part of a factorial cluster trial of different models of palliative care including educational outreach and case conferences undertook qualitative research to explore the effect of the trial on staff. They undertook a longitudinal focus group study (11 in total) with staff delivering the intervention and collecting the data at three time points during the trial. The reported impact on the trial was improved trial procedures and keeping people on board with the trial. |
| Outcomes | | | 5 (1%) | | |
| | Breadth of outcomes | Identifies the range of outcomes important to participants in the trial | 1 (<1%) | 0 | Alraek and Malterud (2009)32 as part of a pragmatic RCT of acupuncture to reduce symptoms of the menopause used written answers to an open question on a questionnaire to 127 patients in an intervention arm to describe reported changes in health in the acupuncture arm of the trial, concluding that the range of outcomes in the trial was not comprehensive. |
| | Variation in outcomes | Explains differences in outcomes between clusters or participants in a trial | 4 (1%) | 0 | Hoddinott et al (2010)33 in a cluster RCT of community breastfeeding support groups to increase breastfeeding rates undertook 64 ethnographic in-depth interviews, 13 focus groups and 17 observations to produce a locality case study for each of 7 intervention clusters. They explained variation in the 7 communities and why rates decreased in some as well as increased in others. |
| Measures of process and outcome | | | 10 (3%) | | |
| | Accuracy of measures | Assesses validity of process and outcome measures in the trial | 7 (2%) | 43 | Farquhar et al (2010)34 in a phase II pilot RCT of breathlessness intervention for chronic obstructive pulmonary disease used qualitative research to explore the feasibility of using an outcome measure for the main trial. They used longitudinal interviews with 13 patients in the intervention arm on 51 occasions and recordings of participants completing a questionnaire. They rejected the use of the outcome measure for the main trial because of lack of validity in this patient group. |
| | Completion of outcome measures | Explores why participants complete measures or not | 1 (<1%) | 0 | Nakash et al (2008)35 within an RCT of mechanical supports for severe ankle sprains used qualitative research to examine factors affecting response and non-response to a survey measuring outcomes. They undertook interviews with 22 participants, 8 of whom had not responded, and identified reasons for non-response such as not understanding the trial and feeling fully recovered. |
| | Development of outcome measures | Contributes to the development of a new process and secondary outcome measures | 2 (1%) | 0 | Abetz et al (2009)36 within a double-blind placebo RCT of patch treatment in Alzheimer's disease used qualitative research to identify items for an instrument for use in their RCT and check the acceptability of a developed questionnaire on carer satisfaction. They undertook 3 focus groups with 24 carers prior to the RCT to identify items and 10 cognitive interviews during the RCT to contribute to assessment of the validity of measures used. |
| Target condition | Experience of the disease, behaviour or beliefs | Explores the experience of having or treating a condition that the intervention is aimed at, or a related behaviour or belief | 33 (9%) | 6 | Chew-Graham et al (2009)37 within a pragmatic RCT of anti-depressants vs counselling for postnatal depression undertook qualitative research to explore patient and health professional views about disclosure of symptoms of postnatal depression. They undertook interviews with 61 staff and patients from both arms of the trial, offering reflections on implications for clinical practice in this patient group. |

GPs, general practitioners; NSAIDs, non-steroidal anti-inflammatory drugs; RCT, randomised controlled trial.

Timing of the qualitative research

In total, 28% (82/296) of articles reported qualitative research undertaken at the pretrial stage, that is, as part of a pilot, feasibility or early phase trial or study in preparation for the main trial (table 1). Some activities would be expected to occur only prior to the main trial, such as intervention development, and all of the qualitative research in this subcategory was undertaken pretrial. However, other activities which might also be expected to occur prior to the trial, such as exploring the acceptability of the intervention in principle, occurred frequently during the main trial.

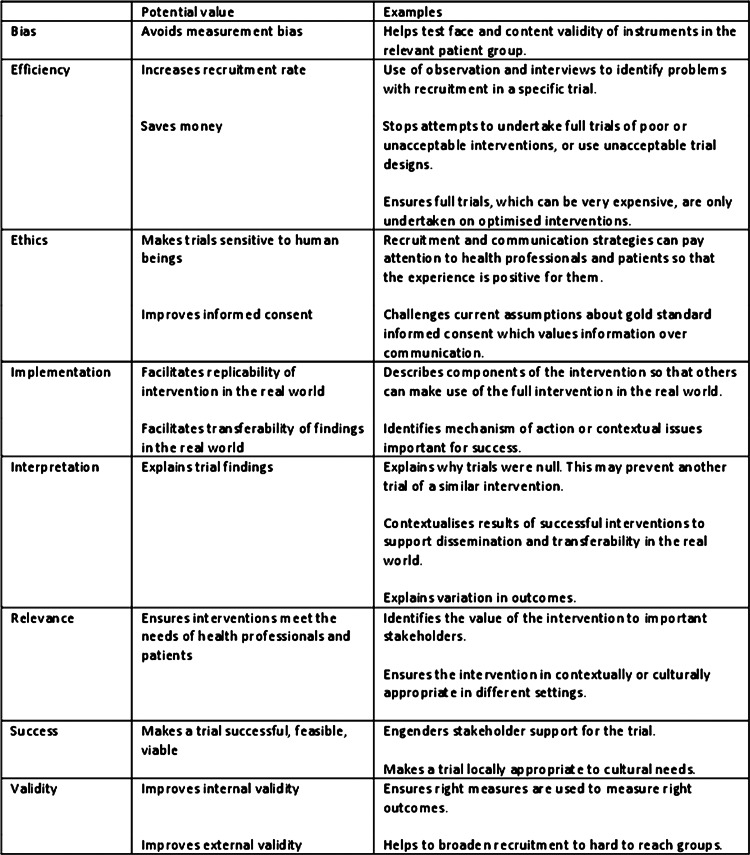

Potential value

We identified the potential value of the qualitative research undertaken within each subcategory (figure 4). The range of potential values identified was wide, offering a set of rationales for undertaking qualitative research with trials, for example, to improve the external validity of a trial by identifying solutions to barriers to recruitment in hard-to-reach groups, or to facilitate transferability of findings to the real world by exploring contextual issues important to the implementation of the intervention. Qualitative research undertaken at the pretrial stage has the potential to impact on the main trial as well as future trials. We identified examples of the qualitative research impacting on the main trial, for example, by changing the outcome measure to be used in the main trial. Qualitative research undertaken with the main trial also has the potential to impact on that trial, for example, by facilitating interpretation of the trial findings. However, in practice, we found few examples of this in the articles. Because so much of the qualitative research occurred at the main trial stage, the learning we identified was mainly for future trials. We also found that this learning was not necessarily explicit within the articles.

Figure 4. Potential value of the qualitative research to the generation of evidence of effectiveness.

Discussion

Summary of findings

A large number of journal articles have been published which report the use of qualitative research with trials. This is an international endeavour which is likely to have increased over the past 10 years. Researchers have published articles focusing on a wide range of aspects of trials, particularly the intervention and the design and conduct of trials. Most of this research was undertaken with main trials rather than at the pretrial stage, where it could have optimised the intervention or trial conduct for the main trial. The potential value of the qualitative research to the endeavour of generating evidence of effectiveness of health interventions was considerable, and included improving the external validity of trials, facilitating interpretation of the trial findings, helping trialists to be sensitive to the human beings who participate in trials, and saving money by steering researchers towards interventions more likely to be effective in future trials. However, there were indications that researchers were not capitalising on this potential. The lessons learnt were for future trials rather than for the trial with which the qualitative research was undertaken, and these lessons were not always articulated explicitly enough within the articles for researchers not involved in the original research project to utilise them.

Strengths, weaknesses and reflexivity

One strength of the framework developed here is that it was based on published international research which is available to those making use of evidence of effectiveness. The development of the framework was part of a larger study identifying good practice within each subcategory, looking beyond published articles to research proposals and reports, and interviewing researchers who have participated in these studies. There are several weaknesses. First, not all qualitative research undertaken with trials is published in peer-reviewed journals7 and some types may be published more than others. However, the framework was grounded in the research which researchers chose to publish, identifying the issues which they or journals perceived as important. Second, some qualitative research undertaken with trials may not refer to the trial in the qualitative article and therefore may not have been included here. This may have affected some of the subcategories more than others and thus misrepresented the balance of contributions within the framework. However, if we could not relate an article to a specific trial, then others will also face this barrier, limiting the value of the research for users of evidence of effectiveness. Third, only English language articles were included. Fourth, the inclusion criteria relied largely on the abstract, so some studies which should have been included may have been excluded at an early stage, resulting in an underestimate of the amount of this research that has been published. Fifth, we acknowledge that the generation of subcategories was subjective and some of them could have been divided further into another set of subcategories. Another research group may have developed a different framework. Our research group was interested in whether qualitative research undertaken with trials was actually delivering the added value promised within the literature.1–4 Finally, the actual impact of this qualitative research on trials may be located in articles reporting the trials, although even studies of all documents and publications of these types of studies found a lack of integration of findings from the trial and qualitative research.7

Context of other research

There was a large overlap between our subcategories and the items listed in two temporal frameworks.7 8 However, our framework added a whole category of work around the design and conduct of the trial to one of the existing frameworks.7 It also showed that the timing of qualitative research in relation to a trial is different in practice from that identified in existing frameworks. For example, both of the temporal frameworks include the use of qualitative research in the ‘after’ period to explain variation in outcomes, but this qualitative research occurred during the trials in our study.8 Some of the discussion of the use of qualitative research with trials relates to complex interventions,1–4 but we found that in practice it was also used with drug trials involving complex patient groups17 or occurring in complex environments.30

Our research highlights the difference between the starting place of qualitative research with trials, which may be general (eg, ‘to explore the views of those providing and receiving the intervention’), and the focus of a particular publication, which may be more specific (eg, where exploration of these views identifies problems with acceptability of the intervention). So researchers may not plan to consider the acceptability of an intervention in principle during the main trial but may find that this emerges as an issue and is extremely important because it explains why the trial failed to recruit or the intervention was ineffective. This learning can offer guidance for future trials of similar families of interventions. However, one can also ask whether enough qualitative research is being undertaken at the pretrial stage to reduce the chance of finding unwelcome surprises during the main trial. Another study, which had included unpublished qualitative research,7 found that there was more use of qualitative research before than during the trial, so it may be that this work is being undertaken but not being published.

Previous research has shown that most of the trial and qualitative publications had no evidence of integration at the level of interpretation and that few qualitative studies were used to explain the trial findings.7 Lewin et al identified problems with reporting the qualitative research in that the authors could have been more explicit about how qualitative research helped develop the intervention or explained findings. We found examples where researchers were explicit about learning for the trial,38 but the message that emerges from both Lewin et al's7 research and our own is that this may be something researchers expect to happen more than it actually does in practice.

Qualitative research undertaken with trials is also relevant to systematic reviews, adding value not only to the specific trial but also to reviews of trials.39 Noyes et al identify the value of this research in enhancing the relevance and utility of a systematic review of trials to potential research users and in explaining the heterogeneity of findings in a review. However, they also highlight the problem of retrieving these articles. Our research shows that even when systematic reviewers locate these articles, they will have to work out for themselves the relevance of these articles to the trial-based evidence, because the authors themselves may not have been explicit about this.

Implications

Qualitative research can help to optimise interventions and trial procedures, measure the right outcomes in the right way, and understand more about the health condition under study, which then feeds back into optimising interventions for that condition. Researchers cannot undertake qualitative research about all these issues for every trial. They may wish to consider problems they think they might face within a particular trial and prioritise the use of qualitative research to address these issues, while also staying open to emergent issues. The framework presented here may be productively used by researchers to learn about the range of ways qualitative research can help RCTs, and to report explicitly the implications for future trials or evidence of effectiveness of health interventions so that potential value can be realised. We see this framework as a starting point that we hope will develop further in the future.

Conclusions

A large amount of qualitative research undertaken with specific trials has been published, addressing a wide range of aspects of trials, with the potential to improve the endeavour of generating evidence of effectiveness of health interventions. Researchers can increase the impact of this work on trials by undertaking more of it at the pretrial stage and being explicit within their articles about the learning for trials and evidence-based practice.

Footnotes

Contributors: AOC and KJT designed the study. AOC, KJT and JH obtained funding. AOC, KJT, SJD and AR collected and analysed the data. JH commented on data collection and analysis. AOC wrote the first draft and all authors contributed to the editing of the drafts. AOC is the guarantor of the manuscript.

Funding: Medical Research Council reference G0901335.

Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

- 1. Campbell M, Fitzpatrick R, Haines A, et al. Framework for design and evaluation of complex interventions to improve health. BMJ 2000;321:694–6.

- 2. Campbell N, Murray E, Darbyshire J, et al. Designing and evaluating complex interventions to improve health care. BMJ 2007;334:455–9.

- 3. Craig P, Dieppe P, Macintyre S, et al. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ 2008;337:979–83.

- 4. Oakley A, Strange V, Bonell C, et al. Process evaluation in randomised controlled trials of complex interventions. BMJ 2006;332:413–16.

- 5. Bradley F, Wiles R, Kinmonth AL, et al. Development and evaluation of complex interventions in health services research: case study of the Southampton heart integrated care project (SHIP). BMJ 1999;318:711–15.

- 6. Donovan J, Mills N, Smith M, et al. Improving design and conduct of randomised trials by embedding them in qualitative research: ProtecT (prostate testing for cancer and treatment) study. BMJ 2002;325:766–70.

- 7. Lewin S, Glenton C, Oxman AD. Use of qualitative methods alongside randomised controlled trials of complex healthcare interventions: methodological study. BMJ 2009;339:b3496.

- 8. Creswell JW, Fetters MD, Plano Clark VL, et al. Mixed methods intervention trials. In: Andrew S, Halcomb EJ, eds. Mixed methods research for nursing and the health sciences. Oxford, UK: Blackwell Publishing Ltd, 2009:161–80.

- 9. Sandelowski M. Using qualitative methods in intervention studies. Res Nurs Health 1996;19:359–64.

- 10. Grant MJ, Booth A. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Info Libr J 2009;26:91–108.

- 11. Lefebvre C, Manheimer E, Glanville J. Chapter 6: Searching for studies. In: Higgins JPT, Green S, eds. Cochrane handbook for systematic reviews of interventions. Version 5.0.2 [updated September 2009]. The Cochrane Collaboration, 2009. http://www.cochrane-handbook.org

- 12. Grant MJ. Searching for qualitative research studies on the Medline database [oral presentation]. Qualitative Evidence Based Practice Conference, 14–16 May 2000, Coventry University, UK, 2000.

- 13. Lefebvre C, Manheimer E, Glanville J. Chapter 6: Searching for studies. In: Higgins JPT, Green S, eds. Cochrane handbook for systematic reviews of interventions. Version 5.0.1 [updated September 2008]. The Cochrane Collaboration, 2008. http://www.cochrane-handbook.org

- 14. Ritchie J, Spencer L. Qualitative data analysis for applied policy research. In: Bryman A, Burgess RG, eds. Analysing qualitative data. Routledge, 1994:173–94.

- 15. Popay J, Williams G. Qualitative research and evidence-based healthcare. J R Soc Med 1998;(Suppl 35):32–7.

- 16. Gulbrandsen P, Krupat E, Benth JS, et al. “Four Habits” goes abroad: report from a pilot study in Norway. Patient Educ Couns 2008;72:388–93.

- 17. Romo N, Poo M, Ballesta R, the PEPSA team. From illegal poison to legal medicine: a qualitative research in a heroin-prescription trial in Spain. Drug Alcohol Rev 2009;28:186–95.

- 18. Byng R, Norman I, Redfern S, et al. Exposing the key functions of a complex intervention for shared care in mental health: case study of a process evaluation. BMC Health Serv Res 2008;8:274.

- 19. Dowrick C, Gask L, Hughes JG, et al. General practitioners' views on reattribution for patients with medically unexplained symptoms: a questionnaire and qualitative study. BMC Fam Pract 2008;9:46.

- 20. Zhang G, Malik VS, Pan A, et al. Substituting brown rice for white rice to lower diabetes risk: a focus-group study in Chinese adults. J Am Diet Assoc 2010;110:1216–21.

- 21. Pope DS, Atkins S, Deluca AN, et al. South African TB nurses' experiences of provider-initiated HIV counseling and testing in the Eastern Cape Province: a qualitative study. AIDS Care 2010;22:238–45.

- 22. Mukoma W, Flisher AJ, Ahmed N, et al. Process evaluation of a school-based HIV/AIDS intervention in South Africa. Scand J Public Health 2009;37(Suppl 2):37–47.

- 23. Carnes D, Anwer Y, Underwood M, et al. Influences on older people's decision making regarding choice of topical or oral NSAIDs for knee pain: qualitative study. BMJ 2008;336:142–5.

- 24. Dormandy E, Kavalier F, Logan J, et al. Maximising recruitment and retention of general practices in clinical trials: a case study. Br J Gen Pract 2008;58:759–66.

- 25. Velott DL, Baker SA, Hillemeier MM, et al. Participant recruitment to a randomized trial of a community-based behavioral intervention for pre- and interconceptional women: findings from the Central Pennsylvania Women's Health Study. Womens Health Issues 2008;18:217–24.

- 26. Kohara I, Inoue T. Searching for a way to live to the end: decision-making process in patients considering participation in cancer phase I clinical trials. Oncol Nurs Forum 2010;37:E124–32.

- 27. Campbell MK, Skea ZC, Sutherland AG, et al. Effectiveness and cost-effectiveness of arthroscopic lavage in the treatment of osteoarthritis of the knee: a mixed methods study of the feasibility of conducting a surgical placebo-controlled trial (the KORAL study). Health Technol Assess 2010;14:1–180.

- 28. Tutton E, Gray B. Fluid optimisation using a peripherally inserted central catheter (PICC) following proximal femoral fracture: lessons learnt from a feasibility study. J Orthopaedic Nursing 2009;13:11–8.

- 29. Penn C, Evans M. Recommendations for communication to enhance informed consent and enrolment at multilingual research sites. Afr J AIDS Res 2009;8:285–94.

- 30. Shagi C, Vallely A, Kasindi S, et al. A model for community representation and participation in HIV prevention trials among women who engage in transactional sex in Africa. AIDS Care 2008;20:1039–49.

- 31. Grbich C, Abernethy AP, Shelby-James T, et al. Creating a research culture in a palliative care service environment: a qualitative study of the evolution of staff attitudes to research during a large longitudinal controlled trial (ISRCTN81117481). J Palliat Care 2008;24:100–9.

- 32. Alraek TM. Acupuncture for menopausal hot flashes: a qualitative study about patient experiences. J Altern Complement Med 2009;15:153–8.

- 33. Hoddinott P, Britten J, Pill R. Why do interventions work in some places and not others: a breastfeeding support group trial. Soc Sci Med 2010;70:769–78.

- 34. Farquhar M, Ewing G, Higginson IJ, et al. The experience of using the SEIQoL-DW with patients with advanced chronic obstructive pulmonary disease (COPD): issues of process and outcome. Qual Life Res 2010;19:619–29.

- 35. Nakash R, Hutton J, Lamb S, et al. Response and non-response to postal questionnaire follow-up in a clinical trial: a qualitative study of the patient's perspective. J Eval Clin Pract 2008;14:226–35.

- 36. Abetz L, Rofail D, Mertzanis P, et al. Alzheimer's disease treatment: assessing caregiver preferences for mode of treatment delivery. Adv Ther 2009;26:627–44.

- 37. Chew-Graham CA, Sharp D, Chamberlain E, et al. Disclosure of symptoms of postnatal depression, the perspectives of health professionals and women: a qualitative study. BMC Fam Pract 2009;10:7.

- 38. Hoddinott P, Britten J, Prescott GJ, et al. Effectiveness of policy to provide breastfeeding groups (BIG) for pregnant and breastfeeding mothers in primary care: cluster randomised controlled trial. BMJ 2009;338:a3026.

- 39. Noyes J, Popay J, Pearson A, et al. Chapter 20: Qualitative research and Cochrane reviews. Cochrane Handbook 2011. http://www.cochrane-handbook.org (accessed 16 May 2011).
