Abstract

Do we access information from any object we can see, or do we only access information from objects that we intend to name? In three experiments using a modified multiple object naming paradigm, subjects were required to name several objects in succession when previews appeared briefly and simultaneously in the same location as the target as well as at another location. In Experiment 1, preview benefit—faster processing of the target when the preview was related (a mirror image of the target) compared to unrelated (semantically and phonologically)—was found for the preview in the target location but not a location that was never to be named. In Experiment 2, preview benefit was found if a related preview appeared in either the target location or the third-to-be-named location. Experiment 3 showed the difference between results from the first two experiments was not due to the number of objects on the screen. These data suggest that attention serves to gate visual input about objects based on the intention to name them, and that information from one intended-to-be-named object can facilitate processing of an object in another location.

Keywords: Parallel Processing, Object Naming, Eye Movements, Attention

People do not perceive, think, and act on one object at a time. We can see multiple objects in our environments simultaneously, and we can describe them in sequence rapidly, fluently, and accurately. To do so, we must efficiently manage multiple pieces of information from multiple sources. But how do the mechanisms of visual perception and language production do so?

One line of evidence suggests that we activate linguistic information from visually presented objects, even though we intend to ignore them. However, activation of linguistic information from to-be-ignored objects only seems to arise when there is some uncertainty as to whether the object is, indeed, a distractor. For example, Morsella and Miozzo (2002) presented subjects with two superimposed pictures of objects, one red and one green, and had them name the green object while ignoring the red object—a picture-picture interference paradigm (Tipper, 1985). They found phonological facilitation—subjects named the target object faster when the distractor object was phonologically related than when it was unrelated (see also Navarrete & Costa, 2005; cf., Jescheniak, Oppermann, Hantsch, Madebach & Schriefers, 2009). Meyer and Damian (2007) found similar results regardless of the type of phonological relatedness (i.e., whether target-distractor pairs shared word initial, word final segments, or were homophones). An important aspect of these studies is that because the two objects were superimposed, naming the target object likely encouraged or required some processing of the distractor object as well. Relatedly, Navarrete and Costa (2005) showed that subjects named the color of a pictured object faster when the object name was phonologically similar to the color name than when it was dissimilar; this shows activation of linguistic information when target and distractor appear in the same location.

Distractor objects do not need to superimpose targets to cause activation of their linguistic information. Humphreys, Lloyd-Jones, and Fias (1995) showed that when the target and distractor pictures are partially superimposed but the cue to which one should be named comes at a delay after the offset of the pictures, semantic interference—slower responses when the target and distractor were semantically related than unrelated—is observed. Thus, linguistic information about distractor objects can be activated even when the target and distractor pictures are not superimposed. This is most likely due to the fact that the subject does not know a priori which picture is the target and which picture is the distractor, and therefore some processing of both objects occurs.

Relatedly, in a picture-picture interference paradigm, Glaser and Glaser (1989; Experiment 6) presented subjects with two pictures of objects in different locations, separated by variable SOAs (stimulus onset asynchronies) and required them to name either the first or second object and ignore the other object. Subjects were not able to anticipate the location of the target and the distractor before the first stimulus onset. Results showed semantic interference. Here, the inability to distinguish target from distractor a priori likely leads to access of linguistic information from the distractor.

Finally, semantic interference (Oppermann, Jescheniak, Schriefers, & Gorges, 2010) and phonological facilitation (Madebach, Jescheniak, Oppermann, & Schriefers, 2010) effects from adjacent but not superimposed objects have been observed. In these experiments, subjects were cued to name one picture and told to ignore the other. However, the location of the pictures varied across trials so that subjects were not able to predict which object was the target and which was the distractor. This very likely encouraged processing of both target and distractor, thereby leading to activation of linguistic information from distractors. Furthermore, Oppermann, Jescheniak, and Schriefers (2008) showed that distractor objects caused phonological facilitation when target and distractor objects were part of an integrated scene (e.g., a mouse holding a piece of cheese next to a picture of cheese), but not when the objects were not integrated (e.g., a picture of a finger next to a picture of cheese). One interpretation of these results is that integration into a thematically coherent scene, like superimposition of pictures and uncertainty of location, allows sufficient distractor processing to lead to activation of linguistic information.

In short, evidence for access of information from distractor objects has been observed, but only when the distractor object cannot be excluded from processing a priori. The difficulty of excluding distractor objects can arise because (a) distractor pictures were superimposed on target pictures (Morsella & Miozzo, 2002; Navarrete & Costa, 2005; Tipper, 1985), (b) subjects were unable to anticipate before trial onset which object was the target and which was the distractor because of spatial (Glaser & Glaser, 1989; Madebach et al., 2010; Oppermann et al., 2010) or temporal (Humphreys, Lloyd-Jones, & Fias, 1995) uncertainty, or (c) targets and distractors were integrated into a thematically coherent scene (Oppermann et al., 2008).

One way to interpret this set of results is that the above-described factors that lead to linguistic activation from distractor objects correspond to the allocation of attention<sup>1</sup> to those objects, as compelled by an intention to name them. This may operate through a mechanism that boosts propagation of information from sensory areas to language processing areas in the brain (see Strijkers, Holcomb, & Costa, 2011). When attention is not allocated to distractor objects (that is, when they are not superimposed on targets and when their status as to-be-ignored is known a priori), linguistic activation from distractor objects is not observed (Oppermann et al., 2008). The present study directly tests this idea: namely, whether intention to eventually name a spatially, temporally, and conceptually distinct “distractor” object determines whether information is activated from it when all else is held equal.

The literature described thus far can be characterized as assessing whether exogenous factors (spatial overlap, spatial uncertainty, temporal uncertainty, and thematic integration) cause the influence of an intention to name the target object to be applied also to distractor objects, and thus causes linguistic activation from those objects. Here, we address whether endogenously allocated intention to name might also affect activation of linguistic information from visually perceived objects. That is, one useful way to regulate information efficiently is to only activate linguistic information for objects that speakers intend to name and not activate linguistic information for objects that they do not intend to name (but see Harley, 1984 for intrusions prompted by unintended but visually present environmental stimuli). If so, then we should observe facilitation effects from (spatially, temporally, or conceptually distinct) distractors only when subjects intend to ultimately name those objects and not from distractors that subjects never intend to name.

To assess whether intention to name gates the activation of information from objects, we used a variant of the multiple object naming paradigm (Morgan & Meyer, 2005) with a gaze-contingent display change manipulation (Pollatsek, Rayner, & Collins, 1984; Rayner, 1975). In the standard paradigm, three objects are presented simultaneously on a computer screen in an equilateral triangle, and the subject is instructed to name them in a prescribed order. The object of interest is the image in the second-to-be-named location. While the first image is fixated, the second image is a preview object that changes to the target (named object) when the subject makes a saccade to it. These studies report preview benefit—faster processing of the target when the preview was related compared to unrelated to the target. Preview benefit can be obtained from visually similar objects (Pollatsek et al., 1984), objects that represent the same concept (Meyer & Dobel, 2003; Pollatsek et al., 1984), and objects with phonologically identical (homophonous) names (Meyer, Ouellet, & Hacker, 2008; Morgan & Meyer, 2005; Pollatsek et al., 1984); these studies thus provide evidence that visual, semantic, and phonological representations of the upcoming object are processed concurrently during fixation on the current object (for reviews see Meyer, 2004; Schotter, 2011). In all, this shows access of information from an object far from fixation in extrafoveal vision (approximately 15–20 degrees) that is eventually to be named and therefore should not be ignored, but is not the current target of production.

The question then is whether activation of information from these extrafoveal objects in the multiple-object naming paradigm is determined by the intention to name those objects. One possibility is that intention-to-name does not gate information activation, so that any item within view affects production-relevant behavior. If this were the case, we would expect to see an effect of previews of objects in any location (regardless of whether they were to-be-named or ignored) on processing of a target object. Alternatively, objects may only have information activated about them if they are relevant to the task at hand—an object that needs to be named. If this were the case, we would expect to see an effect on processing of a target object of previews of objects only in locations that subjects anticipate needing to name. To test these possibilities, we modified the multiple object naming paradigm to determine whether information about objects that can be completely excluded from the task affect processing of objects that are intended to be processed.

Experiment 1

In Experiment 1, we tested whether unnamed objects are processed and, if so, whether the information obtained from unnamed objects can affect processing of an object in another location. In this experiment, there were four objects on the screen, but only three were to be named. The unnamed object was always in the same location throughout the entire experiment and subjects were told at the outset that they were never to name it. Fifty milliseconds after trial onset, while the subject was looking at the first object, previews appeared in the target (second) location and in the unnamed location for 200 ms; the relationship between those previews and the target was manipulated. Previews in both locations were either related (a mirror image of the target) or unrelated (a different object), yielding a 2 (preview-target relationship) × 2 (location) design. We used a mirror image preview so that the related preview had the greatest opportunity to cause a preview benefit even from the unnamed location, but so that there would be a visual difference between the preview in the second location and the target on all trials (thus, if preview benefit were obtained for the preview in the second location but not the preview in the unnamed location, it could not be due to complete visual overlap). Mirror image previews produce preview benefits almost as large as those obtained from identical previews (Henderson & Siefert, 1999; cf., Pollatsek et al., 1984)<sup>2</sup>.

Some previous studies of object naming have reported naming latencies when the eyes start on a fixation cross and the target object is named first (Morgan & Meyer, 2005; Pollatsek et al., 1984). However, when subjects name a sequence of objects and the object of interest is not the first to-be-named object (as here), it is difficult to precisely measure the voice onset time of a noun that is embedded between other words. Instead, gaze duration (the sum of the durations of all fixations on the object before moving to another object) has been used as the primary index of processing difficulty (Meyer et al., 2008; Morgan & Meyer, 2005). Gaze durations are sensitive to many properties of objects in production studies, such as visual degradation (Meyer, Sleiderink & Levelt, 1998), word frequency (Meyer et al., 1998), word length (Zelinsky & Murphy, 2000), codability (Griffin, 2001), and phonological properties (Meyer & van der Meulen, 2000). Given that fixation times are diagnostic of production effects, in the present experiments, gaze durations on the target are reported instead of naming latencies<sup>3</sup>.
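The gaze duration measure defined above can be illustrated with a minimal sketch. The fixation record format (a list of region label and duration pairs) and the function name `gaze_duration` are assumptions made for illustration only; they are not the format or code used in the experiments.

```python
from typing import List, Tuple

def gaze_duration(fixations: List[Tuple[str, int]], target: str) -> int:
    """Sum the durations (ms) of the consecutive fixations that make up
    the first visit to `target`, stopping once the eyes move elsewhere."""
    total = 0
    on_target = False
    for region, duration_ms in fixations:
        if region == target:
            on_target = True
            total += duration_ms
        elif on_target:
            # The eyes have left the target: the first visit is over.
            break
    return total

# Hypothetical fixation sequence: (region label, fixation duration in ms).
fixations = [("obj1", 410), ("obj2", 250), ("obj2", 380),
             ("obj3", 500), ("obj2", 120)]
print(gaze_duration(fixations, "obj2"))  # 630
```

The later return to the second object (120 ms) is not counted, because gaze duration only covers fixations made before the eyes move to another object.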

Based on prior multiple-object naming research, preview benefit should be observed from previews at the second (target) location. If there were a significant preview benefit from the unnamed object preview, it would suggest that information is not gated by attention as implemented by intention-to-name. On the other hand, if the unnamed location preview does not provide preview benefit to processing the target it would suggest that that object was excluded from processing altogether, presumably because the subject did not need to name it.

Method

Subjects

Twenty-four members of the University of California, San Diego community participated in the experiment. All were native English speakers who were naïve concerning the purpose of the experiment. They all had normal or corrected-to-normal vision. In all experiments, subjects’ ages ranged from 18 to 27.

Apparatus

Eye movements were recorded via an SR Research Ltd. Eyelink 1000 eye tracker in remote setup (so that there was no head restraint, but head position was monitored, as well) with a sampling rate of 500 Hz. Viewing was binocular, but only the right eye was monitored. Following calibration, eye position errors were less than 1°. Subjects were seated approximately 66 cm away from the monitor, a 19 in Viewsonic VX922 LCD monitor (Viewsonic Corporation, Walnut, CA).

Materials and Design

One hundred and ninety-two line drawings of objects with monosyllabic names were selected from the International Picture Naming Project database (IPNP, Bates et al., 2003). Using measures listed in the database<sup>4</sup>, we selected objects that had a large proportion of usable responses from the IPNP (M = .98, SD = .03), high name agreement for the dominant name (M = 2.58 alternative names, SD = 1.64), and fast response times (M = 931 ms, SD = 161). Object names ranged in log transformed CELEX frequency from 0 to 7.40 (M = 3.32, SD = 1.38) and object images ranged in complexity (as assessed by the jpg file size) from 3730 bytes to 48626 bytes (M = 15758 bytes, SD = 7720). These objects consisted of 48 target items (each paired with its mirror image preview), 48 unrelated preview objects, 48 objects that appeared in the first location, and 48 that appeared in the third location. Unrelated objects were chosen so that they were not semantically or phonologically related to the target, and mirror image previews were created by flipping the target across the vertical midline (so that the right side of the image was on the left and vice versa). Target and unrelated items did not differ significantly in terms of percent usable responses, log frequency, or visual complexity (all ps > .2).

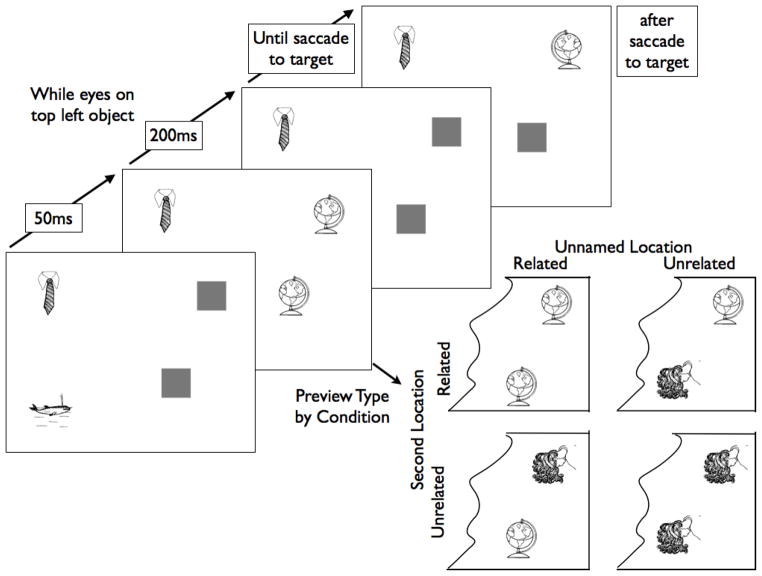

In this experiment, there were four object locations on the screen (see Figure 1) and subjects were instructed to name the top left, then the top right, and then the bottom left image. Previews appeared in the second location and the unnamed location (location 4) and were either related (mirror image) or unrelated to the target that appeared when the subject made a saccade to the second location. When the subject’s saccade crossed an invisible boundary located two degrees to the right of the right-hand edge of the first object, the target appeared. The same unrelated object was used in both the second and unnamed locations for each target. Crossing preview-target relationship with preview location yielded a 2 × 2 design. Previews appeared in both locations to ensure that the same visual and timing manipulations were implemented on the screen across all trials, so that the only difference across conditions was the relationship between the preview in a given location and the target.

Figure 1.

The display during a trial in Experiment 1. Subjects are to name the top-left object, then the top-right object, then the bottom-left object; the bottom-right object is never named. During fixation on the first (top left) object, previews appear in both the second and unnamed locations. Once the subject makes a saccade to the second object the target appears there. On a correct trial, a subject would say “Tie, globe, whale.” The bottom right hand of the figure represents the four possible preview combinations in the experiment: related (globe) and unrelated (hair).

Procedure

To ensure that subjects used the correct names for items, the procedure began with a training session. Each of the 192 line drawings (including the unnamed preview objects) was presented individually on the computer screen and subjects named them using bare nouns. Naming errors were corrected and subjects were asked to use the correct name for the remainder of the experiment. Each object was displayed as a black line drawing in the center of the computer screen until the correct response was made or until the experimenter corrected the subject. It is important to note that the unnamed preview objects were subjected to the same training procedure as the target (mirror image preview) objects and therefore any lack of a preview benefit cannot be due to the fact that they had not been familiarized with the object before the experiment started.

After training, the subject put on a headset microphone and a target sticker was placed on the subject’s forehead (to control for head movement when measuring eye movements). The eye tracker was calibrated using a 9-point calibration, allowing for measurement of eye location in both the x- and y-axes. On each trial, subjects saw a fixation point in the center of the screen. If the eye tracker was accurately calibrated, the experimenter pressed a button, causing the fixation point to disappear and a black box to appear in the top left quadrant of the screen (the location of the first object). Once the subject moved his or her eyes into this region, images appeared in all locations: the two objects appeared in the first- and third-to-be-named locations and the two gray boxes appeared in the preview locations (the second-to-be-named and unnamed locations). The objects were black line drawings on a white background and were arranged, together with the gray boxes, in the four quadrants of the screen so that the second and unnamed locations were equidistant from the first object location (21 degrees of visual angle, measured from midpoint to midpoint) and the third object location was the same distance (21 degrees) from the second object location. All objects subtended approximately 5 degrees of visual angle.
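To give a sense of scale, the visual angles reported here can be converted to approximate on-screen extents. The sketch below uses the standard visual-angle formula and the viewing distance of approximately 66 cm given in the Apparatus section. It assumes the extent is centered on, and perpendicular to, the line of sight, which is only an approximation for this display; the function names are illustrative.

```python
import math

def visual_angle_to_cm(angle_deg: float, viewing_distance_cm: float) -> float:
    """On-screen extent (cm) that subtends `angle_deg` at the given distance."""
    return 2.0 * viewing_distance_cm * math.tan(math.radians(angle_deg) / 2.0)

def cm_to_visual_angle(extent_cm: float, viewing_distance_cm: float) -> float:
    """Visual angle (degrees) subtended by `extent_cm` at the given distance."""
    return math.degrees(2.0 * math.atan(extent_cm / (2.0 * viewing_distance_cm)))

VIEWING_DISTANCE_CM = 66.0  # approximate distance reported in the Apparatus section

separation_cm = visual_angle_to_cm(21.0, VIEWING_DISTANCE_CM)   # object separation
object_size_cm = visual_angle_to_cm(5.0, VIEWING_DISTANCE_CM)   # object size
print(f"separation: {separation_cm:.1f} cm, object size: {object_size_cm:.1f} cm")
```

Under these assumptions, the 21 degree separation corresponds to roughly 24 cm on the screen and each object to roughly 6 cm.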

The target and unnamed locations started as gray boxes and 50 ms after trial onset (fixation in the box that triggered the display to appear) previews appeared in both locations. Previews appeared for 200 ms and then reverted back to gray boxes. When the subject’s eyes crossed an invisible boundary to the right of the first object, the gray box in the second location changed to the target. This occurred regardless of whether the display changes of the preview objects were complete. The display change took (on average) 15 ms to complete and occurred during a saccade (when vision is suppressed), which took approximately 50–60 ms to complete. Although some subjects were aware that display changes occurred (both the briefly presented previews and the changes to the target), they only reported being able to notice what the preview object was about 10% of the time, and most subjects were unable to notice any relationship between the previews and target. Objects remained in view until the subject named them all and the experimenter pressed a button to end the trial. The experimenter coded whether the participant named both the first and second objects correctly online. The instructions did not mention any display changes. The experiment lasted approximately 45 minutes.
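The timing of the display changes described above can be summarized as a small decision rule. This is only a sketch of the logic as described in the text, not the experiment software: the function name is hypothetical, and the treatment of the exact window edges (50 ms inclusive, 250 ms exclusive) is an assumption.

```python
PREVIEW_ONSET_MS = 50       # previews appear 50 ms after trial onset
PREVIEW_DURATION_MS = 200   # previews stay on for 200 ms

def second_location_content(ms_since_onset: float, boundary_crossed: bool) -> str:
    """Return what is shown in the second (target) location.

    Once the eyes cross the invisible boundary, the target is shown
    regardless of whether the preview display changes have completed.
    """
    if boundary_crossed:
        return "target"
    preview_offset = PREVIEW_ONSET_MS + PREVIEW_DURATION_MS
    if PREVIEW_ONSET_MS <= ms_since_onset < preview_offset:
        return "preview"
    return "gray box"

for t in (0, 100, 300):
    print(t, second_location_content(t, boundary_crossed=False))
print(100, second_location_content(100, boundary_crossed=True))
```

The check on `boundary_crossed` comes first because the text states that the change to the target does not wait for the preview sequence to finish.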

Results and Discussion

Data were excluded if (a) subjects misnamed or disfluently named the first or second object (i.e., the first word in the utterance was not the target name; 2% of the data), (b) subjects looked at the unnamed object location, looked back from the target object to the first object, or there was track loss on the target object (31% of the data), or (c) gaze durations on the target object were more than 2.5 standard deviations from the mean, shorter than 325 ms, or longer than 1325 ms (7% of the data; gaze durations outside this range would likely not reflect normal, fluent naming). After excluding these data, the number of observations did not significantly differ across conditions (all ps > .5). Nevertheless, these exclusions left different numbers of missing data points across subjects, items, and conditions, so we report inferential statistics based on linear mixed-effects models (LMMs) instead of traditional ANOVAs, which are not robust to unbalanced designs. In the LMMs, preview type at each location was centered and entered as a fixed effect, and subjects and items were entered as crossed random effects (see Baayen, Davidson, & Bates, 2008). To fit the LMMs, the lmer function from the lme4 package (Bates & Maechler, 2008) was used within the R Environment for Statistical Computing (R Development Core Team, 2009). We report regression coefficients (b) that estimate the effect size (in milliseconds) of the reported comparison, standard errors, and the t-value of the effect coefficient but not the t-value’s degrees of freedom<sup>5</sup>. For all LMMs reported, we started with a model that included all random effects, including random intercepts and random slopes for all main effects and interactions. We then iteratively removed effects that did not significantly increase the model’s log-likelihood and report the statistics output by the resulting model.
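The gaze duration trimming in criterion (c) and the centering of the preview-type predictors can be sketched as follows. This is an illustration under stated assumptions, not the analysis code: the text does not specify whether the 2.5 standard deviation criterion was computed over all trials or per subject, so the sketch uses the mean and standard deviation of whatever list it is given, and the function names are hypothetical. The LMMs themselves were fit with lmer in R and are not reproduced here.

```python
from statistics import mean, stdev
from typing import List

def trim_gaze_durations(durations: List[float], low: float = 325.0,
                        high: float = 1325.0, n_sd: float = 2.5) -> List[float]:
    """Keep gaze durations within [low, high] ms and within n_sd sample
    standard deviations of the mean of the supplied durations (assumption:
    the SD criterion is evaluated on the untrimmed list)."""
    m, sd = mean(durations), stdev(durations)
    return [d for d in durations
            if low <= d <= high and abs(d - m) <= n_sd * sd]

def center(codes: List[float]) -> List[float]:
    """Center a predictor (e.g., 0 = mirror image, 1 = unrelated) on its mean."""
    m = mean(codes)
    return [c - m for c in codes]

# Hypothetical gaze durations (ms) and preview-type codes.
print(trim_gaze_durations([300, 600, 650, 700, 720, 1400]))  # [600, 650, 700, 720]
print(center([0, 1, 1, 0]))                                  # [-0.5, 0.5, 0.5, -0.5]
```

Centering the two preview-type predictors means that each main effect is estimated at the average level of the other predictor, which is what allows the coefficients to be read as main effects alongside the interaction.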

Preview conditions did not significantly affect gaze durations on Object 1 (all ts < 2). This is unsurprising, because while Object 1 is viewed, the target (Object 2) has not yet appeared and therefore there is not yet a relationship between the preview and target. Means and standard errors of the gaze duration on the target are shown in Table 1. Gaze durations on the target were shorter when the preview in the second location was a mirror image compared to unrelated, but there was no difference in gaze durations when the preview in the unnamed location was a mirror image compared to unrelated.

Table 1.

Gaze durations (in ms) on the target as a function of preview type in the second and unnamed locations in Experiment 1. Standard errors of the mean are in parentheses.

| Second Location Preview | Unnamed Location Preview: Mirror Image | Unnamed Location Preview: Unrelated | Preview Benefit | Average Preview Benefit |
|---|---|---|---|---|
| Mirror Image | 683 (9) | 688 (9) | 5 | 3 |
| Unrelated | 705 (9) | 706 (9) | 1 | |
| Preview Benefit | 19 | 25 | | |
| Average Preview Benefit | 22 | | | |

The LMM revealed a significant preview benefit based on the relatedness of the preview in the second location (b = 18.94, SE = 7.43, t = 2.55; an average preview benefit of 22.5 ms). Subjects looked at the target for less time when the preview in that location was a mirror image of the target that ultimately appeared there than when it was unrelated. There was no effect of the relatedness of the unnamed object (b = 10.26, SE = 7.43, t < 2; an average preview benefit of 3 ms). Thus, information was not obtained from the preview in the unnamed location and therefore the relatedness between that preview and the target (mirror image vs. unrelated) did not affect processing of the target.

The interaction between the identities of the previews in the second and unnamed locations was not significant (b = 7.09, SE = 14.84, t < .5). The lack of interaction shows that the benefit observed from a mirror image versus unrelated preview at the target location was not affected by whether the simultaneous preview from the unnamed location was a mirror image or was unrelated. Thus, mirror image versus unrelated previews from the unnamed location neither directly affected target gaze durations (the absence of a main effect of the preview type at the unnamed location), nor did they modulate the significant effect observed from previews at the target location (the absence of an interaction between the preview types at both locations). In short, the preview in the unnamed location had no effect on processing of the target at all. Note that the lack of preview benefit from the unnamed location was not due to distance from fixation, as both preview objects were equidistant from the first object.

There is another possible explanation for the lack of an effect of the preview in the unnamed location observed in Experiment 1: Even though information may be processed from the unnamed location, it may be confined to that location and may not affect processing of another object (e.g., Hollingworth & Rasmussen, 2010, but see Henderson & Siefert, 2001). Indeed, this could be seen as consistent with the evidence above showing that linguistic information is activated from distractor pictures that are in the same location as the target. To test this possibility, in Experiment 2 the procedure from Experiment 1 was modified so that the previews appeared in the target location and in another, to-be-named location: the location where the third object would eventually appear.

Experiment 2

If the previews from the unnamed location in Experiment 1 were ineffective because information that is activated from unnamed locations is confined to objects processed at that location, then in Experiment 2, mirror image versus unrelated preview in a to-be-named but different (i.e., the third object) location should also be ineffective, because information activated from that location should only affect processing at the third location. If, on the other hand, the effect from the unnamed location in Experiment 1 was absent because the unnamed object was not intended to be named, then in Experiment 2 when the preview in the other location is one that is also eventually to-be-named, we should see an effect of the preview in the third location on processing of the target. Indeed, data from Henderson and Siefert (2001) suggest that when subjects move their eyes to an extrafoveal pair of objects (located side-by-side) and are required to name one of them, processing of the target is facilitated both when a preview of that target is available in the same location as where the target ultimately appears as well as when it switches its location with the other object. Furthermore, it would suggest that the lack of preview benefit from the unnamed object preview in Experiment 1 was because speakers do not activate information from objects that are irrelevant for the task at hand.

Method

Subjects

Twenty members of the University of California, San Diego community participated in the experiment with the same inclusion criteria as Experiment 1.

Design

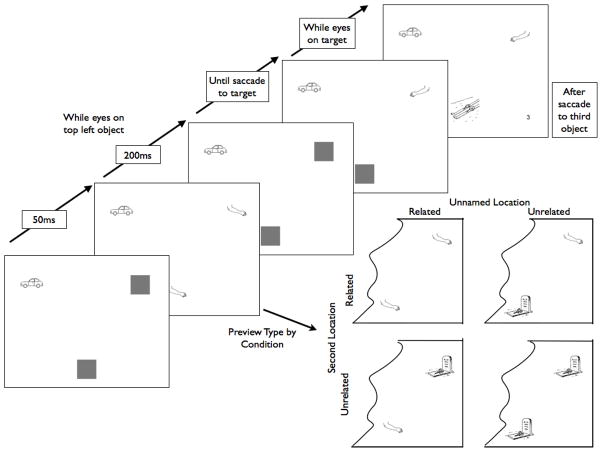

Experiment 2 was like Experiment 1 except that previews appeared in the second and third locations instead of the second and unnamed locations (see Figure 2). Consequently, there were only three objects on the screen, arranged in an inverted equilateral triangle (21 degrees of visual angle apart), but a digit appeared in a fourth location upon a saccade to the third object to give the subjects a reason to leave the third object (i.e., in order to name the digit). Additionally, the object to be named in the third location did not appear until the saccade toward it, to ensure that the unrelatedness between the preview and the third object did not interfere with processing of the target. Note that when related, the preview in the third location was a mirror image of the eventual target in the second location. As in Experiment 1, both previews were equidistant from the first object<sup>6</sup>.

Figure 2.

The display during a trial in Experiment 2. Subjects are to name the top-left object, then the top-right object, then the bottom-left object, then the digit. During fixation on the first (top left) object, previews appear in both the second and third locations. Once the subject makes a saccade to the second object the target appears there. Once the subject makes a saccade to the third location the third object appears there and a digit appears in the bottom right hand corner. On a correct trial, a subject would say “Car, arm, skis.” The bottom right hand of the figure represents the four possible preview combinations in the experiment: related (arm) and unrelated (grave).

Results and Discussion

The same data filtering process and analyses as in Experiment 1 were used. Data were excluded if subjects (a) misnamed or disfluently named the first or second object (3% of the data), (b) looked at the unnamed object location, looked back from the target object to the first object, or there was track loss on the target object (14% of the data), or (c) gaze durations on the target object were more than 2.5 standard deviations from the mean, shorter than 325 ms or longer than 1325 ms (8% of the data). After excluding these data the number of observations did not significantly differ across conditions (all ps > .25).

As in Experiment 1, preview conditions did not significantly affect gaze durations on Object 1 (all ts < 2). Means and standard errors of gaze duration on the target are shown in Table 2. Gaze durations on the target were shorter when at least one of the two previews was a mirror image of the target than when both the previews were unrelated.

Table 2.

Gaze durations on the target as a function of preview type in the second and third locations in Experiment 2. Standard errors of the mean are in parentheses.

| Second Location Preview | Third Location Preview: Mirror Image | Third Location Preview: Unrelated | Preview Benefit | Average Preview Benefit |
|---|---|---|---|---|
| Mirror Image | 600 (8) | 598 (8) | −2 | 11.5 |
| Unrelated | 609 (8) | 634 (8) | 25 | |
| Preview Benefit | 9 | 36 | | |
| Average Preview Benefit | 22.5 | | | |

The LMM revealed a main effect of relatedness of the preview in the second location (b = 20.34, SE = 10.13, t = 2.01; an average preview benefit of 22.5 ms). As in Experiment 1, information obtained from the location where the target would ultimately appear facilitated processing of the target if the preview was a mirror image of the target. There was no main effect of relatedness of the preview in the third location (b = 8.60, SE = 6.01, t < 1; an average preview benefit of 11.5 ms), indicating no overall effect from the third location, but there was a significant interaction between the identities of the previews in the second- and third-to-be-named locations (b = 25.19, SE = 12.03, t = 2.10). The interaction indicates that if either preview is a mirror image of the target, processing of the target is significantly faster than if both previews are unrelated. The fact that previews from the third location facilitated gaze durations at the target location shows that subjects obtained information from both to-be-named locations and that information obtained from each can affect subsequent processing.

Thus, whether the preview at the third location was a mirror image or unrelated affected gaze durations on the object at the second location. This shows that information is obtained from at least two objects in parallel, and that the information obtained from an object can affect processing of objects at other locations. Furthermore, this implies that the lack of preview benefit found in Experiment 1 is due to the fact that subjects never needed to name the unnamed object and could therefore exclude that object a priori. Thus, information can be obtained from multiple objects simultaneously and can be used to benefit processing of objects in other locations, provided that the object supplying the information is intended to be named.

Experiment 3

It is possible that the difference in the pattern of results found between Experiment 1 (no processing of a never-to-be-named object) and Experiment 2 (processing of a to-be-named object) was due to the difference in the number of objects on the screen. This would be so if, when there are three objects on the screen (in Experiment 2), subjects are able to extract more information from all objects in parallel, whereas when there are four objects on the screen (in Experiment 1), the additional object in view leads to less processing of those objects in parallel. To test this possibility, in Experiment 3 we presented three objects on the screen, of which subjects were only required to name two (in addition to a digit). If we found no effect of the preview in the unnamed location it would suggest that intention to name an object in a particular location is the constraining factor leading to whether or not that object is processed. If, however, we found an effect of the preview in the unnamed (third) location it would suggest that the lack of an effect of the unnamed location in Experiment 1 was due to the greater number of objects on the screen.

Method

Subjects

Twenty-four members of the University of California, San Diego community participated in the experiment; the inclusion criteria were the same as in the previous experiments.

Design

Experiment 3 was similar to Experiment 2 except that subjects were told they only had to name the first object, the second object, and then a digit in the lower right-hand corner of the display. As in Experiment 2, previews appeared in the second and third locations, but note that in this experiment the third object was an unnamed object, as in Experiment 1. The digit appeared upon a saccade to the second object to give the subjects a reason to leave the second object (i.e., in order to name the digit). As in the prior experiments, both previews were equidistant from the first object (21 degrees).

Results and Discussion

The same data filtering process and analyses as in Experiments 1 and 2 were used. Data were excluded if subjects (a) misnamed or disfluently named the first or second object (< 1% of the data), (b) looked at the unnamed object location, looked back from the target object to the first object, or there was track loss on the target object (19% of the data), or (c) gaze durations on the target object were more than 2.5 standard deviations from the mean, shorter than 325 ms or longer than 1325 ms (8% of the data). After excluding these data the number of observations did not significantly differ across conditions (all ps > .15).

As in Experiments 1 and 2, preview conditions did not significantly affect gaze durations on Object 1 (all ts < 2). Means and standard errors of gaze durations on the target are shown in Table 3. Gaze durations on the target were shorter when the preview in the target location was a mirror image than when it was unrelated to the target, but they did not differ depending on whether the preview in the unnamed location was a mirror image or unrelated.

Table 3.

Gaze durations on the target as a function of preview type in the second and unnamed (third) locations in Experiment 3. Standard errors of the mean are in parentheses.

| Second Location Preview | Unnamed (Third) Location Preview: Mirror Image | Unnamed (Third) Location Preview: Unrelated | Preview Benefit | Average Preview Benefit |
|---|---|---|---|---|
| Mirror Image | 582 (23) | 586 (23) | 4 | 0.5 |
| Unrelated | 597 (23) | 594 (25) | −3 | |
| Preview Benefit | 15 | 8 | | |
| Average Preview Benefit | 11.5 | | | |

The LMM revealed a main effect of relatedness of the preview in the second location (b = 11.96, SE = 5.52, t = 2.17; an average preview benefit of 11.5 ms). As in Experiments 1 and 2, information from the location where the target would ultimately appear was obtained and facilitated processing of the target if the preview was related to (a mirror image of) the target.

There was no main effect of relatedness of the preview in the third (unnamed) location (b = 1.08, SE = 5.52, t < .5; an average preview benefit of .5 ms). As in Experiment 1, information was not obtained from an object that was not intended to be named and therefore did not affect processing of the target. Additionally, there was no interaction between the identities of the previews in the second and third (unnamed) locations (b = −9.61, SE = 11.05, t < 1). The similarity between these results and those found in Experiment 1 (no main effect of the unnamed preview and no interaction between the two preview types) shows that intention to name, not the number of objects on the screen, is the determining factor as to whether an object will be processed in parallel with another object.
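The reported t values are the ratio of each estimate to its standard error, evaluated against the criterion of |t| ≥ 2 described in the footnotes. A minimal sketch of that check for the Experiment 3 estimates follows; the helper names are illustrative and are not taken from the original analysis scripts.

```python
def t_ratio(b, se):
    """Ratio of a fixed-effect estimate to its standard error."""
    return b / se


def is_significant(b, se, criterion=2.0):
    """Apply the |t| >= 2 rule of thumb used in the paper."""
    return abs(t_ratio(b, se)) >= criterion


# (estimate, standard error) pairs as reported for Experiment 3.
EXPERIMENT_3 = {
    "second-location preview": (11.96, 5.52),
    "unnamed-location preview": (1.08, 5.52),
    "second x unnamed interaction": (-9.61, 11.05),
}

for name, (b, se) in EXPERIMENT_3.items():
    print(f"{name}: t = {t_ratio(b, se):.2f}, significant = {is_significant(b, se)}")
```

Only the second-location preview effect passes the criterion, in line with the pattern described above.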

Because the designs of Experiments 2 and 3 were essentially identical (except for the manipulation of intention to name the object in the other preview location) we performed a between-experiment analysis to test whether the pattern of results was statistically different across the two experiments. The analysis revealed a significant three-way interaction between preview in the second location, preview in the third location, and experiment (b = −32.64, SE = 15.17, t = −2.15), demonstrating that the significant interaction in Experiment 2 is significantly different from the null interaction in Experiment 3.
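The logic of the between-experiment comparison can be illustrated descriptively with the raw cell means from Tables 2 and 3. The sketch below computes each experiment's interaction as a difference of differences and then takes the difference between experiments. It is a rough illustration under stated assumptions, not the mixed-model analysis: the names are hypothetical, and the sign of the result depends on the (assumed) order of subtraction.

```python
# Cell means (ms), keyed by (second-location preview, third-location preview).
EXP_2 = {
    ("mirror", "mirror"): 600,
    ("mirror", "unrelated"): 598,
    ("unrelated", "mirror"): 609,
    ("unrelated", "unrelated"): 634,
}
EXP_3 = {
    ("mirror", "mirror"): 582,
    ("mirror", "unrelated"): 586,
    ("unrelated", "mirror"): 597,
    ("unrelated", "unrelated"): 594,
}


def interaction_contrast(means):
    """Third-location benefit when the second preview is unrelated,
    minus the same benefit when the second preview is a mirror image."""
    when_unrelated = means[("unrelated", "unrelated")] - means[("unrelated", "mirror")]
    when_mirror = means[("mirror", "unrelated")] - means[("mirror", "mirror")]
    return when_unrelated - when_mirror


exp2 = interaction_contrast(EXP_2)  # (634 - 609) - (598 - 600)
exp3 = interaction_contrast(EXP_3)  # (594 - 597) - (586 - 582)
print(exp2, exp3, exp3 - exp2)
```

The raw difference between the two contrasts is of similar size to the reported three-way interaction estimate, although the model-based estimate additionally accounts for subject and item variability.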

General Discussion

These experiments illustrate two main points. First, preview benefits are only obtained from an object that will eventually be named. Intention to name, which we take to be an internally driven, attentional influence, determines the extent to which an extrafoveal object will be processed. Second, given that an object will be named, the information obtained from that object can influence processing of an object in another location. Because both the preview objects were equidistant from the first object in all experiments, distance from fixation cannot account for the presence or absence of preview benefit. Furthermore, Experiment 3 demonstrated that the lack of preview benefit from the unnamed location in Experiment 1 was not due to the number of objects on the screen.

These experiments confirm that speakers activate information about upcoming objects while they are fixating and processing the current object (Malpass & Meyer, 2010; Meyer et al., 2008; Morgan & Meyer, 2005; Morgan et al., 2008). This is supported by the preview benefit provided by the object in the second location in all three experiments. As mentioned above, this raises the question of how a potentially large amount of incoming information can be gated. The present experiments suggest that speakers exclude from processing objects they do not intend to name, when possible (e.g., based on location). This is supported by the lack of preview benefit based on the unnamed location in Experiments 1 and 3 compared to the preview benefit that was provided by the third object in Experiment 2. When an extrafoveal object can be excluded from processing a priori, information from it will not become activated. By this view, intention-to-name is responsible for excluding influences from many items that are available, and restricts activated information to what will be needed to support production.

These studies suggest that objects that are neither fixated nor intended to be named will not cause activation of information and those that are not currently fixated but are intended to be named will cause activation of information before they are fixated. But, given that an upcoming object is intended to be named, how do speakers refrain from naming that object when it is different from a current target? One possibility is that information is activated less quickly from areas outside the fovea, due to acuity limitations, and therefore any information that is obtained from a foveal object (i.e., the first object) would reach threshold before the activation obtained extrafoveally (i.e., the second to-be-named object). Therefore, the representation of the extrafoveal object would not become fully activated until it is fixated and processed foveally (Morgan, van Elswijk, & Meyer, 2008). Furthermore, this priority of the first object could also be considered an attentional restriction, as working memory demands at fixation influence extrafoveal preview benefits (Malpass & Meyer, 2010). Another possibility is that only some types of information can be obtained in parallel. The present study only demonstrated mirror image preview benefits. Prior research has demonstrated that phonological preview benefits arise in the absence of visual or semantic overlap (i.e., homophones; Meyer et al., 2008; Morgan & Meyer, 2005; Pollatsek et al., 1984). The paradigm used in the present experiments could be used to test whether phonological representations of other extrafoveal objects are activated or whether the phonological preview benefits seen in these studies are due to attention shifting to the next object before the eyes (see Rayner, 1998, 2009 for reviews), during which phonological information is activated.

As mentioned in the introduction, the current study cannot determine whether the information obtained from these objects is conceptual, lexical, or phonological, because the mirror image preview and the target shared all of those features. Future research can employ different types of previews to test what type of information is obtained from an extrafoveal object, given that it is intended to be named. Previous studies that have demonstrated semantic interference have used within-category semantic distractors (e.g., lion and tiger), which would activate separate representations that would compete for selection. The present study used previews that activate the same representation (because the preview and target represented the same object) and would therefore yield facilitation, not interference. If within-category preview-target pairs were used we might see semantic interference similar to that seen in picture-picture interference studies.

The present study does not test whether attention is allocated to objects as a consequence of the intention to name them or whether any type of task will cause the representation of an object to be activated. A simple modification to the present paradigm requiring subjects to make some other response (e.g., categorization) to the objects instead of naming them would test this. Thus, strictly speaking, the present studies show that “intention to process,” here as implemented by an intention to name, leads to activation of information from an object even before it is fixated.

With respect to the prior literature reporting distractor object processing, these results suggest that those objects were processed (phonologically and/or semantically) because the subjects were not able to exclude them from processing a priori. That is, the distractor objects were processed before the system could determine which object was the target (to-be-named) and which was a distractor (to-be-ignored). Similarly, in experiments showing phonological facilitation from a picture when subjects are required to name the color in which it is displayed (e.g., Navarrete & Costa, 2005), the facilitation likely arises because processing of the object cannot be separated from processing of its color and therefore the object cannot be excluded a priori. The present study suggests that phonological facilitation and semantic interference would not be observed in such experiments if subjects were able to determine a priori which object they needed to process and which one they needed to ignore.

In sum, the reported experiments show that if an object is to be named, the speaker activates linguistic information about it before he or she looks at it. That activation can benefit processing not only of the object in that location, but also objects that occupy other spatial locations. Parallel activation of objects, now widely reported in multiple object naming studies (Malpass & Meyer, 2010; Meyer & Dobel, 2004; Meyer, Ouellet & Hacker, 2008; Morgan & Meyer, 2005; Morgan, van Elswijk & Meyer, 2008; see Meyer, 2004 and Schotter, 2011 for reviews), can be restricted such that information is not activated from task-irrelevant objects that can be excluded a priori. These observations point to the strategies that mechanisms of perception and production use to manage information when we talk about what we see.

Acknowledgments

This research was supported by Grants HD26765 and HD051030 from the National Institute of Child Health and Human Development and Grant DC000041 from the National Institute on Deafness and Other Communication Disorders. Portions of the data were presented at the 2010 meeting of the Psychonomic Society, St. Louis, MO, November, and at the 2011 European Conference on Eye Movements, Marseille, France, August. We would like to thank Lauren Kita, Jullian Zlatarev, Tara Chaloukian and Samantha Ma for help with data collection and Klinton Bicknell for help with data analysis.

Footnotes

There is a large literature on attention and many debates over what exactly attention is (Pashler, 1999). A discussion of these debates is beyond the scope of the paper and we do not intend this paper to make any conclusions bearing on such a debate. Therefore, for the purposes of this paper, we will use the term attention as an umbrella mechanism, with intention to name as one way of allocating attention, because object naming is not an automatic process (i.e., in order to name an object one must choose to do so and allocate attention to it and this process operates within conscious awareness and control).

It is worth noting that because the mirror image preview shares almost all information [except orientation] with the target, this experiment will not pinpoint which level of representation can cause any observed preview benefit. However, given our goal of determining whether information is activated at all from never to-be-named objects, it was appropriate to use previews that were most likely to cause a preview benefit that was not solely due to visual properties of the object.

We investigated naming latencies on a subset of the subjects from Experiment 2. There were no effects of our manipulation on naming latencies. We expect that this lack of an effect could be, for the most part, due to the target object being named after the first object, which could influence naming latencies in that the subject cannot start to say the name of the second object before they have finished naming the first.

For descriptions of these measures see http://crl.ucsd.edu/experiments/ipnp/method/getdata/uspnovariables.html

There is no consensus thus far in the literature as to what degrees of freedom to use; but because the data set is fairly large, a t-value of 2 or greater can be taken to indicate statistical significance.

Additionally, Experiments 2 and 3 used a 20 in Sony Trinitron CRT monitor with a 1280 × 1024 pixel resolution and an 85 Hz refresh rate.

References

- Baayen R, Davidson D, Bates D. Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language. 2008;59:390–412.

- Bates E, D’Amico S, Jacobsen T, Szekely A, Andonova E, Devescovi A, Herron D, Lu CC, Pechmann T, Pleh C, Wicha N, Federmeier K, Gerdjikova I, Gutierrez G, Hung D, Hsu J, Iyer G, Kohnert K, Mehotcheva T, Orozco-Figueroa A, Tzeng A, Tzeng O. Timed picture naming in seven languages. Psychonomic Bulletin & Review. 2003;10:344–380. doi: 10.3758/bf03196494.

- Bates D, Maechler M. lme4: Linear mixed-effects models using S4 classes. R package version 0.999375–32. 2009 http://CRAN.R-project.org/package=lme4.

- Glaser WR, Glaser MO. Context effects on Stroop-like word and picture processing. Journal of Experimental Psychology: General. 1989;118:13–42. doi: 10.1037//0096-3445.118.1.13.

- Griffin Z. Gaze durations during speech reflect word selection and phonological encoding. Cognition. 2001;82:B1–B14. doi: 10.1016/s0010-0277(01)00138-x.

- Harley TA. A critique of top-down independent levels models of speech production: Evidence from non-plan-internal speech errors. Cognitive Science. 1984;8:191–219.

- Henderson J, Siefert A. The influence of enantiomorphic transformation on transsaccadic object integration. Journal of Experimental Psychology: Human Perception and Performance. 1999;25:243–255.

- Henderson J, Siefert A. Types and tokens in transsaccadic object identification: Effects of spatial position and left-right orientation. Psychonomic Bulletin & Review. 2001;8:753–760. doi: 10.3758/bf03196214.

- Hollingworth A, Rasmussen IP. Binding objects to locations: the relationship between object files and visual working memory. Journal of Experimental Psychology: Human Perception and Performance. 2010;36:543–564. doi: 10.1037/a0017836.

- Humphreys GW, Lloyd-Jones TJ, Fias W. Semantic interference effects on naming using a postcue procedure: Tapping the links between semantics and phonology with pictures and words. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1995;21:961–980.

- Jescheniak J, Oppermann F, Hantsch A, Wagner V, Madebach A, Schriefers H. Do perceived context pictures automatically activate their phonological code? Experimental Psychology. 2009;56:56–65. doi: 10.1027/1618-3169.56.1.56.

- Madebach A, Jescheniak J, Oppermann F, Schriefers H. Ease of Processing constrains the activation flow in the conceptual–lexical system during speech planning. Annual Meeting of the Psychonomic Society; St. Louis, MO, USA. 2010.

- Malpass D, Meyer A. The time course of name retrieval during multiple-object naming: Evidence from extrafoveal-on-foveal effects. Journal of Experimental Psychology: Learning, Memory and Cognition. 2010;36:523–537. doi: 10.1037/a0018522.

- Meyer A. The use of eye tracking in studies of sentence generation. In: Henderson JM, Ferreira F, editors. The interface of language, vision, and action: Eye movements and the visual world. New York: Psychology Press; 2004. pp. 191–211.

- Meyer A, Damian M. Activation of distractor names in the picture–picture interference paradigm. Memory and Cognition. 2007;35:494–503. doi: 10.3758/bf03193289.

- Meyer A, Dobel C. Application of eye tracking in speech production research. In: Hyönä J, Radach R, Deubel H, editors. The mind’s eye: Cognitive and applied aspects of eye movement research. Amsterdam: North Holland; 2003. pp. 253–272.

- Meyer A, Ouellet M, Hacker C. Parallel processing of objects in a naming task. Journal of Experimental Psychology: Learning, Memory and Cognition. 2008;34:982–987. doi: 10.1037/0278-7393.34.4.982.

- Meyer A, Sleiderink A, Levelt W. Viewing and naming objects: eye movements during noun phrase production. Cognition. 1998;66:B25–B33. doi: 10.1016/s0010-0277(98)00009-2.

- Meyer A, van der Meulen F. Phonological priming effects on speech onset latencies and viewing times in object naming. Psychonomic Bulletin & Review. 2000;7:314–319. doi: 10.3758/bf03212987.

- Morgan J, Meyer A. Processing of extrafoveal objects during multiple-object naming. Journal of Experimental Psychology: Learning Memory and Cognition. 2005;31:428–442. doi: 10.1037/0278-7393.31.3.428.

- Morgan J, van Elswijk G, Meyer A. Extrafoveal processing of objects in a naming task: Evidence from word probe experiments. Psychonomic Bulletin & Review. 2008;15:561–565. doi: 10.3758/pbr.15.3.561.

- Morsella E, Miozzo M. Evidence for a cascade model of lexical access in speech production. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2002;28:555–563.

- Navarrete E, Costa A. Phonological activation of ignored pictures: Further evidence for a cascade model of lexical access. Journal of Memory and Language. 2005;53:359–377.

- Oppermann F, Jescheniak J, Schriefers H. Conceptual coherence affects phonological activation of context objects during object naming. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2008;34:587–601. doi: 10.1037/0278-7393.34.3.587.

- Oppermann F, Jescheniak J, Schriefers H, Görges F. Semantic relatedness among objects promotes the activation of multiple phonological codes during object naming. Quarterly Journal of Experimental Psychology. 2010;63:356–370. doi: 10.1080/17470210902952480.

- Pashler H. The Psychology of Attention. Cambridge, MA: MIT Press; 1999.

- Pollatsek A, Rayner K, Collins W. Integrating pictorial information across eye movements. Journal of Experimental Psychology: General. 1984;113:426–442. doi: 10.1037//0096-3445.113.3.426.

- Pollatsek A, Rayner K, Henderson J. Role of spatial location in integration of pictorial information across saccades. Journal of Experimental Psychology: Human Perception & Performance. 1990;16:199–210. doi: 10.1037//0096-1523.16.1.199.

- Rayner K. The perceptual span and peripheral cues in reading. Cognitive Psychology. 1975;7:65–81.

- Schotter E. Eye movements as an index of linguistic processing in language production. Journal of Psychology and Behavior. 2011;9:16–23.

- Strijkers K, Holcomb P, Costa A. Conscious intention to speak proactively facilitates lexical access during overt object naming. Journal of Memory and Language. 2011;65:345–362. doi: 10.1016/j.jml.2011.06.002.

- Tipper S. The negative priming effect: Inhibitory priming by ignored objects. Quarterly Journal of Experimental Psychology. 1985;37:571–590. doi: 10.1080/14640748508400920.

- Van der Meulen F, Meyer A, Levelt W. Eye movements during the production of nouns and pronouns. Memory & Cognition. 2001;29:512–521. doi: 10.3758/bf03196402.

- Zelinsky G, Murphy G. Synchronizing visual and language processing: An effect of object name length on eye movements. Psychological Science. 2000;11:125–131. doi: 10.1111/1467-9280.00227.