Abstract

Adaptive behaviors increase the likelihood of survival and reproduction and improve the quality of life. However, it is often difficult to identify optimal behaviors in real life due to the complexity of the decision maker’s environment and social dynamics. As a result, although many different brain areas and circuits are involved in decision making, evolutionary and learning solutions adopted by individual decision makers sometimes produce suboptimal outcomes. Although these problems are exacerbated in numerous neurological and psychiatric disorders, their underlying neurobiological causes remain incompletely understood. In this review, theoretical frameworks in economics and machine learning and their applications in recent behavioral and neurobiological studies are summarized. Examples of such applications in clinical domains are also discussed for substance abuse, Parkinson’s disease, attention-deficit/hyperactivity disorder, schizophrenia, mood disorders, and autism. Findings from these studies have begun to lay the foundations necessary to improve diagnostics and treatment for various neurological and psychiatric disorders.

Keywords: reinforcement learning, default network, impulsivity, addiction, Parkinson’s disease, schizophrenia, autism, depression, anxiety

Introduction

Decision making is an abstract term referring to the process of selecting a particular option among a set of alternatives expected to produce different outcomes. Accordingly, it can be used to describe an extremely broad range of behaviors, ranging from the various taxes (directed movements) of unicellular organisms to complex political behaviors in human society. Until recently, two different approaches have dominated the study of decision making. On the one hand, a normative or prescriptive approach addresses the question of what the best or optimal choice is for a given type of decision-making problem. For example, the principle of utility maximization in economics and the concept of equilibrium in game theory describe how self-interested rational agents should behave individually or in a group, respectively (von Neumann and Morgenstern, 1944). On the other hand, the actual behaviors of humans and animals seldom match the predictions of such normative theories. Thus, empirical studies seek to identify a set of principles that can parsimoniously account for the actual choices of humans and animals. For example, prospect theory (Kahneman and Tversky, 1979) predicts the decisions not only of humans but also of other animals more accurately than normative theories do (Brosnan et al., 2007; Lakshminarayanan et al., 2008; Santos and Hughes, 2009). Similarly, empirical studies have demonstrated that humans often behave altruistically, and thus deviate from the predictions of classical game theory (Camerer, 2003).

Recently, these two traditional approaches to decision-making research have merged with two additional disciplines. First, it is now increasingly appreciated that learning plays an important role in decision making, although most economic theories have ignored it. In particular, reinforcement learning theory, rooted in psychological theories of animal learning (Mackintosh, 1974) and in optimal control theory (Bellman, 1957), provides a valuable framework for modeling how decision-making strategies are tuned by experience (Sutton and Barto, 1998). Second, and more importantly for the purpose of this review, researchers have begun to elucidate a number of core mechanisms in the brain responsible for various computational steps of decision making and reinforcement learning (Wang, 2008; Kable and Glimcher, 2009; Lee et al., 2012). Not surprisingly, economic and reinforcement learning theories now feature frequently in the neuroscience literature and play an essential role in contemporary research on the neural basis of decision making (Glimcher et al., 2009). Given the wide range of decision-making problems, this neuroeconomic research has also found applications in many disciplines in the humanities and social sciences, including ethics (Farah, 2005), law (Zeki and Goodenough, 2004), and political science (Kato et al., 2009).

An important lesson from neurobiological research on decision making is that actions are chosen through coordination among multiple brain systems, each implementing a distinct set of computational algorithms (Dayan et al., 2006; Rangel et al., 2008; Lee et al., 2012; van der Meer et al., 2012; Delgado and Dickerson, 2012). As a result, aberrant and maladaptive decision making is common in many different types of neurological and psychiatric disorders. Nevertheless, psychiatric conditions are still diagnosed and treated according to schemes largely based on symptom clustering (Hyman, 2007; Sharp et al., 2012). Thus, as the neural underpinnings of decision making are better elucidated, this knowledge has increasing potential to revolutionize the diagnosis and treatment of neurological and psychiatric disorders (Kishida et al., 2010; Maia and Frank, 2011; Hasler, 2012; Montague et al., 2012; Redish, 2013).

A main purpose of this review is to illustrate how recent applications of computational and neuroeconomic research on decision making have provided new insights that improve the characterization of various neurological and psychiatric disorders. To this end, the main theoretical frameworks used in neuroeconomic research, such as prospect theory and reinforcement learning theory, are briefly described. Next, our current knowledge of the neural systems involved in valuation and reinforcement learning is summarized. I then discuss how these neuroeconomic approaches have begun to reshape our understanding of the neurobiological changes associated with different types of neurological and psychiatric disorders. The paper concludes with several suggestions for future research.

Economic Decision Making

Decision Making under Risk

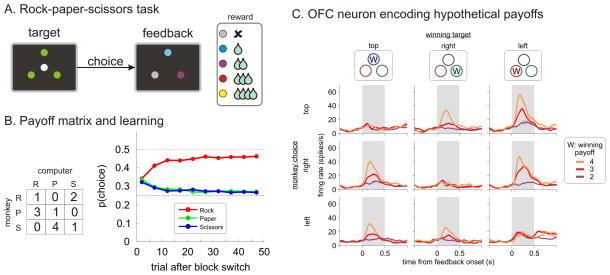

In economics, utility refers to the strength of a decision maker’s preference for a particular option. When the preferences of a decision maker among different outcomes satisfy a certain set of properties, such as transitivity, the utility of a given option can be expressed as a real number. In addition, when the outcomes of a choice are uncertain, its utility can be computed as the average of the utilities of the different outcomes weighted by their probabilities; this quantity is referred to as expected utility (von Neumann and Morgenstern, 1944). In this framework, the shape of the utility function determines the decision maker’s attitude towards uncertainty or risk. For example, when the utility function increases linearly with the quantity of a particular good, such as money, the decision maker would be indifferent between receiving x for certain and a gamble that yields either 2x or nothing with equal probabilities. Such a decision maker is referred to as risk-neutral. In contrast, when the utility function is strictly concave (for example, when it has a negative second derivative) and the utility of getting nothing is zero, the utility of getting 2x is less than twice the utility of getting x. The expected utility of the same gamble is therefore lower than the utility of the sure amount x, so this person would avoid the gamble and is referred to as risk-averse (Figure 1A).
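The risk-neutral and risk-averse cases can be checked numerically. The sketch below is illustrative only: the square-root function is a hypothetical stand-in for any strictly concave utility with u(0) = 0, and the amount x = 100 is arbitrary.

```python
import math
from typing import Callable, Sequence

def expected_utility(outcomes: Sequence[float], probs: Sequence[float],
                     utility: Callable[[float], float]) -> float:
    """Probability-weighted average of the utilities of the possible outcomes."""
    return sum(p * utility(x) for x, p in zip(outcomes, probs))

def linear(x: float) -> float:
    return x              # risk-neutral: utility proportional to the amount

def concave(x: float) -> float:
    return math.sqrt(x)   # hypothetical strictly concave utility with u(0) = 0

x = 100.0
for name, u in (("linear", linear), ("concave", concave)):
    sure = expected_utility([x], [1.0], u)                  # x for certain
    gamble = expected_utility([2 * x, 0.0], [0.5, 0.5], u)  # 2x or nothing, 50/50
    print(f"{name}: sure = {sure:.2f}, gamble = {gamble:.2f}")
```

With the linear utility the two options have the same expected utility, whereas with the concave utility the gamble scores lower than the sure amount, which is the signature of risk aversion.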

Figure 1.

Models of decision making. A. Utility functions with different types of risk preference. B. Value functions in prospect theory. Solid (dotted) line shows the value function with (without) loss aversion. C. Exponential vs. hyperbolic temporal discount functions. D. Weights assigned to the previous outcomes at different time lags according to two different learning rates in a model-free reinforcement learning algorithm.

A decision maker whose choices are consistent with the principle of maximizing expected utility is considered rational, regardless of his or her attitude towards risk. Therefore, for rational decision makers, only the probabilities and utilities of different outcomes should influence their choices. However, the choices of human decision makers are influenced by other contextual factors, such as the status quo, and different outcomes are weighted by quantities only loosely related to their probabilities. In prospect theory (Kahneman and Tversky, 1979), the desirability of a decision outcome is determined by its deviation from a reference point. The precise location of the reference point can change depending on how the alternative options are described, and gains and losses relative to this reference point are evaluated differently by the so-called value function (Figure 1B). The term “value” is used more loosely than “utility”, and applies even when preferences do not satisfy the formal requirements for a utility function. In prospect theory, the value function is concave for gains and convex for losses, accounting for the empirical finding that humans are risk-averse for gains and risk-seeking for losses. Namely, most people would prefer a sure gain of $1,000 to a 50% chance of gaining $2,000, but would prefer a 50% chance of losing $2,000 to a sure loss of $1,000. In addition, the slope of the value function near the reference point is approximately twice as steep for losses as for gains. This accounts for the fact that humans are often more sensitive to a loss than to a gain of the same magnitude, which is referred to as loss aversion (Tversky and Kahneman, 1992). Another limitation of expected utility theory is that in real life, the exact probabilities of the different outcomes of a particular choice are often unknown. This type of uncertainty is referred to as ambiguity, and the term ambiguity aversion is often used to describe the tendency to avoid an option for which the exact probabilities of different outcomes are not known (Camerer and Weber, 1992).
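A minimal sketch of a prospect-theory value function reproduces the gain/loss asymmetry described above. It assumes a power-function form with the commonly cited estimates from Tversky and Kahneman (1992), alpha = beta = 0.88 and loss-aversion coefficient lam = 2.25, and, as a simplification, it uses raw probabilities instead of a probability weighting function.

```python
def pt_value(x: float, alpha: float = 0.88, beta: float = 0.88, lam: float = 2.25) -> float:
    """Value of a gain or loss x measured from the reference point at x = 0."""
    if x >= 0:
        return x ** alpha            # concave for gains
    return -lam * (-x) ** beta       # convex and steeper for losses (loss aversion)

def prospect_value(outcomes, probs):
    # Simplification: probabilities enter linearly (no probability weighting).
    return sum(p * pt_value(x) for x, p in zip(outcomes, probs))

sure_gain = prospect_value([1000], [1.0])
risky_gain = prospect_value([2000, 0], [0.5, 0.5])
sure_loss = prospect_value([-1000], [1.0])
risky_loss = prospect_value([-2000, 0], [0.5, 0.5])

print("prefers sure gain:", sure_gain > risky_gain)     # risk-averse for gains
print("prefers risky loss:", risky_loss > sure_loss)    # risk-seeking for losses
print("loss looms larger:", abs(pt_value(-100)) > pt_value(100))
```

Because alpha equals beta here, the ratio |v(-x)| / v(x) equals lam for every x, which is one simple way to express that losses weigh roughly twice as much as equal gains.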

Intertemporal Choice

For practically all decisions made in real life, the rewards from chosen actions become available only after some delay. Faced with a choice between a small but immediate reward and a larger but more delayed reward, humans and animals tend to prefer the smaller reward if the difference in reward magnitude is sufficiently small or if the delay for the larger reward is sufficiently long. This implies that the utility of a delayed reward decreases with the duration of its delay. Formally, a discount function is defined as the ratio of the utility of a delayed reward to that of the same reward delivered without any delay (Figure 1C). A discount function that decays steeply with reward delay corresponds to an impatient or impulsive decision maker who assigns a large weight to an immediate reward. A variety of mathematical functions have been proposed as discount functions, including the exponential function (Samuelson, 1937). An important property of an exponential discount function is that the rate of discounting per unit time is constant. This implies that exponential discounting is time-consistent: when a decision maker prefers one of two rewards expected after unequal delays, the mere passage of time does not alter this preference. However, the majority of empirical studies in humans and animals have found that the discount rate decreases as the delay increases (Mazur, 2000). This pattern is captured more accurately by hyperbolic or quasi-hyperbolic discount functions (Green and Myerson, 2004; Phelps and Pollak, 1968; Laibson, 1997; Hwang et al., 2009; Figure 1C). Compared with exponential discount functions, hyperbolic discount functions imply that decision makers are particularly attracted to an immediate reward (Figure 1C), although the same person might prefer the larger and more delayed reward if an equal additional delay is added to both options. This is referred to as preference reversal (Strotz, 1955–1956).
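Preference reversal under hyperbolic, but not exponential, discounting can be demonstrated with a few lines of code. The sketch assumes the common forms D(t) = exp(-kt) and D(t) = 1/(1 + kt); the reward sizes, delays, and the rate k = 0.5 are hypothetical.

```python
import math

def exponential_discount(delay, k):
    """D(t) = exp(-k t): the discount rate per unit time is constant."""
    return math.exp(-k * delay)

def hyperbolic_discount(delay, k):
    """D(t) = 1 / (1 + k t): the discount rate declines as the delay grows."""
    return 1.0 / (1.0 + k * delay)

def prefers_larger_later(small, t_small, large, t_large, discount, k):
    """True if the discounted larger-later reward exceeds the discounted smaller-sooner one."""
    return large * discount(t_large, k) > small * discount(t_small, k)

# Hypothetical choice: 5 units after `extra` steps vs. 10 units after 10 + `extra` steps.
for extra in (0, 20):  # the same additional delay is added to both options
    for name, fn in (("exponential", exponential_discount), ("hyperbolic", hyperbolic_discount)):
        later = prefers_larger_later(5, extra, 10, 10 + extra, fn, k=0.5)
        print(f"extra delay {extra:2d}, {name:11s}: {'larger-later' if later else 'smaller-sooner'}")
```

With these numbers the hyperbolic chooser switches from the smaller-sooner to the larger-later reward once 20 steps are added to both delays, while the exponential chooser never switches: adding a common delay multiplies both discounted values by the same factor exp(-k * extra), so their ordering cannot change.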

Although the framework of temporal discounting has been influential, it has some limitations. First, there are cases in which humans display negative time preference; that is, the value of an outcome increases when it is delayed (Loewenstein, 1987). For example, some people prefer to receive a kiss from their favorite movie star after a delay rather than immediately, suggesting that they might derive some satisfaction from anticipating pleasurable future outcomes. Similarly, some people prefer to experience painful events sooner rather than later (Berns et al., 2006). Second, when people choose between two different sequences of outcomes, the overall utility of a particular sequence may not correspond to the sum of the discounted utilities of its individual outcomes. Instead, human decision makers tend to prefer sequences of outcomes that improve over time (Loewenstein and Prelec, 1993).

Neural Encoding of Utilities and Values

Brain areas involved in decision making must contain signals related to utilities or values of alternative options. A number of studies using neuroimaging and single-neuron recording methods have found that such quantities are encoded in multiple brain regions during decision making involving uncertain outcomes. For example, neurons modulating their activity according to the expected value of reward available from a particular target location are found in the basal ganglia (Samejima et al., 2005), posterior parietal cortex (Platt and Glimcher, 1999; Dorris and Glimcher, 2004; Sugrue et al., 2004; Seo et al., 2009; Louie et al., 2011), premotor cortex (Pastor-Bernier and Cisek, 2011), and medial prefrontal cortex (Sohn and Lee, 2007; Seo and Lee, 2009; So and Stuphorn, 2010). Many of these brain areas might in fact encode the signals related to utilities of rewards expected from specific actions, even when the probabilities and timing of rewards vary. For example, temporally discounted values are encoded by neurons in the prefrontal cortex (Kim et al., 2008), posterior parietal cortex (Louie and Glimcher, 2010), and the striatum (Cai et al., 2011).

Human neuroimaging experiments have also identified signals related to utilities in multiple brain areas, including the ventromedial prefrontal cortex (VMPFC) and ventral striatum (Kuhnen and Knutson, 2005; Knutson et al., 2005; Knutson et al., 2007; Luhmann et al., 2008; Chib et al., 2009; Levy et al., 2011). Consistent with the results of single-neuron recording studies (Sohn and Lee, 2007), signals related to the values of rewards expected from specific motor actions have been identified in the human supplementary motor area (Wunderlich et al., 2009). Activity in the VMPFC and ventral striatum displays additional characteristics of value signals used for decision making. For example, activity in each of these areas is influenced in opposite directions by expected gains and losses. In addition, activity in these areas is more sensitive to expected losses than to expected gains, and this asymmetry is related to individual differences in loss aversion (Tom et al., 2007). Activity in the VMPFC and ventral striatum also reflects the temporally discounted values of delayed rewards during intertemporal choice (Kable and Glimcher, 2007; Pine et al., 2009). Results from neuroimaging and lesion studies also suggest that the amygdala might play a role in estimating value functions for potential losses. For example, activity in the amygdala changes according to whether a particular outcome is framed as a gain or a loss (De Martino et al., 2006), and loss aversion is abolished in patients with focal lesions of the amygdala (De Martino et al., 2010).

Whether decisions are based on values computed for specific goods or for their locations, and which brain areas encode the value signals actually used for action selection, might vary depending on the nature of the choice (Lee et al., 2012). The dorsolateral prefrontal cortex (DLPFC) might contribute to flexible switching between different types of value signals used for decision making. This is plausible because the DLPFC is connected with many other brain areas that encode different types of value signals (Petrides and Pandya, 1984; Carmichael and Price, 1996; Miller and Cohen, 2001). In addition, individual neurons in the DLPFC can modulate their activity according to value signals associated with specific objects as well as with their locations (Kim et al., 2012b). In contrast, neurons in the primate orbitofrontal cortex tend to encode signals related to the utilities assigned to specific goods independently of their locations (Padoa-Schioppa and Assad, 2006).

In addition to the desirability of expected outcomes, the likelihood of choosing a particular action is also influenced by the cost of performing that action. Although the activity of neurons in the orbitofrontal cortex and striatum is often modulated by multiple parameters of reward, signals related to the cost or effort associated with a particular action might be processed preferentially in the anterior cingulate cortex. This possibility is consistent with the results of lesion studies (Walton et al., 2003; Rudebeck et al., 2006), as well as single-neuron recording and neuroimaging studies (Croxson et al., 2009; Kennerley et al., 2009; Prévost et al., 2010; Hillman and Bilkey, 2010). However, precisely how the information about the benefits and costs of different options is integrated in the brain remains poorly understood (Rushworth et al., 2011).

Reinforcement Learning

Multiple Systems for Reinforcement Learning

In most economic decision-making experiments conducted in laboratories, subjects select from a small number of options with relatively well-characterized outcomes. By contrast, choices made in real life are more complex, and it is often necessary to adjust our decision-making strategies through experience. First, the likelihood that a particular action will be chosen again changes depending on whether its previous outcome was reinforcing or punishing (Thorndike, 1911). Second, new information about the regularities in our environment can be used to improve the outcomes of our choices, even when this information is not directly related to rewards or penalties (Tolman, 1948). Reinforcement learning theory provides a powerful framework to formalize how these two different kinds of information can modify the values associated with alternative actions (Sutton and Barto, 1998). In this framework, it is usually assumed that the decision maker knows the current state of his or her environment, and that this state alone determines the outcome of each action as well as the probability distribution of future states. An environment with this property is referred to as Markovian.

In reinforcement learning theory, a value function corresponds to the decision maker’s subjective estimate of the long-term benefits expected from being in a particular state or from taking a particular action in a particular state. These two types of value functions are referred to as state and action value functions, respectively. Action value functions in reinforcement learning theory play a role similar to that of utilities in economics, but there are two main differences. First, value functions are only estimates, since they are continually adjusted according to the decision maker’s experience. Second, value functions are related to choices only probabilistically, so that actions with lower estimated values are still chosen occasionally. Such apparently suboptimal behavior can be beneficial, because sampling these actions can eventually increase the accuracy of their value functions, thereby providing a potential solution to the exploration-exploitation dilemma (Sutton and Barto, 1998).
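The probabilistic link between action values and choices is often modeled with a softmax rule. The sketch below assumes that rule, which is one common choice among several (epsilon-greedy selection is another); the action values and the inverse-temperature parameter beta are hypothetical.

```python
import math

def softmax(values, beta):
    """Choice probabilities from action values; beta is the inverse temperature."""
    m = max(values)                                    # subtract the max for numerical stability
    exps = [math.exp(beta * (v - m)) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

q = [1.0, 0.5]                # hypothetical action values
print(softmax(q, beta=0.0))   # [0.5, 0.5]: values ignored, pure exploration
print(softmax(q, beta=2.0))   # about [0.73, 0.27]: better option favored but not always chosen
print(softmax(q, beta=50.0))  # nearly deterministic choice of the better option
```

Intermediate values of beta keep the lower-valued action in play, which is what allows its value estimate to keep improving.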

Value functions can be estimated according to several different algorithms, which might be implemented by different anatomical substrates in the brain (Daw et al., 2005; Dayan et al., 2006; van der Meer et al., 2012). These algorithms correspond to distinct forms of learning described in animal learning theory. First, a sensory stimulus (conditioned stimulus, CS) that reliably predicts an appetitive or aversive outcome (unconditioned stimulus, US) eventually acquires the ability to evoke a predetermined behavioral response (conditioned response, CR) similar to the response originally triggered by the predicted stimulus (unconditioned response, UR; Mackintosh, 1974). The strength of this association can be referred to as the Pavlovian value of the CS (Dayan, 2006). Second, during instrumental model-free reinforcement learning, or simply habit learning, the value function corresponds to the value of the appetitive or aversive outcome expected from an arbitrary action or its antecedent cues. Computationally, both types of learning can be described with a simple temporal difference (TD) learning algorithm, analogous to the Rescorla-Wagner rule (Rescorla and Wagner, 1972). In both cases, value functions are adjusted according to the difference between the actual outcome and the outcome expected from the current value functions. This difference is referred to as the reward prediction error. In Pavlovian learning, the value function is updated for the action predetermined by the US, whereas in habit learning, it is updated for whichever arbitrary action the decision maker has chosen (Dayan et al., 2006). The rate at which the reward prediction error is incorporated into the value function is controlled by a learning rate. A small learning rate allows the decision maker to integrate the outcomes of previous actions over a long time scale, whereas a large learning rate weights recent outcomes more heavily (Figure 1D). Learning rates can also be adjusted according to the stability of the decision-making environment (Behrens et al., 2007; Bernacchia et al., 2011).
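The role of the learning rate can be made concrete with a trial-level, Rescorla-Wagner-style update, which is the special case of TD learning without a next-state term. This is a minimal sketch with a hypothetical outcome sequence; it shows that, starting from a value of zero, repeated updates are equivalent to weighting the outcome j trials back by alpha * (1 - alpha)**(j - 1), the exponential kernels of the kind plotted in Figure 1D.

```python
def delta_rule(value, reward, alpha):
    """Move the value toward the outcome by a fraction alpha of the reward prediction error."""
    rpe = reward - value                 # reward prediction error
    return value + alpha * rpe, rpe

def outcome_weights(alpha, n_lags):
    """Weight on the outcome j trials back (j = 1 is the most recent trial)."""
    return [alpha * (1.0 - alpha) ** (j - 1) for j in range(1, n_lags + 1)]

rewards = [1, 0, 1, 1, 0, 1]             # hypothetical outcomes, oldest first
for alpha in (0.1, 0.5):
    v = 0.0
    for r in rewards:
        v, _ = delta_rule(v, r, alpha)
    # Same number obtained as an exponentially weighted sum of past outcomes.
    weighted = sum(w * r for w, r in zip(outcome_weights(alpha, len(rewards)), reversed(rewards)))
    print(f"alpha={alpha}: value={v:.4f}, weighted sum={weighted:.4f}, "
          f"first weights={[round(w, 3) for w in outcome_weights(alpha, 4)]}")
```

A small alpha spreads weight thinly over many past trials, while a large alpha concentrates it on the last few outcomes.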

Finally, when humans and animals acquire new information about the properties of their environment, this knowledge can be used to update the value functions for some actions and to improve decision-making strategies without experiencing the actual outcomes of those actions (Tolman, 1948). This is referred to as model-based reinforcement learning, since the value functions are updated by simulating the outcomes expected from various actions using the decision maker’s internal or mental model of the environment (Sutton and Barto, 1998; Doll et al., 2012). Formally, the decision maker’s knowledge or model of the environment can be captured by transition probabilities, namely the probabilities that the environment switches from one state to another after each action (Sutton and Barto, 1998). Therefore, when the estimates of these transition probabilities are revised, the likelihood of the different outcomes expected from various actions can be recalculated, even if the outcomes associated with all the states remain unchanged (Packard and McGaugh, 1996). Similarly, if the subjective values of specific outcomes change as a result of selective feeding or taste aversion, the value functions for actions leading to those outcomes can be revised without directly experiencing them (Holland and Straub, 1979; Dickinson, 1985). Consequently, the choices predicted by model-free and model-based reinforcement learning algorithms, as well as their underlying neural mechanisms, might differ.
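Both kinds of revision described above can be sketched with a one-step model: action values are recomputed from transition probabilities and outcome values, so they change as soon as either is revised. The task, state names, and numbers below are hypothetical, and the "cached" model-free values are simply a frozen copy standing in for habit values learned before the change.

```python
def model_based_values(transitions, outcome_values):
    """Action value = expected value of the outcome states each action leads to."""
    return {
        action: sum(p * outcome_values[state] for state, p in next_states.items())
        for action, next_states in transitions.items()
    }

# Hypothetical task: each lever usually produces a different food.
transitions = {
    "lever_left": {"food_A": 0.9, "nothing": 0.1},
    "lever_right": {"food_B": 0.9, "nothing": 0.1},
}
outcome_values = {"food_A": 1.0, "food_B": 1.0, "nothing": 0.0}

before = model_based_values(transitions, outcome_values)
cached_mf = dict(before)   # stand-in for model-free values; unchanged until new outcomes are experienced

# Outcome devaluation (e.g., taste aversion to food_A): model-based values change immediately.
outcome_values["food_A"] = 0.0
after_devaluation = model_based_values(transitions, outcome_values)

# Revised knowledge of transitions: the right lever now pays off only half the time.
transitions["lever_right"] = {"food_B": 0.5, "nothing": 0.5}
after_revision = model_based_values(transitions, outcome_values)

print(before, after_devaluation, after_revision, cached_mf, sep="\n")
```

The model-free copy still favors the left lever after devaluation, which is the kind of divergence between the two systems that devaluation experiments are designed to expose.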

Neural Substrates of Model-free Reinforcement Learning

As described above, errors in predicting affective outcomes, namely reward prediction errors, are postulated to drive model-free reinforcement learning, including both Pavlovian conditioning and habit learning. An important clue to the neural mechanism of reinforcement learning was therefore provided by the observation that the phasic activity of midbrain dopamine neurons encodes the reward prediction error (Schultz, 1998). Dopamine neurons innervate many different targets in the brain, including the cerebral cortex (Lewis et al., 2001), striatum (Bolam et al., 2000; Nicola et al., 2000), and amygdala (Sadikot and Parent, 1990). In particular, the amygdala might be involved in both fear conditioning (LeDoux, 2000) and appetitive Pavlovian conditioning (Hatfield et al., 1996; Parkinson et al., 2000; Paton et al., 2006). The induction of synaptic plasticity in the amygdala that underlies Pavlovian conditioning might depend on the activation of dopamine receptors (Guarraci et al., 1999; Bissière et al., 2003). In addition, the ventral striatum also contributes to several different forms of appetitive Pavlovian conditioning, such as auto-shaping, conditioned place preference, and second-order conditioning (Cardinal et al., 2002).
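In the TD account of these dopamine findings, the prediction error shifts with training from the time of reward delivery to the onset of a reward-predicting CS. The sketch below reproduces that qualitative pattern under explicit assumptions: one value per time step after the CS (a tapped-delay-line representation), a CS that arrives unpredictably so that pre-CS states keep a value of zero, and hypothetical trial timing, learning rate alpha, and discount factor gamma. It illustrates the algorithm and is not a fit to any recordings.

```python
N_STEPS, CS_STEP, REWARD_STEP = 10, 3, 7   # hypothetical trial timing, in time steps

def td_trials(n_trials, alpha=0.2, gamma=1.0):
    """Run TD(0) over repeated identical trials; return the TD error at each step of each trial."""
    values = [0.0] * (N_STEPS + 1)   # values[N_STEPS] is a terminal state fixed at 0
    history = []
    for _ in range(n_trials):
        errors = []
        for t in range(N_STEPS):
            reward = 1.0 if t == REWARD_STEP else 0.0
            delta = reward + gamma * values[t + 1] - values[t]   # reward prediction (TD) error
            # Pre-CS states are never updated: the CS arrives unpredictably, so they cannot predict reward.
            if t >= CS_STEP:
                values[t] += alpha * delta
            errors.append(delta)
        history.append(errors)
    return history

history = td_trials(300)
CS_ONSET = CS_STEP - 1   # error on the transition from the last pre-CS step into the CS
print("trial 1:   error at CS onset", history[0][CS_ONSET], "| at reward", history[0][REWARD_STEP])
print("trial 300: error at CS onset", round(history[-1][CS_ONSET], 3),
      "| at reward", round(history[-1][REWARD_STEP], 3))
```

Early in training the error occurs only when the reward arrives; after training the CS itself produces the error and the fully predicted reward produces almost none.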

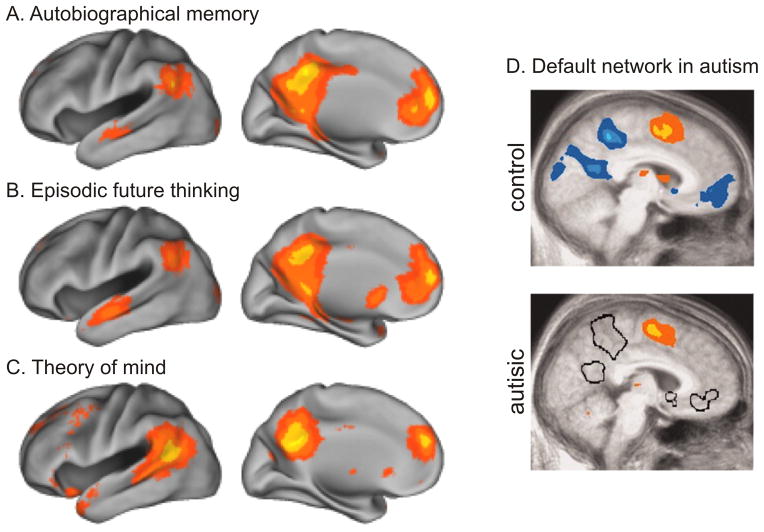

Given the broader range of actions controlled by habit learning, its anatomical substrates might be more extensive than those of Pavlovian conditioning, and are likely to span both cortical and subcortical areas. Nevertheless, the striatum has received much attention because of its dense innervation by dopamine neurons (Houk et al., 1995). The striatum integrates inputs from almost all cortical areas and influences the activity of neurons in motor structures, such as the superior colliculus and pedunculopontine nucleus, largely through disinhibitory mechanisms (Chevalier and Deniau, 1990; Mink, 1996). In addition, striatal neurons in the direct and indirect pathways predominantly express D1 and D2 dopamine receptors, respectively, and might influence the outputs of the basal ganglia antagonistically (Kravitz et al., 2010; Tai et al., 2012; but see Cui et al., 2013). Dopamine-dependent, bidirectional modulation of corticostriatal synapses might provide the biophysical substrate for integrating reward prediction error signals into value functions in the striatum (Shen et al., 2008; Pawlak and Kerr, 2008; Wickens, 2009). Indeed, neurons in the striatum often encode the value functions for specific actions (Samejima et al., 2005; Lau and Glimcher, 2008; Cai et al., 2011; Kim et al., 2009, 2013). In addition, signals necessary for updating the value functions, including the value of the chosen action and reward prediction errors, are also found in the striatum (Kim et al., 2009; Oyama et al., 2010; Asaad and Eskandar, 2011). Moreover, the dorsolateral striatum, or putamen, might be particularly involved in controlling habitual motor actions (Hikosaka et al., 1999; Tricomi et al., 2009). Although the striatum is most commonly associated with model-free reinforcement learning, additional brain areas are likely to be involved in updating action value functions, depending on the specific type of value function in question. Indeed, signals related to value functions and reward prediction errors are found in many different areas (Lee et al., 2012). Similarly, a multivariate decoding analysis has shown that signals related to rewarding and punishing outcomes can be decoded from the majority of cortical and subcortical areas (Figure 2; Vickery et al., 2011).
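The update signals mentioned above, the value of the chosen action and the reward prediction error computed from it, can be illustrated with a simple Q-learning agent on a two-armed bandit. This is a minimal sketch, not a model of the cited experiments; the reward probabilities, learning rate alpha, inverse temperature beta, trial count, and random seed are all hypothetical.

```python
import math
import random

def softmax_choice(q, beta, rng):
    """Two-option softmax (logistic) choice rule."""
    p0 = 1.0 / (1.0 + math.exp(-beta * (q[0] - q[1])))
    return 0 if rng.random() < p0 else 1

def simulate_bandit(reward_probs=(0.8, 0.2), alpha=0.1, beta=5.0, n_trials=2000, seed=0):
    rng = random.Random(seed)
    q = [0.0, 0.0]      # action values for the two options
    counts = [0, 0]     # how often each option was chosen
    for _ in range(n_trials):
        a = softmax_choice(q, beta, rng)
        r = 1.0 if rng.random() < reward_probs[a] else 0.0
        delta = r - q[a]          # RPE relative to the value of the chosen action
        q[a] += alpha * delta     # only the chosen action's value is updated
        counts[a] += 1
    return q, counts

q, counts = simulate_bandit()
print("action values:", [round(v, 2) for v in q], "choice counts:", counts)
```

Because the unchosen option is never updated, its value estimate improves only when exploration samples it, which links this sketch back to the exploration-exploitation trade-off discussed earlier.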

Figure 2.

Brain areas encoding reward signals during a matching-pennies task, identified with multivoxel pattern analysis (Vickery et al., 2011).

Neural Substrates for Model-based Reinforcement Learning

The neural substrates of model-based reinforcement learning are much less well understood than those of Pavlovian conditioning and habit learning (Doll et al., 2012). This is not surprising, since the computations for simulating possible outcomes, and their neural implementations, might vary widely across decision-making problems. Nevertheless, studies in rodents suggest that separate regions of the frontal cortex and striatum might underlie model-based reinforcement learning (place learning) and habit learning (response learning; Tolman et al., 1946). In particular, lesions in the dorsolateral striatum and infralimbic cortex impair habit learning, whereas lesions in the dorsomedial striatum and prelimbic cortex impair model-based reinforcement learning (Balleine and Dickinson, 1998; Killcross and Coutureau, 2003; Yin and Knowlton, 2006). In addition, lesions or inactivation of the hippocampus suppress strategies based on model-based reinforcement learning (Packard et al., 1989; Packard and McGaugh, 1996).

To update value functions in model-based reinforcement learning, new information about the decision maker’s environment needs to be combined appropriately with previous knowledge. Encoding and updating information about the environment might rely on the prefrontal cortex and posterior parietal cortex (Pan et al., 2008; Gläscher et al., 2010; Jones et al., 2012). Moreover, the persistent activity often observed in these cortical areas is likely to reflect computations related to reinforcement learning and decision making as well as working memory (Kim et al., 2008; Curtis and Lee, 2010). Given that persistent activity in the prefrontal cortex is strongly influenced by dopamine and norepinephrine (Arnsten et al., 2012), prefrontal functions related to model-based reinforcement learning might be regulated by these neuromodulators. The neural mechanisms of the mental simulation needed to estimate the hypothetical outcomes predicted by this new knowledge are also poorly understood, but might involve the hippocampus. For example, when the animal is at a choice point during a maze-learning task, the activity of neurons in the hippocampus briefly represents potential goal locations, which has been interpreted as a neural correlate of mental simulation (Tolman, 1948; Johnson and Redish, 2007). In addition, the orbitofrontal cortex might play an important role in selecting actions according to value functions estimated by model-based reinforcement learning algorithms when the subjective values of expected outcomes change (Izquierdo et al., 2004; Valentin et al., 2007).

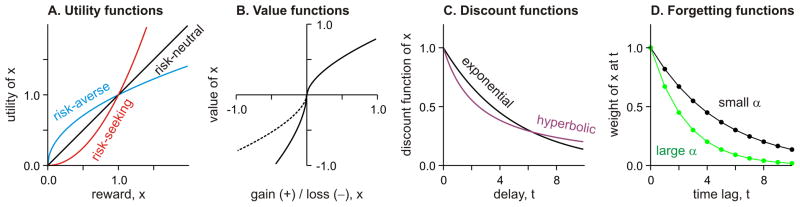

Results from recent neuroimaging and neural recording studies have also shown that the neural substrates involved in updating value functions according to different reinforcement learning algorithms might overlap substantially. For example, reward prediction error signals encoded in the ventral striatum reflect the estimates derived from both model-free and model-based reinforcement learning algorithms (Lohrenz et al., 2007; Daw et al., 2011; Wimmer et al., 2012). Single-neuron recording studies in non-human primates have also found that signals related to actual and simulated outcomes are often encoded by the neurons in the same regions of the prefrontal cortex (Hayden et al., 2009; Abe and Lee, 2011; Figure 3).
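One way such overlap has been modeled is to let choices depend on a weighted mixture of the two estimates, as in the hybrid model of Daw et al. (2011), where a weight w sets the relative contribution of model-based values. The sketch below assumes that mixture followed by a softmax rule; the values, w, and beta are hypothetical and do not come from any fitted data set.

```python
import math

def softmax(values, beta):
    m = max(values)
    exps = [math.exp(beta * (v - m)) for v in values]
    z = sum(exps)
    return [e / z for e in exps]

def hybrid_choice_probs(q_mf, q_mb, w, beta):
    """Choice probabilities from a weighted mix of model-based and model-free values.
    w = 1 is purely model-based; w = 0 is purely model-free."""
    q_net = [w * mb + (1 - w) * mf for mf, mb in zip(q_mf, q_mb)]
    return softmax(q_net, beta)

# Hypothetical values just after option 0's outcome has been devalued: the model-based
# estimate has already dropped, whereas the cached model-free estimate has not.
q_mf = [1.0, 0.5]
q_mb = [0.0, 0.5]
for w in (0.0, 0.5, 1.0):
    print(w, [round(p, 3) for p in hybrid_choice_probs(q_mf, q_mb, w, beta=3.0)])
```

Fitting w to each subject's choices is what allows a single behavioral model to quantify how strongly model-based estimates contribute, alongside model-free ones, to learning signals such as those found in the ventral striatum.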

Figure 3.

Hypothetical outcome signals in the orbitofrontal cortex. A. Visual stimuli displayed during the choice and feedback epochs of a rock-paper-scissors task used in Abe and Lee (2011). Different colors for feedback stimuli were associated with different amounts of juice reward. B. Payoff matrix (left) and changes in choice probabilities (right) during the same task (R, rock; P, paper; S, scissors). Dotted lines correspond to the Nash-equilibrium strategy (0.5 for rock and 0.25 each for paper and scissors). C. Activity of a neuron in the orbitofrontal cortex that encoded the hypothetical outcomes from unchosen actions. Spike density functions are plotted separately according to the position (columns) and payoffs (line colors) of the winning target and the position of the target chosen by the animal (rows).

Mental Simulation and Default Network

Several cognitive processes closely related to episodic memory, such as self-projection, episodic future thinking, mental time travel, and scene construction (Atance and O’Neill, 2001; Tulving, 2002; Hassabis and Maguire, 2007; Corballis, 2013), might be involved in simulating the outcomes of hypothetical actions. Common to all of these processes is the activation of the memory traces relevant for predicting the likely outcomes of potential actions in the present context. In addition, even when possible outcomes are explicitly specified for each option, the process of evaluating the subjective values of each option might still rely on mental simulation. This might be particularly true during intertemporal choice. In fact, imagining a future planned event during intertemporal choice reduces the rate of temporal discounting (Boyer, 2008; Peters and Büchel, 2010).

It has been proposed that the computations involved in episodic future thinking and mental time travel might be implemented in the default network (Buckner and Carroll, 2007). The default network refers to a set of brain areas that increase their activity when subjects are not engaged in a specific cognitive task, such as during intertrial intervals, presumably reflecting activity related to more spontaneous cognitive processes. This network includes the medial prefrontal cortex, posterior cingulate cortex, and medial temporal lobe (Buckner et al., 2008). Mental simulation of hypothetical outcomes might be an important component of such spontaneous cognition. For example, during intertemporal choice, the activity of the posterior cingulate cortex reflects the subjective values of delayed rewards (Kable and Glimcher, 2007). Moreover, activity in the posterior cingulate cortex and hippocampus is higher during intertemporal choice than during a similar decision-making task involving uncertain outcomes without any delays (Luhmann et al., 2008; Ballard and Knutson, 2009). The functional coupling between the hippocampus and the anterior cingulate cortex is also correlated with how much episodic future thinking affects the preference for delayed rewards (Peters and Büchel, 2010).

Social Decision Making

The most complex and challenging forms of decision making take place in a social context (Behrens et al., 2009; Seo and Lee, 2012). During social interactions, outcomes are jointly determined by the actions of multiple decision makers (or players). In game theory (von Neumann and Morgenstern, 1944), a set of strategies chosen by all players is referred to as a Nash equilibrium if none of the players can benefit from changing their strategies unilaterally (Nash, 1950). Classical game-theoretic analyses assume that players pursue only their self-interest and are not limited in their cognitive abilities. In practice, these assumptions are often violated, and choices made by humans tend to deviate from Nash equilibria (Camerer, 2003). Nevertheless, when the same game is played repeatedly, the strategies of decision makers tend to approach the equilibria (Figure 3B). Accordingly, iterative games have often been used in laboratories as a test bed to examine how humans and animals might improve their strategies during social interactions. The results from these studies have demonstrated that both humans and animals apply a combination of model-free and model-based reinforcement learning algorithms (Camerer and Ho, 1999; Camerer, 2003; Lee, 2008; Abe et al., 2011; Zhu et al., 2012).
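The equilibrium condition can be checked directly for a small game. The sketch below assumes matching pennies, chosen only for illustration: the matcher wins +1 when both players pick the same side and loses 1 otherwise, and the game is zero-sum. It tests whether either player could raise their expected payoff by deviating unilaterally from a given mixed-strategy profile.

```python
from itertools import product

# Matching pennies: the row player (matcher) wins +1 on a match and -1 otherwise.
# The game is zero-sum, so the column player's payoff is the negative.
ROW_PAYOFF = {("H", "H"): 1.0, ("H", "T"): -1.0, ("T", "H"): -1.0, ("T", "T"): 1.0}


def expected_row_payoff(p_row_heads: float, p_col_heads: float) -> float:
    """Row player's expected payoff when both players mix independently."""
    row = {"H": p_row_heads, "T": 1.0 - p_row_heads}
    col = {"H": p_col_heads, "T": 1.0 - p_col_heads}
    return sum(row[r] * col[c] * ROW_PAYOFF[(r, c)] for r, c in product("HT", "HT"))


def is_nash(p_row: float, p_col: float, tol: float = 1e-9) -> bool:
    """True if neither player gains by a unilateral deviation.

    Checking pure-strategy deviations suffices, because the payoff of any
    mixed deviation is a weighted average of the pure-strategy payoffs.
    """
    current = expected_row_payoff(p_row, p_col)
    row_best = max(expected_row_payoff(q, p_col) for q in (0.0, 1.0))
    col_best = max(-expected_row_payoff(p_row, q) for q in (0.0, 1.0))
    return row_best <= current + tol and col_best <= -current + tol


print(is_nash(0.5, 0.5), is_nash(0.7, 0.5))  # True False
```

Only the 50/50 mixture for both players passes, which is why, under the classical analysis, equilibrium play in this game should look random and independent of past outcomes.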

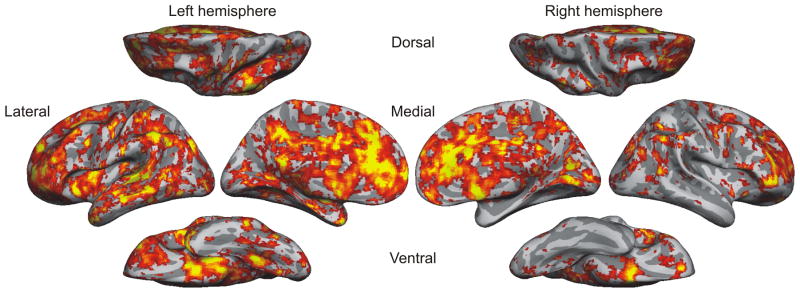

Since the outcomes of social decision making depend on the choices of others, model-based reinforcement learning during social interactions requires accurate models of the strategies used by other decision makers. The ability to make inferences about the knowledge and beliefs of other decision-making agents is referred to as the theory of mind (Premack and Woodruff, 1978; Gallagher and Frith, 2003). Neural signals necessary for updating the models of other players have been identified in brain areas implicated in the theory of mind, such as the dorsomedial prefrontal cortex and superior temporal sulcus (Hampton et al., 2008; Behrens et al., 2008). Interestingly, most cortical areas included in the default network are activated similarly during tasks related to episodic or autobiographical memory, prospection, and theory of mind (Gusnard et al., 2001; Spreng et al., 2009; Spreng and Grady, 2010; Figure 4A–C), although some brain areas might be more specifically related to the theory of mind and other aspects of social cognition (Rosenbaum et al., 2007; Spreng and Grady, 2010; Rabin et al., 2010).

Figure 4.

Functions and dysfunctions of the default network. A–C. Cortical areas activated by the recall of autobiographical memory (A), episodic future thinking (B), and mental simulation of other people’s perspective (C). Reproduced from Buckner et al. (2008). D. Deactivation in the default network (blue, top) is absent in the brains of autistic individuals (black outlines, bottom; Kennedy et al., 2006).

Suboptimal Decision Making in Mental Disorders

Computational and neurobiological studies of decision making have begun to provide much insight into the neural mechanisms that underlie the suboptimal decision-making behaviors observed in various psychiatric and neurological disorders. Because multiple algorithms and brain systems are likely to be combined flexibly according to the demands of specific tasks, the same behavioral deficit could arise from impairments in different components, which makes it challenging to characterize the decision-making deficits of different disorders accurately. Econometric and reinforcement learning models, which decompose choice behavior into separately estimable parameters, are therefore becoming valuable tools in a new area referred to as computational psychiatry (Kishida et al., 2010; Maia and Frank, 2011; Hasler, 2012; Montague et al., 2012; Sharp et al., 2012; Redish, 2013).
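As an illustration of how such models turn choices into interpretable parameters, the sketch below fits the learning rate of a simple model-free Q-learning agent with softmax choice by maximizing the likelihood over a grid. It is a minimal sketch under stated assumptions. There are two options, the inverse temperature `beta` is fixed rather than fitted, and the data come from a hypothetical simulated agent facing reward probabilities of 0.8 and 0.2 that reverse every 100 trials. Real studies usually fit several parameters with numerical optimizers and compare alternative models.

```python
import math
import random


def p_choose_0(q, beta):
    """Softmax (logistic) probability of choosing option 0 over option 1."""
    return 1.0 / (1.0 + math.exp(-beta * (q[0] - q[1])))


def reward_prob(option, trial, reversal_every=100):
    """Option 0 starts as the better option (p = 0.8); contingencies reverse periodically."""
    better = (trial // reversal_every) % 2
    return 0.8 if option == better else 0.2


def simulate(alpha, beta, n_trials, seed=0):
    """Generate choices and rewards from a hypothetical Q-learning agent."""
    rng = random.Random(seed)
    q = [0.0, 0.0]
    choices, rewards = [], []
    for t in range(n_trials):
        c = 0 if rng.random() < p_choose_0(q, beta) else 1
        r = 1.0 if rng.random() < reward_prob(c, t) else 0.0
        q[c] += alpha * (r - q[c])  # update driven by the reward prediction error
        choices.append(c)
        rewards.append(r)
    return choices, rewards


def neg_log_likelihood(alpha, beta, choices, rewards):
    """Negative log-likelihood of the observed choices under the same model."""
    q = [0.0, 0.0]
    nll = 0.0
    for c, r in zip(choices, rewards):
        p0 = p_choose_0(q, beta)
        nll -= math.log(max(p0 if c == 0 else 1.0 - p0, 1e-12))
        q[c] += alpha * (r - q[c])
    return nll


def fit_alpha(choices, rewards, beta):
    """Grid-search maximum-likelihood estimate of the learning rate."""
    grid = [i / 100 for i in range(1, 100)]
    return min(grid, key=lambda a: neg_log_likelihood(a, beta, choices, rewards))


choices, rewards = simulate(alpha=0.3, beta=5.0, n_trials=2000, seed=1)
print(fit_alpha(choices, rewards, beta=5.0))  # should land near the true value of 0.3
```

In a patient study, group differences would then be expressed as differences in fitted parameters such as `alpha`, rather than only as differences in raw accuracy.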

Substance Abuse

Many people continue to abuse addictive substances despite their negative long-term consequences and large costs to society. Although addictive behaviors are likely to arise from multiple factors (Redish et al., 2008), they are often attributed to the dopamine system and its role in impulsivity (Monterosso et al., 2012). First, addictive drugs increase the level of dopamine in the brain (Koob et al., 1998). Therefore, intake of an addictive substance might generate undiminished positive reward prediction error signals even after repeated drug use, which would continuously strengthen the tendency toward substance abuse (Everitt et al., 2001; Redish, 2004). However, contrary to the predictions of this theory, animals can reduce their preference for a particular action when they receive less cocaine than expected (Marks et al., 2010), and conditioning with cocaine can be blocked by another stimulus already paired with cocaine (Panlilio et al., 2007). Second, it has been proposed that addicted individuals become hypersensitive to the incentive salience assigned to drug-related cues, and this so-called incentive sensitization might be mediated by the action of dopamine in the ventral striatum (Robinson and Berridge, 2003). Third, a low level of D2/D3 receptors has been associated with a high level of impulsivity as well as with the tendency to develop habitual drug taking (Dalley et al., 2011).
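The undiminished prediction-error idea can be made concrete with the temporal-difference model of Redish (2004), in which the drug adds a dopamine component D that cannot be cancelled by learned expectations, so that the prediction error becomes max(r + γV(s′) − V(s) + D, D). The single-cue sketch below assumes a terminal next state (so V(s′) = 0), a learning rate of 0.1, and reward and D values chosen only for illustration.

```python
def prediction_error(reward, value, drug_boost=0.0):
    """TD error for a cue followed by a terminal outcome (so V(s') = 0).

    Following the form proposed by Redish (2004), a drug-induced dopamine
    component D enters as max(r - V + D, D), so the error never falls below D.
    With drug_boost == 0 this reduces to the ordinary error r - V.
    """
    delta = reward - value
    if drug_boost > 0.0:
        return max(delta + drug_boost, drug_boost)
    return delta


def cue_value_after(n_trials, reward=1.0, alpha=0.1, drug_boost=0.0):
    """Value of the predictive cue after n_trials of delta-rule learning."""
    value = 0.0
    for _ in range(n_trials):
        value += alpha * prediction_error(reward, value, drug_boost)
    return value


print(cue_value_after(200))                  # converges to the reward (about 1.0)
print(cue_value_after(200, drug_boost=0.5))  # keeps growing (about 10.5)
```

Under this rule the value of a cue paired with a natural reward converges to the reward, whereas the value of a drug-paired cue keeps growing by alpha × D per trial. The reduced-cocaine and blocking findings cited above are difficult to reconcile with such unbounded, non-compensable errors.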

An important factor contributing to substance abuse might be abnormally steep temporal discounting (Kim and Lee, 2011). Drug users and alcoholics display steeper discounting during intertemporal choice than normal controls (Madden et al., 1997; Kirby et al., 1999; Coffey et al., 2003; de Wit, 2009; MacKillop et al., 2011). Steep temporal discounting might facilitate drug use by reducing the weight given to its negative long-term consequences. Consistent with this possibility, rats with a steeper discounting function are more likely to acquire cocaine self-administration (Perry et al., 2005). In addition, the use of addictive drugs might itself increase the steepness of temporal discounting (Simon et al., 2007). During intertemporal choice, a number of brain areas thought to be important for attention and episodic memory, such as the precuneus and anterior cingulate cortex, show reduced activation in methamphetamine-dependent individuals, suggesting that impaired function of these areas might contribute to more impulsive choices (Hoffman et al., 2008). Although dopamine-related drugs have been shown to influence the steepness of temporal discounting, the results from these behavioral pharmacological studies have not been consistent (Peters and Büchel, 2011). As a result, the precise nature of the neural mechanisms linking the use of addictive drugs and temporal discounting needs to be examined more carefully.
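Temporal discounting is commonly summarized with the hyperbolic function V = A / (1 + kD), where A is the reward amount, D its delay, and k the discount rate; a larger k means steeper discounting. The sketch below uses hypothetical amounts, a delay in days, and illustrative k values to show how a steeper k shifts preference from a larger delayed reward to a smaller immediate one.

```python
def hyperbolic_value(amount, delay_days, k):
    """Subjective value V = A / (1 + k * D); k is the discount rate per day."""
    return amount / (1.0 + k * delay_days)


def prefers_delayed(small_now, large_later, delay_days, k):
    """True if the discounted larger-later reward beats the immediate one."""
    return hyperbolic_value(large_later, delay_days, k) > hyperbolic_value(small_now, 0.0, k)


def indifference_k(small_now, large_later, delay_days):
    """Discount rate at which A_small = A_large / (1 + k * D), solved for k."""
    return (large_later / small_now - 1.0) / delay_days


# 50 units now versus 100 units in 30 days.
print(prefers_delayed(50, 100, 30, k=0.01))  # True: shallow discounter waits
print(prefers_delayed(50, 100, 30, k=0.1))   # False: steep discounter takes 50 now
print(indifference_k(50, 100, 30))           # about 0.033 per day
```

In this framing, anyone whose k exceeds the indifference value takes the immediate option, and steeper discounting in a clinical group corresponds to a larger fitted k.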

Parkinson’s Disease

In patients with Parkinson’s disease, midbrain dopamine neurons are lost progressively. Since these neurons are a major source of input to the basal ganglia, the motor deficits found in Parkinson’s patients, such as bradykinesia, rigidity, and tremor, are thought to result from disruption of the disinhibitory functions of the basal ganglia (DeLong, 1990). In addition, given how broadly dopamine neurons propagate reward prediction signals in the brain, the ability to improve decision-making strategies through experience might be impaired in patients with Parkinson’s disease. In fact, when they can learn from either the positive or the negative outcomes of previous choices, Parkinson’s patients tend to learn more from negative outcomes, and this tendency is ameliorated by medication that increases dopamine levels (Frank et al., 2004). Similarly, striatal activity correlated with reward prediction errors is reduced in Parkinson’s patients (Schonberg et al., 2010).
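One way this asymmetry is often formalized in computational work (a simplification, not the basal ganglia network model of Frank et al., 2004) is a delta rule with separate learning rates for positive and negative prediction errors. In the hypothetical sketch below, a "low-dopamine" parameter set weights negative errors more heavily than positive ones; the parameter values and reward probability are assumptions for illustration.

```python
import random


def update(q, reward, alpha_pos, alpha_neg):
    """Delta-rule update with separate rates for positive and negative prediction errors."""
    delta = reward - q
    return q + (alpha_pos if delta > 0 else alpha_neg) * delta


def steady_state_value(p_reward, alpha_pos, alpha_neg):
    """Fixed point of the expected update for 0/1 rewards delivered with probability p_reward.

    Solves p * alpha_pos * (1 - q) = (1 - p) * alpha_neg * q for q.
    """
    gain = p_reward * alpha_pos
    loss = (1.0 - p_reward) * alpha_neg
    return gain / (gain + loss)


def simulate_mean_value(p_reward, alpha_pos, alpha_neg, n_trials=20000, seed=0):
    """Average learned value over the second half of a simulated session."""
    rng = random.Random(seed)
    q, total = 0.5, 0.0
    for t in range(n_trials):
        reward = 1.0 if rng.random() < p_reward else 0.0
        q = update(q, reward, alpha_pos, alpha_neg)
        if t >= n_trials // 2:
            total += q
    return total / (n_trials - n_trials // 2)


# An option rewarded on 80% of trials, learned with balanced vs. hypothetical low-dopamine rates.
print(steady_state_value(0.8, 0.2, 0.2))  # about 0.8
print(steady_state_value(0.8, 0.1, 0.3))  # about 0.57
```

Under these assumptions, a larger negative than positive learning rate pulls learned values downward, so that choices become driven more by avoiding poorly rewarded options than by seeking well rewarded ones.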

Medication that increases dopamine levels in Parkinson’s patients is unlikely to restore the normal pattern of dopamine signals completely and may therefore have side effects on the choice behavior of treated patients. For example, Parkinson’s patients sometimes become addicted to the drugs used in dopamine replacement therapy (Lawrence et al., 2003). As with other addictive drugs (Redish, 2004), this might result from the amplification of reward prediction error signals, since patients treated with dopaminergic drugs showed higher learning rates during a dynamic foraging task (Rutledge et al., 2009). Patients on dopamine replacement therapy also tend to develop behavioral addictions, such as pathological gambling (Driver-Dunckley et al., 2003; Dodd et al., 2005). Previous studies have also found that, compared with normal controls, Parkinson’s patients tend to show steeper temporal discounting during intertemporal choice (Housden et al., 2010; Milenkova et al., 2011). As with the relationship between addiction and temporal discounting, whether and how steeper temporal discounting in Parkinson’s disease is mediated by dopaminergic signaling requires further study (Dagher and Robbins, 2009).

Attention-Deficit/Hyperactivity Disorder

Attention-deficit/hyperactivity disorder (ADHD) is characterized by inattention, hyperactivity, and impulsivity. Although ADHD occurs most frequently in school-age children, it can also be found in adults, often in attenuated forms. At least two forms of impulsivity have been extensively documented in children and adults with ADHD. First, children and adolescents with ADHD show steeper temporal discounting than age-matched control subjects (Rapport et al., 1986; Sonuga-Barke et al., 1992; Schweitzer and Sulzer-Azaroff, 1995; Barkley et al., 2001) or children with autism spectrum disorders (Demurie et al., 2012). Second, individuals with ADHD also tend to display motor impulsivity and show impairments in suppressing undesirable movements. In particular, during the stop-signal task, the time needed for inhibitory signals to abort a pre-planned movement, commonly referred to as the stop-signal reaction time, is longer in people with ADHD (Oosterlaan et al., 1998; Aron et al., 2003; Verbruggen and Logan, 2008). The time scale of typical intertemporal choice ranges from days to months, whereas the relevant time scale for motor impulsivity is usually less than a second. Despite this large difference in time scale, both steeper temporal discounting and increased motor impulsivity imply alterations in temporal processing. Accordingly, it has been proposed that the behavioral impairments in ADHD might fundamentally result from timing deficits (Toplak et al., 2006; Rubia et al., 2009; Noreika et al., 2013).
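The stop-signal reaction time is not observed directly; it is usually estimated under the race model. A minimal sketch of the commonly used integration method is shown below, assuming a single fixed stop-signal delay (SSD). The go reaction times are ranked, the go RT at the rank corresponding to p(respond | stop signal) is taken as the finishing time of the stop process, and the SSD is subtracted. The data are hypothetical.

```python
import math
from typing import Sequence


def ssrt_integration(go_rts_ms: Sequence[float], p_respond_given_stop: float, ssd_ms: float) -> float:
    """Estimate the stop-signal reaction time (ms) for one fixed stop-signal delay.

    Race-model logic: the stop process finishes at the go RT whose rank matches
    p(respond | stop signal); subtracting the SSD gives the SSRT.
    """
    if not go_rts_ms:
        raise ValueError("need at least one go RT")
    if not 0.0 < p_respond_given_stop < 1.0:
        raise ValueError("p(respond | stop) must lie strictly between 0 and 1")
    rts = sorted(go_rts_ms)
    # Rank n = p * N, rounded up; the small epsilon guards against floating-point noise in p * N.
    n = max(1, math.ceil(p_respond_given_stop * len(rts) - 1e-9))
    return rts[n - 1] - ssd_ms


go_rts = list(range(400, 600, 20))  # ten hypothetical go RTs: 400, 420, ..., 580 ms
print(ssrt_integration(go_rts, p_respond_given_stop=0.5, ssd_ms=250))  # 230
```

A longer estimated SSRT means that the stop process needs more time to win the race against the go process, which is the sense in which response inhibition is impaired.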

Neurochemically, ADHD might result from lower levels of dopamine and/or norepinephrine in the brain (Volkow et al., 2009; Arnsten and Pliszka, 2011). Accordingly, symptoms of ADHD can often be ameliorated by stimulants, such as methylphenidate, that increase the levels of dopamine and norepinephrine (Gamo et al., 2010). For example, stimulant medication decreased the steepness of temporal discounting in children with ADHD (Shiels et al., 2009). In addition, stimulant medication enhanced the ability to suppress preplanned but undesirable movements during the stop-signal task (Aron et al., 2003). Currently, it remains uncertain whether these effects of ADHD medication on decision making and response inhibition are mediated by dopaminergic or noradrenergic systems (Gamo et al., 2010). Non-stimulant drugs that increase the levels of both dopamine and norepinephrine (Bymaster et al., 2002) also improve response inhibition (Chamberlain et al., 2009). In addition, administration of guanfacine, an agonist of α2 adrenergic receptors, diminishes the preference for immediate reward during an intertemporal choice task in monkeys (Kim et al., 2012a). Most of these drugs also tend to enhance task-related activity in the prefrontal cortex during a working memory task (Gamo et al., 2010), suggesting that the therapeutic effects of ADHD medication might be mediated by improved prefrontal function.

Schizophrenia

Often considered the most debilitating mental illness, schizophrenia affects approximately 1% of the population across different cultures and is characterized by positive symptoms, such as hallucinations and delusions, as well as negative symptoms, such as blunted affect, anhedonia, and lack of motivation (Walker et al., 2004). Symptoms of schizophrenia have often been linked to dopamine. In particular, patients with schizophrenia show elevated levels of dopamine D2 receptors (Kestler et al., 2001). Changes in other neurotransmitter systems, such as reduced N-methyl-D-aspartate (NMDA) receptor function, are also implicated, but the precise manner in which multiple neurotransmitter systems interact with one another in schizophrenia remains poorly understood (Krystal et al., 2003). Neuropathological studies have documented the loss of dendrites and spines of pyramidal neurons (Selemon and Goldman-Rakic, 1999; Glantz and Lewis, 2000) and weaker GABAergic actions needed to coordinate neural activity in the DLPFC (Lewis, 2012). In addition, although a large number of candidate genes have been identified, how they relate to the pathophysiology of schizophrenia is not well known. Nevertheless, many of the genes implicated in schizophrenia, such as DISC1 (Brandon et al., 2009), are linked to disorders of brain development, suggesting that the different stages of schizophrenia should be understood as points along the trajectory of a neurodevelopmental disorder (Insel, 2010).

A number of cognitive functions, such as working memory and cognitive control, are impaired in schizophrenia (Barch and Ceaser, 2012). In addition to a disrupted dopaminergic system, prefrontal dysfunction (Weinberger et al., 1986) might also be responsible for the changes in reinforcement learning and decision-making strategies observed in patients with schizophrenia. For example, during economic decision-making tasks, patients with schizophrenia tend to assign less weight to potential losses than healthy controls do (Heerey et al., 2008), and they also display steeper discounting during intertemporal choice (Heerey et al., 2007). The performance of patients with schizophrenia was not significantly different from that of control subjects during relatively simple associative learning tasks (Corlett et al., 2007; Gradin et al., 2011). Nevertheless, several studies have revealed impairments in feedback-based learning in patients with schizophrenia (Waltz et al., 2007; Strauss et al., 2011). In particular, the results from a probabilistic go/no-go task (Waltz et al., 2011) and a computer-simulated matching pennies task (Kim et al., 2007) consistently showed that patients with schizophrenia might be impaired both in flexibly switching their choices after negative feedback and in incrementally adjusting their choices according to positive feedback across multiple trials. Consistent with these behavioral results, activity related to reward prediction errors in the frontal cortex and striatum is attenuated in patients with schizophrenia (Corlett et al., 2007; Gradin et al., 2011).
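Feedback-dependent switching of this kind is often summarized by the conditional probabilities of repeating a choice after a win (win-stay) and switching after a loss (lose-switch). The sketch below computes both from a trial sequence. The encoding of choices as strings and of outcomes as booleans is an assumption for illustration, not the analysis pipeline of Kim et al. (2007).

```python
from typing import Sequence, Tuple


def win_stay_lose_switch(choices: Sequence[str], wins: Sequence[bool]) -> Tuple[float, float]:
    """Return (p_stay_after_win, p_switch_after_loss) over consecutive trial pairs."""
    if len(choices) != len(wins):
        raise ValueError("choices and wins must have the same length")
    stay_win = n_win = switch_loss = n_loss = 0
    for t in range(len(choices) - 1):
        repeated = choices[t + 1] == choices[t]
        if wins[t]:
            n_win += 1
            stay_win += repeated        # True counts as 1
        else:
            n_loss += 1
            switch_loss += not repeated
    p_ws = stay_win / n_win if n_win else float("nan")
    p_ls = switch_loss / n_loss if n_loss else float("nan")
    return p_ws, p_ls


print(win_stay_lose_switch(["L", "L", "R", "R", "L"], [True, False, True, False, True]))  # (1.0, 1.0)
```

For a player choosing at random, as in the equilibrium strategy of matching pennies, both probabilities would be near 0.5; values that depart from 0.5 indicate choices that depend on the previous outcome.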

It has been proposed that insufficient suppression or hyperactivity of the default network might be related to positive symptoms of schizophrenia, such as hallucinations and paranoia (Buckner et al., 2008; Anticevic et al., 2012). For example, the amount of task-related suppression is reduced in some areas of the default network in patients with schizophrenia (Whitfield-Gabrieli et al., 2009; Anticevic et al., 2013). Given the large overlap between the default network and the brain areas involved in social cognition, hyperactivity or other abnormal activity patterns in the default network might also underlie impairments in social functions among patients with schizophrenia (Couture et al., 2006). In addition, psychotic symptoms of schizophrenia tend to emerge in early adulthood, often many years after impaired cognitive functions can be detected (Cornblatt et al., 1999; Cannon et al., 2000). This is consistent with the hypothesis that the clinical symptoms of schizophrenia arise from malfunctions of the prefrontal cortex and default network, since, similar to the extended developmental trajectory of the prefrontal cortex (Lewis, 2012), the functional connectivity of the default network continues to increase during adolescence (Fair et al., 2008). Because the default network might also support the mental simulation on which model-based reinforcement learning relies, it would be important to test whether subjects at risk for schizophrenia are impaired in tasks that require model-based reinforcement learning.
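One widely used way to probe model-based learning, assumed here for illustration rather than taken from the studies cited above, is a two-step task in which a first-stage choice leads to one of two second-stage states through either a common or a rare transition. A model-free learner tends to repeat a rewarded first-stage choice regardless of the transition, whereas a model-based learner takes the transition into account. The two signatures therefore appear as a main effect of reward and a reward-by-transition interaction on the probability of repeating a choice. The sketch below computes both from trial records; the data format is hypothetical.

```python
from typing import Dict, List, Tuple

# One trial: (first-stage choice, transition was common, trial was rewarded).
Trial = Tuple[str, bool, bool]


def stay_probabilities(trials: List[Trial]) -> Dict[Tuple[bool, bool], float]:
    """P(repeat first-stage choice), keyed by (previous rewarded, previous transition common)."""
    counts: Dict[Tuple[bool, bool], List[int]] = {}
    for prev, nxt in zip(trials, trials[1:]):
        tally = counts.setdefault((prev[2], prev[1]), [0, 0])
        tally[0] += nxt[0] == prev[0]  # stayed with the same first-stage choice
        tally[1] += 1
    return {key: stays / total for key, (stays, total) in counts.items()}


def mf_mb_indices(p: Dict[Tuple[bool, bool], float]) -> Tuple[float, float]:
    """Reward main effect (model-free signature) and reward-by-transition interaction (model-based signature)."""
    mf = (p[(True, True)] + p[(True, False)]) / 2 - (p[(False, True)] + p[(False, False)]) / 2
    mb = (p[(True, True)] - p[(True, False)]) - (p[(False, True)] - p[(False, False)])
    return mf, mb
```

A purely model-free pattern yields a positive first index and an interaction near zero; a purely model-based pattern yields the reverse, and real data typically show a mixture of the two.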

Depression and Anxiety Disorders

Depression and anxiety disorders are both examples of internalizing disorders; that is, they are largely characterized by disturbances in mood and emotion (Kovacs and Devlin, 1998; Krueger, 1999). These two conditions show a high level of comorbidity and are both accompanied by poor concentration and negative mood states, such as sadness and anger (Mineka et al., 1998). Nevertheless, there are some important differences. Functionally, anxiety might prepare affected individuals for impending danger, whereas depression might inhibit previously unsuccessful actions and facilitate more reflective cognitive processes (Oatley and Johnson-Laird, 1987). Physiological arousal is an important feature of anxiety, whereas anhedonia and reduced positive emotions occur in depression (Mineka et al., 1998). Both depression and anxiety disorders tend to introduce systematic biases in attentional and mnemonic processes as well as in decision making (Mineka et al., 1998; Paulus and Yu, 2012). In particular, individuals with anxiety disorders become hypersensitive to potentially threatening cues without an obvious memory bias. In contrast, depressed individuals show a bias to remember negative events (Matt et al., 1992) and tend to ruminate excessively (Nolen-Hoeksema, 2000).

The possible neural changes responsible for the symptoms of these two disorders have been extensively studied, and some candidate brain systems have been identified. In particular, depression might be related to abnormalities in brain monoamines and in the fronto-striatal systems involved in reinforcement learning (Eshel and Roiser, 2010). On the other hand, the brain areas implicated in anxiety disorders include the amygdala, insula, and anterior cingulate cortex (Craske et al., 2009; Hartley and Phelps, 2012). In addition, the excessive rumination and negative self-referential memory observed in depressed individuals might be linked to the function of the default network. Indeed, the default network is overactive in individuals with depression when they evaluate emotional stimuli (Sheline et al., 2009), and its activity is correlated with the level of depressive rumination (Hamilton et al., 2011). To the extent that the default network contributes to task-relevant mental simulation and spontaneous cognition (Andrews-Hanna et al., 2010), this might also account for findings that depressed individuals perform better in sequential decision-making tasks and analytical thinking (Andrews and Thomson, 2009; von Helversen et al., 2011). Patients with depression display increased metabolic activity in the subgenual cingulate cortex, and deep brain stimulation of the same brain area produces therapeutic effects (Mayberg et al., 2005). Therefore, the functional coupling between the subgenual cingulate cortex and the default network, which is greater in patients with depression (Greicius et al., 2007), might correspond to the interface between excessive self-referential thoughts and their negative emotional consequences.

As reviewed recently (Paulus and Yu, 2012), a large number of studies have examined the performance of individuals with depression or anxiety during the Iowa gambling task (Bechara et al., 1997), but their results were inconsistent. Obtaining the best outcome during the Iowa gambling task depends on a number of computationally distinct processes, including reinforcement learning, risk preference, and loss aversion (Fellows and Farah, 2005; Worthy et al., 2012). Understanding how each of these processes is altered in individuals with depression or anxiety therefore remains an important research area (Angie et al., 2011; Hartley and Phelps, 2012). The available results suggest that individuals with anxiety disorders are more risk-averse than control subjects (Maner et al., 2007), whereas reinforcement-related neural signals might be reduced in depressed individuals, especially in the striatum (Pizzagalli et al., 2009).
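To illustrate why these processes are hard to separate from raw Iowa gambling task performance, the sketch below values two decks with a prospect-theory-style utility (curvature `alpha`, loss aversion `lam`) and tracks a delta-rule expectancy, loosely in the spirit of prospect-valence learning models of the task. The ten-card payoff cycles are simplified, illustrative stand-ins for a disadvantageous deck with a rare large loss and an advantageous deck with a rare small loss; they are assumptions, not the exact task schedule.

```python
def utility(x, alpha=1.0, lam=1.0):
    """Power utility for gains; losses are additionally scaled by the loss-aversion weight lam."""
    return x ** alpha if x >= 0 else -lam * (-x) ** alpha


def mean_utility(outcomes, alpha=1.0, lam=1.0):
    """Average utility per card over one payoff cycle."""
    return sum(utility(x, alpha, lam) for x in outcomes) / len(outcomes)


def delta_rule_expectancy(outcomes, alpha=1.0, lam=1.0, lr=0.2, n_cycles=20):
    """Expectancy after repeatedly sampling a deck, updated by utility prediction errors."""
    e = 0.0
    for _ in range(n_cycles):
        for x in outcomes:
            e += lr * (utility(x, alpha, lam) - e)
    return e


# Hypothetical net outcome per card over one 10-card cycle.
DECK_B = [100] * 9 + [-1150]  # large gains, one rare large loss
DECK_D = [50] * 9 + [-200]    # small gains, one rare small loss

print(mean_utility(DECK_B), mean_utility(DECK_D))                  # -25.0 25.0
print(mean_utility(DECK_B, lam=2.0), mean_utility(DECK_D, lam=2.0))  # -140.0 5.0
```

With linear utility and no loss aversion, deck D is better; doubling `lam` widens the gap, whereas strong curvature (`alpha = 0.5`, `lam = 1`) compresses the rare large loss enough that deck B looks slightly better. The same deck preference can therefore arise from different parameter combinations.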

Although the neurochemical basis of mood and anxiety disorders remains poorly understood, much attention has been focused on the possible role of altered serotonin metabolism in depression (Dayan and Huys, 2009). For example, it has been hypothesized that future rewards might be discounted excessively in individuals with depression because of an abnormally low level of serotonin (Doya, 2002). In fact, the discount rate used to calculate the subjective value of future rewards might be controlled by serotonin (Schweighofer et al., 2008). However, temporal discounting might be less steep in individuals with high levels of anhedonia (Lempert and Pizzagalli, 2010). Others have proposed that serotonin is primarily involved in the inhibition of thoughts and actions associated with aversive outcomes (Daw et al., 2002), including the process of heuristically disregarding unpromising branches of decision trees (Dayan and Huys, 2008; Huys et al., 2012). According to this view, depressed individuals would expect a lower rate of reward from their actions, because insufficient serotonin would expose the negative outcomes of potential actions that would normally be pruned. More research is needed, however, to understand the nature of the neural processes mediating the effects of various neuromodulators, such as serotonin, during decision making.
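The pruning idea can be illustrated with a small decision tree. When branches that begin with a large loss are not explored further, search becomes cheaper, but a sequence in which an early loss precedes a larger gain can be overlooked. This is a minimal sketch in the spirit of Huys et al. (2012), not their model. The tree, the reward values, the threshold, and the rule that a pruned branch is valued by its immediate reward alone are all assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Node:
    # Each edge is (immediate reward, next state); a node without edges is terminal.
    edges: List[Tuple[float, "Node"]] = field(default_factory=list)


def plan(node: Node, prune_below: Optional[float] = None) -> Tuple[float, int]:
    """Depth-first search for the best total reward reachable from `node`.

    If prune_below is set, a branch whose immediate reward is below it is not
    expanded: it is valued by that reward alone and its subtree is ignored.
    Returns (best value, number of nodes expanded).
    """
    if not node.edges:
        return 0.0, 1
    best, expanded = float("-inf"), 1
    for reward, child in node.edges:
        if prune_below is not None and reward < prune_below:
            value = reward  # subtree disregarded
        else:
            sub_value, sub_expanded = plan(child, prune_below)
            value = reward + sub_value
            expanded += sub_expanded
        best = max(best, value)
    return best, expanded


leaf = Node()
root = Node([
    (-70.0, Node([(100.0, leaf)])),              # large early loss, larger later gain
    (20.0, Node([(-20.0, leaf), (0.0, leaf)])),  # small gain, no large loss
])
print(plan(root))                     # (30.0, 6): full search finds the better path
print(plan(root, prune_below=-50.0))  # (20.0, 4): cheaper, but misses it
```

Disabling the threshold (`prune_below=None`) in this toy example restores full search at the cost of expanding more nodes.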

Autism

Autism is a neurodevelopmental disorder characterized by impaired social cognition, poor communicative abilities, repetitive behaviors, and narrow interests (Geschwind and Levitt, 2007). In particular, individuals with autism are impaired in their ability to make inferences regarding the intentions and beliefs of others, namely, theory of mind, as reflected in their poor performance on the false-belief task (Baron-Cohen et al., 1985; Frith, 2001). Such reduced abilities to mentalize the intentions of others might underlie differences in the strategies of autistic individuals and control subjects during socially interactive decision-making tasks. For example, children with autism tend to offer smaller amounts of money as proposers during the ultimatum game, and they are also more likely to accept even very small offers as responders (Sally and Hill, 2006). In addition, whereas control subjects donated more money to charity in the presence of observers, this effect was absent in individuals with autism (Izuma et al., 2011). Autistic individuals are also impaired in their ability to infer the mentalizing strategies of others (Yoshida et al., 2010). Some of these social impairments in autism are ameliorated by oxytocin, but precisely how oxytocin influences the affective and social functions of the brain remains poorly understood (Yamasue et al., 2012).

Although autism has a heterogeneous etiology, abnormalities in the long-range connections between different association cortical areas are often considered important (Geschwind and Levitt, 2007). Such anatomical changes might underlie the reduced inter-hemispheric synchronization of neural activity recorded from toddlers with autism (Dinstein et al., 2011). Anatomical and physiological abnormalities in autism might have their most prominent effects in the domain of social cognition. Consistent with the possibility that the default network is important for mental simulation in social contexts, the default network is hypoactive in individuals with autism (Figure 4D; Kennedy et al., 2006). Moreover, functional connectivity among the brain areas within the default network is reduced in autistic individuals (Weng et al., 2010; Anderson et al., 2011; Gotts et al., 2012).
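Functional connectivity in resting-state fMRI is most often quantified as the Pearson correlation between the time series of two regions. The sketch below computes the mean pairwise correlation among a set of regional time series. The synthetic "coupled" and "weakly coupled" networks, built from a shared signal plus independent noise of different amplitudes, are hypothetical and only illustrate what a reduction in within-network connectivity means numerically.

```python
import math
import random
from itertools import combinations


def pearson(x, y):
    """Pearson correlation between two equal-length time series."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def mean_within_network_fc(series):
    """Average correlation over all pairs of regions in one network."""
    pairs = list(combinations(series, 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


def synthetic_network(n_regions, n_samples, noise_sd, seed):
    """Regions share one common signal; a larger noise_sd means weaker coupling."""
    rng = random.Random(seed)
    shared = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    return [[s + rng.gauss(0.0, noise_sd) for s in shared] for _ in range(n_regions)]


strong = mean_within_network_fc(synthetic_network(4, 300, noise_sd=0.3, seed=0))
weak = mean_within_network_fc(synthetic_network(4, 300, noise_sd=3.0, seed=0))
print(round(strong, 2), round(weak, 2))  # roughly 0.9 versus roughly 0.1
```

In an actual study, the same summary would be computed from preprocessed regional BOLD signals and compared between groups.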

Conclusion

Despite substantial progress in our scientific understanding of psychiatric disorders, many challenges and unanswered questions remain. First, characterizing the neural mechanisms responsible for specific disorders is often hindered by the potential side effects of medication and other treatments. This is particularly true for schizophrenia and mood disorders, although similar problems occur in other conditions as well. For example, the extent to which steep temporal discounting results from or causes substance abuse, and the mechanisms of such interactions, remain poorly understood. Second, more rigorous experiments are required to understand how dysregulation in various neuromodulator systems results in suboptimal and sometimes abnormal parameters of decision making and reinforcement learning. Third, the function of the default network needs to be better understood. The default network might be hypoactive or hyperactive in various psychiatric disorders, but the precise nature of the computations implemented in these brain areas remains unclear. In particular, how default network activity is related to model-based reinforcement learning and mental simulation, and how its dysfunctions contribute to specific symptoms, remain important research questions.

The infusion of economic and machine learning frameworks into neuroscience has led to rapid advances in our understanding of the neural mechanisms of decision making and reinforcement learning. Given that impaired decision making is widespread and often the most prominent symptom in numerous psychiatric disorders, it is imperative for neuroscientists and clinicians to combine their expertise to develop more effective nosology and treatment. In the near future, we might first expect to see more progress in disorders whose etiologies are better understood, such as substance abuse and Parkinson’s disease. Eventually, however, the knowledge gained from neuroscience must guide the search for the prevention and cure of all psychiatric disorders.

Acknowledgments

I am grateful to Amy Arnsten, Min Whan Jung, Matt Kleinman, Ifat Levy, Mike Petrowicz, Joey Schnurr, and Hyojung Seo for their helpful comments on the manuscript. The author’s research is supported by the National Institutes of Health (DA029330 and DA027844).


References

- Abe H, Lee D. Distributed coding of actual and hypothetical outcomes in the orbital and dorsolateral prefrontal cortex. Neuron. 2011;70:731–741. doi: 10.1016/j.neuron.2011.03.026.

- Abe H, Seo H, Lee D. The prefrontal cortex and hybrid learning during iterative competitive games. Ann NY Acad Sci. 2011;1239:100–108. doi: 10.1111/j.1749-6632.2011.06223.x.

- Anderson JS, Nielsen JA, Froehlich A, DuBray MB, Druzgal TJ, Cariello AN, Cooperrider JR, Zielinski BA, Ravichandran C, Fletcher PT, Alexander AL, et al. Functional connectivity magnetic resonance imaging classification of autism. Brain. 2011;134:3742–3751. doi: 10.1093/brain/awr263.

- Andrews PW, Thomson JA Jr. The bright side of being blue: depression as an adaptation for analyzing complex problems. Psychol Rev. 2009;116:620–654. doi: 10.1037/a0016242.

- Andrews-Hanna JR, Reidler JS, Sepulcre J, Poulin R, Buckner RL. Functional-anatomic fractionation of the brain’s default network. Neuron. 2010;65:550–562. doi: 10.1016/j.neuron.2010.02.005.

- Angie AD, Connelly S, Waples EP, Kligyte V. The influence of discrete emotions on judgement and decision-making: a meta-analytic review. Cogn Emot. 2011;25:1393–1422. doi: 10.1080/02699931.2010.550751.

- Anticevic A, Cole MW, Murray JD, Corlett PR, Wang XJ, Krystal JH. The role of default network deactivation in cognition and disease. Trends Cogn Sci. 2012;16:584–592. doi: 10.1016/j.tics.2012.10.008.

- Anticevic A, Repovs G, Barch DM. Working memory encoding and maintenance deficits in schizophrenia: neural evidence for activation and deactivation abnormalities. Schizophr Bull. 2013;39:168–178. doi: 10.1093/schbul/sbr107.

- Arnsten AFT, Pliszka SR. Catecholamine influences on prefrontal cortical function: relevance to treatment of attention deficit/hyperactivity disorder and related disorders. Pharmacol Biochem Behav. 2011;99:211–216. doi: 10.1016/j.pbb.2011.01.020.

- Arnsten AFT, Wang MJ, Paspalas CD. Neuromodulation of thought: flexibilities and vulnerabilities in prefrontal cortical network synapses. Neuron. 2012;76:223–239. doi: 10.1016/j.neuron.2012.08.038.

- Aron AR, Dowson JH, Sahakian BJ, Robbins TW. Methylphenidate improves response inhibition in adults with attention-deficit/hyperactivity disorder. Biol Psychiatry. 2003;54:1465–1468. doi: 10.1016/s0006-3223(03)00609-7.

- Asaad WF, Eskandar EN. Encoding of both positive and negative reward prediction errors by neurons of the primate lateral prefrontal cortex and caudate nucleus. J Neurosci. 2011;31:17772–17787. doi: 10.1523/JNEUROSCI.3793-11.2011.

- Atance CM, O’Neill DK. Episodic future thinking. Trends Cogn Sci. 2001;5:533–539. doi: 10.1016/s1364-6613(00)01804-0.

- Ballard K, Knutson B. Dissociable neural representations of future reward magnitude and delay during temporal discounting. Neuroimage. 2009;45:143–150. doi: 10.1016/j.neuroimage.2008.11.004.

- Balleine BW, Dickinson A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37:407–419. doi: 10.1016/s0028-3908(98)00033-1.

- Barch DM, Ceaser A. Cognition in schizophrenia: core psychological and neural mechanisms. Trends Cogn Sci. 2012;16:27–34. doi: 10.1016/j.tics.2011.11.015.

- Barkley RA, Edwards G, Laneri M, Fletcher K, Metevia L. Executive functioning, temporal discounting, and sense of time in adolescents with attention deficit hyperactivity disorder (ADHD) and oppositional defiant disorder (ODD). J Abnorm Child Psychol. 2001;29:541–556. doi: 10.1023/a:1012233310098.

- Baron-Cohen S, Leslie AM, Frith U. Does the autistic child have a “theory of mind”? Cognition. 1985;21:37–46. doi: 10.1016/0010-0277(85)90022-8.

- Bechara A, Damasio H, Tranel D, Damasio AR. Deciding advantageously before knowing the advantageous strategy. Science. 1997;275:1293–1295. doi: 10.1126/science.275.5304.1293.

- Behrens TEJ, Hunt LT, Rushworth MFS. The computation of social behavior. Science. 2009;324:1160–1164. doi: 10.1126/science.1169694.

- Behrens TEJ, Hunt LT, Woolrich MW, Rushworth MFS. Associative learning of social value. Nature. 2008;456:245–249. doi: 10.1038/nature07538.

- Behrens TEJ, Woolrich MW, Walton ME, Rushworth MFS. Learning the value of information in an uncertain world. Nat Neurosci. 2007;10:1214–1221. doi: 10.1038/nn1954.

- Bellman RE. Dynamic Programming. Princeton: Princeton University Press; 1957.

- Bernacchia A, Seo H, Lee D, Wang XJ. A reservoir of time constants for memory traces in cortical neurons. Nat Neurosci. 2011;14:366–372. doi: 10.1038/nn.2752.

- Berns GS, Chappelow J, Cekic M, Zink CF, Pagnoni G, Martin-Skurski ME. Neurobiological substrates of dread. Science. 2006;312:754–758. doi: 10.1126/science.1123721.

- Bissière S, Humeau Y, Lüthi A. Dopamine gates LTP induction in lateral amygdala by suppressing feedforward inhibition. Nat Neurosci. 2003;6:587–592. doi: 10.1038/nn1058.

- Bolam JP, Hanley JJ, Booth PAC, Bevan MD. Synaptic organisation of the basal ganglia. J Anat. 2000;196:527–542. doi: 10.1046/j.1469-7580.2000.19640527.x.

- Boyer P. Evolutionary economics of mental time travel. Trends Cogn Sci. 2008;12:219–224. doi: 10.1016/j.tics.2008.03.003.

- Brandon NJ, Millar JK, Korth C, Sive H, Singh KK, Sawa A. Understanding the role of DISC1 in psychiatric disease and during normal development. J Neurosci. 2009;29:12768–12775. doi: 10.1523/JNEUROSCI.3355-09.2009.

- Brosnan SF, Jones OD, Lambeth SP, Mareno MC, Richardson AS, Schapiro SJ. Endowment effects in chimpanzees. Curr Biol. 2007;17:1704–1707. doi: 10.1016/j.cub.2007.08.059.

- Buckner RL, Carroll DC. Self-projection and the brain. Trends Cogn Sci. 2007;11:49–57. doi: 10.1016/j.tics.2006.11.004.

- Buckner RL, Andrews-Hanna JR, Schacter DL. The brain’s default network: anatomy, function, and relevance to disease. Ann NY Acad Sci. 2008;1124:1–38. doi: 10.1196/annals.1440.011.

- Bymaster FP, Katner JS, Nelson DL, Hemrick-Luecke SK, Threlkeld PG, Heiligenstein JH, Morin SM, Gehlert DR, Perry KW. Atomoxetine increases extracellular levels of norepinephrine and dopamine in prefrontal cortex of rat: a potential mechanism for efficacy in attention deficit/hyperactivity disorder. Neuropsychopharmacology. 2002;27:699–711. doi: 10.1016/S0893-133X(02)00346-9.

- Cai X, Kim S, Lee D. Heterogeneous coding of temporally discounted values in the dorsal and ventral striatum during intertemporal choice. Neuron. 2011;69:170–182. doi: 10.1016/j.neuron.2010.11.041.

- Camerer CF. Behavioral Game Theory: Experiments in Strategic Interaction. Princeton: Princeton University Press; 2003.

- Camerer C, Ho TH. Experience-weighted attraction learning in normal form games. Econometrica. 1999;67:827–874.

- Camerer C, Weber M. Recent developments in modeling preferences: uncertainty and ambiguity. J Risk Uncertainty. 1992;5:325–370.

- Cannon TD, Bearden CE, Hollister JM, Rosso IM, Sanchez LE, Hadley T. Childhood cognitive functioning in schizophrenia patients and their unaffected siblings: a prospective cohort study. Schizophr Bull. 2000;26:379–393. doi: 10.1093/oxfordjournals.schbul.a033460.

- Cardinal RN, Parkinson JA, Hall J, Everitt BJ. Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci Biobehav Rev. 2002;26:321–352. doi: 10.1016/s0149-7634(02)00007-6.

- Carmichael ST, Price JL. Connectional networks within the orbital and medial prefrontal cortex of macaque monkeys. J Comp Neurol. 1996;371:179–207. doi: 10.1002/(SICI)1096-9861(19960722)371:2<179::AID-CNE1>3.0.CO;2-#.

- Chamberlain SR, Hampshire A, Müller U, Rubia K, del Campo N, Craig K, Regenthal R, Suckling J, Roiser JP, Grant JE, Bullmore ET, Robbins TW, Sahakian BJ. Atomoxetine modulates right inferior frontal activation during inhibitory control: a pharmacological functional magnetic resonance imaging study. Biol Psychiatry. 2009;65:550–555. doi: 10.1016/j.biopsych.2008.10.014.

- Chevalier G, Deniau JM. Disinhibition as a basic process in the expression of striatal functions. Trends Neurosci. 1990;13:277–280. doi: 10.1016/0166-2236(90)90109-n.

- Chib VS, Rangel A, Shimojo S, O’Doherty JP. Evidence for a common representation of decision values for dissimilar goods in human ventromedial prefrontal cortex. J Neurosci. 2009;29:12315–12320. doi: 10.1523/JNEUROSCI.2575-09.2009.

- Coffey SF, Gudleski GD, Saladin ME, Brady KT. Impulsivity and rapid discounting of delayed hypothetical rewards in cocaine-dependent individuals. Exp Clin Psychopharmacol. 2003;11:18–25. doi: 10.1037//1064-1297.11.1.18.

- Corballis MC. Mental time travel: a case for evolutionary continuity. Trends Cogn Sci. 2013;17:5–6. doi: 10.1016/j.tics.2012.10.009.

- Corlett PR, Murray GK, Honey GD, Aitken MRF, Shanks DR, Robbins TW, Bullmore ET, Dickinson A, Fletcher PC. Disrupted prediction-error signal in psychosis: evidence for an associative account of delusions. Brain. 2007;130:2387–2400. doi: 10.1093/brain/awm173.

- Cornblatt B, Obuchowski M, Roberts S, Pollack S, Erlenmeyer-Kimling L. Cognitive and behavioral precursors of schizophrenia. Dev Psychopathol. 1999;11:487–508. doi: 10.1017/s0954579499002175.

- Couture SM, Penn DL, Roberts DL. The functional significance of social cognition in schizophrenia: a review. Schizophr Bull. 2006;32:S44–S63. doi: 10.1093/schbul/sbl029.

- Craske MG, Rauch SL, Ursano R, Prenoveau J, Pine DS, Zinbarg RE. What is an anxiety disorder? Depress Anxiety. 2009;26:1066–1085. doi: 10.1002/da.20633.

- Croxson PL, Walton ME, O’Reilly JX, Behrens TEJ, Rushworth MFS. Effort-based cost-benefit valuation and the human brain. J Neurosci. 2009;29:4531–4541. doi: 10.1523/JNEUROSCI.4515-08.2009.

- Cui G, Jun SB, Jin X, Pham MD, Vogel SS, Lovinger DM, Costa RM. Concurrent activation of striatal direct and indirect pathways during action initiation. Nature. 2013. doi: 10.1038/nature11846. In press.

- Curtis CE, Lee D. Beyond working memory: the role of persistent activity in decision making. Trends Cogn Sci. 2010;14:216–222. doi: 10.1016/j.tics.2010.03.006.

- Dagher A, Robbins TW. Personality, addiction, dopamine: insights from Parkinson’s disease. Neuron. 2009;61:502–510. doi: 10.1016/j.neuron.2009.01.031.

- Dalley JW, Everitt BJ, Robbins TW. Impulsivity, compulsivity, and top-down cognitive control. Neuron. 2011;69:680–694. doi: 10.1016/j.neuron.2011.01.020.

- Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-based influences on humans’ choices and striatal prediction errors. Neuron. 2011;69:1204–1215. doi: 10.1016/j.neuron.2011.02.027.

- Daw ND, Kakade S, Dayan P. Opponent interactions between serotonin and dopamine. Neural Netw. 2002;15:603–616. doi: 10.1016/s0893-6080(02)00052-7.

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- Dayan P, Huys QJM. Serotonin, inhibition, and negative mood. PLoS Comput Biol. 2008;4:e4. doi: 10.1371/journal.pcbi.0040004.

- Dayan P, Huys QJM. Serotonin in affective control. Annu Rev Neurosci. 2009;32:95–126. doi: 10.1146/annurev.neuro.051508.135607.

- Dayan P, Niv Y, Seymour B, Daw ND. The misbehavior of value and the discipline of the will. Neural Netw. 2006;19:1153–1160. doi: 10.1016/j.neunet.2006.03.002.

- Delgado MR, Dickerson KC. Reward-related learning via multiple memory systems. Biol Psychiatry. 2012;72:134–141. doi: 10.1016/j.biopsych.2012.01.023.

- DeLong MR. Primate models of movement disorders of basal ganglia origin. Trends Neurosci. 1990;13:281–285. doi: 10.1016/0166-2236(90)90110-v.

- De Martino B, Camerer CF, Adolphs R. Amygdala damage eliminates monetary loss aversion. Proc Natl Acad Sci USA. 2010;107:3788–3792. doi: 10.1073/pnas.0910230107.

- De Martino B, Kumaran D, Seymour B, Dolan RJ. Frames, biases, and rational decision-making in the human brain. Science. 2006;313:684–687. doi: 10.1126/science.1128356.

- Demurie E, Roeyers H, Baeyens D, Sonuga-Barke E. Temporal discounting of monetary rewards in children and adolescents with ADHD and autism spectrum disorders. Dev Sci. 2012;15:791–800. doi: 10.1111/j.1467-7687.2012.01178.x.

- de Wit H. Impulsivity as a determinant and consequence of drug use: a review of underlying processes. Addict Biol. 2009;14:22–31. doi: 10.1111/j.1369-1600.2008.00129.x.

- Dickinson A. Actions and habits: the development of behavioural autonomy. Philos Trans R Soc Lond B Biol Sci. 1985;308:67–78.

- Dinstein I, Pierce K, Eyler L, Solso S, Malach R, Behrmann M, Courchesne E. Disrupted neural synchronization in toddlers with autism. Neuron. 2011;70:1218–1225. doi: 10.1016/j.neuron.2011.04.018.

- Dodd ML, Klos KJ, Bower JH, Geda YE, Josephs KA, Ahlskog JE. Pathological gambling caused by drugs used to treat Parkinson’s disease. Arch Neurol. 2005;62:1377–1381. doi: 10.1001/archneur.62.9.noc50009.

- Doll BB, Simon DA, Daw ND. The ubiquity of model-based reinforcement learning. Curr Opin Neurobiol. 2012;22:1075–1081. doi: 10.1016/j.conb.2012.08.003.

- Dorris MC, Glimcher PW. Activity in posterior parietal cortex is correlated with the relative subjective desirability of action. Neuron. 2004;44:365–378. doi: 10.1016/j.neuron.2004.09.009.

- Doya K. Metalearning and neuromodulation. Neural Netw. 2002;15:495–506. doi: 10.1016/s0893-6080(02)00044-8.

- Driver-Dunckley E, Samanta J, Stacy M. Pathological gambling associated with dopamine agonist therapy in Parkinson’s disease. Neurology. 2003;61:422–423. doi: 10.1212/01.wnl.0000076478.45005.ec.

- Eshel N, Roiser JP. Reward and punishment processing in depression. Biol Psychiatry. 2010;68:118–124. doi: 10.1016/j.biopsych.2010.01.027.

- Everitt BJ, Dickinson A, Robbins TW. The neuropsychological basis of addictive behaviour. Brain Res Rev. 2001;36:129–138. doi: 10.1016/s0165-0173(01)00088-1.

- Fair DA, Cohen AL, Dosenbach NUF, Church JA, Miezin FM, Barch DM, Raichle ME, Petersen SE, Schlaggar BL. The maturing architecture of the brain’s default network. Proc Natl Acad Sci USA. 2008;105:4028–4032. doi: 10.1073/pnas.0800376105.

- Farah MJ. Neuroethics: the practical and the philosophical. Trends Cogn Sci. 2005;9:34–40. doi: 10.1016/j.tics.2004.12.001.

- Fellows LK, Farah MJ. Different underlying impairments in decision-making following ventromedial and dorsolateral frontal lobe damage in humans. Cereb Cortex. 2005;15:58–63. doi: 10.1093/cercor/bhh108.

- Frank MJ, Seeberger LC, O’Reilly RC. By carrot or by stick: cognitive reinforcement learning in Parkinsonism. Science. 2004;306:1940–1943. doi: 10.1126/science.1102941.

- Frith U. Mind blindness and the brain in autism. Neuron. 2001;32:969–979. doi: 10.1016/s0896-6273(01)00552-9.

- Gallagher HL, Frith CD. Functional imaging of ‘theory of mind’. Trends Cogn Sci. 2003;7:77–83. doi: 10.1016/s1364-6613(02)00025-6.

- Gamo NJ, Wang M, Arnsten AFT. Methylphenidate and atomoxetine enhance prefrontal function through α2-adrenergic and dopamine D1 receptors. J Am Acad Child Adolesc Psychiatry. 2010;49:1011–1023. doi: 10.1016/j.jaac.2010.06.015.

- Geschwind DH, Levitt P. Autism spectrum disorders: developmental disconnection syndromes. Curr Opin Neurobiol. 2007;17:103–111. doi: 10.1016/j.conb.2007.01.009.

- Glantz LA, Lewis DA. Decreased dendritic spine density on prefrontal cortical pyramidal neurons in schizophrenia. Arch Gen Psychiatry. 2000;57:65–73. doi: 10.1001/archpsyc.57.1.65.

- Gläscher J, Daw N, Dayan P, O’Doherty JP. States versus rewards: dissociable neural prediction error signals underlying model-based and model-free reinforcement learning. Neuron. 2010;66:585–595. doi: 10.1016/j.neuron.2010.04.016.

- Glimcher PW, Camerer CF, Fehr E, Poldrack RA. Neuroeconomics: Decision Making and the Brain. London: Academic Press; 2009.