Abstract

A recent approach in social neuroscience has been the application of formal computational models of particular social-cognitive processes to neuroimaging data. Here we review preliminary findings from this nascent sub-field, focusing on observational learning and strategic interactions. We present evidence consistent with the existence of three distinct learning systems that may contribute to social cognition: an observational reward-learning system involved in updating expectations of future reward based on observing rewards obtained by others, an action-observation learning system involved in learning about the action tendencies of others, and a third system engaged when it is necessary to learn about the hidden mental states or traits of another. These three systems appear to map onto distinct neuroanatomical substrates and to depend on distinct computational signals.

Introduction

Since the emergence of functional neuroimaging, a considerable body of literature has implicated a set of brain regions in the processes underlying human social cognition, including the posterior superior temporal sulcus (pSTS) and adjacent temporoparietal junction (TPJ), the amygdala, temporal poles, and medial prefrontal cortex (mPFC) [1–4].

The past decade has also seen the application within cognitive and systems neuroscience of a method that has come to be known as computational or model-based neuroimaging [5]. This approach involves correlating the variables generated by a formal computational model of a particular cognitive process against the neuroimaging and behavioral data of participants performing a related task. The technique offers the prospect not only of identifying the brain regions that are activated during a given task, as in traditional approaches, but also of gaining insight into “how” a particular cognitive operation is implemented in a given brain area in terms of the underlying computational processes.
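As a minimal sketch of the model-based approach, assuming a single parametric regressor, synthetic data, and a simplified single-gamma haemodynamic response function (HRF) in place of the canonical double-gamma HRF used by standard fMRI packages: a trial-by-trial model variable is placed at each trial onset, convolved with the HRF, and regressed against a voxel's BOLD time series. All function names and parameter values are illustrative.

```python
import math
import random


def gamma_hrf(t: float) -> float:
    """Simplified single-gamma HRF peaking near 5 s (illustrative only)."""
    if t < 0:
        return 0.0
    return t ** 5 * math.exp(-t) / math.factorial(5)


def parametric_regressor(onsets, values, n_scans, tr=2.0):
    """Place each trial's model variable at its onset and convolve with the HRF,
    sampling the result once per scan."""
    regressor = [0.0] * n_scans
    for onset, value in zip(onsets, values):
        for i in range(n_scans):
            regressor[i] += value * gamma_hrf(i * tr - onset)
    return regressor


def ols_slope(x, y):
    """Least-squares slope of y on x with an intercept (a one-regressor GLM)."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


# Synthetic example: 30 trials, one every 12 s, with a random stand-in
# for a model-derived variable such as a prediction error.
rng = random.Random(0)
n_scans, tr = 200, 2.0
onsets = [10.0 + 12.0 * k for k in range(30)]
model_variable = [rng.uniform(-1.0, 1.0) for _ in onsets]
x = parametric_regressor(onsets, model_variable, n_scans, tr)
bold = [2.0 * xi + rng.gauss(0.0, 0.02) for xi in x]  # true effect size = 2
beta = ols_slope(x, bold)
print(f"estimated effect of model variable: {beta:.2f}")
```

Real analyses add nuisance regressors, temporal filtering and noise-autocorrelation modelling; the point here is only that the regressor's shape comes from the model's trial-by-trial output rather than from the task timing alone.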

The computational approach to neuroimaging was brought to bear initially on the domains of value-based learning and decision-making in non-social situations, as a means of gaining insight into the computations performed by different brain regions in learning to predict rewarding and punishing outcomes and in using those predictions to guide action selection [6–11]. Some of the key findings from this work include the determination that BOLD responses in the human striatum resemble a reward prediction error signal that can be used to incrementally update predictions of future reward [6,12,13], while activity in ventromedial prefrontal cortex (vmPFC) correlates with expectations of future reward for actions or options that are chosen on a particular trial [14–16].
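The incremental update referred to above can be illustrated with a Rescorla-Wagner (delta-rule) learner, the single-step special case of the temporal-difference models used in several of these studies. This is a minimal sketch; the function name and learning rate `alpha` are illustrative. The per-trial expectation (`values`) and prediction error (`errors`) are the kinds of trial-by-trial signals that model-based analyses relate to vmPFC and striatal activity, respectively.

```python
def rescorla_wagner(rewards, alpha=0.2, v0=0.0):
    """Return the expectation held before each trial and the prediction error on it."""
    v = v0
    values, errors = [], []
    for r in rewards:
        values.append(v)       # expectation before the outcome is seen
        delta = r - v          # reward prediction error
        errors.append(delta)
        v += alpha * delta     # incremental update towards the obtained reward
    return values, errors


values, errors = rescorla_wagner([1, 1, 1, 0], alpha=0.5)
print(values)  # [0.0, 0.5, 0.75, 0.875]
print(errors)  # [1.0, 0.5, 0.25, -0.875]
```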

More recently, tentative steps have been taken to extend this approach into the social domain [17,18]. The main objective in doing so has been to gain insight into the nature of the computations being implemented in the brain during social cognition, and in particular to put computational flesh on the bones of the psychological functions previously attributed to different brain regions within the social cognition network. In this review, we highlight two main research questions that have been pursued to date: the neural computations underpinning observational learning, and the neural computations underpinning the ability to make predictions about the intentions of others.

Observational learning

In experiential learning, an agent learns about the world through direct experience with stimuli and/or by taking actions in that environment and reaping the consequences. In observational learning, by contrast, an agent learns not through direct experience but by observing the stimuli and consequences experienced by another agent, as well as the actions that agent performs in the environment. Observational learning can clearly be advantageous, as it allows an agent to ascertain whether particular stimuli or actions lead to rewarding or punishing consequences without expending resources foraging or putting itself at risk of punishment.

Preliminary findings suggest that similar computational mechanisms may underpin both experiential and observational learning. More specifically, studies have reported neural correlates of reward prediction error signals in striatum [19–21] and vmPFC [19] while subjects learn by observing confederates receive monetary feedback in probabilistic reward learning tasks. Importantly, these signals reflect the deviation of a reward from the amount expected given previous play, despite the fact that the subject merely observes the receipt of this reward by the confederate.
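A minimal sketch of observational reward learning, assuming the observer applies the same delta rule as in experiential learning but indexes it by the confederate's choice and drives it with the confederate's outcome. The task structure, reward probabilities and parameters below are hypothetical.

```python
import random


def observational_learning(observations, n_options=2, alpha=0.1):
    """observations: sequence of (confederate_choice, confederate_reward) pairs.
    Returns the observer's values and the observational prediction errors."""
    q = [0.0] * n_options
    errors = []
    for choice, reward in observations:
        delta = reward - q[choice]  # error computed on another agent's outcome
        q[choice] += alpha * delta  # observer's own value is updated vicariously
        errors.append(delta)
    return q, errors


# Hypothetical task: option 0 pays 1 with probability 0.8, option 1 with 0.2.
rng = random.Random(1)
p_reward = [0.8, 0.2]
observations = []
for _ in range(500):
    choice = rng.randrange(2)  # the confederate samples both options
    observations.append((choice, 1.0 if rng.random() < p_reward[choice] else 0.0))
q, errors = observational_learning(observations, alpha=0.05)
print(q)  # values approach p_reward, learned purely by observation
```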

Although the outcomes obtained by another individual represent a valuable source of information for an observer, the mere choice of action made by an observee may also influence the vicarious acquisition of instrumental responses. Observed actions may be informative because they are often motivated by similar preferences over outcome states, and may therefore provide an additional source of information about the optimality of the available actions [19], or, relatedly, because reward may be directly contingent on successful mimicry of the actions of another agent with differing preferences over those states [21]. Action prediction error signals, representing deviations by an observed confederate from the actions the subject expected of them, may be used to update the subject's own behavior in computational models of learning. Such signals have been reported in dorsolateral prefrontal cortex (dlPFC) [19,21], dorsomedial prefrontal cortex (dmPFC) [21] and bilateral inferior parietal lobule [21], areas that exhibit significant interconnectivity (see [22,23]).

The involvement of dlPFC in such learning is consistent with electrophysiological and neuroimaging evidence that this area is involved in representing task-relevant contingencies and goals [24] and in manipulating task-relevant information in working memory [25,26]. Given that posterior parietal cortex has also been implicated in attention [27–29], one possibility is that this region contributes to learning through the allocation of attention engendered by surprising or unexpected actions, compatible with some computational accounts in which attention is suggested to modulate learning [30].
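A minimal sketch of an action prediction error, assuming the observer simply tracks the probability of each of the other agent's actions with a delta rule. The models used in [19,21] are richer than this; the function and parameter names here are hypothetical.

```python
def track_other_actions(other_choices, n_actions=2, eta=0.2):
    """Learn the probability that the other agent picks each action.
    Returns the final predictions and the action prediction error on each trial."""
    p = [1.0 / n_actions] * n_actions
    action_errors = []
    for a in other_choices:
        observed = [1.0 if k == a else 0.0 for k in range(n_actions)]
        ape = [o - pk for o, pk in zip(observed, p)]  # deviation from expected action
        p = [pk + eta * e for pk, e in zip(p, ape)]  # errors sum to 0, so p still sums to 1
        action_errors.append(ape[a])                 # surprise about the chosen action
    return p, action_errors


p, action_errors = track_other_actions([0, 0, 1], eta=0.5)
print(p)              # [0.4375, 0.5625]
print(action_errors)  # [0.5, 0.25, 0.875]
```

How the predicted probabilities `p` are then combined with the observer's own reward-based values is a modelling choice that this sketch leaves open.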

Strategic Learning

The studies mentioned thus far have examined how information acquired about the experiences and actions of other individuals can be incorporated into representations used to guide one’s own behavior. However, as social animals we are often engaged in situations where we need to interact with other individuals in order to attain our goals, whether it is by co-operating or by competing with them. In order to succeed in such situations it is often necessary to be able to understand their intentions, and to use this knowledge to guide action selection. At the core of this capacity is the psychological construct known as mentalizing, in which representations are formed about the hidden mental states and intentions of another.

Such mentalizing processes can vary in their complexity. Behrens et al. [10] examined a situation in which, in contrast to the studies of Burke et al. [19] and Suzuki et al. [21], information from a confederate about the optimal action varied in its reliability, because the confederate's interests sometimes lay in deceiving the subject. To perform well in this type of task, it is prudent to maintain an estimate of the confederate's fidelity and to use it to modulate the influence of their advice. Neural activity corresponding to an update signal for such an estimate was found in anterior medial prefrontal cortex, as well as in a region of the temporoparietal junction.
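One simple way to maintain such an estimate, sketched here as an assumption rather than as Behrens et al.'s model (they used a richer Bayesian learner that also tracked how quickly reliability was changing), is a Beta-Bernoulli estimate of how often the advice turns out correct, with optional forgetting so that the estimate can follow a confederate whose fidelity changes. The change in the estimate on each trial plays the role of the update signal. The rule for combining advice with the observer's own estimate is likewise a hypothetical choice; all names and parameters are illustrative.

```python
def track_fidelity(advice_correct, a0=1.0, b0=1.0, decay=1.0):
    """advice_correct: sequence of booleans (was the confederate's advice right?).
    decay < 1 discounts old evidence so the estimate can track a changing advisor.
    Returns the posterior-mean fidelity after each trial and the trial-wise update."""
    a, b = a0, b0
    estimates, updates = [], []
    for correct in advice_correct:
        before = a / (a + b)
        a, b = decay * a, decay * b  # forget old evidence (no-op when decay == 1)
        if correct:
            a += 1.0
        else:
            b += 1.0
        after = a / (a + b)
        estimates.append(after)
        updates.append(after - before)  # the fidelity "update signal"
    return estimates, updates


def combine_evidence(p_own, fidelity):
    """P(advised option is best), treating the observer's own estimate and the
    advice as independent evidence (an assumption) and multiplying their odds.
    Both arguments must lie strictly between 0 and 1."""
    odds = (p_own / (1.0 - p_own)) * (fidelity / (1.0 - fidelity))
    return odds / (1.0 + odds)


estimates, updates = track_fidelity([True, True, False])
print([round(e, 3) for e in estimates])  # [0.667, 0.75, 0.6]
print([round(u, 3) for u in updates])    # [0.167, 0.083, -0.15]
```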

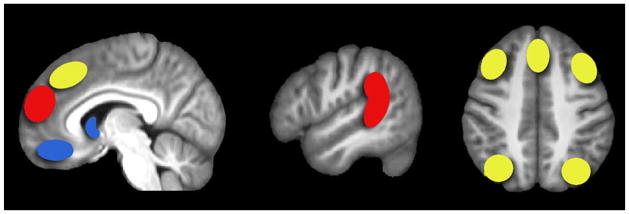

Hampton et al. [17] studied a paradigm in which two human subjects played a variant of the competitive economic game matching pennies. In this game, players choose between two actions on each round, with one player winning if the two chosen actions are the same, and the other if they are different. Such a game takes on interesting dynamics with repeated play, as players typically vie to predict their opponent's next choice of action while masking their own intentions, providing the potential for the engagement of complex mentalizing processes. To capture the learning processes underlying action selection in this context, Hampton et al. [17] modified an algorithm called “fictitious play”, drawn from the game-theoretic literature [31–33]. An agent using fictitious play iteratively updates the probability that their opponent will choose a particular action based on the opponent's previous choices. There is some evidence that, when engaged in competitive economic games against computer opponents, non-human primates may be capable of incorporating fictitious play into their action selection, as well as simpler strategies such as win-stay lose-switch and simple reinforcement learning [34–36]. However, even fictitious play ignores the danger that one's opponent is likely to be similarly adept at tracking one's own behavior. Hampton et al. [17] therefore extended the fictitious play algorithm to incorporate the effect that an opponent's predictions of one's own actions have on the opponent's action selection, endowing the agent with a “second-order” mental state representation [3,37]. Hampton et al. [17] found that activity in vmPFC encoding the expected value of chosen actions incorporated this second-order knowledge, while activity in posterior superior temporal sulcus and anterior dorsomedial frontal cortex correlated with a learning signal that could be used to update the second-order component of this representation.
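The contrast between first- and second-order prediction can be sketched for plain matching pennies. The first-order part is a fictitious-play-style belief update with a constant learning rate (classic fictitious play instead weights all past choices equally). The second-order part is a deliberately simplified, hypothetical stand-in for Hampton et al.'s model [17], not their equations: the player assumes the opponent tracks the player's choices in the same way and soft-best-responds to that belief, then mixes this prediction with the first-order one using a hypothetical weight `kappa`. Payoffs of ±1, the softmax parameter `beta` and all names are assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def predict_opponent(opp_choices, own_choices, eta=0.3, beta=3.0, kappa=0.5):
    """Predicted probability that the opponent plays heads (1) on the next round,
    from the matcher's point of view. Choices are coded 1 = heads, 0 = tails."""
    p = 0.5  # first-order belief: P(opponent plays heads)
    q = 0.5  # second-order belief: what the opponent thinks P(I play heads) is
    for opp, own in zip(opp_choices, own_choices):
        p += eta * (opp - p)  # fictitious-play-style update from opponent's choice
        q += eta * (own - q)  # assume the opponent tracks my choices the same way
    # The opponent (a mismatcher, payoff +1/-1) gains (1 - 2q) - (2q - 1) = 2 - 4q
    # more from heads than from tails; model its choice as a softmax of that gain.
    p_second = sigmoid(beta * (2.0 - 4.0 * q))
    return (1.0 - kappa) * p + kappa * p_second


def matcher_values(p_opp_heads):
    """Expected payoff (+1 for a match, -1 otherwise) of playing heads or tails."""
    return {"heads": 2.0 * p_opp_heads - 1.0, "tails": 1.0 - 2.0 * p_opp_heads}


# First-order only: the opponent has played heads twice, so predict heads.
print(predict_opponent([1, 1], [0, 0], eta=0.5, kappa=0.0))  # 0.875
# Second-order only: I have played heads repeatedly, so expect the opponent to switch to tails.
print(predict_opponent([1, 1, 1], [1, 1, 1], eta=0.5, kappa=1.0) < 0.5)  # True
```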

The types of learning and inference described above involve first- and second-order strategic reasoning, but in principle one could engage in increasing orders of iterative reasoning: thinking about you thinking about me thinking about you, and so on. Ideally, an agent should tailor the order of their own representation of their opponent's mental state to be a single order higher than the estimated order of their opponent's representation of them. To investigate the computations underlying the tracking of an opponent's sophistication, Yoshida et al. [38,39] employed Bayesian inference over predictive models of strategic behavior that incorporated the increasing cognitive effort required by increasing strategic sophistication. They found [39] that the time-varying, model-derived uncertainty of subjects' estimates of their opponent's sophistication in a strategic game was encoded in a region of anterior dmPFC, overlapping the region of dmPFC found in [10] and [17], further emphasizing the importance of this area to mentalizing-related computations.
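The core of such tracking, Bayesian updating of a belief about the opponent's level of sophistication, can be sketched as follows. This is a minimal sketch, assuming that each candidate level already supplies a probability for the action the opponent actually took; Yoshida et al. [38,39] derive those predictions from recursive models of the game, which are not reproduced here, and the likelihood values below are hypothetical. Posterior entropy is used as one common way to quantify uncertainty; it is not claimed to be the measure used in [39].

```python
import math


def update_level_belief(prior, likelihoods):
    """Posterior over opponent levels after one observed action.
    likelihoods[k] = probability that a level-k opponent takes the observed action."""
    unnormalized = [p * l for p, l in zip(prior, likelihoods)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def entropy(dist):
    """Shannon entropy (nats): high when the opponent's level is uncertain."""
    return -sum(p * math.log(p) for p in dist if p > 0)


# Hypothetical: levels 0, 1, 2 assign these probabilities to each observed action.
belief = [1 / 3, 1 / 3, 1 / 3]
uncertainty = [entropy(belief)]
for _ in range(5):
    belief = update_level_belief(belief, [0.5, 0.8, 0.3])
    uncertainty.append(entropy(belief))
print([round(b, 3) for b in belief])       # most mass on level 1
print([round(h, 3) for h in uncertainty])  # uncertainty falls over trials
```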

Three distinct learning systems in social cognition?

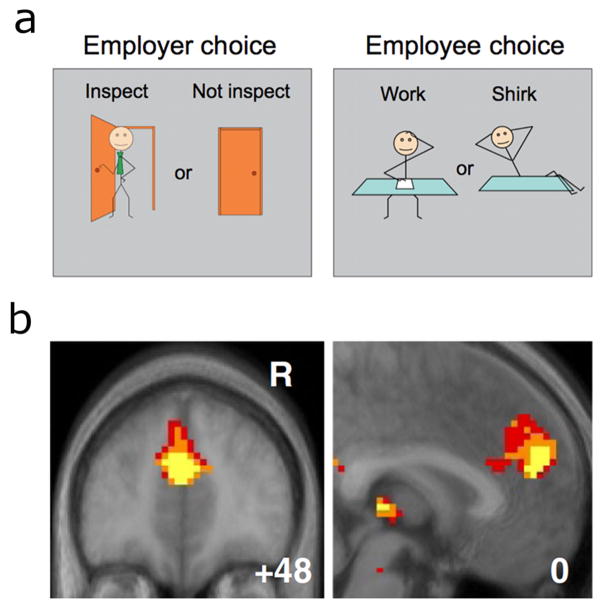

So far we have reviewed evidence that learning and inference signals are present in the brain both when acquiring information about the world by observing other agents and when engaging in strategic interactions with others. These studies suggest that three separable neural systems support conceptually distinct classes of social learning (Figure 1). The first, an observational reward-learning system consisting of striatum and vmPFC, encodes reward prediction error signals based on observed reinforcer delivery to others [19–21]. These signals may be used to directly update personal values for choice options, allowing a learner to acquire experience vicariously. The second, an action-observation learning system comprising dlPFC, posterior dmPFC and inferior parietal cortex, is implicated in forming predictions about the actions of others [19,21]. Such predictions may be integrated deductively into the values of one's own actions in addition to, or in the absence of, observed contingent reinforcement. The initial findings presented here suggest that this action-learning system is dissociable from a third system that underlies the updating of representations of the traits and/or hidden mental states of others. Learning signals consistent with these computations engage regions previously identified through non-computational neuroimaging approaches as being involved in social inferential processes such as mentalizing and theory of mind [2,3]: specifically, the anterior dorsomedial frontal cortex [10,17,39], posterior STS [17] and TPJ [10].

Figure 1.

Illustration of three distinct social learning systems. The observation of reinforcing feedback delivered to others elicits reward prediction error signaling in the striatum and vmPFC (blue). Action observation prediction errors, representing deviations by others from predicted actions, have been reported in posterior dmPFC, bilateral dlPFC, and bilateral inferior parietal lobule (yellow). In contrast, anterior dmPFC and TPJ/posterior STS (red) are implicated in the updating of hidden mental state representations during strategic interactions, as opposed to observable action prediction representations.

Model-free and model-based reinforcement learning and their relation to social learning

One intriguing possibility is that the distinction drawn here between computational systems in social learning relates to a dichotomy previously made in the experiential learning literature between model-based and model-free reinforcement learning [28]. Model-free learning involves directly updating the values of candidate actions through the reinforcing effect of subsequent feedback, without reference to a rich understanding of the underlying decision problem, and is suggested to capture the type of stimulus-response learning mechanism found in habitual control [40]. In contrast, model-based learning involves encoding a rich model of the decision problem, in particular knowledge of states, actions and outcomes and of the transition probabilities between successive states. This latter mechanism is proposed to account for goal-directed control in animals, including humans [40].
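The difference between the two schemes shows up most clearly under outcome devaluation. A minimal sketch under hypothetical assumptions (a one-step task with two actions and two outcome states, the transition and reward values below, and a model-based learner that already knows the task model): model-free values are cached from reward prediction errors, whereas model-based values are recomputed from the task model, so only the latter change immediately when an outcome loses its value.

```python
import random

# Hypothetical task: each action usually leads to "its" outcome state.
T = [[0.9, 0.1],  # P(outcome state | action 0)
     [0.1, 0.9]]  # P(outcome state | action 1)
R = [1.0, 0.5]    # current value of each outcome state


def model_based_values(T, R):
    """Goal-directed values: expected outcome value computed from the task model."""
    return [sum(p * r for p, r in zip(row, R)) for row in T]


def train_model_free(T, R, n_trials=2000, alpha=0.1, seed=0):
    """Habit-like cached values learned only from reward prediction errors."""
    rng = random.Random(seed)
    q = [0.0, 0.0]
    for _ in range(n_trials):
        a = rng.randrange(2)
        s = 0 if rng.random() < T[a][0] else 1
        q[a] += alpha * (R[s] - q[a])  # no record of *which* outcome occurred
    return q


q_mf = train_model_free(T, R)
R_devalued = [0.0, 0.5]  # outcome state 0 is devalued after training
print(model_based_values(T, R))           # approx [0.95, 0.55]: prefers action 0
print(model_based_values(T, R_devalued))  # approx [0.05, 0.45]: switches to action 1
print(q_mf)  # still favours action 0 until the devalued outcome is re-experienced
```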

Consistent with the possibility that such a dichotomy also applies to observational learning, Liljeholm et al. [41], using a non-computational outcome-devaluation approach, provided evidence for analogous goal-directed and habitual learned representations in observational learning. Model-free learning is argued to depend on the type of prediction error signals found in the striatum during reward-learning tasks [7,16,42,43], and, as noted above, similar areas appear to be implicated in reward prediction error signaling in observational reward-learning paradigms [19–21]. Similarly, the action-observation prediction error system highlighted above [19,21] bears a striking resemblance, both in its neuroanatomical substrates and in the nature of the computational learning signals involved, to the “state prediction error” [43], a key computational signal responsible for updating the model in model-based reinforcement learning [43,44]. The role of dlPFC in model-based learning has also been stressed by Abe and Lee [34], who recorded from neurons in rhesus monkeys while the animals played computer-simulated competitive games. They report that the monkeys' choices were best explained by a reinforcement learning algorithm that incorporated both the outcomes of the monkeys' actions and the hypothetical outcomes of actions not taken. This model-based learning was suggested [34,45] to be subserved by dlPFC, whose neurons encoded not only the outcomes of the monkey's actions but also the hypothetical outcomes of actions not taken. Thus, on the basis of the preliminary evidence, at least two of the systems found in social learning appear to map onto the distinction between model-based and model-free reinforcement learning drawn in the experiential learning literature.
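Two of the update rules mentioned above can be written compactly. The first is a state prediction error of the form used by Gläscher et al. [43]: on reaching state s' after action a in state s, the error is 1 − T(s, a, s'), the observed transition's probability is raised by η times this error, and the probabilities of all other successors are scaled by (1 − η), which keeps the row normalized. The second is a hypothetical stand-in for learning from both actual and counterfactual outcomes in the spirit of [34,45]: the chosen action is updated from its actual payoff and unchosen actions from the payoffs they would have earned, with separate, illustrative learning rates; it does not reproduce the specific model of Abe and Lee.

```python
def spe_update(t_row, next_state, eta=0.2):
    """Update one row T(s, a, .) of a learned transition model.
    Returns the new row and the state prediction error."""
    spe = 1.0 - t_row[next_state]               # surprise at the observed successor
    new_row = [p * (1.0 - eta) for p in t_row]  # shrink every successor ...
    new_row[next_state] = t_row[next_state] + eta * spe  # ... then boost the observed one
    return new_row, spe


def counterfactual_update(q, chosen, actual, hypothetical, alpha_actual=0.3, alpha_hyp=0.1):
    """Hypothetical hybrid rule: learn from the chosen action's payoff and from
    the payoffs unchosen actions would have produced (hypothetical: action -> payoff)."""
    q = list(q)
    q[chosen] += alpha_actual * (actual - q[chosen])
    for action, payoff in hypothetical.items():
        if action != chosen:
            q[action] += alpha_hyp * (payoff - q[action])
    return q


row, spe = spe_update([0.5, 0.5], next_state=0, eta=0.5)
print(row, spe)  # [0.75, 0.25] 0.5
print(counterfactual_update([0.0, 0.0, 0.0], chosen=0, actual=1.0,
                            hypothetical={1: 2.0, 2: 0.0},
                            alpha_actual=0.5, alpha_hyp=0.25))  # [0.5, 0.5, 0.0]
```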

If observational reward learning and action-observation learning depend on model-free and model-based RL, respectively, what about strategic learning? Strategic learning and inference could in principle be incorporated within a model-based framework, as a rich model of the world could include representations of other agents and their intentions. However, the update signals suggested to support such computations do not represent deviations from an observable contingency such as an overt action, as in Burke et al. [19] and Suzuki et al. [21], but from a hidden mental state. Furthermore, the neural representation of such signals in anterior dmPFC, posterior STS and TPJ appears to be neuroanatomically dissociable from the signals found in action-observation learning.

Uniquely social computations in strategic interactions?

Given that the other forms of social learning we have identified depend on circuits similar to those involved in experiential learning, an important open question is whether the neural circuits involved in strategic learning and inference are dedicated specifically to inferences in the social domain. Resolving this issue is challenging because strategic inference, by definition, does not apply in a non-social setting. One way to address it might be to have participants play against a computerized opponent designed to respond in a sophisticated manner. However, a computerized opponent, particularly a sophisticated one, might still be treated as a “social agent” by participants. Another avenue would be to identify conditions in which higher-order computations similar to those used to manipulate mental state representations are engaged entirely outside the domain of strategic interactions. One example would be decision tasks with a hidden structure that must be inferred before optimal decisions can be made, such as multi-dimensional set-shifting [46].

Conclusions and future directions

The computational neuroimaging approach to social neuroscience is still in its infancy. Yet it is clear that this method holds promise as a means of dissecting the computational processes underpinning learning and inference in social contexts. The conclusions drawn here about distinct neural substrates for different types of social-cognitive mechanisms are necessarily tentative, given the very sparse literature in this domain to date. So far, computational approaches have been deployed in learning and decision paradigms that do not stray far from the experiential reward-learning paradigms in which these techniques were first applied, such as observational reward learning or economic games. In future, it will be necessary to expand this approach into domains of social neuroscience far from these initial roots, such as social appraisal and judgment, social perception, empathy and altruistic behavior. If these forays are successful, the subfield of computational social neuroscience will truly come into its own.

Figure 2.

Strategic game used by Hampton et al. [17]. (a) In this task, one participant acted as an “employer” and the other as an “employee”. Each player could perform one of two actions: the employer could “inspect” or “not inspect” her employee, while the employee could “work” or “shirk”. The interests of the two players are divergent: the employee prefers to shirk, while the employer would prefer the employee to work, and the employer would rather not inspect her employee than inspect. Yet for the employee it is disadvantageous to be caught shirking if the employer inspects, and for the employer it is disadvantageous if the employee shirks while she does not inspect. (b) In such a context it is crucial to form an accurate prediction of an opponent's next action. This could involve simply tracking the probability with which the opponent chooses each of the two strategies available to them. However, if an opponent is tracking one's own actions in this way, then it is advantageous for a player to update their prediction of the opponent's action to incorporate the effect of the player's own previous action on the opponent. Hampton et al. found that the effect of this update at mPFC (−3, 51, 24 mm) was modulated by the model-predicted degree to which a subject behaved as if his actions influenced his opponent.

Highlights.

We review the contribution of computational neuroimaging to social cognitive neuroscience.

We review evidence that suggests the existence of at least three different learning systems in social cognition.

These are an observational reward-learning system, an action-observation learning system and a strategic inference and learning system.

Acknowledgments

This work was supported by the Neurobiology of Social Decision Making project of the NIMH Caltech Conte Center for Neuroscience.

References and recommended reading

Papers of particular interest have been highlighted as:

• of special interest

•• of outstanding interest

- 1. Ochsner KN, Lieberman MD. The emergence of social cognitive neuroscience. American Psychologist. 2001;56:717.

- 2. Saxe R. Why and how to study Theory of Mind with fMRI. Brain Res. 2006;1079:57–65. doi: 10.1016/j.brainres.2006.01.001.

- 3. Frith CD. The Social Brain? Philos Trans R Soc Lond B Biol Sci. 2007;362:671–678. doi: 10.1098/rstb.2006.2003.

- 4. Adolphs R. The Social Brain: Neural Basis of Social Knowledge. Annu Rev Psychol. 2009;60:693–716. doi: 10.1146/annurev.psych.60.110707.163514.

- 5. O’Doherty JP, Hampton A, Kim H. Model-Based fMRI and Its Application to Reward Learning and Decision Making. Annals of the New York Academy of Sciences. 2007;1104:35–53. doi: 10.1196/annals.1390.022.

- 6. O’Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal Difference Models and Reward-Related Learning in the Human Brain. Neuron. 2003;38:329. doi: 10.1016/s0896-6273(03)00169-7.

- 7. O’Doherty J, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- 8. Seymour B, O’Doherty JP, Dayan P, Koltzenburg M, Jones AK, Dolan RJ, Friston KJ, Frackowiak RS. Temporal difference models describe higher-order learning in humans. Nature. 2004;429:664–667. doi: 10.1038/nature02581.

- 9. Seymour B, O’Doherty JP, Koltzenburg M, Wiech K, Frackowiak R, Friston K, Dolan R. Opponent appetitive-aversive neural processes underlie predictive learning of pain relief. Nature neuroscience. 2005;8:1234–1240. doi: 10.1038/nn1527.

- 10. Behrens TEJ, Hunt LT, Woolrich MW, Rushworth MFS. Associative learning of social value. Nature. 2008;456:245–249. doi: 10.1038/nature07538.

- 11. Preuschoff K, Quartz SR, Bossaerts P. Human insula activation reflects risk prediction errors as well as risk. The Journal of neuroscience. 2008;28:2745–2752. doi: 10.1523/JNEUROSCI.4286-07.2008.

- 12. McClure S, Daw N, Montague R. A computational substrate for incentive salience. Trends in Neurosciences. 2003;26. doi: 10.1016/s0166-2236(03)00177-2.

- 13. O’Doherty J, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable Roles of Ventral and Dorsal Striatum in Instrumental Conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- 14. Daw ND, O’Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

- 15. • Hampton AN, Bossaerts P, O’Doherty JP. The Role of the Ventromedial Prefrontal Cortex in Abstract State-Based Inference during Decision Making in Humans. J Neurosci. 2006;26:8360–8367. doi: 10.1523/JNEUROSCI.1010-06.2006. Investigates the computations supporting the construction of complex mental state representations in a strategic context. Represents an early demonstration that the vmPFC value signal is sensitive to such model-based information.

- 16. Kim H, Shimojo S, O’Doherty JP. Is Avoiding an Aversive Outcome Rewarding? Neural Substrates of Avoidance Learning in the Human Brain. PLoS Biol. 2006;4:e233. doi: 10.1371/journal.pbio.0040233.

- 17. Hampton AN, Bossaerts P, O’Doherty JP. Neural correlates of mentalizing-related computations during strategic interactions in humans. PNAS. 2008;105:6741–6746. doi: 10.1073/pnas.0711099105.

- 18. Behrens TEJ, Hunt LT, Rushworth MFS. The Computation of Social Behavior. Science. 2009;324:1160–1164. doi: 10.1126/science.1169694.

- 19. • Burke CJ, Tobler PN, Baddeley M, Schultz W. Neural mechanisms of observational learning. PNAS. 2010;107:14431–14436. doi: 10.1073/pnas.1003111107. The first neuroimaging study to apply the technique of computational neuroimaging to learning from observed reinforcer delivery to others.

- 20. Cooper JC, Dunne S, Furey T, O’Doherty JP. Human Dorsal Striatum Encodes Prediction Errors during Observational Learning of Instrumental Actions. Journal of Cognitive Neuroscience. 2011;24:106–118. doi: 10.1162/jocn_a_00114.

- 21. • Suzuki S, Harasawa N, Ueno K, Gardner JL, Ichinohe N, Haruno M, Cheng K, Nakahara H. Learning to Simulate Others’ Decisions. Neuron. 2012;74:1125–1137. doi: 10.1016/j.neuron.2012.04.030. A careful examination of the neural mechanisms underlying learning from both vicarious reinforcer feedback and the observed actions of others.

- 22. Wood JN, Grafman J. Human prefrontal cortex: processing and representational perspectives. Nature Reviews Neuroscience. 2003;4:139–147. doi: 10.1038/nrn1033.

- 23. Amodio DM, Frith CD. Meeting of minds: the medial frontal cortex and social cognition. Nature Reviews Neuroscience. 2006;7:268–277. doi: 10.1038/nrn1884.

- 24. Miller EK. The prefrontal cortex and cognitive control. Nature reviews neuroscience. 2000;1:59–66. doi: 10.1038/35036228.

- 25. Petrides M. Dissociable Roles of Mid-Dorsolateral Prefrontal and Anterior Inferotemporal Cortex in Visual Working Memory. J Neurosci. 2000;20:7496–7503. doi: 10.1523/JNEUROSCI.20-19-07496.2000.

- 26. Owen AM, Evans AC, Petrides M. Evidence for a two-stage model of spatial working memory processing within the lateral frontal cortex: a positron emission tomography study. Cereb Cortex. 1996;6:31–38. doi: 10.1093/cercor/6.1.31.

- 27. Bisley JW, Mirpour K, Arcizet F, Ong WS. The role of the lateral intraparietal area in orienting attention and its implications for visual search. European Journal of Neuroscience. 2011;33:1982–1990. doi: 10.1111/j.1460-9568.2011.07700.x.

- 28. Culham JC, Kanwisher NG. Neuroimaging of cognitive functions in human parietal cortex. Current opinion in neurobiology. 2001;11:157–163. doi: 10.1016/s0959-4388(00)00191-4.

- 29. Grefkes C, Fink GR. REVIEW: The functional organization of the intraparietal sulcus in humans and monkeys. Journal of Anatomy. 2005;207:3–17. doi: 10.1111/j.1469-7580.2005.00426.x.

- 30. Pearce JM, Hall G. A model for Pavlovian learning: Variations in the effectiveness of conditioned but not of unconditioned stimuli. Psychol Rev. 1980;87:532–552.

- 31. Fudenberg D, Levine DK. The Theory of Learning in Games. MIT Press; 1998.

- 32. Camerer C, Ho TH. Experience-weighted Attraction Learning in Normal Form Games. Econometrica. 1999;67:827–874.

- 33. Montague PR, King-Casas B, Cohen JD. Imaging valuation models in human choice. Annu Rev Neurosci. 2006;29:417–448. doi: 10.1146/annurev.neuro.29.051605.112903.

- 34. Abe H, Lee D. Distributed coding of actual and hypothetical outcomes in the orbital and dorsolateral prefrontal cortex. Neuron. 2011;70:731–741. doi: 10.1016/j.neuron.2011.03.026.

- 35. Seo H, Barraclough DJ, Lee D. Lateral Intraparietal Cortex and Reinforcement Learning during a Mixed-Strategy Game. J Neurosci. 2009;29:7278–7289. doi: 10.1523/JNEUROSCI.1479-09.2009.

- 36. Seo H, Lee D. Neural basis of learning and preference during social decision-making. Current Opinion in Neurobiology. 2012;22:990–995. doi: 10.1016/j.conb.2012.05.010.

- 37. Saxe R. Uniquely human social cognition. Current Opinion in Neurobiology. 2006;16:235–239. doi: 10.1016/j.conb.2006.03.001.

- 38. Yoshida W, Dolan RJ, Friston KJ. Game Theory of Mind. PLoS Comput Biol. 2008;4:e1000254. doi: 10.1371/journal.pcbi.1000254.

- 39. •• Yoshida W, Seymour B, Friston KJ, Dolan RJ. Neural Mechanisms of Belief Inference during Cooperative Games. J Neurosci. 2010;30:10744–10751. doi: 10.1523/JNEUROSCI.5895-09.2010. This paper examines the neural substrates of a Bayesian account of the complex cognitive process by which the sophistication of the mental state representation held by an opponent in a strategic context may be estimated. Activity in anterior dmPFC encoded the model-derived uncertainty of this estimate, while left dlPFC, left superior parietal lobule and bilateral frontal eye fields reflected the sophistication of the subject’s own strategy.

- 40. Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nature neuroscience. 2005;8:1704–1711. doi: 10.1038/nn1560.

- 41. Liljeholm M, Molloy CJ, O’Doherty JP. Dissociable Brain Systems Mediate Vicarious Learning of Stimulus–Response and Action–Outcome Contingencies. J Neurosci. 2012;32:9878–9886. doi: 10.1523/JNEUROSCI.0548-12.2012.

- 42. McClure SM, Berns GS, Montague PR. Temporal prediction errors in a passive learning task activate human striatum. Neuron. 2003;38:339–346. doi: 10.1016/s0896-6273(03)00154-5.

- 43. Glascher J, Daw N, Dayan P, O’Doherty JP. States versus Rewards: Dissociable Neural Prediction Error Signals Underlying Model-Based and Model-Free Reinforcement Learning. Neuron. 2010;66:585–595. doi: 10.1016/j.neuron.2010.04.016.

- 44. Fletcher P, Anderson J, Shanks D, Honey R, Carpenter T, Donovan T, Papadakis N, Bullmore E. Responses of human frontal cortex to surprising events are predicted by formal associative learning theory. Nature Neuroscience. 2001;4:1043–1048. doi: 10.1038/nn733.

- 45. Abe H, Seo H, Lee D. The prefrontal cortex and hybrid learning during iterative competitive games. Annals of the New York Academy of Sciences. 2011;1239:100–108. doi: 10.1111/j.1749-6632.2011.06223.x.

- 46. Wunderlich K, Beierholm UR, Bossaerts P, O’Doherty JP. The human prefrontal cortex mediates integration of potential causes behind observed outcomes. Journal of Neurophysiology. 2011;106:1558–1569. doi: 10.1152/jn.01051.2010.