Abstract

How are the meanings of concepts represented and processed? We present a cognitive model of conceptual representations and processing – the Conceptual Structure Account (CSA; Tyler & Moss, 2001) – as an example of a distributed, feature-based approach. In the first section, we describe the CSA and evaluate relevant neuropsychological and experimental behavioral data. We discuss studies using linguistic and non-linguistic stimuli, both of which are presumed to access the same conceptual system. We then take the CSA as a framework for hypothesising how conceptual knowledge is represented and processed in the brain. This neuro-cognitive approach attempts to integrate the distributed feature-based characteristics of the CSA with a distributed and feature-based model of sensory object processing. Based on a review of relevant functional imaging and neuropsychological data, we argue that distributed accounts of feature-based representations have considerable explanatory power, and that a cognitive model of conceptual representations is needed to understand their neural bases.

Prelude

How do we represent and process the meaning of familiar concepts? This question has been at the heart of decades of cognitive psychological research on semantics, or conceptual representations, and has resulted in a variety of responses (e.g., Hart & Kraut, 2007; Smith & Medin, 1981). Here, we will use the terms “semantics” and “conceptual” interchangeably to reflect our view that the meaning system subserves both linguistic and non-linguistic meaning. Thus, our assumption is similar to that of Jackendoff, who considers “semantic structures” (i.e. verbally expressible meaning) to be a part of “conceptual structures” which instantiate meaning (Jackendoff, 1983; Jackendoff, 2002). We will consider cognitive models which assume that the meanings of familiar object concepts are componential in nature; i.e., that concepts are represented by smaller units of meaning (referred to as features, properties or attributes). Distributed models of conceptual representations typically assume that the individual feature nodes are represented in a connectionist system, and the processing of a concept corresponds to the co-activation of its feature nodes (e.g., Caramazza, Hillis, Rapp, & Romani, 1990; Masson, 1995; McRae, de Sa, & Seidenberg, 1997; Moss, Tyler, & Taylor, 2007; Tyler, Durrant-Peatfield, Levy, Voice, & Moss, 1996; Tyler & Moss, 2001; Tyler, Moss, Durrant-Peatfield, & Levy, 2000; Vigliocco, Vinson, Lewis, & Garrett, 2004).

Here we will focus on the distributed model we have developed – the Conceptual Structure Account (CSA; Greer et al., 2001; Moss, Tyler, & Devlin, 2002; Moss et al., 2007; Tyler & Moss, 2001; Tyler et al., 2000) – which shares many assumptions with other distributed models (Caramazza et al., 1990; Masson, 1995; McRae et al., 1997) but also has certain salient distinguishing characteristics. The CSA assumes that conceptual space is structured according to the statistical characteristics of concept features. These statistical characteristics provide concepts with an internal structure and determine how the concept will be processed in damaged and healthy systems. Thus, this type of approach claims that different categories (i.e. individual semantic categories at the superordinate level, such as tool and mammal) or domains (i.e. the broader grouping of living and nonliving things) of knowledge are not explicitly represented. Instead, features associated with different categories or domains of knowledge show consistent differences with respect to a number of critical statistical characteristics. These statistical differences, in turn, result in different patterns of preservation and loss in damaged conceptual systems and different patterns of activation in the healthy conceptual system.

Two aspects of conceptual structure show prominent effects in cognitive behavioral studies: feature distinctiveness (i.e. the extent to which a feature is shared by many concepts or is distinctive to a few concepts) and feature co-occurrence (i.e., the extent to which two features co-occur, commonly measured with correlational strength; see below). While other feature characteristics undoubtedly play a significant role in conceptual representation and processing (see Cree & McRae, 2003, and Moss et al., 2007, for overviews), we adopt the standard approach of attempting to identify the simplest possible model that explains the maximum amount of behavioral data. We claim that feature co-occurrence and distinctiveness interact to determine conceptual processing as a function of task demands, i.e. the information required to perform the task at hand (Moss et al., 2002; Moss et al., 2007; Randall, Moss, Rodd, Greer, & Tyler, 2004; Taylor, Moss, & Tyler, 2007; Taylor, Salamoura, Randall, Moss, & Tyler, 2008; Tyler & Moss, 2001).

Like Jackendoff, we take the view that conceptual structures lie at the interface between the linguistic and sensory-motor systems, providing meaning to linguistic, sensory and motor representations (Jackendoff, 1983). The consequence of this assumption is that conceptual structure can be investigated in experiments with either verbal or non-verbal (e.g. visual objects) stimuli. In our own research, we have adopted a two-pronged approach. In one series of linguistically-based experiments, we have used spoken or written word stimuli to determine whether the conceptual structure variables specified by the CSA have consequences for the activation of meaning from orthographic or phonological inputs. These experiments were conducted with brain-damaged patients with category-specific semantic impairments and with healthy individuals. A second series of studies investigated similar issues about conceptual processing in the context of concepts as visual objects. These studies allowed us to investigate how concepts are represented and processed in the brain, by integrating the CSA with a well-developed model of visual object processing in the ventral occipitotemporal stream (Murray & Bussey, 1999; Ungerleider & Mishkin, 1982). We consider a cognitive model to be an essential starting point for understanding the neural bases of conceptual representation and processing: it allows the detailed study of conceptual processes and the crystallisation of core operating principles, and thereby provides a framework for designing functional imaging experiments. Our ultimate goal, shared with many others, is to understand how multidimensional feature characteristics are instantiated in the neural system to underpin meaning.

In the first section of this review, we describe the CSA (Moss, Tyler, & Taylor, 2007; Taylor, Moss, & Tyler, 2007; Tyler & Moss, 2001) and examine normative data on feature statistics. We then go on to review the neuropsychological and experimental behavioral studies of the CSA using linguistic stimuli, followed by a description of studies using non-linguistic input. In the second section of this review, we take the CSA as a framework for hypothesising how conceptual knowledge is represented and processed in the brain. We propose a neuro-cognitive approach which integrates the CSA with a sensory object processing model. We first review non-human primate, then human, evidence for distributed, hierarchically organised sensory object processing. We then describe how the distributed feature-based characteristics of the CSA can be integrated with those of the distributed and feature-based model of hierarchical object processing, and review relevant imaging studies which have employed this approach. We end with a summary and directions for future research.

The Conceptual Structure Account (CSA)

The model

Distributed models of conceptual representations assume that concepts are represented in a connectionist system composed of units, or nodes, representing individual object features (e.g., <has eyes>, <has a nose>) and where conceptual processing corresponds to the co-activation of a concept’s features (Caramazza et al., 1990; Masson, 1995; McRae et al., 1997; Moss et al., 2007; Tyler et al., 1996; Tyler & Moss, 2001; Tyler et al., 2000; Vigliocco et al., 2004). Two statistical characteristics of features are assumed to structure conceptual space and determine how concepts are processed: distinctiveness and feature co-occurrence.

Features vary in the degree to which they are distinctive of a concept: while some features are shared by many concepts (e.g., <has eyes>), others are distinctive to one or just a few concepts (e.g. <has stripes>). While shared features are typically informative about object category or domain (e.g., if an object <has eyes> it is likely to be an animal, a living thing), they are not very useful for discriminating between category or domain members (e.g. it is not possible to differentiate between a lion and a tiger on the basis of <has legs>, <has eyes>). Thus, unique object identification additionally requires distinctive object features to differentiate the object from conceptually similar objects (e.g. knowing that an object <has eyes> and <has stripes> differentiates a tiger from a lion). Feature distinctiveness is related to the concept of cue validity, i.e. the conditional probability that a feature specifies a concept (Rosch & Mervis, 1975).

Feature distinctiveness is calculated from property norm data. In these experiments, participants list all the features they can think of that belong to the concept named by a target word. Distinctiveness is typically quantified as the inverse of the number of concepts in which a feature occurs, such that features vary along a continuum of distinctiveness ranging from 1 (occurring in one concept, e.g. <meows>) to values close to 0 when the feature occurs in many concepts (e.g. <has eyes>) (Cree & McRae, 2003; Devlin, Gonnerman, Andersen, & Seidenberg, 1998; McRae & Cree, 2002; McRae, Cree, Seidenberg, & McNorgan, 2005; Moss et al., 2007; Randall et al., 2004). The inverse transformation renders the data more normally distributed and probabilistic in nature, akin to the earlier concept of cue validity (for a discussion, see Mirman and Magnuson, 2009). While distributed models concentrate on graded differences in feature distinctiveness along the entire continuum from high to low distinctiveness, for factorial analyses features are sometimes classified as shared if they occur in three or more concepts, and as distinguishing (e.g. <meows>) if they occur in one or two concepts (McRae et al., 2005; Randall et al., 2004).
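As a minimal sketch of the distinctiveness computation just described, the Python below assumes that property norms have already been reduced to a mapping from each concept to the set of features retained for it. The concepts, feature names and the helper functions `concept_counts`, `distinctiveness` and `classify` are hypothetical toy material, not values or code from any published norm set. Distinctiveness is the inverse of the number of concepts listing a feature, and the factorial shared/distinguishing split uses the three-concept cut-off mentioned above.

```python
from collections import Counter

# Hypothetical toy norms: concept -> set of features retained for that concept.
NORMS = {
    "tiger": {"has_eyes", "has_legs", "has_tail", "has_stripes"},
    "lion": {"has_eyes", "has_legs", "has_tail", "has_mane"},
    "cat": {"has_eyes", "has_legs", "has_tail", "meows"},
    "knife": {"has_blade", "cuts", "has_handle"},
    "scissors": {"has_blade", "cuts", "has_handle"},
}


def concept_counts(norms):
    """Number of concepts in which each feature occurs."""
    return Counter(f for feats in norms.values() for f in feats)


def distinctiveness(norms):
    """Distinctiveness = 1 / (number of concepts listing the feature)."""
    return {f: 1.0 / n for f, n in concept_counts(norms).items()}


def classify(norms, shared_min=3):
    """Factorial split: shared if listed for >= shared_min concepts, else distinguishing."""
    return {
        f: "shared" if n >= shared_min else "distinguishing"
        for f, n in concept_counts(norms).items()
    }


if __name__ == "__main__":
    d = distinctiveness(NORMS)
    labels = classify(NORMS)
    for feature in sorted(d):
        print(f"{feature:12s} {d[feature]:.3f} {labels[feature]}")
```

In this toy set <has blade> occurs in two concepts and is therefore classed as distinguishing even though knife and scissors share it; distributed models rely on the graded values rather than on the binary labels.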

A second critical statistical property of features is the degree to which they co-occur, with features at one end of the continuum co-occurring very frequently with other features (e.g. <has eyes>, <has tail>) and features at the other end of the continuum co-occurring infrequently (e.g., <has legs>, <has stripes>) (Keil, 1986; McRae et al., 1997; Rosch, Mervis, Gray, Johnson, & Boyes-Braem, 1976; Tyler et al., 2000; Vinson, Vigliocco, Cappa, & Siri, 2003). Feature co-occurrence is estimated with Pearson's product moment correlation coefficient, which provides a measure of the correlational strength between features. The correlation coefficient is calculated from concept-feature matrices, where concepts are listed in rows and features in columns, and where each cell contains a production frequency value representing the number of participants in the property norm study who listed that feature for the given concept; very low production frequencies (e.g. ≤5 of 30 participants) are commonly discarded (Cree, McRae, & McNorgan, 1999; McRae & Cree, 2002; McRae, Cree, Seidenberg, & McNorgan, 2005; McRae, de Sa, & Seidenberg, 1997; Taylor, Moss, & Tyler, 2007; Taylor, Salamoura, Randall, Moss, & Tyler, 2008; Vinson, Vigliocco, Cappa, & Siri, 2003). For example, the feature columns <has eyes> and <has tail> are relatively highly correlated (Pearson's r = 0.23, n = 517, p < 0.001), since many participants listed both features for many concepts in the norm set (McRae et al., 2005; McRae et al., 1997). We define the correlational strength of a particular feature (e.g. <has eyes>) as the mean of all significant Pearson's product moment correlations between the target feature and every other feature in the concept (Randall et al., 2004; Taylor et al., 2008). We note that this measure of co-occurrence differs from feature "intercorrelational strength", which sums the significant feature correlations within a concept (McRae et al., 1997).
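The two definitions in the preceding paragraph can be written compactly as follows; the notation is ours and is introduced only for illustration. Let $x_{if}$ be the production frequency of feature $f$ for concept $i$ in a norm set of $N$ concepts, $\bar{x}_f$ the mean of column $f$, $F(c)$ the set of features listed for concept $c$, and $S_c(f)$ the features in $F(c)$, other than $f$, whose correlation with $f$ is significant. Then the correlation between feature columns $f$ and $g$, and the correlational strength of $f$ within concept $c$, are

$$
r_{fg} = \frac{\sum_{i=1}^{N} (x_{if} - \bar{x}_f)(x_{ig} - \bar{x}_g)}{\sqrt{\sum_{i=1}^{N} (x_{if} - \bar{x}_f)^2}\,\sqrt{\sum_{i=1}^{N} (x_{ig} - \bar{x}_g)^2}}
$$

$$
\mathrm{CS}_c(f) = \frac{1}{|S_c(f)|} \sum_{g \in S_c(f)} r_{fg}
$$

A summed measure would instead be $\sum_{g \in S_c(f)} r_{fg}$, which grows with $|S_c(f)|$ and hence with the number of features a concept has.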
Since concepts with a larger number of features are more likely to have more significantly correlated feature pairs, all else being equal (Taylor et al., 2008), and since the semantic effects associated with the number of features (e.g. Pexman, Holyk, & Monfils, 2003) may be independent of the strength with which these features are associated, the studies reported below use the mean correlational strength measure. Thus, the correlational strength measure reflects the strength with which a particular feature is associated with all other features in a concept. This has repercussions for processing in brain-damaged and normal conceptual systems. In the damaged system, strongly correlated features are assumed to benefit from their strong association, or link, and be more robust to the effects of brain damage (Moss et al., 2002; Tyler et al., 2000). In the healthy system, the processing of strongly correlated features is facilitated compared to weakly correlated features in on-line comprehension tasks (McRae et al., 1997; Randall et al., 2004).
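A minimal Python sketch of the mean correlational strength measure follows, under several stated assumptions. The concept × feature matrix of production frequencies (out of 30) is hypothetical and assumed to be already filtered. Significance is approximated with a two-tailed Fisher z test at α = .05 rather than the exact test used in the published analyses. A feature with no significant partners within its concept is assigned a strength of 0. All function names are illustrative.

```python
import math
from itertools import combinations

# Hypothetical concept x feature matrix of production frequencies (out of 30).
MATRIX = {
    "tiger": {"has_eyes": 20, "has_tail": 18, "has_stripes": 25},
    "zebra": {"has_eyes": 15, "has_tail": 12, "has_stripes": 28},
    "lion": {"has_eyes": 22, "has_tail": 20},
    "cat": {"has_eyes": 19, "has_tail": 21},
    "dog": {"has_eyes": 24, "has_tail": 19},
    "knife": {"has_blade": 27, "cuts": 26},
    "axe": {"has_blade": 25, "cuts": 20},
    "spoon": {},
}


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length columns."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant column has no defined correlation
    return sxy / math.sqrt(sxx * syy)


def significant(r, n, z_crit=1.96):
    """Approximate two-tailed test of r != 0 via Fisher's z (assumes n > 3)."""
    if abs(r) >= 1.0:
        return True
    return abs(math.atanh(r)) * math.sqrt(n - 3) > z_crit


def significant_correlations(matrix):
    """Correlate every pair of feature columns across all concepts; keep significant r."""
    concepts = sorted(matrix)
    features = sorted({f for row in matrix.values() for f in row})
    cols = {f: [matrix[c].get(f, 0) for c in concepts] for f in features}
    sig = {}
    for f, g in combinations(features, 2):
        r = pearson(cols[f], cols[g])
        if significant(r, len(concepts)):
            sig[(f, g)] = sig[(g, f)] = r
    return sig


def correlational_strength(concept, target, matrix, sig):
    """Mean of significant correlations between target and the concept's other features."""
    rs = [sig[(target, g)] for g in matrix[concept]
          if g != target and (target, g) in sig]
    return sum(rs) / len(rs) if rs else 0.0


if __name__ == "__main__":
    sig = significant_correlations(MATRIX)
    for feature in MATRIX["tiger"]:
        print("tiger", feature,
              round(correlational_strength("tiger", feature, MATRIX, sig), 3))
    print("knife has_blade",
          round(correlational_strength("knife", "has_blade", MATRIX, sig), 3))
```

In this toy matrix the shared animal features <has eyes> and <has tail> are strongly and significantly correlated. By contrast, tiger's distinctive <has stripes> has no significant partner and scores 0, whereas knife's distinctive <has blade> is strongly correlated with <cuts>. This mirrors the pattern the CSA describes, but only because the toy data were constructed to do so.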

The statistical feature characteristics of distinctiveness and correlational strength play different roles in different distributed models of conceptual representation. For example, Caramazza and colleagues’ Organized Unitary Content Hypothesis (OUCH; Caramazza et al., 1990) claims that category members share many features, and that a concept’s core semantic features are strongly intercorrelated. These characteristics lead to a “lumpy” semantic space where tightly clustered features correspond to members of a particular category and are represented close together. Thus, category-specific semantic impairments for a particular category arise when brain damage affects its lumpy region. Feature correlation and distinctiveness also play central roles in the Connectionist Attractor Network model of McRae and colleagues (Cree, McNorgan, & McRae, 2006; McRae & Cree, 2002; McRae, Cree, Westmacott, & de Sa, 1999; McRae et al., 1997). This model was based on findings from a series of behavioural and connectionist modelling experiments. In a first key study, McRae et al. (1997) showed that strongly intercorrelated features are processed faster than weakly intercorrelated features when participants perform on-line feature verification tasks. The behaviour of a computational model instantiating a Hebbian-type learning rule suggested that highly intercorrelated features benefit from a faster rise time in activation compared to weakly correlated features (McRae et al., 1997; see also McRae et al., 1999). A subsequent study found facilitatory effects of distinctive features, suggesting that upon presentation of a concept word, distinctive features have privileged access – that is, they are activated first, followed by the activation of highly correlated features (Cree et al., 2006). Thus, the Connectionist Attractor Network model predicts facilitatory (main) effects of distinctiveness and correlational strength in on-line conceptual processing.

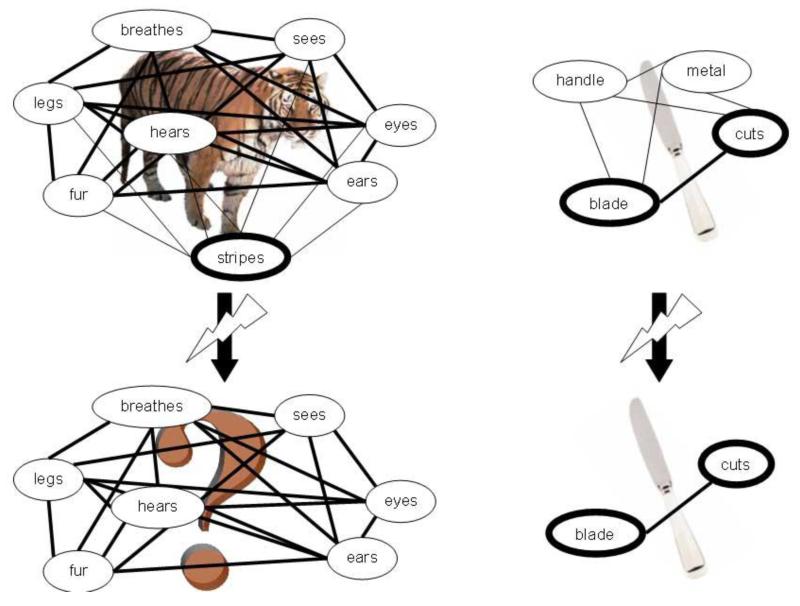

The unique claim of the CSA, and one which differentiates it from similar distributed models of conceptual representations such as those described above, is that correlational strength and distinctiveness interact to determine how concepts are internally structured and processed, and form the basis for an emergent category organisation. For example, concepts belonging to the living and nonliving domains of knowledge tend to have different internal structures, i.e. differing patterns of feature correlational strength and distinctiveness. These differences, as shown in property norm data, are claimed to be responsible for category-specific semantic impairments for living things following brain damage, as well as affecting how concepts in the two domains are processed in on-line comprehension tasks in healthy individuals (Moss et al., 2002; Moss, Tyler, Durrant-Peatfield, & Bunn, 1998; Moss, Tyler, & Jennings, 1997; Moss et al., 2007; Randall et al., 2004; Taylor, Moss, Randall, & Tyler, 2004; Taylor et al., 2007; Taylor et al., 2008; Tyler & Moss, 2001). Specifically, living things are characterised by large clusters of mainly shared features which are also highly correlated (e.g. <has legs>, <has eyes>, <has nose>). They also have relatively fewer distinctive properties which are also less highly correlated with other object features (e.g., the distinctive feature <has stripes> does not commonly co-occur with other tiger features). In contrast, nonliving things have smaller clusters of features with relatively more distinctive features, and these distinctive features tend to be more highly correlated (in part due to strong form-function relationships, e.g. <has a blade> and <cuts>) (Greer et al., 2001; Moss, Tyler, & Jennings, 1997; Moss et al., 2007; Randall et al., 2004; Tyler & Moss, 1997; Taylor et al., 2008). 
Strong correlations between features are thought to make them more resistant to the effects of brain damage, while distinctive features are important for discriminating between similar concepts and thus for uniquely identifying a given concept. Since the distinctive properties of living things tend to be less strongly correlated than those of nonliving things (and than the shared features of both domains), and since weakly correlated features are more susceptible to damage, the CSA proposes that brain damage affects the weakly correlated distinctive properties of living things to a greater extent than the more strongly correlated distinctive properties of nonliving things (or the highly correlated shared properties of either domain). Since distinctive properties are required to distinguish between concepts in order to uniquely identify them, the loss of distinctive living features leads to a category-specific semantic deficit for the identification of living things. Indeed, patients with living things deficits tend to know what category or domain a concept belongs to, indicating spared shared information, but they are unable to differentiate one entity within the living domain from another, indicating a selective impairment of distinctive living features (Moss et al., 2002; Moss et al., 2007; Tyler & Moss, 2001; Tyler et al., 2000; see Figure 1).
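The damage mechanism described above can be caricatured in a few lines of Python. This is a deliberately crude, hypothetical illustration, not the CSA's computational implementation. Each feature carries an invented correlational strength chosen to mirror the hypothetical structures in Figure 1, and "damage" simply removes every feature whose strength falls below a threshold. A concept's domain counts as known if any shared feature survives, and the concept counts as uniquely identifiable only if a distinctive feature survives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    distinctive: bool
    strength: float  # hypothetical correlational strength, not normative data


# Hypothetical structures loosely following Figure 1 (all values invented).
TIGER = [
    Feature("has_eyes", False, 0.30),
    Feature("has_legs", False, 0.28),
    Feature("has_tail", False, 0.25),
    Feature("has_stripes", True, 0.05),
]
KNIFE = [
    Feature("has_handle", False, 0.25),
    Feature("has_blade", True, 0.45),
    Feature("cuts", True, 0.45),
]


def lesion(concept, threshold):
    """Crude damage: keep only features at least as strongly correlated as threshold."""
    return [f for f in concept if f.strength >= threshold]


def knows_domain(concept):
    """Category/domain knowledge survives if any shared feature survives."""
    return any(not f.distinctive for f in concept)


def can_identify(concept):
    """Unique identification needs at least one surviving distinctive feature."""
    return any(f.distinctive for f in concept)


if __name__ == "__main__":
    for name, concept in (("tiger", TIGER), ("knife", KNIFE)):
        spared = lesion(concept, threshold=0.2)
        print(name, "domain known:", knows_domain(spared),
              "identifiable:", can_identify(spared))
```

A graded version would make feature loss probabilistic, with weakly correlated features more likely to be lost. The threshold version is used here only because its outcome is easy to read off.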

Figure 1.

Hypothetical conceptual structures for tiger and knife, where line thickness reflects correlational strength and ellipse thickness reflects distinctiveness. Following brain damage, the weakly correlated features are lost. Since the distinctive features of living things tend to be less strongly correlated than those of nonliving things, brain damage is more likely to result in an inability to uniquely identify living things (i.e. a category-specific semantic impairment for living things).

A second central aspect of the CSA which differentiates it from similar accounts is the claim that task requirements interact with conceptual structure to influence conceptual processing. For example, when we need to uniquely identify an object (e.g. naming an object or differentiating it from highly similar objects) we require distinctive feature information, while when we merely need to make broad distinctions, such as differentiating between categories of objects, we only require access to shared features. As we will show below, task context indeed influences the patterns of patients’ and healthy participants’ performance, even when identical stimuli are used. We preface a discussion of these experimental findings with a description of how the statistical characteristics of features are determined, data which provide the foundation for experimental studies.

Normative feature data

What evidence exists to support the CSA’s claims about the conceptual structures of concrete concepts such as living and nonliving things? Recent research is exploring the ability of automatic computational linguistic techniques to extract features from large text corpora (Almuhareb & Poesio, 2005; Baroni, Murphy, Barbu, & Poesio, 2009; Devereux, Pilkington, Poibeau, & Korhonen, in press). However, currently available statistical information about concepts’ features has been derived from property generation data (McRae et al., 2005; Randall et al., 2004; Rogers et al., 2004; Vinson & Vigliocco, 2008). In these normative studies, participants are presented with a concept word, and asked to generate features that belong to the concept. The verbal feature labels are not treated as actual cognitive (or neural) feature representations, but instead are taken to reflect groups of semantic primitives that have become bundled together with repeated exposure.

One of the largest feature production studies to date (n = 541 concepts) was conducted by McRae and colleagues in North America (McRae et al., 2005). We recently anglicised these data for use with native speakers of British English, and calculated corresponding conceptual structure statistics (Taylor, Devereux, Acres, Randall, & Tyler, submitted). Table 1 summarises the key statistical variables for prototypical living and nonliving concept categories. These data support the key claims of the CSA: compared with nonliving things, living things are characterised by larger clusters of features with relatively more shared and fewer distinguishing features (i.e., a greater proportion of shared features). While the correlational strengths of the shared features of living and nonliving things are comparable, the distinguishing features of nonliving things are significantly more highly correlated than the distinguishing features of living things. Since correlational strength is thought to protect features from the effects of brain damage, and since distinguishing features are required to uniquely identify concepts, these differences account for the disproportionate prevalence of category-specific semantic impairments for living over nonliving things (Tyler & Moss, 2001; Moss et al., 2005).

Table 1.

Conceptual structure statistics of prototypical categories of living and nonliving things based on anglicised version of McRae et al.’s (2005) feature production norms.

| Category | Number of features | Number of shared features | Number of distinguishing features | Mean correlational strength of all features | Mean correlational strength of shared features | Mean correlational strength of distinguishing features | Distinctiveness × correlational strength* |
|---|---|---|---|---|---|---|---|
| Living things | | | | | | | |
| animals (n = 63) | 13.9 ± 3.8 | 10.7 ± 3.1 | 3.19 ± 2.33 | 0.29 ± 0.08 | 0.23 ± 0.04 | 0.35 ± 0.10 | 0.12 ± 0.17 |
| fruits (n = 26) | 14.6 ± 3.2 | 12.3 ± 2.7 | 2.27 ± 1.59 | 0.30 ± 0.04 | 0.29 ± 0.04 | 0.31 ± 0.06 | 0.23 ± 0.20 |
| vegetables (n = 27) | 13.3 ± 3.6 | 9.7 ± 2.7 | 3.52 ± 2.98 | 0.34 ± 0.09 | 0.28 ± 0.04 | 0.37 ± 0.11 | 0.29 ± 0.52 |
| Average of these living things | 13.9 ± 3.6 | 10.8 ± 3.0 | 3.06 ± 2.39 | 0.30 ± 0.08 | 0.26 ± 0.04 | 0.35 ± 0.09 | 0.18 ± 0.30 |
| Nonliving things | | | | | | | |
| appliances (n = 15) | 13.4 ± 3.2 | 6.7 ± 3.1 | 6.67 ± 3.46 | 0.49 ± 0.10 | 0.27 ± 0.07 | 0.52 ± 0.10 | 0.13 ± 0.90 |
| tools (n = 37) | 11.6 ± 3.1 | 6.8 ± 2.4 | 4.76 ± 2.64 | 0.42 ± 0.15 | 0.23 ± 0.06 | 0.47 ± 0.14 | 0.41 ± 0.44 |
| vehicles (n = 28) | 12.7 ± 2.8 | 8.3 ± 1.8 | 4.36 ± 2.44 | 0.38 ± 0.09 | 0.28 ± 0.06 | 0.43 ± 0.10 | 0.33 ± 0.24 |
| Average of these nonliving things | 12.3 ± 3.09 | 7.3 ± 2.4 | 4.98 ± 2.83 | 0.42 ± 0.13 | 0.25 ± 0.07 | 0.47 ± 0.13 | 0.33 ± 0.51 |
| Comparison of above living vs. nonliving things | | | | | | | |
| t | 3.167 | 8.573 | 5.112 | 7.782 | .228 | 7.281 | 2.497 |
| p | .001 | < .0001 | < .0001 | < .0001 | ns | < .0001 | .01 |

\* Excludes distinguishing features.

We have developed an additional measure of conceptual structure which relates distinctiveness and correlational strength (‘distinctiveness × correlational strength’). The development of this measure was motivated by several factors. The first is the belief that the processing of a concept entails the activation of clusters of features which interact with one another, and that these interactions are driven by the conceptual structure statistics of the feature clusters; thus, a single, concept-specific measure is required that relates the correlational strengths of a concept's more shared features to those of its more distinctive features. A second factor is the distributed nature of the phenomena under investigation. For simplicity, the variables listed in Table 1 are based on broad categorisations of features as either shared or distinguishing; however, distributed, feature-based models view both distinctiveness and correlational strength as continua, where relative differences in these variables are thought to generate graded processing effects in the conceptual system. Finally, it has been argued that the correlational strengths of distinguishing features may be spurious (Cree, McNorgan, & McRae, 2006; Taylor et al., 2008). Although this remains to be experimentally confirmed, we have adopted a conservative approach by excluding the most distinguishing features (i.e., those occurring in only one or two concepts) from the new measure.

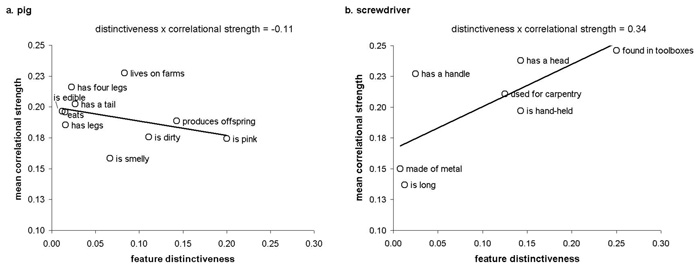

Our ‘distinctiveness × correlational strength’ measure was obtained by producing a single scatterplot for every concept, with feature distinctiveness values on the x-axis and correlational strengths on the y-axis. We then performed linear regression analyses to determine the slope of the regression line fitted to each concept's scatterplot; this slope is the concept's ‘distinctiveness × correlational strength’ value. The measure is illustrated in Figure 2. Figure 2a illustrates a concept with a negative ‘distinctiveness × correlational strength’ value, where the concept's more distinctive features are less highly correlated than its more shared features. In contrast, Figure 2b illustrates a concept with a positive ‘distinctiveness × correlational strength’ value, where the concept's more distinctive features are more highly correlated than its shared features. The key aspect of conceptual structure that the measure attempts to capture is the correlational strength of a concept's more distinctive properties relative to its more shared properties. As shown in Table 1, ‘distinctiveness × correlational strength’ values for living things are indeed lower than for nonliving things, indicating that the correlational strength of living things' distinctive features relative to their shared features is lower than the corresponding relative strength for nonliving things. This finding is consistent with the CSA's claim that the distinctive features of living things are disadvantaged relative to all other feature types. In the sections that follow, we provide experimental evidence of the ability of the ‘distinctiveness × correlational strength’ measure to predict the conceptual processing performance of healthy participants and brain-damaged patients.
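A minimal sketch of the ‘distinctiveness × correlational strength’ computation for a single concept follows. It assumes that each feature is summarised by the number of concepts in the norm set that list it and by its correlational strength within the concept. Following the text, distinguishing features (those in fewer than three concepts) are dropped. The slope is an ordinary least-squares fit of correlational strength on distinctiveness (1 / number of concepts). The function name `dxcs_slope` and the feature values for "pig" and "screwdriver" are hypothetical and do not reproduce the data plotted in Figure 2.

```python
def dxcs_slope(features, shared_min=3):
    """OLS slope of correlational strength (y) on distinctiveness (x = 1/n).

    features: dict feature -> (number_of_concepts, correlational_strength).
    Features listed for fewer than shared_min concepts are excluded.
    Returns None if fewer than two distinct distinctiveness values remain.
    """
    points = [(1.0 / n, cs) for n, cs in features.values() if n >= shared_min]
    if len({x for x, _ in points}) < 2:
        return None  # slope undefined without variation in x
    mx = sum(x for x, _ in points) / len(points)
    my = sum(y for _, y in points) / len(points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    sxx = sum((x - mx) ** 2 for x, _ in points)
    return sxy / sxx


# Hypothetical feature summaries: (concepts listing the feature, correlational strength).
PIG = {
    "has_eyes": (40, 0.30),
    "has_legs": (35, 0.28),
    "has_tail": (20, 0.22),
    "is_pink": (4, 0.10),
    "oinks": (1, 0.05),  # distinguishing feature: excluded from the fit
}
SCREWDRIVER = {
    "made_of_metal": (50, 0.12),
    "has_handle": (30, 0.15),
    "has_tip": (5, 0.35),
    "used_with_screws": (3, 0.40),
}

if __name__ == "__main__":
    print("pig", round(dxcs_slope(PIG), 3))                  # negative slope
    print("screwdriver", round(dxcs_slope(SCREWDRIVER), 3))  # positive slope
```

Using the slope rather than a difference of two means keeps distinctiveness graded, in line with the distributed view that both variables form continua.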

Figure 2.

Examples of the ‘distinctiveness × correlational strength’ variable, designed to capture the relationship between the correlational strengths of features with different distinctiveness values within an individual concept, using concepts from the prototypical living and nonliving categories (i.e., animal and tool, respectively): (a) the more distinctive features of “pig” are less strongly correlated than its shared features, resulting in a negative slope value for ‘distinctiveness × correlational strength’; (b) the distinctive features of “screwdriver” are more highly correlated than its shared features, resulting in a positive value for ‘distinctiveness × correlational strength’.

Testing the CSA in studies with linguistic input

Patient studies

Brain-damaged patients with disproportionate impairments in one category or domain of conceptual knowledge provide essential clues about how the conceptual system is organised. In 1984, Warrington and Shallice described four patients recovering from Herpes Simplex Encephalitis (HSE) who all showed disproportionate impairments at processing (e.g., naming, describing) living compared to nonliving things (Warrington & Shallice, 1984). These findings marked the beginning of the investigation into the neural bases of conceptual information. Many other instances of category-specific semantic impairments for living things have since been reported (see Forde & Humphreys, 1999, and Humphreys & Forde, 2001, for overviews), as well as the rarer pattern of a greater deficit for nonliving things (e.g. Hillis & Caramazza, 1991; Warrington & McCarthy, 1987).

In-depth testing of patients with category-specific semantic deficits revealed that the impairments did not always respect the domain boundary. For example, a patient classified as having a category-specific semantic impairment for living things also had difficulty defining several categories of nonliving things, including cloths and precious stones. In an attempt to reconcile these findings, Warrington and Shallice (1984) proposed that the semantic system was organised into neuroanatomically distinct stores of functional and visual semantic information rather than by semantic category. Visual knowledge was claimed to be more relevant for defining living things as well as certain categories of nonliving things (e.g. musical instruments and precious metals), whereas functional information was more informative about nonliving things. Thus, damage to the visual semantic system should disproportionately impair knowledge of living things as well as knowledge of visual properties in general, while damage to the functional semantic system should result in greater impairments for nonliving things and in a general deficit with functional conceptual knowledge. Warrington and McCarthy proposed a further fractionation of the semantic system with separate neuroanatomical stores for different sensory modalities (e.g. visual, auditory systems) as well as their submodalities (e.g. shape, color and size systems within the visual modality; Warrington & McCarthy, 1983; Warrington & McCarthy, 1987).

This Sensory-Functional model of semantic memory stimulated a great deal of neuroscientific research on the organisation of conceptual knowledge in the brain. Its fundamental distinctions, however, have been questioned. For example, many living things such as animals also have important functional features, namely biological functions (e.g. breathing), and in patients with category-specific semantic impairments these can be spared even when knowledge of artefacts is impaired, an impairment the sensory-functional account attributes to the loss of functional features (Tyler & Moss, 1997). Other authors have suggested that domain-specific deficits disappear when other stimulus factors (i.e. frequency, familiarity and visual complexity) are jointly controlled for (Stewart, Parkin, & Hunkin, 1992). Most importantly, the central predictions of the sensory-functional model have not withstood further empirical scrutiny: visual semantics are not always more impaired than functional knowledge in patients with living things deficits (e.g. Hart & Gordon, 1992; Laiacona, Barbarotto, & Capitani, 1993; Moss et al., 1997), and greater visual than functional semantic deficits have even been reported in a patient with a nonliving deficit (Lambon Ralph, Howard, Nightingale, & Ellis, 1998).

Caramazza and colleagues (Caramazza & Shelton, 1998; Shelton & Caramazza, 1999) proposed an alternative account of the neural bases of category-specific semantic impairments, the Domain-Specific Hypothesis, more recently renamed the Distributed Domain-Specific Hypothesis (Mahon & Caramazza, 2009). These authors argued that the modality-specific organisation proposed by the Sensory-Functional hypothesis captures only one level of representation supporting conceptual knowledge. Based largely on patient findings demonstrating a dissociation between (impaired) modality-specific knowledge and (spared) conceptual knowledge, Caramazza and Mahon (2006) suggested that a level of conceptual knowledge must exist independently of the modality-specific input (and output) systems. Both levels are assumed to be organised according to innately determined, evolutionarily relevant semantic categories: living animate, living inanimate, conspecifics, and perhaps tools (Caramazza & Mahon, 2006; Mahon, Anzellotti, Schwarzbach, Zampini, & Caramazza, 2009). Thus, according to the Domain-Specific Hypothesis, category-specific semantic impairments result from damage to the neural space representing the corresponding object category.

Two aspects of the performance of patients with category-specific semantic impairments for living things provided the impetus for distributed accounts of conceptual representations such as the CSA (Moss & Tyler, 1997; Moss et al., 1998; Moss et al., 1997; Tyler et al., 1996; Tyler & Moss, 1997; Tyler & Moss, 2001; Tyler et al., 2000). First, performance on the “spared” nonliving category is rarely within the normal range (Taylor et al., 2007). Second, patients lose specific kinds of feature information, i.e. distinctive (but not shared) feature information regarding living things. The graded nature of these impairments is difficult to reconcile with models postulating neuroanatomically distinct stores of category-specific information, but is a central characteristic of the performance of lesioned connectionist models (see e.g. Plaut & Shallice, 1993).

The CSA makes a number of specific predictions; one is that patients with category-specific impairments for living things will be disproportionately impaired at processing the distinctive features of living things compared to all other feature types (i.e. shared features of living and nonliving things and the distinctive features of nonliving things). We directly tested this prediction with a property verification task in which participants were asked whether living and nonliving things had specific shared features (e.g. “butterfly – does it have legs?”, “ambulance – does it have wheels?”) and distinctive features (e.g. “zebra – does it have black and white stripes?”, “drum – is it round and hollow?”). Using this task, Moss and colleagues demonstrated that HSE patient RC was disproportionately impaired at verifying the distinctive features of living things compared to all other feature types (Moss et al., 1998). Since an appreciation of distinctive features is critical to identifying concepts uniquely, the selective loss of distinctive living feature information presumably underlies the inability of patients with category-specific semantic impairments for living things to uniquely identify domain members, as assessed in the verbal modality with naming to verbal description, verbal definitions to spoken concept names, and category fluency (Moss, Rodd, Stamatakis, Bright, & Tyler, 2005; Moss et al., 1998; Moss et al., 1997).

As we have seen, the statistical characteristics of features belonging to living and nonliving things differ: property norm data reveal that living things are typified by many strongly correlated shared features and relatively few, weakly correlated distinctive features, whereas nonliving things have more distinctive features, which are also more strongly correlated. We assume that weakly correlated features (in the case of living things, the distinctive features) are more susceptible to the effects of brain damage. Since the unique identification of concepts requires access to their distinctive features, the selective loss of the distinctive features of living things manifests as a category-specific semantic impairment for living things. In this way, category-specific semantic impairments can arise from a simple, distributed feature-based system with no explicit category or domain boundaries. The findings outlined above, namely the graded nature of living and nonliving impairments in neuropsychological patients, disproportionately poor performance on unique identification relative to categorisation tasks, and the pattern of loss and preservation of specific features within a concept, are all consistent with such a distributed, feature-based representation of conceptual information.
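The feature statistics referred to here can be illustrated with a small sketch. The Python below computes, from a binary concept × feature matrix of the kind derived from property norms, each concept's mean feature distinctiveness and the proportion of its features that are shared. The concepts and features are invented toy data, and two operational choices are assumptions rather than details given in this section: distinctiveness is taken as 1 divided by the number of concepts listing a feature, and a feature is treated as shared if more than two concepts list it.

```python
import numpy as np

# Hypothetical toy property norms: rows are concepts, columns are features
# (1 = the feature was listed for that concept). Invented for illustration.
concepts = ["tiger", "zebra", "horse", "knife", "hammer"]
features = ["has_legs", "has_eyes", "has_fur", "has_tail", "has_stripes", "has_mane",
            "has_blade", "has_handle", "used_for_cutting", "has_metal_head",
            "used_for_hitting"]
norms = np.array([
    [1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0],  # tiger
    [1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0],  # zebra
    [1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0],  # horse
    [0, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0],  # knife
    [0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 1],  # hammer
])


def concept_statistics(norms, max_concepts_for_distinctive=2):
    """Return each concept's mean feature distinctiveness and proportion of shared features.

    Assumptions (not taken from the text): distinctiveness = 1 / number of concepts
    listing the feature; a feature is "shared" if more than
    `max_concepts_for_distinctive` concepts list it.
    """
    counts = norms.sum(axis=0)          # number of concepts listing each feature
    distinctiveness = 1.0 / counts      # assumes every feature occurs at least once
    is_shared = counts > max_concepts_for_distinctive
    present = norms.astype(bool)
    mean_distinctiveness = np.array([distinctiveness[row].mean() for row in present])
    proportion_shared = np.array([is_shared[row].mean() for row in present])
    return mean_distinctiveness, proportion_shared


mean_dist, prop_shared = concept_statistics(norms)
for name, d, s in zip(concepts, mean_dist, prop_shared):
    print(f"{name:<7} mean distinctiveness = {d:.2f}, proportion shared = {s:.2f}")
```

In this toy matrix the animals have mostly shared features and low mean distinctiveness, whereas the tools have only distinctive features.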

Conceptual processing in the undamaged system

We have also carried out experiments to test the CSA’s predictions with healthy individuals. Key to these studies is the assumption that correlational strength facilitates conceptual processing by speeding the rise time in activation of highly correlated features (McRae et al., 1999; McRae et al., 1997). Based on this premise, the CSA predicts that distinctive living features (which are less highly correlated) are processed more slowly than shared living and nonliving features and distinctive nonliving features, which are all more highly correlated. This prediction was confirmed in a speeded feature verification experiment (Randall et al., 2004).
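The assumption that correlational strength speeds the rise of activation can be illustrated with a toy simulation; this is not the model used in these studies. Feature units receive a constant external input plus support from the other features of the same concept, weighted by hypothetical correlation-like weights: four shared features support one another strongly, while one distinctive feature is only weakly connected to them. All parameter values (weights, gain, time step, threshold) are illustrative assumptions.

```python
import numpy as np

# Hypothetical weights among the features of one concept: features 0-3 are shared
# features that are strongly intercorrelated (0.8); feature 4 is a distinctive
# feature only weakly correlated with them (0.3). All values are invented.
weights = np.full((5, 5), 0.8)
weights[:, 4] = 0.3
weights[4, :] = 0.3
np.fill_diagonal(weights, 0.0)


def threshold_crossing_steps(weights, external_input=0.1, gain=0.25,
                             dt=0.1, threshold=0.15, max_steps=2000):
    """Euler-integrate leaky feature units that receive a constant external input plus
    weighted support from the other features; return the first step at which each
    unit exceeds `threshold` (-1 if never) and the final activations."""
    activation = np.zeros(len(weights))
    crossed = np.full(len(weights), -1)
    for step in range(max_steps):
        net_input = external_input + gain * (weights @ activation)
        activation = activation + dt * (net_input - activation)
        newly_crossed = (activation > threshold) & (crossed < 0)
        crossed[newly_crossed] = step
    return crossed, activation


steps, final_activation = threshold_crossing_steps(weights)
print("steps to threshold (4 shared, 1 distinctive):", steps)
```

With these settings the shared features reach threshold in fewer steps than the distinctive feature, because at every step they receive more support from their correlated partners.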

In more recent studies, we have investigated the role of conceptual structure in more naturalistic experiments using single concept stimuli. These studies used large-scale regression designs to account for a wide range of relevant variables (including potential confounds such as wordform and lemma frequency, number of phonemes, and phonological neighbourhood density) without requiring unusual stimulus sets. Principal components analyses (PCA) were used to reduce these variables to a smaller number of orthogonal covariates for the behavioural analyses. One experiment presented single spoken words for lexical decision, a task which does not require unique identification of the concept. Since shared features tend to be significantly correlated with many other features across the norms, while distinctive features tend to be correlated with relatively few, we hypothesised that shared features should contribute more than distinctive features to early activation in the network. Furthermore, activation of distinctive features should not be necessary for the task, as semantic activation through correlated shared features should provide sufficient evidence that the stimulus is a meaningful word. The first of these predictions contrasts with that of Cree and colleagues (Cree et al., 2006), who claim that distinctive features have privileged status within the system and are activated earlier and more strongly than shared features. As predicted by the CSA, lexical decision latencies were significantly facilitated by shared features (Devereux, Taylor, Randall, Ford, & Tyler, 2010). Interestingly, the effect of correlational strength was modulated by the speed of a participant's response.
Participants who responded more slowly were sensitive to correlational strength, suggesting that they may have adopted a more conservative response threshold, requiring the set of interconnected shared features within the concept to reach a stable state of co-activation before responding. Participants who responded rapidly showed no effect of correlational strength, suggesting that they responded before the set of interconnected features had become mutually co-activated, basing their response instead on initial feature activation, which would have sufficed for “word/nonword” decisions (Devereux et al., 2010). This experiment is significant because it shows that the number of shared features and their correlational status within a concept influence the activation of word meanings even in tasks where feature terms are not explicitly presented.

Taken together, the linguistic experiments with healthy individuals suggest that conceptual processing is determined, at least in part, by the interaction of correlational strength and distinctiveness with task requirements. As described above, previous formulations of feature co-occurrence summed the (squared) correlation coefficients of significantly associated feature pairs (e.g. McRae et al., 1997), a measure which confounds the number of features with their correlational status. When we avoid this confound in our own studies by using an alternative measure, the mean of the correlation coefficients of all significantly associated feature pairs, we find weaker effects of correlational strength. This suggests that the effects of feature co-occurrence may be partly due to the number of features associated with a concept, at least for linguistic material.
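The difference between the two measures can be made concrete with a sketch. It computes Pearson correlations between features across concepts in a toy binary norm matrix, keeps the pairs within each concept whose correlation exceeds a fixed cut-off (a hypothetical stand-in for a significance test), and reports both the summed squared correlation and the mean correlation of those pairs. The data and the cut-off are invented for illustration.

```python
import itertools
import numpy as np

# Hypothetical toy norms. Rows: tiger, zebra, horse, knife, hammer. Columns: has_legs,
# has_eyes, has_fur, has_tail, has_stripes, has_mane, has_blade, has_handle,
# used_for_cutting, has_metal_head, used_for_hitting. Invented for illustration.
concepts = ["tiger", "zebra", "horse", "knife", "hammer"]
norms = np.array([
    [1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 1],
])

R_CRIT = 0.3  # hypothetical stand-in for a significance test on each feature pair


def correlational_strength(norms, r_crit=R_CRIT):
    """For each concept, use its feature pairs whose correlation across concepts exceeds
    r_crit and return (a) the summed squared correlation, in the spirit of
    McRae et al. (1997), and (b) the mean correlation of the same pairs."""
    r = np.corrcoef(norms.T)  # feature-by-feature Pearson correlations across concepts
    summed, mean = [], []
    for row in norms.astype(bool):
        present = np.flatnonzero(row)
        rs = np.array([r[i, j] for i, j in itertools.combinations(present, 2)
                       if r[i, j] > r_crit])
        summed.append(np.sum(rs ** 2))
        mean.append(rs.mean() if rs.size else np.nan)
    return np.array(summed), np.array(mean)


summed_cs, mean_cs = correlational_strength(norms)
for name, s, m in zip(concepts, summed_cs, mean_cs):
    print(f"{name:<7} summed r^2 = {s:.2f}, mean r = {m:.2f}")
```

Here zebra has one more feature than tiger and therefore more correlated pairs: its summed score is higher, but its mean correlation is lower. This is the kind of confound between number of features and correlational status that the summed measure introduces.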

In the series of experiments presented below, we approached conceptual processing from another perspective – that of visual objects – to test our hypothesis that the conceptual structure variables determine conceptual processing, irrespective of the input modality.

Testing the CSA in studies with visual objects

Patient studies

The patterns of behavioural impairment in patients with category-specific semantic impairments for living things have also been investigated with visual objects. One of the most striking sources of evidence for a selective loss of distinctive feature knowledge of living things is the drawing performance of these patients. For example, patient SE’s drawings of nonliving things from memory (e.g. helicopter, anchor) were detailed enough for each concept to be identified, while his depictions of living things contained shared information (e.g. a body, eyes, ears, legs) but were devoid of distinctive feature information, rendering the drawings unidentifiable (Moss et al., 1997). Lacking distinctive feature information, patients are unable to uniquely identify object pictures, resulting in a disproportionate picture naming impairment for living over nonliving things. At the same time, the sparing of shared information allows patients to successfully sort object pictures into their respective superordinate categories (Moss et al., 1998).

Studies with healthy participants

Studies with healthy participants allow much more elaborate experiments with better control over potentially confounding or correlated variables. In a recent experiment, we investigated the interaction of distinctiveness, correlational strength and task demands in a large-scale regression study with pictures of concepts (n = 412). Based on the CSA, we predicted that unique concept identification would be facilitated for concepts with many distinctive features and with highly correlated distinctive features. In contrast, we predicted that categorisation decisions should be facilitated by many shared features and by highly correlated shared features. To test these predictions, one group of participants named the pictured objects (Experiment 1), while another group made button-press domain decisions (living vs. nonliving) to the same pictures (Experiment 2). To reduce the number of stimulus variables and render them orthogonal to each other in the statistical analyses, we performed a Principal Components Analysis (PCA) with varimax rotation on 26 variables known to affect object naming (e.g. phonological, visual and lexico-semantic variables) as well as conceptual structure variables. This PCA generated three conceptual structure components, which we labelled (1) ‘relative distinctiveness’ (loading primarily on mean distinctiveness and the correlational strength of distinctive features, and negatively on the proportion of shared features); (2) ‘correlational strength of shared features’; and (3) a component loading primarily on a ‘distinctiveness × correlational strength’ measure. The component scores were entered, together with participant and session variables, as predictors in mixed-effects models of overt naming and domain decision RTs (Baayen, 2008; Baayen et al., 2008).
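The dimension-reduction step can be sketched in NumPy as principal components of standardised stimulus variables followed by a varimax rotation of the retained loadings. This is a generic illustration, not the authors' analysis script: the data are random placeholders (412 rows to match the number of pictures, but only 8 variables), the number of retained components is fixed at three by assumption, and the varimax routine is a standard SVD-based implementation written here rather than a call to a particular statistics package.

```python
import numpy as np


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation of a (variables x components) loading matrix.
    Returns the rotated loadings and the orthogonal rotation matrix."""
    n_vars, n_comp = loadings.shape
    rotation = np.eye(n_comp)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        # Gradient of the varimax criterion
        target = rotated ** 3 - rotated * (np.sum(rotated ** 2, axis=0) / n_vars)
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        new_criterion = s.sum()
        if criterion > 0 and new_criterion < criterion * (1 + tol):
            break
        criterion = new_criterion
    return loadings @ rotation, rotation


# Placeholder data: 412 stimuli x 8 random variables, with some induced shared variance.
rng = np.random.default_rng(0)
data = rng.normal(size=(412, 8))
data[:, 1] += data[:, 0]
data[:, 3] += 0.8 * data[:, 2]
data[:, 5] += 0.6 * data[:, 4]

n_components = 3  # hypothetical number of retained components
z = (data - data.mean(axis=0)) / data.std(axis=0, ddof=1)    # standardise each variable
eigvals, eigvecs = np.linalg.eigh(np.corrcoef(z, rowvar=False))
top = np.argsort(eigvals)[::-1][:n_components]              # largest eigenvalues first
loadings = eigvecs[:, top] * np.sqrt(eigvals[top])           # unrotated component loadings
rotated_loadings, rotation = varimax(loadings)
scores = z @ eigvecs[:, top] / np.sqrt(eigvals[top])         # unit-variance component scores
rotated_scores = scores @ rotation                           # one row of covariates per stimulus
```

The rotated component scores, one row per stimulus, are mutually uncorrelated, which is what allows them to enter a regression model as orthogonal covariates; fitting the mixed-effects models themselves is outside the scope of this sketch.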
As predicted by the CSA, uniquely naming an object was facilitated by a larger number and greater correlational strength of distinctive features (as indexed by the ‘relative distinctiveness’ and ‘distinctiveness × correlational strength’ components). Greater correlational strength of shared features was associated with slower naming responses, suggesting that concepts with many highly correlated shared features activate a large semantic cohort of concepts sharing the same features, thereby interfering with unique concept identification (see also Humphreys, Price, & Riddoch, 1999). In contrast, domain decisions were facilitated by more highly correlated shared features, as well as by a higher proportion of shared relative to distinctive features, consistent with the CSA’s predictions (Taylor et al., submitted).

These findings are generally consistent with the CSA’s key claim that feature correlation, distinctiveness and task demands interact to determine how visual concepts are processed in brain-damaged and healthy systems. An important advance in our recent experiments has been the inclusion of relational measures of conceptual structure (e.g. distinctiveness × correlational strength), which reflect our understanding of conceptual processing as the flexible and concerted activation of clusters of interacting features.

We now turn to research which aims to understand how concepts are represented in the brain. Below, we outline a neuro-cognitive approach which integrates the fundamental principles of the CSA with the neural architecture of the hierarchical object processing model in the ventral stream.

Integrating cognitive and neural models

Historically, research on how objects are processed and represented in the brain has focussed on attempts to localise neuroanatomical regions which code for objects or object features particular to a certain category. This research has typically ignored the meaning of objects and has focussed on their sensory properties, which has led to attention being directed primarily towards posterior sites in the ventral stream. How object meaning is neurally represented has largely been a separate research topic, and one that has focussed on more anterior temporal regions. Attempts to relate these two approaches, to understand how sensory inputs give rise to meaningful representations (how perception becomes conception), are rare.

Our research has been aimed at integrating these two approaches in order to understand how the perceptual properties of objects generate meaning representations. Here, we first describe a model of feature-based, hierarchical sensory processing in the ventral stream which provides a detailed account of the neural bases of object processing in non-human primates. We discuss the extent to which it can be applied to the human system, and then describe a neuro-cognitive approach which instantiates the cognitive model in the neural system within the neurobiological constraints offered by the hierarchical model of object processing. We suggest that this neuro-cognitive approach can account for various seemingly disparate functional imaging and neuropsychological findings and provides a promising framework for studying how the brain represents and processes the meanings of familiar objects.

Hierarchical object processing in non-human primates

The hierarchical model of visual object processing claims that increasingly more complex visual features are coded from posterior to anterior sites in ventral occipitotemporal cortex. Neurons in posterior occipital sites code for simple object features such as line orientation, length and width, whereas neurons in more anterior sites code for increasingly more complex combinations of these visual object features (Goebel, Muckli, & Kim, 2004; Hubel & Wiesel, 1977; Hubel & Wiesel, 1962; Mishkin, Ungerleider, & Macko, 1983; Riesenhuber & Poggio, 1999; Riesenhuber & Poggio, 2002; Tootell, Nelissen, Vanduffel, & Orban, 2004; Ungerleider & Mishkin, 1982; Van Essen, 2004). Area TE in the macaque brain (corresponding to human anterior inferotemporal cortex, or IT) has traditionally been considered the end-stage of the visual processing hierarchy, as it is the most anterior region in ventral temporal cortex to receive purely visual inputs. TE neurons coding for similar combinations of features cluster together in columns perpendicular to the cortical surface, while topographical similarity is not strictly preserved parallel to the cortical surface, where adjacent columns may code for dissimilar features (Tamura, Kaneko, & Fujita, 2005; Tanaka, 1993; Tanaka, 1996; Tanaka, 1997; Tanaka, 2003).

It has been suggested that perirhinal cortex in the anteromedial temporal lobe, rather than TE, represents the culmination of the visual object processing hierarchy of complexity (Bussey & Saksida, 2002; Murray & Richmond, 2001; Murray, Bussey, & Saksida, 2007). This hypothesis is based on the complex visual stimulus response characteristics of perirhinal cortex neurons and the fact that the majority of inputs to the perirhinal cortex are from TE (Suzuki & Amaral, 1994). Thus, perirhinal cortex may code for the most complex conjunctions of features, information which will be especially important when discriminating between objects with many ambiguous visual features, i.e. features which do not readily discriminate between objects because those features are shared by many objects (see Murray, Bussey, & Saksida, 2007, for an overview). Perirhinal cortex appears to code for complex visual feature combinations by binding together information stored at posterior sites (Higuchi & Miyashita, 1996).

Familiar objects are not only coded by their visual object features, but also by other sensory features, such as tactile and auditory features. How are these multimodal object characteristics represented and processed? The starting point appears to be unisensory feature processing in each respective sensory cortex. Similar distributed, feature-based hierarchical streams of feature processing may exist not only in the visual, but also in other sensory modalities, most notably the auditory (Rauschecker & Tian, 2000; Tian, Reser, Durham, Kustov, & Rauschecker, 2001) and somatosensory (Iwamura, 1998) systems. The different sensory streams do not appear to operate in isolation, but receive reciprocal connections from other sensory modalities at potentially every stage of processing (Falchier, Clavagnier, Barone, & Kennedy, 2002; Rockland & Ojima, 2003). These multisensory interactions appear to play a modulatory role (Lakatos, Chen, O’Connell, Mills, & Schroeder, 2007), for example by enhancing the sensory perception of multimodal objects in noisy environments (Macaluso, 2006).

Another approach to multimodal object processing has been to identify sites where different sensory streams converge. Since perirhinal cortex receives input from all other sensory modalities via uni- and polymodal association areas (Suzuki & Amaral, 1994), it has been suggested that this structure is critical for the formation of multimodal object representations (Murray, Malkova, & Goulet, 1998; Simmons & Barsalou, 2003). Indeed, single perirhinal cortex neurons show multimodal response properties, responding both to visual and auditory stimuli (Desimone & Gross, 1979). Moreover, monkeys with bilaterally aspirated perirhinal and entorhinal cortices are severely impaired in relearning a crossmodal tactile-visual delayed non-matching to sample task compared to intact and bilaterally amygdalectomised control animals (Murray et al., 1998; see also Parker & Gaffan, 1998). Thus, perirhinal cortex may bind together not only visual information (Higuchi & Miyashita, 1996), but also polymodal information stored at posterior sites to form multimodal object representations.

In summary, non-human primate research has provided us with a detailed model of object processing which provides a strong foundation for understanding human object processing. Visual objects are represented and processed in a feature-based, hierarchically organised system extending from posterior occipital to anteromedial temporal cortex, with the most complex feature conjunctions processed in perirhinal cortex. Similar feature-based hierarchies may be present in every sensory stream, and the recipient of their outputs, most notably perirhinal cortex, may play a critical role in binding unimodal information into multimodal object representations. However, this hierarchical model has generally ignored the meaning of object stimuli. This central aspect of visual object processing, namely how sensory inputs are processed as meaningful entities, determines how an organism interacts with the objects it encounters in the world; e.g. ‘do we eat it or run from it?’ Towards this end, it is critical to integrate cognitive models of conceptual knowledge and neural models of visual object processing in order to understand how the meanings of objects are represented and processed in the brain.

Hierarchical object processing in humans?

Feature-based, hierarchical models of object processing similar to the non-human primate model have been proposed for the human system (Damasio, 1989; Simmons & Barsalou, 2003). For example, the Conceptual Topography Theory (CTT; Simmons & Barsalou, 2003), a distributed, feature-based model based on Damasio’s convergence zone theory (Damasio, 1989), claims that increasingly complex sensory and motor object features are processed in their respective cortices in hierarchically organised processing streams, and that convergence zones at different stages of the hierarchy bind together features coded at earlier, more posterior sites (Barsalou, Simmons, Barbey, & Wilson, 2003; Barsalou, Solomon, & Wu, 1999; Damasio, 1989; Kreiman, Koch, & Fried, 2000; Simmons & Barsalou, 2003).

However, the bulk of human functional imaging research on object processing has not focussed on the hierarchical organisation suggested by non-human primate models. Instead, some investigators have suggested that IT is organised according to the kinds of visual features that differentiate between categories of objects, such as animals and tools. On this account, objects in different categories will be processed in regionally distinct portions of ventral temporal cortex to the extent that the features coded there are relevant to the objects (Chao, Haxby, & Martin, 1999; Martin & Chao, 2001; Martin, Ungerleider, & Haxby, 2000; Mummery, Patterson, Hodges, & Wise, 1996). For example, Chao and colleagues (1999) found that animal stimuli activated lateral fusiform gyrus more than tools did, while tools activated medial fusiform gyrus more than animals did. Since these regions were activated across all of their tasks, Chao and colleagues suggested that they code for amodal semantic object form features important for the respective object category (Chao et al., 1999).

As several investigators have pointed out (e.g. Haxby et al., 2001; Kanwisher, 2003), findings of category-specific functional activations in ventral temporal cortex are consistent with a distributed, feature-based model of object representations. That is, columns of neurons coding for stimulus features found more commonly in animals may cluster together in lateral fusiform gyrus, while columns coding for features found more commonly in, for example, vehicles may be located in medial fusiform gyrus (cf. Tanaka, 2003). Indeed, Haxby and colleagues (2001) demonstrated that objects in different categories produced distributed and overlapping patterns of activation in ventral temporal cortex. Notably, the distributed patterns of responses discriminated between object categories even when the maximally responsive voxels were removed from the analyses. These results suggest that object form features are topographically coded throughout ventral temporal cortex such that whole objects can be represented by distinct patterns of distributed, high and low amplitude responses (Object Form Topography model; see also Haxby, Gobbini, Furey, Ishai, Schouten, & Pietrini, 2001; Haxby, Ishai, Chao, Ungerleider, & Martin, 2000; Ishai, Ungerleider, Martin, Schouten, & Haxby, 1999; O’Toole, Jiang, Abdi, & Haxby, 2005).
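The logic of the split-half correlation analysis used by Haxby and colleagues (2001) can be sketched with simulated data. Response patterns for each category are estimated separately in two halves of the data, each voxel's mean response across categories is subtracted, and a category is counted as correctly identified against another if its pattern correlates more strongly with its own pattern in the other half than with the other category's pattern. The category labels, voxel count and noise level are hypothetical, and dropping each category's strongest voxels is only a rough analogue of removing the maximally responsive voxels.

```python
import itertools
import numpy as np

rng = np.random.default_rng(1)
categories = ["faces", "houses", "chairs", "shoes"]  # hypothetical category labels
n_voxels, noise_sd = 200, 0.5

# Simulated data: each category has a distributed "true" pattern over all voxels,
# observed twice with independent noise (e.g. even and odd runs).
true_patterns = rng.normal(size=(len(categories), n_voxels))
half1 = true_patterns + noise_sd * rng.normal(size=true_patterns.shape)
half2 = true_patterns + noise_sd * rng.normal(size=true_patterns.shape)


def pairwise_identification(half1, half2, voxels):
    """Fraction of ordered category pairs (i, j) for which category i's pattern in
    half 1 correlates more with its own pattern in half 2 than with category j's."""
    a = half1[:, voxels] - half1[:, voxels].mean(axis=0)  # remove each voxel's mean across categories
    b = half2[:, voxels] - half2[:, voxels].mean(axis=0)
    n = len(a)
    r = np.corrcoef(a, b)[:n, n:]  # r[i, j]: category i (half 1) vs category j (half 2)
    outcomes = [r[i, i] > r[i, j] for i, j in itertools.permutations(range(n), 2)]
    return float(np.mean(outcomes))


all_voxels = np.arange(n_voxels)
# Rough analogue of removing maximally responsive voxels: drop each category's
# 20 strongest voxels in half 1 and repeat the analysis on the remainder.
strongest = np.argsort(half1, axis=1)[:, -20:]
remaining = np.setdiff1d(all_voxels, strongest.ravel())

acc_all = pairwise_identification(half1, half2, all_voxels)
acc_remaining = pairwise_identification(half1, half2, remaining)
print(f"all voxels: {acc_all:.2f}; strongest voxels removed: {acc_remaining:.2f}")
```

Because every simulated category is coded by its whole pattern, identification remains accurate after the strongest voxels are removed, which is the property the distributed account predicts.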

If the human ventral occipitotemporal processing stream is hierarchically organised by visual feature complexity, as has been claimed for non-human primates, then it is plausible to assume that different regions of the stream should be differentially engaged depending on the complexity of visual analyses needed to perform the task at hand. This hypothesis was tested in fMRI studies which asked participants to perform different tasks, requiring access to different kinds of featural information, on the same visual objects. In one task participants named the domain – living vs. man-made – to which an object belonged (domain-level naming), while in a second task they named the identity of the same objects (basic-level naming; e.g., tiger). While the domain-level naming task could be performed on the basis of simple visual features (e.g., curvature), the basic-level naming required much more detailed visual analyses in order to discriminate between visually similar objects (e.g., a tiger from a lion). fMRI activity during domain-level naming was associated with posterior occipital and ventral temporal activation, while activity during basic-level naming extended into the anteromedial temporal lobe including the perirhinal cortex (Tyler et al., 2004; see also Moss et al., 2005). If the ventral stream is organised according to amodal semantic category features, then BOLD activations to the same picture stimuli should have been identical in each task condition. These findings therefore support a hierarchical organisation of visual stimulus processing in the ventral occipitotemporal lobe which extends into the anteromedial temporal lobe, in which increasingly complex feature conjunctions are coded from posterior to anterior regions and are engaged as a function of the complexity of visual analyses required to solve the task. 
Data from studies of patients with lesions in the anteromedial temporal lobe including the perirhinal cortex further support the hypothesised role of the perirhinal cortex in complex visual discriminations. These patients have greater difficulties discriminating complex visual objects (Lee et al., 2005; Moss et al., 2005; Moss et al., 1998), especially those with many ambiguous features (Barense, Henson, Lee, & Graham, 2010; Barense et al., 2005).

The proposal from non-human primate studies that the human perirhinal cortex is critically involved in processing multimodal object representations has also been supported by recent MRI studies (Taylor, Stamatakis, & Tyler, 2009; Taylor, Moss, Stamatakis, & Tyler, 2006a). In an fMRI study, healthy subjects were presented with a combination of unimodal stimuli and audiovisual stimuli. Unimodal stimuli consisted of two parts of a sound or two parts of a picture, and the crossmodal conditions consisted of a picture and a sound. Subjects had to decide whether the stimuli went together or not. Compared to the unimodal integration conditions, crossmodal integration resulted in more functional activity in the perirhinal cortex (Taylor, Moss, & Tyler, 2006b) and in a region in posterior superior temporal sulcus/middle temporal gyrus (pSTS/MTG; Beauchamp, Argall, Bodurka, Duyn, & Martin, 2004a; Beauchamp, Lee, Argall, & Martin, 2004b). However, only perirhinal cortex activity was sensitive to the meaning of the crossmodal stimuli, suggesting that pSTS/MTG has a heteromodal, but not semantic, function. These findings were confirmed in a voxel-based correlation analysis of patients with various locations of brain damage who performed the unimodal and crossmodal integration tasks: decreased neural integrity of the anteromedial temporal cortex (but not pSTS/MTG) was associated with increasingly poorer crossmodal compared to unimodal integration performance (Taylor et al., 2009).

Taken together, these findings provide support for a distributed, feature-based, hierarchically organised object processing system in the human ventral temporal lobe, similar to the non-human primate system, which codes for increasingly more complex combinations of features from posterior to anterior and anteromedial regions. However, as noted above, this model stops short of explaining the goal of object processing – to determine an object’s meaning. To understand how the meanings of visual objects are achieved additionally requires a cognitive model of conceptual representations.

Towards a neuro-cognitive account

The neural and cognitive models share the basic architectural assumptions that object representations are feature-based, i.e. each object can be represented by the totality of its individual features, and that these features are interconnected in a distributed system. A further shared assumption is functional in nature: both models assume that objects are represented by the co-activation of their constituent features. We do not believe that the verbal labels generated in property generation studies or by computational feature extraction methods correspond to sensory features as defined by the hierarchical models. Instead, the verbal feature labels most likely reflect groups of neural responses bundled together by virtue of having been encountered and processed together. Starting from these basic principles, we suggest that neuro-cognitive predictions of the neural bases of meaningful object processing can be generated by integrating what is known about the informational requirements of the task, the internal feature-based structure of concepts, and the hierarchical nature of neural object processing.

Unique object identification requires shared and distinctive features to be integrated. For example, knowing that an object <has stripes> does not allow us to identify what the object is (it could be a candy cane or a tiger). Instead, the distinctive feature must be conjoined with the shared properties that provide broad category information, e.g. <has eyes> and <has four legs> together with <has stripes>. However, the more shared (non-distinctive) properties an object concept has, the more similar it is to other concepts, and the more confusable it is. As described by the CSA and other similar models, living things are characterised by large clusters of mainly shared features and have relatively few distinctive features. Nonliving things, on the other hand, are composed of smaller clusters of features, with relatively fewer shared features and more distinctive features. An integrated neuro-cognitive approach therefore predicts that more confusable objects (those with relatively few distinctive compared to shared features, e.g. living things) will require more complex combinations of visual features for their identification than less confusable objects (those with a greater proportion of distinctive to shared features, e.g. nonliving things). Since the complexity of visual feature combinations increases from posterior to anterior neural sites, this predicts greater anteromedial temporal lobe involvement for the identification of confusable compared to less confusable objects.
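One simple way to illustrate the link between shared features and confusability is to count, for each concept, how many other concepts have similar feature vectors. The sketch uses cosine similarity over a toy binary concept × feature matrix and an arbitrary similarity cut-off of 0.5; both the data and the cut-off are assumptions for illustration, not a measure used in the studies described here.

```python
import numpy as np

concepts = ["tiger", "lion", "zebra", "horse", "knife", "hammer"]
# Hypothetical toy norms. Columns: has_legs, has_eyes, has_fur, has_tail, has_stripes,
# has_mane, is_carnivore, has_blade, has_handle, used_for_cutting, has_metal_head,
# used_for_hitting.
norms = np.array([
    [1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0],  # tiger
    [1, 1, 1, 1, 0, 1, 1, 0, 0, 0, 0, 0],  # lion
    [1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0],  # zebra
    [1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 0],  # horse
    [0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0],  # knife
    [0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 1],  # hammer
], dtype=float)


def competitor_counts(norms, threshold=0.5):
    """For each concept, count the other concepts whose cosine similarity to it
    exceeds `threshold` (an arbitrary, hypothetical cut-off)."""
    unit = norms / np.linalg.norm(norms, axis=1, keepdims=True)
    similarity = unit @ unit.T
    np.fill_diagonal(similarity, 0.0)  # a concept is not its own competitor
    return (similarity > threshold).sum(axis=1)


for name, n in zip(concepts, competitor_counts(norms)):
    print(f"{name:<7} competitors: {n}")
```

In this toy matrix each animal has three close competitors, while each tool has none.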

These predictions were tested in an event-related fMRI study (Moss et al., 2005) in which healthy participants performed both a basic-level naming task (e.g., “tiger”) and a domain-level naming task. In contrast to the fMRI study design reported previously (Tyler et al., 2004), Moss and colleagues presented participants with matched sets of living and nonliving things which were carefully equated on a variety of relevant variables. As predicted, basic-level naming of living things activated the anteromedial temporal lobes more than the basic-level naming of nonliving things in both sets of matched stimuli. No such differences were observed for domain-level naming.

Based on these findings, Moss and colleagues predicted that only patients with damage to anteromedial temporal regions would have a marked semantic deficit for living things, whereas patients without extensive left anteromedial temporal damage might have semantic impairments that are not specific to living things. These predictions were tested in a behavioural study of two groups of neuropsychological patients matched on the severity of their semantic impairment: HSE and semantic dementia patients, both of whom typically suffer from semantic deficits. A conjunction analysis was performed to determine the spatial extent of these patients' lesions. This anatomical analysis revealed that the semantic dementia patients had more lateral, and the HSE patients more anteromedial, temporal lobe damage. Consistent with our predictions, the HSE patients, but not the semantic dementia patients, performed more poorly with living than with nonliving concepts across a variety of tasks (i.e., picture naming, property verification, category fluency; Moss et al., 2005; see also Moss et al., 2002, and Moss et al., 1998). Recently, we analysed the performance of 8 HSE patients on a picture naming task with 126 realistic object pictures comprising a mixture of living and nonliving things. Generalised mixed-effects models were fitted with the following predictors: lemma frequency of the object name, rated familiarity, rated visual complexity, the number of features in the concept, mean distinctiveness, mean correlational strength, and the distinctiveness × correlational strength interaction. With respect to the conceptual structure variables of interest, patients performed more poorly with concepts whose shared features had lower correlational strength (z = 2.83, p < .01) and, critically, with concepts whose more distinctive features had relatively lower correlational strength (the distinctiveness × correlational strength interaction; z = 2.58, p = .01), characteristics which are typical of living things.
In this way, the integrated neuro-cognitive framework provides a distributed cognitive explanation of a hitherto unexplained neuropsychological syndrome: category-specific semantic impairments for living things which are typically associated with anteromedial temporal lobe damage (Forde & Humphreys, 1999; Gainotti, Silveri, Daniele, & Giustolisi, 1995).
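The picture-naming analysis of the eight HSE patients described above can be written as a logistic mixed-effects model. The specification below is a sketch under stated assumptions: naming accuracy is coded as binary (y_ij = 1 if patient i named picture j correctly), a logit link is used, and patients and items enter as crossed random intercepts in the manner of Baayen, Davidson and Bates (2008). The exact random-effects structure and any predictor transformations are assumptions, not details reported here. The abbreviations stand for lemma frequency (Freq), rated familiarity (Fam), rated visual complexity (VisCx), number of features (NoF), mean distinctiveness (Dist) and mean correlational strength (CS).

```latex
\operatorname{logit} \Pr(y_{ij} = 1) = \beta_0
  + \beta_1 \,\mathrm{Freq}_j + \beta_2 \,\mathrm{Fam}_j + \beta_3 \,\mathrm{VisCx}_j
  + \beta_4 \,\mathrm{NoF}_j + \beta_5 \,\mathrm{Dist}_j + \beta_6 \,\mathrm{CS}_j
  + \beta_7 \,(\mathrm{Dist}_j \times \mathrm{CS}_j) + u_i + w_j,
\qquad u_i \sim \mathcal{N}(0, \sigma_u^2), \quad w_j \sim \mathcal{N}(0, \sigma_w^2)
```

Under this specification, each reported z value is the Wald statistic \hat\beta_k / \mathrm{SE}(\hat\beta_k) for the corresponding fixed-effect coefficient, and the interaction effect corresponds to \beta_7.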

This model of the processing of meaningful visual objects in humans can be extended to meaningful, multimodal objects. In the crossmodal integration studies described earlier, sets of both living and nonliving things were included in the unimodal and crossmodal conditions. As explained above, we hypothesised that perirhinal cortex would be critically involved in the crossmodal integration of living compared to nonliving things. This prediction was confirmed both in the fMRI study, where perirhinal cortex BOLD activity was significantly greater during the crossmodal integration of living compared to nonliving things (Taylor et al., 2006b), and in the voxel-based correlation study, where decreased neural integrity of anteromedial temporal lobe regions was associated with significantly poorer crossmodal integration performance with living compared to nonliving things (Taylor et al., 2009). In contrast, neither BOLD activity nor signal intensities in the pSTS/MTG region were associated with semantic domain, suggesting that this region performs a heteromodal, but pre-semantic analysis of the multimodal stimulus inputs.

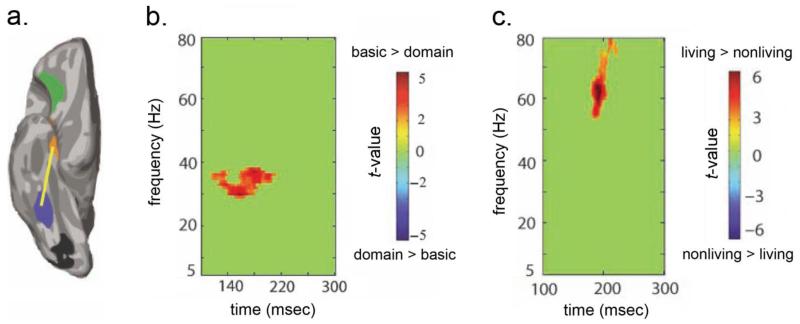

We have developed the neurocognitive model further by taking into account the massively reciprocal connectivity in the ventral occipitotemporal object processing system (Felleman & Van Essen, 1991). This basic principle of neural organisation suggests that object processing may not be a strictly feedforward process, but may also be driven by recurrent, feedback connections. Spatiotemporally sensitive imaging techniques have indeed shown that the initial feedforward sweep through the ventral stream hierarchy is followed by recurrent interactions between frontal cortex and ventral temporal regions (Bar et al., 2006; Lamme & Roelfsema, 2000). Although it remains unclear what drives these recurrent interactions, we recently tested the hypothesis that one factor modulating recurrent activity is the degree of semantic integration required either by the task or by the conceptual structure of the stimuli. We recorded magnetoencephalographic (MEG) signals while participants performed tasks with low and high semantic integration demands (i.e., domain decision and picture naming requiring unique object identification, respectively) on visual objects representing more or less confusable concepts (i.e., living and nonliving things). As predicted, source-reconstructed time-courses and phase synchronisation measures showed increased recurrent interactions between posterior and anterior regions as a function of both semantic integration manipulations (Clarke, Taylor, & Tyler, in press; see Figure 3). Moreover, a related electroencephalography study using crossmodal stimuli showed that higher integration demands on the perirhinal cortex, in the form of multimodal integration, were associated with earlier top-down feedback from anterior sites (around 50-100 ms after stimulus onset), and that this feedback was modulated by the meaningfulness of the crossmodal stimuli (i.e., semantic congruency) (Naci et al., 2006).
Thus, these results support the hypothesis that semantic integration demands – as driven by task or conceptual structure – interact with the hierarchical object processing system to determine the spatiotemporal dynamics of meaningful object processing.
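Phase synchronisation between two regions is commonly quantified with the phase-locking value (PLV): the magnitude of the trial-averaged unit vector of the phase difference, |(1/N) Σ_k exp(i(φ_x,k(t) − φ_y,k(t)))|, which equals 1 when the phase lag is identical on every trial and approaches 0 when it is random. The NumPy sketch below computes a generic PLV. It is not the analysis pipeline of Clarke, Taylor and Tyler (in press), whose source reconstruction, frequency bands and statistical thresholding are not described here. It assumes the inputs are already band-pass filtered time-courses from two regions, arranged as trials × time points.

```python
import numpy as np


def analytic_signal(x):
    """FFT-based analytic signal along the last axis (a Hilbert transform)."""
    n = x.shape[-1]
    spectrum = np.fft.fft(x, axis=-1)
    h = np.zeros(n)
    if n % 2 == 0:
        h[0] = h[n // 2] = 1.0
        h[1:n // 2] = 2.0  # double positive frequencies, drop negative ones
    else:
        h[0] = 1.0
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * h, axis=-1)


def phase_locking_value(x, y):
    """PLV across trials at each time point.

    x, y: arrays of shape (n_trials, n_times), assumed band-pass filtered.
    Returns an array of shape (n_times,) with values in [0, 1].
    """
    phase_x = np.angle(analytic_signal(x))
    phase_y = np.angle(analytic_signal(y))
    # Average the unit phasors of the phase difference over trials.
    return np.abs(np.mean(np.exp(1j * (phase_x - phase_y)), axis=0))
```

For example, two 10 Hz sinusoids sampled at 500 Hz for one second, with a random phase per trial but a fixed π/4 lag between them, give a PLV of 1 at every time point. Pairing the same signals with independent random phases per trial gives values close to zero.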

Figure 3.

A magnetoencephalographic (MEG) study showed significant differences in phase-locking (p < .05 corrected) between (a) regions of interest in the left fusiform gyrus and anterior temporal cortex during experimental conditions with high semantic integration demands, i.e., (b) basic compared to domain-level picture naming and (c) basic-level naming of living compared to nonliving things. These findings indicate that the degree of semantic integration required either by the task or by the conceptual structure of the stimuli modulates recurrent activity in the object processing system during meaningful object processing (adapted from Clarke, Taylor, & Tyler, in press, Figures 4 and 5).

Summary and directions for future research

The CSA is a distributed, feature-based account of the conceptual representation and processing of concrete concepts. The model claims that semantic feature space is not amorphous, but is internally structured primarily according to feature distinctiveness and feature correlation. The statistical properties of features impart an internal structure to concepts, and interact with each other and with task demands to determine how concepts are processed in healthy and damaged systems. We view this as a multidimensional process: bundles of features become activated during conceptual processing, and their multidimensional feature characteristics determine which features become activated and how quickly.
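Feature correlation can be illustrated with a small computation. The sketch below represents each feature as a binary vector over concepts and scores each concept by the mean Pearson correlation among all pairs of its features. The concept-by-feature matrix is hypothetical, and the measure is a simplification: analyses based on real feature norms may weight features by production frequency and restrict which feature pairs are counted.

```python
from itertools import combinations

import numpy as np

# Hypothetical toy concept-by-feature matrix (1 = concept has the feature).
CONCEPTS = ["tiger", "lion", "zebra", "hammer", "saw"]
FEATURES = ["has_eyes", "has_four_legs", "has_fur", "has_handle", "has_blade"]
MATRIX = np.array([
    [1, 1, 1, 0, 0],  # tiger
    [1, 1, 1, 0, 0],  # lion
    [1, 1, 0, 0, 0],  # zebra
    [0, 0, 0, 1, 0],  # hammer
    [0, 0, 0, 1, 1],  # saw
], dtype=float)


def correlational_strength(matrix, concepts):
    """Mean pairwise Pearson correlation among each concept's features.

    Each feature is represented by its column, i.e. the vector of concepts it
    occurs in. Concepts with fewer than two features get NaN (no feature pairs).
    """
    feature_corr = np.corrcoef(matrix, rowvar=False)  # features x features
    result = {}
    for row, name in zip(matrix, concepts):
        present = np.flatnonzero(row)
        pairs = [feature_corr[i, j] for i, j in combinations(present, 2)]
        result[name] = float(np.mean(pairs)) if pairs else float("nan")
    return result
```

In this toy matrix, the shared animal features co-occur tightly, so the tiger (7/9, about 0.78) and the zebra (1.0) score higher than the saw (about 0.61). The hammer has a single feature, so it has no feature pairs and receives NaN.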

Many important issues on the nature of conceptual representations and processing await empirical investigation. Like Jackendoff (1983), we view the conceptual space as lying at the interface between linguistic and sensory-motor systems. Therefore, one key question concerns the nature of the interface and possible interactions between conceptual information and the linguistic system. This general issue has been addressed by Vigliocco and colleagues in their Featural and Unitary Semantic Space hypothesis (FUSS; Vigliocco, Vinson, Lewis, & Garrett, 2004; Vinson, Vigliocco, Cappa, & Siri, 2003), a distributed, feature-based account of noun and action concepts. The FUSS hypothesis assumes two levels of meaning representation. The first is a ‘conceptual level’, where features are organised according to the perceptual-motor modality in which they are experienced (see also Barsalou, 1999; Martin, 2007; Warrington & McCarthy, 1983; Warrington & Shallice, 1984). The second level is ‘lexico-semantic’, where statistical properties of features are represented (including feature saliency, sharedness/distinctiveness, and feature correlation) and which binds with features at the conceptual level. A two-tiered architecture was postulated in order to allow for different mappings between conceptual and lexico-semantic features, consistent with cross-cultural variability in lexicalisation. Vigliocco and colleagues claim that features at the lexico-semantic level interface with the linguistic system, thereby generating conceptual structure effects in linguistic tasks. This general principle of a conceptual structure-linguistic interface is consistent with our own view, and provides a framework for the challenging task of specifying the mapping between linguistic forms and meaning as a function of conceptual structure and task demands.

Another key and open issue is the relationship between conceptual information and the perceptual and motor systems. Some researchers have suggested that conceptual information is embodied by the sensory and motor systems which process the respective information (Allport, 1985; Barsalou, 1999; Barsalou et al., 2003; Martin, 2007). However, an embodied account of conceptual knowledge has difficulty explaining several conceptual phenomena, such as the existence of abstract concepts and dissociations between sensory-based and modality-independent conceptual knowledge in brain-damaged patients (e.g., associative visual agnosia). These and other findings (see e.g. Mahon & Caramazza, 2008) suggest that an additional level of conceptual representation must exist beyond conceptual information grounded in modality-specific input and output fields. Following Damasio (1989), Simmons and Barsalou (2003) and Murray and colleagues (Murray et al., 2007; Murray & Richmond, 2001), we suggest that modality-independent conceptual information may be instantiated by the binding functions of crossmodal convergence zones. As described above, one region fulfilling the requirements of such a conceptual binding site is the perirhinal cortex. The conceptual binding sites may in turn provide the modality-independent format required to interface with the linguistic system (Jackendoff, 1983; Jackendoff, 2002).

Conceptual processing appears to rely on the hierarchically organised sensory streams. However, modality independence may be achieved by downstream sites of integration such as, though not necessarily limited to, the perirhinal cortex. The neurocognitive account outlines a method for combining the cognitive principles of distributed conceptual processing with the neurobiological constraints of hierarchical sensory systems and crossmodal integration binding sites. We believe that neurocognitive approaches of this kind, which combine data from distinct research traditions, provide a rich framework within which we may significantly further our understanding of the neural bases of conceptual knowledge. It will be important for future studies to address the outstanding questions outlined above with multimodal behavioural and imaging methods in healthy and brain-damaged individuals.

Acknowledgements

The work described in this paper was supported by MRC, EPSRC and ERC Advanced Investigator funding to L.K.T., and a Marie Curie Intra-European and Swiss National Science Foundation Ambizione Fellowship to K.I.T. We thank Dr. Billi Randall who has been an important contributor to the research reported here, and Dr. Helen Moss, who was instrumental in the development of this research programme.

Footnotes

Publisher's Disclaimer: NOTICE: This is an Author's Accepted Manuscript of an article published in Language and Cognitive Processes, 2011 (copyright Taylor & Francis), available online at: http://www.tandfonline.com/doi/full/10.1080/01690965.2011.568227

Full citation: Kirsten I. Taylor, Barry J. Devereux & Lorraine K. Tyler (2011): Conceptual structure: Towards an integrated neurocognitive account, Language and Cognitive Processes, 26:9, 1368-1401

To link to this article: http://dx.doi.org/10.1080/01690965.2011.568227

References

- Allport D. Distributed memory, modular subsystems and dysphasia. In: Newman S, Epstein R, editors. Current Perspectives in Dysphasia. Churchill Livingstone; New York: 1985.

- Almuhareb A, Poesio M. Concept learning and categorization from the web. Paper presented at the 27th Annual Meeting of the Cognitive Science Society; Stresa, Italy: 2005.

- Baayen R. Analyzing Linguistic Data: A Practical Introduction to Statistics using R. Cambridge University Press; Cambridge, UK: 2008.

- Baayen R, Davidson D, Bates D. Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language. 2008;59(4):390–412.

- Bar M, Kassam KS, Ghuman AS, Boshyan J, Schmidt AM, Dale AM, et al. Top-down facilitation of visual recognition. PNAS. 2006;103(2):449–54. doi: 10.1073/pnas.0507062103.

- Barense M, Henson R, Lee A, Graham K. Medial temporal lobe activity during complex discrimination of faces, objects, and scenes: Effects of viewpoint. Hippocampus. 2010;20:389–401. doi: 10.1002/hipo.20641.

- Barense MD, Bussey TJ, Lee ACH, Rogers TT, Hodges JR, Saksida LM, et al. Feature ambiguity influences performance on novel object discriminations in patients with damage to perirhinal cortex. Cognitive Neuroscience Society 2005 Annual Meeting Program; 2005. p. 129.

- Baroni M, Murphy B, Barbu E, Poesio M. Strudel: A corpus-based semantic model based on properties and types. Cognitive Science. 2009;34(2):222–54. doi: 10.1111/j.1551-6709.2009.01068.x.

- Barsalou LW. Perceptual symbol systems. Behavioral and Brain Sciences. 1999;22:577–660. doi: 10.1017/s0140525x99002149.

- Barsalou LW, Simmons WK, Barbey AK, Wilson CD. Grounding conceptual knowledge in modality-specific systems. TRENDS in Cognitive Sciences. 2003;7:84–92. doi: 10.1016/s1364-6613(02)00029-3.

- Barsalou LW, Solomon KO, Wu LL. Perceptual simulation in conceptual tasks. In: Hiraga MK, Sinha C, Wilcox S, editors. Cultural, typological, and psychological perspectives in cognitive linguistics: The proceedings of the 4th conference of the International Cognitive Linguistics Association. John Benjamins; Amsterdam: 1999.

- Beauchamp MS, Argall BD, Bodurka J, Duyn JH, Martin A. Unraveling multisensory integration: patchy organization within human STS multisensory cortex. Nature Neuroscience. 2004a;7(11):1190–2. doi: 10.1038/nn1333.