Abstract

In this study, we used magnetoencephalography and a mismatch paradigm to investigate speech processing in stroke patients with auditory comprehension deficits and age-matched control subjects. We probed connectivity within and between the two temporal lobes in response to phonemic (different word) and acoustic (same word) oddballs using dynamic causal modelling. We found stronger modulation of self-connections as a function of phonemic differences for control subjects versus aphasics in left primary auditory cortex and bilateral superior temporal gyrus. The patients showed stronger modulation of connections from right primary auditory cortex to right superior temporal gyrus (feed-forward) and from left primary auditory cortex to right primary auditory cortex (interhemispheric). This differential connectivity can be explained on the basis of a predictive coding theory which suggests increased prediction error and decreased sensitivity to phonemic boundaries in the aphasics’ speech network in both hemispheres. Within the aphasics, we also found behavioural correlates with connection strengths: a negative correlation between phonemic perception and an inter-hemispheric connection (left superior temporal gyrus to right superior temporal gyrus), and positive correlation between semantic performance and a feedback connection (right superior temporal gyrus to right primary auditory cortex). Our results suggest that aphasics with impaired speech comprehension have less veridical speech representations in both temporal lobes, and rely more on the right hemisphere auditory regions, particularly right superior temporal gyrus, for processing speech. Despite this presumed compensatory shift in network connectivity, the patients remain significantly impaired.

Keywords: aphasia, speech, mismatch negativity, MEG, dynamic causal modelling

Introduction

Aphasia is a common consequence of left hemispheric stroke. While speech production problems are almost always present (Blumstein et al., 1977), less emphasis in the literature has been placed on patients with concomitant impairment of auditory perception of language, even though this is also commonly affected (Pedersen et al., 2004). Patients with profound auditory perceptual deficits have a poorer prognosis (Bakheit et al., 2007) and usually have damage to the left temporo-parietal cortex (Kertesz et al., 1993; Goldenberg and Spatt, 1994), which includes areas that have been shown to be important for speech sound analysis and comprehension (Griffiths and Warren, 2002; Hickok and Poeppel, 2007; Leff et al., 2008; Rauschecker and Scott, 2009; Schofield et al., 2012). Understanding how damage to these regions causes persistent impairment of speech perception is important for planning interventional therapies (Price et al., 2010). Given that speech perception unfolds over tens to hundreds of milliseconds, non-invasive functional imaging techniques with high temporal resolution, such as EEG or magnetoencephalography (MEG), are best placed to investigate this (Luo and Poeppel, 2007).

The mismatch negativity response is an event-related potential that has been used the most for investigating neuronal correlates of speech perception in normal subjects (Kraus et al., 1992; Sharma et al., 1993; Näätänen, 2001). Mismatch negativity is an automatic brain response generated by a fronto-temporal network including the auditory cortex (Alho, 1995) in response to infrequent changes, or deviants, in the acoustic environment with a latency of 100–250 ms after stimulus offset (Näätänen et al., 2007). Previous work in aphasic patients has produced some interesting results, including reduced mismatch negativity responses to speech stimuli but intact mismatch negativity responses to pure tone deviants (Aaltonen et al., 1993; Wertz et al., 1998; Csépe et al., 2001; Ilvonen et al., 2001, 2003, 2004); and evidence that right hemisphere responses to speech sounds may be greater in aphasic patients than in control subjects (Becker and Reinvang, 2007). However, these studies are limited on several counts: firstly, they were based on sensor-level EEG responses that lacked sufficient spatial detail regarding lesion topography and the hemisphere responsible for reduced mismatch negativities; secondly, the reports were generally based on stimuli that were not sophisticated enough to accurately characterize deficits within and across phonemic (speech sound) categories; and lastly, they did not allow a detailed mechanistic account of the neural bases of speech comprehension deficits.

To provide a more robust measure, we employed oddball stimuli with graded levels of vowel deviancy that spanned the formant space both within and across phonemic boundaries (Iverson and Evans, 2007; Leff et al., 2009). We measured mismatch negativity responses generated at the level of the underlying sources with millisecond precision using MEG in a large cohort of healthy control subjects and aphasics with left hemisphere stroke. More importantly, we provide the first systems-level (network) description of impaired phonemic processing in terms of a predictive coding theory of sensory perception (Friston, 2005; Friston and Kiebel, 2009) using dynamic causal modelling of the evoked mismatch negativity responses (Friston et al., 2003; Kiebel et al., 2008b). This allows us to quantify the underlying connection strengths between individual sources of the damaged speech comprehension network.

Briefly, predictive coding theory provides a computational account of processing in cortical hierarchies in which higher level regions represent more abstract and temporally enduring features of the sensorium (Mumford, 1992; Rao and Ballard, 1999). These higher level features are then used to make predictions of activity in lower hierarchical levels, and these predictions are conveyed by backward connections. These predictions are then compared with actual activity in lower levels, and the prediction error computed. Prediction error denotes the mismatch between sensation (sensory input) and expectation (long-term representations). These prediction errors are conveyed to higher regions by the forward connections. This recurrent message passing continues until the representations in all layers are self-consistent.
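The recurrent message passing described above can be illustrated with a deliberately simple toy: a single higher-level estimate predicting a single sensory value through one linear weight. This is only a sketch under those assumptions; the function name `settle`, the weight and the update rate are hypothetical and are not taken from the models used in this study.

```python
def settle(sensory_input, weight=2.0, rate=0.1, tol=1e-9, max_iter=10_000):
    """Iterate a two-level linear predictive coding loop until it settles.

    Toy illustration only: one higher-level estimate, one sensory value.
    """
    estimate = 0.0  # higher-level representation (the expectation)
    error = sensory_input
    step = 0
    for step in range(max_iter):
        prediction = weight * estimate      # backward connection: top-down prediction
        error = sensory_input - prediction  # prediction error at the lower level
        if abs(error) < tol:                # representations are self-consistent
            break
        estimate += rate * weight * error   # forward connection: error updates the estimate
    return estimate, error, step


estimate, error, steps = settle(sensory_input=3.0)
print(f"estimate={estimate:.4f}, residual error={error:.2e}, iterations={steps}")
```

In this toy, each pass shrinks the prediction error by a constant factor, so the loop stops once the higher-level estimate explains the input.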

In previous work using the same stimuli, we have shown that mismatch negativity responses to vowel stimuli arise as a network property of (at least) four interacting regions in the supra-temporal plane: the primary auditory cortex (A1) and superior temporal gyrus (STG) in both hemispheres (Schofield et al., 2009). In the present study, we hypothesized a difference in the configuration of the speech network between the aphasic patients (who all had long-standing impairment of speech perception, Table 1) and control subjects. We specifically predicted that the aphasics would show deficits at the higher level of the speech hierarchy (STG) as well as impaired left hemisphere function. We also hoped to provide a baseline account of the speech perception network in these subjects, for subsequent investigation of speech comprehension in response to therapy.

Table 1.

Demographic and lesion details of the aphasic stroke patients

| Patient ID | Age at scan (years) | Time since stroke (years) | Type of stroke | Lesion volume (cm3) | Fractional lesion volume, left A1 (%) | Fractional lesion volume, left STG (%) |
|---|---|---|---|---|---|---|
| 1 | 69.6 | 1 | Ischaemic | 66.9 | 73.1 | 0 |
| 2 | 62.7 | 1.2 | Ischaemic | 37.4 | 0 | 8.4 |
| 3 | 63 | 8.6 | Ischaemic | 403.6 | 86.5 | 44.7 |
| 4 | 61.5 | 7.6 | Ischaemic | 289.6 | 86.5 | 0.2 |
| 5 | 60.5 | 5.6 | Ischaemic | 163.1 | 83.7 | 8.5 |
| 6 | 67.8 | 7.4 | Haemorrhagic | 52.9 | 0 | 12.3 |
| 7 | 64.9 | 1.2 | Ischaemic | 147.2 | 35.4 | 6.5 |
| 8 | 72.8 | 4.3 | Ischaemic | 59.5 | 15.4 | 19.3 |
| 9 | 50.6 | 3.5 | Ischaemic | 399.3 | 76.0 | 47.6 |
| 10 | 66.5 | 5.3 | Ischaemic | 197.9 | 84.1 | 17.2 |
| 11 | 61.5 | 3.4 | Multi-lacune | 40.4 | 0 | 0 |
| 12 | 63.3 | 0.6 | Ischaemic | 65.2 | 0 | 24.4 |
| 13 | 43.5 | 1.3 | Ischaemic | 65.4 | 0 | 17.5 |
| 14 | 35.8 | 6.2 | Ischaemic | 246.5 | 85.6 | 60.1 |
| 15 | 46.3 | 0.7 | Ischaemic | 27.9 | 0 | 29.3 |
| 16 | 71.1 | 5.1 | Ischaemic | 143.8 | 56.5 | 19.5 |
| 17 | 62.4 | 3.7 | Ischaemic | 155.1 | 85.4 | 0.6 |
| 18 | 68 | 4.6 | Ischaemic | 62.3 | 0 | 8.5 |
| 19 | 54 | 1.9 | Ischaemic | 81.8 | 0 | 18.0 |
| 20 | 60.9 | 3 | Haemorrhagic | 128.8 | 27.5 | 5.0 |
| 21 | 74.7 | 0.6 | Ischaemic | 45.8 | 7.3 | 0 |
| 22 | 45.4 | 2.1 | Ischaemic | 61.6 | 0 | 9.5 |
| 23 | 90.3 | 3.7 | Ischaemic | 24.2 | 0 | 0.1 |
| 24 | 62.7 | 4.9 | Ischaemic | 124.2 | 48.6 | 28.7 |
| 25 | 62.5 | 3.3 | Ischaemic | 116.5 | 9.6 | 74.9 |
| Mean (ischaemic:haemorrhagic:multi-lacune) | 61.7 | 3.6 | 22:2:1 | 128.3 | 34.4 | 18.4 |

Materials and methods

Participants

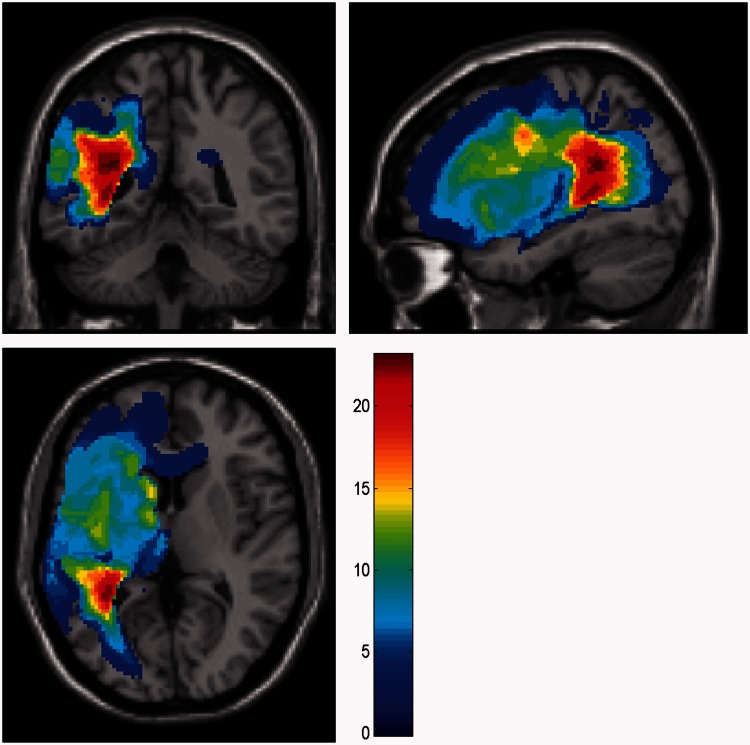

Twenty-five aphasic patients with left hemisphere stroke, normal hearing and impaired speech perception took part in this study [four females; mean age (range): 61.7 (35–90) years; average time since stroke: 3.6 years; average lesion volume: 128.27 ± 21.35 cm3], together with 17 healthy age-matched control subjects [nine females; mean age (range): 59.1 (27–72) years] without any neurological or audiological impairments. All 25 patients were in the aphasic range on a test of picture naming (that is, they had anomia) and all were in the abnormal range on at least one of two tests of speech perception. All were in the aphasic range on the reading section (evidence of a central alexia) and most (21/25) were in the aphasic range on the writing section of the Comprehensive Aphasia Test. The patients’ demographics, stroke and behavioural details are shown in Tables 1 and 2, respectively; their lesion overlap map is shown in Fig. 1. All participants provided informed written consent. The study was approved by the National Hospital for Neurology and Neurosurgery and Institute of Neurology joint research ethics committee.

Table 2.

Behavioural test details of the aphasic stroke patients

| Patient ID | Comprehension: spoken | Comprehension: vowel ID | Object naming | Reading | Writing | Formant threshold (ERB) | Vowel threshold (ERB) |
|---|---|---|---|---|---|---|---|
| 1 | 61 | 27 | 6 | 19 | 76 | 0.558 | 0.590 |
| 2 | 56 | 38 | 38 | 50 | 70 | 0.525 | 0.494 |
| 3 | 52 | 17 | 22 | 6 | 28 | 0.754 | 0.672 |
| 4 | 52 | 16 | 12 | 32 | 51 | 0.666 | 0.352 |
| 5 | 61 | 34 | 27 | 34 | 64 | 0.785 | 0.449 |
| 6 | 54 | 37 | 30 | 35 | 58 | 0.933 | 0.514 |
| 7 | 52 | 38 | 33 | 33 | 48 | 0.628 | 0.647 |
| 8 | 61 | 34 | 21 | 13 | 28 | 1.012 | 0.697 |
| 9 | 51 | 39 | 0 | 0 | 33 | 0.533 | 0.514 |
| 10 | 38 | 13 | 12 | 18 | 50 | 0.797 | 0.810 |
| 11 | 56 | 40 | 36 | 18 | 17 | 0.672 | 0.590 |
| 12 | 33 | 12 | 0 | 12 | 49 | 0.735 | 0.603 |
| 13 | 35 | 17 | 14 | 0 | 9 | 0.359 | 0.609 |
| 14 | 39 | 38 | 37 | 26 | 41 | 0.660 | 0.685 |
| 15 | 39 | 26 | 41 | 47 | 58 | 0.754 | 0.501 |
| 16 | 37 | 7 | 0 | 2 | 48 | 0.772 | 0.603 |
| 17 | 35 | 21 | 0 | 7 | 9 | 0.577 | 0.494 |
| 18 | 40 | 31 | 26 | 44 | 44 | 0.647 | 0.545 |
| 19 | 41 | 24 | 6 | 18 | 31 | 0.482 | 0.449 |
| 20 | 54 | 40 | 30 | 34 | 71 | 0.647 | 0.384 |
| 21 | 51 | 24 | 14 | 37 | 29 | 0.957 | 1.037 |
| 22 | 52 | 39 | 18 | 66 | 76 | 0.378 | 0.475 |
| 23 | 51 | 40 | 43 | 6 | 62 | 0.660 | 0.533 |
| 24 | 40 | 24 | 41 | 53 | 62 | 0.685 | 0.514 |
| 25 | 36 | 16 | 16 | 42 | 29 | 0.651 | 0.533 |
| Mean | 47 | 28 | 21 | 26 | 46 | 0.674 | 0.573 |

The patients’ behavioural performance on tasks measuring speech comprehension (spoken comprehension test from Comprehensive Aphasia Test and the vowel ID test) and speech output (object naming test) is shown. All patients were impaired on at least one of the two speech comprehension tests and all were in the abnormal range on the object naming test (anomic), confirming the diagnosis of aphasia. All had evidence of a central alexia and most were in the aphasic range for writing. The thresholds for these tests are 57 (spoken comprehension), 36 (vowel ID), 44 (object naming), 63 (reading) and 68 (writing), respectively. Values highlighted in bold indicate patients who are below this threshold. The last two columns indicate the vowel and formant thresholds (in ERBs) for the basic stimulus set from which four stimuli were selected for the MEG experiment. The final row indicates average values for the variables in each column.

Figure 1.

Lesion overlap map for all aphasic stroke patients. Lesion overlap map for the stroke patients is presented in three different planes at the MNI coordinate (−32.5, −43.6, 6.4). Hotter voxels represent areas of common damage in a majority of patients while lighter voxels represent areas of minimal overlap in lesioned area across all the patients according to the colour scheme on the right.

Stimuli

The stimuli consisted of vowels embedded in consonant–vowel–consonant (/b/-V-/t/) syllables that varied systematically in the frequencies of the first (F1) and second (F2) formants. These synthesized stimuli were based on previous studies (Iverson and Evans, 2007; Leff et al., 2009) and were designed to simulate a male British English speaker (the consonants /b/ and /t/ were extracted from natural recordings of such a speaker rather than artificially constructed). The duration of each stimulus was 464 ms with the duration of the vowel equal to 260 ms. All stimuli were synthesized using the Klatt synthesizer (Klatt and Klatt, 1990). A set of 29 different stimuli were created by parametrically varying the two formant frequencies from the corresponding values for the standard stimulus, which was designed to model the vowel /a/ (F1 = 628 Hz and F2 = 1014 Hz). Vowel and formant thresholds were determined for each aphasic patient using this stimulus set (Table 2), but only four stimuli including the standard and three deviants (D1, D2 and D3) were used in the mismatch paradigm conducted in the MEG scanner.

The three deviant stimuli differed from the standard in a non-linear, monotonic fashion and had F1 and F2 equal to 565 and 1144 Hz for D1, 507 and 1287 Hz for D2, and 237 and 2522 Hz for D3. The deviants were selected from a transformed vowel space based on the logarithmic equivalent rectangular bandwidth scale (ERB; Moore and Glasberg, 1983) so that linear changes between stimuli corresponded more closely to perception. The deviants were spaced non-linearly such that the Euclidean distance from the standard, /bart/ was equal to 1.16, 2.32 and 9.30 ERB, respectively.
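The distances quoted above can be approximately recovered from the formant frequencies. The following is a minimal sketch, assuming the widely used ERB-rate formula 21.4 · log10(4.37 · f/1000 + 1) with f in Hz; the text cites Moore and Glasberg (1983) and does not spell out the conversion, so the exact formula used for the stimuli is an assumption here. Under that assumption the computed distances fall within about 0.01 ERB of the reported values.

```python
import math


def erb_rate(freq_hz):
    """ERB-rate (number of ERBs) for a frequency in Hz.

    Assumed form: 21.4 * log10(4.37 * f_kHz + 1); not stated in the text.
    """
    return 21.4 * math.log10(4.37 * freq_hz / 1000.0 + 1.0)


def erb_distance(a, b):
    """Euclidean distance between two (F1, F2) pairs in ERB-rate space."""
    return math.hypot(erb_rate(a[0]) - erb_rate(b[0]),
                      erb_rate(a[1]) - erb_rate(b[1]))


# Formant frequencies (Hz) as given in the text
STANDARD = (628, 1014)
DEVIANTS = {"D1": (565, 1144), "D2": (507, 1287), "D3": (237, 2522)}

distances = {name: erb_distance(STANDARD, formants)
             for name, formants in DEVIANTS.items()}
for name, dist in distances.items():
    print(f"{name}: {dist:.2f} ERB from the standard")
```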

The first deviant (D1) was within the same vowel category for most normal listeners (87% thought it was ‘bart’ while 13% thought it was ‘burt’), but the acoustic difference was noticeable (e.g. it was above the patients’ average behavioural discrimination threshold, Table 2). The second deviant (D2), /burt/, was twice as far away from the standard as D1 and similar to the distance that would make a categorical difference between vowels in English (Patient 26). The third deviant (D3), /beat/, represented a stimulus on the other side of the vowel space (i.e. eight times further away from the standard than D1, as large a difference in F1 and F2 as it is possible to synthesize). Thus, for most participants, D1 would have been perceived as an ‘acoustic’ deviant, while deviants D2 and D3 would have been perceived as ‘phonemic’ deviants.

Behavioural tests

The patients were tested on a set of customized tests examining speech perception and production as well as a standardized test of language, the Comprehensive Aphasia Test (Swinburn et al., 2005). The vowel identification task had five vowels in a /b/-V-/t/ context (i.e. ‘Bart’, ‘beat’, ‘boot’, ‘bought’ and ‘Burt’) in natural (i.e. male speaker of British English) and synthetic versions. Patients heard four repetitions of each of these 10 stimuli, and identified each stimulus as one of the five target words; only a synthetic version of ‘Burt’ was presented due to a coding error. Individual discrimination thresholds for synthetic vowels and single formants were measured adaptively (Levitt et al., 1971), finding the acoustic difference that produced 71% correct responses in an AX same–different task.
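A 71% correct target is what a two-down/one-up transformed staircase tracks, since that rule settles where the probability of two consecutive correct responses is 0.5. The text does not state which rule was used, so the sketch below is an assumption, and the deterministic listener is purely hypothetical (a real listener responds probabilistically).

```python
def convergence_point(n_down=2):
    """Proportion correct tracked by an n-down/1-up rule: p ** n_down = 0.5."""
    return 0.5 ** (1.0 / n_down)


def staircase(respond, start, step, n_trials, n_down=2, floor=0.0):
    """Run an n-down/1-up staircase; respond(level) returns True if correct.

    Returns the list of stimulus levels visited on each trial.
    """
    level, streak, track = start, 0, []
    for _ in range(n_trials):
        track.append(level)
        if respond(level):
            streak += 1
            if streak == n_down:   # n_down correct in a row: make the task harder
                level = max(floor, level - step)
                streak = 0
        else:                      # any error: make the task easier
            level += step
            streak = 0
    return track


# Hypothetical listener who is correct whenever the difference exceeds 0.55 ERB
track = staircase(lambda level: level > 0.55, start=1.0, step=0.1, n_trials=40)
print(f"tracked proportion correct: {convergence_point(2):.3f}")
print("last levels visited:", [round(level, 2) for level in track[-6:]])
```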

Magnetoencephalography

Experimental paradigm and data acquisition

Data were acquired using a 274 channel whole-head MEG scanner with third-order axial gradiometers (CTF systems) at a sampling rate of 480 Hz. Auditory stimuli were presented binaurally using E-A-RTONE 3A audiometric insert earphones (Etymotic Research). The stimuli were presented initially at 60 dB sound pressure level. Subjects were allowed to alter this to a comfortable level while listening to the stimuli during a test period.

A passive oddball paradigm was used involving the auditory presentation of a train of repeating standards interleaved in a pseudorandom order with presentations of D1, D2 or D3, with a stimulus onset asynchrony of 1080 ms. Within each block, 30 deviants (of each type) were presented to create a standards-to-deviants ratio of 4:1. A minimum of two standards were presented between deviants. A total of four blocks, each lasting 540 s, were acquired for each subject resulting in a total of 120 trials for each of the three deviants. During stimulus presentation subjects were asked to perform an incidental visual detection task and not to pay attention to the auditory stimuli. Static pictures of outdoor scenes were presented for 60 s followed by a picture (presented for 1.5 s) of either a circle or a square (red shape on a grey background). The subjects were asked to press a response button (right index finger) for the circles (92%) and to withhold the response when presented with squares (8%). Patients with a right-sided hemiparesis used their unaffected hand. This go/no-go task provided evidence that subjects were attending to the visual modality. Three patients and one control participant could not do the task. Across the remaining subjects, the mean accuracy was 89.96% for control subjects and 84.79% for patients, with no significant group differences (P > 0.05; t = 0.94, n = 16 control subjects, n = 25 patients).
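One way to build a block that satisfies the stated constraints (30 deviants of each type, at least two standards before every deviant) is sketched below. It assumes the 4:1 ratio refers to standards versus all deviants combined; the function name `oddball_block` and the gap-filling strategy are illustrative and are not the authors' stimulus script.

```python
import random


def oddball_block(n_per_deviant=30, ratio=4, min_gap=2, seed=None):
    """Return one pseudorandom block of 'S' (standard) and deviant labels."""
    rng = random.Random(seed)
    deviants = [d for d in ("D1", "D2", "D3") for _ in range(n_per_deviant)]
    rng.shuffle(deviants)
    n_standards = ratio * len(deviants)
    # One gap of standards before each deviant, plus one trailing gap.
    gaps = [min_gap] * len(deviants) + [0]
    spare = n_standards - min_gap * len(deviants)
    for _ in range(spare):
        gaps[rng.randrange(len(gaps))] += 1  # scatter the remaining standards
    sequence = []
    for gap, deviant in zip(gaps, deviants):
        sequence += ["S"] * gap + [deviant]
    sequence += ["S"] * gaps[-1]
    return sequence


block = oddball_block(seed=1)
print(len(block), "trials;", block.count("S"), "standards;",
      4 * block.count("D1"), "D1 trials over four blocks")
```

Four such blocks give 120 trials per deviant type, matching the total stated above.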

A Siemens Sonata 1.5 T scanner was used to acquire a high-resolution 1 mm3 T1-weighted anatomical volume image (Deichmann et al., 2004). SPM8 (Wellcome Trust Centre for Neuroimaging; http://www.fil.ion.ucl.ac.uk/spm) was used to spatially normalize this image to standard MNI space using the ‘unified segmentation’ algorithm (Ashburner and Friston, 2005), with an additional step to optimize the solution for the stroke patients (Seghier et al., 2008). Lesion volumes for the patients were estimated using the automated lesion identification algorithm implemented in SPM8.

Data preprocessing

MEG data were analysed as event-related potentials using SPM8 software (Litvak et al., 2011) in MATLAB R2010b (Mathworks Inc.). The preprocessing steps included: high-pass filtering at 1 Hz; removal of eye blink artefacts; epoching from 100 ms prestimulus to 500 ms post-stimulus; baseline correction based on the 100 ms prestimulus baseline; low-pass filtering at 30 Hz; and merging and robust averaging of the resultant data across all sessions (Litvak et al., 2011). The averaged data were low-pass filtered at 30 Hz again to remove any high-frequency noise that may have resulted from the robust averaging procedure.
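The epoching and baseline-correction steps can be made concrete for a single channel. The sketch below is plain Python and is not SPM8 code; it covers only those two steps (filtering, artefact removal and robust averaging are omitted), and the function name is hypothetical. At the 480 Hz sampling rate, the 100 ms prestimulus window is 48 samples and the 500 ms post-stimulus window is 240 samples.

```python
SFREQ = 480  # sampling rate in Hz, as stated for the acquisition


def epoch_and_baseline(signal, onset_sample, sfreq=SFREQ, pre_ms=100, post_ms=500):
    """Cut one epoch around a stimulus onset and subtract the prestimulus mean."""
    pre = round(pre_ms * sfreq / 1000)    # samples before onset
    post = round(post_ms * sfreq / 1000)  # samples after onset
    epoch = signal[onset_sample - pre: onset_sample + post]
    baseline = sum(epoch[:pre]) / pre     # mean over the prestimulus window
    return [value - baseline for value in epoch]
```

For example, a channel with a constant offset before the stimulus yields an epoch whose prestimulus samples are all zero after correction.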

Source localization

The sources underlying the MEG evoked responses were examined by fitting equivalent current dipoles using the Variational-Bayes Equivalent Current Dipole method (VB-ECD; Kiebel et al., 2008a). This is a Bayesian algorithm that requires the specification of a prior mean and variance over source locations and moments. For each participant, data corresponding to the M100 peak in a time window of 50–100 ms were used for the dipole modelling. The prior means of the source locations were taken from previous work on mismatch paradigms and included A1 and posterior STG in both hemispheres (Javitt et al., 1996; Opitz et al., 1999, 2002; Ulanovsky et al., 2003; Schofield et al., 2009). The locations of these sources were adopted from a functional MRI mismatch negativity study (Opitz et al., 2002) with the following coordinates: right A1 (46, −14, 8), left A1 (−42, −22, 7), right STG (59, −25, 8), and left STG (−61, −32, 8).

Our first question was whether the data at 100 ms were best explained by a two source, a three source or a four source model (see below). We identified the sources using the event-related potential responses to standards only. We did this using the VB-ECD method, which uses a non-linear algorithm to evaluate the log evidence in favour of each model based on a trade-off between goodness of fit and model complexity (Kiebel et al., 2008a). We set the prior variance on the dipole positions to 100 mm and the prior variance on the dipole moments to 100 nA/m2. This method was run for 100 different initializations for each family of configurations (i.e. two, three and four dipole configurations), so as to avoid local maxima. The model with the maximum model evidence for each family, for each subject, was taken up to a second-level analysis. We tested the following four families for both control subjects and patients: (i) a two dipole model comprising sources in bilateral A1; (ii) a three dipole model comprising all sources except left A1; (iii) a three dipole model comprising all sources except left STG; and (iv) a four dipole model with sources in bilateral A1 and STG.

Bayesian model comparison based on random effects analysis (Stephan et al., 2009) was used to evaluate the best model family configuration. Comparison of these models revealed a consistent result: the four source model had the best model evidence when compared with the two or three source model configurations. The posterior expected probabilities for the comparison of these models for control subjects are: 0.0505, 0.1015, 0.0532 and 0.7948, respectively, and for patients: 0.0345, 0.0345, 0.0348 and 0.8962, respectively. The exceedance probabilities for comparison of Models 1, 2, 3 and 4 for control subjects are 0, 0.0001, 0 and 0.999, respectively and for patients: 0, 0, 0 and 1, respectively. The exceedance probability, e.g. for Model 4, is the probability that its frequency is higher than for any other model while the expected posterior probabilities represent the estimated frequencies with which these models are used in the population. Thus, the four dipole model emerged as the clear winning model family that best explained the data for both groups.
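The relation between expected posterior probabilities and exceedance probabilities can be illustrated by sampling from a Dirichlet distribution over model frequencies. In the sketch below the Dirichlet parameters are hypothetical: they are back-computed from the control group's reported expected probabilities under the assumption of a uniform prior (one pseudo-count per model) and 17 subjects, and they are not values taken from the analysis itself.

```python
import random


def expected_and_exceedance(alpha, n_samples=20000, seed=0):
    """Expected model frequencies and exceedance probabilities for Dirichlet(alpha)."""
    rng = random.Random(seed)
    total = sum(alpha)
    expected = [a / total for a in alpha]
    wins = [0] * len(alpha)
    for _ in range(n_samples):
        # A Dirichlet draw is a set of normalised Gamma(alpha_k, 1) draws;
        # normalising does not change which model is largest, so it is skipped.
        draws = [rng.gammavariate(a, 1.0) for a in alpha]
        wins[draws.index(max(draws))] += 1
    exceedance = [w / n_samples for w in wins]
    return expected, exceedance


# Assumed: alpha_k = reported expected probability * (17 subjects + 4 models)
reported = [0.0505, 0.1015, 0.0532, 0.7948]
alpha = [r * (17 + 4) for r in reported]
expected, exceedance = expected_and_exceedance(alpha)
print("expected:", [round(p, 4) for p in expected])
print("exceedance:", [round(p, 4) for p in exceedance])
```

The exceedance probability of a model is estimated here as the fraction of draws in which that model has the highest frequency.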

Source localization for the aphasic patients was further constrained by lesion topography in a semi-supervised manner. For each patient, we checked the location of the resultant dipoles of their best model (out of the 100) with respect to their lesion. For the majority (14/25), the VB-ECD method fitted the dipoles from this model outside their lesions. For the remaining patients, a single dipole in the left hemisphere was found to be inside the lesion. To correct for this, we evaluated the model with the next best model evidence until we found a dipole configuration that did not overlap with the lesion topography. For these 11 patients, the reduction in model evidence for substituting the more anatomically plausible source had a median Bayes factor of 3.4 ± 1.6, compared with the ‘winning’ model. This value is ∼3 and thus considered trivial [the corresponding posterior probability is 3/(1 + 3) = 0.75].

We found a four dipole model to best characterize the mismatch negativity; this is consistent with previous MEG studies (Schofield et al., 2009). However, it is certainly possible that given higher signal to noise data, or a different electrophysiological measure (e.g. EEG) other sources might become apparent. In some EEG studies for example a right inferior frontal source has been implicated (e.g. Kiebel et al., 2007). We compared this extended model (five sources) with our existing model and found it to be a much less likely explanation of these data (posterior expected probability of 0.037 when compared to the four source model).

Having identified the four dipole model as the one best explaining the MEG responses to standards at a fixed point in time (∼100 ms), we then used these sources to evaluate the evoked responses to both the standard and deviants over the entire time period (0–300 ms). We did this in two ways: firstly, in a standard univariate approach through tests on the event-related potential amplitudes and latencies at each source; and secondly, in a multivariate dynamic causal modelling analysis where interregional interactions are expressed over the whole of peristimulus time (0–300 ms).

The average latencies of the M100 in control subjects and patients were 111.64 ± 19.07 ms and 119.62 ± 20.88 ms, respectively (n = 24 as one patient did not show a clear M100 response). In the control subjects, the average coordinates of the dipoles in left A1, right A1, left STG and right STG were (−40 ± 4, −34 ± 3, −1 ± 4), (51 ± 4, −24 ± 4, −1 ± 4), (−53 ± 5, −30 ± 4, 2 ± 4) and (44 ± 3, −29 ± 5, −6 ± 4), respectively. In the patients, the average coordinates of the four sources in left and right A1, and left and right STG were (−48 ± 3, −27 ± 4, −11 ± 4), (48 ± 4, −25 ± 3, −6 ± 3), (−53 ± 4, −40 ± 3, −3 ± 2) and (48 ± 4, −26 ± 3, −6 ± 2), respectively.

Event-related potential source-space analysis of mismatch fields

We measured the amplitudes and latencies of the evoked difference waves for the three deviants within a window of 150–250 ms for each dipole within the winning model. We selected a single best source (either A1 or STG) in each hemisphere in a data-driven manner by analysing the response topography according to predefined rules: (i) sources in both hemispheres should have the same polarity; and (ii) if both sources in the same hemisphere show clean mismatch responses, then the source with the higher amplitude is selected. In the control subjects, the A1:STG ratio for the best source was 8:8 on the left and 5:11 on the right. In the patient group, the ratios were 12:12 on the left and 11:13 on the right. These values were entered into a standard 2 × 2 × 3 ANOVA (group × hemisphere × deviant) to test for statistical differences.

Dynamic causal modelling

Dynamic causal modelling of evoked MEG responses has been described previously (Garrido et al., 2007, 2008). Essentially, dynamic causal modelling invokes a neural mass model of the cortical column (Jansen and Rit, 1995) that specifies connections between the different granular layers of the cortex on the basis of known connectivity patterns (Felleman and Van Essen, 1991) e.g. forward connections synapse upon the spiny stellate cells in the granular layer of the target region, and hence have an excitatory effect. Backward connections form synapses above and below the granular layer (i.e. in the supragranular and infragranular layers), and hence can excite both excitatory pyramidal cells and inhibitory interneurons in the target region. Lateral connections (between-hemisphere) innervate all three layers of the target, and hence can also exert excitatory or inhibitory influence upon the target. A generative spatiotemporal forward model of the observed MEG source-space activity based on this connectivity pattern along with different neuronal dynamics for each layer is specified, which optimizes the difference between predicted and observed responses and estimates how well the model fits the observed data (for a review, see Kiebel et al., 2008b, 2009).

Dynamic causal modelling also incorporates (within region) self-connections. These affect the maximal amplitude of post-synaptic responses of each cell population in that region, as described in Equations (2) and (5) of Kiebel et al. (2007). These maximal responses are modulated by a gain parameter, where a gain >1 effectively increases the maximal response that can be elicited from a source. We refer to this as ‘gain control’ because it affects the sensitivity of the region to the input it receives. With other factors being equal, larger event related fields (ERFs) can be better fitted with larger gain values.

For the purpose of this study, we were interested in using dynamic causal modelling to examine the connection strengths and the modulation of connections between the four sources as a function of phonemic deviancy (i.e. D3 and D2 versus D1). This phonemic contrast reflects changes in phonemic perception as deviants D3 and D2 belonged to different vowel categories to both D1 and the standard.

The number of possible connections for a four dipole model (16) determines the number of different dynamic causal models for the network (2 ^ 16 − 1 = 65 535). As this results in a combinatorial explosion of model space, we imposed constraints on the model configuration by firstly, not allowing diagonal connections between A1 and STG in opposite hemispheres, and secondly, by including self-connections in each model. This resulted in eight connections (two forward, two backward and four lateral) that were allowed to vary (while the four self-connections were fixed) and provided us with 255 (2 ^ 8 – 1) models with different permutations of these eight connections between the four sources.
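The size of the constrained model space can be checked by enumerating it. The sketch below treats each model as a non-empty subset of the eight between-source connections; the connection labels follow from the constraints described above (no diagonal connections), while the representation as tuples of names is an illustrative choice.

```python
from itertools import combinations

SOURCES = ["left A1", "right A1", "left STG", "right STG"]

# (from, to) pairs allowed once diagonal connections are excluded
CONNECTIONS = [
    ("left A1", "left STG"),    # forward
    ("right A1", "right STG"),  # forward
    ("left STG", "left A1"),    # backward
    ("right STG", "right A1"),  # backward
    ("left A1", "right A1"),    # lateral
    ("right A1", "left A1"),    # lateral
    ("left STG", "right STG"),  # lateral
    ("right STG", "left STG"),  # lateral
]


def model_space(connections):
    """Every non-empty subset of the given connections."""
    return [subset
            for size in range(1, len(connections) + 1)
            for subset in combinations(connections, size)]


models = model_space(CONNECTIONS)
unconstrained = 2 ** (len(SOURCES) ** 2) - 1
print(len(models), "constrained models versus", unconstrained, "unconstrained")
```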

For each group, all the dynamic causal models for each participant were entered into a group-level Bayesian Model Average analysis with a random-effects design (Penny et al., 2010). This was used to calculate the average modulation of connection strength (i.e. mean of the gain for the second and third deviants versus the gain for the first deviant) for each connection across all the models in the model space. Gains are measured in log-space; hence, for each connection, an average relative gain equal to zero indicates that the phonemic boundary did not modulate connection strength, whereas an average gain greater or smaller than zero indicates that the connection strength was different for the phonemic (D3 and D2) versus the acoustic deviant (D1).

The statistical significance of the Bayesian Model Average results was examined, connection by connection, using a non-parametric proportion test (Penny et al., 2010). For each connection, the distribution of the log gain was reconstructed by taking 10 000 samples from posterior distributions calculated in the Bayesian Model Average analysis. A connection was considered to be significantly positively or negatively modulated by the phonemic contrast (D3 and D2 versus D1) if >90% of samples were greater or smaller than zero, respectively. This corresponds to a posterior probability of Ppost ≥ 0.90, an approach that has been employed previously (Richardson et al., 2011). This analysis was performed for all 12 connections for each group separately (two within-group analyses). Furthermore, we also compared the modulations of these connections as a function of phonemic deviancy between the two groups (a between-group analysis).
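The proportion test can be sketched as follows. Samples of the log gain are drawn from a mixture of per-model posteriors weighted by posterior model probability, and a connection counts as modulated if more than 90% of samples fall on one side of zero. The Gaussian posteriors, their means and standard deviations, and the model weights below are all hypothetical values chosen for illustration.

```python
import random


def sample_bma(model_posteriors, weights, n_samples=10000, seed=0):
    """Draw log-gain samples from a weighted mixture of Gaussian posteriors.

    model_posteriors: list of (mean, sd) pairs, one per model (hypothetical).
    weights: posterior model probabilities used as mixture weights.
    """
    rng = random.Random(seed)
    chosen = rng.choices(model_posteriors, weights=weights, k=n_samples)
    return [rng.gauss(mean, sd) for mean, sd in chosen]


def proportion_test(samples, criterion=0.90):
    """Label a connection by the share of samples above or below zero."""
    n = len(samples)
    p_pos = sum(s > 0 for s in samples) / n
    p_neg = sum(s < 0 for s in samples) / n
    if p_pos > criterion:
        return "positive", p_pos
    if p_neg > criterion:
        return "negative", p_neg
    return "none", max(p_pos, p_neg)


samples = sample_bma([(0.5, 0.2), (0.4, 0.2), (0.1, 0.3)], weights=[0.6, 0.3, 0.1])
label, proportion = proportion_test(samples)
print(label, round(proportion, 3))
```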

We performed a sub-analysis on the dynamic causal modelling data, splitting the group into two: those with damage to left A1 (n = 15) and those with left A1 spared (n = 10), (Table 1). The results of this analysis are available in Supplementary Fig. 2.

Results

Event-related potential source-space analysis of mismatch negativity responses

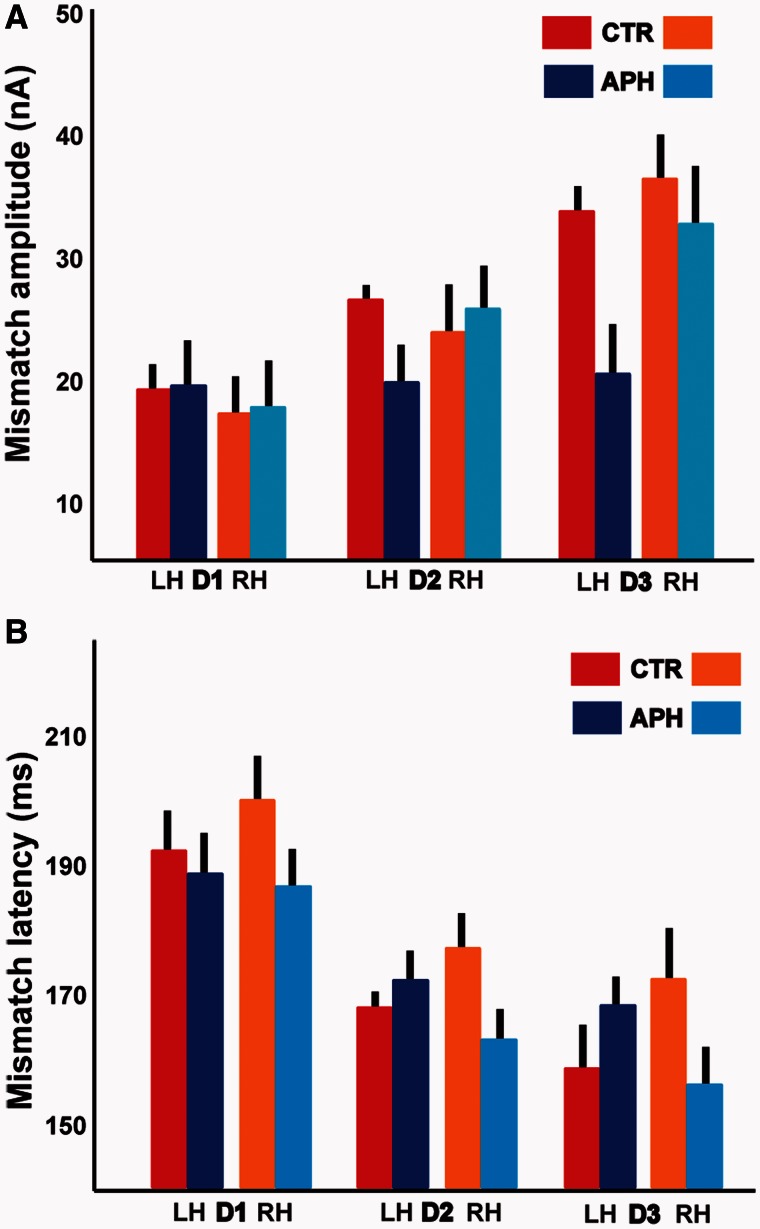

Figure 2A shows the average mismatch negativity response amplitudes for both groups. We observed a significant main effect of hemisphere [F(1,72) = 13.97; P < 0.001], a significant main effect of group [F(1,72) = 26.38; P < 0.001] and a significant interaction between hemisphere and group [F(1,72) = 12.60; P < 0.002]. We also found a significant within-subject interaction between amplitude, hemisphere and group [F(1.661,144) = 12.613; P < 0.001]. There was a main effect of hemisphere in the aphasic group [F(1,46) = 35.15; P < 0.001] but not in the control group [F(1,26) = 0.015; P > 0.9].

Figure 2.

Source-space mismatch negativity analysis. (A) The amplitudes of the mismatch negativity responses in the source-space are shown here for each deviant, hemisphere and patient group according to the colour scheme on the top right. The sources for the left and right hemisphere are collapsed across the best source amongst A1 and STG, as defined by our selection criteria (see ‘Materials and methods’ section). (B) The latencies of the mismatch negativity responses in the source-space are presented for each deviant, hemisphere and patient group based on the same colour scheme. Error bars reflect one SEM. n = 16 for control subjects (CTR) and n = 24 for patients (APH). LH = left hemisphere; RH = right hemisphere.

Interestingly, contrary to previous reports, we found robust mismatch negativity responses in the aphasic subjects but these were not significantly different between D1, D2 and D3 in the left hemisphere. Crucially, a post hoc paired samples t-test revealed that for D2 and D3, there was no significant difference between the left hemisphere amplitude in control subjects and right hemisphere amplitude in aphasics (P > 0.05). The right hemisphere mismatch responses in aphasics were thus comparable with the left hemisphere mismatch responses in healthy control subjects.

The average latencies of the mismatch negativity responses are shown in Fig. 2B for both groups. Here, we did not observe any significant effect of hemisphere or group, or an effect of hemisphere within each group. However, we observed a post hoc trend for an interaction between latency and hemisphere for D3 (P = 0.06). Furthermore, for D2 and D3 specifically, we found no significant difference between the latency of the response in the left hemisphere of control subjects and the latency of the right hemisphere response in aphasics (P = 0.64 and P = 0.36, respectively).

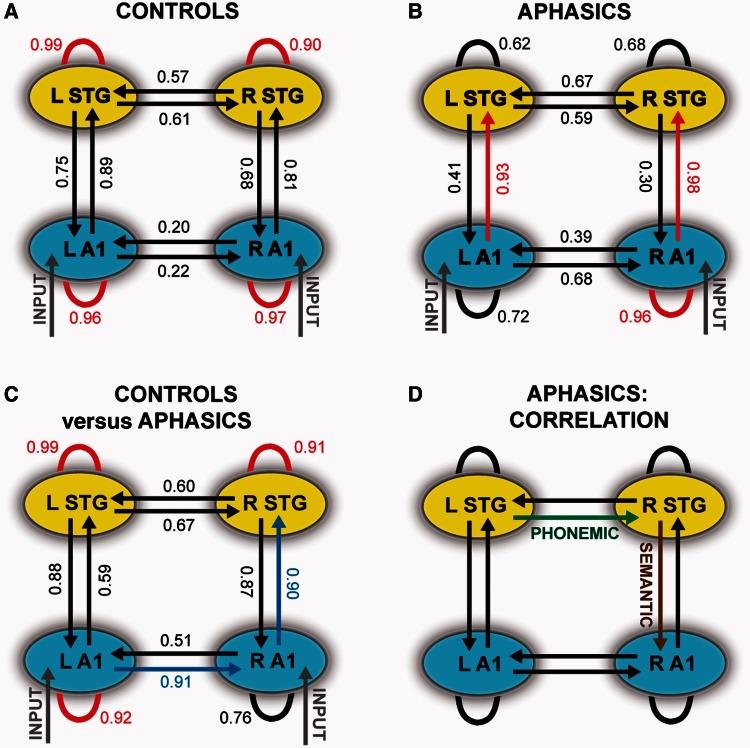

Dynamic causal modelling

Bayesian Model Average results for the modulation of connections as a function of phonemic deviancy (D2 and D3 versus D1) across the control and patient groups are shown in Fig. 3A and B, respectively. In the control subjects, we found that each of the self-connections of the four sources was significantly positively modulated suggesting enhanced sensitivity to phonemic input at all levels of the speech network. The patients, on the other hand, showed a different result: the self-connection of right A1 and the forward connections from bilateral A1 to STG were significantly positively modulated by phonemic deviancy (Fig. 3B).

Figure 3.

Average group-level Bayesian Model Average and correlation results. (A and B) The winning family of models for the best source model consisting of four dipoles is shown here for the control subjects (A) and aphasics (B), respectively. Connections that are significantly positively modulated by the phonemic deviancy (D2 and D3 versus D1) are shown in red while negatively modulated connections are shown in blue. Connections shown in black are not significantly modulated by the phonemic contrast. Auditory input is represented in grey at the level of both primary auditory cortices. (C) Significantly modulated connections for a comparison between control subjects and aphasics are shown. Red connections represent connections that are strongly modulated in control subjects versus aphasics while blue connections are those that are weakly modulated in control subjects versus aphasics. Black connections do not show any significant difference between the two groups. (A–C) The exceedance probabilities (Ppost) are shown alongside for each connection. Significantly modulated connections (Ppost ≥ 0.90) are shown in red. (D) Significant results of a correlation analysis between behavioural tests and connection strengths for all combinations of connections between the four sources are shown. The lateral connection from left to right STG is negatively correlated with tests measuring phonemic discrimination while the top–down connection from right STG to right A1 is positively correlated with a test of semantic processing (see ‘Results’ section). L = left; R = right.

The sub-analysis of the patient group (based on whether left A1 was damaged or not) demonstrated that both groups contributed to the increased forward connectivity on the right (i.e. increased prediction error mediated by right A1 to right STG), but that those with damage to left A1 were driving the increased forward connection on the left (Supplementary Fig. 2).

A separate analysis testing differences in the modulation of each connection between the two groups revealed that control subjects, relative to patients, showed stronger modulation of the self-connections of left A1, left STG and right STG, and weaker modulation of the lateral (interhemispheric) connection from left to right A1 and of the forward connection from right A1 to right STG (Fig. 3C).

Correlation between dynamic causal modelling connection strengths and aphasics’ auditory comprehension

The aphasics performed a battery of tasks examining auditory comprehension such as the Comprehensive Aphasia Test. We predicted that a correlation between the connection strength of individual connections of the speech network and the behavioural tests may serve as a diagnostic measure to assess the relationship between improvement in speech perception and the underlying network function at the level of single connections.

We found a significant negative correlation between the connection strength of the lateral connection from left STG to right STG (interhemispheric) and three tests of phonemic discrimination that are negatively correlated with each other (Fig. 3D): the PALPA (Psycholinguistic Assessments of Language Processing in Aphasia) word discrimination test (r = −0.71; P < 0.00001), the PALPA non-word discrimination test (r = −0.55; P = 0.004) and a vowel identification test (r = −0.70; P < 0.00001), after correcting for multiple comparisons (α = 0.05/12).

Similar analysis of the tests (20 in total) included in the standardized Comprehensive Aphasia Test showed a significant positive correlation between the connection strength of the connection from right STG to right A1 (top–down) and a written word sentence comprehension test (Fig. 3D: r = 0.66; P = 0.001) after correction for multiple comparisons (α = 0.05/20).
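The corrected thresholds quoted above (α = 0.05/12 and α = 0.05/20) can be checked against the reported P-values with a short sketch. The helper names are ours; where the text reports only a bound (P < 0.00001), the bound itself is used as a conservative stand-in for the exact value.

```python
def bonferroni_threshold(alpha, n_tests):
    """Per-test significance threshold under Bonferroni correction."""
    return alpha / n_tests


def survives_correction(p_value, alpha, n_tests):
    """True if the P-value falls below the Bonferroni-corrected threshold."""
    return p_value < bonferroni_threshold(alpha, n_tests)


# (test, reported P-value or its upper bound, number of comparisons)
reported = [
    ("PALPA words", 0.00001, 12),           # reported as P < 0.00001
    ("PALPA non-words", 0.004, 12),
    ("Vowel identification", 0.00001, 12),  # reported as P < 0.00001
    ("Written word sentence comprehension", 0.001, 20),
]

for name, p, n in reported:
    thr = bonferroni_threshold(0.05, n)
    print(f"{name}: P = {p} vs threshold {thr:.5f} -> {survives_correction(p, 0.05, n)}")
```

The non-word discrimination result (P = 0.004) lies just below the corrected threshold of 0.05/12 ≈ 0.0042, so it is the most marginal of the four reported correlations.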

Discussion

Although the main aim of this study was to investigate interregional temporal lobe connectivity in response to a speech mismatch negativity paradigm, the standard univariate mismatch negativity analysis was also informative (Fig. 2). It showed, as some previous EEG studies have, that left-hemisphere damage following a stroke results in smaller amplitude mismatch negativities to speech stimuli (Aaltonen et al., 1993; Wertz et al., 1998; Csépe et al., 2001; Ilvonen et al., 2004); although here, by using MEG, we have been able to confirm that this occurs at the source-level in the left-hemisphere only. In contrast, and consistent with one other report (Becker and Reinvang, 2007), we found that speech mismatch negativities in the right hemisphere were significantly greater than left hemisphere responses for patients, suggesting that some cortical reorganization of speech sound analysis had occurred in response to dominant hemisphere stroke.

We used dynamic causal modelling to characterize this putative reorganization in more detail and investigate how it arises from both within and between region connections. Because the contrast employed throughout the dynamic causal modelling analyses was D2 and D3 versus D1, we could investigate the connectivity effects of phonemic deviancy, over and above the effects of a clearly perceived acoustic deviant (D1, Table 1). We found differences between the patients and control subjects for all self-connections barring right A1 (Fig. 3C). These self-connections act as a gain control and reflect sensitivity to auditory inputs. The greater the gain, the greater the regional response will be to any given unit of neural input; in this case, related to phoneme perception. In Näätänen et al.’s (2007) influential mismatch negativity study, reduced neural responses were found to a non-native phonemic deviant. Here we find a similar effect for aphasic patients but expressed at the level of these all-important self-connections. Perhaps it is no surprise that both left hemisphere regions were affected, but the addition of right STG suggests that left-hemisphere stroke has a wide-ranging effect on phonemic representations in both hemispheres. In terms of interregional connections, one difference was a stronger feed-forward connection in the right hemisphere (right A1 → right STG). According to the predictive coding account of hierarchical processing (Mumford, 1992; Rao and Ballard, 1999), these connections code prediction errors; that is, a mismatch between sensation (sensory input) and expectation (long-term representations); the worse (less veridical) the predictions, the greater the prediction error. The other stronger connection was a lateral connection at the lower level of the cortical hierarchy (left A1 → right A1). These two findings suggest that the patients are relying more than the control subjects on the right-hemisphere components of the auditory network for processing speech mismatch negativities; however, this network is unable to process the stimuli as efficiently, as evidenced by the reduced self-connections and the increased feed-forward connection. This result was recapitulated using a dynamic causal modelling analysis of functional MRI data collected on a subset of these patients in response to more naturalistic speech stimuli (idioms and phrasal verbs; Brodersen et al., 2011).
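The two quantities invoked in this argument, gain on a self-connection and prediction error on a forward connection, can be made concrete with a deliberately simplified toy sketch. It is not the neural mass model used in dynamic causal modelling; the functions and all numerical values are hypothetical and serve only to show the direction of the effects.

```python
import math


def regional_response(neural_input, log_gain):
    """Toy gain control: the response scales with exp(log_gain), so a log-gain
    of zero leaves the input unchanged and a lower gain gives a weaker response."""
    return math.exp(log_gain) * neural_input


def prediction_error(sensory_input, prediction):
    """Toy prediction error: unsigned mismatch between input and expectation."""
    return abs(sensory_input - prediction)


sensory_input = 1.0  # hypothetical unit of phonemic input

# Higher self-connection gain -> larger response to the same input.
print(regional_response(sensory_input, log_gain=0.4))
print(regional_response(sensory_input, log_gain=0.0))

# A less veridical prediction -> larger error passed up the hierarchy.
print(prediction_error(sensory_input, prediction=0.9))
print(prediction_error(sensory_input, prediction=0.5))
```

In this toy picture, reduced self-connection modulation corresponds to the lower log-gain case, and a stronger feed-forward connection corresponds to the larger prediction error.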

Twenty-four of the 25 aphasic patients had damage to either left A1 or left STG but the patients varied as to whether left A1 was involved (n = 15) or not (n = 10). A sub-analysis of the patient data alone was carried out, splitting the patients along these lines. This analysis demonstrated that the stronger feed-forward connection in the right hemisphere seen in Fig. 3B (right A1 → right STG) was significantly driven by both groups; however, the homologous connection on the left (left A1 → left STG) was mainly driven by those patients with damage to left A1. This result is consistent with the predictive coding account: damage to A1 probably results in noisier sensory encoding in this cortical region, leading to increased mismatch with top–down predictions from left STG and hence to increased prediction error.

Most modern models of speech comprehension are hierarchically structured and recognize that auditory signals are processed bilaterally, at least for ‘early’ processing of speech (Scott and Wise, 2004; Hickok and Poeppel, 2007; Rauschecker and Scott, 2009). Differences are clear, however, in the way two key functions are modelled: (i) where higher-order (abstract, modality independent) language representations are stored; and (ii) how sensory and motor cortices interact when perceiving and producing speech. We cannot shed any light on the second point as our task relied on ‘automatic’ perceptual processing and, given the stream of stimuli, passive activation of speech motor systems seems remote. Our data can, however, say something about the effects of higher-order language representations on connectivity. We have shown that these can be manifest in both hemispheres and surprisingly low down in the cortical hierarchy (A1); however, the source(s) of these higher-order representations are almost certainly from areas outside those modelled in our analyses. They could originate from dominant frontal or parietal cortex (Rauschecker and Scott, 2009) or from more ventral and lateral left temporal lobe (Hickok and Poeppel, 2007). Identifying these areas with our current data is too ambitious as the design would need to be optimized to identify semantic regions, probably by including a condition that contained incomprehensible speech sounds. All of our stimuli were comprehensible words and would thus be expected to implicitly activate semantic regions to an equivalent extent.

Our data provide support for certain types of language models in relation to the connections whose strength correlated with behavioural measures (Fig. 3D). All the patients had long-standing impairments of speech comprehension, although they varied on a range of behavioural measures (Table 2). We tested whether any connection strengths correlated with behavioural performance and found two that survived correction for multiple comparisons: a feedback connection in the right hemisphere (right STG → right A1), and a lateral connection between the two STGs (left STG → right STG). The interhemispheric connection between the two STGs correlated negatively with three tests of phonemic perception. This suggests that those patients with better phonemic perception have a greater decoupling (less influence) between the left and right STGs. This decoupling might represent the best that the system can now manage, one in which the left and right STGs maintain their specialized functions, but no longer work in tandem; or it might represent a maladaptive response to left-hemisphere damage that may serve to limit recovery. Analysis of the longitudinal data should resolve this issue. These data provide support for models that posit shared phonemic functionality between left and right secondary auditory cortex. Because we varied the vowel component of our stimuli and found changes in both hemispheres, our data do not support models where left hemisphere auditory regions are specialized for short transients (consonants) and the right more specialized for longer ones (vowels) (Zatorre et al., 2002; Poeppel, 2003).

The feedback (right STG → right A1) connection strength correlated positively with a semantic measure (written word sentence comprehension), that is, the stronger the connection, the better patients were at written word comprehension. It may seem puzzling that a connection driven by an auditory mismatch to vowels of a different category correlated with a reading measure but there is evidence in the literature that reading automatically activates auditory cortex, presumably through obligatory phonemic activation (Haist et al., 2001). We interpret this finding as evidence that a higher order region (STG) in the right hemisphere of these patients is involved in processing abstract phonemic representations; abstract because the stimuli in the behavioural test were written words, while the neurophysiological correlate was a response to speech. It is difficult to be certain, in terms of cognitive models, at what level this effect is being played out. It could be that for the aphasic patients, the right STG is involved in mediating a specific phonological function such as grapheme to phoneme conversion or phonological working memory; or it may mean that a more general process, such as phonological awareness, is now being supported by the right hemisphere.

Our results suggest that right-sided auditory cortices can support phonemic perception in aphasic patients (Crosson et al., 2007). The right hemisphere clearly has some capacity even pre-stroke to do this as, during a Wada test where the dominant hemisphere is effectively anaesthetized, subjects are significantly above chance on tests of auditory language perception (Hickok et al., 2008). Previous functional imaging and brain stimulation studies have also highlighted the interaction between the two hemispheres in recovery from aphasic stroke, the nature of which is dependent on the amount of damage sustained by the left hemisphere (Postman-Caucheteux et al., 2010; Hamilton et al., 2011), and time since stroke (Saur et al., 2006). It is important to note, however, that this is a cross-sectional study in a group of patients who remain impaired, so while the differences in auditory cortical connectivity demonstrated here suggest we are visualizing a process of network plasticity, longitudinal data are required to convincingly prove that this is supporting improved speech perception.

Conclusion

We have carried out a systematic investigation of speech and language function in aphasic patients using sophisticated behavioural, functional imaging and modelling techniques. Our results suggest a mechanism in the right hemisphere that may support speech perception presumed to be traditionally mediated by the left hemisphere as well as a marked difference in the connectivity between the sources of the speech network between aphasics and healthy control subjects. This differential connectivity can be explained on the basis of a predictive coding theory which suggests increased prediction error and decreased sensitivity to phonemic boundaries in the aphasics’ speech network in both hemispheres. Longitudinal data from the same patients, who were participating in a drug and behavioural therapy trial, will help to shed more light on this and any therapy related changes in their speech comprehension.

Funding

The study was funded by a Wellcome Trust Intermediate Clinical Fellowship (ref. ME033459MES) titled ‘Imaging the neural correlates of cholinergic and behaviour driven rehabilitation in patients with Wernicke’s aphasia’. The work was supported by the National Institute for Health Research University College London Hospitals Biomedical Research Centre. This work was also funded by the James S McDonnell Foundation.

Supplementary material

Supplementary material is available at Brain online.

Acknowledgements

The authors acknowledge Guillaume Flandin for technical support and the use of the UCL Legion High Performance Computing Facility, and associated support services, in the completion of this work.

Glossary

Abbreviations

- A1: primary auditory cortex

- ERB: equivalent rectangular bandwidth

- MEG: magnetoencephalography

- STG: superior temporal gyrus

References

- Aaltonen O, Tuomainen J, Laine M, Niemi P. Cortical differences in tonal versus vowel processing as revealed by an ERP component called mismatch negativity (MMN). Brain Lang. 1993;44:139–52. doi: 10.1006/brln.1993.1009.

- Alho K. Cerebral generators of mismatch negativity (MMN) and its magnetic counterpart (MMNm) elicited by sound changes. Ear Hear. 1995;16:38–51. doi: 10.1097/00003446-199502000-00004.

- Ashburner J, Friston KJ. Unified segmentation. Neuroimage. 2005;26:839–51. doi: 10.1016/j.neuroimage.2005.02.018.

- Bakheit AM, Shaw S, Carrington S, Griffiths S. The rate and extent of improvement with therapy from the different types of aphasia in the first year after stroke. Clin Rehabil. 2007;21:941–9. doi: 10.1177/0269215507078452.

- Becker F, Reinvang I. Mismatch negativity elicited by tones and speech sounds: changed topographical distribution in aphasia. Brain Lang. 2007;100:69–78. doi: 10.1016/j.bandl.2006.09.004.

- Blumstein SE, Baker E, Goodglass H. Phonological factors in auditory comprehension in aphasia. Neuropsychologia. 1977;15:19–30. doi: 10.1016/0028-3932(77)90111-7.

- Brodersen KH, Schofield TM, Leff AP, Ong CS, Lomakina EI, Buhmann JM, et al. Generative embedding for model-based classification of fMRI data. PLoS Comput Biol. 2011;7:e1002079. doi: 10.1371/journal.pcbi.1002079.

- Crosson B, McGregor K, Gopinath KS, Conway TW, Benjamin M, Chang YL, et al. Functional MRI of language in aphasia: a review of the literature and the methodological challenges. Neuropsychol Rev. 2007;17:157–77. doi: 10.1007/s11065-007-9024-z.

- Csépe V, Osman-Sági J, Molnár M, Gósy M. Impaired speech perception in aphasic patients: event-related potential and neuropsychological assessment. Neuropsychologia. 2001;39:1194–208. doi: 10.1016/s0028-3932(01)00052-5.

- Deichmann R, Schwarzbauer C, Turner R. Optimization of the 3D MDEFT sequence for anatomical brain imaging: technical implications at 1.5 and 3 T. Neuroimage. 2004;21:757–67. doi: 10.1016/j.neuroimage.2003.09.062.

- Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a.

- Friston KJ. A theory of cortical responses. Philos Trans R Soc Lond B Biol Sci. 2005;360:815–36. doi: 10.1098/rstb.2005.1622.

- Friston KJ, Harrison L, Penny WD. Dynamic causal modeling. Neuroimage. 2003;19:1273–302. doi: 10.1016/s1053-8119(03)00202-7.

- Friston KJ, Kiebel S. Predictive coding under the free-energy principle. Philos Trans R Soc Lond B Biol Sci. 2009;364:1211–21. doi: 10.1098/rstb.2008.0300.

- Garrido MI, Friston KJ, Kiebel SJ, Stephan KE, Baldeweg T, Kilner JM. The functional anatomy of the MMN: a DCM study of the roving paradigm. Neuroimage. 2008;42:936–44. doi: 10.1016/j.neuroimage.2008.05.018.

- Garrido MI, Kilner JM, Kiebel SJ, Stephan KE, Friston KJ. Dynamic causal modelling of evoked potentials: a reproducibility study. Neuroimage. 2007;36:571–80. doi: 10.1016/j.neuroimage.2007.03.014.

- Goldenberg G, Spatt J. Influence of size and site of cerebral lesions on spontaneous recovery of aphasia and on success of language therapy. Brain Lang. 1994;47:684–98. doi: 10.1006/brln.1994.1063.

- Griffiths TD, Warren JD. The planum temporale as a computational hub. Trends Neurosci. 2002;25:348–53. doi: 10.1016/s0166-2236(02)02191-4.

- Haist F, Song AW, Wild K, Faber TL, Popp CA, Morris RD. Linking sight and sound: fMRI evidence of primary auditory cortex activation during visual word recognition. Brain Lang. 2001;76:340–50. doi: 10.1006/brln.2000.2433.

- Hamilton RH, Chrysikou EG, Coslett B. Mechanisms of aphasia recovery after stroke and the role of noninvasive brain stimulation. Brain Lang. 2011;118:40–50. doi: 10.1016/j.bandl.2011.02.005.

- Hickok G, Okada K, Barr W, Pa J, Rogalsky C, Donnelly K, et al. Bilateral capacity for speech sound processing in auditory comprehension: evidence from Wada procedures. Brain Lang. 2008;107:179–84. doi: 10.1016/j.bandl.2008.09.006.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nat Rev Neurosci. 2007;8:393–402. doi: 10.1038/nrn2113.

- Ilvonen T, Kujala T, Kozou H, Kiesiläinen A, Salonen O, Alku P, et al. The processing of speech and non-speech sounds in aphasic patients as reflected by the mismatch negativity (MMN). Neurosci Lett. 2004;366:235–40. doi: 10.1016/j.neulet.2004.05.024.

- Ilvonen TM, Kujala T, Kiesiläinen A, Salonen O, Kozou H, Pekkonen E, et al. Auditory discrimination after left-hemisphere stroke: a mismatch negativity follow-up study. Stroke. 2003;34:1746–51. doi: 10.1161/01.STR.0000078836.26328.3B.

- Ilvonen TM, Kujala T, Tervaniemi M, Salonen O, Näätänen R, Pekkonen E. The processing of sound duration after left hemisphere stroke: event-related potential and behavioral evidence. Psychophysiology. 2001;38:622–8.

- Iverson P, Evans BG. Learning English vowels with different first-language vowel systems: perception of formant targets, formant movement, and duration. J Acoust Soc Am. 2007;122:2842–54. doi: 10.1121/1.2783198.

- Jansen BH, Rit VG. Electroencephalogram and visual evoked potential generation in a mathematical model of coupled cortical columns. Biol Cybern. 1995;73:357–66. doi: 10.1007/BF00199471.

- Javitt DC, Steinschneider M, Schroeder CE, Arezzo JC. Role of cortical N-methyl-D-aspartate receptors in auditory sensory memory and mismatch negativity generation: implications for schizophrenia. Proc Natl Acad Sci USA. 1996;93:11962–7. doi: 10.1073/pnas.93.21.11962.

- Kertesz A, Lau WK, Polk M. The structural determinants of recovery in Wernicke’s aphasia. Brain Lang. 1993;44:153–64. doi: 10.1006/brln.1993.1010.

- Kiebel SJ, Daunizeau J, Phillips C, Friston KJ. Variational Bayesian inversion of the equivalent current dipole model in EEG/MEG. Neuroimage. 2008a;39:728–41. doi: 10.1016/j.neuroimage.2007.09.005.

- Kiebel SJ, Garrido MI, Friston KJ. Dynamic causal modelling of evoked responses: the role of intrinsic connections. Neuroimage. 2007;36:332–45. doi: 10.1016/j.neuroimage.2007.02.046.

- Kiebel SJ, Garrido MI, Moran R, Chen CC, Friston KJ. Dynamic causal modeling for EEG and MEG. Hum Brain Mapp. 2009;30:1866–76. doi: 10.1002/hbm.20775.

- Kiebel SJ, Garrido MI, Moran RJ, Friston KJ. Dynamic causal modelling for EEG and MEG. Cogn Neurodyn. 2008b;2:121–36. doi: 10.1007/s11571-008-9038-0.

- Klatt DH, Klatt LC. Analysis, synthesis, and perception of voice quality variations among female and male talkers. J Acoust Soc Am. 1990;87:820–57. doi: 10.1121/1.398894.

- Kraus N, McGee T, Sharma A, Carrell T, Nicol T. Mismatch negativity event-related potential elicited by speech stimuli. Ear Hear. 1992;13:158–64. doi: 10.1097/00003446-199206000-00004.

- Leff AP, Iverson P, Schofield TM, Kilner JM, Crinion JT, Friston KJ, et al. Vowel-specific mismatch responses in the anterior superior temporal gyrus: an fMRI study. Cortex. 2009;45:517–26. doi: 10.1016/j.cortex.2007.10.008.

- Leff AP, Schofield TM, Stephan KE, Crinion JT, Friston KJ, Price CJ. The cortical dynamics of intelligible speech. J Neurosci. 2008;28:13209–15. doi: 10.1523/JNEUROSCI.2903-08.2008.

- Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1971;49(Suppl. 2):467.

- Litvak V, Mattout J, Kiebel S, Phillips C, Henson R, Kilner J, et al. EEG and MEG data analysis in SPM8. Comput Intell Neurosci. 2011;2011:852961. doi: 10.1155/2011/852961.

- Luo H, Poeppel D. Phase patterns of neural responses reliably discriminate speech in human auditory cortex. Neuron. 2007;54:1001–10. doi: 10.1016/j.neuron.2007.06.004.

- Moore BC, Glasberg BR. Suggested formulae for calculating auditory-filter bandwidths and excitation patterns. J Acoust Soc Am. 1983;74:750–3. doi: 10.1121/1.389861.

- Mumford D. On the computational architecture of the neocortex. II. The role of cortico-cortical loops. Biol Cybern. 1992;66:241–51. doi: 10.1007/BF00198477.

- Näätänen R. The perception of speech sounds by the human brain as reflected by the mismatch negativity (MMN) and its magnetic equivalent (MMNm). Psychophysiology. 2001;38:1–21. doi: 10.1017/s0048577201000208.

- Näätänen R, Paavilainen P, Rinne T, Alho K. The mismatch negativity (MMN) in basic research of central auditory processing: a review. Clin Neurophysiol. 2007;118:2544–90. doi: 10.1016/j.clinph.2007.04.026.

- Opitz B, Mecklinger A, Friederici AD, Von Cramon DY. The functional neuroanatomy of novelty processing: integrating ERP and fMRI results. Cereb Cortex. 1999;9:379–91. doi: 10.1093/cercor/9.4.379.

- Opitz B, Rinne T, Mecklinger A, Von Cramon DY, Schröger E. Differential contribution of frontal and temporal cortices to auditory change detection: fMRI and ERP results. Neuroimage. 2002;15:167–74. doi: 10.1006/nimg.2001.0970.

- Pedersen PM, Vinter K, Olsen TS. Aphasia after stroke: type, severity and prognosis. The Copenhagen aphasia study. Cerebrovasc Dis. 2004;17:35–43. doi: 10.1159/000073896.

- Penny WD, Stephan KE, Daunizeau J, Rosa MJ, Friston KJ, Schofield TM, et al. Comparing families of dynamic causal models. PLoS Comput Biol. 2010;6:e1000709. doi: 10.1371/journal.pcbi.1000709.

- Poeppel D. The analysis of speech in different temporal integration windows: cerebral lateralization as ‘asymmetric sampling in time’. Speech Commun. 2003;41:245–55.

- Postman-Caucheteux WA, Birn R, Pursley R, Butman J, Solomon J, Picchioni D, et al. Single-trial fMRI shows contralesional activity linked to overt naming errors in chronic aphasic patients. J Cogn Neurosci. 2010;22:1299–318. doi: 10.1162/jocn.2009.21261.

- Price CJ, Seghier ML, Leff AP. Predicting language outcome and recovery after stroke: the PLORAS system. Nat Rev Neurol. 2010;6:202–10. doi: 10.1038/nrneurol.2010.15.

- Rao RP, Ballard DH. Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat Neurosci. 1999;2:79–87. doi: 10.1038/4580.

- Rauschecker JP, Scott SK. Maps and streams in the auditory cortex: nonhuman primates illuminate human speech processing. Nat Neurosci. 2009;12:718–24. doi: 10.1038/nn.2331.

- Richardson FM, Seghier ML, Leff AP, Thomas MS, Price CJ. Multiple routes from occipital to temporal cortices during reading. J Neurosci. 2011;31:8239–47. doi: 10.1523/JNEUROSCI.6519-10.2011.

- Saur D, Lange R, Baumgaertner A, Schraknepper V, Willmes K, Rijntjes M, et al. Dynamics of language reorganization after stroke. Brain. 2006;129:1371–84. doi: 10.1093/brain/awl090.

- Schofield TM, Iverson P, Kiebel SJ, Stephan KE, Kilner JM, Friston KJ, et al. Changing meaning causes coupling changes within higher levels of the cortical hierarchy. Proc Natl Acad Sci USA. 2009;106:11765–70. doi: 10.1073/pnas.0811402106.

- Schofield TM, Penny WD, Stephan KE, Crinion JT, Thompson AJ, Price CJ, et al. Changes in auditory feedback connections determine the severity of speech processing deficits after stroke. J Neurosci. 2012;32:4260–70. doi: 10.1523/JNEUROSCI.4670-11.2012.

- Scott SK, Wise RJ. The functional neuroanatomy of prelexical processing in speech perception. Cognition. 2004;92:13–45. doi: 10.1016/j.cognition.2002.12.002.

- Seghier ML, Ramlackhansingh A, Crinion J, Leff AP, Price CJ. Lesion identification using unified segmentation-normalisation models and fuzzy clustering. Neuroimage. 2008;41:1253–66. doi: 10.1016/j.neuroimage.2008.03.028.

- Sharma A, Kraus N, McGee T, Carrell T, Nicol T. Acoustic versus phonetic representation of speech as reflected by the mismatch negativity event-related potential. Electroencephalogr Clin Neurophysiol. 1993;88:64–71. doi: 10.1016/0168-5597(93)90029-o.

- Stephan KE, Penny WD, Daunizeau J, Moran RJ, Friston KJ. Bayesian model selection for group studies. Neuroimage. 2009;46:1004–17. doi: 10.1016/j.neuroimage.2009.03.025.

- Swinburn K, Porter G, Howard D. The Comprehensive Aphasia Test. Hove: Psychology Press; 2005.

- Ulanovsky N, Las L, Nelken I. Processing of low-probability sounds by cortical neurons. Nat Neurosci. 2003;6:391–8. doi: 10.1038/nn1032.

- Wertz RT, Auther LL, Burch-Sims GP, Abou-Khalil R, Kirshner HS, Duncan GW. A comparison of the mismatch negativity (MMN) event-related potential to tone and speech stimuli in normal and aphasic adults. Aphasiology. 1998;12:499–507.

- Zatorre RJ, Belin P, Penhune VB. Structure and function of auditory cortex: music and speech. Trends Cogn Sci. 2002;6:37–46. doi: 10.1016/s1364-6613(00)01816-7.
