Abstract

Objective

To test the hypothesis that HANDS “big picture summary” can be implemented uniformly across diverse settings and result in positive RN and plan of care (POC) data outcomes across time.

Design

In a longitudinal, multi-site, full test design, a representative convenience sample of 8 medical-surgical units from 4 hospitals (1 university, 2 large community, and 1 small community) in one Midwestern state implemented the HANDS intervention for 24 (4 units) or 12 (4 units) months.

Measurements

1) RN outcomes: percentage completing training, satisfaction with standardized terminologies, perception of HANDS usefulness, and POC submission compliance rate. 2) POC data outcomes: validity (rate of optional changes/episode), reliability of terms and ratings, and volume of standardized data generated.

Results

100% of the RNs who worked on the 8 study units successfully completed the required standardized training; all units selected participated for the entire 12- or 24-month designated period; compliance rates for POC entry at every patient handoff were 78% to 92%; reliability coefficients for use of the standardized terms and ratings were moderately strong; the pattern of optional POC change per episode declined but remained reasonable across time; the nurses generated a database of 40,747 episodes of care.

Limitations

Only RNs and medical-surgical units participated.

Conclusion

It is possible to effectively standardize the capture and visualization of useful “big picture” healthcare information across diverse settings. Findings offer a viable alternative to the current practice of introducing new health information layers that ultimately increase the complexity and inconsistency of information for front line users.

Keywords: continuity of care, plan of care, standardization, terminologies, electronic health record, interdisciplinary, nursing

I. INTRODUCTION

Accurate information flow to and from members of the patient’s dynamically changing care team is fundamental to achieving the Health Information Technology for Economic and Clinical Health Act goals for meaningful use of electronic health records (EHRs) (Blumenthal & Tavenner, 2010; Department of Health and Human Services [DHHS], 2010). When systems and tools do not make it easy for the multiple care team members to have the same perspective of care goals and outcomes, the continuity, reliability, safety, and effectiveness of care are compromised. In spite of efforts for two decades to improve health information flow through electronic tools, there continues to be wide variation in the type, method of collection, storage, retrieval, and display of information in EHRs. The additional processing needed to reconcile information that appears in multiple ways can lead to errors attributable to cognitive overload, misinterpretation, and other unintended consequences. Particularly lacking in today’s EHRs are plan of care (POC) tools based on standardized terminologies and processes. We report results of a multi-site study of the feasibility and utility, under real practice conditions, of implementing a technology-supported “standardized” method, the Hands-on Automated Nursing Data System (HANDS), for collecting and presenting POC information as a succinct summary of care goals, interventions, and outcomes designed to keep clinicians on the same page. The hypothesis tested was that HANDS, implemented uniformly across diverse settings, results in positive RN and POC data outcomes across time.

II. BACKGROUND

A shared understanding among team members is critically important to achieving continuity, safety, and high-quality health outcomes (Joint Commission, 2010). Yet, a recent study of eight of the best electronic health records in the US showed that individual clinicians spend too much time sifting through raw data to get a true picture of patients’ situations. This sifting wastes clinicians’ precious cognitive resources and makes it difficult to see higher order issues (Stead & Lin, 2009). The use of a common model that helps the care team stay on the same page about a patient’s dynamic care story is one way to address the problem (Stead & Lin, 2009). HANDS is one such model, but until this study it had not been tested in multiple real-practice settings over an extended time.

When errors can result in catastrophic consequences, information reliability is essential to carry out interdependent tasks that produce successful outcomes (Hutchins, 1990, 1995; Weick, 1987; Weick & Roberts, 1993; Weick & Sutcliffe, 2001; Weick & Sutcliffe, 2006). This reliability is particularly important in healthcare settings where care received by a single patient is provided by many clinicians from multiple professions across time. Care success is directly tied to the effectiveness and integration of many clinicians’ actions rather than actions of a single clinician or profession (Institute of Medicine, 2001). Maintaining a current and shared understanding of goals, and of the processes to achieve them, is a key attribute of successful information flow in organizations with strong safety cultures and few errors. Accordingly, it is nearly impossible to ensure continuity, safety, and quality of organizational outcomes in the absence of tools and processes that support interdependent members to stay on the same page about care (Hutchins, 1990, 1995; Weick, 1987; Weick & Roberts, 1993; Weick & Sutcliffe, 2001; Weick & Sutcliffe, 2006). In today’s healthcare systems, ensuring that clinicians hold a shared understanding of care is no easy task, given the complexity of care and the large numbers of involved clinicians. The HANDS POC system is capable of providing information needed for healthcare professionals to hold a shared understanding of care.

The importance of patient care planning has been recognized by the Joint Commission as a means of keeping clinicians on the same page (Joint Commission, 2010). Existing paper and electronic nursing POC documentation, however, is all too often completed to meet medical record requirements and is seen more as a burden than a help (Allen, 1998; Currell, Urquhart, Grant, & Hardiker, 2009; Ehrenberg & Ehnfors, 2001; Hardey, Payne, & Coleman, 2000; Kärkkäinen, Bondas, & Eriksson, 2005; Karkkainen & Eriksson, 2004; Keenan, Yakel, Tschannen, & Mandeville, 2008; Stokke & Kalfoss, 1999). This practice gap exists because the POC format and content vary. Furthermore, POCs are difficult to keep current and individualize, contain unimportant information, are oftentimes cumbersome or inaccessible, and do not specify clear lines of responsibility and accountability (Keenan et al., 2008; Lee, 2005; Lee & Chang, 2004; Mason, 1999).

About HANDS

HANDS is an electronically supported POC system designed to turn care planning into a standardized, streamlined, and meaningful process. HANDS supports care across the continuum and the coordination of the multidisciplinary healthcare teams’ actions. HANDS has the potential to overcome the practice gaps that threaten the continuity, reliability, safety, and effectiveness of care in many of today’s healthcare institutions (Keenan et al., 2002; Keenan & Yakel, 2005).

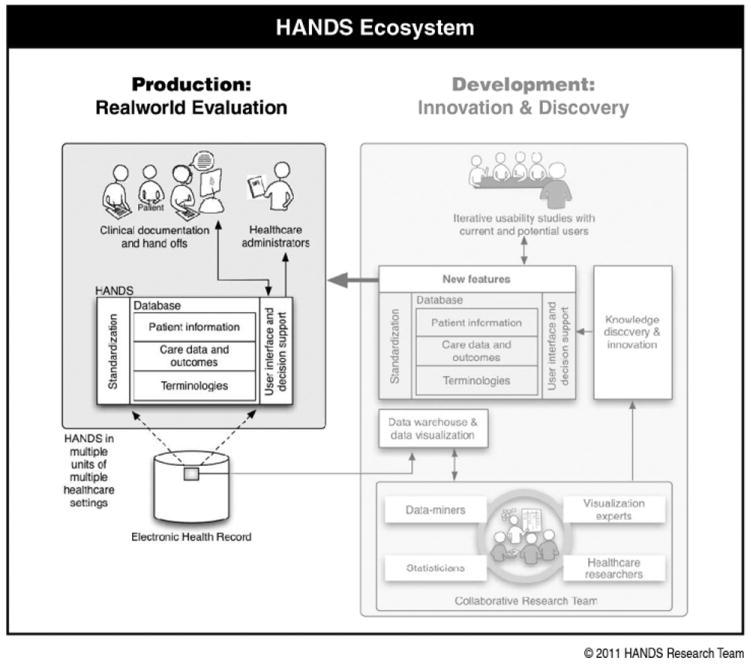

HANDS was first conceived by researchers at the University of Michigan in the mid-1990s to demonstrate benefits that can be achieved when standardized terminologies are implemented consistently in EHRs. An early transformational discovery was that use of standardized terminologies, though necessary, proved insufficient to produce standardized data. Creating genuinely reliable and valid (standardized) datasets for benchmarking and multiple other purposes requires the collection of the same data elements 1) represented by standardized terminologies, 2) at the same time points, 3) with a standard user interface, and 4) stored in a relational database with a standardized architecture. The absence of one or more of these collection criteria limits the comparability (reliability and validity) of the dataset. Today there are many examples of standardized terminologies in EHRs, but comparability is almost always limited by the absence of one or more of the criteria. The most frequent anecdotal justification for this breakdown is that each healthcare organization is unique and thus the collection of genuinely standardized clinical documentation datasets is not desirable or possible. Given the extraordinary benefits of collecting standardized data, we set out to dispel this myth. HANDS includes a standardized set of data elements, user interface, terminologies, time points of data entry, and relational database architecture. For more than a decade, our multidisciplinary team has built, piloted, and refined HANDS (Keenan et al., 2002; Keenan & Yakel, 2005; Keenan et al., submitted; Keenan et al., 2008) and continues to do so (Figure 1).

Figure 1.

The HANDS Ecosystem. The Production environment (left side) represents the people, structures, content, and processes involved in the real-world evaluation of HANDS (the focus of this study). The Development environment (right side) includes the people, structures, content, processes, connection to the Production environment, and interrelationships among them that build, maintain, and expand HANDS (shaded; not the focus of this study).

III. METHODS

A. Study sample and design

For this longitudinal, multi-site, full test, intervention study, we used representative convenience sampling to identify hospitals and medical-surgical units that differed widely on major characteristics to ensure that we could conduct a meaningful hypothesis test. First we received permission from administrators of four diverse organizations (i.e., university, small and large community hospitals) in one Midwestern state to recruit eight medical-surgical units that provided ample variety in patient population mix, size, patient acuity, and nurse-to-patient ratio. The units also met the inclusion criteria, based on literature and previous pilot testing (Keenan et al., 2008; Stokke & Kalfoss, 1999): 1) stable staffing, 2) desire of staff RNs to participate, 3) agreement that all RNs who worked on a unit would complete the HANDS training module, and 4) agreement to support the intervention for the study duration (12 or 24 months). In total, we recruited four units in Year one (Y1) that used HANDS for 24 months, and four other units in Year two (Y2) that used it for 12 months.

B. The study intervention: HANDS

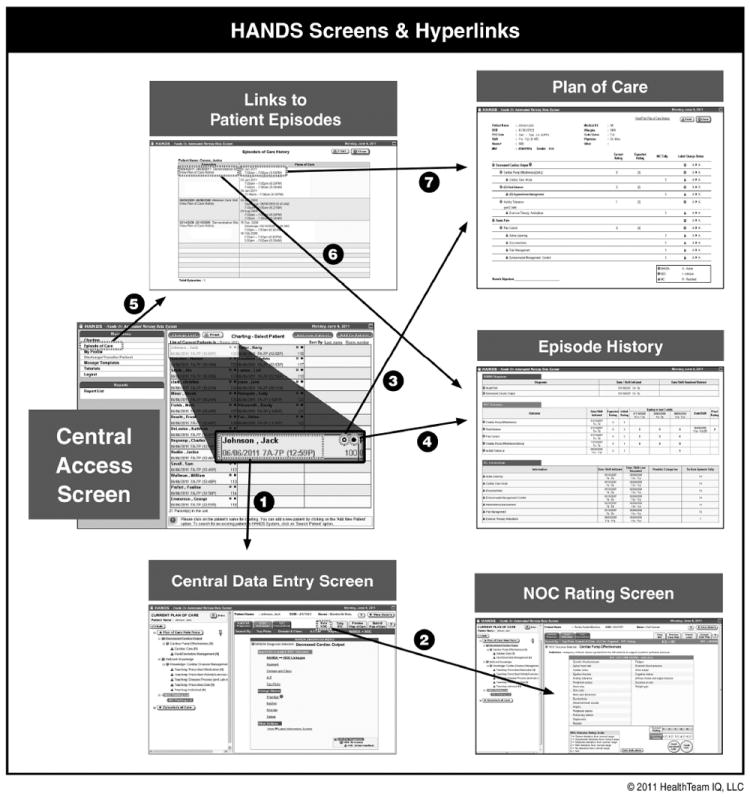

The study intervention, HANDS, consisted of 1) making the HANDS application available for POC electronic documentation, 2) training champions and preparing HANDS for unit use, 3) training remaining unit nurses to document with HANDS and use the POC in handoffs, and 4) unit real-time HANDS use. The HANDS application provides a format for easily entering or updating the patient’s POC to ensure information accuracy at handoffs and simple visualizations of the historical and current POCs (Figure 3).

Figure 3.

Six screens available in HANDS with arrows and numbers showing the connections among them: Central Access Screen provides entry to multiple parts of the system: (1) links to Central Data Entry Screen, the area where plans of care are created, updated, submitted through accessing search modes and templates; (3) links to last submitted Plan of Care, (4) links to the most current Episode History; and (5) links to Links to Patient Episodes where (6) current and all previous plans of care and (7) episode histories are accessible. The Central Data Entry Screen also links to (2) NOC Rating Screen where the clinician enters current and expected ratings.

In addition to basic demographic and medical diagnoses (ICD9s), HANDS supports the clinician to enter or change the applicable nursing diagnoses, outcomes, and interventions that applied to a patient during the clinician’s shifts. After the nurse enters the admission shift POC, for each subsequent handoff, the nurse is provided the POC from the immediate past shift, to which adjustments can be easily entered to reflect care provided during the current shift. The most current set of terms and defining attributes for each classification, NANDA-I (NANDA International, 2003) (167 diagnoses), NOC (Moorhead, Johnson, Maas, & Iowa Outcomes Project, 2004) (330 outcomes), and NIC (McCloskey Dochterman & Bulechek, 2004) (514 interventions) were available and accessible in HANDS.

The software provides many types of decision support to aid location and documentation of proper NANDA-I, NOC, and NIC terms, e.g., search modes by taxonomical structure (domains and classes), key word, alphabetical, and unit top picks. Also starter and mini-update templates by medical diagnosis, a single nursing diagnosis, or constellation of nursing diagnoses are available to add fully or partially to any admission or update POC. The application also has the ability to resolve or remove nursing diagnoses, outcomes, and interventions from the POC. An admission or update POC must be entered on all patients at every formal handoff, which holds an individual nurse accountable for the care documented on the POC for each shift during a patient’s stay. For every update plan, the clinician must minimally enter a new current rating for all NOC outcomes on the POC. The NOC ratings provide the basis for evaluating progress toward expected outcomes. The nurse is prompted to enter an expected and current rating for each NOC on the POC (admission, update) to which it is first added. The “expected” rating is that which the clinician expects the patient to achieve at discharge from one’s unit, and the “current” is the rating at the handoff or change of shift. Each NOC outcome has a rating scale from 1-5 (1=worst, 5=best, with 5 calibrated by the criterion--that which a healthy person of comparable age, gender, ethnic, and cognitive status would receive). The expected NOC rating is only entered once, with the current rating updated at every handoff (Figure 3).

C. Study Procedures

We first trained 3-5 champions per unit (40 hours each) and tailored the application to the unit’s population by creating top-pick lists and unit-specific templates for the patient population. The PI or research associate facilitated the consent process for unit nurses at the first pre-go live training sessions and for newly hired nurses and float staff at a mandatory HANDS training session, which was held regularly throughout the study. The champions and research staff trained the remaining staff nurses (6-8 hrs each) and conducted monthly sessions for newly hired nurses. Nurse subjects consented to all formative and summative non-anonymous study procedures. Subjects were free to opt out of any of the non-anonymous procedures, but none did. Analyses of the anonymous transaction log data required no consent, and thus, we were able to use data entered by all nurses (N=707) who entered 1 or more POC in HANDS.

Once HANDS was implemented on the unit, the nurse was expected to 1) document each patient’s admission POC or update each patient’s POC at the formal handoff to reflect care provided since the previous handoff, 2) enter expected discharge ratings for all NOCs on the POC when first listing a NOC, 3) rerate the current status of each NOC at every handoff, and 4) display the POC on the computer screen at handoff to guide handoff communication (results not reported here).

In addition to the transactional anonymized HANDS data, we gathered different types of data to evaluate the HANDS intervention, i.e., interviews, think-alouds, observations (reported elsewhere) (Keenan et al., submitted), and surveys. We conducted think-alouds and interviews for both formative and summative process evaluation to monitor software usability and user issues across time. The RN subjects responded to surveys at baseline (pre-HANDS training) and follow-up (at 24 months for Y1 initiated units, 12 months for Y2 initiated units) to evaluate culture and user-related perceptions of HANDS. We used transactional data to identify nursing characteristics and POC-related data entry patterns.

IV. ANALYSIS AND RESULTS

A. General Measures

1. Descriptive

Tables 1 and 2 present RN and unit descriptive findings. As intended, the RN and study units’ characteristics varied. Across units, the usual number of RNs employed ranged from 22-120, the percentage of RNs with a BSN or higher from 37-64, average years of RN experience in the current hospital from 2.2-6.9, and total years of RN experience from 6.1-13.1. The percentage of nurses working 12-hour shifts per month ranged from 0-76. Unit characteristics ranged from 10-60 beds per unit, 69-345 average patient episodes per month, 686-3,858 average POCs per month, 6.8-12 average POCs per episode, and 53-102 average hours per episode.

Table 1.

RN characteristics

| Unit<sup>a</sup> | Usual # RNs employed | % 12h shifts/mo<sup>d</sup> | Total RNs pre go-live | Total consents signed | Total # RNs w/1 or more HANDS POCs | % RN BSN or higher<sup>e</sup> | Yrs Hosp Exp M (SD)<sup>e</sup> | Yrs RN Exp M (SD)<sup>e</sup> |
|---|---|---|---|---|---|---|---|---|
| UH: Neuro<sup>b</sup> | 60/71 | 37-54 | 82 | 89 | 156 | 37 | 4.3 (7.1) | 9.8 (10.2) |
| LCH1: Geron | 48 | 5-18 | 53 | 68 | 73 | 48 | 1.7 (3.9) | 11.6 (10.2) |
| LCH2: G Med | 32 | 62-85 | 32 | 49 | 55 | 51 | 3.0 (5.1) | 6.1 (8.9) |
| SCH: Med | 26 | 0-19 | 32 | 41 | 60 | 42 | 1.4 (4.3) | 12.3 (10.7) |
| UH: Cardiac<sup>c</sup> | 120/93 | 58-76 | 130 | 175 | 188 | 53 | 2.9 (4.3) | 6.7 (8.2) |
| LCH1: G Med | 79 | 54-64 | 88 | 102 | 105 | 57 | 4.4 (5.4) | 9.2 (10.1) |
| LCH1: MICU | 36 | 43-63 | 31 | 46 | 45 | 62 | 6.9 (7.0) | 13.1 (9.3) |
| LCH2: Geron | 22 | 43-75 | 22 | 22 | 25 | 64 | 2.2 (3.3) | 8.7 (8.5) |
| Total | | | 470 | 592 | 707 | | | |

<sup>a</sup> UH = university hospital; LCH1 = large community hospital, eastern side of state; LCH2 = large community hospital, western side of state; SCH = small community hospital.

<sup>b</sup> Unit increased number of beds in second 12 months.

<sup>c</sup> Unit decreased beds in first 12 months.

<sup>d</sup> Range of % of 12-hour shifts for monthly time periods.

<sup>e</sup> N = column 6 (total # of RNs with 1 or more HANDS POCs in database).

Table 2.

Units, episodes, plans of care, % compliance with POC submissions

| Unit<sup>a</sup> | HANDS mos | # Beds | Av # pt episodes/mo | Av # POCs/mo | Av # POCs/episode | Av episode length (hrs) | POC submission compliance<sup>d</sup> |
|---|---|---|---|---|---|---|---|
| UH: Neuro<sup>b</sup> | 24 | 32/48 | 255 | 2391 | 9.4 | 85 | 87% |
| LCH1: Geron | 24 | 42 | 307 | 3453 | 11.2 | 92 | 86% |
| LCH2: G Med | 24 | 22 | 135 | 1401 | 10.4 | 108 | 92% |
| SCH: Med | 24 | 28 | 155 | 1049 | 6.8 | 53 | 82% |
| UH: Cardiac<sup>c</sup> | 12 | 60/44 | 345 | 3852 | 11.2 | 109 | 84% |
| LCH1: G Med | 12 | 42 | 303 | 2898 | 9.6 | 93 | 86% |
| LCH1: MICU | 12 | 10 | 69 | 686 | 9.9 | 90 | 78% |
| LCH2: Geron | 12 | 23 | 114 | 1371 | 12.0 | 112 | 81% |

<sup>a</sup> UH = university hospital; LCH1 = large community hospital, eastern side of state; LCH2 = large community hospital, western side of state; SCH = small community hospital.

<sup>b</sup> Unit increased number of beds in second 12 months.

<sup>c</sup> Unit decreased beds in first 12 months.

<sup>d</sup> % of submitted plans of care out of the total number of shifts possible for the entire study period.

2. Unit Culture Survey

Included in the baseline and follow-up surveys was a 17-item Safety Culture (T. Vogus, personal communication, April 18, 2004; Vogus & Welbourne, 2003) section and a 16-item Trust (Mishra, 1992) section. A total of 558 surveys were completed by RNs (n = 317 at baseline and n = 241 at the 12- or 24-month follow-up). Independent t tests indicated there were no significant differences in respondents’ education, years of nursing experience, or the hours spent on a work computer at baseline versus follow-up. On average, across all hospitals, nurses rated their overall culture positively both at baseline (M=3.9, SD=0.42) and follow-up (M=3.9, SD=0.50). Their overall trust levels were also positive both at baseline (M=3.9, SD=0.49) and follow-up (M=3.8, SD=0.45). The differences in both the culture and trust subscales across time and within hospitals were, in the main, not statistically significant.

B. Hypothesis Testing

The RN Outcomes included: POC training rate, POC submission rate, satisfaction with terminologies, and perceptions of usefulness. The Data Outcomes included validity, reliability, and availability of standardized POC data.

1. HANDS RN Outcomes

Training Rate

All RNs who used HANDS were trained, resulting in a 100% training rate. Only RNs who successfully completed the HANDS training module were given password-protected access to HANDS. Once access to HANDS was granted, the system automatically posted the RN’s name to all care plans submitted or adjusted by the RN (seen only by unit RNs who had password-protected access to HANDS on a given unit). Although the possibility is remote, an RN employed on a unit could have avoided training and either used a colleague’s password or failed to enter plans altogether. Given the high POC submission compliance rates (Table 2), it is unlikely that an employee’s regular failure to enter POCs would go unnoticed or be ignored. Interesting to us was the fact that, with little prompting, the unit managers dutifully reinforced the data entry mandate. New hires were also proactive and often sought training before unit administrators submitted their names.

POC Submission Rates

We monitored submission rates in two ways. First, HANDS administrative personnel regularly conducted spot checks of the database to assess that unit RNs were consistently submitting POCs into the database. Additionally, we computed POC submission compliance rates. Since the nurse was required to designate the shift to which each submitted POC applied, we were able to identify gaps and the length of time between POC submissions. (Though the RN was required to designate the shift to which the POC applied before entry, built-in software logic did not allow a dual entry or entry for which the date and time were out of range.) If a time gap between POCs was up to 12 hours, we assigned 1 missing POC to the gap. If the gap was > 12-24 hours, 2 missing POCs were assigned, and so on. We then added together the submitted POCs and those missing to create a total number. Then we divided the number submitted by the total and multiplied by 100 to compute a percent compliance rate (Table 2). The compliance rates ranged from 78% to 92%. We consider these rates extraordinary, given that we were computing this on the POC submission requirement for every handoff during 1-2 years. POC updating requirements reported in the literature are often once every 24 or 48 hours, and compliance with such mandates is reported to be much lower (Allen, 1998; Currell et al., 2009; Keenan et al., submitted; McCloskey Dochterman & Bulechek, 2004; Vogus, 2004; Vogus & Welbourne, 2003). This outcome is noteworthy given that, during the study period, all units experienced unexpected changes, with some being quite substantial (e.g., one unit moved and downsized, and a second nearly doubled in size).
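The gap-based compliance calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's code: the function names, the 12-hour window constant, and the assumption that each gap has already been measured in hours are all hypothetical, and the rate is taken as the share of expected POCs that were actually submitted, consistent with the Table 2 footnote.

```python
import math

# Assumption for illustration: one POC is expected per 12-hour window.
SHIFT_HOURS = 12


def missing_pocs_for_gap(gap_hours: float) -> int:
    """Missing POCs assigned to one gap: up to 12 h -> 1, >12-24 h -> 2, and so on."""
    if gap_hours <= 0:
        return 0  # no gap, nothing missing
    return math.ceil(gap_hours / SHIFT_HOURS)


def compliance_rate(submitted: int, gap_hours: list[float]) -> float:
    """Percent of expected POCs (submitted + missing) that were submitted."""
    missing = sum(missing_pocs_for_gap(g) for g in gap_hours)
    total = submitted + missing
    if total == 0:
        raise ValueError("no POCs submitted or expected")
    return 100.0 * submitted / total


if __name__ == "__main__":
    # Hypothetical unit: 9 submitted POCs and a single 12-hour gap.
    print(compliance_rate(9, [12]))
```

How a "gap" is measured (for example, from the end of one documented shift to the start of the next) is not specified in the text, so the gap durations are treated here as given inputs.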

User Perceptions of HANDS

The baseline and follow-up (at 24 months for units initiated in Y1 and 12 months for units initiated in Y2) surveys included user perception items related to the study intervention. We used the Flashlight Current Student Inventory (Ehrmann & Zuniga, 1997) of pretested stems (e.g., The _____ skills that I am acquiring are useful in my work setting). Three questions addressed care-planning and HANDS directly: “My unit’s current method of care planning is useful in my work setting”; “By using the HANDS care planning process, I am engaging in a practice that is useful in my work setting”; and “Because of the way that HANDS uses nursing languages, the care planning process is useful to my work setting.” Likert-type scales included response options from 1 to 5 (1 = Strongly Disagree, 5 = Strongly Agree). An additional six questions assessed familiarity (3 items) and satisfaction (3 items) with the NANDA-I, NOC, and NIC standardized languages. Responses for the 6 questions consisted of a 5 point Likert-type scale with response options of 1 = Not at all useful/No knowledge/Not at all satisfactory to 5 = Totally useful/Extremely knowledgeable/Totally satisfactory. Cronbach’s alphas were .96 and .94, respectively.

Across all the hospitals, nurses responded that HANDS was quite useful (Baseline M=3.1, SD=1.0, Follow-up M=3.4, SD=.87), representing significant improvement (t=3.8, p<.001). At baseline, there were significant positive correlations between nurses’ interest in care-planning and documentation with HANDS usefulness (r=.30, p<.001) and the NANDA-I, NOC, and NIC language use (r=.22, p<.001). There was a significant positive correlation between years of experience and the NANDA-I, NOC, and NIC language use (r=.11, p<.05) as well. At follow-up, the differences associated with education and years of experience were no longer significant, and interest in care-planning and documentation remained significantly positively correlated with HANDS usefulness (r=.30, p<.001), the NANDA-I, NOC, and NIC language use (r=.29, p<.001), and with the usefulness of the new care-planning (r=.27, p<.001).

There was significant growth in familiarity with NANDA-I, NOC, and NIC languages across all hospitals (Baseline M=2.6, SD=1.0, Follow-up M=3.6, SD=0.73, t=13.5, p<.001) and in satisfaction with the languages (Baseline M=2.5, SD=1.0, Follow-up M=3.5, SD=0.74, t=12.6, p<.001). At baseline, nurses’ interest in care-planning and documentation and their years of education were each significantly positively correlated with both familiarity (r=.15, p<.01 and r=.12, p<.05, respectively) and satisfaction (r=.18, p<.01 and r=.13, p<.05, respectively). Years of experience were significantly negatively correlated with familiarity (r=-.36, p<.01) and satisfaction (r=-.21, p<.05). At follow-up, however, the differences associated with education and years of experience were no longer significant, and interest in care-planning and documentation remained significantly positively correlated with familiarity (r=.32, p<.001) and satisfaction (r=.26, p<.001).

2. Data Outcomes

Validity was defined as patterns of data entry (into HANDS) indicative of sustained purposeful use of HANDS across time. In examining this type of validity, we wanted to know if nurses used HANDS for the intended purpose, to accurately represent the diagnoses, interventions, and outcome states applicable to a patient over the course of an episode. We assert that if an RN’s pattern of use can be identified showing that nurses are making regular optional changes to the POCs within episodes (across time), then this would be evidence suggesting HANDS is being used as intended. We operationalized this concept by examining the pattern of optional POC changes made per episode of care across the study duration.

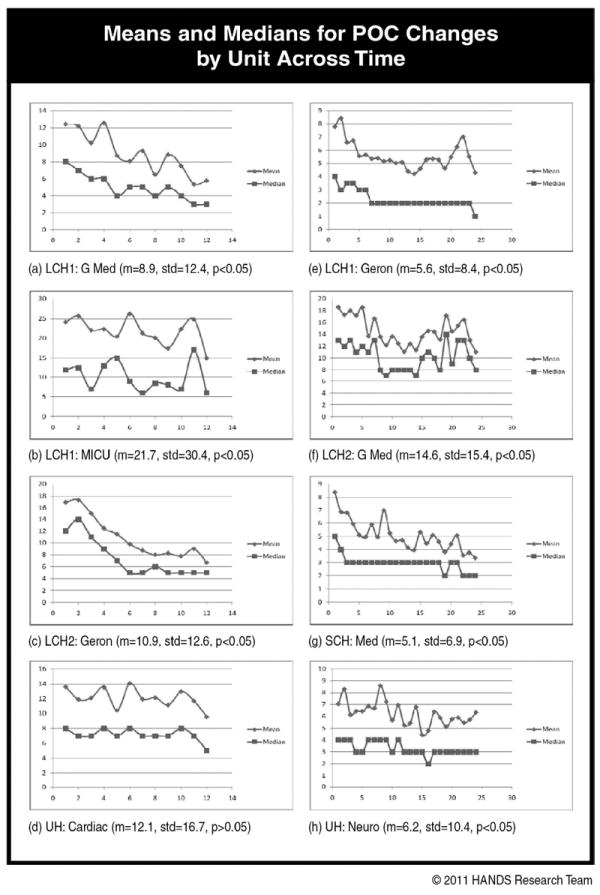

We tested a number of models to examine the pattern of optional POC changes/episode across time for each unit. Because of the Poisson nature of the data as indicated by heteroskedasticity in the residual analysis, ultimately we chose to utilize the means of all episodes (regardless of length) to conduct our analyses and present findings. The POC changes differed by unit, with the intensive care unit appropriately showing the highest number of changes per episode. The variability in the POC changes is also indicated by the medians for each of the units being much lower than the means. The distinct differences between the means and the medians are explained by the fact that a preponderance of episodes were short stays and naturally had fewer POC changes. To analyze whether the number of POC changes made by RNs declined over time, we utilized the Jonckheere-Terpstra (J-T) test, where the null hypothesis is that the number of changes made remains the same and the alternative hypothesis is that the number declines over time. We chose the non-parametric J-T test because our data were discrete in nature and exhibited severe heteroskedasticity, and we found that the number of changes depended on many factors, which makes a good parametric model difficult to find. The outcomes of the J-T test, as well as the overall mean and standard deviation of the number of POC changes per episode for each unit, appear in Figure 4. We concluded that a unit experienced a decline in the number of POC changes made over time if the p-value of the J-T test was below 0.05. In all but one unit (UH: Cardiac Surgical), there was a statistically significant decline over time. One interpretation could be that this significant decline in POC changes indicated some erosion in proper use of the system across time. A look at the means and medians across time in Figure 4, however, suggests an alternative explanation.
It is clear in the unit graphs that the number of changes per episode appears to drop considerably from the start to the finish. What also is clear and aligned with previous findings following implementation of a new computerized system is that in the early months after a computer intervention is implemented, clinicians spend more time using the computers when they are learning to use them appropriately in practice (Bjorvell, Wredling, & Thorell-Ekstrand, 2002; Darmer et al., 2006; Larrabee et al., 2001). After approximately six months, the number of changes leveled off and stayed fairly stable or variable for the remaining months of the study (for both 12-month and 24-month units). Additionally, these graphs indicate that RNs continued to make changes to plans within episodes across time rather than defaulting to re-entering the same plan at each hand-off (POC optional changes would have been “0”).
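For readers unfamiliar with the J-T test, the following Python sketch shows how a one-sided test for a declining trend across time-ordered groups can be computed. It is an illustrative simplification rather than the analysis code used in the study: the function name and toy data are hypothetical, the p-value uses the large-sample normal approximation, and the variance formula omits the tie correction that heavily tied count data would normally call for.

```python
import math
from itertools import combinations


def jonckheere_terpstra_decline(groups):
    """One-sided J-T test for a decreasing trend across time-ordered groups.

    `groups` is a list of lists, earliest period first. Returns (J, z, p),
    where a small p suggests values decline over time. Uses the normal
    approximation WITHOUT a tie correction (a simplifying assumption).
    """
    j = 0.0
    for earlier, later in combinations(groups, 2):
        for x in earlier:
            for y in later:
                if x < y:
                    j += 1.0   # pair consistent with an increasing trend
                elif x == y:
                    j += 0.5   # ties count as half
    sizes = [len(g) for g in groups]
    n = sum(sizes)
    mean = (n * n - sum(s * s for s in sizes)) / 4
    var = (n * n * (2 * n + 3) - sum(s * s * (2 * s + 3) for s in sizes)) / 72
    z = (j - mean) / math.sqrt(var)
    p = 0.5 * (1.0 + math.erf(z / math.sqrt(2)))  # lower tail: P(Z <= z)
    return j, z, p


if __name__ == "__main__":
    # Toy data: changes per episode in three successive periods (hypothetical).
    periods = [[9, 10, 11], [5, 6, 7], [1, 2, 3]]
    print(jonckheere_terpstra_decline(periods))
```

The pairwise loop is quadratic in the number of observations, so this form suits small illustrative inputs only; a statistical package would be the practical choice for a dataset of this study's size.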

Figure 4.

The units a-d (left column) were in the study for 12 months and units e-h (right column) for 24 months. Graphs are truncated to the maximum POC changes per unit to better represent variation across time. Optional changes were computed by adding all of the following across an entire episode: a count of the items that differed from POC1 to POC2; POC2 to POC3, POC3 to POC4, etc. Item differences were defined as adding, deleting, resolving NNN terms or a change in a “current” NOC rating from that which was entered for the NOC on last POC.
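The optional-change count defined in the Figure 4 caption can be illustrated with a short Python sketch. The data layout is an assumption made for the example (HANDS itself stores POCs in a relational database): each POC is a dictionary with a hypothetical `terms` mapping from an NNN term to its status and a `ratings` mapping from a NOC outcome to its current rating.

```python
def count_changes(prev, curr):
    """Count item differences between two consecutive POCs.

    Counts terms added, terms deleted, terms newly resolved, and changes in
    the "current" rating of NOC outcomes present on both POCs.
    """
    prev_terms, curr_terms = prev["terms"], curr["terms"]
    added = curr_terms.keys() - prev_terms.keys()
    deleted = prev_terms.keys() - curr_terms.keys()
    shared = prev_terms.keys() & curr_terms.keys()
    resolved = [t for t in shared
                if prev_terms[t] != "resolved" and curr_terms[t] == "resolved"]
    rerated = [noc for noc in prev["ratings"].keys() & curr["ratings"].keys()
               if prev["ratings"][noc] != curr["ratings"][noc]]
    return len(added) + len(deleted) + len(resolved) + len(rerated)


def episode_optional_changes(pocs):
    """Sum the differences POC1->POC2, POC2->POC3, ... across one episode."""
    return sum(count_changes(a, b) for a, b in zip(pocs, pocs[1:]))


if __name__ == "__main__":
    # Hypothetical three-handoff episode.
    poc1 = {"terms": {"Acute Pain": "active", "Pain Level": "active"},
            "ratings": {"Pain Level": 2}}
    poc2 = {"terms": {"Acute Pain": "active", "Pain Level": "active",
                      "Pain Management": "active"},
            "ratings": {"Pain Level": 3}}
    poc3 = {"terms": {"Acute Pain": "resolved", "Pain Level": "active",
                      "Pain Management": "active"},
            "ratings": {"Pain Level": 3}}
    print(episode_optional_changes([poc1, poc2, poc3]))
```

An episode in which the same plan is re-entered at every handoff yields a count of 0, which is the default-use pattern the validity analysis was designed to detect.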

Reliability

We examined reliability in two ways. First, to examine understanding of NNN terms, convenience samples of nurses at three different time points identified the correct definitions of 6 terms frequently used on their unit (2 NANDA-I, 2 NOC, and 2 NIC) from 3-4 choices per term; the incorrect definitions were close in meaning to the correct ones. The results provided evidence that RNs understood the meanings of most of the NNN terms used regularly on their units (Table 3). Second, a convenience sample of nurses from all eight units participated in NOC inter-rater reliability exercises (Table 4). One research assistant and one to two RNs from a unit independently scored the current and expected NOC ratings for a specified group of patients. The results indicate moderate to strong reliability across raters, similar to what had been found in previous studies (Weick & Sutcliffe, 2001). This finding provides evidence that RNs hold a moderately strong common understanding (shared meaning) of NOC ratings.

Table 3.

Term meaning reliability exercises % correct by time period

| Units | RNs (3-9 months post go-live) | M (3-9 months) | Range of M for units (3-9 months) | RNs (15 months post go-live) | M (15 months) | Range of M for units (15 months) |
|---|---|---|---|---|---|---|
| Y1 (4) | 28 | 68% | 60-79% | 39 | 74% | 56-90% |
| Y2 (4) | 42 | 82% | 75-89% | | | |

Table 4.

NOC expected and current ratings inter-rater reliability results

| NOC Outcomes Rated | Units | RNs | Pts | Expected (b) W/0 (e) | Expected W/1 (d) | Current (c) W/0 (e) | Current W/1 (d) |
|---|---|---|---|---|---|---|---|
| 155 (62 unique labels (a)) | 8 | 17 | 34 | .53 | .95 | .44 | .86 |

(a) Some of the same NOC outcomes applied to more than 1 of the 34 patients (POCs), so the same NOC was rated for different patients.

(b) Expected is the rating entered when the NOC is first added to the POC; it represents the value the patient is expected to achieve by discharge from the unit.

(c) Current is the NOC rating that indicates the exact status of the patient at a given point in time (e.g., every handoff).

(d) W/1: NOC ratings of independent judges were within 1 point on a 5-point scale.

(e) W/0: NOC ratings of independent judges were exactly the same.
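The exact-match and within-1-point agreement described in the Table 4 notes can be sketched as below. This assumes the reported values are simple proportions of rating pairs; the text does not name the statistic. The function name and example ratings are hypothetical.

```python
def agreement_rates(pairs, tolerance=1):
    """Return (exact, within_tolerance) agreement proportions.

    pairs: list of (rating_judge_1, rating_judge_2) on the 5-point NOC scale.
    Assumption: agreement is a plain proportion of pairs, which may differ
    from the statistic actually used in the study.
    """
    if not pairs:
        raise ValueError("at least one pair of ratings is required")
    n = len(pairs)
    exact = sum(1 for a, b in pairs if a == b) / n
    within = sum(1 for a, b in pairs if abs(a - b) <= tolerance) / n
    return exact, within


# Made-up ratings from two independent judges for five NOC outcomes.
ratings = [(3, 3), (2, 3), (4, 4), (5, 3), (1, 2)]
exact, within_one = agreement_rates(ratings)
print(f"exact: {exact:.2f}, within 1 point: {within_one:.2f}")
```

Within-1 agreement can never be lower than exact agreement, which matches the pattern of the values in Table 4.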

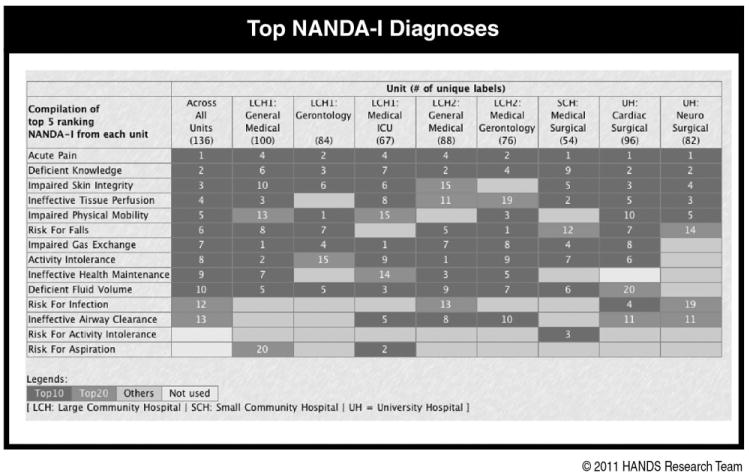

Availability of Standardized Data is indeed the primary intended goal or outcome of using an application like HANDS. If users are provided a tool like HANDS, trained to use it properly, and do so under real-time conditions (because it is useful), then standardized data will be available. In fact, over the two-year data collection period, we accumulated 40,747 episodes of care in HANDS that can be used for benchmarking and determining best practices on medical-surgical units. Figures 5, 6, and 7 are simple examples of what can be derived from the HANDS standardized database.

Figure 5.

Includes the combined top 5 NANDA-I Diagnoses for each of the 8 study units and all units and the rankings of these by unit and all units.

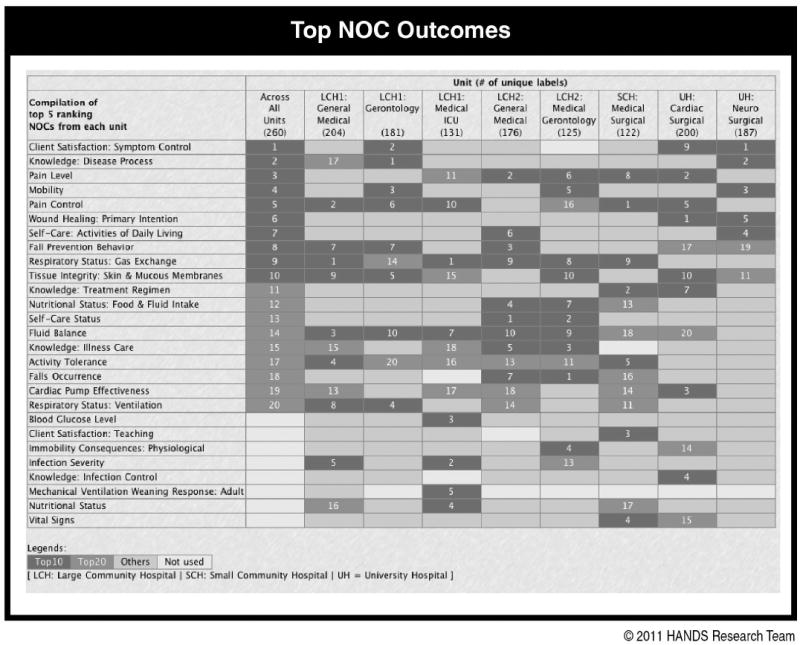

Figure 6.

Includes the combined top 5 NOC Outcomes for each of the 8 study units and all units.

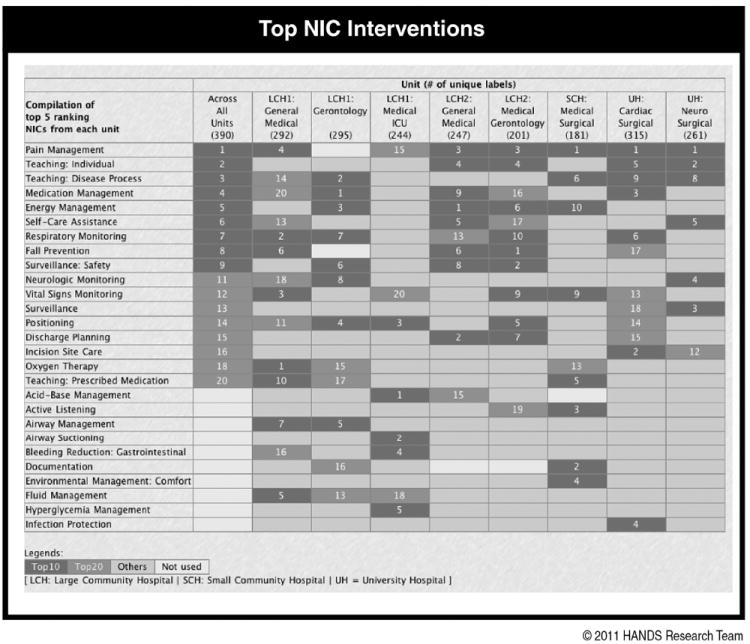

Figure 7.

Includes the combined top 5 NIC Interventions for each of the 8 study units and all units and the rankings of these by unit and all units.
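Summaries like those in Figures 5 to 7 could be derived from a standardized database along the following lines. This is a sketch under stated assumptions: the flat `(unit, term)` record layout, the function name, and the "all units" key are hypothetical, and the example counts are invented.

```python
from collections import Counter, defaultdict


def combined_top_terms(records, n=5, all_key="all units"):
    """Rank the union of each group's top-n terms within every group.

    records: iterable of (unit, term) pairs, one per use of a term
             (a hypothetical flat export, not the HANDS schema).
    Returns {group: {term: rank}}, where group is a unit or all_key.
    Terms never used by a group are omitted from that group's ranking.
    """
    counts = defaultdict(Counter)
    for unit, term in records:
        counts[unit][term] += 1
        counts[all_key][term] += 1

    # Union of every group's top-n terms (the "combined top n").
    combined = set()
    for counter in counts.values():
        combined.update(term for term, _ in counter.most_common(n))

    ranks = {}
    for group, counter in counts.items():
        ordered = [term for term, _ in counter.most_common()]
        ranks[group] = {
            term: ordered.index(term) + 1 for term in combined if term in counter
        }
    return ranks


# Invented example: two units, top 2 terms per group.
records = (
    [("a", "Acute Pain")] * 4
    + [("a", "Risk for Infection")] * 2
    + [("a", "Anxiety")]
    + [("b", "Anxiety")] * 3
    + [("b", "Acute Pain")]
)
for group, group_ranks in combined_top_terms(records, n=2).items():
    print(group, sorted(group_ranks.items(), key=lambda item: item[1]))
```

Ties in frequency are ranked in first-seen order here; a real analysis would need an explicit tie-breaking rule.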

V. Discussion and Conclusions

This study is the first to demonstrate real-time use of a web-based electronic POC EHR system, HANDS, in a multi-institutional setting on multiple nursing care units over a substantial time period. We provide clear and compelling evidence that a standardized HANDS system can be successfully implemented and used consistently across different organizations and different units. The standardization was extensive (training, screens, terminologies, database) and clearly shows that well-conceived EHR systems that adequately represent the workflow can be used by large numbers of nurses under usual work conditions. The five most important findings were remarkable and beyond what we could have imagined possible. First, 100% of the RNs who worked on the 8 study units successfully completed the required in-person and online training modules for HANDS. Second, all of our original 8 diverse medical-surgical study units participated for the entire 12- or 24-month designated study period in spite of the fact that all units experienced unexpected disruptions. Third, compliance rates for entering POCs on every patient at handoffs were high (78% to 92%), whereas the literature shows that POCs are updated at much lower rates and with much longer intervals between updates (Griffiths & Hutchings, 1999; Hardey et al., 2000; Kärkkäinen et al., 2005; Karkkainen & Eriksson, 2004, 2005; Keenan et al., submitted). Fourth, although there was evidence that the POC pattern of optional changes per episode declined over time, nurses nonetheless continued to make a reasonable number of changes, suggesting the ongoing validity of the information in the POC. Finally, given the high POC submission rates, we now have a standardized database of 40,747 episodes of care that can be used to examine and evaluate the impact of nursing care on patient outcomes.

The study design and representative sampling strategy coupled with the secure external web deployment of our intervention offer a feasible and highly desirable alternative to the randomized controlled trial for examining EHR interventions (Liu & Wyatt, 2010). The web deployment of the POC tool and training materials allowed us to avoid creating complicated organization-by-organization technical plans for integrating HANDS, thereby maintaining standardization of the intervention across the multiple organizations. HANDS was available through a desktop link on every study unit computer and as such appeared to be a natural part of each organization’s suite of electronic tools for the clinician. We tested the intervention only with RNs, though the “big picture” is intended to be a representation of the entire interdisciplinary team’s POC. This focus was purposeful to ensure that the intervention first worked with RNs whose primary role is the actual delivery of care to the patient on behalf of the entire interdisciplinary team.

This study has also shown that it is possible, under real-time conditions, to collect a standardized dataset, provide standardized visualizations of that data to clinicians, and generate standardized data for other secondary purposes. This finding is powerful, given that the mandate for implementing “standards” alone is not sufficient to bring the desired consistency and format of information needed by front-line EHR users to help them stay on the same page. Scherb (2002) and Westra et al. (Westra, Dey, et al., 2011; Westra, Savik, et al., 2011) provide excellent examples of the problems associated with implementing standardized terminologies without regard for the other standards needed to bring intended value. Scherb (2002) found that the architecture of a database structure severely constrained the ability to analyze and generate visualizations of the relationships among the collected data elements in spite of the use of standardized terminologies. Westra et al. (Westra, Dey, et al., 2011; Westra, Savik, et al., 2011) found in conducting statistical and data mining exercises that much of the data gathered by different EHR systems, all of which used the same standardized terminologies, were not useful due to differences in the way the EHR systems collected and stored the data elements.

Although simple, these examples underscore why it is not always realistic to expect that requiring EHR vendors to use standards will lead to the desired consistency of information needed to support care across settings. It is also no surprise that Health Information Exchanges (HIEs), the newest electronic layer of health information, are also not bringing the desired consistency and use of data across disparate EHR systems (Guerra, 2010; Vest, Zhao, Jasperson, Gamm, & Ohsfeldt, 2011). Adding a new layer that includes multiple new players, rather than increasing the general consistency of information, will increase the variation in the ways health information is presented. Each HIE vendor will naturally create its own unique way of applying the required standards and, by so doing, will likely fail to satisfy the genuine needs for collecting standardized data and easily accessing and retrieving its standardized visualizations (Guerra, 2010; Vest et al., 2011).

The continuity of care record, the continuity of care document, and HL7’s clinical document architecture (Healthcare Information Technology Standard Panel, 2009) are supported by the meaningful use legislation (Blumenthal & Tavenner, 2010; DHHS, 2010) as dataset and architecture standards to promote continuity of care. These standards, though headed in the right direction, are insufficient to ensure that the same information is collected, accessible, and visualized in the same way across EHRs. This discrepancy will continue to fuel unintentional errors as clinicians struggle to reconcile the meaning of important information that is entered, accessed, and visualized in “many shapes and sizes.” The study reported here provides evidence that it is not only possible but desirable to create more effective levels of standardization for health information in EHRs. As such, we offer these ideas for consideration by policymakers who have the power and responsibility to help ensure that EHRs work efficiently in promoting safe and effective care.

Whereas we have provided clear and compelling evidence that an application like HANDS can be successfully implemented and used consistently across different organizations and different units, it is important to point out the study limitations. All study units were hospital-based medical-surgical units. There were no pediatric, psychiatric, or maternity inpatient units represented in our sample, nor were there any ambulatory, long-term care, home care, or hospice organizations. Moreover, the study specifically focused on the RNs’ use and entry of the POC and not on use by the entire interdisciplinary team. Since our goal is that the POC be a tool that accurately represents the “big picture” of the patient’s care for the entire “interdisciplinary team” across time and settings, we plan further studies. Validating the true accuracy and completeness of the POC will also require additional study. We do, however, expect the validity and reliability of the POC data to automatically move to the desired levels once the POC is seen by all members of the team as a genuinely useful and vital tool for keeping the patient’s care team on the same page regardless of where the patient enters the system.

Our follow-up studies are thus designed to address the limitations and specifically are now focused on the following (Figure 1): 1) expanding development and testing of HANDS to include features that support other key members of the patient’s interdisciplinary care team (physicians, pharmacists, social workers); 2) more fully integrating HANDS into intra- and interdisciplinary handoffs; 3) testing HANDS in other types of inpatient units and settings outside hospitals; 4) testing our methods of interfacing HANDS to all EHRs; and 5) showing the valuable secondary uses of the standardized data generated with HANDS that support benchmarking, identification of best practices, and continuous improvement of the HANDS user interface as a means of translating new knowledge into useful evidence at the point of care.

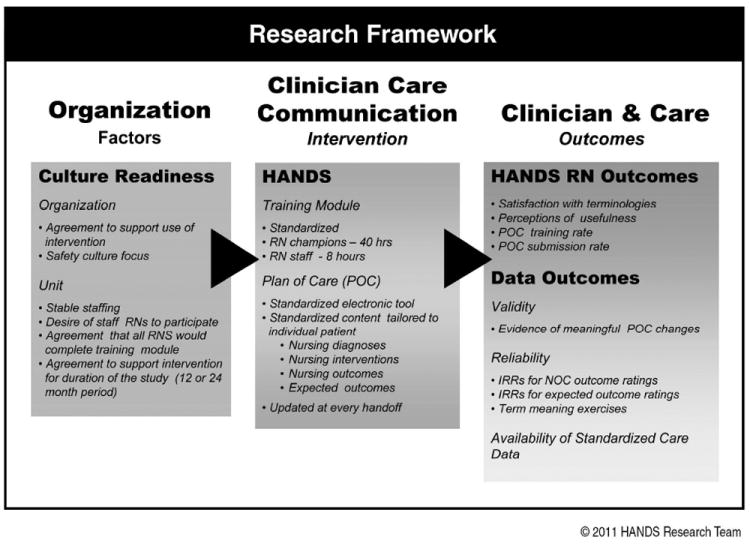

Figure 2.

The Research Framework depicts the 1) antecedent criteria met by the organization and study unit participants; 2) facets of the HANDS intervention; and 3) outcomes evaluated in the study.

Acknowledgments

We would like to acknowledge our anonymous collaborative institutional partners, the hundreds of nurses who participated in this study, and the Agency for Healthcare Research and Quality for funding (R01 HS01 5054 02).

Footnotes

Disclosure: The HANDS software that was used in this study is now owned and distributed by HealthTeam IQ, LLC. Dr. Gail Keenan is currently the President and CEO of this company and has a current conflict of interest statement of explanation and management plan in place with the University of Illinois at Chicago.

Preliminary results of the study were presented at AMIA Fall Symposium 2007 in a panel entitled: Implications of the findings from a 3 yr study of a standardized HIT supported care management system: HANDS.

Contributor Information

Gail M. Keenan, College of Nursing, University of Illinois Chicago, Chicago, IL, USA.

Elizabeth Yakel, School of Information, University of Michigan, Ann Arbor, MI, USA.

Yingwei Yao, College of Nursing, University of Illinois at Chicago, Chicago, IL, USA.

Dianhui Xu, College of Engineering, Electrical and Computer, University of Illinois at Chicago, Chicago, IL, USA.

Laura Szalacha, College of Nursing, Arizona State University, Tempe, AZ, USA.

Dana Tschannen, School of Nursing, University of Michigan, Ann Arbor, MI, USA.

Yvonne Ford, Bronson School of Nursing, Western Michigan University, Kalamazoo, MI, USA.

Yu-Chung Chen, University of Illinois at Chicago, Chicago, IL, USA.

Andrew Johnson, College of Engineering/Computer Science, University of Illinois at Chicago, Chicago, IL, USA.

Karen Dunn Lopez, College of Nursing, University of Illinois, Chicago, IL, USA.

Diana J. Wilkie, College of Nursing, University of Illinois Chicago, Chicago, IL, USA.

References

- Allen D. Record-keeping and routine nursing practice: The view from the wards. Journal of Advanced Nursing. 1998;27(6):1223–1230. doi: 10.1046/j.1365-2648.1998.00645.x.

- Bjorvell C, Wredling R, Thorell-Ekstrand I. Long-term increase in quality of nursing documentation: Effects of a comprehensive intervention. Scandinavian Journal of Caring Science. 2002;16(1):34–42. doi: 10.1046/j.1471-6712.2002.00049.x.

- Blumenthal D, Tavenner M. The “meaningful use” regulation for electronic health records. New England Journal of Medicine. 2010;363(6):501–504. doi: 10.1056/NEJMp1006114.

- Currell R, Urquhart C, Grant MJ, Hardiker NR. Nursing record systems: Effects on nursing practice and healthcare outcomes. Cochrane Database of Systematic Reviews. 2009;(1). doi: 10.1002/14651858.CD002099.pub2.

- Darmer MR, Ankersen L, Nielsen BG, Landberger G, Lippert E, Egerod I. Nursing documentation audit--the effects of a VIPS implementation programme in Denmark. Journal of Clinical Nursing. 2006;15(5):525–534. doi: 10.1111/j.1365-2702.2006.01475.x.

- Department of Health and Human Services. Health information technology: Revisions to initial set of standards, implementation specifications, and certification criteria for electronic health record technology. Interim final rule with request for comments. Federal Register. 2010;75:44589–44654.

- Ehrenberg A, Ehnfors M. The accuracy of patient records in Swedish nursing homes: Congruence of record content and nurses’ and patients’ descriptions. Scandinavian Journal of Caring Science. 2001;15(4):303–310. doi: 10.1046/j.1471-6712.2001.00044.x.

- Ehrmann SC, Zuniga RE. The flashlight evaluation handbook. Washington, DC: The TLT Group; 1997.

- Griffiths J, Hutchings W. The wider implications of an audit of care plan documentation. Journal of Clinical Nursing. 1999;8(1):57–65. doi: 10.1046/j.1365-2702.1999.00217.x.

- Guerra A. Five members dominate HIE market. Information Week. 2010 Jul 8. Retrieved from http://www.informationweek.com/news/healthcare/clinical-systems/225702631.

- Hardey M, Payne S, Coleman P. ‘Scraps’: Hidden nursing information and its influence on the delivery of care. Journal of Advanced Nursing. 2000;32(1):208–214. doi: 10.1046/j.1365-2648.2000.01443.x.

- Healthcare Information Technology Standard Panel. Comparison of CCR/CCD and CDA documents and HITSP products. 2009. Retrieved July 7, 2011, from http://publicaa.ansi.org/sites/apdl/hitspadmin/Matrices/HITSP_09_N_451.pdf.

- Hutchins E. The technology of team navigation. In: Galegher J, Kraut RE, Egido C, editors. Intellectual teamwork: Social and technical bases of collaborative work. Hillsdale, NJ: Lawrence Erlbaum Associates; 1990.

- Hutchins E. Cognition in the wild. Cambridge, MA: MIT Press; 1995.

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy Press; 2001.

- Joint Commission. 2010 Hospital accreditation standards. Oakbrook Terrace, IL: Author; 2010.

- Kärkkäinen O, Bondas T, Eriksson K. Documentation of individualized patient care: A qualitative metasynthesis. Nursing Ethics. 2005;12(2):123–132. doi: 10.1191/0969733005ne769oa.

- Karkkainen O, Eriksson K. Structuring the documentation of nursing care on the basis of a theoretical process model. Scandinavian Journal of Caring Science. 2004;18(2):229–236. doi: 10.1111/j.1471-6712.2004.00274.x.

- Karkkainen O, Eriksson K. Recording the content of the caring process. Journal of Nursing Management. 2005;13(3):202–208. doi: 10.1111/j.1365-2834.2005.00540.x.

- Keenan GM, Stocker JR, Geo-Thomas AT, Soparkar NR, Barkauskas VH, Lee JL. The HANDS project: Studying and refining the automated collection of a cross-setting clinical data set. Computers, Informatics, Nursing: CIN. 2002;20(3):89–100. doi: 10.1097/00024665-200205000-00008.

- Keenan GM, Yakel E. Promoting safe nursing care by bringing visibility to the disciplinary aspects of interdisciplinary care. American Medical Informatics Association Annual Symposium Proceedings. 2005;2005:385–389.

- Keenan GM, Yakel E, Dunn Lopez K, Tschannen D, Ford YB, Sorokin O. Patterns of information flow in hospitals: Home grown, variable, and vulnerable. Journal of the American Medical Informatics Association: JAMIA. doi: 10.1136/amiajnl-2012-000894. submitted.

- Keenan GM, Yakel E, Tschannen D, Mandeville M. Documentation and the nurse care planning process. In: Hughes RG, editor. Patient safety and quality: An evidence-based handbook for nurses. Rockville, MD: Agency for Healthcare Research and Quality (US); 2008. NBK2674.

- Larrabee JH, Boldreghini S, Elder-Sorella K, Turner ZM, Wender RG, Hart JM. Evaluation of documentation before and after implementation of a nursing information system in an acute care hospital. Computers in Nursing. 2001;17(2):56–65.

- Lee TT. Nursing diagnoses: Factors affecting their use in charting standardized care plans. Journal of Clinical Nursing. 2005;14(5):640–647. doi: 10.1111/j.1365-2702.2004.00909.x.

- Lee TT, Chang PC. Standardized care plans: Experiences of nurses in Taiwan. Journal of Clinical Nursing. 2004;13(1):33–40. doi: 10.1111/j.1365-2702.2004.00818.x.

- Liu JL, Wyatt JC. The case for randomized controlled trials to assess the impact of clinical information systems. Journal of the American Medical Informatics Association: JAMIA. 2010;18:173–180. doi: 10.1136/jamia.2010.010306.

- Mason C. Guide to practice or ‘load of rubbish’? The influence of care plans on nursing practice in five clinical areas in Northern Ireland. Journal of Advanced Nursing. 1999;29(2):380–387. doi: 10.1046/j.1365-2648.1999.00899.x.

- McCloskey Dochterman JC, Bulechek GM. Nursing interventions classification. 4th ed. St Louis, MO: Mosby; 2004.

- Mishra AK. Organizational responses to crisis: The role of mutual trust and top management teams. Ann Arbor, MI: University of Michigan; 1992.

- Moorhead S, Johnson M, Maas M, Iowa Outcomes Project. Nursing outcomes classification (NOC). St Louis, MO: Mosby; 2004.

- NANDA International. NANDA-Nursing diagnoses: Definition and classification 2003-2004. Philadelphia, PA: Author; 2003.

- Scherb C. Outcomes research: Making a difference. Outcomes Management. 2002;6(1):22–26.

- Stead WW, Lin HS. Computational Technology for Effective Health Care: Immediate Steps and Strategic Directions. Washington, DC: USA National Academies Press; 2009. p. 119.

- Stokke TA, Kalfoss MH. Structure and content in Norwegian nursing care documentation. Scandinavian Journal of Caring Science. 1999;13(1):18–25.

- Vest JR, Zhao H, Jasperson J, Gamm LD, Ohsfeldt RL. Factors motivating and affecting health information exchange usage. Journal of the American Medical Informatics Association: JAMIA. 2011;18(2):143–149. doi: 10.1136/jamia.2010.004812.

- Vogus T, Welbourne TM. Structuring for high reliability: HR practices and mindful processes in reliability-seeking organizations. Journal of Organizational Behavior. 2003;24:877–903.

- Weick KE. Organizational culture as a source of high reliability. California Management Review. 1987;29(2):112–127.

- Weick KE, Roberts KH. Collective mind in organizations: Heedful interrelating on flight decks. Administrative Science Quarterly. 1993;38(3):357–382.

- Weick KE, Sutcliffe KM. Managing the unexpected: Assuring high performance in an age of complexity. San Francisco, CA: Jossey-Bass; 2001.

- Weick KE, Sutcliffe KM. Mindfulness and the quality of organization attention. Organization Science. 2006;17(4):514–524.

- Westra B, Dey S, Steinbach M, Kumar V, Oancea C, Savik K, Dierich M. Interpretable predictive models for knowledge discovery from home-care electronic health records. Journal of Healthcare Engineering. 2011;2(1):55–71.

- Westra B, Savik K, Oancea C, Chormanski L, Holmes JH, Bliss D. Predicting improvements in urinary and bowel incontinence for home health patients using electronic health record data. Journal of Wound Ostomy & Continence Nursing. 2011;38(1):77–87. doi: 10.1097/won.0b013e318202e4a6.