Abstract

Our previously developed locomotion-mode-recognition (LMR) system has shown great promise for intuitive control of powered artificial legs. However, the lack of fast, practical training methods is a barrier to clinical use of the LMR system for prosthetic legs. This paper aims to design a new, automatic, user-driven training method for practical use of the LMR system. In this method, a wearable terrain detection interface based on a portable laser distance sensor and an inertial measurement unit (IMU) detects terrain changes in front of the prosthesis user. Mechanical measurements from the prosthetic pylon are used to detect gait phase. These two streams of information are used to automatically identify the transitions among locomotion modes, switch the prosthesis control mode, and label the training data with movement class and gait phase in real time. No external device is required in this training system. In addition, the prosthesis user can complete the whole training procedure without assistance from experts. Pilot experimental results on an able-bodied subject demonstrate that the new method is accurate and user-friendly, and can significantly simplify the LMR training system and training procedure without sacrificing system performance. The design paves the way for clinical use of the LMR system for powered lower limb prosthesis control.

I. Introduction

Myoelectric (EMG) pattern recognition (PR) has been widely used for identifying human movement intent to control prostheses [1–4]. The PR strategy usually consists of two phases: a training phase for constructing the parameters of a classifier and a testing phase for identifying the user intent using the trained classifier. Our previous study has developed a locomotion-mode-recognition (LMR) system for artificial legs based on a phase-dependent PR strategy and neuromuscular-mechanical information fusion [3, 5]. The LMR system has been tested in real-time on both able-bodied subjects and lower limb amputees. The results have shown high accuracies (>98%) in identifying three tested locomotion modes (level-ground walking, stair ascent, and stair descent) and tasks such as sitting and standing [5–6].

One of the challenges in applying the designed LMR system to clinical practice is the lack of practical system training methods. A few PR training methods have been developed for control of upper limb prostheses, such as screen-guided training (SGT) [7–8], where users perform muscle contractions by following a sequence of visual/audible cues, and prosthesis-guided training (PGT) [9], where the prosthesis itself provides the cues by performing a sequence of preprogrammed motions. However, neither SGT nor PGT can be directly adopted for training the LMR system for lower limb prostheses, because the computer and prosthesis must coordinate with the walking environment to cue the user to perform locomotion mode transitions during training data collection. Currently, the training procedure for the LMR system is time-consuming and manually conducted by experts. During the training procedure, experts cue the user's actions according to the user's movement status and the walking terrain in front of the user, switch the prosthesis control mode before the user steps onto another type of terrain, and manually label the collected training data with movement class using an external computer. Such a manual approach significantly limits the clinical value of LMR because experts are usually not available at home.

To address this challenge, this paper aims to design an automatic and user-driven training method for the LMR system. The basic idea is to replace the expert with a smart system that collects and automatically labels the training data for PR training. Our design significantly simplifies the training procedure and can be applied anytime and anywhere, which paves the way for clinical use of the LMR system for powered lower limb prosthesis control.

II. Automatic Training Method

The LMR system for artificial legs is based on phase-dependent pattern classification [4–5], which consists of a gait phase detector and multiple sub-classifiers, one for each phase. In this study, four gait phases are defined: initial double limb stance (phase 1), single limb stance (phase 2), terminal double limb stance (phase 3), and swing (phase 4) [5]. In LMR system training, the EMG signals and mechanical forces/moments are the inputs of the LMR system [4] and are segmented into overlapping analysis windows. For every analysis window, features of the EMG signals and mechanical measurements are extracted from each input channel and concatenated into one feature vector. The feature vector must be labeled with the correct movement class and gait phase to train the individual sub-classifiers.
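The phase-dependent structure described above can be pictured as one training set per gait phase. The following is a minimal sketch, assuming each labeled analysis window is stored as a `(feature_vector, mode, phase)` tuple; the function name `group_by_phase` and this data layout are illustrative assumptions, not part of the described system.

```python
from collections import defaultdict


def group_by_phase(samples):
    """Split labeled windows into one training set per gait phase.

    samples: iterable of (feature_vector, mode, phase) tuples, where phase is
    1-4 as defined in the text. The tuple layout is a hypothetical choice
    made for this sketch.
    """
    by_phase = defaultdict(list)
    for features, mode, phase in samples:
        # Each sub-classifier only sees the windows from its own phase.
        by_phase[phase].append((features, mode))
    return dict(by_phase)
```

Each resulting group would then be used to train the sub-classifier for that phase.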

In the previous approach, an experimenter manually labels the training data with locomotion mode (class) and gait phase. In this design, we replace the experimenter with a smart system that (1) automatically identifies the transition between locomotion modes based on terrain detection sensors and algorithms, (2) switches the control mode of powered artificial legs, (3) labels the analysis windows with the locomotion mode (class index) and gait phase, and (4) trains individual sub-classifiers. Our designed system consists of three parts: a gait phase detector for identifying the gait phase of the current analysis window, a terrain detection interface for detecting the terrain in front of the user, and a labeling algorithm to label the mode and gait phase of the current data.

To label every analysis window with locomotion mode (class index), it is important to define the timing of mode transition. The purpose of the LMR system is to predict mode transitions before a critical gait event for safe and smooth switch of prosthesis control mode. Our previous study has defined this critical timing for each type of mode transition [4, 6]. In order to allow the LMR system to predict mode transitions before the critical timings, the transition between locomotion modes is defined to be the beginning of the single stance phase (phase 2) immediately prior to the critical timing during the transition [4].

1) Gait Phase Detection

The real-time gait phase detection is implemented by monitoring the vertical ground reaction force (GRF) measured from the 6 DOF load cell. The detailed algorithm can be found in [5].

2) Terrain Detection Interface

Because the sequence of the user’s locomotion mode in the training trials is predefined, the goal of the terrain detection interface is not to predict the unknown terrain in front of the subject, but to detect the upcoming terrain change in an appropriate range of distances to help identify the transition between the locomotion modes.

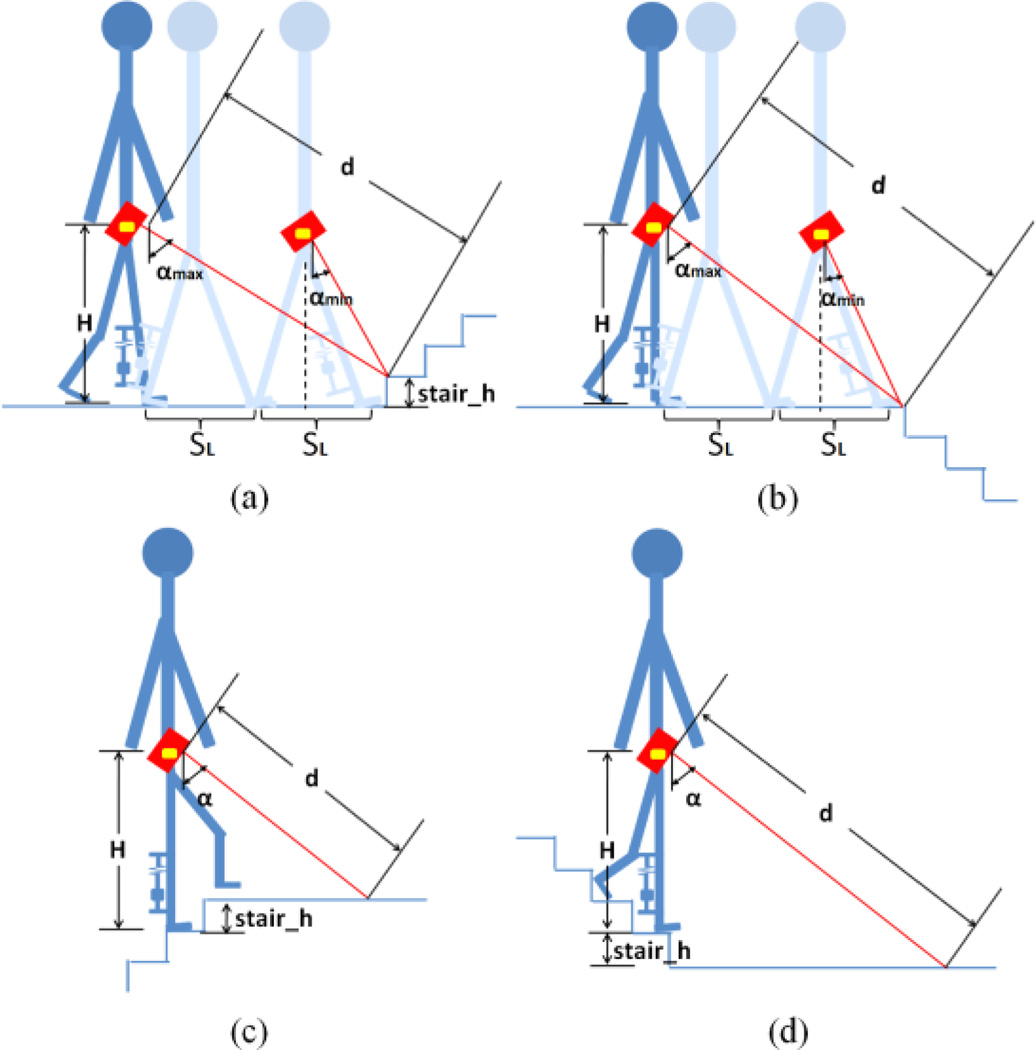

Fig. 1 shows the sensor setup of the terrain detection interface. A portable laser distance sensor and an inertial measurement unit (IMU) are placed on the prosthesis user's waist as suggested in [10], because this sensor configuration has been demonstrated to provide stable signals with very little noise and good performance for recognizing terrain types. Before the training procedure starts, a calibration session is conducted to measure a few parameters for later use in the training process. During calibration, the user walks at a comfortable speed on level ground for about 30 seconds. The average vertical distance from the laser sensor to the level ground (H) and the average step length (SL) of the user are measured.

Fig. 1.

Four types of terrain alterations investigated in this study.

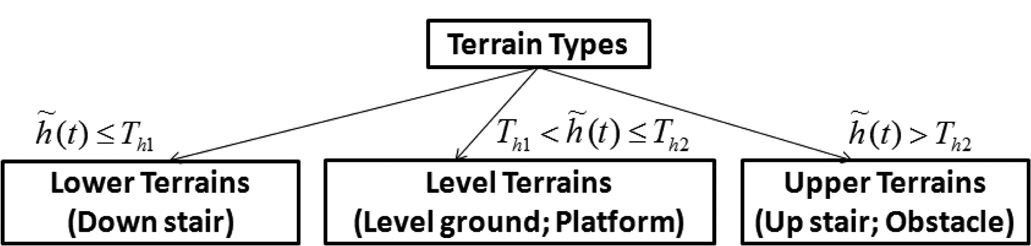

Three types of terrain have been investigated in this study: terrains that are above the currently negotiated terrain (upper terrain), terrains with the same height as the current terrain (level terrain), and terrains that are below the current terrain (lower terrain). The terrain types can be discriminated by a simple decision tree as shown in Fig. 2. In Fig. 2, h̃(t) denotes the estimated height of the terrain in front of the subject, which can be calculated by h̃(t) = H − d(t) cos α(t). Here d(t) denotes the distance measured by the laser sensor; α(t) is the angle between the laser beam and the vertical direction, which can be obtained from the IMU; H is the average vertical distance from the laser sensor to the terrain measured in the calibration session. Th1 and Th2 in Fig. 2 represent the thresholds that distinguish the three terrain types. To reduce possible misidentifications, only the decisions in phase 1 (i.e., initial double limb stance phase) of each stride cycle are considered for detection of terrain change.

Fig. 2.

The decision tree that discriminates the terrain types.
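The height estimate and threshold test can be sketched as follows. This is a minimal illustration, not the implementation used in the study: it assumes Th1 is the lower (negative) threshold and Th2 the upper (positive) one, which is consistent with the values −120 mm and 120 mm reported in Section III, and the order of the comparisons in Fig. 2 is also assumed. The function names are hypothetical.

```python
import math


def estimate_terrain_height(H_mm, d_mm, alpha_deg):
    """Estimated terrain height: h(t) = H - d(t) * cos(alpha(t))."""
    return H_mm - d_mm * math.cos(math.radians(alpha_deg))


def classify_terrain(h_mm, th1_mm=-120.0, th2_mm=120.0):
    """Three-way threshold test (assumed form of the Fig. 2 decision tree).

    th1_mm < 0 < th2_mm is an assumption based on the values in Section III.
    """
    if h_mm > th2_mm:
        return "upper"
    if h_mm < th1_mm:
        return "lower"
    return "level"
```

For example, with H = 980 mm and α = 42°, a beam that lands on a surface 160 mm above the floor gives an estimated height of 160 mm and is classified as upper terrain under these assumptions.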

To accurately identify the transitions between consecutive movement tasks in real time, the detection of terrain alteration must happen within the stride cycle immediately prior to the transition point. To meet this requirement, the initial angle between the laser beam and the vertical direction (αinit) and the thresholds Th1 and Th2 need to be chosen appropriately. As shown in Fig. 1(a) and (b), for the terrain alterations from level ground to up/down stairs, the subject must be within the cycle prior to the transition point when the terrain alteration is detected. Assuming the variation of α during level-ground walking is very small, the acceptable range of αinit can be estimated by (1) for Fig. 1(a) and (2) for Fig. 1(b).

For Fig. 1(c) and (d), which represent the terrain alterations from up/down stairs to level terrain, the terrain alterations must be detected within the last step. To satisfy this condition, the magnitudes of Th1 and Th2 need to be less than the height of one stair step.

3) Labeling Algorithm

The gait phase of every analysis window is directly labeled with the output of the gait phase detector. On the premise that the terrain detection interface detects all terrain alterations within the required ranges, the transition point between locomotion modes is identified as the first analysis window in phase 2 immediately after the expected terrain change is detected. The transition point from standing to a locomotion mode can be identified automatically without terrain information: it is the first analysis window immediately after toe-off (the beginning of phase 2). For two consecutive movement modes, the analysis windows before the transition point are labeled with the former movement class, and the windows after the transition point are labeled with the latter movement class.
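The labeling rule above can be sketched as a small state machine over the stream of analysis windows. This is an illustrative sketch under stated assumptions: the per-window inputs `phases` and `terrain_change`, the mode names, and the function `label_windows` are hypothetical, and terrain detections are only honored in phase 1, as described for the terrain detection interface.

```python
def label_windows(phases, terrain_change, mode_sequence):
    """Label each analysis window with (mode, gait phase).

    phases:         gait phase (1-4) of each window, from the phase detector
    terrain_change: True where the expected terrain change is detected
    mode_sequence:  predefined order of modes, e.g. ["ST", "W", "SA"]
    """
    labels = []
    idx = 0
    # Leaving standing ("ST") needs no terrain cue, so it is armed from the start.
    armed = mode_sequence[0] == "ST"
    prev_phase = None
    for phase, changed in zip(phases, terrain_change):
        has_next = idx + 1 < len(mode_sequence)
        if has_next and changed and phase == 1:
            armed = True  # expected terrain change seen in phase 1
        if has_next and armed and phase == 2 and prev_phase != 2:
            idx += 1  # first window of phase 2: transition point
            armed = mode_sequence[idx] == "ST"
        labels.append((mode_sequence[idx], phase))
        prev_phase = phase
    return labels
```

Windows before the transition point keep the former class and windows from the transition point onward take the latter class, so no separate relabeling pass is needed.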

III. Participant and Experiments

A. Participant and Measurements

This study was conducted with Institutional Review Board (IRB) approval at the University of Rhode Island and with the informed consent of the subject. One male able-bodied subject was recruited. A plastic adaptor was made so that the subject could wear a prosthetic leg on the right side.

Seven surface EMG signals were collected from the thigh muscles on the subject's right leg, including adductor magnus (AM), biceps femoris long head (BFL), biceps femoris short head (BFS), rectus femoris (RF), sartorius (SAR), semitendinosus (SEM), and vastus lateralis (VL). The EMG signals were filtered between 20 Hz and 450 Hz with a pass-band gain of 1000. Mechanical ground reaction forces and moments were measured by a 6 degree-of-freedom (DOF) load cell mounted on the prosthetic pylon. A portable optical laser distance sensor and an inertial measurement unit (IMU) were placed on the right side of the subject's waist. The laser distance sensor could measure distances ranging from 300 mm to 10000 mm with a resolution of 3 mm. The EMG signals and the mechanical measurements were sampled at 1000 Hz. The signals from the laser sensor and the IMU were sampled at 100 Hz. The input data were synchronized and segmented into a series of 160 ms analysis windows with a 20 ms window increment. For each analysis window, four time-domain (TD) features (mean absolute value, number of zero crossings, waveform length, and number of slope sign changes) were extracted from each EMG channel [11]. For the mechanical signals, the maximum, minimum, and mean values calculated from each individual DOF were the features. Linear discriminant analysis (LDA) [12] was used as the classification method for pattern recognition. The system was implemented in Matlab on a PC with a 1.6 GHz Xeon CPU and 2 GB RAM.
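The windowing and feature extraction described above can be sketched in plain Python as follows. This is a minimal illustration, not the Matlab implementation used in the study: the function names are hypothetical, and the optional `dead_band` noise threshold for zero crossings and slope sign changes is an assumption (set to zero here), since the text does not state a threshold.

```python
def sliding_windows(signal, fs_hz=1000, win_ms=160, inc_ms=20):
    """Segment a sampled signal into overlapping analysis windows."""
    win = fs_hz * win_ms // 1000  # 160 samples at 1000 Hz
    inc = fs_hz * inc_ms // 1000  # 20 samples at 1000 Hz
    return [signal[s:s + win] for s in range(0, len(signal) - win + 1, inc)]


def emg_td_features(x, dead_band=0.0):
    """Four TD features: MAV, zero crossings, waveform length, slope sign changes."""
    pairs = list(zip(x, x[1:]))
    mav = sum(abs(v) for v in x) / len(x)
    zc = sum(1 for a, b in pairs if a * b < 0 and abs(a - b) >= dead_band)
    wl = sum(abs(b - a) for a, b in pairs)
    ssc = sum(1 for a, b, c in zip(x, x[1:], x[2:])
              if (b - a) * (b - c) > 0
              and (abs(b - a) >= dead_band or abs(b - c) >= dead_band))
    return [mav, zc, wl, ssc]


def mech_features(x):
    """Maximum, minimum, and mean of one load-cell DOF within a window."""
    return [max(x), min(x), sum(x) / len(x)]


def feature_vector(emg_channels, mech_channels):
    """Concatenate per-channel features into one vector for a single window."""
    vec = []
    for ch in emg_channels:
        vec.extend(emg_td_features(ch))
    for ch in mech_channels:
        vec.extend(mech_features(ch))
    return vec
```

With seven EMG channels and six load-cell DOFs, this layout gives 7 × 4 + 6 × 3 = 46 features per analysis window.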

B. Experimental Protocol

In this study, four movement tasks (level-ground walking (W), stair ascent (SA), stair descent (SD), and standing (ST)) and five mode transitions (ST→W, W→SA, SA→W, W→SD, and SD→W) were investigated. An obstacle course was built in the laboratory, consisting of a level-ground walkway, a five-step staircase with a step height of 160 mm, a small flat platform, and an obstacle block (300 mm high and 250 mm wide).

The experiment consisted of three sessions: a calibration session, an automatic training session, and a real-time testing session. Before the training started, the calibration session was conducted to measure the average vertical distance from the laser sensor to the level ground (H) and the average step length (SL) of the subject. During calibration, the subject walked on level ground at a comfortable speed for 30 seconds. H and SL were measured to be 980 mm and 600 mm, respectively. Because the magnitudes of Th1 and Th2 need to be less than the height of one stair step (160 mm), as explained in Section II, Th1 and Th2 were set to −120 mm and 120 mm, respectively. From (1) and (2) derived in Section II, the estimated range of αinit was calculated to be (19°, 50°), and αinit was set to 42°.
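As a rough geometric check of these settings (not a reproduction of (1) and (2)), assume a flat floor and a beam angle held constant at αinit. On level ground h̃(t) = 0, so the expected laser reading and the horizontal distance at which the beam meets the floor follow directly from H and αinit. The symbols d_level and x_ahead are introduced here only for illustration.

$$
d_{\text{level}} = \frac{H}{\cos\alpha_{\text{init}}} = \frac{980\ \text{mm}}{\cos 42^\circ} \approx 1319\ \text{mm},
\qquad
x_{\text{ahead}} = H\tan\alpha_{\text{init}} = 980\ \text{mm}\times\tan 42^\circ \approx 882\ \text{mm} \approx 1.47\,SL
$$

Under these assumptions the beam meets the floor roughly one and a half step lengths ahead of the sensor, and the level-ground reading of about 1319 mm lies well inside the 300 mm to 10000 mm range of the laser sensor.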

During training, the subject was asked to perform a sequence of predefined movement tasks. The subject began by standing for about four seconds, switched to level-ground walking on the straight walkway, transitioned to stair ascent, walked on the platform with a 180-degree turn, transitioned to stair descent, switched back to level-ground walking on the walkway, stopped in front of the obstacle, turned 180 degrees, and repeated the previous tasks in the same way two more times. Besides the movement tasks investigated in this study, the training trial contained movements that should not be included in the training dataset, such as turning in front of the obstacle, and walking and turning on the platform. These movements were labeled as the "not included" (NI) mode.

After training, ten real-time testing trials were conducted to evaluate the performance of the LMR system. Each trial lasted about one minute. All the investigated movement tasks and mode transitions were evaluated in the testing session.

IV. Results & Discussion

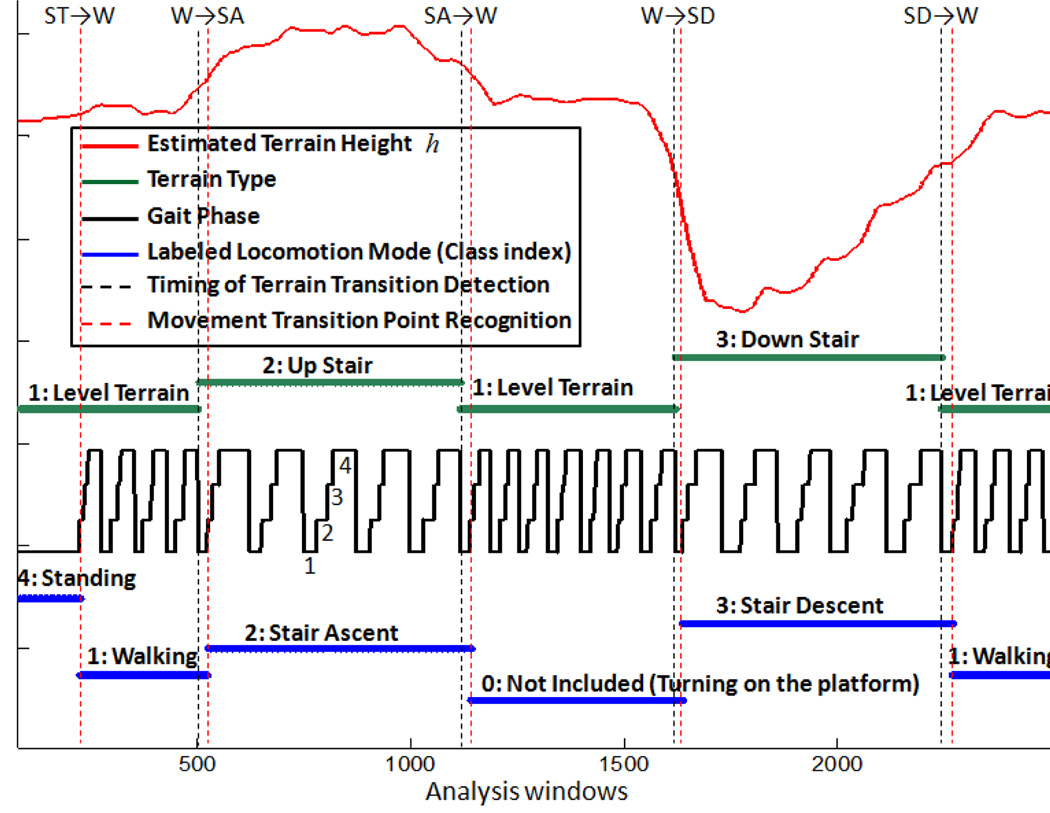

In the training trial, the subject took about 225 seconds to complete all the movement tasks. After the subject finished all the tasks, only a further 0.11 second was needed to train the classifiers. All the terrain alterations were accurately identified and all analysis windows were correctly labeled. Fig. 3 shows the automatic labeling of locomotion modes in part of the training trial. The figure shows that all terrain alterations were recognized at the beginning of phase 1 during the transition cycle, which means the actual terrain changes were detected before phase 1 and within the stride cycle prior to the transition. The transition points between consecutive tasks were accurately identified at the beginning of phase 2 during the transition cycle. All movement tasks were labeled with the correct class modes.

Fig. 3.

Automatic labeling of locomotion modes in part of the training trial.

The overall classification accuracy across the 10 real-time testing trials was 97.64%. No missed mode transitions were observed in any of the 10 trials. The user intent for mode transitions was accurately predicted 103–653 ms before the critical timing for switching the prosthesis control mode. The results indicate that the LMR system trained with the automatic strategy performs comparably to the system trained with the previous method.

Table 1 summarizes the comparison between our new automatic training method and the previous training method. From the table we can see the new training method can significantly simplify the training procedure and shorten the total training time.

Table 1.

Comparison between the new automatic training method and the previous training method

| | New Automatic Training | Traditional Training |
|---|---|---|
| Connection to external device | A laser distance sensor and an IMU are required, which are both portable and can be integrated into the prosthesis system in the future. | An external computer is required. |
| Requirement of extra manpower | No. | A professional experimenter is required. |
| Total training time | 30 s calibration time for measuring a few parameters; 225 s for performing movement tasks; 0.11 s for the rest of the training process. | 225 s for performing movement tasks; 24 s for offline processing of the training algorithm; at least 10 minutes for interacting with the experimenter and manual data labeling. |
| Is the system easy to follow? | User-driven: the training can be easily operated by a 'naïve user' unaided. The user only needs to perform all the movement tasks, and the training is completed immediately. | Experimenter-driven: the user needs to follow the guidance from the experimenter. The user needs to pause and wait while the experimenter is processing the data. |
| The way to switch the prosthesis control mode | Automatic switch; driven by user's motion | Manual switch; controlled by experimenter |

V. Conclusion

In this paper, an automatic, user-driven training strategy has been designed and implemented for classifying locomotion modes for control of powered artificial legs. The smart system can automatically identify the locomotion mode transitions based on a terrain detection interface, switch the prosthesis control mode, label the training data with correct mode (i.e. class index) and gait phase in real-time, and train the pattern classifiers in LMR quickly. The preliminary experimental results on an able-bodied subject show that all the analysis windows in the training trial were correctly labeled in real-time and the algorithm training process was accomplished immediately after the user completed all the movements. Compared with the system using traditional training strategy, our new training method can significantly simplify the training system and procedure, be easily operated by a naïve user, and shorten the total training time without sacrificing the system performance. These results pave the way for clinically viable LMR for intuitive control of prosthetic legs.

Acknowledgment

The authors thank Lin Du, Quan Ding, and Fan Zhang at the University of Rhode Island for their suggestions and assistance in this study. The authors gratefully acknowledge the reviewers' comments.

This work is partly supported by National Science Foundation NSF/CPS #0931820, NIH #RHD064968A, NSF #1149385, NSF/CCF #1017177, and NSF/CCF #0811333.

References

- 1. Parker P, Scott R. Myoelectric control of prostheses. Critical Reviews in Biomedical Engineering. 1986;vol. 13:283.

- 2. Englehart K, Hudgins B. A robust, real-time control scheme for multifunction myoelectric control. IEEE Trans Biomed Eng. 2003 Jul;vol. 50:848–854. doi: 10.1109/TBME.2003.813539.

- 3. Huang H, Kuiken TA, Lipschutz RD. A strategy for identifying locomotion modes using surface electromyography. IEEE Trans Biomed Eng. 2009 Jan;vol. 56:65–73. doi: 10.1109/TBME.2008.2003293.

- 4. Huang H, Zhang F, Hargrove L, Dou Z, Rogers D, Englehart K. Continuous Locomotion Mode Identification for Prosthetic Legs based on Neuromuscular-Mechanical Fusion. IEEE Trans Biomed Eng. 2011:1–1. doi: 10.1109/TBME.2011.2161671.

- 5. Zhang F, DiSanto W, Ren J, Dou Z, Yang Q, Huang H. A novel CPS system for evaluating a neural-machine interface for artificial legs. 2011:67–76.

- 6. Zhang F, Dou Z, Nunnery M, Huang H. Real-time Implementation of an Intent Recognition System for Artificial Legs. 33rd Annual International Conference of the IEEE EMBS. Boston MA, USA: 2011.

- 7. Scheme E, Englehart K. A flexible user interface for rapid prototyping of advanced real-time myoelectric control schemes. 2008.

- 8. Kuiken TA, Li G, Lock BA, Lipschutz RD, Miller LA, Stubblefield KA, Englehart KB. Targeted muscle reinnervation for real-time myoelectric control of multifunction artificial arms. JAMA. 2009 Feb 11;vol. 301:619–628. doi: 10.1001/jama.2009.116.

- 9. Lock BA, Simon AM, Stubblefield K, Hargrove LJ. Prosthesis-Guided Training For Practical Use Of Pattern Recognition Control Of Prostheses. 2011.

- 10. Zhang F, Fang Z, Liu M, Huang H. Preliminary design of a terrain recognition system. 2011:5452–5455. doi: 10.1109/IEMBS.2011.6091391.

- 11. Hudgins B, Parker P, Scott RN. A new strategy for multifunction myoelectric control. IEEE Trans Biomed Eng. 1993 Jan;vol. 40:82–94. doi: 10.1109/10.204774.

- 12. Huang H, Zhou P, Li G, Kuiken TA. An analysis of EMG electrode configuration for targeted muscle reinnervation based neural machine interface. IEEE Trans Neural Syst Rehabil Eng. 2008 Feb;vol. 16:37–45. doi: 10.1109/TNSRE.2007.910282.