Abstract

Background

The Patient Navigation Research Program (PNRP) is a cooperative effort of nine research projects, each employing its own unique study design. To evaluate programs such as the PNRP, it is desirable to perform a pooled analysis to increase power relative to the individual projects. There is no agreed-upon prospective methodology, however, for analyzing combined data arising from different study designs. Expert opinions were thus solicited from members of the PNRP Design and Analysis Committee.

Purpose

To review possible methodologies for analyzing combined data arising from heterogeneous study designs.

Methods

The Design and Analysis Committee critically reviewed the pros and cons of five potential methods for analyzing combined PNRP project data. Conclusions were based on simple consensus. The five approaches reviewed were: 1) analyzing and reporting each project separately; 2) combining data from all projects and performing an individual-level analysis; 3) pooling data from projects having similar study designs; 4) analyzing pooled data using a prospective meta-analytic technique; and 5) analyzing pooled data using a novel simulated group-randomized design.

Results

Methodologies varied in their ability to incorporate data from all PNRP projects, in their ability to account appropriately for differing study designs, and in their sensitivity to differences in project sample sizes.

Limitations

The conclusions reached were based on expert opinion and not derived from actual analyses performed.

Conclusions

The ability to analyze pooled data arising from differing study designs may provide pertinent information to inform programmatic, budgetary, and policy perspectives. Multi-site community-based research may not lend itself well to the more stringent explanatory and pragmatic standards of a randomized controlled trial design. Given our growing interest in community-based population research, the challenges inherent in analyzing data from heterogeneous study designs are likely to become more salient. Discussion of the analytic issues faced by the PNRP and the methodological approaches we considered may be of value to other prospective community-based research programs.

Keywords: Patient navigation, Health disparities, Pooled analysis, Research methodology

Introduction

Patient navigation is a promising approach to reducing cancer disparities. It refers to the support and guidance offered to persons with an abnormal cancer screening result or a new cancer diagnosis so that they can access the cancer care system more effectively.1 The primary goals of navigation are to help patients overcome barriers to care and to facilitate timely, quality care provided in a culturally sensitive manner. Patient navigation is intended to target those who are most at risk for delays in care, including individuals from racial and ethnic minority and lower-income populations.

Although patient navigation has clearly grown in popularity over the past decade, rigorous research on its efficacy is still at an early stage.2 A number of studies of patient navigation have recently been summarized;2, 3 in general, navigation has shown improvements in the timeliness of definitive diagnosis and initiation of cancer care. To date, no multicenter studies assessing patient navigation have been published.

The Patient Navigation Research Program (PNRP) is the first multi-center program to critically examine the role and benefits of patient navigation. This program is sponsored and funded by the National Cancer Institute's (NCI) Center to Reduce Cancer Health Disparities (CRCHD), with additional support from the American Cancer Society. The five-year program focuses on four common cancers (breast, cervical, colorectal, and prostate) for which screening tests exist and outcomes are disparate in underserved populations.

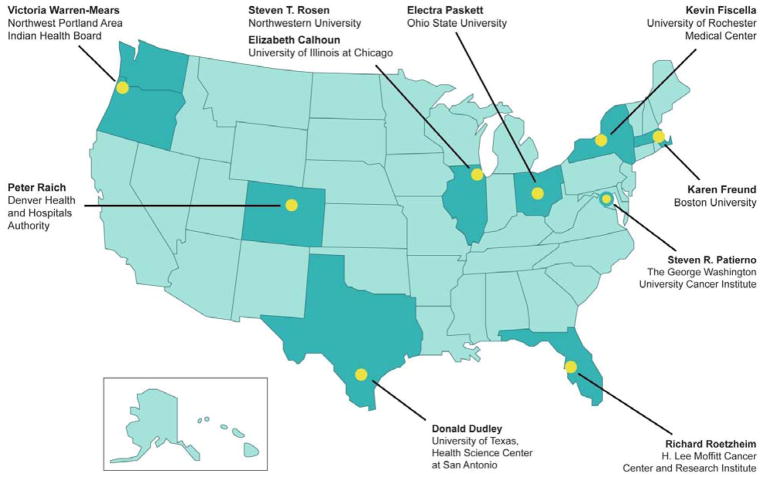

The PNRP is a cooperative effort of nine research projects (see Figure 1), the funding agencies, and an evaluation contractor. Each PNRP project focuses on one or more of the four specified cancers. All projects targeted populations at greater risk of disparate cancer outcomes, such as racial or ethnic minorities, the uninsured or underinsured, or persons of lower socioeconomic status. As a cooperative endeavor, the PNRP utilizes a steering committee consisting of the nine project principal investigators, along with representatives from NCI-CRCHD, the American Cancer Society, and NOVA Research Company (NOVA), the evaluation contractor for the national outcomes studies. To advise the steering committee on methodological issues, a Design and Analysis Committee was created, composed of investigators with expertise in research design and methods from each project site, NOVA, and NCI-CRCHD.

Figure 1.

Location of PNRP Projects

The cooperative agreement did not require a uniform research design across all projects. This allowed flexibility in implementing the patient navigation intervention in a way that was sensitive both to the specific patient populations and to local system-level factors at each project. In addition, the nature of patient navigation requires that the research strategy be developed in collaboration with community partners. Thus, each project had its own research design; these included traditional randomized clinical trials (RCT), group-randomized trials (GRT), and non-randomized quasi-experimental designs (QE).

Despite the differences in research designs, all projects shared characteristics specified by the steering committee to support a single common evaluation of the PNRP. For example, all projects used a common definition of patient navigation, and navigators received common national training for this role. In addition, all study designs share well-defined common outcomes, with data elements drawn from a single detailed data dictionary.

Navigation was hypothesized to shorten the time interval from cancer screening abnormality to definitive diagnosis (the primary outcome); for patients diagnosed with cancer, to shorten the interval from definitive diagnosis through initial cancer treatment; and to improve satisfaction with care. Each project assessed these time intervals by medical record abstraction, and the common data elements from all projects were uploaded to a national database to support a single common evaluation of the PNRP.

While each PNRP project was individually powered to address its hypotheses, a pooled analysis was desirable for many reasons. First, the increased statistical power from pooling data allows a more precise estimate of navigation effects across a variety of settings. In addition, while cancer screening abnormalities are common in primary medical care settings, cancer diagnoses are rare. The ability to address the hypothesis that navigation improves cancer care is strengthened by pooling data across projects to increase statistical power. Pooling of data also increases statistical power to explore navigation effects within patient subgroups at greater risk of adverse cancer outcomes (uninsured, racial-ethnic minorities, and persons of lower socioeconomic status). Pooling increases the generalizability of the findings by demonstrating the effect across a variety of settings and populations. Finally, pooling data enables exploration of heterogeneity of navigation effects (contrasting models of navigation for example), something that is not possible within individual PNRP projects.

For these reasons, a pooled analysis to evaluate the overall PNRP was desirable. The design and structure of PNRP, however, created a challenge in developing a suitable analytic method to evaluate the overall program. The PNRP was clearly different from standard multicenter trials that utilize a single study design with common shared protocols at all centers. In addition, while there was a common definition of navigation, its delivery could differ among the PNRP projects. The design variations across the distinct projects do not lend themselves to traditional methods for analyzing data from a multi-center research trial.

Other fields, notably epidemiology, have recognized the need to analyze pooled data arising from heterogeneous study designs.4, 5 These efforts generally involve retrospective analysis of published studies. To our knowledge, however, there is no agreed-upon methodology for analyzing combined data from different study designs prospectively, that is, before each project has published its primary results. The Design and Analysis Committee was therefore charged with reviewing potential methodologies for analyzing combined data from PNRP projects. This review took place over a series of conference calls, and the results of this assessment are presented below. In the following section, we describe each of the PNRP projects in more detail and discuss five possible approaches that could be employed in this situation, including the pros and cons of each.

Methods

Summary of PNRP Research Designs

The PNRP is a collaborative effort involving nine separate research projects (Table 1). Eight of the nine sites contributed data to the national dataset; the ninth site focused solely on the American Indian/Alaska Native population of the northwestern US, and its data-sharing agreements are specific to that setting. Each project designed the implementation of its intervention taking into account factors unique to its health care delivery system and patient population. Some projects were able to employ true experimental designs, while others implemented variations of quasi-experimental designs.

Table 1.

Design Characteristics of PNRP Projects

| | Boston | Chi-Access | Chi-VA | Denver | Ohio | Rochester | San Ant | Tampa | Wash-DC |
|---|---|---|---|---|---|---|---|---|---|
| Targeted Cancer Sites | | | | | | | | | |
| Breast | X | X | X | X | X | X | X | X | |
| Cervical | X | X | X | X | | | | | |
| Colorectal | X | X | X | X | X | | | | |
| Prostate | X | X | | | | | | | |
| Design | | | | | | | | | |
| Randomized – Individual level | | | | X | | X | | | |
| Randomized – Group level | | | | | X | | | X | |
| Non-Randomized | X | X | X | | | | X | | X |
| Unit of Assignment | | | | | | | | | |
| Individual | | | | X | | X | | | |
| Group | X | X | X | | X | | X | X | X |
| Controls | | | | | | | | | |
| Actively Recruited Controls | X | X | X | | | | | | |
| Medical Records-Based Controls | X | X | X | X | X | X | | | |
| Number of clinics/care sites (#Nav/#Ctl) | 6 (3/3) | 9 (5/4) | 1 | 1 | 16 (8/8) | 11 | 5 (2/3) | 12 (7/5) | 15 (8/7) |
| Intervention Scope | | | | | | | | | |
| Abnormality to Diagnosis | X | X | X | X | X | X | X | X | X |
| Diagnosis to Start of Treatment | X | X | X | X | X | X | X | X | |
| Start to Completion of Treatment | X | X | X | X | X | X | X | X | |
| Enrollment as of 6/1/11 | | | | | | | | | |
| Total subjects | 3042 | 1023 | 513 | 1249 | 674 | 344 | 1052 | 1320 | 2594 |
| Subjects with cancer | 196 | 118 | 0 | 171 | 27 | 321 | 9 | 53 | 349 |

Total PNRP enrollment (all projects): 11,643 subjects; 1,048 subjects with cancer.

Four projects (Denver, Ohio, Rochester, and Tampa) implemented true experimental designs, randomly assigning either individuals or clinics to the Intervention or Control condition. Given an adequate sample size, random assignment justifies the assumption that the conditions so formed are equivalent at baseline, so that observed differences between the Control and Intervention conditions can be attributed to the intervention.

Denver and Rochester used a more classical RCT design and randomly assigned individuals to either a control group or the intervention condition.6 Ohio and Tampa used a GRT approach and randomly assigned groups (i.e., clinics) to either a control group or an intervention group. GRT is a comparative study design in which identifiable groups (i.e., study units) are assigned at random to study conditions and observations are made on members of those groups.7 Studies with different units of assignment and observation exist in many disciplines and pose a number of design and analytic problems not present when individuals are randomized to study conditions. A central problem is that the intervention effect must be assessed against the between-group variance rather than the within-group variance.8

Furthermore, the between-group variance is usually larger if based on identifiable groups than if based on randomly constituted groups. This is the result of the positive intraclass correlation expected among responses from members of the same identifiable group;9 that correlation reflects an extra component of variation attributable to groups above and beyond that attributable to their members. In addition, when there are only a few groups per condition, the degrees of freedom (df) available to estimate the between-group variance are far fewer than those available for the within-group variance. The extra variation and limited df can combine to reduce power, making it difficult to detect important intervention effects in an otherwise well-designed and properly executed trial.
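The variance inflation described above is commonly summarized by the design effect, 1 + (m - 1) * ICC, where m is the number of members per group and ICC is the intraclass correlation; for a simple two-condition GRT with g unmatched groups per condition, the condition effect is tested on 2(g - 1) df. The Python sketch below illustrates both quantities, assuming equal group sizes. The function names and the clinic-size and ICC values are hypothetical, not PNRP estimates.

```python
def design_effect(mean_group_size: float, icc: float) -> float:
    """Variance inflation for equal-sized groups: 1 + (m - 1) * ICC."""
    return 1.0 + (mean_group_size - 1.0) * icc


def effective_sample_size(n_individuals: int, mean_group_size: float, icc: float) -> float:
    """Number of independent observations the clustered sample is 'worth'."""
    return n_individuals / design_effect(mean_group_size, icc)


def between_group_df(groups_per_condition: int, conditions: int = 2) -> int:
    """df for the condition effect in a simple (unmatched) GRT: conditions * (g - 1)."""
    return conditions * (groups_per_condition - 1)


# Hypothetical inputs: 8 clinics per condition, 40 patients per clinic, ICC = 0.02.
n_patients = 2 * 8 * 40
deff = design_effect(40, 0.02)                      # 1 + 39 * 0.02 = 1.78
n_eff = effective_sample_size(n_patients, 40, 0.02)
df_groups = between_group_df(8)                     # 2 * (8 - 1) = 14
df_individuals = n_patients - 2                     # what an individual-level t-test would use
print(f"design effect={deff:.2f}, effective n={n_eff:.0f}, "
      f"df (groups)={df_groups}, df (individuals)={df_individuals}")
```

Even a small ICC nearly halves the effective sample size here, and the df drop from several hundred to 14, which is the combination of extra variation and limited df that reduces power.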

Ohio originally identified 12 participating clinics and stratified them by clinic type: university-based clinic (n = 8) vs. neighborhood health center (n = 4). Within clinic type, clinics were rank-ordered on the proportion of African-American patients, and pairs were formed from clinics adjacent in rank. Clinics within each pair were then randomly assigned to either the Navigation or Control condition. This method of forming pairs should maintain balance between the two conditions on at least the ranking variable. To the extent that this variable is also a surrogate for other variables correlated with the outcome (e.g., SES, insurance coverage), this assignment process will permit a more direct interpretation of results. Data on these potential confounders can be examined for balance between the treatment conditions.
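The Ohio pair-matching procedure (stratify, rank, pair adjacent clinics, randomize within pairs) can be sketched in Python as follows. The clinic names and proportions are invented for illustration, and `Clinic` and `pair_matched_assignment` are hypothetical helpers, not project software; the sketch assumes an even number of clinics in each stratum.

```python
import random
from dataclasses import dataclass


@dataclass
class Clinic:
    name: str
    clinic_type: str              # stratification factor, e.g. "university" or "neighborhood"
    prop_african_american: float  # variable used to rank clinics within a stratum


def pair_matched_assignment(clinics, seed=None):
    """Stratify by clinic type, rank on the matching variable, pair adjacent
    clinics, and randomly assign one clinic of each pair to Navigation."""
    rng = random.Random(seed)
    assignment = {}
    for clinic_type in sorted({c.clinic_type for c in clinics}):
        ranked = sorted((c for c in clinics if c.clinic_type == clinic_type),
                        key=lambda c: c.prop_african_american)
        if len(ranked) % 2:
            raise ValueError(f"odd number of clinics in stratum {clinic_type!r}")
        for i in range(0, len(ranked), 2):
            pair = [ranked[i], ranked[i + 1]]   # clinics adjacent in rank
            rng.shuffle(pair)                   # coin flip within the pair
            assignment[pair[0].name] = "Navigation"
            assignment[pair[1].name] = "Control"
    return assignment


# Hypothetical clinics: 8 university-based and 4 neighborhood health centers.
clinics = (
    [Clinic(f"U{i}", "university", p)
     for i, p in enumerate([0.12, 0.45, 0.30, 0.08, 0.60, 0.22, 0.51, 0.35])]
    + [Clinic(f"N{i}", "neighborhood", p)
       for i, p in enumerate([0.70, 0.55, 0.82, 0.64])]
)
assignment = pair_matched_assignment(clinics, seed=1)
```

Because each pair contributes exactly one clinic to each condition, the two conditions are balanced on clinic type and, approximately, on the ranking variable, whatever the outcome of the coin flips.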

Tampa identified 12 clinics nested within five distinct health care organizations (having 3, 3, 2, 2, and 2 clinics, respectively). Random assignment of clinics occurred within each health care organization, with the further constraint that in each organization having 3 clinics, one clinic was randomly assigned to be the Control clinic and the remaining 2 were designated as Navigated clinics. The Tampa project illustrates a case in which the number of units randomly assigned is small, so that equivalence between the Control and Navigated conditions is less certain than when several hundred individuals are randomly assigned.
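A sketch of Tampa's constrained, within-organization randomization follows. Organization and clinic names are hypothetical. The text specifies one Control clinic in each 3-clinic organization; the sketch assumes, consistent with the 7/5 split shown for Tampa in Table 1, that each 2-clinic organization likewise has exactly one Control clinic. Counting the possible allocations makes the small-number-of-units concern concrete.

```python
import math
import random


def assign_within_organizations(orgs, seed=None):
    """Randomly choose one Control clinic per organization; the rest are Navigated.

    orgs maps organization name -> list of clinic names (hypothetical)."""
    rng = random.Random(seed)
    assignment = {}
    for org in sorted(orgs):
        clinics = orgs[org]
        control = rng.choice(clinics)
        for clinic in clinics:
            assignment[clinic] = "Control" if clinic == control else "Navigated"
    return assignment


# Hypothetical names; organization sizes follow the text (3, 3, 2, 2, 2).
orgs = {
    "Org A": ["A1", "A2", "A3"],
    "Org B": ["B1", "B2", "B3"],
    "Org C": ["C1", "C2"],
    "Org D": ["D1", "D2"],
    "Org E": ["E1", "E2"],
}
assignment = assign_within_organizations(orgs, seed=2024)

# Number of equally likely allocations under this constraint: 3 * 3 * 2 * 2 * 2.
n_allocations = math.prod(len(clinics) for clinics in orgs.values())
```

With only 72 possible allocations, chance imbalance between conditions is far more likely than when hundreds of individuals are randomized, which is why balance on measured covariates deserves explicit checking.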

The remaining five projects (Boston, Chicago-ACCESS, Chicago-VA, San Antonio, and Washington DC) employed quasi-experimental designs,10 whose distinguishing feature is that patients (or clinics) were not randomly assigned to conditions. The decision to use non-randomized designs reflected the nature of the intervention and the collaborations with community partners to develop and conduct the research. This choice leaves attribution of outcomes to Patient Navigation open to other plausible explanations. The same kinds of statistical analyses can be applied to data from quasi-experiments as from true experiments; what separates the two kinds of designs is the ability to interpret the findings directly and the confidence that can be placed in the results. Random assignment eliminates many alternative plausible explanations for the obtained results that may be more difficult to eliminate in quasi-experimental designs, and facilitates generalizability of results to similar populations.

The Design and Analysis Committee considered the various study designs and proposed five possible analytic methods or approaches for a national evaluation of the major outcomes of the PNRP. The committee evaluated each approach with regard to its strengths, weaknesses, and suitability for addressing the unique methodological issues of the PNRP. Results of that evaluation are presented below (see also Table 2).

Table 2.

Comparison of Potential Analysis Methods

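| Analysis Method | Description | PROs | CONs |
|---|---|---|---|
| Approach #1: Analyze and report each project separately | Each PNRP project would be separately analyzed and reported as an independent study. There would be no attempt to combine data from different projects. | Avoids choosing a method for combining data; readers can easily see which projects had a significant effect when findings differ markedly | No coherent summary of the overall program; synthesis left to the reader; overall understanding emerges only as project results are published |
| Approach #2: Individual-level analysis ignoring any intraclass correlation | Combine data from all the projects and analyze using standard methods, ignoring any intraclass correlation. All individuals receive equal weight in the analysis. Primary analysis compares the mean difference in time to diagnostic resolution for navigated versus control subjects. | Uses all data from all projects in a single analysis; familiar general linear model methods; larger sample size and increased power | Inappropriate for GRTs and for quasi-experiments with non-random assignment of groups; larger projects contribute more information and may mask effects in smaller projects |
| Approach #3: Pool data from projects having similar designs | Data from the two projects that used group-randomized designs would be combined and analyzed. The two projects that randomized at the individual level would similarly be pooled and analyzed. | Analysis follows each project's design; straightforward interpretation | Accommodates only 4 of the 9 projects; two sets of potentially conflicting results; reduced power; unequal project sample sizes within design type |
| Approach #4: Prospective meta-analysis | Data from each project would be analyzed separately to estimate an effect size. Effect sizes would be combined using meta-analytic techniques to obtain an overall measure of program effect. | Retains each project's design; treats project idiosyncrasies as random effects; allows stratification by design quality; all original data available | PMA analyses may differ from those reported by individual projects; pooling is inappropriate if project effects differ in direction |
| Approach #5: Simulated group randomized design | For projects that did not randomize by group, pairs of matched, balanced groups would be created from the individual data, simulating a group randomized design. | Uses all data from all eight projects; balances observations per group and groups per condition; controls group-level confounding | Novel and untested; does not reflect the original design of most PNRP projects |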

Results

Approach #1: No presentation of combined findings. Each project in the PNRP would be separately analyzed and reported as an independent study

One possible approach to evaluate the PNRP is to make no attempt to combine the data, but instead present each project based on its own analysis. This approach would eliminate the difficulties in assessing the best method to combine data. This approach is useful if the findings of the individual projects are markedly different, allowing readers to more easily see which projects had a significant effect, and which did not. However, this approach does not provide a coherent summary of the overall PNRP program, and leaves the synthesis of the findings to the reader. An understanding of the effects of patient navigation from individual project analyses would only emerge over time as project results were published.

An example of such an analytic approach is the program of 15 individual RCTs funded by the Centers for Medicare and Medicaid Services (CMS) to evaluate care coordination for Medicare beneficiaries, with outcomes including quality of care, re-hospitalization rates, and Medicare expenditures. In this program, each project developed its own intervention, selected its own target diseases and study population, and established inclusion and exclusion criteria specific to the project.11 CMS developed a uniform evaluation procedure, using claims data and a standardized telephone survey. To summarize the effects of the overall program, the authors chose to present the data as 15 parallel RCTs, outlining the differences in each intervention and study population.

Interpretation of the findings primarily focused on the two projects that demonstrated positive effects, with an attempt to understand these findings in the context of differing study designs. This approach proved useful in the setting where trials utilized markedly different designs and where the nature of the intervention varied significantly from project to project. In contrast, the PNRP program allowed different study designs, but utilized a standard intervention strategy, study population, inclusion, and exclusion criteria.

Approach #2: Pool the data from all projects and analyze at the individual level ignoring any possible intraclass correlation

This analytical approach would combine data from all the projects, analyze them at the individual level, and ignore any possible intraclass correlation. The primary analysis would test the mean difference in time from abnormal finding to diagnostic resolution between the Control and Intervention (Navigated) conditions. The analysis could be performed using standard methods based on the general linear model,12 such as t-tests or F-tests with df based on the number of individuals. While separate analyses could be performed for the different cancer sites (breast, cervical, colorectal, prostate), the basic analytical approach would be the same.

The major advantages of this approach are that it would use all data from all projects in a single analysis and that it would rely on familiar methods based on the general linear model.12 Pooled data also yield larger sample sizes and increased power, thereby permitting the exploration of rarer events and the examination of effects within hypothesized subgroups, such as patients with co-morbidities.

However, these advantages are offset by several serious disadvantages. A primary disadvantage is that this approach is inappropriate for GRTs and for quasi-experiments involving non-random assignment of identifiable groups.7, 13 Another is that larger projects would contribute more information than smaller projects; if results varied systematically by project size, the effects in the larger projects could mask those in the smaller projects.
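Why ignoring intraclass correlation is inappropriate can be illustrated with a small Monte Carlo simulation. The sketch below assumes hypothetical values (6 groups per arm, 50 members per group, ICC = 0.05, normally distributed outcomes, and no true navigation effect) and compares a naive individual-level t-test with a t-test on group means, which respects the group as the unit of assignment. It illustrates the statistical point only and is not a PNRP analysis.

```python
import numpy as np
from scipy import stats


def simulate_type1_error(groups_per_arm=6, per_group=50, icc=0.05,
                         reps=1000, alpha=0.05, seed=0):
    """Monte Carlo rejection rates when there is NO navigation effect.

    Returns (naive_rate, cluster_rate): the naive rate comes from an
    individual-level t-test that ignores clustering; the cluster rate from
    a t-test on group means."""
    rng = np.random.default_rng(seed)
    sigma_b = np.sqrt(icc)          # between-group SD (total variance fixed at 1)
    sigma_w = np.sqrt(1.0 - icc)    # within-group SD
    naive_rejections = 0
    cluster_rejections = 0
    for _ in range(reps):
        # Shape (arm, group, person); both arms share the same true mean.
        group_effects = rng.normal(0.0, sigma_b, size=(2, groups_per_arm, 1))
        y = group_effects + rng.normal(0.0, sigma_w, size=(2, groups_per_arm, per_group))
        naive_p = stats.ttest_ind(y[0].ravel(), y[1].ravel()).pvalue
        group_means = y.mean(axis=2)
        cluster_p = stats.ttest_ind(group_means[0], group_means[1]).pvalue
        naive_rejections += int(naive_p < alpha)
        cluster_rejections += int(cluster_p < alpha)
    return naive_rejections / reps, cluster_rejections / reps


naive_rate, cluster_rate = simulate_type1_error()
print(f"naive individual-level test: {naive_rate:.3f}; group-means test: {cluster_rate:.3f}")
```

Under these assumptions the design effect is 1 + 49 * 0.05 = 3.45, so the naive test rejects a true null far more often than the nominal 5%, while the group-means test stays close to it.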

Approach #3: Pooling data from projects with similar designs

Some projects employed similar research designs, making it possible to pool raw data within design type. For example, two projects (Ohio and Tampa) employed a GRT design and targeted shared cancer sites (breast, colorectal). This approach would analyze data from these two GRT projects using statistical methods appropriate to the study design, stratifying on project so that overall results could be examined and differences between projects could also be evaluated. An advantage of this approach is that it would allow stratification by cancer site and project simultaneously. The committee considered whether to include data on cervical cancer (collected at Ohio but not Tampa) and concluded that such data could be included, increasing the generalizability of findings. Individual-level data would be analyzed, but for these two projects the expected intraclass correlation would be addressed and df would be based on the number of groups, not the number of individuals. The analysis would be based on the general linear mixed model.14
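For the pooled Ohio and Tampa data, the key point is that df are counted in clinics rather than patients. The sketch below swaps in a simpler cluster-level analysis (ordinary least squares on clinic means, with project as a stratification factor) in place of the general linear mixed model named above; it ignores Ohio's pair matching, Tampa's organization strata, and unequal clinic sizes. Clinic counts follow Table 1, but the function name and all outcome values are hypothetical and simulated.

```python
import numpy as np
from scipy import stats


def cluster_level_effect(clinic_means, navigated, project):
    """OLS of clinic mean time-to-resolution on a navigation indicator plus
    project indicators. Residual df are counted in clinics, not patients."""
    y = np.asarray(clinic_means, dtype=float)
    projects = sorted(set(project))
    columns = [np.ones_like(y), np.asarray(navigated, dtype=float)]
    # One indicator per project beyond the reference project (stratification).
    columns += [np.array([p == q for p in project], dtype=float) for q in projects[1:]]
    X = np.column_stack(columns)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    df = len(y) - X.shape[1]
    sigma2 = float(resid @ resid) / df
    se = float(np.sqrt(sigma2 * np.linalg.inv(X.T @ X)[1, 1]))
    t_stat = beta[1] / se
    p_value = 2 * stats.t.sf(abs(t_stat), df)
    return float(beta[1]), se, df, float(p_value)


# Hypothetical data: 16 Ohio clinics (8/8) and 12 Tampa clinics (7/5), as in Table 1.
rng = np.random.default_rng(42)
project = ["Ohio"] * 16 + ["Tampa"] * 12
navigated = [1] * 8 + [0] * 8 + [1] * 7 + [0] * 5
true_effect = -10.0   # hypothetical: navigation shortens the mean interval by 10 days
clinic_means = [60 + (5 if p == "Tampa" else 0) + true_effect * n + rng.normal(0, 5)
                for p, n in zip(project, navigated)]
effect, se, df, p = cluster_level_effect(clinic_means, navigated, project)
```

With 28 clinics and three estimated coefficients, the test has 25 df regardless of how many patients each clinic contributes.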

Two projects, Denver and Rochester, randomly assigned individuals within clinics to either the Control or Navigated condition. Data from these two projects could be pooled for a single analysis using Approach #2. Project (Denver versus Rochester) would serve as a stratification variable, allowing a test of the navigation-by-project interaction. The analysis would use standard methods based on the general linear model,12 such as t-tests or F-tests with df based on the number of individuals. A complication in this instance is that the major outcome variables measure different time frames: for Denver, the time from abnormal finding to diagnostic resolution in 80% of its population and from cancer diagnosis to initiation of treatment in 20%; for Rochester, for most of its patient population, the time from cancer diagnosis to initiation of treatment and/or completion of primary treatment. Because both outcomes are measured in the same unit (number of days), it could nonetheless be possible to pool the data across these two projects. Such an analysis could help answer the general question of whether patient navigation reduces the time to obtain standard, quality cancer care, whether that "care" is diagnostic resolution or initiation of cancer treatment. The challenge would be to find appropriate ways to combine the data and describe their relative merit with respect to: 1) the overall effects of patient navigation (PN) on quality of cancer care; and 2) specific differential effects of PN on cancer diagnostic resolution and on initiation of cancer treatment.

While randomization ensures comparability of treatment arms on average, adjustment for patient-level covariates is still advisable for several reasons.15 First, despite randomization, imbalances in patient characteristics can occur by chance. Moreover, unadjusted analyses may yield results that are biased toward the null if there is heterogeneity of risk across strata.16 Finally, adjusted analyses may provide more precise estimates and a summary result closer to stratum-specific results.16, 17
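The sketch below illustrates a pooled analysis of individually randomized data with project as a stratum, a navigation-by-project interaction term, and adjustment for a patient-level covariate. The data are simulated: the outcome, the covariate (`age`), the helper `ols_effect`, and all coefficients are hypothetical, and the sketch treats both projects' outcomes as days on a common scale. It shows how adjusting for a prognostic covariate can shrink the standard error of the navigation effect.

```python
import numpy as np


def ols_effect(X, y, column):
    """Return the OLS coefficient and its standard error for one design-matrix column."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    df = X.shape[0] - X.shape[1]
    sigma2 = float(resid @ resid) / df
    se = float(np.sqrt(sigma2 * np.linalg.inv(X.T @ X)[column, column]))
    return float(beta[column]), se


# Simulated, hypothetical data for two individually randomized projects.
rng = np.random.default_rng(0)
n = 400
rochester = rng.integers(0, 2, size=n)     # project indicator (0 = Denver, 1 = Rochester)
navigated = rng.integers(0, 2, size=n)     # individual random assignment
age = rng.normal(55, 10, size=n)           # hypothetical prognostic covariate
days = 60 - 8 * navigated + 5 * rochester + 1.5 * (age - 55) + rng.normal(0, 10, size=n)

ones = np.ones(n)
X_unadj = np.column_stack([ones, navigated, rochester])
X_adj = np.column_stack([ones, navigated, rochester, age])
X_int = np.column_stack([ones, navigated, rochester, navigated * rochester, age])

est_unadj, se_unadj = ols_effect(X_unadj, days, 1)   # navigation effect, project-stratified
est_adj, se_adj = ols_effect(X_adj, days, 1)         # ... also adjusted for age
interaction, se_int = ols_effect(X_int, days, 3)     # navigation-by-project interaction
```

Both estimates target the same navigation effect, but the adjusted one removes outcome variance explained by the covariate, so its standard error is smaller; the interaction coefficient tests whether the effect differs between projects.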

The primary advantage of Approach #3 is that the analysis would follow each project's design: RCTs would be analyzed as RCTs and GRTs as GRTs, and interpretation would be straightforward in both analyses. The primary disadvantages are that this approach would accommodate only 4 of the 9 projects and would present two sets of potentially conflicting results. Because each analysis would be based on only two projects, power would also be reduced relative to other options, and questions may arise regarding unequal sample sizes of projects within design type.

Approach #4: Prospective Meta-Analysis

Meta-analysis is the statistical synthesis of data from separate but similar projects, combined so that a quantitative summary of the pooled results can be obtained.18–21 An extension of this approach is prospective meta-analysis (PMA), in which studies are identified, evaluated, and determined to be eligible before the results of any of the studies become known.22 PMA addresses some of the limitations of retrospective meta-analysis. For example, retrospective analyses can be influenced by the individual study results, which can affect which studies are assessed (publication bias), study selection, which outcomes are assessed, and which treatment and patient subgroups are evaluated. In addition, PMA allows instruments and variable definitions to be standardized across studies. PMA is increasingly reported in the literature.23–32

Using this PMA approach, data from each project would be analyzed separately using methods appropriate to the project design and an effect size of the intervention (Patient Navigation) would be calculated. These effect sizes would then be combined across projects using standard meta-analytic techniques to obtain a summary measure of the general effect of Patient Navigation on the timely receipt of standard, quality cancer care.

This approach has several advantages. It recognizes the idiosyncrasies across projects and treats these as random effects. It retains individual projects’ research designs and allows for stratification according to research design quality. The PMA approach also avoids another important disadvantage of retrospective meta-analyses, where the researcher conducting the meta-analysis is dependent upon the data that are published in the literature, or must request additional data from the authors. In the PNRP, all original data would be available for analysis.
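One standard way to combine per-project effect sizes under a random-effects model is the DerSimonian-Laird estimator, sketched below in Python. The effect sizes (mean differences in days, navigated minus control) and their variances are hypothetical. The 95% interval uses a normal approximation, which can be anti-conservative with as few as eight projects; the Hartung-Knapp adjustment is a common alternative. The sign check reflects the concern that pooling is inappropriate when project effects point in opposite directions.

```python
import math


def dersimonian_laird(effects, variances):
    """Random-effects pooling of per-project effect sizes (DerSimonian-Laird)."""
    k = len(effects)
    w = [1.0 / v for v in variances]                     # inverse-variance weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))   # Cochran's Q
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0  # between-project variance
    w_re = [1.0 / (v + tau2) for v in variances]         # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    i2 = max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0   # share of variation from heterogeneity
    return {"pooled": pooled, "se": se,
            "ci95": (pooled - 1.96 * se, pooled + 1.96 * se),
            "tau2": tau2, "Q": q, "I2": i2}


def effects_share_sign(effects):
    """True if all project effects point in the same direction."""
    return all(e < 0 for e in effects) or all(e > 0 for e in effects)


# Hypothetical per-project mean differences (days) and their variances.
effects = [-12.0, -8.5, -15.0, -4.0, -9.0, -20.0, -6.5, -11.0]
variances = [9.0, 16.0, 25.0, 6.0, 12.0, 36.0, 8.0, 10.0]
if effects_share_sign(effects):
    result = dersimonian_laird(effects, variances)
```

Because between-project variance (tau2) is added to each project's sampling variance, large projects receive relatively less weight than under fixed-effect pooling, which limits the dominance of the largest projects.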

The data for each project would be analyzed separately, maintaining each project's research design and using statistical methods appropriate to that design, with particular attention to the unit of assignment. Effect sizes could then be examined by type of design (individually randomized, group randomized, or non-randomized; or randomized versus non-randomized) to assess whether the estimated magnitude of the effect of PN differs by research design type or quality.
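Examining effect sizes by design type amounts to a subgroup analysis: pool within each design type and test whether the pooled estimates differ, using a between-subgroup Q statistic referred to a chi-square distribution. The sketch below uses fixed-effect pooling within subgroups for simplicity and hypothetical effect sizes; the design labels follow Table 1, and the function names are illustrative.

```python
from collections import defaultdict

from scipy import stats


def pool_fixed(effects, variances):
    """Inverse-variance (fixed-effect) pooled estimate and its variance."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    return sum(w * y for w, y in zip(weights, effects)) / total, 1.0 / total


def compare_design_types(results):
    """results: iterable of (design_type, effect, variance), one per project.

    Pools within each design type, then tests whether the pooled effects differ."""
    grouped = defaultdict(lambda: ([], []))
    for design, effect, variance in results:
        grouped[design][0].append(effect)
        grouped[design][1].append(variance)
    pooled = {d: pool_fixed(ys, vs) for d, (ys, vs) in grouped.items()}
    overall, _ = pool_fixed([e for e, _ in pooled.values()],
                            [v for _, v in pooled.values()])
    q_between = sum((e - overall) ** 2 / v for e, v in pooled.values())
    df = len(pooled) - 1
    return pooled, q_between, df, float(stats.chi2.sf(q_between, df))


# Hypothetical per-project results (mean difference in days, variance).
results = [
    ("individually randomized", -9.0, 10.0),
    ("individually randomized", -12.0, 14.0),
    ("group randomized", -7.0, 20.0),
    ("group randomized", -10.0, 18.0),
    ("non-randomized", -14.0, 8.0),
    ("non-randomized", -11.0, 9.0),
    ("non-randomized", -16.0, 12.0),
    ("non-randomized", -6.0, 15.0),
]
pooled, q_between, df, p_between = compare_design_types(results)
```

A large between-subgroup Q (small p) would suggest that the estimated effect of PN depends on design type, for example that non-randomized projects report larger effects than randomized ones.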

A potential disadvantage is the risk that the analyses performed for the PMA might differ from the analyses eventually reported by the individual projects. That risk can be minimized by requiring the individual projects to specify in advance their primary analysis plan. However, if projects plan their primary analysis with the inclusion of data they collected beyond the common dataset, this analysis could not be completely replicated. If the individual projects have very different effect sizes (for example, several with a positive effect of navigation, and several with no effect or a negative effect in the navigated arm) then pooling the effect sizes would not be appropriate and presenting the individual project results would be recommended.

Approach #5: Simulated Group Randomized Design

In this approach, pairs of matched groups would be created within each project to mimic a pair-matched group-randomized trial. The grouping would be done so that the number of observations in each group formed within a particular project is balanced. The matching would be done so that factors most highly associated with outcomes are similarly distributed in the control and navigated study conditions. Potential matching factors could include cancer site, health insurance coverage, ethnic and racial minority status, gender, and age. The distribution of these factors would first be examined to determine overall balance between navigated and control patients within each cancer site. There is no requirement that the number of groups per project be equal, or that a group defined for one endpoint be the same as the group defined for another endpoint.

For projects where group randomization of clinics was used, subjects from each individual clinic would remain together in a single group, and not split into multiple groups even with a large sample size. In those cases where a ‘group’ was the unit of randomization or assignment, it would not be appropriate to split these units to obtain more groups; this would create groups that were not independent and violate the assumptions of the analysis plan. For some clinics that have small sample sizes it may be necessary to combine across clinics and match on cancer site to attain a sufficient number of observations per group. For projects where individual randomization was used, groups would be created based on cancer site and date of index event, in order to create well matched sets of navigated and control pairs. In the case where reasonable matching within strata has not been achieved with respect to the most influential covariates, it may be necessary to employ a mathematical model in the analysis to control for confounding.
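For individually randomized projects, the grouping step might look like the sketch below: within each cancer site, navigated and control patients are each sorted by index-event date and cut into the same number of consecutive, nearly equal-sized groups, and groups covering the same period are paired. This is one possible construction under stated assumptions, not an algorithm specified by the committee. The record fields (`id`, `site`, `arm`, `index_date`), the function names, and the number of groups per site are hypothetical, and clinic-randomized projects, whose clinics must stay intact, are not handled.

```python
import datetime as dt
import random
from collections import defaultdict


def split_evenly(items, k):
    """Split a list into k consecutive chunks whose sizes differ by at most one."""
    q, r = divmod(len(items), k)
    chunks, start = [], 0
    for j in range(k):
        size = q + (1 if j < r else 0)
        chunks.append(items[start:start + size])
        start += size
    return chunks


def simulated_group_pairs(patients, groups_per_arm_per_site):
    """Return (site, period_index, navigated_ids, control_ids) matched group pairs."""
    by_site_arm = defaultdict(list)
    for p in patients:
        by_site_arm[(p["site"], p["arm"])].append(p)
    pairs = []
    for site in sorted({s for s, _ in by_site_arm}):
        k = groups_per_arm_per_site[site]
        nav = sorted(by_site_arm[(site, "nav")], key=lambda p: p["index_date"])
        ctl = sorted(by_site_arm[(site, "ctl")], key=lambda p: p["index_date"])
        # Group j of each arm covers the same stretch of calendar time.
        for j, (g_nav, g_ctl) in enumerate(zip(split_evenly(nav, k), split_evenly(ctl, k))):
            pairs.append((site, j, [p["id"] for p in g_nav], [p["id"] for p in g_ctl]))
    return pairs


# Hypothetical patient records from one individually randomized project.
rng = random.Random(7)
start = dt.date(2008, 1, 1)
patients = [
    {"id": i, "site": rng.choice(["breast", "colorectal"]), "arm": rng.choice(["nav", "ctl"]),
     "index_date": start + dt.timedelta(days=rng.randrange(730))}
    for i in range(200)
]
pairs = simulated_group_pairs(patients, {"breast": 4, "colorectal": 2})
```

Sorting by index date before splitting means each navigated group is paired with a control group from the same site and calendar period, and every patient is assigned to exactly one group.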

Two methods of analysis could be considered for this approach: ANOVA and simple t-tests (or permutation tests where the assumption of normality is grossly violated). These methods have been described elsewhere.7, 33, 34
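With pairs of matched groups, one natural analysis is a paired t-test on the within-pair differences in group means (navigated minus control), with an exact sign-flip permutation test as a distribution-free alternative. The sketch below uses only the Python standard library; the differences are hypothetical, the t statistic would be compared with a t distribution on n - 1 df, and exact enumeration of 2^n sign patterns is practical only for modest numbers of pairs.

```python
import itertools
import math
import statistics


def paired_t(differences):
    """Paired t statistic and df for within-pair differences (navigated - control)."""
    n = len(differences)
    mean = statistics.fmean(differences)
    sd = statistics.stdev(differences)
    return mean / (sd / math.sqrt(n)), n - 1


def sign_flip_permutation_p(differences):
    """Exact two-sided permutation p-value: under the null hypothesis, the
    navigated/control labels within each pair, and hence the sign of each
    difference, are exchangeable."""
    observed = abs(sum(differences))
    count = total = 0
    for signs in itertools.product((1, -1), repeat=len(differences)):
        total += 1
        if abs(sum(s * d for s, d in zip(signs, differences))) >= observed - 1e-12:
            count += 1
    return count / total


# Hypothetical differences in mean days to diagnostic resolution, one per matched pair.
differences = [-6.2, -3.1, -8.4, 1.5, -4.7, -2.9, -5.5, -0.8]
t_stat, df = paired_t(differences)
p_perm = sign_flip_permutation_p(differences)
```

The permutation test needs no normality assumption, but with very few pairs its smallest attainable p-value is limited (2 / 2^n), so the number of constructed pairs affects what can be detected.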

Strengths of this approach are that it would use all of the data from all eight projects and would help ensure balance both in the number of observations in each constructed group within a project and in the number of groups in each condition. Balance is important in group-randomized trials because it helps limit the potential impact of other problems that can occur in GRT data.35 Group sizes should be similar enough that weighting of effects would not be required. Another advantage of this method is that, by creating comparable groupings across all PNRP projects, confounding that occurs at the group level can be controlled in the analysis. Potential disadvantages are that this approach is novel and untested and, more importantly, that it would not reflect the original design of most of the PNRP projects.

Discussion

The PNRP presents some unusual analytic challenges. It is clearly distinct from traditional multicenter randomized trials, and traditional methods for analyzing multicenter trials could not be applied.36 Instead, we considered five alternative approaches to the analysis of the PNRP data and examined their strengths and weaknesses. As community-based participatory research gains strength as a methodology for addressing health disparities, we anticipate that other research groups will face similar challenges when analyzing multisite studies.

Although we considered Approach #1, we judged its weaknesses to outweigh its strengths. The PNRP was created with the overarching goal of estimating the value of patient navigation. Results from individual projects would certainly add to the research literature on this issue, but no unified summary would emerge, and readers would be left to find, evaluate, and synthesize the reports from the individual projects themselves. In addition, this approach would not allow a thorough evaluation of heterogeneity in navigation effects across projects. Approach #1 would, however, be more appropriate if the effect sizes from the individual projects were in opposite directions, in which case a single synthesized effect size would not be meaningful.

A further major disadvantage of Approach #1 is that a series of project-specific analyses would have less power than a combined analysis. Pooling data provides sufficient sample size to explore the effects of navigation across subpopulations of interest. Moreover, because the number of cancers arising in primary care settings is small, navigation programs that target these settings are unlikely to have enough diagnosed cancers to explore effects for each cancer site. Pooling data across cancer sites offers a greater opportunity to examine the effects of navigation programs that target the full cancer continuum, from diagnosis through treatment.
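The power argument can be made concrete with a normal-approximation calculation for comparing two independent proportions. The inputs are purely hypothetical (control and navigated proportions of 0.60 and 0.70, with 150 patients per arm in a single project versus eight such projects pooled), and the sketch deliberately ignores clustering and between-project heterogeneity, both of which would lower the pooled power in practice.

```python
from statistics import NormalDist


def power_two_proportions(p_control, p_navigated, n_per_arm, alpha=0.05):
    """Approximate power of a two-sided z-test comparing two independent proportions.

    Uses the unpooled variance under the alternative and ignores the negligible
    probability of rejecting in the wrong direction. Assumes independent patients,
    i.e. no intraclass correlation.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    variance = p_control * (1 - p_control) + p_navigated * (1 - p_navigated)
    se = (variance / n_per_arm) ** 0.5
    return NormalDist().cdf(abs(p_navigated - p_control) / se - z_crit)
```

Under these assumptions, `power_two_proportions(0.60, 0.70, 150)` is roughly 0.45, whereas `power_two_proportions(0.60, 0.70, 8 * 150)` exceeds 0.99.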

Two analytic approaches that would pool data across projects were considered unacceptable by the Design and Analysis Committee. First, simply combining all data and conducting an individual-level analysis (Approach #2) would ignore intraclass correlation and each project’s unique study design, greatly increasing the likelihood of a type I error.7, 13 In addition, Approach #2 would give projects with large sample sizes greater weight, which could unduly influence the findings.37 Second, Approach #3, which would combine data only from projects having similar study designs, was deemed undesirable because it would exclude data from five of the eight projects.
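The inflation of type I error from ignoring intraclass correlation can be quantified with the design effect, DEFF = 1 + (m - 1)ρ, where m is the average number of patients per group and ρ is the intraclass correlation. An individual-level analysis understates the standard error by a factor of √DEFF. The sketch below assumes equal group sizes and a large-sample z-test; the function names and the example values m = 50 and ρ = 0.02 are hypothetical.

```python
from statistics import NormalDist


def design_effect(mean_group_size, icc):
    """Variance inflation from clustering: 1 + (m - 1) * ICC (equal group sizes assumed)."""
    return 1 + (mean_group_size - 1) * icc


def actual_type1_error(mean_group_size, icc, alpha=0.05):
    """True type I error of a nominal-alpha z-test that ignores clustering.

    Ignoring clustering shrinks the SE by sqrt(DEFF), so the naive statistic equals
    the correct one times sqrt(DEFF). It therefore exceeds z_{1-alpha/2} whenever
    the correct N(0, 1) statistic exceeds z_{1-alpha/2} / sqrt(DEFF).
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    threshold = z_crit / design_effect(mean_group_size, icc) ** 0.5
    return 2 * (1 - NormalDist().cdf(threshold))
```

With m = 50 and ρ = 0.02, DEFF = 1.98, and a test nominally at α = 0.05 actually rejects a true null hypothesis about 16% of the time. Even a small intraclass correlation matters when groups are large.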

The two remaining approaches each have potential strengths and weaknesses. Strengths of the prospective meta-analysis (PMA) approach included an analytic method familiar to most readers, the ability to use data from all projects, the ability to account for the unique research design of each project, the ability to avoid problems common to many meta-analytic efforts by virtue of having complete access to the original data, and its prospective nature. A weakness is that a meta-analysis conducted before the individual project results are published risks inconsistencies with later reports from the separate projects. Such inconsistencies could be managed through governance, for example by enforcing (or at least negotiating) agreement between the analytic methods used in the pooled analysis and those used by the individual PNRP projects. In addition, any differences in analytic methods, and any resulting discrepancies between the results reported from the pooled analysis and from the individual projects, would need to be fully disclosed and explained in subsequent publications.
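The pooling step of a prospective meta-analysis could be implemented in several ways. One widely used choice, shown here as an assumption rather than as the PNRP analysis plan, is inverse-variance weighting with a DerSimonian-Laird random-effects estimate of the between-project variance τ². Each project first supplies an effect estimate and standard error from an analysis appropriate to its own design (for example, a cluster-adjusted estimate for a group-randomized project), which is how this kind of pooling accommodates heterogeneous designs. Cochran's Q and I² summarize heterogeneity of effects across projects. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def dersimonian_laird(estimates, std_errors, level=0.95):
    """Random-effects pooling of project-level effect estimates (e.g. log odds ratios)."""
    if len(estimates) != len(std_errors) or len(estimates) < 2:
        raise ValueError("need at least two estimates with matching standard errors")
    w = [1 / se**2 for se in std_errors]                  # fixed-effect weights
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))  # Cochran's Q
    df = len(estimates) - 1
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)                         # between-project variance
    w_re = [1 / (se**2 + tau2) for se in std_errors]      # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum(w_re)
    se_pooled = math.sqrt(1 / sum(w_re))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0         # share of variation from heterogeneity
    return {
        "pooled": pooled,
        "se": se_pooled,
        "ci": (pooled - z * se_pooled, pooled + z * se_pooled),
        "tau2": tau2,
        "Q": q,
        "I2": i2,
    }
```

Because the weights depend on each estimate's variance rather than on raw sample size, a large project does not dominate in the way it would under simple individual-level pooling, and a positive τ² makes the weights more nearly equal still.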

Advantages of Approach #5, which would construct a simulated pair-matched group-randomized trial, include using data from all projects, ensuring balance between intervention and control conditions, and increased efficiency. Disadvantages include an untested analytic approach unfamiliar to reviewers and readers, reduced transparency because the analysis does not adhere to the original designs of the PNRP projects, difficulty achieving sufficient sample size per group, and issues of weighting if some projects contribute more groups than others.

Adequate control of confounding is an important analytic issue in all of these methods. In pooled analyses, confounding can occur at several levels: at the level of the PNRP project and cancer site, at the level of groups within the pooled data (e.g., clinics or hospitals), and at the individual patient level. Factors that are highly correlated are difficult to separate under any analytic strategy. For example, three of the four sites examining cervical cancer are non-randomized, whereas four of the five sites examining colon cancer are randomized; as a result, it will be difficult to isolate the potential effects of cancer site from those of study design. Confounding by group-level (e.g., clinic) characteristics is also important in all analytic methods. Whereas group-randomized trials have inherent groups whose characteristics can be assessed, a potential strength of Approach #5 is that it creates similar groupings within the other study designs, allowing confounding to be controlled at this level.

Our discussion of analytic approaches has several limitations. First, the conclusions reached were based on expert opinion and not derived from actual analyses. Second, because data collection is still underway, we did not perform simulations, for example to contrast results from different analytic strategies or assumptions. Third, the issues faced were unusual, and there is little existing literature against which to place these conclusions in context. Even so, programs that involve different designs and interventions addressing the same problem are not uncommon.

The PNRP faced an unusual situation in which timely program evaluation required the analysis of pooled data arising from projects having heterogeneous study designs. This situation could re-emerge in future multisite, community-based participatory research projects. The PNRP D&A Committee considered several analytic approaches and concluded that a prospective meta-analysis is one appropriate analytic strategy in such situations. A novel simulated group-randomized approach was also proposed as an alternative. Future research (e.g., data simulations) would help clarify how program evaluation results are influenced by the analytic method used.

Acknowledgments

The Patient Navigation Research Program is supported by NIH grants U01CA116892, U01CA117281, U01CA116903, U01CA116937, U01CA116924, U01CA116885, U01CA116875, and U01CA116925, and by American Cancer Society grant #SIRSG-05-253-01. The contents are solely the responsibility of the authors and do not necessarily represent the official views of the Center to Reduce Cancer Health Disparities, NCI/NIH, or the American Cancer Society.

Abbreviations

- PNRP: Patient Navigation Research Program
- NCI: National Cancer Institute
- CRCHD: Center to Reduce Cancer Health Disparities
- RCT: randomized clinical trials
- GRT: group-randomized trials
- QE: quasi-experimental designs

Patient Navigation Research Program Investigators

Program Office

National Cancer Institute, Center to Reduce Cancer Health Disparities: Martha Hare, Mollie Howerton, Ken Chu, Emmanuel Taylor, Mary Ann Van Duyn.

Evaluation Contractor

NOVA Research Company: Paul Young, Frederick Snyder.

Clinical Centers

Boston University Women’s Interdisciplinary Research Center: PI-Karen L. Freund, Tracy A. Battaglia.

Denver Health and Hospital Authority: PI-Peter Raich, Elizabeth Whitley.

George Washington University Cancer Institute: PI-Steven R. Patierno, Lisa M. Alexander, Paul H. Levine, Heather A. Young, Heather J. Hoffman, Nancy L. LaVerda.

H. Lee Moffitt Cancer Center and Research Institute: PI-Richard G. Roetzheim, Cathy Meade, Kristen J. Wells.

Northwest Portland Area Indian Health Board: PI-Victoria Warren-Mears.

Northwestern University Robert H. Lurie Comprehensive Cancer Center: PI-Steven Rosen, Melissa Simon.

Ohio State University: PI-Electra Paskett.

University of Illinois at Chicago and Access Community Health Center: PI-Elizabeth Calhoun, Julie Darnell.

University of Rochester: PI-Kevin Fiscella, Samantha Hendren.

University of Texas Health Science Center at San Antonio Cancer Therapy and Research Center: PI-Donald Dudley, Kevin Hall, Anand Karnard, Amelie Ramirez.

References

1. Jean-Pierre P, Hendren S, Fiscella K, et al. Understanding the Processes of Patient Navigation to Reduce Disparities in Cancer Care: Perspectives of Trained Navigators from the Field. J Cancer Educ. 2010. doi: 10.1007/s13187-010-0122-x. [Epub ahead of print]

2. Wells KJ, Battaglia TA, Dudley DJ, et al. Patient navigation: state of the art or is it science? Cancer. 2008 Oct 15;113(8):1999–2010. doi: 10.1002/cncr.23815.

3. Robinson-White S, Conroy B, Slavish KH, Rosenzweig M. Patient navigation in breast cancer: a systematic review. Cancer Nurs. 2010 Mar-Apr;33(2):127–140. doi: 10.1097/NCC.0b013e3181c40401.

4. Friedenreich CM. Methods for pooled analyses of epidemiologic studies. Epidemiology. 1993 Jul;4(4):295–302. doi: 10.1097/00001648-199307000-00004.

5. Blettner M, Sauerbrei W, Schlehofer B, Scheuchenpflug T, Friedenreich C. Traditional reviews, meta-analyses and pooled analyses in epidemiology. Int J Epidemiol. 1999 Feb;28(1):1–9. doi: 10.1093/ije/28.1.1.

6. Friedman L, Furberg C, DeMets D. Fundamentals of Clinical Trials. 3rd ed. New York, NY: Springer-Verlag; 1998.

7. Murray D. Design and Analysis of Group-Randomized Trials. New York, NY: Oxford University Press; 1998.

8. Cornfield J. Randomization by group: a formal analysis. Am J Epidemiol. 1978 Aug;108(2):100–102. doi: 10.1093/oxfordjournals.aje.a112592.

9. Kish L. Survey Sampling. New York, NY: John Wiley & Sons; 1965.

10. Shadish W, Cook T, Campbell D. Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Boston, MA: Houghton Mifflin Company; 2002.

11. Peikes D, Chen A, Schore J, Brown R. Effects of care coordination on hospitalization, quality of care, and health care expenditures among Medicare beneficiaries: 15 randomized trials. JAMA. 2009 Feb 11;301(6):603–618. doi: 10.1001/jama.2009.126.

12. Searle S. Linear Models. New York, NY: John Wiley & Sons; 1971.

13. Donner A, Klar N. Design and Analysis of Cluster Randomization Trials in Health Research. London: Arnold; 2000.

14. Harville DA. Maximum Likelihood Approaches to Variance Component Estimation and to Related Problems. J Am Stat Assoc. 1977;72(358):320–338.

15. Moher D, Hopewell S, Schulz KF, et al. CONSORT 2010 explanation and elaboration: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c869. doi: 10.1136/bmj.c869.

16. Kent DM, Trikalinos TA, Hill MD. Are unadjusted analyses of clinical trials inappropriately biased toward the null? Stroke. 2009 Mar;40(3):672–673. doi: 10.1161/STROKEAHA.108.532051.

17. Steyerberg EW, Bossuyt PM, Lee KL. Clinical trials in acute myocardial infarction: should we adjust for baseline characteristics? Am Heart J. 2000 May;139(5):745–751. doi: 10.1016/s0002-8703(00)90001-2.

18. Glass G, McGaw B, Smith M. Meta-analysis in Social Research. Beverly Hills, CA: Sage Publications; 1981.

19. Hedges L, Olkin I. Statistical Methods for Meta-Analysis. New York, NY: Academic Press; 1985.

20. Borenstein M, Hedges L, Higgins J, Rothstein H. Introduction to Meta-Analysis. West Sussex, UK: John Wiley & Sons, Ltd; 2009.

21. Grissom R, Kim J. Effect Sizes for Research: A Broad Practical Approach. Mahwah, NJ: Lawrence Erlbaum Associates, Inc; 2005.

22. Cochrane Prospective Meta-Analysis Methods Group. http://pma.cochrane.org. Accessed August 8, 2011.

23. Protocol for prospective collaborative overviews of major randomized trials of blood-pressure-lowering treatments. World Health Organization-International Society of Hypertension Blood Pressure Lowering Treatment Trialists’ Collaboration. J Hypertens. 1998 Feb;16(2):127–137.

24. Margitic SE, Morgan TM, Sager MA, Furberg CD. Lessons learned from a prospective meta-analysis. J Am Geriatr Soc. 1995 Apr;43(4):435–439. doi: 10.1111/j.1532-5415.1995.tb05820.x.

25. Turok DK, Espey E, Edelman AB, et al. The methodology for developing a prospective meta-analysis in the family planning community. Trials. 2011;12:104. doi: 10.1186/1745-6215-12-104.

26. Cholesterol Treatment Trialists’ (CTT) Collaboration. Protocol for a prospective collaborative overview of all current and planned randomized trials of cholesterol treatment regimens. Cholesterol Treatment Trialists’ (CTT) Collaboration. Am J Cardiol. 1995 Jun 1;75(16):1130–1134. doi: 10.1016/s0002-9149(99)80744-9.

27. Margitic SE, Inouye SK, Thomas JL, Cassel CK, Regenstreif DI, Kowal J. Hospital Outcomes Project for the Elderly (HOPE): rationale and design for a prospective pooled analysis. J Am Geriatr Soc. 1993 Mar;41(3):258–267. doi: 10.1111/j.1532-5415.1993.tb06703.x.

28. Baigent C, Keech A, Kearney PM, et al. Efficacy and safety of cholesterol-lowering treatment: prospective meta-analysis of data from 90,056 participants in 14 randomised trials of statins. Lancet. 2005 Oct 8;366(9493):1267–1278. doi: 10.1016/S0140-6736(05)67394-1.

29. Valsecchi MG, Masera G. A new challenge in clinical research in childhood ALL: the prospective meta-analysis strategy for intergroup collaboration. Ann Oncol. 1996 Dec;7(10):1005–1008. doi: 10.1093/oxfordjournals.annonc.a010491.

30. Askie LM, Baur LA, Campbell K, et al. The Early Prevention of Obesity in CHildren (EPOCH) Collaboration--an individual patient data prospective meta-analysis. BMC Public Health. 2010;10:728. doi: 10.1186/1471-2458-10-728.

31. Design, rationale, and baseline characteristics of the Prospective Pravastatin Pooling (PPP) project--a combined analysis of three large-scale randomized trials: Long-term Intervention with Pravastatin in Ischemic Disease (LIPID), Cholesterol and Recurrent Events (CARE), and West of Scotland Coronary Prevention Study (WOSCOPS). Am J Cardiol. 1995 Nov 1;76(12):899–905. doi: 10.1016/s0002-9149(99)80259-8.

32. Province MA, Hadley EC, Hornbrook MC, et al. The effects of exercise on falls in elderly patients. A preplanned meta-analysis of the FICSIT Trials. Frailty and Injuries: Cooperative Studies of Intervention Techniques. JAMA. 1995 May 3;273(17):1341–1347.

33. Donner A. Statistical methodology for paired cluster designs. Am J Epidemiol. 1987 Nov;126(5):972–979. doi: 10.1093/oxfordjournals.aje.a114735.

34. Donner A, Donald A. Analysis of data arising from a stratified design with the cluster as unit of randomization. Stat Med. 1987 Jan-Feb;6(1):43–52. doi: 10.1002/sim.4780060106.

35. Gail MH, Mark SD, Carroll RJ, Green SB, Pee D. On design considerations and randomization-based inference for community intervention trials. Stat Med. 1996 Jun 15;15(11):1069–1092. doi: 10.1002/(SICI)1097-0258(19960615)15:11<1069::AID-SIM220>3.0.CO;2-Q.

36. Worthington H. Methods for pooling results from multi-center studies. J Dent Res. 2004;83(Spec No C):C119–121. doi: 10.1177/154405910408301s25.

37. Bravata DM, Olkin I. Simple pooling versus combining in meta-analysis. Eval Health Prof. 2001 Jun;24(2):218–230. doi: 10.1177/01632780122034885.