Abstract

In recent years, behavioral economics has gained much attention in psychology and public policy. Despite increased interest and continued basic experimental studies, the application of behavioral economics to therapeutic settings remains relatively sparse. Using examples from both basic and applied studies, we provide an overview of the principles comprising behavioral economic perspectives and discuss implications for behavior analysts in practice. A call for further translational research is provided.

Keywords: behavioral economics, demand, discounting, tutorial

PRACTICE POINTS.

The present tutorial describes behavior analytic concepts relevant to behavioral economics that have implications for effective service delivery.

These concepts are demand functions, reinforcer competition, open versus closed economies, and discounting.

The field of study known as behavioral economics began as a purely academic attempt at modeling irrational consumer choices, thereby challenging the notion of the rational consumer of traditional economics. However, recent events have launched behavioral economics from a purely academic pursuit to the forefront of public policy and pop psychology. Popular books promoting behavioral economic concepts, such as Thaler and Sunstein's Nudge: Improving Decisions about Health, Wealth, and Happiness (2008) and Dan Ariely's Predictably Irrational: The Hidden Forces That Shape Our Decisions (2008) and The Upside of Irrationality: The Unexpected Benefits of Defying Logic at Work and at Home (2010), have gained critical acclaim and widespread publicity. Thaler and Sunstein's Nudge caught the interest of President Barack Obama (Grunwald, 2009), prompting him to appoint Sunstein as the administrator of the Office of Information and Regulatory Affairs. Suffice it to say, behavioral economics has become a staple in the understanding of ways to engineer environments to promote sustainable and positive behavior changes. It is because of these attributes that we propose that a behavioral economic approach to service delivery, one based upon the principles of behavior analysis rather than on the traditional behavioral economics derived from psychology and economics, can lead to a greater understanding of behavior in academic or therapeutic settings.

Before we provide examples of how behavioral economic concepts may be applied to academic or therapeutic settings, it is imperative to understand the assumptions of both traditional and behavioral approaches to economics. Collectively, the term behavioral economics describes an approach to understanding decision making and behavior that integrates behavioral science with economic principles (see Camerer, Loewenstein, & Rabin, 2004). Traditional economics, according to the classic philosopher and economist John Stuart Mill (see Persky, 1995), assumes that humans exhibit behavior commensurate with a homo economicus profile (the “economic human”). As a homo economicus, individuals are assumed to be completely aware of the costs and benefits associated with all possible actions. Thus, people will subsequently behave in a way that fully maximizes their long-term gain (i.e., humans are analogous to walking calculators, constantly considering the pros and cons of their actions and computing the best behavioral alternatives for the situation). All behaviors are, in this sense, carefully calculated and entirely rational. Although this perspective is laudable and gives the benefit of the doubt to the choices made by human consumers, it is clear that people do not always make decisions that maximize their long-term gain. Of course, this is an empirical question, and one that behavioral economics has attempted to address.

Behavioral economists take a contrarian stance: individuals, no matter their age or intelligence, are rather myopic with respect to what is best for them. Behavioral economics assumes irrationality in decision making. As such, individuals are susceptible to temptations and tend to make poor and rash decisions, even when better options that would improve long-term outcomes are clearly available. Thaler and Sunstein (2008) propose that the term “Homer” economicus replace the homo economicus of traditional economics when describing humans, as most decision makers resemble the fictional Homer Simpson (e.g., they live for the moment, discount delayed consequences, pay poor attention to detail, and are relatively uninformed of behavioral costs/benefits). An astute observer of human behavior will undoubtedly agree that many behaviors are less than rational. Undergraduates check social media pages rather than take notes during lecture, despite the resulting loss in knowledge acquisition and possible detriment to their chances of doing well in the class. Children choose a brownie over an apple in the lunch line, despite the long-term decrements in health. Teachers and administrators deviate from empirically supported curricula to gain student approval or make lesson plans easier to implement, despite the loss in student learning and subsequent dips in evaluations of teaching efficacy.

Notwithstanding the consensus that behavioral economics accounts for irrational behaviors, a wide continuum exists within this field with respect to the principles that may explain such irrationality. On one end of the continuum, theorists take a more cognitive perspective and contend that irrational behaviors are the result of mentalistic or psychological causes such as stereotype biases, cognitive fallacies, or psychological predispositions (see Camerer, 1999; Kahneman, Slovic, & Tversky, 1982). On the opposite end of the continuum lies the behaviorist's perspective that irrationality is grounded in principles of operant learning (see Madden, 2000; Skinner, 1953). This perspective assumes that environmental influences establish particular negative consequences (those associated with irrational or problematic behaviors; e.g., risk taking, cheating on tests, unhealthy food choices) as having more reinforcing value than other, more positive consequences (those associated with rational or desirable behaviors; e.g., self-control, studying for a test, healthy food choices). Behavioral economists have termed this approach the “reinforcer pathology” model, suggesting that pathological patterns of responding for differentially valued reinforcers may be a more parsimonious and conceptually systematic explanation for irrational behaviors than mentalistic constructs (Bickel, Jarmolowicz, Mueller, & Gatchalian, 2011). For the remainder of this tutorial, we will speak exclusively of behavioral economics from the behaviorist perspective, for two reasons. First, although behavioral economics stemming from psychology and economics features an interesting and dense literature base, the behaviorist perspective is parsimonious and does not require abstract theoretical explanations that are difficult to observe and evaluate empirically.
Because the behaviorist approach is expressed in the language of operant learning, a language that is familiar to behavior analysts, it provides a framework that is easily understood, and its recommendations for altering environments to promote positive behavior change can be implemented relatively quickly. Second, recent research has begun to suggest that behaviorist perspectives on economic principles can succinctly explain the findings of the more mentalistic approaches to irrational behaviors (e.g., Kohlenberg, Hayes, & Hayes, 1991; Reed, DiGennaro Reed, Chok, & Brozyna, 2011). A perspective that is conceptually systematic with radical behaviorism directs attention to the environmental influences on irrational behaviors, which in turn suggests environmental solutions that can be employed to help improve decision making.

The behaviorist approach to behavioral economics was explicitly summarized by Hursh (1980), who proposed that economic concepts could better advance a science of human behavior. Hursh (1984) further advised that operant concepts could help explain principles of behavioral economics. In short, behavior analysis provides both complementary and explanatory solutions to behavioral economic concepts. The concepts outlined by Hursh (1980, 1984) for understanding behavioral economics include (a) demand functions, (b) reinforcer competition, and (c) open versus closed economies. In recent years, behavior analysts have also added the concept of discounting to this list (see Francisco, Madden, & Borrero, 2009).

The present tutorial explores how each of these four concepts can contribute to an understanding of the ecology of applied settings. These concepts can also help establish a number of theoretical underpinnings for effective behavior management procedures. We believe that behavioral economics is particularly suited for application in practical settings for several reasons. First, behavioral economics has a large and dense evidence base supporting its use and efficacy in laboratory studies; thus, the principles discussed here are well established through empirical research. Second, although behavioral economics has experienced a relative boom in experimental support, its applied utility remains largely undocumented in less controlled therapeutic settings. A secondary purpose of this tutorial, therefore, is to challenge behavioral practitioners and researchers to integrate these principles and concepts into their own practices to broaden the applied knowledge base of behavioral economic concepts in academic and therapeutic settings. We believe that behavioral economics has much to offer, despite the relative paucity of applied research and the seemingly esoteric nature of this topic. Novel research applying behavioral economic principles to challenges in therapeutic settings is long overdue. Third, because behavioral economics considers the interplay of economic systems and multiple ecologies of reinforcement, this approach is an excellent complement to Sheridan and Gutkin's (2000) and Burns' (2011) call for ecological approaches to assessment and intervention conceptualization in treatment settings. In this respect, behavioral economic considerations fall squarely within the behavior analytic approach to therapeutic services that behavior analysts have been advocating for some time (Martens & Kelly, 1993).
Finally, and perhaps most importantly, behavioral economic approaches are inherently efficient because they focus on relatively simple environmental factors that can promote positive behavior change. In an era of economic uncertainty and budgetary constraints, cost-effective, empirically supported interventions are at a premium. Applying behavioral economic concepts to service delivery settings may be an ideal solution for today's economic challenges.

This tutorial will detail each of the behavioral economic concepts that have been discussed in both the experimental and applied literature. We will describe each concept using lay examples, supplementing these discussions with examples from basic and applied research. Finally, we will provide implications of each concept for behavior analysts in practice when evaluating their own settings or intervention strategies.

General Principles and Basic Terminology

A number of economic terms will be used throughout the remainder of the tutorial, including commodity, consumption, cost, benefit, price, and unit price. Therefore, it is important that these terms be defined before proceeding. We will use a running example throughout this section to aid in defining and elucidating the core concepts associated with behavioral economic analyses. For this example, consider a child who is working to obtain tokens exchangeable for backup reinforcers. Tokens are delivered contingent upon a specified number of words read correctly per minute during a reading intervention.

Commodity. In traditional economics, a commodity is a good or event that is available in the market for purchase/consumption. In behavioral economics, the term commodity refers to the reinforcer or item that an individual will work to obtain. Similar to reinforcers, commodities may range from tangible (e.g., toys) to intangible goods (e.g., teacher attention). In our example above, the primary commodity of interest is tokens (obtained via correctly read words). One could also consider the backup reinforcers as a secondary commodity (obtained via expenditure of tokens).

Consumption. In economic analyses, consumption is the process of engaging with the commodity of interest following a purchase, given its cost. In behavioral economics, the term consumption refers to the amount of a commodity obtained in a given session or observation (e.g., number of tokens or praise statements earned). Most often we are concerned with total consumption, or the overall amount of a commodity obtained within a session. Using the previous example, reading a prespecified number of words correctly per minute during the intervention enables the consumption of tokens by the student. Exchanging the tokens for other items or activities results in the consumption of backup reinforcers.

Cost, benefit, and unit price. To consume a commodity, an individual or group of individuals must meet some requirement related to cost, benefit, and unit price. When we speak of cost in behavioral economic terms, we are referring to some requirement an individual has to meet in order to obtain a given commodity (Hursh, Madden, Spiga, DeLeon, & Francisco, 2013). Most commonly, cost is quantified by the number of responses required to obtain the commodity (e.g., ten responses or fixed ratio [FR] 10) but may also be measured in other ways, such as the expenditure of effort, the amount of money exchanged, or the amount of time that passes until the delivery of a reinforcer. The term benefit refers to the amount of a commodity that can be obtained (Hursh et al., 2013). For example, ten responses may allow a person to obtain one token. Together, the cost and benefit of a commodity comprise the price of a commodity. In behavioral economics, the price is calculated as the ratio of cost to benefit and is referred to as unit price (for the remainder of this tutorial, price and unit price will be used interchangeably). Within our running example, the cost of each token is to correctly read the specified number of words within a minute. The cost of each backup reinforcer is the number of tokens exchangeable for the item or activity. During the first phase of the intervention, the behavior analyst may require one word read correctly per minute to access a token; thus, the unit price equals the cost (one word read correctly per minute) divided by the benefit (one token), which equals 1.00. As the intervention progresses, the behavior analyst may start to fade the tokens, thereby increasing the unit price of tokens. Rather than one word correct for one token (a unit price of 1.00), the behavior analyst may require two words correct in a minute to access a token (two words divided by one token equals a unit price of 2.00).
Exchanging the tokens for other goods also operates using unit prices. For example, if 10 tokens equal 10 minutes of computer time, the unit price is 1.00. However, 20 tokens may access 40 minutes of computer time, equating to a unit price of .50.
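The unit-price arithmetic above reduces to a single division. A minimal Python sketch reproducing the reading-intervention and token-exchange examples (the function name `unit_price` is our own illustrative choice, not a standard API):

```python
def unit_price(cost: float, benefit: float) -> float:
    """Unit price = cost / benefit (e.g., words read correctly per token earned)."""
    if benefit <= 0:
        raise ValueError("benefit must be positive")
    return cost / benefit


# Reading intervention: words read correctly per minute (cost) for one token (benefit).
print(unit_price(1, 1))    # 1.0
print(unit_price(2, 1))    # 2.0, after the behavior analyst fades the tokens
# Token exchange: tokens spent (cost) per minute of computer time (benefit).
print(unit_price(10, 10))  # 1.0
print(unit_price(20, 40))  # 0.5
```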

The Law of Demand. At the crux of behavioral economics is the law of demand, which suggests that consumption declines when the unit price of a given commodity increases (Samuelson & Nordhaus, 1985). Using the reading intervention example, consider what might happen if the unit price of a token became very high. Suppose that 100 words read correctly per minute resulted in the consumption of one token. At such a high unit price, the student may stop responding and no longer consume reinforcers (tokens and the backup reinforcers). The law of demand suggests that any reinforcer, regardless of the strength of preference for that commodity, will lose its relative reinforcing efficacy if the unit price becomes too large. Once its reinforcing efficacy is lost, the commodity no longer functions as a reinforcer, and responding to access that commodity will not persist.

Demand Functions

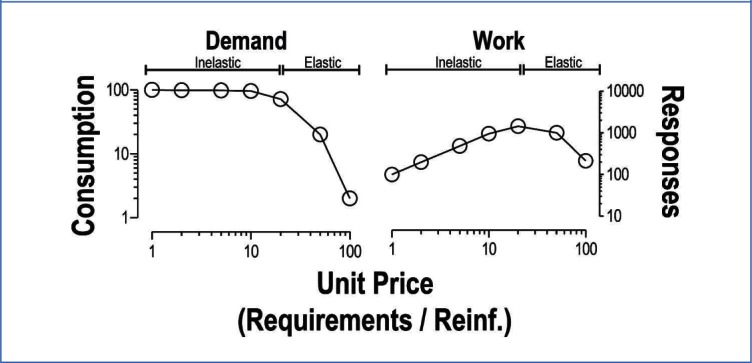

In any kind of economy, the price-setting agent (i.e., the retailer, the behavior analyst) seeks to identify the highest price that consumers will tolerate, assuming the commodity of interest is sensitive to the law of demand. In the reading example above, the behavior analyst would be interested in the highest number of words the student will read in order to earn each token. The degree to which consumption remains stable across price increases is considered the consumers' demand. Demand that maintains a stable level of consumption across price increases is considered inelastic. For example, the student above may consume just as many tokens whether the tokens cost three words per minute or six words per minute. That is, consumption does not change as a function of price. When a price becomes too high and exceeds the consumer's threshold of acceptability, consumption of the commodity decreases, resulting in elastic demand. This falls within the assumptions of the law of demand. Inelastic and elastic demand are both depicted in the demand curve in the left panel of Figure 1, in which consumption of the target commodity is plotted on the y-axis as a function of unit price (plotted on the x-axis). In the simulated data comprising Figure 1, consumption remains relatively constant until a unit price of approximately 20; this constitutes the inelastic portion of the demand curve. Consumption subsequently decreases as unit prices rise above 20, indicating elasticity. The unit price at which zero commodities or reinforcers are consumed is termed the breakpoint.

Figure 1.

Left panel depicts consumption as a function of price (a demand function). Right panel depicts responses as a function of price (a work function). See text for details. Note the double logarithmic axes on both panels to standardize the data for simpler visual inspection.
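One way to make the inelastic/elastic distinction concrete is to compute elasticity as the slope between adjacent points on the double-logarithmic demand curve. The sketch below assumes the conventional economic cutoff (a slope magnitude below 1 is inelastic, above 1 is elastic) and uses made-up consumption values loosely shaped like Figure 1, not the figure's actual data; all function and variable names are illustrative.

```python
import math

# Hypothetical data loosely shaped like Figure 1 (not the published values).
prices = [1, 2, 5, 10, 20, 40, 80, 160]
consumption = [100, 100, 98, 95, 90, 40, 10, 0]


def arc_elasticities(prices, consumption):
    """Slope of log(consumption) on log(price) between adjacent price points.

    Pairs that include zero consumption are skipped because log(0) is undefined.
    """
    slopes = []
    for i in range(len(prices) - 1):
        p0, p1 = prices[i], prices[i + 1]
        q0, q1 = consumption[i], consumption[i + 1]
        if q0 > 0 and q1 > 0:
            slopes.append(((p0, p1), math.log(q1 / q0) / math.log(p1 / p0)))
    return slopes


def find_breakpoint(prices, consumption):
    """First tested unit price at which consumption reaches zero (None if never)."""
    for p, q in zip(prices, consumption):
        if q == 0:
            return p
    return None


for (p0, p1), e in arc_elasticities(prices, consumption):
    label = "inelastic" if abs(e) < 1 else "elastic"  # conventional |slope| = 1 cutoff
    print(f"{p0} -> {p1}: slope {e:.2f} ({label})")
print("breakpoint:", find_breakpoint(prices, consumption))  # breakpoint: 160
```

With these invented values, every interval up to a price of 20 is inelastic and every interval beyond it is elastic, mirroring the pattern described for Figure 1.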

A second way to examine the relationship between consumption and price is with the work function, depicted in the right panel of Figure 1. The work function illustrates how responding, rather than consumption, increases and decreases with increases in price. As in the demand curve (left panel of Figure 1), responding is depicted as a function of unit price. The reader will note that the point at which the pattern moves from inelastic to elastic is the same in the demand and work functions of Figure 1: a unit price of approximately 20. Conceptually, this indicates that the peak level of responding occurs at the highest unit price at which consumption remains relatively stable.
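When cost is counted in responses and each reinforcer has a benefit of 1, the work function is simply consumption multiplied by unit price. Reusing hypothetical consumption values (not the Figure 1 data), the short sketch below finds the price at which responding peaks, a point often labeled Pmax in the demand literature; the names are our own.

```python
# Hypothetical data (not the published Figure 1 values).
prices = [1, 2, 5, 10, 20, 40, 80, 160]          # responses required per reinforcer
consumption = [100, 100, 98, 95, 90, 40, 10, 0]  # reinforcers earned per session


def work_function(prices, consumption):
    """Total responses at each price: reinforcers earned x responses per reinforcer."""
    return [p * q for p, q in zip(prices, consumption)]


responses = work_function(prices, consumption)
peak_responses, peak_price = max(zip(responses, prices))
print(responses)   # [100, 200, 490, 950, 1800, 1600, 800, 0]
print(peak_price)  # 20
```

The peak falls at the last price before consumption drops sharply, which is the correspondence between the two panels that the text describes.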

Comparisons of reinforcer demand are one way to examine relative reinforcer efficacy. Researchers have described this measure as an index of “behavior-maintenance potency” (Griffiths, Brady, & Bradford, 1979, p. 192), suggesting that reinforcers under strong demand will maintain behavior at higher response requirements than alternative reinforcers of lesser demand. An important consideration when evaluating reinforcers is that reinforcer efficacy is a multifaceted construct (Bickel, Marsch, & Carroll, 2000; Johnson & Bickel, 2006). Thus, behavior analysts must consider the collective factors of derived response rates, demand elasticity, and breakpoints for each reinforcer examined, taking special precautions to ensure that the context of the evaluation is held constant to permit comparisons of relative reinforcer efficacy using demand curve analyses.

Hursh and colleagues (Hursh, Raslear, Shurtleff, Bauman, & Simmons, 1988) provided a seminal demonstration of the utility of demand curves for evaluating demand for preferred commodities in a study of rats working for food pellets. The price was set by manipulating the number of responses necessary to earn access to the food. As the price increased, the rats' responding (work) initially increased and demand for food was inelastic, but eventually a point was reached beyond which demand became elastic and both consumption and responding decreased, similar to the example demand and work functions in Figure 1.

Borrero and colleagues (Borrero, Francisco, Haberlin, Ross, & Sran, 2007) applied the logic of a cost-benefit analysis to reinforcer demand using descriptive data on children's severe problem behavior. These researchers first calculated prices for reinforcers by dividing the number of problem behaviors observed (cost) by the number of reinforcers obtained during an observation interval (benefit), which yielded a unit price for the reinforcers. They then plotted consumption of reinforcers across unit prices, which produced demand curve functions akin to those described above. The findings from the Borrero et al. study suggest that children's problem behavior can be assessed within an economic framework, similar to studies conducted in basic experimental laboratories. Taking a proactive approach to treatment conceptualization within an economic framework, Roane, Lerman, and Vorndran (2001) applied demand analyses to the examination of reinforcer efficacy in children with developmental disabilities. As the unit price of the toys was increased, toys previously identified as highly preferred generated higher breakpoints than toys identified as less preferred. The highly preferred toys that produced higher breakpoints served as more effective reinforcers in treating problem behavior.
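The descriptive unit-price calculation attributed to Borrero et al. (2007) can be sketched as follows. The interval counts are invented for illustration, and skipping intervals with no reinforcers (where cost divided by benefit is undefined) is an assumption of this sketch, not a documented step of their procedure.

```python
# Hypothetical observation intervals: (problem behaviors observed, reinforcers obtained).
intervals = [(2, 2), (4, 2), (6, 2), (9, 1), (5, 0)]


def descriptive_demand(intervals):
    """Return sorted (unit price, consumption) pairs, one per interval.

    Unit price = problem behaviors (cost) / reinforcers obtained (benefit).
    Intervals with no reinforcers are skipped because the ratio is undefined.
    """
    points = []
    for problem_behaviors, reinforcers in intervals:
        if reinforcers > 0:
            points.append((problem_behaviors / reinforcers, reinforcers))
    return sorted(points)


print(descriptive_demand(intervals))  # [(1.0, 2), (2.0, 2), (3.0, 2), (9.0, 1)]
```

Plotting these pairs (consumption against unit price) gives a descriptive demand curve of the kind the text describes.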

Practical Considerations for Demand Functions

Whether intentional or not, behavior analysts manipulate demand functions on a daily basis. This concept is not restricted to incentive-based programs such as token economies or reinforcement schedules; the mere programming of reinforcers contingent on target behaviors evokes demand functions. Given the ubiquity of demand functions in academic or therapeutic settings, there are two key ways that behavior analysts can effectively capitalize on this concept. First, the notion of a unit price can be applied to clients' individualized treatment plans by implementing a progressive ratio (PR) schedule of reinforcement and assessing the clients' breakpoints. In a PR schedule, the cost of the reinforcer increases across successive deliveries; that is, the unit price escalates over time or across repeated responses. For example, you might ask a client to complete one work task (e.g., a math problem, items sorted) to earn access to a reinforcer. On the next trial, the client must complete two work tasks to obtain the reinforcer. Then the client must complete four, eight, and so on, doubling the response requirement each time the client earns the reinforcer (alternatively, the response requirement can increase by 1 each time if the context warrants a small step size). This progression continues until the client no longer accesses the reinforcer. The last response requirement that resulted in the client accessing a reinforcer is considered the breakpoint, and the response requirement just before the breakpoint can be used as a guide to set the price for the reinforcer. In other words, this process helps determine the highest unit price that the consumer (the client) is willing to pay (the amount of work they will complete) to obtain the commodity (access to the reinforcer). This procedure is analogous to retailers assessing the highest price consumers are willing to pay for a commodity.
By determining breakpoints for particular reinforcers, behavior analysts can obtain direct information on how much work the client will complete to obtain the reinforcer. This information may help to efficiently inform cost-effective treatment strategies that (a) maintain responding, (b) reduce costs associated with the purchase of reinforcers, and (c) reduce time spent on reinforcer delivery and thereby increase the percentage of the day spent engaged in therapeutic activities.
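The doubling progression and breakpoint rule described above can be simulated in a few lines. In this hypothetical sketch, `completes` stands in for the behavior analyst's observation of whether the client finished a given requirement, and the `max_trials` cap is our own safety limit, not part of any published procedure.

```python
from typing import Callable, Optional


def run_progressive_ratio(
    completes: Callable[[int], bool],
    start: int = 1,
    step: Callable[[int], int] = lambda r: r * 2,
    max_trials: int = 20,
) -> Optional[int]:
    """Escalate the response requirement until the client stops responding.

    Returns the last requirement the client completed (the breakpoint as
    defined in the text), or None if the first requirement was not completed.
    `max_trials` is a hypothetical safety cap on the number of trials.
    """
    requirement, last_completed = start, None
    for _ in range(max_trials):
        if not completes(requirement):
            break
        last_completed = requirement
        requirement = step(requirement)
    return last_completed


def hypothetical_client(requirement: int) -> bool:
    """Stand-in for observed responding: completes any requirement up to 12 tasks."""
    return requirement <= 12


print(run_progressive_ratio(hypothetical_client))                        # 8 (16 not completed)
print(run_progressive_ratio(hypothetical_client, step=lambda r: r + 1))  # 12
```

The two calls show how step size changes the resolution of the estimate: doubling jumps from 8 to 16 and misses the client's true limit, whereas a step of 1 locates it exactly at the cost of many more trials.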

While the use of PR schedules is intuitively appealing, such procedures present a number of issues when generating demand curves that may prove too demanding or problematic for use in academic or therapeutic settings. First, PR schedules take a fair amount of time and resources to appropriately evaluate relative reinforcer efficacy. Research has not yet conclusively demonstrated that the benefits of this procedure outweigh the cost of resources; further research on the efficiency of PR schedules for guiding practical decisions is much needed. Second, although breakpoints and demand curves may be derived from PR schedules, these metrics do not necessarily match those obtained when a series of response requirements is presented independently (i.e., not in a progressive fashion; see Bickel et al., 2000; Johnson & Bickel, 2006). Finally, recent discussions of PR schedules have highlighted that research on the initial ratio values and step sizes used in progressively increasing response requirements remains insufficient (see Poling, 2010; Roane, 2008). These limitations may put fragile populations at undue risk, given the potentially aversive nature of large step sizes and the unsettled applied research on these topics (Poling, 2010).

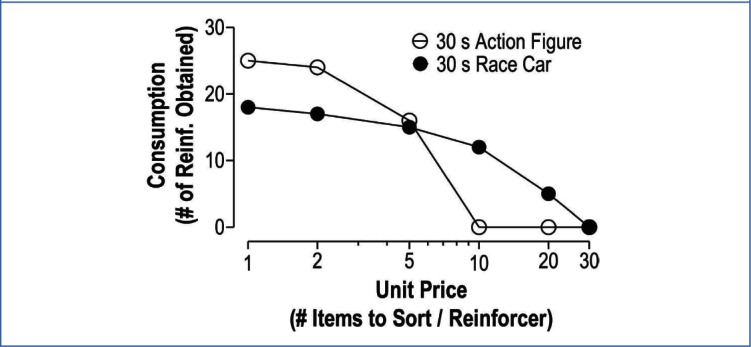

Due to some possible limitations of PR schedules in practice, we advocate that behavior analysts consider the concept of unit price to guide practice without necessarily conducting long formal assessments. The most pertinent practical consideration of unit price is that reinforcers follow the law of demand and will ultimately lose value once unit prices become too large. Key to this assumption is the notion that consumption is not uniform across prices. Reinforcers may be in high demand at low prices, but consumption becomes relatively lower once demand becomes elastic. Thus, relying on reinforcer assessments using only low response requirements (i.e., low unit prices) may not fully capture the potency of that reinforcer at larger response requirements. Consider a situation in which a behavior analyst aims to identify potential reinforcers for a child on her caseload. Based on parental report, engagement with an action figure or a race car may serve as a reinforcer. The behavior analyst collects data over several days using various prices associated with the child's educational goal of sorting objects into bins. The price is the number of items the child must sort correctly before earning 30 s access to the reinforcer. During one session, the unit price is 1.00 across repeated trials. Other sessions use prices of 2.00, 5.00, 10.00, 20.00, and 30.00. The consumption of both reinforcers is plotted as a function of price in Figure 2. As Figure 2 illustrates, both the action figure and the race car conform to the law of demand in that elasticity is observed. At a unit price of 1.00, there is relatively more consumption of action figure play, suggesting that this commodity is potentially more reinforcing than the race car. However, as price increases, it is clear that demand is stronger for the race car, since its consumption persists relative to that of the action figure.
The breakpoint for the race car is at a unit price of 30.00, compared to a breakpoint of 10.00 for the action figure. By examining reinforcer demand across differing unit prices, the behavior analyst can judge which reinforcer to use under different price arrangements. Unfortunately, many reinforcer assessments use exclusively low prices (e.g., FR1) and may lead to erroneous conclusions about the potency of a reinforcer when prices become higher. This hypothetical example highlights the importance of testing relative reinforcer demand at both low and high prices. For example, had the behavior analyst in the sorting example tested unit prices of 1.00 and 10.00, she would have identified differential demand across prices. The behavior analyst could then evaluate mid-range prices such as 2.00 or 5.00 to identify the point at which demand became elastic for the action figure.

Figure 2.

Hypothetical demand curve data for two reinforcers (action figure and race car) contingent on sorting across increasing unit prices (that is, the number of items the child must sort to obtain 30 s access to the reinforcer). Despite initially higher consumption of the action figure at low unit prices, demand persists at higher unit prices for the race car but not the action figure, highlighting the complex and multifaceted nature of reinforcer demand.
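A small sketch of the comparison the sorting example calls for: testing both reinforcers across low and high prices and checking which one is consumed more at each price. The consumption counts are invented to match the pattern described in the text (breakpoints of 10.00 and 30.00, more action figure play at low prices); they are not the Figure 2 data, and the function names are illustrative.

```python
# Hypothetical counts of 30-s access periods earned per session (not the Figure 2 data).
prices = [1, 2, 5, 10, 20, 30]
demand = {
    "action figure": [20, 15, 6, 0, 0, 0],
    "race car": [14, 13, 11, 9, 5, 0],
}


def find_breakpoint(prices, consumption):
    """First tested unit price at which consumption reaches zero (None if never)."""
    for p, q in zip(prices, consumption):
        if q == 0:
            return p
    return None


def leader_at_each_price(prices, demand):
    """Reinforcer with the highest consumption at each price, or 'tie'."""
    leaders = {}
    for i, p in enumerate(prices):
        values = {name: counts[i] for name, counts in demand.items()}
        top = max(values.values())
        best = [name for name, v in values.items() if v == top]
        leaders[p] = best[0] if len(best) == 1 else "tie"
    return leaders


for name, counts in demand.items():
    print(name, "breakpoint:", find_breakpoint(prices, counts))  # 10 and 30
print(leader_at_each_price(prices, demand))
# {1: 'action figure', 2: 'action figure', 5: 'race car', 10: 'race car', 20: 'race car', 30: 'tie'}
```

Judged only at a price of 1.00, the action figure looks like the stronger reinforcer; the ranking reverses once prices of 5.00 and above are tested, which is the kind of error the text warns FR1-only assessments can produce.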

Finally, the consideration of unit price is paramount in the systematic fading of an intervention. Intensive individualized interventions may be hard to sustain over long periods of time. Without careful consideration of demand characteristics, the withdrawal of the intervention may result in rapid decrements in student behavior. The concept of unit price suggests that the thinning of a reinforcement schedule (e.g., increasing the number of responses/duration required to access a reinforcer) should include simultaneous increases in reinforcer magnitude. By increasing reinforcer magnitude while thinning the reinforcement schedule, unit price is held constant, thereby maintaining the desired behavior (e.g., Roane, Falcomata, & Fisher, 2007).
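Holding unit price constant while thinning amounts to scaling reinforcer magnitude in proportion to the new response requirement. A minimal sketch with hypothetical values (FR 2 earning 30 s of access as the starting point; `thinning_plan` is an illustrative name); `Fraction` keeps the ratios exact so the constant unit price can be checked directly. Whether behavior is actually maintained under such a plan remains an empirical question for each client.

```python
from fractions import Fraction


def thinning_plan(start_ratio, start_seconds, new_ratios):
    """Scale access duration with each new ratio so responses per second of
    access (the unit price) stays equal to the starting unit price."""
    base_price = Fraction(start_ratio, start_seconds)
    plan = []
    for ratio in new_ratios:
        seconds = ratio / base_price  # exact Fraction arithmetic
        plan.append((ratio, seconds, ratio / seconds))
    return plan


# Hypothetical starting point: FR 2 earns 30 s of access.
for ratio, seconds, price in thinning_plan(2, 30, [4, 8, 10]):
    print(f"FR {ratio}: {seconds} s of access, unit price {price} responses per second")
```

Each step (60, 120, and 150 s of access) keeps the unit price at 1/15 response per second, the same as the FR 2 starting arrangement.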

Reinforcer Competition

In economics, commodities compete for consumers' spending or resources. This competition is what fuels the supply and demand effects previously discussed. Multiple commodities are at work in any given environment, and these commodities can interact with each other in several different ways. We can categorize the status of a commodity as being (a) substitutable, (b) complementary, or (c) independent based upon their effects on spending (see Green & Freed; 1993, Hursh, 2000; Madden, 2000). Commodities are substitutable if and when increases in one commodity's unit price conforms to the law of demand (i.e., consumption of that commodity decreases as a function of increased unit price) while there is a simultaneous increase in consumption of a second concurrently available commodity at a lower unit price. An example of substitutability may be a situation wherein a client initially demonstrates indifference for cherry and strawberry flavored candies, the client likes both and chooses each of them equally. However, when strawberry candies undergo a unit price increase (e.g., more responses or tokens are required to access the strawberry candy), preference shifts to cherry candies. Complementary reinforcers are those that feature simultaneous increases or decreases in consumption of both commodities, despite unit price manipulations on only one of the commodities. Consider a situation in which a behavior analyst works to increase her client's physical activity as part of a weight loss program by increasing the price required to play video games. As the unit price of video game access increases, its consumption decreases, along with decreases of consumption of salty snacks despite no unit price manipulation on the snacks. That is, salty snacks often go along with playing video games, so decreases in video game consumption result in concomitant decreases in salty snack consumption. 
Because consumption changed in the same direction for both commodities when only one commodity's unit price increased, we would functionally define video game play and salty snack foods as complementary reinforcers. Finally, independent reinforcers show no change in consumption despite changes in consumption of a concurrently available alternative commodity as a function of unit price manipulations. An example of independence using the previous example would be one in which increases in the unit price of video game access have no effect on consumption of water. These two reinforcers are not related to each other, so changes in unit price for either one would have no effect on consumption of the other. In sum, these concepts categorize the effects of multiple reinforcers on behavior. When new commodities are introduced into the economic system, it is useful to determine the status of each new commodity to learn how it interacts with the commodities already at work in the environment.
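
The three categories amount to a decision rule on the direction of change in a second commodity's consumption when only the first commodity's price rises. The following is a minimal sketch, assuming before-and-after consumption counts are available for both commodities; the function name `classify_interaction`, the counts, and the 10% tolerance for "no change" are all hypothetical. In practice this judgment would rest on full demand curves rather than two observations.

```python
def classify_interaction(a_consumption, b_consumption, tolerance=0.10):
    """Classify commodity B relative to A after only A's unit price was raised.

    a_consumption, b_consumption: (before, after) consumption for each commodity.
    tolerance: proportional change in B counted as "no change" (hypothetical cutoff).
    """
    a_before, a_after = a_consumption
    b_before, b_after = b_consumption
    if a_after >= a_before:
        raise ValueError("A's consumption did not fall; law of demand not observed")
    if b_before <= 0:
        raise ValueError("B's baseline consumption must be positive")
    relative_change = (b_after - b_before) / b_before
    if relative_change > tolerance:
        return "substitutable"  # B rises as A falls
    if relative_change < -tolerance:
        return "complementary"  # B falls along with A
    return "independent"        # B essentially unchanged


# Hypothetical counts mirroring the examples in the text.
print(classify_interaction((10, 3), (10, 17)))  # strawberry vs. cherry -> substitutable
print(classify_interaction((60, 20), (8, 3)))   # video games vs. snacks -> complementary
print(classify_interaction((60, 20), (5, 5)))   # video games vs. water -> independent
```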

To understand the role of competing reinforcers, behavioral economists typically employ demand curve analyses as described above (see Bickel et al., 2000; Johnson & Bickel, 2006). In one example of the substitutability of reinforcers, Rachlin, Green, Kagel, and Battalio (1976) gave rats a choice between root beer and Tom Collins mix when both were associated with an equal, low response requirement (i.e., the number of lever presses necessary to obtain the drink). When the price was equal, the rats preferred root beer. However, as the price of root beer increased while that of the Tom Collins mix remained relatively low, the rats came to prefer the Tom Collins mix; thus, root beer and Tom Collins mix were considered substitutable. With this comparison, Rachlin and colleagues provided the first demonstration of the interplay between demand and substitutability in operant behavior.

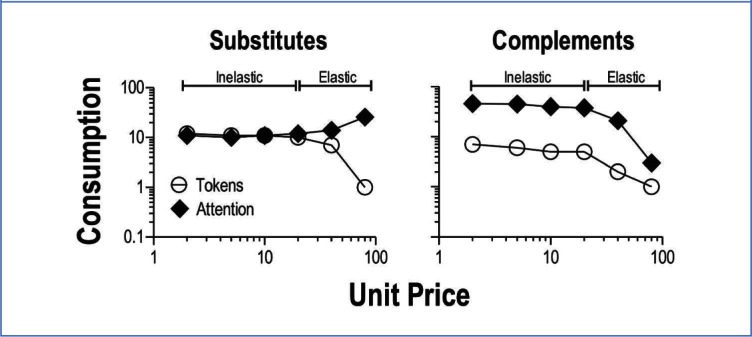

Figure 3 illustrates both substitutable and complementary reinforcers. The left panel depicts substitutable reinforcers (e.g., tokens and peer attention). As the price of Commodity A (e.g., tokens) increases, demand for that commodity eventually becomes elastic and its consumption decreases. Concurrently, Commodity B (e.g., peer attention), whose lower unit price has not changed, begins to be consumed relatively more often once demand for Commodity A becomes elastic. The right panel of Figure 3 depicts a complementary relation (e.g., token delivery and praise), in which increases in price for Commodity A result in decreased consumption of both Commodities A and B.

Figure 3.

Hypothetical demand curve data representing substitutable (left panel) and complementary (right panel) reinforcers (tokens and attention) contingent on academic behavior when the unit price for one commodity increases (tokens) while the unit price for the alternative commodity (attention) remains fixed. In both cases, inelastic demand shifts to elastic between unit prices of 20 and 40. See text for details. Note the double logarithmic axes on both panels to standardize the data for simpler visual inspection.
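
The shift from inelastic to elastic demand described in the caption can be located numerically. On double logarithmic axes, the slope between two successive price points estimates elasticity over that interval: a slope shallower than -1 indicates inelastic demand and a slope steeper than -1 indicates elastic demand. The sketch below uses hypothetical token data shaped to resemble Figure 3, not the figure's actual values, and the function names are illustrative. Published analyses usually fit a demand equation to the whole curve rather than computing point-to-point slopes, so treat this as a rough visual aid.

```python
import math


def interval_elasticities(prices, consumption):
    """Slope of log consumption vs. log price between successive price points.

    Consumption values must be positive for the logarithm to be defined.
    """
    results = []
    points = list(zip(prices, consumption))
    for (p1, q1), (p2, q2) in zip(points, points[1:]):
        slope = (math.log(q2) - math.log(q1)) / (math.log(p2) - math.log(p1))
        results.append((p1, p2, slope, "elastic" if slope < -1 else "inelastic"))
    return results


def first_elastic_interval(prices, consumption):
    """Return the first (p1, p2) interval in which demand is elastic, or None."""
    for p1, p2, _, label in interval_elasticities(prices, consumption):
        if label == "elastic":
            return (p1, p2)
    return None


# Hypothetical unit prices (responses per token) and tokens earned.
prices = [1, 5, 10, 20, 40, 80]
tokens_earned = [100, 90, 80, 70, 20, 5]

for p1, p2, slope, label in interval_elasticities(prices, tokens_earned):
    print(f"{p1:>3} -> {p2:>3}: slope {slope:6.2f} ({label})")
print(first_elastic_interval(prices, tokens_earned))
```

With these made-up numbers the first elastic interval falls between unit prices of 20 and 40, matching the pattern described in the caption.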

In an interesting translation of reinforcer competition, Salvy, Nitecki, and Epstein (2009) examined the degree to which social activities and food serve as substitutable reinforcers for both lean and overweight preadolescent youth. The preparation consisted of having the youth press a computer mouse button to earn access to tater tots or to social time with familiar or unfamiliar peers. When the response requirement (the price) for food increased while that for social time with an unfamiliar peer remained constant, the participants worked harder and earned more social time. Conversely, when the price of social time with unfamiliar peers increased (and that of food remained constant), participants worked harder and earned more food. Interestingly, the researchers found that food and social time with familiar peers (friends) were independent. That is, participants always worked harder to engage in social time with friends, regardless of the price for social time or food. Collectively, these data imply that food and social time with unfamiliar peers may be substitutable reinforcers, and that social time with friends was consistently more reinforcing than food for these participants. These findings have important practical implications for behavior analysts designing interventions to counter obesity in school-aged children.

Practical Considerations for Reinforcer Competition

To further elucidate the concepts of reinforcer competition, consider a student's behavior in the classroom. In this classroom, the teacher provides tokens for attending to the blackboard during instruction. The student is very skilled at spelling and diligently attends to instruction during spelling class. Thus, the student earns many tokens during spelling, despite attempts by her peers to whisper to her and pass notes. The student struggles in math, however, and has difficulty understanding the concepts the teacher presents. During math class, the student attends to her peers' whispering and engages in note-passing, thereby earning few tokens but receiving lots of peer attention. This behavior suggests that the two consequences (tokens and peer attention) are substitutable because the increased unit price of attending during math class (i.e., the effort of attending during math is greater than spelling given the student's math abilities) reduced consumption of tokens and increased consumption of peer attention.

Complementary reinforcers are distinguished by examining whether consumption of two rewards decreases for both as the price of the behavior increases. In this case, teacher praise and token delivery may be viewed as complementary if the increased effort requirement of academic behavior reduces the number of praise statements and tokens obtained by the student. For the student above, math is difficult and the effort required to pay attention during math is high, so the student would need to pay a high price for teacher praise and tokens. If both teacher praise and the number of tokens earned by the student decrease during math, these reinforcers are complementary to one another; that is, a decrease in one is related to a decrease in the other. Should an increase in the price of attending (e.g., due to more effort) in one academic subject be associated with a decrease in token delivery but no change in praise, these reinforcers would be considered independent commodities. This means that tokens and teacher praise are not related to one another; the teacher delivers praise independently of providing tokens.

Any behavior analyst practicing in classroom settings can attest that neurotypical students tend to enjoy consuming reinforcers with preferred peers. That is, certain reinforcers are more valuable when shared with a friend (i.e., they are complementary). Such reinforcers are not always under the control of the behavior analyst, however, necessitating an analysis of reinforcer competition for effective treatment planning. For example, Broussard and Northup (1997) demonstrated that the disruptive behaviors of some students were motivated by peer, rather than teacher, attention. To intervene effectively, Broussard and Northup capitalized on the notion of complementary reinforcers: contingent upon appropriate classroom behavior, students received coupons that were exchangeable for preferred activities with a friend. Substitutable reinforcers are also an efficient means of changing classroom behaviors. Work by Nancy Neef and colleagues (e.g., Neef & Lutz, 2001; Neef, Shade, & Miller, 1994) suggests that various dimensions of reinforcers compete against each other in academic-related behavior. For example, an immediate, low-quality reinforcer may be more potent than a delayed, high-quality reinforcer for some children. When preference for a reinforcer shifts as a function of effort, delay, rate, or quality, these reinforcers would be considered substitutable. By isolating the preferred reinforcer dimensions associated with academic-related behaviors, school-based practitioners can determine the kinds of substitutable reinforcers available in the classroom and manipulate contingencies to favor appropriate responding.
For example, if a functional behavioral assessment (FBA) determines that teacher attention maintains disruptive student behavior, the teacher can reduce disruption by providing high-quality praise immediately contingent upon appropriate student behavior while ignoring disruption or providing only low-quality attention contingent upon it.

Open and Closed Economies

One of the most commonly known and recited economic principles is that of “supply and demand.” John Locke succinctly described this principle in 1691 when he wrote:

the measure of the value of Money, in proportion to any thing purchasable by it, is the quantity of the ready Money we have, in Comparison with the quantity of that thing and its Vent; or which amounts to the same thing, The price of any Commodity rises or falls, by the proportion of the number of Buyers and Sellers. [sic] (p. 16)

In sum, when a commodity is in short supply, its value increases. Tokens, for example, are effective in changing behavior in part because they are in short supply. However, if a behavior analyst delivered tokens on a noncontingent schedule, demand for tokens would diminish, and clients might no longer emit the behaviors that previously resulted in contingent token delivery.

In his seminal papers on the application of economic principles to the experimental analysis of behavior, Hursh (1980, 1984) described any behavioral experiment (or, in the present case, any behavioral intervention program) as an economic system. In such “economies,” the value of the reinforcer depends on its relative availability both within and outside the system. When reinforcers are available only in the target system, the economy is considered “closed.” For example, a setting where staff attention is available only via functional communication would constitute a closed economy. In contrast, economies that permit supplemental access to the reinforcer outside the target system are considered “open.” In a setting with an open economy, staff attention would be available through many modes of communication, ranging from appropriate functional communication to inappropriate forms of attention-motivated behavior such as self-injury or aggression. As the notion of supply and demand suggests, supplemental access to the reinforcer outside the target system increases its supply and subsequently decreases its demand. In classroom settings where extra credit points are abundantly available (open economies), assignment completion may be low because students have plenty of opportunities to earn class points outside of in-class assignments and homework. In a classroom where no extra credit is available (a closed economy), assignment completion may be high because students must complete in-class assignments and homework to earn their points.

In a basic example of open and closed economies, LaFiette and Fantino (1989) compared pigeons' responding under conditions in which sessions were run for either (a) 1 h while the pigeons were maintained at 80% of free-feeding body weight, with free postsession access to food (i.e., an open economy), or (b) 23.5 h without a food deprivation procedure (i.e., a closed economy). As predicted, results indicated substantially higher rates of responding in the closed economy conditions. Collier, Johnson, and Morgan (1992) obtained similar open vs. closed economy effects but also documented a reward magnitude effect in the closed economy, wherein smaller reward magnitudes generated higher rates of responding than larger ones.

In academic or therapeutic contexts, every academic or behavioral intervention can be classified as operating within either an open or a closed economy. Given the experimental findings from nonhuman studies on this topic and their implications for the way classroom contingencies are designed, it is unfortunate that no studies (that we are aware of) have explicitly compared open and closed economies in traditional classrooms with neurotypical students. Although the literature is sparse, two articles address open and closed economies with individuals with developmental disabilities in applied settings using academic tasks as operants.

Roane, Call, and Falcomata (2005) compared responding under open and closed economies for an adult and an adolescent with developmental disabilities. The behavior of interest was a vocational task (mail sorting) for the adult and math problem completion for the adolescent. Prior to the experimental manipulation, the researchers recorded the amount of time the participants spent engaging in preferred activities: video watching for the adult and video game playing for the adolescent. These observations were used to fix the percentage of time the participants could engage in the activity during open economy conditions (approximately 75%). During both open and closed economy conditions, participants could earn access to their preferred activity by meeting response requirements programmed on progressive-ratio (PR) schedules. In the open economy condition, participants received supplemental access to preferred activities following experimental sessions. The amount of post-session supplemental access varied depending on the amount of reinforcement obtained during the sessions, with each daily amount equaling 75% of the pre-experimental observation length. In the closed economy, no supplemental access to the preferred activities was provided outside of the sessions. Results replicated those obtained in nonhuman studies: both open and closed economies increased responding from baseline levels, with relatively higher rates of responding occurring in closed economy conditions. Moreover, Roane and colleagues demonstrated that PR breakpoints (the largest response requirement completed) were substantially higher in the closed economies, supporting the notion that limited supply increases the potency of, and demand for, the reinforcer.
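
The comparison above turns on two quantities: the PR breakpoint and the amount of post-session access in each economy. The following is a minimal sketch under stated assumptions: the PR progression is arithmetic, starting at 1 and increasing by 1 (a hypothetical choice, since the progression used by Roane and colleagues is not given here), and the open-economy rule is read as topping up each day's total access to 75% of the baseline observation. Both function names and all numbers are illustrative.

```python
def pr_breakpoint(total_responses, start=1, step=1):
    """Last ratio requirement completed on an arithmetic progressive-ratio schedule.

    start and step are hypothetical; PR schedules in practice use various progressions.
    """
    if start < 1 or step < 0:
        raise ValueError("start must be >= 1 and step must be >= 0")
    requirement, cumulative, last_completed = start, 0, 0
    while cumulative + requirement <= total_responses:
        cumulative += requirement
        last_completed = requirement
        requirement += step
    return last_completed


def supplemental_access(baseline_minutes, earned_minutes, open_economy, share=0.75):
    """Post-session access under one reading of the procedure described above.

    Open economy: supplemental time tops the daily total up to share * baseline.
    Closed economy: no supplemental time outside the session.
    """
    if not open_economy:
        return 0.0
    return max(0.0, share * baseline_minutes - earned_minutes)


print(pr_breakpoint(20))                                # 1+2+3+4+5 = 15 responses -> 5
print(supplemental_access(120, 30, open_economy=True))  # 90 - 30 -> 60.0
print(supplemental_access(120, 30, open_economy=False)) # closed economy -> 0.0
```

Under this reading, the more a participant earns in session, the less is delivered afterward, so total daily access in the open economy stays roughly fixed; in the closed economy all access depends on in-session responding.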

In an extension of Roane et al.'s (2005) study, Kodak, Lerman, and Call (2007) evaluated the effects of reinforcer choice under open (i.e., post-session reinforcement available) and closed economies on math problem completion for three children with developmental disabilities. The general procedure mimicked that of Roane and colleagues, except that participants could choose which math problems to complete and edible reinforcers were used. Two stacks of math problems were present during both open and closed economies: one stack was associated with the top-ranked edible from a preference assessment, and the other with the second-ranked edible. Again, responding was highest under closed economy conditions. Interestingly, participants switched preference from the top-ranked edible to the second-ranked edible when the PR requirements for the top-ranked edible became relatively high in the closed economy condition. These findings provide further confidence in the cross-species generality of the open vs. closed economy phenomenon and provide a more ecologically valid depiction of how open and closed economies might function in applied settings when more than one reinforcer may be concurrently available for a target behavior.

Practical Considerations for Open and Closed Economies

When conducting a functional assessment, the concept of open and closed economies may help illuminate why certain reinforcers are more or less effective for a given client. If a client exhibits a higher rate of a target behavior in the clinical setting than elsewhere, it would be beneficial to assess whether the reinforcer the client obtains for that behavior is also available in other settings. If the reinforcer is only accessible in the clinical setting, this may be one factor contributing to the high rate of the target behavior. In this case, the clinical setting is a closed economy for that particular reinforcer. Making the same reinforcer available outside of the clinical setting would create a more open economy and possibly decrease the target behavior in that setting. For instance, if a child engages in problem behavior to receive teacher attention during class because that is the only time she receives teacher attention, it might be beneficial for the teacher to incorporate a plan that allows the student to also receive teacher attention outside of class (e.g., during playtime, lunch, snack).

Creating economic systems that are either open or closed may also be an important component in the development of a behavior intervention plan. If extra computer time is being used as a reinforcer to increase work completion in the academic or therapeutic setting, this may not be an effective reinforcer if the client is able to spend as much time as he likes on the computer at home. Choosing reinforcers that are unique to a particular context may be helpful in making the reinforcer more effective, thereby resulting in a more successful behavior plan.

Including multiple contexts in behavior plans might be necessary to help account for open and closed economies. One method of consultation available to behavior analysts in practice is conjoint behavioral consultation, in which both the caregiver and the clinician (behavior analyst or teacher) are involved in the consultation process (Sheridan, Kratochwill, & Bergan, 1996). This method provides an opportunity for behavior analysts to use the concept of open and closed economies when designing interventions with caregivers and clinicians. Considering both the clinical and home environments, the team can decide whether the same reinforcers should be available in both the home and target settings, or whether certain reinforcers should be associated with home while others are associated with the clinic and/or school. The decisions made about the availability of reinforcers across settings will likely influence behavior (for better or worse). Strategic use of economic principles can nudge behavior toward desirable outcomes. Consider a student who is not completing work at school. A behavior plan could be written that makes a particular reinforcer (one that is unavailable at home) available at school for completing schoolwork, while a different reinforcer is available at home (and unavailable at school) for completing homework. If the same reinforcer is used for completing both schoolwork and homework, and homework is easier or less time consuming for the child than schoolwork, the child may do less work at school, knowing that homework can be completed easily and will still provide access to the reinforcer at home. There is no hard and fast rule regarding whether an open or closed economy is best because this is likely to differ among individuals, but it may be an important factor to consider in setting up behavioral contingencies in multiple environments.

The concept of open and closed economies has not been examined thoroughly in applied settings. However, the concept itself is well established in economics (e.g., Hillier, 1991). Evaluating whether the reinforcers contingent on behavior are delivered in an open or a closed economy is a simple extra step that may provide important information, ultimately improving the efficacy of behavioral interventions.

Delay Discounting

Behavioral economic studies of intertemporal choice and decision making have repeatedly demonstrated that humans (and nonhumans) are rather myopic when faced with delayed consequences (Madden & Bickel, 2010). In these studies, researchers typically ask participants to choose between hypothetical monetary outcomes at various delays, such as $100 in 10 years or $150 in 12 years. If the participants are like most people who have taken part in such studies, they probably choose the $150 in 12 years; after all, $150 is greater than $100. Now suppose the participants are presented with another choice: this time, they can receive $100 right now or $150 two years from now. When presented with this decision, many individuals who previously chose the larger delayed reward switch to preferring the smaller immediate reward. This phenomenon is termed a preference reversal (see Ainslie, 1974; Tversky & Thaler, 1990) and implies that individuals' valuation of delayed rewards is myopic (e.g., Kirby & Herrnstein, 1995). Notably, the difference in delay (2 years) and the difference in reward magnitude ($50) are identical in both decision-making tasks; nevertheless, preference reverses.
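
The reversal follows directly from the shape of the discounting function. The sketch below applies the two choices above to a chooser who discounts hyperbolically, using the form V = A / (1 + kD) associated with Mazur (1987), and, for comparison, to one who discounts exponentially, the time-consistent benchmark of standard economic models. The rate `K = 0.5` per year is a hypothetical value chosen for illustration, and the function names are our own.

```python
import math


def hyperbolic_value(amount, delay, k):
    """Hyperbolic discounting: V = A / (1 + kD)."""
    return amount / (1 + k * delay)


def exponential_value(amount, delay, k):
    """Exponential discounting, the time-consistent benchmark: V = A * exp(-kD)."""
    return amount * math.exp(-k * delay)


def choice(discount, sooner, later, k):
    """Pick the option with the higher discounted value; options are (amount, delay)."""
    return "larger later" if discount(*later, k) > discount(*sooner, k) else "smaller sooner"


K = 0.5                       # hypothetical discounting rate per year
far = ((100, 10), (150, 12))  # $100 in 10 years vs. $150 in 12 years
near = ((100, 0), (150, 2))   # $100 now vs. $150 in 2 years

for name, fn in (("hyperbolic", hyperbolic_value), ("exponential", exponential_value)):
    print(f"{name:>11}: far pair -> {choice(fn, *far, K)}; near pair -> {choice(fn, *near, K)}")
```

The hyperbolic chooser takes the larger later reward in the distant pair but the smaller sooner reward in the near pair. For the exponential chooser, the ratio of the two values depends only on the 2-year gap, so both pairs produce the same choice whatever value of k is used.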

According to traditional economic theory, humans lawfully make rational choices. Given the results of the decision-making task above, this is clearly not the case. This notion of preference reversals may explain why individuals make many less-than-optimal decisions, such as planning to study for a major test but instead watching a television show, using high-interest credit cards to purchase an item immediately that will take a long time to pay off, or eating unhealthy foods that taste good now but ultimately harm long-term health. Behavioral economists explain these types of irrational choices in terms of a phenomenon called discounting (see Madden & Bickel, 2010). Discounting describes a behavioral pattern in which contextual factors associated with a reward (in this case, the delay until its receipt) diminish the value of a given outcome.

Mazur (1987) was one of the first researchers to assess rates of delay discounting using an adjusting-delay procedure. In Mazur's procedure, pigeons repeatedly chose between a larger amount of food pellets after an adjusting delay and a smaller amount of food pellets after a fixed delay. If the pigeon chose the larger later reward (LLR), the delay to the LLR increased on the subsequent trial. If the pigeon chose the smaller sooner reward (SSR), the delay to the LLR decreased on the subsequent trial. This procedure was used to determine the point at which the pigeon switched from choosing the LLR to choosing the SSR. The delay at which this switch occurs is termed an indifference point because it is the point at which the subjective values of both alternatives are deemed equal. Mazur fit several mathematical functions to the obtained indifference points and found that, when plotted, they followed a hyperbolic function.
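
The titration logic can be simulated to show why the adjusting delay settles at an indifference point. The following is a minimal sketch assuming a deterministic simulated chooser that discounts hyperbolically with a hypothetical k of 0.2 per second; the reward amounts, delays, step size, and trial counts are also hypothetical, not Mazur's parameters. For simplicity, the delay adjusts after every choice, which simplifies the published procedure, and real subjects choose variably rather than deterministically.

```python
def hyperbolic_value(amount, delay, k):
    """Hyperbolic discounting: V = A / (1 + kD)."""
    return amount / (1 + k * delay)


def adjusting_delay(ssr_amount, ssr_delay, llr_amount, k,
                    start_delay=0.5, step=1.0, n_trials=60, tail=20):
    """Titrate the LLR delay for a simulated chooser and return the mean delay
    over the final `tail` trials as the estimated indifference point."""
    v_ssr = hyperbolic_value(ssr_amount, ssr_delay, k)
    delay, history = start_delay, []
    for _ in range(n_trials):
        history.append(delay)
        if hyperbolic_value(llr_amount, delay, k) > v_ssr:
            delay += step                   # chose LLR: lengthen its delay
        else:
            delay = max(0.0, delay - step)  # chose SSR: shorten the LLR delay
    return sum(history[-tail:]) / tail


# Hypothetical chooser: 2 food units after 2 s vs. 6 food units after an adjusting delay.
estimate = adjusting_delay(ssr_amount=2, ssr_delay=2, llr_amount=6, k=0.2)
analytic = (6 / hyperbolic_value(2, 2, 0.2) - 1) / 0.2  # solve 6/(1+kD) = V_SSR for D
print(estimate, round(analytic, 6))
```

Once the delay overshoots the indifference point, the choices alternate and the delay oscillates around it, so averaging the final trials recovers the analytic value of about 16 s for this hypothetical chooser.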

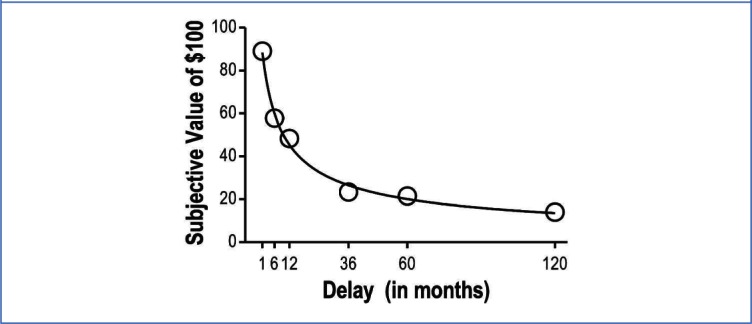

Figure 4 illustrates a typical discounting curve that follows a hyperbolic function. Various delay values are plotted on the x-axis and the subjective value of a reinforcer is plotted on the y-axis. As the delay to the receipt of the reward increases, the subjective value of the reward decreases. Thus, delayed outcomes are subjectively valued less than more immediate outcomes.

Figure 4.

Example of delay discounting. The subjective value of a delayed reward (in this case, $100) is plotted on the y-axis as a function of the delay until the receipt of the reward (in months) on the x-axis. As delay to receipt of reward increases, the subjective value (of $100) decreases.

The most widely used procedures for determining discounting parameters in humans have been variations on a procedure originally created by Rachlin, Raineri, and Cross (1991). In this procedure, participants choose between two hypothetical outcomes: an amount of money available now and $1,000 available after one of several delays. At the start of each delay condition, the two amounts are equal ($1,000 now vs. $1,000 later). On each consecutive trial, the immediate amount decreases in steps until the alternatives are $1 now vs. $1,000 after the delay. After the descending portion is completed, the procedure repeats in ascending order until both alternatives are, again, valued at $1,000. An indifference point is then obtained by averaging the point at which the participant switches from the immediate outcome to the delayed outcome (in the descending sequence) and the point at which the participant switches from the delayed outcome to the immediate outcome (in the ascending sequence). Even though these procedures typically use hypothetical rewards, researchers have found no systematic difference in obtained rates of discounting when real or hypothetical rewards are used (Johnson & Bickel, 2002; Madden et al., 2003).
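
The averaging step can be made concrete with a simulated participant. The following is a minimal sketch under several assumptions: the ladder of immediate amounts ($1,000 down to $50 in $50 steps, plus $1) is hypothetical rather than the list used by Rachlin, Raineri, and Cross (1991); the participant chooses deterministically using a hyperbolic value with a hypothetical k; and each switch point is taken as the first amount at which the participant's choice changes, which is one reasonable way to operationalize it. The function names are illustrative.

```python
def delayed_value(amount, delay, k):
    """Hyperbolic value of the delayed $1,000 for a simulated participant."""
    return amount / (1 + k * delay)


def titration_indifference(delay, k, delayed_amount=1000, amounts=None):
    """Descending then ascending immediate amounts vs. a fixed delayed amount.

    Returns the mean of the two switch points: the first immediate amount
    rejected on the way down and the first accepted on the way up.
    """
    if amounts is None:
        amounts = list(range(1000, 0, -50)) + [1]  # hypothetical $1,000 ... $1 ladder
    v_delayed = delayed_value(delayed_amount, delay, k)

    def takes_immediate(amount):
        return amount > v_delayed  # deterministic chooser (assumption)

    down_switch = next((a for a in sorted(amounts, reverse=True)
                        if not takes_immediate(a)), None)
    up_switch = next((a for a in sorted(amounts) if takes_immediate(a)), None)
    if down_switch is None or up_switch is None:
        raise ValueError("no switch within the amount ladder; widen the ladder")
    return (down_switch + up_switch) / 2


# Hypothetical participant, k = 0.1 per month, 12-month delay: the delayed $1,000
# is worth about $455 to this chooser, so the switch points bracket it at $450 and $500.
print(titration_indifference(delay=12, k=0.1))
```

Repeating the calculation across several delays yields the set of indifference points that is then used to characterize the participant's rate of discounting.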

Green, Myerson, and Ostaszewski (1999) compared discounting rates of typically developing sixth-grade children with discounting rates of older adults. Results suggested that children discounted more steeply, indicating preference for smaller sooner rewards. In an extension of this research, Reed and Martens (2011) assessed discounting rates for 46 typically developing sixth-grade students. The researchers then implemented a class-wide intervention targeting on-task behavior in which reinforcement for on-task behavior was delivered either immediately after a class period or as tokens that could be exchanged 24 hours later for a back-up reinforcer. Reed and Martens found that discounting rates adequately predicted on-task behavior during the intervention. In other words, for students with higher discounting scores, delayed rewards were less effective than immediate rewards in improving on-task behavior. These studies suggest that children do indeed discount delayed rewards and that such discounting is associated with real-world outcomes of interest to school-based practitioners. These discounting effects also appear to be more pronounced in children diagnosed with attention-deficit hyperactivity disorder (ADHD; Barkley, Edwards, Laneri, Fletcher, & Metevia, 2001; Scheres et al., 2006).
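
A "discounting rate" of the kind compared across students is commonly summarized by the parameter k of the hyperbolic function fitted to a person's indifference points, with larger k meaning steeper discounting. The following is a minimal sketch that estimates k by least squares on the linearized form A/V - 1 = kD. This is a simplification we chose for brevity. Published analyses typically fit the hyperbola directly with nonlinear regression, which weights errors differently. The indifference points are synthetic, generated from known k values for two hypothetical students, and are not data from Reed and Martens (2011).

```python
def fit_k(delays, indifference_points, amount):
    """Estimate k in V = A / (1 + kD) by least squares on the linearized form
    A/V - 1 = k * D (a regression line forced through the origin)."""
    if any(v <= 0 for v in indifference_points):
        raise ValueError("indifference points must be positive")
    ys = [amount / v - 1 for v in indifference_points]
    numerator = sum(d * y for d, y in zip(delays, ys))
    denominator = sum(d * d for d in delays)
    return numerator / denominator


# Synthetic indifference points for two hypothetical students (delays in months).
delays_months = [1, 6, 12, 60, 120]
shallow = [1000 / (1 + 0.01 * d) for d in delays_months]
steep = [1000 / (1 + 0.20 * d) for d in delays_months]

print(round(fit_k(delays_months, shallow, 1000), 4))  # recovers about 0.01
print(round(fit_k(delays_months, steep, 1000), 4))    # recovers about 0.2
```

With noiseless synthetic data the fit recovers the generating k exactly; with real choices the estimate would carry error, and the steeper discounter would be the one predicted to respond less to delayed rewards.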

The notion that preferring smaller sooner rewards over larger later ones is irrational provides an impetus for practitioners to design interventions that are conceptually systematic with respect to behavioral economics. In a classic study, Schweitzer and Sulzer-Azaroff (1988) operationally defined self-control as preference for larger delayed rewards. The researchers offered children a choice between two boxes: one containing one reinforcer and the other containing three reinforcers. In a pre-assessment, the researchers documented a discounting effect wherein the children preferred an immediately available smaller reward (the box with only one reinforcer) to a delayed, larger reward. They then implemented a self-control training procedure that began by asking the children to choose either box, with both immediately available. When both were immediately available, the children chose the box with more reinforcers. The procedure progressed by gradually increasing the delay for the box with more reinforcers across subsequent sessions. At post-assessment, the researchers found that four of the five children had shifted from their pre-assessment preference for the immediate reinforcer to a preference for the delayed reinforcer. These results suggest that viewing self-control as a form of discounting may have implications for classroom instruction and behavior management. More importantly, it may be possible to design behavioral interventions to promote self-control choices in children.

Practical Considerations for Delay Discounting

The issue of delay discounting presents one of the most important, although probably least often considered, aspects of designing an effective behavioral intervention in academic or therapeutic settings. In behavioral intervention plans, reinforcers are often scheduled for a time that is convenient for staff or caregivers (such as the end of a program or during breaks). The research described above suggests that even relatively short delays can have a significant impact on the efficacy of a reinforcer, especially for children with a low tolerance for delay. The timing of reinforcer delivery therefore needs to be considered when writing behavioral intervention plans. If such considerations are not made and the plan fails to address the caregivers' concerns, it will be difficult to know whether the plan is failing because of the reinforcer itself or because the delay to the reinforcer is reducing its value.

One obvious method to reduce the effects of delay is to deliver reinforcers immediately. This may not be a viable solution in all situations, especially in group settings where staff must attend to numerous clients at once. For children with more severe disabilities, immediate delivery may be possible if staff work directly with the student during a large part of the day and are able to immediately reinforce appropriate behavior. In a school setting, however, the delivery of immediate reinforcement may disrupt the flow of the classroom and interrupt the learning of other students. In such settings, a token reinforcement system can decrease the delay to reinforcement and may be the best way to do so in the regular classroom. With a token system, the tokens can become conditioned reinforcers that may be exchanged at a later time for backup reinforcers. The tokens become a stand-in (or bridge) for backup reinforcers that will be delivered later, and they can become reinforcing in themselves as long as other reinforcers are consistently tied to them (see Hackenberg, 2009; Kazdin & Bootzin, 1972). Finally, research from both human (Dixon, Horner, & Guercio, 2003) and nonhuman (Grosch & Neuringer, 1981) studies suggests that the inclusion of an intervening stimulus may increase tolerance to delay by providing an alternative response that can be emitted while waiting for the delivery of the delayed reinforcer. In practical settings, behavior analysts can mitigate delay discounting effects by providing clients with activities or timers to assist their waiting behavior.

It is important to note that delay discounting affects a wide variety of important domains related to human behavioral outcomes. Discounting has been observed in health behavior (Chapman, 1996), social relationships (Jones & Rachlin, 2006), and academic behavior (Schouwenburg & Groenewoud, 2001). Nearly any behavior whose consequences occur in the future is in competition with behaviors whose consequences are more immediately available. Reducing delay to reinforcement may increase the efficacy of reinforcement and, thereby, the efficacy of the behavior change procedure.

Conclusion

Behavioral economics represents the interplay between economic principles and behavior change considerations. Behavioral economics in academic or therapeutic settings is most accurately described as a ubiquitous concept rather than a behavior change procedure, since these principles are in play regardless of whether change agents have intentionally programmed such contingencies or interventions. As an ever-present concept, behavior analysts in practice should seek to identify the behavioral economic principles actively controlling clients' behaviors and find ways to restructure contingencies to promote desired outcomes. Across disciplines, behavioral economic concepts remain relatively undocumented in nonclinical settings, such as home-based services, regular education classrooms, and the workplace. Applied behavioral economists, behavior analysts, educators, and clinicians alike would profit from integrating such concepts into topics of everyday relevance. While scientific translation across these disciplines remains relatively sparse (Critchfield & Reed, 2004; Reed, 2008), bridging the gap between behavioral science and practice represents an excellent avenue for use-inspired research (Mace & Critchfield, 2010) to improve behavior analytic service delivery. As behavioral economics continues to rise in popularity in the behavioral sciences (Bickel, Green, & Vuchinich, 1995; Camerer, 1999) and public policy (e.g., Grunwald, 2009; Hursh & Roma, 2013), it would behoove applied researchers and practitioners to begin proffering examples of these concepts in both science and practice. The concepts offered in this article are merely starting points for potentially exciting and effective applications in behavior analytic settings.

Footnotes

Derek D. Reed, Department of Applied Behavioral Science, University of Kansas; Christopher R. Niileksela, Department of Psychology and Research in Education, University of Kansas; Brent A. Kaplan, Department of Applied Behavioral Science, University of Kansas.

The authors thank the various clinicians and behavior analysts with whom they have worked over the years and who prompted the writing of this tutorial, as well as Scott Wiggins and Dave Jarmolowicz for their assistance during manuscript preparation. Finally, they acknowledge the role of their Applied Behavioral Science (ABSC) 509 students in persistently asking for examples of how basic behavioral science translates to practice. The examples derived from these discussions have been integrated throughout the tutorial.

References

- Ainslie G. Impulse control in pigeons. Journal of the Experimental Analysis of Behavior. 1974;21:485–489. doi: 10.1901/jeab.1974.21-485.

- Ariely D. Predictably irrational: The hidden forces that shape our decisions. New York, NY: HarperCollins; 2008.

- Ariely D. The upside of irrationality: The unexpected benefits of defying logic at work and at home. New York, NY: HarperCollins; 2010.

- Barkley R. A., Edwards G., Laneri M., Fletcher K., Metevia L. Executive functioning, temporal discounting, and sense of time in adolescents with attention deficit hyperactivity disorder (ADHD) and oppositional defiant disorder (ODD). Journal of Abnormal Child Psychology. 2001;29:541–556. doi: 10.1023/a:1012233310098.

- Bickel W. K., Green L., Vuchinich R. E. Behavioral economics. Journal of the Experimental Analysis of Behavior. 1995;64:257–262. doi: 10.1901/jeab.1995.64-257.

- Bickel W. K., Jarmolowicz D. P., Mueller E. T., Gatchalian K. M. The behavioral economics and neuroeconomics of reinforcer pathologies: Implications for etiology and treatment of addiction. Current Psychiatry Reports. 2011;13:406–415. doi: 10.1007/s11920-011-0215-1.

- Bickel W. K., Marsch L. A., Carroll M. E. Deconstructing relative reinforcing efficacy and situating the measures of pharmacological reinforcement with behavioral economics: A theoretical proposal. Psychopharmacology. 2000;153:44–56. doi: 10.1007/s002130000589.

- Borrero J. C., Francisco M. T., Haberlin A. T., Ross N. A., Sran S. K. A unit price evaluation of severe problem behavior. Journal of Applied Behavior Analysis. 2007;40:463–474. doi: 10.1901/jaba.2007.40-463.

- Broussard C., Northup J. The use of functional analysis to develop peer interventions for disruptive classroom behavior. School Psychology Quarterly. 1997;12:65–76.

- Burns M. K. School psychology research: Combining ecological theory and prevention science. School Psychology Review. 2011;40:132–139.

- Camerer C. Behavioral economics: Reunifying psychology and economics. Proceedings of the National Academy of Sciences. 1999;96:10575–10577. doi: 10.1073/pnas.96.19.10575.

- Camerer C. F., Loewenstein G., Rabin M., editors. Advances in behavioral economics. Princeton, NJ: Princeton University Press & Russell Sage Foundation; 2004.

- Chapman G. B. Temporal discounting and utility for health and money. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1996;22:771–791. doi: 10.1037//0278-7393.22.3.771.

- Collier G., Johnson D. F., Morgan C. The magnitude-of-reinforcement function in closed and open economies. Journal of the Experimental Analysis of Behavior. 1992;57:81–89. doi: 10.1901/jeab.1992.57-81.

- Critchfield T., Reed D. D. Conduits of translation in behavior-science bridge research. In: Ribes Iñesta E., Burgos J. E., editors. Theory, basic and applied research, and technological applications in behavior science: Conceptual and methodological issues. Guadalajara, Mexico: University of Guadalajara Press; 2004. pp. 45–84.

- Dixon M. R., Horner M. J., Guercio J. Self-control and the preference for delayed reinforcement: An example in brain injury. Journal of Applied Behavior Analysis. 2003;36:371–374. doi: 10.1901/jaba.2003.36-371.

- Francisco M. T., Madden G. J., Borrero J. Behavioral economics: Principles, procedures, and utility for applied behavior analysis. The Behavior Analyst Today. 2009;10:277–294.

- Green L., Freed D. E. The substitutability of reinforcers. Journal of the Experimental Analysis of Behavior. 1993;60:141–158. doi: 10.1901/jeab.1993.60-141.

- Green L., Myerson J., Ostaszewski P. Discounting of delayed rewards across the life span: Age differences in individual discounting functions. Behavioural Processes. 1999;46:89–96. doi: 10.1016/S0376-6357(99)00021-2.

- Griffiths R. R., Brady J. V., Bradford L. D. Predicting the abuse liability of drugs and animal drug self-administration procedures: Psychomotor stimulants and hallucinogens. In: Thompson T., Dews P. B., editors. Advances in behavioral pharmacology. Vol. 2. New York, NY: Academic Press; 1979. pp. 163–208.

- Grosch J., Neuringer A. Self-control in pigeons under the Mischel paradigm. Journal of the Experimental Analysis of Behavior. 1981;35:3–21. doi: 10.1901/jeab.1981.35-3.

- Grunwald M. How Obama is using the science of change. Time Magazine. 2009, April 2. Retrieved from http://www.time.com.

- Hackenberg T. D. Token reinforcement: A review and analysis. Journal of the Experimental Analysis of Behavior. 2009;91:257–286. doi: 10.1901/jeab.2009.91-257.

- Hillier B. The macroeconomic debate: Models of the closed and open economy. New York, NY: Blackwell; 1991.

- Hursh S. R. Economic concepts for the analysis of behavior. Journal of the Experimental Analysis of Behavior. 1980;34:219–238. doi: 10.1901/jeab.1980.34-219.

- Hursh S. R. Behavioral economics. Journal of the Experimental Analysis of Behavior. 1984;42:435–452. doi: 10.1901/jeab.1984.42-435.

- Hursh S. R. Behavioral economic concepts and methods for studying health behavior. In: Bickel W. K., Vuchinich R. E., editors. Reframing health behavior change with behavioral economics. Mahwah, NJ: Lawrence Erlbaum Associates; 2000. pp. 27–62.

- Hursh S. R., Madden G. J., Spiga R., DeLeon I. G., Francisco M. T. The translational utility of behavioral economics: The experimental analysis of consumption and choice. In: Madden G. J., Dube W. V., Hackenberg T. D., Hanley G. P., Lattal K. A., editors. APA handbook of behavior analysis: Vol. 2. Translating principles into practice. Washington, DC: American Psychological Association; 2013. pp. 191–224.

- Hursh S. R., Raslear T. G., Shurtleff D., Bauman R., Simmons L. A cost-benefit analysis of demand for food. Journal of the Experimental Analysis of Behavior. 1988;50:419–440. doi: 10.1901/jeab.1988.50-419.

- Hursh S. R., Roma P. G. Behavioral economics and empirical public policy. Journal of the Experimental Analysis of Behavior. 2013;99:98–124. doi: 10.1002/jeab.7.

- Johnson M. W., Bickel W. K. Within-subject comparison of real and hypothetical money rewards in delay discounting. Journal of the Experimental Analysis of Behavior. 2002;77:129–146. doi: 10.1901/jeab.2002.77-129.

- Johnson M. W., Bickel W. K. Replacing relative reinforcing efficacy with behavioral economic demand curves. Journal of the Experimental Analysis of Behavior. 2006;85:73–93. doi: 10.1901/jeab.2006.102-04.

- Jones B., Rachlin H. Social discounting. Psychological Science. 2006;17:283–286. doi: 10.1111/j.1467-9280.2006.01699.x.

- Kahneman D., Slovic P., Tversky A., editors. Judgment under uncertainty: Heuristics and biases. Cambridge, United Kingdom: Cambridge University Press; 1982.

- Kazdin A. E., Bootzin R. B. The token economy: An evaluative review. Journal of Applied Behavior Analysis. 1972;5:343–372. doi: 10.1901/jaba.1972.5-343.

- Kirby K. N., Herrnstein R. J. Preference reversals due to myopic discounting of delayed reward. Psychological Science. 1995;6:83–89.

- Kodak T., Lerman D. C., Call N. Evaluating the influence of postsession reinforcement on choice of reinforcers. Journal of Applied Behavior Analysis. 2007;40:515–527. doi: 10.1901/jaba.2007.40-515.

- Kohlenberg B. S., Hayes S. C., Hayes L. J. The transfer of contextual control over equivalence classes through equivalence classes: A possible model of social stereotyping. Journal of the Experimental Analysis of Behavior. 1991;56:505–518. doi: 10.1901/jeab.1991.56-505.

- LaFiette M. H., Fantino E. Responding on concurrent-chains schedules in open and closed economies. Journal of the Experimental Analysis of Behavior. 1989;51:329–342. doi: 10.1901/jeab.1989.51-329.

- Locke J. Some considerations of the consequences of the lowering of interest, and raising the value of money: In a letter sent to a member of parliament. London: Awnsham and John Churchill; 1691. Retrieved from http://books.google.com.

- Mace F. C., Critchfield T. S. Translational research in behavior analysis: Historical traditions and imperative for the future. Journal of the Experimental Analysis of Behavior. 2010;93:293–312. doi: 10.1901/jeab.2010.93-293.

- Madden G. J. A behavioral economics primer. In: Bickel W. K., Vuchinich R. E., editors. Reframing health behavior change with behavioral economics. Mahwah, NJ: Lawrence Erlbaum Associates; 2000. pp. 3–26.

- Madden G. J., Begotka A. M., Raiff B. R., Kastern L. L. Delay discounting of real and hypothetical rewards. Experimental and Clinical Psychopharmacology. 2003;11:139–145. doi: 10.1037/1064-1297.11.2.139.

- Madden G. J., Bickel W. K. Impulsivity: The behavioral and neurological science of discounting. Washington, DC: American Psychological Association; 2010.

- Martens B. K., Kelly S. Q. A behavioral analysis of effective teaching. School Psychology Quarterly. 1993;8:10–26.

- Mazur J. E. An adjusting procedure for studying delayed reinforcement. In: Commons M. L., Mazur J. E., Nevin J. A., Rachlin H., editors. Quantitative analyses of behavior: Vol. V. The effect of delay and intervening events on reinforcement value. Hillsdale, NJ: Erlbaum; 1987. pp. 55–73.

- Neef N. A., Lutz M. N. Assessment of variables affecting choice and application to classroom interventions. School Psychology Quarterly. 2001;16:239–252.

- Neef N. A., Shade D., Miller M. S. Assessing influential dimensions of reinforcers on choice in students with serious emotional disturbance. Journal of Applied Behavior Analysis. 1994;27:575–583. doi: 10.1901/jaba.1994.27-575.

- Persky J. Retrospectives: The ethology of homo economicus. The Journal of Economic Perspectives. 1995;9:221–231.

- Poling A. Progressive-ratio schedule and applied behavior analysis. Journal of Applied Behavior Analysis. 2010;43:347–349. doi: 10.1901/jaba.2010.43-347.

- Rachlin H., Green L., Kagel J. H., Battalio R. C. Economic demand theory and psychological studies of choice. In: Bower G. H., editor. The psychology of learning and motivation. Vol. 10. New York, NY: Academic Press; 1976. pp. 129–154.

- Rachlin H., Raineri A., Cross D. Subjective probability and delay. Journal of the Experimental Analysis of Behavior. 1991;55:233–244. doi: 10.1901/jeab.1991.55-233.

- Reed D. D. The translation of basic behavioral research to school psychology: A citation analysis. The Behavior Analyst Today. 2008;9:143–149.

- Reed D. D., DiGennaro Reed F. D., Chok J., Brozyna G. A. The “tyranny of choice”: Choice overload as a possible instance of effort discounting. The Psychological Record. 2011;61:547–560.

- Reed D. D., Martens B. K. Temporal discounting predicts student responsiveness to exchange delays in a classroom token system. Journal of Applied Behavior Analysis. 2011;44:1–18. doi: 10.1901/jaba.2011.44-1.

- Roane H. On the applied use of progressive-ratio schedules of reinforcement. Journal of Applied Behavior Analysis. 2008;41:155–161. doi: 10.1901/jaba.2008.41-155.

- Roane H. S., Call N. A., Falcomata T. S. A preliminary analysis of adaptive responding under open and closed economies. Journal of Applied Behavior Analysis. 2005;38:335–348. doi: 10.1901/jaba.2005.85-04.

- Roane H. S., Falcomata T. S., Fisher W. W. Applying the behavioral economics principle of unit price to DRO schedule thinning. Journal of Applied Behavior Analysis. 2007;40:529–534. doi: 10.1901/jaba.2007.40-529.

- Roane H. S., Lerman D. C., Vorndran C. M. Assessing reinforcers under progressive schedule requirements. Journal of Applied Behavior Analysis. 2001;34:145–167. doi: 10.1901/jaba.2001.34-145.