Abstract

Objectives

Understanding speech in acoustically-degraded environments can place significant cognitive demands on school-age children, who are developing the cognitive and linguistic skills needed to support this process. Previous studies suggest that speech understanding, word learning and academic performance can be negatively impacted by background noise, but the effect of limited audibility on cognitive processes in children has not been directly studied. The aim of the current study was to evaluate the impact of limited audibility on speech understanding and working memory tasks in school-age children with normal hearing.

Design

Seventeen children with normal hearing between six and twelve years of age participated in the current study. Repetition of nonword consonant-vowel-consonant (CVC) stimuli was measured under conditions with combinations of two different signal-to-noise ratios (3 and 9 dB) and two low-pass filter settings (3.2 and 5.6 kHz). Verbal processing time was calculated based on the time from the onset of the stimulus to the onset of the child’s response. Monosyllabic word repetition and recall were also measured in conditions with a full bandwidth and 5.6 kHz low-pass cut-off.

Results

Nonword repetition scores decreased as audibility decreased. Verbal processing time increased as audibility decreased, consistent with predictions based on increased listening effort. While monosyllabic word repetition did not vary between the full bandwidth and 5.6 kHz low-pass filter condition, recall was significantly poorer in the condition with limited bandwidth (low pass at 5.6 kHz). Age and expressive language scores predicted performance on word recall tasks, but did not predict nonword repetition accuracy or verbal processing time.

Conclusions

Decreased audibility was associated with reduced accuracy for nonword repetition and increased verbal processing time in children with normal hearing. Deficits in free recall were observed even under conditions where word repetition was not affected. The negative effects of reduced audibility may occur even under conditions where speech repetition is not impacted. Limited stimulus audibility may result in greater cognitive effort for verbal rehearsal in working memory and may limit the availability of cognitive resources to allocate to working memory and other processes.

Introduction

The ability to comprehend speech requires the listener to use a combination of the audible acoustic-phonetic cues from the stimulus, often called bottom-up factors, and the listener’s cognitive skills and knowledge of language and context, known collectively as top-down factors. Depending on the demands of a particular listening environment, contributions of both bottom-up and top-down processes may be used to decode the speech signal. For example, the presence of background noise or other forms of signal degradation places greater demands on a listener’s top-down skills and resources (see Jerger, 2007 for a review). Adults typically have fully functional cognitive and linguistic abilities to support listening in difficult environments, as evidenced by the ability to understand sentences even at a negative signal-to-noise ratio (SNR) where acoustic speech cues are severely degraded (Nilsson, Soli and Sullivan, 2004).

Children, however, are still developing both the cognitive operations and knowledge required to understand language. Thus, their speech understanding is likely to be more negatively impacted than that of older children and adults when the audibility of the acoustic-phonetic representation of the stimulus is degraded. The relationship between audibility and speech understanding in children has been widely reported. Compared to adults, children require more favorable SNRs (Elliot, 1979; Hnath-Chisolm, Laipply, and Boothroyd, 1998; Johnson, 2000; McCreery, Ito, Spratford et al. 2010), broader bandwidth (Stelmachowicz, Pittman, Hoover et al. 2001; Stelmachowicz, Pittman, Hoover et al. 2002; Mlot, Buss, and Hall, 2010), preserved spectral cues (Eisenberg, Shannon, Martinez et al. 2000) and less reverberation (Neuman, Wroblewski, Hajicek, et al. 2010) to reach maximum levels of speech understanding. Collectively, these results support the conclusion that school-age children’s speech understanding is more susceptible to limited audibility than that of adults, which could extend to impact listening and learning in environments with poor acoustics.

Developmental differences in the ability to understand speech in degraded acoustic conditions have substantial implications for listening and learning in academic settings. The presence of noise in classrooms is a common problem. Knecht and colleagues (2002) measured ambient noise levels in thirty-two unoccupied elementary school classrooms and found that the classrooms had a wide range of ambient noise levels, but that only four were within the limits recommended by the ANSI standard for classroom acoustics. The levels of noise in typical classrooms have been documented to reach sufficient levels to interfere with speech understanding for children with normal hearing (Shield & Dockrell, 2004; Bradley & Sato, 2008). Classroom noise has also been shown to interfere with academic performance in children, including reading, spelling, math and speed of processing (Dockrell & Shield, 2006). Higher levels of classroom noise also predict lower performance on standardized tests of academic achievement in school-aged children (Shield & Dockrell, 2008). Importantly, the negative effects of classroom noise were observed on both verbal and nonverbal outcomes, suggesting that listening in acoustically compromised environments can negatively affect academic performance in children, in addition to the effects related to understanding speech.

An explanation for the decline in a wide range of auditory and cognitive tasks that occur while listening to signals with reduced audibility has been proposed in aging adults as the information degradation hypothesis (Schneider & Pichora-Fuller, 2000; Pichora-Fuller, 2003). This theory states that listening to a degraded auditory input imposes significant cognitive demands on the listener to decode the signal. Given that an individual’s cognitive processing resources are proposed to be finite (Kahneman, 1973), listening to degraded signals leaves individuals with fewer cognitive resources for other simultaneous mental operations. While children are not experiencing a decline in cognitive abilities that has been proposed to underlie these deficits in aging, children may experience similar difficulties performing listening tasks with limited audibility due to increased demands on developing cognitive and linguistic skills.

The effects of listening with limited audibility on working memory, which includes the cognitive skills necessary to store and process incoming sensory information (Baddeley, 2003), have been a focus of previous research with adults. Specifically, reduced performance on working memory tasks with degraded signals has been documented in aging adults. Serial word recall measures the listener’s ability to recall a short list of words in correct order from a speech repetition task. Older adults have poorer serial recall in background noise compared to younger adults, even when repetition of the stimuli was near ceiling for both age groups (Surprenant, 2007). Differences in performance between groups of older and younger adults have been attributed to changes in the capacity or efficiency of working memory that occur with aging. Children could be predicted to demonstrate a similar pattern of decreased recall ability when audibility is degraded due to the on-going development of working memory skills during school-age.

Consistent with this prediction, children show similar deficits in auditory learning tasks when audibility is reduced using noise or bandwidth. Pittman (2008) measured novel word-learning in conditions with bandwidth restricted above 6 kHz and conditions with a bandwidth that extended up to approximately 10 kHz. The rate of novel word learning was reduced for children with normal hearing and children with hearing loss in conditions with restricted bandwidth, requiring on average nearly three times as many exposures to achieve the same level of novel word learning in the restricted bandwidth condition as in the extended bandwidth condition. These results suggest that even small changes in audibility can affect children’s ability to learn new words. Working memory has been proposed to be a significant mechanism to support word learning and vocabulary development in children (Gathercole, 2006; Majerus et al. 2006; 2009), but the effect of limited audibility on accuracy for working memory tasks has not been studied in children.

Measures of cognitive processing speed also have been widely used to reflect the amount of cognitive processing effort required for listening tasks. Measures of processing speed in previous studies include reaction time from dual-task paradigms (e.g. Hicks & Tharpe, 2002) and verbal rehearsal speed (Gatehouse & Gordon, 1990; Mackersie et al. 1999). Generally, aging adults have shown decreased speed of processing compared to young adults on speech repetition tasks when accuracy is equated (Gordon-Salant & Fitzgibbons, 1997; Pichora-Fuller, 2003). Processing speed has also been used to quantify differences in listening effort across varying acoustic conditions in adults (Mackersie et al. 1999). In children, Montgomery and colleagues (2008) reported that both working memory capacity and speed of processing were significant predictors of complex sentence comprehension. Children would be predicted to have decreased speed of processing as audibility decreases, reflecting the increased need to use top-down processing skills to understand speech. Both the capacity and efficiency of working memory appear to be related to the ability to understand and process speech in children, but to our knowledge, the impact of limited stimulus audibility on these processes has not been directly manipulated.

In the current study, two different tasks were used to evaluate the influence of noise and restricted stimulus bandwidth on tasks of working memory and speed of processing. To assess the influence of audibility on verbal processing time, children were required to repeat nonword consonant-vowel-consonant (CVC) words in conditions with varying audibility due to noise and restricted stimulus bandwidths. Nonword repetition accuracy and verbal processing time were measured to determine the influence of noise and restricted bandwidth on verbal processing speed. Children were expected to demonstrate a decrease in nonword repetition and an increase in verbal processing time as audibility was reduced by background noise and restricted bandwidth, with increases in verbal response time proposed to reflect increased allocation of cognitive resources to speech repetition. To estimate the impact of limited audibility on working memory, repetition and recall of monosyllabic real words were measured. Word repetition and recall accuracy were measured under conditions of reduced audibility that would not be anticipated to negatively impact word repetition, but could negatively influence recall. Age and expressive language abilities were expected to predict verbal response time and recall ability, based on previous research (Gathercole, 2006).

Materials and Methods

Participants

Seventeen children between the ages of 6 years, 10 months and 12 years, 11 months (Mean = 9 years, 3 months) participated in the current study. Subjects were recruited from the Human Research Subjects Core at Boys Town National Research Hospital. Participants were paid $12 per hour and given a book for their participation. All listeners had clinically normal hearing in the test ear (15 dB HL or less) as measured by pure tone audiometry at octave frequencies from 250 Hz – 8000 Hz. None of the participants or their parents reported any history of speech, language or learning problems. Children were screened for articulation problems that could influence verbal responses using the Bankson Bernthal Quick Screen of Phonology (BBQSP; Bankson & Bernthal, 1990). The BBQSP is a clinical screening test that uses pictures of objects to elicit productions of words containing target phonemes. Expressive language skills were measured for each participant using the Expressive Vocabulary Test, Form B (EVT; Williams, 2007). All of the remaining children had standard scores within two SD of the normal range for their age [Mean = 101; Range = 86–108].

Stimuli

All stimuli were spoken by an adult female talker and digitally recorded. Nonword consonant-vowel-consonant (CVC) stimuli with a limited range of phonotactic probability used in a previous study (McCreery & Stelmachowicz, 2011) were used for the nonword repetition task. The stimuli were created by taking all possible combinations of CVC using the consonants /b/, /ʧ/, /d/, /f/, /g/, /h/, /ʤ/, /k/, /m/, /n/, /p/, /s/, /ʃ/, /t/, /θ/, /ð/, /v/, /z/, and /ʒ/ and the vowels /a/, /i/, /I/, /ε/, /u/, /ʊ/, and /ʌ/. The resulting CVC combinations were entered into an online database based on the Child Mental Lexicon (CML; Storkel & Hoover, 2010) to identify all of the CVC stimuli that were real words likely to be within a child’s lexicon and to calculate the phonotactic probability of each nonword using the biphone sum of the CV and VC segments. All of the real words and all of the nonwords that contained any biphone combination that was illegal in English (biphone sum phonotactic probability = 0) were eliminated. Review of the remaining CVCs was completed to remove slang words and proper nouns that were not identified by the calculator. After removing all real words and phonotactically illegal combinations, 1575 nonword CVCs remained. In order to create a set of stimuli with average phonotactic probabilities, the mean and SD of the biphone sum for the entire set were calculated. To limit the variability in speech understanding across age groups, the 735 CVC nonwords with phonotactic probability within ±0.5 SD (range of biphone sum 0.0029 – 0.006) from the mean were included. Two female talkers were recorded for all stimuli at a sampling rate of 44.1 kHz. Three exemplars of each CVC nonword were recorded. Two raters independently selected the best production of each CVC on the basis of clarity and vocal effort. In thirty-seven cases where the two raters did not agree, a third rater listened to the nonwords and selected the best production using the same criteria.
To ensure that the stimuli were intelligible, speech repetition was completed with three adults with normal hearing. Stimuli were presented monaurally at 60 dB SPL under Sennheiser HD-25-1 headphones. Any stimulus that was not accurately repeated by all three listeners was excluded. Finally, the remaining words (725) were separated into 25-item lists that were balanced for occurrence of initial and final consonant.
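The phonotactic-probability selection step described above can be sketched in a few lines. This is a minimal illustration, not the procedure actually used: the function name, the item labels and the biphone sums are invented, and the use of the sample SD is an assumption, since the text does not say which SD was computed.

```python
from statistics import mean, stdev


def select_average_probability(biphone_sums, width_sd=0.5):
    """Keep items whose biphone sum lies within +/- width_sd SD of the set mean."""
    values = list(biphone_sums.values())
    center = mean(values)
    spread = stdev(values)  # assumption: sample SD (the text does not specify)
    low = center - width_sd * spread
    high = center + width_sd * spread
    return {item: p for item, p in biphone_sums.items() if low <= p <= high}


# Hypothetical items and biphone sums, invented for illustration only.
candidates = {
    "nonword_a": 0.0010,
    "nonword_b": 0.0035,
    "nonword_c": 0.0045,
    "nonword_d": 0.0050,
    "nonword_e": 0.0090,
}
selected = select_average_probability(candidates)
print(sorted(selected))
```

Narrowing the window around the mean removes items with unusually low or high phonotactic probability, which is the stated goal of limiting variability in speech understanding across age groups.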

Recordings of monosyllabic real words from the Phonemically-Balanced Kindergarten (PBK-50; Haskins Reference Note 1) were used for the word recall task. Because of previous studies that have shown an impact of phonotactic probability (Gathercole, Frankish, Pickering, et al. 1999) and word frequency (Hulme, Roodenrys, Schweickert, et al. 1997) on word repetition tasks, the phonotactic probabilities and word frequencies of the monosyllabic words were entered into the same online calculator as the nonwords and considered during later analyses. Speech-shaped competing noise was created by taking the Fast Fourier Transform (FFT) of the long-term average speech spectrum of the talker, randomizing the phase at each sample point, and taking the inverse FFT of the resulting stimulus. The result is a noise with the same spectral shape as the talker, but without spectral and temporal dips. The bandwidth of the stimuli was limited using infinite-impulse response (IIR) Butterworth filters in MATLAB. Table 1 displays the bandwidth of each listening condition. The Speech Intelligibility Index (SII; ANSI S3.5 1997) of all conditions was calculated using an octave band method for a non-reverberant environment.

Table 1.

Filter conditions

| Condition | Frequency range |
|---|---|
| Full band (FB) | 0 – 11025 Hz |
| Low-pass (LP) 5.6 kHz | 0 – 5600 Hz |
| Low-pass (LP) 3.2 kHz | 0 – 3200 Hz |
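The phase-randomization approach to speech-shaped noise described in the Stimuli section can be illustrated with a small, standard-library sketch. It is an assumption-laden toy rather than the authors' MATLAB processing: a short synthetic signal stands in for the talker recording, a naive DFT replaces the FFT, and the function names are hypothetical. The point it shows is that randomizing the phase of each frequency bin, while keeping the spectrum conjugate-symmetric, yields a real-valued noise with the same magnitude spectrum as the input.

```python
import cmath
import math
import random


def dft(x):
    """Naive discrete Fourier transform (adequate for short illustrative signals)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def phase_randomized_noise(signal, rng):
    """Return noise with the magnitude spectrum of `signal` and random phases."""
    n = len(signal)
    spectrum = dft(signal)
    shaped = list(spectrum)  # DC bin (and Nyquist bin, if n is even) kept unchanged
    for k in range(1, (n + 1) // 2):
        phase = rng.uniform(0.0, 2.0 * math.pi)
        shaped[k] = abs(spectrum[k]) * cmath.exp(1j * phase)
        shaped[n - k] = shaped[k].conjugate()  # conjugate symmetry keeps output real
    return [value.real for value in idft(shaped)]


# Synthetic stand-in for a speech recording (two sinusoidal components).
signal = [math.sin(2 * math.pi * 3 * t / 64) + 0.5 * math.sin(2 * math.pi * 7 * t / 64)
          for t in range(64)]
noise = phase_randomized_noise(signal, random.Random(0))

original_mag = [abs(c) for c in dft(signal)]
noise_mag = [abs(c) for c in dft(noise)]
max_diff = max(abs(a - b) for a, b in zip(original_mag, noise_mag))
print(max_diff)  # should be at floating-point rounding level
```

For real recordings, an FFT library would replace the naive transforms; the low-pass Butterworth filtering step is not reproduced here.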

Instrumentation

Stimulus presentation, including control of the levels of speech and noise files during the experiment, and response recording were performed using custom software on a personal computer with a Lynx Two-B sound card. Sennheiser HD-25-1 headphones were used for stimulus presentation. A Shure BETA 53 head-worn boom microphone connected to a Shure M267 microphone amplifier/mixer was used to record subject responses for later scoring. Pictures were presented via a computer monitor during the nonword repetition task to maintain subject interest. The sound levels of the speech and noise signals were each calibrated using a Larson Davis (LD) System 824 sound level meter with a LD AEC 101 IEC 318 headphone coupler. Prior to data collection for each subject, the sound level was verified by playing a pure tone signal through a voltmeter and comparing the voltage to that obtained during the calibration process for the same pure tone.

Procedure

Participants and their parents took part in a consent/assent process as approved by the Institutional Review Boards of Boys Town National Research Hospital and The University of Nebraska-Lincoln. All of the procedures were completed in a sound-treated audiometric test room. Pure tone audiometric testing was completed using TDH-49 earphones. The children completed the BBQSP and EVT. For both experimental tasks, participants were seated at a table in front of the computer monitor. Task order was counter-balanced across subjects to limit potential influences of fatigue and attention. The entire process took approximately 90 minutes per subject.

For the nonword repetition task, the children were instructed that they would hear lists of words that were not real words and to repeat exactly what they heard. Participants were encouraged to guess if they were not sure what they heard. Each subject completed a practice trial in the full bandwidth condition in quiet to ensure that the subject understood the task and directions. Following completion of the practice trial, the experimental task was completed in noise using one 25-item list per condition. List number, filter condition and SNR were randomized using a random sequence generator. The presentation order of the stimuli within each list was also randomized. Although feedback was not provided on a trial-by-trial basis, children were encouraged regardless of their performance after each list. Each subject listened to six experimental conditions comprised of three different bandwidths (FB, LP 5.6 kHz, and LP 3.2 kHz) at two SNRs (3 and 9 dB).

For the free recall task using PBK words, children were instructed to listen for and repeat back the real words that they heard. After a block of five words, the child was asked to repeat as many of the words as they could remember. Each condition used 25 words for a total of 5 recall blocks per condition. A practice condition in quiet was completed with each subject to ensure that they understood the task and directions. Following completion of the practice condition, word repetition and free recall were measured at the same SNR (9 dB) for the FB and LP 5.6 kHz bandwidth conditions. The number of conditions for the free recall task was limited in order to diminish the influence of fatigue and inattention on the listening task.

Responses for the nonword and real word repetition tasks were coded online as correct or incorrect. Free recall and verbal processing time were scored offline using audio recordings of the test session. Online scoring of correct or incorrect responses was also cross-checked offline. For free recall, if a child made a word repetition error, but correctly recalled the errant response, the child was credited for incorrect repetition, but correct recall. Verbal processing time was estimated using custom software designed to measure the latency between the onset of the stimulus and the onset of the subject’s response for each token. Given the potential for phonemic bias against phonemes with spectral characteristics similar to the background noise used in the experiment, such as fricatives (Kessler, Treiman, and Mullennix, 2002), verbal processing times for each token were verified by visual inspection of the waveform.
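The scoring rule for the free recall task can be made concrete with a short sketch. It rests on one reading of the rule above, namely that recall is credited against what the child actually said during repetition; the function name and the example words are hypothetical and are not taken from the study materials or data.

```python
def score_block(targets, repetitions, recalled):
    """Score one five-word block of the repetition and free recall task.

    Repetition is scored against the target words. Recall is scored against
    the child's own repetition responses, in any order, so a misheard word
    that is later recalled counts as incorrect repetition but correct recall.
    """
    repetition_correct = sum(t == r for t, r in zip(targets, repetitions))
    recall_correct = len(set(repetitions) & set(recalled))
    return repetition_correct, recall_correct


# Hypothetical block: the second word is repeated incorrectly, then recalled.
targets = ["please", "great", "sled", "pants", "rat"]
repetitions = ["please", "grade", "sled", "pants", "rat"]
recalled = ["rat", "grade", "please"]

print(score_block(targets, repetitions, recalled))
```

With five blocks per condition, summing the two counts over blocks and dividing by 25 would give the proportion correct for repetition and for recall in that condition.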

Results

Nonword repetition and verbal processing time

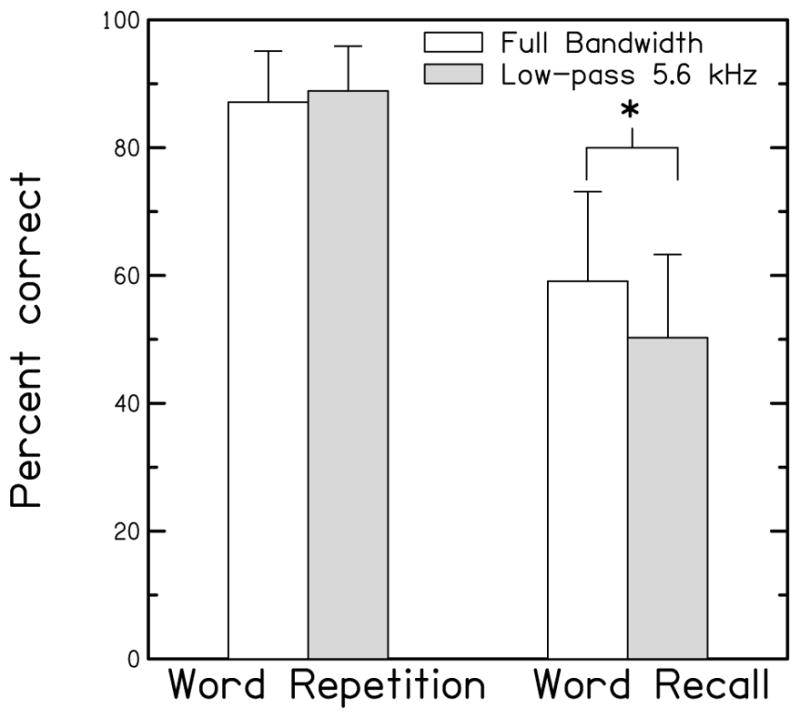

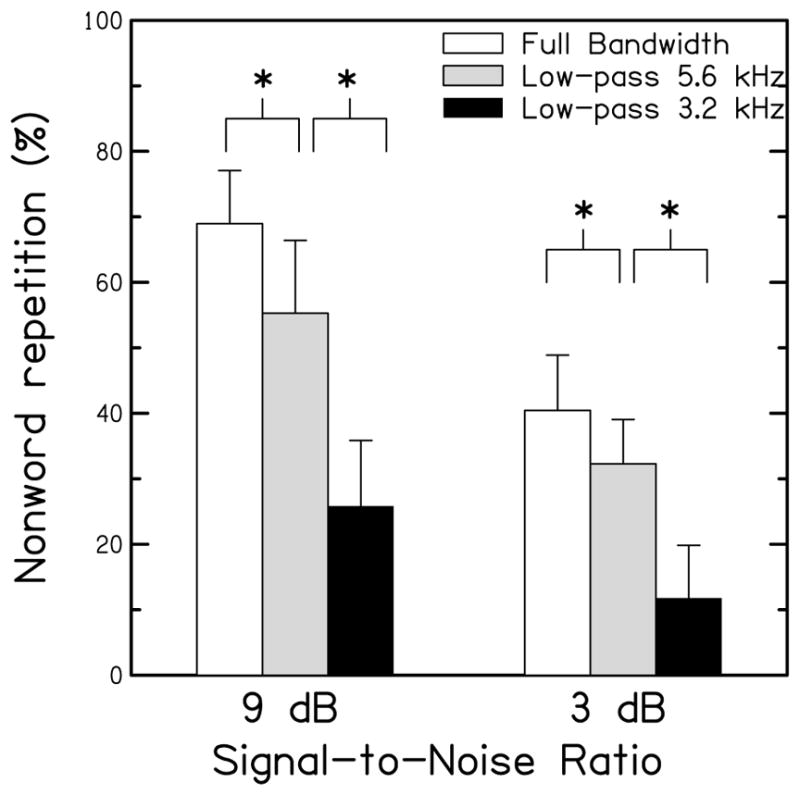

Prior to statistical analysis, proportion correct nonword repetition scores were converted to Rationalized Arcsine Units (RAU; Studebaker, 1985) to normalize variance across conditions. Additionally, verbal processing times less than 250 ms or greater than 3000 ms were eliminated as being either fast guesses or inattentive responses, respectively (Whelan, 2008). This process led to the elimination of 55 verbal processing times (2%) out of 2550 total responses. Because only verbal processing times from correct responses are considered valid, verbal response times from the LP 3.2 kHz condition were omitted from statistical analyses due to a limited number of correct responses in those conditions. Mean nonword repetition accuracy as a function of condition is plotted in Figure 1, while mean verbal processing time as a function of condition is plotted in Figure 2.
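The two preprocessing steps above can be sketched as follows. The RAU function uses the standard form of the rationalized arcsine transform for X correct out of N items; the trimming function applies the 250 ms and 3000 ms cut-offs. Function names and the example values are illustrative assumptions, not the study's analysis code or data.

```python
import math


def rau(correct, total):
    """Rationalized arcsine units for `correct` items out of `total`."""
    theta = (math.asin(math.sqrt(correct / (total + 1)))
             + math.asin(math.sqrt((correct + 1) / (total + 1))))
    return (146.0 / math.pi) * theta - 23.0


def trim_times(times_ms, low=250.0, high=3000.0):
    """Drop times below `low` (fast guesses) or above `high` (inattentive responses)."""
    return [t for t in times_ms if low <= t <= high]


# Example with a 25-item list, as used per condition in the nonword task.
for n_correct in (5, 12, 20):
    print(n_correct, round(rau(n_correct, 25), 1))

# Hypothetical verbal processing times in milliseconds.
print(trim_times([100, 250, 900, 3000, 3200]))
```

The transform is roughly linear with percent correct in the middle of the range and stretches scores near floor and ceiling, which is why it is used to stabilize variance across conditions that differ widely in accuracy.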

Figure 1.

Nonword repetition (percent correct) as a function of condition (White bars – Full bandwidth; Gray bars – Low-pass 5.6 kHz; Black bars – Low-pass 3.2 kHz) for 9 and 3 dB signal-to-noise ratios. Error bars are standard deviations. Asterisks denote statistically significant differences between conditions.

Figure 2.

Verbal response time (ms) as a function of condition (White bars – Full bandwidth; Gray bars – Low-pass 5.6 kHz) for 9 and 3 dB signal-to-noise ratios. Error bars are standard deviations.

The general trend in the data supports the hypothesized effect of decreasing nonword repetition accuracy and increasing verbal processing time as audibility decreases due to the influence of both SNR and bandwidth restriction. To evaluate if these trends in nonword repetition and verbal processing time were statistically significant, repeated-measures ANOVAs were completed with SNR (9 dB and 3 dB) and bandwidth (Full bandwidth, LP 5.6 kHz, LP 3.2 kHz) as factors for each dependent variable. For nonword repetition, the main effects of bandwidth (F(2,32) = 142.982, p < 0.001, ηp² = 0.899) and SNR (F(1,16) = 92.278, p < 0.001, ηp² = 0.852) were statistically significant. The two-way interaction between bandwidth and SNR was not significant (F(2,32) = 1.075, p = 0.370, ηp² = 0.060). Evaluation of the marginal means for SNR revealed the anticipated effect of significantly higher nonword repetition accuracy for the 9 dB SNR relative to the 3 dB SNR. To evaluate the source of the significant difference in nonword repetition for bandwidth, post hoc testing was completed using Tukey’s Honestly Significant Difference (HSD) with a calculated significant minimum mean difference of 9.47 RAU. Decreasing nonword repetition accuracy was observed across each condition of limited bandwidth, and the differences between FB and LP 5.6 kHz (10.669 RAU) and LP 5.6 kHz and LP 3.2 kHz (28.977 RAU) were both significant when controlling for Type I error. Age in months was not significantly correlated with mean nonword repetition accuracy (r = 0.209, p = 0.427).

The pattern of statistical results for verbal processing time was similar to that observed for nonword repetition. The main effects of SNR (F(1,16) = 5.096, p = 0.038, ηp² = 0.242) and bandwidth (F(1,16) = 4.958, p = 0.041, ηp² = 0.237) were significant with no significant two-way interaction between SNR and bandwidth (F(1,16) = 1.484, p = 0.241, ηp² = 0.085). Evaluation of the marginal means for SNR revealed the anticipated effect of reduced verbal processing time for the 9 dB SNR compared to the 3 dB SNR. Increasing verbal processing time was observed between conditions of limited bandwidth. The significant mean difference between the FB and LP 5.6 kHz conditions was 86.762 ms. In summary, nonword repetition and verbal processing time were both negatively impacted by noise and restricted bandwidth. Age in months was not correlated with mean verbal processing time (r = 0.034, p = 0.898).
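For readers who want to relate the reported effect sizes to the test statistics, partial eta squared for a univariate F test can be recovered from the F value and its degrees of freedom. The sketch below assumes the standard relationship, with sphericity assumed, and uses a hypothetical function name; it is offered as a reading aid rather than as the software used for the analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effects reported above: (label, F, df_effect, df_error).
reported = [
    ("nonword repetition, bandwidth", 142.982, 2, 32),
    ("nonword repetition, SNR", 92.278, 1, 16),
    ("verbal processing time, SNR", 5.096, 1, 16),
    ("verbal processing time, bandwidth", 4.958, 1, 16),
]
for label, f_value, df_effect, df_error in reported:
    print(label, round(partial_eta_squared(f_value, df_effect, df_error), 3))
```

The very large effect sizes for nonword repetition and the more modest ones for verbal processing time follow directly from the difference in F values, given the same error degrees of freedom.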

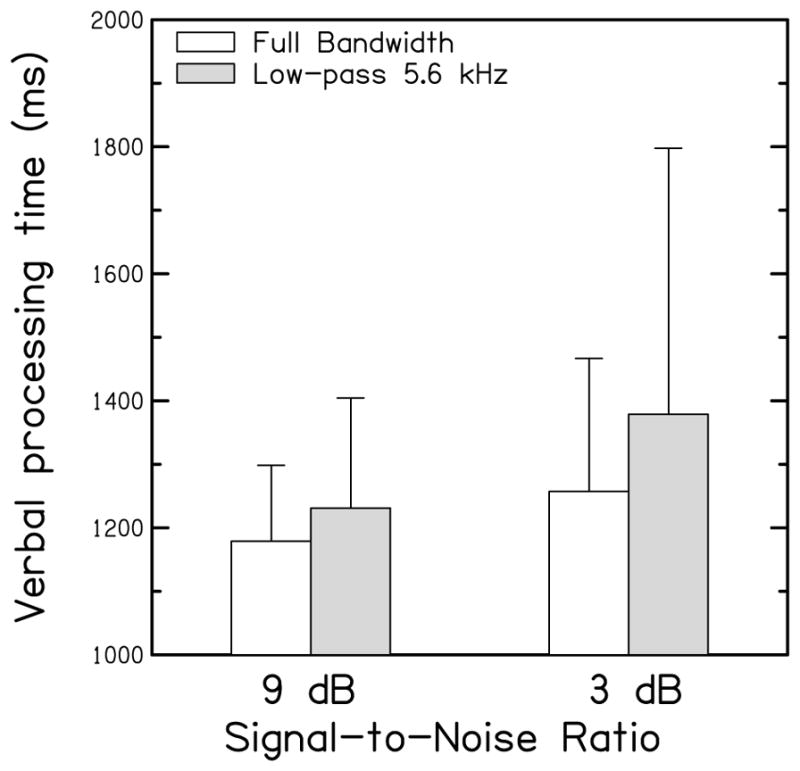

Word repetition and recall

Mean word repetition accuracy and free recall accuracy are plotted as a function of bandwidth condition in Figure 3. Because word repetition and word recall were not independent as they were derived from the same task, a multivariate analysis of variance (MANOVA) for repeated-measures analyzing word repetition and word recall was completed with bandwidth as a factor. The multivariate effect of bandwidth on the combined effect of word repetition and recall was significant (Wilks λ = 0.572; F = 5.614, p = 0.015, ηp² = 0.378). The univariate test for word repetition revealed no significant differences between bandwidth conditions (F(1,16) = 0.715, p = 0.410, ηp² = 0.043), whereas the univariate test for recall indicated a significant difference in recall between bandwidth conditions (F(1,16) = 10.188, p = 0.006, ηp² = 0.389). Overall, no differences in word repetition between full bandwidth and low-pass filtered conditions were observed, but recall was significantly higher in the full band condition compared to the low-pass filtered condition.

Figure 3.

Word repetition and recall (percent correct) as a function of stimulus bandwidth (White bars – full bandwidth; Gray bars – Low-pass 5.6 kHz) for a 9 dB signal-to-noise ratio. Error bars are standard deviations. The asterisk denotes a significant difference between bandwidth conditions for recall.

Due to the wide range of performance observed in recall ability across subjects, a linear regression analysis was conducted to evaluate age and expressive vocabulary scores (EVT) as predictors of recall. The bivariate correlation between age (in months) and EVT standard score was not significant (r = 0.169, p = 0.518). A regression model with age and EVT standard score as predictors suggested that both factors accounted for significant variance in word recall (R² = 0.365, F(2,14) = 4.022, p = 0.043). The standardized regression coefficients for both age in months (β = 0.496) and EVT standard score (β = 0.271) were significant and suggested that higher age and language scores were associated with higher recall. To evaluate the influence of the lexical and phonotactic characteristics of the stimuli on word recall, the lexical frequency and biphone sum phonotactic probability of each word were correlated with the proportion correctly recalled. The biphone sum phonotactic probabilities of the PBK words in the current study ranged from 0.0002 to 0.406, while lexical frequency (per million words) ranged from 1.3 to 5.24. The relationships between the proportion of words correctly recalled and each word’s phonotactic probability (r = −0.122, p = 0.201) and lexical frequency (r = 0.065, p = 0.504) were not significant.
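The overall F test for the regression model follows from the proportion of variance explained, the number of predictors, and the sample size. The sketch below uses the standard formula with a hypothetical function name and shows that seventeen participants and two predictors give the degrees of freedom reported above; the small difference from the reported F is expected because the input is the rounded R² value.

```python
def f_from_r_squared(r_squared, n_obs, n_predictors):
    """Overall regression F statistic and degrees of freedom implied by R squared."""
    df_model = n_predictors
    df_error = n_obs - n_predictors - 1
    f_value = (r_squared / df_model) / ((1.0 - r_squared) / df_error)
    return f_value, df_model, df_error


# Reported model: 17 children, two predictors (age in months, EVT standard score).
f_value, df_model, df_error = f_from_r_squared(0.365, 17, 2)
print(round(f_value, 2), df_model, df_error)
```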

Discussion

The goal of the current study was to evaluate the effects of reduced audibility on two processes associated with working memory in children. When the acoustics of the speech signal are degraded, children must rely on linguistic and cognitive skills to support speech understanding. The information-degradation hypothesis (Schneider & Pichora-Fuller, 2000) has been proposed in studies with aging adults to account for challenges associated with listening in degraded conditions that increase with aging. Although not previously applied to children, the information-degradation hypothesis would suggest that children will experience greater degradation in complex listening and learning tasks, because their cognitive and linguistic skills are still developing. Studies of speech understanding (Elliot, 1979), novel word learning (Pittman, 2008), and academic achievement (Shield & Dockrell, 2008) support the predictions that limited audibility may have negative effects on a wide range of auditory tasks in children.

To evaluate the impact of audibility on speed of processing in children, nonword repetition and verbal processing time were measured in six conditions using three different bandwidths and two different SNRs. For this task, nonword repetition accuracy was expected to decrease and verbal processing time was expected to increase as audibility was reduced, reflecting increased cognitive effort. Decreased nonword repetition was observed as in previous studies of nonword repetition of children under conditions of limited audibility (Elliot, 1979; Johnson, 2000; McCreery & Stelmachowicz, 2011). Verbal processing time also increased systematically as audibility decreased, suggesting that children required more time to process the degraded stimuli than in conditions where the stimulus was more audible. The current results suggest that in conditions of reduced audibility, greater processing time is required to process and repeat nonwords, leaving fewer cognitive resources available for other concurrent cognitive processes.

To evaluate the impact of reduced stimulus audibility on working memory, repetition and free recall for monosyllabic words were measured at a 9 dB SNR under two different bandwidths (Full bandwidth and LP 5.6 kHz). For the word recall task, there were no significant differences in word repetition between bandwidth conditions, but word recall was significantly higher in the full bandwidth condition than in the LP 5.6 kHz filtered condition. These results suggest that limited audibility due to background noise or restricted bandwidth can negatively impact the accuracy of word recall in children, even under conditions where word repetition is not negatively affected.

Age and expressive language skills were both found to contribute uniquely to word recall. Older children and those with higher expressive language scores had higher word recall scores, consistent with developmental studies of working memory (Andrews & Halford, 2002) and language (Baddeley, 2003). Recall was not related to the phonotactic probability or lexical frequency of the words used in the task. However, the PBK words used for the recall task were not developed to provide a range of phonotactic probabilities or lexical frequency characteristics that would be needed to directly evaluate these factors.

Word recall results in the current study have practical implications. First, recall of real words was negatively affected by a slight reduction in audibility. The difference in audibility between the two recall task conditions as measured by the Speech Intelligibility Index (SII; Full bandwidth = 0.6566; LP 5.6 kHz = 0.6249) was minimal, which is also evident from the lack of change in word repetition for that condition. Although word repetition was unchanged between the two bandwidth conditions, recall decreased with this small change in audibility. Decrements in recall related to small changes in audibility, even for conditions where repetition is not affected, suggest that speech repetition alone does not reflect how audibility can influence cognitive processing of auditory stimuli in children. Even for conditions where speech repetition appears to be intact, children may experience increased processing latencies and decreased working memory performance.

Cognitive processing skills and working memory are increasing across the age range of children in this study. Age would have been expected to predict individual variability across nonword repetition, verbal response time and word recall tasks. However, only word recall was significantly related to age. The disparity across age-related improvements in the different outcomes used in this study could be related to several factors. The small number of subjects across the entire age range of the study may have limited statistical power to examine age effects for nonword repetition and verbal response time. The use of nonword CVC stimuli with average phonotactic probability might have also limited the cognitive demands of the task and limited the observation of age-related differences for those outcomes.

Collectively, the findings of increased verbal processing time and decreased recall accuracy suggest that word repetition tasks, even with background noise, do not fully reflect how children understand and process speech. Increased nonword verbal processing time and decreased word recall with acoustic degradation could motivate future studies of the mechanism underlying previous findings that acoustic degradation negatively impacts word learning (Pittman, 2008). Furthermore, these findings may support further exploration of the mechanism underlying the deficits in learning and academic achievement observed in classrooms where acoustic conditions are poor (Dockrell & Shield, 2006; Shield & Dockrell, 2008).

Conclusion

The current study sought to describe the effects of noise and limited bandwidth on nonword verbal processing time and word recall for normal-hearing children. Nonword repetition and verbal processing time were both negatively affected by background noise and limited bandwidth. For real words, repetition was not affected by bandwidth, but recall was significantly poorer when the stimulus was low-pass filtered at 5.6 kHz. These results suggest that acoustic degradation of the speech stimulus influences not only speech repetition but also the storage and processing of stimuli in working memory. Working memory abilities have significant implications for the ability to learn new words, as well as for academic learning. Future studies should evaluate whether similar effects are observed in children with hearing loss, who experience decreased audibility and increased susceptibility to noise compared to peers with normal hearing.

Acknowledgments

The authors would like to thank Kanae Nishi for programming support in the calculation of verbal processing time. We would also like to thank Brenda Hoover and Dawna Lewis for comments on the manuscript. Research was supported by NIH grants F31 DC010505, R01 DC004300, R03 DC009334, and P30 DC4662.

Footnotes

Haskins, H.A. (1949) A phonetically balanced test of speech discrimination for children. Unpublished Master’s thesis. Evanston, IL: Northwestern University.

Contributor Information

Ryan W. McCreery, Boys Town National Research Hospital

Patricia G. Stelmachowicz, Boys Town National Research Hospital

References

- Andrews G, Halford GS. A cognitive complexity metric applied to cognitive development. Cognitive Psychology. 2002;45:153–219. doi: 10.1016/s0010-0285(02)00002-6.

- ANSI. ANSI S3.5-1997, American National Standard Methods for Calculation of the Speech Intelligibility Index. American National Standards Institute; New York: 1997.

- Baddeley A. Working memory and language: an overview. J of Comm Dis. 2003;36:189–208. doi: 10.1016/s0021-9924(03)00019-4.

- Bankson NW, Bernthal JE. Quick Screen of Phonology. San Antonio, TX: Special Press, Inc; 1990.

- Bradley JS, Sato H. The intelligibility of speech in elementary school classrooms. J Acoust Soc Am. 2008;123(4):2078–2086. doi: 10.1121/1.2839285.

- Dockrell JE, Shield BM. Acoustical barriers in classrooms: the impact of noise on performance in the classroom. Br Educ Res J. 2006;32(3):509–525.

- Eisenberg LS, Shannon RV, Martinez AS, Wygonski J, Boothroyd A. Speech recognition with reduced spectral cues as a function of age. J Acoust Soc Am. 2000;107(5 Pt 1):2704–2710. doi: 10.1121/1.428656.

- Elliott LL. Performance of children aged 9 to 17 years on a test of speech intelligibility in noise using sentence material with controlled word predictability. J Acoust Soc Am. 1979;66(3):651–653. doi: 10.1121/1.383691.

- Gatehouse S, Gordon J. Response times to speech stimuli as measures of benefit from amplification. Br J Audiol. 1990;24(1):63–68. doi: 10.3109/03005369009077843.

- Gathercole SE. Nonword repetition and word learning: The nature of the relationship. Applied Psycholinguistics. 2006;27:513–543.

- Gathercole SE, Frankish CR, Pickering SJ, Peaker S. Phonotactic influences on short-term memory. J Exp Psychol Learn Mem Cogn. 1999;25(1):84–95. doi: 10.1037//0278-7393.25.1.84.

- Hicks CB, Tharpe AM. Listening effort and fatigue in school-age children with and without hearing loss. J Speech Lang Hear Res. 2002;45(3):573–584. doi: 10.1044/1092-4388(2002/046).

- Hnath-Chisolm TE, Laipply E, Boothroyd A. Age-related changes on a children’s test of sensory-level speech perception capacity. J Speech Lang Hear Res. 1998;41(1):94–106. doi: 10.1044/jslhr.4101.94.

- Hulme C, Roodenrys S, Schweickert R, Brown GD, Martin M, Stuart G. Word-frequency effects on short-term memory tasks: Evidence for a redintegration process in immediate serial recall. J Exp Psychol Learn Mem Cogn. 1997;23(5):1217–1232. doi: 10.1037//0278-7393.23.5.1217.

- Jerger S. Current state of knowledge: Perceptual processing by children with hearing impairment. Ear Hear. 2007;28(6):754–765. doi: 10.1097/AUD.0b013e318157f049.

- Johnson CE. Children’s phoneme identification in reverberation and noise. J Speech Lang Hear Res. 2000;43(1):144–157. doi: 10.1044/jslhr.4301.144.

- Kahneman D. Attention and Effort. Englewood Cliffs, NJ: Prentice Hall; 1973.

- Kessler B, Treiman R, Mullennix J. Phonetic biases in voice key response time measurements. J Mem Lang. 2002;47:145–171.

- Knecht HA, Nelson PB, Whitelaw GM, Feth LL. Background noise levels and reverberation times in unoccupied classrooms: Predictions and measurements. Am J Audiol. 2002;11(2):65–71. doi: 10.1044/1059-0889(2002/009).

- Mackersie C, Neuman AC, Levitt H. A comparison of response time and word recognition measures using a word-monitoring and closed-set identification task. Ear Hear. 1999;20(2):140–148. doi: 10.1097/00003446-199904000-00005.

- Majerus S, Heiligenstein L, Gautherot N, Poncelet M, Van der Linden M. Impact of auditory selective attention on verbal short-term memory and vocabulary development. J Exp Child Psychol. 2009;103(1):66–86. doi: 10.1016/j.jecp.2008.07.004.

- Majerus S, Poncelet M, Greffe C, Van der Linden M. Relations between vocabulary development and verbal short-term memory: The relative importance of short-term memory for serial order and item information. J Exp Child Psychol. 2006;93(2):95–119. doi: 10.1016/j.jecp.2005.07.005.

- McCreery R, Ito R, Spratford M, Lewis D, Hoover B, Stelmachowicz PG. Performance-intensity functions for normal-hearing adults and children using computer-aided speech perception assessment. Ear Hear. 2010;31(1):95–101. doi: 10.1097/AUD.0b013e3181bc7702.

- Mlot S, Buss E, Hall JW III. Spectral integration and bandwidth effects on speech recognition in school-aged children and adults. Ear Hear. 2010;31(1):56–62. doi: 10.1097/AUD.0b013e3181ba746b.

- Montgomery JW, Magimairaj BM, O’Malley MH. Role of working memory in typically developing children’s complex sentence comprehension. Journal of Psycholinguistic Research. 2008;37(5):331–54. doi: 10.1007/s10936-008-9077-z.

- Neuman AC, Wroblewski M, Hajicek J, Rubinstein A. Combined effects of noise and reverberation on speech recognition performance of normal-hearing children and adults. Ear Hear. 2010;31:336–344. doi: 10.1097/AUD.0b013e3181d3d514.

- Nilsson M, Soli SD, Sullivan JA. Development of the hearing in noise test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am. 1994;95(2):1085–1099. doi: 10.1121/1.408469.

- Pichora-Fuller MK. Processing speed and timing in aging adults: psychoacoustics, speech perception, and comprehension. Int J Aud. 2003;42(Suppl 1):S59–67. doi: 10.3109/14992020309074625.

- Pittman AL. Short-term word-learning rate in children with normal hearing and children with hearing loss in limited and extended high-frequency bandwidths. J Speech Lang Hear Res. 2008;51(3):785–797. doi: 10.1044/1092-4388(2008/056).

- Roodenrys S, Hinton M. Sublexical or lexical effects on serial recall of nonwords? J Exp Psychol Learn Mem Cogn. 2002;28(1):29–33. doi: 10.1037//0278-7393.28.1.29.

- Schneider BA, Pichora-Fuller MK. Implications of perceptual deterioration for cognitive aging research. In: Craik FIM, Salthouse TA, editors. The handbook of aging and cognition. Lawrence Erlbaum; Mahwah, NJ: 2000.

- Shield BM, Dockrell JE. The effects of environmental and classroom noise on the academic attainments of primary school children. J Acoust Soc Am. 2008;123(1):133–44. doi: 10.1121/1.2812596.

- Shield BM, Dockrell JE. External and internal noise surveys of London primary schools. J Acoust Soc Am. 2004;115(2):730–738. doi: 10.1121/1.1635837.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Effect of stimulus bandwidth on the perception of /s/ in normal- and hearing-impaired children and adults. J Acoust Soc Am. 2001;110(4):2183–2190. doi: 10.1121/1.1400757.

- Stelmachowicz PG, Pittman AL, Hoover BM, Lewis DE. Aided perception of /s/ and /z/ by hearing-impaired children. Ear Hear. 2002;23(4):316–324. doi: 10.1097/00003446-200208000-00007.

- Storkel HL, Hoover JR. An online calculator to compute phonotactic probability and neighborhood density on the basis of child corpora of spoken American English. Behav Res Methods. 2010;42(2):497–506. doi: 10.3758/BRM.42.2.497.

- Studebaker GA. A “rationalized” arcsine transform. J Speech Hear Res. 1985;28(3):455–462. doi: 10.1044/jshr.2803.455.

- Surprenant AM. Effects of noise on identification and serial recall of nonsense syllables in older and younger adults. Neuropsychol Dev Cogn B Aging Neuropsychol Cogn. 2007;14(2):126–143. doi: 10.1080/13825580701217710.

- Whelan R. Effective analysis of reaction time data. Psychol Rec. 2008;58(3):Article 9.

- Williams KT. Expressive vocabulary test. 2nd ed. Pearson; Minneapolis, MN: 2007.