Abstract

Individuals weigh information about both rewarding and aversive stimuli in order to make adaptive decisions. Most studies of the orbitofrontal cortex (OFC), an area where appetitive and aversive neural subsystems might interact, have focused only on reward. Using a classical conditioning task where novel stimuli are paired with reward or aversive air-puff, we discovered that two groups of orbitofrontal neurons respond preferentially to conditioned stimuli associated with rewarding and aversive outcomes, respectively; however, information about appetitive and aversive stimuli converges on individual neurons from both populations. Therefore, neurons in OFC might participate in appetitive and aversive networks that track the motivational significance of stimuli even when they vary in valence and sensory modality. Further, we show that these networks, which also extend to the amygdala, exhibit different rates of change during reversal learning. Thus, although both networks represent appetitive and aversive associations, their distinct temporal dynamics might indicate different roles in learning processes.

Keywords: orbitofrontal cortex, reinforcement learning, conditioning, reward, aversive, monkey

Theorists have posited the existence of complementary appetitive and aversive systems in the brain – sometimes referred to as “opponent” networks.1–3 Consistent with this idea, we have reported two populations of neurons in the primate amygdala: one that responds more strongly to stimuli associated with reward, and one that responds more strongly to stimuli associated with aversive events.4 However, it remains unclear how these networks interact – in the amygdala and beyond – and where information about appetitive and aversive stimuli ultimately converges in the brain. The orbitofrontal cortex (OFC), a prefrontal area that is intimately interconnected with the amygdala,5–7 has often been proposed as a candidate area for the integration of information about the positive and negative motivational values of stimuli.8–9

Because OFC has long been implicated in adaptive decision-making and emotional regulation,10–12 it has become an area of great interest to those who are concerned with how the motivational significance of stimuli is represented in the brain. OFC is well situated anatomically to link sensory stimuli with affective properties: it receives projections from higher sensory cortices of all modalities,13–15 and is positioned to receive information related to motivation and emotion via reciprocal connections with areas such as the amygdala, hippocampus and striatum.5–7,16–18 Furthermore, outputs from OFC to the hypothalamus and other subcortical areas could participate in the regulation of physiological responses to motivationally relevant stimuli.19–20

These factors suggest OFC as a locus for the convergence of the appetitive and aversive associations of stimuli, and a possible neural substrate for decision-making based on their motivational significance. However, limiting our understanding, very few neurophysiological studies have investigated the representation of aversive events and associations, rather than just rewards, in this brain area. Likewise, there have been few studies examining the relationship of neural signals in OFC to activity in other brain areas, such as the amygdala, in response to either rewards or aversive events. These gaps in knowledge are an impediment to understanding how information about appetitive and aversive stimuli might come together in OFC, and how these signals might interact with those in other brain areas to facilitate adaptive behavior.

An approach for interrogating valence encoding in OFC

In order to investigate the representation of appetitive and aversive events in OFC, as well as in other key brain areas like the amygdala, our group has used a version of classical conditioning in which visual conditioned stimuli (CSs) are paired with rewarding or aversive unconditioned stimuli (USs; Fig. 1a).4,21–22 During each session, three novel, abstract fractal images are presented in a pseudo-random order and followed, after a delay – the trace interval – by one of three reinforcements: a large liquid reward (after the “strong positive” image), a small liquid reward (after the “weak positive” image), or an aversive air-puff targeted at the monkey’s face (after the “negative” image). Monkeys quickly learned to associate each CS with its outcome. Although no behavior was required other than visual fixation, monkeys developed responses to the images that revealed their expectation of reward – as indicated by anticipatory licking at the reward spout – or expectation of an aversive air-puff, as indicated by anticipatory eye closure, a defensive behavior which we refer to as “blinking.” As shown in Figure 1b,c, monkeys’ behavior effectively discriminated among all three image types.

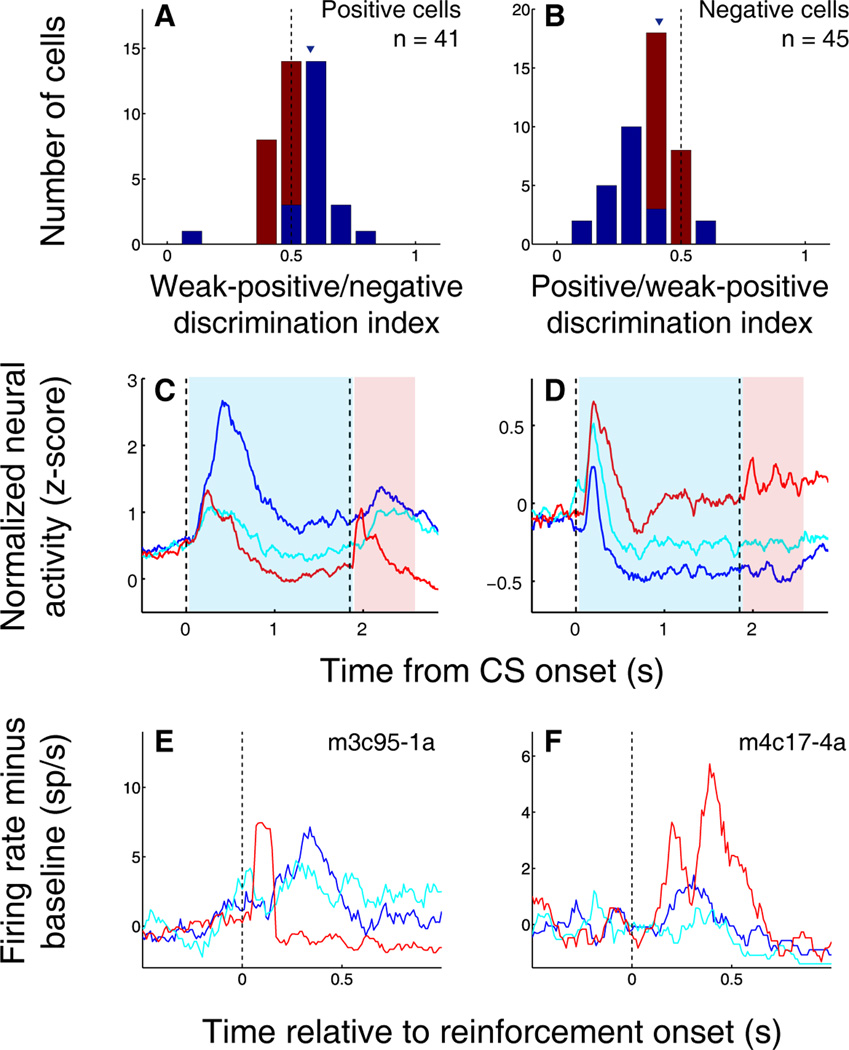

Figure 1.

OFC neurons and behavior differentiate among CSs in a mixed appetitive/aversive trace conditioning task. (A) Task structure. Top and bottom rows, images reverse associations with large rewards and air-puffs. Middle row, image is always associated with small reward. Reinforcement occurs with 80% probability on all trial types. (B,C) Mean probability of licking (B) and blinking (C) as a function of time during the trial. Data shown is for one subject averaged over all sessions. (D,E) Peristimulus time histograms (PSTHs) displaying the average activity across trials for one positive (D) and one negative (E) value-coding cell. PSTHs are aligned on image onset and truncated at the time of reinforcement. Blue, average activity during large reward (positive) trials; cyan, average activity during small reward (weak positive) trials; red, average activity during air-puff (negative) trials. Dashed vertical lines, image onset and offset.

After subjects learned the initial associations, the outcomes for the strong positive and negative images were switched without warning, and monkeys learned the new associations through experience. (The image that was followed by weak reward maintained the same association throughout the session.) The reversal was essential in order to disentangle neural signals related to reinforcement contingencies from signals pertaining to the sensory characteristics of images. Moreover, many experiments have shown that successful reversal learning – whether in rats, monkeys, or humans – relies upon an intact OFC.23–26 Thus, this task allowed us to examine the responses of individual orbitofrontal neurons during a type of learning in which OFC may play a primary role.

While monkeys performed this task, we recorded the activity of single neurons in OFC,21 focusing on cortical areas 13m and 13a.27 We selected these areas for two reasons: first, because they overlap with those regions examined by previous electrophysiological studies of macaque OFC, allowing a direct comparison of the results. Second, these caudal areas of OFC are densely interconnected with limbic areas such as the amygdala, which are implicated in the processing of affective information.5,7 The amygdala has been proposed to interact with the OFC in support of a variety of cognitive and affective processes,28 and our group has previously found robust signals there related to appetitive and aversive events.4,22

Responses to conditioned and unconditioned stimuli

Many studies in rodents and primates have identified orbitofrontal neurons that respond to cues that predict reward.29–36 These responses are modulated by such factors as reward magnitude or probability, subjective reward preference, and delay before reward delivery.31,33,35,37–39 In principle, they could also be modulated by motor responses elicited by rewards or their anticipation, or by the sensory characteristics of rewards themselves.35,40–42 Prior to our recent work, however, only a few studies examined the potential encoding of aversive cue-outcome associations in OFC. In a rare exception, Hosokawa et al. found that some OFC neurons responded differentially to cues related to differently preferred outcomes, including an aversive electrical stimulus; however, because the authors used an operant task, subjects learned to avoid the aversive outcome and rarely actually received a negative reinforcer following cue presentation.43 This was also true for earlier studies using aversive tastes as negative outcomes.29–30 The avoidance of an aversive event may be experienced as rewarding, and can in fact activate reward pathways in the brain.44–45 (Note that blinking, in our task, is a defensive behavior that does not constitute avoidance: it did not change the probability of experiencing the air-puff, although it may have reduced the subjective intensity.) By using classical conditioning, we ensured that reinforcements followed the CS presentations with a consistent probability.

Using the appetitive/aversive conditioning task described above, our group has found many neurons in OFC with responses that differentiated among cues that predicted different outcomes, including outcomes with different valences. These neurons do not have responses related to the motor responses (licking and blinking) occurring in anticipation of or in response to the appetitive and aversive outcomes;4,21 therefore, they do not simply represent the association between a CS and the motor response elicited by the predicted US. We used a two-way ANOVA with main factors of image identity and image value to identify 86 neurons (out of 217 cells recorded in two monkeys) that had a significant effect of image value on firing rate (p < 0.01). Two examples of such “value-coding” neurons are shown in Figure 1d,e, in which neuronal activity is aligned on CS presentation. The cell shown in Fig. 1d responds more strongly to an image that predicts strong reward than to the same image after reversal, when it predicts aversive air-puff; therefore, we categorize it as a positive value-coding cell. Conversely, the cell shown in Fig. 1e has a stronger response to a given image when it is associated with air-puff; therefore, we categorize it as a negative value-coding cell. Notably, in both cases, the response to the image that predicted weak reward was intermediate compared with the response to the strong positive or negative image. Thus, these neurons do not appear to simply represent the sensory characteristics of the outcomes associated with CSs. For example, consider the cell shown in Fig. 1e: its “preferred” US is the aversive air-puff, yet it also responds to a CS predicting a small liquid reward – a US with very different sensory characteristics.
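The selection criterion described above can be illustrated with a minimal sketch of the sum-of-squares decomposition behind a two-way ANOVA. This is not the authors' analysis code: it assumes a balanced 2 × 2 design (two reversing images, each seen with positive and negative value), the firing rates are made up, and the names `two_way_anova_f` and `classify_value_coding` are hypothetical. Converting the F statistic into a p-value (for the p < 0.01 criterion) requires the F distribution from a statistics library and is omitted here.

```python
from statistics import mean


def two_way_anova_f(cells):
    """F statistics for the main effects of image identity and image value.

    cells maps (identity, value) -> list of firing rates (Hz).
    Assumption: balanced design, i.e. every cell has the same number of trials.
    """
    identities = sorted({i for i, _ in cells})
    values = sorted({v for _, v in cells})
    n = len(next(iter(cells.values())))
    if any(len(rates) != n for rates in cells.values()):
        raise ValueError("this sketch only handles balanced designs")

    a, b = len(identities), len(values)
    grand = mean(x for rates in cells.values() for x in rates)

    # Main-effect sums of squares, computed from the marginal means.
    ss_identity = sum(
        b * n * (mean(x for v in values for x in cells[(i, v)]) - grand) ** 2
        for i in identities
    )
    ss_value = sum(
        a * n * (mean(x for i in identities for x in cells[(i, v)]) - grand) ** 2
        for v in values
    )
    # Error term: variability of single trials around their own cell mean.
    ss_error = sum((x - mean(rates)) ** 2 for rates in cells.values() for x in rates)
    df_error = a * b * (n - 1)
    ms_error = ss_error / df_error

    return {
        "F_identity": (ss_identity / (a - 1)) / ms_error,
        "F_value": (ss_value / (b - 1)) / ms_error,
        "df_error": df_error,
    }


def classify_value_coding(cells):
    """Label a cell by the sign of its value effect (hypothetical helper)."""
    pos = mean(x for (_, v), rates in cells.items() if v == "pos" for x in rates)
    neg = mean(x for (_, v), rates in cells.items() if v == "neg" for x in rates)
    return "positive" if pos > neg else "negative"


# Made-up firing rates for one neuron: images A and B, before and after reversal.
example_cells = {
    ("A", "pos"): [20, 22, 21, 19],
    ("A", "neg"): [10, 11, 9, 10],
    ("B", "pos"): [21, 20, 22, 19],
    ("B", "neg"): [9, 10, 11, 10],
}
example_stats = two_way_anova_f(example_cells)
example_label = classify_value_coding(example_cells)
```

Because each image appears with both outcomes across the reversal, a large `F_value` alongside a small `F_identity` points to a cell that tracks the reinforcement association rather than the visual features of the image.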

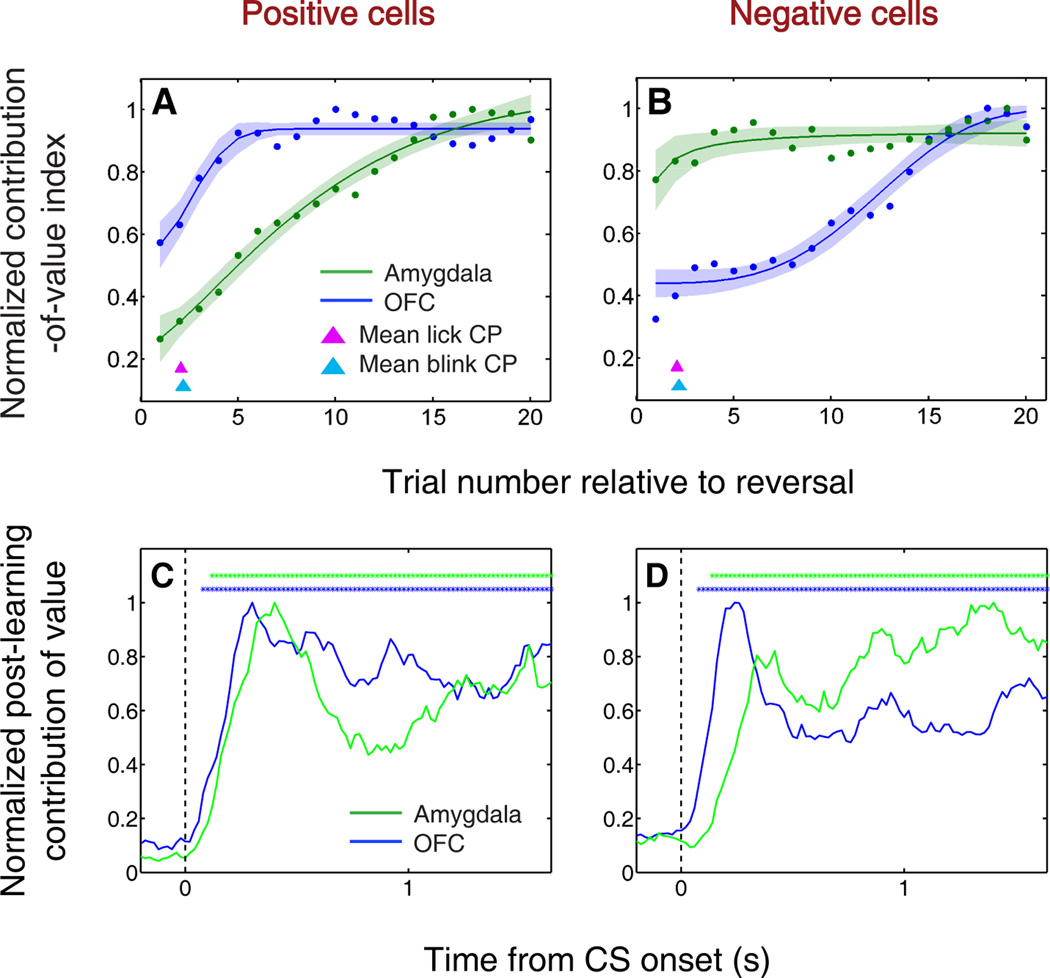

The examples in Fig. 1d,e suggest that, overall, the responses of OFC neurons to CSs incorporate information about both positive and negative outcomes. To find out whether this was generally the case, for each value-coding neuron recorded, we calculated a positive discrimination index, which compared responses to the strong positive and weak positive cues; and a negative discrimination index, which compared responses to the weak positive and negative cues (Fig. 2a,b). This revealed that the majority of these neurons indeed represent CSs in a “graduated” fashion, responding to the weak positive cue at an intermediate level relative to the strong positive and negative cue. This finding is similar to what we have previously shown for value-coding cells in the amygdala.22 Moreover, many individual positive value-coding cells had a significant negative discrimination index (Fig. 2a), and many individual negative value-coding cells had a significant positive discrimination index (Fig. 2b). Thus, even cells that fire most strongly to cues that predict air-puff, for example, still respond differentially to cues associated with different amounts of reward. Notably, nearly all value-coding cells responded during the CS and/or trace intervals on both positive and negative trials, suggesting that few cells process information about only one type of reinforcement association.
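A discrimination index with a permutation test, as mentioned in the caption of Figure 2, might be sketched as follows. The exact index definition is not given in this article, so the contrast ratio used here is an assumption, as are the function names and the made-up firing rates; the sketch only illustrates the general logic of comparing responses to two cue types.

```python
import random
from statistics import mean


def discrimination_index(rates_a, rates_b):
    """Contrast between mean responses to two cue types (assumed definition).

    Returns a value in [-1, 1] for non-negative firing rates.
    """
    mean_a, mean_b = mean(rates_a), mean(rates_b)
    if mean_a + mean_b == 0:
        raise ValueError("index undefined when both mean rates are zero")
    return (mean_a - mean_b) / (mean_a + mean_b)


def permutation_p(rates_a, rates_b, n_perm=2000, seed=0):
    """Two-sided permutation p-value for the difference in mean firing rate."""
    rng = random.Random(seed)
    observed = abs(mean(rates_a) - mean(rates_b))
    pooled = list(rates_a) + list(rates_b)
    n_a = len(rates_a)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # reassign trial labels at random
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed - 1e-12:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return (hits + 1) / (n_perm + 1)


# Made-up responses (Hz) of one cell to the strong positive and weak positive cues.
strong_positive = [30, 28, 33, 31, 29, 32, 30, 31]
weak_positive = [20, 22, 19, 21, 20, 23, 21, 20]

positive_index = discrimination_index(strong_positive, weak_positive)
positive_p = permutation_p(strong_positive, weak_positive)
```

The negative discrimination index would be computed the same way from the weak positive and negative trials, so each value-coding cell yields one index per comparison.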

Figure 2.

OFC neuronal responses integrate information about appetitive and aversive stimuli. (A) Weak-positive/negative discrimination indices for all positive value-coding neurons. (B) Positive/weak-positive discrimination indices for all negative value-coding neurons. In (A) and (B), blue indicates significant discrimination index (p < 0.05, permutation test) and red indicates non-significant discrimination index. (C,D) Population average PSTH for all positive (C) and negative (D) value-coding neurons. Blue line, positive trials; cyan line, weak positive trials; red line, negative trials. Vertical dashed lines indicate image onset and reinforcement onset. Blue shading, CS-trace interval, activity different among all three trial types (p ≤ 0.001 for all comparisons, Wilcoxon); red shading, reinforcement interval, activity different among all three trial types (p ≤ 0.01 for all comparisons, Wilcoxon). (E,F) PSTHs of neural activity aligned on reward or air-puff onset. Activity is normalized by subtracting the average activity from the 500 ms preceding reinforcement. Vertical dashed line, reinforcement onset. Blue, response to large reward; cyan, response to small reward; red, response to air-puff. (E) Positive value-coding cell with excitatory responses to reward and air-puff. (F) Negative value-coding cell with excitatory responses to large reward and air-puff.

This analysis provided strong evidence that individual OFC cells, by and large, integrate information about positively and negatively valenced outcomes. The same was true of the overall population response. Figure 2c,d displays the average normalized activity of positive value-coding cells (Fig. 2c) and negative value-coding cells (Fig. 2d) in OFC, aligned on CS presentation. Both populations show clear discrimination among all three levels of CS value throughout the trace interval. Quantitatively, for both positive and negative value-coding cells, activity is significantly different among the three trial types over the entire time interval between CS presentation and reinforcement (blue highlighted areas; Wilcoxon, p < 0.001 for each comparison). Thus, value-coding cells in OFC comprise two distinct populations – those that fire more strongly in response to positively valenced stimuli, and those that fire more strongly in response to negatively valenced stimuli – but it is clear that both of these populations also integrate information about the non-preferred valence into their representations of CS value.

The population activity plots (Fig. 2c,d) further show that the differential firing rates for the three trial types continue into the reinforcement interval (red highlighted areas; Wilcoxon, p < 0.01 for each comparison). As we discuss in the next section, a possible implication is that OFC neurons, as a population, encode a representation of value which is not limited to any one stimulus, such as a CS or US. However, superimposed on this signal, we often observed responses to the USs – rewards and air-puffs – themselves. Our group has previously shown that neurons in the amygdala, many of which encode CS value in much the same way as individual OFC cells,4,22 often have responses to reinforcements of both valences, especially when those reinforcements are particularly salient because they occur unexpectedly.22,46 When characterizing OFC’s responses to primary reinforcement, however, many previous studies have highlighted neural signals related to reward,29,31–32,35 but few have examined responses to aversive events. We found that many OFC neurons – whether positive, negative, or non-value-coding – respond robustly to both rewards and aversive air-puff.21 In fact, the majority of positive value-coding cells (33/41) showed responses to air-puff, and more than half of negative value-coding cells (23/45) responded to large reward delivery. This phenomenon is illustrated by the examples shown in Figure 2e,f, in which neural activity is aligned on reward or air-puff delivery. A typical positive value-coding cell (Fig. 2e) exhibits a fast, robust response to air-puff; meanwhile, a typical negative value-coding cell (Fig. 2f) responds to reward as well as to aversive outcomes.

Among cells that had a significant response to large reward, more positive value-coding cells than negative were excited by reward; and more negative value-coding cells than positive were inhibited by reward (chi-square test, p < 0.001). However, the converse was not true: among cells that responded to air-puff, both positive and negative value-coding cells showed more excitatory than inhibitory responses. As we discuss further below, we have proposed that positive and negative value-coding cells could be considered part of two separate, yet interactive sub-circuits – an appetitive and aversive network, respectively – that encode value with opposite signs.47 Consistent with this idea, we conclude that the appetitive and aversive networks in OFC each receive information about both rewarding and aversive USs; however, in some cases (especially for reward) they encode that information differently. Notably, although many non-value-coding cells responded to rewards and/or air-puffs, more of them failed to respond to either reinforcement when compared with positive and negative value-coding cells (chi-square test, p < 0.05). Overall, although there is a wide range of response profiles to reinforcement, many individual OFC cells seem to receive information about outcomes with a different affective valence – and even different modality – than the “preferred” CS and US of the appetitive or aversive network to which they belong.

Encoding of state value in OFC

State value, a key variable in several theoretical accounts of reinforcement learning,48–49 refers to the overall value of an agent’s “situation,” which comprises both external stimuli and internal motivational factors. Classic “actor-critic” and “Q-learning” models of reinforcement learning variously define “state” in terms of the probability, and sometimes the temporal delay, of future reward, or with reference to an ongoing rate of reward.50–51 In our view, however, estimating the value of one’s state should take into account the current stimuli in the environment; anticipated future reinforcement; and internal conditions such as hunger or fatigue.22,52 Although we have not yet tested the sensitivity of OFC neurons to manipulations of internal motivational factors, the trace conditioning task does allow us to examine OFC neural responses to a range of stimuli in the same task.
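For reference, the textbook temporal-difference formulation defines the value of a state $s$ under a policy $\pi$ as the expected discounted sum of future reinforcement, and updates that estimate with a prediction error $\delta_t$. The symbols below (discount factor $\gamma$, reinforcement $r_t$, learning rate $\alpha$) follow the standard convention rather than anything defined in this article, and treating an air-puff as a negative $r_t$ is an assumption made for illustration.

$$
V^{\pi}(s) = \mathbb{E}_{\pi}\left[\sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k+1} \,\middle|\, s_t = s\right],
\qquad
\delta_t = r_{t+1} + \gamma V(s_{t+1}) - V(s_t),
\qquad
V(s_t) \leftarrow V(s_t) + \alpha\, \delta_t
$$

In this formulation $V$ depends only on expected future reinforcement; the broader notion of state value discussed here would also incorporate current stimuli and internal conditions such as hunger or fatigue.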

We reasoned that if a neuron encodes state value, it should respond to various stimuli in a consistent manner according to its valence preference. In the trace conditioning task, the monkey experiences three stimuli during a completed trial: a fixation point (FP), the CS, and the US. Each stimulus provides new, motivationally relevant information as it appears, and can therefore be considered to initiate a new state. We have already noted that OFC neurons, as a population, respond differentially to the different trial types – large reward, small reward, or air-puff trials – in a manner that continues from CS presentation, through the trace period (when no stimulus is present, but the monkey is expecting an outcome), and into US presentation. This extended representation of motivational significance is clearly visible in the population activity of both the appetitive and aversive neuronal subgroups (Fig 2c,d), and is similar to the activity of the corresponding subpopulations in the amygdala.22

Thus, OFC activity encodes motivational significance consistently across CS and US presentation – but what about stimuli that are not explicitly involved in the conditioning protocol? At the start of the trial, the monkey is required to look at an FP for one second before the appearance of the CS. Because the FP is eventually followed by reward (either large or small) on two-thirds of reinforced trials, it is likely to be experienced as a mildly positive stimulus; this is also consistent with the fact that monkeys choose to look at the FP to begin a trial. Our group has previously examined the responses of individual amygdala neurons to the FP, and found that many of these cells indeed respond to the FP as if it were a weakly positive conditioned stimulus.22 We observe much the same phenomenon in individual neurons of the OFC. Positive value-coding neurons – neurons that fire more strongly to images associated with reward – often increase their firing in response to the FP; an example is shown in Fig. 3a, in which neural activity is aligned on FP presentation. Likewise, many negative value-coding neurons – neurons that fire more strongly to images associated with air-puff – decrease their firing when the FP appears (e.g. Fig. 3b).

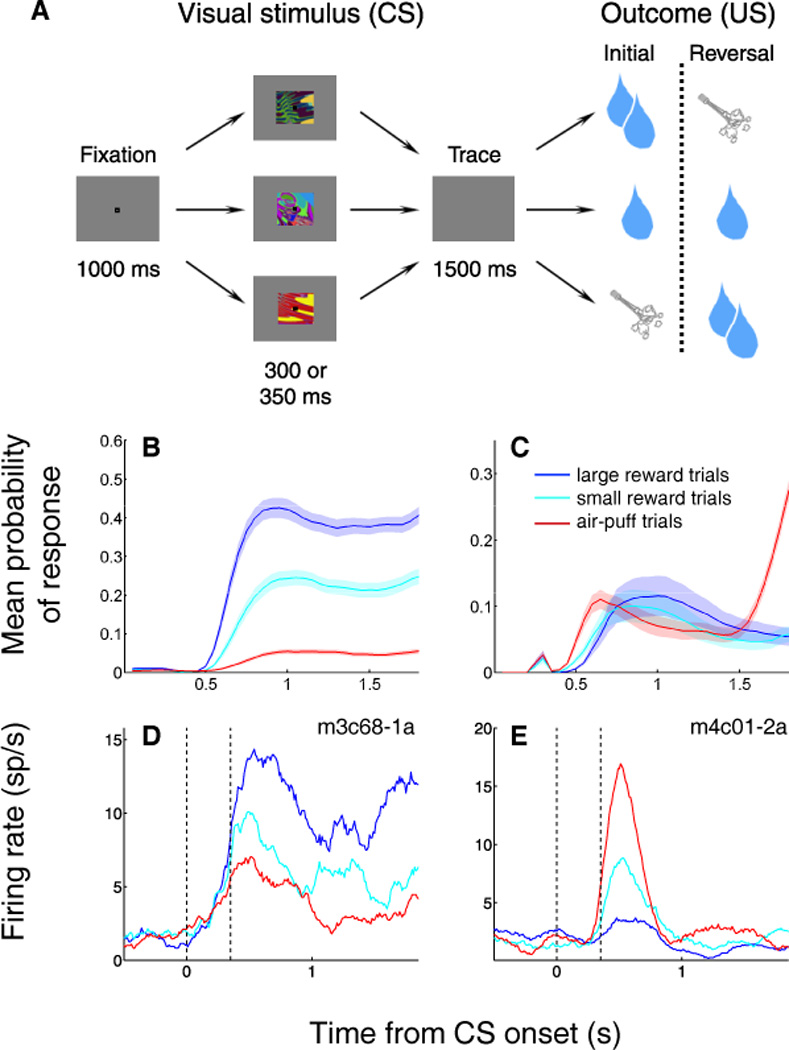

Figure 3.

OFC and amygdala neurons encode the value of states initiated by fixation point presentation. (A,B) PSTHs aligned on fixation point (FP) presentation for two individual OFC neurons. Blue, FP response on positive trials; cyan, FP response on weak positive trials; red, FP response on negative trials. (A) Positive value-coding cell exhibiting an increase in firing rate during FP presentation. (B) Negative value-coding cell exhibiting a decrease in firing rate during FP presentation. (C,D) Percentage of value-coding cells in OFC (C) and amygdala (D) with increases (blue), decreases (red), or no change (black) in firing rate during FP presentation. The number of cells in each category is indicated. In both brain areas, a plurality of positive value-coding cells increase firing in response to the FP, while a plurality of negative value-coding cells decrease firing in response to the FP.

Population-wide, the proportions of cells that increased or decreased firing in response to the FP (Fig. 3c) were significantly different between positive and negative value-coding populations in OFC (chi-square test, p < 0.01). Confirming our previous findings in these new subjects, we also found a similar relationship for positive and negative value-coding cells in the amygdala (Fig. 3d). These responses constitute evidence that many neurons in both OFC and amygdala – whether they are part of the appetitive or aversive network – encode the value of multiple events during the trial, suggesting that they may track the value of the subject’s overall “state.” As we discuss below, a key characteristic of state value – as defined here and in our previous work22 – is that it comprises an ongoing, inclusive representation of motivational value, even when such a representation is not immediately utilized for decision-making.

Learning dynamics of appetitive and aversive networks

As we have seen, there are many similarities between neurons of the appetitive and aversive networks that we have found in OFC21 and amygdala4,22: both types of neuron – positive value-coding and negative value-coding – respond to rewarding and aversive CSs and USs. Both are potentially well-suited for tracking state value. Other than the direction of encoding, how do the two populations differ? Recently, we discovered that appetitive and aversive networks in these two brain areas exhibit distinct time courses of learning-related neural changes.47

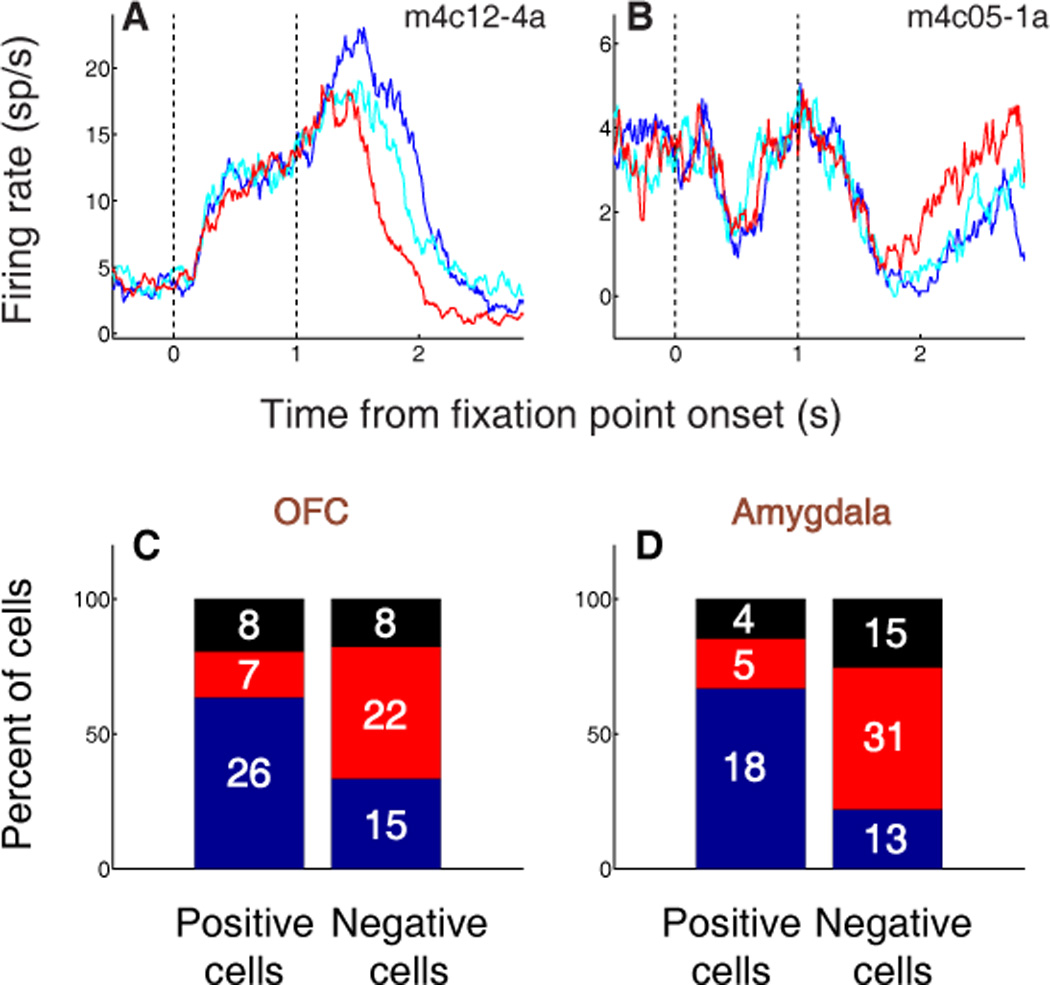

In order to compare the timing of learning-related changes, we recorded simultaneously in OFC and amygdala during the mixed appetitive and aversive reversal learning task. We used a sliding ANOVA to examine the specific contribution of CS value to neural signaling over the course of the trials immediately following reversal (Fig. 4a,b). For each neuronal subpopulation, the contribution of value increased as learning took place. Surprisingly, some groups of neurons “learned” the new reinforcement contingencies more rapidly than others. Among positive value-coding neurons, the contribution of value increased more rapidly and reached a plateau earlier in OFC than in amygdala (Fig. 4a). Negative value-coding neurons in amygdala, in contrast, changed their activity much faster than negative value-coding neurons in OFC (Fig. 4b). These findings suggest that distinct sequences of neural processing lead to the updating of representations within the appetitive and aversive networks.

Figure 4.

Positive and negative value-coding neurons exhibit different time courses of learning-related activity, but encode value earlier in OFC than amygdala after learning. (A,B) Normalized average contribution of image value to neural activity plotted as a function of trial number after reversal for positive value-coding neurons (A) and negative value-coding neurons (B). Contribution-of-value index is derived from a two-way ANOVA with factors of CS value and CS identity, applied over a six-trial window and stepped by one trial at a time. Red and cyan arrowheads indicate mean licking and blinking change points, respectively; the width of each arrowhead’s base indicates SEM. Neural activity is taken from 90–590 ms after CS onset. Curves are best-fit sigmoids (± 95% prediction intervals). (C,D) Normalized average contribution of image value as a function of time for positive value-coding cells (C) and negative value-coding cells (D). Contribution of value is again derived from a two-way ANOVA, now applied to 200 ms bins and stepped by 20 ms across the trial. Asterisks, time points at which the average contribution of value is significant (Fisher p < 0.0001) for OFC (blue) or amygdala (green).

After learning was completed, the timing of value-related signals in the two brain areas told a different story. When we examined the contribution of CS value to neural responses across time during the trial, focusing on post-learning trials, we found that OFC consistently signals expected reinforcement earlier than amygdala (Fig. 4c,d). This effect was significant among both positive (Fig. 4c) and negative (Fig. 4d) value-coding cells. Moreover, relative to amygdala, there were more OFC cells that encoded CS value with the earliest latencies (< 150 ms; χ² test, p < 0.05). Thus, in contrast to the robust differences between the appetitive and aversive networks during learning, after learning OFC neurons from both networks encoded value earlier during the trial than their counterparts in the amygdala. This latter observation may reflect the primary role of prefrontal areas in emotional regulation with respect to both appetitive and aversive events.

Differing conceptions of value in OFC

Whether it is part of an appetitive or aversive network, a neuron that encodes state value would be expected to represent the value of CSs in a way that is monotonically related to the motivational significance of the USs with which they are associated.22 As discussed above, this is precisely the case for many individual neurons in OFC, as well as for positive and negative value-coding neurons as populations.21 Our findings support the idea that, as suggested by others,34 the activity of OFC neurons often does not simply represent general arousal, motivation, or attention. Rather, as we have argued, these neurons may be best described as representing the association between a CS and the motivational significance of a US across a spectrum of valence (positive to negative) and without regard to sensory characteristics – even USs of different sensory modality, like liquid reward (taste) and air-puff (somatosensory and auditory).21–22

Recently, other authors have emphasized the idea that OFC might represent outcome associations in a sensory-specific fashion: for example, a neuron might encode the association of a CS with a banana-flavored pellet, but not with a grape-flavored pellet, even if they have the same subjective value. Indeed, investigators have reported that lesions of rodent OFC lead to deficits in sensory-specific reinforcement learning, but not in conditioning mediated by general affective representations.41,53 Of course, some of the neurons that we have described in OFC could underlie this type of association: those that respond to cues associated with one type of US, but not other types.21 Consistent with this idea, Padoa-Schioppa and colleagues reported that the activity of some OFC neurons is best explained by “offer value” – the value of only one out of two juice-predicting cues available in the “offer” phase of a choice paradigm.35

However, sensory-specific responses seem not to comprise the majority of value-coding neurons in the areas from which we have recorded. This is also true in the work of Padoa-Schioppa et al., who showed that many OFC neurons have similar responses to cues that predict different flavors of juice, as long as the rewards are equivalent in subjective valuation.35,37 These “chosen value” responses were the single most frequent best-fit variable for OFC activity during the offer period.35 This observation reflects our own finding that most individual value-coding neurons, as well as the appetitive and aversive networks on a population level, have responses that integrate a range of CS and US characteristics including various sensory features – even different sensory modalities – and valences. Overall, these findings support a role for primate OFC in linking cues not just with specific outcomes, but with the general affective properties of those outcomes, whether rewarding or aversive. It remains an open question, however, whether OFC derives this affective information from its own representation of the specific task contingencies – as in “model-based” accounts of learning54 – or receives this information from elsewhere, as suggested by the preponderance of neurons that encode general affective value.

It is possible that disparate findings between studies in rodents and primates might be attributable to species differences. After all, it remains unclear to what degree the areas referred to as “OFC” in rodents are homologous to primate OFC. Investigators studying rodents point out that the pattern of connectivity between orbitofrontal areas and amygdala, striatum and sensory areas is qualitatively similar to that of primate OFC.55–57 However, prefrontal structures, including OFC, are far more developed in primates, including more evolutionarily advanced granular and dysgranular cortex that is entirely absent in rodents.7,27,58 Furthermore, OFC is intimately interconnected with other areas of prefrontal cortex that are well developed in primates, but not in rodents. The areas studied as OFC in rodents might be best compared with the most posterior aspect of OFC in primates, rather than with the granular/dysgranular areas 11, 13 and 14,58 perhaps accounting for some of the apparent differences between the representations found there.

Setting aside species differences, a number of authors have recently considered neural signals in OFC specifically in light of an economic view of value. The neuroeconomic viewpoint posits that stimuli can be valued using a common currency, and that this conversion to a universal form of value – sometimes referred to as “cardinal utility” – might take place in OFC.9,41,59–61 The findings of our recent studies are broadly consistent with this idea, given the integration of information about appetitive and aversive stimuli, as well as about outcomes with different sensory properties, by individual neurons within OFC.21 There is evidence of this convergence in humans as well: for example, recent functional imaging findings show that the “goal values” of appetitive and aversive choice options (in this case, liked or disliked foods) are represented in the same region of human medial OFC.62

From a neuroeconomic viewpoint, “value” is described as a currency used to compare goods (or actions) in order to make decisions. The signals that we report are fundamentally consistent with the idea that OFC represents economic value; however, we suggest that OFC represents value in situations that extend beyond explicit choice situations – specifically, by participating in a representation of state value.22 As we have discussed, many individual OFC neurons respond to a fixation point in a manner consistent with the idea that it has weakly positive motivational significance. There is only one fixation point presented in every trial, and it plays no obvious role in any explicit choice, other than the “choice” to begin a trial. Thus, the role of OFC is not limited to the representation of cues or outcomes that are currently the object of a decision, or even those that might be the object of a decision in the foreseeable future. Rather, as we propose above, OFC might contribute to economic decisions in a more general role, providing a continual representation of value that could be called upon – either by a downstream brain area, or within OFC itself – when it is needed to support decision-making.

At other times a state value representation might, for example, contribute to affect-related physiological responses – e.g., increased heart rate during emotional arousal – or even to mood. As we have previously suggested, it is also possible that different subpopulations of neurons in OFC encode value in different ways, supporting different affective functions. Ultimately, though, the concepts of state value and economic value may simply be two ways to look at the same signal: if decision-making is defined broadly enough – e.g., as selection of an appropriate behavioral response to a given situation from an array of possibilities – then we are all making decisions all the time. And each of these “micro-decisions” may require the integration and appraisal of value signals represented in OFC – and beyond, across appetitive and aversive networks that span multiple brain areas.

As we have shown, the appetitive and aversive networks both receive information about conditioned stimuli and reinforcements across a spectrum of valence. The existence of two networks that encode value with opposite signs might function, in part, to circumvent the limitations of firing rate (i.e., the fact that there are no negative firing rates), allowing an equally wide-ranging representation of both positive and negative value. Importantly, the two networks are interspersed anatomically, and are likely to work together in support of a variety of affective processes. At the same time, they exhibit distinct patterns of dynamics during reversal learning, and therefore may play different – perhaps complementary – roles in supporting flexible, adaptive shifts in behavior. Further experiments are needed to explore how representations of stimulus value in the appetitive and aversive networks – in OFC, amygdala, and other areas – are affected by learning, as well as by context: both internal (e.g., hunger or satiety) and external (e.g., additional stimuli that temporarily change the meaning of an established CS). Ultimately, a flexible representation of value that incorporates all of these physiologically relevant factors may be most useful for facilitating adaptive decision-making in the natural environment.
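The point about non-negative firing rates can be made concrete with a toy calculation. The sketch below is purely illustrative and is not a model fitted to any recorded data: it assumes two hypothetical populations whose rates are a shared baseline plus or minus a gain times a signed value, clipped at zero. The function name `encode` and the parameters `baseline` and `gain` are assumptions introduced here for illustration only.

```python
def encode(value, baseline=5.0, gain=10.0):
    """Toy opponent code for a signed value (illustrative assumption only).

    Returns (appetitive_rate, aversive_rate) in arbitrary spikes/s.
    Each rate is clipped at zero because firing rates cannot be negative.
    """
    appetitive_rate = max(0.0, baseline + gain * value)  # rises with value
    aversive_rate = max(0.0, baseline - gain * value)    # falls with value
    return appetitive_rate, aversive_rate


if __name__ == "__main__":
    for v in (-2.0, -1.0, 0.25, 1.0, 2.0):
        app, avs = encode(v)
        print(f"value={v:+.2f}  appetitive={app:5.1f}  aversive={avs:5.1f}")
```

In this toy scheme, both populations carry information about mildly positive and mildly negative values, but the appetitive population reaches its floor for strongly negative values and the aversive population reaches its floor for strongly positive values. Taken together, the pair distinguishes values across the whole range, which a single rectified population could not do.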

References

- 1. Solomon RL, Corbit JD. An opponent-process theory of motivation. I. Temporal dynamics of affect. Psychological Review. 1974;81:119–145. doi: 10.1037/h0036128.

- 2. Grossberg S. Some normal and abnormal behavioral syndromes due to transmitter gating of opponent processes. Biological Psychiatry. 1984;19:1075–1118.

- 3. Daw ND, Kakade S, Dayan P. Opponent interactions between serotonin and dopamine. Neural Networks. 2002;15:603–616. doi: 10.1016/s0893-6080(02)00052-7.

- 4. Paton J, Belova M, Morrison S, Salzman C. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490.

- 5. Ghashghaei H, Barbas H. Pathways for emotion: interactions of prefrontal and anterior temporal pathways in the amygdala of the rhesus monkey. Neuroscience. 2002;115:1261–1279. doi: 10.1016/s0306-4522(02)00446-3.

- 6. Carmichael ST, Price JL. Limbic connections of the orbital and medial prefrontal cortex in macaque monkeys. J Comp Neurol. 1995;363:615–641. doi: 10.1002/cne.903630408.

- 7. Ghashghaei HT, Hilgetag CC, Barbas H. Sequence of information processing for emotions based on the anatomic dialogue between prefrontal cortex and amygdala. Neuroimage. 2007;34:905–923. doi: 10.1016/j.neuroimage.2006.09.046.

- 8. Mainen ZF, Kepecs A. Neural representation of behavioral outcomes in the orbitofrontal cortex. Curr Opin Neurobiol. 2009;19:84–91. doi: 10.1016/j.conb.2009.03.010.

- 9. Montague PR, Berns GS. Neural economics and the biological substrates of valuation. Neuron. 2002;36:265–284. doi: 10.1016/s0896-6273(02)00974-1.

- 10. Izquierdo A, Suda RK, Murray EA. Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. J Neurosci. 2004;24:7540–7548. doi: 10.1523/JNEUROSCI.1921-04.2004.

- 11. Murray EA, Wise SP. Interactions between orbital prefrontal cortex and amygdala: advanced cognition, learned responses and instinctive behaviors. Curr Opin Neurobiol. 2010;20:212–220. doi: 10.1016/j.conb.2010.02.001.

- 12. O'Doherty JP. Lights, camembert, action! The role of human orbitofrontal cortex in encoding stimuli, rewards, and choices. Ann N Y Acad Sci. 2007;1121:254–272. doi: 10.1196/annals.1401.036.

- 13. Carmichael ST, Price JL. Sensory and premotor connections of the orbital and medial prefrontal cortex of macaque monkeys. J Comp Neurol. 1995;363:642–664. doi: 10.1002/cne.903630409.

- 14. Cavada C, Company T, Tejedor J, Cruz-Rizzolo RJ, Reinoso-Suarez F. The anatomical connections of the macaque monkey orbitofrontal cortex. A review. Cerebral Cortex. 2000;10:220–242. doi: 10.1093/cercor/10.3.220.

- 15. Romanski LM, Bates JF, Goldman-Rakic PS. Auditory belt and parabelt projections to the prefrontal cortex in the rhesus monkey. J Comp Neurol. 1999;403:141–157. doi: 10.1002/(sici)1096-9861(19990111)403:2<141::aid-cne1>3.0.co;2-v.

- 16. Haber SN, Kunishio K, Mizobuchi M, Lynd-Balta E. The orbital and medial prefrontal circuit through the primate basal ganglia. J Neurosci. 1995;15:4851–4867. doi: 10.1523/JNEUROSCI.15-07-04851.1995.

- 17. Kondo H, Saleem KS, Price JL. Differential connections of the perirhinal and parahippocampal cortex with the orbital and medial prefrontal networks in macaque monkeys. J Comp Neurol. 2005;493:479–509. doi: 10.1002/cne.20796.

- 18. Barbas H, Blatt GJ. Topographically specific hippocampal projections target functionally distinct prefrontal areas in the rhesus monkey. Hippocampus. 1995;5:511–533. doi: 10.1002/hipo.450050604.

- 19. Ongur D, An X, Price JL. Prefrontal cortical projections to the hypothalamus in macaque monkeys. J Comp Neurol. 1998;401:480–505.

- 20. Rempel-Clower NL, Barbas H. Topographic organization of connections between the hypothalamus and prefrontal cortex in the rhesus monkey. J Comp Neurol. 1998;398:393–419. doi: 10.1002/(sici)1096-9861(19980831)398:3<393::aid-cne7>3.0.co;2-v.

- 21. Morrison SE, Salzman CD. The convergence of information about rewarding and aversive stimuli in single neurons. J Neurosci. 2009;29:11471–11483. doi: 10.1523/JNEUROSCI.1815-09.2009.

- 22. Belova MA, Paton JJ, Salzman CD. Moment-to-moment tracking of state value in the amygdala. J Neurosci. 2008;28:10023–10030. doi: 10.1523/JNEUROSCI.1400-08.2008.

- 23. Chudasama Y, Robbins TW. Dissociable contributions of the orbitofrontal and infralimbic cortex to pavlovian autoshaping and discrimination reversal learning: further evidence for the functional heterogeneity of the rodent frontal cortex. J Neurosci. 2003;23:8771–8780. doi: 10.1523/JNEUROSCI.23-25-08771.2003.

- 24. Fellows LK, Farah MJ. Ventromedial frontal cortex mediates affective shifting in humans: evidence from a reversal learning paradigm. Brain. 2003;126:1830–1837. doi: 10.1093/brain/awg180.

- 25. Schoenbaum G, Setlow B, Nugent SL, Saddoris MP, Gallagher M. Lesions of orbitofrontal cortex and basolateral amygdala complex disrupt acquisition of odor-guided discriminations and reversals. Learn Mem. 2003;10:129–140. doi: 10.1101/lm.55203.

- 26. Iversen SD, Mishkin M. Perseverative interference in monkeys following selective lesions of the inferior prefrontal convexity. Exp Brain Res. 1970;11:376–386. doi: 10.1007/BF00237911.

- 27. Ongur D, Price JL. The organization of networks within the orbital and medial prefrontal cortex of rats, monkeys and humans. Cereb Cortex. 2000;10:206–219. doi: 10.1093/cercor/10.3.206.

- 28. Salzman CD, Fusi S. Emotion, cognition, and mental state representation in amygdala and prefrontal cortex. Annu Rev Neurosci. 2010;33:173–202. doi: 10.1146/annurev.neuro.051508.135256.

- 29. Thorpe SJ, Rolls ET, Maddison S. The orbitofrontal cortex: neuronal activity in the behaving monkey. Exp Brain Res. 1983;49:93–115. doi: 10.1007/BF00235545.

- 30. Schoenbaum G, Chiba A, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nature Neuroscience. 1998;1:155–159. doi: 10.1038/407.

- 31. Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- 32. Tremblay L, Schultz W. Reward-related neuronal activity during go-nogo task performance in primate orbitofrontal cortex. J Neurophysiol. 2000;83:1864–1876. doi: 10.1152/jn.2000.83.4.1864.

- 33. Wallis JD, Miller EK. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. Eur J Neurosci. 2003;18:2069–2081. doi: 10.1046/j.1460-9568.2003.02922.x.

- 34. Roesch MR, Olson CR. Neuronal activity related to reward value and motivation in primate frontal cortex. Science. 2004;304:307–310. doi: 10.1126/science.1093223.

- 35. Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- 36. Kennerley SW, Dahmubed AF, Lara AH, Wallis JD. Neurons in the frontal lobe encode the value of multiple decision variables. J Cogn Neurosci. 2009;21:1162–1178. doi: 10.1162/jocn.2009.21100.

- 37. Padoa-Schioppa C, Assad JA. The representation of economic value in the orbitofrontal cortex is invariant for changes of menu. Nat Neurosci. 2008;11:95–102. doi: 10.1038/nn2020.

- 38. Roesch MR, Taylor AR, Schoenbaum G. Encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation. Neuron. 2006;51:509–520. doi: 10.1016/j.neuron.2006.06.027.

- 39. Roesch MR, Olson CR. Neuronal activity in primate orbitofrontal cortex reflects the value of time. J Neurophysiol. 2005;94:2457–2471. doi: 10.1152/jn.00373.2005.

- 40. Feierstein CE, Quirk MC, Uchida N, Sosulski DL, Mainen ZF. Representation of spatial goals in rat orbitofrontal cortex. Neuron. 2006;51:495–507. doi: 10.1016/j.neuron.2006.06.032.

- 41. Burke KA, Franz TM, Miller DN, Schoenbaum G. The role of the orbitofrontal cortex in the pursuit of happiness and more specific rewards. Nature. 2008;454:340–344. doi: 10.1038/nature06993.

- 42. Kennerley SW, Wallis JD. Encoding of reward and space during a working memory task in the orbitofrontal cortex and anterior cingulate sulcus. J Neurophysiol. 2009;102:3352–3364. doi: 10.1152/jn.00273.2009.

- 43. Hosokawa T, Kato K, Inoue M, Mikami A. Neurons in the macaque orbitofrontal cortex code relative preference of both rewarding and aversive outcomes. Neurosci Res. 2007;57:434–445. doi: 10.1016/j.neures.2006.12.003.

- 44. Kim H, Shimojo S, O'Doherty JP. Is avoiding an aversive outcome rewarding? Neural substrates of avoidance learning in the human brain. PLoS Biol. 2006;4:e233. doi: 10.1371/journal.pbio.0040233.

- 45. Seymour B, et al. Opponent appetitive-aversive neural processes underlie predictive learning of pain relief. Nature Neuroscience. 2005;8:1234–1240. doi: 10.1038/nn1527.

- 46. Belova MA, Paton JJ, Morrison SE, Salzman CD. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron. 2007;55:970–984. doi: 10.1016/j.neuron.2007.08.004.

- 47. Morrison SE, Saez A, Lau B, Salzman CD. Different time courses for learning-related changes in amygdala and orbitofrontal cortex. Neuron. doi: 10.1016/j.neuron.2011.07.016. (In press).

- 48. Sutton R, Barto A. Reinforcement Learning. MIT Press; 1998.

- 49. Dayan P, Abbott LF. Theoretical Neuroscience. MIT Press; 2001.

- 50. Dayan P, Balleine BW. Reward, motivation, and reinforcement learning. Neuron. 2002;36:285–298. doi: 10.1016/s0896-6273(02)00963-7.

- 51. Dayan P, Niv Y. Reinforcement learning: the good, the bad and the ugly. Curr Opin Neurobiol. 2008;18:185–196. doi: 10.1016/j.conb.2008.08.003.

- 52. Salzman CD, Fusi S. Emotion, cognition, and mental state representation in amygdala and prefrontal cortex. Annual review of neuroscience. 2010;33:173–202. doi: 10.1146/annurev.neuro.051508.135256.

- 53. Burke KA, Franz TM, Miller DN, Schoenbaum G. Conditioned reinforcement can be mediated by either outcome-specific or general affective representations. Frontiers in integrative neuroscience. 2007;1:2. doi: 10.3389/neuro.07.002.2007.

- 54. Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- 55. Preuss TM. Do rats have prefrontal cortex? The Rose-Woolsey-Akert program reconsidered. J Cogn Neurosci. 1995;7:1–24. doi: 10.1162/jocn.1995.7.1.1.

- 56. Schoenbaum G, Roesch M. Orbitofrontal cortex, associative learning, and expectancies. Neuron. 2005;47:633–636. doi: 10.1016/j.neuron.2005.07.018.

- 57. Schoenbaum G, Roesch MR, Stalnaker TA, Takahashi YK. A new perspective on the role of the orbitofrontal cortex in adaptive behaviour. Nat Rev Neurosci. 2009;10:885–892. doi: 10.1038/nrn2753.

- 58. Wise SP. Forward frontal fields: phylogeny and fundamental function. Trends Neurosci. 2008;31:599–608. doi: 10.1016/j.tins.2008.08.008.

- 59. Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9:545–556. doi: 10.1038/nrn2357.

- 60. Sugrue LP, Corrado GS, Newsome WT. Choosing the greater of two goods: neural currencies for valuation and decision making. Nat Rev Neurosci. 2005;6:363–375. doi: 10.1038/nrn1666.

- 61. Kable JW, Glimcher PW. The neurobiology of decision: consensus and controversy. Neuron. 2009;63:733–745. doi: 10.1016/j.neuron.2009.09.003.

- 62. Ishiwari K, Weber SM, Mingote S, Correa M, Salamone JD. Accumbens dopamine and the regulation of effort in food-seeking behavior: modulation of work output by different ratio or force requirements. Behavioural brain research. 2004;151:83–91. doi: 10.1016/j.bbr.2003.08.007.