Abstract

Background

Changes in resident outcomes may be driven by many factors, including changes in nursing home care processes. Understanding what processes, if any, lead to successful improvements in resident outcomes could create a stronger case for the continued use of these outcome measures in nursing home report cards.

Objective

To test the extent to which improvements in outcomes of care are explained by changes in processes of care in nursing homes, a setting where, to our knowledge, this link has not been previously studied.

Research Design/Measures

We describe facility-level changes in resident processes and outcomes before and after outcomes were publicly reported. We then assess the extent to which the changes in outcomes are associated with changes in nursing home processes of care, using the public release of information on nursing home outcomes as a source of variation in nursing home outcomes to identify the process-outcome relationship.

Subjects

All 16,623 U.S. nursing homes included in public reporting from 2000 to 2009, with data drawn from OSCAR and the nursing home Minimum Data Set.

Results

Of the 5 outcome measures examined, only improvements in the percentage of nursing home residents in moderate or severe pain were associated with changes in nursing home processes of care. Furthermore, these changes in the measured process of care explained only a small part of the overall improvement in pain prevalence.

Conclusions

A large portion of the improvements in nursing home outcomes was not associated with changes in measured processes of care, suggesting that the processes of care typically measured in nursing homes do little to improve nursing home performance on outcome measures. Developing quality measures that are related to improved patient outcomes would likely benefit quality improvement. Understanding the mechanism behind improvements in nursing home outcomes is key to successfully achieving broad quality improvements across nursing homes.

Introduction

Establishing a link between processes and outcomes of care has become an essential part of current efforts to improve health care quality. Many quality measures focus on process of care with the implicit assumption, first noted by Donabedian, that improvements in process will lead to improvements in patient outcomes (1). Indeed, the use of processes of care is most often well supported by scientifically rigorous efficacy studies suggesting that if care is appropriately delivered, patient outcomes will improve (2, 3). Despite this evidence base, research on the effectiveness of these processes in real-world settings has found that improved processes do not always translate to improved outcomes at the individual (4–6) or provider level (7, 8). As a result, questions remain about whether measuring and providing incentives to improve processes of care will lead to improved patient outcomes.

An alternative approach is to focus measurement and incentives directly on outcomes because this is the endpoint that patients care most about. This approach is being increasingly adopted across multiple health care settings, including hospitals, nursing homes, and home health agencies (9–11). However, disadvantages of tying incentives to outcome measures are often cited. Unlike process measures, which can directly inform quality improvement activities, outcomes may be difficult to change and may be perceived as being somewhat beyond the control of the provider. For outcome measures to be effective tools for quality improvement, providers must know what processes affect patient outcomes and how these inputs can be targeted to improve outcomes.

Linking processes and outcomes is thus a vital part of the quality-improvement agenda. However, establishing a link between process and outcome measures has proven challenging. Prior work, primarily in hospitals (7, 8) and outpatient care (4, 5), has found small effects when using cross-sectional data to test the correlation between process and outcome measures. Potential endogeneity also limits the conclusions: providers who perform well on process measures may also perform well on outcome measures for reasons other than a direct link between process and outcomes, because other, unobserved factors may determine both types of performance.

Our objective in this paper is to test the extent to which improvements in outcomes of care are explained by changes in processes of care in nursing homes, a setting where, to our knowledge, this link has not previously been studied on a large scale. We also take advantage of a public reporting initiative in nursing homes launched by the Centers for Medicare & Medicaid Services (CMS), Nursing Home Compare, as an exogenous source of outcome improvements in nursing homes to address potential endogeneity. Our goal is not to test an exhaustive list of organizational changes that could lead to improved patient outcomes. Rather, we focus on the set of process measures that are commonly used in nursing homes as the focus of state surveys of nursing home quality.

Background and Setting

Public reporting is a frequently adopted policy initiative designed to improve health care quality in two ways (12). First, public reporting may motivate improvements in the quality of individual providers, increasing provider-specific quality of care. Second, public reporting may increase the likelihood that patients select high-quality providers, thus increasing the number of patients receiving high-quality care. If either of these effects is realized, quality will improve.

In an effort to improve quality in nursing homes, CMS began publicly rating all U.S. nursing homes on the quality of care they deliver on its website, Nursing Home Compare, in 2002 (see footnote 1). When Nursing Home Compare was launched, it included 10 clinical quality measures covering the care of long-term and post-acute nursing home residents, as well as measures of nurse staffing and deficiencies. The 10 clinical quality measures captured information about short-term outcomes (such as the percentage of nursing home residents with moderate or severe pain or the percentage with pressure sores) and adverse outcomes (such as insertion of a bladder catheter or use of physical restraints). In both cases we refer to the quality measures as outcome measures because they are clinical endpoints that are thought to be affected by inputs such as processes of care.

A number of studies have documented improvements in these outcomes related to the launch of public reporting (13, 14). However, these quality improvements have been modest, and the mechanisms through which nursing homes successfully improved performance are unknown.

Changes in resident outcomes may be driven by many factors, including changes in nursing home processes of care. State surveys routinely track a number of nursing home processes, including the number of residents receiving special services designed to improve quality, such as bladder training programs or skin care to prevent pressure ulcers. Some work has found that nursing home processes are often related to resident outcomes. For example, use of skin care prevention programs was associated with improved pressure sore outcomes (2, 15). Understanding whether processes of care lead to improvements in resident outcomes could help elucidate the mechanism for the observed improvements and create a stronger case for the continued use of these outcome measures in nursing homes.

Methods

Empirical Approach

Our goal is to examine the extent to which changes in nursing home outcomes can be explained by changes in processes of care. Because the relationship between process and outcomes is potentially endogenous (e.g., unobserved factors such as nursing home finances or nursing home culture could explain nursing home performance on both process and outcome measures), we use nursing home fixed effects to control for time-invariant omitted variables, and we take advantage of the introduction of public reporting as an exogenous shock that motivated changes in the outcomes of care reported on Nursing Home Compare. We thus test the extent to which outcome improvements observed under Nursing Home Compare could be explained by changes in nursing home processes of care over the period 2000 to 2009 among all U.S. nursing homes that were included in public reporting for long-term care.
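
The fixed-effects logic can be illustrated with a minimal sketch, assuming a single regressor, no covariates, and hypothetical toy data (the function names are ours, not the authors'). Demeaning each variable within a facility removes any time-invariant facility trait, such as culture or finances, before the slope is estimated.

```python
from collections import defaultdict


def _facility_means(rows):
    """Mean x and y for each facility; rows are (facility_id, x, y)."""
    acc = defaultdict(lambda: [0.0, 0.0, 0])
    for fac, x, y in rows:
        acc[fac][0] += x
        acc[fac][1] += y
        acc[fac][2] += 1
    return {fac: (sx / n, sy / n) for fac, (sx, sy, n) in acc.items()}


def within_slope(rows):
    """Fixed-effects (within) slope for y = alpha * x + phi_facility + e."""
    means = _facility_means(rows)
    num = den = 0.0
    for fac, x, y in rows:
        mx, my = means[fac]
        num += (x - mx) * (y - my)  # deviations from the facility's own mean
        den += (x - mx) ** 2
    return num / den


def pooled_slope(rows):
    """Naive pooled OLS slope that ignores facility fixed effects."""
    n = len(rows)
    mx = sum(x for _, x, _ in rows) / n
    my = sum(y for _, _, y in rows) / n
    num = sum((x - mx) * (y - my) for _, x, y in rows)
    den = sum((x - mx) ** 2 for _, x, _ in rows)
    return num / den


# Hypothetical data: the true slope is 2, but facility "A" has both higher x
# and a higher fixed level (phi = 10), which biases the pooled estimate upward.
toy = [("A", 5.0, 20.0), ("A", 6.0, 22.0), ("B", 1.0, 2.0), ("B", 2.0, 4.0)]
print(within_slope(toy))            # 2.0
print(round(pooled_slope(toy), 2))  # 4.35
```

The pooled slope mixes the within-facility effect with the cross-facility difference in fixed levels; the within slope recovers only the former, which is the property the fixed-effects design relies on.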

Data

We combined two data sources for this study. First, we used the nursing home Minimum Data Set (MDS) to measure resident outcomes in the 17,237 nursing homes included in Nursing Home Compare for long-stay measures during our study period. The MDS contains detailed clinical data collected at regular intervals for every resident in a Medicare- and/or Medicaid-certified nursing home. These data are collected and used by nursing homes to assess needs and develop a unique plan of care for each resident. Because of the validity and reliability of these data (16, 17) and the detailed clinical information contained therein, they are also the data source for the facility-level clinical quality measures included in Nursing Home Compare.

Second, to measure nursing home processes, we merge the MDS data with the Online Survey, Certification and Reporting (OSCAR) dataset. OSCAR is a state survey of all Medicare- and/or Medicaid-certified nursing facilities that is conducted every 12 to 15 months and is used to determine regulatory compliance. OSCAR is the most comprehensive national source of facility-level information on the operations, resident characteristics, and regulatory compliance of nursing homes, and it contains numerous process measures that can be reasonably linked to Nursing Home Compare outcomes. Because quality measures from MDS are measured quarterly and OSCAR-based process measures are measured approximately annually, we merge each OSCAR-based observation with the approximately four MDS-based observations that occurred after the given OSCAR survey date (but before the next OSCAR survey date). This ensures that processes of care (the inputs) occur before the outcomes (see footnote 2). Six hundred fourteen of the 17,237 nursing homes identified in MDS were dropped because they were not in the OSCAR data (3.6% of the original sample), leaving 16,623 nursing homes in the final sample.
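
The timing rule for the OSCAR-MDS merge can be sketched with the Python standard library. The record layouts, field names, and the choice to link a quarter dated exactly on a survey day to the earlier survey are illustrative assumptions, not the study's code.

```python
from bisect import bisect_left
from datetime import date


def attach_oscar_to_mds(oscar_surveys, mds_quarters):
    """Link each MDS quarter to the most recent OSCAR survey that precedes it.

    oscar_surveys: {facility_id: [(survey_date, process_record), ...]}
    mds_quarters:  [(facility_id, quarter_date, outcome_record), ...]
    Returns (facility_id, quarter_date, process_record, outcome_record) tuples.
    """
    index = {}
    for fac, surveys in oscar_surveys.items():
        ordered = sorted(surveys, key=lambda s: s[0])
        index[fac] = ([d for d, _ in ordered], [rec for _, rec in ordered])

    merged = []
    for fac, q_date, outcome in mds_quarters:
        if fac not in index:
            continue  # facility absent from OSCAR: dropped from the sample
        dates, records = index[fac]
        # Position of the last survey dated strictly before this quarter, so
        # the process measure (input) always precedes the outcome.
        k = bisect_left(dates, q_date) - 1
        if k < 0:
            continue  # quarter falls before the facility's first survey
        merged.append((fac, q_date, records[k], outcome))
    return merged


oscar = {"F1": [(date(2002, 5, 1), {"pain_program_pct": 25.0}),
                (date(2001, 3, 1), {"pain_program_pct": 10.0})]}
mds = [("F1", date(2001, 6, 30), {"pain_pct": 8.0}),
       ("F1", date(2002, 3, 31), {"pain_pct": 7.5}),
       ("F1", date(2002, 6, 30), {"pain_pct": 6.0}),
       ("F2", date(2002, 6, 30), {"pain_pct": 5.0})]  # F2 has no OSCAR record
for row in attach_oscar_to_mds(oscar, mds):
    print(row[1], row[2]["pain_program_pct"])
```

Binary search keeps the lookup cheap even with many surveys per facility; the two quarters before the May 2002 survey inherit the 2001 process value, and facility F2 is dropped just as facilities missing from OSCAR were.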

Quality measures

Our dependent variables were facility-level chronic care outcome measures published on Nursing Home Compare (see Table 1 for a list of these outcomes). To obtain quality measures both before and after each measure was publicly reported, we calculate the quality measures for each facility from MDS following CMS’s method for Nursing Home Compare (18): Each measure is calculated quarterly based on the three months preceding the score’s calculation; includes one assessment per nursing home resident per quarter; and includes all facilities with at least 30 cases during the target time period. While we initially assessed all chronic care outcomes included in Nursing Home Compare, our final analysis is limited to those outcome variables that significantly improved with the release of Nursing Home Compare (as we are interested in understanding drivers for this improvement) and that had associated processes of care included in OSCAR (as described below).
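
The calculation rules listed above can be illustrated with a simplified sketch. It assumes a hypothetical flat record per MDS assessment already labeled with its three-month quarter, keeps the latest assessment when a resident has several in a quarter (our assumption; the CMS specification defines which assessment counts), and ignores the measure-specific denominator exclusions in the CMS documentation.

```python
from collections import defaultdict


def facility_quarter_rates(assessments, min_cases=30):
    """Percent of residents with the outcome in each facility-quarter.

    assessments: iterable of
        (facility_id, quarter, resident_id, assessment_date, has_outcome)
    """
    # Rule: one assessment per resident per quarter (here, the latest one).
    latest = {}
    for fac, qtr, res, a_date, flag in assessments:
        key = (fac, qtr, res)
        if key not in latest or a_date > latest[key][0]:
            latest[key] = (a_date, bool(flag))

    counts = defaultdict(lambda: [0, 0])  # [residents with outcome, residents]
    for (fac, qtr, _res), (_date, flag) in latest.items():
        counts[(fac, qtr)][0] += int(flag)
        counts[(fac, qtr)][1] += 1

    # Rule: report only facility-quarters with at least `min_cases` residents.
    return {key: 100.0 * num / den
            for key, (num, den) in counts.items() if den >= min_cases}
```

Facility-quarters below the case threshold are simply absent from the result, so they drop out of the analysis rather than entering as noisy small-sample rates.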

Table 1.

Outcome measures included in Nursing Home Compare (from the Minimum Data Set) and the process of care associated with these outcomes (from OSCAR data). Release dates of the outcome measures on Nursing Home Compare and means (and standard deviations) of outcome and process measures are included.

| | Outcome measure from Nursing Home Compare (and public release date) | Mean (SD) of outcome measure | Associated process of care | Mean (SD) of process measure |
|---|---|---|---|---|
| Pain | % long-stay residents with moderate or severe pain (released November 2002; April 2002 in pilot states) | 5.8 (5.7) | % of residents in pain management program | 22.1 (21.5) |
| Incontinence | % long-stay residents who have/had a catheter inserted and left in their bladder (released January 2004) | 6.0 (6.2) | % of residents on written bladder training program | 6.3 (13.1) |
| Pressure sores | % high-risk long-stay residents with pressure sores (released November 2002; April 2002 in pilot states) | 12.9 (8.9) | % of residents receiving preventive skin care | 70.7 (30.0) |
| Pressure sores | % low-risk long-stay residents with pressure sores (released November 2002; April 2002 in pilot states) | 2.6 (4.8) | % of residents receiving preventive skin care | 70.7 (30.0) |
| Weight loss | % long-stay residents with significant weight loss (released November 2004) | 9.0 (6.2) | % of residents receiving tube feeds | 6.3 (7.5) |
| | | | % of residents receiving mechanically altered diets | 35.1 (13.7) |
| | | | % of residents with assist devices while eating | 8.1 (9.2) |

Our main independent variables were facility-level measures of processes of care that could directly influence the outcomes of interest. These are calculated from OSCAR as the percent of residents receiving each process of care. We limited our choice of outcomes and process measures to those that could arguably be tied quite closely to each other, subject to data availability and excluding outcomes that had no associated processes in OSCAR and processes that could not plausibly directly affect measured patient outcomes. The associated outcome and process measures are summarized in Table 1.

Covariates

We controlled for facility-level characteristics. Time-varying facility characteristics included each facility’s average resident age, Cognitive Performance Scale (19) and activities of daily living summary scale (20) in each quarter (calculated from MDS). We also controlled for the percentage of care in each facility-quarter devoted to each of the following special services: radiation therapy, chemotherapy, dialysis, intravenous therapy, respiratory treatments, tracheostomy care, ostomy care, suctioning, and injections (from OSCAR). We included three indicator variables reflecting the four quarters of the year to adjust for typical seasonal patterns in the outcomes measures. Finally, we controlled for time-invariant facility characteristics using facility fixed effects.
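
The seasonal adjustment uses one indicator fewer than the number of quarters so that the indicators are not perfectly collinear with the facility fixed effects. A minimal sketch, assuming (as an illustration only) that the first calendar quarter is the omitted reference category:

```python
def season_indicators(quarter_of_year):
    """Return indicators for calendar quarters 2, 3, and 4.

    Quarter 1 is the omitted reference category (an illustrative choice), so
    a first-quarter observation gets all zeros. Including a fourth indicator
    would make the set sum to one for every row, which is perfectly collinear
    with the facility fixed effects.
    """
    if quarter_of_year not in (1, 2, 3, 4):
        raise ValueError("quarter_of_year must be 1, 2, 3, or 4")
    return [int(quarter_of_year == q) for q in (2, 3, 4)]


print([season_indicators(q) for q in (1, 2, 3, 4)])
```

Each indicator's coefficient is then read as the average seasonal shift relative to the first quarter.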

Analyses

We first assessed changes in facility-level processes and outcomes (separately) with the release of Nursing Home Compare by estimating the following equation using ordinary least squares:

$$Y_{j,t} = \alpha\,\mathrm{Post}_t + X_{j,t}\beta + \phi_j + \varepsilon_{j,t} \qquad (1)$$

where facility processes or outcomes ($Y_{j,t}$) are a function of a post-Nursing Home Compare dummy variable ($\mathrm{Post}_t$), facility-level covariates ($X_{j,t}$), and facility fixed effects ($\phi_j$), and $\varepsilon_{j,t}$ is an error term. The pre-post variable is specific to the timing of the public release of each outcome measure (see Table 1 for public release dates of each measure). In addition, we accounted for the 7-month early release of the initial measures in the six pilot states. The coefficient on the pre-post dummy, $\alpha$, is the parameter of interest and represents changes in processes and outcomes with implementation of Nursing Home Compare.
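
Because the pre-post dummy depends on each measure's release date and, for the measures first released in 2002, on whether the facility is in a pilot state, it can be sketched as a small lookup. The dates come from Table 1 and the pilot-state footnote; the measure keys are hypothetical labels, and treating the first day of the release month as the release date, with a quarter counted as post-release only if it starts on or after that date, are our assumptions.

```python
from datetime import date

PILOT_STATES = {"CO", "FL", "MD", "OH", "RI", "WA"}

# National release dates from Table 1 (day of month assumed to be the 1st).
NATIONAL_RELEASE = {
    "pain": date(2002, 11, 1),
    "pressure_sores_high_risk": date(2002, 11, 1),
    "pressure_sores_low_risk": date(2002, 11, 1),
    "catheter": date(2004, 1, 1),
    "weight_loss": date(2004, 11, 1),
}
PILOT_RELEASE = date(2002, 4, 1)
# Only the initial 2002 measures were released early in the pilot states.
EARLY_IN_PILOT = {"pain", "pressure_sores_high_risk", "pressure_sores_low_risk"}


def post_nhc(measure, state, quarter_start):
    """Post_t for Equation (1): 1 once the measure is public in this state."""
    release = NATIONAL_RELEASE[measure]
    if state in PILOT_STATES and measure in EARLY_IN_PILOT:
        release = PILOT_RELEASE
    return int(quarter_start >= release)
```

Keeping the timing in one table makes it easy to run the same Equation (1) specification for each outcome with its own release date.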

We next assessed directly the relationship between changes in processes of care and facility-level outcomes. We did this using the following linear model:

$$Y_{j,t} = \mathrm{Process}_{j,t}\gamma + \mathrm{Quarter}_t\delta + (\mathrm{Process}_{j,t} \times \mathrm{Quarter}_t)\theta + X_{j,t}\beta + \phi_j + \varepsilon_{j,t} \qquad (2)$$

where facility outcomes ($Y_{j,t}$) are a function of all related facility processes ($\mathrm{Process}_{j,t}$), a dummy variable for each time quarter ($\mathrm{Quarter}_t$, i.e., quarterly fixed effects), the interactions between the processes and the quarterly fixed effects, facility-level covariates ($X_{j,t}$), and facility fixed effects ($\phi_j$). We included quarterly fixed effects to control for secular trends in nursing home quality that occurred over the study period. The interactions between these quarterly fixed effects and the facility processes of care allow us to estimate the relationship between nursing home processes and outcomes in each quarter; we estimate this relationship quarterly because it might change over time. We run this regression five times, once for each outcome variable.
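
To make the structure of Equation (2) concrete, the sketch below assembles the regressors for one facility-quarter: the process measures, quarter indicators, every process-by-quarter interaction, and the covariates. Omitting the first study quarter as the reference category is our assumption, the example values are illustrative, and the facility fixed effects are assumed to be absorbed separately (for example, by within-facility demeaning).

```python
def eq2_regressors(processes, quarter, study_quarters, covariates):
    """Regressor vector for one facility-quarter under Equation (2).

    processes:      process measures linked to this outcome (e.g. the three
                    weight-loss processes), in a fixed order
    quarter:        this observation's study quarter label
    study_quarters: all quarter labels in order; the first is the reference
    covariates:     time-varying controls X_jt
    """
    quarter_dummies = [1.0 if quarter == q else 0.0 for q in study_quarters[1:]]
    # Process x quarter interactions let each process coefficient vary by quarter.
    interactions = [p * d for p in processes for d in quarter_dummies]
    return list(processes) + quarter_dummies + interactions + list(covariates)


row = eq2_regressors([6.3, 35.1, 8.1], "2003Q2", ["2003Q1", "2003Q2", "2003Q3"], [82.0])
print(len(row))  # 3 processes + 2 dummies + 6 interactions + 1 covariate = 12
```

The number of interaction terms grows with (number of processes) x (number of quarters), which is why the weight-loss model, with three processes, is the largest of the five regressions.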

Based on these models, we use predictions to demonstrate the process-outcome relationship. First, we calculate two trends in outcomes over time: risk-adjusted observed outcomes in each quarter, and outcomes in each quarter as predicted only by process performance. We first use the results from equation (2) to predict risk-adjusted outcomes in each study quarter (i.e., using the predict command in Stata, which multiplies regression coefficients by observed values in the data). These risk-adjusted predictions capture the total variation over time in outcomes due to any source of change, including changes in measured processes. We then generate a second set of predictions from equation (2) after setting the un-interacted quarterly fixed effects equal to zero but retaining the observed values of the process measures. This set of predictions represents the risk-adjusted outcomes in each study quarter that are associated with the observed processes of care in that quarter. The difference between these two predictions captures outcome-based performance not predicted by our process measures: a residual category that may include unobserved process-based performance as well as other unobserved determinants of reported outcomes.
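
The two sets of predictions differ only in whether the un-interacted quarterly effects are included. A minimal sketch, assuming the coefficients of Equation (2) have already been estimated and stored in a hypothetical dictionary layout; it illustrates the logic of the Stata predictions described above rather than reproducing them. Facility effects are included in both predictions here, so they cancel in the gap between the two lines.

```python
def predict(obs, coef, drop_quarter_effects=False):
    """Linear prediction from a fitted Equation (2) for one facility-quarter.

    obs:  {"facility", "quarter", "processes": [...], "covariates": [...]}
    coef: {"phi": {facility: effect}, "gamma": [...], "delta": {quarter: effect},
           "theta": {quarter: [...]}, "beta": [...]}
    Quarters missing from "delta"/"theta" are the reference quarter (effect 0).
    """
    q, p = obs["quarter"], obs["processes"]
    y = coef["phi"][obs["facility"]]
    y += sum(g * x for g, x in zip(coef["gamma"], p))
    y += sum(t * x for t, x in zip(coef["theta"].get(q, []), p))
    y += sum(b * x for b, x in zip(coef["beta"], obs["covariates"]))
    if not drop_quarter_effects:
        # Only the un-interacted quarter effect is removed in the second
        # prediction; the process terms keep their observed values.
        y += coef["delta"].get(q, 0.0)
    return y


def quarterly_trends(observations, coef):
    """Mean overall and process-only predictions per quarter (Figure 1 lines)."""
    acc = {}
    for obs in observations:
        s = acc.setdefault(obs["quarter"], [0.0, 0.0, 0])
        s[0] += predict(obs, coef)
        s[1] += predict(obs, coef, drop_quarter_effects=True)
        s[2] += 1
    return {q: (full / n, proc / n) for q, (full, proc, n) in acc.items()}
```

In this layout, the gap between the first and second element of each quarter's tuple is exactly the residual category described above.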

Second, we estimate how much of the change in outcomes with public reporting was due to process-based performance. We do this using the second set of predictions described in the previous paragraph, that is, the quarterly outcome predictions associated with processes of care. We first calculate the mean of these predicted quarterly estimates before and after public reporting was initiated and then calculate the difference between the two means. For all five outcomes, we test whether this difference is significantly different from zero by bootstrapping, resampling observations by nursing home over 500 iterations to calculate standard errors and 95% confidence intervals around the difference in means.
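
The cluster bootstrap can be sketched as follows. For brevity, this version holds the process-only predictions fixed and resamples nursing homes only when averaging them; a full replication would re-estimate Equation (2) within each draw. The data layout, random seed, and use of a simple percentile interval are our assumptions.

```python
import random
import statistics
from collections import defaultdict


def pre_post_difference(preds, facilities, post_quarters):
    """Mean of post-NHC quarterly means minus mean of pre-NHC quarterly means.

    preds: {facility_id: {quarter: process-only predicted outcome}}
    """
    by_quarter = defaultdict(list)
    for fac in facilities:  # a facility drawn twice contributes twice
        for quarter, value in preds[fac].items():
            by_quarter[quarter].append(value)
    quarter_means = {q: statistics.fmean(v) for q, v in by_quarter.items()}
    post = [m for q, m in quarter_means.items() if q in post_quarters]
    pre = [m for q, m in quarter_means.items() if q not in post_quarters]
    return statistics.fmean(post) - statistics.fmean(pre)


def cluster_bootstrap(preds, post_quarters, reps=500, seed=2012):
    """Resample nursing homes with replacement; return estimate, SE, 95% CI."""
    rng = random.Random(seed)
    facilities = list(preds)
    estimate = pre_post_difference(preds, facilities, post_quarters)
    draws = sorted(
        pre_post_difference(
            preds, [rng.choice(facilities) for _ in facilities], post_quarters)
        for _ in range(reps))
    se = statistics.stdev(draws)
    ci = (draws[int(0.025 * reps)], draws[int(0.975 * reps) - 1])
    return estimate, se, ci
```

Resampling whole nursing homes, rather than individual facility-quarters, keeps each home's repeated quarterly observations together, so the standard errors reflect within-facility correlation over time.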

Results

A total of 16,623 nursing homes were included in the study over the 10-year period of 2000 to 2009. Average nursing home performance on chronic care outcomes and on related processes of care are displayed in Table 1.

Changes in processes of care associated with the release of Nursing Home Compare are displayed in Table 2. Measured processes improved in only two cases—processes associated with pain management and preventive skin care. Paradoxically, process measures associated with preventing weight loss worsened after Nursing Home Compare was launched.

Table 2.

Change in processes of care with the publication of the outcome measures on Nursing Home Compare (NHC)

| Process of care, % | Change with NHC,* mean (95% CI) | P-value |
|---|---|---|
| Enrolled in pain management program | 9.00 (8.76 to 9.24) | <.001 |
| On written bladder training program | 0.02 (−0.15 to 0.19) | 0.82 |
| Receiving preventive skin care | 9.43 (8.98 to 9.87) | <.001 |
| Receiving mechanically altered diets | −1.61 (−1.76 to −1.46) | <.001 |
| Receiving tube feeds | −0.87 (−0.92 to −0.81) | <.001 |
| With assist devices while eating | −0.09 (−0.19 to 0.00) | 0.06 |

*Results and tests of statistical significance are based on the coefficient α in Equation (1).

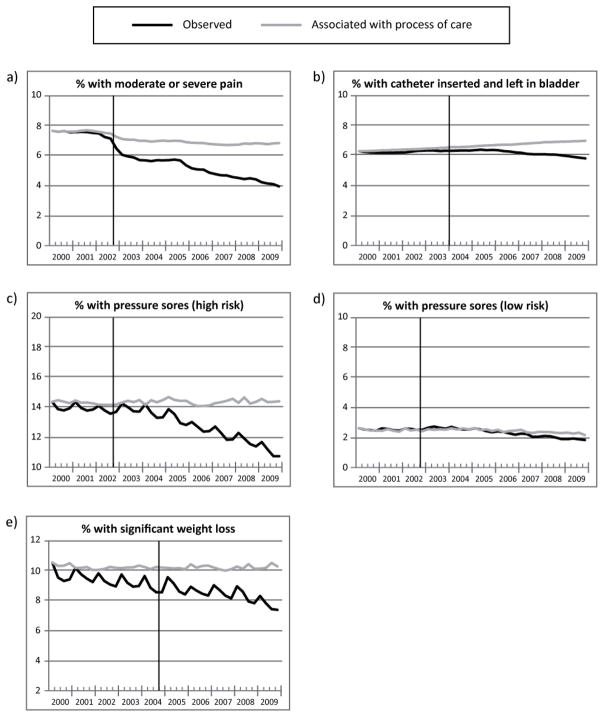

Figure 1 displays the trends in nursing home outcomes over time for each outcome measure, with the black line representing overall outcomes and the grey line representing outcomes associated with measured processes of care. For most measures, outcomes associated with processes of care declined less over our study period than overall outcomes did. For example, the percentage of residents with moderate or severe pain dropped at the time public reporting was initiated, an apparent improvement in quality. When we examine the percentage of residents in pain associated with increased enrollment of residents in pain management programs, we still see a decline in pain over the study period, but the decline is substantially smaller (Figure 1a). In other words, something other than enrollment in pain management programs is driving much of the reduction in reported pain. Perhaps most striking, for most outcome measures the relationship between process and outcomes weakened after public reporting was initiated, as indicated by the diverging trend lines. This suggests that observed processes explain a smaller share of the variation in outcomes over time and that changes in outcomes are being driven by other, unobserved factors.

Figure 1.

Nursing home outcomes overall and predicted by process of care over the study period for five nursing home outcomes. The black line represents overall trends in the outcome over time and the grey line represents trends in the outcome that are predicted by changes in measured processes of care. The vertical line represents when the outcome information was publicly released on Nursing Home Compare.

Graphed quarterly outcomes are based on predictions from Equation (2) where the black line represents predictions directly from the observed data and the grey line represents predictions after the quarterly time dummies were set equal to zero.

Finally, we find that while the launch of Nursing Home Compare was associated with statistically significant overall improvements in outcomes, few of these changes could be explained by changes in processes of care (Table 3). For example, the percentage of residents in moderate or severe pain was 2.3 percentage points lower in the post-Nursing Home Compare period than in the pre-Nursing Home Compare period (95% CI −2.42 to −2.26; p<.001). However, only 0.7 percentage points of this decline in the prevalence of pain could be explained by changes in processes of care, though this portion was still statistically different from zero (95% CI −0.79 to −0.61; p<.001). For three of the outcome measures (pressure sores among high-risk residents, pressure sores among low-risk residents, and significant weight loss), none of the statistically significant improvements in outcomes associated with Nursing Home Compare could be explained by changes in processes. One outcome, catheter inserted in bladder, improved at the time Nursing Home Compare was launched, but the portion predicted by changes in bladder training processes worsened.

Table 3.

The effect of Nursing Home Compare (NHC) on resident outcomes. The first two columns show the overall (risk-adjusted) change in facility-level outcomes when NHC was launched for each measure. The second two columns show the portion of that change that was associated with changes in nursing home processes of care.

| Outcome | Change in overall outcome with NHC,* mean (95% CI) | P-value | Change in outcome associated with change in process with NHC,** mean (95% CI) | P-value |
|---|---|---|---|---|
| Moderate or severe pain | −2.21 (−2.29 to −2.12) | <0.001 | −.70 (−.79 to −.61) | <.001 |
| Catheter inserted in bladder | −.37 (−.43 to −.32) | .001 | .38 (.33 to .41) | <.001 |
| Pressure sores (high-risk residents) | −1.05 (−1.14 to −.96) | <.001 | .08 (−.11 to .26) | .42 |
| Pressure sores (low-risk residents) | −.12 (−.15 to −.08) | <.001 | −.05 (−.12 to .02) | .20 |
| Significant weight loss | −.89 (−.94 to −.84) | <.001 | −.03 (−.15 to .09) | .60 |

*Results and tests of statistical significance are based on the coefficient α in Equation (1).

**Results are based on predictions from Equation (2) with Quarter_t set equal to zero. The reported effect is the difference in mean quarterly outcome predictions before and after Nursing Home Compare. Tests of statistical significance are based on bootstrapping.

Discussion

In this study we test whether changes in nursing home outcomes can be explained by changes in observed processes of care. We find that despite statistically significant improvements in outcomes associated with Nursing Home Compare, process-based performance could explain improvements in only one of the five outcome measures we examined.

There are numerous possible explanations for why outcomes improved considerably more than can be explained by changes in processes of care. First, nursing homes may have instituted other processes of care that were not observed in our data. We selected processes of care that are monitored in state surveys, are important elements of care, and are related to each clinical outcome. Nonetheless, each process measure encompasses only part of the pathway of care that contributes to resident outcomes.

It is possible that additional processes of care that were not measured by OSCAR, and thus not included in our analyses, are used by nursing homes to improve resident outcomes. It is also possible that outcome improvements were driven by non-process-based changes in care. There are likely other ways in which nursing homes change the delivery of care to improve outcomes that are unobservable to us. For example, changes in the structure of a nursing home's staff, staff assignments, staff quality, and staff training could improve clinical outcomes without being directly linked to the processes of care we measure (21, 22). There is also evidence that factors such as organizational culture and employee satisfaction affect nursing home quality and resident outcomes (23). While prior work has shown no improvement in nurse staffing levels over the period when Nursing Home Compare was released (24), outcome improvements may still result from other non-process factors. However, due to data limitations, we cannot assess the role of many of these factors in the observed improvements in nursing home outcomes.

Finally, it is also possible that the observed processes explain a relatively small proportion of the changes in outcomes because the relationship between processes and outcomes is weak. The process-outcome relationship has been inconsistent in numerous health care settings. For example, studies in the hospital setting have repeatedly found that changes in hospital processes of care have little effect on patient outcomes such as mortality rates (7, 8, 25). Similarly, interventions to improve processes of care in outpatient settings have not always resulted in improved patient outcomes (4, 5). The same may be true in nursing homes. The relationship between process and outcome may be weak for many reasons, including poor measures, inadequate risk adjustment (26), poor quality of the process of care itself (e.g., a poorly implemented preventive skin care program), or processes that are efficacious in controlled settings but less effective in real-world settings.

We also found that processes of care seemed to explain less of the variation in outcomes after Nursing Home Compare was released, based on the diverging trends between overall and process-driven changes in outcomes. Because there is no inherent reason why the underlying clinical process-outcome relationship should have changed dramatically at the time Nursing Home Compare was implemented, this divergence suggests that other explanations for changes in reported outcomes became stronger. There are two plausible explanations. First, the case mix of nursing home residents may be changing over time in ways that we do not control for. Because the Nursing Home Compare quality measures that we use are minimally risk adjusted, this could create more unexplained variance in the outcomes. Second, and more troubling, outcomes may have been coded differently in response to Nursing Home Compare, making processes of care less important in explaining variation in outcomes. The possibility of upcoding or downcoding is consistent with prior research in this field. In the case of pain, past research found a decline in the incidence of pain when residents were admitted to nursing homes that was most consistent with downcoding, because the decline was not accompanied by changes in other resident characteristics associated with pain levels (27).

While we cannot definitively say what other factors are driving changes in reported outcomes, our finding of a significant relationship between changes in pain levels and pain-related processes of care is notable. In past studies of nursing home quality improvements after the implementation of Nursing Home Compare, the largest improvements have been in the percentage of residents in pain (13, 14). There has been speculation that the documented improvements in pain levels may be an artifact of the data, related in part to the subjective nature of this measure and the difficulty of assessing pain accurately (28, 29), which makes it particularly prone to changes in data coding. Our findings suggest that these changes in pain levels are explained, at least in part, by increasing enrollment in pain management programs.

These results have important implications. First, our study adds to accumulating evidence that nursing homes responded to the quality improvement incentive of publicly reported quality information. At least in the case of pain management and preventive skin care, it appears that nursing homes responded to public reporting by improving their management of at-risk residents. Second, these results suggest a mechanism for improving resident outcomes under Nursing Home Compare, though only for one measure. This information can be useful in thinking about how to further incentivize nursing homes to improve the care they provide, including the possibility of including well-supported process measures in incentive programs. Third, given the known limitations of currently used outcome measures and the lack of support for commonly used process measures, developing quality measures that are closely linked to patient outcomes would make it more likely that improvements in quality measures lead to improved patient outcomes. Fourth, our findings suggest that the data used for incentives should be monitored to ensure their accuracy and validity. Financial incentives are increasingly used in an attempt to improve health care quality, particularly under the Affordable Care Act. Pay-for-performance has been increasingly adopted by state Medicaid agencies (30), and there is an ongoing Medicare-sponsored pay-for-performance demonstration in some states. With increased use of financial incentives to improve quality, providers will continue to have incentives to change the coding of their data. Monitoring the data used to determine these incentives will be key to ensuring true improvements in quality of care. Finally, these results raise the possibility that incentives might be more effective if tied to both processes of care and outcomes. Using these in combination could help ensure not only that providers are doing the right thing to improve resident care but also that they focus on what ultimately matters most to consumers: the resulting outcomes.

Acknowledgments

This work was funded by the National Institute on Aging under grant number R01 AG034182. Rachel Werner was supported in part by a VA HSR&D Career Development Award.

Footnotes

1. Nursing Home Compare was first launched as a pilot program in 6 states in April 2002 (Colorado, Florida, Maryland, Ohio, Rhode Island, and Washington). Seven months later, in November 2002, Nursing Home Compare was launched nationally.

2. Merging OSCAR and MDS one to one (deleting the remaining three unmatched MDS observations per nursing home-year) did not change our results.

Contributor Information

Rachel M. Werner, Email: rwerner@upenn.edu, Philadelphia VA and Perelman School of Medicine at the University of Pennsylvania, Blockley Hall, room 1204, 423 Guardian Drive, Philadelphia, PA 19104, 215-898-9278.

R. Tamara Konetzka, Email: konetzka@uchicago.edu, University of Chicago, 5841 S Maryland, MC2007, Chicago, IL 60637, 773-834-2202.

Michelle M. Kim, Email: mkim@wharton.upenn.edu, Department of Healthcare Management, Wharton School of Business, University of Pennsylvania, 3641 Locust Walk, Philadelphia, PA 19104, 215-573-0199.

References

1. Donabedian A. Evaluating the quality of medical care. Milbank Mem Fund Q. 1966;44:166–206.

2. Bergstrom N, Horn SD, Smout RJ, et al. The National Pressure Ulcer Long-Term Care Study: outcomes of pressure ulcer treatments in long-term care. J Am Geriatr Soc. 2005;53:1721–1729. doi: 10.1111/j.1532-5415.2005.53506.x.

3. Horn SD, Bender SA, Ferguson ML, et al. The National Pressure Ulcer Long-Term Care Study: pressure ulcer development in long-term care residents. J Am Geriatr Soc. 2004;52:359–367. doi: 10.1111/j.1532-5415.2004.52106.x.

4. Mangione CM, Gerzoff RB, Williamson DF, et al. The association between quality of care and the intensity of diabetes disease management programs. Ann Intern Med. 2006;145:107–116. doi: 10.7326/0003-4819-145-2-200607180-00008.

5. Landon BE, Hicks LS, O’Malley AJ, et al. Improving the management of chronic disease at community health centers. N Engl J Med. 2007;356:921–934. doi: 10.1056/NEJMsa062860.

6. Jha AK, Joynt KE, Orav EJ, et al. The long-term effect of Premier pay for performance on patient outcomes. N Engl J Med. 2012;366:1606–1615. doi: 10.1056/NEJMsa1112351.

7. Bradley EH, Herrin J, Elbel B, et al. Hospital quality for acute myocardial infarction: correlation among process measures and relationship with short-term mortality. JAMA. 2006;296:72–78. doi: 10.1001/jama.296.1.72.

8. Werner RM, Bradlow ET. Relationship between Medicare’s Hospital Compare performance measures and mortality rates. JAMA. 2006;296:2694–2702. doi: 10.1001/jama.296.22.2694.

9. Centers for Medicare and Medicaid Services. Nursing Home Compare. 2008. Available at: http://www.medicare.gov/Nhcompare/Home.asp. Accessed 09/19/2008.

10. Centers for Medicare and Medicaid Services. Hospital Compare. 2004. Available at: http://www.hospitalcompare.hhs.gov/. Accessed 03/02/2008.

11. Centers for Medicare and Medicaid Services. Home Health Compare. Available at: http://www.medicare.gov/HHCompare/Home.asp. Accessed 05/01/2006.

12. Berwick DM, James B, Coye MJ. Connections between quality measurement and improvement. Med Care. 2003;41:I-30–I-38. doi: 10.1097/00005650-200301001-00004.

13. Werner RM, Konetzka RT, Stuart EA, et al. The impact of public reporting on quality of post-acute care. Health Serv Res. 2009;44:1169–1187. doi: 10.1111/j.1475-6773.2009.00967.x.

14. Mukamel DB, Weimer DL, Spector WD, et al. Publication of quality report cards and trends in reported quality measures in nursing homes. Health Serv Res. 2008;43:1244–1262. doi: 10.1111/j.1475-6773.2007.00829.x.

15. Spector WD, Takada HA. Characteristics of nursing homes that affect resident outcomes. J Aging Health. 1991;3:427–454. doi: 10.1177/089826439100300401.

16. Gambassi G, Landi F, Peng L, et al. Validity of diagnostic and drug data in standardized nursing home resident assessments: potential for geriatric pharmacoepidemiology. SAGE Study Group. Systematic Assessment of Geriatric drug use via Epidemiology. Med Care. 1998;36:167–179. doi: 10.1097/00005650-199802000-00006.

17. Mor V, Angelelli J, Jones R, et al. Inter-rater reliability of nursing home quality indicators in the U.S. BMC Health Serv Res. 2003;3:20. doi: 10.1186/1472-6963-3-20.

18. Morris JN, Moore T, Jones R, et al. Validation of Long-Term and Post-Acute Care Quality Indicators. Baltimore, MD: Centers for Medicare and Medicaid Services; 2003.

19. Morris JN, Fries BE, Mehr DR, et al. MDS Cognitive Performance Scale. J Gerontol. 1994;49:M174–182. doi: 10.1093/geronj/49.4.m174.

20. Morris JN, Fries BE, Morris SA. Scaling ADLs within the MDS. J Gerontol A Biol Sci Med Sci. 1999;54:M546–553. doi: 10.1093/gerona/54.11.m546.

21. Baier RR, Gifford DR, Patry G, et al. Ameliorating pain in nursing homes: a collaborative quality-improvement project. J Am Geriatr Soc. 2004;52:1988–1995. doi: 10.1111/j.1532-5415.2004.52553.x.

22. Luo H, Fang X, Liao Y, et al. Associations of special care units and outcomes of residents with dementia: 2004 National Nursing Home Survey. Gerontologist. 2010;50:509–518. doi: 10.1093/geront/gnq035.

23. Berlowitz DR, Young GJ, Hickey EC, et al. Quality improvement implementation in the nursing home. Health Serv Res. 2003;38:65–83. doi: 10.1111/1475-6773.00105.

24. Werner RM, Konetzka RT, Kruse GB. Impact of public reporting on unreported quality of care. Health Serv Res. 2009;44:379–398. doi: 10.1111/j.1475-6773.2008.00915.x.

25. Ryan AM. Effects of the Premier hospital quality incentive demonstration on Medicare patient mortality and cost. Health Serv Res. 2009;44:821–842. doi: 10.1111/j.1475-6773.2009.00956.x.

26. Mukamel DB, Glance LG, Li Y, et al. Does risk adjustment of the CMS quality measures for nursing homes matter? Med Care. 2008;46:532–541. doi: 10.1097/MLR.0b013e31816099c5.

27. Werner RM, Konetzka RT, Stuart EA, et al. Changes in patient sorting to nursing homes under public reporting: improved patient matching or provider gaming? Health Serv Res. 2011;46:555–571. doi: 10.1111/j.1475-6773.2010.01205.x.

28. Wu N, Miller SC, Lapane K, et al. The quality of the quality indicator of pain derived from the minimum data set. Health Serv Res. 2005;40:1197–1216. doi: 10.1111/j.1475-6773.2005.00400.x.

29. Wu N, Miller SC, Lapane K, et al. Impact of cognitive function on assessments of nursing home residents’ pain. Med Care. 2005;43:934–939. doi: 10.1097/01.mlr.0000173595.66356.12.

30. Werner RM, Konetzka RT, Liang K. State adoption of nursing home pay-for-performance. Med Care Res Rev. 2010;67:364–377. doi: 10.1177/1077558709350885.