Abstract

Purpose

Children who experience long periods of auditory deprivation are susceptible to large-scale reorganization of auditory cortical areas responsible for the perception of speech and language. One consequence of this reorganization is that integration of combined auditory and visual information may be altered after hearing is restored with a cochlear implant. Our goal was to investigate the effects of reorganization in a task that examines performance during multisensory integration.

Methods

Reaction times to the detection of basic auditory (A), visual (V), and combined auditory-visual (AV) stimuli were examined in a group of normally hearing children, and in two groups of cochlear implanted children: (1) early implanted children in whom cortical auditory evoked potentials (CAEPs) fell within normal developmental limits, and (2) late implanted children in whom CAEPs were outside of normal developmental limits. Miller’s test of the race model inequality was performed for each group in order to examine the effects of auditory deprivation on multisensory integration abilities after implantation.

Results

Results revealed a significant violation of the race model inequality in the normally hearing and early implanted children, but not in the group of late implanted children.

Conclusion

These results suggest that coactivation of multimodal sensory input cannot explain the decreased reaction times to multimodal input in late implanted children. These results are discussed with regard to current models of coactivation by redundant sensory information.

Keywords: Multisensory integration, cochlear implants, redundant signal effect (RSE), race model inequality

1. Introduction

1.1. Central auditory system development during auditory deprivation

The development of the central auditory system (CAS) is both intrinsic and stimulus-driven. Intrinsic development of the auditory pathways occurs as a function of biological programs regardless of whether or not the CAS receives auditory input. On the other hand, a lack of sensory input, such as in deafness, may alter the organization of extrinsically activated sensory pathways, which are necessary for the typical development of oral communication skills. Cochlear implants (CIs) provide a mechanism to bypass peripheral cochlear dysfunction and deliver auditory stimuli directly to the CAS by means of electrical stimulation. Auditory stimulation from a CI can then alter or direct the development of auditory pathways necessary for fostering typical behavioral outcomes. The introduction of cochlear implants (and, more recently, auditory brainstem implants) has made it possible to activate auditory pathways and to minimize the effects of stimulus deprivation on the auditory nervous system.

Children and adults who receive CIs provide a means to examine the facilitation and limitations of plasticity in the CAS. In a series of studies using the P1 and N1 components of the cortical auditory evoked potential (CAEP) as indices of cortical maturation, Sharma and colleagues have delineated the age cut-offs for a sensitive period for central auditory development in children with cochlear implants (Sharma and Dorman, 2006; Sharma et al., 2005; Sharma et al., 2002a). Sharma et al. (2006; 2002a) examined P1 latency in 245 congenitally deaf children fitted with a CI. Children who received CI stimulation late in childhood (> 7 years) had abnormal P1 latencies, while children who received CI stimulation early in childhood (< 3.5 years) had normal P1 latencies. Children who received a CI between 3.5 and 7 years showed highly variable P1 latencies. In a follow-up study, Sharma et al. (2005) described longitudinal P1 development in a subgroup of these children. In that study, CAEP waveform morphology in the early implanted group followed a typical developmental pattern. Conversely, waveforms in the late implanted group retained a broad, positive peak or showed an abnormal, polyphasic morphology, with no developmental changes even after long periods of CI stimulation. In a recent study, Sharma and Dorman (2006) described a normal pattern of N1 CAEP development in early implanted children, whereas children implanted after age 7 years showed no evidence of an N1 response even after years of experience with their implant. The results from the Sharma et al. studies are consistent with other studies describing sensitive periods during which the CAS is highly plastic in implanted children (Eggermont et al., 1997; Ponton et al., 1996; Ponton et al., 1996; Ponton et al., 1999; Sharma et al., 2002; Sharma et al., 2002a; Sharma et al., 2002b), and they are further supported by similar findings in animal models: the congenitally deaf cat fitted with a cochlear implant (Klinke et al., 2001; Kral et al., 2001) and the congenitally deaf Shaker-2 mouse (Lee et al., 2003).

1.2. Cortical reorganization of auditory pathways during auditory deprivation

In a recent study, Gilley et al. (2008) performed brain source analyses for the P1 CAEP response in three groups of children: normally hearing children, children implanted early in childhood with normal P1 latencies and children implanted late in childhood with P1 latencies outside of normal limits. Normally hearing children showed, as expected, bilateral activation of the auditory cortical areas (superior temporal sulcus [STS]) and right inferior temporal gyrus (ITG). Children who received cochlear implants early showed activation of STS areas contralateral to their cochlear implant which resembled that of normally hearing subjects (additionally, a minor source of activity was localized to the anterior parietotemporal cortex). However, late-implanted children showed activation outside the auditory cortical areas (viz., insula and parietotemporal areas).

If we assume that generators of early components of the CAEP (i.e., P1 and N1) include input from intracortical, recurrent activity between primary auditory and association areas (Eggermont et al., 1997), then the absence of coherent auditory cortical activity in the late implanted children suggests absent or weak connections between primary and association areas, and subsequently, weak feedback activity to thalamic areas. Results from Gilley et al. (2008) are consistent with Kral’s decoupling hypothesis (Kral et al., 2005), which suggests that a functional disconnection between the primary and higher order cortex underlies the end of the sensitive period in congenitally deaf cats, and presumably, in congenitally deaf, late-implanted children. The complete or partial decoupling of the primary and secondary cortices leaves the secondary cortex open to re-organization by other modalities.

1.3. Functional evidence for cross-modal reorganization during auditory deprivation

Studies by Neville and colleagues (cf. Bavelier and Neville, 2002; H. Neville and Bavelier, 2002) in deaf humans coupled with recent neuro-imaging studies provide clear evidence for cross-modal activation in sensory deprived cortices, suggesting cross-modal reorganization of higher order auditory cortex during deafness (Mitchell and Maslin, 2007). Early evidence for cross-modal reorganization during deafness was revealed in a series of studies examining the effects of deafness on changes in the cortical visual evoked potential (VEP) (H.J. Neville and Lawson, 1987a, 1987b, 1987c). In those studies, Neville and Lawson compared peak amplitudes and latencies of the N1 VEP evoked by apparent motion in deaf persons who were native users of American Sign Language (ASL) to those from two groups of normally hearing persons: 1) non-signers, and 2) a control group of native ASL users that were children of deaf parents. Results revealed a larger amplitude N1 VEP in the deaf signers than hearing non-signers of ASL in response to apparent motion in the visual periphery, but not to stimuli in the central visual field. That these effects were not observed in the normally hearing, native ASL users suggests that changes observed in the N1 VEP were due to auditory deprivation rather than to learning a visual language (H.J. Neville and Lawson, 1987c).

The cross-modal effects observed for visual motion stimuli led to the working hypothesis that cortical reorganization primarily affects activity in the dorsal stream of visual processing, as opposed to the ventral stream. The ventral stream, or "what" pathway, is more active in processing visual form, whereas the dorsal stream, or "where" pathway, is typically associated with visual motion (for review see Ungerleider and Haxby, 1994). Armstrong and colleagues (Armstrong et al., 2002) tested the hypothesis that enhanced visual motion processing in deaf signers is specific to the dorsal stream by comparing VEPs between a group of deaf signers and a group of hearing adults, using stimuli designed to differentiate the ventral and dorsal visual pathways. Results of that study revealed enhanced N1 VEPs in deaf participants in response to the motion stimuli, but not to color stimuli meant to elicit responses from the ventral pathway. One possible mechanism for this specificity is that dorsal stream processes appear to have a longer intrinsic developmental timecourse than ventral stream processes (Mitchell and Neville, 2004). This longer developmental timecourse provides a window within which cross-modal reorganization may be driven by the functional demands that auditory deprivation places on the visual system (Armstrong et al., 2002).

Using functional magnetic resonance imaging (fMRI), Bavelier and colleagues (2000) further examined the differential effects of deafness and ASL use on activation in the middle temporal gyrus (MTG), an area of the brain known to be selective for visual motion (Beauchamp et al., 1997). Results from that study revealed larger areas of activation in the MTG of deaf signers than in normally hearing non-signers and hearing native users of ASL. Further, Bavelier and colleagues (Bavelier et al., 2001; Bavelier et al., 2000) provide evidence of enhanced activity in the posterior parietal cortex (PPC) and the STS of deaf signers, areas involved in spatial dynamics and spatial motor planning (Andersen et al., 1997; Snyder et al., 1997). These findings are corroborated by fMRI evidence of activation in response to visual motion in the STS (auditory cortex) of deaf signers, but not of hearing non-signers (Finney et al., 2001). These effects appear to be mediated by attention-related mechanisms, as shown by weaker, but still present, activation in the auditory cortex when target stimuli were ignored (Fine et al., 2005).

1.4. Effects of deprivation induced reorganization on auditory-visual integration

The ability to integrate information from multiple sensory modalities is an important feature of sensory perception. Multisensory integration implies that input from more than one sensory channel produces percepts that are more than simply additive. Given the evidence, described above, for functional reorganization during auditory deprivation, and given the evidence that neuroplasticity during deprivation is limited by a developmental sensitive period (Gilley et al., 2008; Sharma, Dorman et al., 2002a), it is important to consider how multisensory integration is affected after hearing is restored with a cochlear implant. In a comprehensive study of 80 prelingually deafened children, Bergeson, Pisoni, and Davis (2005) compared the relative roles of auditory and visual input in speech perception between children implanted before age 53 months and children implanted after age 53 months. In that study, the authors used the Common Phrases Test (Robbins et al., 1995) presented in auditory-alone (A), visual-alone (V), and auditory-visual (AV) modalities. After accounting for duration of implant use and communication mode (oral communication [OC] or total communication [TC]), children implanted early performed better on the A-alone and AV tasks than children implanted late, while the late implanted children performed better on the V-alone task. To assess the amount of "gain" in the AV condition, the authors computed the percentage improvement of the AV condition over each of the unimodal conditions, referred to as "auditory gain" and "visual gain". A comparison of performance in the V-alone and AV conditions revealed that the early implanted children benefited more from the additional auditory input than the later implanted children did. This auditory gain continued to increase for early implanted users after several years of implant use, whereas it remained relatively stable for late implanted users. These findings suggest that there may be a general lack of auditory integration skills in late implanted CI users.
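As a hypothetical illustration of the gain measures just described, the sketch below computes each gain as a simple percentage improvement of the AV score over one unimodal score, with auditory gain taken as AV relative to V and visual gain as AV relative to A. The helper name `relative_gain`, the scores, and the exact formula are assumptions; Bergeson et al. (2005) may have normalized the gain differently (for example, by the room left for improvement, 100 − V).

```python
def relative_gain(av_score, unimodal_score):
    """Percentage improvement of the AV score over one unimodal score.

    Hypothetical helper; scores are assumed to be percent correct (0-100).
    """
    if unimodal_score == 0:
        raise ValueError("unimodal score must be non-zero for a relative gain")
    return 100.0 * (av_score - unimodal_score) / unimodal_score


# Invented percent-correct scores for one hypothetical child.
a_only, v_only, av = 40.0, 20.0, 60.0
auditory_gain = relative_gain(av, v_only)  # benefit of adding sound to vision
visual_gain = relative_gain(av, a_only)    # benefit of adding vision to sound
print(auditory_gain, visual_gain)
```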

Schorr and colleagues (Schorr et al., 2005) examined auditory-visual fusion (the McGurk effect) in 36 prelingually deafened children with CIs and 35 normally hearing children. The McGurk effect occurs when one speech sound is paired with the visual cue from another speech sound differing in place of articulation, resulting in the percept of an altogether different speech sound (McGurk and MacDonald, 1976). Consistent bimodal fusion was observed in 57% of hearing children and 38% of children who had received an implant before the age of 2.5 years, but in none of the late implanted children. Typically hearing children generally experienced bimodal fusion on incongruent AV trials; when they did not, they reported perceiving the auditory stimulus. The few implanted children who experienced consistent bimodal fusion were similarly biased to perceive the auditory stimulus, but the majority of CI recipients showed a strong bias toward perceiving the visual stimulus on incongruent AV trials. These results and those from other studies (Hockley and Polka, 1994; McGurk and MacDonald, 1976) indicate that integration of auditory and visual speech information is auditory dominant early in development. Given the sensitive period for auditory maturation, this suggests that early auditory experience is necessary for the typical development of auditory-visual speech integration.

1.5. Multisensory integration and the race model inequality

A common finding in studies of multisensory integration, including those discussed above, is a superadditive effect for responses to combined stimuli. That is, performance on neither the A-alone task nor the V-alone task is sufficient by itself to explain the improved performance on tasks with combined AV stimuli. This enhanced performance for signals presented simultaneously in two sensory modalities is referred to as the "redundant signal effect" (RSE) (Kinchla, 1974, 1977; Miller, 1982).

One possible mechanism for multisensory integration is a higher order convergence of information processed from unimodal sensory cortices. On this view, primary sensory cortices project to unimodal association areas that integrate information about a single modality, which in turn is projected to multimodal sensory association areas (Kandel et al., 2000 p. 350; Stein and Meredith, 1990). It seems unlikely, however, that such a linear process would result in vastly improved reaction times to detection of multisensory information, although Stein and Meredith (1990) describe several mechanisms that may account for such facilitation. One possible explanation for this phenomenon is that unimodal projections compete for resources in higher-order areas of convergence. Such competition might be thought of as unimodal projections that “race” to provide information to multisensory areas, thus facilitating faster reaction times. This model is referred to as the race model for multisensory processing (Miller, 1982; Raab, 1962).
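The statistical facilitation that the race model relies on can be illustrated with a short simulation. This is a sketch under stated assumptions: the channel means, the spread, and the normal distributions are invented, the two channels are independent, and a redundant trial ends when the faster channel finishes. The redundant-trial mean comes out faster than either unimodal mean even though no information is integrated.

```python
import random
from statistics import mean


def simulate_race(mean_a, mean_v, sd, n_trials, seed=0):
    """Simulate unimodal and redundant trials under an independent race.

    Finishing times for the A and V channels are drawn from normal
    distributions (an illustrative assumption); on a redundant AV trial the
    response is triggered by whichever channel finishes first.
    """
    rng = random.Random(seed)
    a = [rng.gauss(mean_a, sd) for _ in range(n_trials)]
    v = [rng.gauss(mean_v, sd) for _ in range(n_trials)]
    # The winner of each race sets the redundant-trial RT.
    av = [min(x, y) for x, y in zip(a, v)]
    return mean(a), mean(v), mean(av)


mean_a, mean_v, mean_av = simulate_race(300.0, 320.0, 50.0, 10_000)
print(f"A: {mean_a:.0f} ms  V: {mean_v:.0f} ms  AV (race): {mean_av:.0f} ms")
```

Reusing the same unimodal samples for the redundant trials is a shortcut that builds in the race model's assumption that each channel behaves the same whether or not the other stimulus is present.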

Under the race model, it is possible that attention mechanisms mediate the facilitation observed in responses to redundant signals. As described by Miller (1982), early studies using the "parity criterion" posit that if performance on a multisensory task with attention divided between two modalities is as good as performance with attention focused on only one modality, then the two sensory modalities must represent independent processing streams. Because race models explain the RSE as statistical facilitation arising from stochastically independent, overlapping response distributions (cf. Raab, 1962), it follows that the observed responses should satisfy the race model inequality (Miller, 1982):

$$F_{AV}(t) \le F_A(t) + F_V(t) \quad \text{for all } t,$$

where $F_A(t)$ and $F_V(t)$ are the cumulative distribution functions (CDFs) of response times, $t$, in the two unimodal stimulus conditions, A and V, and $F_{AV}(t)$ is the CDF of response times to the redundant stimulus condition, AV (Ulrich et al., 2007). Therefore, if the race model is the mechanism by which multisensory information is processed, the reaction times for detecting each stimulus presented in one modality only should set an upper limit on how quickly one can respond to multisensory stimuli.
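The inequality can be checked directly on empirical CDFs, as in the sketch below. It assumes step-function empirical CDFs, an evaluation grid chosen by the analyst, and invented reaction times for a single subject; `race_model_violations` is a hypothetical helper, and it flags raw violations only, without the statistical test described in the Methods.

```python
from bisect import bisect_right


def ecdf(sample):
    """Empirical CDF: returns a function t -> proportion of RTs <= t."""
    ordered = sorted(sample)
    return lambda t: bisect_right(ordered, t) / len(ordered)


def race_model_violations(rt_a, rt_v, rt_av, grid):
    """Times t in grid where F_AV(t) > F_A(t) + F_V(t).

    These are points where the race model inequality is violated in the
    sample; no significance test is applied here.
    """
    f_a, f_v, f_av = ecdf(rt_a), ecdf(rt_v), ecdf(rt_av)
    return [t for t in grid if f_av(t) > f_a(t) + f_v(t)]


# Invented reaction times (ms) for one hypothetical subject.
rt_a = [310, 330, 350, 370, 390]
rt_v = [320, 340, 360, 380, 400]
rt_av = [250, 260, 270, 300, 320]
print(race_model_violations(rt_a, rt_v, rt_av, range(240, 420, 10)))
```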

In practice, the race model inequality is often violated, in that the gain observed in the RSE is larger than the model can predict (Ulrich et al., 2007). Such a violation would indicate that multisensory integration occurs through coactivation of sensory inputs, whereby the sensory streams interact before response initiation (e.g., by neural summation), rather than through separate, parallel processing streams that converge in higher order cortices (Miller, 1982). Indeed, recent fMRI evidence has implicated the right parietotemporal junction as a cortical site of coactivation, specifically on trials in which RTs violate the race model (Mooshagian et al., 2008), corroborating previous findings in animal models (cf. Stein and Stanford, 2008).

Given that extended periods of auditory deprivation result in cross-modal reorganization of the sensory pathways (Fine et al., 2005; Sharma et al., 2007), and that typical fusion of bimodal speech information is limited by a developmental sensitive period in the auditory system (Bergeson et al., 2005; Schorr et al., 2005), it is plausible that multisensory convergence occurs in an altogether different manner after hearing is restored in an already reorganized system. One way to test this hypothesis is to compare response time distributions and test for violations of the race model inequality. If the race model is violated even after long periods of deprivation, we might infer that areas of multisensory coactivation are preserved during reorganization and that deficits in bimodal fusion are attributable to some other mechanism. If the race model is not violated, this would suggest that early sensory deprivation disrupts the typical development of multisensory coactivation mechanisms and, if multisensory integration still occurs, would implicate the emergence of atypical mechanisms.

2. Methods

2.1. Participants

Participants in this study included 25 children and 8 adults. The children were the same as those reported in Gilley et al. (2008). Participants were placed into one of four categories based on amount of hearing experience (referred to as "listening groups" for statistical analysis): normally hearing (NH) adults, NH children, early implanted children, and late implanted children.

2.1.1. Normally hearing adults

Eight adults aged 23.7 to 26.16 years (mean = 24.46, SD = 0.89) were screened by questionnaire for normal hearing, speech, language, visual (normal or corrected-to-normal), and neurological development. Only participants with hearing thresholds ≤ 20 dB HL and normal speech, language, and neurological development were included in the study. The NH Adults were included strictly for qualitative comparison of the race model inequality, and are not included in the statistical analyses comparing the three groups of children, which are the experimental focus of the present study.

2.1.2. Normally hearing children

Nine children aged 7.4 to 12.8 years (mean = 10.62, SD = 2.06) with normal hearing, speech, language, visual (normal or corrected-to-normal), and neurological development were categorized as normally hearing children (NH). NH subjects were recruited from a pool of subjects in a previous study (Gilley et al., 2005). All participants were screened for normal speech, language, and neurological development through parent questionnaire prior to testing. Only participants with hearing thresholds ≤ 20 dB HL and normal speech, language, and neurological development were included in the study.

2.1.3. Early implanted children

Eight prelingually deafened CI users aged 9.57 to 14.7 years (mean = 11.31, SD = 1.81), implanted at ages 1.73 to 3.96 years (mean = 2.79, SD = 0.78), were categorized as early implanted children. P1 CAEP latencies for the early implanted children fell within normal limits for P1 development (Sharma, Dorman et al., 2002a). Demographic characteristics of the early implanted children are listed in Table 1.

Table 1.

Age at test, age at implant fitting, and duration of implant use (all in years) for CI participants

| Subject | Device | Age at test | Age at fitting | Duration of use |
|---|---|---|---|---|
| Early implanted | | | | |
| E1 | Nucleus | 10.86 | 1.73 | 9.13 |
| E2 | Nucleus | 9.78 | 3.96 | 5.82 |
| E3 | Nucleus | 10.77 | 2.73 | 8.04 |
| E4 | Nucleus | 12.69 | 2.2 | 10.49 |
| E5 | MedEl | 9.57 | 2.8 | 6.77 |
| E6 | Nucleus | 9.74 | 2.59 | 7.15 |
| E7 | Nucleus | 14.70 | 2.4 | 12.30 |
| E8 | MedEl | 12.36 | 3.91 | 8.45 |
| Mean | | 11.31 | 2.79 | 8.52 |
| S.D. | | 1.81 | 0.78 | 2.10 |
| Late implanted | | | | |
| L1 | Nucleus | 11.6 | 8.50 | 3.10 |
| L2 | Nucleus | 10.2 | 5.00 | 5.20 |
| L3 | Nucleus | 10.8 | 7.45 | 3.35 |
| L4 | Clarion | 11.2 | 5.47 | 5.73 |
| L5 | Nucleus | 11 | 9.49 | 1.51 |
| L6 | Nucleus | 13.3 | 9.63 | 3.67 |
| L7 | Clarion | 12.6 | 9.82 | 2.78 |
| L8 | MedEl | 10 | 5.23 | 4.77 |
| L9 | Nucleus | 11.4 | 9.84 | 1.56 |
| Mean | | 11.34 | 7.83 | 3.52 |
| S.D. | | 1.06 | 2.09 | 1.50 |

2.1.4. Late implanted children

Eight prelingually deafened CI users aged 10.0 to 13.3 years (mean = 11.34, SD = 1.06), implanted at ages 5.0 to 9.84 years (mean = 7.83, SD = 2.09), were categorized as late implanted children. P1 CAEP latencies for the late implanted children fell outside the normal limits for P1 development (i.e., delayed P1 latencies) (Sharma, Dorman et al., 2002a). Demographic characteristics of the late implanted children are listed in Table 1.

Participants were screened by parent questionnaire for additional neurological conditions (e.g., mental retardation, cerebral palsy, autism, etc.) that may affect the CAEP recording. CI users were recruited from the clinical populations at The University of Texas at Dallas Callier Center for Communication Disorders. All participants and/or parents or legal guardians of all participants provided informed, written consent prior to participation in the study. All study procedures were approved by the Institutional Review Board at The University of Texas at Dallas, where testing took place.

2.2. Stimuli and stimulation paradigm: auditory alone

A 1000 Hz pure tone of 60 ms duration (5 ms rise/fall times) was presented at 70 dB SPL from a loudspeaker at 0° azimuth, located atop the video monitor used to present the visual stimulus.

2.3. Visual alone

A white disk subtending 1.2° in diameter at a viewing distance of 100 cm was presented for 60 ms on a black background on a flat-panel LCD computer monitor. The disk was centered on the screen (x = 0, y = 0).
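For readers reproducing the display, the physical diameter implied by the stated visual angle follows from size = 2 × distance × tan(angle / 2). The sketch below applies that standard geometric relation to the 1.2° and 100 cm values above; the resulting diameter (about 2.09 cm) is derived here, not reported in the original study, and `size_for_visual_angle` is a hypothetical helper.

```python
import math


def size_for_visual_angle(angle_deg, distance_cm):
    """Physical size (cm) that subtends angle_deg at distance_cm.

    Uses size = 2 * distance * tan(angle / 2).
    """
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


diameter_cm = size_for_visual_angle(1.2, 100.0)
print(f"Disk diameter: {diameter_cm:.2f} cm")
```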

2.4. Auditory-visual

The auditory and visual stimuli described above were presented simultaneously, with a stimulus onset asynchrony (SOA) of 0 ms.

2.5. Presentation

Stimuli were presented using the commercially available software Presentation (Neurobehavioral Systems, Inc., Albany, CA) on a desktop computer with a 24-bit/192-kHz stereo sound card routed to a GSI-61 audiometer (Grason-Stadler, Inc., Madison, WI), and a GeForce FX 5700 Ultra video card (NVIDIA Corporation, Santa Clara, CA) routed to a Samsung SyncMaster 710MP TFT flat-screen video monitor (Samsung Electronics America, Inc., Ridgefield Park, NJ). Auditory-alone (A), visual-alone (V), and auditory-visual (AV) stimuli were presented in random order with equal probability, with interstimulus intervals (ISIs) varying randomly between 1000 ms and 3000 ms.
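The randomization described above could be generated along the following lines. This is a sketch: `make_block` is a hypothetical helper (the study used Presentation, not Python), and the uniform ISI distribution is an assumption, since the text gives only the 1000 to 3000 ms range.

```python
import random

CONDITIONS = ("A", "V", "AV")


def make_block(n_trials, isi_range_ms=(1000.0, 3000.0), seed=None):
    """Return a list of (condition, isi_ms) pairs for one block.

    Each condition is drawn independently with probability 1/3, and each ISI
    is drawn uniformly from isi_range_ms (the uniform distribution is an
    assumption).
    """
    rng = random.Random(seed)
    low, high = isi_range_ms
    return [(rng.choice(CONDITIONS), rng.uniform(low, high)) for _ in range(n_trials)]


block = make_block(190, seed=1)
for condition, isi in block[:3]:
    print(f"{condition:>2}  ISI {isi:.0f} ms")
```

Independent draws give approximately, not exactly, equal numbers of A, V, and AV trials per block; shuffling a balanced list would be the alternative if exact counts were required.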

2.6. Testing procedure

Participants were seated in a comfortable chair in a sound-treated booth with the lights turned off and were asked to maintain focus on a crossbar at the center of the video monitor. Participants were instructed to press a button on a video game controller as quickly as possible after detecting either an auditory or a visual stimulus. Each task condition (A, V, and AV) was described in detail to ensure that the participants understood the task. Stimuli were blocked into sequences of 175–200 trials, and each subject completed 10 blocks (approximately 5 minutes per block). The large number of trials per stimulus condition allows a reliable computation of the race model inequality and minimizes the risk of a Type I error, that is, finding a significant violation when none exists (Kiesel et al., 2007). Breaks were encouraged between blocks to maintain concentration during the task.

2.7. Analysis

Stimulus presentation times and corresponding reaction times for detection of the stimuli were stored in an ASCII data file for analysis. Mean reaction times for each subject in each stimulus condition (A, V, and AV) were treated as the response variable in a partially repeated measures (mixed) ANOVA, with listening group (NH children, early implanted, and late implanted) as the between-subjects variable and stimulus condition as the within-subjects (repeated) variable. To avoid confounding group differences with differences in physiological maturation and behavioral experience, the NH Adults were excluded from the ANOVA and from the post-hoc comparisons of means among the three groups of children. A separate post-hoc analysis of means was performed to examine differences involving the NH Adult group.
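A mixed ANOVA of this kind can be sketched from first principles by partitioning sums of squares. The function below is a hypothetical illustration with invented data, not the software used in the study, and it applies no sphericity correction. With 25 children in three groups and three conditions, it yields the degrees of freedom reported in the Results: (2, 22) for listening group and (2, 44) and (4, 44) for the within-subjects terms.

```python
import random
from statistics import mean


def mixed_anova(groups):
    """One between-subjects factor (group) x one within-subjects factor (condition).

    groups maps a group name to a list of subjects; each subject is a list of
    condition means in a fixed order (e.g. [AV, A, V]).
    Returns {effect: (F, (df_effect, df_error))}.
    """
    subjects = [s for subs in groups.values() for s in subs]
    n, g, c = len(subjects), len(groups), len(subjects[0])
    grand = mean(x for s in subjects for x in s)

    ss_total = sum((x - grand) ** 2 for s in subjects for x in s)
    # Between subjects: group effect plus subjects nested within groups.
    ss_subjects = c * sum((mean(s) - grand) ** 2 for s in subjects)
    ss_group = c * sum(len(subs) * (mean(mean(s) for s in subs) - grand) ** 2
                       for subs in groups.values())
    ss_subj_in_group = ss_subjects - ss_group
    # Within subjects: condition, group x condition, and residual error.
    ss_cond = n * sum((mean(s[j] for s in subjects) - grand) ** 2 for j in range(c))
    ss_cells = sum(len(subs) * (mean(s[j] for s in subs) - grand) ** 2
                   for subs in groups.values() for j in range(c))
    ss_inter = ss_cells - ss_group - ss_cond
    ss_error = ss_total - ss_subjects - ss_cond - ss_inter

    df_group, df_subj = g - 1, n - g
    df_cond, df_inter, df_error = c - 1, (g - 1) * (c - 1), (n - g) * (c - 1)
    ms_subj, ms_error = ss_subj_in_group / df_subj, ss_error / df_error
    return {
        "group": (ss_group / df_group / ms_subj, (df_group, df_subj)),
        "condition": (ss_cond / df_cond / ms_error, (df_cond, df_error)),
        "group x condition": (ss_inter / df_inter / ms_error, (df_inter, df_error)),
    }


# Invented condition means (ms) for 9 + 8 + 8 hypothetical children.
rng = random.Random(0)


def fake_group(n_subjects, offset):
    return [[rng.gauss(300 + offset, 20), rng.gauss(340 + offset, 20),
             rng.gauss(350 + offset, 20)] for _ in range(n_subjects)]


groups = {"NH": fake_group(9, 0), "early": fake_group(8, 10), "late": fake_group(8, 30)}
for effect, (f, df) in mixed_anova(groups).items():
    print(f"{effect}: F{df} = {f:.2f}")
```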

Miller’s test of the race model inequality (Miller, 1982) was performed for each subject to determine whether violations of the race model were present. This test was performed using the algorithm described by Ulrich and colleagues (2007). Probability density functions (PDFs) for reaction time, $t$, were computed for each subject in each stimulus condition. The PDF describes how likely a response is to occur at a given reaction time (it can be thought of as a smoothed version of the response histogram). Reaction times for each subject were divided into five-percentile bins before the PDF was calculated, in order to preserve the shape of the function across subjects (Molholm et al., 2002; Ulrich et al., 2007). To estimate the probability of a response occurring at or before any given time, $t$, the PDF is integrated. The resulting function, the cumulative distribution function (CDF), gives the cumulative probability that a response will occur at a given time or earlier.
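One way to read the percentile step is sketched below: each subject's reaction times are summarized at every fifth percentile, and the percentiles are then averaged across subjects (an approach often called Vincentizing). The helper names, the interpolation method (`method="inclusive"` in Python's `statistics.quantiles`), and the data are assumptions, not details taken from the study.

```python
from statistics import mean, quantiles


def percentile_profile(rts, step=5):
    """RTs at every step-th percentile (5th, 10th, ..., 95th for step=5)."""
    return quantiles(rts, n=100 // step, method="inclusive")


def group_profile(subject_rts, step=5):
    """Average each percentile across subjects (often called Vincentizing)."""
    profiles = [percentile_profile(rts, step) for rts in subject_rts]
    return [mean(values) for values in zip(*profiles)]


# Invented RTs (ms) for two hypothetical subjects with different speeds.
subject_rts = [list(range(200, 400, 2)), list(range(250, 450, 2))]
profile = group_profile(subject_rts)
print(len(profile), profile[0], profile[-1])
```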

The CDFs $F_A(t)$, $F_V(t)$, and $F_{AV}(t)$ for response times in stimulus conditions A, V, and AV, respectively, were grouped by listening group for each percentile tested. A joint predicted CDF, $B_{AV}(t)$, was computed as the sum $F_A(t) + F_V(t)$. For each percentile under examination, a separate paired t-test was performed on $F_{AV}(t)$ and $B_{AV}(t)$ to determine whether the actual reaction times described by $F_{AV}(t)$ were significantly shorter than those predicted by $B_{AV}(t)$. As noted by Ulrich and colleagues (2007), "the race model is rejected as insufficient to account for the data if there is a significant violation at any percentile."
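The group-level procedure can be sketched as follows. This is a simplified, hypothetical version of the Ulrich et al. (2007) approach: it uses step-function empirical CDFs without interpolation, tests percentiles 0.05 to 0.50, and compares a one-tailed paired t statistic against a critical value supplied by the caller (1.895 for df = 7 at α = .05) rather than computing p-values. All data are invented.

```python
import random
from bisect import bisect_right
from math import sqrt
from statistics import mean, stdev


def ecdf(sample):
    """Empirical CDF: t -> proportion of RTs <= t."""
    ordered = sorted(sample)
    return lambda t: bisect_right(ordered, t) / len(ordered)


def percentile_time(cdf, times, p):
    """Smallest observed time t with cdf(t) >= p (step-function inverse)."""
    for t in times:
        if cdf(t) >= p:
            return t
    return times[-1]


def subject_percentiles(rt_a, rt_v, rt_av, probs):
    """Percentile RTs of F_AV and of the bound B_AV(t) = F_A(t) + F_V(t)."""
    f_a, f_v, f_av = ecdf(rt_a), ecdf(rt_v), ecdf(rt_av)

    def bound(t):
        return min(1.0, f_a(t) + f_v(t))

    times = sorted(set(rt_a) | set(rt_v) | set(rt_av))
    return ([percentile_time(f_av, times, p) for p in probs],
            [percentile_time(bound, times, p) for p in probs])


def miller_test(subjects, probs, t_crit):
    """One-tailed paired t-test of AV minus bound at each percentile.

    subjects is a list of (rt_a, rt_v, rt_av) tuples. Returns the percentiles
    at which AV is significantly faster than the bound; t_crit must match
    df = len(subjects) - 1 and the chosen alpha.
    """
    per_subject = [subject_percentiles(a, v, av, probs) for a, v, av in subjects]
    violated = []
    for i, p in enumerate(probs):
        diffs = [av_pct[i] - bound_pct[i] for av_pct, bound_pct in per_subject]
        t_stat = mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))
        if t_stat < -t_crit:
            violated.append(p)
    return violated


# Invented data for 8 hypothetical subjects with strong AV facilitation.
rng = random.Random(42)


def fake_rts(mu, sd, n=100):
    return [rng.gauss(mu, sd) for _ in range(n)]


subjects = [(fake_rts(350, 40), fake_rts(370, 40), fake_rts(250, 30)) for _ in range(8)]
probs = [k / 20 for k in range(1, 11)]  # 0.05, 0.10, ..., 0.50
violations = miller_test(subjects, probs, t_crit=1.895)  # df = 7, alpha = .05
print(violations)
```

If exact p-values are wanted, a library paired t-test such as `scipy.stats.ttest_rel` on the same AV and bound percentiles is one option.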

3. Results

3.1. General observations

All but two participants in this study performed the reaction time task without difficulty, regardless of age or experience with auditory input. Because the task was easy, a ceiling effect was observed for nearly all participants, which makes analyses of percent correct scores largely uninformative. Specifically, the mean accuracy of the 25 child participants (excluding the 8 adults) was 94.9% (s.d. = 6.9%), with most of the errors attributable to two subjects (see next paragraph). There was no significant effect of group or condition on percent correct. Given the long testing time and the sustained attention the task demanded, this level of performance is considered highly accurate.

Two participants (one NH child and one late-implanted child) had an abnormally high number of missed responses during testing. One participant reported that he had become bored with the task and was not paying attention near the end; indeed, a block-by-block inspection of his responses revealed that most of the missed events occurred during the last two blocks. The other participant missed most of the visual-alone stimuli during the early portion of testing. When testing was stopped, she reported that she had not been watching the video screen as instructed. The instructions were then repeated, and her performance was normal for the remainder of the experiment. In both cases, response accuracy while the task was performed as instructed was higher than the participants' overall scores of 89.6% and 75.8%, respectively.

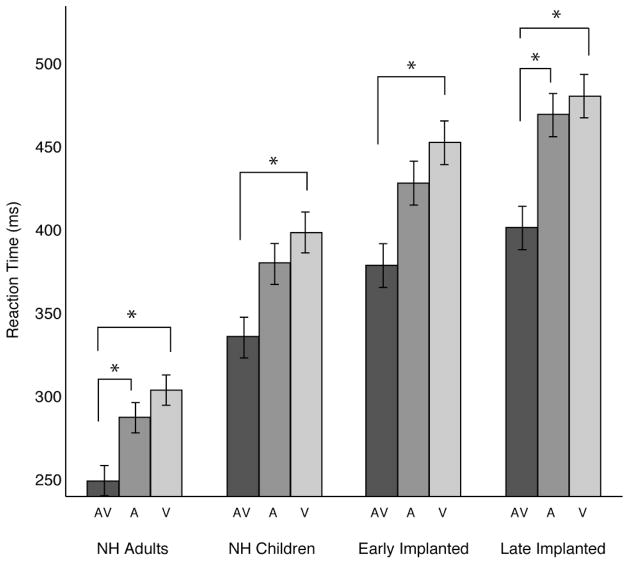

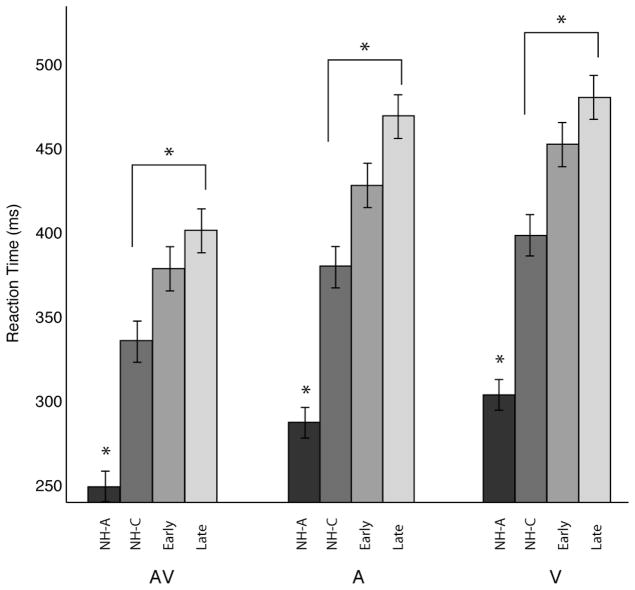

3.2. Reaction time

Figure 1 shows the mean reaction times for each listening group in each test condition. Initial inspection of Fig. 1 reveals a general trend of faster reaction times to combined AV stimuli than to A-alone and V-alone stimuli. Reaction times across test conditions also appear to be generally slower in children than in adults. To examine this further, Fig. 2 shows the reaction times grouped by test condition rather than by listening group. Fig. 2 suggests that, in general, late implanted children responded more slowly than normally hearing children across all conditions, although the main effect of listening group was not statistically significant.

Fig. 1.

Mean reaction time and significant within-group differences (post-hoc) for stimulus condition. The shaded bars represent the mean reaction time in milliseconds (error bars indicate standard error of the mean) for each stimulus condition (AV, A, and V). Bars are clustered by listening group. An asterisk over a pair of bars represents a significant difference between those two conditions (p < 0.05, Bonferroni corrected). Post-hoc statistics reported in the figure represent the group analysis without the NH Adult group. Post-hoc results shown for the NH Adult group are from a separate analysis.

Fig. 2.

Mean reaction time and significant between-group differences (post-hoc) for stimulus condition. The shaded bars represent the mean reaction time in milliseconds (error bars indicate standard error of the mean) for each listening group (NH Adults, NH Children, Early Implanted, and Late Implanted). Bars are clustered by stimulus condition. An asterisk over a pair of bars represents a statistically significant difference between those two groups (p < 0.05, Bonferroni corrected). Post-hoc statistics reported in the figure represent the group analysis without the NH Adult group. A separate post-hoc test including the NH Adults revealed significant differences from all other groups, as indicated by the lone asterisks.

Reaction times from the three groups of children were analyzed using a partially repeated measures (mixed-design) ANOVA, with listening group as a between-subjects factor and task condition as a within-subjects factor. The ANOVA revealed a significant main effect of task condition on reaction time [F (2,44) = 25.57, p < 0.0001]. There was no significant main effect of listening group [F (2,22) = 1.62, p = 0.22], nor a significant listening group × task condition interaction [F (4,44) = 0.28, p = 0.89].
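The reported degrees of freedom can be checked against the standard bookkeeping for a mixed design with k = 3 listening groups (between subjects) and c = 3 task conditions (within subjects). The symbols k, c, and N are introduced here for illustration only; the total of N = 25 children is not stated in this section and is merely inferred from the between-subjects error term.

```latex
\begin{aligned}
df_{\text{group}} &= k - 1 = 2,\\
df_{\text{between-error}} &= N - k = 22 \quad\Rightarrow\quad N = 25,\\
df_{\text{condition}} &= c - 1 = 2,\\
df_{\text{group}\times\text{condition}} &= (k - 1)(c - 1) = 2 \times 2 = 4,\\
df_{\text{within-error}} &= (N - k)(c - 1) = 22 \times 2 = 44.
\end{aligned}
```

These values match the F (2,22), F (2,44), and F (4,44) statistics reported above.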

Post hoc analysis of all possible pairwise comparisons between listening groups and task conditions (Bonferroni correction for multiple comparisons) was performed to assess any further effects on reaction time. Significant pairwise comparisons are marked by asterisks in Figs. 1 and 2 (Fcrit = 3.41, MSE = 1367.009, df = 44, p < 0.05). In the NH Children and Early Implanted groups, reaction times to redundant AV stimuli were significantly faster than to V-alone stimuli, but not to A-alone stimuli. In the Late Implanted group, reaction times to redundant AV stimuli were significantly faster than to both A-alone and V-alone stimuli, which did not differ from one another. None of the listening groups showed significantly different reaction times between A-alone and V-alone stimulation. Taken together, these results are consistent with previous findings of greater visual dominance for the RSE in late implanted children, and of more auditory-dominant RSE effects in early implanted and normally hearing children (Bergeson et al., 2005; Schorr et al., 2005).
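The Bonferroni logic behind these post hoc comparisons can be sketched in a few lines. This is a generic illustration, not necessarily the authors' exact procedure (which used a pooled MSE and an F criterion); the function name `bonferroni` and the example p-values are hypothetical.

```python
"""Minimal sketch of a Bonferroni correction for a family of pairwise tests.

Assumption: raw p-values for each pairwise comparison are already available
(e.g., from paired t-tests); the labels and values below are hypothetical.
"""


def bonferroni(p_values, alpha=0.05):
    """Return {label: (adjusted_p, significant)} for a dict of raw p-values.

    Each raw p-value is multiplied by the number of comparisons m and capped
    at 1.0, which is equivalent to testing each raw p against alpha / m.
    """
    m = len(p_values)
    result = {}
    for label, p in p_values.items():
        adjusted = min(1.0, p * m)
        result[label] = (adjusted, adjusted < alpha)
    return result


if __name__ == "__main__":
    # Hypothetical raw p-values for three within-group condition contrasts.
    raw = {"AV vs A": 0.030, "AV vs V": 0.004, "A vs V": 0.400}
    for label, (p_adj, sig) in bonferroni(raw).items():
        print(f"{label}: adjusted p = {p_adj:.3f}, significant = {sig}")
```

With three comparisons, a raw p of 0.030 becomes 0.090 and no longer reaches significance, which illustrates why a difference can look large in a figure yet fail the corrected test.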

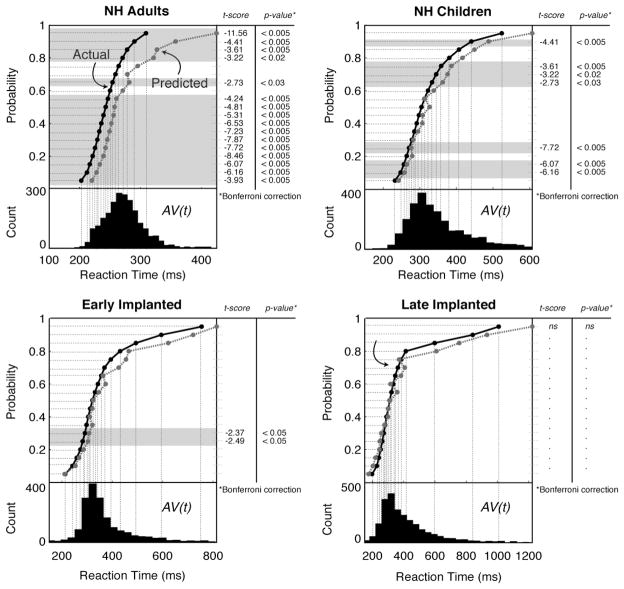

3.3. Miller’s test for the race model inequality

Miller’s test of the race model inequality (Miller, 1982) tests for significant violations of the race model, that is, whether the CDF for the AV condition, F_AV(t), exceeds the bound predicted from the unimodal CDFs, B_AV(t) = F_A(t) + F_V(t). Equivalently, a violation occurs when the AV reaction time at a given percentile is shorter than the corresponding percentile of B_AV(t). Figure 3 shows these two functions for each listening group. Group response histograms for the redundant AV condition, AV(t), appear below the CDF pairs for each group. For groups in which a violation was present, the mode of the AV(t) histogram falls within the percentiles that violated the race model inequality. Paired t-tests comparing F_AV(t) and B_AV(t) revealed significant violations of the race model inequality in all groups except the late implanted group, which did not show a violation at any of the tested percentiles. Percentiles at which a significant violation occurred are shown by the shaded regions underlying the CDFs in Fig. 3. A significant violation of the race model inequality is taken as evidence for coactivation of cortical pathways that are specific to multisensory input (Miller, 1982; Ulrich et al., 2007).
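The percentile comparison described above can be sketched as follows for a single participant, with a paired t statistic computed across participants at each percentile. This is a simplified illustration under stated assumptions (step-function empirical CDFs, percentiles read off the pooled RT grid); it is not the interpolation algorithm of Ulrich et al. (2007), and all function names are hypothetical.

```python
"""Sketch of Miller's (1982) race model inequality test on percentile RTs.

Hypothetical choices (not taken from the study): step-function empirical
CDFs, percentiles read off the pooled RT grid, and a paired t statistic
across participants at each percentile.
"""
import math
import statistics
from bisect import bisect_right


def ecdf(sample, t):
    """Proportion of RTs in `sample` that are <= t."""
    s = sorted(sample)
    return bisect_right(s, t) / len(s)


def first_reaching(cdf, p, grid):
    """Smallest t on the sorted `grid` with cdf(t) >= p."""
    for t in grid:
        if cdf(t) >= p:
            return t
    return grid[-1]


def percentile_rts(rt_a, rt_v, rt_av, percentiles):
    """Return (AV percentile RTs, race-model-bound percentile RTs)."""
    grid = sorted(set(rt_a) | set(rt_v) | set(rt_av))

    def f_av(t):  # F_AV(t)
        return ecdf(rt_av, t)

    def bound(t):  # B_AV(t) = min(1, F_A(t) + F_V(t))
        return min(1.0, ecdf(rt_a, t) + ecdf(rt_v, t))

    av_q = [first_reaching(f_av, p, grid) for p in percentiles]
    bound_q = [first_reaching(bound, p, grid) for p in percentiles]
    return av_q, bound_q


def paired_t(xs, ys):
    """Paired t statistic for mean(xs - ys); negative means xs is faster."""
    d = [x - y for x, y in zip(xs, ys)]
    return statistics.mean(d) / (statistics.stdev(d) / math.sqrt(len(d)))
```

In use, one would call `percentile_rts` for every participant in a group, collect the AV and bound values at each percentile, and pass them to `paired_t`; a reliably negative t (one-tailed) marks a violation at that percentile. Because many percentiles are tested, Type I error accumulates (Kiesel et al., 2007).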

Fig. 3.

Miller’s test of the race model inequality. For each listening group, the lower sub-panel shows the group count histogram of reaction times to the combined AV stimuli. The upper sub-panel shows the CDFs for the combined AV stimuli (F_AV(t), solid black line labeled “Actual” in the NH Adults panel) and for the predicted AV reaction times (B_AV(t), dashed dark-gray line labeled “Predicted” in the NH Adults panel). Each percentile tested for a violation of the race model inequality is marked by a dashed light-gray line; shaded regions over these lines indicate percentiles that violated the race model inequality (i.e., the AV reaction time at that percentile was significantly shorter than predicted by B_AV(t)). Significant t-scores and p-values are displayed to the right of each upper sub-panel, aligned with the corresponding percentile rank (shaded regions).

4. Discussion

In the present study, we examined psychophysical responses to basic sensory and multisensory input in a group of children who were deprived of auditory input for varying periods of time during development. These children later had hearing restored via electrical stimulation of the auditory nerve with a CI. At issue was the extent to which possible reorganization of central auditory pathways may affect multisensory processing after introduction of auditory input via implantation.

We examined the behavioral consequences of such reorganization by measuring reaction times to the detection of stimuli from different sensory modalities: participants were presented with simple auditory or visual stimuli that occurred either alone or simultaneously. Regardless of age or listening experience, mean reaction times to combined multisensory stimuli were faster than to stimuli presented in only one modality. Thus, neither early sensory deprivation nor cochlear implantation appears to abolish the basic RSE, and some degree of multisensory integration occurs.

As listening experience decreased, overall reaction times tended to increase regardless of stimulus condition. These findings support the hypothesis that, in a typically functioning nervous system, experience with sensory input determines processing efficiency within the system. However, late implanted children who had worn their devices for several years performed more slowly than early implanted children who had worn their devices for the same amount of time. This indicates that processing efficiency is also limited by a sensitive period for auditory stimulation, which is necessary to drive the typical development of functions such as multisensory integration (Bergeson et al., 2005; Schorr et al., 2005). This result is consistent with our previous finding that late implanted children who have worn their CIs for as long as 8–10 years show abnormal cortical potentials (Sharma and Dorman, 2006). Together, these findings suggest that a key component in attaining optimal multimodal integration is the development of typical processing latencies in unimodal sensory processing.

Participants in the present study included three groups of children from a previous study in which brain source analyses were conducted on the P1 CAEP (Gilley et al., 2008). In that study, children implanted before the end of the sensitive period for CAS plasticity showed brain activity in auditory cortex contralateral to CI stimulation. Conversely, children implanted after the sensitive period showed P1 sources outside of auditory cortex altogether. These findings were interpreted as evidence that auditory pathways undergo significant reorganization following long periods of auditory deprivation. Given these findings, it is possible that, with prolonged periods of deafness, neural circuits biologically reserved for auditory processing, or for integrating auditory input with other sensory channels, are recruited by other brain functions. In such a case, these atypical pathways may be inefficient for processing multisensory information once sensory input is restored. If children in this study are capable of multimodal integration, as evidenced by their basic RSE, but their reaction times do not violate the race model inequality, then we would conclude that they do not achieve this integration by typical coactivation mechanisms, and that coactivation mechanisms depend on normal early sensory input. Perhaps, when early sensory input is deprived, unimodal inputs are instead integrated in multimodal associative cortices.
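Why an RSE can appear without coactivation is easiest to see in a pure race, where statistical facilitation (Raab, 1962) alone speeds redundant trials. The following is a minimal simulation sketch; the normal finishing-time distributions, their means and SDs, and the function names are hypothetical assumptions, not data from this study.

```python
"""Sketch: statistical facilitation produces an RSE without coactivation.

Hypothetical assumptions (not study data): A and V channel finishing times
are independent and normally distributed with the means/SDs below (ms);
on AV trials the response is triggered by whichever channel finishes first.
"""
import random
import statistics


def simulate_race(n_trials=5000, seed=1, a=(350.0, 60.0), v=(360.0, 60.0)):
    """Return lists of simulated RTs for A-alone, V-alone, and AV trials."""
    rng = random.Random(seed)
    rt_a = [rng.gauss(*a) for _ in range(n_trials)]
    rt_v = [rng.gauss(*v) for _ in range(n_trials)]
    # Independent race on redundant trials: the first finisher wins.
    rt_av = [min(rng.gauss(*a), rng.gauss(*v)) for _ in range(n_trials)]
    return rt_a, rt_v, rt_av


def redundancy_gain(rt_a, rt_v, rt_av):
    """RSE = faster unimodal mean RT minus redundant (AV) mean RT."""
    return min(statistics.mean(rt_a), statistics.mean(rt_v)) - statistics.mean(rt_av)


if __name__ == "__main__":
    rt_a, rt_v, rt_av = simulate_race()
    print(f"RSE from a pure race: {redundancy_gain(rt_a, rt_v, rt_av):.1f} ms")
```

Because the channels are independent, P(min ≤ t) = F_A(t) + F_V(t) − F_A(t)F_V(t), which never exceeds Miller's bound; a positive RSE therefore does not by itself imply coactivation.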

One explanation for observing a basic RSE in the absence of coactivation can be derived from the parallel grains model (PGM) (Miller and Ulrich, 2003). The PGM posits that each stimulus potentially activates some number of independent channels within the modality-specific pathways. Each of these channels, or “grains”, contributes to the overall response activity in the neural pathway. At the receiving end of this pathway is a neural integrator, which requires a sufficient number of activated grains to reach a decision criterion (e.g., elicitation of a motor response). Within the PGM, an increase in the number of active grains results in a decreased reaction time (Miller and Ulrich, 2003). In simple reaction time tasks, such as the one in the present study, the PGM readily accounts for coactivation processes during violations of the race model (Miller and Ulrich, 2003; Schwarz, 2006). If multimodal associative cortices act as such integrators, then simultaneous input from multiple sources (e.g., arising from multiple unimodal pathways) would decrease the time needed to reach the decision criterion, because more active grains contribute to the process than when input comes from only one modality. Such an integrative process would be compromised, however, if grains from one of the input modalities were no longer sufficient to speed attainment of the decision criterion.
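The integrator idea in the PGM can be illustrated with a small simulation. This is a deliberately simplified sketch loosely inspired by Miller and Ulrich (2003), not their full model: the fixed grain counts, the common exponential activation rate, the criterion of 5 grains, the 200 ms residual time, and all function names are hypothetical assumptions.

```python
"""Simplified parallel-grains sketch (loosely after Miller & Ulrich, 2003).

Hypothetical assumptions, for illustration only: each stimulus activates a
fixed number of grains; every grain's activation time is exponential with a
common rate (per ms); the integrator responds once `criterion` grains are
active; a constant residual (motor) time is added to every trial.
"""
import random
import statistics


def grain_rt(n_grains, rate, criterion, residual, rng):
    """RT = residual time + time at which the criterion-th grain becomes active."""
    if n_grains < criterion:
        raise ValueError("the criterion cannot exceed the number of grains")
    times = sorted(rng.expovariate(rate) for _ in range(n_grains))
    return residual + times[criterion - 1]


def simulate_pgm(n_a=20, n_v=20, rate=0.002, criterion=5,
                 residual=200.0, n_trials=2000, seed=7):
    """Return mean RTs (ms) for A-alone, V-alone, and redundant AV trials."""
    rng = random.Random(seed)
    rt_a = [grain_rt(n_a, rate, criterion, residual, rng) for _ in range(n_trials)]
    rt_v = [grain_rt(n_v, rate, criterion, residual, rng) for _ in range(n_trials)]
    # On AV trials, grains from both modalities feed a single integrator,
    # so the criterion is reached sooner on average.
    rt_av = [grain_rt(n_a + n_v, rate, criterion, residual, rng)
             for _ in range(n_trials)]
    return statistics.mean(rt_a), statistics.mean(rt_v), statistics.mean(rt_av)


if __name__ == "__main__":
    a, v, av = simulate_pgm()
    print(f"balanced: A={a:.0f} V={v:.0f} AV={av:.0f} gain={min(a, v) - av:.0f} ms")
    # Hypothetically weaken the auditory input: fewer auditory grains.
    a2, v2, av2 = simulate_pgm(n_a=5)
    print(f"weak A:   A={a2:.0f} V={v2:.0f} AV={av2:.0f} gain={min(a2, v2) - av2:.0f} ms")
```

Under these assumptions the redundant-signal gain shrinks when one modality contributes only a few grains, which is one way to picture the compromised integration described above.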

In this and other studies, congenitally deaf children with relatively long periods of auditory deprivation show lasting changes not only to the auditory system but also in visual functions, whether they rely on CIs (Bergeson et al., 2005; Schorr et al., 2005), use hearing aids, or use no assistive devices (Mitchell and Neville, 2002; Quittner et al., 1994). In such cases, the deprivation of auditory input early in life places pressure on the visual system to assume functions that it would not normally assume, and this may canalize visual development along an atypical path (Mitchell and Maslin, 2007). In this study and others (Bergeson et al., 2005; Schorr et al., 2005), we see evidence that visual processing remains dominant in multimodal integration even after auditory input is restored via a CI. This raises the possibility that multimodal coactivation processes do not develop normally when early input is dominated by one sensory system, and that such early dominance leads to a long-lasting bias in sensory processing and organization toward the dominant modality.

Acknowledgments

We would like to thank the two anonymous reviewers for their helpful suggestions and expert opinion on this manuscript. We wish to thank the children and their families for their enthusiastic participation in this study. This research was supported by funding from the National Institutes of Health (NIH-NIDCD R01-DC004552 and NIH-NIDCD R01-DC006257) to author AS.

References

- Andersen RA, Snyder LH, Bradley DC, Xing J. Multimodal representation of space in the posterior parietal cortex and its use in planning movements. Annu Rev Neurosci. 1997;20:303–330. doi: 10.1146/annurev.neuro.20.1.303.

- Armstrong BA, Neville HJ, Hillyard SA, Mitchell TV. Auditory deprivation affects processing of motion, but not color. Brain Res Cogn Brain Res. 2002;14(3):422–434. doi: 10.1016/s0926-6410(02)00211-2.

- Bavelier D, Brozinsky C, Tomann A, Mitchell T, Neville H, Liu G. Impact of early deafness and early exposure to sign language on the cerebral organization for motion processing. J Neurosci. 2001;21(22):8931–8942. doi: 10.1523/JNEUROSCI.21-22-08931.2001.

- Bavelier D, Neville HJ. Cross-modal plasticity: where and how? Nat Rev Neurosci. 2002;3(6):443–452. doi: 10.1038/nrn848.

- Bavelier D, Tomann A, Hutton C, Mitchell T, Corina D, Liu G, et al. Visual attention to the periphery is enhanced in congenitally deaf individuals. J Neurosci. 2000;20(17):RC93. doi: 10.1523/JNEUROSCI.20-17-j0001.2000.

- Beauchamp MS, Cox RW, DeYoe EA. Graded effects of spatial and featural attention on human area MT and associated motion processing areas. J Neurophysiol. 1997;78(1):516–520. doi: 10.1152/jn.1997.78.1.516.

- Bergeson TR, Pisoni DB, Davis RA. Development of audiovisual comprehension skills in prelingually deaf children with cochlear implants. Ear Hear. 2005;26(2):149–164. doi: 10.1097/00003446-200504000-00004.

- Eggermont JJ, Ponton CW, Don M, Waring MD, Kwong B. Maturational delays in cortical evoked potentials in cochlear implant users. Acta Otolaryngol. 1997;117(2):161–163. doi: 10.3109/00016489709117760.

- Fine I, Finney EM, Boynton GM, Dobkins KR. Comparing the effects of auditory deprivation and sign language within the auditory and visual cortex. J Cogn Neurosci. 2005;17(10):1621–1637. doi: 10.1162/089892905774597173.

- Finney EM, Fine I, Dobkins KR. Visual stimuli activate auditory cortex in the deaf. Nat Neurosci. 2001;4(12):1171–1173. doi: 10.1038/nn763.

- Gilley PM, Sharma A, Dorman M, Martin K. Developmental changes in refractoriness of the cortical auditory evoked potential. Clin Neurophysiol. 2005;116(3):648–657. doi: 10.1016/j.clinph.2004.09.009.

- Gilley PM, Sharma A, Dorman MF. Cortical reorganization in children with cochlear implants. Brain Res. 2008;1239:56–65. doi: 10.1016/j.brainres.2008.08.026.

- Hockley NS, Polka L. A developmental study of audiovisual speech perception using the McGurk paradigm. The Journal of the Acoustical Society of America. 1994;96(5):3309.

- Kandel ER, Schwartz JH, Jessell TM. Principles of Neural Science. 4th ed. McGraw-Hill/Appleton & Lange; 2000.

- Kiesel A, Miller J, Ulrich R. Systematic biases and Type I error accumulation in tests of the race model inequality. Behav Res Methods. 2007;39(3):539–551. doi: 10.3758/bf03193024.

- Kinchla R. Detecting target elements in multielement arrays: A confusability model. Perception and Psychophysics. 1974;15(1):149–158.

- Kinchla R. The role of structural redundancy in the perception of visual targets. Perception and Psychophysics. 1977;22(1):19–30.

- Klinke R, Hartmann R, Heid S, Tillein J, Kral A. Plastic changes in the auditory cortex of congenitally deaf cats following cochlear implantation. Audiol Neurootol. 2001;6(4):203–206. doi: 10.1159/000046833.

- Kral A, Hartmann R, Tillein J, Heid S, Klinke R. Delayed maturation and sensitive periods in the auditory cortex. Audiol Neurootol. 2001;6(6):346–362. doi: 10.1159/000046845.

- Kral A, Tillein J, Heid S, Hartmann R, Klinke R. Postnatal cortical development in congenital auditory deprivation. Cereb Cortex. 2005;15(5):552–562. doi: 10.1093/cercor/bhh156.

- Lee DJ, Cahill HB, Ryugo DK. Effects of congenital deafness in the cochlear nuclei of Shaker-2 mice: an ultrastructural analysis of synapse morphology in the endbulbs of Held. J Neurocytol. 2003;32(3):229–243. doi: 10.1023/B:NEUR.0000010082.99874.14.

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264:746–748. doi: 10.1038/264746a0.

- Miller J. Divided attention: evidence for coactivation with redundant signals. Cognit Psychol. 1982;14(2):247–279. doi: 10.1016/0010-0285(82)90010-x.

- Miller J, Ulrich R. Simple reaction time and statistical facilitation: a parallel grains model. Cogn Psychol. 2003;46(2):101–151. doi: 10.1016/s0010-0285(02)00517-0.

- Mitchell TV, Maslin MT. How vision matters for individuals with hearing loss. Int J Audiol. 2007;46(9):500–511. doi: 10.1080/14992020701383050.

- Mitchell TV, Neville HJ. Effects of age and experience on the development of neurocognitive systems. In: Zani A, Proverbio AM, editors. The Cognitive Physiology of Mind. New York: Academic Press; 2002. pp. 225–244.

- Mitchell TV, Neville HJ. Asynchronies in the development of electrophysiological responses to motion and color. J Cogn Neurosci. 2004;16(8):1363–1374. doi: 10.1162/0898929042304750.

- Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ. Multisensory auditory-visual interactions during early sensory processing in humans: a high-density electrical mapping study. Brain Res Cogn Brain Res. 2002;14(1):115–128. doi: 10.1016/s0926-6410(02)00066-6.

- Mooshagian E, Kaplan J, Zaidel E, Iacoboni M. Fast visuomotor processing of redundant targets: the role of the right temporo-parietal junction. PLoS ONE. 2008;3(6):e2348. doi: 10.1371/journal.pone.0002348.

- Neville H, Bavelier D. Human brain plasticity: evidence from sensory deprivation and altered language experience. Prog Brain Res. 2002;138:177–188. doi: 10.1016/S0079-6123(02)38078-6.

- Neville HJ, Lawson D. Attention to central and peripheral visual space in a movement detection task: an event-related potential and behavioral study. I. Normal hearing adults. Brain Res. 1987a;405(2):253–267. doi: 10.1016/0006-8993(87)90295-2.

- Neville HJ, Lawson D. Attention to central and peripheral visual space in a movement detection task: an event-related potential and behavioral study. II. Congenitally deaf adults. Brain Res. 1987b;405(2):268–283. doi: 10.1016/0006-8993(87)90296-4.

- Neville HJ, Lawson D. Attention to central and peripheral visual space in a movement detection task. III. Separate effects of auditory deprivation and acquisition of a visual language. Brain Res. 1987c;405(2):284–294. doi: 10.1016/0006-8993(87)90297-6.

- Ponton CW, Don M, Eggermont JJ, Waring MD, Kwong B, Masuda A. Auditory system plasticity in children after long periods of complete deafness. Neuroreport. 1996;8(1):61–65. doi: 10.1097/00001756-199612200-00013.

- Ponton CW, Don M, Eggermont JJ, Waring MD, Masuda A. Maturation of human cortical auditory function: differences between normal-hearing children and children with cochlear implants. Ear Hear. 1996;17(5):430–437. doi: 10.1097/00003446-199610000-00009.

- Ponton CW, Moore JK, Eggermont JJ. Prolonged deafness limits auditory system developmental plasticity: evidence from an evoked potentials study in children with cochlear implants. Scand Audiol Suppl. 1999;51:13–22.

- Quittner AL, Smith LB, Osberger MJ, Mitchell TV, Katz DB. The impact of audition on the development of visual attention. Psychological Science. 1994;5(6):347–353.

- Raab DH. Statistical facilitation of simple reaction times. Trans N Y Acad Sci. 1962;24:574–590. doi: 10.1111/j.2164-0947.1962.tb01433.x.

- Robbins AM, Renshaw JJ, Osberger MJ. Test of Common Phrases. Indianapolis: Indiana University School of Medicine; 1995.

- Schorr EA, Fox NA, van Wassenhove V, Knudsen EI. Auditory-visual fusion in speech perception in children with cochlear implants. Proc Natl Acad Sci U S A. 2005;102(51):18748–18750. doi: 10.1073/pnas.0508862102.

- Schwarz W. On the relationship between the redundant signals effect and temporal order judgments: parametric data and a new model. J Exp Psychol Hum Percept Perform. 2006;32(3):558–573. doi: 10.1037/0096-1523.32.3.558.

- Sharma A, Dorman MF. Central auditory development in children with cochlear implants: clinical implications. Adv Otorhinolaryngol. 2006;64:66–88. doi: 10.1159/000094646.

- Sharma A, Dorman MF, Kral A. The influence of a sensitive period on central auditory development in children with unilateral and bilateral cochlear implants. Hear Res. 2005;203(1–2):134–143. doi: 10.1016/j.heares.2004.12.010.

- Sharma A, Dorman MF, Spahr A, Todd NW. Early cochlear implantation in children allows normal development of central auditory pathways. Ann Otol Rhinol Laryngol Suppl. 2002;189:38–41. doi: 10.1177/00034894021110s508.

- Sharma A, Dorman MF, Spahr AJ. A sensitive period for the development of the central auditory system in children with cochlear implants: implications for age of implantation. Ear Hear. 2002a;23(6):532–539. doi: 10.1097/00003446-200212000-00004.

- Sharma A, Dorman MF, Spahr AJ. Rapid development of cortical auditory evoked potentials after early cochlear implantation. Neuroreport. 2002b;13(10):1365–1368. doi: 10.1097/00001756-200207190-00030.

- Sharma A, Gilley PM, Dorman MF, Baldwin R. Deprivation-induced cortical reorganization in children with cochlear implants. Int J Audiol. 2007;46(9):494–499. doi: 10.1080/14992020701524836.

- Snyder LH, Batista AP, Andersen RA. Coding of intention in the posterior parietal cortex. Nature. 1997;386(6621):167–170. doi: 10.1038/386167a0.

- Stein BE, Meredith MA. Multisensory integration. Neural and behavioral solutions for dealing with stimuli from different sensory modalities. Ann N Y Acad Sci. 1990;608:51–65; discussion 65–70. doi: 10.1111/j.1749-6632.1990.tb48891.x.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci. 2008;9(4):255–266. doi: 10.1038/nrn2331.

- Ulrich R, Miller J, Schroter H. Testing the race model inequality: an algorithm and computer programs. Behav Res Methods. 2007;39(2):291–302. doi: 10.3758/bf03193160.

- Ungerleider LG, Haxby JV. ‘What’ and ‘where’ in the human brain. Curr Opin Neurobiol. 1994;4(2):157–165. doi: 10.1016/0959-4388(94)90066-3.