Abstract

Visual cortex activity in the blind has been shown in Braille literate people, which raises the question of whether Braille literacy influences cross-modal reorganization. We used fMRI to examine visual cortex activation during semantic and phonological tasks with auditory presentation of words in two late-onset blind individuals who lacked Braille literacy. Multiple visual cortical regions were activated in the Braille naive individuals. Positive BOLD responses were noted in lower tier visuotopic (e.g., V1, V2, VP, and V3) and several higher tier visual areas (e.g., V4v, V8, and BA 37). Activity was more extensive and cross-correlation magnitudes were greater during the semantic compared to the phonological task. These results with Braille naive individuals plausibly suggest that visual deprivation alone induces visual cortex reorganization. Cross-modal reorganization of lower tier visual areas may be recruited by developing skills in attending to selected non-visual inputs (e.g., Braille literacy, enhanced auditory skills). Such learning might strengthen remote connections with multisensory cortical areas. Of necessity, the Braille naive participants must attend to auditory stimulation for language. We hypothesize that learning to attend to non-visual inputs probably strengthens the remaining active synapses following visual deprivation, and thereby increases cross-modal activation of lower tier visual areas when performing highly demanding non-visual tasks, of which reading Braille is just one example.

Keywords: Blindness, Human, Magnetic resonance imaging, Visual cortex (physiology)

Imaging studies (i.e., PET and fMRI) have shown activity in visual cortex of blind people when they read Braille [1,8,18]. Braille literate blind people also exhibit visual cortical activity during a variety of non-Braille tactile [6,7,16] and auditory tasks [2,5,21], which raises the question of whether Braille literacy influences visual cortex cross-modal plasticity. However, sensory deprivation alone may be sufficient to induce visual cortex reorganization because short-term visual deprivation in sighted people made visual cortex more excitable [3] and responsive during discrimination of Braille letters or auditory pitch [12]. In addition, a recent study described visual cortex activation during a tactile discrimination task in two Braille naive, late-onset blind individuals [17]. The activated regions in these late-onset blind individuals involved higher tier visual areas that included a part of Brodmann area 19, which overlapped with V5/MT+, and Brodmann area 37. In the study by Sadato et al. [17], Braille patterns were moved back-and-forth across the fingertips. However, tactile motion stimulation activates V5/MT+ in non-visually deprived sighted people [6,10]. Non-visual stimulation also activates BA 37 in sighted people [6]. Furthermore, findings in Braille literate late- and early-onset blind people nearly always also include lower tier, visuotopic areas (e.g., V1 and V2). Thus, a question remains as to whether visual cortex cross-modal reorganization relies upon Braille literacy. Performance of highly demanding language tasks exclusive of Braille reading might facilitate activation of lower tier visuotopic cortical areas. We addressed this question by examining visual cortex activation in two Braille naive late-onset blind individuals during semantic and phonological tasks with auditory presentation of words. Results were compared to observations previously obtained with the identical protocol in a control group of normally sighted people and Braille literate late-onset blind people [5].

We imaged two male late-onset blind individuals. One was a 50-year-old who began to lose sight at 29 due to uveitis. He became totally blind at 49, 1 year prior to participating in this study. The second individual was a 19-year-old who began to lose sight at 16 due to detached retinas. He became totally blind 2 months prior to scanning for this study. Neither participant was Braille literate when imaged. Additional data were obtained from eight sighted (two females; mean age ± S.D., 36.1 ± 14) and seven Braille literate late-onset blind individuals (four females; 51.3 ± 11.2). All but one of the Braille literate late-onset blind participants were right-handed by the Edinburgh handedness assessment [13]. Except for ophthalmologic pathology in the blind individuals, all participants were free of neurological disease and had structural MR images that indicated normal brain anatomy. Informed consent was obtained following guidelines approved by the Human Studies Committee of Washington University. All experiments were conducted in accordance with the Declaration of Helsinki and carried out with written consent of all participants. Consent documents were read to the blind participants.

Two lexical tasks were performed using a long, single event design as previously described [5]. Participants performed either a semantic task that required identifying a concept shared by a list of heard words or a phonological task that required identifying a rhyme sound shared by all of the heard words. Each word list was created using Sound Forge (Sony, Inc.). A word list contained 16 equal loudness words spoken by a female. The digitized words in each list initially had 100 ms silent periods inserted between them. The lists were presented in 10 s. All participants were instructed to close their eyes and listen to a cue word followed by the word list. After hearing a word list cued to “meaning”, participants had to generate immediately and covertly a word that linked to an idea shared by all words in the list. For example, participants might have generated “meal” after hearing “butter, food, eat, sandwich, rye, jam, milk, flour, jelly, etc. . . .”. After hearing a word list cued to “rhyme”, participants had to generate covertly a word that rhymed with all of the heard words. Generated words had to differ from those included in a heard list. A new list of unique words was presented for every trial (i.e., single event) each of which consisted of approximately a 1 s cue interval, 10 s for listening to the word list, and a ~17 s control period. Participant understanding of the tasks was assessed using overt responses during pre-scan practice trials.

Intervals for word presentations and control periods within a trial were synchronized to scanner frame repetition times (TR). The cue and word list intervals occupied four image frames (11.376 s) and six frames were for the control period (17.064 s). The fMRI paradigm consisted of six runs, each of which started with eight initial frames to allow for steady state magnetization followed by 12 trials. The order of experimental conditions was two repeats of three successive trials of the semantic followed by three trials of the phonological task. These cycles yielded six trials of each task per scan and 36 trials for each task in six functional scans. All participants heard the same word lists, but the sequential order was reversed for half of the participants. Task performance was assessed following the last scan by asking participants to recognize whether a particular word had been heard during the scans. The post-scan memory test included 72 heard words, one randomly chosen from each word list, and 72 new words. For each participant, we computed d′ and response bias (β) scores for recognizing old and rejecting new words.
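The recognition scores named above can be illustrated with a short sketch of the standard equal-variance signal detection formulas, d′ = z(H) − z(F) and β = exp((z(F)² − z(H)²)/2), where H and F are the hit and false alarm rates. The counts below are hypothetical, and the 0.5 correction applied to each cell is an assumption made here to keep rates away from 0 and 1; the text does not say how extreme rates were handled.

```python
from math import exp
from statistics import NormalDist


def dprime_beta(hits, misses, false_alarms, correct_rejections):
    """Return (d', beta) for an old/new recognition test.

    Assumption: a log-linear correction (0.5 added to each cell) keeps the
    hit and false alarm rates strictly between 0 and 1.
    """
    norm = NormalDist()
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z_hit = norm.inv_cdf(hit_rate)
    z_fa = norm.inv_cdf(fa_rate)
    d_prime = z_hit - z_fa
    # Likelihood ratio at the criterion: phi(z_hit) / phi(z_fa)
    beta = exp((z_fa ** 2 - z_hit ** 2) / 2.0)
    return d_prime, beta


# Hypothetical counts for a test with 72 old and 72 new words
d_prime, beta = dprime_beta(hits=30, misses=42,
                            false_alarms=15, correct_rejections=57)
print(f"d' = {d_prime:.2f}, beta = {beta:.2f}")
```

A β above 1 corresponds to a conservative bias, that is, a tendency to answer "new" when uncertain.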

Functional magnetic resonance imaging (fMRI) was acquired with a Siemens Vision 1.5 tesla whole-body scanner and a standard circularly polarized head coil. A custom, single-shot asymmetric spin-echo-planar sequence (EPI) was used (echo time (TE) = 50 ms, TR = 2.844 s, flip angle = 90°, FOV = 240 mm, in-plane resolution = 3.75 mm × 3.75 mm). Twenty-one 6 mm contiguous axial slices were obtained for whole brain coverage. Additional structural images provided the basis for atlas transformation. These included a high-resolution T1-weighted 3D magnetization prepared rapid gradient echo (MP-RAGE) sequence (TR = 1242 ms, TE = 4 ms, flip angle = 12°, inversion time = 300 ms, sagittal slices of 1 mm × 1 mm × 1.25 mm) and a T2-weighted (T2W) spin echo sequence of axial slices (TR = 3800 ms, TE = 22 ms, resolution = 1 mm × 1 mm × 6 mm).
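As a quick consistency check, the TR given above and the frame counts of the trial design can be combined to recover the stated interval durations. The sketch below only restates that arithmetic; the frames per run and run duration are derived values, not figures reported in the text.

```python
TR_S = 2.844          # frame repetition time in seconds
TASK_FRAMES = 4       # cue plus word list
CONTROL_FRAMES = 6    # control period
INITIAL_FRAMES = 8    # steady-state frames at the start of each run
TRIALS_PER_RUN = 12
RUNS = 6


def design_summary():
    """Derive interval and run durations from the frame counts and TR."""
    frames_per_trial = TASK_FRAMES + CONTROL_FRAMES
    frames_per_run = INITIAL_FRAMES + TRIALS_PER_RUN * frames_per_trial
    return {
        "task_s": round(TASK_FRAMES * TR_S, 3),
        "control_s": round(CONTROL_FRAMES * TR_S, 3),
        "trial_s": round(frames_per_trial * TR_S, 3),
        "frames_per_run": frames_per_run,
        "run_s": round(frames_per_run * TR_S, 3),
        # two tasks share the trials equally
        "trials_per_task": RUNS * TRIALS_PER_RUN // 2,
    }


summary = design_summary()
for name, value in summary.items():
    print(name, value)
```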

EPI images were preprocessed to correct for head movements and to account for intensity differences due to interpolated acquisition [5–7]. The EPI images were co-registered to the high resolution MP-RAGE image and then spatially normalized to a T1-weighted representative target that conforms to the Talairach system [19]. Atlas transformation of functional (EPI) data was achieved by computing a sequence of affine transforms as follows: EPI → T2W → MP-RAGE → atlas representative target. Slice plane stretch in addition to rigid body motion (six parameters) partially compensated for EPI distortion and accomplished cross-modal registration. Images were transformed to atlas space, resampled in 2 mm isotropic voxels and spatially smoothed (4 mm FWHM) before statistical analyses.
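The chain EPI → T2W → MP-RAGE → atlas can be illustrated by multiplying 4 × 4 homogeneous matrices. The sketch below uses made-up translation and scaling matrices, not the transforms estimated in the study, and it assumes (the text does not say so) that the chain is collapsed into one composite matrix so that a coordinate is mapped in a single step.

```python
def matmul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def apply(m, point):
    """Apply a 4x4 homogeneous transform to an (x, y, z) point."""
    v = (point[0], point[1], point[2], 1.0)
    return tuple(sum(m[i][k] * v[k] for k in range(4)) for i in range(3))


def translation(tx, ty, tz):
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def scaling(sx, sy, sz):
    return [[sx, 0.0, 0.0, 0.0],
            [0.0, sy, 0.0, 0.0],
            [0.0, 0.0, sz, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


# Hypothetical transforms for each registration step
epi_to_t2w = matmul(translation(1.0, -2.0, 0.5), scaling(1.0, 1.02, 1.0))
t2w_to_mprage = translation(0.3, 0.1, -1.2)
mprage_to_atlas = matmul(translation(0.0, -18.0, 4.0), scaling(0.95, 0.97, 0.90))

# The rightmost matrix acts first: EPI -> T2W -> MP-RAGE -> atlas
epi_to_atlas = matmul(mprage_to_atlas, matmul(t2w_to_mprage, epi_to_t2w))

voxel_mm = (10.0, -40.0, 25.0)
stepwise = apply(mprage_to_atlas, apply(t2w_to_mprage, apply(epi_to_t2w, voxel_mm)))
composite = apply(epi_to_atlas, voxel_mm)
print(stepwise, composite)
```

Both routes give the same atlas coordinate; working with the composite means the functional data need to be interpolated only once.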

Analysis of individual subject blood oxygen level dependent (BOLD) activity per voxel utilized the general linear model to obtain model free response estimates for each 10-frame event type [5–7]. The design matrix consisted of 20 task trial regressors across all runs, and, for each run, high pass filter, linear trend and intercept regressors. Statistical parameter maps were based on t-statistics obtained from cross-correlating the response estimates with a set of assumed hemodynamic response functions (hrf) [9]. A set of hrfs was created by convolving a box-car rectangular representation of four frames for the word list with a delayed gamma function [4] and adding a variable delay from 1 to 5.5 s in 0.5 s steps. A t-statistic map was generated by choosing the maximum absolute t-statistic per voxel. We converted the t-statistic maps for each individual to equally probable z-scores that were thresholded on the basis of Monte Carlo simulations at a multiple-comparisons corrected false-detection rate of p = 0.05 (z = 3 over 45 contiguous face connected voxels). Identification of common regional activation in the Braille naive blind individuals for each task was based on the conjunction of significantly activated nodes on the cortical surface from each participant using a population-average, landmark- and surface-based atlas (PALS) [20]. Responses from blind and sighted individuals were compared using extracted regional time courses of average percent signal change per voxel in volumes for visual cortex regions that were defined by projecting the average fiducial surface representation to volume space assuming 3 mm thick cortex [6,20].
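The hrf set and the choice of the maximum absolute t-statistic can be sketched as follows. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the gamma parameters (n = 3, τ = 1.25 s), the 0.1 s integration step, the conversion of a correlation to a t-statistic with n − 2 degrees of freedom, and the synthetic response are all choices made here for the example.

```python
from math import exp, factorial, sqrt

TR_S = 2.844       # frame repetition time in seconds
N_FRAMES = 10      # frames per estimated event response
TASK_FRAMES = 4    # box-car length in frames (word list)
DT = 0.1           # integration step in seconds (assumption)


def gamma_hrf(t, delay, n=3, tau=1.25):
    """Delayed gamma impulse response; n and tau are illustrative values."""
    s = t - delay
    if s <= 0:
        return 0.0
    return (s / tau) ** (n - 1) * exp(-s / tau) / (tau * factorial(n - 1))


def model_timecourse(delay):
    """Box-car of four frames convolved with the gamma, sampled once per frame."""
    steps = int(round(TASK_FRAMES * TR_S / DT))
    return [sum(gamma_hrf(f * TR_S - k * DT, delay) for k in range(steps)) * DT
            for f in range(N_FRAMES)]


def pearson_r(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_from_r(r, n):
    r = max(min(r, 1.0 - 1e-12), -1.0 + 1e-12)  # guard against |r| == 1
    return r * sqrt((n - 2) / (1.0 - r * r))


def best_t(response):
    """Return (delay, t) for the hrf whose t-statistic has the largest magnitude."""
    delays = [1.0 + 0.5 * k for k in range(10)]   # 1.0 to 5.5 s in 0.5 s steps
    scored = [(d, t_from_r(pearson_r(model_timecourse(d), response), N_FRAMES))
              for d in delays]
    return max(scored, key=lambda pair: abs(pair[1]))


# Synthetic response: a scaled copy of the 3.0 s model plus a small fixed ripple
synthetic = [2.0 * v + 0.01 * (-1) ** i
             for i, v in enumerate(model_timecourse(3.0))]
delay, t_value = best_t(synthetic)
print(delay, round(t_value, 1))
```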

The two Braille naive and seven Braille literate late-onset blind performed similarly in the post-scan memory test. The two Braille naive participants had d′ values of 0.67 and 0.71 and, respectively, β values of 1.8 and 1.6. The Braille literate participants had a d′ mean = 0.71, S.E.M. = 0.11 and a β mean = 1.4, S.E.M. = 0.2. Sighted participants had slightly lower performance: a d′ mean = 0.48, S.E.M. = 0.07 and a β mean = 1.3, S.E.M. = 0.14.

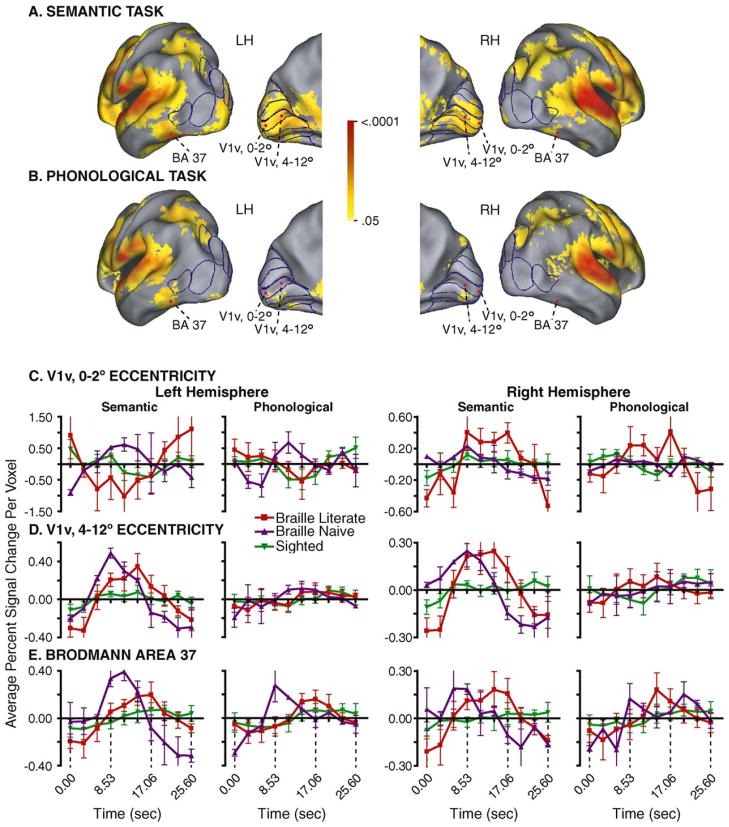

Fig. 1A shows that for both Braille naive late-onset blind participants the semantic task activated multiple lower tier visuotopic areas, which included ventral and dorsal V1 and V2, VP and V3. Some activity was also detected in higher tier visual areas V4v, V3a, V7, V8, and V5/MT+. In addition, activity was noted bilaterally in a non-visuotopic portion of BA 37 that is located in the fusiform gyrus just anterior to defined visual areas (Fig. 1A). The phonological task activated many fewer areas (Fig. 1B). We previously described a similar network of activated visual areas in Braille literate late-onset blind [5]. No comparable distribution of activated occipital regions was found in sighted individuals [5]. These group distinctions were reflected in the contrast between positive BOLD responses in both groups of late-onset blind compared to predominantly flat responses in sighted individuals in all visual cortex regions (Fig. 1C–E).

Fig. 1.

Activated visual cortex in Braille naive late-onset blind and example response time courses in sighted, Braille naive and Braille literate late-onset blind people. (A) Activity distribution during a semantic language task is shown projected to the cortical surface using a population-average, landmark and surface-based atlas (PALS) [20]. The medial hemisphere view shows borders from the PALS atlas for V1, V2, V3, VP, and V4v; the angled lateral hemisphere view shows borders for the superior V7, V3a, and V3 and inferior V5/MT+ and LOC. (B) Activity distribution during a phonological language task. Illustrated cortical distributions in (A) and (B) were created with the following supervised scheme. First, multiple-comparison corrected cross-correlation z-score maps (z = 3 over 45 face-connected voxels) from each Braille naive late-onset blind participant were projected onto the associated surface nodes in 12 normal individuals. The resulting z-score values at each node were averaged across these 12 individuals (scale shows p-values of the nodal average z-scores). Displayed activation is the average of the two participants, provided that both had activation at that node. (C) Time course plots associated with the 0–2° eccentricity partition in V1v. (D) Time course plots associated with the 4–12° eccentricity partition in V1v. (E) Time course plots associated with the non-visuotopic, posterior part of BA 37. Time courses were extracted from volumes whose approximate centers are marked by magenta dots in (A) and (B). Data at each time course interval shows the group mean and S.E.M. Braille naive (NBR, purple), Braille literate (LB, red) and sighted (NS, green). Abbreviations: Brodmann area, BA; ventral primary visual area, V1v.

Braille naive and Braille literate late-onset blind showed mostly comparable positive BOLD response time courses in multiple visual areas during the semantic task. During the phonological task, small positive BOLD responses were noted only in V1v and V2d on the left and V2v and V7 on the right. Distinctions between the late-onset blind participants, however, were found in the foveal eccentricity partition of V1v (Fig. 1C, 0–2° eccentricity) where, on the left, positive responses in Braille naive contrasted with negative responses in Braille literate and sighted individuals during both tasks. At the same eccentricity on the right, larger positive BOLD responses in Braille literate contrasted with small or flat responses in Braille naive and sighted individuals during, respectively, the semantic or phonological task. Both late-onset blind groups showed similar positive BOLD responses during the semantic task and minimal to no responses during the phonological task at more peripheral eccentricities bilaterally in V1v (Fig. 1D, 4–12°).

Both late-onset blind groups showed similar BOLD responses bilaterally in BA37 (Fig. 1E). However, the time to peak of the BOLD response was shorter in the Braille naive participants, especially during the semantic task.

In Braille literate, but not sighted participants, paired t-tests found significantly larger BOLD response cross-correlation magnitudes during the semantic versus the phonological task in nearly all activated visual cortex areas (Table 3 in reference [5]). We assessed response differences between tasks in the Braille naive participants based on cross-correlation magnitude measurements that were separately computed for each run. The semantic task yielded significantly larger responses (Wilcoxon signed rank test, p < 0.05) in nearly all lower and higher tier visual areas. On the left these included V1v, V2v and V3 at 0–2° eccentricity; V1v and V1d at 4–12° eccentricity; V1v, V1d and V2v beyond 12° eccentricity; V4v, V7, and V5/MT+. On the right significantly larger responses to the semantic task were found in all visual areas except V1v, V2v and V3 at 0–2° eccentricity. With a sample of just two participants, this assessment of significant differences in Braille naive people must be viewed as provisional.
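For readers unfamiliar with the signed rank test, the sketch below computes an exact two-sided p-value by enumerating every sign assignment, which is feasible for small samples. The per-run magnitudes are invented for illustration, and pairing one semantic and one phonological value per run is an assumption about how the comparison was set up.

```python
from itertools import product


def wilcoxon_signed_rank(x, y):
    """Exact two-sided Wilcoxon signed rank test for small paired samples.

    Zero differences are dropped; tied absolute differences share the
    average rank. Returns (W_plus, p_value).
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    centre = sum(ranks) / 2.0
    observed = abs(w_plus - centre)
    # Under the null hypothesis each difference is equally likely to be + or -
    extreme = 0
    for signs in product((0, 1), repeat=n):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if abs(w - centre) >= observed - 1e-12:
            extreme += 1
    return w_plus, extreme / 2 ** n


# Hypothetical cross-correlation magnitudes for six runs in one region
semantic = [0.62, 0.58, 0.71, 0.66, 0.60, 0.69]
phonological = [0.31, 0.35, 0.39, 0.29, 0.38, 0.34]
w_plus, p_value = wilcoxon_signed_rank(semantic, phonological)
print(w_plus, p_value)
```

With six pairs, the smallest two-sided p-value the exact test can return is 2/64 ≈ 0.031, which is reached only when every run favours the same task.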

Multiple currently indistinguishable explanations possibly underlie the greater distribution and larger BOLD responses for the semantic task. One is that semantic processing occurs in lower tier visuotopic areas after blindness. Another is that the semantic task involved verbal memory, which, as previously reported [1,14], especially activates V1. It is also plausible that the semantic task more readily evoked mental imagery, which is known to activate visual cortex, including V1 [11]. However, there is little a priori justification to exclude use of mental imagery by sighted people and these participants showed no visual cortex activity. Greater difficulty for the semantic compared to the phonological task is another possible explanation of the observed response differences. However, a difficulty confound will need to be assessed in future experiments.

These results with Braille naive individuals possibly confirm a prior suggestion that visual deprivation alone may be a sufficient inducement to visual cortex reorganization [17]. By extension, this hypothesis plausibly indicates that visual cortex cross-modal plasticity does not rely upon Braille literacy. More probable is that performance of highly demanding non-visual tasks (of which Braille reading is one example) activates visuotopic cortical areas in the blind. Blindness compels reliance on auditory perceptions, and thereby encourages learning to attend to auditory stimulation. Indeed, hearing words is the only link to language for Braille naive individuals. Learned skill in attending to auditory inputs has been associated with better retention of task related sounds in blind people [15], which possibly accounts for the higher d′ scores in the late blind compared to the sighted people. We hypothesize that in Braille literate late-onset blind individuals, there is competition between auditory and tactile inputs. However, in the Braille naive individuals, there is less competition between these inputs. Thus, the effect of practice with non-visual stimulation may drive the reorganization in visual cortical areas. This is consistent with the observation of auditory, but not tactile evoked activity in lower tier visual cortex areas, respectively, in the current and a previous study with Braille naive people [17].

We interpret prior and present results as indicating that processing of non-visual inputs possibly varies by visual cortex area. An example was the differences noted between Braille naive and literate late-onset blind people in left V1 foveal versus peripheral eccentricities. The current results therefore indicate that input competition between tactile and auditory stimulation might influence distinctions between different eccentricity partitions in lower tier visual cortex areas. In a prior study involving discriminating embossed capital letters, we found that the more peripheral eccentricity partitions in lower tier visuotopic areas possibly make differential contributions to non-visual processing [6]. The observed differences in BOLD peak onset times for BA37 may be another instance of processing distinctions between Braille naive and literate late-onset blind. However, further experiments that involve a larger sample of Braille naive late-blind people are needed to determine the processes underlying the observed response distinctions in different visual cortex areas.

Sensory deprivation alone may readily enhance cross-modal properties, especially in parts of visual cortex that are multimodal in sighted people. Thus, in regions with already strong multisensory properties, a competitive shift to non-visual inputs may readily follow visual deprivation. Cross-modal activation of visual cortex after short-term visual deprivation in sighted people [12] indicates that potentially competitive access of non-visual inputs to visual cortex can begin almost immediately. In contrast, cross-modal reorganization of lower tier visual areas, which are not (or at best minimally) cross-modally responsive in sighted people, may be particularly recruited by developing skills in attending to selected non-visual inputs. Such learning might be needed to strengthen more remote connections with multisensory cortical areas. Of necessity, the Braille naive participants in the present study must attend to auditory stimulation just as Braille literate blind people, irrespective of blindness onset age, additionally learn to attend to tactile stimulation. Learning to attend to non-visual inputs, especially when performing demanding tasks, probably strengthens the active synapses induced by visual deprivation, and thereby increases cross-modal activation of lower tier visuotopic cortical areas.

Acknowledgments

We are indebted to Drs. D. Van Essen, A. Snyder, and M. McAvoy for analysis and data reconstruction software. This study was supported by NIH grant NS37237.

References

- 1. Amedi A, Raz N, Pianka P, Malach R, Zohary E. Early ‘visual’ cortex activation correlates with superior verbal memory performance in the blind. Nat Neurosci. 2003;6:758–766. doi: 10.1038/nn1072.

- 2. Arno P, De Volder AG, Vanlierde A, Wanet-Defalque MC, Streel E, Robert A, Sanabria-Bohorquez S, Veraart C. Occipital activation by pattern recognition in the early blind using auditory substitution for vision. NeuroImage. 2001;13:632–645. doi: 10.1006/nimg.2000.0731.

- 3. Boroojerdi B, Bushara KO, Corwell B, Immisch I, Battaglia F, Muellbacher W, Cohen LG. Enhanced excitability of the human visual cortex induced by short-term light deprivation. Cereb Cortex. 2000;10:529–534. doi: 10.1093/cercor/10.5.529.

- 4. Boynton GM, Engel SA, Glover GH, Heeger DJ. Linear systems analysis of functional magnetic resonance imaging in human V1. J Neurosci. 1996;16:4207–4221. doi: 10.1523/JNEUROSCI.16-13-04207.1996.

- 5. Burton H, Diamond JB, McDermott KB. Dissociating cortical regions activated by semantic and phonological tasks to heard words: a fMRI study in blind and sighted individuals. J Neurophysiol. 2003;90:1965–1982. doi: 10.1152/jn.00279.2003.

- 6. Burton H, McLaren D, Sinclair R. Reading embossed capital letters: a fMRI study in blind and sighted individuals. Hum Brain Mapp. 2005 Sep 2; doi: 10.1002/hbm.20188. [Epub ahead of print]

- 7. Burton H, Sinclair R, McLaren D. Cortical activity to vibrotactile stimulation: a fMRI study in blind and sighted individuals. Hum Brain Mapp. 2004;23:210–228. doi: 10.1002/hbm.20064.

- 8. Burton H, Snyder AZ, Conturo TE, Akbudak E, Ollinger JM, Raichle ME. Adaptive changes in early and late blind: a fMRI study of Braille reading. J Neurophysiol. 2002;87:589–611. doi: 10.1152/jn.00285.2001.

- 9. Friston K, Holmes A, Worsley K, Poline J, Frith C, Frackowiak R. Statistical parametric maps in functional imaging: a general linear approach. Hum Brain Mapp. 1995;2:189–210.

- 10. Hagen MC, Franzen O, McGlone F, Essick G, Dancer C, Pardo JV. Tactile motion activates the human middle temporal/V5 (MT/V5) complex. Eur J Neurosci. 2002;16:957–964. doi: 10.1046/j.1460-9568.2002.02139.x.

- 11. Kosslyn SM, Ganis G, Thompson WL. Neural foundations of imagery. Nat Rev Neurosci. 2001;2:635–642. doi: 10.1038/35090055.

- 12. Pascual-Leone A, Hamilton R. The metamodal organization of the brain. Prog Brain Res. 2001;134:427–445. doi: 10.1016/s0079-6123(01)34028-1.

- 13. Raczkowski D, Kalat JW, Nebes R. Reliability and validity of some handedness questionnaire items. Neuropsychologia. 1974;12:43–47. doi: 10.1016/0028-3932(74)90025-6.

- 14. Raz N, Amedi A, Zohary E. V1 Activation in congenitally blind humans is associated with episodic retrieval. Cereb Cortex. 2005;15:1459–1468. doi: 10.1093/cercor/bhi026.

- 15. Röder B, Rösler F. Memory for environmental sounds in sighted, congenitally blind and late blind adults: evidence for cross-modal compensation. Int J Psychophysiol. 2003;50:27–39. doi: 10.1016/s0167-8760(03)00122-3.

- 16. Sadato N, Okada T, Honda M, Yonekura Y. Critical period for cross-modal plasticity in blind humans: a functional MRI study. NeuroImage. 2002;16:389–400. doi: 10.1006/nimg.2002.1111.

- 17. Sadato N, Okada T, Kubota K, Yonekura Y. Tactile discrimination activates the visual cortex of the recently blind naive to Braille: a functional magnetic resonance imaging study in humans. Neurosci Lett. 2004;359:49–52. doi: 10.1016/j.neulet.2004.02.005.

- 18. Sadato N, Pascual-Leone A, Grafman J, Ibanez V, Deiber MP, Dold G, Hallett M. Activation of the primary visual cortex by Braille reading in blind subjects. Nature. 1996;380:526–528. doi: 10.1038/380526a0.

- 19. Talairach J, Tournoux P. Coplanar Stereotaxic Atlas of the Human Brain. Thieme Medical; 1988. p. 122.

- 20. Van Essen DC. A population-average, landmark- and surface-based (PALS) atlas of human cerebral cortex. NeuroImage. 2005 Sep; doi: 10.1016/j.neuroimage.2005.06.058. in press.

- 21. Weeks R, Horwitz B, Aziz-Sultan A, Tian B, Wessinger CM, Cohen LG, Hallett M, Rauschecker JP. A positron emission tomographic study of auditory localization in the congenitally blind. J Neurosci. 2000;20:2664–2672. doi: 10.1523/JNEUROSCI.20-07-02664.2000.