Abstract

The aim of this paper is to develop a semiparametric model for describing the variability of the medial representation of subcortical structures, which belongs to a Riemannian manifold, and establishing its association with covariates of interest, such as diagnostic status, age and gender. We develop a two-stage estimation procedure to calculate the parameter estimates. The first stage is to calculate an intrinsic least squares estimator of the parameter vector using the annealing evolutionary stochastic approximation Monte Carlo algorithm and then the second stage is to construct a set of estimating equations to obtain a more efficient estimate with the intrinsic least squares estimate as the starting point. We use Wald statistics to test linear hypotheses of unknown parameters and establish their limiting distributions. Simulation studies are used to evaluate the accuracy of our parameter estimates and the finite sample performance of the Wald statistics. We apply our methods to the detection of the difference in the morphological changes of the left and right hippocampi between schizophrenia patients and healthy controls using medial shape description.

Keywords: Intrinsic least squares estimator, Medial representation, Semiparametric model, Wald statistic

1 Introduction

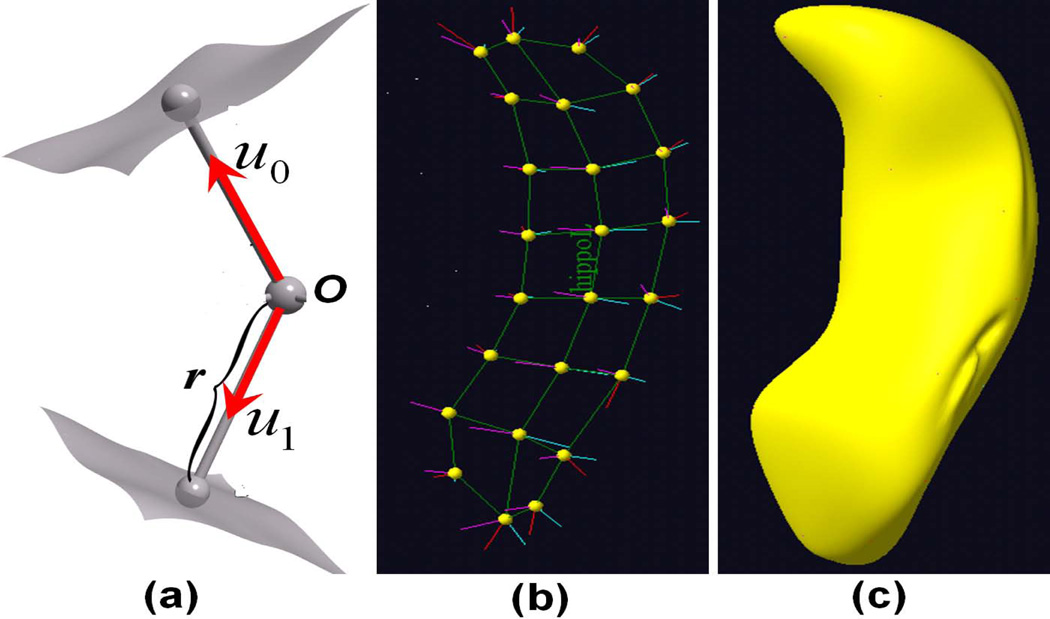

The medial representation of subcortical structures provides a useful framework for describing shape variability in local thickness, bending, and widening of these structures (Fletcher et al., 2004). In the medial representation framework, a geometric object is represented as a set of connected continuous medial primitives, called medial atoms. See Figure 1 for a hippocampus example. For 3-dimensional objects, these medial atoms are formed by the centers of the inscribed spheres and by the associated spokes from the sphere centers to the two respective tangent points on the object boundary. Specifically, a medial atom is formed by a position O, the center of the inscribed sphere; a radius r, the common spoke length; and (s0, s1), the two unit spoke directions (Pizer et al., 2003; Styner et al., 2004). A medial atom can be regarded as a point on a Riemannian manifold, M(1) = R3 × R+ × S2 × S2, where S2 is the sphere in R3 with radius one. A medial representation model consisting of K medial atoms can be described as the direct product of K copies of M(1), i.e., $M(1)^K = \prod_{k=1}^{K} M(1)$. The existing statistical analytical methods for the medial representation include principal geodesic analysis, the estimation of extrinsic and intrinsic means, and a permutation test for comparing medial representation data from two groups (Fletcher et al., 2004). The scientific interests of some neuroimaging studies, however, typically focus on establishing the association between subcortical structure and a set of covariates, particularly diagnostic status, age, and gender, thus requiring a regression modeling framework for medial representation.

Figure 1.

(a) A medial representation model at an atom, where O is the center of the inscribed sphere, r is the common spoke length, and (s0, s1) are the two unit spoke directions; (b) a skeleton of a hippocampus with 24 medial atoms; (c) the smoothed surface of the hippocampus.

There are several challenging issues including multiple directions on S2 and the complex correlation structure among different components of M(1) in developing medial representation regression models with a set of covariates. Although there is a sparse literature on regression modeling of a single directional response and a set of covariates of interest (Mardia and Jupp, 1983; Jupp and Mardia 1989), these regression models of directional data are based on particular parametric distributions, such as the von Mises-Fisher distribution (Mardia, 1975; Mardia and Jupp, 1983; Presnell et al., 1998). For instance, existing circular regression models assume that the angular response follows the von Mises-Fisher distribution with either the angular mean ηi or the concentration parameter κi being associated with the covariates xi (Gould, 1969; Johnson and Wehrly, 1978; Fisher and Lee, 1992). However, it remains unknown whether it is appropriate to directly apply these parametric models for a single directional measure to simultaneously characterize the two spoke directions at each atom, which are correlated. Moreover, the two spoke directions may be correlated with other components of each atom and this provides further challenges in developing a parametric model to simultaneously model all components of each atom of the medial representation.

The rest of this paper is organized as follows. In Section 2, we formulate the semiparametric regression model and introduce the two-stage estimation procedure for estimating the regression coefficients. We then establish the asymptotic properties of our estimates and develop Wald statistics to carry out hypothesis testing. Simulation studies in Section 3.1 are used to assess the finite sample performance of the parameter estimates and Wald test statistics. In Section 3.2, we illustrate the application of our statistical methods to the detection of the difference in morphological changes of the hippocampi between schizophrenia patients and healthy controls in a neuroimaging study of schizophrenia.

2 Theory

2.1 Inverse Link functions

Suppose we have an exogenous q × 1 covariate vector xi and a medial representation for a particular sub-cortical structure, denoted by Mi = {mi(d) : d ∈ 𝒟}, for the i–th subject, where d represents an atom of the medial representation. For notational simplicity, we temporarily drop atom d from our notation. We formally introduce a semiparametric regression model for medial representation responses and covariates of interest from n subjects. The regression model involves modeling a conditional mean of a medial representation response mi at an atom given xi, denoted by μi(β) = μ(xi, β), where β is a p × 1 vector of regression coefficients in ℬ ⊂ Rp. Thus, μ(·, ·) is a map from Rq × Rp to M(1) and μi(β) = (μoi(β)T, μri(β), μ0i(β)T, μ1i(β)T)T, which is a 10 × 1 vector and μoi(β), μri(β), μ0i(β), and μ1i(β) are the ‘conditional means’ of the location Oi, the radius ri, and the two spoke directions s0i and s1i respectively, given xi, for the i-th subject. Note that for spoke directions, we borrow the term conditional mean for random variables in Euclidean space.

We need to formalize the notion of conditional mean explicitly. For the location component of a medial representation, we may set μoi(β) = (g1(xi, β1), g2(xi, β2), g3(xi, β3))T, where gk(·, ·) is a known inverse link function and βk is a pk × 1 coefficient vector for k = 1, 2, 3. There are many different ways of specifying gk(xi, βk). The simplest one is the linear inverse link function $g_k(x_i, \beta_k) = x_i^T\beta_k$. We may also represent gk(xi, βk) as a linear combination of basis functions {ψj(xi) : j = 1, …, J}, such as B-splines, that is, $g_k(x_i, \beta_k) = \sum_{j=1}^{J}\psi_j(x_i)\beta_{kj}$, in which βkj is the j-th component of βk. In this way, we can approximate a nonlinear function of xi using the linear combination of basis functions. For the radius component, we may use μri(β) = g4(xi, β4), where β4 is a p4 × 1 coefficient vector for a medial representation radius. Since a radius is always positive, a natural inverse link function is $g_4(x_i, \beta_4) = \exp(x_i^T\beta_4)$, among other possible choices.
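As a minimal sketch of the location and radius links just described, the linear and exponential inverse link functions can be written as follows. The function names, the two-component covariate vector, and the coefficient values are hypothetical and serve only as an illustration.

```python
import numpy as np

def location_mean(x, beta1, beta2, beta3):
    """Linear inverse links g_k(x, beta_k) = x^T beta_k for the three coordinates."""
    return np.array([x @ beta1, x @ beta2, x @ beta3])

def radius_mean(x, beta4):
    """Exponential inverse link: exp(x^T beta4) is positive for any beta4."""
    return float(np.exp(x @ beta4))

# Hypothetical covariate vector (intercept, centered age) and coefficients.
x = np.array([1.0, 0.5])
mu_o = location_mean(x,
                     np.array([0.2, 1.0]),
                     np.array([0.0, -1.0]),
                     np.array([1.0, 0.0]))
mu_r = radius_mean(x, np.array([-3.0, 2.0]))
```

The exponential link guarantees a positive fitted radius without constraining β4, which is why it is a natural choice for this component.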

As the two spoke directions at each atom of a medial representation are spherical responses, we develop a link function μ0i(β) ∈ S2 for the first spoke direction; for notational simplicity, we consider a specific atom. Let xi,d be a qd × 1 vector of all the discrete covariates, let xi,c be a qc × 1 vector of all the continuous covariates and their potential interactions with xi,d, let β5d and β5c be the regression parameters corresponding to xi,d and xi,c, respectively, and let β5 contain all unknown parameters in β5d and β5c. From now on, we assume that all covariates have been centered to have mean zero. We assume that all first spoke directions associated with the same discrete covariate vector xi,d are concentrated around a center on the sphere given by

| (1) |

where θ(xi,d) and ϕ(xi,d) are, respectively, the colatitude and the longitude, and β5d includes all unknown parameters θ(xi,d) and ϕ(xi,d) for different xi,d.
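A minimal sketch of such a center, parameterized by colatitude and longitude, is given below. It assumes the common convention (sin θ cos ϕ, sin θ sin ϕ, cos θ); the convention used in (1) may order the first two coordinates differently. The dictionary of covariate levels and its angle values are hypothetical.

```python
import numpy as np

def sphere_center(theta, phi):
    """Unit vector with colatitude theta and longitude phi.

    ASSUMPTION: standard spherical-coordinate convention, which may differ
    from the ordering used in equation (1).
    """
    return np.array([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)])

# Hypothetical parameters: one (theta, phi) pair per level of the discrete covariates.
beta5d = {("female", "control"): (1.2, 1.2),
          ("female", "patient"): (1.1, 1.3)}
centers = {level: sphere_center(*angles) for level, angles in beta5d.items()}
```

Each distinct value of xi,d gets its own pair of angles, so β5d grows with the number of discrete covariate combinations.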

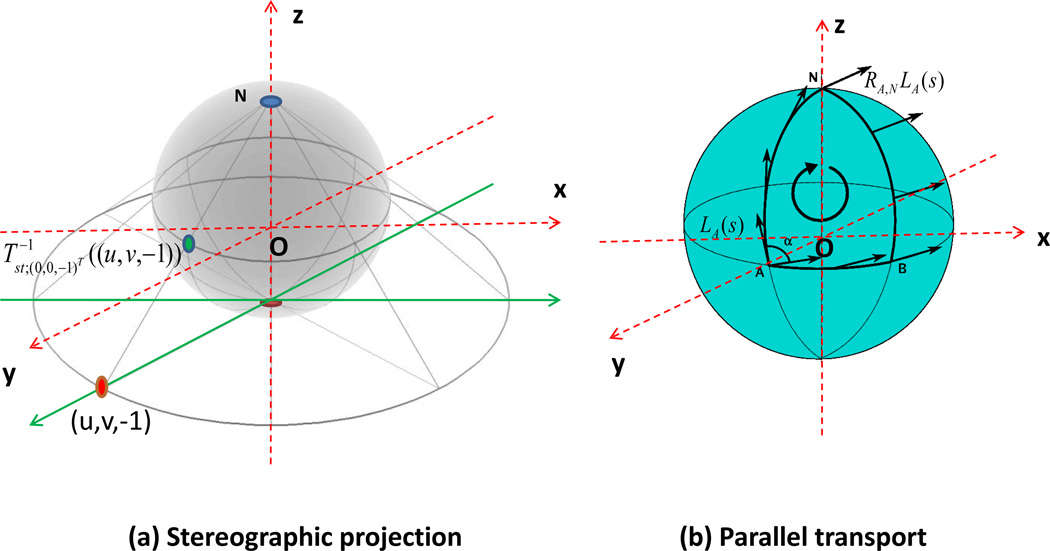

We then describe the stereographic projection of μ0i(β) onto the plane with base point g5(xi,d, β5d), denoted by Tst;g5(xi,d,β5d)(μ0i(β)) (Downs, 2003). A graphic illustration of the stereographic projection is given in Figure 2 (a). The stereographic projection Tst;g5(xi,d,β5d)(μ0i(β)) is defined as the point of intersection of the plane passing through g5(xi,d, β5d) with the normal vector g5(xi,d, β5d), which is given by g5(xi,d, β5d)T {(u, v, w)T − g5(xi,d, β5d)} = 0 for (u, v, w) ∈ R3, and the line passing through −g5(xi,d, β5d) and μ0i(β): μ0i(β) − t{g5(xi,d, β5d) + μ0i(β)} for t ∈ (−∞, ∞). Solving for t and substituting, it can be shown that, for μ0i(β) ≠ −g5(xi,d, β5d), Tst;g5(xi,d,β5d)(μ0i(β)) is given by

$$T_{st;g_5(x_{i,d},\beta_{5d})}\{\mu_{0i}(\beta)\} = \frac{2\mu_{0i}(\beta) + \{1 - g_5(x_{i,d},\beta_{5d})^T\mu_{0i}(\beta)\}\,g_5(x_{i,d},\beta_{5d})}{1 + g_5(x_{i,d},\beta_{5d})^T\mu_{0i}(\beta)}.$$

Figure 2.

Graphic illustration of (a) stereographic projection and (b) parallel transport. In panels (a) and (b), N and O denote the north pole (0, 0, 1) and the origin (0, 0, 0), respectively, and the red dash lines are the x, y, and z-axes. In panel (a), the red point (u, v, −1) is a selected point on the plane z = −1 and the green point is the inverse map of the stereographic projection mapping from (u, v, −1) back to S2. In panel (b), the point A is on S2, LA(s) is in TAS2, and RA,NLA(s) ∈ TNS2 is the parallel transport of LA(s) from A to the north pole N.
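The stereographic projection described above and its inverse can be sketched as follows. The formulas are derived here directly from the stated plane and line, writing g for the base point; the function names are illustrative.

```python
import numpy as np

def stereographic(g, mu):
    """Project mu in S^2 onto the plane {p : g^T (p - g) = 0} from the point -g."""
    a = float(g @ mu)
    if np.isclose(a, -1.0):
        raise ValueError("mu is antipodal to g: the projection is undefined")
    return (2.0 * mu + (1.0 - a) * g) / (1.0 + a)

def inverse_stereographic(g, p):
    """Map a point p on the plane through g back to the unit sphere."""
    d = p + g                      # direction of the line from -g to p
    return -g + 4.0 * d / float(d @ d)

south = np.array([0.0, 0.0, -1.0])
mu = np.array([0.6, 0.0, -0.8])    # a unit vector near the south pole
p = stereographic(south, mu)       # lies on the plane z = -1
mu_back = inverse_stereographic(south, p)
```

With the south pole as base point, the inverse map applied to (u, v, −1) reduces to (4u, 4v, u² + v² − 4)/(u² + v² + 4).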

Let R be a rotation matrix in SO(3) such that RT = R−1 and det(R) = 1, where det(R) denotes the determinant of R and SO(3) is the set of 3 × 3 rotation matrices. By applying the rotation matrix R to both g5(xi,d, β5d) and μ0i(β), we have

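$$T_{st;Rg_5(x_{i,d},\beta_{5d})}\{R\mu_{0i}(\beta)\} = R\,T_{st;g_5(x_{i,d},\beta_{5d})}\{\mu_{0i}(\beta)\}. \tag{2}$$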

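We consider a specific rotation matrix for rotating s1 = (s1,u, s1,v, s1,w)T ∈ S2 to s2 = (s2,u, s2,v, s2,w)T ∈ S2, denoted by Rs1,s2, such that Rs1,s2s1 = s2. We need to calculate the rotation angle $\eta = \arccos(s_1^T s_2)$ and the rotation axis s3 = s1 × s2/‖s1 × s2‖ = (s3,u, s3,v, s3,w)T, where s1 × s2 = (s1,vs2,w − s1,ws2,v, s1,ws2,u − s1,us2,w, s1,us2,v − s1,vs2,u)T and ‖·‖ is the Euclidean norm of a vector. Then, Rs1,s2 is given by

$$R_{s_1,s_2} = \begin{pmatrix}
\cos\eta + s_{3,u}^2 c_\eta & s_{3,u}s_{3,v}c_\eta - s_{3,w}\sin\eta & s_{3,u}s_{3,w}c_\eta + s_{3,v}\sin\eta \\
s_{3,u}s_{3,v}c_\eta + s_{3,w}\sin\eta & \cos\eta + s_{3,v}^2 c_\eta & s_{3,v}s_{3,w}c_\eta - s_{3,u}\sin\eta \\
s_{3,u}s_{3,w}c_\eta - s_{3,v}\sin\eta & s_{3,v}s_{3,w}c_\eta + s_{3,u}\sin\eta & \cos\eta + s_{3,w}^2 c_\eta
\end{pmatrix}, \tag{3}$$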

where cη = 1 − cos(η).
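The rotation Rs1,s2 can be sketched with the standard axis-angle (Rodrigues) formula, which is assumed here to match the construction above. The function name and the handling of the degenerate cases (s1 = s2 and s1 = −s2) are choices made for this illustration.

```python
import numpy as np

def rotation_between(s1, s2, tol=1e-12):
    """Return R in SO(3) with R @ s1 = s2 for unit vectors s1, s2 (not antipodal)."""
    s1 = np.asarray(s1, dtype=float)
    s2 = np.asarray(s2, dtype=float)
    axis = np.cross(s1, s2)
    norm = np.linalg.norm(axis)
    if norm < tol:
        if s1 @ s2 > 0:
            return np.eye(3)                      # s1 equals s2: no rotation needed
        raise ValueError("antipodal points: the rotation axis is not unique")
    s3 = axis / norm                              # unit rotation axis
    eta = np.arccos(np.clip(s1 @ s2, -1.0, 1.0))  # rotation angle
    c_eta = 1.0 - np.cos(eta)
    # Skew-symmetric cross-product matrix of s3.
    K = np.array([[0.0, -s3[2], s3[1]],
                  [s3[2], 0.0, -s3[0]],
                  [-s3[1], s3[0], 0.0]])
    return np.cos(eta) * np.eye(3) + np.sin(eta) * K + c_eta * np.outer(s3, s3)

north = np.array([0.0, 0.0, 1.0])
target = np.array([1.0, 2.0, 2.0]) / 3.0
R = rotation_between(north, target)
```

The resulting matrix is orthogonal with determinant one, so its inverse is simply its transpose.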

The inverse link function μ0i(β) is explicitly given as follows. By letting R = Rg5(xi,d,β5d),(0,0,−1)T in (2), in which (0, 0,−1)T is the south pole of S2, we have

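$$R_{g_5(x_{i,d},\beta_{5d}),(0,0,-1)^T}\,T_{st;g_5(x_{i,d},\beta_{5d})}\{\mu_{0i}(\beta)\} = T_{st;(0,0,-1)^T}\{R_{g_5(x_{i,d},\beta_{5d}),(0,0,-1)^T}\,\mu_{0i}(\beta)\}. \tag{4}$$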

We assume that

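$$T_{st;(0,0,-1)^T}\{R_{g_5(x_{i,d},\beta_{5d}),(0,0,-1)^T}\,\mu_{0i}(\beta)\} = (x_{i,c}^T\beta_{5c},\,-1)^T, \tag{5}$$

where β5c is a qc × 2 matrix. Let $T^{-1}_{st;(0,0,-1)^T}(\cdot)$ be the inverse map of the stereographic projection mapping from the plane with base point (0, 0, −1) back to S2 such that

$$T^{-1}_{st;(0,0,-1)^T}\{(u, v, -1)^T\} = \frac{(4u,\;4v,\;u^2+v^2-4)^T}{u^2+v^2+4}.$$

See Figure 2 (a) for details. Since Rg5(xi,d,β5d),(0,0,−1)T ∈ SO(3), its inverse is its transpose, and the inverse link function μ0i(β) is given by

$$\mu_{0i}(\beta) = R^{T}_{g_5(x_{i,d},\beta_{5d}),(0,0,-1)^T}\;T^{-1}_{st;(0,0,-1)^T}\{(x_{i,c}^T\beta_{5c},\,-1)^T\}. \tag{6}$$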

When β5c = 0, indicating no continuous covariate effect, μ0i(β) reduces to g5(xi,d, β5d). Similarly, for the second spoke direction, we introduce β6d and β6c as the regression parameters corresponding to xi,d and xi,c, respectively, and then we define g6(xi,d, β6d) and μ1i(β), respectively, as the center associated with the same discrete covariate vector xi,d and the inverse link function by following (1) and (6). We have discussed various inverse link functions for μ(xi, β), but these link functions can be misspecified for a given data set. To avoid such misspecification, we may estimate these inverse link functions nonparametrically; this is a topic for future research.
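The spoke-direction link of this subsection can be sketched as follows: place the linear predictor on the plane z = −1, map it back to the sphere, and rotate the south pole back to the center g5. The sketch assumes that the projected mean is (xi,cT β5c, −1)T with β5c a qc × 2 matrix; the function names and numerical values are hypothetical.

```python
import numpy as np

SOUTH = np.array([0.0, 0.0, -1.0])

def rotation_between(s1, s2, tol=1e-12):
    """Rotation R with R @ s1 = s2 (axis-angle form; s1, s2 unit, not antipodal)."""
    axis = np.cross(s1, s2)
    norm = np.linalg.norm(axis)
    if norm < tol:
        if s1 @ s2 > 0:
            return np.eye(3)
        raise ValueError("antipodal points: the rotation axis is not unique")
    k = axis / norm
    eta = np.arccos(np.clip(s1 @ s2, -1.0, 1.0))
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.cos(eta) * np.eye(3) + np.sin(eta) * K + (1 - np.cos(eta)) * np.outer(k, k)

def spoke_mean(g5, x_c, beta5c):
    """Conditional mean direction: center g5 perturbed by continuous covariates.

    ASSUMPTION: the stereographic image of the rotated mean is (x_c^T beta5c, -1).
    """
    R = rotation_between(g5, SOUTH)              # rotates the center to the south pole
    u, v = x_c @ beta5c                          # point (u, v, -1) on the plane z = -1
    on_sphere = np.array([4 * u, 4 * v, u * u + v * v - 4]) / (u * u + v * v + 4)
    return R.T @ on_sphere                       # rotate back towards g5

g5 = np.array([1.0, 0.0, 0.0])                   # hypothetical center
x_c = np.array([0.3, -1.2])                      # hypothetical centered covariates
mu_null = spoke_mean(g5, x_c, np.zeros((2, 2)))
mu_alt = spoke_mean(g5, x_c, np.array([[0.5, 0.0], [0.0, 0.2]]))
```

With β5c = 0 the plane point is the south pole itself, so the mean direction returns to g5, in line with the remark above.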

2.2 Intrinsic regression model

Now, we introduce a definition of a residual to ensure that μi(β) is the proper conditional mean of mi given xi. For instance, in a classical linear model, the response is the sum of the regression function and the residual, and the conditional mean of the response equals the regression function. Given two points mi and μi(β) on the manifold, we need to define the residual or difference between them. At μi(β), we have the tangent space of M(1), denoted by Tμi(β)M(1), which is a Euclidean space representing a first order approximation of the manifold M(1) near μi(β). We calculate the projection of mi onto Tμi(β)M(1), denoted by Lμi(β)(mi), as follows:

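$$L_{\mu_i(\beta)}(m_i) = \big(\{O_i - \mu_{oi}(\beta)\}^T,\ \log\{r_i/\mu_{ri}(\beta)\},\ L_{\mu_{0i}(\beta)}(s_{0i})^T,\ L_{\mu_{1i}(\beta)}(s_{1i})^T\big)^T, \tag{7}$$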

where Lμki(β)(ski) = arccos(μki(β)T ski)s̃ki/‖s̃ki‖, in which s̃ki = ski − {μki(β)T ski}μki(β) for k = 0, 1. Thus, Lμi(β) (mi) can be regarded as the residual or difference between mi and μi(β) in Tμi(β)M(1). Geometrically, Lμi(β)(mi) is associated with the Riemannian Exponential and Logarithm maps on M(1).

We introduce the Riemannian Exponential and Logarithm maps on M(1). Let the tangent vector θ = (θo, θr, θs0, θs1)T ∈ TmM(1), where θo ∈ R3 is the location tangent component, θr ∈ R is the radius tangent component, and θs0 and θs1 ∈ R3 are the two directional tangent components. Let γm(t; θ) be the geodesic on M(1) passing through γm(0; θ) = m ∈ M(1) in the direction of the tangent vector θ ∈ TmM(1). The Riemannian Exponential map, denoted by Expm(·), maps the tangent vector θ at m to a point m1 ∈ M(1) and Expm(θ) = γm(1; θ). The Riemannian Logarithm map, denoted by Lm(m1), maps m1 ∈ M(1) onto the tangent vector θ = Lm(m1) ∈ TmM(1). The Riemannian Exponential map and Logarithm map are inverses of each other, that is Expm(Lm(m1)) = m1.

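Because a medial representation is the product space of several spaces, the Riemannian Exponential/Logarithm map for M(1) is the product of the Riemannian Exponential/Logarithm maps for each space. Let $m = (O^T, r, s_0^T, s_1^T)^T$ and $m_1 = (O_1^T, r_1, s_{0,1}^T, s_{1,1}^T)^T$ be two points in M(1) and θ ∈ TmM(1). We give the explicit form of the Exponential and Logarithm maps for each space of interest. For the space of locations, Expo(θo) = O + θo, and Lo(O1) = O1 − O. For the space of radii, Expr(θr) = r exp(θr) and Lr(r1) = log(r1/r). For the space S2, Exps0(θs0) = cos(‖θs0‖2)s0 + sin(‖θs0‖2) θs0/‖θs0‖2. Let $\tilde{s}_{0,1} = s_{0,1} - (s_0^T s_{0,1})s_0$. If s0 and s0,1 are not antipodal (s0 ≠ −s0,1), we can get $L_{s_0}(s_{0,1}) = \arccos(s_0^T s_{0,1})\,\tilde{s}_{0,1}/\|\tilde{s}_{0,1}\|$. Thus, for the space M(1), the Riemannian Exponential and Logarithm maps are, respectively, given by

$$\mathrm{Exp}_m(\theta) = \big(\{O + \theta_o\}^T,\ r\exp(\theta_r),\ \mathrm{Exp}_{s_0}(\theta_{s_0})^T,\ \mathrm{Exp}_{s_1}(\theta_{s_1})^T\big)^T, \tag{8}$$

$$L_m(m_1) = \big(\{O_1 - O\}^T,\ \log(r_1/r),\ L_{s_0}(s_{0,1})^T,\ L_{s_1}(s_{1,1})^T\big)^T. \tag{9}$$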
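The component-wise Exponential and Logarithm maps described above can be sketched as follows. An atom is stored as a tuple (O, r, s0, s1) and a tangent vector as a tuple of the four matching components; this data layout and the function names are choices made for the illustration.

```python
import numpy as np

def sphere_exp(s, theta, tol=1e-12):
    """Exponential map on S^2 at s for a tangent vector theta (orthogonal to s)."""
    nrm = np.linalg.norm(theta)
    if nrm < tol:
        return s.copy()
    return np.cos(nrm) * s + np.sin(nrm) * theta / nrm

def sphere_log(s, s_new, tol=1e-12):
    """Logarithm map on S^2 at s; undefined when s_new is antipodal to s."""
    c = np.clip(s @ s_new, -1.0, 1.0)
    tilde = s_new - c * s                # component of s_new orthogonal to s
    nrm = np.linalg.norm(tilde)
    if nrm < tol:
        if c > 0:
            return np.zeros(3)
        raise ValueError("antipodal points: the Logarithm map is not unique")
    return np.arccos(c) * tilde / nrm

def atom_exp(m, theta):
    O, r, s0, s1 = m
    t_o, t_r, t_s0, t_s1 = theta
    return (O + t_o, r * np.exp(t_r), sphere_exp(s0, t_s0), sphere_exp(s1, t_s1))

def atom_log(m, m_new):
    O, r, s0, s1 = m
    O1, r1, s01, s11 = m_new
    return (O1 - O, np.log(r1 / r), sphere_log(s0, s01), sphere_log(s1, s11))

m = (np.zeros(3), 1.0, np.array([0.0, 0.0, 1.0]), np.array([1.0, 0.0, 0.0]))
m_new = (np.array([1.0, 2.0, 3.0]), 2.0,
         np.array([0.0, 1.0, 0.0]), np.array([0.0, 0.6, 0.8]))
theta = atom_log(m, m_new)
m_back = atom_exp(m, theta)              # recovers m_new up to rounding
```

The round trip Exp(Log(·)) returning the original point is the inverse relationship stated in the text.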

Although the Lμi(β)(mi) ∈ Tμi(β)M(1) are in different tangent spaces, we can use parallel transport to translate them to the same tangent space at an overall base point, denoted by B(β). We choose B(β) = (0, 0, 0, 1, g̅5(β5d)T, g̅6(β6d)T)T, where g̅5(β5d) and g̅6(β6d) are the mean directions of g5(xi,d, β5d) and g6(xi,d, β6d) for all possible xi,d, respectively. We use parallel transport formulated by a rotation matrix,

| (10) |

to translate Lμi(β)(mi) ∈ Tμi(β)M(1) into {R(μi(β) ⇒ B(β))}Lμi(β)(mi) ∈ TB(β)M(1). An illustration of the parallel transport is given in Figure 2 (b). Finally, we define the rotated residual of mi with respect to μi(β) as

$$\mathcal{E}_i(\beta) = \{R(\mu_i(\beta) \Rightarrow B(\beta))\}\,L_{\mu_i(\beta)}(m_i). \tag{11}$$

The ℰi(β) are uniquely defined in the same tangent space TB(β)M(1), which is a Euclidean space.
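For a single spoke direction, the rotated residual can be sketched as follows: take the Logarithm map at the fitted direction and rotate it to the base point. The sketch covers only the S2 component and assumes that the rotation is the axis-angle rotation between the two directions; all names and values are illustrative.

```python
import numpy as np

def rotation_between(s1, s2, tol=1e-12):
    """Rotation R with R @ s1 = s2 (axis-angle form; s1, s2 unit, not antipodal)."""
    axis = np.cross(s1, s2)
    norm = np.linalg.norm(axis)
    if norm < tol:
        if s1 @ s2 > 0:
            return np.eye(3)
        raise ValueError("antipodal points: the rotation axis is not unique")
    k = axis / norm
    eta = np.arccos(np.clip(s1 @ s2, -1.0, 1.0))
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.cos(eta) * np.eye(3) + np.sin(eta) * K + (1 - np.cos(eta)) * np.outer(k, k)

def sphere_log(mu, s):
    """Logarithm map on S^2 at mu (s distinct from mu and not antipodal)."""
    c = np.clip(mu @ s, -1.0, 1.0)
    tilde = s - c * mu
    return np.arccos(c) * tilde / np.linalg.norm(tilde)

def rotated_residual(mu, s, base):
    """Residual of s about mu, transported to the tangent space at base."""
    return rotation_between(mu, base) @ sphere_log(mu, s)

mu = np.array([1.0, 0.0, 0.0])                    # fitted direction (hypothetical)
s = np.array([1.0, 1.0, 1.0]) / np.sqrt(3.0)      # observed direction (hypothetical)
base = np.array([0.0, 0.0, 1.0])                  # common base point
residual_at_mu = sphere_log(mu, s)
residual_at_base = rotated_residual(mu, s, base)
```

Because the rotation preserves lengths and sends mu to base, the transported residual is orthogonal to base and keeps the geodesic distance between s and mu as its norm.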

The intrinsic regression model for medial representations M(1) at an atom is then defined by

$$E\{\mathcal{E}_i(\beta) \mid x_i\} = 0 \tag{12}$$

for i = 1, …, n, where the expectation is taken with respect to the conditional distribution of ℰi(β) given xi (Le, 2001). In model (12), the nonparametric component is the distribution of mi given xi, which is left unspecified, while the parametric component is the mean function μi(β), which is assumed to be known. Moreover, our model (12) does not assume a homogeneous variance across all atoms and subjects. This is also desirable for real applications, because between-subject and between-atom variabilities can be substantial.

At atom d, let ℰi(β, d) be {R(μi(β, d) ⇒ B(β, d))}Lμi(β,d)(mi(d)), where μi(β, d) is the conditional mean of mi(d) given xi. Model (12) leads to an intrinsic regression model for M(1)K given by

$$E\{\mathcal{E}_i(\beta, d) \mid x_i\} = 0 \tag{13}$$

for all d ∈ 𝒟 and i = 1, …, n. As a comparison, consider a multivariate regression model Yi = Xiβ + εi and E(εi | xi) = E(Yi − Xiβ | xi) = 0, where Yi is a py × 1 vector and Xi is a py × p design matrix depending on xi. It is clear that ℰi(β, d) is closely related to εi = Yi − Xiβ in the multivariate regression model and thus the intrinsic regression model (13) for M(1)K can be regarded as a generalization of a standard multivariate regression.

The key advantage of translating tangent vectors on different tangent spaces to the same tangent space is that we can directly apply most multivariate analysis techniques in Euclidean space to the analysis of ℰi(β) (Anderson, 2003). By using parallel transport to obtain ℰi(β), we can explicitly account for the correlation structure among the components of ℰi(β) and then construct a set of estimating equations to calculate a more efficient parameter estimate. See Section 2.3 for details.

2.3 Two-stage estimation procedure

We propose a two-stage estimation procedure for computing parameter estimates for the semi-parametric medial representation regression model (12) as follows.

Stage 1 is to calculate an intrinsic least squares estimate of the parameter β, denoted by β̂I, by minimizing the square of the geodesic distance,

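$$\hat{\beta}_I = \arg\min_{\beta \in \mathcal{B}} D_n(\beta) = \arg\min_{\beta \in \mathcal{B}} \sum_{i=1}^{n} D_{n,i}(\beta), \tag{14}$$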

where Dn,i(β) = dist{mi, μi(β)}2 and dist{mi, μi(β)} is the shortest distance between mi and μi(β) on M(1). Since Dn(β) can be written as the sum of four terms: , we can minimize for k = 1, 2, 3, 4 independently when they do not share any common parameters.
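The decomposition of Dn,i(β) into location, radius, and two spoke terms can be sketched as follows, assuming the unweighted product metric implied by the component-wise Logarithm map. The tuple layout (O, r, s0, s1) and the function name are illustrative.

```python
import numpy as np

def squared_geodesic_terms(m, mu):
    """Four additive terms of dist(m, mu)^2 on R^3 x R+ x S^2 x S^2.

    ASSUMPTION: unweighted product metric, so the squared distance is the
    squared norm of the Logarithm map taken component by component.
    """
    O, r, s0, s1 = m
    mu_o, mu_r, mu_0, mu_1 = mu
    location = float(np.sum((O - mu_o) ** 2))
    radius = float(np.log(r / mu_r) ** 2)
    spoke0 = float(np.arccos(np.clip(mu_0 @ s0, -1.0, 1.0)) ** 2)
    spoke1 = float(np.arccos(np.clip(mu_1 @ s1, -1.0, 1.0)) ** 2)
    return location, radius, spoke0, spoke1

north = np.array([0.0, 0.0, 1.0])
east = np.array([1.0, 0.0, 0.0])
m = (np.zeros(3), 1.0, north, east)                       # observed atom
mu = (np.array([1.0, 0.0, 0.0]), np.e, east, east)        # fitted atom
terms = squared_geodesic_terms(m, mu)
D_ni = sum(terms)
```

When the four components have no parameters in common, each term can be summed over subjects and minimized on its own, which is the separation noted above.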

Computationally, we develop an annealing evolutionary stochastic approximation Monte Carlo algorithm (Liang, 2011) for obtaining β̂I, whose details can be found in the supplementary report. Moreover, according to our experience, the traditional optimization methods including the quasi-Newton method do not perform well for optimizing Dn(β) and strongly depend on the starting value of β. When μi(β) takes a relatively complicated form, Dn(β) is generally not concave and can have multiple local modes. For instance, since μ1i(β) is a nonlinear function of β and may not be a concave function of β over ℬ, our prior experiences have shown that the quasi-Newton method for optimizing can easily converge to local minima.

The estimate β̂I is closely associated with the intrinsic mean (Bhattacharya and Patrangenaru, 2005) and does not involve the concept of parallel transport. If we replace |arccos(s)|2 by 1 − s in , then our fitting procedure in Stage 1 is effectively a maximum likelihood estimation for a model with the Fisher-distributed errors on the sphere and thus β̂I is an extrinsic estimate. It will be shown in Theorem 1 below that β̂I is a consistent estimate, but β̂I is not efficient, since it does not account for the correlation among the different components of medial representations.

Stage 2 is to calculate a more efficient estimator of β, denoted by β̂E, which is a solution of

$$\sum_{i=1}^{n} \hat{h}_E(x_i)\,\hat{V}^{-1}\,\mathcal{E}_i(\beta) = 0, \tag{15}$$

where ĥE(xi) = ∂βμi(β̂I){R(μi(β̂I) ⇒ B(β̂I))}−1 = ∂βμi(β̂I){R(B(β̂I) ⇒ μi(β̂I))}, , and V̂ = V(β̂I).

Equation (15) in Stage 2 is invariant to the rotation matrix R(B(β) ⇒ P0), where P0 = (0, 0, 0, 1, 0, 0, 1, 0, 0, 1)T represents the center at the origin (0, 0, 0)T, the unit radius r = 1, and the two spoke directions pointing towards the north pole (0, 0, 1)T. Specifically, we can use the rotation matrix R(B(β) ⇒ P0) to rotate ℰi(β) to {R(B(β) ⇒ P0)}ℰi(β) for all i. Correspondingly, ĥE(xi) and V−1 are, respectively, changed to ĥE(xi){R(B(β) ⇒ P0)}T and {R(B(β) ⇒ P0)}V−1{R(B(β) ⇒ P0)}T. Thus, writing R0 = R(B(β) ⇒ P0) and using R0TR0 = I, the rotated version of ĥE(xi)V−1ℰi(β) equals

$$\hat{h}_E(x_i)R_0^T\,\{R_0 V^{-1} R_0^T\}\,R_0\,\mathcal{E}_i(\beta) = \hat{h}_E(x_i)\,V^{-1}\,\mathcal{E}_i(\beta),$$

which is independent of R(B(β) ⇒ P0).

Model (12) is a conditional mean model (Chamberlain, 1987; Newey, 1993). The conditional mean model implies that E{h(xi)ℰi(β)} = E[h(xi)E{ℰi(β) | xi}] = 0 for any vector function h(·), which may depend on β. After some algebraic calculations, it can be shown that calculating β̂I is equivalent to solving $\sum_{i=1}^{n} h_I(x_i)\mathcal{E}_i(\beta) = 0$ with hI(xi) = ∂βμi(β)R(B(β) ⇒ μi(β)). However, it has been shown (Chamberlain, 1987; Newey, 1993) that the optimal function has the form hopt(xi, β) = E{∂βℰi(β) | xi}var{ℰi(β) | xi}−1, which achieves the semiparametric efficiency bound for β. Therefore, hI(xi) is not an optimal function and thus the intrinsic least squares estimate in Stage 1 is not an efficient estimator.

Since E{∂βℰi(β) | xi} and var{ℰi(β) | xi} for each β do not have a simple form, we must estimate them nonparametrically, which leads to a nonparametric estimate of hopt(x, β), denoted by ĥopt(x, β). Although we may solve the estimating equations to calculate the efficient estimator of β, it can be computationally challenging to solve Fn(β) since nonparametrically, estimating the 8 × p matrix E{∂βℰi(β) | xi} and the 8 × 8 inverse matrix of var{ℰi(β) | xi} can be very unstable for a relatively small sample size. Thus, we replace var{ℰi(β) | xi} by var{ℰi(β)} and approximate E{∂βℰi(β) | xi} by ∂βμi(β)R(B(β) ⇒ μi(β)). Moreover, in order to avoid calculating ∂βμi(β)R(B(β) ⇒ μi(β)) and var{ℰi(β)} during each numerical iteration, we calculate them at β̂I and then construct the objective function for calculating β̂E. The two-stage estimation procedure leads to substantial computational efficiency, since solving the complex estimating equations (15) is relatively easy starting from β̂I. An alternative way is to directly minimize , which is much more complex than Dn(β) and thus is computationally difficult.

As a comparison between β̂E and β̂I, we consider a multivariate nonlinear regression model Yi = F(xi, β) + εi with E(εi | xi) = E{Yi − F(xi, β) | xi} = 0 and var(εi | xi) = Σ, where F(xi, β) is a vector of nonlinear functions of xi and β. In this case, ℰi(β) = εi = Yi − F(xi, β), , and ĥE(xi) = ∂βF(xi, β̂I). Then, Σ can be estimated by using . Equation (15) reduces to , whose solution is just β̂E. Under mild conditions, it can be shown that compared with β̂I, β̂E is a more efficient estimator of β and its asymptotic covariance is given by . In the context of highly concentrated spoke data, our intrinsic regression model reduces to the multivariate nonlinear regression model and similar to the multivariate nonlinear regression model, the two-stage approach can increase statistical efficiency in estimating β.
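The Euclidean comparison above can be sketched in code for the simpler linear case Yi = Xiβ + εi, which is a deliberate simplification of the nonlinear model F(xi, β). Stage 1 is ordinary least squares; Stage 2 solves the weighted estimating equation with the residual covariance estimated at the Stage 1 fit. The simulated design, the error covariance, and all names are hypothetical.

```python
import numpy as np

def two_stage(Y, X):
    """Two-stage estimate for Y_i = X_i beta + eps_i.

    Y has shape (n, py); X has shape (n, py, p).
    Returns (beta_I, beta_E, V_hat).
    """
    n = Y.shape[0]
    # Stage 1: least squares, ignoring correlation among response components.
    XtX = np.einsum("nij,nik->jk", X, X)
    XtY = np.einsum("nij,ni->j", X, Y)
    beta_I = np.linalg.solve(XtX, XtY)
    # Residual covariance evaluated once, at the Stage 1 estimate.
    resid = Y - np.einsum("nij,j->ni", X, beta_I)
    V_hat = resid.T @ resid / n
    V_inv = np.linalg.inv(V_hat)
    # Stage 2: solve sum_i X_i^T V_hat^{-1} (Y_i - X_i beta) = 0.
    A = np.einsum("nij,ik,nkl->jl", X, V_inv, X)
    b = np.einsum("nij,ik,nk->j", X, V_inv, Y)
    beta_E = np.linalg.solve(A, b)
    return beta_I, beta_E, V_hat

rng = np.random.default_rng(0)
n = 2000
beta_true = np.array([1.0, -2.0])
x = rng.normal(size=n)
X = np.empty((n, 2, 2))
X[:, :, 0] = 1.0                 # shared intercept
X[:, 0, 1] = x                   # slope enters component 1 as x
X[:, 1, 1] = 2.0 * x             # and component 2 as 2x
noise = rng.multivariate_normal(np.zeros(2), [[1.0, 0.8], [0.8, 1.0]], size=n)
Y = np.einsum("nij,j->ni", X, beta_true) + noise
beta_I, beta_E, V_hat = two_stage(Y, X)
```

Evaluating the weights once at the Stage 1 estimate keeps Stage 2 a single linear solve in this sketch, which mirrors the computational argument made for the two-stage procedure.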

2.4 Asymptotic properties

We establish consistency and asymptotic normality of β̂I and β̂E. The following assumptions are needed to facilitate the technical details, although they are not the weakest possible conditions.

Assumption A1. The data {zi = (xi, mi) : i = 1, …, n} form an independent and identical sequence.

Assumption A2. β* is an interior point of the compact set ℬ ⊂ Rp and is the unique solution for the model, E {hE(x)ℰ(β)} = 0, where hE(x) = ∂βμi(β*){R(B(β*) ⇒ μi(β*))}V(β*)−1. Moreover, β* is an isolated point of the set of all minimizers of the map D(β) = E[dist{m, μ(x, β)}2] on ℬ, denoted by Iℬ.

Assumption A3. In an open neighborhood of β*, μ(x, β) has a second-order continuous derivative with respect to β and ‖Lμ(β)(m)‖, ‖∂μLμ(β)(m)‖, ‖∂βμ(x, β)‖ and are bounded by some integrable function G(z) with E{G(z)2} < ∞.

Assumption A4. In an open neighborhood of β*, the rank of is p and E[{∂βDn,i(β)}⊗2] is positive definite, where a⊗2 = aaT for a given vector a.

Assumption A1 is needed just for notational simplicity and can be easily modified to accommodate independent and non-identically distributed scenarios. Assumption A2 is an identifiability condition. Assumptions A3 and A4 are standard conditions for ensuring the first order asymptotic properties including consistency and asymptotic normality of M-estimators when the sample size is large (van der Vaart and Wellner, 1996). We obtain the following theorems, whose detailed proofs can be found in the Appendix.

Theorem 1. (a) If assumptions A1, A2, and A3 are true, then β̂I and β̂E converge to β* in probability as n → ∞, where β* is the solution of (12).

(b) Under assumptions A1–A4, we have

| (16) |

as n → ∞, where Ip is a p × p identity matrix and → denotes convergence in distribution.

(c) Under assumptions A1–A4, we have

| (17) |

as n → ∞.

Theorem 1 has several important applications. Theorem 1 (a) establishes the consistency of β̂E and β̂I. According to Theorems 1 (b) and (c), we can consistently estimate the covariance matrices of β̂E and β̂I. For instance, the covariance matrix of β̂E, denoted by Σ̂E, can be approximated by

| (18) |

Moreover, we can use Theorem 1 (c) to construct confidence cones of β̂E and its functions. Since Theorem 1 only establishes the asymptotic properties of β̂E when the sample size is large, these properties may be inadequate to characterize the finite sample behavior of β̂E for relatively small samples. In the case of small samples, we may have to resort to higher order approximations, such as saddlepoint approximations and bootstrap methods (Butler, 2007; Davison and Hinkley, 1997).

Our choices of which hypotheses to test are motivated by scientific questions, which involve a comparison of medial representation components across diagnostic groups. These questions usually can be formulated as testing linear hypotheses of β as follows:

$$H_0: A\beta = b_0 \quad \text{versus} \quad H_1: A\beta \neq b_0, \tag{19}$$

where A is an r × p matrix of full row rank and b0 is an r × 1 specified vector. We test the null hypothesis H0 : Aβ = b0 using a Wald test statistic Wn defined by

$$W_n = (A\hat{\beta}_E - b_0)^T\,(A\hat{\Sigma}_E A^T)^{-1}\,(A\hat{\beta}_E - b_0). \tag{20}$$

We are led to the following theorem.

Theorem 2. If the assumptions A1–A4 are true, then the statistic Wn is asymptotically distributed as χ2(r), a chi-square distribution with r degrees of freedom, under the null hypothesis H0.
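A Wald statistic for a linear hypothesis can be sketched as follows, assuming the usual quadratic form in Aβ̂E − b0 weighted by the inverse of the estimated covariance of Aβ̂E. The estimate, covariance matrix, and contrast matrix below are hypothetical numbers.

```python
import numpy as np

def wald_statistic(beta_hat, cov_hat, A, b0):
    """Quadratic-form Wald statistic for H0: A beta = b0."""
    diff = A @ beta_hat - b0
    # Solve a linear system rather than forming the inverse explicitly.
    return float(diff @ np.linalg.solve(A @ cov_hat @ A.T, diff))

beta_hat = np.array([1.0, 2.0, 0.5])          # hypothetical estimate
cov_hat = np.diag([0.25, 1.0, 0.04])          # hypothetical covariance of beta_hat
A = np.array([[1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]])               # tests the first and third coefficients
b0 = np.zeros(2)
W = wald_statistic(beta_hat, cov_hat, A, b0)  # 1/0.25 + 0.25/0.04 = 10.25
```

Here r = 2, and the upper 5% point of the χ2(2) distribution is about 5.99, so this hypothetical H0 would be rejected at the 5% level.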

An asymptotically valid test can be obtained by comparing sample values of the test statistic with the critical value of a χ2(r) distribution at a pre-specified significance level α. However, for a small sample size n, we observed relatively low precision of the chi-square approximation. Instead, we calibrate Wn with a critical value of , which leads to a slightly higher precision of the F approximation, where is the upper α-percentile of the Fr,n−r distribution. That is, we reject H0 if , and do not reject H0 otherwise. The reason that the F approximation outperforms the chi-square approximation is due to the fact that the F approximation explicitly accounts for sample uncertainty in estimating the covariance matrix of Aβ̂E.

3 Simulation studies and real data

3.1 Double directional data with covariates

We generated double directional responses as follows:

where μ0i(β) and μ1i(β) were set according to (6), in which xi,d’s were fixed at 1 and xi,c’s were independently simulated from a N(0, 1) distribution. It is assumed that both μ0i(β) and μ1i(β) were, respectively, centered around g5(xi,d, β5d) = (u0, v0, w0)T and g6(xi,d, β6d) = (u1, v1, w1)T according to (1) such that

In addition, we imposed two constraints as follows:

We generated the errors ℰ0i and ℰ1i in T(0,0,−1)(S2) from a 4-dimensional normal distribution, N(0, 0.5Σ) with Σ being specified as

Subsequently, we rotated ℰ0i onto the tangent space Tμ0i(β)(S2) and ℰ1i onto the tangent space Tμ1i(β)(S2), and then we used the Exp map defined in the supplementary report to obtain the responses s0i and s1i. We set n = 40, 80, and 120, ρ1 = ρ2 = 0.5, and then we simulated 2000 datasets for each case to compare the biases and the root-mean-square errors of the two estimates, β̂I and β̂E. As seen in Table 1, β̂E has a smaller root-mean-square error than β̂I for every component of β, but some components of β̂E can be more biased.

Table 1.

Bias (×10−3) and MS (×10−2) of β̂I and β̂E for double directional case. Bias denotes the bias of the mean of the estimates; MS denotes the root-mean-square error. For each parameter, the first row is for β̂I and the second is for β̂E. Moreover, the constraints β5c,1 = β6c,1 and β5c,2 = β6c,2 are imposed.

| Parameter | Estimator | Bias (n = 40) | MS (n = 40) | Bias (n = 80) | MS (n = 80) | Bias (n = 120) | MS (n = 120) |
|---|---|---|---|---|---|---|---|
| β5d,1 = 1.2 | β̂I | 3.15 | 13.26 | 4.35 | 10.04 | 4.22 | 7.75 |
| | β̂E | 3.40 | 13.10 | 4.36 | 9.82 | 3.98 | 7.60 |
| β5c,1 = β6c,1 = 1 | β̂I | 9.29 | 19.19 | 1.74 | 12.76 | 7.43 | 10.31 |
| | β̂E | 8.93 | 18.02 | 0.89 | 12.09 | 7.27 | 9.81 |
| β5d,2 = 1.2 | β̂I | 9.44 | 13.69 | 2.05 | 10.19 | 0.86 | 7.80 |
| | β̂E | 9.81 | 13.29 | 0.88 | 9.59 | 0.43 | 7.69 |
| β5c,2 = β6c,2 = 1 | β̂I | 6.90 | 18.55 | 5.00 | 13.08 | 0.64 | 10.53 |
| | β̂E | 6.74 | 17.50 | 5.67 | 12.44 | 0.62 | 9.99 |
| β6d,1 = 0.8 | β̂I | 5.18 | 16.85 | 3.23 | 9.74 | 2.49 | 7.93 |
| | β̂E | 5.69 | 12.91 | 3.10 | 9.65 | 2.69 | 7.76 |
| β6d,2 = 0.8 | β̂I | 2.34 | 14.84 | 1.31 | 9.78 | 0.86 | 8.47 |
| | β̂E | 1.32 | 13.06 | 0.98 | 9.71 | 0.91 | 8.07 |

We also calculated the mean of the estimated standard errors and the relative efficiencies for all the components of β̂E, and evaluated the finite sample performance of the Wald statistic Wn for hypothesis testing. The results are quite similar to those from the single directional case in the supplementary file, so we do not present them here to save space.

3.2 Schizophrenia study of the hippocampus

We consider a neuroimaging dataset about the medial representation shape of the hippocampus structure in the left and right brain hemisphere in schizophrenia patients and healthy controls, collected at 14 academic medical centers in North America and western Europe. The hippocampus, a gray matter structure in the limbic system, is involved in processes of motivation and emotions, and plays a central role in the formation of memory.

In this study, 238 first-episode schizophrenia patients (53 female, 185 male; mean/standard deviation age, female 25.1/5.69 years; male 23.6/4.55 years) were enrolled. All patients met the following criteria: age 16 to 40 years; onset of psychiatric symptoms before age 35; diagnosis of schizophrenia, schizophreniform, or schizoaffective disorder according to DSM-IV criteria; and various treatment and substance dependence conditions. A total of 56 healthy control subjects (18 female, 38 male; mean/standard deviation age, female 24.8/3.30 years; male 25.3/4.21 years) were also enrolled. Neurocognitive and magnetic resonance imaging (MRI) assessments were performed at the first visit.

The brain MRI data were first aligned to the Montreal Neurological Institute (MNI) space. Hippocampi were segmented in the MNI space and then their medial representations were reconstructed from those binary segmentations (Styner et al., 2004). Subsequently, these hippocampus medial representations were realigned by using a rigid body variation of the standard Procrustes method. The resulting alignment leads to a shape representation that is invariant to translation and rotation, but not to scale. Scaling information is retained for studying changes in overall size or volume.

The aim of our study was to investigate the difference of medial representation shape between schizophrenia patients and healthy controls while controlling for other factors, such as gender and age. The response of interest was the hippocampus medial representation shape at the 24 medial atoms of the left and right brain hemisphere (Figure 1). Covariates of interest were Whole Brain Volume (WBV), race including Caucasian, African American and others, age in years, gender, and diagnostic status including patient and control.

The covariate vector is xi = (1, genderi, agei, diagi, race1i, race2i, WBVi)T, where diag is the dummy variable for patients versus healthy controls, and race1 and race2 are, respectively, dummy variables for Caucasians and African Americans versus other races. For the location component on the medial representation, we set μO(x, β) = (xT β1, xT β2, xT β3)T, where βk (k = 1, 2, 3) are 7 × 1 coefficient vectors. For the radius component on the medial representation, we set μr(x, β) = exp(xT β4), where β4 is a 7 × 1 coefficient vector. For the directional components on the medial representation, we used μ0(xi, β) as defined in (6), in which xi,d = (genderi, diagi, race1i, race2i)T, xi,c = (agei, WBVi)T, for s0 and for s1. Therefore, we have the coefficient vector . Then we used the two-stage estimation procedure to obtain estimates of β and conducted hypothesis testing using Wald statistics. Since the primary goal of the study is to investigate the difference of medial representation shape between schizophrenia patients and healthy controls, we paid special attention to the terms in β associated with diagnostic status.

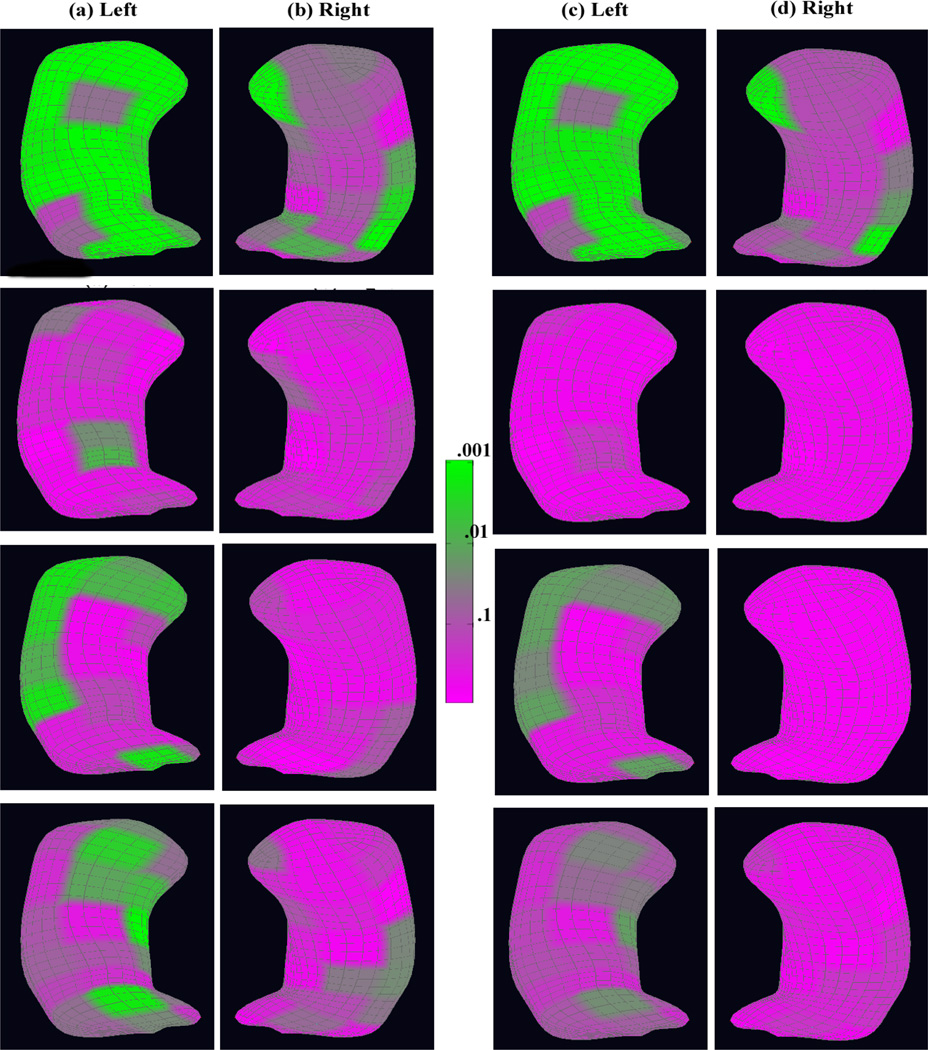

First, we examined the overall diagnostic status effect on the whole medial representation structure. The p-values of the diagnostic status effects across the atoms of both the left and right reference hippocampi are shown in the first row (a) and (b) of Figure 3. The false discovery rate approach (Benjamini and Hochberg, 1995) was used to correct for multiple comparisons, and the corresponding adjusted p-values are shown in the first row (c) and (d) of Figure 3. There was a large significant area in the left hippocampus and also some in the right hippocampus. The significance area remains almost the same after correcting for multiple comparisons, but with an attenuated significance level.
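The false discovery rate adjustment can be sketched with the Benjamini and Hochberg step-up rule applied to a vector of atom-wise p-values. The p-values below are hypothetical, and the sketch does not specify how the p-values from the two hippocampi were grouped for the adjustment.

```python
import numpy as np

def bh_adjust(pvals):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    scaled = p[order] * m / np.arange(1, m + 1)      # p_(k) * m / k
    # Enforce monotonicity, working down from the largest p-value.
    adj_sorted = np.minimum.accumulate(scaled[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.clip(adj_sorted, 0.0, 1.0)
    return adjusted

raw = np.array([0.01, 0.04, 0.03, 0.005])            # hypothetical atom-wise p-values
adjusted = bh_adjust(raw)                            # [0.02, 0.04, 0.04, 0.02]
```

An adjusted p-value is never smaller than the raw one, which is consistent with the attenuated significance seen after correction.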

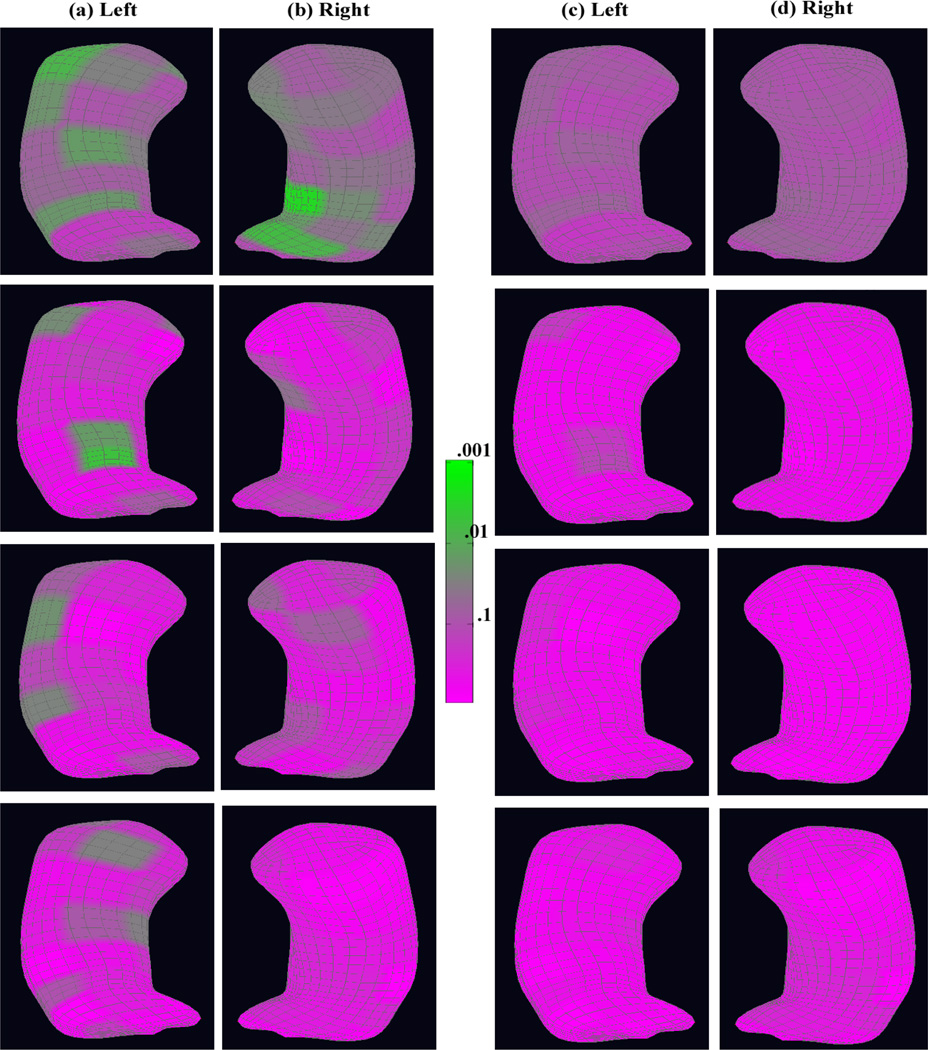

Figure 3.

The color-coded p–value maps of the diagnostic status effects from the schizophrenia study of the hippocampus: rows 1, 2, 3, and 4 are for the whole medial representation structure, radius, location, and two directions, respectively: at each row, the uncorrected p–value maps for (a) the left hippocampus and (b) the right hippocampus; the corrected p–value maps for (c) the left hippocampus and (d) the right hippocampus after correcting for multiple comparisons.

We also examined each component on the medial representation separately. For the radius component of the medial representation, we presented the p-values of the diagnostic status effects across the atoms in the second row (a) and (b) of Figure 3 and the adjusted p-values in the second row (c) and (d). Before correcting for multiple comparisons, we observed a significant diagnostic status difference in the medial representation thickness at the central atoms near the posterior side in the left hippocampus and in some areas in the right hippocampus, whereas we did not observe much of a significant diagnostic status effect after correcting for multiple comparisons.

For the location component of the medial representation, we showed the p-values of the diagnostic status effects in the third row (a) and (b) of Figure 3 and the corresponding adjusted p-values in the third row (c) and (d). We observed significant diagnostic status differences mainly located around the anterior and lateral side of the left hippocampus though with clearly reduced significance after correcting for multiple comparisons. Similar lateral results have also been observed by Narr et al. (2004).

Similarly, for the two spoke directions on the medial representation, the p-values of the diagnostic status effects are shown in the last row (a) and (b) of Figure 3 and the corresponding adjusted p-values are shown in the last row (c) and (d). Before correcting for multiple comparisons, there was some significant area around the anterior, posterior, and the medial side of the left hippocampus, but not much in the right hippocampus. There was still some significance for the diagnostic status effect around the same areas in the left hippocampus after correcting for multiple comparisons, but nothing in the right hippocampus. The posterior orientation effect of hippocampal differences in schizophrenia has also been shown by Styner et al. (2004) and basically constitutes a local bending change in that region. The anterior effect is novel and located at the intersection of the hippocampal Cornu Ammonis 1 and Cornu Ammonis 2 regions.

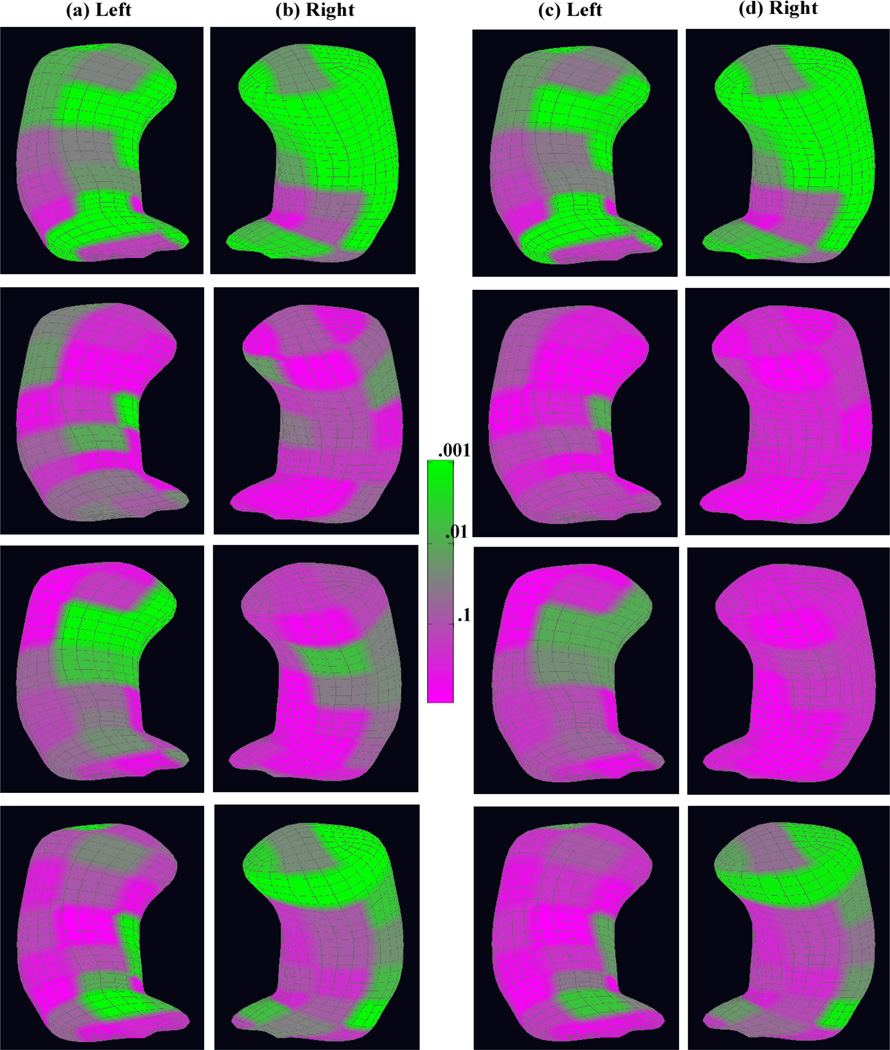

We also examined the overall age effect on the whole medial representation structure. The color-coded p-values of the age effect across the atoms of both the left and right reference hippocampi are shown in the first row (a) and (b) of Figure 4. The false discovery rate approach was used to correct for multiple comparisons, and the corresponding adjusted p-values are shown in the first row (c) and (d) of Figure 4. There was a large significant area in the right hippocampus and also some in the left hippocampus. The significant area remained almost the same after correcting for multiple comparisons, but at an attenuated significance level.

Figure 4.

The color-coded p–value maps of the age effect from the schizophrenia study of the hippocampus: rows 1, 2, 3, and 4 are for the whole medial representation structure, radius, location, and two directions, respectively: at each row, the uncorrected p–value maps for (a) the left hippocampus and (b) the right hippocampus; the corrected p–value maps for (c) the left hippocampus and (d) the right hippocampus after correcting for multiple comparisons.

Additionally, we looked at each component on the medial representation separately. For the radius component of the medial representation, the color-coded p-values of the age effect across the atoms are shown in the second row (a) and (b) of Figure 4 and the adjusted p-values are shown in the second row (c) and (d). Before correcting for multiple comparisons, there was a small age effect in the medial representation thickness at the central atoms near the posterior side in the left hippocampus and in some areas in the right hippocampus. However, there was not much of a significant age effect after correcting for multiple comparisons.

For the location component of the medial representation, the color-coded p-values of the age effect are shown in the third row (a) and (b) of Figure 4 and the corresponding adjusted p-values are shown in the third row (c) and (d). Significant age effects were mainly located around the anterior and lateral side of the left hippocampus though with clearly reduced significance after correcting for multiple comparisons.

For the two spoke directions on the medial representation, we showed the color-coded p-values of the age effect in the last row (a) and (b) of Figure 4 and the corresponding adjusted p-values are in the last row (c) and (d). Even after correcting for multiple comparisons, we observed significant areas around the anterior, posterior, and the medial side of the right hippocampus and some areas in the left hippocampus.

Finally, following suggestions from a reviewer, we examined the overall diagnostic status effect without accounting for other factors. The p-values of the diagnostic status effects are shown in Figure 5. Inspecting Figure 5 reveals a small significant area in the left and right hippocampi before and after correcting for multiple comparisons. Compared with Figure 3, the diagnostic status effect in Figure 5 is attenuated, and we believe that this attenuation may be caused by omitting other factors, such as age, that are believed to be associated with the variability of the medial representation of subcortical structures.

Figure 5.

The color-coded p–value maps of the diagnostic status effects without accounting for other factors from the schizophrenia study of the hippocampus: rows 1, 2, 3, and 4 are for the whole medial representation structure, radius, location, and two directions, respectively: at each row, the uncorrected p–value maps for (a) the left hippocampus and (b) the right hippocampus; the corrected p–value maps for (c) the left hippocampus and (d) the right hippocampus after correcting for multiple comparisons.

4 Discussion

We have proposed a semiparametric model for describing the association between the medial representation of subcortical structures and covariates of interest, such as diagnostic status, age and gender. We have developed a two-stage estimation procedure to calculate the parameter estimates and used Wald statistics to test linear hypotheses of unknown parameters. We have used extensive simulation studies and a real dataset to evaluate the accuracy of our parameter estimates and the finite sample performance of the Wald statistics.

Many issues still merit further research. The two-stage estimation procedure can be easily modified to estimate all parameters across all atoms simultaneously and to impose some structure (e.g., spatial smoothness) on the matrix of regression parameters across all atoms, while accounting for the correlations between different components of different atoms. This generalization requires a good estimate of the covariance matrix of ℰi(β) across all atoms. We may consider a shrinkage estimator of the covariance matrix of all ℰi(β), formed as a linear combination of the identity matrix and the sample covariance matrix V(β) (Ledoit and Wolf, 2004). Moreover, for the matrix of regression parameters across all atoms, we may consider a sparse low-rank matrix factorization to identify the underlying latent structure among all atoms (Witten, Tibshirani, and Hastie, 2009; Dryden and Mardia, 1998; Fletcher et al., 2004), which will be a topic of our future research. It would also be of interest to develop Bayesian models for the joint analysis of medial representation data of subcortical structures (Angers and Kim, 2005; Healy and Kim, 1996).
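To make the shrinkage idea concrete, the sketch below forms a convex combination of a sample covariance matrix and a scaled identity matrix. Scaling the identity by the average sample variance follows the target used by Ledoit and Wolf (2004), but the shrinkage weight here is supplied by the user as a simplifying assumption, whereas their estimator chooses it from the data. The function name and the example matrix are hypothetical.

```python
def shrink_covariance(sample_cov, weight):
    """Return (1 - weight) * S + weight * s * I for a square matrix S.

    s is the average of the diagonal entries of S, so the shrinkage target
    s * I has the same trace as S. weight must lie in [0, 1]; in this sketch
    it is user-supplied rather than estimated from the data.
    """
    p = len(sample_cov)
    if any(len(row) != p for row in sample_cov):
        raise ValueError("sample_cov must be a square matrix")
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    scale = sum(sample_cov[j][j] for j in range(p)) / p
    return [
        [(1.0 - weight) * sample_cov[j][k] + (weight * scale if j == k else 0.0)
         for k in range(p)]
        for j in range(p)
    ]

# Hypothetical 2 x 2 sample covariance matrix (illustrative values only).
V = [[2.0, 1.0], [1.0, 4.0]]
print(shrink_covariance(V, 0.5))
```

Shrinking toward a scaled identity pulls the off-diagonal entries toward zero and the eigenvalues toward their average, which keeps the estimate well conditioned when the number of components across all atoms is large relative to the sample size.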

Acknowledgments

This work was supported in part by NIH grants UL1-RR025747-01, R21AG033387, P01CA142538-01, MH086633, GM 70335, and CA 74015 to Drs. Zhu and Ibrahim, DMS-1007457 and DMS-1106494 to Dr. Liang, and Lilly Research Laboratories, the UNC NDRC HD 03110, Eli Lilly grant F1D-MC-X252, and NIH Roadmap Grant U54 EB005149-01, NAMIC to Dr. Styner. We thank the Editor, an associate editor, and two referees for helpful suggestions, which have improved the present form of this article.

Appendix: Proofs of Theorems 1 and 2

We need the following lemma throughout the proofs of Theorems 1 and 2.

Lemma 1. (i) Under Assumption A1, if f(z, β) is a vector of continuous functions in β for any β in a compact set ℬ and z, then

| (21) |

(ii) In addition to the assumptions in (i), if f(z, β) also satisfies supβ∈ℬ ‖f(z, β)‖2 ≤ G1(z) and E {G1(z)} < ∞, then

| (22) |

| (23) |

(iii) In addition to the assumptions in (ii), if E {G1(z)r} < ∞ for any r > 1, then

| (24) |

in probability, as n → ∞.

(iv) In addition to the assumptions in (ii), if for any δ > 0 in a neighborhood of 0 and some constants C and ψ, then

| (25) |

The assumptions and result (21) of Lemma 1 (i) correspond to Jennrich’s (1969) Theorem 2. The results in Lemma 1 (ii) correspond to Andrews’ (1992) Lemma 3. The results in Lemma 1 (iii) correspond to Andrews’ (1992) Theorem 1. The result in Lemma 1 (iv) is a special case of Andrews’ (1994) Theorems 4 and 5.

Lemma 2. Let E(β, β′) be E {dist(μ(x, β), μ(x, β′))2}. We assume that (i) ℬ is a compact set; (ii) there is a point β ∈ ℬ such that D(β) < ∞ and supβ′∈ℬ E(β, β′) < ∞; (iii) E(β, β′) is a continuous function in β and β′. Then, Iℬ is a non-empty compact set.

Proof of Lemma 2. It follows from the triangle inequality that

Using the Schwarz inequality and the assumptions of Lemma 2, we have

for any β′ ∈ ℬ. Thus, D(β) is a real continuous function of β in a compact set, which yields that Iℬ is a non-empty set. Since ℬ is a compact set, it is trivial that Iℬ is a compact set.

Proof of Theorem 1. We prove Theorem 1 (a) in two parts. The first part proves weak consistency of β̂E. We set f(z, β) = dist(m, μ(β))2 = ℰ(β)T ℰ(β). It follows from Assumption A3 that supβ∈ℬ dist(m, μ(β))2 ≤ G(z)2. Thus, Lemma 1 (ii) and (iii) yield that supβ∈ℬ |n−1Dn(β) − D(β)| → 0 in probability and D(β) is continuous in β uniformly over β ∈ Θ. Since Iℬ is a compact set and β* is an isolated point, β̂I is a consistent estimator of β*. Furthermore, we can show that in probability. Using similar arguments, we can show that β̂E is also a consistent estimator of β*. Using the results of Lemma 1, we can show the asymptotic normality of β̂E and β̂I under conditions A1–A4 (Andrews, 1999).

Proof of Theorem 2. Using standard arguments, we can easily prove Theorem 2. Specifically, as n → ∞, since it follows from Theorem 1 (ii) that , which finishes the proof of Theorem 2.

Footnotes

The content is solely the responsibility of the authors and does not necessarily represent the official views of the NSF or the NIH.

References

- Andrews DWK. Generic uniform convergence. Econometric Theory. 1992;8:241–257.

- Andrews DWK. Empirical Process Methods in Econometrics. In: Engle RF, McFadden DL, editors. Handbook of Econometrics. Volume IV. 1994. pp. 2248–2292.

- Andrews DWK. Consistent Moment Selection Procedures for Generalized Method of Moments Estimation. Econometrica. 1999;67:543–564.

- Anderson TW. An Introduction to Multivariate Statistical Analysis. 3rd ed. Wiley; 2003. Series in Probability and Statistics.

- Angers JF, Kim PT. Multivariate Bayesian Function Estimation. Ann. Statist. 2005;33:2967–2999.

- Benjamini Y, Hochberg Y. Controlling the False Discovery Rate: a Practical and Powerful Approach to Multiple Testing. Journal of the Royal Statistical Society, Ser. B. 1995;57:289–300.

- Bhattacharya RN, Patrangenaru V. Large Sample Theory of Intrinsic and Extrinsic Sample Means on Manifolds II. Ann. Statist. 2005;33:1225–1259.

- Butler RW. Saddlepoint Approximations with Applications. New York: Cambridge University Press; 2007.

- Chamberlain G. Asymptotic Efficiency in Estimation with Conditional Moment Restrictions. J. Economet. 1987;34:305–334.

- Davison AC, Hinkley DV. Bootstrap Methods and Their Application. New York: Cambridge University Press; 1997.

- Downs TD. Spherical Regression. Biometrika. 2003;90:655–668.

- Dryden IL, Mardia KV. Statistical Shape Analysis. Wiley: Chichester; 1998.

- Fisher NI, Lee AJ. Regression Models for an Angular Response. Biometrics. 1992;48:665–677.

- Fletcher PT, Lu C, Pizer SM, Joshi S. Principal Geodesic Analysis for the Study of Nonlinear Statistics of Shape. Medical Imaging. 2004;23:995–1005. doi: 10.1109/TMI.2004.831793.

- Gould AL. A Regression Technique for Angular Variates. Biometrics. 1969;25:683–700.

- Healy DM, Kim PT. An Empirical Bayes Approach to Directional Data and Efficient Computation on the Sphere. Ann. Statist. 1996;24:232–254.

- Jennrich R. Asymptotic Properties of Nonlinear Least Squares Estimators. Ann. of Math. Statist. 1969;40:633–643.

- Johnson RA, Wehrly TE. Some Angular-linear Distributions and Related Regression Models. J. Am. Statist. Assoc. 1978;73:602–606.

- Jupp PE, Mardia KV. A Unified View of the Theory of Directional Statistics, 1975–1988. International Statistical Review. 1989;57:261–294.

- Le H. Locating Frechet means with an application to shape spaces. Adv. Appl. Prob. 2001;33:324–338.

- Ledoit O, Wolf M. A Well-conditioned Estimator for Large-dimensional Covariance Matrices. Journal of Multivariate Analysis. 2004;88:365–411.

- Liang F. Annealing Evolutionary Stochastic Approximation Monte Carlo for Global Optimization. Statistics and Computing. 2011;21:375–393.

- Mardia KV. Statistics of Directional Data (with Discussion) J. R. Statist. Soc. B. 1975;37:349–393.

- Mardia KV, Jupp PE. Directional Statistics. John Wiley: Academic Press; 1983.

- Narr KL, Thompson PM, Szeszko P, Robinson D, Jang S, Woods RP, Kim S, Hayashi KM, Asunction D, Toga AW, Bilder RM. Regional Specificity of Hippocampal Volume Reductions in First-episode Schizophrenia. NeuroImage. 2004;21:1563–1575. doi: 10.1016/j.neuroimage.2003.11.011.

- Newey WK. Econometrics, vol. 11 of Handbook of Statistics. North Holland: Amsterdam; 1993. Efficient Estimation of Models with Conditional Moment Restrictions; pp. 419–454.

- Pizer SM, Fletcher T, Fridman Y, Fritsch DS, Gash AG, Glotzer JM, Joshi S, Thall A, Tracton G, Yushkevich P, Chaney EL. Deformable M-Reps for 3D Medical Image Segmentation. International Journal of Computer Vision. 2003;55:85–106. doi: 10.1023/a:1026313132218.

- Presnell B, Morrison SP, Littell RC. Projected Multivariate Linear Models for Directional Data. J. Am. Statist. Assoc. 1998;93:1068–1077.

- Styner M, Lieberman JA, McClure RK, Weinberger DR, Jones DW, Gerig G. Morphometric Analysis of Lateral Ventricles in Schizophrenia and Healthy Controls Regarding Genetic and Disease-specific factors. Proc. Natl. Acad. Sci. USA. 2005;102:4872–4877. doi: 10.1073/pnas.0501117102.

- Styner M, Lieberman JA, Pantazis D, Gerig G. Boundary and Medial Shape Analysis of the Hippocampus in Schizophrenia. Medical Image Analysis. 2004;8:197–203. doi: 10.1016/j.media.2004.06.004.

- van der Vaart AW, Wellner JA. Weak Convergence and Empirical Processes. New York: Springer-Verlag; 1996.

- Witten DM, Tibshirani R, Hastie T. Penalized Matrix Decomposition, with Applications to Sparse Principal Components and Canonical Correlation Analysis. Biostatistics. 2009;10:515–534. doi: 10.1093/biostatistics/kxp008.