Abstract

Introduction

The National Institutes of Health-sponsored Patient-Reported Outcomes Measurement Information System (PROMIS) aimed to create item banks and computerized adaptive tests (CATs) across multiple domains for individuals with a range of chronic diseases.

Purpose

Web-based software was created to enable researchers to build study-specific Websites that administer PROMIS CATs and other instruments to research participants or clinical samples. This paper outlines the process used to develop a user-friendly, free, Web-based resource (Assessment Center℠) for storage, retrieval, organization, sharing, and administration of patient-reported outcome (PRO) instruments.

Methods

Joint Application Design (JAD) sessions were conducted with representatives from numerous institutions in order to supply a general wish list of features. Use Cases were then written to ensure that end user expectations matched programmer specifications. Program development included daily programmer “scrum” sessions, weekly Usability Acceptability Testing (UAT) and continuous Quality Assurance (QA) activities pre- and post-release.

Results

Assessment Center includes features that promote instrument development including item histories, data management, and storage of statistical analysis results.

Conclusions

This case study of software development highlights the collection and incorporation of user input throughout the development process. Potential future applications of Assessment Center in clinical research are discussed.

Keywords: Software, Software design, Outcome assessment (health care), Psychometrics, Quality of life, Health surveys, Questionnaires

Background

In 2004, the National Institutes of Health (NIH) launched the NIH Roadmap Initiative to address critical research challenges facing the NIH and to accelerate basic discovery and clinical research [1]. One of the first of these initiatives, the Patient-Reported Outcomes Measurement Information System (PROMIS; www.nihpromis.org) aimed to leverage advances in modern psychometric theory to develop item banks and a computerized adaptive testing (CAT) system that would allow for the precise and efficient measurement of patient-reported outcomes (PROs) in clinical research across a wide range of chronic diseases [2].

PROMIS sought to build item banks that measured key health outcome domains relevant to, and manifested in, a wide range of chronic diseases. Items identified from established questionnaires or written for PROMIS by experts were subjected to rigorous qualitative review [3] and were tested in a large, diverse sample from the general population and with clinical patients [4]. Analyzed data were used to create calibrated item banks and short forms using item response theory (IRT); see Table 1. As PROMIS developed a set of publicly accessible CATs, the creation of a method for administering these instruments became a critical component for their adoption within the research and clinical communities. This paper provides an overview of the development of this administration technology.

Table 1. Number of items in each PROMIS item bank and short form

| Domain | Adult bank | Adult short form | Pediatric bank | Pediatric short form |
|---|---|---|---|---|
| Emotional distress—anger | 29 | 8 | | 6 |
| Emotional distress—anxiety | 29 | 7 | | 8 |
| Emotional distress—depression | 28 | 8 | | 8 |
| Fatigue | 95 | 7 | | 10 |
| Pain—behavior | 39 | 7 | | |
| Pain—interference | 41 | 6 | | 8 |
| Satisfaction with discretionary social activities | 12 | 7 | | |
| Satisfaction with social roles | 14 | 7 | | |
| Sleep disturbance | 27 | 8 | | |
| Sleep-related impairment | 16 | 8 | | |
| Physical function | 124 | 10 | | |
| Mobility | | | 23 | 8 |
| Upper extremity | | | 29 | 8 |
| Asthma | | | 17 | 8 |
| Peer relationships | | | 15 | 8 |
| Global health | | 10 | | |

Note: Blank cells represent domains not represented. The adult Physical Function bank includes upper and lower extremity items in a single bank, whereas pediatric Physical Functioning is conceptualized as separate constructs for “Mobility” and “Upper Extremity.”

The use of IRT and CAT

A primary intent in developing the PROMIS software was to deliver tailored assessments using CATs. IRT enables a CAT to produce custom assessments, in real time, that respond to how the individual answers each successive question on a survey. Following each response, a computer calculates an estimate of the subject’s trait level (ability) from the responses to all previously administered items. Using this provisional “score,” all items in the calibrated item bank are reviewed to find the next item that will provide the most additional information for that particular person. This item-selection process continues until a desired level of precision is obtained, resulting in greater test efficiency and reduced test-taker burden [5]. Formulas to perform these calculations are available in myriad texts and articles [6–8]. However, a review and evaluation of existing software systems found that existing applications were delivered using proprietary software associated with specific test delivery organizations. Despite the use of CAT to assess millions of people each year across numerous educational, certification, and licensure applications, there were no commercially available software products capable of administering the PROMIS instruments. Therefore, the PROMIS network concluded that specific technology would be required to deliver PROMIS CATs and began the process of developing Assessment Center℠.
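The estimate, select, and stop cycle described above can be sketched in a few lines. This is a minimal illustration and not the Assessment Center engine: it assumes a dichotomous two-parameter logistic (2PL) IRT model, expected a posteriori (EAP) scoring on a fixed grid with a standard normal prior, maximum-information item selection, and a standard-error stopping rule. The item bank, its parameters, and all function names are hypothetical.

```python
import math

# Hypothetical calibrated item bank: item id -> (discrimination a, difficulty b).
ITEM_BANK = {
    "item1": (1.8, -1.5),
    "item2": (2.0, -0.5),
    "item3": (1.6, 0.0),
    "item4": (2.2, 0.7),
    "item5": (1.9, 1.5),
}

# Trait (theta) grid from -4 to 4 used for numerical integration.
GRID = [g / 10.0 for g in range(-40, 41)]


def prob_endorse(theta, a, b):
    """2PL probability of endorsing an item at trait level theta."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta, a, b):
    """Fisher information of a 2PL item at theta."""
    p = prob_endorse(theta, a, b)
    return a * a * p * (1.0 - p)


def eap_estimate(responses):
    """Return (posterior mean, posterior SD) of theta under a N(0, 1) prior.

    responses maps item id -> 0 or 1 for every item administered so far.
    """
    weights = []
    for theta in GRID:
        w = math.exp(-0.5 * theta * theta)  # unnormalized prior density
        for item_id, x in responses.items():
            a, b = ITEM_BANK[item_id]
            p = prob_endorse(theta, a, b)
            w *= p if x == 1 else (1.0 - p)
        weights.append(w)
    total = sum(weights)
    mean = sum(t * w for t, w in zip(GRID, weights)) / total
    var = sum((t - mean) ** 2 * w for t, w in zip(GRID, weights)) / total
    return mean, math.sqrt(var)


def next_item(theta, administered):
    """Pick the unadministered item with the most information at theta."""
    remaining = [i for i in ITEM_BANK if i not in administered]
    if not remaining:
        return None
    return max(remaining, key=lambda i: item_information(theta, *ITEM_BANK[i]))


def run_cat(answer_fn, se_target=0.3, max_items=5):
    """Administer items until the SE target or the item limit is reached.

    answer_fn(item_id) must return the respondent's 0/1 answer.
    """
    responses = {}
    theta, se = eap_estimate(responses)
    while se > se_target and len(responses) < max_items:
        item_id = next_item(theta, responses)
        if item_id is None:
            break
        responses[item_id] = answer_fn(item_id)
        theta, se = eap_estimate(responses)
    return theta, se, list(responses)
```

Calling `run_cat(lambda item_id: 1)` simulates a respondent who endorses every item; the returned tuple holds the final trait estimate, its standard error, and the items in the order they were administered. A production CAT for PRO instruments would normally use a polytomous model and calibrated parameters in place of the toy values assumed here.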

Description of Assessment Center℠

Assessment Center℠ (www.assessmentcenter.net) makes PROMIS instruments available to the research community, provides a mechanism for administering CATs to subjects, and is a central facility for the storage, retrieval, organization, and sharing of study research items and item banks. Although other data collection systems exist, Assessment Center is unique in that it includes features that promote instrument development, study administration, data management, and storage of statistical analysis results.

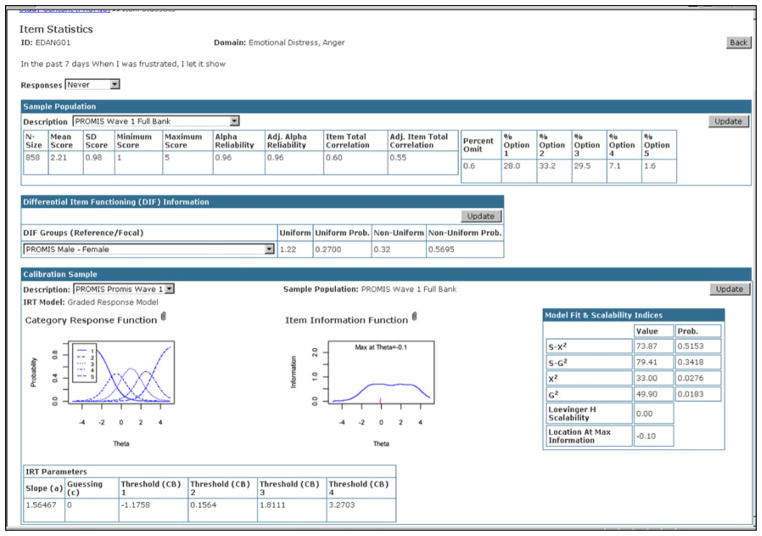

Assessment Center provides features that can be utilized by multiple audiences including researchers, instrument developers and clinicians. In the research environment, scientists can utilize the free, Web-based testing platform to select PRO domains to assess in a study and determine the method of administration (CAT or static short form—see Fig. 1). Assessment Center also allows researchers to incorporate additional instruments into the battery so that all patient-reported instruments (PROMIS and non-PROMIS) are administered to participants in a seamless manner. The system has features that allow researchers to create sophisticated offline and online data collection sites, randomize research participants to study arms and administer questionnaires at multiple time points. Most users of Assessment Center take advantage of the free Web hosting supported by the PROMIS Technology Center grant, where data is stored on a secured server run by Northwestern University. Researchers have 24 × 7 access to independently download their data. Users can also opt to utilize the stand-alone version of the software such that all data is stored on computers under the control of independent researchers.

Fig. 1. Add an instrument

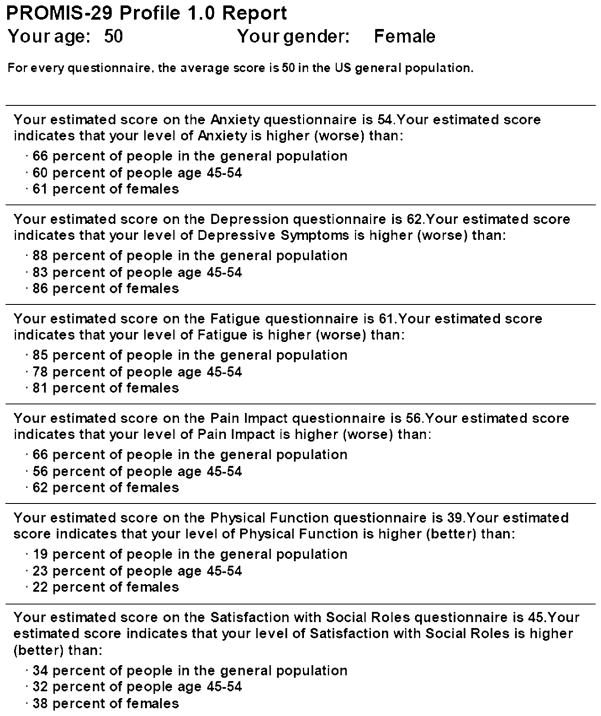

Instrument developers can utilize Assessment Center for item creation and revision. Item histories can be highly detailed to track all modifications. Item- and instrument-level statistics can be stored and reviewed (see Fig. 2). For example, a developer could create a study Website to allow data collection from a large sample and then enter item- and instrument-level statistics including IRT parameters. The calibrated item bank could in turn be included in validation studies. Clinicians can also utilize Assessment Center for selecting PROMIS measures targeting physical functioning, emotional distress, pain, fatigue, sleep disturbance, and social health to track patient self-reports over time. Features that enhance Assessment Center for each of these end-user groups have been included in every software release. The features of each release are listed in Table 2.

Fig. 2. Item statistics

Table 2. Assessment Center features by release

| Release | Feature |
|---|---|
| Release 1: November 2007 | Create a study |
| | Add existing PROMIS instruments (short forms, CAT) to a study |
| | View item- and instrument-level statistics (IRT parameters, means, scale scores, model fit, reliability indices) |
| | Set-up simple study (single time point, single arm) |
| | Upload and administer consent forms |
| | Create study-specific Websites for data collection |
| | Monitor accrual |
| | Export interim and end-of-study data |
| Release 2: April 2008 | Create custom items and instruments |
| | Track item changes through automated item history documentation |
| | Review item history |
| | Set-up complex study (multiple time points, multiple arms) |
| | Cluster items or instruments |
| | Randomize item and instrument presentation |
| | Establish branching logic within instruments |
| | Enable researcher-based data entry |
| | Select and customize registration fields |
| Release 2.1 and 2.2: May 2008 | Improved stability and usefulness of software |
| | Added additional code to permit easy integration testing allowing developers to add a feature and automatically test that all previously entered features remained functional |
| | Standardized source code |
| | Removed redundant code |
| | Server improvements |
| Release 3: December 2008 | Review newly created custom CATs to ensure their development adheres to basic theoretical principles of CAT and IRT |
| | Select first CAT item to be based on theta or item content |
| | Conduct a CAT demonstration and receive a real-time summary report |
| | Use administration engine that reduces administration of identical items shared by multiple instruments |
| | Select format for how items are presented to participants |
| | Enable researcher-based registration |
| | Export accrual report for NIH progress reports |
| | Export data oriented in horizontal rather than vertical layout |
| | Update certain study set-up parameters |
| Release 4: May 2009 | Addition of PROMIS profile measures |
| | PROMIS Profile and CAT reports with norms and graphical display |
| | Enhanced usability features (e.g., preview functionality, item and instrument ordering) |
| | Enable CATs with collapsed item categories and zero-based scoring |
| | Improve system security and reliability |
| Release 4.1: August 2009 | Improved stability and usefulness of software |
| Release 4.5: September 2009 | Assessment Center Offline |
| | Assessment Center Offline User Manual |
| Release 4.6: December 2009 | Improved stability and usefulness of software |
| Modifications scheduled for future release | Online monitoring of research participant progress |
| | Track PROMIS instrument administration longitudinally |
| | Email alerts to research staff and participants |
| | Enable multiple language assessments |
| | Multiple modes of administration (e.g., integrated voice response) |
| | Enable additional CAT models (partial credit model and dichotomous scoring) |
| | User interface modifications |
| | Enable use of multiple calibrations |
| | Provide additional item presentation templates |

To utilize the system, a user completes a short registration process, and then can begin establishing research study parameters. Work within the software is organized by individual research study. Users have their own workspaces within the application which are accessible only to others identified as members of the study team. The application is composed of three primary areas: instrument selection/development, study set-up, and study administration.

In the instrument selection/development area, users are able to access PROMIS instruments. Instrument-level statistics as well as item-level statistics and history are viewable. Users can download PDF versions of instruments for paper-and-pencil administration. They can also create their own items and instruments by selecting or modifying existing items or by writing new ones; all modifications are automatically cataloged in an item history database. Users may customize instruments per study by selecting item presentation templates and specifying the item administration order within an instrument, including branching, grouping of items, and randomization of items and/or groups of items. Finally, users can preview instruments as they would appear in online data collection, including simulated CAT administration.
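The grouping and randomization options mentioned above can be illustrated with a short sketch. It is an assumption about how such ordering could work, not a description of the Assessment Center code: items are supplied as groups, items may be shuffled within a group, groups may be shuffled as whole units, and grouped items always stay together. Branching is not covered. The function name, its parameters, and the item ids are hypothetical.

```python
import random


def administration_order(groups, shuffle_groups=False, shuffle_within=False, seed=None):
    """Return a flat item order from a list of item groups.

    groups          list of lists of item ids; each inner list is one group
    shuffle_groups  randomize the order of the groups as whole units
    shuffle_within  randomize the order of items inside each group
    seed            optional seed so an order can be reproduced
    """
    rng = random.Random(seed)
    ordered = [list(group) for group in groups]  # copy; leave the input untouched
    if shuffle_within:
        for group in ordered:
            rng.shuffle(group)
    if shuffle_groups:
        rng.shuffle(ordered)
    # Flatten while keeping every group's items contiguous.
    return [item for group in ordered for item in group]
```

For example, `administration_order([["a1", "a2"], ["b1"]], shuffle_groups=True, seed=1)` returns the two groups in a seeded random order while keeping `a1` and `a2` adjacent.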

In the study set-up area, users create parameters for study administration, including the name of a study-specific Website, study opening and closing dates, and accrual goals. Customization of instruments (e.g., clustering instruments, randomization) is enabled, as is creation of multiple assessments and study arms. Users also have the ability to enter online consent and pediatric assent forms.

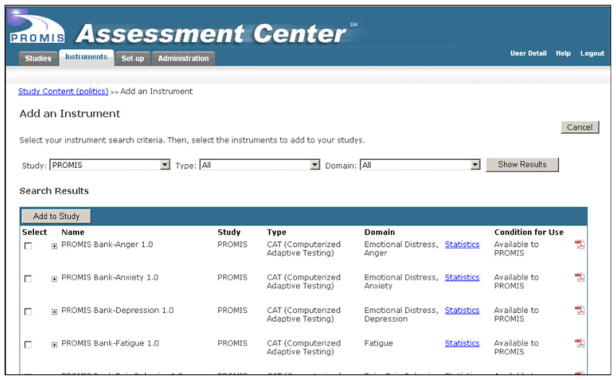

The administration area of Assessment Center is utilized once data collection has commenced. It includes accrual reports, participant registration information, data screens, and a data dictionary/codebook. Export options include assessment data, assessment scores, registration data, consent data, and pivoted assessment data. PROMIS Profile and CAT Reports showing scale levels in both text and graphical formats (see Fig. 3) are also available.

Fig. 3. PROMIS profile report
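The difference between the standard and pivoted assessment exports can be pictured as a reshaping from one row per response to one row per participant. The sketch below is only an illustration under that assumption; the actual export formats and column names used by Assessment Center are not specified here, and `pivot_responses`, `participant_id`, and the item ids are hypothetical.

```python
def pivot_responses(long_rows):
    """Reshape (participant_id, item_id, response) rows into a wide table.

    Returns a list of rows: a header row followed by one row per participant,
    with one column per item in order of first appearance. Missing responses
    are left as empty strings.
    """
    item_ids = []
    wide = {}
    for participant_id, item_id, response in long_rows:
        if item_id not in item_ids:
            item_ids.append(item_id)
        wide.setdefault(participant_id, {})[item_id] = response
    table = [["participant_id"] + item_ids]
    for participant_id, answers in wide.items():
        table.append([participant_id] + [answers.get(i, "") for i in item_ids])
    return table
```

Given the rows `("p1", "itemA", 3)`, `("p1", "itemB", 1)`, and `("p2", "itemA", 5)`, the function returns a header row plus one row each for `p1` and `p2`, with an empty cell where `p2` has no response to `itemB`.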

Software development

In order to create a software application that would meet end-user needs and have a high potential for being adopted by the clinical research community, proven analysis tools and methodologies for specifying software requirements [9] were applied. Input from users and stakeholders was sought at the outset to avoid common software development pitfalls. The process included identifying software requirements, methodically outlining the functionality of each requirement, drafting programming, testing each feature, and collecting end-user feedback. Each of these steps is described in the following sections.

Joint Application Design

Software requirements were gathered between December 2005 and March 2006 during half-day, on-site Joint Application Design (JAD) sessions conducted by a senior business analyst. JAD can be broadly defined as a workshop in which software users and development teams gather to openly discuss project plans, software requirements and user interface designs [10]. These sessions adhered to widely accepted business analysis principles and Six Sigma tools, a methodology used to enable decision-making and manage quality [11]. The JAD sessions were held at PROMIS Primary Research Sites (PRSs) including the University of Washington, University of Pittsburgh, Duke University, Stanford University, Stony Brook University and NorthShore University HealthSystem. Attendees included a total of 81 principal investigators, co-investigators, psychometricians, statisticians, data managers, project managers, and research assistants. The purpose of the JAD sessions was to collect Voice of the Customer data, conduct Critical-to-Quality and Risk Analyses, and create Process Maps.

Voice of the Customer interviews were conducted to define users’ wants and needs based on the background, general structure, and expertise of each PRS [12]. As different team members explained their professional functions and deliverables, the business analyst was able to determine common themes, goals, and challenges that needed to be addressed by the software. Critical to Quality (CTQ) Analysis is a Six Sigma tool used to analyze factors which must be present in order for new software to be deemed a success by its users. The goal of CTQ Analysis was to determine the perceived end-users of the software and the characteristics of deliverables which would have the greatest positive impact on user satisfaction. PRS respondents stated that the software had to be accurate, secure, valid, easy to use, reliable, widely adopted, and accessible.

JAD sessions included an analysis of risks. Common risks identified by PRS team members included concerns such as: elimination of funding; difficult to use system; data loss; lack of data security; the system does not perform as needed; inadequate training; and the system becomes obsolete. Finally, the JAD session workshops included mapping current processes of all seven PRSs. Process maps detail the current business workflow so that the future workflow can be improved via technology [13, 14]. PRS process maps were reviewed, analyzed, and combined into a comprehensive baseline process map. This was utilized as the main guide for the development of use cases (i.e., a step-by-step look at how a user will interact with the software) [15, 16].

A total of 321 features were identified during the JAD sessions. Each requirement was ranked in importance and was prioritized into two broad development deadlines: PROMIS-I grant period (by 2009) and future (after 2009). A functional specification manual that outlined features and requirements by priority level was compiled to assist in meeting delivery deadlines.

Use case development

A business team consisting of the PROMIS Statistical Coordinating Center’s (SCC) Director of Psychometrics and Informatics, the SCC Project Director and the SCC Informatics Project Manager was formed to lead the next phase of software development. Under the leadership of a business analyst, the team spent time detailing the functionality and configuring the screen layout (i.e., wire-frames) of the user interface. Additional input was sought from PROMIS team members whose roles were associated with particular features and one or more software developers.

After the business team approved a final version of a use case, it was reviewed by two of the PROMIS science officers at the NIH, the SCC principal investigator, a PRS principal investigator, a PRS project manager, and heuristic experts. Suggested revisions informed changes to existing use cases and modifications to the conceptualization and implementation of future use cases.

Agile software strategies including scrum, user acceptability testing (UAT), and quality assurance testing [17, 18] were utilized to promote communication between team members, provide early feedback on developed features, and test the software to ensure it worked as planned. Scrum is a daily meeting held between the development team and business team where all participants discuss recent, current and expected activities and issues related to software development. Scrum enabled the project manager to ensure that no duplication of work was being done, all team members were familiar with their assigned tasks, and that roadblocks were handled efficiently.

UAT was held on a weekly basis with the business team, business analyst, and lead developer. Each week’s completed development work was the focus of the testing sessions. During these sessions, the team would indicate, where applicable, development activities which did not adhere to functionality detailed in the business requirements and request design changes and further development on a particular feature.

Quality Assurance testing (QA) was done on a continuous basis. After the development team completed a feature from a use case, the business analyst would conduct a variety of tests to ensure the feature worked properly and met all specifications. After the analyst was confident in the feature, the project manager would conduct additional QA prior to UAT. QA was also conducted on a larger scale throughout development by the project manager, a senior user, and an outside contractor to ensure newly developed features did not interfere with existing features.

End-user testing and perspectives

In addition to testing and review within the PROMIS network, it was considered critical to also solicit feedback from potential end-users concerning the usability, acceptability, and accessibility of the system.

Usability and acceptability testing

In September 2007, a training workshop was conducted to introduce Assessment Center to clinical researchers who were potential end-users and to solicit their feedback [19]. Data collection included observation, usability testing, and group discussion to determine how well the application responded to the participants’ needs and how likely they would be to use it. Eighteen workshop participants were recruited by targeting six clinical networks that focus on mental health, cancer, rheumatology, cardiology, neurology, and pain.

The workshop began with an introduction to PROMIS and an overview of IRT and CAT, followed by a demonstration of Assessment Center. Project staff observed all training and testing activities to capture participants’ reactions, especially with regard to system problems, strengths, and suggestions for improvements. These comments were included as probes in the afternoon’s group discussions.

Findings from the observations, usability tests and group discussions demonstrated overall enthusiasm for Assessment Center. Participants listed several benefits of the application including its ability to reduce missing data, collect data remotely, organize and provide access to hundreds of measures, assess quality of life with standard measures, add custom questions, and facilitate communication and test administration. Workshop participants appreciated the ability to access short forms that are valid and reliable, though it was noted that PROMIS instruments continue to be tested in validation studies. Most were in favor of adopting Assessment Center immediately. Although the majority of participants found Assessment Center to be straightforward and user-friendly, suggestions were made to improve system functionality, navigation, and interface design. None of the participants felt that the issues they experienced with respect to functionality, navigation, and interface dissuaded them from using Assessment Center [19].

Accessibility testing

The PROMIS PRS at the University of Washington conducted additional reviews to ascertain issues regarding accessibility to Assessment Center for subjects with disabilities [20]. Two reviews, one by two experts on accessibility standards and one by fourteen individuals with visual, motor, or reading impairments, were conducted to assess the usability and accessibility of an example study’s data collection Website for patients/research participants. Experts were asked to review whether Assessment Center complied with Section 508 of the Rehabilitation Act of 1973 [21]. Both experts agreed that all relevant criteria were met including (1) text equivalents provided for non-text elements, (2) no required style sheets, and (3) appropriate use of client-side scripting. Style sheets provide internet browsers with information on how HTML information is to be displayed on the screen [22, 23]. Required style sheets could interfere with assistive technology that modifies the presentation of information to increase the readability of a screen.

In the second accessibility review, fourteen subjects were divided equally between individuals with visual impairments (low vision and blindness), motor/mobility impairments that make navigation on the computer difficult or require alternate input devices, and those with difficulty reading. A semi-structured interview was conducted by an experienced qualitative researcher. Subjects were asked to (1) access an example study’s data collection Website, (2) complete the registration screens, and (3) complete approximately 30 PROMIS items.

Overall, the results suggest that the study-specific Website screens were intuitive and easy to use for individuals with various functional limitations. Participants were able to successfully complete all three tasks without requiring additional instruction. Suggestions for improvement focused on (1) issues with adaptive technology and (2) improving the user-friendliness of screens for non-researchers. For example, participants had minor difficulty with zooming (i.e., registration screen text could not be resized) and requested that date fields be masked to increase compliance with the requested data entry format.

Software modifications

All suggested changes from the usability and accessibility reviews were compiled and reviewed by the business team. Options for improving training materials or increasing the intuitiveness of where a function was located in the system were discussed. Next, changes were prioritized based on how frequently they were mentioned, their degree of fit with the intended scope of Assessment Center, and the amount of time needed for completion. Most suggested changes were implemented in subsequent releases of Assessment Center (see Table 2, Release 2.1 onward). Remaining changes were included in feature lists for future release (see Table 2, Modifications Scheduled for Future Release).

Research implications of Assessment Center

There are a number of important implications of the PROMIS initiative and Assessment Center for the clinical research enterprise. Assessment Center provides clinical researchers with limited IRT or information technology background with the tools to take advantage of IRT and CAT methodologies for more efficient and precise measurement of PROs. The design of Assessment Center enables researchers to combine PROMIS item banks with other PRO measures to provide a seamless computerized administration of all study instruments to patients. Remote accessibility of the Web-based Assessment Center potentially reduces the number of face-to-face interviews in a study, or increases the number of possible assessment points, since study participants can access and complete the assessments remotely.

As important as these advantages are for individual clinical researchers, a significant advantage of PROMIS and Assessment Center is the ability to easily share and merge data across trials. Researchers using the PROMIS item banks can directly compare results across trials on the same metric, and crosswalks of PROMIS item banks with legacy scales allow comparison even to those studies that do not use PROMIS tools. Data output from Assessment Center is standardized and currently being formatted to be consistent with common data sharing standards to further facilitate data sharing across studies.

As Assessment Center was constructed to easily integrate additional publicly available measurement tools into the instrument library, plans are underway to add CATs and short forms from the National Cancer Institute-funded Cancer PROMIS Supplement [24] and the National Institute of Neurological Disorders and Stroke (NINDS)-funded Quality of Life in Neurological Disorders (HHSN 2652004236-02C; www.neuroqol.org) studies. Additionally, 40 objective and self-report measures to assess cognitive, motor, emotional health, and sensory function for the NIH Toolbox for the Assessment of Neurological and Behavioral Function [25] will be included. CATs and short forms developed for patients with spinal cord injury and traumatic brain injury (SCI-CAT—H133N060024, SCI-QOL—5R01HD054659, and TBI-QOL—H133G070138) will also be made available.

Although the advantages to clinical research are considerable, there are also important implications of PROMIS and Assessment Center in clinical practice. PROs are typically difficult to obtain and use in clinical practice, due to logistical complexities of consistent administration and the need for immediate scoring and summarization of responses. These challenges limit the utility of PROs during the clinical visit [26–28]. With Assessment Center, patients can complete the PROs before the visit or even in the waiting room with minimal staff burden. A summary of the results can be made available in real time to the clinician who in turn can adjust treatment based on psychometrically sound measures. Patient progress, particularly for patients in underserved areas, could be monitored remotely with clinical contact triggered when patient progress is not as expected. Large health organizations could use this type of system to track patient outcomes for quality improvement efforts. With appropriate safeguards and patient protections, respondent data from clinical practice sites could provide a rich repository of data for clinical research [29, 30].

Success of development process

Utilization of this rigorous software development process has been successful. The initial release of Assessment Center was available ahead of the projected timeline. In 2009, Assessment Center was used to collect 1,750,000 responses from 17,000 subjects participating in one of 261 active studies. Today, over 1,000 researchers representing hundreds of institutions and many countries have registered within the software system and nearly 300 researchers have attended training sessions. Indeed, in 2009, a second wave of NIH funding through the Roadmap Initiative was awarded to continue improving the software and educating end-users both within and outside of the PROMIS network. This funding will enable increased availability of technical support, quality assurance and programmer resources to meet the needs of a growing user base.

Acknowledgments

Primary funding for the Assessment Center application has been provided as part of several federally funded projects sponsored by the National Institutes of Health including the Patient-Reported Outcomes Measurement Information System (PROMIS; U01 AR052177), Neurological Quality of Life (Neuro-QOL; HHSN 2652004236-01C), Refining and Standardizing Health Literacy Assessment (R01 HL081485-03), the NIH Toolbox for the Assessment of Neurological and Behavioral Function (AG-260-06-01) and a recent award as the PROMIS Technology Center (U54AR057943). The authors would like to acknowledge the editorial assistance of Lani Gershon.

Abbreviations

- CAT: Computerized adaptive test
- CTQ: Critical to quality
- IRT: Item response theory
- JAD: Joint application design
- NIH: National Institutes of Health
- NINDS: National Institute of Neurological Disorders and Stroke
- PRO: Patient-reported outcomes
- PROMIS: Patient-reported outcomes measurement information system
- PRS: Primary research sites
- QA: Quality assurance
- SCC: Statistical coordinating center
- UAT: Usability acceptability testing

Contributor Information

Richard Gershon, Email: gershon@northwestern.edu, Department of Medical Social Sciences, Northwestern University, 625 North Michigan Avenue, Suite 2700, Chicago, IL 60611, USA.

Nan E. Rothrock, Email: n-rothrock@northwestern.edu, Department of Medical Social Sciences, Northwestern University, 625 North Michigan Avenue, Suite 2700, Chicago, IL 60611, USA

Rachel T. Hanrahan, Department of Medical Social Sciences, Northwestern University, 625 North Michigan Avenue, Suite 2700, Chicago, IL 60611, USA

Liz J. Jansky, Westat, Rockville, MD, USA

Mark Harniss, University of Washington, Seattle, WA, USA.

William Riley, National Heart, Lung, and Blood Institute, Bethesda, MD, USA.

References

1. Zerhouni E. Medicine. The NIH roadmap. Science. 2003;302(5642):63–72. doi: 10.1126/science.1091867.

2. Cella D, Yount S, Rothrock N, Gershon R, Cook K, Reeve B, et al. The patient-reported outcomes measurement information system (PROMIS): Progress of an NIH roadmap cooperative group during its first two years. Medical Care. 2007;45(5 Suppl 1):S3–S11. doi: 10.1097/01.mlr.0000258615.42478.55.

3. DeWalt DA, Rothrock N, Yount S, Stone AA. Evaluation of item candidates: The PROMIS qualitative item review. Medical Care. 2007;45(5 Suppl 1):S12–S21. doi: 10.1097/01.mlr.0000254567.79743.e2.

4. Cella D, Riley W, Stone AA, Rothrock N, Reeve BB, Yount S, et al. Initial item banks and first wave testing of the patient reported outcomes measurement information system (PROMIS) network: 2005–2008. Journal of Clinical Epidemiology. 2009. In press. doi: 10.1016/j.jclinepi.2010.04.011.

5. Cella D, Gershon R, Lai JS, Choi S. The future of outcomes measurement: Item banking, tailored short-forms, and computerized adaptive assessment. Quality of Life Research. 2007;16(Suppl 1):133–141. doi: 10.1007/s11136-007-9204-6.

6. Lai JS, Cella D, Chang CH, Bode RK, Heinemann AW. Item banking to improve, shorten and computerize self-reported fatigue: An illustration of steps to create a core item bank from the FACIT-Fatigue Scale. Quality of Life Research. 2003;12(5):485–501. doi: 10.1023/a:1025014509626.

7. Davis KM, Chang CH, Lai JS, Cella D. Feasibility and acceptability of computerized adaptive testing (CAT) for fatigue monitoring in clinical practice. Quality of Life Research. 2002;11(7):134.

8. Ware JE, Jr, Kosinski M, Bjorner JB, Bayliss MS, Batenhorst A, Dahlof CG, et al. Applications of computerized adaptive testing (CAT) to the assessment of headache impact. Quality of Life Research. 2003;12(8):935–952. doi: 10.1023/a:1026115230284.

9. O’Carroll PW, O’Carroll PW, Yasnoff WA, Ward ME, Ripp LH, Martin EL. Information architecture. In: Hannah KJ, Ball MJ, editors. Public health informatics and information systems. New York: Springer; 2003. pp. 85–97.

10. Davidson EJ. Joint application design (JAD) in practice. Journal of Systems and Software. 1999;45(3):215–223.

11. Schroeder RG, Linderman K, Liedtke C, Choo AS. Six sigma: Definition and underlying theory. Journal of Operations Management. 2008;26:536–554.

12. Lane JP, Usiak DJ, Stone VI, Scherer MJ. The voice of the customer: Consumers define the ideal battery charger. Assistive Technology. 1997;9(2):130–139. doi: 10.1080/10400435.1997.10132304.

- 13.Sharp A, McDermott P. Workflow modeling: Tools for process improvement and application development. Boston: Artech House; 2001. [Google Scholar]

- 14.Reijers HA. Design and control of workflow processes: Business process management for the service industry. Berlin; New York: Springer; 2003. [Google Scholar]

- 15.Bittner K, Spence I. Use case modeling. Boston: Addison-Wesley; 2003. [Google Scholar]

- 16.Kulak D, Guiney E. Use cases: Requirements in context. Boston, Mass: Addison-Wesley; 2004. [Google Scholar]

- 17.Cockburn A. Agile software development. Boston: Addison-Wesley; 2002. [Google Scholar]

- 18.Highsmith JA. Agile project management: Creating innovative products. Boston: Addison-Wesley; 2004. [Google Scholar]

- 19.Jansky LJ, Huang JC. A multi-method approach to assess usability and acceptability: A case study of the patient-reported outcomes measurement system (PROMIS) workshop. Social Science Computer Review. 2009;27(2):267–270. [Google Scholar]

- 20.Harniss MK, Amtmann D. Patient reported outcomes measurement information system (PROMIS) network study: Accessibility of the PROMIS computer adaptive testing system. Seattle: University of Washington Center on Outcomes in Rehabilitation Research; 2008. [Google Scholar]

- 21.Section 508 of the Rehabilitation Act, as amended by the Workforce Investment Act of 1998 (P.L. 105–220)(1998).

- 22.Lie HW, Bos B. Cascading style sheets: Designing for the web. Boston: Addison-Wesley Professional; 2005. [Google Scholar]

- 23.Meyer EA. Cascading style sheets: The definitive guide. Sebastopol, CA: O’Reilly & Associates; 2006. [Google Scholar]

- 24.Garcia SF, Cella D, Clauser SB, Flynn KE, Lai JS, Reeve BB, et al. Standardizing patient-reported outcomes assessment in cancer clinical trials: A patient-reported outcomes measurement information system initiative. Journal of Clinical Oncology. 2007;25(32):5106–5112. doi: 10.1200/JCO.2007.12.2341. [DOI] [PubMed] [Google Scholar]

- 25.Gershon RG. NIH toolbox: Assessment of neurological and behavioral function. NIH (Contract HHS-N-260-2006 00007-C) 2007 from http://www.nihtoolbox.org.

- 26.Chang CH. Patient-reported outcomes measurement and management with innovative methodologies and technologies. Quality of Life Research. 2007;16(Suppl 1):157–166. doi: 10.1007/s11136-007-9196-2. [DOI] [PubMed] [Google Scholar]

- 27.Davis KM, Cella D. Assessing quality of life in oncology clinical practice: A review of barriers and critical success factors. Journal of Clinical Outcomes Management. 2002;9(6):327–332. [Google Scholar]

- 28.Ruta D, Coutts A, Abdalla M, Masson J, Russell E, Brunt P, et al. Feasibility of monitoring patient based health outcomes in a routine hospital setting. Quality in Health Care. 1995;4(3):161–165. doi: 10.1136/qshc.4.3.161. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.DesRoches CM, Campbell EG, Rao SR, Donelan K, Ferris TG, Jha A, et al. Electronic health records in ambulatory care–a national survey of physicians. New England Journal Medicine. 2008;359(1):50–60. doi: 10.1056/NEJMsa0802005. [DOI] [PubMed] [Google Scholar]

- 30.Overhage JM, Evans L, Marchibroda J. Communities’ readiness for health information exchange: The National Landscape in 2004. Journal of the American Medical Informatics Association. 2005;12(2):107–112. doi: 10.1197/jamia.M1680. [DOI] [PMC free article] [PubMed] [Google Scholar]