Abstract

Two experiments examined the effects of multimodal presentation and stimulus familiarity on auditory and visual processing. In Experiment 1, 10-month-old infants were habituated to an auditory stimulus, a visual stimulus, or an auditory-visual multimodal stimulus. Processing time was assessed during the habituation phase, and discrimination of auditory and visual stimuli was assessed in a subsequent testing phase. In Experiment 2, the familiarity of the auditory or visual stimulus was systematically manipulated by pre-familiarizing infants to either the auditory or the visual stimulus prior to the experiment proper. With the exception of the pre-familiarized auditory condition in Experiment 2, infants in the multimodal conditions failed to increase looking when the visual component changed at test. This finding is noteworthy given that infants discriminated the same visual stimuli when presented unimodally, and there was no evidence that multimodal presentation attenuated auditory processing. Possible factors underlying these effects are discussed.

Infants live in a multimodal world where they frequently encounter information presented to multiple sensory modalities. In some situations infants ably process and integrate information across sensory modalities. For example, young infants can associate words with arbitrarily paired objects, they can integrate auditory and visual input when perceiving speech, and auditory input can even facilitate visual processing (e.g., Kuhl & Meltzoff, 1982; Schafer & Plunkett, 1998; Sloutsky & Robinson, 2008; see also Lewkowicz, 2000, and Lickliter & Bahrick, 2000, for reviews). At the same time, there are also many situations in which presenting stimuli to multiple sensory modalities interferes with learning. For example, infants and young children are often better at processing the details of a stimulus when it is presented unimodally than when the same stimulus is presented multimodally (Lewkowicz, 1988a; 1988b; Napolitano & Sloutsky, 2004; Robinson & Sloutsky, 2004; Sloutsky & Napolitano, 2003; Sloutsky & Robinson, 2008). The current study examines possible factors that might account for young infants’ difficulties in processing multimodal information.

There are at least three possible reasons why multimodal presentation might attenuate (or delay) learning. First, multimodal stimuli often contain more information than unimodal stimuli. For example, in a unimodal visual task infants are required to encode and store a visual stimulus; whereas, in multimodal tasks such as word learning, infants are often required to encode and store a simultaneously presented word and object and also form associations across sensory modalities. This increase in processing demands or “cognitive load” in multimodal tasks may make it difficult for infants to process the details of a stimulus (e.g., Casasola & Cohen, 2000; Stager & Werker, 1997).

Second, the intersensory redundancy hypothesis also makes predictions concerning how multimodal stimuli are processed. In many situations multimodal stimuli can provide amodal or redundant information. For example, the rate at which a ball bounces can be experienced both visually and auditorily. According to the intersensory redundancy hypothesis (Bahrick & Lickliter, 2000; see Bahrick, Lickliter, & Flom, 2004 for a review), when infants are presented with multimodal stimuli, such that each modality expresses the same amodal relation, this redundant information is particularly salient. Infants direct their attention to the amodal information before processing information that can only be experienced in a single modality (e.g., the color of an object). Because modality-specific information is initially pushed to the background of attention when it is presented multimodally, the intersensory redundancy hypothesis predicts that multimodal stimuli containing amodal relations should be acquired first, followed by learning of modality-specific information (but see Lewkowicz & Schwartz, 2002).

Finally, auditory dominance may also account for some of infants’ difficulties in processing multimodal information (Robinson & Sloutsky, 2004; Sloutsky & Napolitano, 2003). The auditory dominance account makes several assumptions. First, because auditory input is typically more transient than visual input, it seems adaptive to first allocate attention to these dynamic stimuli. Second, the auditory dominance account assumes that attention is allocated to multimodal stimuli in a serial manner, with infants first encoding the details of the auditory (or dynamic) stimulus before encoding the details of a visual stimulus. Finally, this account predicts that auditory stimuli that are faster to release attention (e.g., simple or familiar stimuli) should exert less interference than auditory stimuli that are slower to release attention (e.g., complex or unfamiliar stimuli).

Increased processing demands, intersensory redundancy, and auditory dominance all make predictions concerning how multimodal presentation should affect learning; however, the accounts differ in several important ways. For example, while increased cognitive load and intersensory redundancy are agnostic to the direction of interference effects, the auditory dominance account predicts that multimodal presentation will attenuate visual processing more than auditory processing. The three accounts also make different predictions concerning which type of multimodal stimuli will attenuate learning. For example, while the intersensory redundancy hypothesis makes it explicit that infants presented with multimodal stimuli consisting of amodal relations should first process the amodal information before processing the modality-specific information, the cognitive load and auditory dominance accounts do not discriminate between multimodal stimuli that contain amodal and arbitrary relations. Finally, the cognitive load and auditory dominance accounts both predict that increasing the familiarity of the stimuli should decrease processing demands and attenuate interference effects. The primary aim of the current study is to compare the ability of these accounts to explain phenomena associated with multimodal presentation of stimuli.

A second goal of the current study is to test the generalizability of auditory dominance. While several methodologies have been used to examine processing of arbitrary auditory-visual pairings, most of the infant studies supporting auditory dominance have employed fixed-trial familiarization procedures (Robinson & Sloutsky, 2004; 2007a; 2007b; 2008; Sloutsky & Robinson, 2008). One potential concern is that fixed-trial duration procedures may be biased in favor of the auditory modality because infants could accumulate more exposure to auditory stimuli in the course of familiarization. In particular, while looking away from the screen in fixed-trial duration procedures terminates processing of the visual stimulus, infants can still hear the auditory stimulus. It could also be argued that processing of multimodal stimuli is more complex than processing of unimodal stimuli, and therefore an experimental procedure should be used to equate for differential processing demands. Both of these issues were addressed in the current study by using an infant-controlled habituation procedure (see Horowitz, Paden, Bhana, & Self, 1972, for a description of the paradigm).

The current study employed an infant-controlled habituation procedure to examine how multimodal presentation affects auditory and visual processing in 10-month-old infants. In Experiment 1, encoding of the same auditory and visual stimuli was assessed under three different stimulus conditions: (a) multimodal condition, (b) unimodal auditory condition and (c) unimodal visual condition. According to the auditory dominance account, interference effects should be asymmetrical with multimodal presentation attenuating visual processing more than auditory processing. In Experiment 2, we manipulated the familiarity of the auditory and visual stimulus prior to the experiment proper. Increasing the familiarity of the auditory and visual components should decrease processing demands and attenuate interference effects (Fennell, 2006; Robinson & Sloutsky, 2007a; Sloutsky & Robinson, 2008).

EXPERIMENT 1

Method

Participants

Sixty-four 10-month-olds (33 boys and 31 girls, M = 300.86 days, SD = 61.48 days) participated in this experiment. Parents’ names were collected from local birth announcements, and contact information was obtained through local directories. All children were full-term (i.e., > 2500g birth weight) with no auditory or visual deficits, as reported by parents. A majority of infants were Caucasian. Thirty-six additional infants were tested but not included in the current experiment due to fussiness (n = 18) or because they failed to reach the habituation criterion (n = 18).

Materials and Design

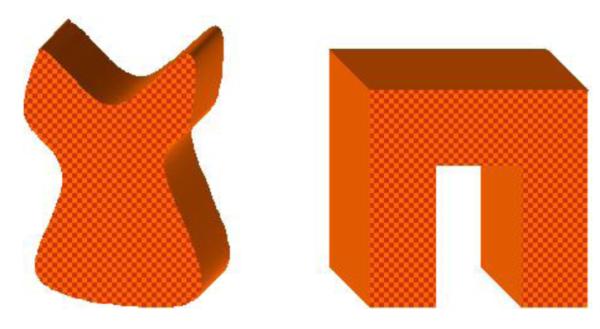

Infants in the current study were either presented with two auditory stimuli (n = 16), two visual stimuli (n = 17), or two auditory-visual pairings (n = 31). The auditory components were melodies (each presented for a total duration of 1 s), with each melody consisting of a sequence of three musical notes (either D, F#, A or D, B, G). Melodies were generated using Creative SoundFont Bank Manager and were saved as 16-bit, 44.1 kHz wav files. These stimuli were presented to infants at 65-70 dB. Visual stimuli (also presented for 1 s) were three-dimensional pictures of shapes created in Microsoft Word and saved as 400 × 400 pixel jpeg files (see Figure 1 for visual stimuli). The same auditory and visual stimuli were used across unimodal and multimodal conditions, and the selection of the training and testing stimuli was counterbalanced across subjects (e.g., half of the infants in the unimodal visual condition were habituated to V1 and tested on V2, and the remaining infants were habituated to V2 and tested on V1).

Figure 1.

Visual stimuli presented in Experiments 1 and 2.

Apparatus

Infants sat on parents’ laps approximately 100 cm away from a 152 cm × 127 cm projection screen. A NEC GT2150 LCD projector presented images to the infants and was mounted on the ceiling approximately 30 cm behind the infant (130 cm away from the projection screen). Two Boston Acoustics 380 speakers presented auditory stimuli to infants. These speakers were 76 cm apart from each other and mounted in the wall at the infant’s eye level. The projector and speakers received visual and auditory input from a Dell Dimension 8200 computer, which was controlled by custom-designed software created in Macromedia Director MX. This computer was also used to record visual fixations. Fixations were recorded online by pressing the spacebar when infants were looking at the stimulus and by releasing the spacebar when infants looked away from the stimulus. Test trials from a random sample of 25% of the infants were coded offline; reliability between online and offline raters across all reported experiments was r = .97.

Procedure

Infants in the multimodal condition were habituated to an auditory-visual compound stimulus. Each habituation trial began with a fixation stimulus (i.e., a red pulsating circle with corresponding beeping sound), which was presented centrally on the projection screen. When the infant looked at the fixation stimulus, it disappeared, and the habituation stimulus was presented. To ensure that differences in encoding auditory and visual stimuli did not stem from infants accumulating more exposure to one modality, stimulus duration was equated by synchronizing the timing and duration of auditory and visual images. Auditory and visual stimuli were simultaneously presented for 1 s with a 0.5 s inter-stimulus interval. The combined 1.5 second iteration (stimulus plus ISI) looped continuously until the infant looked away for 2 consecutive seconds or until the infant accumulated 120 s of looking on a single trial. The habituation phase continued until the infant reached the habituation criterion or until the infant accumulated 12 habituation trials. Habituation criterion was met when the mean looking on three consecutive trials dropped to 50% of initial looking (i.e., averaged looking on first three trials). Only infants who met the habituation criterion were included in the final sample.
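The habituation criterion described above can be illustrated with a minimal Python sketch. It is not the software used in the study (which was written in Macromedia Director MX); the function name `habituation_trial` and the example looking times are hypothetical. The sketch also assumes that the three criterion trials may not overlap with the three baseline trials, a detail the text does not specify.

```python
from statistics import mean

MAX_TRIALS = 12        # habituation phase ends after at most 12 trials
CRITERION_RATIO = 0.5  # mean looking must drop to 50% of initial looking
WINDOW = 3             # three-trial windows for baseline and criterion


def habituation_trial(looking_times):
    """Return the 1-based trial on which the criterion is met, or None.

    looking_times: accumulated looking (in seconds) on each habituation trial.
    """
    trials = looking_times[:MAX_TRIALS]
    if len(trials) < WINDOW:
        return None
    baseline = mean(trials[:WINDOW])  # averaged looking on the first three trials
    # Assumption: the criterion window does not overlap the baseline trials,
    # so the earliest trial on which the criterion can be met is trial 6.
    for end in range(2 * WINDOW, len(trials) + 1):
        if mean(trials[end - WINDOW:end]) <= CRITERION_RATIO * baseline:
            return end
    return None


# Hypothetical infant: baseline is 30 s, so the criterion is a mean of 15 s or less.
print(habituation_trial([30, 28, 32, 20, 14, 12, 10]))
```

An infant whose looking never drops below the threshold within 12 trials yields `None`, which corresponds to exclusion from the final sample.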

After reaching the habituation criterion, infants immediately moved into the testing phase. There were four test trials and the order was randomized for each infant. One test trial was identical to the habituation stimulus in that the auditory and visual components were the same as the habituation components (i.e., Old Target). The other three test stimuli consisted of novel stimuli. On Changed Visual trials, only the visual component changed (i.e., Changed Visual/Old Auditory). On Changed Auditory trials, only the auditory component changed (i.e., Changed Auditory/Old Visual). On Changed Both trials, both components changed (i.e., Changed Auditory/Changed Visual). As in previous research (Robinson & Sloutsky, 2004; Sloutsky & Robinson, 2008), infants were briefly re-familiarized to the habituation stimulus during the testing phase. In particular, during the testing phase the computer randomly presented infants with two of the four test trials. The next three trials were always identical to the habituation stimulus. The computer then randomly presented the remaining two test trials.
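The trial sequence in the testing phase can be sketched as follows. This is only an illustration under stated assumptions: the function name `testing_phase_order` and the label `"Habituation"` for the re-familiarization trials are hypothetical, and the original presentation software is not reproduced here.

```python
import random

TEST_TRIALS = ["Old Target", "Changed Visual", "Changed Auditory", "Changed Both"]
REFAMILIARIZATION = ["Habituation"] * 3  # three trials identical to the habituation stimulus


def testing_phase_order(rng=None):
    """Return a 7-trial sequence: 2 random test trials, 3 re-familiarization
    trials, then the remaining 2 test trials in random order."""
    rng = rng or random.Random()
    shuffled = rng.sample(TEST_TRIALS, k=len(TEST_TRIALS))
    return shuffled[:2] + REFAMILIARIZATION + shuffled[2:]


print(testing_phase_order())
```

Passing a seeded `random.Random` instance makes the order reproducible for a given infant.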

Infants in the unimodal visual condition were habituated and tested on the same pulsating images; however, visual stimuli were not paired with sounds during the habituation phase or during the testing phase. As in the multimodal condition, each trial began with a fixation stimulus (pulsating red circle and sound). When infants looked to the fixation stimulus, it disappeared, and was replaced by the habituation stimulus. After reaching the habituation criterion, infants were given two test trials (i.e., Old Target and Changed Visual), which were randomized for each infant.

Infants in the unimodal auditory condition were habituated and tested on the same sounds that were presented in the multimodal condition; however, auditory stimuli were not paired with the visual images presented in Figure 1. Each habituation and testing trial began with a fixation stimulus (pulsating red circle and sound). When infants looked to the fixation stimulus, the red circle stopped pulsating and the auditory stimulus was presented. Auditory processing during the habituation phase and auditory discrimination during the testing phase was assessed by recording infants’ looking to the static red circle. After reaching the habituation criterion, infants were given two test trials (i.e., Old Target and Changed Auditory), which were randomized for each infant.

Results and Discussion

Analyses focused on infants’ processing times during the habituation phase and on infants’ discrimination of auditory and visual stimuli in the testing phase. Accumulated looking time during the habituation phase served as a measure of processing time (see Table 1 for Means and Standard Errors). A one-way ANOVA with condition as a between subjects factor revealed that infants’ accumulated looking times differed across the three conditions, F (2, 61) = 4.37, p = .05. An independent-sample t test revealed that infants in the multimodal condition accumulated more overall looking compared to infants in the unimodal auditory condition, t (45) = 2.73, p = .01. No other effects reached significance.

Table 1.

Mean accumulated looking during the habituation phase and difference scores (compared to old target) during the testing phase. All difference scores are presented in seconds, and Standard Errors of the Mean are reported in parentheses.

| Stimulus Condition (Experiment) | Habituation Phase: Accumulated Looking | Testing Phase: Changed Auditory | Testing Phase: Changed Visual | Testing Phase: Changed Both |
|---|---|---|---|---|
| Unimodal Visual (1) | 82.99 (7.67) | -- | 3.64* (1.95) | -- |
| Unimodal Auditory (1) | 64.73 (7.41) | 2.95* (1.45) | -- | -- |
| Unfamiliar Sounds Unfamiliar Visual (1) | 101.06 (8.73) | 4.12* (1.36) | 1.79 (1.45) | 8.14* (2.23) |
| Pre-familiarized Sounds Unfamiliar Visual (2) | 134.86 (16.68) | 7.11* (1.62) | 2.03* (0.27) | 17.46* (3.57) |
| Pre-familiarized Visual Unfamiliar Sounds (2) | 112.56 (14.71) | 7.42* (1.95) | 0.46 (0.95) | 5.16* (2.79) |

Note: * denotes difference scores > 0, p < .05.

Test trials were analyzed to assess discrimination of auditory and visual stimuli across the different conditions. Recall that infants in the multimodal condition were randomly presented with four test items: Old Target, Changed Visual, Changed Auditory, and Changed Both. Three difference scores were created by subtracting accumulated looking to Old Target from accumulated looking on each of the three changed trials. If infants discriminated visual stimuli, then looking on Changed Visual should exceed looking to Old Target, resulting in difference scores greater than zero. If infants discriminated auditory stimuli, then looking on Changed Auditory should exceed looking to Old Target, resulting in difference scores greater than zero.
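As a small worked illustration of the difference scores, the Python sketch below subtracts looking to Old Target from looking on each changed trial. The function name `difference_scores` and the looking times are hypothetical and are not data from the study.

```python
def difference_scores(looking):
    """looking: dict mapping test trial name to accumulated looking (s).

    Returns looking on each changed trial minus looking to Old Target.
    """
    old_target = looking["Old Target"]
    return {
        trial: seconds - old_target
        for trial, seconds in looking.items()
        if trial != "Old Target"
    }


# Hypothetical looking times (in seconds) for one infant.
infant = {
    "Old Target": 5.0,
    "Changed Visual": 6.5,
    "Changed Auditory": 9.0,
    "Changed Both": 12.5,
}
print(difference_scores(infant))
```

A positive score indicates more looking to the changed stimulus than to the familiar one; a score near zero gives no evidence of discrimination.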

Difference scores in the multimodal condition were submitted to a one-way ANOVA with test trial as a repeated measure (see Table 1 for Mean difference scores and Standard Errors). The analysis revealed an effect of test trial, F (2, 60) = 3.97, p = .02. A paired t test revealed that infants increased looking more when both components changed at test (i.e., Changed Both trials) than when only the visual component changed, t (30) = 2.51, p = .02. Difference scores were also submitted to one-sample t tests to determine which test items differed from zero. Difference scores on Changed Auditory trials, t (30) = 3.03, p = .005, and on Changed Both trials, t (30) = 3.65, p = .001, both significantly differed from zero, whereas, difference scores on Changed Visual trials did not differ from zero, t (30) = 1.23, p = .23.
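For readers who wish to relate these one-sample t tests to Table 1, a one-sample t statistic against zero is the mean difference score divided by its standard error, with n − 1 degrees of freedom. The sketch below applies this to the rounded means and standard errors listed in Table 1 for the multimodal condition of Experiment 1; the function name `one_sample_t` is hypothetical, and because the table values are rounded the results can be expected to match the reported statistics only to within rounding.

```python
def one_sample_t(mean_diff, sem):
    """t statistic for testing a mean difference score against zero."""
    return mean_diff / sem


# (mean difference score, standard error) from Table 1, multimodal condition, n = 31.
table1_multimodal = {
    "Changed Auditory": (4.12, 1.36),
    "Changed Visual": (1.79, 1.45),
    "Changed Both": (8.14, 2.23),
}

for trial, (m, se) in table1_multimodal.items():
    print(f"{trial}: t(30) = {one_sample_t(m, se):.2f}")
```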

Although discrimination analyses suggest that infants in the multimodal condition did not discriminate the visual stimuli, they did discriminate these stimuli when presented unimodally (see Table 1 for Means and Standard Errors). Difference scores in the unimodal visual condition were calculated by subtracting looking times to Old Target from Changed Visual. Infants in this condition significantly increased looking when the visual component changed at test, with difference scores significantly different from zero, t (16) = 1.87, p = .04. Difference scores in the unimodal auditory condition were calculated by subtracting looking times to Old Target from Changed Auditory. Difference scores in this condition were also significantly different from zero, t (15) = 2.04, p = .03. Furthermore, an independent-sample t test revealed comparable discrimination across the unimodal conditions, t (31) = 0.28, p = .78.

In summary, while infants discriminated auditory and visual stimuli when presented unimodally, analyses of discrimination data suggest that multimodal presentation attenuated visual but not auditory processing. Therefore, results of Experiment 1 point to asymmetric costs of multimodal presentation: while processing of visual input was reduced compared to the unimodal baseline, processing of auditory input remained robust. The presence of costs could be explained by all three accounts of multimodal processing; however, only the auditory dominance account can explain the fact that the costs are asymmetric. The goal of Experiment 2 was to further examine the ability of the auditory dominance account to explain multimodal processing.

EXPERIMENT 2

According to the increased processing load account, giving infants an opportunity to process the auditory or visual component prior to presenting it multimodally should reduce processing demands and facilitate learning. For example, there is some evidence that pre-familiarizing infants to visual stimuli before pairing them with auditory stimuli helps infants make fine phonetic discriminations and retain word-object pairings (Fennell, 2006; Kucker & Samuelson, 2010). According to the auditory dominance account, infants often allocate attention to the auditory stimulus before shifting attention to the visual stimulus. Pre-familiarizing infants to the sounds prior to pairing them with a visual stimulus should result in the auditory modality releasing attention earlier in the course of processing, thus speeding up the onset of visual processing (Robinson & Sloutsky, 2007a).

The primary goal of Experiment 2 was to examine the role of auditory and visual familiarity in multimodal processing. The experiment was identical to the multimodal condition of Experiment 1 except that we pre-familiarized infants to either the auditory or the visual components prior to the experiment proper. The processing load and auditory dominance accounts both predict that infants in the current experiment should be more likely to discriminate auditory and visual stimuli than infants in the multimodal condition of Experiment 1. However, the serial processing assumption of the auditory dominance account (i.e., auditory input is processed prior to visual input) predicts that pre-familiarizing infants to auditory input may have greater effects than pre-familiarizing infants to visual input. No such prediction is made by the processing load account.

Method

Participants, Stimuli and Procedure

Forty-eight 10-month-olds (29 boys and 19 girls, M = 299.19 days, SD = 60.01 days) participated in this experiment. Participant recruitment and demographics were identical to Experiment 1. Twenty-two additional infants were tested but not included in the current experiment due to fussiness (n = 11) or because they did not reach the habituation criterion (n = 11). The stimuli and experiment were identical to the multimodal condition of Experiment 1, except that the familiarity of the auditory or visual stimulus was manipulated prior to the experiment.

In the current experiment, infants were either given an opportunity to hear the auditory stimuli prior to the experiment proper (n = 21) or they were given an opportunity to see the visual images prior to the experiment proper (n = 27). In the pre-familiarization phase, infants either heard each auditory stimulus 15 times or they saw each visual stimulus 15 times. Stimuli were presented unimodally during the pre-familiarization phase. After pre-familiarization, infants were given a short 2-3 minute break and then they participated in the experiment proper, which was identical to the multimodal condition of Experiment 1.

Results and Discussion

Analyses focused on infants’ processing times during the pre-familiarization and habituation phases and on infants’ discrimination of auditory and visual stimuli in the testing phase. An independent-sample t test revealed that accumulated looking times during the habituation phase did not differ between the two conditions (see Table 1 for Means and Standard Errors). Furthermore, infants’ looking to the screen during the auditory pre-familiarization phase (M = 27.45 s, SE = 2.46) did not differ from infants’ looking to the screen during the visual pre-familiarization phase (M = 23.64 s, SE = 2.40), and there was no evidence that pre-exposure to the auditory or visual input decreased processing time: infants in Experiment 2 did not require less time to process multimodal stimuli than infants in the multimodal condition of Experiment 1 (where both components were unfamiliar).

Difference scores were created by subtracting looking times to Old Target from the three changed trials (see Table 1 for Means and Standard Errors). A Condition (Pre-familiarized visual vs. Pre-familiarized auditory) × Test trial (Changed Visual, Changed Auditory, Changed Both) ANOVA revealed an effect of Test trial, F (2, 92) = 14.53, p = .001, an effect of Condition, F (1, 46) = 4.22, p = .046, and a Condition × Test trial interaction, F (2, 92) = 6.06, p = .003. In the pre-familiarized auditory condition, paired-sample t tests confirmed that difference scores on Changed Both trials exceeded those on Changed Auditory trials, t (20) = 3.02, p = .004, and those on Changed Visual trials, t (20) = 4.32, p = .001. Furthermore, difference scores on Changed Auditory trials were greater than those on Changed Visual trials, t (20) = 3.20, p = .003.

In the pre-familiarized visual condition of Experiment 2, paired-sample t tests confirmed that difference scores on Changed Auditory trials exceeded those on Changed Visual trials, t (26) = 3.79, p = .001, and that difference scores on Changed Both trials were marginally greater than those on Changed Visual trials, t (26) = 1.80, p = .084.

Difference scores were also submitted to one-sample t tests to determine which test items differed from zero. In the Pre-familiarized auditory condition, difference scores exceeded zero on Changed Auditory trials, t (20) = 4.38, p = .001, on Changed Visual trials, t (20) = 3.01, p = .006, and on Changed Both trials, t (20) = 4.90, p = .001. In contrast, in the pre-familiarized visual condition, difference scores exceeded zero only on Changed Auditory trials, t (26) = 3.80, p = .001, and on Changed Both trials, t (26) = 1.96, p = .06, whereas, difference scores on Changed Visual trials did not differ from zero, t (26) = 0.48, p = .66.

These findings further extend earlier reported effects of auditory familiarity on visual processing (Robinson & Sloutsky, 2007a; Sloutsky & Robinson, 2008), and they support the auditory dominance account: pre-familiarizing infants to the auditory input had greater effects on visual processing than pre-familiarizing infants to the visual input.

General Discussion

The current study reveals several important findings concerning the effects of multimodal presentation on auditory and visual processing. First, discrimination data suggest that multimodal presentation had asymmetric costs for auditory and visual processing: while multimodal presentation attenuated discrimination of the visual input, it did not attenuate discrimination of auditory input. Second, infants in the multimodal condition accumulated more looking during the habituation phase than infants in the unimodal auditory condition (Experiment 1). This finding suggests that some aspects of the visual stimulus (or multimodal stimulus) were attended to during the habituation phase. Finally, while pre-familiarizing infants to the auditory input attenuated auditory dominance effects, pre-familiarizing infants to the visual input did not appear to help infants discriminate the visual stimuli (Experiment 2).

Cognitive load, intersensory redundancy, and auditory dominance all predict that multimodal presentation can affect learning; however, only the auditory dominance account predicts that costs of multimodal presentation should be asymmetrical. Furthermore, auditory dominance can also account for the differences across the pre-familiarized auditory and pre-familiarized visual conditions. In particular, the auditory dominance account assumes that infants process the details of the auditory component prior to shifting their attention to the visual component. Increased familiarity with an auditory stimulus should correspond with faster processing (i.e., faster release of attention), which should allow for more time to process the visual stimulus. While familiarity with the auditory stimulus did not have any significant effect on accumulated looking during the habituation phase, it did correspond with better discrimination of visual stimuli. Recall that this was the only multimodal condition in which infants significantly increased looking on Changed Visual trials.

Infants in the multimodal conditions were trained and tested on multimodal stimuli; thus, it is unclear in the current study whether interference occurs during the encoding phase, during the testing phase, or both. Although this issue awaits resolution, our previously published findings indicate that auditory dominance effects persist when infants are trained on multimodal stimuli and tested on unimodal visual stimuli (Robinson & Sloutsky, 2007b; 2008). This suggests that at least some of the interference occurs during the encoding stage of processing. In addition, to determine the robustness of auditory dominance, future research will need to manipulate the dynamic nature of auditory and visual stimuli. Will auditory dominance still persist when visual stimuli are dynamic and auditory stimuli are static?

In summary, the current paper tested the ability of three different accounts to explain phenomena associated with multimodal presentation of stimuli. The auditory dominance account can explain why multimodal presentation attenuated visual processing more than auditory processing, and can also account for the finding that pre-familiarized auditory and visual stimuli had different effects on multimodal processing.

Acknowledgments

This research was supported by grants from the NSF (BCS-0720135) and from NIH (R01HD056105) to VMS and from NIH (R03HD055527) to CWR. The opinions expressed are those of the authors and do not represent views of the awarding organizations. We thank Catherine Best for her helpful comments on an earlier draft of the manuscript.


References

- Bahrick LE, Lickliter R. Intersensory redundancy guides attentional selectivity and perceptual learning in infancy. Developmental Psychology. 2000;36:190–201. doi: 10.1037//0012-1649.36.2.190.

- Bahrick LE, Lickliter R, Flom R. Intersensory redundancy guides the development of selective attention, perception, and cognition in infancy. Current Directions in Psychological Science. 2004;13:99–102.

- Casasola M, Cohen LB. Infants’ association of linguistic labels with causal actions. Developmental Psychology. 2000;36:155–168.

- Fennell CT. Object familiarity affects 14-month-old infants’ use of phonetic detail in novel words. Poster presented at the Biennial International Conference on Infant Studies; Kyoto, Japan. Jun, 2006.

- Horowitz FD, Paden L, Bhana K, Self P. An infant control method for studying infant visual fixations. Developmental Psychology. 1972;7:90.

- Kucker SC, Samuelson LK. Boosting Mapping and Retention in Fast-Mapping. Paper presented at the Biennial International Conference on Infant Studies; Baltimore, Maryland. Mar, 2010.

- Kuhl PK, Meltzoff AN. The bimodal perception of speech in infancy. Science. 1982;218:1138–1140. doi: 10.1126/science.7146899.

- Lewkowicz DJ. Sensory dominance in infants: 1. Six-month-old infants’ response to auditory-visual compounds. Developmental Psychology. 1988a;24:155–171.

- Lewkowicz DJ. Sensory dominance in infants: 2. Ten-month-old infants’ response to auditory-visual compounds. Developmental Psychology. 1988b;24:172–182.

- Lewkowicz DJ. The development of intersensory temporal perception: An epigenetic systems/limitations view. Psychological Bulletin. 2000;126:281–308. doi: 10.1037/0033-2909.126.2.281.

- Lewkowicz DJ, Schwartz BB. Intersensory Perception in Infancy: Response to Competing Amodal & Modality-Specific Attributes. Poster presented at the Biennial International Conference for Infant Studies; Toronto, ON, Canada. Apr, 2002.

- Lickliter R, Bahrick LE. The development of infant intersensory perception: Advantages of a comparative convergent-operations approach. Psychological Bulletin. 2000;126:260–280. doi: 10.1037/0033-2909.126.2.260.

- Napolitano AC, Sloutsky VM. Is a picture worth a thousand words? The flexible nature of modality dominance in young children. Child Development. 2004;75:1850–1870. doi: 10.1111/j.1467-8624.2004.00821.x.

- Robinson CW, Sloutsky VM. Auditory dominance and its change in the course of development. Child Development. 2004;75:1387–1401. doi: 10.1111/j.1467-8624.2004.00747.x.

- Robinson CW, Sloutsky VM. Visual processing speed: Effects of auditory input on visual processing. Developmental Science. 2007a;10:734–740. doi: 10.1111/j.1467-7687.2007.00627.x.

- Robinson CW, Sloutsky VM. Linguistic labels and categorization in infancy: Do labels facilitate or hinder? Infancy. 2007b;11:233–253. doi: 10.1111/j.1532-7078.2007.tb00225.x.

- Robinson CW, Sloutsky VM. Effects of auditory input in individuation tasks. Developmental Science. 2008;11:869–881. doi: 10.1111/j.1467-7687.2008.00751.x.

- Schafer G, Plunkett K. Rapid word learning by 15-month-olds under tightly controlled conditions. Child Development. 1998;69:309–320.

- Sloutsky VM, Napolitano A. Is a picture worth a thousand words? Preference for auditory modality in young children. Child Development. 2003;74:822–833. doi: 10.1111/1467-8624.00570.

- Sloutsky VM, Robinson CW. The role of words and sounds in visual processing: From overshadowing to attentional tuning. Cognitive Science. 2008;32:354–377. doi: 10.1080/03640210701863495.

- Stager CL, Werker JF. Infants listen for more phonetic detail in speech perception than in word-learning tasks. Nature. 1997;388:381–382. doi: 10.1038/41102.