Abstract

Objective This article describes the application of quality improvement methodology to implement a measurement tool for the assessment of functional status in pediatric patients with chronic pain referred for behavioral intervention. Methods The Functional Disability Inventory (FDI), a validated instrument for assessment of pain-related disability, was chosen as the primary clinical outcome measure. Using improvement science methodology, PDSA (Plan-Do-Study-Act) cycles were run to evaluate: (a) regular FDI administration, (b) two administration methods, (c) regular patient feedback, and (d) documentation methods. Results Within 1 month, psychologists were administering the FDI at least 80% of the time to patients. A high level of reliability using two administration methods (92.8%) was demonstrated. The FDI was feasible to integrate into clinical practice. Modifications to electronic records further enhanced clinician reliability of documentation. Conclusions Quality improvement methods are an innovative way to make process changes in pediatric psychology settings to dependably gather and document evidence-based patient outcomes.

Keywords: Functional Disability Inventory (FDI), pediatric pain, quality improvement

The Institute of Medicine (IOM) issued two landmark reports in 1999 and 2001 (“To Err is Human” and “Crossing the Quality Chasm”), citing the frequency of patients receiving insufficient, unsafe care and calling for a redesign of the United States healthcare system. [Institute of Medicine (U.S.). Committee on Quality of Health Care in America, 2001; Kohn, Corrigan, Donaldson, & Institute of Medicine (U.S.). Committee on Quality of Health Care in America.] Evidence-based practices typically have taken years to disseminate from “bench” (clinical trials) to “bedside” (clinical practice) (Balas & Boren, 2000) and often are delivered unreliably, with patients receiving recommended care only about 50% of the time (Mangione-Smith et al., 2007; McGlynn et al., 2003). Beyond being the “right thing to do,” reliable delivery of evidence-based care matters because patients deserve to receive those practices that have been proven effective and to be protected from “local habits” that are not grounded in empirical evidence (Berwick, 2002). Implementing evidence-based assessments to track clinical outcomes is one step toward accomplishing the goals of health care improvement in treating common pediatric conditions such as recurrent and chronic pain.

Despite research progress in the identification of empirically supported assessments for pediatric chronic pain, very little attention has been paid to how these might be efficiently and consistently integrated into actual practice in a way that is clinically meaningful. Clinical decision-making, such as when to end treatment or shift treatment goals, often occurs in the absence of systematic assessment of outcomes and therefore becomes a somewhat arbitrary practice that differs among clinicians. In an era where it is possible to use evidence-based assessment tools to assist in determining patient status and progress in treatment, many clinicians still rely on personal judgment or fail to reliably employ these tools throughout the course of treatment. This problem is compounded in large medical centers with heterogeneous patient populations and multiple providers, who vary in their implementation of evidence-based guidelines. One illustration in a pediatric psychology setting is that of our behavioral pain management program, which is a relatively high-volume service covered by multiple psychologists with no standardized practice of using evidence-based measures for assessing treatment outcomes in pediatric chronic pain. This situation is in fact typical of most healthcare settings and presents the ideal opportunity for implementing a quality improvement-based approach.

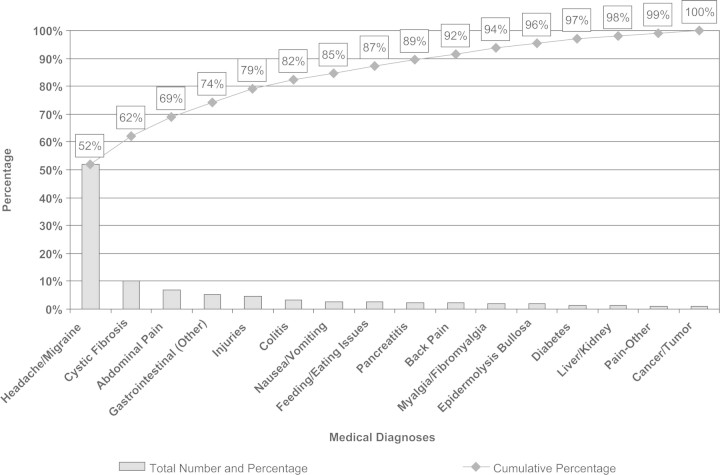

We chose pediatric patients with chronic pain to demonstrate the feasibility of using improvement science methodology to implement an assessment tool measuring clinical outcomes for several reasons: (a) chronic pain is common in children and adolescents (Perquin et al., 2000); and referrals for treatment of chronic headaches, back pain, abdominal pain, and diffuse musculoskeletal pain form a large proportion of referrals to our pediatric psychology service (Fig. 1); (b) there is good research evidence supporting the use of cognitive–behavioral therapy (CBT) to improve functioning and reduce disability in children and adolescents with chronic pain (Eccleston, Malleson, Clinch, Connell, & Sourbut, 2003; Kashikar-Zuck, Swain, Jones, & Graham, 2005; Robins, Smith, Glutting, & Bishop, 2005); and (c) several of our psychologists are already trained in delivering CBT for pediatric pain management.

Figure 1.

Sixteen most common medical diagnoses of patients referred for services during fiscal year 2007 in the Division of Behavioral Medicine and Clinical Psychology.

While evidence-based assessment of clinical outcomes in pediatric chronic pain is an integral part of our research protocols, the integration of comparable measures into broader clinical practice remains a logistical challenge. Quality improvement methodology facilitates the translation of scientific, evidence-based assessment into a clinical practice setting in a unique way that considers contextual and environmental factors. Improvement science and clinical research share the common goal of improving patient outcomes. Nevertheless, these two methodologies often stand in tension with one another. For example, clinical research typically relies upon rigorous methodology, with narrow inclusion and exclusion criteria, large sample sizes, control groups, and lengthy duration (often years). However, this type of research can fail to consider the distinct nuances of systems such as the organizational structure and contextual factors (i.e., the processes of any given unit, clinic, or practice) (Berwick, 2008). Conversely, improvement methodology emphasizes small tests of change, small sample sizes, swift identification and response to problems, context considerations, and a lower threshold for instituting change. For our purposes, improvement methodology is well-suited for the implementation of a common assessment tool for measuring outcomes during behavioral pain management because such an endeavor is significantly impacted by contextual factors (i.e., psychologist “buy in,” the clinical setting, highly diverse patient characteristics, therapists’ treatment preferences) and requires flexible adaptation to our setting.

Unlike the lengthy assessments in clinical research, implementation of evidence-based clinical assessment within a healthcare setting requires a different approach. At the outset, it is crucial for clinicians to first come to consensus about a uniform indicator of clinical improvement that is practical to use in a busy clinic, acceptable to both patients and clinicians, and sensitive to treatment-related gains. While recent reviews highlight the necessity of assessing multiple core outcome domains for pediatric chronic pain (Cohen et al., 2008; McGrath et al., 2008), the magnitude of changing an entire program's clinical assessment strategy requires an initially narrow scope. A salient goal for pain assessment is to focus on patient functioning since (a) a rehabilitative model of behavioral pain management focuses primarily on return to usual levels of functioning (i.e., reduction of disability), while actively coping with and managing pain and (b) for some pain conditions (e.g., fibromyalgia and sickle cell disease), the nature of the illness implies that complete pain relief is highly unlikely. Since the assessment of functional limitations is such an important aspect of CBT for chronic pain, identifying an empirically supported assessment tool was indicated. Several measures of functional status have been used with a chronic pain population, including the Functional Disability Inventory (FDI) (Walker & Greene, 1991), the Child Activity Limitation Interview (CALI) (Palermo, Witherspoon, Valenzuela, & Drotar, 2004), and the Functional Status II (R) (Stein & Jessop, 1990). After reviewing the evidence for each of these measures, psychologists reached consensus and chose the FDI as a primary outcome measure. 
The FDI has been classified as a “well-established” measure, has good evidence of psychometric validity and reliability (Claar & Walker, 2006; Palermo et al., 2008), has been used in multiple pediatric pain populations, and has limited clinician burden in terms of length, administration, scoring, and interpretation (Palermo et al., 2008).

In this article, we describe the initial work towards applying evidence-based approaches to the assessment of pain-related disability in children and adolescents diagnosed with chronic pain. The primary objective is to illustrate the process of using improvement science methodology to put into practice an efficient, clinically useful measurement tool to evaluate patient functional status before, during, and at the conclusion of treatment among children and adolescents referred for outpatient, behavioral pain management.

Method

Setting and Organizational Structure

Cincinnati Children's Hospital Medical Center (CCHMC) is a large, urban medical center that serves patients from a geographically wide, tri-state area. Within the Division of Behavioral Medicine and Clinical Psychology (BMCP), five psychologists specialize in the treatment of pediatric chronic pain, although other psychologists occasionally treat patients for emotional problems who also experience pain. Our team comprises one clinical psychologist exclusively treating patients with chronic pain (81.3% of patients); three clinical psychologists treating patients with chronic illnesses, a subset of whom were referred for pain management; and one research psychologist who occasionally provides clinical services. Thus, multiple psychologists provide CBT for patients with chronic pain but in different contexts and with varying use of assessment tools for clinical decision-making. All of these psychologists participated in this quality improvement project via weekly in-person meetings.

The pain management service of the BMCP Division provides outpatient behavioral treatment for children and adolescents with chronic or episodic pain. [Chronic pain is defined as pain that has persisted for 3 months or more or beyond the expected period of healing (Merskey, 1994).] A majority of the referrals for pain management come from two interdisciplinary clinics: the Headache Center and the Pain Management Clinic, which are housed in the Divisions of Neurology and Anesthesia, respectively. Other pain management referrals come directly from specialists throughout the hospital (e.g., gastroenterology and rheumatology). BMCP receives an average of 12.5 (SD = 6.4) new pain management referrals each month, with seasonal variation related to the school year. These services typically involve an initial intake session and 4–8 sessions of biofeedback-assisted relaxation and cognitive–behavioral pain coping skills training.

Human Subjects Protection

The Institutional Review Board (IRB) was consulted and apprised of the project, which was determined to be exempt from review because it was undertaken with the goal of applying quality improvement methodology to enhance clinical standards of care. Thus, informed consent for human subjects research was waived, provided that no individual information was identified.

Outcome Measure

To systematically assess patients’ physical limitations, the FDI (Walker & Greene, 1991) was chosen as a measure of functional disability. It is a 15-item, self-report instrument assessing a child's perception of his or her difficulty completing common, daily activities due to pain. Items are scored on a 5-point Likert scale, ranging from 0 to 4 and categorized by the descriptors “No Trouble,” “A Little Trouble,” “Some Trouble,” “A Lot of Trouble,” and “Impossible.” A total score is created by summing the items and ranges from 0 to 60. Higher scores indicate greater functional disability secondary to pain symptoms.
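The scoring rule described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the scoring procedure used by the team: the function name `score_fdi` and the decision to reject incomplete or out-of-range forms are assumptions, since the handling of missing items is not described here.

```python
# Illustrative sketch of FDI total scoring as described in the text:
# 15 items, each rated 0 ("No Trouble") to 4 ("Impossible"), summed to 0-60.
# The name score_fdi and the strict validation are assumptions for illustration.

FDI_ITEM_COUNT = 15
FDI_MAX_ITEM_SCORE = 4


def score_fdi(item_responses):
    """Return the FDI total score (0-60) for one completed form."""
    if len(item_responses) != FDI_ITEM_COUNT:
        # Assumption: incomplete forms are rejected rather than prorated.
        raise ValueError(f"expected {FDI_ITEM_COUNT} items, got {len(item_responses)}")
    for rating in item_responses:
        is_int = isinstance(rating, int) and not isinstance(rating, bool)
        if not is_int or not 0 <= rating <= FDI_MAX_ITEM_SCORE:
            raise ValueError(f"item rating out of range 0-{FDI_MAX_ITEM_SCORE}: {rating!r}")
    return sum(item_responses)


if __name__ == "__main__":
    print(score_fdi([0] * 15))  # lowest possible total
    print(score_fdi([4] * 15))  # highest possible total
```

Because the total is a simple sum, it can be computed during the session and shared with the patient immediately.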

The FDI is one of the most commonly used measures of pediatric functional impairment and was created specifically for a chronic/recurrent pain population (e.g., gastrointestinal pain) (Eccleston, Jordan, & Crombez, 2006). One of its strengths is its use in multiple pediatric pain populations including: abdominal (Walker & Heflinger, 1998), back (Lynch, Kashikar-Zuck, Goldschneider, & Jones, 2006), Complex Regional Pain Syndrome (Eccleston et al., 2003), headache (Lewandowski, Palermo, & Peterson, 2006; Logan & Scharff, 2005; Long, Krishnamurthy, & Palermo, 2008), and fibromyalgia pain (Kashikar-Zuck, Goldschneider, Powers, Vaught, & Hershey, 2001; Kashikar-Zuck, Vaught, Goldschneider, Graham, & Miller, 2002; Reid, Lang, & McGrath, 1997), suggesting good evidence for its broad utility. The FDI is valid for children above the age of 8 years. Studies report FDI results as a sensitive indicator of changes in symptom severity in the pediatric chronic/recurrent pain literature (Degotardi et al., 2006; Eccleston et al., 2003; Kashikar-Zuck et al., 2005).

The FDI was chosen as our primary outcome measure for several reasons. Recent reviews have supported its efficacy in clinical research on pediatric chronic pain (Eccleston et al., 2006; McGrath et al., 2008; Palermo et al., 2008). The FDI has been used for over 15 years in pediatric patients with chronic pain who are comparable to our clinical patients in terms of age and primary presenting problems. Additionally, the FDI has been incorporated into the research conducted by our pain program. This familiarity with the measure allowed for a relatively uncomplicated translation into clinical practice. Finally, the FDI is simple to score for immediate patient feedback and provides a total score that is easily interpretable. Thus, this measure appeared well-suited to our clinical needs and quality improvement goals.

Overview of Quality Improvement Methodology

The first step undertaken by the team was to operationally define functional outcomes and determine how data were to be collected and displayed. In terms of assessment frequency, several considerations influenced the final decision to collect data at every session. While the FDI shows posttreatment changes in functional disability (Degotardi et al., 2006; Eccleston et al., 2003), it remains unclear when to expect functional changes to occur during the course of therapy and consequently when to assess for them (e.g., the 4th session vs. the 6th session). Moreover, relying on clinicians to recall complex assessment schedules (as in research protocols) was not realistic for clinical practice. Additionally, self-monitoring for the purposes of assessment and treatment has proven to be a valuable tool for children (Peterson & Tremblay, 1999), and even rigorous methods, such as daily diaries, have been relatively well tolerated (Foster, Laverty-Finch, Gizzo, & Osantowski, 1999). Given these factors, the team opted to test whether routine collection of the FDI at each treatment session would be feasible as part of usual clinical care.

PDSA (Plan-Do-Study-Act) methodology was utilized for designing and evaluating tests of change. PDSA refers to the specific method in improvement science (Deming, 1994) that encourages small-scale changes in a procedure, as well as a systematic way to analyze those changes and spread the process throughout the system. PDSA stands for “Plan” (plan a change or a test, identify objectives), “Do” (carry out the improvement plan starting on a small scale), “Study” (analyze the results, extract the findings, summarize the new knowledge), and “Act” (take steps to adopt the change, abandon the idea, or do additional cycles to correct problems). PDSAs are typically implemented (or run) in cycles and are a learning mechanism, as repeated cycles of PDSAs will move a team from ideas to actual changes that result in improvement (Moen, Nolan, & Provost, 1999). The first cycle focuses on testing the change on a small scale and identifying any unanticipated barriers. Adjustments are then made in subsequent cycles which involve spreading the change across the system.

Data were tracked and monitored using run charts for recording FDI administration. Provost and Murray (2007) describe a run chart as a “graphical display of data plotted in some type of order” (p. 3–1). A run chart was created to record the FDI administration rates across pain psychologists in the division. In this case, the horizontal (or x) axis depicted the week from which data were collected. The vertical (or y) axis represented the percentage of FDI administration, derived by dividing the number of FDIs administered by the total number of pain treatment sessions for that week. By studying the administration rate over time, the reader can learn about the pattern of the process with “minimal mathematical complexity” (p. 3–1), including the impact from any other interventions initiated during that time period.
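The weekly percentage plotted on the run chart can be illustrated with a short Python sketch. The weekly counts below are hypothetical placeholders, not project data, and the function names are ours. The median centerline is a common run chart convention that we assume here for illustration; it is not described in the text above.

```python
# Illustrative sketch of the run chart calculation: for each week,
# rate = 100 * (FDIs administered) / (pain treatment sessions).
# All counts below are hypothetical, not the project's data.
from statistics import median


def weekly_administration_rates(weekly_counts):
    """Map (week, administered, sessions) tuples to (week, percent) points."""
    points = []
    for week, administered, sessions in weekly_counts:
        if sessions == 0:
            continue  # no pain sessions that week, so no rate is defined
        if administered > sessions:
            raise ValueError(f"week {week}: more FDIs than sessions")
        points.append((week, 100.0 * administered / sessions))
    return points


def centerline(points):
    """Median of the plotted rates (assumed run chart convention)."""
    return median([rate for _, rate in points])


if __name__ == "__main__":
    hypothetical_counts = [(1, 6, 10), (2, 8, 10), (3, 9, 10), (4, 0, 0)]
    chart_points = weekly_administration_rates(hypothetical_counts)
    for week, rate in chart_points:
        # Crude text rendering: one '#' per 5 percentage points.
        print(f"week {week:2d} | {'#' * int(rate // 5)} {rate:.1f}%")
    print(f"median centerline: {centerline(chart_points):.1f}%")
```

Plotting one point per week keeps the display simple enough to review at a weekly team meeting.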

Improvement Strategies

The team developed four PDSAs designed to: (a) test the feasibility of regular FDI administration, (b) evaluate two administration methods, (c) evaluate the value of sharing FDI results with patients, and (d) design a consistent method of documenting FDI administration in therapy notes. The objective of the first PDSA was to test the feasibility and acceptability of using the FDI in clinical practice. The first cycle of this PDSA involved three psychologists administering the FDI to every patient referred for pain management at every session. At the conclusion of the cycle, the team met and each participating psychologist provided verbal feedback regarding the PDSA in terms of helpfulness, ease of administration, and suggestions for improvement. Feedback from the first cycle revealed several barriers related to feasibility. Failure to administer an FDI (n = 21) was due to clinician forgetfulness 100% of the time during the first cycle. Of the failed administrations, 34.4% occurred during the first session (clinical interview), with the remainder occurring at various times throughout treatment. Additionally, environmental factors such as lack of access to the FDI in treatment rooms, lack of a uniform method of introducing the FDI, and scoring and filing issues were identified.

The second cycle of the PDSA addressed some of these barriers by: (a) creating a clinician script to provide a standard explanation for FDI use with patients, (b) having electronic and hard copies of the FDI available in multiple locations (e.g., chart, treatment rooms, and offices), and (c) involving an additional staff member to assist with maintaining a database and filing system. Two additional psychologists administered the FDI to their patients for the second cycle of the PDSA. At its conclusion, the team reconvened and each participating psychologist verbally provided feedback about their observations from the second cycle. Results indicated that the aforementioned barriers had been satisfactorily addressed in that fewer failed administrations occurred (n = 4) across all five psychologists in the pain program (three failures due to forgetfulness, one due to time constraints). Thus, a third cycle maintained the use of the FDI across all psychologists. Taken together, the results of all PDSA cycles identified clinician forgetfulness (96.5%) as the most salient barrier to regular FDI administration. Psychologists identified factors such as treating patients with a variety of presenting problems (i.e., not all pain), time constraints (e.g., patients arriving late), and excessive paperwork (e.g., during the initial evaluation) as interfering with remembering.

A second PDSA was developed to investigate the practicality of patient self-administration of the FDI to reduce clinician burden of administration and increase patient monitoring of functional status. The objective of this PDSA was to test the feasibility of patients self-administering the FDI in the reception area prior to their therapy sessions. The team speculated that several obstacles might arise, including patient forgetfulness, lack of supplies (e.g., writing utensils, blank FDIs), and inaccurate completion (i.e., by non-pain patients). This PDSA's complexity required preparation activities such as obtaining FDI receptacles and educating front office staff to answer questions about the forms. For each session, psychologists recorded on a paper tracking form (a) whether or not the FDI was completed, and (b) whether the FDI was completed by the patient in the waiting area or administered by the psychologist in the session. All data forms were returned to the lead psychologist, who compiled the data. Psychologists also recorded inaccurate completion by non-pain patients. At the conclusion of the PDSA, verbal feedback was also obtained and recorded at the weekly team meeting. All psychologists participated in this PDSA.

Results suggested few difficulties were encountered during self-administration of the FDI. During the PDSA, 14 of 34 patients (41.2%) required one education session about self-administration and then were able to consistently complete the FDI in the reception area for the remainder of treatment. Anecdotally, psychologists stated this appeared true even for patients who experienced a large gap in time between the education session (typically the first) and the subsequent treatment session. Six patients (17.6%) required repeated re-education because they did not remember to complete the measure on their own. A number of patients (29.4%) were educated at their first session but did not return for an additional session over the course of the PDSA, so self-administration could not be assessed. In a few cases (n = 4), psychologists forgot to instruct patients about self-administration, while patients seen in a separate Pain Management Clinic for their initial evaluation (n = 10) did not participate in this PDSA due to the geographically different location. Thus, this process of self-administration appeared promising and was integrated into regular practice.

A third PDSA was developed to enhance tracking of personal FDI results. Specifically, the goal of this PDSA was to test the feasibility and utility of using individualized run charts to plot FDI data for patients. The team predicted that providing personal run charts would assist the psychologist in more concretely tracking treatment progress in regards to functioning. One psychologist began using the run charts and additional psychologists were added in cycles two and three. Qualitative feedback was obtained from the psychologists in regard to usefulness and efficiency at the conclusion of each cycle. Patient feedback was not solicited as part of this PDSA.

Results indicated that graphical representation of data in run charts was viewed by psychologists as a logical, compelling marker of patient progress. The addition of this component to the treatment session was not viewed as prohibitive in terms of efficiency or effort. Psychologists denied any adverse reactions by patients. No further PDSA cycles were run, and the process was integrated into all pain treatment sessions.

The last PDSA focused on improving documentation of FDI administration. The PDSA's objective was to modify electronic progress notes to facilitate the recording of FDI administration. One psychologist collaborated with the division's information technology staff to modify the progress note template. Changes included adding a single prompt to indicate if the FDI was administered (Y/N). The team reviewed the revised progress note template and made edits as needed before implementing the PDSA. Because this PDSA required system-wide changes to the electronic psychology record, all psychologists implemented the change in the first cycle of the PDSA. Psychologists’ verbal feedback after the first cycle highlighted the fact that a different electronic template was used for a first (evaluation) session. As a result, six evaluation sessions (33.3%) did not have FDI data documented during this 1-week cycle because the new electronic template was unavailable. Thus, for the second cycle, the evaluation progress note template was revised in the manner described above. Results of the second cycle indicated that FDI administration was being recorded reliably by all clinicians. The new templates were used to record data 97.1% of the time during the 4-week cycle. Thus, this PDSA was adopted as regular clinical practice.

Results

Patients presenting for outpatient pain management services were typically female (N = 81, 79.4%) and adolescents (age M = 15.2). The primary pain complaints were: headache (32.4%), abdominal pain (18.6%), and back pain (12.7%). On average, 9.7 outpatients were seen per week for pain management services. The average FDI score at the first session was 19.7 (SD = 11.3), which is consistent with results presented in the pediatric pain literature (Gauntlett-Gilbert & Eccleston, 2007; Kashikar-Zuck et al., 2001; Lynch et al., 2006).

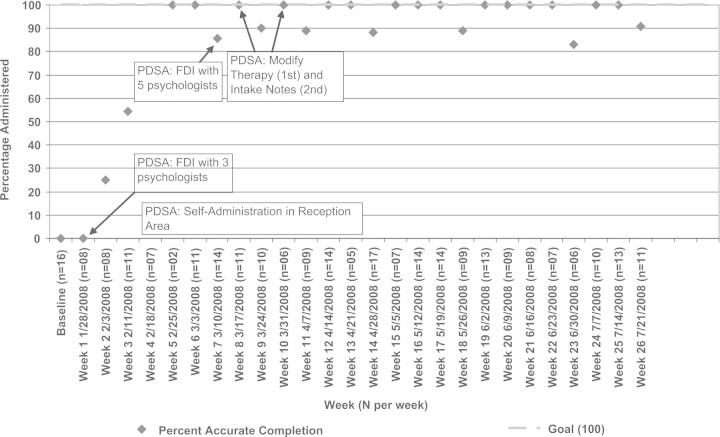

Data were collected for 26 weeks from January through July 2008 and are presented in a run chart (Fig. 2). Three psychologists began administering the FDI in their clinical practice, and within 4 weeks reliably administered it over 80% of the time at each patient session. Within 7 weeks, all five psychologists providing pain management services in the division were regularly utilizing the FDI with their patients. Over the 26 weeks, 225 FDIs were administered out of 254 patient sessions, for a total administration rate of 88.6%. However, 21 of the 29 (72.4%) administration failures occurred during the first month of employing the measurement system, when psychologists’ familiarity with the new process was modest.

Figure 2.

Run chart of FDI administration across 26 weeks.
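The overall administration rate and the share of failures falling in the first month follow directly from the counts reported for the 26-week period. A minimal Python sketch of that arithmetic is given below; the helper name `percent` is ours and is not part of the original analysis.

```python
# Reproduces the summary percentages from the counts reported in the text.
# The helper name `percent` is an illustrative choice.

def percent(part, whole):
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100.0 * part / whole, 1)


total_sessions = 254        # patient sessions over 26 weeks (from the text)
fdis_administered = 225     # FDIs administered (from the text)
failures = total_sessions - fdis_administered   # 29 missed administrations
first_month_failures = 21   # failures in the first month (from the text)

overall_rate = percent(fdis_administered, total_sessions)
first_month_share = percent(first_month_failures, failures)

if __name__ == "__main__":
    print(f"overall administration rate: {overall_rate}%")         # 88.6%
    print(f"failures in the first month: {first_month_share}%")    # 72.4%
```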

As noted above, to facilitate easier documentation and data collection of FDI administration, modifications to the electronic pain therapy and evaluation notes were made at weeks 8 and 10. Following this design change, compliance with administering the FDI increased to 97.0% across the system, suggesting that the FDI was being administered and documented very consistently.

Psychologists provided qualitative feedback that described the FDI as easy to administer and score; nondisruptive to the flow of the treatment session; and valuable in providing a quick measure of functional status to the psychologist and patient. It served not only as an indicator of patient progress but also reportedly enabled psychologists to clearly identify areas of deficit (e.g., physical activity and sleep) that could be targeted for specific intervention in treatment.

The PDSA designed to test the efficacy of patient self-administration of the FDI ran in two cycles over the course of 10 weeks (Fig. 2). During this time period, a total of 93 FDIs were collected by two methods, patient self-administration or psychologist administration. Thirty-eight of 93 FDIs were collected by psychologists as part of the patients’ education sessions instructing them to complete the FDI in the reception area at all sessions thereafter. Patients appropriately self-administered the FDI 81.1% (45/55) of the time. The overall FDI administration rate for this PDSA was 92.8%, suggesting that the combination of both self- and psychologist-administration methods allowed for a high degree of reliable data collection. Few FDIs were completed inappropriately by non-pain patients in the reception area (n = 9) over the course of the PDSA.

Feedback about personalized run charts was positive. Psychologists’ observations were that patients became encouraged if their scores/run charts visibly dropped (indicating less functional disability), and they frequently remembered their scores from previous sessions without clinician prompting. Additionally, psychologists reported that even minor changes were seen as a morale booster, particularly when patients’ overall functional status was quite poor.

Discussion

Adapting the best evidence of clinical research to clinical practice is challenging for healthcare providers. Improvement science methods are ideal for translating evidence-based assessment tools into clinical settings with unique contextual needs. This article describes how to integrate a clinically useful measurement tool into an outpatient, behavioral pain management program. The FDI, a psychometrically sound instrument for clinical research in pediatric pain, was utilized to systematically identify patient functional status. Within 2 months, all psychologists providing pain management services were fairly consistently administering the FDI at every patient session. Additionally, several process changes ensured this regularity of administration. Specifically, one PDSA demonstrated the utility and feasibility of patient self-administration of the FDI as a means of securing patient data. Modification to the electronic psychology record allowed psychologists to better document FDI administration and results. Thus, the team successfully developed a uniform measurement approach, utilized across multiple psychologists with varying clinical practices, that tracks one important aspect of patient progress.

The FDI has been shown to measure functional disability across a range of pain conditions in research, and has now been demonstrated to be feasible for clinical use. Regular FDI results allowed psychologists to tangibly measure functional changes and also identify specific areas for intervention. For example, a decrease in the FDI score from the previous session could cue the psychologist to investigate which activities were better managed and which area(s) remained problematic. If going to school remained a consistent problem, the psychologist might investigate attendance patterns, teacher attitudes, homework incompletion, and grade declines. Based on the ability to engage in repeated functional assessment, the psychologist could determine if a behavioral intervention aimed at improving school attendance would be merited or might re-conceptualize the sequence of skills taught in treatment (e.g., addressing coping in the school setting immediately, rather than making it a lower priority).

In our patient population, a variety of pain conditions were represented (e.g., headache, abdominal and back pain, fibromyalgia). The trade-off in choosing a global measure of functioning, however, includes failure to address unique pain-specific elements and the reality that functional disability may be more amenable to improvement in certain pain conditions than in others. The FDI, like any assessment tool, has limitations to consider. These include restricted use with younger ages; non-specific pain questions that may not capture the nuances of select illness conditions (e.g., migraine headaches, episodic pain); a lack of identified “clinically important difference scores” (p. 992) or clinical cut-offs for interpretation; and little formal research on sensitivity to treatment-specific changes (Palermo et al., 2008).

From a clinical perspective, it is evident that additional outcomes should be collected focusing on the quality of pain symptoms and the degree of impairment caused by them. From a process perspective, however, identifying uniform outcomes for a heterogeneous pain population is quite challenging. There may not be clear consensus on the primary outcomes, and if agreement exists, the gap is wide between rigorous but inefficient research instruments and brief, clinically meaningful tools. It is acknowledged that the well-trained clinician considers many factors when judging patient outcomes aside from solely functional disability. As a matter of practice, the team continued to conduct periodic assessments of patients’ pain intensity, frequency, and duration in addition to the FDI.

One notable absence in this work is the perspective of the patient. The initial goal was to investigate the feasibility of creating and maintaining a regular outcome measure in treatment. Since psychologists were instrumental in designing PDSA cycles, their feedback and data were imperative to collect at this first stage. The next step will include gathering the patient perspective about the utility of these data, their contributions to treatment, and ways to enhance their meaning and significance. Additionally, data were not available regarding attendance patterns. Differences in the frequency of treatment may affect patients' willingness to complete the FDI, the likelihood that psychologists forget to administer it, and its salience in treatment.

One of the benefits of actively defining and measuring patient outcomes relates to the evolving nature of managed care. The managed care industry is becoming more rigorous in demanding the use of evidence-based guidelines. National health care is moving away from “fee for service” to “pay for performance,” and in 2010, the American Board of Pediatrics will mandate demonstrated competence in quality improvement as a component of Maintenance of Certification (The American Board of Pediatrics, 2007). Thus, well-defined patient outcomes become essential for evaluative purposes. Additionally, the increasingly pervasive use of electronic medical records, from large institutions to small private practices, necessitates streamlined patient outcomes to accommodate the limitations of paperless systems. A proactive approach to identifying clinically meaningful changes is therefore much preferred to outcomes defined by a third party.

Several factors contributed to the relatively quick success of our implementation program. Institutional support in terms of both resources and expertise in quality improvement methodology was invaluable. Critical resources provided to the division included a quality improvement consultant and a decision support analyst for data management. Additionally, the team had an identified leader who was provided a reduced clinical load and additional improvement science training and who met weekly with the consultant for coaching. Team members were voluntary participants and engaged in all activities in addition to their normal responsibilities; thus, careful consideration of “out-of-meeting” workload for team members was necessary. Finally, results were achieved through the team's collective agreement to meet weekly to maintain momentum and make meaningful gains in a timely manner.

Although the program is housed within a large behavioral medicine division, the ability to provide specialized pain management services is limited to a relatively small group of psychologists, and the demand for these services far exceeds the current clinicians’ availability to provide treatment. Additionally, families’ requests for shorter waitlists for services, facilities in closer proximity to their homes, and flexible scheduling to accommodate school and activities will require the spread of behavioral pain management services to a larger subset of psychologists. These psychologists may already be seeing the occasional patient with secondary pain problems but are not consistently gathering pain and FDI data or following evidence-based protocols. One of the team's goals is to create a clinician toolkit of cognitive-behavioral pain coping skills in order to spread the assessment and treatment of pediatric chronic pain across the division.

In summary, this project demonstrates how quality improvement methodology was used to establish a process of assessing patient outcomes in a manner that balances the rigor of empirically validated tools with the realistic needs of clinical practice, helping psychologists use the science of clinical research in a meaningful way. This project lays the foundation for extending the use of improvement science methods to the delivery of evidence-based care to children and adolescents with chronic pain. In this way, we hope to provide the most effective care and improve outcomes for the full range of pediatric chronic pain patients referred to our pediatric psychology service.

Conflicts of interest: None declared.

References

- Balas EA, Boren SA. Yearbook of Medical Informatics. Edmonton, Alberta, Canada: The International Medical Informatics Association (IMIA); 2000. Managing clinical knowledge for health care improvement; pp. 65–70.

- Berwick DM. A user's manual for the IOM's ‘Quality Chasm’ report. Health Affairs. 2002;21(3):80–90. doi: 10.1377/hlthaff.21.3.80.

- Berwick DM. The science of improvement. Journal of the American Medical Association. 2008;299(10):1182–1184. doi: 10.1001/jama.299.10.1182.

- Claar RL, Walker LS. Functional assessment of pediatric pain patients: psychometric properties of the functional disability inventory. Pain. 2006;121(1–2):77–84. doi: 10.1016/j.pain.2005.12.002.

- Cohen LL, Lemanek K, Blount RL, Dahlquist LM, Lim CS, Palermo T, et al. Evidence-based assessment of pediatric pain. Journal of Pediatric Psychology. 2008;33(9):939–955. doi: 10.1093/jpepsy/jsm103.

- Degotardi PJ, Klass ES, Rosenberg BS, Fox DG, Gallelli KA, Gottlieb BS. Development and evaluation of a cognitive-behavioral intervention for juvenile fibromyalgia. Journal of Pediatric Psychology. 2006;31(7):714–723. doi: 10.1093/jpepsy/jsj064.

- Deming WE. The new economics for industry, government, education. 2nd ed. Cambridge, MA: The MIT Press; 1994.

- Eccleston C, Jordan AL, Crombez G. The impact of chronic pain on adolescents: A review of previously used measures. Journal of Pediatric Psychology. 2006;31(7):684–697. doi: 10.1093/jpepsy/jsj061.

- Eccleston C, Malleson PN, Clinch J, Connell H, Sourbut C. Chronic pain in adolescents: Evaluation of a programme of interdisciplinary cognitive behaviour therapy. Archives of Disease in Childhood. 2003;88(10):881–885. doi: 10.1136/adc.88.10.881.

- Foster SL, Laverty-Finch C, Gizzo DP, Osantowski J. Practical issues in self-observation. Psychological Assessment. 1999;11(4):426–438.

- Gauntlett-Gilbert J, Eccleston C. Disability in adolescents with chronic pain: Patterns and predictors across different domains of functioning. Pain. 2007;131(1–2):132–141. doi: 10.1016/j.pain.2006.12.021.

- Institute of Medicine (U.S.). Committee on Quality of Health Care in America. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy Press; 2001.

- Kashikar-Zuck S, Goldschneider KR, Powers SW, Vaught MH, Hershey AD. Depression and functional disability in chronic pediatric pain. Clinical Journal of Pain. 2001;17(4):341–349. doi: 10.1097/00002508-200112000-00009.

- Kashikar-Zuck S, Swain NF, Jones BA, Graham TB. Efficacy of cognitive-behavioral intervention for juvenile primary fibromyalgia syndrome. Journal of Rheumatology. 2005;32(8):1594–1602.

- Kashikar-Zuck S, Vaught MH, Goldschneider KR, Graham TB, Miller JC. Depression, coping, and functional disability in juvenile primary fibromyalgia syndrome. Journal of Pain. 2002;3(5):412–419. doi: 10.1054/jpai.2002.126786.

- Kohn LT, Corrigan JM, Donaldson MS, Institute of Medicine (U.S.). Committee on Quality of Health Care in America. To err is human: Building a safer health system. Washington, DC: National Academy Press; 1999.

- Lewandowski AS, Palermo TM, Peterson CC. Age-dependent relationships among pain, depressive symptoms, and functional disability in youth with recurrent headaches. Headache. 2006;46(4):656–662. doi: 10.1111/j.1526-4610.2006.00363.x.

- Logan DE, Scharff L. Relationships between family and parent characteristics and functional abilities in children with recurrent pain syndromes: An investigation of moderating effects on the pathway from pain to disability. Journal of Pediatric Psychology. 2005;30(8):698–707. doi: 10.1093/jpepsy/jsj060.

- Long AC, Krishnamurthy V, Palermo TM. Sleep disturbances in school-age children with chronic pain. Journal of Pediatric Psychology. 2008;33(3):258–268. doi: 10.1093/jpepsy/jsm129.

- Lynch AM, Kashikar-Zuck S, Goldschneider KR, Jones BA. Psychosocial risks for disability in children with chronic back pain. Journal of Pain. 2006;7(4):244–251. doi: 10.1016/j.jpain.2005.11.001.

- Mangione-Smith R, DeCristofaro AH, Setodji CM, Keesey J, Klein DJ, Adams JL, et al. The quality of ambulatory care delivered to children in the United States. New England Journal of Medicine. 2007;357(15):1515–1523. doi: 10.1056/NEJMsa064637.

- McGlynn EA, Asch SM, Adams J, Keesey J, Hicks J, DeCristofaro A, et al. The quality of health care delivered to adults in the United States. New England Journal of Medicine. 2003;348(26):2635–2645. doi: 10.1056/NEJMsa022615.

- McGrath PJ, Walco GA, Turk DC, Dworkin RH, Brown MT, Davidson K, et al. Core outcome domains and measures for pediatric acute and chronic/recurrent pain clinical trials: PedIMMPACT recommendations. Journal of Pain. 2008;9(9):771–783. doi: 10.1016/j.jpain.2008.04.007.

- Merskey H. Logic, truth and language in concepts of pain. Quality of Life Research. 1994;3(Suppl 1):S69–S76. doi: 10.1007/BF00433379.

- Moen RD, Nolan TW, Provost LP. Quality improvement through planned experimentation. New York: McGraw-Hill; 1999.

- Palermo TM, Long AC, Lewandowski AS, Drotar D, Quittner AL, Walker LS. Evidence-based assessment of health-related quality of life and functional impairment in pediatric psychology. Journal of Pediatric Psychology. 2008;33(9):983–996. doi: 10.1093/jpepsy/jsn038.

- Palermo TM, Witherspoon D, Valenzuela D, Drotar DD. Development and validation of the Child Activity Limitations Interview: A measure of pain-related functional impairment in school-age children and adolescents. Pain. 2004;109(3):461–470. doi: 10.1016/j.pain.2004.02.023.

- Perquin CW, Hazebroek-Kampschreur AA, Hunfeld JA, Bohnen AM, van Suijlekom-Smit LW, Passchier J, et al. Pain in children and adolescents: A common experience. Pain. 2000;87(1):51–58. doi: 10.1016/S0304-3959(00)00269-4.

- Peterson L, Tremblay L. Self-monitoring in behavioral medicine: Children. Psychological Assessment. 1999;11(4):458–465.

- Provost LP, Murray S. The data guide: Learning from data to improve health care. Austin, TX: Associates in Process Improvement and Corporate Transformation Concepts; 2007.

- Reid GJ, Lang BA, McGrath PJ. Primary juvenile fibromyalgia: Psychological adjustment, family functioning, coping, and functional disability. Arthritis and Rheumatism. 1997;40(4):752–760. doi: 10.1002/art.1780400423.

- Robins PM, Smith SM, Glutting JJ, Bishop CT. A randomized controlled trial of a cognitive-behavioral family intervention for pediatric recurrent abdominal pain. Journal of Pediatric Psychology. 2005;30(5):397–408. doi: 10.1093/jpepsy/jsi063.

- Stein RE, Jessop DJ. Functional status II(R). A measure of child health status. [erratum appears in Med Care 1991 May;29(5):following 489] Medical Care. 1990;28(11):1041–1055. doi: 10.1097/00005650-199011000-00006.

- The American Board of Pediatrics. Standards for physician participation in quality improvement projects. Chapel Hill, NC: American Board of Pediatrics; 2007. pp. 1–4.

- Walker LS, Greene JW. The functional disability inventory: Measuring a neglected dimension of child health status. Journal of Pediatric Psychology. 1991;16(1):39–58. doi: 10.1093/jpepsy/16.1.39.

- Walker LS, Heflinger CA. Quality of life predictors of outcome in pediatric abdominal pain patients: Findings at initial assessment and five-year follow-up. In: Drotar D, editor. Measuring health-related quality of life in children and adolescents: Implications for research and practice. Mahwah, NJ: Lawrence Erlbaum Associates; 1998. pp. 237–254.