Abstract

We have investigated the psychophysical properties of low-frequency hearing, both before and after implantation, to see if we can account for the benefit to speech understanding and melody recognition of adding acoustic stimulation to electric stimulation. In this paper, we review our work and the work of others and describe preliminary results not previously published. We show (a) that it is possible to preserve normal or near-normal nonlinear cochlear processing in the implanted ear following electric and acoustic stimulation surgery – though this is not the typical outcome; (b) that although low-frequency frequency selectivity is generally disrupted following implantation, some degree of frequency selectivity can be preserved; and (c) that neither nonlinear cochlear processing nor frequency selectivity in the acoustic hearing ear is correlated with the gain in speech understanding afforded by combined electric and acoustic stimulation. In another set of experiments, we show that the value of preserving hearing in the implanted ear is best seen in complex listening environments in which binaural cues can play a role in perception.

Combined electric and acoustic stimulation (EAS) of the same cochlea can occur when acoustic hearing is preserved following insertion of an electrode array. Multiple studies have documented, for most patients, the preservation of low-frequency thresholds following electrode insertions of 10–20 mm. On average, thresholds at 125–500 Hz are within 10–20 dB of preinsertion levels, depending on the electrode array and the surgical technique [1–10]. Successful hearing preservation surgery allows electric stimulation of basal neural tissue and acoustic stimulation of apical hair cells that transmit low-frequency acoustic information [1–6, 9–12]. EAS has been shown to improve speech understanding in quiet and in noise beyond that achieved by aided acoustic hearing alone or electric hearing alone [2, 3, 6, 8, 9, 13, 14]. Performance in the EAS condition is commonly much higher than the linear sum of the scores in the electric-only condition and in the acoustic-only condition.

Over a period of several years, we have investigated the psychophysical properties of low-frequency hearing, both before and after implantation, to see if we can account for the benefit to speech understanding and melody recognition of adding acoustic stimulation to electric stimulation. In this paper we (a) review our work and the work of others and (b) describe preliminary results not previously published.

Auditory Thresholds

To date, the only commonly used measure of auditory function before and after surgery has been the audiogram. Unfortunately, the audiogram does not predict the benefit gained from adding acoustic to electric stimulation in the same ear [10, 15] or in different ears [16, 17]. Additionally, a number of researchers have shown that patients with comparable ranges and degrees of residual low-frequency hearing do not enjoy comparable benefit from EAS [10, 18]. These data suggest that the pure-tone audiogram may not be the most useful tool for identifying listeners who could benefit the most from adding acoustic to electric stimulation. Motivated by this logic, we have exploited measures of auditory processing beyond tonal detection, i.e., measures of nonlinear cochlear processing and frequency selectivity, to determine whether those measures will assist us in understanding the synergisms associated with EAS.

Nonlinear Cochlear Processing

It is well known that the basilar membrane response in a healthy cochlea is highly compressive with a slope of 0.2 dB/dB. This translates to a 2-dB increase in basilar membrane output for every 10-dB increase in signal input – or a 5:1 compression ratio. This high degree of compression allows for a broad dynamic range of over 120 dB for the healthy cochlea. This compressive function is due to the electromotile properties of healthy outer hair cells which are known to enhance basilar membrane movement yielding a compressive nonlinear system. A number of recent studies have examined whether the degree of basilar membrane compression is equivalent along the length of the cochlear partition. Behavioral estimates of nonlinear cochlear function using psychophysical masking have shown similar estimates of compression at both low (250 Hz) and high (4,000 Hz) frequencies [19, 20]. Using physiologic measures of basilar membrane function with distortion product otoacoustic emissions, Gorga et al. [21] also demonstrated similar degrees of compressive growth for low- (500 Hz) and high-frequency (4,000 Hz) stimuli.
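The slope and the compression ratio quoted above are two views of the same arithmetic. The following is a minimal sketch, assuming a single constant slope across the input range (a simplification) and using illustrative function names that do not come from the cited studies:

```python
def output_change_db(input_change_db: float, slope_db_per_db: float = 0.2) -> float:
    """Change in basilar membrane output (dB) for a given change in input (dB)."""
    return slope_db_per_db * input_change_db


def compression_ratio(slope_db_per_db: float = 0.2) -> float:
    """Compression ratio expressed as input dB per output dB."""
    return 1.0 / slope_db_per_db


# A 10-dB increase in input with a 0.2 dB/dB slope
print(output_change_db(10.0))   # output growth in dB
print(compression_ratio(0.2))   # ratio, i.e. N:1
```

With the default slope, a 10-dB input step gives a 2-dB output step, which is the 5:1 ratio stated in the text.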

The presence of compression – a byproduct of nonlinear cochlear processing – for the apical cochlea is relevant for EAS since it is the apical cochlea that receives acoustic stimulation in EAS. The cochlear nonlinearity is responsible for several aspects of normal cochlear function, i.e., high sensitivity, a broad dynamic range, sharp frequency tuning, and enhanced spectral contrasts via suppression. Thus, any reduction in the magnitude of the nonlinearity could result in one or more functional deficits, including impaired speech perception.

Gifford et al. [15] examined whether it was possible to preserve nonlinear cochlear function following hearing preservation surgery for 6 recipients of the 20-mm MED-EL EAS array and for 7 recipients of the 10-mm Nucleus Hybrid array. Nonlinear cochlear processing was evaluated at signal frequencies of 250 and 500 Hz using Schroeder phase maskers [19, 22, 23] with various indices of masker phase curvature. We found that it is possible, but not common, to preserve normal nonlinear processing in the apical cochlea following the surgical insertion of electrode arrays 10 and 20 mm into the scala tympani. Only one subject exhibited completely normal nonlinear cochlear function postoperatively at 250 Hz. However, most subjects had some residual nonlinearity (more so at 250 than 500 Hz). Thus, most patients will enjoy some of the benefits of nonlinear cochlear function at low frequencies following hearing preservation surgery.

Nonlinear Auditory Function and Speech Understanding with Electric and Acoustic Stimulation

In the same study we found that variations in nonlinear cochlear processing did not predict the gain in speech understanding for EAS patients when acoustic stimulation was added to electric stimulation. That is to say, patients with no evidence of nonlinear cochlear processing showed as much benefit in speech recognition when acoustic stimulation was added to electric stimulation as patients with normal, or near-normal, nonlinear cochlear processing.

A Sensitive Test for Cochlear Damage following Surgery

Although the Schroeder masking functions used by Gifford et al. [15] did not provide insight into the speech perception benefit gained when acoustic stimulation was added to electric stimulation, the masking functions were a very sensitive measure of damage following insertion of the electrode array. For 5 out of 13 subjects, there was no significant change in low-frequency audiometric thresholds following surgery. These same subjects, however, demonstrated considerable reduction in the degree of nonlinear cochlear processing. Thus, Schroeder phase masking is a very sensitive index of surgically related damage to the cochlea and may be the most appropriate tool to evaluate the success of ‘soft surgery’ for hearing preservation.

Frequency Selectivity

We have obtained estimates of frequency resolution at 500 Hz both before and after implantation for 5 EAS patients. Two subjects were implanted with the MED-EL 20-mm array and 3 subjects were implanted with the Nucleus Hybrid 10-mm array. Mean age was 43.6 years with a range of 34–71 years. In addition to the 5 EAS subjects, we obtained estimates of frequency selectivity for 15 listeners with normal hearing. The mean age of the normal-hearing group was 25.1 years with a range of 21–31 years.

Estimates of frequency selectivity were obtained by deriving auditory filter (AF) shapes using the notched-noise method [24] in a simultaneous-masking paradigm. Each band of noise (0.4 times the signal frequency) was placed symmetrically or asymmetrically around the 500-Hz signal [25]. The signal was fixed at a level of 10 dB SL, and the masker level was varied adaptively. The masker and signal were 400 and 200 ms in duration, respectively. Prior to obtaining masked thresholds, quiet thresholds were measured for a 200-ms, 500-Hz signal. All thresholds were obtained using a 2-down, 1-up tracking rule to track 70.7% correct performance on the psychometric function [26]. A 3-interval forced-choice paradigm was used for all testing.
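The 70.7% figure follows from the tracking rule itself: an n-down, 1-up track settles where the probability of n consecutive correct responses equals 0.5 [26]. The sketch below illustrates this and applies the rule to a short response sequence. The starting level, step size and function names are hypothetical and are not taken from the procedure described above. Because the masker is the adaptive variable here, the task gets harder when the masker level is raised.

```python
def convergence_point(n_down: int) -> float:
    """Proportion correct tracked by an n-down, 1-up rule: solve p**n = 0.5."""
    return 0.5 ** (1.0 / n_down)


def run_track(responses, start_level_db=40.0, step_db=2.0):
    """Apply a 2-down, 1-up rule to a sequence of True/False responses.

    Two consecutive correct responses raise the masker level (harder);
    a single incorrect response lowers it (easier).
    Returns the masker level before the first trial and after every trial.
    """
    level = start_level_db
    correct_in_a_row = 0
    levels = [level]
    for correct in responses:
        if correct:
            correct_in_a_row += 1
            if correct_in_a_row == 2:
                level += step_db
                correct_in_a_row = 0
        else:
            level -= step_db
            correct_in_a_row = 0
        levels.append(level)
    return levels


print(round(convergence_point(2), 3))
print(run_track([True, True, False, True, True]))
```

In a real track the threshold estimate would typically be taken from the levels at the reversal points; that averaging step is omitted here.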

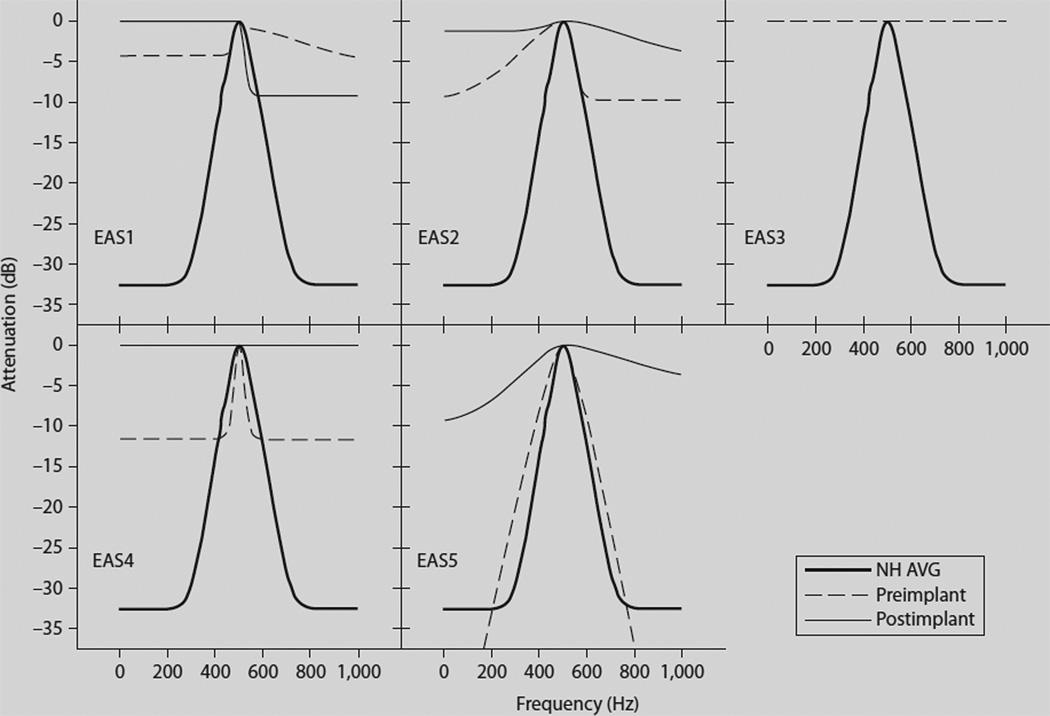

The masked thresholds in the presence of the different notched-noise conditions were utilized to derive filter shapes using a roex (p, k) model [27]. AF shapes are shown in figure 1 for each subject in the pre- and postimplant condition as well as the mean for the normal-hearing listeners. Comparisons across subjects and test points were made in terms of equivalent rectangular bandwidth (ERB) [28] of the AF. Table 1 provides the pre- and postimplant estimates of both psychophysical thresholds at 500 Hz as well as the ERB at 500 Hz. As seen in previous studies with hearing-impaired listeners, the EAS subjects demonstrated considerable intersubject variation in AF width [29, 30]. Two of the subjects (EAS4 and EAS5) demonstrated normal or near-normal frequency selectivity preoperatively – though the dynamic range of the filter was considerably less than normal for EAS4 (fig. 1). All subjects, however, displayed wider than normal AFs postoperatively (table 1; fig. 1). Two subjects, EAS3 and EAS4, demonstrated a complete lack of frequency selectivity postoperatively – with EAS3 demonstrating no frequency selectivity preoperatively, as well.
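To make the link between a fitted filter slope and the ERB concrete, the sketch below uses the simpler single-parameter roex(p) shape, W(g) = (1 + pg)·exp(−pg), where g is the deviation from the centre frequency divided by the centre frequency. This is an assumption made for illustration; the fits reported here used a two-parameter model, and the function names are hypothetical. For a symmetric roex(p) filter the ERB is 4·fc/p, which the code checks by numerical integration.

```python
import math


def roex_p(g: float, p: float) -> float:
    """roex(p) filter weight at normalized frequency deviation g = |f - fc| / fc."""
    return (1.0 + p * g) * math.exp(-p * g)


def erb_hz(fc_hz: float, p: float) -> float:
    """Analytic ERB of a symmetric roex(p) filter."""
    return 4.0 * fc_hz / p


def p_from_erb(fc_hz: float, erb: float) -> float:
    """Slope parameter p implied by a given ERB (inverse of erb_hz)."""
    return 4.0 * fc_hz / erb


def erb_numeric(fc_hz: float, p: float, g_max: float = 5.0, n: int = 200_000) -> float:
    """ERB by trapezoidal integration of both filter skirts (peak weight is 1)."""
    dg = g_max / n
    area = 0.0
    for i in range(n):
        area += 0.5 * (roex_p(i * dg, p) + roex_p((i + 1) * dg, p)) * dg
    return 2.0 * fc_hz * area


# Slope implied by the mean normal-hearing ERB at 500 Hz reported in Table 1
p_nh = p_from_erb(500.0, 80.52)
print(round(p_nh, 2), round(erb_numeric(500.0, p_nh), 2))
```

Under this simplified model, a wider filter corresponds to a smaller p: the mean normal-hearing ERB of 80.52 Hz implies p of roughly 24.8, whereas an ERB of 360 Hz implies p of roughly 5.6.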

Fig. 1.

AF shapes for the 5 individual EAS patients (EAS1–EAS5) and the average for the normal-hearing listeners (NH AVG). AF shapes for the pre- and postimplant conditions are represented by dashed and solid lines, respectively. The mean AF shape for the normal-hearing listeners is represented by the bold line in each panel. Subject EAS3 demonstrated no frequency selectivity either before or after implantation and thus her filter shapes are represented by superimposed horizontal lines.

Table 1.

Psychophysical thresholds for 500 Hz and estimates of ERB (in Hz) for the derived AF shapes for the subjects with normal hearing (NH) as well as the pre- and postimplant EAS subjects

| NH subjects | 500-Hz threshold (dB SPL) | ERB (Hz) | EAS subjects | Preimplant 500-Hz threshold (dB SPL) | Preimplant ERB (Hz) | Postimplant 500-Hz threshold (dB SPL) | Postimplant ERB (Hz) |
|---|---|---|---|---|---|---|---|
| NH1 | 4 | 80.4 | EAS1 | 36 | 338.4 | 49 | 360.0 |
| NH2 | 7 | 97.6 | EAS2 | 26 | 193.4 | 27 | 202.7 |
| NH3 | 4 | 69.0 | EAS3 | 62 | N/A | 66 | N/A |
| NH4 | 6 | 76.3 | EAS4 | 31 | 115.2 | 55 | N/A |
| NH5 | 3 | 120.8 | EAS5 | 35 | 106.5 | 60 | 270.0 |
| NH6 | 6 | 80.2 | | | | | |
| NH7 | 3 | 95.8 | | | | | |
| NH8 | 9 | 69.5 | | | | | |
| NH9 | −3 | 68.3 | | | | | |
| NH10 | 10 | 111.0 | | | | | |
| NH11 | 2 | 66.6 | | | | | |
| NH12 | 20 | 127.0 | | | | | |
| NH13 | 6 | 43.6 | | | | | |
| NH14 | 5 | 55.4 | | | | | |
| NH15 | 4 | 46.2 | | | | | |
| Mean | 5.73 | 80.52 | Mean | 38.00 | 188.38 | 51.40 | 277.57 |
| SD | 4.99 | 25.4 | SD | 13.98 | 107.37 | 15.01 | 78.92 |

Statistical analysis using a one-way ANOVA on ranks revealed a significant difference in the width of the ERB (in Hz) between the normal-hearing and preoperative EAS subjects (H = 8.05, p = 0.005). Thus even prior to surgery the EAS patients had significantly poorer-than-normal frequency selectivity – as would be expected given the patients’ elevated auditory thresholds. A comparison of pre- and postimplant frequency selectivity did not reveal a significant difference in the width of the AF (in Hz) (F = 1.8, p = 0.25). This was likely influenced by the small sample size and the fact that subject EAS3 did not have any measurable frequency selectivity either pre- or postoperatively and thus no change in the width of the AF. Nonetheless, it appears that for some patients frequency selectivity is minimally altered following successful hearing preservation surgery.
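For readers who wish to see how a rank-based comparison of this kind works, the sketch below computes a Kruskal-Wallis H statistic from the ERB values in Table 1. One assumption is made that is not stated in the text: subject EAS3, who had no measurable frequency selectivity, is entered as the widest filter (coded as infinity) so that she receives the highest rank. With that assumption the calculation returns H of about 8.05 and p of about 0.005; it is offered as an illustration, not as a description of the analysis actually run.

```python
import math

# ERB values (Hz) from Table 1
nh_erb = [80.4, 97.6, 69.0, 76.3, 120.8, 80.2, 95.8, 69.5,
          68.3, 111.0, 66.6, 127.0, 43.6, 55.4, 46.2]
# ASSUMPTION: EAS3 (N/A in Table 1) is treated as the widest filter
eas_pre_erb = [338.4, 193.4, math.inf, 115.2, 106.5]


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H without tie correction (these data contain no ties)."""
    pooled = sorted(value for group in groups for value in group)
    rank = {value: i + 1 for i, value in enumerate(pooled)}
    n_total = len(pooled)
    weighted = sum(sum(rank[v] for v in group) ** 2 / len(group) for group in groups)
    return 12.0 / (n_total * (n_total + 1)) * weighted - 3 * (n_total + 1)


def chi2_sf_df1(x: float) -> float:
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(x / 2.0))


h_stat = kruskal_wallis_h(nh_erb, eas_pre_erb)
p_value = chi2_sf_df1(h_stat)
print(round(h_stat, 2), round(p_value, 3))
```

If EAS3 is instead left out of the comparison, the same function gives a smaller H (6.25), so the treatment of unmeasurable filters matters for the size of the statistic.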

Frequency Selectivity and Speech Understanding with Electric and Acoustic Stimulation

The finding that frequency selectivity was poorer than normal, but still present, in some patients is consistent with the finding of Gifford et al. [15] of a diminished, but present, cochlear nonlinearity following surgery. And, consistent with the observations regarding the cochlear nonlinearity, we did not find a significant relationship between AF width and the gain in speech understanding when acoustic stimulation was added to electric stimulation for our initial 5 subjects tested.

In sum, our psychoacoustic tests document that normal nonlinear cochlear function, e.g. sharp frequency tuning, in the region of low-frequency hearing is not necessary for patients to enjoy large benefits in speech understanding when electric and acoustic stimulation are combined.

Frequency Discrimination and Melody Recognition

Finding no significant relationship among measures of auditory function and the gain in speech understanding with EAS, we turn to melody recognition to examine whether performance on measures of auditory function is related to music recognition. Although an increasing number of cochlear implant patients are able to achieve high levels of performance on difficult measures of speech recognition, most cannot recognize familiar melodies when temporal cues are removed [31–35]. This is not surprising given the modest, at best, spectral resolution achieved with electric stimulation [36–38]. As discussed above, following successful hearing preservation surgery EAS patients have some residual frequency selectivity for low-frequency acoustic stimuli. Thus, EAS patients may be better able to resolve simple and complex pitch patterns necessary for melody recognition than patients who receive only electric stimulation.

Gfeller et al. [32] evaluated the pitch perception abilities of 101 conventional cochlear implant recipients and 13 EAS listeners with binaural acoustic hearing, i.e., patients with low-frequency hearing in both the implanted ear and in the contralateral ear. For the pitch perception task, subjects were asked to determine the direction of pitch change (i.e., higher or lower) for the second pure tone in a ‘pitch pair’. The frequency of the standard tone ranged from 131 to 1,048 Hz. The data demonstrated that the EAS subjects – implanted with a 10-mm Nucleus Hybrid device – identified the direction of pitch change, for frequencies under 663 Hz, better than conventional implant patients.

In another study, Gfeller et al. [39] evaluated melody and musical instrument recognition for 4 EAS listeners and 39 conventional implant recipients. The EAS subjects obtained significantly higher scores on tests of melody recognition and instrument recognition than the patients who received only electric stimulation.

Gfeller’s studies leave little doubt that low-frequency acoustic hearing provides information about pitch that is unavailable from electric stimulation. However, because testing was completed in the sound field using EAS patients with acoustic hearing in both the implanted ear and the nonimplanted ear, it is not clear which partially hearing ear provided the additional information. In other words, it is possible that the acoustic hearing from the implanted ear did not offer any additional benefit over the acoustic hearing provided by the nonimplanted ear.

Several studies have shown that low-frequency acoustic hearing in the ear contralateral to the implant is sufficient to significantly improve melody recognition for implant patients. For example, Dorman et al. [34] described the melody recognition abilities of 15 bimodal patients (implant in one ear and acoustic hearing in the other ear) who had relatively good residual hearing in the nonimplanted ear (e.g. thresholds at 125 and 250 Hz of 35–45 dB). The recognition of melodies without rhythmic cues was assessed for a test set of 5 familiar melodies. Recognition was significantly better in the bimodal condition than in the electric-only condition. Recognition in the bimodal condition, however, was not better than in the acoustic-only condition. Thus, we found no synergistic effect of EAS for the recognition of melodies. All of the benefit of bimodal EAS for melody recognition, relative to a conventional implant, was due to the presence of the acoustic signal. Kong et al. [35] reported similar findings for 5 bimodal subjects with much poorer residual hearing than the patients in the study by Dorman et al. [34].

One Partially Hearing Ear versus Two Partially Hearing Ears

As noted above, EAS patients will have low-frequency acoustic hearing in both the implanted ear and in the contralateral ear. The contribution of the two partially hearing ears to speech recognition is not easy to determine. Most papers have provided speech perception data for the electric-only condition, the ipsilateral EAS condition, and/or the combined EAS condition (implant plus both partially hearing ears) [2, 3, 6, 8–10]. It has not been common to report performance in the bimodal condition with the ipsilateral ear occluded. This condition is important because it is usually the case that the hearing in the contralateral ear is better than the hearing in the implanted ear, i.e., the ear with the poorer auditory thresholds is usually picked for surgery and/or the thresholds in the operated ear are poorer following surgery than before surgery.

Dorman et al. [40] assessed the bimodal and combined EAS speech perception performance of 22 patients implanted with the 10-mm Nucleus Hybrid electrode array. They found a small, nonsignificant improvement of 9 percentage points in the combined condition relative to the bimodal condition. In a subset of this subject population (n = 7), Gifford et al. [41] found identical scores for the bimodal and combined conditions on measures of sentence recognition in noise using the BKB-SIN test with the speech and noise originating from a single loudspeaker.

On the one hand, the data reported above cast doubt on the benefit to speech understanding of preserved hearing on the operated ear when compared to bimodal stimulation. On the other hand, the test environments in both experiments – stimulus presentation from a single loudspeaker placed at 0° azimuth – minimized the value of binaural cues that could be extracted with two partially hearing ears.

When two ears, rather than one ear, are allowed to participate in a listening test, and when signal and noise are presented from different locations (as is commonly the case in the ‘real world’), then three effects – head shadow, binaural squelch and binaural (or diotic) summation – can influence performance. Head shadow is a physical effect in which the head provides an acoustic barrier resulting in amplitude or level differences between the ears. If one ear is closer to the noise source, the other ear has a higher or better signal-to-noise ratio (SNR). Binaural squelch refers to a binaural effect in which an improvement in the SNR results from a central comparison of time and intensity differences for signals and noise arriving at the two ears. Binaural summation refers to the effect of having redundant information at the two ears.

EAS patients have two acoustically stimulated ears that could code interaural time and intensity differences and two ears to deliver redundant acoustic information. From this point of view, EAS patients should have an advantage over bimodal patients when signal and noise originate from different spatial locations in a sound field. To test this hypothesis, we have collected speech perception data for conventional unilateral implant recipients (n = 25), bilateral cochlear implant recipients (n = 10), bimodal listeners (n = 24), and EAS listeners (n = 5). The 5 EAS listeners were 3 Nucleus Hybrid recipients (2 Hybrid 10 mm, 1 Hybrid-L24 16 mm) and 2 conventional Nucleus N24 (CI24RCA) long-electrode recipients with hearing preservation.

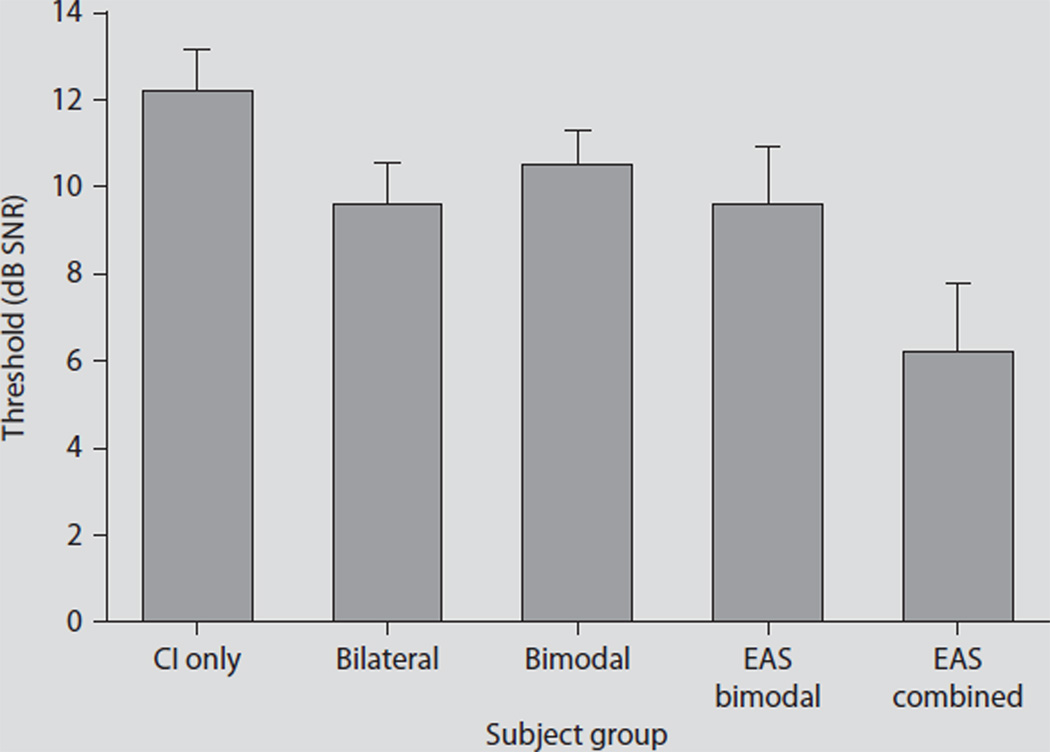

Hearing-in-noise test sentence recognition [42] was assessed in a restaurant noise background [43] originating from the R-SPACE™ 8-loudspeaker array. The 8 loudspeakers were placed circumferentially about the subject’s head at a distance of 60 cm with each speaker separated by 45°. A speech reception threshold (SRT) was obtained using an adaptive procedure to determine the SNR required for 50% correct. The noise level was fixed at 71 dB SPL to simulate the average level of the noise observed during the restaurant recording. Figure 2 displays mean SRT data for the 4 subject groups. The unilateral and bilateral implant mean data are displayed as a reference for electric-only performance.

Fig. 2.

SRT data for unilateral cochlear implant (CI) recipients (n = 25), bilateral cochlear implant recipients (n = 10), bimodal listeners (n = 24), EAS listeners in the bimodal condition (n = 5, implanted ear occluded), and EAS listeners in the ‘combined’ condition (n = 5, electric plus binaural acoustic hearing). Error bars represent ± one standard error.

The unilateral and bilateral mean SRT scores were 12.2 and 9.6 dB SNR, respectively. For patients with bimodal stimulation, i.e., bimodal patients and EAS patients in the bimodal condition, the SRTs were 10.6 and 9.6 dB SNR, respectively. When the EAS patients were able to access the acoustic hearing in the operated ear, performance improved by 3.4 dB to a mean SRT of 6.2 dB SNR. These preliminary data support our hypothesis that the value of hearing preservation will be best shown in listening environments in which target and masker are spatially separated and environments in which binaural low-frequency cues can play a significant role. Given that each 1-dB improvement in the SNR can translate into an improvement of up to 8–15 percentage points in speech recognition performance [42, 44], the addition of acoustic hearing from the implanted ear has the potential to provide large gains in speech intelligibility in complex listening environments.
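The size of the potential gain can be bounded with simple arithmetic. The sketch below takes the mean SRTs reported above and applies the quoted per-decibel slopes as a straight-line extrapolation. That linearity is an assumption made only for illustration, since real psychometric functions flatten near floor and ceiling, so the output should be read as an upper bound rather than a measured result. The variable and function names are illustrative.

```python
# Mean SRTs for the 5 EAS listeners (dB SNR), as reported in the text
srt_bimodal_db = 9.6    # implanted ear occluded
srt_combined_db = 6.2   # electric plus binaural acoustic hearing

# Benefit from the acoustic hearing in the implanted ear
benefit_db = srt_bimodal_db - srt_combined_db


def upper_bound_gain(benefit: float, points_per_db: float) -> float:
    """Linear extrapolation: percentage points gained for a given SNR benefit."""
    return benefit * points_per_db


low = upper_bound_gain(benefit_db, 8.0)    # shallow end of the quoted range
high = upper_bound_gain(benefit_db, 15.0)  # steep end of the quoted range
print(round(benefit_db, 1), round(low, 1), round(high, 1))
```

Under these assumptions a 3.4-dB benefit corresponds to at most roughly 27 to 51 percentage points.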

Summary and Conclusions

There is ample evidence demonstrating that electrodes can be inserted into the scala tympani without destroying residual hearing and that EAS patients can combine information delivered by electric stimulation and acoustic hearing. The data presented in this paper document that it is possible to preserve normal or near-normal nonlinear cochlear processing in the implanted ear following EAS surgery – though this is not the typical outcome. We have also shown that while low-frequency frequency selectivity is generally disrupted following implantation, some degree of frequency selectivity can be preserved. And, in a surprising outcome, we find that neither nonlinear cochlear processing nor frequency selectivity in the acoustically stimulated ear is correlated with the gain in speech understanding when acoustic stimulation is added to electric stimulation.

The goal of hearing preservation surgery is to preserve hearing in the implanted ear. However, to date, it has not been clear whether significant benefit is gained from having two acoustic hearing ears (as in the case of EAS) versus just one (as in the case of bimodal stimulation). Our results demonstrate the benefit of hearing preservation in the implanted ear, i.e., having two acoustic hearing ears, for speech perception in a complex listening environment. Given the preliminary nature of the data, further study is warranted to fully describe the benefits of preserving acoustic hearing following cochlear implantation.

Acknowledgements

This work was supported by NIDCD grant DC006538 to R.H.G. and by NIDCD grant RO1 DC00654-16 to M.F.D. Portions of the results were presented at the 2006 International Conference on Cochlear Implants and Other Implantable Auditory Technologies in Vienna, Austria, the 2005 Hearing Preservation Workshop in Warsaw, Poland, and the 2008 Hearing Preservation Workshop in Kansas City, Mo., USA.

References

- 1. Gantz BJ, Turner CW. Combining acoustic and electrical hearing. Laryngoscope. 2003;113:1726–1730. doi: 10.1097/00005537-200310000-00012.

- 2. Gantz BJ, Turner CW, Gfeller KE, Lowder M. Preservation of hearing in cochlear implant surgery: advantages of combined electrical and acoustical speech processing. Laryngoscope. 2005;115:796–802. doi: 10.1097/01.MLG.0000157695.07536.D2.

- 3. Gantz BJ, Turner CW, Gfeller KE. Acoustic plus electric speech processing: preliminary results of a multicenter clinical trial of the Iowa/Nucleus Hybrid implant. Audiol Neurotol. 2006;11(Suppl 1):63–68. doi: 10.1159/000095616.

- 4. Skarzynski H, Lorens A, Piotrowska A. Preservation of low-frequency hearing in partial deafness cochlear implantation. Int Congr Ser. 2004;1273:239–242.

- 5. Skarzynski H, Lorens A, Piotrowska A, Anderson I. Partial deafness cochlear implantation provides benefit to a new population of individuals with hearing loss. Acta Otolaryngol. 2006;126:934–940. doi: 10.1080/00016480600606632.

- 6. Gstoettner W, Kiefer J, Baumgartner WD, Pok S, Peters S, Adunka O. Hearing preservation in cochlear implantation for electric acoustic stimulation. Acta Otolaryngol. 2004;124:348–352. doi: 10.1080/00016480410016432.

- 7. Gstoettner W, Pok SM, Peters S, Kiefer J, Adunka O. Cochlear implantation with preservation of residual deep frequency hearing. HNO. 2005;53:784–791. doi: 10.1007/s00106-004-1170-5.

- 8. Gstoettner WK, Van de Heyning P, O’Connor AF, Morera C, Sainz M, Vermeire K, McDonald S, Cavalle L, Helbig S, Valdecasa JG, Anderson I, Adunka OF. Electric acoustic stimulation of the auditory system: results of a multi-centre investigation. Acta Otolaryngol. 2008;128:968–975. doi: 10.1080/00016480701805471.

- 9. Kiefer J, Pok M, Adunka O, Stuerzebecher E, Baumgartner WD, Schmidt M. Combined electric acoustic stimulation of the auditory system: results of a clinical study. Audiol Neurotol. 2005;10:134–144. doi: 10.1159/000084023.

- 10. Luetje CM, Thedinger BS, Buckler LR, Dawson KL, Lisbona KL. Hybrid cochlear implantation: clinical results and critical review of 13 cases. Otol Neurotol. 2007;28:473–478. doi: 10.1097/RMR.0b013e3180423aed.

- 11. Von Ilberg C, Kiefer J, Tillein J, Pfennigdorff T, Hartmann R, Stuerzebecher E, Klinke R. Electric-acoustic stimulation of the auditory system. ORL. 1999;61:334–340. doi: 10.1159/000027695.

- 12. Gantz BJ, Turner CW. Combining acoustic and electrical speech processing: Iowa/Nucleus hybrid implant. Acta Otolaryngol. 2004;124:334–347. doi: 10.1080/00016480410016423.

- 13. Wilson BS, Lawson DT, Muller JM, Tyler RS, Kiefer J. Cochlear implants: some likely next steps. Annu Rev Biomed Eng. 2003;5:207–249. doi: 10.1146/annurev.bioeng.5.040202.121645.

- 14. Brill S, Lawson DT, Wolford RD, Wilson BS, Schatzer R. Speech processors for auditory prostheses. Eleventh Quarterly Progress Report on NIH Project N01-DC-8-2105. 2002.

- 15. Gifford RH, Dorman MF, Spahr AJ, Bacon SP, Skarzynski H, Lorens A. Hearing preservation surgery: psychophysical estimates of cochlear damage in recipients of a short electrode array. J Acoust Soc Am. 2008;124:2164–2173. doi: 10.1121/1.2967842.

- 16. Ching TY, Inceri P, Hill M. Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear Hear. 2004;25:9–21. doi: 10.1097/01.AUD.0000111261.84611.C8.

- 17. Gifford RH, Dorman MF, Spahr AJ, McKarns SA. Combined electric and contralateral acoustic hearing: word and sentence recognition with bimodal hearing. J Speech Lang Hear Res. 2007;50:835–843. doi: 10.1044/1092-4388(2007/058).

- 18. Wilson B, Wolford R, Lawson D, Schatzer R. Speech processors for auditory prostheses. Third Quarter Progress Report on NIH Project N01-DC-2-1002. 2002.

- 19. Oxenham AJ, Dau T. Reconciling frequency selectivity and phase effects in masking. J Acoust Soc Am. 2001;110:1525–1538. doi: 10.1121/1.1394740.

- 20. Plack CJ, Oxenham AJ, Simonson AM, O’Hanlon CG, Drga V, Arifianto D. Estimates of compression at low and high frequencies using masking additivity in normal and impaired ears. J Acoust Soc Am. 2008;123:4321–4330. doi: 10.1121/1.2908297.

- 21. Gorga MP, Neely ST, Dierking DM, Kopun J, Jolkowski K, Groenenboom K, Tan H, Stiegemann B. Low-frequency and high-frequency cochlear nonlinearity in humans. J Acoust Soc Am. 2007;122:1671. doi: 10.1121/1.2751265.

- 22. Schroeder MR. Synthesis of low-peak-factor signals and binary sequences with low autocorrelation. IEEE Trans Inf Theory. 1970;16:85–89.

- 23. Oxenham AJ, Dau T. Masker phase effects in normal-hearing and hearing-impaired listeners: evidence for peripheral compression at low signal frequencies. J Acoust Soc Am. 2004;116:2248–2257. doi: 10.1121/1.1786852.

- 24. Patterson RD. Auditory filter shapes derived with noise stimuli. J Acoust Soc Am. 1976;59:640–654. doi: 10.1121/1.380914.

- 25. Stone MA, Glasberg BR, Moore BCJ. Simplified measurement of auditory filter shapes using the notched-noise method. Br J Audiol. 1992;26:329–334. doi: 10.3109/03005369209076655.

- 26. Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1971;49:467–477.

- 27. Patterson RD, Nimmo-Smith I, Weber DL, Milroy R. The deterioration of hearing with age: frequency selectivity, the critical ratio, the audiogram, and speech threshold. J Acoust Soc Am. 1982;72:1788–1803. doi: 10.1121/1.388652.

- 28. Glasberg BR, Moore BCJ. Derivation of auditory filter shapes from notched-noise data. Hear Res. 1990;47:103–138. doi: 10.1016/0378-5955(90)90170-t.

- 29. Laroche C, Hetu R, Quoc HT, Josserand B, Glasberg B. Frequency selectivity in workers with noise-induced hearing loss. Hear Res. 1992;64:61–72. doi: 10.1016/0378-5955(92)90168-m.

- 30. Leek MR, Summers V. Auditory filter shapes of normal-hearing and hearing-impaired listeners in continuous broadband noise. J Acoust Soc Am. 1993;94:3127–3137. doi: 10.1121/1.407218.

- 31. Spahr AJ, Dorman MF, Loiselle L. Performance of patients using different cochlear implant systems: effects of input dynamic range. Ear Hear. 2007;28:260–275. doi: 10.1097/AUD.0b013e3180312607.

- 32. Gfeller KE, Turner CW, Oleson J, Zhang X, Gantz B, Froman R, Olszewski C. Accuracy of cochlear implant recipients on pitch perception, melody recognition, and speech reception in noise. Ear Hear. 2007;28:412–423. doi: 10.1097/AUD.0b013e3180479318.

- 33. Drennan WR, Rubinstein JT. Music perception in cochlear implant users and its relationship with psychophysical capabilities. J Rehabil Res Dev. 2008;45:779–789. doi: 10.1682/jrrd.2007.08.0118.

- 34. Dorman MF, Gifford RH, Spahr AJ, McKarns SA. The benefits of combining acoustic and electric stimulation for the recognition of speech, voice and melodies. Audiol Neurotol. 2008;13:105–112. doi: 10.1159/000111782.

- 35. Kong YY, Stickney GS, Zeng FG. Speech and melody recognition in binaurally combined acoustic and electric hearing. J Acoust Soc Am. 2005;117:1351–1361. doi: 10.1121/1.1857526.

- 36. Fu Q-J, Shannon RV, Wang X. Effects of noise and spectral resolution on vowel and consonant recognition: acoustic and electric hearing. J Acoust Soc Am. 1998;104:3586–3596. doi: 10.1121/1.423941.

- 37. Nelson PB, Jin S-H, Carney AE, Nelson DA. Understanding speech in modulated interference: cochlear implant users and normal-hearing listeners. J Acoust Soc Am. 2003;113:961–968. doi: 10.1121/1.1531983.

- 38. Fu Q-J, Nogaki G. Noise susceptibility of cochlear implant users: the role of spectral resolution and smearing. J Assoc Res Otolaryngol. 2005;6:19–27. doi: 10.1007/s10162-004-5024-3.

- 39. Gfeller KE, Olszewski C, Turner CW, Gantz BJ, Oleson J. Music perception with cochlear implants and residual hearing. Audiol Neurotol. 2006;11(Suppl 1):12–15. doi: 10.1159/000095608.

- 40. Dorman MF, Gifford RH, Lewis K, McKarns S, Ratigan J, Spahr A, Shallop JK, Driscoll CLW, Luetje C, Thedinger BS, Beatty CW, Syms M, Novak M, Barrs D, Cowdrey L, Black J, Loiselle L. Word recognition following implantation of conventional and 10 mm hybrid electrodes. Audiol Neurotol. 2009;14:181–189. doi: 10.1159/000171480.

- 41. Gifford RH, Shallop JK, Driscoll CLW, Beatty CW, Lane JI, Peterson AM. Hearing Preservation Cochlear Implantation with a Long Electrode Array. Conf Implant Aud Prostheses. Tahoe City. 2007.

- 42. Nilsson M, Soli S, Sullivan J. Development of the hearing in noise test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am. 1994;95:1085–1099. doi: 10.1121/1.408469.

- 43. Compton-Conley CL, Neuman AC, Killion MC, Levitt H. Performance of directional microphones for hearing aids: real-world versus simulation. J Am Acad Audiol. 2004;15:440–455. doi: 10.3766/jaaa.15.6.5.

- 44. Plomp R, Mimpen MA. Improving the reliability of testing the speech reception threshold for sentences. Audiology. 1979;18:43–52. doi: 10.3109/00206097909072618.